Abstract

In this paper, we propose a novel nature-inspired meta-heuristic algorithm for continuous global optimization, named the phase transition-based optimization algorithm (PTBO). It mimics three distinct kinds of motion that elements exhibit in three different phases: the unstable phase, the meta-stable phase, and the stable phase. Three corresponding operators are designed in the proposed algorithm: the stochastic operator of the unstable phase, the shrinkage operator of the meta-stable phase, and the vibration operator of the stable phase. In PTBO, elements dynamically execute different search tasks in each generation according to their current phase. As a result, PTBO combines wide-ranging exploration with fast exploitation. Numerical experiments are carried out on the twenty-eight functions of the CEC 2013 benchmark suite. The simulation results show that PTBO performs better overall than other state-of-the-art optimization algorithms.

1. Introduction

Nature-inspired optimization algorithms have attracted increasing interest from researchers in optimization in recent years [1]. After half a century of development, they have formed a large family that not only draws on biology, physics, and other basic sciences, but also involves many fields such as artificial intelligence, artificial life, and computer science.

Due to the differences in natural phenomena, these optimization algorithms can be roughly divided into three types. These are algorithms based on biological evolution, algorithms based on swarm behavior, and algorithms based on physical phenomena. Typical biological evolutionary algorithms are Evolutionary Strategies (ES) [2], Evolutionary Programming (EP) [3], the Genetic Algorithm (GA) [4,5,6], Genetic Programming (GP) [7], Differential Evolution (DE) [8], the Backtracking Search Algorithm (BSA) [9], Biogeography-Based Optimization (BBO) [10,11], and the Differential Search Algorithm (DSA) [12].

In the last decade, swarm intelligence, as a branch of intelligent computation models, has gradually risen to prominence [13]. Swarm intelligence algorithms mainly simulate biological habits or behavior, including foraging, searching, migratory, brooding, and mating behavior. Inspired by these phenomena, researchers have designed many intelligent algorithms, such as Ant Colony Optimization (ACO) [14], Particle Swarm Optimization (PSO) [15], Bacterial Foraging (BFA) [16], Artificial Bee Colony (ABC) [17,18,19], Group Search Optimization (GSO) [20], Cuckoo Search (CS) [21,22], Seeker Optimization (SOA) [23], the Bat Algorithm (BA) [24], Bird Mating Optimization (BMO) [25], Brain Storm Optimization (BSO) [26,27] and the Grey Wolf Optimizer (GWO) [28].

In recent years, in addition to the above two kinds of algorithms, intelligent algorithms simulating physical phenomena have also attracted a great deal of researchers’ attention, such as the Gravitational Search Algorithm (GSA) [29], the Harmony Search Algorithm (HSA) [30], the Water Cycle Algorithm (WCA) [31], the Intelligent Water Drops Algorithm (IWDA) [32], Water Wave Optimization (WWO) [33], and States of Matter Search (SMS) [34]. The classical optimization algorithm based on a physical phenomenon is simulated annealing, which is modeled on the annealing process of metal [35,36].

Meta-heuristic algorithms have many advantages over traditional algorithms, especially on NP-hard problems, and have been proposed to tackle many challenging optimization problems in science and industry. Nevertheless, no single approach is optimal for all optimization problems [37]. In other words, an approach may be suitable for some problems but unsuitable for others. This is especially true as global optimization problems have become more and more complex, from simple uni-modal functions to hybrid rotated shifted multimodal functions [38]. Hence, more innovative and effective optimization algorithms are always needed.

Natural phenomena provide a rich source of inspiration for researchers to develop a diverse range of optimization algorithms with different degrees of usefulness and practicability. In this paper, we propose a new meta-heuristic optimization algorithm for continuous global optimization, inspired by the phase transition phenomenon of elements in a natural system and termed phase transition-based optimization (PTBO). From a statistical mechanics point of view, a phase transition is a non-trivial macroscopic form of collective behavior in a system composed of a number of elements that follow simple microscopic laws [39]. Phase transitions play an important role in the probabilistic analysis of combinatorial optimization problems, and have been successfully applied to Random Graph, Satisfiability, and Traveling Salesman Problems [40]. However, few algorithms in the literature use the mechanism of phase transition to solve continuous global optimization problems.

Phase transitions are ubiquitous in nature and in social life. For example, at atmospheric pressure, water boils at a critical temperature of 100 °C. Below 100 °C, water is a liquid, while above 100 °C, it is a gas. There are many other examples of phase transitions. Everybody knows that magnets attract nails made of iron. However, the attractive force disappears when the temperature of the nail is raised above 770 °C, at which point the nail enters a paramagnetic phase. Moreover, it is well known that mercury is a good conductor because of its low resistance. Furthermore, the electrical resistance of mercury falls abruptly to zero when the temperature drops below 4.2 K. From a macroscopic view, a phase transition is the transformation of a system from one phase to another, depending on the values of control parameters such as temperature, pressure, and other outside interference.

From the above examples, we can see that each system or matter has different phases and related critical points for those phases. We can also observe that all phase transitions follow a common law, and we may regard a phase transition as the result of competition between two tendencies: stable order and unstable disorder. In complex system theory, phase transition is related to the self-organized process by which a system transforms from disorder to order. From this point of view, a phase transition implies an optimization search process. The thermal motion of an element is a source of disorder [41]. The interaction of elements is the cause of order.

In the proposed PTBO algorithm, the degree of order or disorder is described by stability. We use the value of an objective function to depict the level of stability. For the sake of generality, we extract three kinds of phases in the process of transition from disorder to order, i.e., an unstable phase, a meta-stable phase [42], and a stable phase. From a microscopic viewpoint, the diverse motion characteristics of elements in different phases provide us with novel inspiration to develop a new meta-heuristic algorithm for solving complex continuous optimization problems.

This paper is organized as follows. In Section 2, we briefly introduce the prerequisites for the phase transition-based optimization algorithm. In Section 3, the algorithm and its operators are described. An analysis of PTBO and a comparative study are presented in Section 4. In Section 5, the experimental results are compared with those of other state-of-the-art algorithms. Finally, Section 6 draws conclusions.

2. Prerequisite Preparation

2.1. Fundamental Concepts

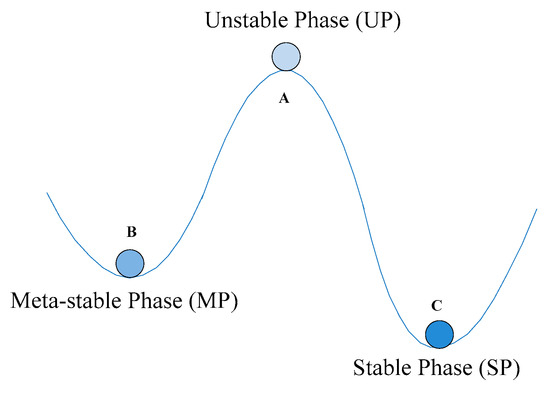

Nature itself is a large complex system. In a natural system, the motion of elements transforming from an unstable phase (disorder) to a stable phase (order) is an eternal natural law. In this paper, we uniformly call a molecule in water, an atom in iron, an electron in mercury, etc., an element. The phase of an element in a system may be divided into an unstable phase, a meta-stable phase, and a stable phase. Figure 1 shows three possible positions of elements in a system.

Figure 1.

Three possible positions of elements in a system.

From Figure 1, we can observe that an element is most unstable in the position of point A, and we intuitively call it an unstable phase. On the contrary, in the position of point C, the element is most stable, and we name it a stable phase. The position of point B, between point A and point C, is the transition phase, and we term it a meta-stable phase. The definitions of the three phases are as follows.

Definition 1.

Unstable Phase (UP). The element is in a phase of complete disorder, and moves freely towards an arbitrary direction. In the case of this phase, the element has a large range of motion, and has the ability of global divergence.

Definition 2.

Meta-stable Phase (MP). The element is in a phase between disorder and order, and moves according to a certain law, such as towards the lowest point. In the case of this phase, the element has a moderate activity, and possesses the ability of local shrinkage.

Definition 3.

Stable Phase (SP). The element is in a very regular phase of orderly motion. In the case of this phase, the element has a very small range of activity and has the ability of fine tuning.

According to the above definitions, we can give a more detailed description and examples about the characteristics of the three phases. These motion characteristics in three phases provide us with rich potential to develop the proposed PTBO algorithm. The following Table 1 summarizes the motional characteristics of the unstable phase, the meta-stable phase, and the stable phase.

Table 1.

The motional characteristics of elements in the three phases.

2.2. The Determination of Critical Interval about Three Phases

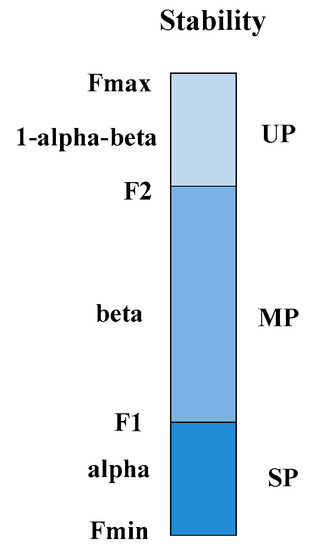

As mentioned before, we use stability, which is depicted by the fitness value of an objective function, to describe the degree of order or disorder of an element. In our proposed algorithm, the higher the fitness value of an element is, the worse the stability is. The question of how to divide the critical intervals of the unstable phase, the meta-stable phase, and the stable phase is a primary problem that must be addressed before the proposed algorithm is designed.

For simplicity, we use $F_{\max}$ to denote the maximum fitness value, and we say that the element with this value is in the most unstable phase. Conversely, $F_{\min}$ denotes the minimum fitness value. We then set the proportion of the stable phase to alpha and the proportion of the meta-stable phase to beta, so the proportion of the unstable phase is 1 − alpha − beta. The ratio of the three critical intervals is shown in Figure 2.

Figure 2.

The critical intervals of the three phases.

Although the maximum fitness value $F_{\max}$ and the minimum fitness value $F_{\min}$ change dynamically in each generation, that is, in each iteration of the phase transition process, the basic relationship between the phase of the elements and the critical intervals is as shown in Table 2.

Table 2.

The relationship between the intervals and the three phases.
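The interval partition described above can be sketched in Python as follows. This is a minimal illustration, not the definition in Table 2: the exact boundaries used here (the lowest alpha share of the fitness range for SP, the next beta share for MP, and the rest for UP), the treatment of ties at the boundaries, and the function name `classify_phase` are assumptions.

```python
def classify_phase(fitness, f_min, f_max, alpha=0.1, beta=0.8):
    """Return 'SP', 'MP', or 'UP' for one fitness value (minimization).

    Assumed boundaries: the fitness range [f_min, f_max] is split into
    shares of alpha (stable), beta (meta-stable), and 1 - alpha - beta
    (unstable), counted from the best (lowest) fitness upwards.
    """
    span = f_max - f_min
    if span == 0:
        # Degenerate population: every element has the same fitness.
        return "SP"
    # Normalized position of the fitness value inside [f_min, f_max].
    ratio = (fitness - f_min) / span
    if ratio <= alpha:
        return "SP"
    if ratio <= alpha + beta:
        return "MP"
    return "UP"


fitness_values = [0.0, 2.0, 5.0, 8.9, 9.5, 10.0]
f_min, f_max = min(fitness_values), max(fitness_values)
phases = [classify_phase(f, f_min, f_max) for f in fitness_values]
print(phases)
```

Because the maximum and minimum fitness values are recomputed in each generation, the same element may fall into a different phase from one generation to the next.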

3. Phase Transition-Based Optimization Algorithm

3.1. Basic Idea of the PTBO Algorithm

In this work, the motion of elements from an unstable phase to a relatively more stable phase in PTBO is analogous to natural selection in GA. Many such iterations can eventually make an element reach absolute stability. The diverse motional characteristics of elements in the three phases are the core that PTBO uses to simulate this phase transition process. In the PTBO algorithm, three corresponding operators are designed. An appropriate combination of the three operators makes for an effective search for the global minimum in the solution space.

3.2. The Correspondence of PTBO and the Phase Transition Process

Based on the basic law of elements transitioning from an unstable phase (disorder) to a stable phase (order), the correspondence of PTBO and phase transition can be summarized in Table 3.

Table 3.

The correspondence of phase transition-based optimization (PTBO) algorithm and phase transition.

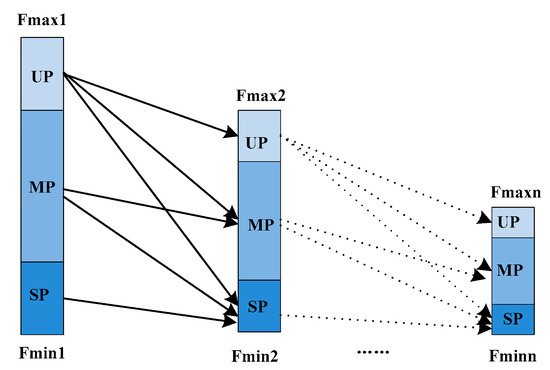

3.3. The Overall Design of the PTBO Algorithm

The simplified cyclic diagram of the phase transition process in our PTBO algorithm is shown in Figure 3. It is a complete cyclic process of phase transition from an unstable phase to a stable phase. Firstly, in the first generation, we calculate the maximum and minimum fitness values over all elements, and divide the critical intervals of the unstable phase, the meta-stable phase, and the stable phase according to the rules in Table 2. Secondly, each element performs the relevant search according to its own phase. If the new degree of stability is better than that of the original phase, the motion is retained. Otherwise, the operation is abandoned. That is to say, if the original phase of an element is UP, the element may move towards UP, MP, or SP. If the original phase of an element is MP, it may move towards MP or SP. If the original phase of an element is SP, it can move only towards SP. Finally, after many iterations, the elements eventually reach absolute stability.

Figure 3.

A phase transition from an unstable phase to a stable phase.

Broadly speaking, we may think of PTBO as an algorithmic framework. We define only the general operations of the whole algorithm concerning the motion of elements in the phase transition. In short, PTBO is flexible enough for skilled users to customize according to a specific phase transition scenario.

According to the above complete cyclic process of the phase transition, the whole operating process of PTBO can be summarized as three procedures: population initialization, iterations of three operators, and individual selection. The three operators in the iterations include the stochastic operator, the shrinkage operator, and the vibration operator.

3.3.1. Population Initialization

PTBO is also a population-based meta-heuristic algorithm. Like other evolutionary algorithms (EAs), PTBO starts with the initialization of a population, which contains $N$ element individuals. The current generation evolves into the next generation through the three operators described below (see Section 3.3.2). That is to say, the population continually evolves from generation to generation until the termination condition is met. Here, we initialize the j-th dimensional component of the i-th individual as

$$x_{ij} = x_j^{\min} + rand \cdot \left(x_j^{\max} - x_j^{\min}\right) \qquad (1)$$

where $rand$ is a uniformly distributed random number between 0 and 1, and $x_j^{\max}$ and $x_j^{\min}$ are the upper boundary and lower boundary of the j-th dimension of each individual, respectively.
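The initialization described above can be sketched in Python as follows. This is a minimal illustration that assumes each component is drawn independently and uniformly between its lower and upper boundary; the function name `init_population` and its parameters are hypothetical.

```python
import random


def init_population(n, lower, upper, rng=random):
    """Create n individuals whose j-th component lies between lower[j] and upper[j].

    Each component is lower[j] plus a uniform random fraction of the
    width upper[j] - lower[j].
    """
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        for _ in range(n)
    ]


rng = random.Random(42)  # fixed seed so that the run is repeatable
population = init_population(5, lower=[-100.0, -100.0], upper=[100.0, 100.0], rng=rng)
for individual in population:
    print(individual)
```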

3.3.2. Iterations of the Three Operators

We now give some implementation details of the three operators in the three different phases.

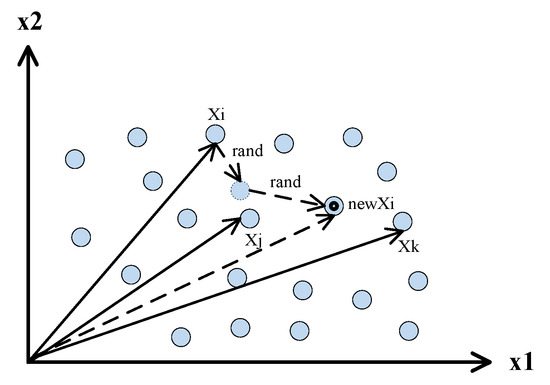

(1) Stochastic operator

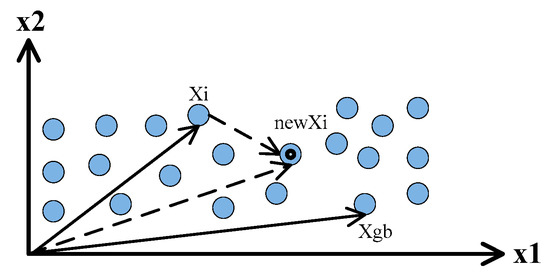

Stochastic diffusion is a common operation in which elements randomly move and pass one another in an unstable phase. Although the movement of elements is chaotic, it actually obeys a certain law from a statistical point of view. We can use the mean free path [43], which is a distance between an element and two other elements in two successive collisions, to represent the stochastic motion characteristic of elements in an unstable phase. Figure 4 simply shows the process of the free walking path of elements.

Figure 4.

Two-dimensional example showing the process of the free walking path of elements.

The free walking path of elements is the distance traveled by an element and other individuals through two collisions. Therefore, the stochastic operator of elements may be implemented as follows:

Here, the new position of the i-th element after the stochastic motion is computed from its current position and two random vectors, each component of which is a random number in the range (0, 1). The indices j and k are two distinct integers randomly chosen from the range between 1 and N, and both are different from the index i.
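A minimal Python sketch of one possible stochastic operator is given below. The update form used here, in which the element moves from its current position towards two randomly chosen other elements with independent random weights, is an assumption made for illustration and should not be read as Formula (2) itself. The function name `stochastic_operator` is hypothetical.

```python
import random


def stochastic_operator(population, i, rng=random):
    """Return a candidate position for element i in the unstable phase.

    Assumed update: x_i + r1 * (x_j - x_i) + r2 * (x_k - x_i), where r1 and
    r2 are fresh uniform random numbers in (0, 1) for every component.
    """
    n = len(population)
    # Pick two distinct indices j and k, both different from i.
    j, k = rng.sample([idx for idx in range(n) if idx != i], 2)
    x_i, x_j, x_k = population[i], population[j], population[k]
    return [
        xi + rng.random() * (xj - xi) + rng.random() * (xk - xi)
        for xi, xj, xk in zip(x_i, x_j, x_k)
    ]


rng = random.Random(7)
population = [[0.0, 0.0], [4.0, 1.0], [-2.0, 3.0], [1.0, -5.0]]
candidate = stochastic_operator(population, 0, rng)
print(candidate)
```

The population must contain at least three elements so that two partners other than the element itself can be drawn.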

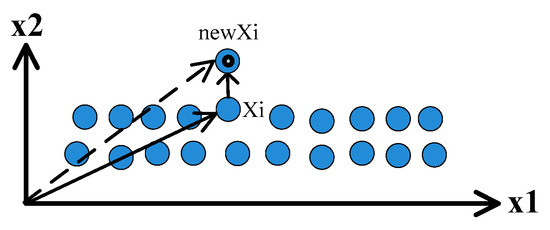

(2) Shrinkage operator

In a meta-stable phase, an element will be inclined to move closer to the optimal one. From a statistical standpoint, the geometric center is a very important numerical characteristic and represents the shrinkage trend of elements to a certain degree. Figure 5 briefly illustrates the shrinkage trend of elements towards the optimal point.

Figure 5.

The shrinkage trend of elements towards the optimal point.

Hence, gradual shrinkage towards the central position is the best motion for elements in a meta-stable phase. So, the shrinkage operator of elements may be implemented as follows:

Here, the new position of the i-th element after the shrinkage operation is computed from its current position, the best individual in the population, and a normal random number with mean 0 and standard deviation 1. The normal distribution is an important family of continuous probability distributions applied in many fields.
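A minimal Python sketch of one possible shrinkage operator is given below. The update form, in which the element moves along the direction to the best individual with a standard normal weight, and the choice of drawing a fresh normal number for every component are assumptions made for illustration, not a transcription of Formula (3). The function name `shrinkage_operator` is hypothetical.

```python
import random


def shrinkage_operator(x, best, rng=random):
    """Return a candidate position for an element in the meta-stable phase.

    Assumed update: x + N(0, 1) * (best - x), applied component by component.
    """
    return [xi + rng.gauss(0.0, 1.0) * (bi - xi) for xi, bi in zip(x, best)]


rng = random.Random(3)
candidate = shrinkage_operator([10.0, -4.0], best=[0.5, 0.5], rng=rng)
print(candidate)
```

Under this assumed form, an element that already coincides with the best individual does not move, since the difference to the best individual is zero.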

(3) Vibration operator

Elements in a stable phase will be apt to only vibrate about their equilibrium positions. Figure 6 briefly shows the vibration of elements.

Figure 6.

The vibration of elements in an equilibrium position.

Hence, the vibration operator of elements may be implemented as follows:

Here, the new position of the i-th element after the vibration operation is computed from its current position, a uniformly distributed random number in the range (0, 1), and a control parameter that regulates the amplitude of the jitter as the generations proceed. As the phase transition evolves, the amplitude of vibration gradually becomes smaller. The control parameter is described as follows:

It depends on the maximum number of iterations and the current iteration number through the exponential function.
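A minimal Python sketch of one possible vibration operator is given below. Both the amplitude schedule (an exponential decay with a hypothetical constant `decay`) and the symmetric jitter around the current position are assumptions made for illustration. They reproduce only the qualitative behavior described above, an amplitude that shrinks as the iterations proceed, and are not Formulas (4) and (5).

```python
import math
import random


def step_size(iteration, max_iter, decay=5.0):
    """Assumed schedule: the amplitude shrinks from 1 towards exp(-decay)."""
    return math.exp(-decay * iteration / max_iter)


def vibration_operator(x, iteration, max_iter, rng=random):
    """Return a candidate position for an element in the stable phase.

    Each component is jittered by at most the current step size.
    """
    step = step_size(iteration, max_iter)
    return [xi + (2.0 * rng.random() - 1.0) * step for xi in x]


rng = random.Random(11)
early = vibration_operator([1.0, 1.0], iteration=0, max_iter=100, rng=rng)
late = vibration_operator([1.0, 1.0], iteration=100, max_iter=100, rng=rng)
print(early, late)
```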

3.3.3. Individual Selection

In the PTBO algorithm, as in other EAs, one-to-one greedy selection is employed by comparing a parent individual with its newly generated offspring. In addition, this greedy selection strategy may raise diversity compared with other strategies, such as tournament selection and rank-based selection. The selection operation at the k-th generation is described as follows:

$$x_i^{k+1} = \begin{cases} x_i^{new}, & \text{if } f\left(x_i^{new}\right) < f\left(x_i^{k}\right) \\ x_i^{k}, & \text{otherwise} \end{cases} \qquad (6)$$

where $f(\cdot)$ is the objective function value of each individual.
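The one-to-one greedy selection can be sketched in Python as follows. This is a minimal illustration for a minimization problem; keeping the parent when the two objective values are equal is an assumption, and the names `greedy_select` and `sphere` are hypothetical.

```python
def greedy_select(parent, offspring, objective):
    """Keep the offspring only if its objective value is strictly lower."""
    return offspring if objective(offspring) < objective(parent) else parent


def sphere(x):
    """Simple test objective: the sum of squared components."""
    return sum(v * v for v in x)


kept = greedy_select([3.0, 4.0], [1.0, 1.0], sphere)      # offspring is better
rejected = greedy_select([1.0, 1.0], [3.0, 4.0], sphere)  # offspring is worse
print(kept, rejected)
```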

3.4. Flowchart and Implementation Steps of PTBO

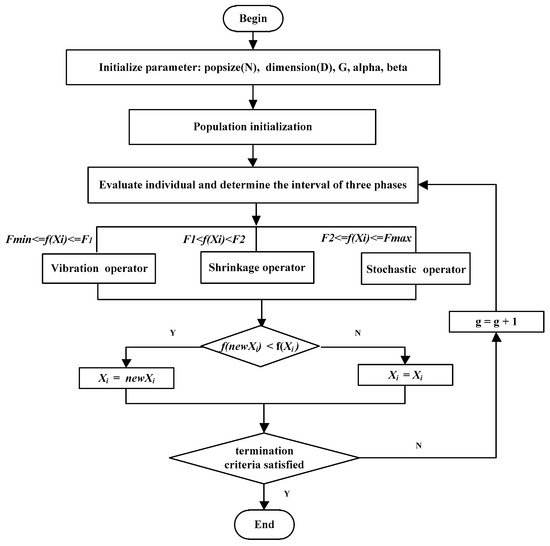

As described above, the main flowchart of the PTBO algorithm is given in Figure 7.

Figure 7.

The main flowchart of PTBO.

The implementation steps of PTBO are summarized as follows:

Step 1. Initialization: set the algorithm parameters N, D, alpha, and beta, randomly generate the initial population of elements, and initialize the iteration counter;

Step 2. Evaluation and interval partition: calculate the fitness values of all individuals, obtain the maximum fitness value $F_{\max}$ and the minimum fitness value $F_{\min}$, and divide the critical intervals of UP, MP, and SP according to Table 2;

Step 3. Stochastic operator: use Formula (2) to create the new position of each element in the UP;

Step 4. Shrinkage operator: use Formula (3) to update the new position of each element in the MP;

Step 5. Vibration operator: use Formulas (4) and (5) to update the new position of each element in the SP;

Step 6. Individual selection: accept the new position if it is better than the current position;

Step 7. Termination judgment: if the termination condition is satisfied, stop the algorithm; otherwise, increment the iteration counter and go to Step 3.
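The steps above can be put together in a compact Python sketch. It is an illustration under several assumptions rather than a reference implementation: the interval boundaries, the three update forms, the exponential amplitude schedule with the constant 5.0, the clipping of candidates to the search bounds, and the re-partition of the intervals in every generation are all choices made here. The names `ptbo` and `sphere` are hypothetical.

```python
import math
import random


def ptbo(objective, lower, upper, n=30, alpha=0.1, beta=0.8, max_iter=200, seed=0):
    """Minimize objective over the box [lower, upper]; return (best_x, best_f)."""
    rng = random.Random(seed)
    # Step 1: random initial population inside the search bounds.
    pop = [[lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
           for _ in range(n)]
    fit = [objective(x) for x in pop]
    for t in range(max_iter):
        # Step 2: fitness range and the current best element.
        f_min, f_max = min(fit), max(fit)
        span = f_max - f_min
        best = pop[fit.index(f_min)][:]
        # Assumed vibration amplitude that decays over the generations.
        step = math.exp(-5.0 * t / max_iter)
        for i in range(n):
            ratio = (fit[i] - f_min) / span if span > 0 else 0.0
            x = pop[i]
            if ratio > alpha + beta:
                # Step 3: stochastic operator (unstable phase).
                j, k = rng.sample([m for m in range(n) if m != i], 2)
                new = [xi + rng.random() * (xj - xi) + rng.random() * (xk - xi)
                       for xi, xj, xk in zip(x, pop[j], pop[k])]
            elif ratio > alpha:
                # Step 4: shrinkage operator (meta-stable phase).
                new = [xi + rng.gauss(0.0, 1.0) * (bi - xi)
                       for xi, bi in zip(x, best)]
            else:
                # Step 5: vibration operator (stable phase).
                new = [xi + (2.0 * rng.random() - 1.0) * step for xi in x]
            # Keep the candidate inside the bounds (clipping is an assumption).
            new = [min(max(v, lo), hi) for v, lo, hi in zip(new, lower, upper)]
            # Step 6: one-to-one greedy selection.
            f_new = objective(new)
            if f_new < fit[i]:
                pop[i], fit[i] = new, f_new
    # Step 7 is the loop bound: stop after max_iter generations.
    best_index = fit.index(min(fit))
    return pop[best_index], fit[best_index]


def sphere(x):
    """Simple test objective: the sum of squared components."""
    return sum(v * v for v in x)


best_x, best_f = ptbo(sphere, [-100.0] * 2, [100.0] * 2)
print(best_x, best_f)
```

Because selection is greedy, the best fitness in the population can never get worse from one generation to the next, whatever operator forms are plugged in.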

4. The Analysis of PTBO and a Comparative Study

4.1. The Analysis of Time Complexity

For PTBO, the main operations include population initialization, the stochastic operator, the shrinkage operator, and the vibration operator. With N denoting the population size and D the number of dimensions, the time complexity of each operation in a single iteration can be computed as follows:

(1) Population initialization operation: $O(N \times D)$.

(2) Stochastic operator: $O(N \times D)$.

(3) Shrinkage operator: $O(N \times D)$.

(4) Vibration operator: $O(N \times D)$.

Therefore, the total worst-case time complexity of PTBO in one iteration is $O(N \times D) + O(N \times D) + O(N \times D) + O(N \times D)$. According to the operational rules of the symbol O, the worst-case time complexity of one iteration of PTBO can be simplified as $O(N \times D)$. It is worth noting that PTBO has a time complexity similar to that of some popular meta-heuristic algorithms such as PSO ($O(N \times D)$).

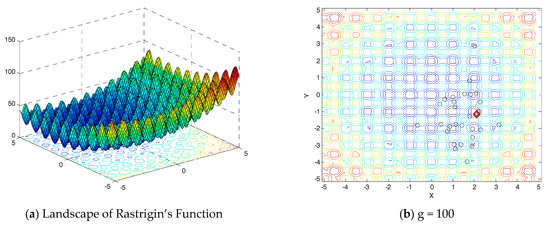

4.2. The Dynamic Implementation Analysis of PTBO

In this section, the step-wise procedure for applying PTBO to an optimization problem is presented. To demonstrate the process, Rastrigin’s function [44] is considered as an example. Rastrigin’s function is a classic test function in optimization theory in which the global minimum is surrounded by a large number of local minima. Converging to the global minimum without becoming stuck at one of these local minima is extremely difficult, and some numerical solvers take a long time to converge to it. A three-dimensional plot of Rastrigin’s function is shown in Figure 8a. Rastrigin’s function is described as follows:

$$f(x) = 10D + \sum_{i=1}^{D} \left[ x_i^2 - 10\cos\left(2\pi x_i\right) \right] \qquad (7)$$

where $D$ is the number of dimensions.
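For reference, the standard form of Rastrigin’s function can be written in a few lines of Python. The function name `rastrigin` is chosen here for illustration. The sketch evaluates the function at the origin, where the global minimum lies, and at a nearby integer point, which sits in one of the surrounding local minima.

```python
import math


def rastrigin(x):
    """Standard Rastrigin function for a point x of any dimension."""
    return 10.0 * len(x) + sum(
        v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x
    )


print(rastrigin([0.0, 0.0]))  # value at the global minimum
print(rastrigin([1.0, 1.0]))  # value near a neighboring local minimum
```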

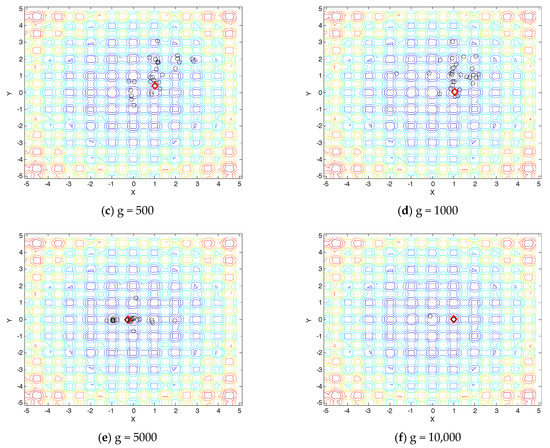

Figure 8.

Population distribution at various generations in an evolutionary process of PTBO.

In this experiment, we use 30 individuals to solve the above minimization problem with D = 2, alpha = 0.1, and beta = 0.8. The population distribution at various generations of the evolutionary process is shown in Figure 8b–f, where the red diamond marks the optimal point.

From Figure 8b–f, we can observe that the population distribution information can significantly vary at various generations during the run time. PTBO can effectively adapt to a time-varying search space or landscapes.

4.3. The Differences between PTBO and Other Algorithms

4.3.1. The Differences between PTBO and PSO

Like PSO (Particle Swarm Optimization), PTBO is also introduced to deal with unconstrained global optimization problems with continuous variables. In the representational form of implementation, we can certainly think that PTBO is also based on a particle system in the same way as PSO. However, according to the overall design of the PTBO algorithm, there are some differences between PTBO and the classical PSO.

Firstly, in terms of inspiration, PSO simulates animal swarm behavior, such as fish schooling and bird flocking, while PTBO is inspired by the phase transition phenomenon of elements in nature. Secondly, in PSO, the direction of a particle is calculated from two best positions, $pbest$ and $gbest$. However, the motion direction of an element in PTBO is arbitrarily derived from two other elements that are different from each other. This may enhance the diversity of the population and help avoid premature convergence. Thirdly, in the design of the operators, each particle in PSO contains a velocity term, whereas in PTBO the concept of velocity does not exist. Besides, PSO uses the position information of $pbest$ and $gbest$ to update the velocity or position. However, PTBO uses only the position information of $gbest$, and the $pbest$ position of elements is not considered.

4.3.2. The Differences between PTBO and SMS

SMS (States of Matter Search) is a nature-inspired optimization algorithm based on the simulation of the states of matter phenomenon [34]. Specifically, SMS assigns each state of matter a different exploration–exploitation ratio by dividing the whole search process into three stages, i.e., gas, liquid, and solid.

Although the sources of inspiration for PTBO and SMS are similar, both being taken from physical phenomena concerning the states of matter, the evolution processes of PTBO and SMS are completely different. The evolution process of SMS is as follows. At first, all individuals in the population perform exploration in the mode of the gas state. Then, after 50% of the iterations, the search mode is changed into the liquid state for 40% of the iterations, i.e., a search between exploration and exploitation. Finally, the evolutionary process enters the stage of exploitation (solid state) for the last 10% of the iterations. However, in PTBO, the three phases coexist throughout the entire search process. In other words, individuals in the three different phases dynamically execute different search tasks according to their phase in each generation. Hence, PTBO and SMS balance exploration and exploitation in completely different ways. Besides, the operators of PTBO and SMS are also completely different. In summary, there are fundamental differences between PTBO and SMS.
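The scheduling difference can be illustrated with a short Python sketch. The function `sms_stage` encodes the 50%/40%/10% split described above, in which every individual shares the same stage at a given iteration. The function `ptbo_phases` assigns a phase to each individual from its fitness within one generation. The interval boundaries in `ptbo_phases` and both function names are assumptions made for illustration.

```python
def sms_stage(iteration, max_iter):
    """Stage shared by all individuals in SMS at a given iteration."""
    if 10 * iteration < 5 * max_iter:
        return "gas"      # first 50% of the iterations: exploration
    if 10 * iteration < 9 * max_iter:
        return "liquid"   # next 40%: between exploration and exploitation
    return "solid"        # last 10%: exploitation


def ptbo_phases(fitness_values, alpha=0.1, beta=0.8):
    """Phase of each individual in one PTBO generation (assumed boundaries)."""
    f_min, f_max = min(fitness_values), max(fitness_values)
    span = (f_max - f_min) or 1.0
    phases = []
    for f in fitness_values:
        ratio = (f - f_min) / span
        if ratio <= alpha:
            phases.append("SP")
        elif ratio <= alpha + beta:
            phases.append("MP")
        else:
            phases.append("UP")
    return phases


print([sms_stage(t, 100) for t in (0, 49, 50, 89, 90, 99)])
print(ptbo_phases([0.0, 5.0, 10.0]))
```

In the first case the stage depends only on the iteration number, while in the second case the three phases can appear side by side in the same generation.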

5. Experimental Results

5.1. Benchmark Functions

In order to verify the effectiveness and robustness of the proposed PTBO algorithm, PTBO is applied in experimental simulation studies to find the global minimum of all 28 test functions of the CEC 2013 special session [44]. The CEC 2013 test suite, which improves on that of CEC 2005 [38], covers various types of function optimization, and is summarized in Table 4.

Table 4.

Benchmark functions tested by PTBO (CEC2013).

The search range of all functions is between −100 and 100 in every dimension. These problems are shifted or rotated to increase their complexity, and are treated as black-box problems. The explicit equations of the problems are not allowed to be used. The test suite of Table 4 consists of five uni-modal functions (F01 to F05), 15 multimodal functions (F06 to F20) and eight composition functions (F21 to F28).

5.2. Parameters Determination of the Interval Ratio of PTBO

In our PTBO algorithm, there are two parameters, alpha and beta, that must be set to determine the critical intervals of the three phases. In a natural system, we can observe that the elements in the middle meta-stable phase account for the majority, and the elements in the unstable and stable phases occupy only a small proportion. This phenomenon is consistent with the two-eight law (or the 1/5th rule) [2]. For the sake of simplicity, we assign a value of 0.8 to beta, which is the proportion of the elements in the meta-stable phase. So, the elements in the unstable and stable phases account for 0.2 in total. The specific interval ratio settings of the three phases are shown in Table 5.

Table 5.

Different interval ratio settings of the three phases.

In order to determine which interval ratio is the most suitable for the PTBO algorithm, we conducted comparative experiments with 50 independent runs according to Table 5. The results for the different interval ratios are listed in Table 6, where the mean values are listed in the first line and the standard deviations are displayed in the second line. We can observe that the ratio of prop4 gives the most accurate results compared with the other three ratios. Hence, in the subsequent experiments, we set beta to 0.8, and the unstable and stable phases share the remaining 0.2 in a random ratio.

Table 6.

Compared results of different interval ratios.

5.3. Experimental Platform and Algorithms’ Parameter Settings

For a fair comparison, all of the experiments are conducted on the same machine with an Intel 3.4 GHz central processing unit (CPU) and 4GB memory. The operating system is Windows 7 with MATLAB 8.0.0.783(R2012b).

On the test functions, we compare PTBO with classic PSO, DE, and six other recent popular meta-heuristic algorithms, which are BA, CS, BSO, WWO, WCA, and SMS. The first three of these six algorithms belong to the second category, i.e., swarm intelligence, and the remaining three belong to the third category, i.e., intelligent algorithms simulating physical phenomena. The parameters adopted for the PTBO algorithm and the compared algorithms are given in Table 7.

Table 7.

The parameter settings of the compared algorithms.

5.4. The Compared Experimental Results

Each of the experiments was repeated for 50 independent runs with different random seeds, and the average function values of the best solutions were recorded.

5.4.1. Comparisons on Solution Accuracy

The accuracy results of the uni-modal, multimodal, and composition functions are given in Table 8, Table 9 and Table 10, respectively. The accuracy results are reported in terms of the mean optimum solution and the standard deviation of the solutions obtained by each algorithm over 300,000 function evaluations (FES) or a maximum of 10,000 generations. In all experiments, the dimension of all problems is 30. The best results among the algorithms are shown in bold. In each row of the three tables, the mean values are listed in the first line, and the standard deviations are displayed in the second line. A two-tailed t-test [45] is performed with a 0.05 significance level to evaluate whether the mean fitness values of two sets of obtained results are statistically different from each other. In the three tables below, if PTBO significantly outperforms another algorithm, a ‘ǂ’ is appended to the corresponding result obtained by that algorithm. Correspondingly, ‘ξ’ denotes that PTBO is significantly worse than the other algorithm, and ‘~’ denotes that there is no significant difference between PTBO and the compared algorithm. In the last row of each table, the total numbers of ‘ǂ’, ‘ξ’, and ‘~’ are summarized.

Table 8.

The results of solution accuracy for the uni-modal functions.

Table 9.

The results of solution accuracy for the multimodal functions.

Table 10.

The results of solution accuracy for the composition functions.

(1) The accuracy results of uni-modal functions

The results of the uni-modal functions are shown in Table 8 in terms of the mean optimum solution and the standard deviation of the solutions.

In Table 8, among the five functions, PTBO has yielded the best results on two of them (F03 and F05). Although PTBO is worse than WCA, which obtains the best results on F01, F02, and F04, we observe from the statistical results that the performance of PTBO in uni-modal functions is significantly better than PSO, DE, BA, CS, BSO, WWO, and SMS.

(2) The accuracy results of multimodal functions

From Table 9, it can be observed that PSO achieves the best mean value and standard deviation for the function F15. DE obtains the best results on F07, F09, and F12, and BA has the best result for the function F06. BSO has the best result for the function F16. WCA obtains the best results on F08 and F10. CS, WWO, and SMS do not obtain any best result except for the function F08, on which there is no big difference among the algorithms. The PTBO algorithm performs well for the functions F11, F13, F14, F17, F18, and F20, and according to the data of the last row in Table 9, it can be concluded that the PTBO algorithm has good performance in solution accuracy for multimodal benchmark functions.

(3) The accuracy results of composition functions

It can be seen from Table 10 that DE acquires the best results on F25, and WWO obtains the best results on F26. However, PTBO obtains the best results on F21, F22, F23, F24, F27, and F28. In general, PTBO shows the best overall performance from the statistical results according to the data of the last row in Table 10.

(4) The total results of solution accuracy of the 28 functions

A summary of the total number of ‘ǂ’, ‘ξ’, and ‘~’ about the solution accuracy of 28 functions is given in Table 11. From Table 11, it can be observed that PTBO has the best performance of the nine algorithms.

Table 11.

The total results of solution accuracy for the 28 functions.

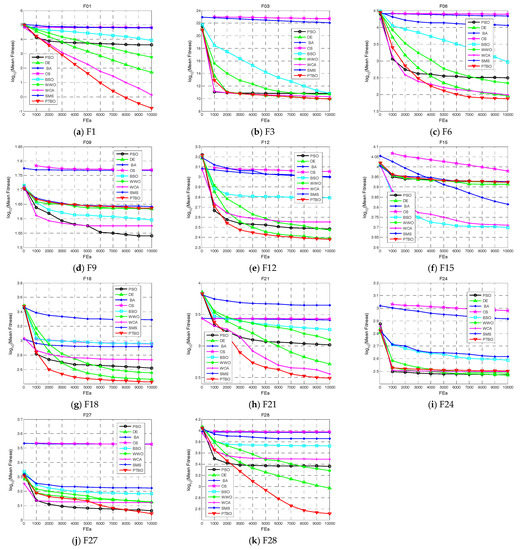

5.4.2. The Comparison Results of Convergence Speed

Because of space limitations, Figure 9 presents the convergence graphs of only some of the 28 test functions, in terms of the mean fitness values achieved by each of the nine algorithms over 50 runs. From Figure 9, it can be observed that PTBO converges towards the optimal values faster than the other algorithms in most cases, i.e., F01, F06, F12, F18, F21, F27, and F28.

Figure 9.

Convergence performance of the compared algorithms on selected functions.

5.4.3. The Comparison Results of Wilcoxon Signed-Rank Test

To compare PTBO statistically with the other eight algorithms, a Wilcoxon signed-rank test [45] was carried out to show the differences between PTBO and each of the other algorithms. Table 12 gives the p-values on every function, obtained by a two-tailed test at a significance level of 0.05 between PTBO and the other algorithms.

Table 12.

Wilcoxon signed-rank test of 28 functions.

From the p-values of the signed-rank test in Table 12, we can observe that PTBO has a significant advantage over seven of the other algorithms: PSO, BA, CS, BSO, WWO, WCA, and SMS. Although PTBO has no significant advantage over DE, it obtains a markedly higher R+ value than DE. R+ is the sum of ranks for the functions on which the first algorithm outperformed the second [46], where the differences are ranked according to their absolute values. According to these statistical results, it can be concluded that PTBO generally offers better performance than the other algorithms across the 28 functions.
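To make the R+ and R− quantities concrete, the following minimal sketch computes the signed-rank sums for two algorithms from their paired per-function errors, together with an approximate two-tailed p-value. It is an illustration under stated assumptions, not the procedure used to produce Table 12: lower error is taken to be better, tied absolute differences share their average rank, the ranks of zero differences are split evenly between the two sums, and the p-value uses the normal approximation without a tie correction. The function names and the sample errors are hypothetical.

```python
import math


def signed_rank_sums(errors_a, errors_b):
    """Return (R+, R-) for paired per-function errors, where lower is better.

    R+ sums the ranks of the functions on which algorithm A beats algorithm B,
    and R- sums the ranks of the functions on which B beats A.
    """
    diffs = [b - a for a, b in zip(errors_a, errors_b)]  # > 0 means A is better
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next absolute difference is tied.
        while (end + 1 < len(order)
               and abs(diffs[order[end + 1]]) == abs(diffs[order[start]])):
            end += 1
        average_rank = (start + end) / 2 + 1  # 1-based average rank of the group
        for k in range(start, end + 1):
            ranks[order[k]] = average_rank
        start = end + 1
    r_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    r_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    # Assumption: ranks of zero differences are split evenly between both sums.
    zero_share = sum(r for d, r in zip(diffs, ranks) if d == 0) / 2
    return r_plus + zero_share, r_minus + zero_share


def two_tailed_p_value(r_plus, r_minus, n):
    """Approximate two-tailed p-value (normal approximation, no tie correction)."""
    statistic = min(r_plus, r_minus)
    mean = n * (n + 1) / 4
    std = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (statistic - mean) / std
    return min(1.0, math.erfc(abs(z) / math.sqrt(2)))


# Made-up mean errors of two algorithms on five functions (illustrative only).
errors_a = [1.0, 2.0, 3.0, 4.0, 5.0]
errors_b = [2.0, 4.0, 2.5, 8.0, 5.0]
r_plus, r_minus = signed_rank_sums(errors_a, errors_b)
p_value = two_tailed_p_value(r_plus, r_minus, len(errors_a))
```

With this convention, R+ and R− always sum to n(n + 1)/2, so a large R+ and a small R− indicate that the first algorithm wins on most functions and by the larger margins. For small n, an exact test is preferable to the normal approximation used in this sketch.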

The above comparisons between PTBO and other nature-based meta-heuristic algorithms may explain why PTBO obtains better results on some optimization problems, and suggest that PTBO may also be able to deal well with more complex problems.

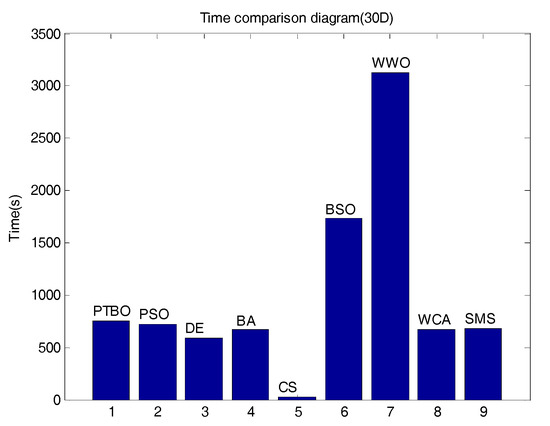

5.4.4. The Comparison Results of Time Complexity

Figure 10 compares the mean time complexity of the nine algorithms over the 28 functions. From Figure 10, we can observe that PTBO is ranked sixth, and is better only than BSO and WWO. Nevertheless, the mean CPU time of PTBO is only slightly worse than that of PSO and DE, which also confirms the analysis in Section 4.1.
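A mean CPU time of this kind can be measured along the following lines. This is a minimal sketch and not the measurement harness behind Figure 10: the helper `mean_cpu_time`, the stand-in optimizer `random_search`, the `sphere` objective, the search range, and the evaluation budget are all assumptions introduced for illustration.

```python
import random
import time


def mean_cpu_time(optimizer, objectives, repeats=3):
    """Average CPU seconds per call of optimizer(objective) over all objectives."""
    total = 0.0
    for objective in objectives:
        for _ in range(repeats):
            start = time.process_time()  # CPU time of this process, not wall-clock time
            optimizer(objective)
            total += time.process_time() - start
    return total / (len(objectives) * repeats)


def random_search(objective, dim=30, evaluations=2000, seed=0):
    """Stand-in optimizer: uniform random sampling in an assumed range [-100, 100]^dim."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(evaluations):
        candidate = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
        best = min(best, objective(candidate))
    return best


def sphere(x):
    """Simple uni-modal test objective used here only as a placeholder."""
    return sum(v * v for v in x)


average_seconds = mean_cpu_time(random_search, [sphere], repeats=2)
```

Using `time.process_time` rather than wall-clock time keeps the measurement less sensitive to other processes running on the machine, which matters when algorithms with similar costs are ranked against each other.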

Figure 10.

The mean central processing unit (CPU) time of the nine compared algorithms. 30D: 30 dimensions.

6. Conclusions

In this work, a new meta-heuristic optimization algorithm simulating the phase transition of elements in a natural system, named PTBO, has been described. Although the proposed PTBO algorithm has some similarities to the SMS and PSO algorithms, its main concepts are different. It is simple and flexible when compared with the existing nature-inspired algorithms. It is also robust, at least for the test problems considered in this work. From the numerical simulation results and comparisons, it is concluded that PTBO can be used for solving uni-modal and multimodal numerical optimization problems, and that its effectiveness and efficiency are comparable to those of other optimization algorithms. It is worth noting that PTBO performs slightly worse than PSO and DE in time complexity. Future research will include (1) developing a more effective division method for the three phases, (2) combining the PTBO algorithm with other evolutionary algorithms, and (3) applying PTBO to real-world optimization problems, such as the reliability–redundancy allocation problem and structural engineering design optimization problems.

Acknowledgments

This research was partially supported by the Industrial Science and Technology Project of the Shaanxi Science and Technology Department (Grant No. 2016GY-088) and the Project of the Department of Education Science Research of Shaanxi Province (Grant No. 17JK0371). In addition, this research was partially supported by the fund of the National Laboratory of Network and Detection Control (Grant No. GSYSJ2016007). The authors declare that the above funding does not lead to any conflict of interest regarding the publication of this manuscript.

Author Contributions

Lei Wang conceived and designed the framework; Zijian Cao performed the experiments and wrote the paper.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- Yang, X.S. Nature-Inspired Optimization Algorithms; Elsevier: Amsterdam, The Netherlands, 2014.

- Beyer, H.G.; Schwefel, H.P. Evolution strategies – A comprehensive introduction. Nat. Comput. 2002, 1, 3–52.

- Fogel, L.J.; Owens, A.J.; Walsh, M.J. Artificial Intelligence through Simulated Evolution; Wiley: New York, NY, USA, 1966.

- Holland, J.H. Adaptation in Natural and Artificial Systems; University of Michigan Press, The MIT Press: London, UK, 1975.

- Garg, H. A hybrid PSO-GA algorithm for constrained optimization problems. Appl. Math. Comput. 2016, 274, 292–305.

- Garg, H. A hybrid GA-GSA algorithm for optimizing the performance of an industrial system by utilizing uncertain data. In Handbook of Research on Artificial Intelligence Techniques and Algorithms; IGI Global: Hershey, PA, USA, 2015; pp. 620–654.

- Koza, J.R. Genetic Programming: On the Programming of Computers by Means of Natural Selection; MIT Press: London, UK, 1992.

- Storn, R.; Price, K. Differential evolution – A simple and efficient heuristic strategy for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- Civicioglu, P. Backtracking search optimization algorithm for numerical optimization problems. Appl. Math. Comput. 2013, 219, 8121–8144.

- Simon, D. Biogeography-based optimization. IEEE Trans. Evolut. Comput. 2008, 12, 702–713.

- Garg, H. An efficient biogeography based optimization algorithm for solving reliability optimization problems. Swarm Evolut. Comput. 2015, 24, 1–10.

- Civicioglu, P. Transforming geocentric cartesian coordinates to geodetic coordinates by using differential search algorithm. Comput. Geosci. 2012, 46, 229–247.

- Rozenberg, G.; Bäck, T.; Kok, J.N. Handbook of Natural Computing; Springer: Berlin/Heidelberg, Germany, 2011.

- Dorigo, M. Optimization, Learning and Natural Algorithms. Ph.D. Thesis, Politecnico di Milano, Milano, Italy, 1992. (In Italian).

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks (ICNN), Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Passino, K.M. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst. 2002, 22, 52–67.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Garg, H.; Rani, M.; Sharma, S.P. An efficient two phase approach for solving reliability–redundancy allocation problem using artificial bee colony technique. Comput. Oper. Res. 2013, 40, 2961–2969.

- Garg, H. Solving structural engineering design optimization problems using an artificial bee colony algorithm. J. Ind. Manag. Optim. 2014, 10, 777–794.

- He, S.; Wu, Q.H.; Saunders, J.R. Group search optimizer: An optimization algorithm inspired by animal searching behavior. IEEE Trans. Evolut. Comput. 2009, 13, 973–990.

- Yang, X.S.; Deb, S. Cuckoo search via Lévy flights. In Proceedings of the IEEE World Congress on Nature & Biologically Inspired Computing (NaBIC 2009), Coimbatore, India, 9–11 December 2009; pp. 210–214.

- Garg, H. An approach for solving constrained reliability-redundancy allocation problems using cuckoo search algorithm. Beni-Suef Univ. J. Basic Appl. Sci. 2015, 4, 14–25.

- Dai, C.H.; Chen, W.R.; Song, Y.H.; Zhu, Y.H. Seeker optimization algorithm: A novel stochastic search algorithm for global numerical optimization. J. Syst. Eng. Electron. 2011, 21, 300–311.

- Yang, X.S.; Gandomi, A.H. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483.

- Askarzadeh, A. Bird mating optimizer: An optimization algorithm inspired by bird mating strategies. Commun. Nonlinear Sci. Numer. Simul. 2014, 19, 1213–1228.

- Shi, Y.H. Brain storm optimization algorithm. In Proceedings of the Second International Conference of Swarm Intelligence, Chongqing, China, 12–15 June 2011; pp. 303–309.

- Shi, Y.H. An optimization algorithm based on brainstorming process. Int. J. Swarm Intell. Res. 2011, 2, 35–62.

- Mirjalili, S. How effective is the Grey Wolf optimizer in training multi-layer perceptrons. Appl. Intell. 2015, 43, 150–161.

- Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Lee, K.S.; Geem, Z.W. A new meta-heuristic algorithm for continuous engineering optimization: Harmony search theory and practice. Comput. Methods Appl. Mech. Eng. 2005, 194, 3902–3933.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm – A novel meta-heuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110, 151–166.

- Shah-Hosseini, H. The intelligent water drops algorithm: A nature-inspired swarm-based optimization algorithm. Int. J. Bio-Inspired Comput. 2009, 1, 71–79.

- Zheng, Y.J. Water wave optimization: A new nature-inspired meta-heuristic. Comput. Oper. Res. 2015, 55, 1–11.

- Cuevas, E.; Echavarría, A.; Ramírez-Ortegón, M.A. An optimization algorithm inspired by the States of Matter that improves the balance between exploration and exploitation. Appl. Intell. 2014, 40, 256–272.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Granville, V.; Krivanek, M.; Rasson, J.P. Simulated annealing: A proof of convergence. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 652–656.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evolut. Comput. 1997, 1, 67–82.

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.P.; Auger, A.; Tiwari, S. Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization. KanGAL Rep. 2005, 2005, 2005005.

- Martin, O.C.; Monasson, R.; Zecchina, R. Statistical mechanics methods and phase transitions in optimization problems. Theor. Comput. Sci. 2001, 265, 3–67.

- Barbosa, V.C.; Ferreira, R.G. On the phase transitions of graph coloring and independent sets. Phys. A Stat. Mech. Appl. 2004, 343, 401–423.

- Mitchell, M.; Newman, M. Complex systems theory and evolution. In Encyclopedia of Evolution; Oxford University Press: New York, NY, USA, 2002; pp. 1–5.

- Metastability. Available online: https://en.wikipedia.org/wiki/Metastability (accessed on 10 January 2017).

- Sondheimer, E.H. The mean free path of electrons in metals. Adv. Phys. 2001, 50, 499–537.

- Liang, J.J.; Qu, B.; Suganthan, P.N.; Hernández-Díaz, A.G. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization; Technical Report, 201212; Computational Intelligence Laboratory, Zhengzhou University: Zhengzhou, China; Nanyang Technological University: Singapore, 2012.

- Gibbons, J.D.; Chakraborti, S. Nonparametric Statistical Inference; Springer: Berlin/Heidelberg, Germany, 2011.

- García, S.; Molina, D.; Lozano, M.; Herrera, F. A study on the use of non-parametric tests for analyzing the evolutionary algorithms’ behaviour: A case study on the CEC’2005 special session on real parameter optimization. J. Heuristics 2009, 15, 617–644.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).