Abstract

This study presents a fine-tuned Large Language Model approach for predicting band gap and stability of transition metal sulfides. Our method processes textual descriptions of crystal structures directly, eliminating the need for complex feature engineering required by traditional ML and GNN approaches. Using a strategically selected dataset of 554 compounds from the Materials Project database, we fine-tuned GPT-3.5-turbo through nine consecutive iterations. Performance metrics improved significantly, with band gap prediction R2 values increasing from 0.7564 to 0.9989, while stability classification achieved F1 > 0.7751. The fine-tuned model demonstrated superior generalization ability compared to both GPT-3.5 and GPT-4.0 models, maintaining high accuracy across diverse material structures. This approach is particularly valuable for new material systems with limited experimental data, as it can extract meaningful features directly from text descriptions and transfer knowledge from pre-training to domain-specific tasks without relying on extensive numerical datasets.

1. Introduction

Owing to the electronic character of transition metal sulfides, these substances hold a central position in catalysis, energy storage, and even photovoltaic technology [1,2]. Their catalytic activity determination, nevertheless, is still plenty of hard work, requiring manual and meticulous handling. Among them, band gap and stability are the most important properties that control the catalytic effect. These two factors control important structural characteristics, such as the development of active centers and the effectiveness of controlling the efficient electron transfer catalyzed by transition metal sulfides [3]. Researchers have recently published developments in the use of machine learning (ML) techniques [4] to predict material properties like band gap [5,6], which can greatly simplify the search for specific materials.

Traditional approaches for investigating transition metal sulfide properties are computational and experimental. Experimentally, the band gap calculations typically include techniques like photoluminescence spectroscopy and UV-visible absorption spectroscopy [7]. However, these experimental techniques are limited to materials that have been synthesized exclusively and not to theoretically conceptualized compounds whose stability could only be predicted theoretically. For computational purposes, Density Functional Theory (DFT) has become the foundation for investigating the electronic structure of materials made up of known as well as theoretically stable compounds. DFT performs well in simulating electronic properties, including band structures and band gaps [8,9]. Its usage in experimental high-throughput material screening is, however, hampered by multiple computational requirements and resource-intensive processes, particularly with complex systems [10].

To solve the computational limitations of DFT, machine learning methods have become a powerful alternative tool, which is capable of accelerating materials property prediction while maintaining reasonable accuracy. Traditional machine learning models such as Random Forest (RF) [11] and Support Vector Machines (SVM) [12,13,14] are usually used to predict band gap and structural stability. Unlike complex physical models, these approaches offer better adaptability and significantly enhance the efficiency of material screening [15,16,17]. However, when dealing with large-scale and high-dimensional data, they face significant challenges and are often difficult to train and interpret. They may be restricted by model and algorithmic architectures for complex tasks, which render generalization capacity weak, require more computational resources, and exhibit unstable performance on unseen data.

The latest advancements in deep learning, especially Graph Neural Networks (GNNs) [18,19,20,21,22], have enhanced feature extraction ability by seamlessly learning representations from the graph structure of materials. This method reduces the reliance on elaborately designed features and is more preferable for heterogeneous material data of different sizes and complexities. In terms of material property prediction, GNN can handle atomic structure diagrams and extract complex relationships between atoms or parts. On the other hand, the construction of GNNs requires a large amount of labeled data, generates high costs related to computing, and often lacks clear and meaningful features, which poses a serious limitation to their wide application in the field of materials science.

Despite these advances, three technical gaps remain unresolved. (i) High-fidelity DFT still dominates the creation of labelled datasets, which limits the chemical diversity that can be covered in a reasonable wall-clock time. (ii) Descriptor-driven ML models—while fast—depend on handcrafted features whose transferability from one crystal family to another is difficult to guarantee. (iii) State-of-the-art GNNs alleviate manual featurization but rely on tens of thousands of labeled structures to avoid over-smoothing and over-squashing, a requirement rarely met in niche catalyst sub-domains such as transition-metal sulfides. These bottlenecks motivate a paradigm that can learn from existing unstructured text materials and operate in a data-efficient regime.

Recent breakthroughs in natural-language processing (NLP) [23] have produced large Language models (LLMs) [24,25] with billions of parameters that can be instruction-tuned to specialized domains. Unlike graph-based models that take atomic coordinates as input, an LLM ingests plain text. It, therefore, becomes possible to exploit the terabytes of crystallographic prose already produced by ICSD entries, Materials Project “structure descriptions”, OQMD [26] notebook annotations, and literature corpora mined by tools such as ChemDataExtractor [27]. By casting each material as a short narrative—automatically generated with robocrystallographer [28] or scraped from papers—LLMs can learn latent structure–property relationships implicit in human language. This text-native formulation removes the need for elaborate descriptor engineering, retains chemical interpretability through attention weights, and can be fine-tuned with only a few hundred labeled samples, as demonstrated in this work.

To our knowledge, prior applications of LLMs in materials science have focused on retrieval or chat-style assistance rather than quantitative property prediction. Here, we take a different route: GPT-3.5-turbo is fine-tuned end-to-end on 554 transition-metal-sulfide entries, each paired with numerically accurate band-gap and thermodynamic-stability labels computed in the Materials Project. This setting allows a controlled comparison against: (i) descriptor-based ML (Random Forest, SVM, XGBoost, LightGBM) and (ii) coordinate-based deep GNNs reported in the literature. Our results show that a text-only LLM, when carefully fine-tuned, can match or exceed the predictive accuracy of these baselines while using <1000 labeled points—two orders of magnitude fewer than typical GNN benchmarks such as MatBench [29].

Integrating cutting-edge NLP techniques with materials science is a notable advancement in the capabilities for predicting materials. Recent developments have enhanced the prediction of structural properties for transformer-based models like AlloyBERT [30] and AMGPT [31]. Successful applications of the newer approaches include band gap prediction and assessing the stability of transition metal sulfides [32,33], which present novel solutions to traditional methods of feature engineering. Attention mechanisms inherent to the implemented transformer architectures [34] assist in interpretability as they enrich descriptions of critical materials and offer deeper understanding regarding the prediction.

Unlike previous studies that relied heavily on numerical feature engineering, this work pioneers the application of fine-tuned LLMs to predict catalytic properties directly from textual material descriptions. Our research goal is to develop an automated workflow to predict target characteristics related to the catalytic activity of transition metal sulfides so that accelerating the discovery of target materials. This study proposes an innovative approach that integrates the Materials Project database [35] API for data collection with the robocrystallographer [28] for feature extraction (an automated tool for generating text descriptions of crystal structures). By using fine-tuned language models, our approach processes text-based representations of materials directly while eliminating the need for extensive preprocessing of both numerical and non-numerical features. The framework accurately predicts the band gap and stability of transition metal sulfides. This dual predictive ability not only deepens the fundamental understanding of these materials but also accelerates the discovery of new stable compounds with ideal electronic properties.

2. Materials and Methods

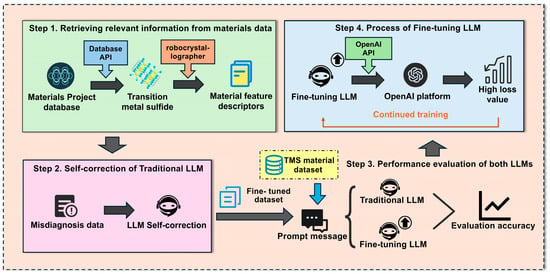

Our research methodology follows a structured workflow for developing and evaluating a domain-specific Large Language Model (LLM) optimized for transition metal sulfide analysis, as illustrated in Figure 1. This comprehensive framework consists of four interconnected steps: In Step 1, data acquisition begins with extracting transition metal sulfide data from the Materials Project database API using specific chemical criteria. The robocrystallographer [28] then converts crystallographic structures into standardized textual descriptions, generating material feature descriptors that capture atomic arrangements, bond properties, and electronic characteristics in natural language format. Step 2 implements a self-correction process for traditional LLMs by identifying and addressing misdiagnosis data through verification protocols that cross-validate property predictions against established computational principles. This ensures training data reliability, which is crucial for specialized scientific domains. Step 3 conducts performance evaluation of both traditional and fine-tuned LLM variants using standardized prompt templates and metrics (, RMSE, F1 score) to quantify differences between general-purpose and domain-specialized models. Step 4 employs iterative fine-tuning on the OpenAl with API version dated 30 May 2024 using supervised learning with structured JSONL format training examples. The process includes progressive multi-iteration training through loss tracking and targeted improvement of high-loss data points. Each iteration aims to minimize loss values while preserving generalization capabilities. This systematic approach enabled the development of a specialized model for predicting catalytic properties of transition metal sulfides with high accuracy, overcoming the limitations of traditional methods in this complex materials domain.

Figure 1.

Flowchart for developing a domain-specific LLM tailored to predict band gap and stability of transition metal sulfides.

2.1. High-Quality Dataset Construction

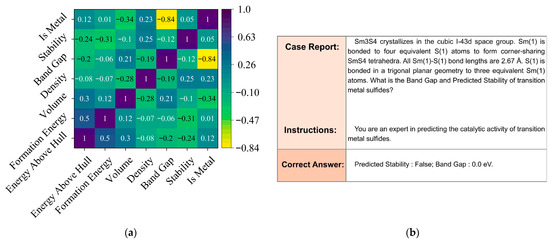

As shown in the first step of Figure 1, we extracted relevant material data from the Materials Project database through its API, while using the robocrystallographer [28] to construct transition metal sulfide structures and generate associated material feature descriptors. The dataset was extracted using specific API parameters: transition metals (Sc-Zn, Y-Cd, La-Hg) combined with sulfur, formation energy below 500 eV/atom, and energy above hull < 150 eV/atom for thermodynamic stability. From 729 initial compounds, we eliminated 175 samples based on rigorous criteria: incomplete electronic structure data (61), unconverged relaxations with forces exceeding 0.05 eV/Å (43), disordered structures with partial occupancies (37), inconsistent band gap calculations between different computational methods (24), and unphysical bond configurations (10). The resulting 554 compounds formed our final dataset for model training and evaluation. In our research, we extend data-efficient approaches into the domain of transition metal sulfide catalysts, building upon established precedents in materials informatics. We adopted Wu et al.’s [36] transfer learning techniques that achieved 40% MAE reduction (from 0.0327 to 0.0204 W/(m·K)) with only 28 homopolymer samples, and implemented Wang et al.’s [37] insight that carefully selected high-quality training data can outperform larger noisy datasets. As illustrated in Figure 2a, our statistical evaluation reveals important feature correlations in transition metal sulfide datasets, where properties like energy above hull, formation energy, and band gap exhibit complex interdependencies that traditional machine learning approaches must navigate. In particular, the Pearson map reveals three illustrative patterns that underscore the complexity of the descriptor space. First, Energy Above Hull is moderately correlated with Formation Energy (r ≈ 0.50) yet anti-correlated with the binary Stability label (r ≈ −0.31); meanwhile, Formation Energy itself shows only a weak link to Stability (r ≈ 0.12). This triangular relationship implies that metastability cannot be inferred from any single energetic metric alone. Second, Band Gap displays a strong negative correlation with the categorical variable Is Metal (r ≈ −0.84), but its correlation with the continuous Density is weak (r ≈ −0.19), indicating that electronic character decouples from simple packing effects. Third, Volume correlates positively with Energy Above Hull (r ≈ 0.30) yet negatively with Band Gap (r ≈ −0.28), suggesting competing steric and electronic influences on lattice expansion. Such cross-dependencies mean that descriptors affecting the same target can interact in opposite ways, creating highly non-linear decision boundaries that motivate our shift from hand-crafted features to representation-learning via large language models. By curating 554 entries and implementing optimized techniques for catalytic materials, we achieved substantial improvements in predictive accuracy (R2 increasing from 0.7564 to 0.9989). Figure 2b demonstrates our fine-tuned model’s capability through a case analysis of SmS4, accurately predicting its band gap (0.0 eV) and predicted stability (False) based on its crystalline structure and bonding characteristics. The research indicates that in catalytic materials science, experimental validation is resource-intensive, property relationships require complex modeling, and strategic data selection can yield better results rather than simply expanding the dataset size, establishing a materials informatics paradigm, and maximizing value from limited but high-quality experimental data.

Figure 2.

(a) Statistical Evaluation of Feature Correlations in Transition Metal Sulfide Datasets for traditional ML; (b) Case Analysis of Transition Metal Sulfide Property Prediction Using Fine-tuned Models.

2.2. Traditional Language Models

Following the second step in Figure 1, we implemented a self-correction process for traditional LLMs to deal with misdiagnosis data, thus enhancing the robustness of the model. For benchmarking, we selected four traditional machine learning algorithms based on their complementary strengths in materials property prediction: RF for handling mixed feature types and robust performance with limited data, SVM for effectiveness with non-linear relationships and class imbalance, Extreme Gradient Boosting (XGBoost) for gradient-boosted performance with regularization capabilities and Light Gradient Boosting (LightGBM) for computational efficiency with comparable accuracy. This comprehensive evaluation addresses a critical challenge in materials science with significant implications for various industrial applications, focusing on band gap and predicted stability as crucial indicators of catalytic potential. To demonstrate that these four methods indeed provide the best accuracy–efficiency trade-off for our dataset, we additionally benchmarked four other popular algorithms (MLP-Regressor, GradientBoosting, k-NN, and Gaussian Process); the complete results are presented in Section S3 of the Supporting Information.

Our data preprocessing phase involved several crucial steps to ensure quality and compatibility, including eliminating irrelevant columns such as ‘Space Group Symbol’ and ‘Crystal System’, applying one-hot encoding to the ‘Formula’ column, and label encoding to the ‘Magnetic Ordering’ column. The ‘Total Magnetization’ column was converted to numeric values with missing entries filled with zeros, while potential infinity values and NaN entries were addressed to maintain data integrity.

Each model was configured with optimized parameters determined through grid search: RF (n_estimators = [100, 200, 300], max_depth = [10, 20, 30], min_samples_split = [2, 5, 10]), SVM (kernel = ‘rbf’, c = 1.0, epsilon = 0.1), XGBoost (learning_rate = 0.1, max_depth = 5, n_estimators = 100) and LightGBM (num_leaves = [20–100], feature_fraction = [0.5–1.0], bagging_fraction = [0.5–1.0], max_depth = [5–20]). All models underwent identical preprocessing with StandardScaler normalization and feature selection through RFE. We benchmarked the number of retained descriptors from 5 to 50 (step = 5) and found that 20 descriptors maximized the cross-validated R2 across all four algorithms while maintaining the simplicity of the model (see Figure S1 and Table S1 in the Supporting Information). The dataset was subsequently divided into training (80%) and testing (20%) sets, with each model undergoing hyperparameter tuning using GridSearchCV with 3-fold cross-validation.

In the evaluation stage, two target variables were treated with different metrics: R2, RMSE, and MSE for band gap prediction (considered a regression task), while accuracy, F1 score, and recall were used for predicted stability classification to evaluate performance more completely. The processes of data preprocessing, feature extraction, and system model optimization constitute an advanced framework for benchmarking classical machine learning algorithms concerning estimating the performance of materials, which sets the standard for future work in materials informatics and catalysis.

2.3. Prompt-Only ChatGPT Approach

We explored prompt-only interaction with large language models as a baseline comparison approach. This technique allows users to leverage simple prompts to effectively interact with LLMs without additional training. In zero-shot scenarios [38], the model generates predictions using only descriptive prompts and pre-existing knowledge. For enhancing performance, we implemented few-shot learning approaches, where the model uses selected examples to improve understanding and response accuracy. We carefully curated diverse sample prompts to maximize model performance across different material types and structural complexities. Our experiments interacted with ChatGPT using OpenAI API keys, employing both GPT-3.5 and GPT-4.0 models for comparative evaluation against our fine-tuned variants.

2.4. Fine-Tuning of Large Language Models

As illustrated in steps 3 and 4 of Figure 1, we conducted a performance evaluation of both traditional and fine-tuned LLM variants, leveraging the transition metal sulfides material dataset to formulate prompt messages and assess evaluation accuracy, followed by an iterative fine-tuning process employing the OpenAI for continued training with the objective of minimizing the loss value. The GPT-3.5-turbo fine-tuning employed a progressive nine-iteration approach (1st-FT through 9th-FT), each using supervised learning with three epochs per iteration. We implemented early stopping with a maximum wait time of 20000 steps to balance convergence and training efficiency. Throughout the process, we continuously monitored performance through comprehensive metrics including training loss, validation loss, and full validation loss, with periodic evaluation on a held-out test set. During both the classical-model benchmarks and the GPT-3.5-turbo fine-tuning, we explicitly minimized task-specific loss functions to guide parameter updates. For the continuous band-gap target the objective was the mean-squared error (MSE), defined as , while for the binary stability label, we minimized the binary cross-entropy (BCE), . Inside the OpenAI supervised-fine-tuning loop, the network weights were updated with the standard token-level negative log-likelihood (NLL), ; after each epoch the generated answers were parsed and their numerical components were evaluated with the same MSE or BCE on the validation set. These objectives were also used as scoring metrics for the GridSearchCV hyper-parameter optimization of the four classical algorithms (RF, SVM, XGBoost, and LightGBM), ensuring a consistent optimization criterion across all models discussed in this work. The training protocol utilized the OpenAI API with custom configuration to ensure optimal model performance. For robust performance tracking, we developed a pattern recognition system that extracted and visualized loss metrics from event logs, enabling precise identification of training trends. For convenience, we later refer to runs 1–3, 4–6, and 7–9 as early, mid, and late blocks because the loss curves display three clearly separated slopes (Section S1). These blocks emerged a posteriori and were not enforced through extra labels or tasks. Our framework also identified high-loss data points (those exceeding a threshold of 0.5 for training loss and 1.0 for validation loss) for targeted improvement in subsequent iterations, because these cut-offs correspond to the 95th percentile of the loss distributions across all nine fine-tuning iterations (see Section S5 in supporting information). As shown in Section S1, loss values decreased substantially across iterations, with the most significant improvements occurring in iterations 4–6 (post hoc observation, no extra objectives were applied in these iterations).

This fine-tuned dataset uses JSONL format, where each entry contains three elements: system, user, and assistant. The user role always contains the following prompt: “What is the band gap and predicted stability of transition metal sulfides?” The assistant role provides the corresponding values for each compound in the following format: {“Band gap: XXX eV; Predicted stability: XXX”}. This standardized structure efficiently trains language models [39] by maintaining consistent question-answer patterns about material properties, enabling clear mapping between queries and catalytic characteristics for each compound.

3. Results and Discussion

3.1. Description of Fine-Tuned Datasets for Transition Metal Sulfides

The study utilized transition metal sulfide data from the Materials Project database [35], covering 49 elements in groups III–XII (including lanthanides and actinides). The initial dataset of 729 entries was refined to 554 through the removal of 175 unstable compounds. The JSONL-formatted dataset contains two key features: band gap (0–3.03 eV) and predicted stability (binary: 0/1). Band gap determines electronic conductivity and charge transfer, while stability reflects operational durability of catalytic activity. The 8:2 training-test split ensures optimal data representation and computational efficiency. The systematic data preparation enhances model performance, generalization, and evaluation accuracy. This dataset establishes a foundation for modeling transition metal sulfide catalytic activity and understanding structure–property relationships.

3.2. Fine-Tuning for Performance Enhancement and Model Iterations

The model underwent nine consecutive fine-tuning iterations (1st-FT to 9th-FT) to optimize band gap prediction and predicted stability classification. Unlike traditional models that require strict numerical formatting and explicit feature engineering, the fine-tuned LLM efficiently processes natural language descriptions from diverse sources (research papers, experimental reports, materials databases), automatically extracting relevant features and applying pre-existing domain knowledge to complex material-property relationships.

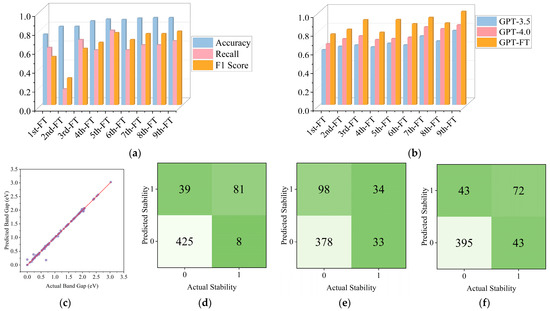

As shown in Figure 3a, the performance metrics (Accuracy, Recall, F1 Score) gradually improved during the iteration process and reached a peak in the final iterations. Figure 3b compares the performance of GPT-3.5, GPT-4.0, and GPT-FT (fine-tuned) on band gap prediction tasks. Table 1 quantitatively demonstrates this performance difference, with GPT-FT achieving an exceptional R2 value of 0.9989, significantly outperforming both GPT-3.5 (R2 = 0.7937) and GPT-4.0 (R2 = 0.8542). This substantial improvement highlights the effectiveness of our domain-specific fine-tuning approach compared to even the most advanced general-purpose language models. GPT-FT consistently outperforms baseline models, with accuracy exceeding 0.8 across iterations and nearing 1.0 after the ninth fine-tuning. Although GPT-4.0 surpassed GPT-3.5, both lagged significantly behind GPT-FT. All models were improved through gradual fine-tuning iterations, and the performance gap narrowed slightly after the seventh and eighth iterations. Figure 3c confirms strong final results as the data points are almost perfectly spaced from the prediction line. Confusion matrices in Figure 3d–f show notable classification advantages for predicted stability: fine-tuned model F1 > 0.7751, GPT-3.5 F1 > 0.5840, and GPT-4.0 F1 > 0.6261. The LLM’s performance is enhanced due to natural language understanding capabilities, reasoning about incomplete information, and capturing long-range property dependencies, unlike traditional models that are bound by rigid frameworks.

Figure 3.

(a) Performance metrics for Predicted Stability; (b) Comparison of Band Gap prediction accuracy across different models; (c) Use a fine-tuned model to predict the prediction accuracy of the Band Gap (purple circles represent individual data points, where each point corresponds to a material with a known actual band gap and the model’s prediction of that band gap, while red line is the ideal prediction line, which represents perfect prediction accuracy); (d) Represent the prediction accuracy of Fine-tuned model for Predicted Stability; (e) Represent the prediction accuracy of GPT-3.5 for Predicted Stability; (f) Represent the prediction accuracy of GPT-4.0 for Predicted Stability.

Table 1.

The value of GPT-3.5, GPT-4.0, and GPT-FT for the fine-tuned dataset.

3.3. Comparative Analysis of Model Prediction Accuracy

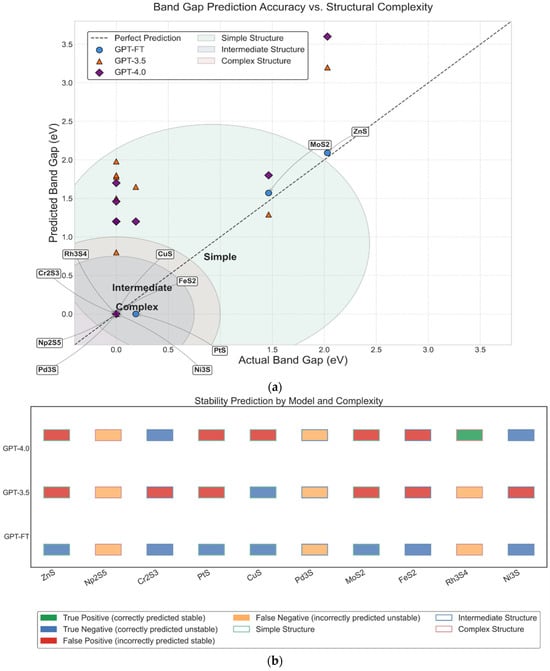

Figure 4 compares the performance of GPT-FT, GPT-3.5, and GPT-4.0 in predicting the band gap and predicted stability of 10 transition metal sulfide compounds with varying structural complexity. In Figure 4a, GPT-FT achieves the best band gap predictions, with the lowest average error (0.035 eV) and accurate identification of all metallic compounds with zero band gap. In contrast, GPT-3.5 and GPT-4.0 exhibit larger errors, frequently misclassifying metallic systems as semiconductors, particularly for compounds with intermediate or complex structures. As shown in Figure 4b, GPT-FT again outperforms the other models in predicting thermodynamic stability, showing minimal errors and strong generalization across structural types. Meanwhile, GPT-3.5 and GPT-4.0 make more frequent misclassifications, especially in complex cases. These consistent performance differences emphasize the advantages of domain-specific fine-tuning in GPT-FT. It can provide a deeper understanding of the physical relationship between structure, electronic properties, and stability, which is lacking in the general model.

Figure 4.

(a) Band gap prediction accuracy of GPT-FT, GPT-3.5, and GPT-4.0 across materials of varying structural complexity; (b) Predicted stability classification performance of GPT-based models on structurally diverse compounds.

Comprehensive performance evaluations were conducted comparing the fine-tuned model against advanced language models and traditional machine learning approaches. The fine-tuned model demonstrated superior data processing flexibility and automated feature extraction capabilities while achieving enhanced performance in band gap prediction and stability classification. This research reveals significant advantages of the fine-tuned model over both advanced language models and traditional machine learning methods. As shown in Figure 3b,c, the optimized GPT-FT achieves band gap prediction R2 values approaching 0.9989, RMSE = 0.0252, MSE = 0.0060, through autonomous feature learning and efficient knowledge transfer across material systems, substantially outperforming GPT-3.5 (0.7937) and GPT-4.0 (0.8542).

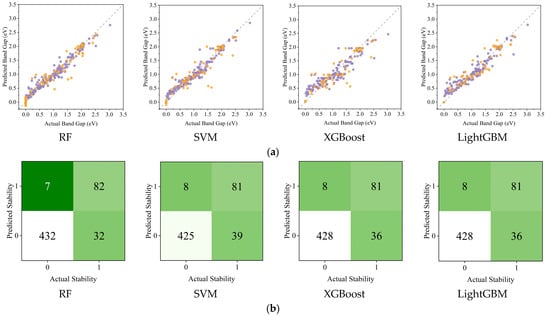

Traditional machine learning models, despite requiring extensive feature engineering and standardized data preprocessing, achieved varying accuracy in band gap predictions for transition metal sulfides. As shown in Table 2, all four traditional algorithms demonstrated strong performance on both training and testing datasets. RF achieved the highest testing R2 value (0.9655) with relatively low RMSE (0.1491), while maintaining consistent performance between training (R2 = 0.9713, RMSE = 0.1077) and testing sets, indicating good generalization. LightGBM showed similar consistency with testing R2 = 0.9592 and RMSE = 0.1623. XGBoost exhibited the lowest training RMSE (0.0842) but slightly higher testing error (0.1867), suggesting some overfitting. SVM showed the largest gap between training and testing performance, with the highest testing RMSE (0.1951) among all models. While traditional models in Figure 5a, including RF (R2 = 0.9660), LightGBM (R2 = 0.9592), XGBoost (R2 = 0.9460), and SVM (R2 = 0.9410), demonstrate competent performance through manual feature engineering, they lack adaptive knowledge transfer capabilities. Figure 5b demonstrates the fine-tuned model’s advantage in stability prediction, maintaining F1 > 0.7751 despite using a compact training dataset, which is crucial for materials research with limited data availability. This adaptive framework allows continuous model improvement through incremental data integration while preserving high performance metrics, eliminating the need for complete model rebuilding common in traditional approaches.

Table 2.

The training set results and testing set results of RF, SVM, XGBoost, and LightGBM for the traditional dataset.

Figure 5.

(a) Experiment and predict band gap values on training and test sets by four major traditional ML algorithms (the purple points represent the training set data, while the orange points represent the test set data); (b) Prediction accuracy of traditional ML algorithms for Predicted Stability.

3.4. Analysis of Prediction Patterns and Model Improvement

Analysis of prediction failures reveals systematic patterns influenced by material characteristics. Through nine iterative fine-tuning iterations, the GPT-FT model showed remarkable improvement, with band gap prediction R2 values increasing from 0.7564 in early iterations to the final 0.9989. This domain-specific optimization proved essential for materials science predictions, conclusively demonstrating that general AI models have limitations in scientific tasks without specialized training. These findings underscore the critical importance of transfer learning for addressing complex materials science challenges, particularly when dealing with novel compound classes constrained by limited experimental data. Through careful data selection and refined techniques for catalytic materials, we have developed an effective approach for materials informatics that efficiently utilizes information from limited but high-quality experimental datasets.

3.5. Advantages of Fine-Tuning LLMs for Materials Science Applications

Our experimental results demonstrate clear advantages of fine-tuned language models for transition metal sulfide analysis beyond original performance metrics [40]. The model performs well in problems that are difficult to solve by traditional methods, such as interpreting complex crystallographic information, recognizing relationships between structural features and properties, and transferring knowledge from general materials science to specific transition metal sulfides applications. Unlike traditional models that require complete retraining, our approach enables incremental learning and shows flexibility to data variability commonly found in materials science.

The prediction accuracy is affected by compound structural complexity, and the more complex the system is, the greater the challenge to the prediction accuracy. This model provides better interpretability by identifying the attention mechanism of important structural features, which establishes a foundation for automated materials discovery systems that can accelerate catalyst development through efficient screening [41].

4. Conclusions

This research explores LLM fine-tuning for the catalytic activity prediction of transition metal sulfides as an alternative to manual feature prediction and adopts a compact dataset to optimize the fine-tuning process. The fine-tuned GPT-3.5-turbo model achieved R2 values of 0.9989 for band gap predictions and an F1 Score > 0.7751 for stability predictions. In performance comparison, the fine-tuned model compares with other machine learning methods: RF (R2 = 0.9660, F1 score = 0.8079), SVM (R2 = 0.9410, F1 score = 0.7751), XGBoost (R2 = 0.9460, F1 score = 0.7864), and LightGBM (R2 = 0.9592, F1 score = 0.7864). Although it has demonstrated promising capabilities in automatic feature extraction and transfer learning, the performance of this model mainly depends on the quality and completeness of the training data, especially for complex material systems with limited experimental data. This model currently has the characteristics of direct text data processing, cross-domain knowledge transfer, automatic feature recognition, and stability of material system prediction. However, there are still challenges in effectively integrating multimodal data sources (including experimental images, spectral data, and molecular structures). It is worth noting that although a compact dataset was strategically chosen to optimize fine-tuning efficiency and reduce experimental noise, this approach does not limit the model’s capabilities, as the architecture can easily adapt to dataset expansion and seamlessly integrate new data during subsequent training. Looking forward, the development focus should be concentrated on enhancing multimodal data integration capabilities, developing more effective fine-tuning strategies for different material systems, establishing automated verification protocols, standardizing materials science data formats, and a collaborative platform for continuous model improvement.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/ma18163793/s1, Section S1: Model Consistency Improvement Over Training Iterations. Section S2: Code of Models. Section S3: Benchmark results of eight machine-learning algorithms on the transition-metal sulfide dataset. Section S4. (1) Figure S1. The influence of the number of selected features based on Recursive Feature Elimination (RFE) on the cross-validation values of four models; (2) Table S1. Cross-validation values (average ± standard deviation) of the four models under different feature quantities. Section S5. Impact of Loss-Threshold Settings on Model Performance and Outlier Coverage.

Author Contributions

Conceptualization, Z.Z. and L.H.; data curation, Z.Z.; supervision, H.W.; writing—original draft, Z.Z.; methodology, L.H.; validation, Z.Z.; writing—review and editing, Z.Z. and H.W.; project administration, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article/Supplementary Materials. Further inquiries can be directed to the corresponding author.

Acknowledgments

We thank Guozhu Jia for his constructive suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Kajana, T.; Pirashanthan, A.; Velauthapillai, D.; Yuvapragasam, A.; Yohi, S.; Ravirajan, P.; Senthilnanthanan, M. Potential transition and post-transition metal sulfides as efficient electrodes for energy storage applications: Review. RSC Adv. 2022, 12, 18041–18062. [Google Scholar] [CrossRef]

- Kharboot, L.H.; Fadil, N.A.; Abu Bakar, T.A.; Najib, A.S.M.; Nordin, N.H.; Ghazali, H. A Review of Transition Metal Sulfides as Counter Electrodes for Dye-Sensitized and Quantum Dot-Sensitized Solar Cells. Materials 2023, 16, 2881. [Google Scholar] [CrossRef] [PubMed]

- Wu, G.; Zelenay, P. Activity versus stability of atomically dispersed transition-metal electrocatalysts. Nat. Rev. Mater. 2024, 9, 643–656. [Google Scholar] [CrossRef]

- Jiang, X.; Fu, H.; Bai, Y.; Jiang, L.; Zhang, H.; Wang, W.; Yun, P.; He, J.; Xue, D.; Lookman, T.; et al. Interpretable Machine Learning Applications: A Promising Prospect of AI for Materials. Adv. Funct. Mater. 2025. [CrossRef]

- Wang, T.; Zhang, K.; Thé, J.; Yu, H. Accurate prediction of band gap of materials using stacking machine learning model. Comput. Mater. Sci. 2022, 201, 110899. [Google Scholar] [CrossRef]

- Noor, N.; Iqbal, M.W.; Zelai, T.; Mahmood, A.; Shaikh, H.; Ramay, S.M.; Al-Masry, W. Analysis of direct band gap A2ScInI6 (A = Rb, Cs) double perovskite halides using DFT approach for renewable energy devices. J. Mater. Res. Technol. 2021, 13, 2491–2500. [Google Scholar] [CrossRef]

- Masood, H.; Sirojan, T.; Toe, C.Y.; Kumar, P.V.; Haghshenas, Y.; Sit, P.H.-L.; Amal, R.; Sethu, V.; Teoh, W.Y. Enhancing prediction accuracy of physical band gaps in semiconductor materials. Cell Rep. Phys. Sci. 2023, 4, 101555. [Google Scholar] [CrossRef]

- Singh, P. Density-functional theory of material design: Fundamentals and applications-I. Oxf. Open Mater. Sci. 2020, 1, itab018. [Google Scholar] [CrossRef]

- Guillén, C. Band Gap Energy and Lattice Distortion in Anatase TiO2 Thin Films Prepared by Reactive Sputtering with Different Thicknesses. Materials 2025, 18, 2346. [Google Scholar] [CrossRef]

- Schleder, G.R.; Padilha, A.C.M.; Acosta, C.M.; Costa, M.; Fazzio, A. From DFT to machine learning: Recent approaches to materials science—A review. J. Phys. Mater. 2019, 2, 032001. [Google Scholar] [CrossRef]

- Stoll, A.; Benner, P. Machine learning for material characterization with an application for predicting mechanical properties. GAMM-Mitteilungen 2021, 44, e202100003. [Google Scholar] [CrossRef]

- Rajan, A.C.; Mishra, A.; Satsangi, S.; Vaish, R.; Mizuseki, H.; Lee, K.-R.; Singh, A.K. Machine-learning-assisted accurate band gap predictions of functionalized mxene. Chem. Mater. 2018, 30, 4031–4038. [Google Scholar] [CrossRef]

- Sabagh Moeini, A.; Shariatmadar Tehrani, F.; Naeimi-Sadigh, A. Machine learning-enhanced band gaps prediction for low-symmetry double and layered perovskites. Sci Rep. 2024, 14, 26736. [Google Scholar] [CrossRef] [PubMed]

- Chan, C.H.; Sun, M.; Huang, B. Application of machine learning for advanced material prediction and design. EcoMat 2022, 4. [Google Scholar] [CrossRef]

- Jain, A. Machine learning in materials research: Developments over the last decade and challenges for the future. Curr. Opin. Solid State Mater. Sci. 2024, 33, 101189. [Google Scholar] [CrossRef]

- Kalutharage, C.S.; Liu, X.; Chrysoulas, C. Explainable AI and Deep Autoencoders Based Security Framework for IoT Network Attack Certainty (Extended Abstract). In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer Science and Business Media Deutschland GmbH: Berlin, Germany, 2022; pp. 41–50. [Google Scholar]

- Zhang, L.; Zhang, H.; Ji, B.; Liu, L.; Liu, X.; Chen, D. Application of Machine Learning in Amorphous Alloys. Materials 2025, 18, 1771. [Google Scholar] [CrossRef] [PubMed]

- Taniguchi, T.; Hosokawa, M.; Asahi, T. Graph Comparison of Molecular Crystals in Band Gap Prediction Using Neural Networks. ACS Omega 2023, 8, 39481–39489. [Google Scholar] [CrossRef]

- Zhao, Z.; Li, H.-F. Investigating Material Interface Diffusion Phenomena through Graph Neural Networks in Applied Materials. ACS Appl. Mater. Interfaces 2024, 16, 53153–53162. [Google Scholar] [CrossRef]

- Li, Y.; Gupta, V.; Kilic, M.N.T.; Choudhary, K.; Wines, D.; Liao, W.K.; Choudhary, A.; Agrawal, A. Hybrid-LLM-GNN: Integrating large language models and graph neural networks for enhanced materials property prediction. Digit. Discov. 2024, 4, 376–383. [Google Scholar] [CrossRef]

- Hu, L.; Zhou, Z.; Jia, G. A one-shot automated framework based on large language model and AutoML: Accelerating the design of porous carbon materials and carbon capture optimization. Sep. Purif. Technol. 2025, 376, 133487. [Google Scholar] [CrossRef]

- Omee, S.S.; Louis, S.-Y.; Fu, N.; Wei, L.; Dey, S.; Dong, R.; Li, Q.; Hu, J. Scalable deeper graph neural networks for high-performance materials property prediction. Patterns 2022, 3, 100491. [Google Scholar] [CrossRef]

- Zhou, M.; Duan, N.; Liu, S.; Shum, H.-Y. Progress in Neural NLP: Modeling, Learning, and Reasoning. Engineering 2020, 6, 275–290. [Google Scholar] [CrossRef]

- Annepaka, Y.; Pakray, P. Large language models: A survey of their development, capabilities, and applications. Knowl. Inf. Syst. 2024, 67, 2967–3022. [Google Scholar] [CrossRef]

- Xi, Z.; Chen, W.; Guo, X.; He, W.; Ding, Y.; Hong, B.; Zhang, M.; Wang, J.; Jin, S.; Zhou, E.; et al. The rise and potential of large language model based agents: A survey. Sci. China Inf. Sci. 2025, 68, 121101. [Google Scholar] [CrossRef]

- Saal, J.E.; Kirklin, S.; Aykol, M.; Meredig, B.; Wolverton, C. Materials design and discovery with high-throughput density functional theory: The open quantum materials database (OQMD). JOM 2013, 65, 1501–1509. [Google Scholar] [CrossRef]

- Kumar, P.; Kabra, S.; Cole, J.M. A Database of Stress-Strain Properties Auto-generated from the Scientific Literature using ChemDataExtractor. Sci. Data 2024, 11, 1273. [Google Scholar] [CrossRef]

- Ganose, A.M.; Jain, A. Robocrystallographer: Automated crystal structure text descriptions and analysis. MRS Commun. 2019, 9, 874–881. [Google Scholar] [CrossRef]

- Dunn, A.; Wang, Q.; Ganose, A.; Dopp, D.; Jain, A. Benchmarking materials property prediction methods: The Matbench test set and Automatminer reference algorithm. NPJ Comput. Mater. 2020, 6, 138. [Google Scholar] [CrossRef]

- Chaudhari, A.; Guntuboina, C.; Huang, H.; Farimani, A.B. AlloyBERT: Alloy property prediction with large language models. Comput. Mater. Sci. 2024, 244, 113256. [Google Scholar] [CrossRef]

- Chandrasekhar, A.; Chan, J.; Ogoke, F.; Ajenifujah, O.; Barati Farimani, A. AMGPT: A large language model for contextual querying in additive manufacturing. Addit. Manuf. Lett. 2024, 11, 100232. [Google Scholar] [CrossRef]

- Liu, H.; Xu, L.; Ma, Z.; Li, Z.; Li, H.; Zhang, Y.; Zhang, B.; Wang, L.-L. Accurate prediction of semiconductor bandgaps based on machine learning and prediction of bandgaps for two-dimensional heterojunctions. Mater. Today Commun. 2023, 36, 106578. [Google Scholar] [CrossRef]

- Guo, Y.; Park, T.; Yi, J.W.; Henzie, J.; Kim, J.; Wang, Z.; Jiang, B.; Bando, Y.; Sugahara, Y.; Tang, J.; et al. Nanoarchitectonics for Transition-Metal-Sulfide-Based Electrocatalysts for Water Splitting. Adv. Mater. 2019, 31, 1807134. [Google Scholar] [CrossRef]

- Huang, H.; Magar, R.; Xu, C.; Farimani, A.B. Materials Informatics Transformer: A Language Model for Interpretable Materials Properties Prediction. arXiv 2023, arXiv:2308.16259. [Google Scholar] [CrossRef]

- Jain, A.; Ong, S.P.; Hautier, G.; Chen, W.; Richards, W.D.; Dacek, S.; Cholia, S.; Gunter, D.; Skinner, D.; Ceder, G.; et al. Commentary: The materials project: A materials genome approach to accelerating materials innovation. APL Mater. 2013, 1, 011002. [Google Scholar] [CrossRef]

- Wu, S.; Kondo, Y.; Kakimoto, M.-A.; Yang, B.; Yamada, H.; Kuwajima, I.; Lambard, G.; Hongo, K.; Xu, Y.; Shiomi, J.; et al. Machine-learning-assisted discovery of polymers with high thermal conductivity using a molecular design algorithm. NPJ Comput. Mater. 2019, 5, 5. [Google Scholar] [CrossRef]

- Wang, Z.; Zhu, H.; Dong, Z.; He, X.; Huang, S.L. Less Is Better: Unweighted Data Subsampling via Influence Function. Proceedings of the AAAI Conference on Artificial Intelligence. 2020, 34, 6340–6347. [Google Scholar] [CrossRef]

- Xian, Y.; Lorenz, T.; Schiele, B.; Akata, Z. Feature Generating Networks for Zero-Shot Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- Himanen, L.; Geurts, A.; Foster, A.S.; Rinke, P. Data-Driven Materials Science: Status, Challenges, and Perspectives. Adv. Sci. 2019, 6. [Google Scholar] [CrossRef] [PubMed]

- Ramos, M.C.; Collison, C.J.; White, A.D. A Review of Large Language Models and Autonomous Agents in Chemistry. Chem. Sci. 2024, 16, 2514–2572. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).