1. Introduction

Corrosion is one of the most significant challenges faced by metallic structures in marine engineering [1,2,3]. In marine environments, metal structures such as ships, offshore platforms, and subsea pipelines are particularly vulnerable to corrosion, and corrosion-induced failures can result in severe safety hazards and substantial economic losses [4,5,6]. Consequently, developing effective corrosion prediction methods is essential to enhance the safety and durability of marine structures.

Marine corrosion is a complex physical process influenced by both material properties and dynamic marine conditions [7,8,9]. As the demand for higher prediction accuracy increases, machine learning models have emerged as the primary approach for corrosion prediction [10,11]. However, the high time and economic costs of corrosion measurements often result in datasets with small sample sizes [12,13,14]. For small-sample prediction of the marine corrosion of metals, current machine learning methods face two key challenges: (1) optimizing model hyperparameters and ensuring generalization ability; and (2) overcoming the limits on prediction performance imposed by the sparsity of the small sample space.

In terms of model optimization, the hyperparameters of machine learning models largely determine their generalization ability and stability. In small-sample corrosion prediction, commonly used machine learning algorithms include partial least squares (PLS) [15,16], support vector regression (SVR) [17,18,19], Gaussian process regression (GPR) [20,21], artificial neural networks (ANNs) [22,23,24], and ensemble learning models [8,21,25,26]. These algorithms perform well in corrosion prediction modeling because they can capture complex relationships between input features and corrosion outcomes. However, their performance on small-sample problems is highly sensitive to hyperparameters, so efficient hyperparameter optimization is crucial [27,28]. Traditional hyperparameter optimization methods, such as grid search and random search, are often inefficient in high-dimensional, small-sample settings: they can become computationally expensive and are prone to being trapped in local optima, which reduces their effectiveness [29,30]. In contrast, evolutionary algorithms, such as genetic algorithms, are well suited to large-scale, high-dimensional optimization problems. Their ability to search for global optima and to handle complex, non-linear optimization landscapes makes them strong candidates for improving model selection [31,32,33]. Although evolutionary algorithms have gradually been applied in regression prediction, there is considerable potential to extend their use to small-sample marine corrosion prediction.

Sample space sparsity is the primary constraint on further improving the accuracy of small-sample corrosion prediction. Virtual sample generation (VSG), an effective soft computing technique, plays a key role in addressing the sparsity of high-dimensional samples [34,35,36]. Starting from a small number of real samples, VSG generates new virtual samples based on statistical models or algorithms, thereby expanding the dataset and improving the performance of prediction models [37]. Current VSG techniques fall into four main categories: noise injection-based, sample distribution-based, feature mapping-based, and generative adversarial network (GAN)-based methods. Noise injection-based methods typically generate new virtual sample inputs by adding random or white noise to the original samples [38,39]. Sample distribution-based methods estimate the probability distribution of the original inputs and generate new virtual samples by randomly sampling from this estimated distribution [34,40,41,42]. Feature mapping-based methods are analogous to sampling approaches, but they first project the original features into a specific feature space and then interpolate or sample within this transformed space to generate virtual samples [43,44,45]. GAN-based methods train a generator and a discriminator on the original dataset; virtual samples are then generated by sampling vectors from the noise space and feeding them into the generator. Common variants of this approach include the Conditional GAN (CGAN) and the Regression GAN (RegGAN) [46,47,48,49,50]. Despite the advances VSG has made in soft computing, its application to marine corrosion prediction remains considerably underdeveloped. Sutojo et al. [14] proposed a linear interpolation-based VSG method, which was validated in assessing corrosion inhibitor performance. Shen and Qian [51] used a Gaussian mixture model (GMM) for VSG and applied it to the degradation of rubber materials, significantly improving the accuracy of aging models. These studies underscore the potential of VSG for small-sample corrosion prediction. However, further work is needed to validate the effectiveness of virtual samples, particularly with regard to the multi-modal distribution characteristics inherent in marine corrosion processes.
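The two simplest VSG families mentioned above can be illustrated in a few lines. The following Python sketch is illustrative only: it shows noise injection (Gaussian noise scaled by a hypothetical fraction `scale` of each feature's standard deviation) and linear interpolation between random pairs of real samples, in the spirit of interpolation-based VSG. The function names and parameters are our own assumptions and do not reproduce the cited methods exactly.

```python
import random

def noise_injection(samples, n_virtual, scale=0.05, seed=0):
    """Noise injection-based VSG: perturb randomly chosen real samples with Gaussian noise.

    `scale` (hypothetical) is the noise level as a fraction of each feature's
    standard deviation in the real data.
    """
    rng = random.Random(seed)
    n, n_features = len(samples), len(samples[0])
    means = [sum(s[j] for s in samples) / n for j in range(n_features)]
    stds = [(sum((s[j] - means[j]) ** 2 for s in samples) / n) ** 0.5 for j in range(n_features)]
    virtual = []
    for _ in range(n_virtual):
        base = rng.choice(samples)  # pick a real sample to perturb
        virtual.append([x + rng.gauss(0.0, scale * sd) for x, sd in zip(base, stds)])
    return virtual

def linear_interpolation(samples, n_virtual, seed=0):
    """Interpolation-based VSG: draw points on segments between random pairs of real samples."""
    rng = random.Random(seed)
    virtual = []
    for _ in range(n_virtual):
        a, b = rng.sample(samples, 2)
        t = rng.random()  # position along the segment from a to b, in [0, 1)
        virtual.append([ai + t * (bi - ai) for ai, bi in zip(a, b)])
    return virtual
```

Interpolated points always lie inside the per-feature range of the real data, whereas noise injection can step slightly outside it; neither can represent regions of the input space that the real samples do not already hint at.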

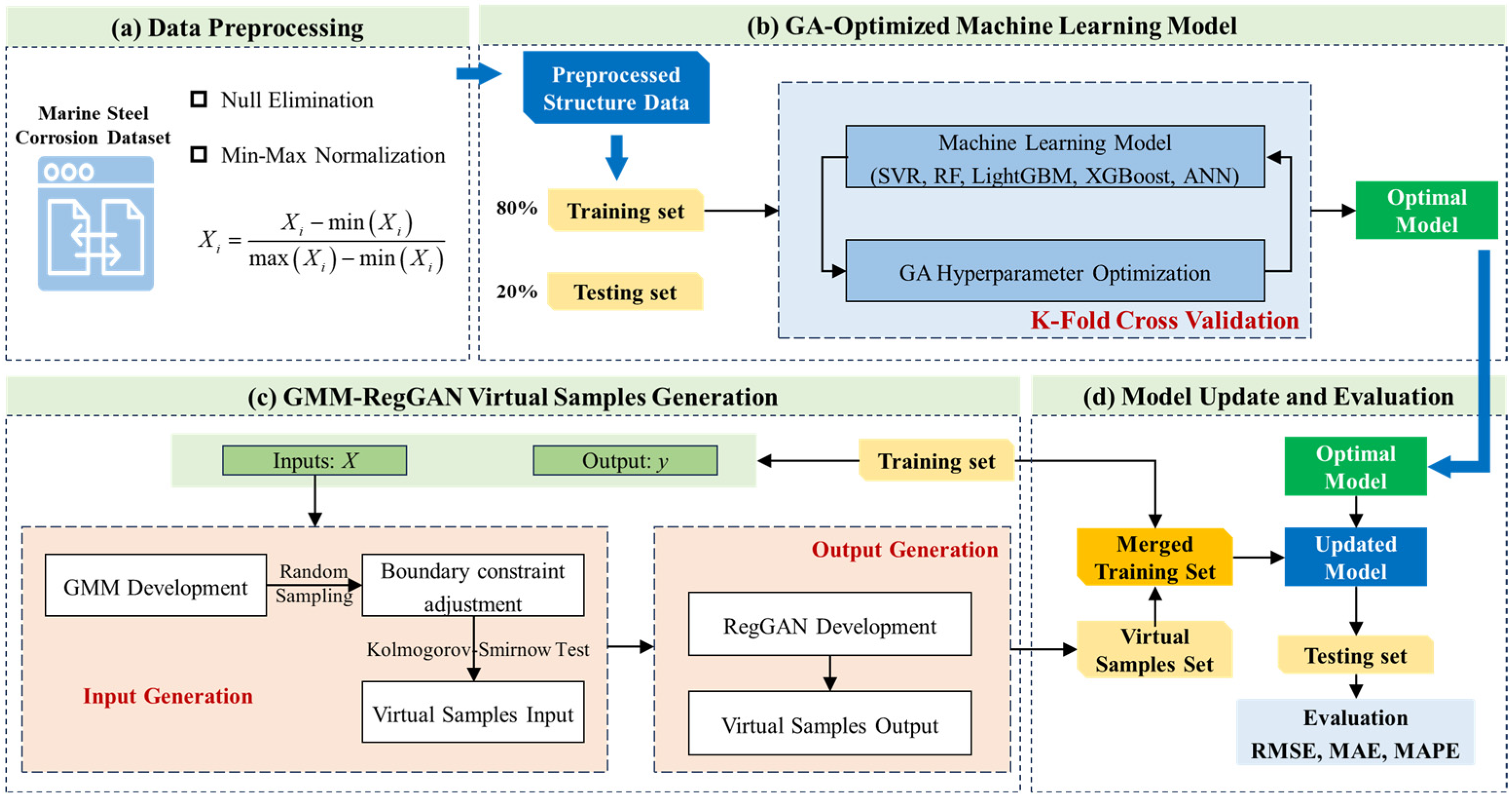

Therefore, we propose an integrated machine learning framework for marine steel corrosion prediction that addresses two fundamental challenges of small-sample scenarios: model generalization and predictive accuracy. The framework combines GA-XGBoost and GMM-RegGAN. First, a genetic algorithm (GA) is used to optimize the hyperparameters of the XGBoost model, enhancing its generalization capability. Second, a VSG method based on Gaussian mixture models and regression generative adversarial networks (GMM-RegGAN) is used to alleviate sample space sparsity and improve predictive performance. Through this integration, the proposed model aims to enable accurate and robust prediction of marine steel corrosion under limited data conditions. The proposed method was validated on the small-sample marine steel corrosion dataset collected in this study, where it improved predictive accuracy.

4. Results

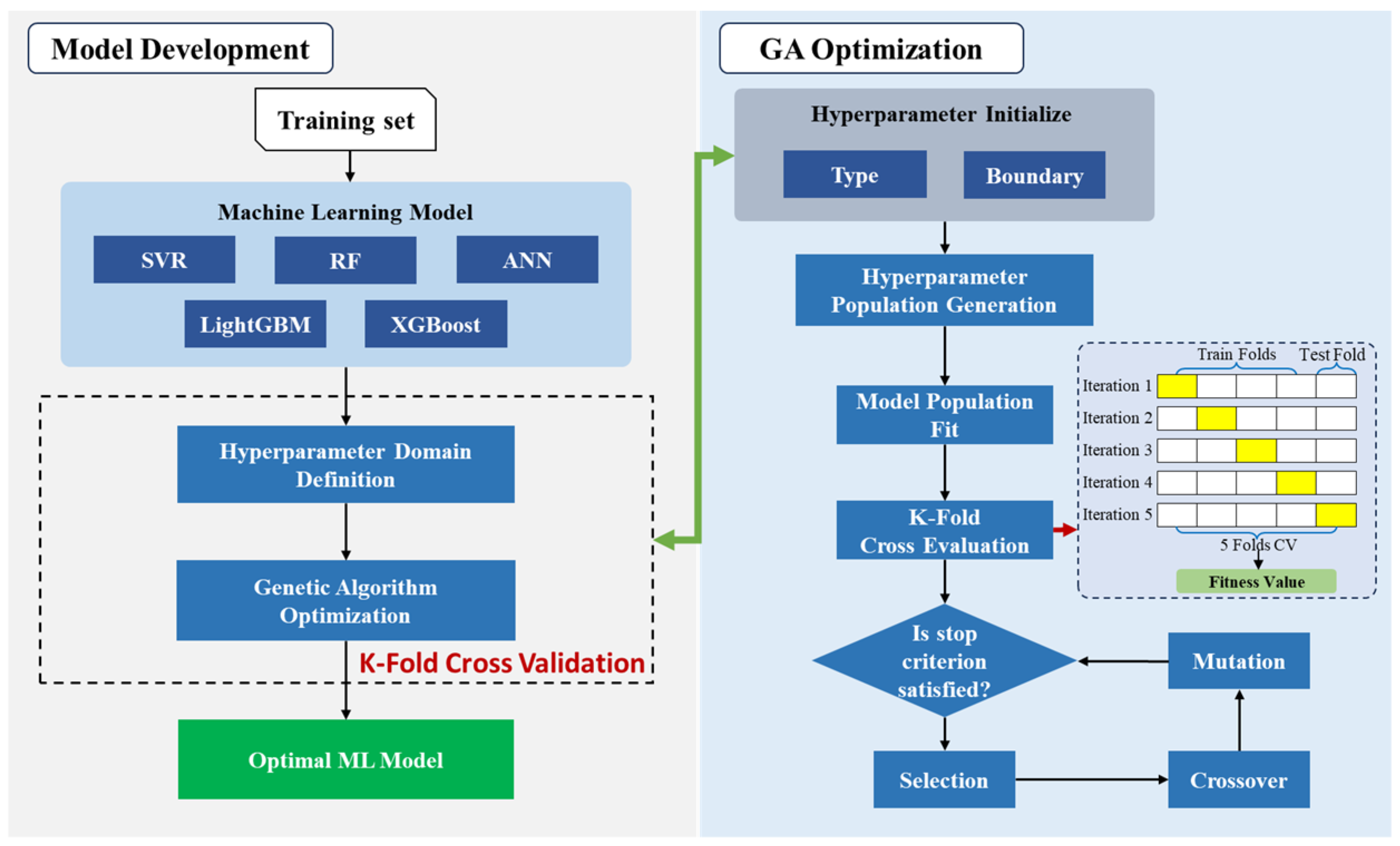

4.1. GA-Optimized Machine Learning Model Development and Validation

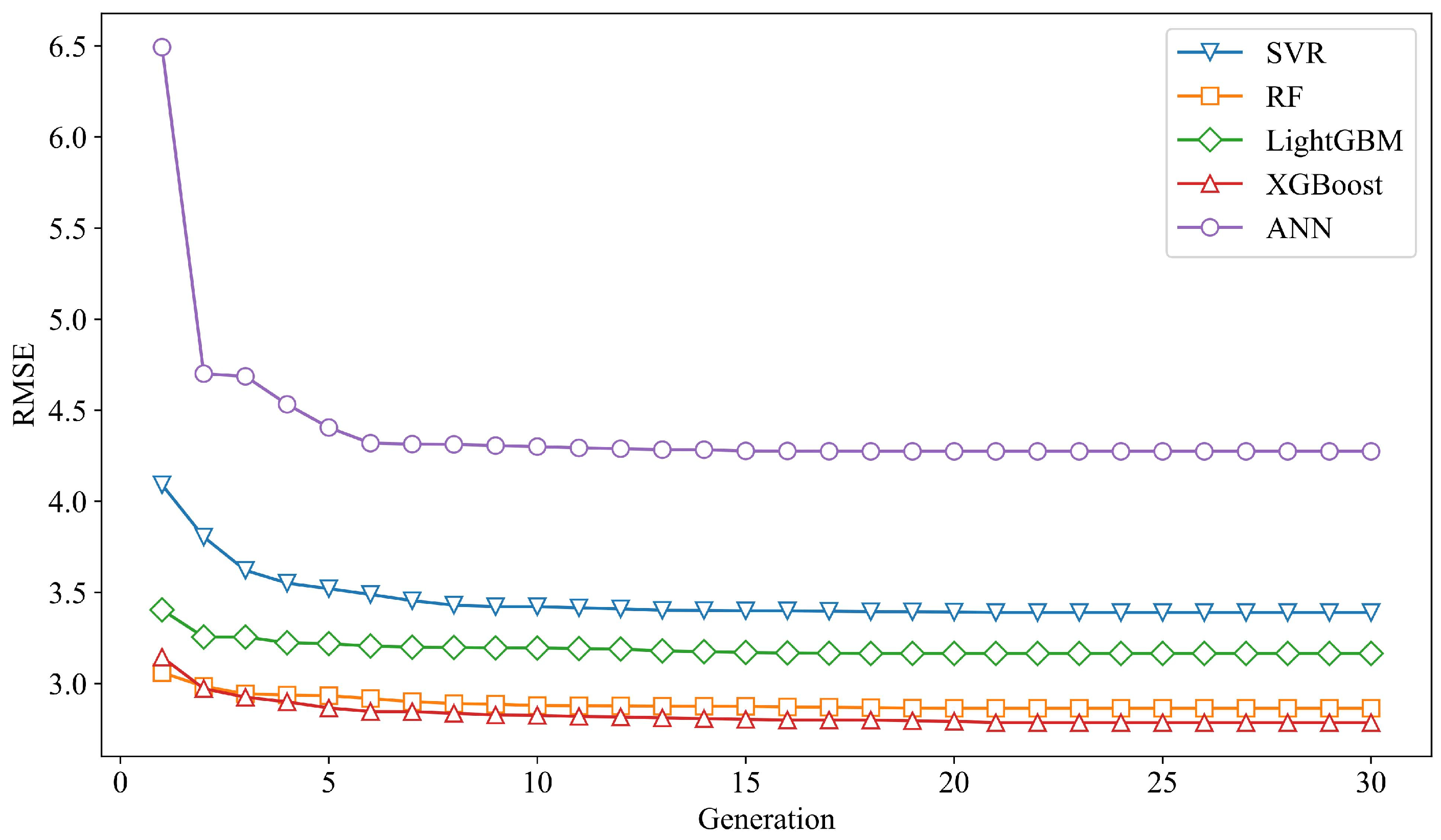

The marine steel corrosion dataset was divided into a training set (80%) and a test set (20%). Following the GA-based machine learning optimization framework proposed in Section 3.2, we developed and fine-tuned five classical machine learning algorithms: SVR, random forest (RF), LightGBM, XGBoost, and ANN. During hyperparameter optimization, 5-fold cross-validation was used to evaluate model performance, with the root mean square error (RMSE) as the optimization objective. The performance of the five algorithms over the course of GA optimization is illustrated in Figure 5.

As shown in the figure, the RMSE values of all five models decrease rapidly during the initial generations of the GA iterations, followed by a gradual deceleration in the rate of decline. With continued iterations, the RMSE values of the five models progressively stabilize and eventually converge.
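A minimal sketch of GA-based hyperparameter search with a 5-fold cross-validated RMSE objective is given below. To keep it self-contained, a one-dimensional Gaussian kernel (Nadaraya-Watson) regressor with a single bandwidth hyperparameter `h` stands in for XGBoost, and the GA operators (binary tournament selection, arithmetic crossover, Gaussian mutation, elitism) and all settings are assumptions rather than the configuration of Section 3.2.

```python
import math
import random

def kfold_indices(n, k=5, seed=0):
    """Split indices 0..n-1 into k shuffled folds (fixed seed, so every candidate sees the same folds)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def kernel_predict(x_train, y_train, x_query, h):
    """Nadaraya-Watson regression with a Gaussian kernel of bandwidth h (stand-in for XGBoost)."""
    preds = []
    for xq in x_query:
        w = [math.exp(-0.5 * ((xq - xt) / h) ** 2) for xt in x_train]
        s = sum(w)
        if s > 0:
            preds.append(sum(wi * yi for wi, yi in zip(w, y_train)) / s)
        else:
            preds.append(sum(y_train) / len(y_train))  # fall back to the training mean
    return preds

def cv_rmse(params, x, y, k=5):
    """Mean RMSE over k folds for the hyperparameter vector params = [h]."""
    h = params[0]
    rmses = []
    for test_idx in kfold_indices(len(x), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(x)) if i not in held_out]
        pred = kernel_predict([x[i] for i in train_idx], [y[i] for i in train_idx],
                              [x[i] for i in test_idx], h)
        mse = sum((p - y[i]) ** 2 for p, i in zip(pred, test_idx)) / len(test_idx)
        rmses.append(math.sqrt(mse))
    return sum(rmses) / k

def genetic_search(fitness, bounds, pop_size=20, generations=30, mut_rate=0.2, seed=0):
    """Minimize fitness over a box; returns (best_params, best_fitness, best_per_generation)."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scored = sorted(((fitness(ind), ind) for ind in pop), key=lambda t: t[0])
        history.append(scored[0][0])

        def tournament():
            a, b = rng.sample(scored, 2)  # binary tournament selection
            return a[1] if a[0] <= b[0] else b[1]

        children = [scored[0][1]]  # elitism: the best individual survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            alpha = rng.random()
            child = [alpha * g1 + (1 - alpha) * g2 for g1, g2 in zip(p1, p2)]  # arithmetic crossover
            child = [min(max(g + rng.gauss(0, 0.1 * (hi - lo)), lo), hi) if rng.random() < mut_rate else g
                     for g, (lo, hi) in zip(child, bounds)]  # Gaussian mutation, clipped to bounds
            children.append(child)
        pop = children
    best = min(pop, key=fitness)
    return best, fitness(best), history
```

For example, `genetic_search(lambda p: cv_rmse(p, x, y), [(0.05, 5.0)])` tunes `h` on data `x`, `y`. Because the best individual is carried over unchanged and the fold split is fixed, the best cross-validated RMSE per generation can never increase, which gives the fast-then-flattening convergence pattern described above. With a real model, `fitness` would wrap cross-validation of the model over a vector of hyperparameters.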

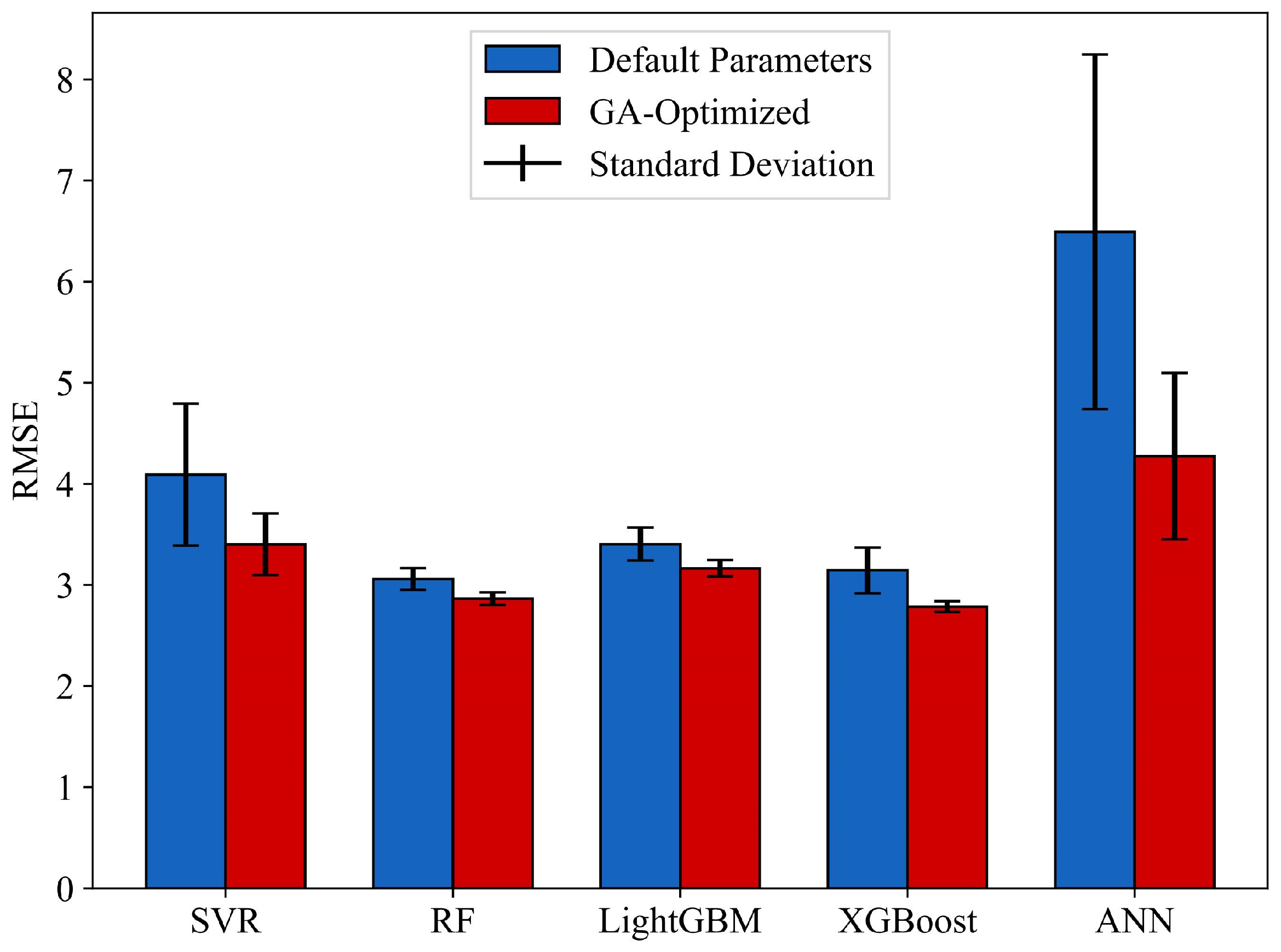

Table S2 lists the final hyperparameters of the five models after GA-based optimization. In addition, we compared the mean and standard deviation of the cross-validated RMSE on the training set before and after GA optimization for each model. The results are presented in Figure 6 and Table 5.

As can be seen from the figure, after GA optimization the performance of all five models improved markedly and the spread of their cross-validated RMSE decreased substantially, suggesting that GA-based hyperparameter optimization enhanced both model performance and stability. Meanwhile, RF, LightGBM, and XGBoost outperformed SVR and ANN, highlighting the advantages of ensemble learning models in small-sample problems. Under default hyperparameters, the RF model performed best, with a mean RMSE of 3.060 and a standard deviation of 0.107. Among the optimized models, GA-XGBoost performed best, with a mean cross-validated RMSE of 2.785 and a standard deviation of 0.054. Based on these results, GA-XGBoost was selected as the model for marine steel corrosion prediction.

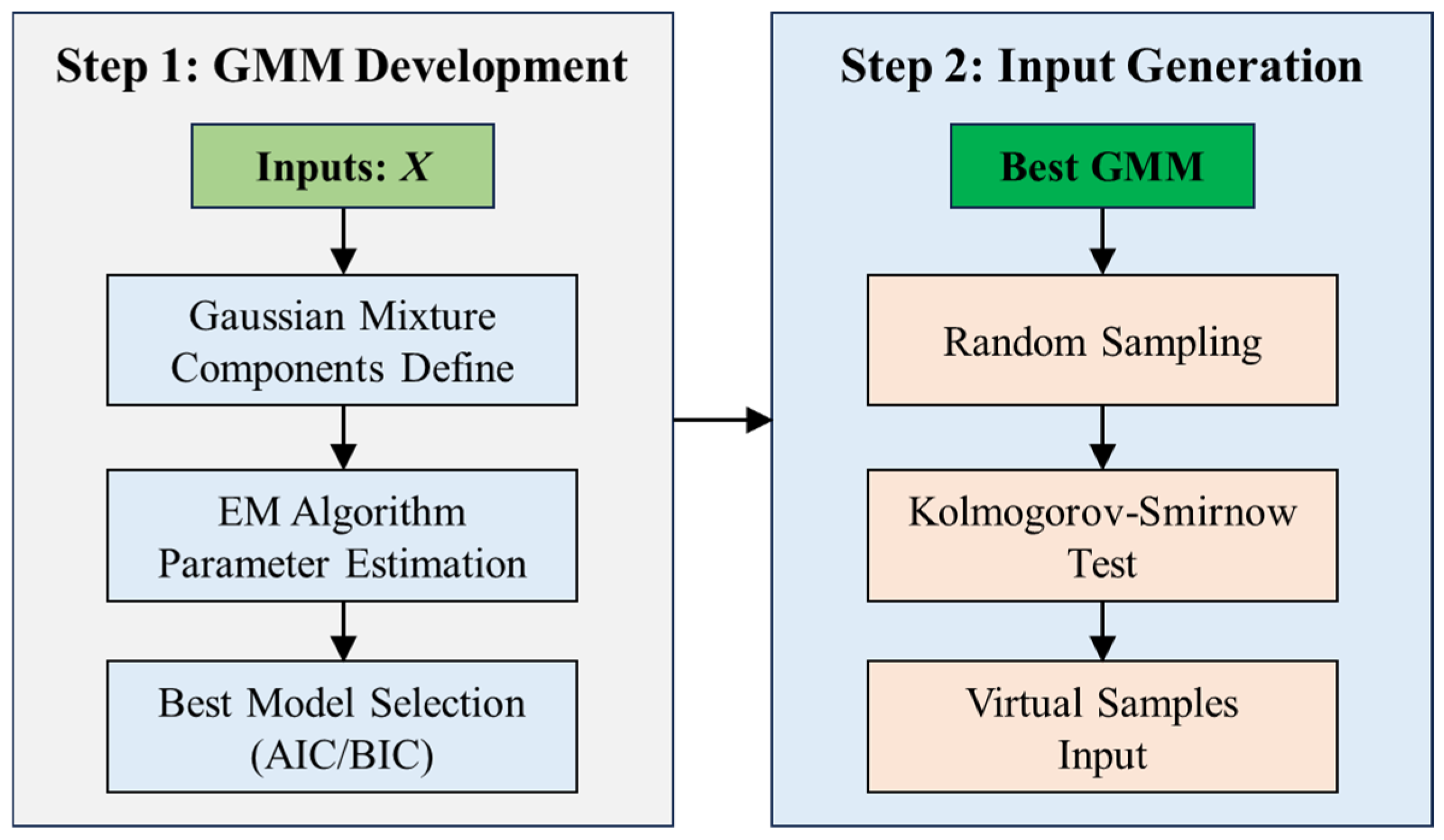

4.2. GMM Development and VSG Input Analysis

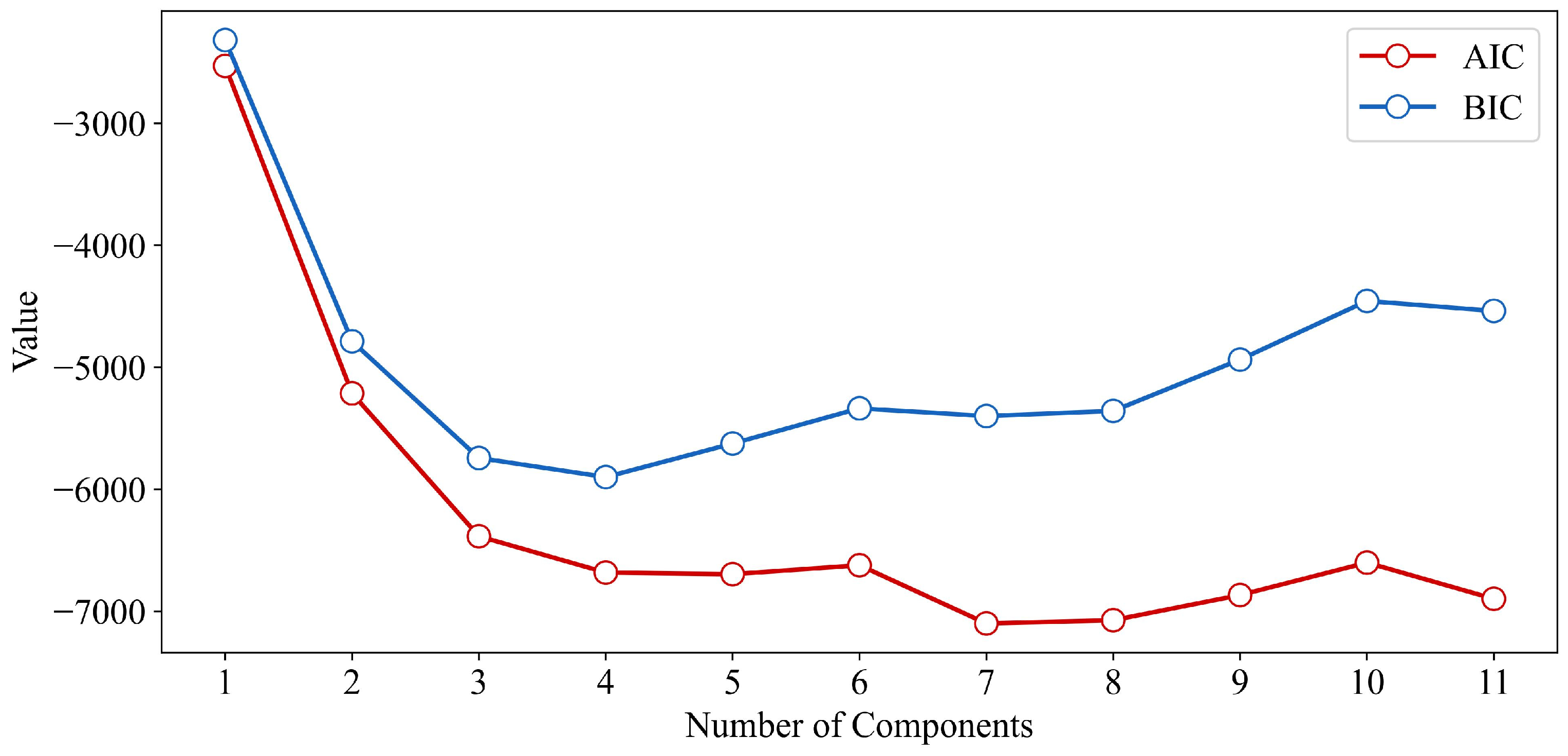

For the input features of the training set, we first developed a Gaussian mixture model (GMM) to generate virtual sample inputs and evaluate their plausibility. Because the input feature dimension of the dataset is 11, the number of GMM components was varied from 1 to 11. For each GMM configuration, parameters were estimated with the expectation-maximization (EM) algorithm, and model fit was quantified with the Akaike information criterion (AIC) and the Bayesian information criterion (BIC). The evaluation metrics for GMMs with different numbers of components are shown in Figure 7.

As shown in the figure, both AIC and BIC values exhibit a trend of initially decreasing and then increasing as the number of components increases. In the early stages, the sharp decline in AIC/BIC suggests that the GMM was initially unable to fully capture the multi-modal characteristics of the input features. However, as the number of components continues to grow, the AIC and BIC values begin to rise, indicating an increased risk of overfitting due to excessive model complexity, which is detrimental to generalization. According to the AIC results, the improvements become marginal after four components, with the lowest AIC value observed at seven components. In contrast, the BIC reaches its minimum at four components and subsequently increases as model complexity rises. Considering both model complexity and performance, we ultimately selected the GMM with four components as the optimal model.
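The AIC/BIC selection procedure can be illustrated with a one-dimensional sketch: a GMM is fitted by EM for each candidate number of components `k`, and the criteria AIC = 2p - 2 ln L and BIC = p ln n - 2 ln L are computed, where p is the number of free parameters, L the maximized likelihood, and n the sample size. The quantile initialization, iteration count, variance floor, and the synthetic `bimodal_demo_data` helper are illustrative assumptions. The helper `n_params_full` counts parameters for a full-covariance GMM, assuming full covariances in the 11-dimensional case (the covariance type is not stated here).

```python
import math
import random

def normal_pdf(x, mu, var):
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_gmm_1d(xs, k, n_iter=100, var_floor=1e-6):
    """Fit a 1-D Gaussian mixture with k components by EM; return the final log-likelihood."""
    n = len(xs)
    xs_sorted = sorted(xs)
    mus = [xs_sorted[int((i + 0.5) * n / k)] for i in range(k)]  # quantile initialization
    mean = sum(xs) / n
    vars_ = [sum((x - mean) ** 2 for x in xs) / n] * k
    ws = [1.0 / k] * k
    for _ in range(n_iter):
        # E-step: responsibility of each component for each point
        resp = []
        for x in xs:
            p = [w * normal_pdf(x, m, v) for w, m, v in zip(ws, mus, vars_)]
            total = sum(p) or 1e-300
            resp.append([pj / total for pj in p])
        # M-step: re-estimate weights, means, and variances
        for j in range(k):
            nj = sum(r[j] for r in resp) + 1e-12
            ws[j] = nj / n
            mus[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            vars_[j] = max(sum(r[j] * (x - mus[j]) ** 2 for r, x in zip(resp, xs)) / nj, var_floor)
    return sum(math.log(sum(w * normal_pdf(x, m, v) for w, m, v in zip(ws, mus, vars_)) or 1e-300)
               for x in xs)

def n_params_1d(k):
    return 3 * k - 1  # k means + k variances + (k - 1) free mixture weights

def n_params_full(k, d):
    # k mean vectors + k symmetric d x d covariance matrices + (k - 1) free weights
    return k * d + k * d * (d + 1) // 2 + (k - 1)

def aic(loglik, p):
    return 2 * p - 2 * loglik

def bic(loglik, p, n):
    return p * math.log(n) - 2 * loglik

def select_components(xs, k_max):
    """Return {k: (AIC, BIC)} for k = 1..k_max."""
    scores = {}
    for k in range(1, k_max + 1):
        ll = fit_gmm_1d(xs, k)
        p = n_params_1d(k)
        scores[k] = (aic(ll, p), bic(ll, p, len(xs)))
    return scores

def bimodal_demo_data(seed=0):
    """Synthetic bimodal data: 100 points around 0 and 100 points around 10."""
    rng = random.Random(seed)
    return [rng.gauss(0, 1) for _ in range(100)] + [rng.gauss(10, 1) for _ in range(100)]
```

For k = 4 components in d = 11 dimensions, a full-covariance GMM has 4·11 + 4·66 + 3 = 311 free parameters. BIC's per-parameter penalty ln n exceeds AIC's constant 2 whenever n > e² ≈ 7.4, so BIC tends to favor fewer components than AIC, consistent with the minima at four (BIC) and seven (AIC) components reported above. For the multivariate case, scikit-learn's `GaussianMixture` provides `aic(X)` and `bic(X)` methods.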

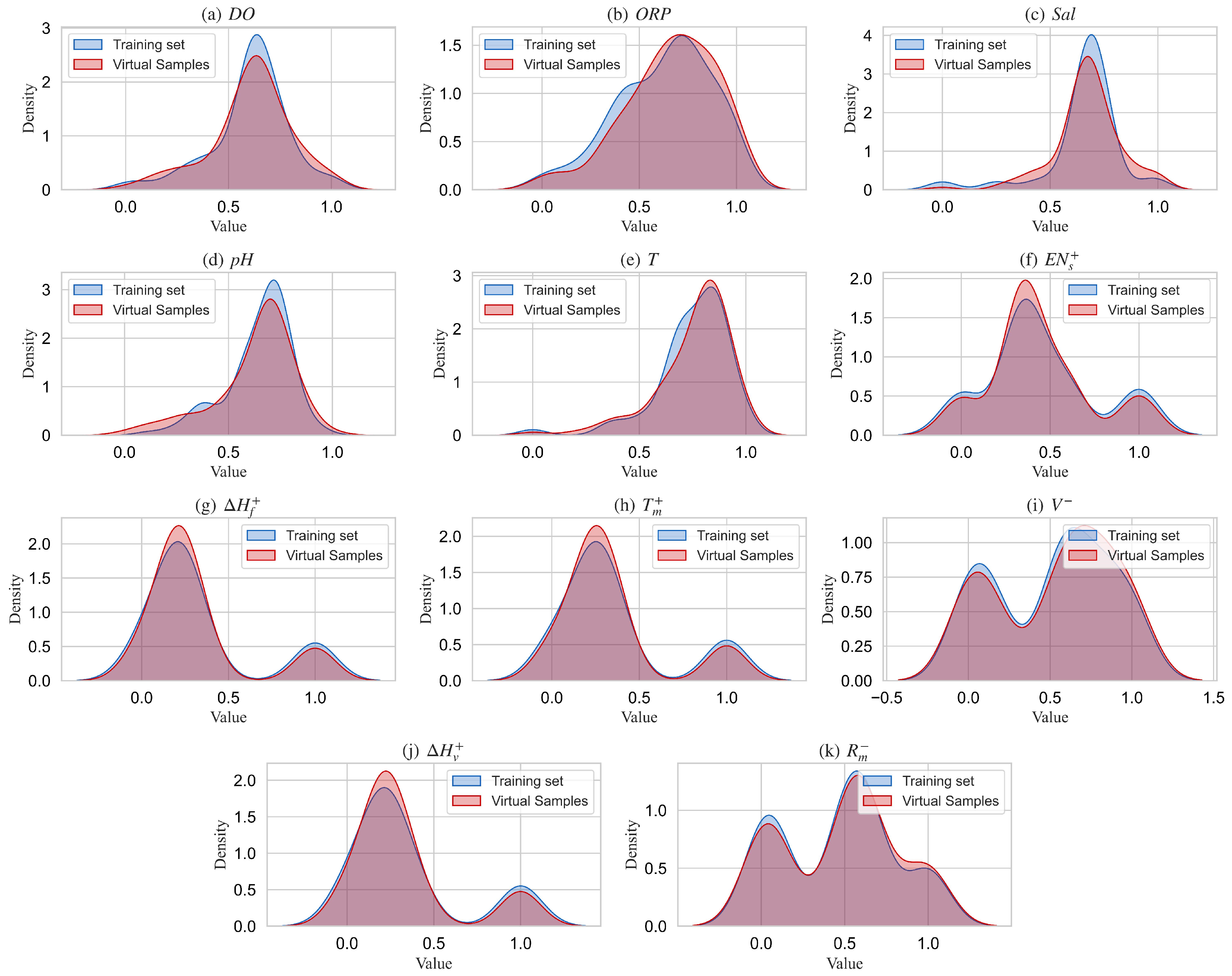

After the GMM was developed, virtual sample inputs were generated by randomly sampling from the optimal GMM, with the generated values constrained within the feature boundaries of the training set. To validate whether the distribution of the generated virtual samples is consistent with that of the real training samples, we generated 100 virtual samples, approximately matching the size of the training set.
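Sampling bounded virtual inputs from a fitted GMM can be sketched as follows. The sketch assumes diagonal covariances for brevity and enforces the training-set feature bounds by rejection sampling; the text does not specify how the bounds were enforced, so clipping is an equally plausible alternative. The arguments `lower` and `upper` would be the per-feature minima and maxima of the training set, and the function name is hypothetical.

```python
import random

def sample_gmm_bounded(weights, means, stds, lower, upper, n, seed=0, max_tries=100000):
    """Draw n samples from a diagonal-covariance GMM, rejecting any outside [lower, upper]."""
    rng = random.Random(seed)
    out = []
    tries = 0
    while len(out) < n:
        tries += 1
        if tries > max_tries:
            raise RuntimeError("acceptance rate too low; check the bounds")
        j = rng.choices(range(len(weights)), weights=weights)[0]  # pick a mixture component
        x = [rng.gauss(m, s) for m, s in zip(means[j], stds[j])]  # draw from that component
        if all(lo <= xi <= hi for xi, lo, hi in zip(x, lower, upper)):
            out.append(x)  # keep only samples inside the training-set box
    return out
```

Rejection sampling preserves the shape of the mixture inside the bounds, whereas clipping would pile probability mass onto the boundary values and could distort the tails of multi-modal features.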

Figure 8 shows the probability density distributions for each feature in both the generated virtual samples and the real training samples.

As can be seen from the figure, the distribution of the virtual samples drawn from the optimal GMM corresponds well with that of the real training samples, particularly in reproducing the multi-modal characteristics of the features. The GMM captured the multi-modal nature of all 11 features, and the peaks of the virtual sample distributions closely match those of the real training samples. Furthermore, we performed a two-sample Kolmogorov-Smirnov (K-S) test to quantitatively assess the consistency between the distributions of the virtual and real samples; the results are presented in Table 6.

As can be seen from the table, the means and variances of the virtual samples are close to those of the real samples. In the K-S test, the D statistics for all features are below 0.22, and at a significance level of 0.05 the hypothesis of identical distributions was not rejected for any of the 11 features. These results quantitatively support the conclusion that the GMM captures the multi-modal distribution characteristics of the original training samples and can be used to generate realistic virtual sample inputs.
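The two-sample K-S statistic D is the largest vertical gap between the empirical cumulative distribution functions of the two samples. The sketch below computes D directly and approximates the p-value with the asymptotic Kolmogorov distribution, using the small-sample correction λ = (√Nₑ + 0.12 + 0.11/√Nₑ)·D with Nₑ = nm/(n + m), as in Numerical Recipes. It is an illustration, not necessarily the implementation used to produce Table 6; `scipy.stats.ks_2samp` provides a production-quality version.

```python
import math

def ks_statistic(a, b):
    """Two-sample K-S statistic: max |F_a(x) - F_b(x)| over all observed x."""
    a, b = sorted(a), sorted(b)
    n, m = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # advance both empirical CDFs past every value equal to x (handles ties)
        while i < n and a[i] <= x:
            i += 1
        while j < m and b[j] <= x:
            j += 1
        d = max(d, abs(i / n - j / m))
    return d

def ks_pvalue(d, n, m, terms=100):
    """Asymptotic p-value from the Kolmogorov distribution with a small-sample correction."""
    ne = n * m / (n + m)
    lam = (math.sqrt(ne) + 0.12 + 0.11 / math.sqrt(ne)) * d
    if lam < 0.2:
        return 1.0  # the series converges slowly here and the p-value is essentially 1
    q = sum(2 * (-1) ** (k - 1) * math.exp(-2 * k * k * lam * lam) for k in range(1, terms + 1))
    return min(max(q, 0.0), 1.0)
```

A feature "passes" at the 0.05 level when `ks_pvalue(ks_statistic(real, virtual), len(real), len(virtual)) > 0.05`, i.e., when the test finds no evidence that the two samples come from different distributions.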

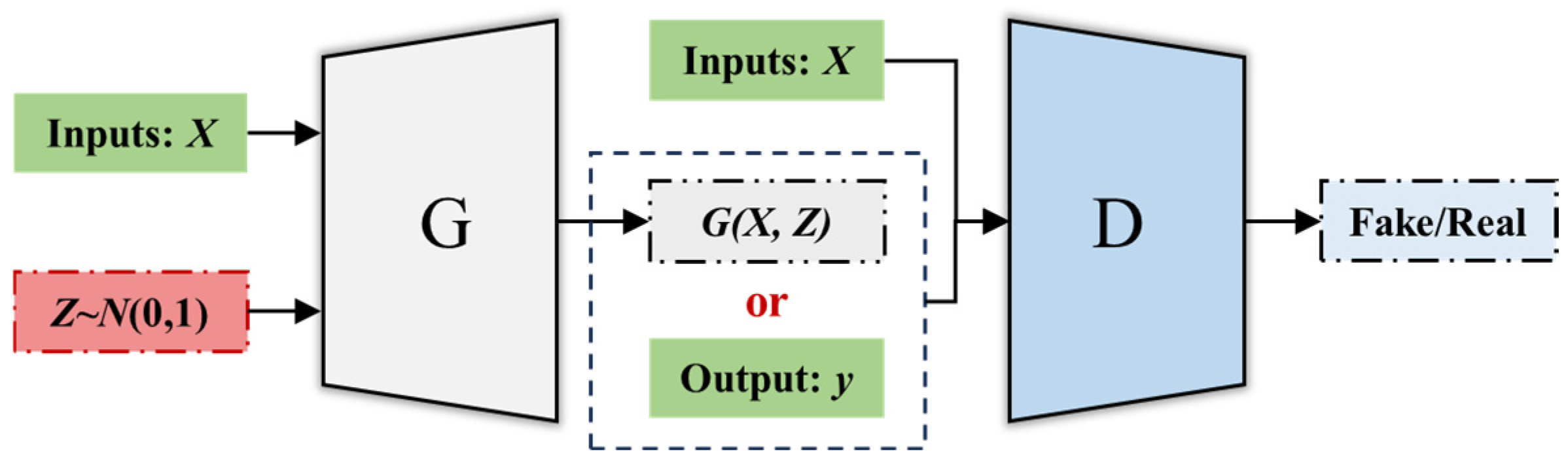

4.3. RegGAN Training and VSG Output Analysis

Based on the RegGAN structure defined in Section 3.3.2, we performed model parameter estimation and evaluation using the training set data. During training, the generator is updated twice for every update of the discriminator.
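The 2:1 update schedule can be sketched as a training loop. The callables `update_discriminator` and `update_generator` are hypothetical placeholders for one optimizer step on each network (each returning that step's loss), and performing the discriminator step before the two generator steps in each round is an assumption.

```python
def train_gan(update_discriminator, update_generator, batches, g_steps_per_d=2):
    """Alternate one discriminator update with g_steps_per_d generator updates per batch."""
    history = []
    for batch in batches:
        d_loss = update_discriminator(batch)  # one discriminator step
        g_loss = None
        for _ in range(g_steps_per_d):
            g_loss = update_generator(batch)  # several generator steps
        history.append((d_loss, g_loss))  # record the last generator loss of the round
    return history
```

Giving the generator extra steps is a common way to keep a discriminator that learns quickly on small datasets from overpowering the generator early in training.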

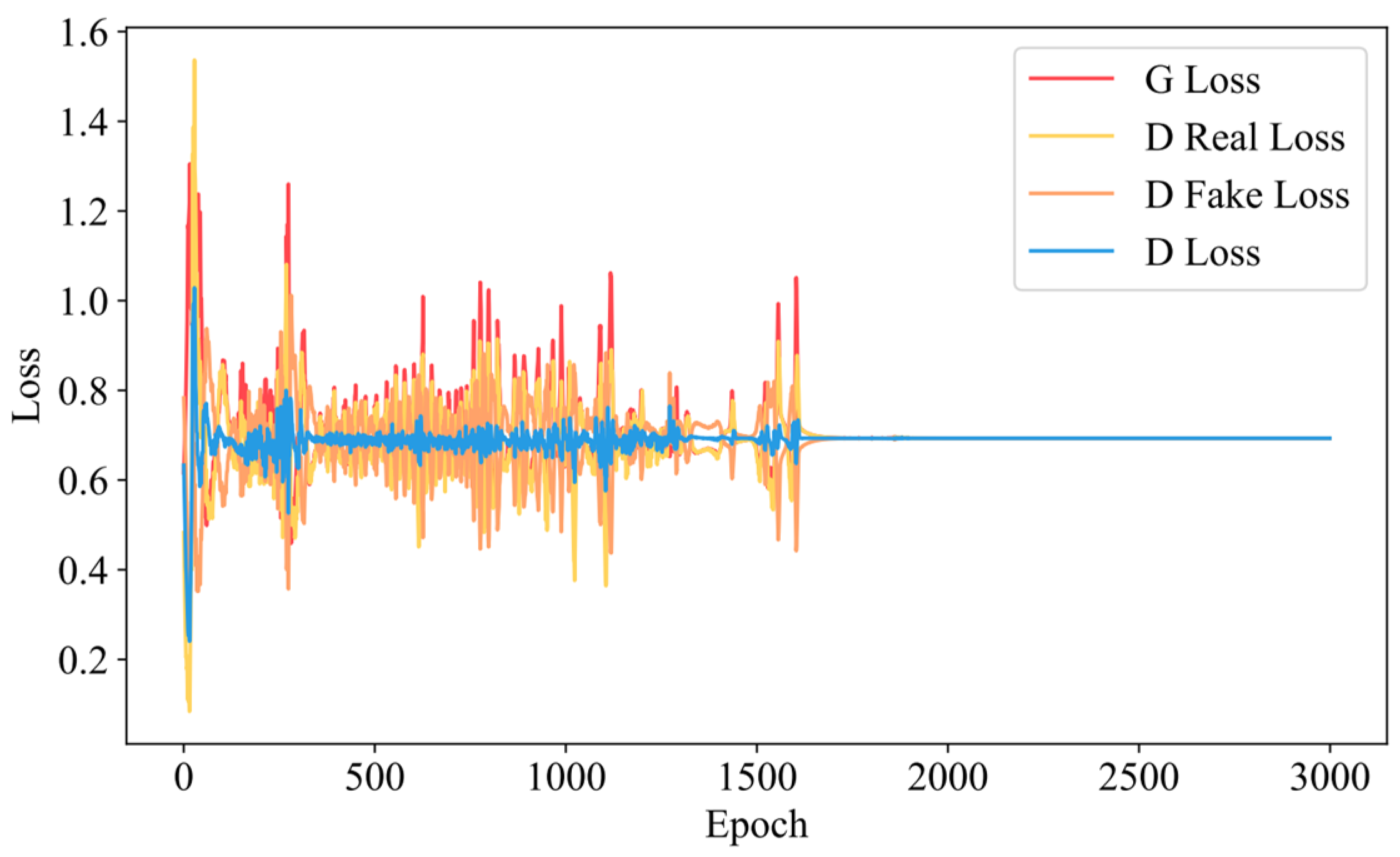

Figure 9 illustrates the changes in the losses of the generator and discriminator during the training process of the RegGAN model.

In the figure, the red line represents the generator loss, the yellow and orange lines represent the discriminator losses for real and virtual samples, respectively, and the blue line indicates the average discriminator loss. In the early stages of training, both the generator and discriminator losses are relatively high. As training progresses, both losses decrease, with significant fluctuations. In the final stages of training, the losses of both networks converge close to the value corresponding to log(0.5), i.e., a discriminator output of 0.5 for both real and virtual samples, indicating that training has stabilized.
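Assuming standard binary cross-entropy losses (a common choice for GANs, used here only as an illustration), the equilibrium values can be worked out explicitly. With $D(\cdot)\in(0,1)$ the discriminator output, $\mathbf{s}$ a real sample, and $\tilde{\mathbf{s}}$ a virtual sample:

$$
\mathcal{L}_D^{\mathrm{real}} = -\log D(\mathbf{s}), \qquad
\mathcal{L}_D^{\mathrm{virtual}} = -\log\bigl(1 - D(\tilde{\mathbf{s}})\bigr), \qquad
\mathcal{L}_G = -\log D(\tilde{\mathbf{s}})
$$

$$
D(\mathbf{s}) = D(\tilde{\mathbf{s}}) = 0.5 \;\Rightarrow\;
\mathcal{L}_D^{\mathrm{real}} = \mathcal{L}_D^{\mathrm{virtual}} = \mathcal{L}_G = -\log 0.5 = \log 2 \approx 0.693
$$

Under this assumption, the converged curves sit at the magnitude of log(0.5), about 0.693 in natural-log units, once the discriminator can no longer distinguish real from virtual samples.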

Using the virtual sample inputs generated by the GMM in Section 4.2, together with noise vectors randomly sampled from the noise space, we fed them into the RegGAN generator to produce virtual sample outputs. To reduce the variability introduced by the random noise, we performed 20 random noise draws for each input and took the mean of the resulting outputs.
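The noise-averaging step can be sketched as follows. `generator` is a hypothetical callable standing in for the trained RegGAN generator, taking a virtual input vector and a noise vector; drawing noise from a standard normal distribution and the `noise_dim` parameter are assumptions, since the noise distribution is not restated here.

```python
import random

def averaged_generator_outputs(generator, inputs, noise_dim, n_draws=20, seed=0):
    """For each virtual input x, average generator(x, z) over n_draws noise vectors z ~ N(0, I)."""
    rng = random.Random(seed)
    outputs = []
    for x in inputs:
        draws = [generator(x, [rng.gauss(0.0, 1.0) for _ in range(noise_dim)])
                 for _ in range(n_draws)]
        outputs.append(sum(draws) / n_draws)  # Monte Carlo estimate of E_z[G(x, z)]
    return outputs
```

Averaging approximates the conditional mean output for each input; with 20 draws, the standard deviation of the noise-induced part of each output shrinks by a factor of √20 ≈ 4.5 relative to a single draw, assuming independent draws.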

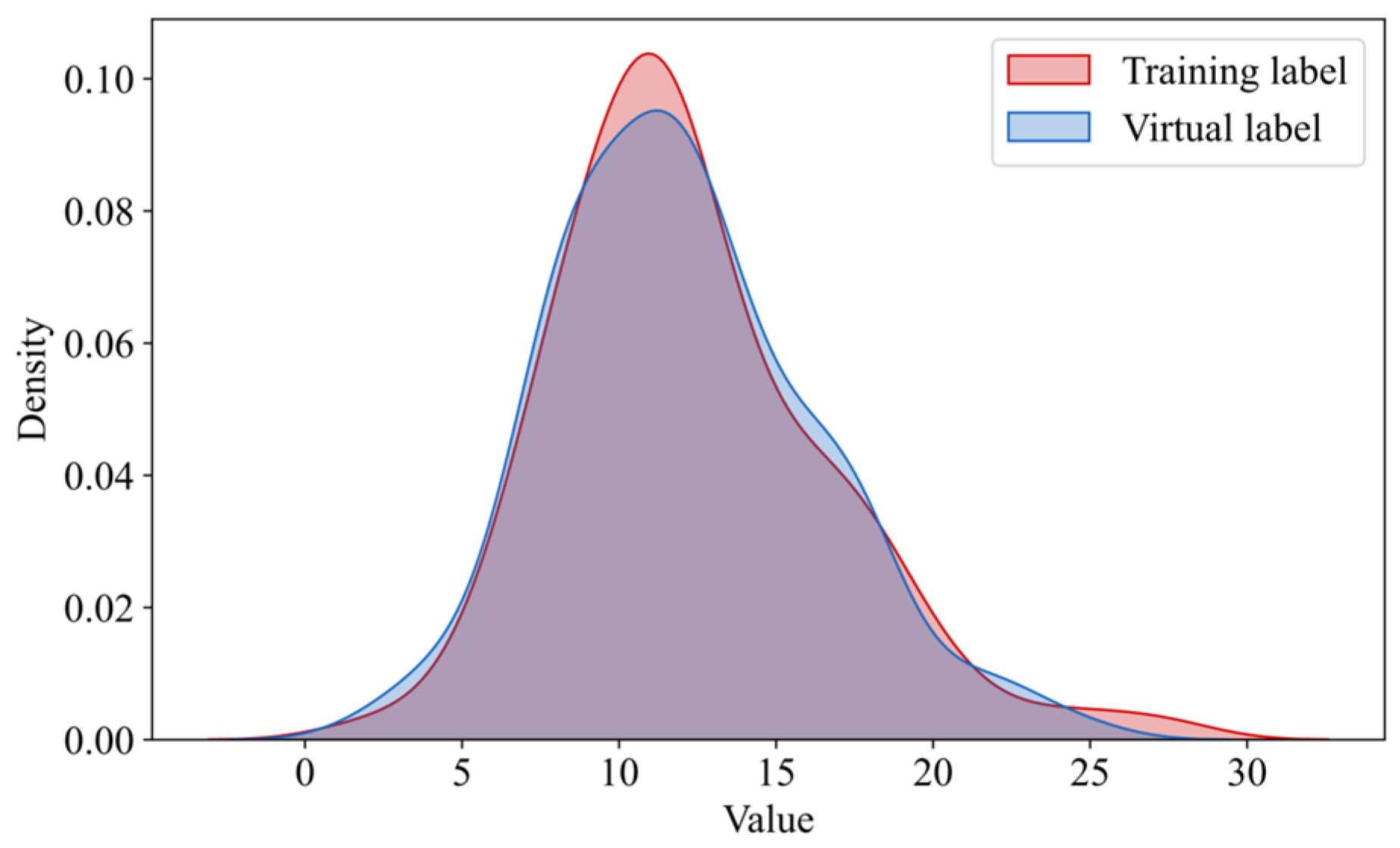

Figure 10 shows the probability density functions of the virtual sample outputs and the training sample outputs. The generated virtual sample outputs are highly consistent with the training set outputs, supporting the plausibility of the generated virtual samples.

4.4. Model Update and Performance Comparison

By setting the number of virtual samples to 0, 10, 20, 50, 100, 150, 200, 300, 400, and 500, we initially investigated the impact of varying quantities of virtual samples on the improvement of model performance. To better compare the optimization effects of the proposed virtual sample generation method, we selected five classical virtual sample generation models for comparison: MD-MTD [

34], t-SNE [

44], GMM [

60], NITAE [

45], and CGAN [

46]. The performance improvements of the proposed model and the comparative models on the training set under different virtual sample quantities are illustrated in

Figure 11 and

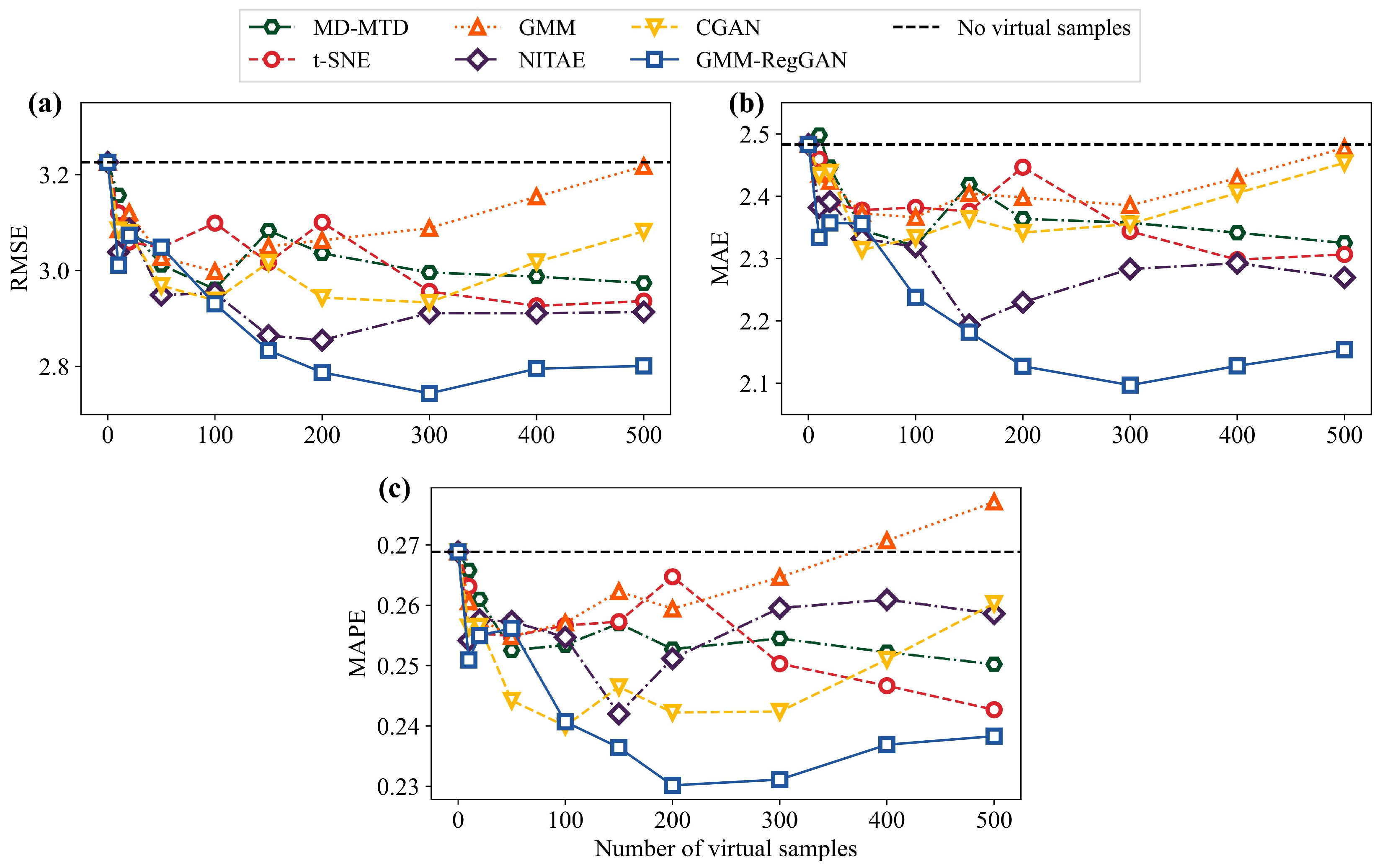

Tables S3–S5.

As can be seen from the figure, using virtual samples to update the model generally reduces all three evaluation metrics (RMSE, MAE, and MAPE) for every VSG method. This demonstrates the feasibility of VSG for improving the prediction of marine steel corrosion under small-sample conditions. Furthermore, different VSG methods lead to different optimal numbers of virtual samples; for most methods, the best performance is achieved with 100 to 300 virtual samples. Compared with MD-MTD, t-SNE, GMM, NITAE, and CGAN, the proposed GMM-RegGAN model yields the largest performance improvement, with notable reductions in all three metrics.
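The experiment over virtual sample counts amounts to a simple sweep. In this sketch, `generate` and `fit_and_score` are hypothetical placeholders for a VSG method (such as GMM-RegGAN) and for retraining and evaluating the GA-XGBoost model on the augmented data; the sweep returns the count with the lowest error.

```python
def sweep_virtual_sample_counts(generate, fit_and_score, real_data, counts):
    """Augment real_data with n virtual samples for each n in counts and record the error.

    generate(n) -> list of n virtual samples (hypothetical VSG callable)
    fit_and_score(data) -> error metric after retraining on data, lower is better (hypothetical)
    """
    results = {}
    for n in counts:
        augmented = real_data + (generate(n) if n > 0 else [])  # n = 0 is the base model
        results[n] = fit_and_score(augmented)
    best_n = min(results, key=results.get)  # count with the lowest error
    return best_n, results

COUNTS = [0, 10, 20, 50, 100, 150, 200, 300, 400, 500]  # the grid used in this section
```

Keeping n = 0 in the grid makes the base model part of the same sweep, so every improvement is measured against an identically trained reference.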

4.5. Performance Improvement Rate Analysis

To further quantitatively analyze the model performance improvement, we calculated the error improvement rate (EIR) of the evaluation metrics for different numbers of virtual samples. The results are shown in

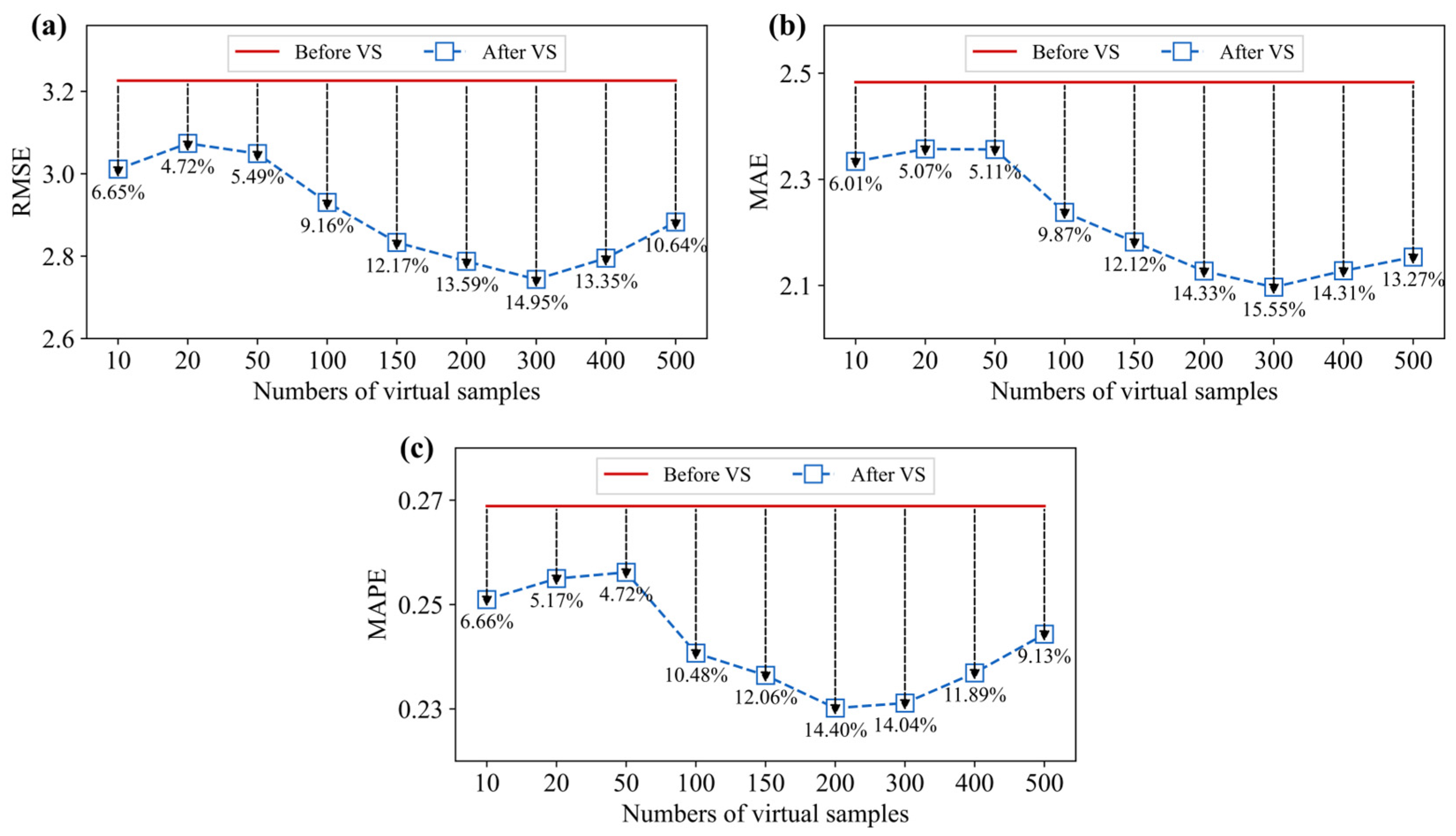

Figure 12.

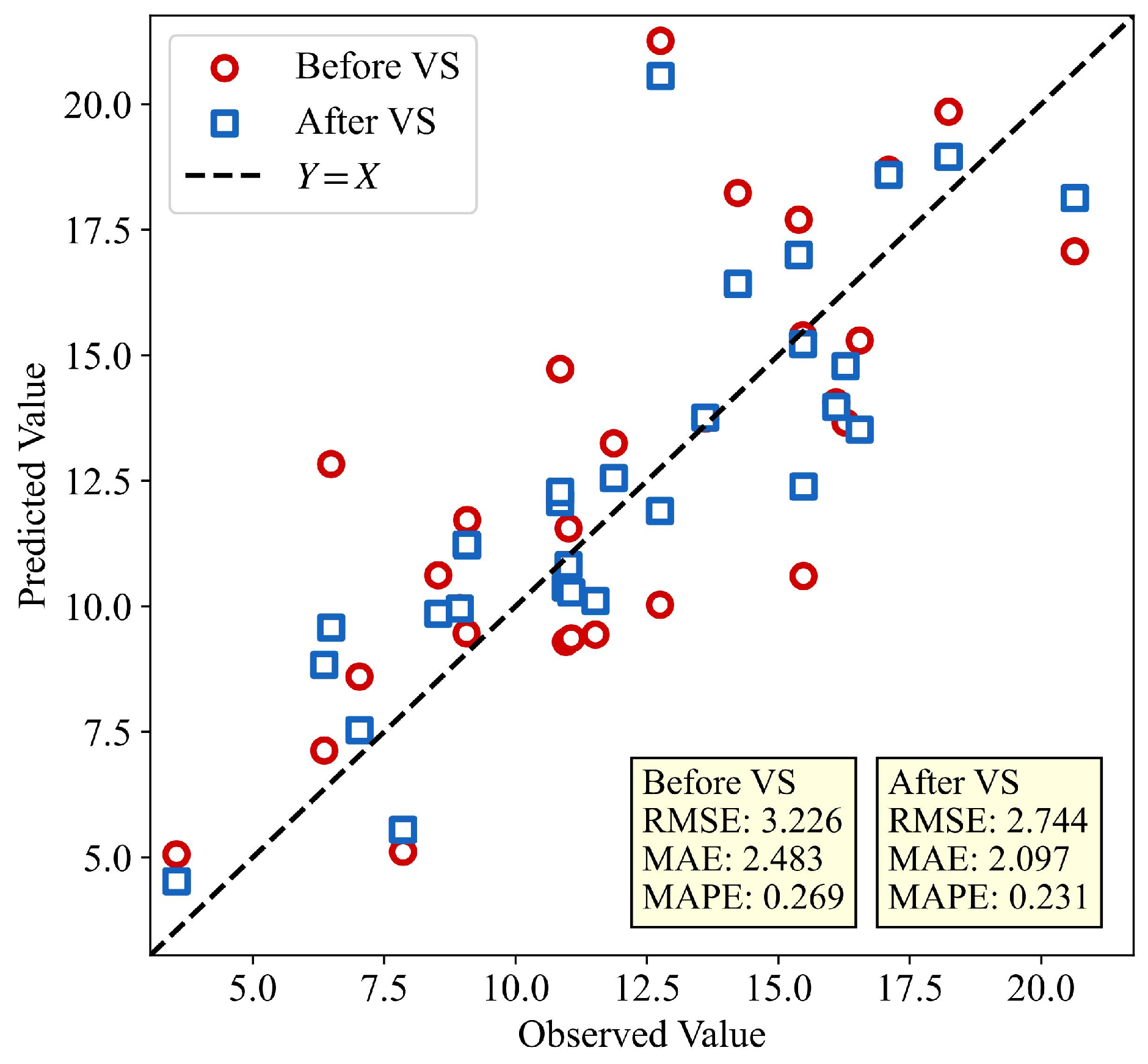

As the figure shows, the performance of the proposed method first improves and then deteriorates as the number of virtual samples increases, reaching its optimum at 300 virtual samples. At this optimal number, compared with the original base model (without virtual samples), the RMSE decreases from 3.226 to 2.744, the MAE from 2.483 to 2.097, and the MAPE from 0.269 to 0.231. The corresponding EIRs for the three metrics are 14.95%, 15.55%, and 14.04%, respectively, indicating that the proposed model provides a substantial improvement.
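The EIR values can be checked with a short calculation, assuming EIR is the relative reduction of a metric with respect to the base model, EIR = (E_base - E_VSG) / E_base × 100%. This is an assumed definition, consistent with the reported MAE figure. Recomputing from the three-decimal values quoted above gives 14.94% (RMSE) and 14.13% (MAPE) rather than 14.95% and 14.04%, presumably because the reported rates were computed from unrounded metrics.

```python
def error_improvement_rate(base, updated):
    """EIR in percent (assumed definition): relative error reduction versus the base model."""
    return (base - updated) / base * 100.0

# Base model (0 virtual samples) vs. 300 GMM-RegGAN virtual samples, as reported above
reported = {"RMSE": (3.226, 2.744), "MAE": (2.483, 2.097), "MAPE": (0.269, 0.231)}
eir = {name: error_improvement_rate(b, u) for name, (b, u) in reported.items()}
for name, value in eir.items():
    print(f"{name}: {value:.2f}%")
# RMSE: 14.94%
# MAE: 15.55%
# MAPE: 14.13%
```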

To examine the improvement in single-sample predictions after VSG, we used the GMM-RegGAN model to generate the optimal number of virtual samples and compared the predictions for individual test samples with those of the base model. The results are shown in Figure 13. For most test samples, the predictions of the model updated with virtual samples lie between the base model's predictions and the actual values. This indicates that the virtual samples reduced the prediction error for most individual samples.
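The single-sample comparison can be summarized numerically. This sketch (function names are hypothetical) counts the share of test samples whose updated prediction lies between the base prediction and the actual value and, separately, the share whose absolute error decreased. A prediction strictly between the two always has a smaller error than the base prediction, but the converse does not hold: an updated prediction can overshoot the actual value and still be closer.

```python
def fraction_between(base_preds, updated_preds, actuals):
    """Share of samples whose updated prediction lies between the base prediction and the actual value."""
    hits = 0
    for b, u, a in zip(base_preds, updated_preds, actuals):
        lo, hi = min(b, a), max(b, a)
        if lo <= u <= hi:
            hits += 1
    return hits / len(actuals)

def fraction_improved(base_preds, updated_preds, actuals):
    """Share of samples whose absolute error decreased after the model update."""
    improved = sum(abs(u - a) < abs(b - a) for b, u, a in zip(base_preds, updated_preds, actuals))
    return improved / len(actuals)
```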

5. Discussion

5.1. Approaches to Model Improvement

Virtual sample generation (VSG) is an effective approach for improving the performance of machine learning models on small-sample problems. However, the improvement that VSG can provide has a theoretical upper limit. On the one hand, the quality of the generated virtual samples directly affects model performance: if the virtual samples deviate significantly from the true data distribution or introduce noise, they may cause overfitting and reduce the model’s generalization ability. On the other hand, VSG cannot create genuinely new information; it can only interpolate or extrapolate from the existing data distribution and thus cannot overcome the inherent limitations of the data itself. To further improve model performance, VSG can be combined with methods such as multi-model fusion and transfer learning. For example, transfer learning can bring degradation data from other domains to the target task, improving feature extraction and enhancing the model’s generalization ability, while a multi-model fusion strategy can help avoid the limitations of single-model predictions. Combining such fusion modeling techniques offers a promising way to further improve the prediction of small-sample marine steel corrosion.

5.2. Insights into the Model Structure

This study addresses the challenges of small-sample marine steel corrosion prediction, including high-dimensional collinearity, poor model robustness, and low accuracy. The GA-optimized machine learning framework mitigates robustness issues in small-sample problems, markedly improving model performance and stability. Meanwhile, the GMM-RegGAN VSG method updates the model with generated virtual samples, further enhancing its predictive accuracy. The effectiveness of the proposed model structure has been demonstrated on a marine steel corrosion dataset. Because structural degradation is difficult to monitor in marine environments, most degradation problems in marine engineering share small-sample characteristics; the proposed method may therefore also be applicable to other marine engineering problems with similar structures. However, the model proposed in this study only considers metal properties and dynamic marine environments as inputs. In practice, the corrosion of metals under various operating conditions and in long-term service environments may also pose small-sample modeling problems. Future research could extend the applicability of the proposed model by incorporating factors such as operating conditions and service time.