Regulating AI in the Energy Sector: A Scoping Review of EU Laws, Challenges, and Global Perspectives

Abstract

1. Introduction

How do EU laws and regulations influence the development, adoption, and deployment of AI-driven digital solutions in the energy sector?

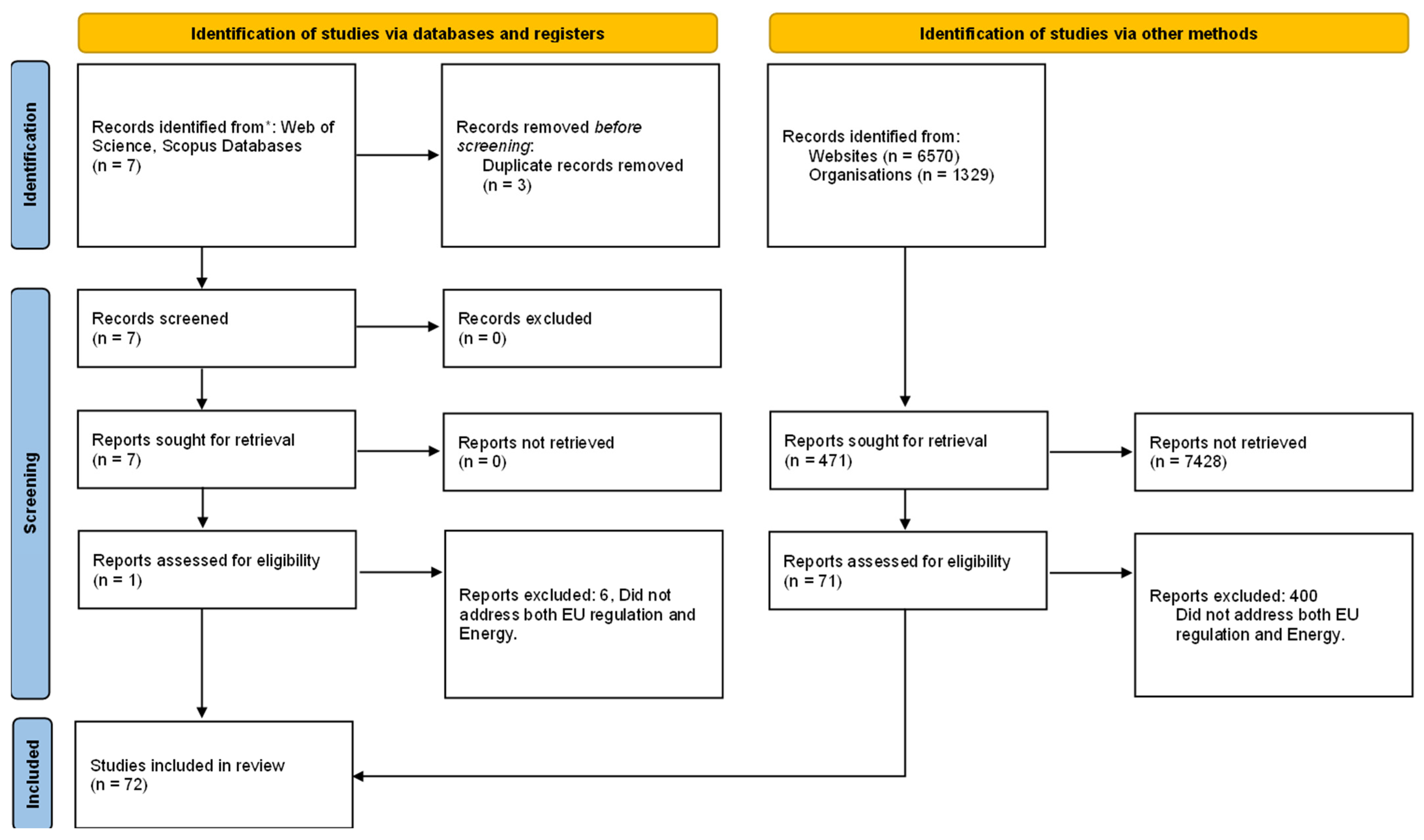

2. Methodology

- Official EU legal documents: The search in EUR-Lex, the EU’s legal database, identified regulations, directives, and decisions relevant to AI and digital technology in the energy sector [5]. Key documents included the AI Act proposal, GDPR, NIS2 Directive, Cyber Resilience Act proposal, Network Code on Cybersecurity for electricity, and the Cybersecurity Act.

- Regulatory and policy reports: The European Commission website [6] and agencies like the European Union Agency for Cybersecurity (ENISA) [7] were searched for relevant reports, strategy documents, or press releases. For instance, the European Commission’s press releases on the Digitalization of Energy Action Plan [8] and on the adoption of the Network Code on Cybersecurity [9] were reviewed. ENISA publications, such as its report on AI cybersecurity in electricity demand forecasting [10], were included to glean insights into compliance guidelines and threat landscapes.

- Academic literature: Searches in scholarly databases, including Scopus and Web of Science (WoS), identified articles that examine the intersection of law, regulation, and AI in the energy sector. These included a 2021 study on AI governance in electricity systems under the proposed AI Act [1] and a comparative analysis of AI and energy regulations in China [11].

- Industry white papers and technical standards: The review included white papers from industry associations, consulting firms, and standardization bodies to analyze AI deployment in energy under regulatory constraints. Examples include a law firm’s analysis of AI and energy in the U.S. [12] and consulting reports on the EU AI Act’s implications for energy utilities [3].

- Energy agencies: The review incorporated documents from energy regulators and sector bodies, such as the Agency for the Cooperation of Energy Regulators (ACER), the European Network of Transmission System Operators for Electricity (ENTSO-E), the International Energy Agency (IEA), the International Renewable Energy Agency (IRENA), and the North American Electric Reliability Corporation (NERC).

The following Boolean search string was used:

(EU OR “European Union”) AND (Energy OR power) AND (Act OR Law OR Regulation OR Directive) AND (AI OR “Artificial Intelligence” OR Digitalization) AND (“Data Privacy” OR Cybersecurity OR Resilience) AND (Generation OR “Transmission grid” OR “Distribution grid” OR consumption OR Market)
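To make the search strategy reproducible, the Boolean string can be generated from its concept groups: synonyms are OR-ed within a group and the groups are AND-ed together. The Python sketch below is illustrative only; the names `CONCEPT_GROUPS` and `build_query` are hypothetical, it emits straight double quotes for phrases, and database-specific syntax such as Scopus field codes (for example, `TITLE-ABS-KEY`) is deliberately left out.

```python
# Illustrative sketch: rebuilds the review's Boolean search string from its
# concept groups. Names are hypothetical; database-specific field codes are omitted.
CONCEPT_GROUPS = [
    ["EU", "European Union"],
    ["Energy", "power"],
    ["Act", "Law", "Regulation", "Directive"],
    ["AI", "Artificial Intelligence", "Digitalization"],
    ["Data Privacy", "Cybersecurity", "Resilience"],
    ["Generation", "Transmission grid", "Distribution grid", "consumption", "Market"],
]


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so databases match them as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(groups: list[list[str]]) -> str:
    """OR the synonyms within each concept group, then AND the groups together."""
    return " AND ".join(
        "(" + " OR ".join(quote(term) for term in group) + ")" for group in groups
    )


if __name__ == "__main__":
    print(build_query(CONCEPT_GROUPS))
```

Keeping synonyms grouped this way means that broadening one concept, such as adding another synonym for AI, changes only one group of the query.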

3. Overview of Key EU Regulations

3.1. EU Artificial Intelligence Act (AI Act)

- Prohibited AI practices: Certain AI uses deemed unacceptable (e.g., social scoring by governments, or AI that manipulates people subconsciously) will be banned outright. Such practices are not typical in the energy sector and are therefore of limited relevance here.

- High-risk AI systems: The Act designates specific high-impact use cases as “high-risk”, subjecting them to strict obligations. Notably, AI systems used in critical infrastructure management (including energy) are on this list. Annex III of the proposal explicitly classifies “AI systems intended to be used as safety components in the management and operation of […] the supply of water, gas, heating and electricity” as high-risk [18]. This means an AI system that, for example, controls an electrical substation, balances supply and demand on the grid, or optimizes a power plant’s output could be considered high-risk if its malfunction might jeopardize safety or service continuity [18]. High-risk AI providers must implement comprehensive risk management, ensure high-quality training data (to minimize bias and errors), enable traceability and logging, provide detailed technical documentation, and undergo conformity assessments before deployment [1]. Users of such AI (e.g., a grid operator deploying an AI tool) also have obligations like human oversight and monitoring of performance.

- Limited-risk systems: For certain AI systems that interact with people or generate content, such as chatbots or deepfakes, the Act imposes transparency obligations: people must be informed that they are interacting with an AI system or that content has been artificially generated. In energy, an example might be an AI-powered customer service agent for a utility; it would need to identify itself as AI to the customer, but such a system is not “high-risk” under the Act.

- Minimal-risk AI: AI applications with minimal risk, such as internal analytics tools, are not directly regulated by the Act, apart from general voluntary codes of conduct.
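The tiered logic above amounts to checking the most severe category first. The Python sketch below encodes that ordering for a few energy examples; it is a simplified triage aid under stated assumptions, not a legal classification. The attribute names (`prohibited_practice`, `safety_component_critical_infra`, `interacts_with_people_or_generates_content`) are hypothetical simplifications of much richer legal tests.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    LIMITED = "limited-risk (transparency)"
    MINIMAL = "minimal-risk"


@dataclass
class AIUseCase:
    # Hypothetical, simplified flags; the actual legal tests are far richer.
    name: str
    prohibited_practice: bool = False                         # e.g., social scoring
    safety_component_critical_infra: bool = False             # e.g., electricity supply
    interacts_with_people_or_generates_content: bool = False  # e.g., chatbots


def triage(use_case: AIUseCase) -> RiskTier:
    """Return the most severe tier whose trigger applies, checked in order of severity."""
    if use_case.prohibited_practice:
        return RiskTier.PROHIBITED
    if use_case.safety_component_critical_infra:
        return RiskTier.HIGH
    if use_case.interacts_with_people_or_generates_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    for case in [
        AIUseCase("substation control", safety_component_critical_infra=True),
        AIUseCase("utility customer chatbot", interacts_with_people_or_generates_content=True),
        AIUseCase("internal analytics dashboard"),
    ]:
        print(f"{case.name}: {triage(case).value}")
```

One design caveat: the function returns only the most severe tier, whereas a real system can carry obligations from several tiers at once, for example a high-risk grid tool that also interacts with customers and must therefore meet transparency requirements as well.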

3.2. EU General Data Protection Regulation (GDPR)

- Lawful basis and purpose limitation: Energy companies using AI that processes personal data, such as algorithms optimizing home energy consumption based on smart thermostat data, must establish a legal basis for data processing. This may include consent, contractual necessity, or legitimate interest. Data must not be repurposed in ways that conflict with their original purpose. For example, using detailed smart meter data to infer household behavior may require explicit consent due to their potential to reveal sensitive personal habits.

- Data minimization and privacy-by-design: The GDPR pushes companies to collect only data that are necessary and to integrate privacy measures into technology design. This means that AI models should avoid using personally identifiable information if not needed. Techniques like anonymization or aggregation of energy data are encouraged so that AI can learn from usage patterns without exposing individual identities [19]. In a grid context, if AI monitors distributed energy resources, ensuring that individual customer data are de-identified can help compliance. ENISA’s guidance for AI in electricity demand forecasting, for example, highlights filtering or anonymizing consumer data to comply with the GDPR [10].

- Automated decision-making and profiling (Article 22): The GDPR gives individuals rights when decisions about them are based solely on automated processing. In energy, an example could be an AI system that automatically adjusts a user’s tariffs or disconnects service for non-payment. If such decisions have legal or similarly significant effects, they are permitted only under specific conditions; consumers then have the right to obtain human intervention, to express their point of view, and to contest the decision, and they must receive meaningful information about the logic involved. Energy providers must be cautious when using AI for tasks such as credit scoring for energy contracts or fraud detection, as these could trigger Article 22.

- Security and breach notification: The GDPR requires personal data to be protected against breaches. AI systems handling customer data must implement security controls, often aligned with cybersecurity laws, and personal data breaches must be notified to the supervisory authority without undue delay and, where feasible, within 72 h of becoming aware of them. This creates a strong incentive to secure AI pipelines, for example, by protecting training datasets stored in the cloud.

- Data Protection Impact Assessments (DPIA): If an AI application poses a high risk to individual privacy, such as large-scale monitoring through smart devices, the GDPR requires a Data Protection Impact Assessment (DPIA). This structured assessment identifies risks and mitigation measures. Many AI projects in the energy sector, particularly smart home and smart city initiatives, will need DPIAs to evaluate privacy impacts systematically [19].
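The aggregation approach mentioned above can be illustrated with a small Python sketch that sums smart meter readings per transformer and interval, drops household identifiers, and suppresses any aggregate built from too few households, since a total over one or two homes can still reveal individual behavior. The five-household threshold, the record layout, and the function name are assumptions for illustration; whether a given output counts as anonymous under the GDPR remains a legal assessment, not something the code decides.

```python
from collections import defaultdict

# Hypothetical threshold: suppress any aggregate covering fewer distinct households.
MIN_HOUSEHOLDS = 5


def aggregate_by_transformer(readings, min_households=MIN_HOUSEHOLDS):
    """Aggregate smart meter readings to transformer level.

    readings: iterable of (household_id, transformer_id, interval, kwh) tuples.
    Returns {(transformer_id, interval): total_kwh}. Household IDs are dropped,
    and cells built from fewer than `min_households` distinct households are omitted.
    """
    totals = defaultdict(float)
    households = defaultdict(set)
    for household_id, transformer_id, interval, kwh in readings:
        key = (transformer_id, interval)
        totals[key] += kwh
        households[key].add(household_id)
    return {
        key: round(total, 3)
        for key, total in totals.items()
        if len(households[key]) >= min_households
    }


if __name__ == "__main__":
    readings = [(f"hh{i}", "T1", "2024-01-15T18:00", 0.8) for i in range(6)]
    readings.append(("hh99", "T2", "2024-01-15T18:00", 2.3))
    # Only the T1 aggregate is returned; T2 covers a single household.
    print(aggregate_by_transformer(readings))
```

Counting distinct households rather than readings matters: five readings from the same home would otherwise pass the threshold while still describing one household.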

3.3. EU Cybersecurity of Networks and Information Systems (NIS2 Directive)

- Expanded Scope (Essential Entities): Under NIS2, large organizations in the energy sector are classified as essential entities and medium-sized ones generally as important entities; both must comply with the directive [22]. As a result, most large utilities, grid operators such as transmission system operators (TSOs) and distribution system operators (DSOs), major power generation companies, and emerging players like large-scale renewable operators and aggregators fall within its scope. Many of these entities are actively implementing AI for grid management, trading, and maintenance. Smaller companies may be exempt, but supply chain obligations will indirectly extend requirements to AI providers, because covered entities will require their suppliers to meet appropriate security standards.

- Cybersecurity Risk Management Measures: NIS2 mandates that covered entities implement “appropriate and proportionate technical, operational, and organizational measures” to manage cybersecurity risks [23]. These include risk analysis, incident handling, business continuity, encryption, access control, and others [24]. An AI system integrated into operations must therefore be secured as part of the overall network. For instance, if a DSO uses AI to autonomously control voltage or detect outages, that AI and its data flows must meet state-of-the-art security, which the directive explicitly expects [23], through measures such as secure development practices, regular vulnerability assessments, and network segmentation. Implementing strong cybersecurity measures for AI models, such as protecting against adversarial attacks and ensuring the integrity of AI decisions, becomes a key aspect of compliance.

- Incident Reporting: Energy entities must report significant cybersecurity incidents to national authorities, such as CSIRTs, within strict timelines: an early warning within 24 h [9], followed by a fuller incident notification within 72 h. If an AI system is compromised, for example through an intrusion that manipulates an AI-driven energy management system, the resulting incident may be significant enough to trigger mandatory reporting. This requirement incentivizes continuous monitoring of AI behavior to detect anomalies and enhance security. Notably, the new Network Code on Cybersecurity for electricity aligns with NIS2’s reporting mechanisms [9], so that energy-specific incidents are handled coherently.

- Supply Chain Security and Supervision: NIS2 places emphasis on the cybersecurity of supply chains and supplier relationships [22]. Energy companies must evaluate the security of any AI software or cloud service they use. Regulators can impose penalties or corrective measures for non-compliance, while European cooperation through bodies like the Cooperation Group will provide further guidance. From an AI perspective, this pushes the use of trusted AI providers and possibly certified products. It also means documentation: energy entities might have to document how an AI tool’s risks are managed, to satisfy regulators during audits.

- National Strategies and Cross-Border Collaboration: Each Member State must adopt a national cybersecurity strategy covering sectors such as energy [22]. This, along with broader EU initiatives, fosters a strong focus on cyber resilience across all levels. In this environment, AI projects may find it easier to access support and guidance from national authorities on secure deployment practices. Collaboration frameworks such as the EU CyCLONe network, which manages cybersecurity crises, also cover incidents in the energy sector [9], ensuring a coordinated response if an AI-triggered incident occurs (e.g., a widespread grid disruption from a cyberattack on AI systems).
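The reporting clocks discussed in this section and under the GDPR start from the moment an entity becomes aware of an incident, and several can run in parallel when an AI compromise also exposes personal data. The Python sketch below computes the outer time limits for a given awareness time: the NIS2 early warning (24 h) and incident notification (72 h), and the GDPR breach notification (72 h, where feasible). It is a simplification under stated assumptions: whether an incident is significant or a breach is notifiable is taken as an input, NIS2’s later final report and any national or network-code-specific channels are not modeled, and the function and label names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Outer time limits counted from awareness of the incident (simplified).
# NIS2 Art. 23: early warning within 24 h, incident notification within 72 h.
# GDPR Art. 33: notify the supervisory authority within 72 h where feasible.
NIS2_DEADLINES = {
    "NIS2 early warning": timedelta(hours=24),
    "NIS2 incident notification": timedelta(hours=72),
}
GDPR_DEADLINES = {
    "GDPR breach notification": timedelta(hours=72),
}


def reporting_schedule(aware_at: datetime, significant_incident: bool,
                       personal_data_breach: bool) -> list[tuple[str, datetime]]:
    """Return (obligation, due time) pairs, earliest first."""
    if aware_at.tzinfo is None:
        raise ValueError("aware_at must be timezone-aware to avoid ambiguous deadlines")
    applicable = {}
    if significant_incident:  # significance is assessed upstream (assumption)
        applicable.update(NIS2_DEADLINES)
    if personal_data_breach:  # notifiability is assessed upstream (assumption)
        applicable.update(GDPR_DEADLINES)
    schedule = [(name, aware_at + limit) for name, limit in applicable.items()]
    return sorted(schedule, key=lambda item: item[1])


if __name__ == "__main__":
    aware = datetime(2025, 3, 3, 9, 30, tzinfo=timezone.utc)
    for name, due in reporting_schedule(aware, significant_incident=True,
                                        personal_data_breach=True):
        print(f"{due.isoformat()}  {name}")
```

Treating these values as outer limits matters: both regimes expect notification without undue delay, so the computed times are the latest acceptable points, not targets.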

3.4. EU Cyber Resilience Act (CRA)

- Scope of Products: The CRA applies to almost any software or hardware product with a digital component, except for certain products already regulated under other frameworks, such as medical devices [22]. This broad scope includes everything from AI software libraries to smart inverters in solar panel systems. In the energy sector, devices such as smart meters, EV charging stations, grid monitoring tools, and home energy management apps all fall under its requirements and must comply with its cybersecurity standards.

- Baseline Security Requirements: Manufacturers must ensure that their products meet specific cybersecurity requirements, including protection against unauthorized access, secure-by-design and default configurations, data protection for confidentiality and integrity, and mitigation of known vulnerabilities [22]. For example, the AI software used in a wind turbine control system must not contain hardcoded passwords or unpatched vulnerabilities. The CRA’s requirements cover the entire product lifecycle, from design and development, where security must be embedded, to post-sale maintenance, which includes providing security updates [22].

- Critical Products and Conformity Assessment: The Act designates certain product categories as “critical”, including operating systems, firewalls, and specific industrial control systems. These products may require third-party conformity assessments before they can be placed on the market [22]. In an energy context, a control system for substations or grid management software could be deemed critical and thus need an independent cybersecurity certification prior to deployment. If an AI platform falls under a critical category, its provider must get an external audit of its security measures.

- CE Marking and EU Market Access: Compliant products will bear the CE marking, indicating that they meet CRA standards [22]. Non-compliance can lead to fines or exclusion from EU markets, making cybersecurity a crucial consideration for global AI vendors in the energy sector. This regulation extends the traditional concept of safety compliance, familiar in energy for electrical safety, to include the cyber safety of digital components.

- Impact on AI Developers: While the CRA does not explicitly mention AI, any AI software qualifies as a digital product and must comply. This includes AI-driven energy management software. The CRA’s secure development requirements, such as addressing vulnerabilities and publishing security guidance, align with the AI Act’s mandates for high-risk AI, particularly regarding robustness and accuracy. For example, under the CRA, an AI software package must not contain unpatched critical common vulnerabilities and exposures (CVEs), while the AI Act requires safeguards against manipulation of its outputs. Together, these regulations drive developers toward building secure and trustworthy AI.
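Two of the CRA expectations described above, no known exploitable vulnerabilities and no default or hardcoded passwords, lend themselves to an automated pre-release gate. The Python sketch below checks a software bill of materials (SBOM) against an advisory list and scans configuration for factory passwords. Everything in it is hypothetical: the component names, the placeholder advisory IDs, and the password list stand in for a real vulnerability feed and a real secrets scanner, and exact-version matching simplifies the version ranges that real advisories use. The CRA itself does not prescribe this particular check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str


# Hypothetical advisory feed: (component, affected version) -> placeholder advisory ID.
KNOWN_VULNERABLE = {
    ("modbus-gateway-lib", "2.1.0"): "ADVISORY-EXAMPLE-001",
    ("forecast-runtime", "0.9.3"): "ADVISORY-EXAMPLE-002",
}

# Hypothetical list of factory passwords that must never ship enabled.
DEFAULT_PASSWORDS = {"admin", "password", "1234", "default"}


def release_findings(sbom: list[Component], config: dict[str, str]) -> list[str]:
    """Collect blocking findings; an empty list means this simplified gate passes."""
    findings = []
    for comp in sbom:
        advisory = KNOWN_VULNERABLE.get((comp.name, comp.version))
        if advisory:
            findings.append(f"{comp.name} {comp.version}: known vulnerability {advisory}")
    for key, value in config.items():
        if "password" in key.lower() and value.lower() in DEFAULT_PASSWORDS:
            findings.append(f"config '{key}' uses a default password")
    return findings


if __name__ == "__main__":
    sbom = [Component("modbus-gateway-lib", "2.1.0"), Component("plotting-lib", "3.8.0")]
    config = {"admin_password": "admin", "log_level": "info"}
    for finding in release_findings(sbom, config):
        print("BLOCKER:", finding)
```

Running such a gate in the build pipeline, rather than once before launch, mirrors the CRA’s lifecycle view: a component that was clean at release can acquire an advisory later and trigger a security update.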

3.5. EU Network Code on Cybersecurity (Electricity Sector)

- Target and Scope: The code focuses on cross-border electricity flows and the key entities that influence them. In practice, this includes transmission system operators (TSOs), large distribution system operators (DSOs) connected to cross-border systems, and potentially other critical grid participants such as regional coordination centers. These entities manage the high-voltage grid and facilitate electricity movement between countries, making them essential to European energy security. A cyber incident affecting them could have widespread consequences across the EU.

- Risk Assessment Regime: The core of the code is the establishment of a recurrent cybersecurity risk assessment process for the electricity sector [9]. TSOs and other designated operators must systematically identify digital assets and processes essential to cross-border electricity flows, assess their cybersecurity risks, and implement appropriate mitigation measures. AI systems used in grid operations, such as those used for load balancing or interconnector control, will be evaluated as part of this process. If an AI system is deemed critical, operators must assess the cyber risks it introduces, including threats like data poisoning attacks or AI malfunctions, and implement the necessary controls. These may range from technical safeguards to staff training for AI oversight.

- Governance and Alignment with NIS2: The network code establishes a governance model for cybersecurity in electricity that leverages existing structures [9]. It aligns with horizontal legislation like NIS2 by using similar or the same reporting channels and collaborative bodies. For example, incident reporting under this code would go through the national CSIRTs, as mandated by NIS2 [9]. In the case of a large-scale cyber crisis affecting energy, coordination would occur via the EU CyCLONe network (Cyber Crisis Liaison Organization Network) [9]. This alignment prevents duplication, allowing energy companies to follow a unified process rather than separate compliance frameworks. It also integrates energy-specific insights into the broader cybersecurity ecosystem. The European Commission emphasized that this common baseline approach ensures consistency with NIS2 while respecting established practices in the power industry [9].

- Stakeholder Collaboration: The code was developed with input from ENTSO-E (the European network of TSOs), the EU DSO entity, and the Agency for the Cooperation of Energy Regulators (ACER) [9]. It fosters a collaborative approach in which methodologies and risk scenarios can be shared and the code can be updated as threats evolve [9]. We can expect sector-specific guidance, perhaps even lists of best practices for securing AI in control systems, to emerge under this framework.

- Compliance and Enforcement: As a delegated regulation under the Electricity Regulation (EU) 2019/943, the code becomes binding once the scrutiny period by the Council and Parliament has passed [9]. TSOs/DSOs will likely have to report compliance and could face scrutiny by energy regulators or cybersecurity authorities if they fall short. The code promotes a common baseline but also respects investments already made [9]: if a country’s TSO already has a robust cyber program, the code will not force unnecessary changes as long as the required outcomes are met.
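The recurrent risk assessment at the core of the code can be pictured as a register that scores each digital asset and threat pair and is re-scored in every cycle. The Python sketch below uses a generic likelihood-times-impact matrix; the 1 to 5 scales, the treatment threshold of 12, and the example entries are assumptions for illustration, not the methodology defined under the Network Code on Cybersecurity.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    asset: str
    threat: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (cross-border disruption)

    def __post_init__(self):
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


TREATMENT_THRESHOLD = 12  # hypothetical: scores at or above this require mitigation


def prioritize(register: list[Risk]) -> list[Risk]:
    """Return the risks that need treatment, highest score first."""
    return sorted(
        (risk for risk in register if risk.score >= TREATMENT_THRESHOLD),
        key=lambda risk: risk.score,
        reverse=True,
    )


if __name__ == "__main__":
    register = [
        Risk("load-balancing model", "training-data poisoning", likelihood=3, impact=5),
        Risk("interconnector controller", "setpoint manipulation", likelihood=2, impact=5),
        Risk("demand forecast model", "undetected model drift", likelihood=4, impact=3),
    ]
    for risk in prioritize(register):
        print(f"{risk.score:>2}  {risk.asset}: {risk.threat}")
```

Because the assessment is recurrent, the same register would be re-scored as controls are added or threats change, so a risk can fall below the threshold once mitigated or rise above it when a new attack technique emerges.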

3.6. EU Cybersecurity Act and Certification Framework

- ENISA’s Role: The Act empowered ENISA to actively support the implementation of cybersecurity policies, including developing certification schemes and providing guidance [19,26]. ENISA is involved in cybersecurity efforts for both the energy sector and AI. It has published reports on AI-related cybersecurity challenges, including one on AI for electricity demand forecasting, which highlights potential threats and corresponding security controls [10], and is developing frameworks for AI cybersecurity best practices [27,28]. These efforts help translate high-level regulations into practical measures. An energy company looking to secure an AI system can refer to ENISA’s guidance to understand threats specific to industrial AI and how to counter them, feeding into its NIS2 and network code compliance.

- European Cybersecurity Certification Framework: The Act lays out a structure by which EU-wide cybersecurity certification schemes can be created for different categories of products and services [10,29]. These certification schemes are voluntary unless mandated by later legislation and include assurance levels of Basic, Substantial, and High. This is particularly relevant for the energy sector, as certification can apply to smart grids, IoT, and cloud services, all of which are essential for AI applications. Notably, a certification scheme for general IT product security based on Common Criteria (EUCC) and the EU Cloud Certification Scheme (EUCS) have been in advanced development stages [30]. A future certification scheme for AI systems or energy sector IT could emerge, but until then, existing schemes provide a useful framework. For example, if an AI platform for energy management operates on a cloud service, an energy company might opt for a provider certified under the EUCS at the “High” assurance level, which would indicate stringent security standards [31]. Similarly, the IoT components used in AI-driven smart grids might be certified under a future EU IoT security scheme.

- Synergy with the Cyber Resilience Act: The Cybersecurity Act’s framework provides a basis for implementing the CRA, which might leverage existing schemes or initiate new ones to certify that products meet its requirements. Energy companies can actively contribute to the development of certification schemes by engaging with standards organizations to ensure that energy-specific requirements, such as reliability, are addressed. This fosters a harmonized approach in which independent certification replaces vendor-specific security claims, providing a higher level of assurance. Such certifications can also streamline procurement processes, enabling companies to prioritize certified AI systems for critical operations.

- Global and Sectoral Influence: The Act also positions the EU as a leader in cybersecurity standard-setting [18]. For the energy sector, which operates within a globally interconnected framework involving cross-border grids and international equipment suppliers, EU cybersecurity standards could shape global norms. The Cybersecurity Act explicitly promotes the development of international standards for trustworthy technology, reinforcing the EU’s role in setting cybersecurity benchmarks that may influence regulations worldwide [18]. In AI and energy, this could lead to international guidelines or mutual recognition of certification, a point that becomes relevant when the U.S. and Chinese approaches are compared later in this review.

4. Impact of Regulations on AI Development, Adoption, and Deployment in Energy

4.1. AI in Energy Generation (Supply-Side) and Regulations

- AI Act (High-Risk Systems): Many generation facilities are part of critical infrastructure. If an AI system is introduced as a safety component in a power plant’s control system—for example, an AI that helps regulate reactor conditions in a nuclear plant or ensures safe load dispatch in a large hydro dam—it would likely be deemed high-risk under the AI Act’s critical infrastructure category [18]. Compliance would require the AI provider of the control system to conduct rigorous ex ante testing and certification of the AI. This could slow down AI adoption because AI providers must invest in documentation and risk assessments and possibly involve notified bodies for assessment. However, given that generation companies are accustomed to certification, e.g., turbines must be certified to grid codes, integrating an AI certification step might be feasible. A compliance challenge is the lack of established standards today for validating AI performance in control. Until formal standards are established, proving that an AI system meets the “state of the art” in accuracy and robustness may be challenging. This creates legal uncertainty that regulators need to address through guidelines or implementing acts.

- GDPR (if personal data are involved): Power generation is primarily industrial and typically does not involve extensive personal data processing. However, certain cases require GDPR compliance. For example, AI systems optimizing distributed residential solar and battery systems may handle user data, overlapping with the consumer side. Within power plants, AI typically processes equipment sensor data, which are not personal. However, the GDPR becomes relevant when workforce data are involved, such as AI-driven workforce scheduling, or when AI-powered security systems process facial images, as these constitute personal data.

- NIS2 and Cybersecurity/Network Code: Power generation, especially electricity generation, is part of the energy sector in NIS2 [22]. Large generators are considered essential entities. Therefore, any AI introduced in generation must be covered in the generator’s cybersecurity risk management. For instance, if a gas power plant deploys an AI for predictive maintenance that connects to cloud analytics, NIS2 would mandate assessing risks like data exfiltration or control override via that AI. The Network Code on Cybersecurity primarily focuses on grid operations, but also extends to generation assets, as TSOs depend on generator behavior for cross-border electricity flows. Risk assessments under the code may identify critical generation sites where digital systems, including AI-driven governors or automated voltage regulators, require cybersecurity risk mitigation measures [9]. This could drive sector-wide initiatives to enhance AI security in generator control systems, potentially leading to standardized configurations or emergency override mechanisms for AI anomalies. A key compliance challenge is integrating AI within both IT and OT environments. Many power plants operate under functional safety standards, such as IEC 61508, which govern traditional operational technology. Certifying AI within these frameworks is not straightforward, creating dual pressure for generation companies to maintain existing safety certifications while also meeting new cybersecurity and AI compliance requirements.

- Cyber Resilience Act: If generators acquire new equipment with AI capabilities, such as a smart wind turbine controller with machine learning, the Cyber Resilience Act (CRA) ensures that the product is designed with cybersecurity in mind. This benefits generators by reducing concerns over fundamental security vulnerabilities. However, manufacturers must adapt to CRA requirements, which may initially delay product releases or slightly increase costs due to secure coding practices and external testing. In the medium term, the CRA is likely to enhance trust in AI-driven generation technologies. A wind farm operator, for example, can have greater confidence that an AI-enabled turbine bearing a CE mark under the CRA meets stringent security standards.

- Cybersecurity Act (Certification): Large generators may rely on established certification schemes for different components, such as ISO/IEC 27001 for plant IT systems and IEC 62443 for industrial control system security. The EU cybersecurity certification framework could introduce sector-specific schemes, such as a “High” assurance certification for industrial automation security, which generators could use to certify AI-driven control systems. While not yet mandatory, forward-thinking companies may adopt these certifications to demonstrate due diligence, potentially lowering insurance costs and reducing liability risks.
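The emergency override mechanisms for AI anomalies mentioned in this section can be as simple as a guard that accepts an AI-proposed setpoint only inside a validated operating envelope and otherwise reverts to the conventional controller. The Python sketch below shows that pattern for a generator output setpoint; the envelope values, the ramp limit, and the function names are hypothetical, and such a guard would complement, not replace, the certified safety functions required under standards such as IEC 61508.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Envelope:
    """Hypothetical validated operating envelope for a generator setpoint (MW)."""
    min_mw: float
    max_mw: float
    max_ramp_mw: float  # largest change allowed in one control step


def select_setpoint(ai_mw: Optional[float], fallback_mw: float, current_mw: float,
                    env: Envelope) -> tuple[float, str]:
    """Use the AI proposal only if it is finite, within limits, and within the ramp rate.

    Otherwise return the conventional controller's value. The reason string lets
    every override be logged for later review.
    """
    if ai_mw is None or not math.isfinite(ai_mw):
        return fallback_mw, "fallback: no valid AI output"
    if not env.min_mw <= ai_mw <= env.max_mw:
        return fallback_mw, "fallback: outside operating limits"
    if abs(ai_mw - current_mw) > env.max_ramp_mw:
        return fallback_mw, "fallback: ramp limit exceeded"
    return ai_mw, "ai"


if __name__ == "__main__":
    env = Envelope(min_mw=50.0, max_mw=400.0, max_ramp_mw=20.0)
    for proposal in (210.0, 450.0, 260.0, float("nan")):
        print(proposal, select_setpoint(proposal, fallback_mw=200.0,
                                        current_mw=200.0, env=env))
```

Returning a reason alongside the setpoint is a deliberate choice: logged overrides give operators and auditors the evidence trail that both the AI Act and cybersecurity risk assessments ask for.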

4.2. AI in Transmission and Distribution Grids and Regulations

- AI Act (High-Risk and Requirements): AI used by TSOs/DSOs for grid management will generally be classified as high-risk because it involves managing critical electricity network infrastructure [18], so the AI Act’s strict obligations apply. Grid operators and technology vendors must implement comprehensive risk management measures, which include identifying potential failure modes, ensuring high accuracy through rigorous testing, and integrating failsafe mechanisms. AI false positives in fault detection, for example, must be accounted for to prevent unnecessary disruptions. Extensive documentation is required to demonstrate compliance. A particularly relevant requirement is human oversight: high-risk AI systems must be designed so that humans can effectively monitor them and intervene or override them when necessary. This aligns with existing grid operation practices, where operators can switch to manual control if automation fails, but the AI Act requires this process to be formally structured. For example, if an AI system recommends switching off a power line to prevent overload, a human operator should verify the action, particularly in the early stages of adoption. Over time, as trust in AI grows, some decisions may become fully automated with only ex-post human monitoring; even then, operators must be able to demonstrate that the AI system is reliable and trustworthy before granting it greater autonomy.

- NIS2 and Network Code (Cybersecurity): TSOs and large DSOs are explicitly essential entities under NIS2 and have some of the strictest obligations [22]. The new Network Code on Cybersecurity adds a tailored layer, requiring recurring cyber risk assessments focused on grid operations [9]. For AI in grids, continuous evaluation is essential to understand how AI alters the cybersecurity threat landscape. A distribution system operator deploying AI for distribution automation must analyze new risks, such as the possibility of an attacker manipulating sensor inputs to create false load readings and trigger a power outage. Mitigation strategies should include authentication of sensor data to prevent spoofing, redundancies where multiple AI models cross-check results for consistency, and controlled execution where AI provides recommendations, but a secure control system validates and executes them. Compliance is not a one-time process, but requires ongoing risk assessment and adaptation. The Network Code on Cybersecurity envisions a dynamic security process that evolves alongside emerging threats, ensuring that AI-enabled grid operations remain resilient over time [9]. This continuous loop could slow the deployment of AI if each new AI app triggers a lengthy risk assessment, but it is vital for security.

- Cyber Resilience Act (Grid Equipment): Much grid equipment (RTUs, IEDs, sensors) and software will need CRA compliance, as discussed. For grid operators, one impact is that they will have to ensure that new procurements after 2027 are CRA-compliant. If a DSO purchases AI-powered grid management software in 2028 that lacks CE marking under the CRA, it may face legal issues using it. Therefore, operators will likely update technical specifications to require CRA compliance from vendors. The CRA also covers routers, switches, and other network gear in substations, much of which has historically shipped with default passwords and similar weaknesses. The CRA should eliminate such weak points by forcing vendors to ship secure defaults [22]. Hence, the grid’s digital infrastructure becomes more secure by default, reducing the risk that a correctly operating AI algorithm could be undermined by attacks on the underlying systems.

- GDPR (Smart Grid Data): At the TSO level, personal data usage is minimal, but at the DSO level, particularly in low-voltage networks, there is a significant overlap with consumer data. DSOs manage smart meter data, which qualifies as personal data under the GDPR. When AI is applied to consumption or prosumer data, such as for detecting non-technical losses or forecasting local demand at the transformer level, GDPR compliance becomes mandatory. As regulated monopolies, DSOs must strictly adhere to purpose limitations when using customer data. If AI applications require data beyond what is essential for grid operation, they may conflict with the GDPR’s purpose limitation principle. For example, using smart meter data to infer household occupancy patterns might not be legally permitted unless the data are anonymized or aggregated. To navigate these restrictions, DSOs are likely to anonymize or aggregate data when training AI models for load forecasting. This approach aligns with regulatory best practices and is encouraged by ENISA’s privacy guidelines, reducing compliance risks while maintaining AI’s effectiveness in grid management [19].

- Sector Regulations and Codes: Beyond the EU’s overarching regulations, transmission system operators and distribution system operators must adhere to technical standards such as ENTSO-E operational codes and national grid codes. These standards often mandate reliability criteria like N-1 security, ensuring that the grid can withstand the failure of a single component without widespread outages. When integrating AI into grid operations, operators must ensure that these systems do not compromise established reliability standards. While AI can optimize grid performance, excessive optimization could reduce necessary safety margins. Regulators may require demonstration that any automated system, including AI, maintains or enhances reliability. This could involve obtaining regulatory approval before deploying fully autonomous AI systems, such as voltage control mechanisms, to ensure compliance with operational criteria. Recognizing AI’s increasing role in grid management, some EU national energy regulators have initiated consultations to update regulatory frameworks. The European Commission has launched a stakeholder survey to gather input from grid operators and regulators on AI integration [35]. Additionally, the Eurelectric Action Plan on Grids outlines industry perspectives on the regulatory evolution needed for AI-driven grid management [36].
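The mitigation strategies described for distribution automation (authenticating sensor data, cross-checking redundant models, and letting the AI recommend while a human and a secure control system decide) can be combined in one control loop. The Python sketch below illustrates this under stated assumptions: readings are authenticated with HMAC-SHA256 from Python’s standard `hmac` module; the pre-shared key, the 5 % agreement tolerance, and all function names are hypothetical; and an "executed" result stands for handing the approved command to the secure control system, which is outside the sketch.

```python
import hashlib
import hmac
import json

# Hypothetical pre-shared key; a real deployment would keep keys in an HSM or key vault.
SENSOR_KEY = b"example-key-do-not-use"


def sign(reading: dict, key: bytes = SENSOR_KEY) -> str:
    """HMAC-SHA256 tag over a canonical JSON encoding of the reading."""
    payload = json.dumps(reading, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(reading: dict, tag: str, key: bytes = SENSOR_KEY) -> bool:
    """Constant-time comparison, so spoofed or altered readings are rejected."""
    return hmac.compare_digest(sign(reading, key), tag)


def cross_check(a: float, b: float, tolerance: float = 0.05) -> bool:
    """Two independent models must agree within a relative tolerance (hypothetical 5 %)."""
    scale = max(abs(a), abs(b), 1e-9)
    return abs(a - b) / scale <= tolerance


def decide(readings, model_a, model_b, overload_limit_mw, operator_approves):
    """Controlled execution: the AI only recommends; an operator approves switching.

    readings: list of (reading_dict, tag) pairs.
    model_a, model_b: callables mapping trusted readings to a load forecast in MW.
    operator_approves: callable taking the recommendation text, returning bool.
    """
    trusted = [reading for reading, tag in readings if verify(reading, tag)]
    if len(trusted) < len(readings):
        return "alarm: unauthenticated sensor data, hold current state"
    load_a, load_b = model_a(trusted), model_b(trusted)
    if not cross_check(load_a, load_b):
        return "alarm: models disagree, escalate to operator"
    forecast = max(load_a, load_b)
    if forecast <= overload_limit_mw:
        return "no action"
    recommendation = f"open line (forecast {forecast:.1f} MW > {overload_limit_mw} MW)"
    if operator_approves(recommendation):
        return recommendation + " -> executed"
    return recommendation + " -> rejected by operator"


if __name__ == "__main__":
    r1, r2 = {"sensor": "S1", "mw": 120.0}, {"sensor": "S2", "mw": 130.0}
    readings = [(r1, sign(r1)), (r2, sign(r2))]
    total = lambda rs: sum(r["mw"] for r in rs)
    print(decide(readings, total, total, 240.0, lambda rec: True))
```

Holding the current state when authentication fails, instead of acting on the remaining readings, is a conservative choice that matches the text’s concern that manipulated sensor inputs could trigger an outage.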

4.3. AI in Energy Consumption (Demand-Side) and Regulations

- GDPR (and ePrivacy): The most relevant regulation on the demand side is the GDPR, which treats smart meter data as personal data. Smart meters collect granular household electricity data that can reveal usage patterns and daily routines, so any AI system processing these data must comply with the GDPR. For example, an AI-powered app that analyzes smart meter data to offer energy-saving tips must obtain user consent or rely on another legal basis, such as legitimate interests, supported by a documented balancing assessment. In many EU countries, using smart meter data beyond billing requires explicit consent. If AI profiles customers by usage pattern for targeted offers, individuals have the right to object, and where the profiling serves direct marketing, that objection must always be honored. The draft ePrivacy Regulation, if enacted, could further regulate data use from smart devices. Compliance also requires clear, plain-language privacy notices explaining AI data processing and any automated decisions. Regulatory bodies such as the CNIL (the French data protection authority) have issued GDPR guidance on AI [37], emphasizing the importance of choosing the right legal basis and conducting Data Protection Impact Assessments (DPIAs). Given the systematic monitoring of consumption data, demand-side AI providers will almost always need a DPIA.

- AI Act (Limited-Risk Transparency and Some High-Risk): Most demand-side AI tools would not be classified as high-risk under the AI Act because they are not safety-critical. However, AI used to evaluate access to essential private services, such as credit scoring, is considered high-risk. In rare cases, this could apply to AI systems that determine eligibility for special energy tariffs if they affect financial obligations in a way similar to credit decisions. More relevant are the transparency requirements for AI that interacts with users. If a consumer engages with an AI chatbot about their energy bill, the AI Act requires that the consumer be informed that they are interacting with an AI system rather than a human [3]. Similarly, AI-generated content, such as an automatically produced energy advisory report, may need to be identified as AI-generated. The Act also promotes voluntary codes of conduct for lower-risk AI; companies offering AI-powered smart thermostats, for example, may adopt an EU-wide code to ensure fairness and transparency as a best practice.

- Consumer Protection Law and Product Liability: Although they do not explicitly address AI, general consumer protection laws such as the Unfair Commercial Practices Directive and the General Product Safety Regulation still apply. If an AI-driven product misleads consumers, for example by claiming greater energy savings than it delivers, regulators can intervene. Similarly, if a smart home AI device malfunctions and causes damage, such as an AI thermostat overheating an appliance and starting a fire, liability may fall under product liability law. The EU has revised the Product Liability Directive to cover software, including AI systems. The changes aim to make it easier for consumers to claim compensation, in some cases easing the burden of proof when AI causes harm [38]. Companies must ensure truthful marketing of AI benefits and maintain product safety to comply with these evolving rules. The AI Act also interacts with product safety legislation; for example, the Machinery Regulation now includes provisions ensuring that AI-powered machines remain safe, reinforcing the importance of aligning AI-driven energy products with existing safety standards [39].

- Cybersecurity (CRA, etc.): The demand side is experiencing a surge in Internet of Things (IoT) devices such as smart plugs, thermostats, home batteries, and EV chargers, many of which include connectivity and AI features. If not properly secured, these devices can serve as entry points for cyberattacks. The Cyber Resilience Act (CRA) addresses this by imposing stricter cybersecurity requirements on connected products, protecting both consumers and the grid. Unsecured high-wattage IoT devices, for instance, can be compromised by malware, and researchers have warned that if many such devices are hijacked, attackers could switch them simultaneously to disrupt grid stability. Compliance with the CRA should mitigate these risks by mandating measures such as secure-by-default configurations and secure update mechanisms, strengthening AI-powered home devices against cyber threats [22]. The NIS2 Directive primarily targets essential and important entities, such as energy operators and digital infrastructure providers; it does not apply to individual consumers and reaches device manufacturers only if they themselves qualify as in-scope entities. Nevertheless, a secure ecosystem benefits the reliability of demand-side AI applications. In the interim, ENISA provides IoT security guidelines that manufacturers are encouraged to follow until the CRA is fully applicable [32].

- Energy-Specific Data Regulations: The EU has introduced rules to facilitate data sharing in the energy sector, promoting innovation while protecting privacy. The Electricity Directive (EU) 2019/944 emphasizes data interoperability and grants consumers the right to access their energy consumption data and to authorize third-party service providers, such as AI companies, to use it. Data sharing under this framework requires consumer consent, and distribution system operators must ensure data security [40]. This approach aligns with the GDPR, as consent serves as the legal basis for processing, ensuring a level playing field among service providers. For AI startups offering new analytical applications, these laws are enabling: with user consent, they can access smart meter data via standard application programming interfaces (APIs) instead of installing proprietary sensors. Once they receive these data, however, they assume the responsibilities of a data controller under the GDPR [41]. Additionally, the EU Data Act promotes data sharing between data holders and users while protecting confidentiality, supporting innovative energy services by clarifying the rules on data access and use [42]. Together, these frameworks aim to ensure secure and transparent handling of consumer data, fostering trust and enabling AI-driven services that optimize energy consumption.
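
The consent-based access model described above can be illustrated with a small gatekeeping check. The sketch assumes a hypothetical consent registry in which each record names the customer, the authorized third party, the permitted purposes, and an expiry date; Directive (EU) 2019/944 does not prescribe this data structure, and the provider name `acme-ai` is invented. Scoping consent by purpose mirrors the GDPR’s purpose limitation principle.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet, List


@dataclass(frozen=True)
class Consent:
    """Hypothetical consent record held by the data-access platform."""
    customer_id: str
    third_party: str
    purposes: FrozenSet[str]
    valid_until: date
    revoked: bool = False


def may_release(consents: List[Consent], customer_id: str, third_party: str,
                purpose: str, today: date) -> bool:
    """Release data only under an active, unrevoked consent covering this purpose."""
    return any(
        c.customer_id == customer_id
        and c.third_party == third_party
        and purpose in c.purposes
        and not c.revoked
        and today <= c.valid_until
        for c in consents
    )


if __name__ == "__main__":
    registry = [Consent("c1", "acme-ai", frozenset({"load_forecast"}), date(2030, 1, 1))]
    print(may_release(registry, "c1", "acme-ai", "load_forecast", date(2025, 6, 1)))  # True
    print(may_release(registry, "c1", "acme-ai", "marketing", date(2025, 6, 1)))  # False
```

A request from the hypothetical provider for load forecasting is allowed, whereas the same provider’s request for marketing, or any request after expiry or revocation, is refused.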

4.4. AI in Energy Markets and Trading, and Regulations

- AI Act (Gaps in High-Risk Coverage): Notably, the EU AI Act does not explicitly classify financial or energy trading algorithms as high-risk, unlike credit scoring. Research highlights that risks such as market dominance and AI-driven price manipulation are not directly addressed within the AI Act’s current scope [1]. An AI system used for energy trading is therefore likely to fall into the limited- or minimal-risk category, provided it does not directly affect critical infrastructure operations, and would require neither a conformity assessment nor registration. This could be seen as a regulatory gap, considering that an errant algorithm could cause widespread economic disruption or enable anti-competitive behavior, and there have been calls to address these risks more explicitly [1]. For now, the AI Act may impose only transparency obligations, and only where an AI system interacts with a human trader, which is unlikely in automated trading.

- Market Regulations (REMIT and Competition Law): The EU regulates wholesale energy markets through the Regulation on Wholesale Energy Market Integrity and Transparency (REMIT), which prohibits market manipulation and insider trading in electricity and gas markets and requires trading surveillance to detect abusive practices. Companies using AI for trading must ensure that their systems do not engage in manipulative behavior, such as placing and withdrawing orders to influence prices, a tactic known as layering or spoofing that has led to penalties in other financial markets. REMIT applies regardless of whether trades are executed by a human or by AI, so companies remain fully accountable. If an AI trading strategy causes an artificial price spike, the company could face an investigation or fines even if there was no intent to manipulate the market [1]. EU competition law is also relevant, as AI could lead to tacit collusion, in which algorithms learn to coordinate prices without explicit agreements [45]. Article 101 TFEU prohibits anticompetitive agreements and concerted practices, but proving that independently deployed algorithms engaged in such a practice is complex. No law explicitly bans specific AI pricing strategies, yet regulators caution that relying on common third-party AI platforms or pricing algorithms could inadvertently facilitate collusion and expose companies to antitrust enforcement. Legal advisors therefore urge firms to consider antitrust compliance when deploying AI in pricing decisions, as enforcement approaches to algorithm-driven parallel behavior remain uncertain.

- Financial Regulations (if applicable): Some energy trading activities, such as trading derivatives and futures, fall under financial regulations like the Markets in Financial Instruments Directive (MiFID II) and the Market Abuse Regulation (MAR). MiFID II includes provisions on algorithmic trading, requiring firms engaged in it to implement effective systems and risk controls to prevent disorderly markets, and requiring high-frequency trading firms to be authorized [46]. If an energy company uses AI for such activities, for example trading electricity derivatives, these rules apply. In practice, larger utilities often have trading divisions that are already under financial supervision; they must notify supervisors and trading venues of their algorithmic trading and ensure thorough testing of algorithms to avoid instability [47]. Energy AI traders may therefore already operate within these existing frameworks. MAR also imposes controls on algorithmic trading systems to prevent abusive practices, requiring firms deploying AI-based trading strategies to maintain robust monitoring and safeguards [48].

- Cybersecurity and Resilience: Market operators, such as EPEX Spot and national exchanges, along with aggregators running platforms, are likely to fall within the scope of the NIS2 Directive, whose energy sector explicitly covers nominated electricity market operators and market participants providing aggregation, demand response, or energy storage services [22,49]. This classification mandates robust cybersecurity measures for critical systems, including algorithmic matching engines and AI-driven market monitoring tools. A breach or manipulation of these systems could cause significant disruptions, such as false signals triggering price volatility. Consequently, NIS2, alongside the general principles of the Network Code on Cybersecurity (which focuses primarily on grid operations), emphasizes the need for strong defensive measures [50]. In addition, the Digital Operational Resilience Act (DORA), applicable since January 2025, strengthens the digital operational resilience of financial entities within the EU, which may include energy sector firms that form part of the financial market infrastructure. DORA requires critical trading systems to have backup and recovery mechanisms that maintain operational continuity. While DORA does not specifically target AI systems, these systems are integral to the resilience planning it mandates [51].

- Transparency and Accountability: The use of AI in markets has prompted calls for transparency. For instance, if an AI system autonomously makes bidding decisions in a capacity auction, it is unclear whether regulators or market operators should have the authority to audit its decision-making to verify compliance and prevent manipulative practices. Currently, no legislation explicitly requires companies to disclose their proprietary algorithms, which are often treated as trade secrets. The AI Act focuses primarily on user notification and transparency, which may not address the need for regulatory access to algorithmic logic in this context. However, existing financial regulations do require firms to keep logs of automated trading decisions to facilitate oversight. This practice could extend to energy markets, where regulators might in future request algorithmic trading policies from energy firms, as financial regulators already do. ACER currently monitors trading patterns under REMIT and is exploring the use of AI tools to enhance its surveillance capabilities, aiming to detect and analyze unusual trading behavior that could indicate market manipulation [52,53].
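
Surveillance for the layering and spoofing behavior discussed in the REMIT bullet above often starts from simple screens before analysts examine price impact and opposite-side executions. The sketch below computes, per trader, the share of order volume that was cancelled unfilled within a short time. The `Order` fields and all thresholds are illustrative assumptions, and a flag is only a prompt for human review, not a finding of manipulation under REMIT.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Order:
    trader: str
    volume_mw: float
    placed_s: float
    cancelled_s: Optional[float]  # None if the order was never cancelled
    filled_mw: float = 0.0


def flag_possible_layering(orders: List[Order], max_life_s: float = 2.0,
                           ratio_threshold: float = 0.8,
                           min_orders: int = 10) -> Dict[str, float]:
    """Return {trader: ratio} where quickly cancelled, unfilled volume dominates."""
    placed: Dict[str, float] = {}
    fleeting: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for o in orders:
        placed[o.trader] = placed.get(o.trader, 0.0) + o.volume_mw
        counts[o.trader] = counts.get(o.trader, 0) + 1
        short_lived = (o.cancelled_s is not None
                       and o.cancelled_s - o.placed_s <= max_life_s)
        if short_lived and o.filled_mw == 0.0:
            fleeting[o.trader] = fleeting.get(o.trader, 0.0) + o.volume_mw
    flagged: Dict[str, float] = {}
    for trader, vol in placed.items():
        ratio = fleeting.get(trader, 0.0) / vol if vol > 0 else 0.0
        # Require a minimum sample so a single cancelled order is not flagged.
        if counts[trader] >= min_orders and ratio >= ratio_threshold:
            flagged[trader] = round(ratio, 3)
    return flagged
```

For example, a trader who cancels nine of ten 10 MW orders within one second without any fill would be flagged with a ratio of 0.9, while a trader whose orders all execute would not.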
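
The MiFID II risk-control obligations mentioned in the financial regulations bullet above are typically implemented as pre-trade checks that sit between an algorithm and the trading venue. The sketch below combines a volume limit, a price collar, an order-rate limit, and a kill switch; it is loosely modelled on these commonly used controls, and the class names and limit values are hypothetical rather than taken from any regulation or venue rulebook. The collar uses the absolute reference price because electricity prices can be negative.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PreTradeLimits:
    max_volume_mw: float      # largest single order allowed
    price_collar_pct: float   # max deviation from the reference price, in %
    max_orders_per_min: int   # cap on accepted orders in any 60 s window


@dataclass
class RiskGate:
    limits: PreTradeLimits
    killed: bool = False
    _accepted_at: List[float] = field(default_factory=list, init=False, repr=False)

    def kill(self) -> None:
        """Kill switch: block every further order (re-enabling is not shown)."""
        self.killed = True

    def check(self, volume_mw: float, price: float, ref_price: float, now_s: float) -> str:
        """Return 'accepted' or the reason the order is rejected."""
        if self.killed:
            return "rejected: kill switch active"
        if volume_mw > self.limits.max_volume_mw:
            return "rejected: volume limit"
        # abs() keeps the collar meaningful when reference prices are negative.
        band = abs(ref_price) * self.limits.price_collar_pct / 100.0
        if abs(price - ref_price) > band:
            return "rejected: outside price collar"
        # Count only orders accepted within the last 60 seconds.
        self._accepted_at = [t for t in self._accepted_at if now_s - t < 60.0]
        if len(self._accepted_at) >= self.limits.max_orders_per_min:
            return "rejected: message rate"
        self._accepted_at.append(now_s)
        return "accepted"
```

In this design the AI model never reaches the market directly: every order passes through the gate, and an operator can halt all activity with `kill()`.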
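
The record-keeping practice noted in the transparency bullet above could be implemented as a tamper-evident log of automated bidding decisions. The sketch below chains each record to its predecessor with a SHA-256 hash so that later edits become detectable. Hash chaining is one common technique, assumed here for illustration; it is not prescribed by REMIT or MiFID II, and the record fields are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, decision: Dict[str, Any]) -> str:
    body = json.dumps({"prev": prev_hash, "decision": decision}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_decision(log: List[Dict[str, Any]], decision: Dict[str, Any]) -> Dict[str, Any]:
    """Append a decision record linked to the previous one by its hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"prev": prev_hash, "decision": decision,
             "hash": _digest(prev_hash, decision)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash; editing or removing an earlier record breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["decision"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Changing the volume in an earlier record after the fact makes `verify_chain` return `False`. Truncating the newest records would not be detected on its own, so the latest hash would also need to be anchored externally, for example with a regulator or a trusted timestamping service.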

5. Comparative Analysis: EU vs. U.S. and China

5.1. United States: Sectoral and Market-Driven Approach with Emerging Oversight

- Energy Industry Regulators: The Federal Energy Regulatory Commission (FERC) has not issued AI-specific regulations. However, it oversees the grid reliability standards developed by the North American Electric Reliability Corporation (NERC), which indirectly apply to technologies used in grid operations. NERC’s Critical Infrastructure Protection (CIP) standards impose cybersecurity requirements on bulk power system entities [54]. A utility that deploys AI in control systems must still comply with CIP requirements for electronic security perimeters and incident reporting. As under NIS2, U.S. grid operators must secure their systems, but compliance falls under existing CIP rules rather than an AI-specific law.

- Data Privacy: The U.S. does not have a nationwide equivalent to the GDPR. Instead, state laws such as California’s CCPA/CPRA and sector-specific regulations govern data privacy. In the energy sector, many states have rules protecting utility customer data: state public utility commissions often enforce “customer data privacy” regulations that restrict how utilities share or use consumption data, with requirements varying by state. An AI system handling smart meter data in California, for instance, must comply with the CCPA, respecting consumer data rights, and with utility commission rules on data consent. As in the EU, third-party access to utility customer data is generally consent-based, but in the U.S. this rests on state commission rules and contractual arrangements rather than a uniform legal mandate.

- AI Guidelines and Initiatives: In April 2024, the U.S. Department of Energy (DOE) released AI for Energy: Opportunities for a Modern Grid and Clean Energy Economy, the first agency-level roadmap that maps AI use cases to concrete grid-modernization objectives and sketches forthcoming performance metrics for federally funded pilots [55]. Although purely advisory, the roadmap signals the criteria that DOE is likely to embed in future funding calls and voluntary demonstration programs. More broadly, DOE’s long-running “Artificial Intelligence for Energy” initiative continues to sponsor R&D and issue best-practice notes, but it imposes no binding obligations on utilities [12]. Complementing DOE’s work, the National Institute of Standards and Technology (NIST) published a voluntary AI Risk Management Framework (AI RMF) in 2023 to guide organizations on bias, transparency, and security [56]. Energy companies at the technological frontier have already begun aligning internal policies with these documents. At the federal level, the White House “AI Bill of Rights” blueprint (2022) articulates principles (safe and effective systems, algorithmic discrimination protections, data privacy, notice and explanation, and human alternatives) that utilities can incorporate into customer-facing AI applications [57].

- Recent Developments: Recognizing the stakes for critical infrastructure, President Biden’s Executive Order on AI (October 2023) explicitly called for action to ensure AI safety in critical sectors [58]. It directed the DOE and the Department of Homeland Security to develop standards and practices for AI in critical infrastructure [12], a notable shift toward more government oversight. Although not a law, the order signaled possible follow-up measures, such as binding guidance or, if needed, regulations for applications like AI in grid operations. The order also emphasized cybersecurity for AI systems and could have spurred more investment in testing AI for grid contingencies [12]. It was revoked in January 2025.

- Flexibility vs. Certainty: Compared to the EU, the U.S. framework is more flexible and less prescriptive. This can benefit innovation, as companies can experiment without waiting for approvals, but it can also lead to uncertainty and uneven practices. In the EU, an energy AI provider can consult the AI Act and plan compliance; in the U.S., the same provider must infer how various general laws might apply and follow evolving agency guidance.

- Cybersecurity: U.S. energy cybersecurity is governed by mandatory NERC standards for the bulk power system and a patchwork of state rules for distribution. NIS2’s broad scope in the EU has no direct U.S. equivalent beyond those bulk power standards. That said, U.S. grid cybersecurity under NERC is stringent and enforced with substantial fines. A “secure by design” requirement for products, comparable to the CRA, is not yet law in the U.S., although there are related initiatives, such as the IoT Cybersecurity Improvement Act, which applies to federal procurement. The U.S. may rely more on market pressure and litigation than on proactive certification to penalize insecure products.

- AI Ethics and Bias: The EU embeds fairness and anti-discrimination protections in law, while the U.S. relies largely on frameworks. For example, if an AI system unintentionally discriminates in energy marketing or demand response incentives, the EU’s AI Act and GDPR impose requirements on fairness and data minimization. In the U.S., companies may refer to the AI Bill of Rights or the NIST AI RMF, but legal obligations arise only if civil rights or consumer protection laws are violated. The U.S. Federal Trade Commission has stated that it can act against “unfair or deceptive practices” involving AI, such as biased outcomes, under its general enforcement authority.

- Best Practices and Trends: A growing best practice in the U.S. is voluntary transparency toward consumers and regulators. Some utilities running pilot programs inform customers about how AI analyzes their usage data to build trust, a practice driven more by public relations than by legal requirements. Another approach involves industry consortiums developing guidelines; for example, the Electric Power Research Institute (EPRI) has initiatives focused on AI ethics for power systems [60]. Such initiatives can often move faster than regulation and sometimes influence regulators to codify them later.

5.2. China: State-Driven Strategy with Tight Control and Rapid Implementation

- National AI Strategy: China’s government has identified AI as a key driver of economic development and issued plans such as the New Generation AI Development Plan (2017), which set goals for AI deployment across industries and aims for global leadership by 2030. This strategy explicitly encourages AI in areas such as smart grids, renewable integration, and energy efficiency.

- AI Regulations: In the past few years, China has introduced pioneering regulations on specific aspects of AI:

  - Algorithms and Recommender Systems: The Internet Information Service Algorithmic Recommendation Management Provisions (effective March 2022) require companies to register algorithmic recommendation services with the Cyberspace Administration of China (CAC), adhere to rules ensuring content compliance and fairness, and allow users to opt out of recommendations [61]. Although aimed at online content platforms, they could technically cover any energy app that provides algorithmic recommendations (e.g., recommending products or behaviors), though in practice they target internet platforms. Still, they illustrate the underlying principle: algorithms must align with “core socialist values” and must not endanger public order [11].

  - Deep Synthesis (Deepfakes): The Provisions on Deep Synthesis Technologies (effective January 2023) regulate generative AI such as deepfakes, requiring clear labeling and prohibiting misuse [62]. They are not directly energy-related but form part of the AI legal landscape.

  - Generative AI: In 2023, China issued the Interim Measures for the Management of Generative AI Services (effective August 2023), which set rules for generative AI offered to the public, including content control and data governance [63]. The energy sector is not exempt, although generative AI is unlikely to be central to it; companies using GPT-like systems for customer service, for instance, must ensure that these systems do not produce prohibited content.

  - AI Ethics: China has issued guidelines such as the Ethical Norms for New Generation AI (2021), which, while not binding law, shape expectations that companies keep their AI transparent, controllable, and consistent with ethical norms [64].

- Data and Security Laws: In 2021, China enacted two major laws: the Personal Information Protection Law (PIPL), which resembles the GDPR in many respects [65], and the Data Security Law (DSL) [66]. PIPL protects personal data, including energy usage data linked to individuals, by requiring consent or another legal basis and granting individuals rights, albeit with China-specific exemptions, such as broad allowances for state agencies. The DSL classifies data by importance; some energy data may be classified as important or core data, which restricts cross-border transfers and affects cloud AI services that export data from China. The Cybersecurity Law, in force since 2017, requires critical sectors such as energy to secure their networks and may require data to be stored locally. Together, these laws impose strict data governance on energy companies using AI, keeping critical grid data within China and ensuring that personal consumer energy data are processed lawfully under PIPL.

- Energy Sector Regulation: China has been drafting a comprehensive Energy Law (per a 2024 draft) aimed at optimizing energy use and ensuring energy security [11]. Although it is not explicitly about AI, it includes incentives for reliable and stable energy services, which link to advanced technologies such as AI for grid stability. China’s energy regulators, such as the National Energy Administration, issue policies on smart grids and renewable integration that indirectly promote AI, for example by requiring utilities to improve forecasting, which in practice implies AI adoption.

- Standards and Implementation: In China, regulations are often supported by detailed technical standards from bodies such as CESI or SGCC research units, and State Grid likely maintains internal AI security standards for its operations. The Chinese model pilots technology in state-owned enterprises, with State Grid and China Southern Grid running large AI projects such as predictive maintenance and AI-powered drone inspections. The regulatory framework is directive rather than compliance-driven in the Western sense: when the government mandates AI adoption for specific goals, state companies implement it and manage regulatory approvals internally.

- Regulatory Tightness: China’s AI regulations impose stricter controls than the EU’s on content and permissible behavior, reflecting censorship and political oversight, but they allow faster deployment. The state can mandate AI adoption without extended consensus building or legal obstacles. While the EU was still negotiating the AI Act, China was already enforcing algorithm registries [11] and censorship of AI outputs. These restrictions apply mainly to public-facing content; industrial applications such as energy face fewer explicit limits but must comply with security requirements.

- Privacy: Both the EU and China have comprehensive data protection laws, and the GDPR and PIPL share structural similarities, such as consent requirements. The key differences lie in enforcement and exemptions: China’s enforcement may be selective, and state organs benefit from broad exceptions under PIPL. A state-owned power utility in China may therefore have more practical flexibility in using consumer data for grid management than a European utility under the GDPR.

- Cybersecurity: China’s critical infrastructure rules, under the Cybersecurity Law and Critical Information Infrastructure regulations, mandate security reviews for important IT equipment, including AI in energy. They also prioritize secure, preferably domestic, technology procurement. The EU’s CRA shares the goal of ensuring secure products, but China enforces this partly through strict import controls, such as scrutinizing foreign AI software in the grid.

- Security and Stability: These are paramount in Chinese energy policy. AI in grids or power plants must not compromise grid stability, and the government will likely mandate rigorous testing through state quality-assurance processes. Little public information on such testing is available, but internal controls are likely stringent.

- Centralized Data and AI Platforms: China favors centralized platforms, which could lead to a national energy data center using AI for grid operations across provinces. This would extend its regional grid dispatch model, with AI deployed centrally under government oversight rather than by individual companies. In contrast, the EU allows multiple private actors to innovate within regulatory frameworks.

- Standardization and pilot projects: China is advancing standardization and pilot projects, viewing energy and AI regulation as interconnected, with a focus on security and reliability [11]. A key approach is to embed AI standards in energy reforms: as China drafts its Energy Law, it simultaneously shapes AI norms to keep the two aligned [11]. This integrated strategy could inform the EU, which could update energy legislation, such as network codes and market rules, in step with AI advancements.

- Government as a stakeholder in AI deployment: In China, the government and state-owned enterprises lead much of the R&D, developing indigenous AI for grid management to reduce reliance on Western technology. This approach serves both as a policy for self-reliance and as a regulatory measure favoring domestic technology in procurement.

- AI Ethics and Stability: Chinese regulators have explicitly noted maintaining social stability and avoiding economic disruption as goals of AI regulation [67]. This implies that, for instance, if algorithmic trading in energy markets (China has a nascent electricity market) caused volatility, authorities would step in quickly, perhaps by restricting AI trading or imposing caps. The Chinese system can respond quickly with administrative orders, whereas the EU might rely on market rules and later adjustments.

5.3. Global Comparison

- The EU’s approach is rules-driven, prioritizing ethical and rights-based considerations while aiming to preempt potential harm through regulation. For instance, the Agency for the Cooperation of Energy Regulators (ACER) underlined this preventive stance in its Open Letter on the Notifications of Algorithmic Trading and Direct Electronic Access (July 2024), reminding market participants that AI-based trading tools must be notified in advance under REMIT [69]. The “Brussels effect” often extends these standards globally, influencing AI governance beyond Europe [18].

- The U.S. approach is market-driven, reactive, and fragmented, prioritizing innovation with minimal initial constraints while addressing challenges as they arise. However, this is gradually shifting toward more regulatory oversight.

- China is strategy-driven, rapid, and focused on control and security, implementing specific rules to direct AI’s trajectory in society and key sectors in line with state objectives [11]. It treats AI both as an opportunity to leapfrog in tech and as a potential tool that must be tightly guided.

- The EU seeks to balance fostering innovation with protecting ethical and societal values through detailed legislation [3].

- The U.S. historically leans pro-innovation, trusting existing laws to catch egregious problems.

- China leans pro-control, aggressively deploying AI, but under watchful regulation aligning with government aims [11].

6. Challenges, Gaps, and Recommendations

6.1. Key Challenges and Gaps

- Overlapping Regulations and Compliance Complexity: A clear challenge is the sheer number of regulations an energy AI project must navigate: the AI Act, GDPR, NIS2, sector-specific codes, and more. While each addresses a distinct facet (ethical AI, data privacy, cybersecurity), in practice they can overlap or create duplicative requirements. For example, an AI system might require an AI Act conformity assessment, a cybersecurity certification, and a data protection impact assessment under the GDPR. This is especially challenging for smaller companies and research collaborations. There is also a risk of fragmented compliance efforts, in which one team handles the GDPR and another the AI Act, potentially missing their interconnections. Gap: Guidance on streamlining compliance across these laws has not yet been fully developed.

- Legal Uncertainty and Evolving Standards: Many concepts in AI regulation are new and lack clear precedents or technical standards. “State-of-the-art” security under NIS2 [23] and “robustness” under the AI Act remain open-ended until standards are set. Likewise, it is unclear how regulators will enforce certain provisions, such as determining whether an AI provider has sufficiently mitigated bias or quantifying “acceptable risk” in high-risk AI. This uncertainty may delay deployment, as companies adopt a wait-and-see approach, or lead to inconsistent interpretations across jurisdictions. Gap: Beyond this general uncertainty, AI in energy markets remains a specific gap, as the current high-risk classification does not cover it, so potential issues might slip through until addressed [1].

- Keeping Pace with Technology: AI is advancing rapidly, with developments such as generative AI and increasingly autonomous control systems. Regulatory processes, particularly in the EU, are slower. By the time regulations take effect, new AI capabilities or risks may have emerged. For example, the AI Act drafts initially overlooked generative AI, requiring later amendments to address foundation models. In energy, if new AI techniques like swarm AI for distributed energy resource control arise, existing regulations may not explicitly cover them, leading to uncertainty about compliance. Gap: Regulatory agility is limited, requiring mechanisms for dynamic updates or clarifications. Approaches such as delegated acts, regulatory guidance, or insights from sandbox environments could help refine and adapt rules more effectively.

- Data Availability vs. Privacy: AI development relies on large datasets, but energy data are often sensitive, including customer usage and grid operations, which have security and competitive implications. The GDPR and data localization laws can hinder data pooling for AI training. For instance, an EU energy AI developer may struggle to access pan-European smart meter data due to varying privacy law interpretations or proprietary data silos. Energy companies may also hesitate to share data with AI vendors due to liability concerns. Gap: The balance between data sharing for AI innovation and ensuring privacy and security is complex. Frameworks like the Data Governance Act aim to address this by establishing trusted data-sharing intermediaries, but these efforts are still in the early stages. Uncertainty around key concepts, such as what qualifies as true anonymization under the GDPR, remains a challenge. Energy consumption patterns may be difficult to fully anonymize, increasing the risk that data sharing could violate privacy regulations.

- Talent and Interdisciplinary Understanding: Complying with these regulations requires expertise that crosses law, AI technology, and energy engineering. A shortage of professionals who understand all three realms is a challenge. Energy companies report difficulty in finding staff or consultants who can implement AI solutions and ensure compliance simultaneously. Gap: This is partly a workforce training gap, where universities and training programs have only recently begun integrating data science with energy and policy education.

- Fragmentation Across Member States: Although EU regulations aim at harmonization, some instruments, such as the NIS2 Directive, leave implementation details to national law, leading to inconsistencies. Member States may designate different competent authorities and adopt different enforcement practices. Gap: Variations in enforcement create difficulties for companies operating in multiple EU countries. For instance, NIS2 may be enforced more strictly in one country than in another, and data protection authorities may issue differing guidance on energy data processing. Similarly, energy market rules still vary despite EU integration efforts, imposing different constraints on AI solutions, such as differing rules for accessing data from TSOs and DSOs.

- Global Alignment and Competitiveness: The EU’s stringent AI regulations, while leading in ethical oversight, raise concerns about competitiveness. If regions such as the U.S. impose fewer constraints, EU companies may struggle to keep pace in AI development. Additionally, divergent regulatory regimes make it difficult to build a single AI system for global deployment, since compliance adjustments are needed for the EU, the U.S., and China. Gap: There is no established international standard specifically for AI in critical infrastructure. While ISO/IEC efforts exist, their adoption and consistency remain limited. This lack of harmonization complicates cross-border situations, such as an EU company using a U.S.-based AI cloud service, which raises questions about which laws apply in case of disputes or failures.

- Ensuring Ethical and Fair Outcomes in Practice: Laws set principles such as transparency and fairness, but achieving these outcomes in real-world AI systems is challenging. Gap: There is a lack of tools and methodologies for auditing AI in energy applications. For example, assessing whether an AI system optimizing energy distribution unfairly favors certain regions or customers is complex. Regulations do not yet fully address fairness concerns, such as preventing AI-driven demand-response programs from excluding households without smart technology, which could worsen energy inequality. Regulators may also lack the resources and expertise to evaluate AI deployments effectively.

- Engagement and Clarity for Industry: Regulations should not create innovation bottlenecks or favor only large companies that can afford dedicated compliance departments. If compliance is perceived as too complex, startups may be discouraged from innovating in the energy sector and turn to less regulated domains. Gap: The industry often lacks clear guidance or best practices tailored to energy-specific compliance. Many companies, especially smaller ones, struggle to understand the exact requirements and are therefore uncertain about how to comply.
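The auditing gap noted under “Ensuring Ethical and Fair Outcomes in Practice” can be made more concrete with a simple group-level check. The sketch below is a hypothetical illustration, not a method prescribed by the AI Act or any cited source. It assumes an auditor holds, for each household, a group label (for example, whether the household has smart technology) and a non-negative benefit figure from an AI-driven demand-response program, such as bill savings. It compares average benefit across groups and flags the program when the worst-off group’s average falls below an assumed fraction of the best-off group’s. The 0.8 threshold is borrowed from the “four-fifths” heuristic used in U.S. employment-selection practice and is only a placeholder; it is not a value set by any of the regulations discussed here.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical placeholder threshold (borrowed from the U.S. "four-fifths" heuristic);
# not a value set by the AI Act, NIS2, or any energy regulator.
DEFAULT_THRESHOLD = 0.8


def group_means(records: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Average benefit per group from (group_label, benefit) pairs."""
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for group, benefit in records:
        if benefit < 0:
            raise ValueError("this sketch assumes non-negative benefit values")
        totals[group] += benefit
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}


def disparity_ratio(records: Iterable[Tuple[str, float]]) -> float:
    """Ratio of the lowest group mean to the highest (1.0 means equal outcomes)."""
    means = group_means(records)
    if len(means) < 2:
        raise ValueError("need at least two groups to compare")
    highest = max(means.values())
    if highest == 0:
        return 1.0  # every group received zero benefit, so outcomes are equal
    return min(means.values()) / highest


def flag_disparity(records: Iterable[Tuple[str, float]],
                   threshold: float = DEFAULT_THRESHOLD) -> bool:
    """True if the worst-off group's mean benefit is below threshold x the best-off group's."""
    return disparity_ratio(records) < threshold
```

For example, `flag_disparity([("smart", 10.0), ("smart", 20.0), ("no_smart", 6.0)])` compares group means of 15.0 and 6.0, giving a ratio of 0.4, so the program would be flagged. A single ratio cannot distinguish unfair treatment from legitimate differences between groups (such as available flexible load), so a real audit would need further analysis.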

6.2. Recommendations

- Develop Integrated Guidance and “One-Stop” Compliance Toolkits: The EU should create consolidated guidance for AI in critical sectors like energy that interprets overlapping regulations in a unified way. For example, a guidance document (or online toolkit) co-developed by the European Commission (AI Alliance), ENISA, and the European Data Protection Board could outline how an energy company can simultaneously comply with the AI Act, NIS2, and GDPR when deploying a specific solution (e.g., an AI system for demand response). This should include use-case examples, a checklist of obligations, and references to standards. Such an approach would reduce fragmentation and especially help smaller actors. Regulatory sandboxes can play a role here: companies in a sandbox could pilot the integrated compliance approach, and regulators could then publish the lessons learned. The EU’s planned AI regulatory sandboxes (mentioned in the AI Act drafts) could prioritize energy-sector experiments to generate these insights.

- Accelerate Standards Development: The EU, in collaboration with international bodies (ISO/IEC, IEEE), should prioritize developing harmonized technical standards for AI in safety-critical and energy systems. These standards would operationalize concepts like “robustness”, “accuracy”, and “security” for high-risk AI. Having standards will provide the concrete yardstick needed for compliance and streamline conformity assessments [1]. For example, a standard could specify testing procedures for an AI voltage control system under various grid conditions to ensure reliability, aligning with the AI Act and network code requirements. The EU could direct CEN/CENELEC and ETSI to collaborate with energy industry experts on this. Additionally, developing an AI cybersecurity standard, possibly extending ISA/IEC 62443 for industrial control to address AI-specific threats, would help bridge the AI Act and NIS2 requirements. Speed is critical, so iterative standard updates should be considered, such as initially publishing technical specifications that can later evolve into full standards.

- Enhanced Regulatory Agility and Adaptive Regulation: To ensure that regulatory frameworks remain responsive to the rapid evolution of AI technologies in the energy sector, regulators should adopt a dual approach that combines agile regulatory tools with continuous monitoring and feedback. Regulators should periodically update the lists of high-risk use cases, issue rapid interpretative guidance, and offer experimental licenses to enable timely adjustments as new AI capabilities and risks emerge [18]. At the same time, robust monitoring systems and structured feedback loops would allow data-driven evaluation of the regulations’ real-world impacts, reducing legal uncertainty and facilitating prompt refinements [70]. This integrated strategy creates a regulatory environment that is both agile and adaptive, providing clear, up-to-date guidance to industry stakeholders while keeping pace with technological advances [18,68].

- Promote Data Sharing Frameworks with Privacy Protection: The EU should operationalize the Data Governance Act and the upcoming Energy Data Space in a way that eases access to energy datasets for AI development while preserving privacy. This could include creating anonymized or synthetic datasets of smart meter data for research. Synthetic data can reduce privacy risk, but it is not automatically anonymous under the GDPR: if individuals can still be re-identified from it, for example because the generator reproduces real consumption profiles, it remains personal data. Investment in privacy-enhancing technologies (PETs) such as federated learning, where AI models train across datasets without sharing raw data, should be encouraged through research funding and pilot projects [71]. Regulators can provide guidance clarifying when energy data are personal and when they are sufficiently aggregated to be non-personal, giving companies confidence to share aggregated grid data for AI applications. Another approach is to establish a data sandbox where utilities and AI developers can collaborate on real data under strict privacy controls and oversight. This would enable the development of useful AI models that benefit the entire sector, similar to existing healthcare data sandbox initiatives in the EU.

- Capacity Building and Interdisciplinary Teams: At the EU level, training programs that blend AI, energy engineering, and law should be supported. These could include new Erasmus Mundus master’s programs or professional certifications in “AI Governance in Energy”. Regulators and operators should hold joint workshops; for example, ENISA, energy regulators, and AI experts could co-host cybersecurity exercises for AI in grids, such as scenario planning for AI failures or attacks. The energy community (ENTSO-E, the EU DSO Entity, etc.) could establish a permanent forum on AI governance in energy to share best practices. Regulators themselves, such as data protection authorities and energy regulators, should also receive training on AI and energy systems so that they can make informed decisions.

- Harmonize Implementation and Foster Cross-Border Collaboration: Member States should be encouraged to implement directives like NIS2 in a harmonized way for energy. The Cooperation Group under NIS2 could issue energy-specific guidance to align national efforts [22]. ACER could establish a common framework for evaluating AI in cross-border energy operations, using the Network Code on Cybersecurity as a model for coordinated regional assessments. Mutual recognition of AI system approvals should also be considered: if an AI system is certified in one EU country as meeting the AI Act or network code requirements, other Member States should accept that certification to avoid redundant approvals. This could be implemented through the AI Act’s conformity assessment regime and existing energy cooperation mechanisms, such as the Council of European Energy Regulators (CEER).

- International Cooperation on AI Governance in Energy: Given the global nature of energy and AI, the EU, the U.S., China, and others should engage in dialogue to share best practices and align on common standards. Forums such as the G20 or the IEA could facilitate discussions on AI in energy infrastructure, potentially leading to globally endorsed principles for trustworthy AI in critical infrastructure, similar to the OECD AI Principles, which received broad support, including from the U.S., and some backing from China. For example, all parties could agree on the principle of human oversight in critical AI decisions, even if it is implemented differently across jurisdictions. The EU could take the lead in establishing an International Electrotechnical Commission (IEC) working group on AI for energy system security, involving experts from the U.S. and China, to develop standards that enhance interoperability and safety. This would help prevent conflicting regulatory requirements, so that manufacturers do not need to design entirely separate AI systems for different markets.

- Address Specific Gaps (Market AI, etc.) with Targeted Rules or Guidance: If concerns about AI-driven trading and market manipulation increase, EU regulators, including ACER for energy and competition authorities, should issue guidelines on algorithmic trading behavior. These guidelines could clarify how existing REMIT rules apply to AI and could require companies to adopt internal policies for AI-driven trading. Similarly, for consumer protection, the European Consumer Organisation (BEUC) and national agencies should develop guidelines ensuring fairness and transparency in AI-enabled dynamic pricing and recommendation services [44]. Such sector-specific guidance would go beyond the AI Act’s provisions and address risks particular to energy markets [72]. The upcoming revision of the EU’s product liability and AI liability regimes should explicitly include energy use cases to clarify how responsibility is distributed. For instance, where a grid operator and an AI vendor share liability, the framework should define how it is apportioned between them. Clear liability rules will incentivize all parties to uphold high safety standards.

- Encourage Ethical AI and Transparency Beyond Compliance: Regulations set minimum requirements; however, exceeding them can help companies differentiate themselves and build trust. Industry bodies in the energy sector should develop codes of conduct for ethical AI, aligning with the AI Act’s encouragement of voluntary codes for non-high-risk AI. These codes should address sector-specific concerns, such as preventing bias in AI-driven resource allocation and ensuring explainability for grid operators. Regulators could endorse such codes, offering recognition or incentives, such as lighter oversight, for companies adhering to a certified code of conduct. Additionally, transparency tools should be expanded. For example, a registry of AI systems used in critical energy infrastructure, similar to the EU AI Act’s database but potentially including moderate-risk systems on a voluntary basis, could enhance oversight. Making this registry accessible to regulators and, where appropriate, to the public would help build trust in AI governance.
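The federated learning approach recommended under “Promote Data Sharing Frameworks with Privacy Protection” can be illustrated with a minimal sketch of federated averaging (FedAvg), in which each participant trains on its own data and shares only model parameters. The setup is an assumption chosen for illustration: a one-feature linear model, hypothetical clients standing in for utilities that each hold private (feature, target) pairs, and plain gradient descent. It omits the secure aggregation, differential privacy, and communication handling a production system would need. Shared parameters can still leak information about the training data, so federated learning alone does not guarantee GDPR compliance.

```python
from typing import List, Tuple

# One client's private training data: (feature, target) pairs that never leave the client.
Dataset = List[Tuple[float, float]]


def local_update(w: float, b: float, data: Dataset,
                 lr: float = 0.01, epochs: int = 5) -> Tuple[float, float]:
    """Run a few full-batch gradient-descent steps on mean squared error for
    the linear model y = w * x + b, using only this client's data."""
    n = len(data)
    for _ in range(epochs):
        # Both gradients are computed from the current (w, b) before either is updated.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b  # only the parameters are returned to the server


def federated_averaging(clients: List[Dataset], rounds: int = 200,
                        lr: float = 0.01, epochs: int = 5) -> Tuple[float, float]:
    """FedAvg-style loop: broadcast the global parameters, let each client
    train locally, then average the returned parameters weighted by sample count."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(w, b, data, lr, epochs), len(data)) for data in clients]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b
```

With two hypothetical clients whose noise-free data follow y = 2x + 1 over different ranges of x (for example x in 0 to 2 for one and 3 to 6 for the other), `federated_averaging([client_a, client_b], rounds=300)` returns parameters close to w = 2 and b = 1, even though the server never sees either client’s records. Weighting by sample count keeps larger datasets from being under-represented in the global model.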

7. Conclusions

- The EU is establishing a comprehensive regulatory framework for AI that will significantly impact AI solutions in the energy sector. The risk-based AI Act, along with strict data and cybersecurity laws, will require energy companies and technology providers to adapt to new compliance demands. This shift will likely necessitate cross-functional expertise and the early incorporation of legal considerations into technology development [1,24].

- Regulatory barriers, including compliance costs, uncertainty in interpreting new requirements, and potential delays in AI deployment, present significant challenges. However, these are partly offset by several enablers. A clear regulatory framework can strengthen trust among consumers and investors, while a unified EU approach prevents the fragmentation of national regulations, reducing long-term costs. Additionally, certain regulations, such as data-sharing initiatives, actively support innovation by providing controlled access to essential resources [19,24].

- Specific challenges like aligning AI with GDPR’s privacy mandates or ensuring cybersecurity for AI systems in critical grid operations will require careful attention. Yet, solutions are emerging, e.g., privacy-preserving computation and federated learning can allow AI models to train on distributed energy data without infringing privacy [70], and the Network Code on Cybersecurity provides a template for continuous risk management of new digital tools in grids [9].

- The EU’s regulatory leadership is likely to shape AI governance beyond its borders through the “Brussels effect”, setting global standards for AI in the energy sector. However, the divergent approaches of the U.S. and China underscore the need for dialogue and for the development of international standards. Addressing cross-border risks, such as cybersecurity threats and market manipulation in interconnected energy systems, will require coordinated global efforts [11,12].