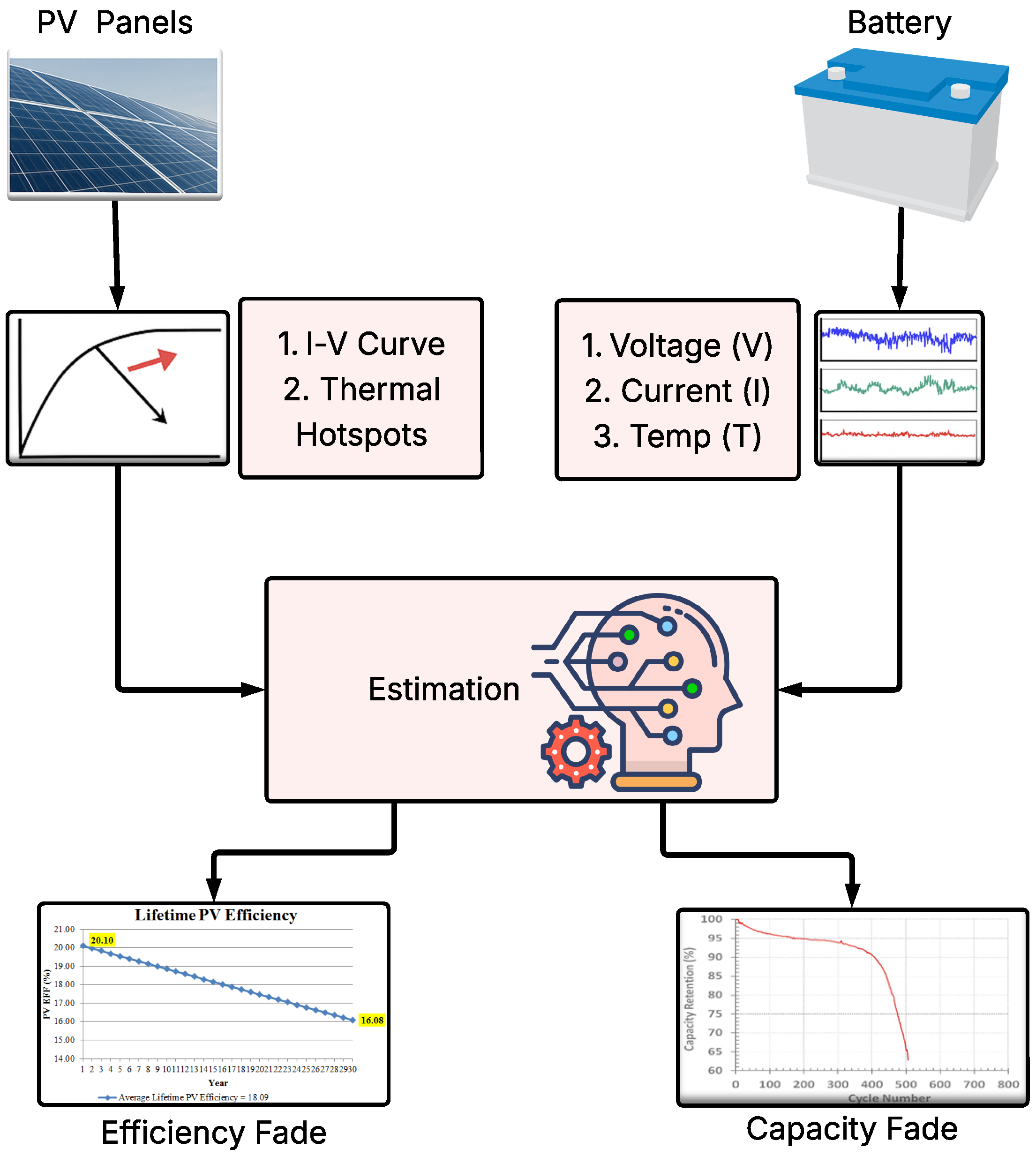

3.1. Framework

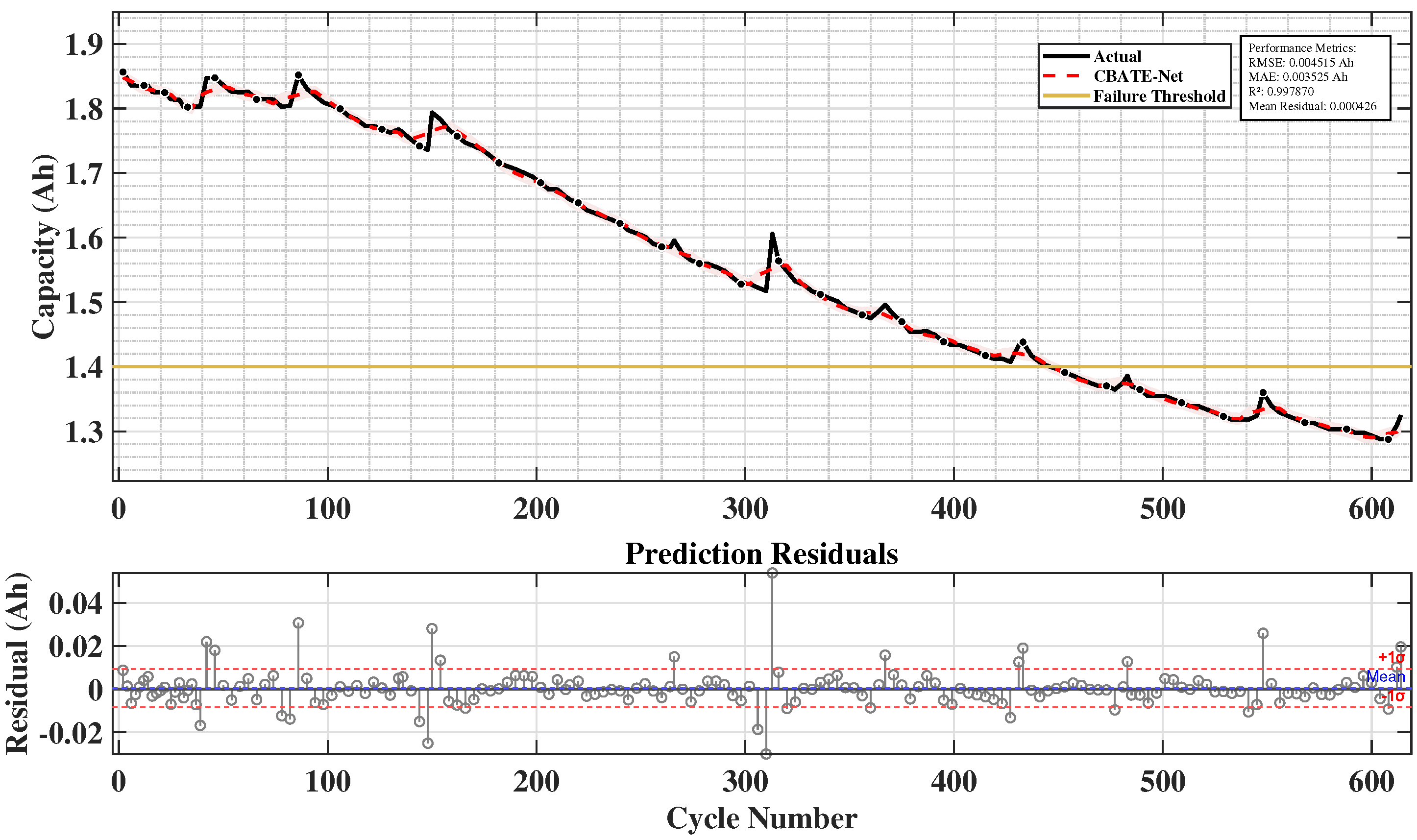

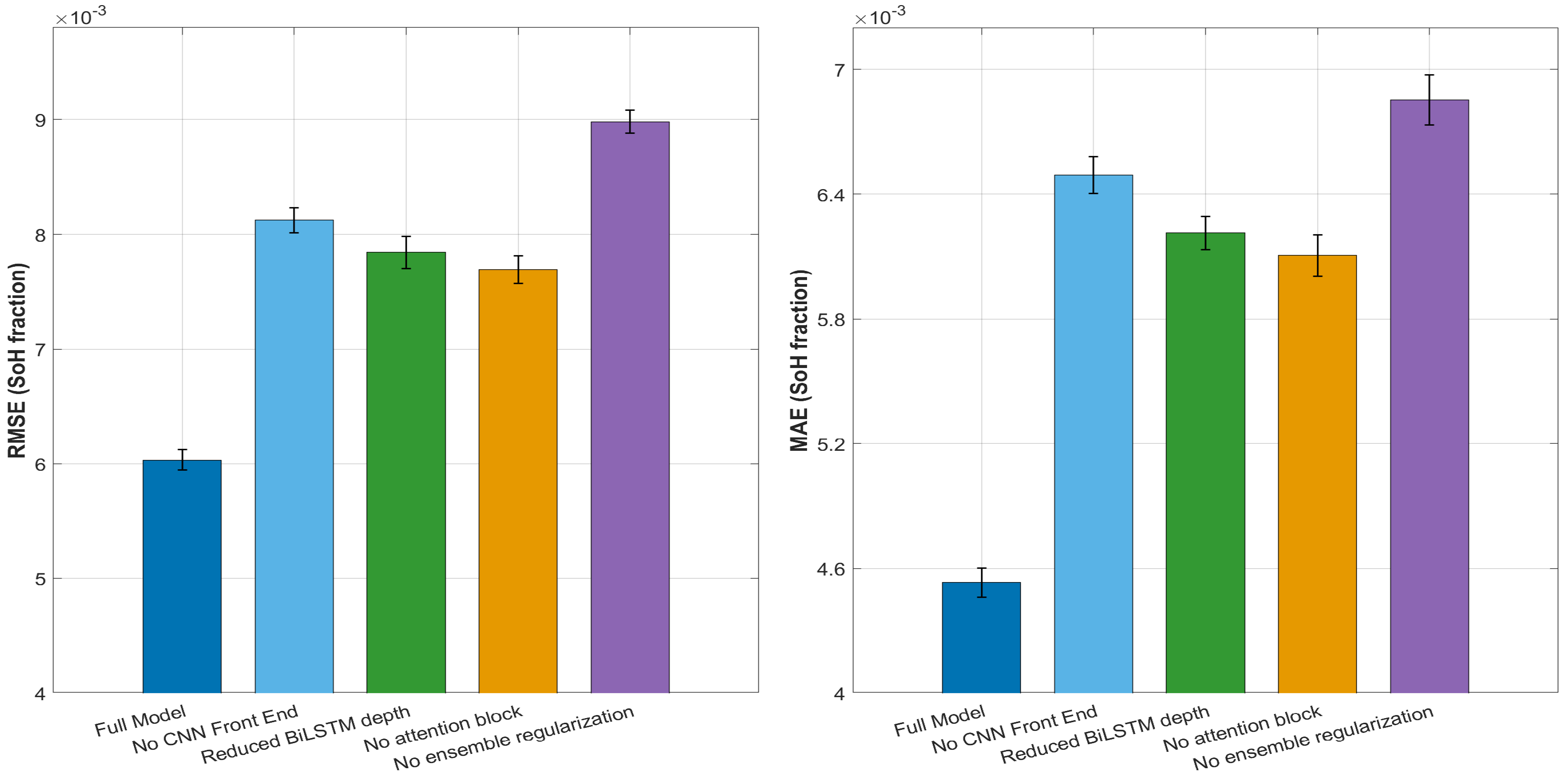

The proposed CBATE-Net is an advanced, hybrid deep learning (DL) framework designed for the accurate estimation of battery capacity and state of health (SoH). It combines four elements: CNN for localized feature extraction, BiLSTM for sequence modelling, a temporal attention mechanism for selective weighting of degradation-relevant intervals, and ensemble learning for robustness.

The pipeline follows a sequential yet integrated flow within the overall system. First, discharge signals of voltage, current and temperature are preprocessed and converted into standardized, cycle-wise sequences. A feature set derived from these signals is then passed to the hybrid DL model. CNN layers capture local variations, BiLSTM learns long-term dependencies in both directions and the attention mechanism highlights critical intervals linked to degradation of the lithium-ion batteries. To improve stability and precision, multiple model instances are trained independently and their predictions are aggregated through ensemble averaging.

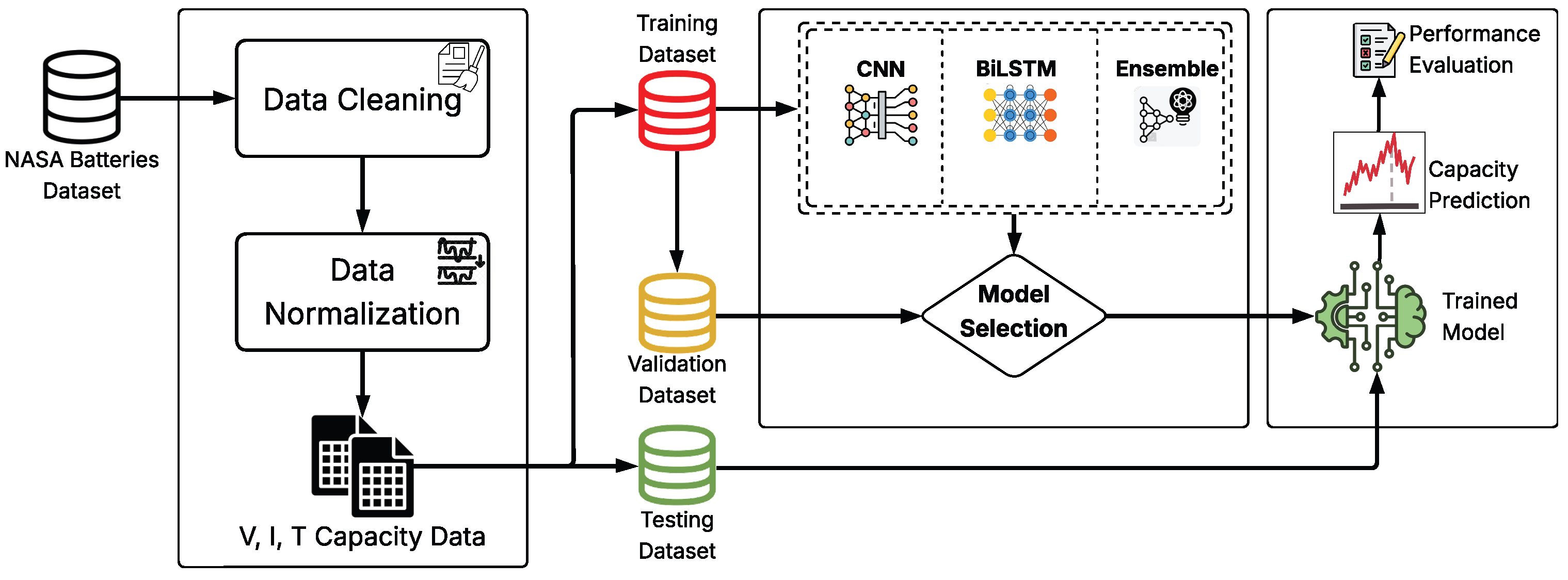

Figure 3 illustrates the workflow of the proposed framework. The pipeline begins with the NASA lithium-ion battery dataset, which is cleaned and normalized first. The processed voltage, current, and temperature signals are then divided into training, validation, and testing subsets. These data are passed through a hybrid DL model consisting of a CNN, a BiLSTM, and an ensemble module, after which model selection takes place. The final stage produces capacity predictions together with a performance evaluation. SoH can then be derived from the predicted capacity as the ratio of predicted to nominal capacity, and RUL can be estimated relative to the 1.40 Ah end-of-life threshold, as explained in later sections. This design captures both localized fluctuations and long-term degradation patterns, ensuring stable performance under irregular cycling conditions.
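The post-processing step mentioned above (SoH as a capacity ratio, RUL relative to the end-of-life threshold) can be illustrated with a minimal sketch. The function names, the cycle-indexed capacity list, and the 2.0 Ah nominal capacity used in the usage note are assumptions made for illustration, not the authors' implementation.

```python
from typing import Optional, Sequence

EOL_CAPACITY_AH = 1.40  # end-of-life threshold stated in the text


def state_of_health(predicted_capacity_ah: float, nominal_capacity_ah: float) -> float:
    """SoH as the ratio of predicted capacity to nominal capacity."""
    if nominal_capacity_ah <= 0:
        raise ValueError("nominal capacity must be positive")
    return predicted_capacity_ah / nominal_capacity_ah


def remaining_useful_life(
    capacities_ah: Sequence[float],
    current_cycle: int,
    eol_ah: float = EOL_CAPACITY_AH,
) -> Optional[int]:
    """Cycles from current_cycle until capacity first reaches eol_ah or less.

    Assumes capacities_ah[k] is the (predicted) capacity at cycle k.
    Returns None if the threshold is never reached in the trajectory.
    """
    for cycle, capacity in enumerate(capacities_ah):
        if cycle >= current_cycle and capacity <= eol_ah:
            return cycle - current_cycle
    return None
```

For example, with an assumed nominal capacity of 2.0 Ah, `state_of_health(1.40, 2.0)` evaluates to about 0.70, which matches the "about 70 percent" level quoted for the end-of-life threshold.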

The input to the model consists of data with dimensions 256 by 12. This input is first passed through a one-dimensional convolutional layer with 32 filters and a kernel size of 5. The output is then normalized using batch normalization, followed by a ReLU activation function and a max-pooling layer with a pool size of 2. Next, the signal is processed by a second Conv1D layer containing 64 filters with a kernel size of 5, again followed by batch normalization, ReLU activation, and max-pooling with a size of 2.

A third Conv1D layer with 128 filters and a kernel size of 3 is then applied, followed by batch normalization, ReLU activation, and a dropout layer with a dropout probability of 0.10 to prevent overfitting. The extracted features are then fed into a sequence of three bidirectional LSTM layers; the first two have 128 units in each direction, and the final one has 64 units in each direction. After the recurrent layers, a temporal attention mechanism is applied to focus on the most relevant time steps in the sequence.

The attention-weighted output is then passed through a fully connected (dense) layer with 64 neurons, using the ReLU activation function and L2 regularization with a penalty coefficient of $1 \times 10^{-4}$. The final layer is a single-neuron dense layer that produces the model’s output. The State of Health (SoH) is computed using Equation (2). Finally, to enhance robustness and accuracy, the final prediction is obtained by averaging the outputs of M independently trained models in an ensemble.

Table 3 lists the complete layer-by-layer configuration of the architecture.
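To make the layer stack easier to follow, the sketch below traces the tensor shape (time steps, channels) through the layers described in this section. It is a bookkeeping aid rather than a trainable model, and it rests on assumptions the text does not state: "same" padding in the convolutions, BiLSTM layers that return the full sequence with forward and backward states concatenated, and an attention layer that reduces the sequence to a single vector. The function name is hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, int]  # (time steps, channels)


def trace_cbate_shapes(steps: int = 256, channels: int = 12) -> List[Tuple[str, Shape]]:
    """Follow (time steps, channels) through the layers described in the text."""
    trace = [("input", (steps, channels))]
    # Conv blocks as (filters, kernel size, pool size); pool size 1 means no pooling.
    conv_blocks = [(32, 5, 2), (64, 5, 2), (128, 3, 1)]
    for idx, (filters, _kernel, pool) in enumerate(conv_blocks, 1):
        channels = filters  # assumption: "same" padding keeps the step count
        trace.append((f"conv{idx}+bn+relu", (steps, channels)))
        if pool > 1:
            steps //= pool  # max-pooling with size 2 halves the sequence length
            trace.append((f"maxpool{idx}", (steps, channels)))
    trace.append(("dropout(0.10)", (steps, channels)))
    for idx, units in enumerate([128, 128, 64], 1):
        channels = 2 * units  # assumption: both directions are concatenated
        trace.append((f"bilstm{idx}", (steps, channels)))
    trace.append(("temporal_attention", (1, channels)))  # weighted sum over time
    trace.append(("dense(64, relu, l2=1e-4)", (1, 64)))
    trace.append(("dense(1)", (1, 1)))
    return trace
```

Under these assumptions the attention layer receives 64 time steps of 128 features each. A different padding mode would change the step counts but not the channel widths.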

3.2. Experimental Dataset of Lithium-Ion Batteries of NASA

This study uses the NASA lithium-ion battery dataset, which is widely adopted in the literature for battery capacity and state of health (SoH) evaluation [21,26]. The operating specifications of each battery are summarized in Table 2. All cells were charged with a constant current (CC)–constant voltage (CV) protocol: 1.5 A until the voltage reached 4.2 V, followed by a constant-voltage phase that ended at a cut-off current of 20 mA. Discharge was terminated at cut-off voltages of 2.7 V (B0005), 2.5 V (B0006 and B0018) and 2.2 V (B0007). The initial capacities range from 1.86 to 2.04 Ah, and the end-of-life threshold is 1.40 Ah.

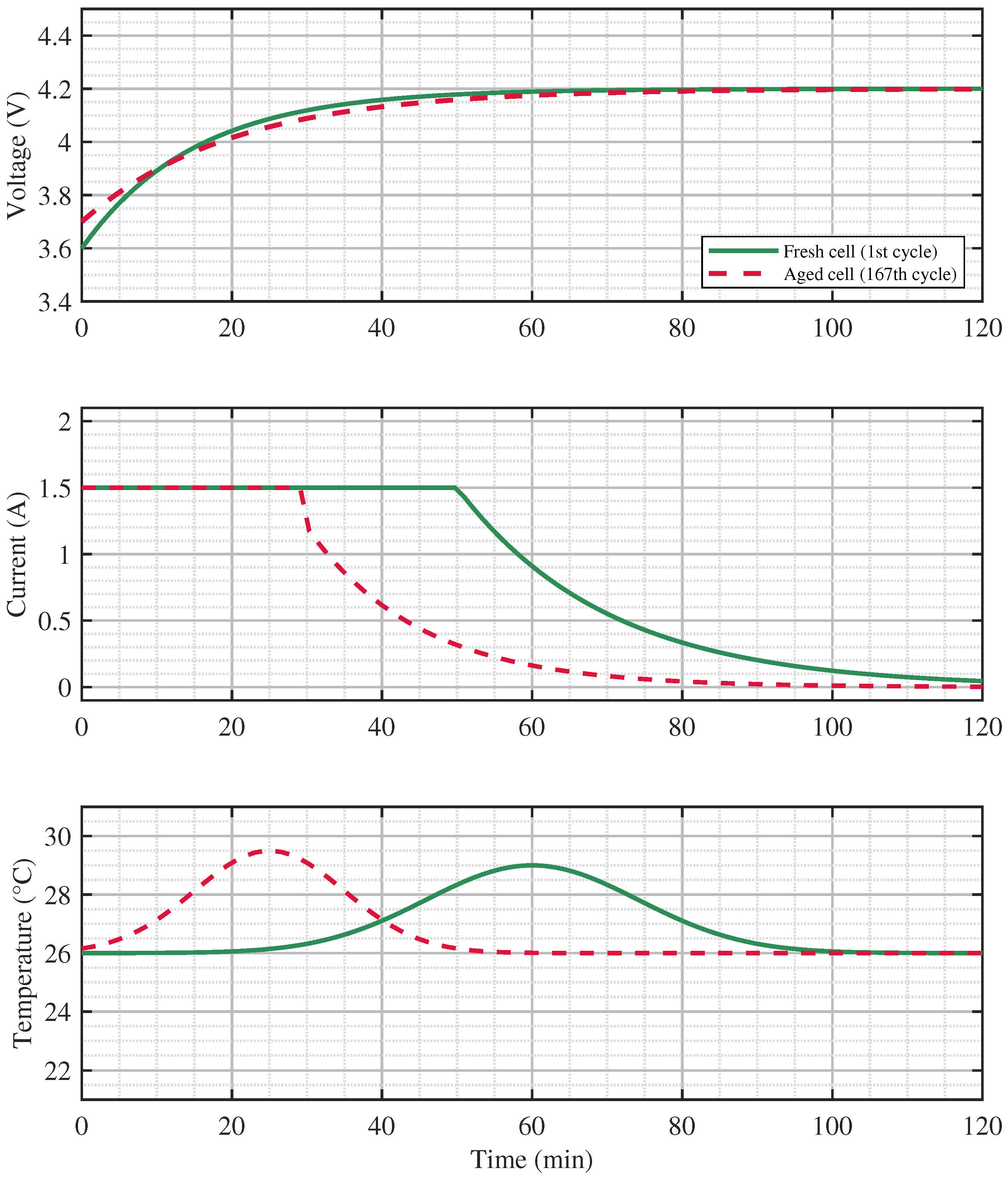

Figure 4 compares the voltage, current, and temperature traces of a fresh cycle and an aged cycle, plotted against time in minutes. In the aged cycle, the discharge current ends earlier, the temperature rises sooner, and the voltage amplitude is lower. The traces therefore show clear shifts in all three signals with usage and time.

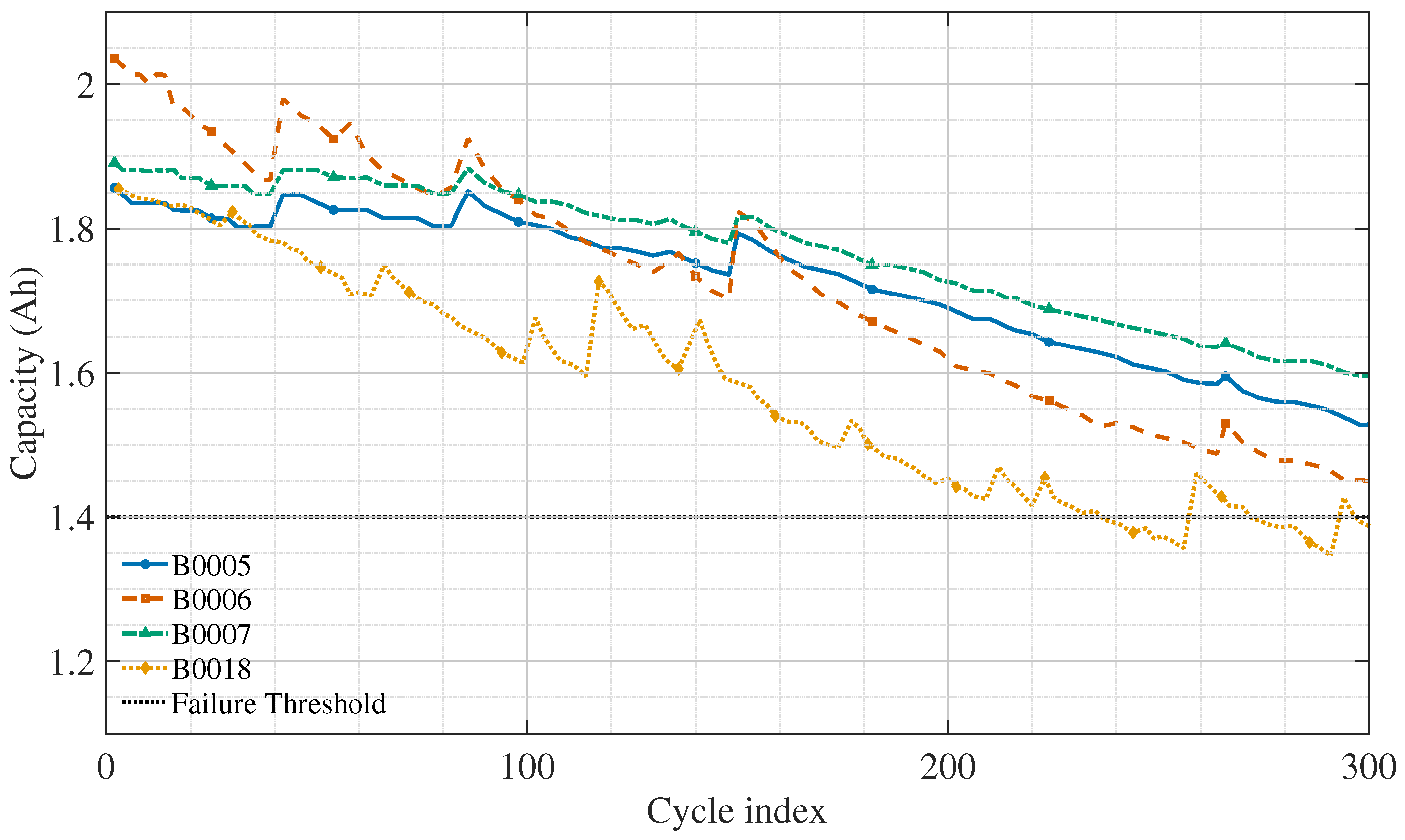

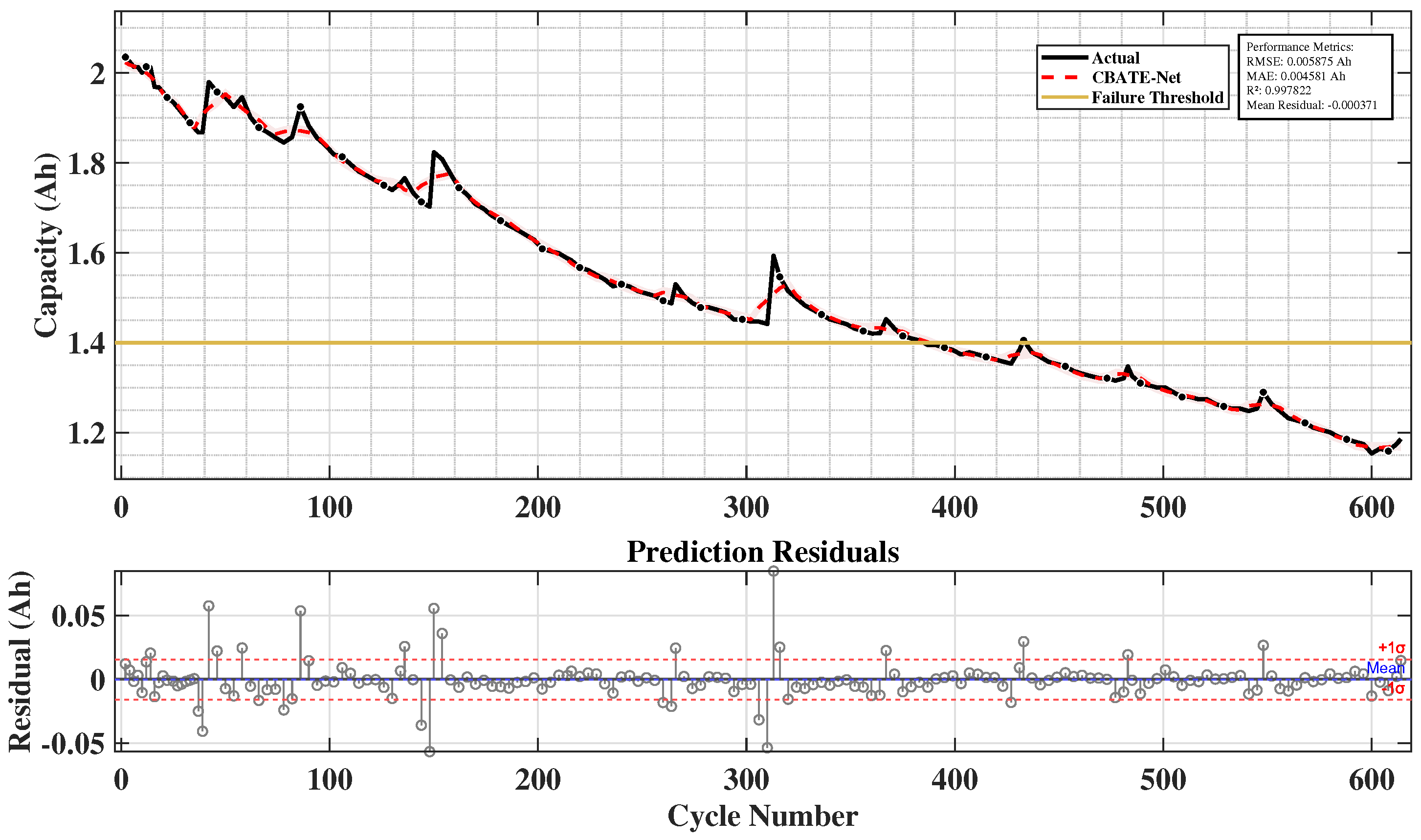

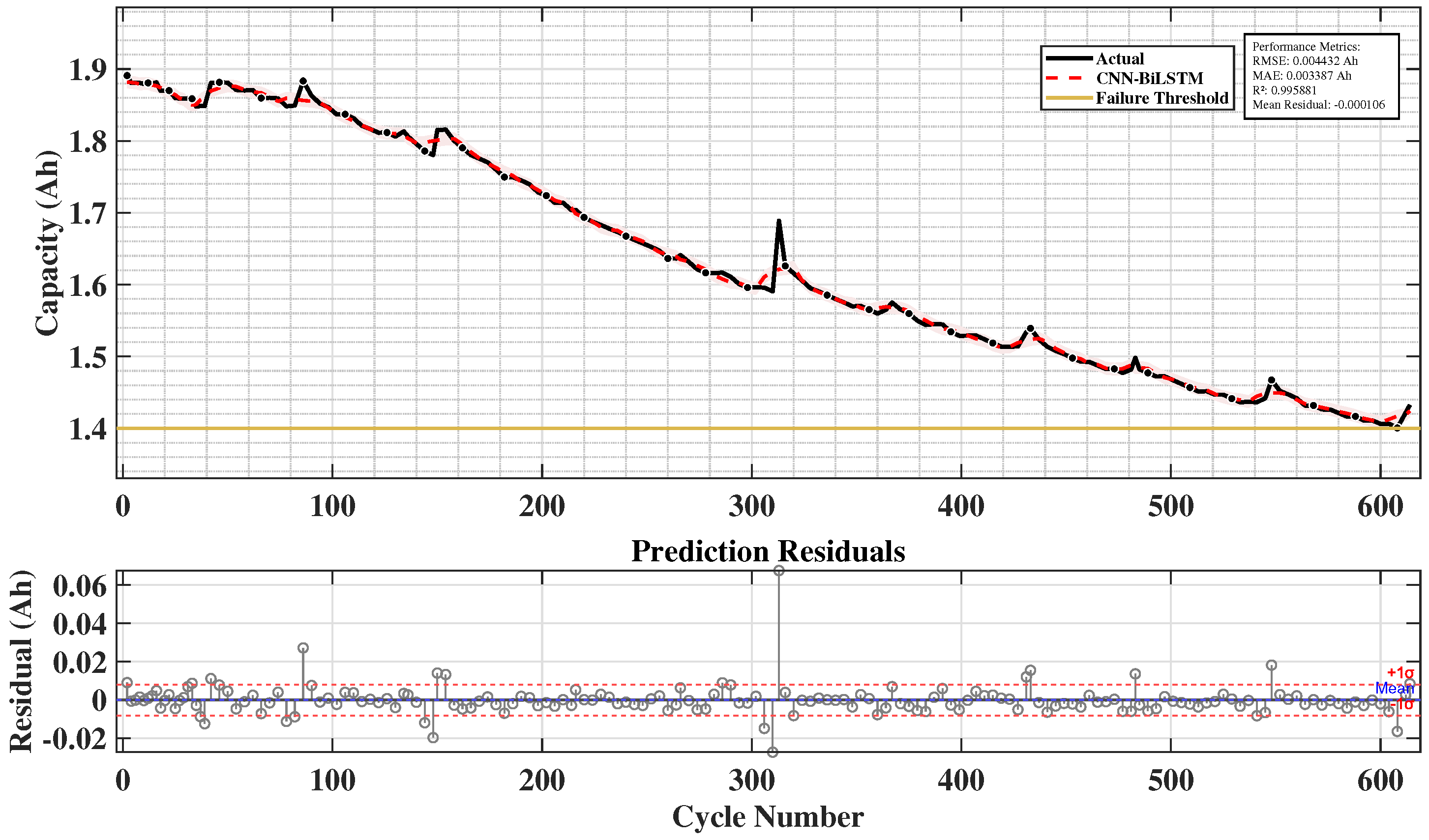

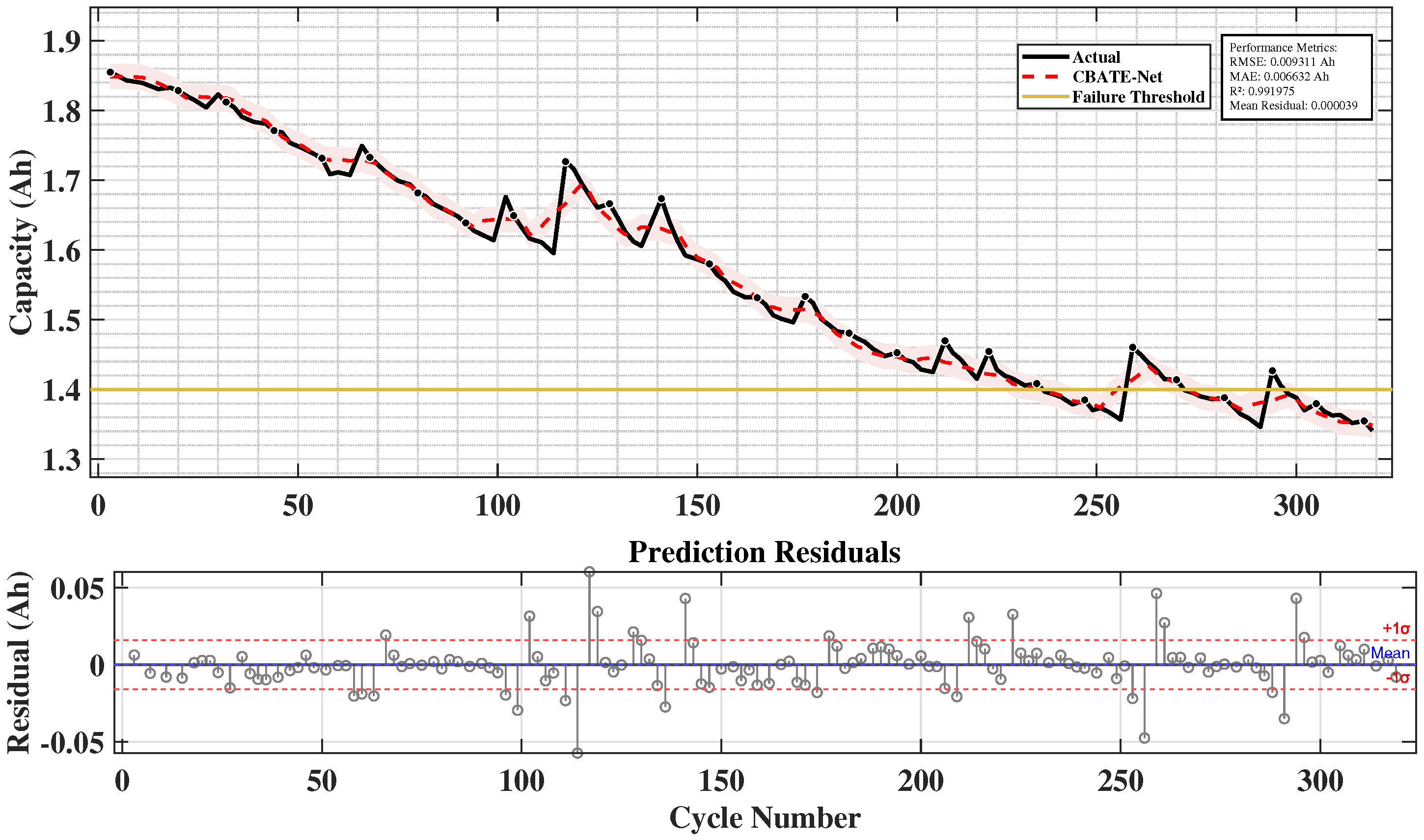

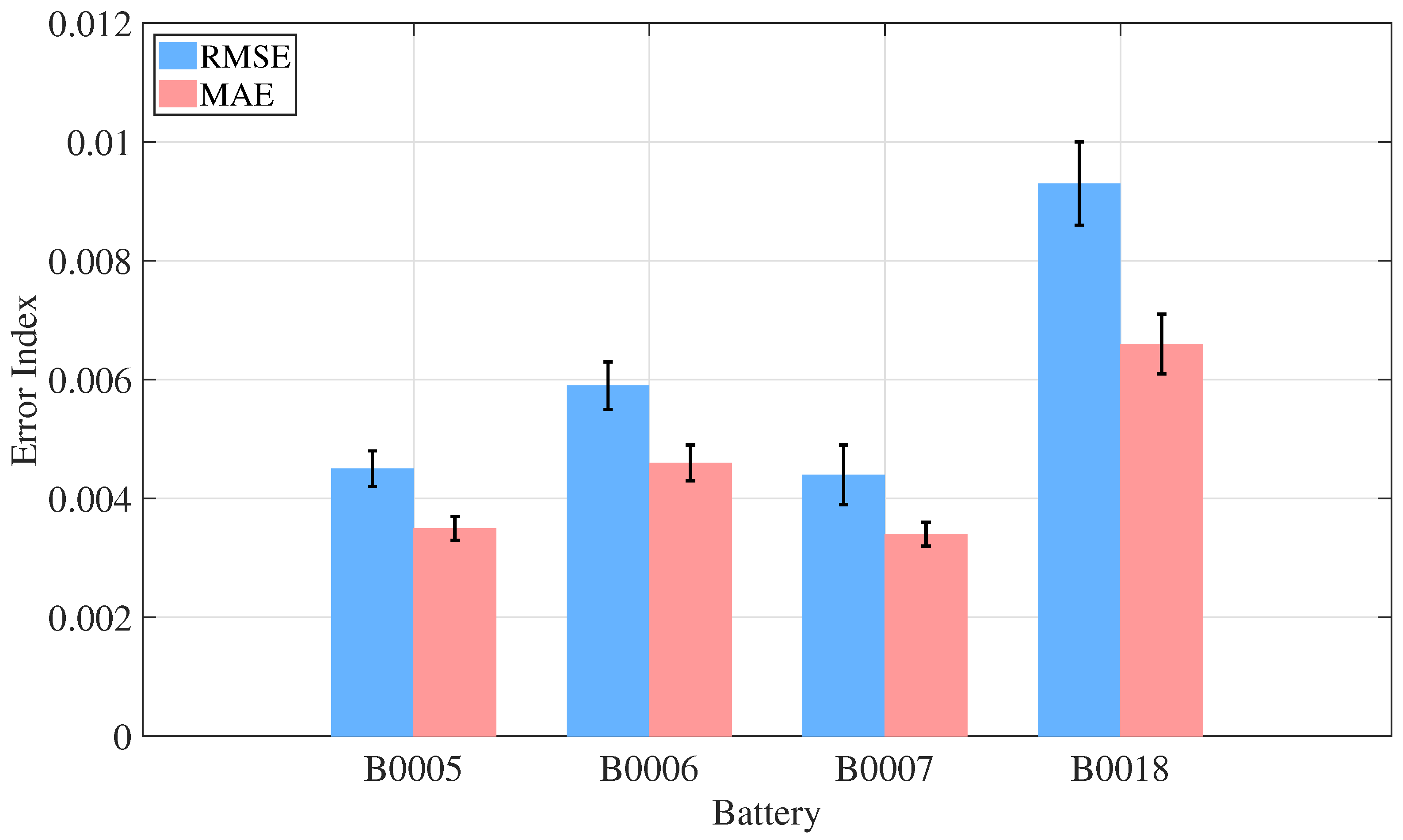

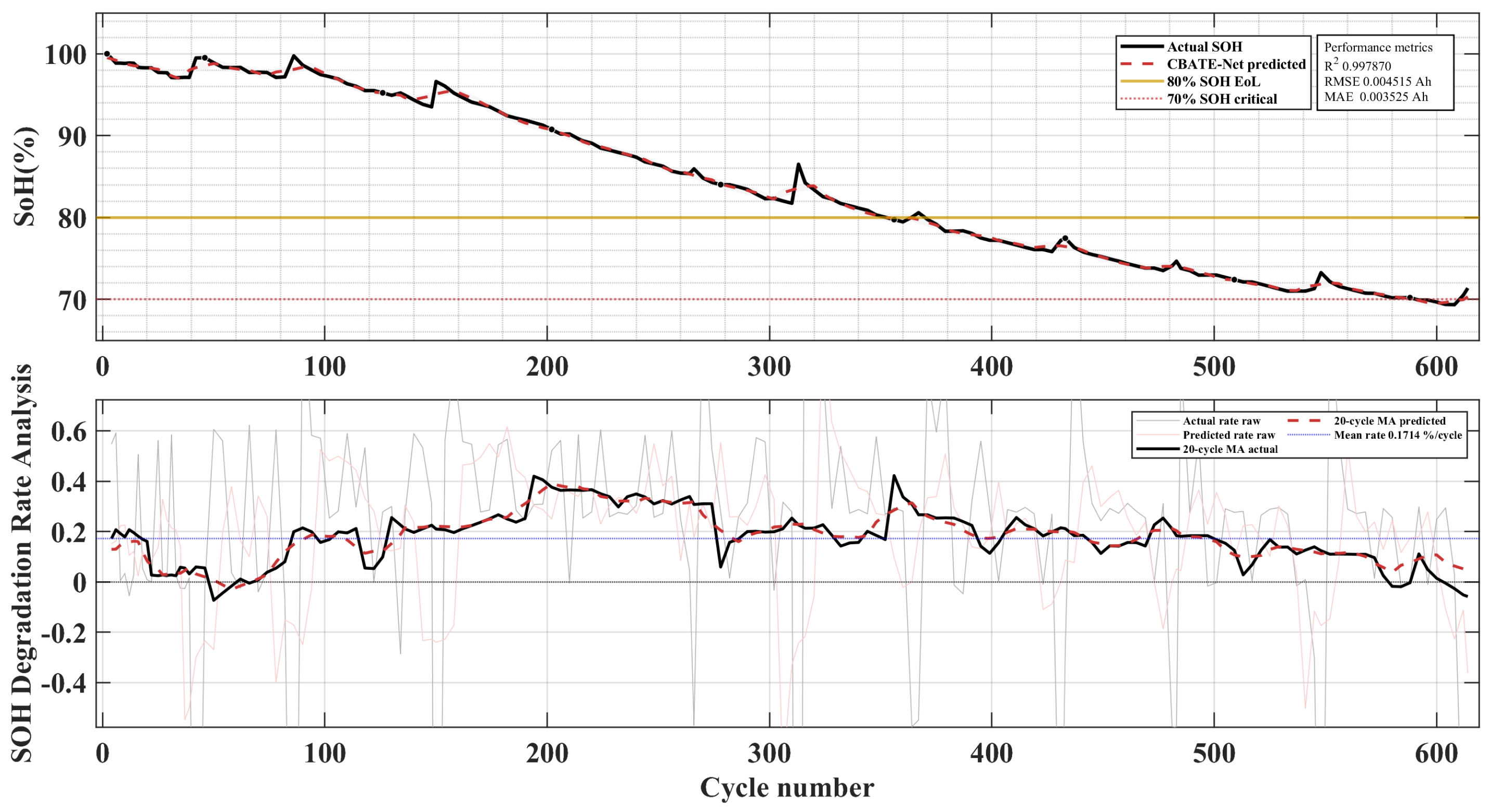

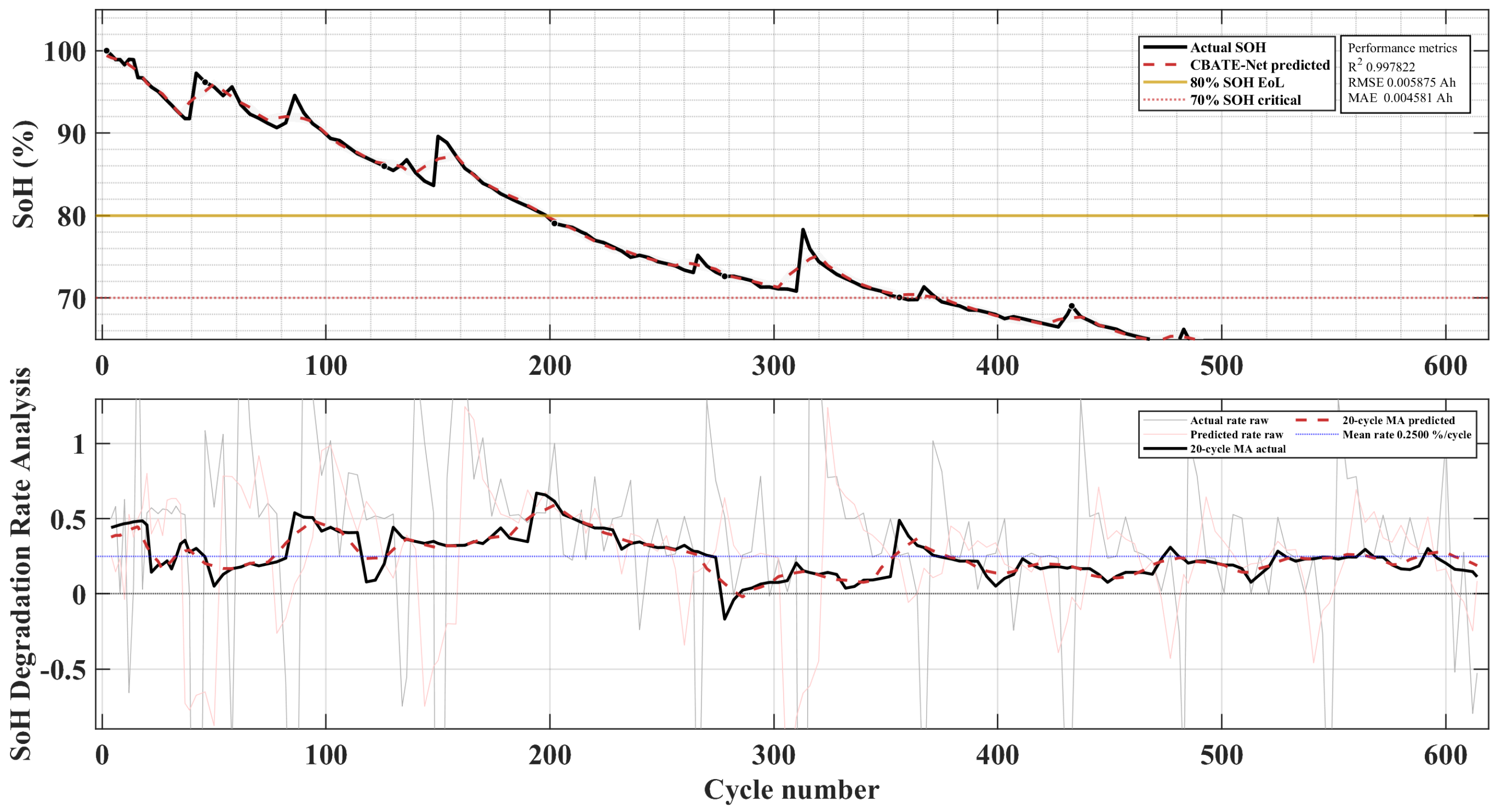

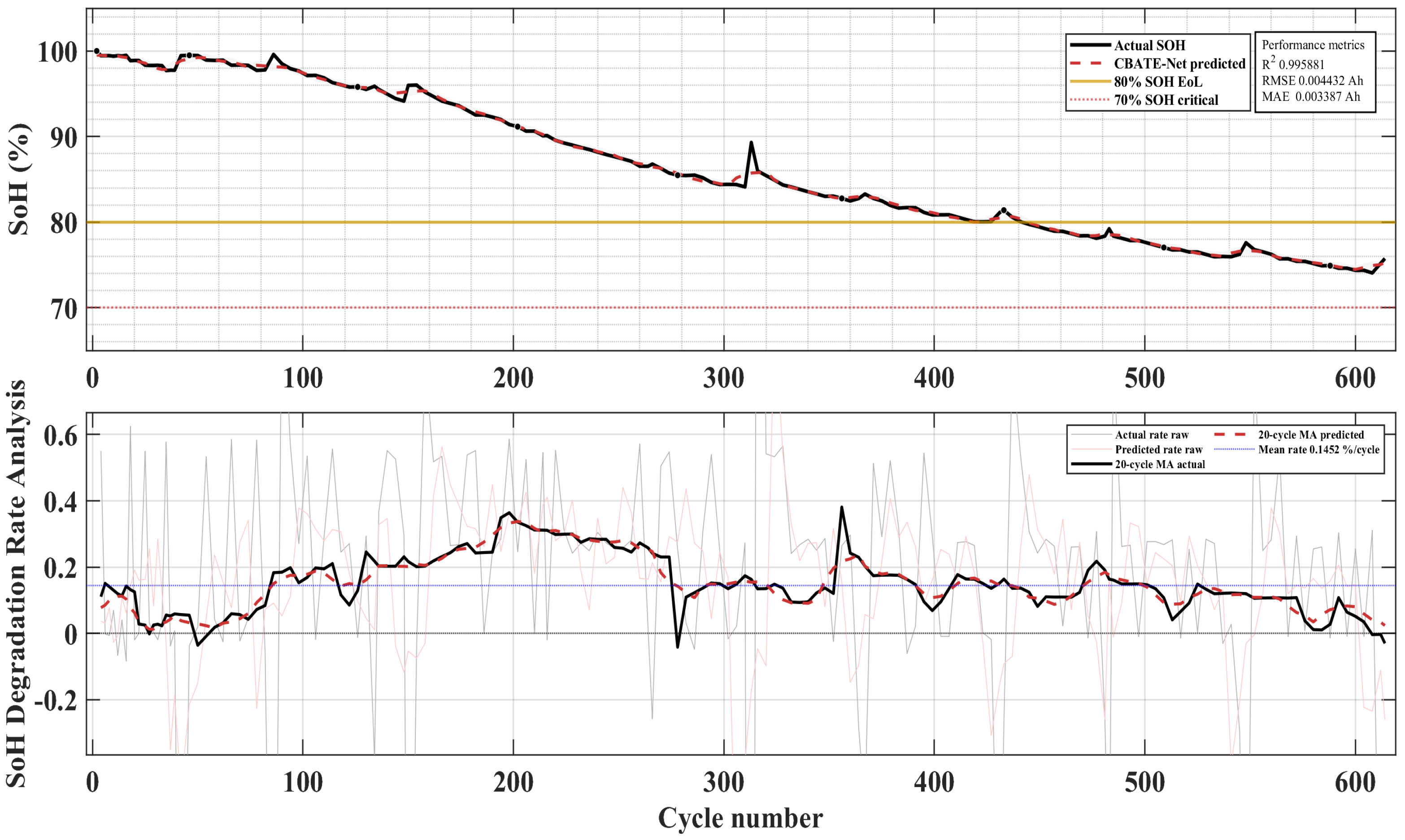

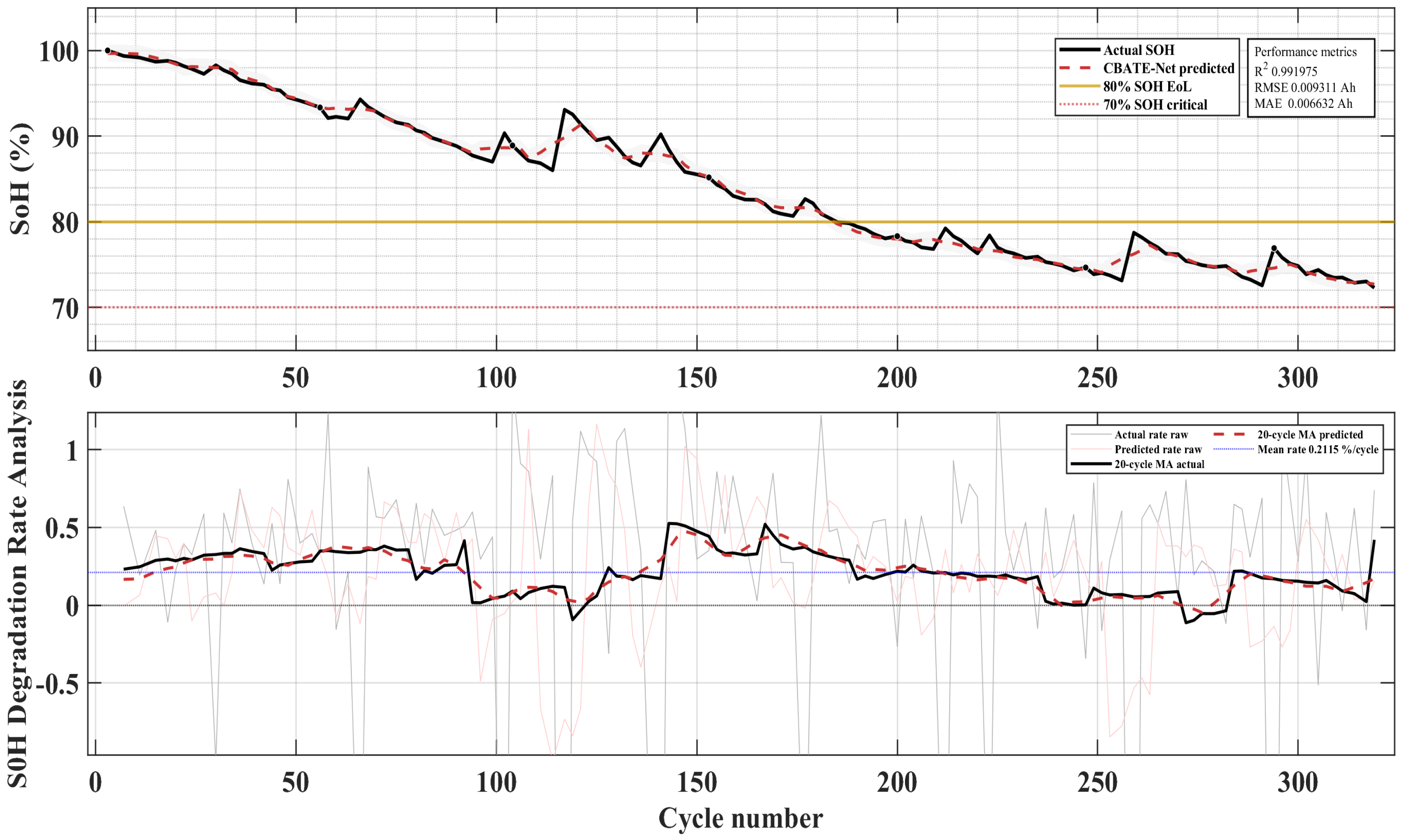

The corresponding battery capacity degradation trajectories are shown in Figure 5. These plots illustrate the diversity of aging behaviors: B0005 exhibits relatively regular degradation, B0006 demonstrates slightly higher capacity retention, B0007 suffers sudden early degradation, and B0018 shows accelerated capacity fade. This diversity provides a challenging and representative testbed for evaluating model robustness.

Table 2. Specifications of NASA lithium-ion batteries used in this study.

| Battery ID | Charging Protocol (CC–CV) | Discharge Current (A) | Discharge Cut-Off Voltage (V) | Operating Temperature (°C) | Initial Capacity (Ah) | End-of-Life Criterion (Ah) | Role |
|---|---|---|---|---|---|---|---|
| B0005 | 1.5 A, 4.2 V, 20 mA cut-off | 2.0 | 2.7 | 25 ± 2 | 1.86 | 1.40 | Train/Test |
| B0006 | 1.5 A, 4.2 V, 20 mA cut-off | 2.0 | 2.5 | 25 ± 2 | 2.04 | 1.40 | Train/Test |
| B0007 | 1.5 A, 4.2 V, 20 mA cut-off | 2.0 | 2.2 | 25 ± 2 | 1.89 | 1.40 | Train/Test |
| B0018 | 1.5 A, 4.2 V, 20 mA cut-off | 2.0 | 2.5 | 25 ± 2 | 1.86 | 1.40 | Train/Test |

Figure 4. Time-series plots of voltage, current, and temperature for fresh (Cycle 1) and aged (Cycle 168) battery cells.

Figure 5. Capacity versus cycle number for NASA cells, with end of life threshold marked at 1.40 Ah, about 70 percent of nominal capacity.

3.3. Data Preprocessing

Effective preprocessing is essential for converting raw discharge data into reliable training inputs. This study used multi-channel sequences of voltage, current, and temperature from four NASA PCoE lithium-ion cells (B0005, B0006, B0007 and B0018). Outlier cycles were removed using a median absolute deviation (MAD) filter, and cycles with capacities outside the valid range of 0.5–2.5 Ah were excluded. After cleaning, all features were standardized by z-score normalization using training-set statistics. For each channel $j$, the normalized value $\tilde{x}_{j}$ of a sample $x_{j}$ is computed as

$$\tilde{x}_{j} = \frac{x_{j} - \mu_{j}}{\sigma_{j}}$$

where $\mu_{j}$ and $\sigma_{j}$ denote the mean and standard deviation of channel $j$ in the training partition. Validation and test sets used the same parameters, and predictions were de-normalized after inference to recover their physical scale [42,43].

To improve generalization and avoid overfitting, light data augmentation was applied to the training set only. Zero-mean additive Gaussian noise was injected with a standard deviation of about 1–2 percent of each feature’s amplitude. Temporal jitter was also introduced, with a maximum shift of ±2 sampling points, which is less than 0.5 percent of the total discharge time. These small perturbations simulate the sensor noise and slight timing errors found in actual BMS data while preserving the physical continuity of the voltage, current, and temperature signals; no rescaling or artificial shape distortion was applied [18,44]. This reduced overfitting and improved generalization under irregular cycling conditions.
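The cleaning and normalization steps can be sketched as follows. This is a simplified illustration under stated assumptions: the MAD test is applied globally to the per-cycle capacities with a hypothetical threshold of three MADs (the text gives neither the threshold nor the window), and all function names are invented for this example. On a strongly trending capacity series, a rolling-window variant would likely be more appropriate.

```python
import statistics
from typing import List, Sequence, Tuple


def mad_filter(
    capacities: Sequence[float],
    k: float = 3.0,  # assumed threshold, not given in the text
    valid_range: Tuple[float, float] = (0.5, 2.5),
) -> List[int]:
    """Return indices of cycles kept after the range check and the MAD test."""
    low, high = valid_range
    in_range = [i for i, c in enumerate(capacities) if low <= c <= high]
    values = [capacities[i] for i in in_range]
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return in_range  # no spread to test against
    return [i for i in in_range if abs(capacities[i] - median) <= k * mad]


def fit_zscore(train_rows: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Per-channel (mean, std) computed from training rows only."""
    stats = []
    for column in zip(*train_rows):
        mu = statistics.fmean(column)
        sigma = statistics.pstdev(column) or 1.0  # guard against constant channels
        stats.append((mu, sigma))
    return stats


def apply_zscore(
    rows: Sequence[Sequence[float]], stats: Sequence[Tuple[float, float]]
) -> List[List[float]]:
    """Normalize any partition with the training statistics."""
    return [[(x - mu) / sigma for x, (mu, sigma) in zip(row, stats)] for row in rows]


def invert_zscore(value: float, mu: float, sigma: float) -> float:
    """Map a normalized prediction back to its physical scale."""
    return value * sigma + mu
```

The statistics returned by `fit_zscore` are computed once on the training partition and reused for validation and test data, which is what keeps information from later cycles out of the normalization.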

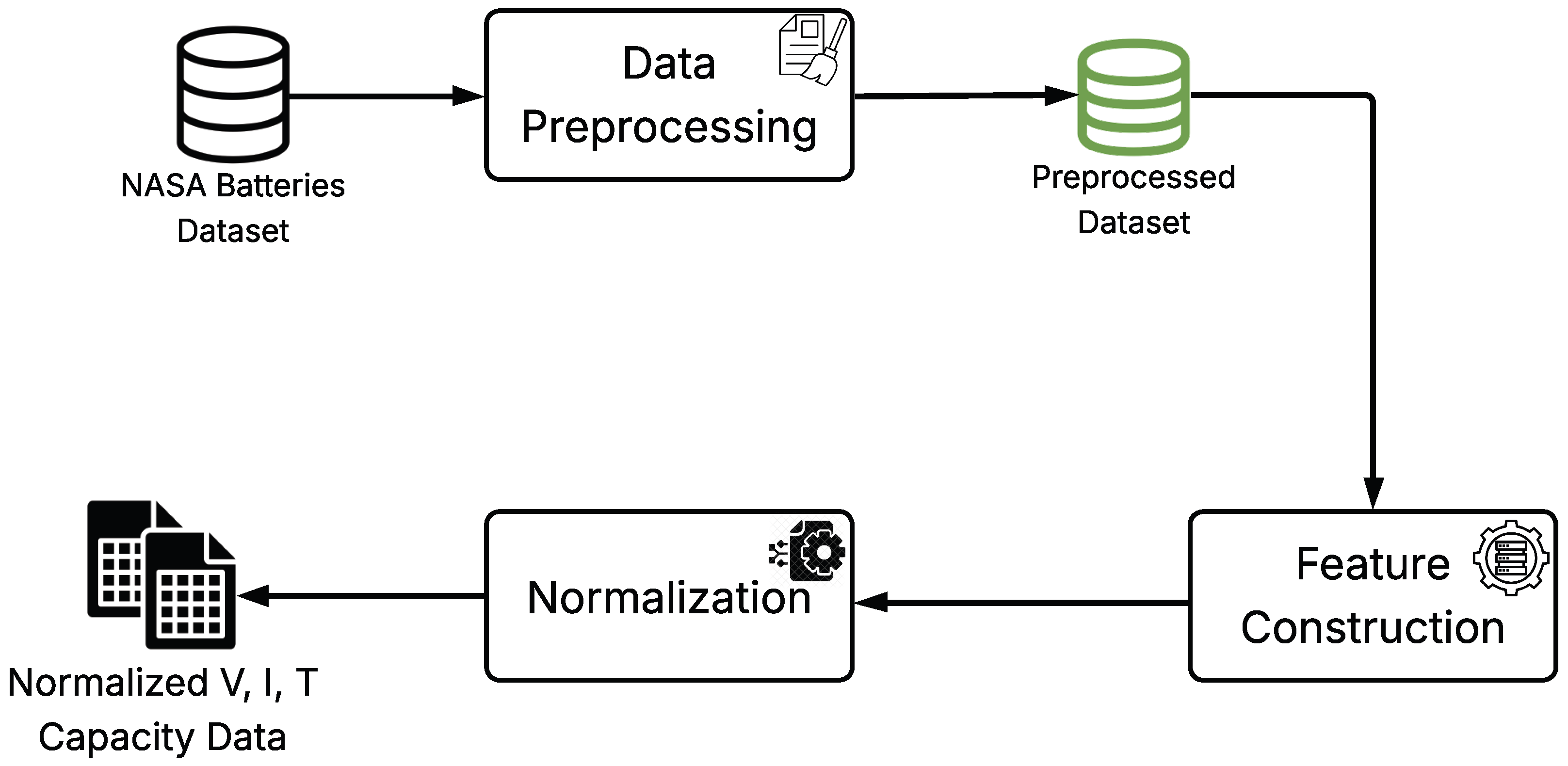

Figure 6 shows the workflow of battery data processing and normalization.
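The training-only augmentation described in this section can be sketched in the same spirit. The sketch assumes that "amplitude" means the peak-to-peak range of a signal and that jitter shifts the whole trace, padding with edge values; both interpretations, and the function names, are assumptions rather than details confirmed by the text.

```python
import random
from typing import List, Optional, Sequence


def add_gaussian_noise(
    signal: Sequence[float],
    rel_std: float = 0.01,  # 1 percent of the amplitude; the text quotes 1-2 percent
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Add zero-mean Gaussian noise scaled to the signal's peak-to-peak range."""
    rng = rng or random.Random()
    sigma = rel_std * (max(signal) - min(signal))
    return [x + rng.gauss(0.0, sigma) for x in signal]


def temporal_jitter(
    signal: Sequence[float],
    max_shift: int = 2,  # maximum shift of two sampling points, as in the text
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Shift the trace by up to +/- max_shift samples, repeating edge values."""
    rng = rng or random.Random()
    shift = rng.randint(-max_shift, max_shift)
    last = len(signal) - 1
    return [signal[min(max(i - shift, 0), last)] for i in range(len(signal))]
```

Passing a seeded `random.Random` instance makes the augmentation repeatable, which fits the fixed-seed setup described for training.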

3.4. Feature Extraction and Selection

Each discharge cycle was represented by a 12-dimensional feature vector derived from voltage, current, and temperature signals. The features included raw measurements (V, I, T), first- and second-order derivatives, power and energy terms (including the normalized cumulative energy E), smoothed moving averages, and discharge duration.
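As a rough illustration of how such derived channels can be computed, the sketch below builds several of them from raw V, I, T traces sampled at a fixed interval. The exact composition of the 12 features is not fully specified in the text, so the channel names, the backward-difference derivative, and the five-sample trailing average are assumptions for illustration only.

```python
from itertools import accumulate
from typing import Dict, List, Sequence


def derivative(x: Sequence[float], dt: float) -> List[float]:
    """Backward difference; the first sample has no predecessor and is set to 0."""
    return [0.0] + [(b - a) / dt for a, b in zip(x, x[1:])]


def moving_average(x: Sequence[float], window: int = 5) -> List[float]:
    """Trailing moving average over at most `window` samples."""
    out = []
    for k in range(len(x)):
        chunk = x[max(0, k - window + 1): k + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def cycle_features(
    v: Sequence[float], i: Sequence[float], temp: Sequence[float], dt: float
) -> Dict[str, List[float]]:
    """Derived channels for one discharge cycle sampled every dt time units."""
    power = [vk * ik for vk, ik in zip(v, i)]
    energy = list(accumulate(p * dt for p in power))  # cumulative energy
    total = energy[-1] if energy and energy[-1] != 0 else 1.0
    dv = derivative(v, dt)
    return {
        "V": list(v),
        "I": list(i),
        "T": list(temp),
        "dV_dt": dv,
        "d2V_dt2": derivative(dv, dt),
        "P": power,
        "E_norm": [e / total for e in energy],  # normalized to end at 1
        "V_ma": moving_average(v),
        "duration": [dt * (len(v) - 1)] * len(v),  # broadcast scalar per cycle
    }
```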

Figure 7 shows the z-score distributions of these features across cycles. Voltage and its derivatives display the highest variability, indicating high sensitivity to degradation, while temperature-related channels provide complementary thermal information.

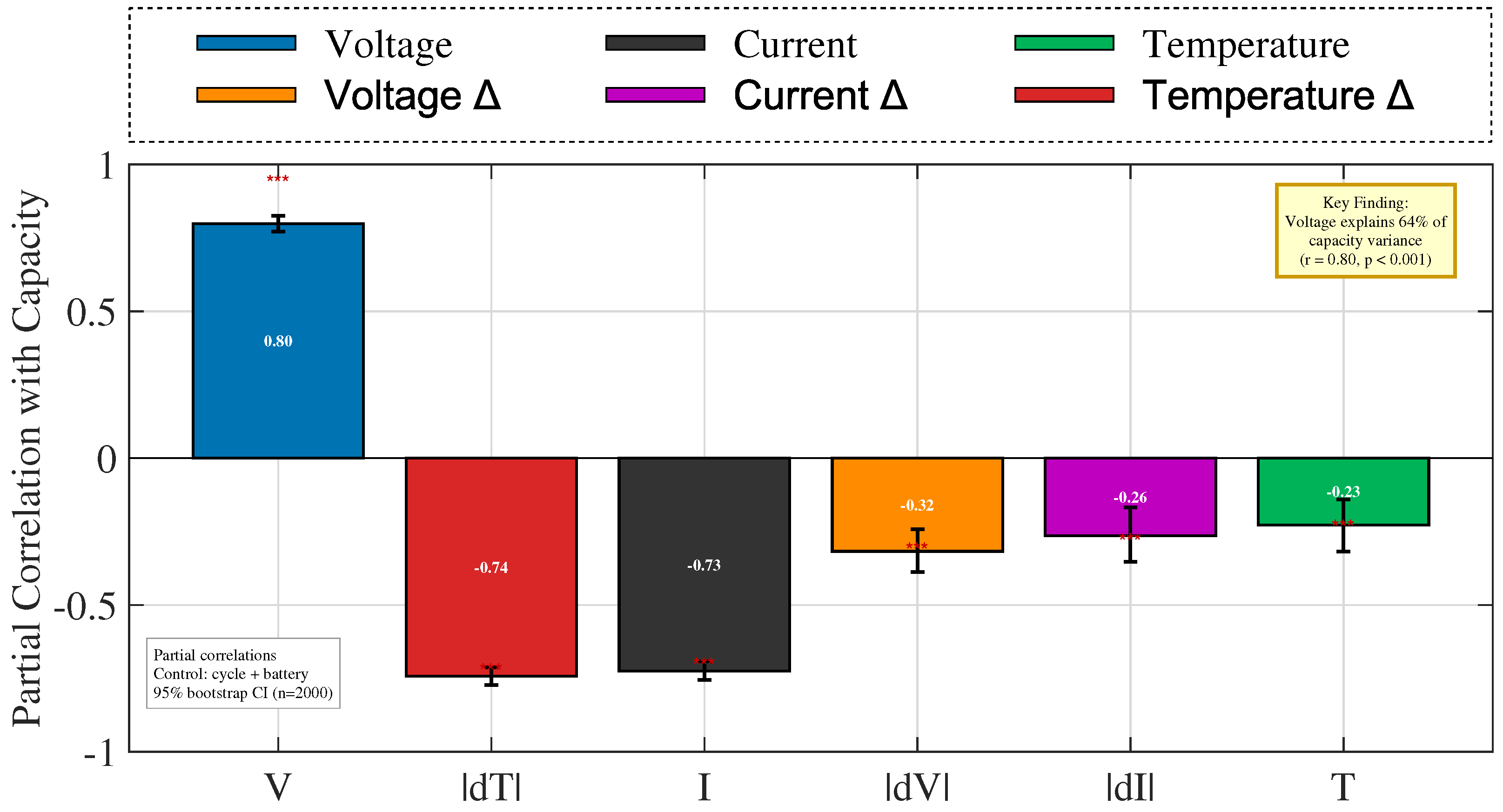

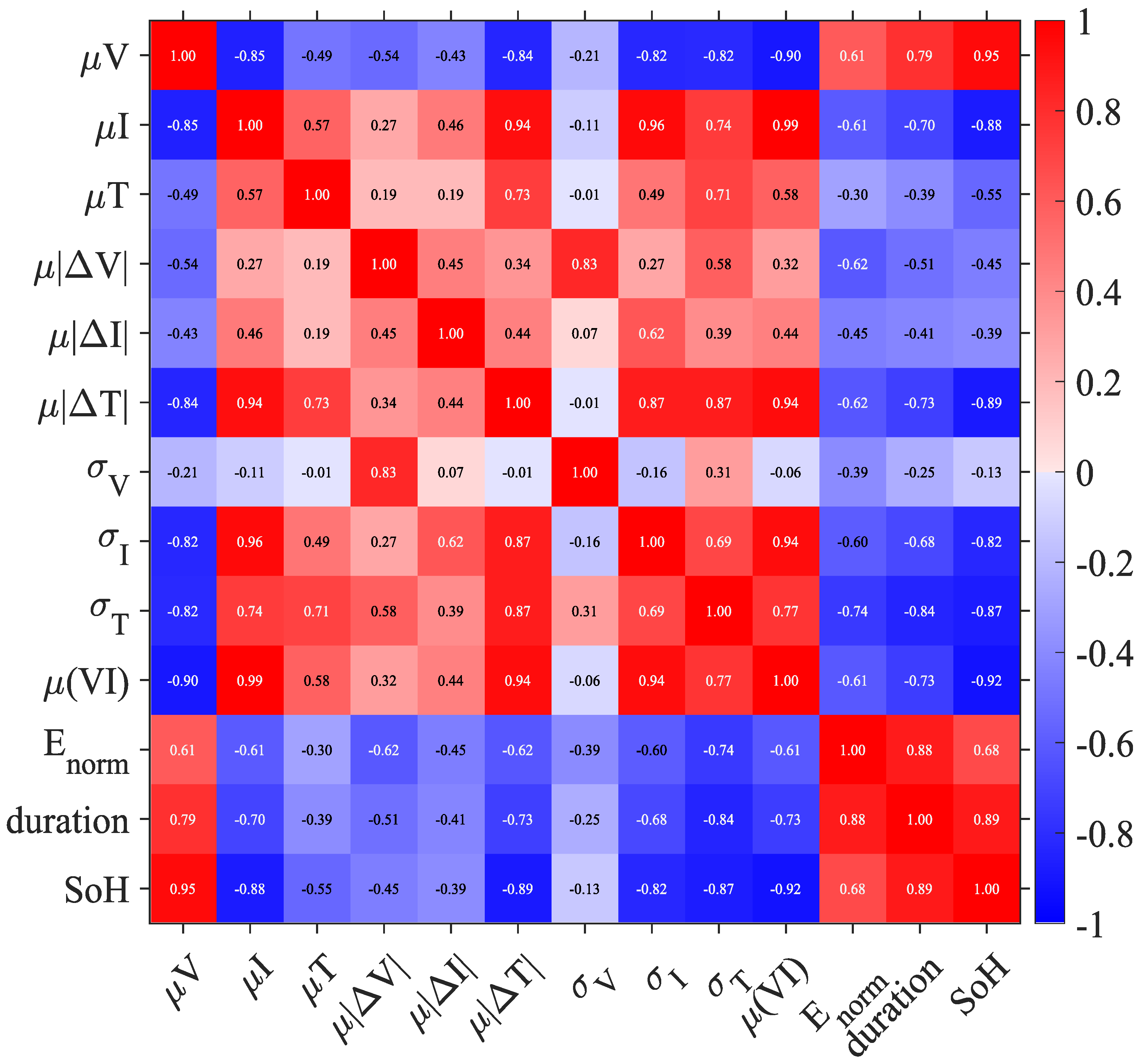

Figure 8 further reports partial correlation coefficients with measured capacity, controlling for cycle index and battery identity. Figure 9 presents the Pearson correlation matrix with SoH [45]. Both analyses confirm that multiple features are informative, and no single descriptor dominates degradation. Consequently, all features were retained as model inputs. This enables convolutional layers to emphasize localized signals and BiLSTM to capture temporal dependencies. Retaining all features allows CBATE-Net to exploit both strong predictors and complementary cues, preserving nonlinear interactions that might otherwise be lost.
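For reference, the Pearson coefficient underlying the correlation matrix can be computed as in the short sketch below. It covers only the plain Pearson coefficient between one feature and SoH; the partial correlations, which additionally control for cycle index and battery identity, are not reproduced here. The function name is hypothetical.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)
```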

3.5. CBATE-Net Models

Battery capacity and SoH estimation requires models that can capture short-term localized patterns and long-range sequential dependencies from multi-channel discharge data. Traditional regressors and handcrafted features are insufficient under heterogeneous operating conditions. Therefore, the proposed framework integrates four complementary components: CNN, BiLSTM networks, temporal attention, and ensemble learning. The following subsections (Section 3.5.1, Section 3.5.2, Section 3.5.3 and Section 3.5.4) describe each model family in detail and explain its role in the integrated CBATE-Net framework.

3.5.1. Convolutional Neural Network

CNN layers are employed at the feature extraction stage to capture short-term, localized patterns in the discharge signals of voltage (V), current (I), and surface temperature (T), which often indicate early degradation. Examples include slope changes in voltage or sudden temperature spikes, detected through convolutional filters. The convolution operation for a one-dimensional input sequence is:

$$s(t) = (a * K)(t) = \sum_{\tau} a(\tau)\, K(t - \tau)$$

where $a$ is the input sequence (V, I, T), $K$ is the kernel (learnable filter), and $s(t)$ is the resulting feature at position $t$. The extracted features are then passed to the BiLSTM layers.
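A minimal sketch of the one-dimensional convolution on a single channel is given below. It evaluates the sum only at positions where the kernel fully overlaps the input ("valid" mode), and the difference kernel in the usage note is a hand-picked example rather than a learned filter. Note that most deep learning libraries compute cross-correlation (no kernel flip); since the kernel is learned, the two forms are equivalent in practice.

```python
from typing import List, Sequence


def conv1d_valid(a: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Discrete convolution s[t] = sum_tau a[tau] * kernel[t - tau].

    Only positions where the kernel fully overlaps the input are returned.
    """
    n, m = len(a), len(kernel)
    if m == 0 or m > n:
        raise ValueError("kernel must be non-empty and no longer than the input")
    out = []
    for t in range(m - 1, n):
        # tau runs over the m input samples covered by the kernel at position t
        out.append(sum(a[tau] * kernel[t - tau] for tau in range(t - m + 1, t + 1)))
    return out
```

With the kernel `[1, 0, -1]`, the output is the difference between samples two steps apart, so `conv1d_valid([1, 2, 3, 4], [1, 0, -1])` gives `[2, 2]` for a constant slope, and a change in slope shows up as a change in the output.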

3.5.2. Bidirectional Long Short-Term Memory (BiLSTM)

While CNN layers effectively capture localized degradation patterns, accurate capacity and SoH prediction also requires modeling temporal dependencies that span the full discharge sequence. LSTM networks address this need through gated memory cells that regulate information flow across time steps and mitigate the vanishing and exploding gradients of conventional RNNs [21,24,46,47]. In a standard LSTM, the memory cell $c_t$ is updated by balancing information from the previous state with the new candidate input (using the forget and input gates):

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

where $f_t$ is the forget gate, $i_t$ is the input gate, and $\tilde{c}_t$ is the candidate update. The hidden state is updated as:

$$h_t = o_t \odot \tanh(c_t)$$

where $h_t$ is the hidden state (scaled by the output gate) and $o_t$ represents the output gate activation. A Bidirectional LSTM (BiLSTM) extends this formulation by processing the sequence in both forward and backward directions, producing hidden states $\overrightarrow{h}_t$ and $\overleftarrow{h}_t$. The final representation is obtained by concatenating both directions:

$$h_t = \left[\overrightarrow{h}_t \,;\, \overleftarrow{h}_t\right]$$

This bidirectional design enables the framework to jointly exploit early- and late-cycle degradation cues. The extracted features are then passed to the temporal attention model.
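The state update and the bidirectional concatenation can be written out as a small sketch. Gate activations are passed in directly here; in an actual LSTM they are produced by sigmoid and tanh functions of learned affine maps of the input and previous hidden state, which this sketch leaves out. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def lstm_state_update(
    f: Sequence[float],       # forget gate activations
    i: Sequence[float],       # input gate activations
    g: Sequence[float],       # candidate update
    o: Sequence[float],       # output gate activations
    c_prev: Sequence[float],  # previous memory cell
) -> Tuple[List[float], List[float]]:
    """Elementwise c_t = f*c_prev + i*g, then h_t = o*tanh(c_t)."""
    c = [fk * ck + ik * gk for fk, ik, gk, ck in zip(f, i, g, c_prev)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return c, h


def concat_bidirectional(
    h_forward: Sequence[Sequence[float]], h_backward: Sequence[Sequence[float]]
) -> List[List[float]]:
    """Per-step concatenation of forward and backward hidden states.

    Both inputs must already be in time order (index t refers to step t).
    """
    return [list(hf) + list(hb) for hf, hb in zip(h_forward, h_backward)]
```

A forget gate of 1 with an input gate of 0 carries the previous cell value through unchanged, which is how information can persist across many time steps.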

3.5.3. Temporal Attention

While BiLSTM layers capture sequential dependencies, they treat all time steps equally, which may reduce the influence of degradation-relevant segments in a discharge profile. To address this, a temporal attention mechanism assigns higher weights to critical time steps [48,49]. Given a sequence of hidden states $h_1, \dots, h_T$ produced by the BiLSTM, the attention weight $\alpha_t$ is computed as:

$$\alpha_t = \frac{\exp\left(\mathrm{score}(h_t)\right)}{\sum_{k=1}^{T} \exp\left(\mathrm{score}(h_k)\right)}$$

where $h_t$ is the hidden state at time step $t$, $T$ is the total number of steps, $\mathrm{score}(\cdot)$ is a learnable scoring function, $\exp(\mathrm{score}(h_t))$ is the unnormalized importance score, $\sum_{k=1}^{T} \exp(\mathrm{score}(h_k))$ is the normalization term, and $\alpha_t$ is the normalized attention weight with $\sum_{t=1}^{T} \alpha_t = 1$.

The context vector is then obtained as a weighted sum of hidden states:

$$c = \sum_{t=1}^{T} \alpha_t h_t$$

Here, c is the aggregated sequence representation that emphasizes intervals critical to degradation. This mechanism amplifies critical regions, such as voltage plateaus that flatten with aging or temperature segments with accelerated rise. By focusing on these subsequences, temporal attention improves both interpretability and predictive accuracy.
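The weighting and aggregation steps amount to a softmax followed by a weighted sum, as in the sketch below. The scores are passed in as plain numbers and stand in for the output of the learnable scoring function, whose form the text does not specify; the function names are hypothetical.

```python
import math
from typing import List, Sequence


def attention_weights(scores: Sequence[float]) -> List[float]:
    """Softmax over per-step scores; the maximum is subtracted for stability."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]


def context_vector(
    hidden_states: Sequence[Sequence[float]], scores: Sequence[float]
) -> List[float]:
    """Weighted sum of hidden states, one weight per time step."""
    alphas = attention_weights(scores)
    dim = len(hidden_states[0])
    return [sum(a * h[d] for a, h in zip(alphas, hidden_states)) for d in range(dim)]
```

Equal scores reduce the context vector to the plain average of the hidden states, so any gain from attention comes from the scoring function learning to separate informative steps from uninformative ones.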

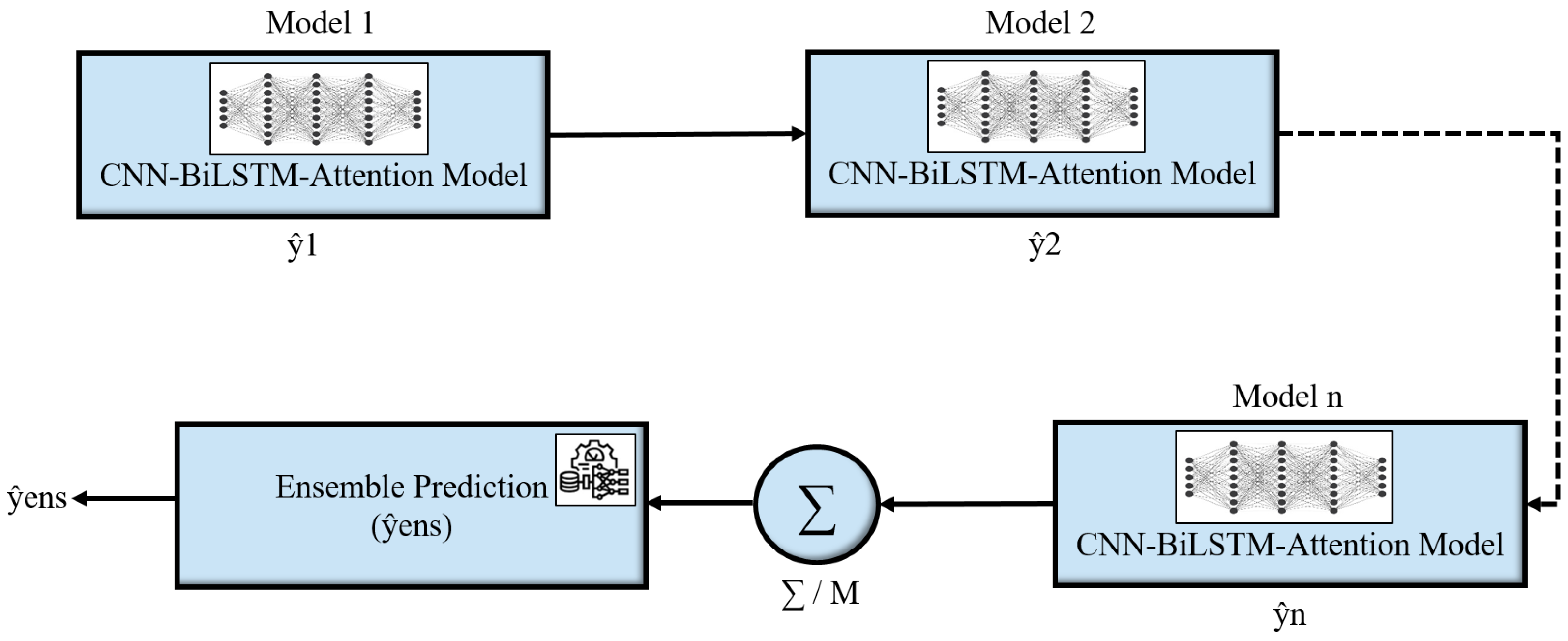

3.5.4. Ensemble Learning

DL models such as CNN-BiLSTM with temporal attention capture degradation dynamics effectively. However, their predictions may still be sensitive to initialization, data splits, or stochastic optimization. To mitigate this variability, the framework incorporates ensemble learning, where multiple base models are trained independently and their outputs are averaged into a consensus prediction. If $\hat{y}^{(i)}$ denotes the prediction from the $i$-th model, the ensemble output is

$$\hat{y} = \frac{1}{M} \sum_{i=1}^{M} \hat{y}^{(i)}$$

where $M$ is the number of models. This averaging reduces variance, enhances stability, and makes predictions less sensitive to noise or outliers. Diversity among base models is achieved through variations in weight initialization, training subsets, and minor hyperparameters [43]. The overall ensemble flow is illustrated in Figure 10.
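The averaging step itself is simple, as the following sketch shows. It assumes the base models' predictions are available as one list per model, aligned sample by sample; the per-sample spread is an extra diagnostic added for illustration and is not described in the text.

```python
import statistics
from typing import List, Sequence


def ensemble_average(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Mean over models for each sample; predictions[i][n] is model i, sample n."""
    return [statistics.fmean(per_sample) for per_sample in zip(*predictions)]


def ensemble_spread(predictions: Sequence[Sequence[float]]) -> List[float]:
    """Population standard deviation across models, as a rough disagreement measure."""
    return [statistics.pstdev(per_sample) for per_sample in zip(*predictions)]
```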

3.6. Training and Model Selection

The proposed CBATE-Net framework was trained on the NASA PCoE lithium-ion datasets. For each battery, samples were stratified by the target distribution (within that battery) and then assigned 70% to training, 15% to validation, and 15% to testing while enforcing chronology [50,51]. Specifically, validation and test samples were required to come from cycles later than any training sample, and overlaps of input windows across splits were disallowed. Normalization statistics (mean and standard deviation) were computed on the training data exclusively and then applied unchanged to the validation and test sets. A fixed random seed ensured reproducibility. All experiments were re-run under this chronology-constrained split; the results maintain the same model ranking and principal conclusions. The strength of augmentation was kept within realistic sensor-noise limits. When the model was retrained without augmentation, the average RMSE changed by less than 1 percent, which indicates that the augmentation did not bias or distort the model. Optimization employed the Adam algorithm [10,20], which adapts learning rates and provides stable convergence under noisy gradients. The loss function was defined as the mean squared error (MSE) between predicted and measured capacity values:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{N} \sum_{i=1}^{N} \left(\hat{y}_i - y_i\right)^2$$

where $\hat{y}_i$ and $y_i$ denote predicted and true capacities, respectively, and $N$ is the number of samples. To enhance robustness, the ensemble learning described in Section 3.5.4 was applied. Multiple base models were trained independently with variations in initialization, batch ordering, and minor hyperparameter settings. Their outputs were aggregated by simple averaging, which reduces variance and stabilizes predictions even when individual learners differ in performance. Model performance was assessed using four standard regression metrics: root mean squared error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and coefficient of determination ($R^2$).
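The chronological constraint and the evaluation metrics can be sketched as follows. The split shown here covers only the ordering requirement (training cycles first, then validation, then test) and does not reproduce the within-battery stratification; integer percentages are used to avoid floating-point rounding of the split sizes. The function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def chronological_split(
    n_cycles: int, train_pct: int = 70, val_pct: int = 15
) -> Tuple[List[int], List[int], List[int]]:
    """Cycle indices for train/validation/test, in strict temporal order."""
    n_train = n_cycles * train_pct // 100
    n_val = n_cycles * val_pct // 100
    indices = list(range(n_cycles))
    return (
        indices[:n_train],
        indices[n_train:n_train + n_val],
        indices[n_train + n_val:],  # remainder goes to the test set
    )


def regression_metrics(
    y_true: Sequence[float], y_pred: Sequence[float]
) -> Dict[str, float]:
    """MSE, RMSE, MAE, MAPE (percent) and R^2 for capacity predictions."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE assumes no true value is zero, which holds for capacities in Ah.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - (mse * n) / ss_tot if ss_tot > 0 else float("nan")
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape, "R2": r2}
```

For a cell with 168 cycles, this split yields 117 training, 25 validation, and 26 test cycles, with every validation and test cycle later than all training cycles.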

This combination of chronology-constrained sampling, adaptive optimization, and ensemble averaging produced accurate and stable estimates, which is important for battery energy storage systems (BESS), including PV-coupled scenarios with non-uniform cycling and variable loads.