1. Introduction

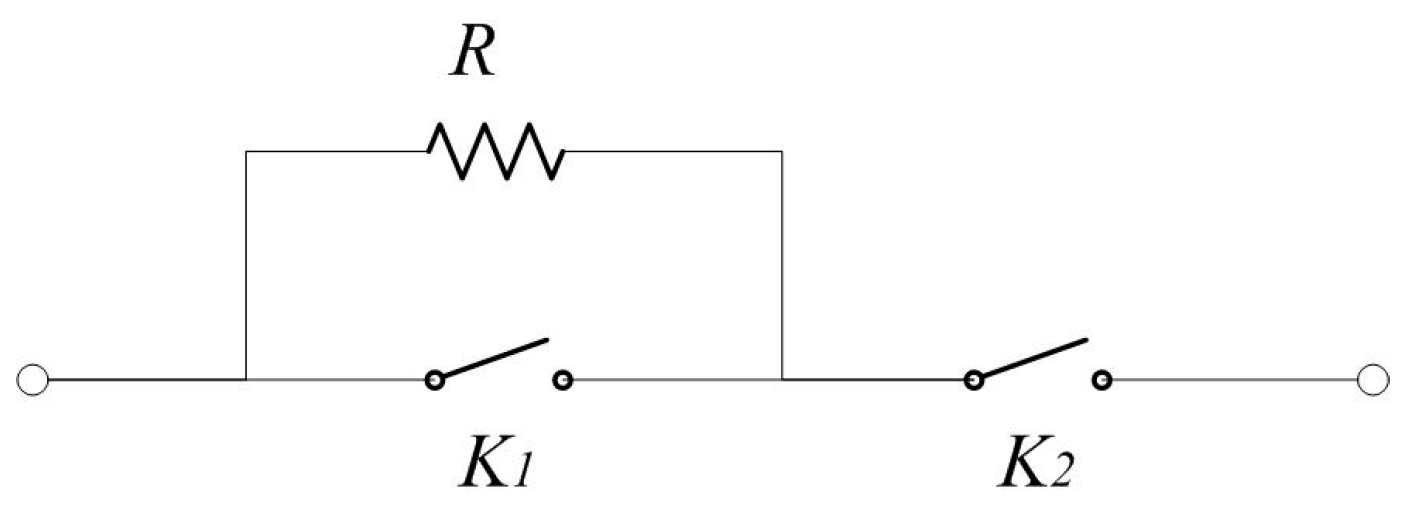

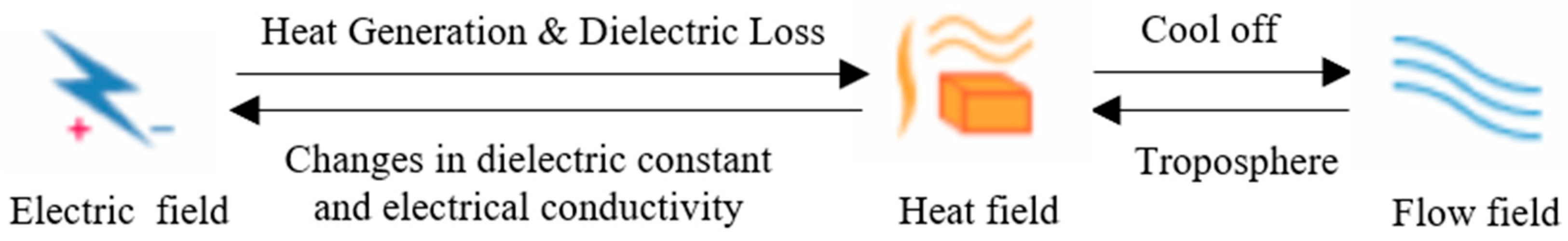

Rapid economic growth has driven the demand for more efficient and reliable electrical networks, making the development of ultra-high-voltage (UHV) and extra-high-voltage (EHV) transmission systems increasingly critical. Concurrently, pre-insertion resistors (PIRs) are now more widely incorporated in the design of switching equipment, including gas-insulated switchgear (GIS), hybrid gas-insulated switchgear (HGIS), and oil circuit breakers [1,2]. In circuit breaker operations, PIRs play a key role in mitigating inrush currents and transient overvoltages during switching. This has led to increased research focus on their dynamic thermal and electrical behavior in high-voltage systems [3].

In a series of studies, researchers have examined PIR power testing, synchronous switching technology, applications in other areas, and failure analysis to understand how PIRs can improve system reliability and performance in a variety of situations.

H. Heiermeier et al. [4] proposed an alternative approach for the power testing of PIRs that aims to preserve their critical parameters. The method involves six key steps: assessing the mechanical insertion time and the dielectric strength decay rate, calculating the maximum energy of the PIR and its electrical insertion time (EIT), identifying the most adverse network conditions for testing, determining the adjusted EIT and resistor stack length necessary for the equivalent PIR test, evaluating the new EIT and corresponding PIR energy, and evaluating the “pre-arc” behavior of the PIR switch. This provides a complete and effective test procedure for PIRs. To mitigate transient voltages during the switching of shunt power capacitor compensators, R. Sun et al. [5] introduced synchronized switching techniques incorporating PIRs for use in UHV transmission systems. Their study identified the closing target angle, resistor insertion time, and resistor size as significant contributors to switching transients in both single-bank and back-to-back switching configurations. Kunal A. Bhatt et al. [6] demonstrated that a controlled switching device used with a PIR circuit breaker (PIR-CB) can effectively reduce the asymmetric DC component in the charging current during the energization of a shunt reactor. This reduction is achieved by optimizing the insertion timing, resistor value, and EIT of the PIR. The simulation outcomes were validated against field data, confirming the practical feasibility of the approach. The fast transient overvoltage generated by disconnecting switch operation contributes to premature failures of current transformers. Simulations show that applying a 1000 Ω PIR to the disconnecting switch can reduce the overvoltage to the standard limit and significantly reduce the rate of rise of the voltage waveform [7]. S. Zondi et al. [8] simulated the transient voltage during capacitor bank switching with an electromagnetic transient program, and the results showed that the PIR method can significantly reduce this transient. L. He [9] developed a generalized model of a modular multilevel converter (MMC) for high-voltage DC, and the simulation results showed that a PIR can effectively suppress the inrush current of the converter transformer. A method is presented in [10] for determining the optimum PIR value to minimize the current drifts and switching overvoltages that occur increasingly often in high-voltage cables in frequent-use scenarios. H. Shi et al. [11] conducted simulations to analyze the transient currents under three PIR fault conditions: short circuit, breakdown, and open circuit. Their findings revealed that parameters such as the harmonic content of the inrush current, the timing of the peak inrush current, and the three-phase imbalance differ notably from normal operating conditions. These variations can serve as useful indicators for fault diagnosis techniques.

These collective endeavors not only enhance understanding of PIR functionality within electrical systems but also pave the way for their optimized application, promising improved system stability and efficiency.

PIRs heat up rapidly during circuit breaker opening and closing operations. Because PIRs have a temperature limit, repeated operations may damage them through overheating, yet opening and closing circuit breakers is a routine operation in power systems. At present, there is no temperature prediction for PIRs, which leads to their frequent damage. The predicted temperature is an important indicator for deciding whether a circuit breaker can perform an opening or closing operation. Few studies have considered the thermal characteristics of PIRs under fault conditions and their implications for system reliability [12]. In particular, thermal damage caused by electric-thermal coupling during fault-induced closures is a major risk factor, yet it has not been extensively studied. Most existing works focus on electromagnetic or mechanical behavior, while temperature prediction remains underexplored despite its critical impact on failure modes [12]. During closing, the current flowing through the PIR generates heat, causing its internal temperature to rise. When a transmission line fault occurs, multiple closures may cause the PIR to fail. If the PIR temperature is accurately known, the closing interval can be lengthened to extend the cooling time whenever the temperature is too high, which prevents the PIR from failing and ensures the stable operation of the transmission line. However, because PIRs operate in a high-voltage environment, direct temperature measurement using sensors is not feasible. Moreover, the heating and cooling cycles of PIRs involve complex, nonlinear, and tightly coupled processes influenced by various parameters, making precise mathematical modeling challenging. Therefore, this study employs deep learning models to predict the temperature of the PIR.

Predictive models are now widely used in engineering. Machine learning techniques have been applied to fault detection for power system components such as transformers, supporting more intelligent and proactive condition monitoring [13]. In the early stages of predictive analytics, statistical models such as the autoregressive integrated moving average (ARIMA [14]) were extensively employed. These models, however, are primarily limited to handling linear data. To address this limitation, machine learning techniques such as support vector regression (SVR [15]) and extreme gradient boosting (XGBoost, Python 3.8 [16]) emerged as solutions for extracting nonlinear patterns from time series data. Machine learning methods have proven effective in similar predictive tasks for other substation equipment, enhancing fault prevention and system reliability [17].

The effectiveness of AI-driven control and prediction strategies has already been demonstrated in the management of distributed energy systems [18]. With the advancement of artificial intelligence, many models have been developed for predictive tasks, among which recurrent neural network (RNN)-based models such as long short-term memory (LSTM [19]) and gated recurrent unit (GRU [20]) models have gained popularity. The LSTM model, through its input, forget, and output gates, effectively captures temporal information and mitigates the vanishing/exploding gradient issues inherent to traditional RNNs [21]. The GRU model simplifies the LSTM architecture while maintaining comparable predictive performance. Other models have also been applied to predictive tasks such as temperature forecasting, including models based on the Transformer [22] (Informer [23]), the multi-layer perceptron (MLP [24]), MLP-based models (DLinear [25], FreTS [26]), the convolutional neural network (CNN [27,28,29]), and CNN-based models (MICN [30], TimesNet [31]).

However, much research has focused on model improvement, with less attention paid to the training process. Commonly used traditional loss functions are sensitive to outliers and prone to overfitting because their gradients grow linearly with the prediction error, which often reduces model accuracy on test datasets. To address this issue, this study proposes the rational smooth loss function (RSL), inspired by the principle of kernel functions. RSL dynamically adjusts gradients and incorporates regularization terms to mitigate overfitting, offering a refined approach to improving predictive model performance.

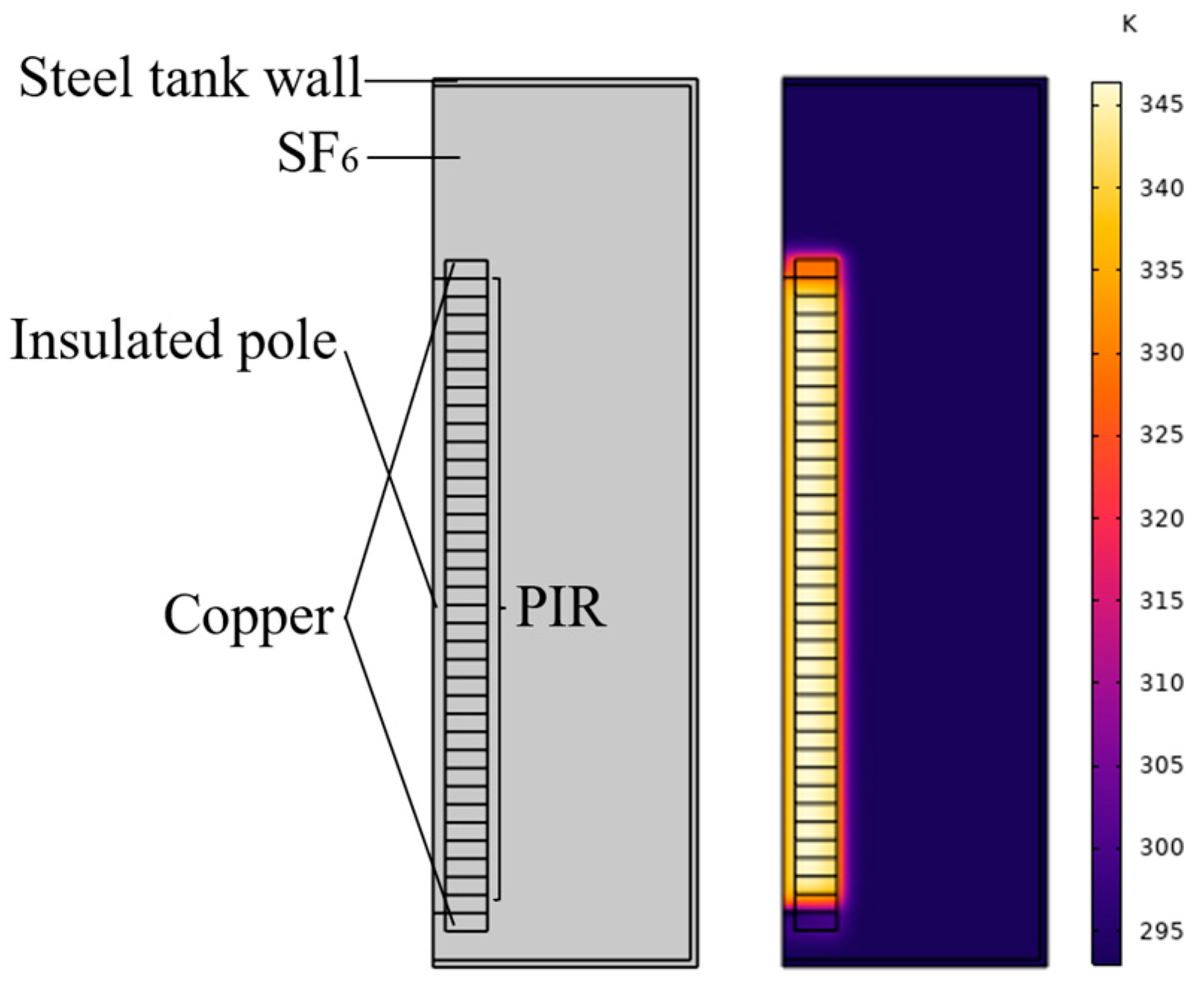

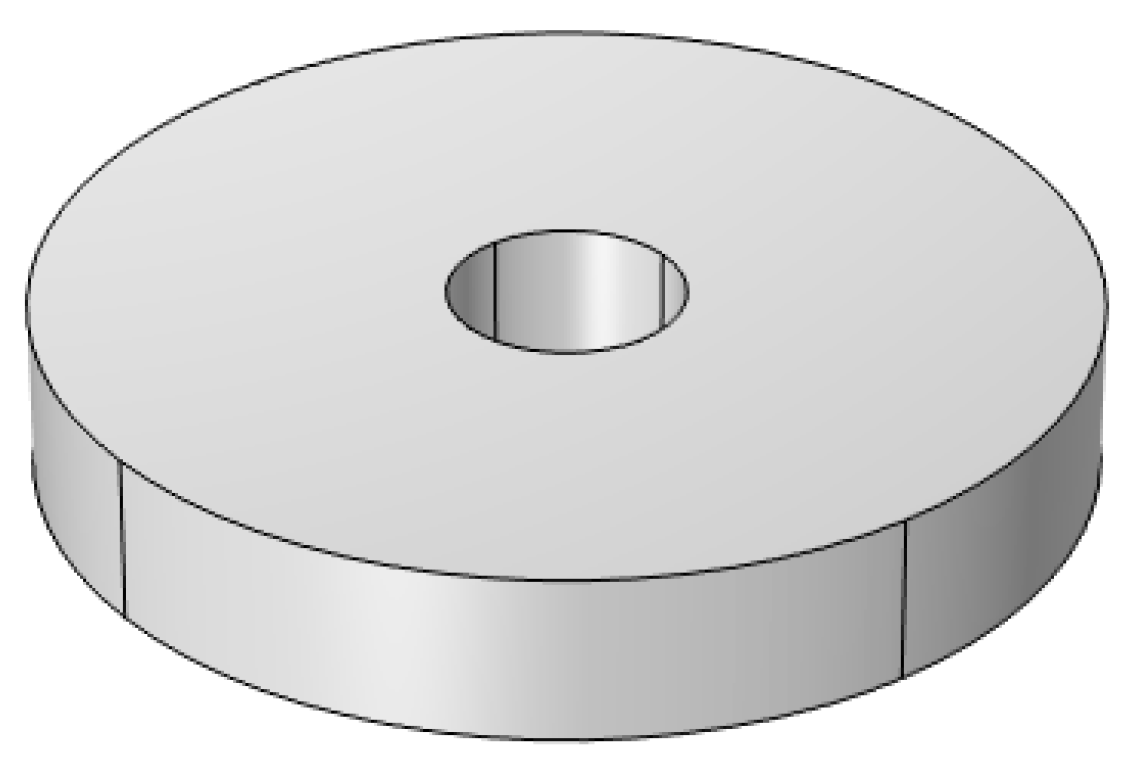

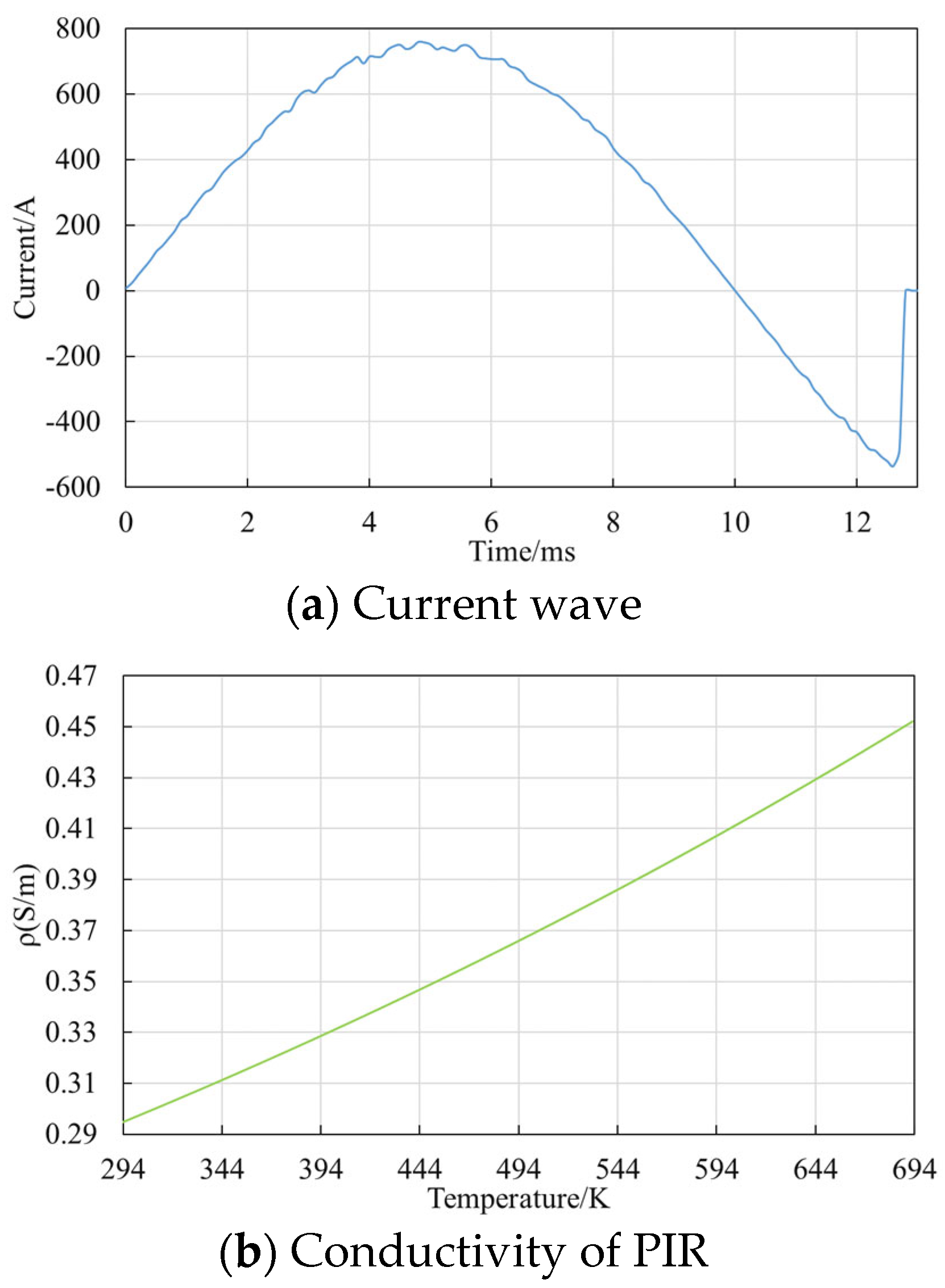

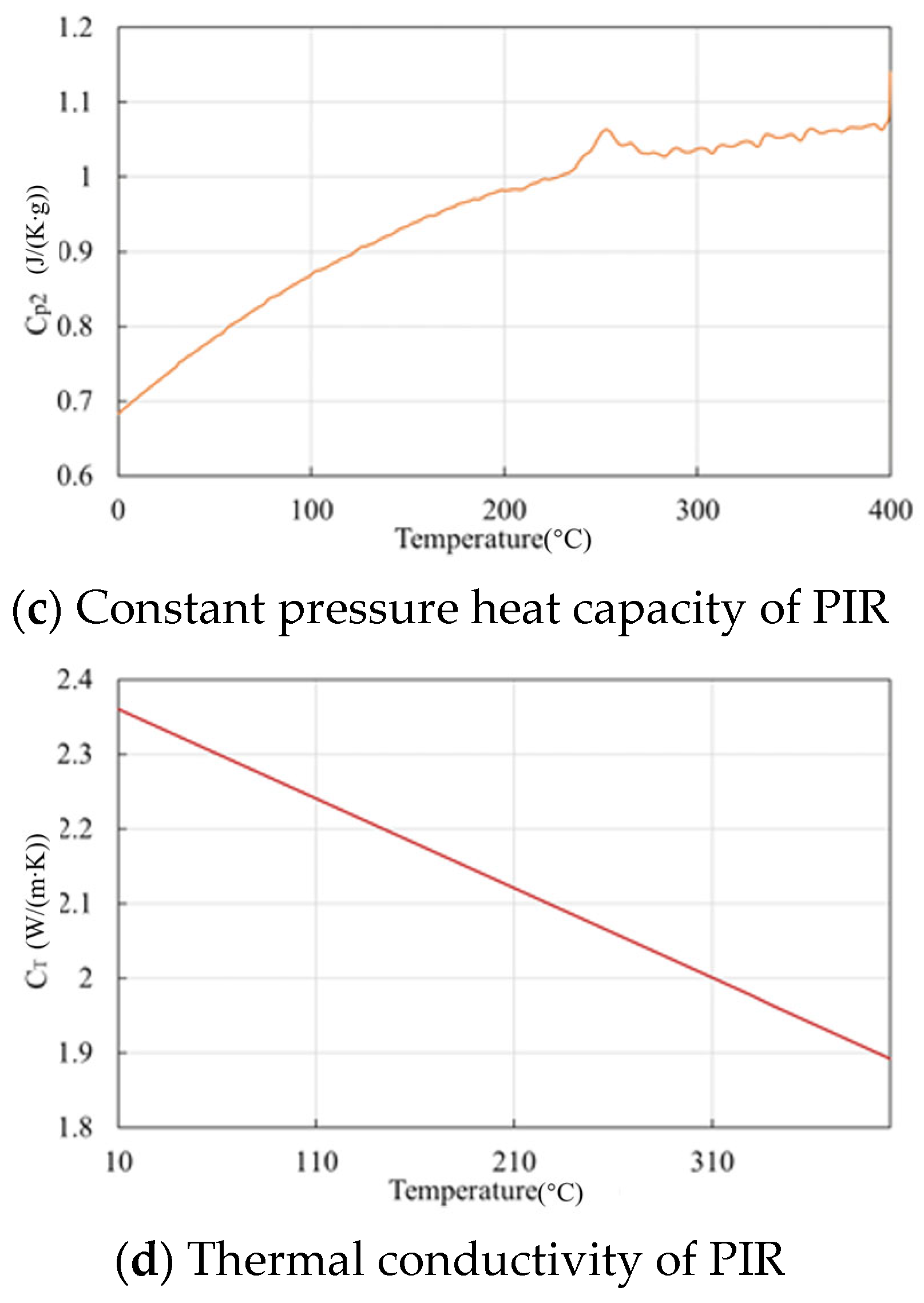

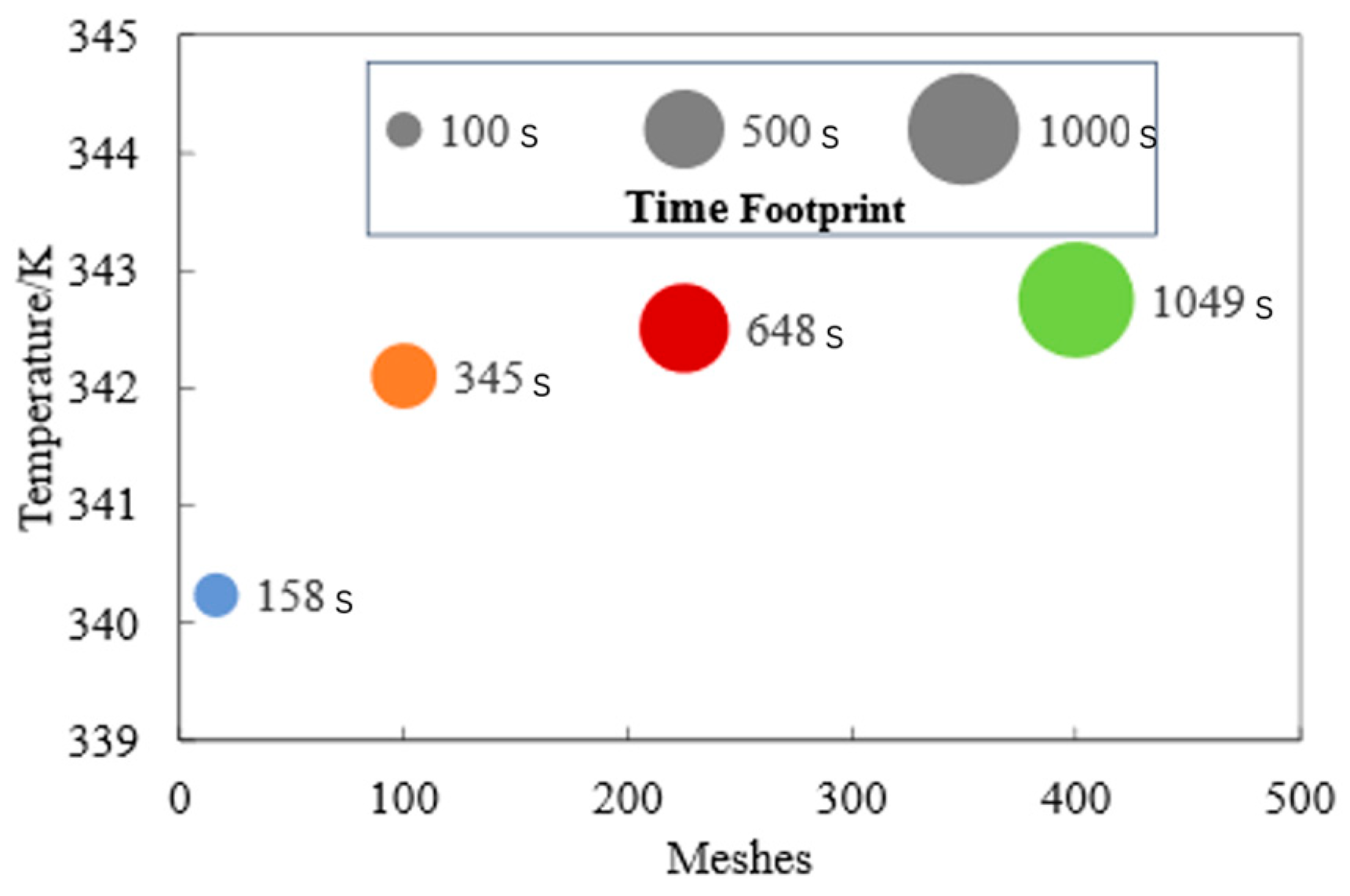

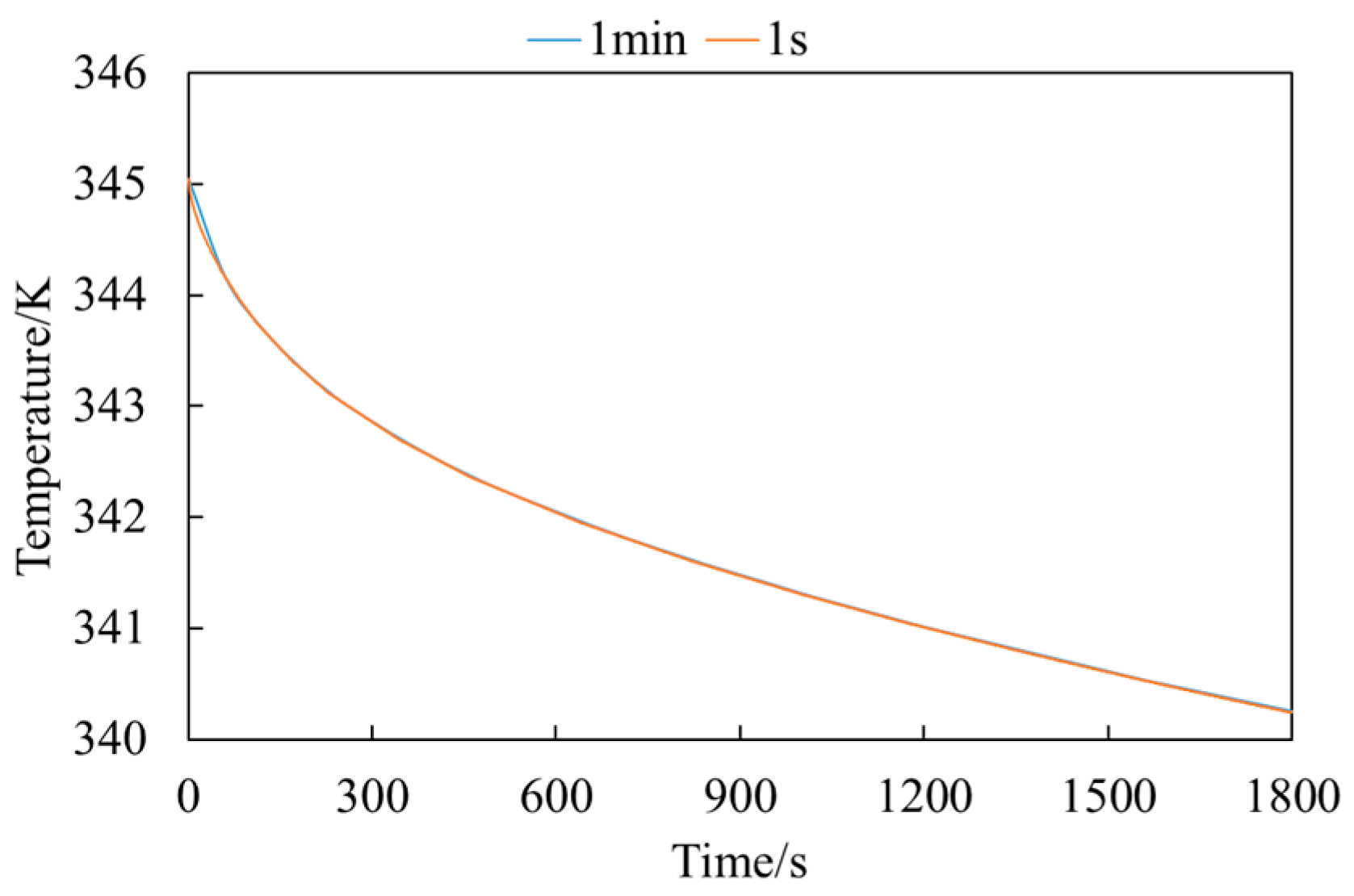

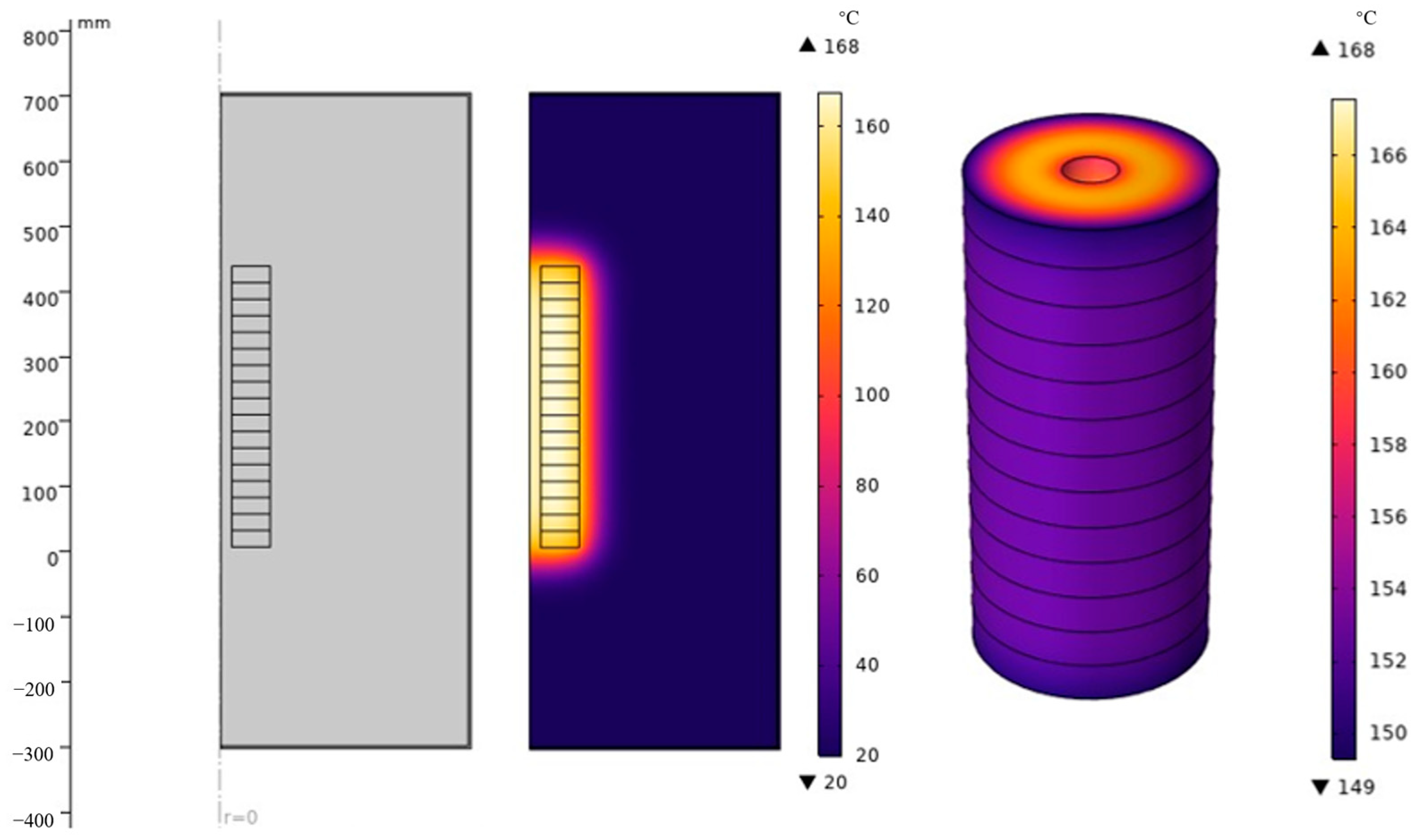

Predicting PIR temperature using deep learning models requires a large amount of data. However, little data is available on the temperature rise of PIRs, and conducting experiments on PIRs is very costly. The dataset in this study is therefore generated from a large number of finite element simulations. The finite element method (FEM) is a computational technique used to approximate the solution of complex systems in various domains. It has already shown effectiveness in simulating a PIR’s internal thermal and electrical fields, especially when considering microstructural influences such as porosity [32]. By discretizing the domain into elements and interpolating with shape functions, FEM models physical phenomena accurately under a variety of boundary conditions, making it an indispensable method for engineering and scientific simulations. Compared with the finite difference and finite volume methods, FEM is better suited to complex geometries and heterogeneous materials. Its mesh density can be adjusted flexibly to improve model accuracy, which is especially useful for irregular shapes and boundaries. FEM also has excellent multi-physics coupling capability, enabling it to simulate multiple interacting physical processes such as electromagnetic fields, thermal fields, and hydrodynamics, which is crucial for PIR simulations.

The rest of this article is organized as follows:

Section 2 describes the LSTM, GRU, and BP models.

Section 3 describes the novel loss function RSL.

Section 4 describes the population-based intelligent optimization algorithm COA and the improvement strategy used to find the RSL parameters.

Section 5 describes the finite element simulation model and presents the prediction results of the three algorithms after using RSL.

3. The Rational Smooth Loss Function

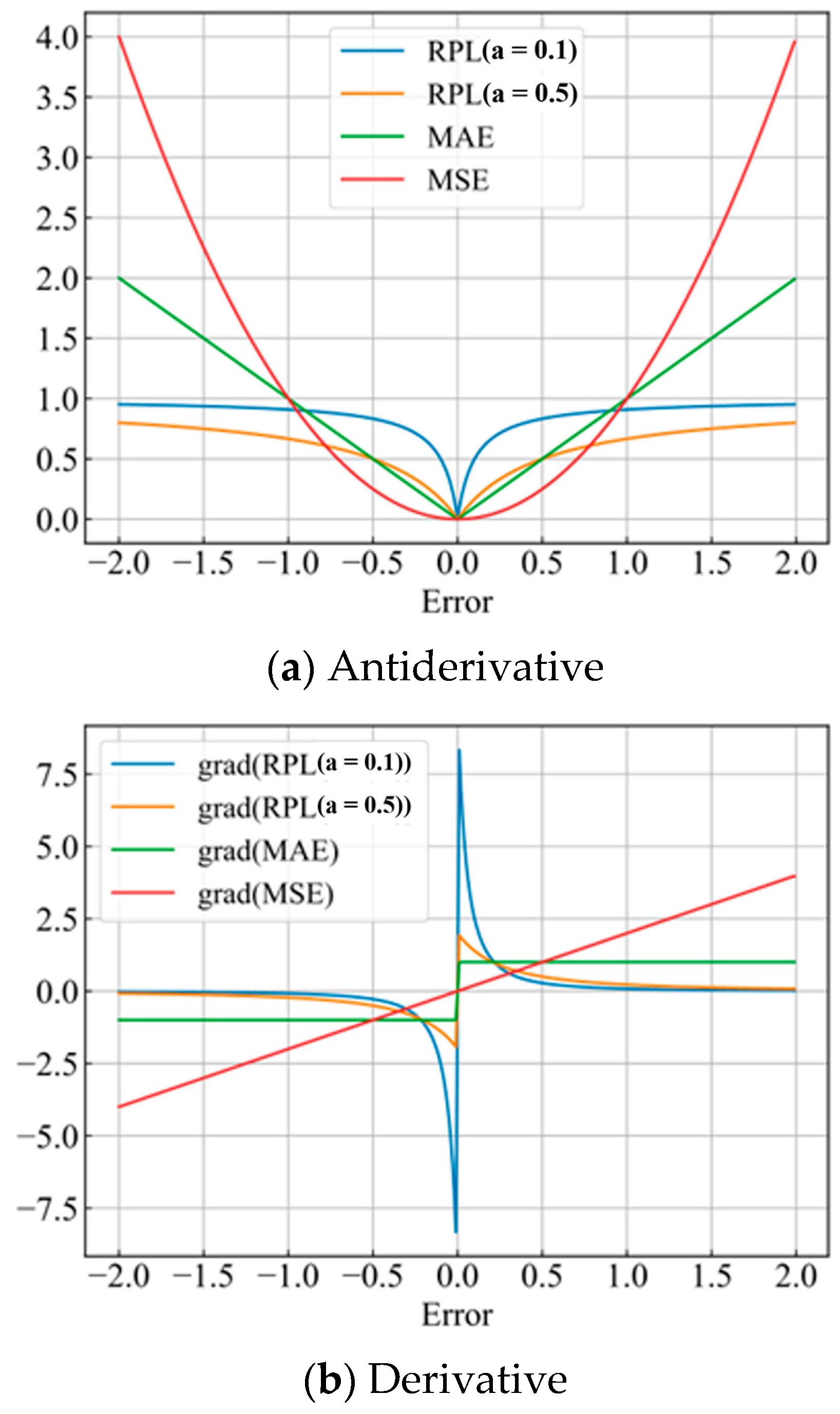

Traditional methods for avoiding overfitting generally include regularization, dropout, early stopping, and cross-validation. In this paper, a novel loss function is designed to avoid overfitting. Since the PIR temperature to be predicted is nonlinear, using the common L1 and L2 norms as model optimization objectives may not accurately capture this nonlinearity. A common approach is to map the data to a higher-dimensional space using a kernel function [33,34], which does not add much computational complexity. Assuming that $\phi$ is a nonlinear mapping, the kernel function can be denoted as follows:

$$K(x_i, x_j) = \langle \phi(x_i), \phi(x_j) \rangle$$

The rational quadratic loss function (RQL) may be used as a mapping function:

where

is a hyperparameter. Thus, the RQL is

where $\hat{y}$ is the predicted value and $y$ is the true value. A Maclaurin expansion [35] of the RQL is conducted:

Note that the lowest-order term of the RQL expansion is of degree 2, so some low-order (linear) information may be lost. Therefore, this study transforms the RQL into a rational primary loss function (RPL). The RPL can be described as follows:

Since the RPL is not differentiable at 0, the Maclaurin expansion cannot be performed directly. Instead, a point in the right neighborhood of 0, (0, c), c > 0, is chosen for the Taylor expansion, with c small enough. The Taylor expansion [36] is approximated using the following equation:

Both low- and high-order terms are present, so the original data can be adequately mapped to each dimension. Figure 1 shows that the gradient of the RPL first increases and then decreases with the error: the gradient is sensitive to the error within a certain range, but when the error is very large (an anomaly), the gradient becomes less sensitive to it, which suppresses the effect of outliers on model optimization. Unlike the MSE and MAE, the RPL greatly reduces the model’s sensitivity to outliers, enhances generalization, and is less prone to overfitting.
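The rise-then-fall gradient behavior described above can be checked numerically. The sketch below assumes a rational quadratic form, $e^2/(e^2 + c)$ with error $e = \hat{y} - y$, purely for illustration; the exact expressions and hyperparameter values used in this study may differ, and the function names are hypothetical. Under this assumed form, the gradient magnitude rises up to $|e| = \sqrt{c/3}$ and then decays toward zero, whereas the MSE gradient keeps growing with the error.

```python
import math


def rational_quadratic_loss(error: float, c: float = 1.0) -> float:
    """Assumed illustrative form e^2 / (e^2 + c); bounded in [0, 1)."""
    return error ** 2 / (error ** 2 + c)


def rational_quadratic_grad(error: float, c: float = 1.0) -> float:
    """Derivative of the assumed form w.r.t. the error: 2*c*e / (e^2 + c)^2."""
    return 2.0 * c * error / (error ** 2 + c) ** 2


def mse_grad(error: float) -> float:
    """Derivative of e^2 w.r.t. the error; grows linearly without bound."""
    return 2.0 * error


if __name__ == "__main__":
    c = 1.0  # hypothetical hyperparameter value
    peak = math.sqrt(c / 3.0)  # error at which the assumed gradient is largest
    for e in (0.1, peak, 1.0, 10.0, 100.0):
        print(f"e={e:8.3f}  rational grad={rational_quadratic_grad(e, c):.5f}  "
              f"MSE grad={mse_grad(e):.1f}")
```

Because the gradient shrinks for very large errors, an anomalous sample pulls on the model weights far less than it would under the MSE.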

In addition, outliers are inevitably encountered during model training. To address this issue, regularization terms that constrain the predicted values are added to the loss function in the form of L1 and L2 constraints. These constraints encourage sparsity of the predicted values, which helps in selecting relevant features and reducing noise interference. By moderating the magnitude of the predictions, they also guard against overfitting, curtail the impact of outliers, and stabilize gradient descent. This strategy improves the model’s interpretability and generalizability, making it more robust to noise and outliers in both training and prediction and more effective in real-world scenarios. However, the coefficients of the regularization terms must be calibrated carefully and kept small enough that they do not overshadow the loss function, which would otherwise result in trivial predictions. Here is the formula for the rational smooth loss function (RSL):

where

and

c are hyperparameters of

, and

n is the length of the prediction sequence.
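As a rough illustration of how a bounded rational error term and L1 and L2 constraints on the predicted values could be combined, the sketch below averages an assumed term $|e_i|/(|e_i| + c)$ over the $n$ points of the prediction sequence and adds two penalty terms. The functional form, the penalty weights `lambda_l1` and `lambda_l2`, their default values, and the function name `rational_smooth_loss_sketch` are all assumptions made for illustration, not the definition used in this study.

```python
from typing import Sequence


def rational_smooth_loss_sketch(
    y_pred: Sequence[float],
    y_true: Sequence[float],
    c: float = 1.0,
    lambda_l1: float = 1e-4,
    lambda_l2: float = 1e-4,
) -> float:
    """Hypothetical RSL-style loss: a bounded rational error term plus small
    L1 and L2 penalties on the predicted values (assumed form)."""
    if len(y_pred) != len(y_true) or len(y_pred) == 0:
        raise ValueError("y_pred and y_true must be non-empty and of equal length")
    n = len(y_pred)
    # Each sample contributes less than 1/n, so one outlier cannot dominate.
    data_term = sum(
        abs(p - t) / (abs(p - t) + c) for p, t in zip(y_pred, y_true)
    ) / n
    l1_penalty = sum(abs(p) for p in y_pred) / n
    l2_penalty = sum(p * p for p in y_pred) / n
    return data_term + lambda_l1 * l1_penalty + lambda_l2 * l2_penalty
```

With large penalty weights the two penalty terms would dominate the data term and push the minimizer toward near-zero predictions, which is why the weights in this sketch default to small values.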

6. Results and Discussion

6.1. Evaluated Metrics

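To fully evaluate the RSL and the performance of the models, this study used common error metrics, including the MAE, the MSE, the root mean square error (RMSE), the mean absolute percentage error (MAPE), and the coefficient of determination (R²). The metrics are defined as follows:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|$$

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right|$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2}$$

where $y_i$, $\hat{y}_i$, and $\bar{y}$ denote the actual temperature, the predicted temperature, and the average of the actual temperatures, respectively, and $n$ is the number of samples.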
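The five metrics can be computed directly from paired lists of actual and predicted temperatures. The following is a minimal sketch using the standard textbook definitions, with MAPE expressed in percent; the function name `regression_metrics` and the sample temperatures are hypothetical and are not taken from the dataset of this study.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """Return MAE, MSE, RMSE, MAPE (percent), and R^2 (standard definitions)."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    # MAPE divides by the true value, so every true value must be non-zero
    # (temperatures in kelvin are strictly positive).
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "MAPE": mape, "R2": r2}


if __name__ == "__main__":
    # Made-up temperatures in kelvin, for illustration only.
    actual = [300.0, 310.0, 320.0, 330.0]
    predicted = [300.5, 309.5, 320.5, 329.5]
    print(regression_metrics(actual, predicted))
```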

6.2. Main Results

To compare the performance of RSL with traditional loss functions, experiments were conducted on the dataset using three prediction models (LSTM, GRU, and BP) and four loss functions (MSE loss, MAE loss, smooth loss, and RSL). Table 5 shows the predictions of each model under the four loss functions, and Table 6 shows the improvement rate of RSL relative to the other loss functions.
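An improvement rate of this kind is commonly computed as the relative reduction of an error metric with respect to a baseline. The sketch below assumes that definition (the helper name `improvement_rate` is hypothetical, and the definition used for Table 6 may differ) and applies it to the two MAE values quoted in Section 6.3: 1.77 K from the literature [11] and 0.29 K for the LSTM with RSL.

```python
def improvement_rate(baseline_error: float, new_error: float) -> float:
    """Relative error reduction in percent: (baseline - new) / baseline * 100."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error * 100.0


if __name__ == "__main__":
    # MAE values in kelvin quoted in Section 6.3 of this article.
    print(f"{improvement_rate(1.77, 0.29):.2f}%")
```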

RSL consistently yields the lowest MAE, MSE, and RMSE across the models tested, which shows its effectiveness in minimizing prediction errors and aligning predictions more closely with actual outcomes. In terms of MAPE, RSL also outperforms the alternative loss functions, indicating that it gauges prediction errors accurately, especially for outliers or data points with significant deviations. RSL achieves higher R² values, approaching 1, across the LSTM, GRU, and BP models, suggesting that it helps the models explain more of the variance in the target variable. Compared with the traditional loss functions, the models using RSL show an average reduction of 77.97%, 93.72%, 76.59%, and 78.27% in MAE, MSE, RMSE, and MAPE, respectively, and the LSTM using RSL reduces the MAE to 0.29 K. The MAE loss performs best among the traditional loss functions, but the models using RSL still show a large improvement over it. The adaptability and robustness of RSL are evident across the models and data characteristics. By mapping nonlinear errors into a reproducing kernel Hilbert space (RKHS), RSL measures errors more accurately in nonlinear space, which supports better model training and results in smaller errors than conventional loss functions.

In summary, the findings underscore the benefits of integrating kernel techniques into predictive deep learning frameworks through the loss function, which notably improves model performance. These outcomes also highlight the need for nonlinear loss functions that can accurately quantify prediction errors on nonlinear datasets and thereby improve prediction precision.

6.3. Visualization of Results

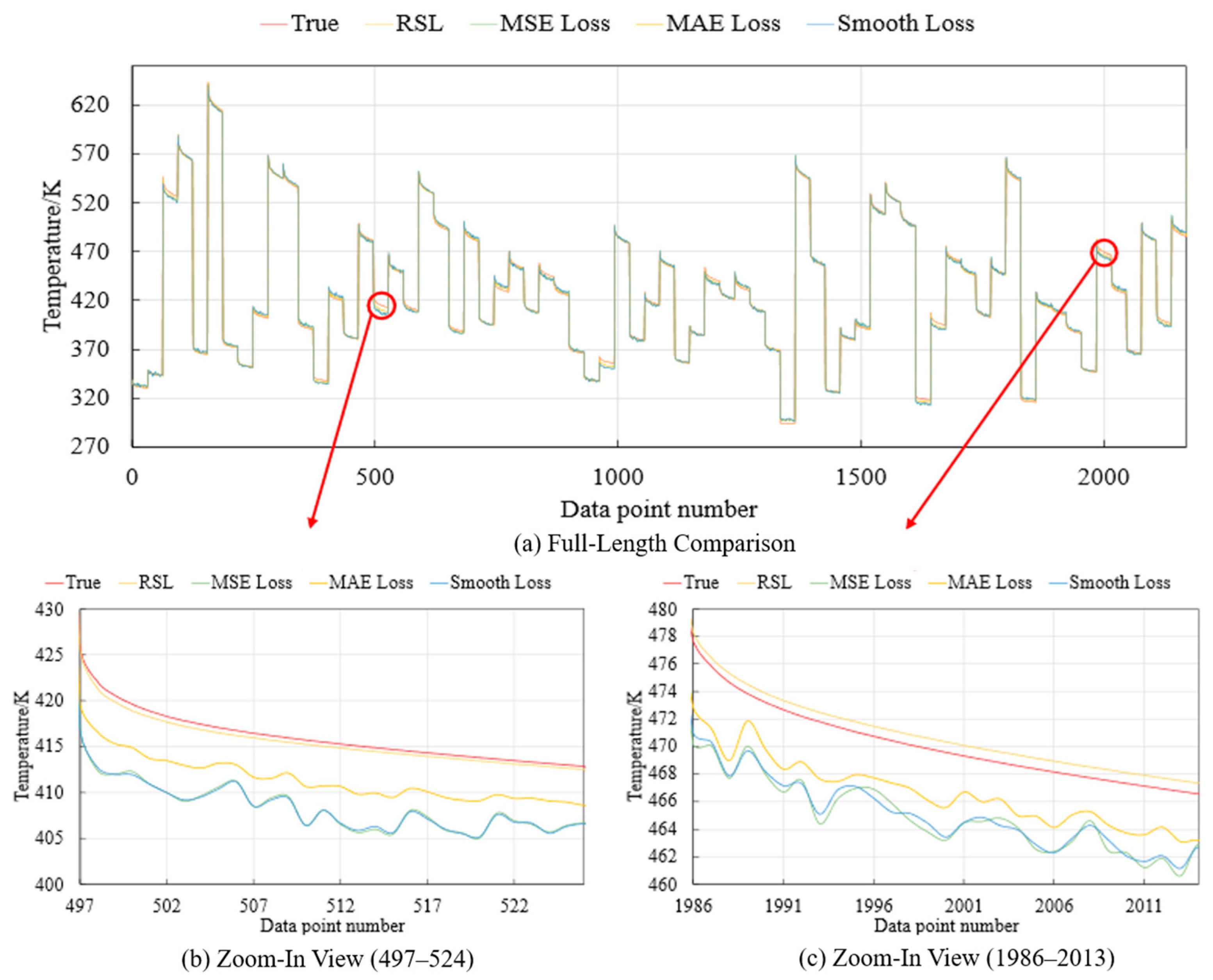

To highlight the advantages of the RSL more clearly, the LSTM predictions obtained with the different loss functions are plotted in Figure 11. The MAE of the LSTM using RSL is 0.29 K, a significant improvement over the MAE of 1.77 K reported in the literature [11].

The LSTM model using the RSL produces a prediction curve that closely follows, and in many places nearly coincides with, the actual values, and it is notably smooth. In contrast, the prediction curves of the LSTM using traditional loss functions are not smooth and deviate substantially from the true values. This observation suggests that the LSTM model with RSL yields more accurate and robust predictions for this dataset. RSL is better suited to the specific features and noise structure of this dataset and is more resistant to the effects of outliers.

6.4. Stability Experiments

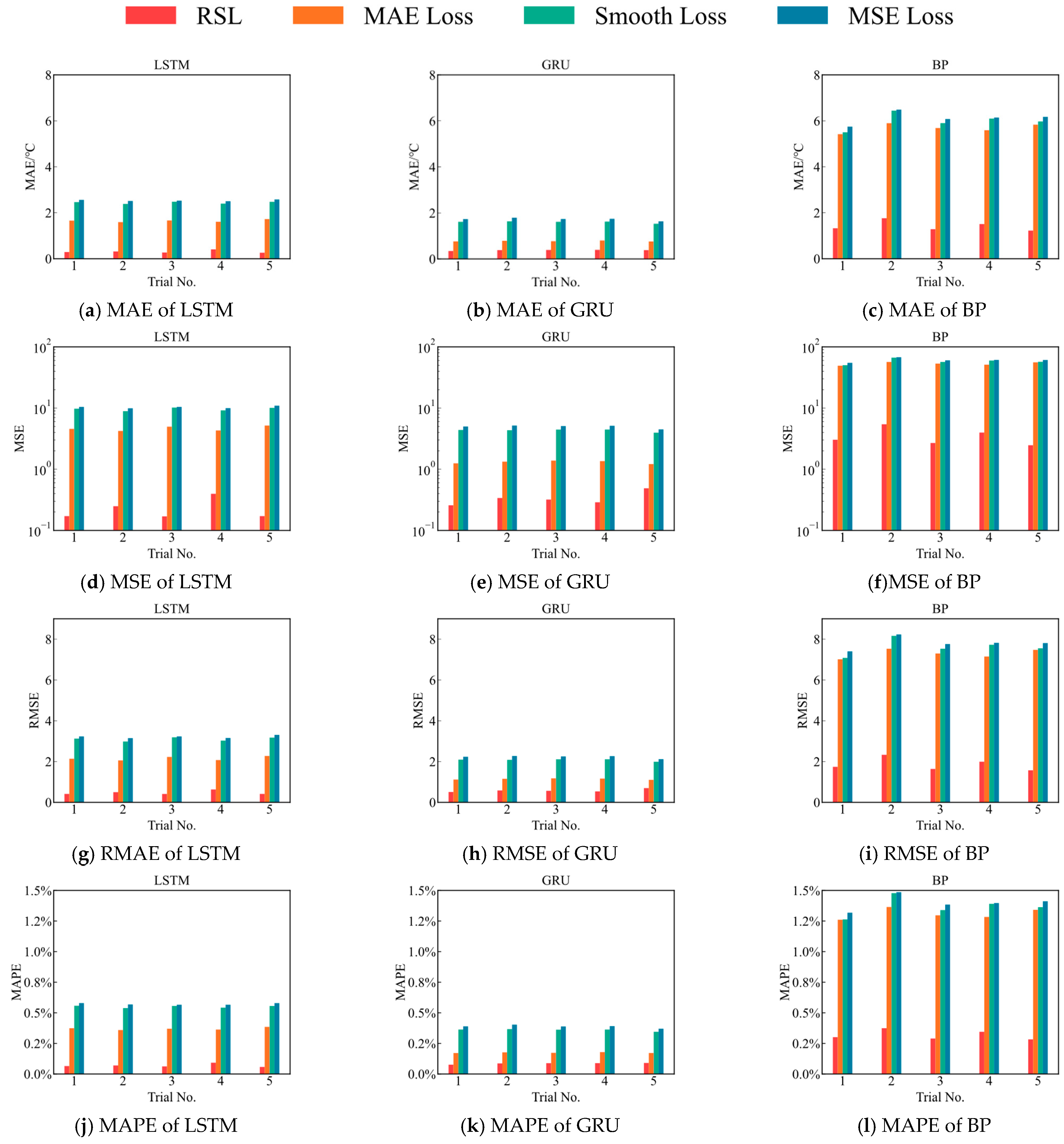

Neural network training is inherently stochastic, owing to aspects such as weight initialization, regularization techniques, and optimization strategies, so the results vary from run to run. To assess the stability of the RSL, a series of experiments was conducted using four loss functions across three models, with identical random seeds applied to each trial to keep the experimental conditions consistent.

Figure 12 compares the actual curve with the prediction curves obtained using the four loss functions, each marked with a different color. The upper subfigure displays the prediction curves for sampling points 0 to 2200, while the lower subfigures are partial magnifications of the upper one: the left part presents sampling points 497 to 524, and the right part presents sampling points 1986 to 2013. The correspondence between the upper and lower subfigures is indicated with red arrows.

Analysis of the results, as depicted in Figure 13, reveals that, across five separate trials, models using the RSL consistently outperformed those using the three traditional loss functions on all evaluated metrics (MAE, MSE, RMSE, and MAPE). This evidence suggests that RSL is more stable than the conventional alternatives.
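One simple way to summarize such repeated trials is to report the mean and standard deviation of a metric over the trials and to check whether one loss function wins in every trial. The sketch below does this for MAE. The per-trial numbers are made-up placeholders rather than results from this study, and the helper names `summarize_trials` and `wins_every_trial` are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List


def summarize_trials(mae_by_loss: Dict[str, List[float]]) -> Dict[str, Dict[str, float]]:
    """Mean and sample standard deviation of MAE over repeated trials, per loss."""
    return {
        name: {"mean": mean(values), "std": stdev(values)}
        for name, values in mae_by_loss.items()
    }


def wins_every_trial(mae_by_loss: Dict[str, List[float]], candidate: str) -> bool:
    """True if `candidate` has the strictly lowest MAE in each trial."""
    others = [values for name, values in mae_by_loss.items() if name != candidate]
    own = mae_by_loss[candidate]
    return all(own[i] < min(o[i] for o in others) for i in range(len(own)))


if __name__ == "__main__":
    # Placeholder MAE values (K) for five trials; NOT measured results.
    trials = {
        "MSE": [1.9, 2.1, 2.0, 1.8, 2.2],
        "MAE": [1.2, 1.4, 1.3, 1.1, 1.5],
        "RSL": [0.3, 0.4, 0.3, 0.3, 0.4],
    }
    print(summarize_trials(trials))
    print(wins_every_trial(trials, "RSL"))
```

A small standard deviation together with a win in every trial is the kind of evidence that supports a stability claim; the mean alone would not distinguish a consistently good loss from one that is good only on average.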