State of Health Estimation for Batteries Based on a Dynamic Graph Pruning Neural Network with a Self-Attention Mechanism

Abstract

1. Introduction

- Lack of explicit structural modeling among degradation features. Many methods treat SOH estimation as a purely temporal problem and ignore the dependencies among voltage, current, temperature, and capacity. Our model builds a graph within each feature window, in which nodes represent features and edges encode their statistical and physical relationships. It then performs topology-aware propagation with attention to capture these dependencies.

- Insufficient focus on informative degradation phases. Uniform weighting across time steps weakens sensitivity to rapid-fading regimes and other salient intervals. The self-attention mechanism reweights time steps within each window so that degradation-salient intervals contribute more to the final estimate, which improves sensitivity to phase-specific patterns.

- Over-reliance on temporal ordering at the expense of relational patterns. Sequential encoders alone do not preserve the cross-feature structure that is critical for precise SOH inference. The proposed model jointly encodes temporal cues and relational topology through dynamic graph propagation coupled with attention, which preserves inter-feature interactions beyond time ordering and yields more informative representations.

- High computational cost in attention-enhanced recurrent models. Multi-layer recurrent processing combined with attention increases the parameter count and the number of floating-point operations, which limits suitability for embedded deployment. An attention-guided structural pruning module learns a sparse adjacency and removes low-utility edges, reducing computation and parameter footprint while maintaining accuracy. A GCN baseline trained with the same hyperparameters, together with an ablation that disables pruning, isolates the efficiency and accuracy contributions of the pruning design.
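The first three points describe a per-window pipeline: self-attention across the window's time steps, a graph whose nodes are the features, and topology-aware propagation over that graph. The sketch below is a minimal NumPy illustration under stated assumptions, not the DynaGPNN-SAM implementation. Edge weights are taken as absolute Pearson correlations between feature series (this section does not give the paper's statistical and physical edge definition), propagation uses the standard symmetric-normalized GCN rule of Kipf and Welling, and all weight matrices are random placeholders rather than trained parameters. The function names are hypothetical.

```python
import numpy as np

def temporal_self_attention(window, w_q, w_k):
    """Scaled dot-product self-attention across the time steps of one window.

    Values are the raw feature rows, so the output keeps the (T, F) shape and
    each row is an attention-weighted mix of the window's time steps.
    """
    q, k = window @ w_q, window @ w_k                # (T, d) queries and keys
    scores = q @ k.T / np.sqrt(q.shape[1])           # (T, T) time-step affinities
    scores -= scores.max(axis=1, keepdims=True)      # stabilize the softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)          # each row sums to 1
    return attn @ window, attn

def feature_graph(window):
    """Adjacency over feature nodes from |Pearson correlation| (assumed edge weight)."""
    corr = np.corrcoef(window, rowvar=False)         # (F, F), columns are features
    adj = np.abs(np.nan_to_num(corr))                # a constant feature yields NaN -> 0
    np.fill_diagonal(adj, 0.0)                       # self-loops are added in gcn_layer
    return adj

def gcn_layer(adj, h, weight):
    """Kipf-Welling propagation: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_norm @ h @ weight, 0.0)

rng = np.random.default_rng(0)
T, F, D = 10, 4, 8            # window length 10 (parameter table); D is hypothetical
window = rng.normal(size=(T, F))   # synthetic stand-in for voltage, current, temperature, capacity
attended, attn = temporal_self_attention(window, rng.normal(size=(F, D)), rng.normal(size=(F, D)))
adj = feature_graph(window)
h = gcn_layer(adj, attended.T, rng.normal(size=(T, D)))   # each node carries its attended series
print(adj.shape, attn.shape, h.shape)   # (4, 4) (10, 10) (4, 8)
```

Here the attention matrix `attn` is what reweights time steps, while `adj` carries the cross-feature structure; ordering the two steps this way is a design choice of the sketch.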

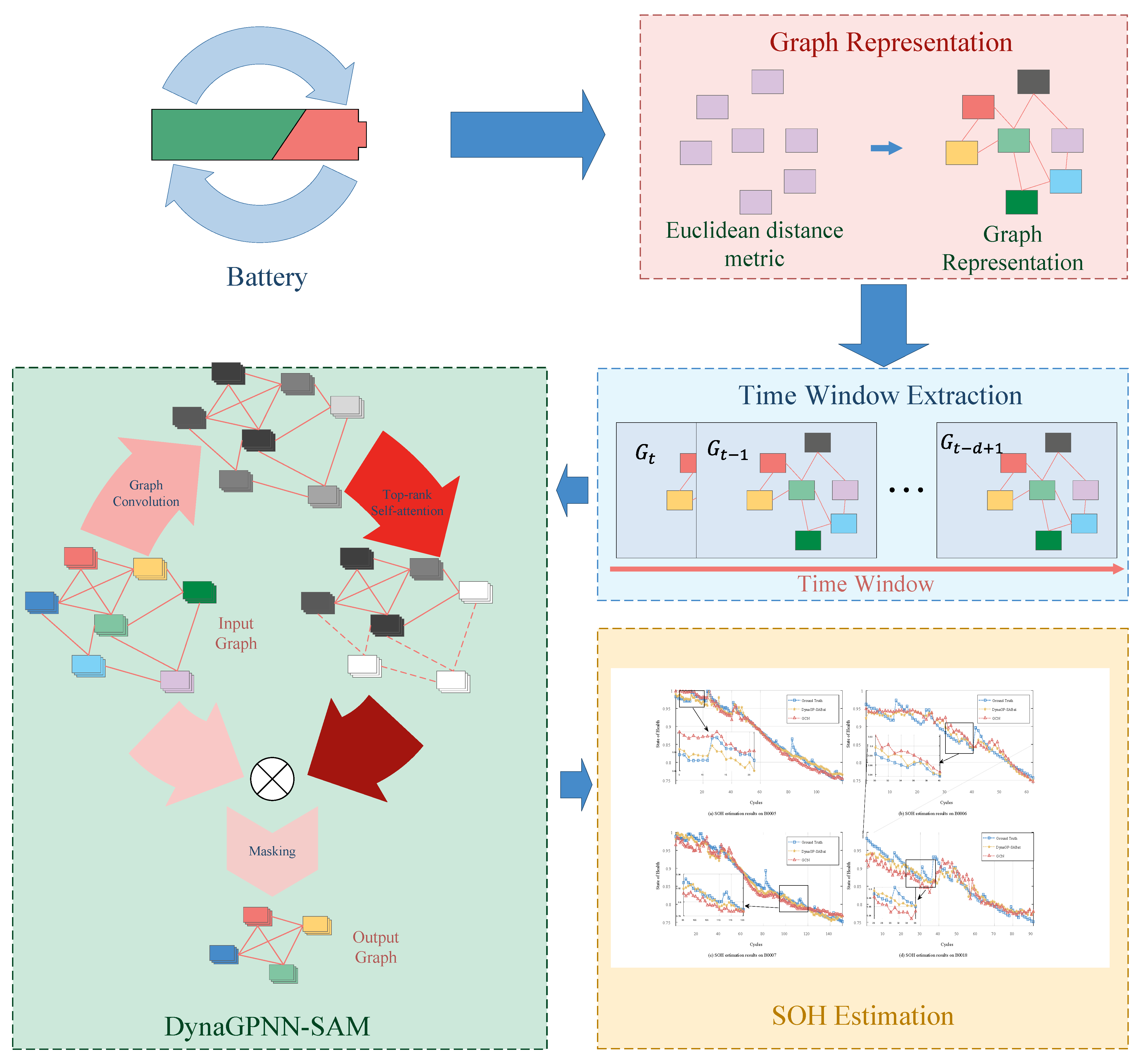

- Topology-first formulation for SOH. We introduce DynaGPNN-SAM, which transforms per-window features into a graph and performs topology-aware propagation. Unlike recent graph transformer methods that rely primarily on global attention for token mixing, our formulation treats feature topology as the modeling primitive and learns a window-specific adjacency that captures cross-feature dependencies beyond time ordering.

- Attention as a structural operator via pruning. We propose an attention-guided structural pruning mechanism that uses attention to select and retain a compact subgraph by removing low-utility edges, which yields interpretable salient subgraphs and reduces inference cost. This differs from graph transformers, where attention serves as soft weighting rather than as an explicit selector of graph structure. The effect of pruning is isolated through a GCN control and a no-pruning ablation under identical settings in Section 4.3.

- Phase-aware temporal emphasis coupled with structure. A self-attention module reweights time steps within each window to highlight degradation-salient phases while preserving the learned relational topology, offering a unified treatment of temporal saliency and cross-feature structure that complements transformer-style positional encoding.

- Complexity and empirical validation. Inference cost scales with the number of active edges after pruning rather than quadratically with the number of nodes, as in full attention, which clarifies the efficiency implications. Under a unified protocol on the NASA dataset, the model outperforms strong baselines, including CNN, LSTM, and GCN, with controls reported in Section 4.4.
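The pruning and complexity points can be illustrated with a small sketch. The following choices are assumptions for illustration, not the paper's stated criterion: each candidate edge is scored by scaled dot-product attention between node embeddings, scores are symmetrized, the `keep` highest-scoring edges are retained globally, and neighbors are aggregated with an unnormalized sum over the resulting edge list. The helper names `attention_edge_scores`, `prune_edges`, and `sparse_propagate` are hypothetical. What the sketch shows is the point made above: after pruning, propagation touches only the active edges, so its cost grows with the edge count rather than with every node pair.

```python
import numpy as np

def attention_edge_scores(h, w_q, w_k):
    """Scaled dot-product logits between node embeddings (assumed edge-utility score)."""
    q, k = h @ w_q, h @ w_k
    return q @ k.T / np.sqrt(q.shape[1])

def prune_edges(adj, scores, keep):
    """Retain the `keep` highest-scoring undirected edges that exist in `adj`."""
    n = adj.shape[0]
    sym = 0.5 * (scores + scores.T)                  # one utility value per undirected edge
    rows, cols = np.triu_indices(n, k=1)             # visit each node pair once
    candidates = sorted(
        ((sym[i, j], i, j) for i, j in zip(rows, cols) if adj[i, j] > 0),
        reverse=True,
    )
    pruned = np.zeros_like(adj)
    for _, i, j in candidates[:keep]:
        pruned[i, j] = pruned[j, i] = adj[i, j]      # keep the edge in both directions
    return pruned

def sparse_propagate(pruned, h):
    """Unnormalized neighbor sum over the edge list; work is proportional to active edges."""
    src, dst = np.nonzero(pruned)                    # each kept edge appears once per direction
    out = h.copy()                                   # self-loop contribution
    np.add.at(out, src, pruned[src, dst][:, None] * h[dst])
    return out, len(src)

rng = np.random.default_rng(1)
F, D = 4, 8                                          # 4 feature nodes, hypothetical embedding size 8
h = rng.normal(size=(F, D))
adj = np.ones((F, F)) - np.eye(F)                    # fully connected feature graph
scores = attention_edge_scores(h, rng.normal(size=(D, D)), rng.normal(size=(D, D)))
pruned = prune_edges(adj, scores, keep=3)
out, active = sparse_propagate(pruned, h)
print(int((adj > 0).sum()) // 2, int((pruned > 0).sum()) // 2, active)   # 6 3 6
```

With four feature nodes the dense graph has six undirected edges; keeping three halves the messages passed per layer, and the retained edge set doubles as the interpretable salient subgraph.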

2. Problem Formulation

3. DynaGPNN-SAM Modeling

3.1. Graph Convolutional Networks

3.2. Dynamic Graph Pruning with Self-Attention Mechanism

3.3. Multi-Layer Stacking and End-to-End Prediction

3.4. Adam Optimization Algorithm

4. Experimental Study

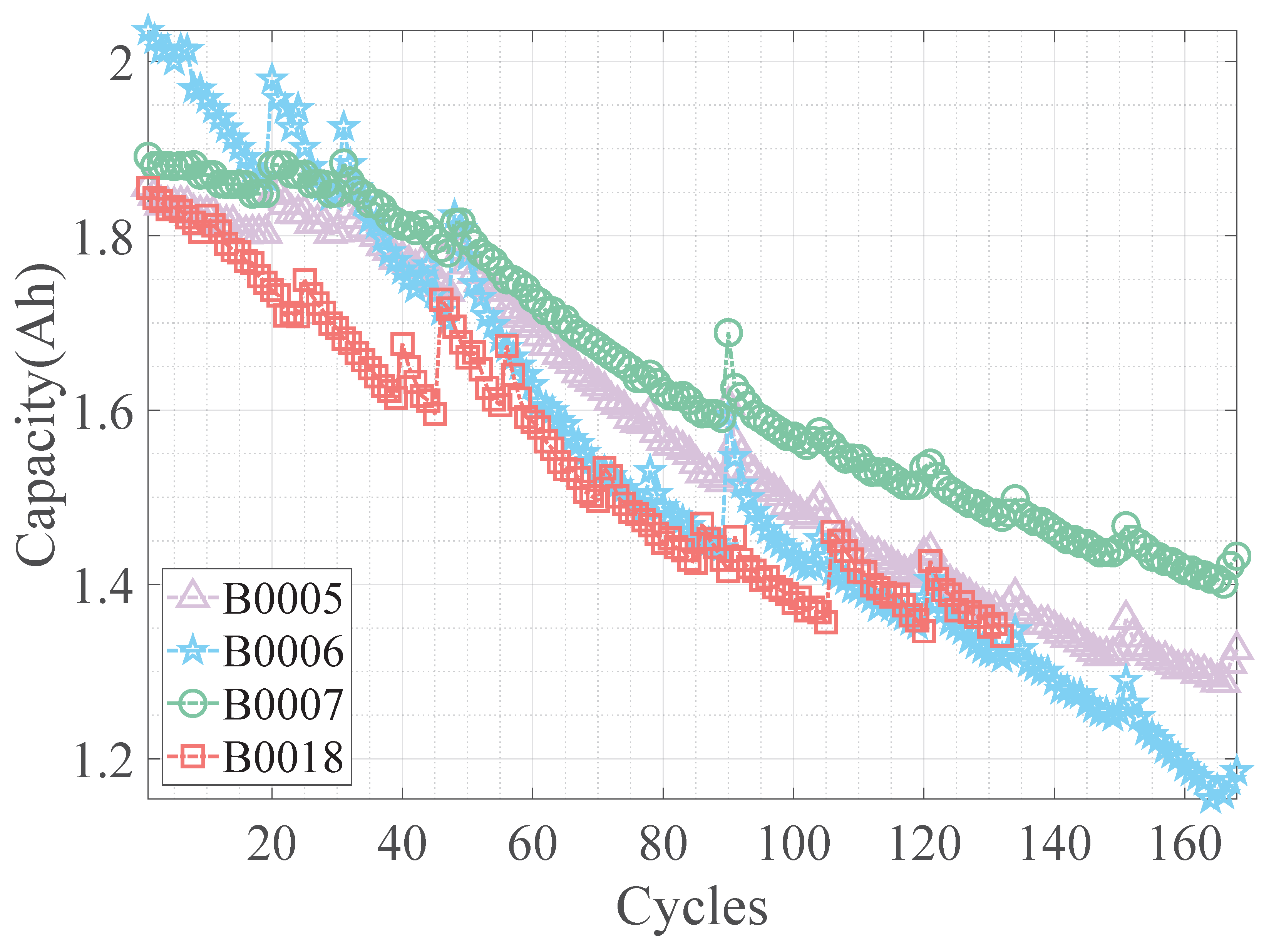

4.1. Dataset Description

4.2. Implementation Details of DynaGPNN-SAM

4.3. Ablation Study Design

4.4. Experimental Results and Analysis

- Traditional Machine Learning Methods: Support Vector Machine (SVM), Backpropagation Neural Network (BP), Wavelet Neural Network (WNN), and Gaussian Process Regression (GPR).

- Deep Learning Models: Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), fusion-based models, and SambaMixer [52].

- Gaussian Process-based Methods: QGPFR (Gaussian process functional regression with a quadratic polynomial mean function), LGPFR (linear Gaussian process functional regression), Combination QGPFR, and Combination LGPFR.

- Graph Neural Networks: Graph Convolutional Network (GCN).

- Hybrid Optimization Models: Genetic Algorithm-based WNN (GA-WNN).

- Physics-informed Neural Networks: PINN4SOH [53].

4.5. Advantages of the Proposed Method

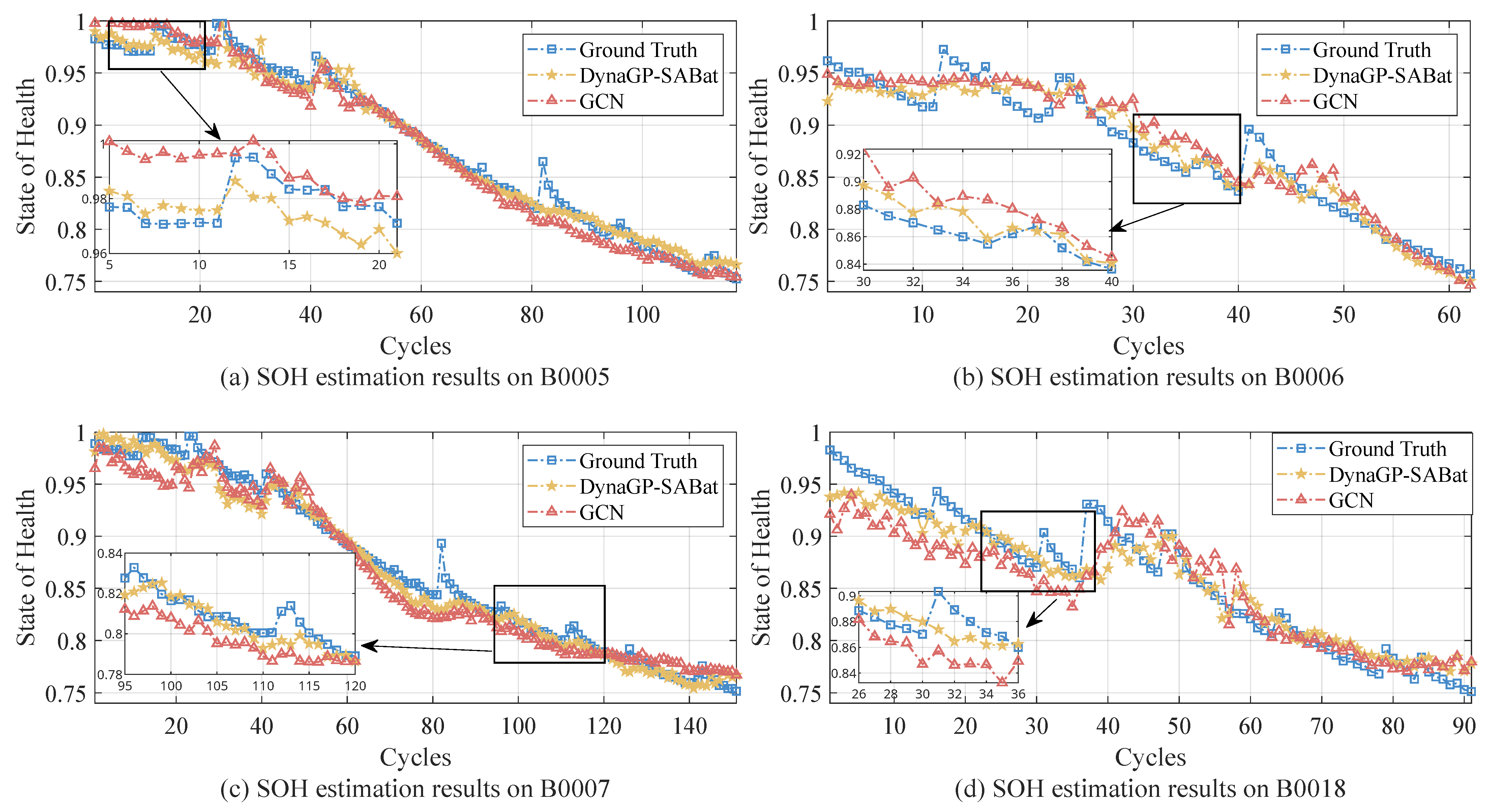

- Comprehensive Integration of Topological and Feature Information: Unlike conventional time-series models that treat battery degradation as a simple sequential process, DynaGPNN-SAM explicitly models the structural relationships among battery operational features through a graph representation. As demonstrated in Figure 5, this approach enables the model to capture both temporal dependencies and spatial (cross-feature) correlations among voltage, current, temperature, and capacity simultaneously. The graph convolutional layers encode the non-Euclidean relationships between these features, while the self-attention mechanism dynamically highlights nodes corresponding to critical degradation phases. This dual capability explains why our method reduces RMSE by 30.3% and 28.6% on batteries B0007 and B0018, respectively, compared with the standard GCN (Table 4), and why it performs particularly well during rapid degradation phases, where traditional methods exhibit significant deviations.

- Superior Handling of Nonlinear Degradation Patterns and Capacity Regeneration: One of the most challenging aspects of battery SOH estimation is accurately modeling nonlinear degradation trajectories, particularly the “knee point” phenomenon, where degradation accelerates rapidly. Traditional methods often struggle with these nonlinearities and with capacity regeneration events, which introduce uncertainty into predictions. DynaGPNN-SAM addresses this challenge through its attention-guided node selection process, which automatically identifies and prioritizes segments containing knee points and regeneration events. As shown in Figure 5c, our model maintains high accuracy during the rapid degradation phase of battery B0006 (cycles 30–40), where competing methods exhibit significant deviations. Quantitatively, DynaGPNN-SAM achieves an RMSE of 0.0057 on B0006 during this phase, compared with 0.0125 for GCN, a 54.4% reduction.

- End-to-End Learning Framework with Minimal Feature Engineering: The proposed method eliminates the need for manual feature extraction and selection, which has been a significant bottleneck in previous SOH estimation approaches. Traditional methods often require domain-specific knowledge to identify relevant health indicators (HIs) from raw battery data, a process that is both time-consuming and prone to information loss. DynaGPNN-SAM, by contrast, processes raw feature sequences directly through its graph construction mechanism, automatically learning which features and temporal segments contribute most significantly to SOH determination.
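The percentages quoted in the first two points follow from relative RMSE reduction, (baseline − proposed) / baseline. The short check below uses only the RMSE pairs quoted in those points; the helper name is hypothetical.

```python
def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers an error metric relative to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline

# (GCN RMSE, DynaGPNN-SAM RMSE) pairs quoted in the points above
pairs = {
    "B0007": (0.0175, 0.0122),
    "B0018": (0.0287, 0.0205),
    "B0006, cycles 30-40": (0.0125, 0.0057),
}
reductions = {name: round(relative_reduction(*pair), 1) for name, pair in pairs.items()}
print(reductions)   # {'B0007': 30.3, 'B0018': 28.6, 'B0006, cycles 30-40': 54.4}
```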

5. Conclusions and Future Perspectives

5.1. Conclusions

5.2. Perspectives

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Massaoudi, M.; Abu-Rub, H.; Ghrayeb, A. Advancing lithium-ion battery health prognostics with deep learning: A review and case study. IEEE Open J. Ind. Appl. 2024, 5, 43–62.

2. Hannan, M.A.; Hoque, M.M.; Hussain, A.; Yusof, Y.; Ker, P.J. State-of-the-art and energy management system of lithium-ion batteries in electric vehicle applications: Issues and recommendations. IEEE Access 2018, 6, 19362–19378.

3. Yang, B.; Zheng, R.; Han, Y.; Huang, J.; Li, M.; Shu, H.; Su, S.; Guo, Z. Recent Advances in Fault Diagnosis Techniques for Photovoltaic Systems: A Critical Review. Prot. Control Mod. Power Syst. 2024, 9, 36–59.

4. Zhang, M.; Han, Y.; Liu, Y.; Zalhaf, A.S.; Zhao, E.; Mahmoud, K.; Darwish, M.M.F.; Blaabjerg, F. Multi-Timescale Modeling and Dynamic Stability Analysis for Sustainable Microgrids: State-of-the-Art and Perspectives. Prot. Control Mod. Power Syst. 2024, 9, 1–35.

5. International Energy Agency. Global EV Outlook 2025. Section: Electric Vehicle Batteries. 2025. Available online: https://www.iea.org/reports/global-ev-outlook-2025/electric-vehicle-batteries (accessed on 17 September 2025).

6. Kiruthiga, B.; Karthick, R.; Manju, I.; Kondreddi, K. Optimizing Harmonic Mitigation for Smooth Integration of Renewable Energy: A Novel Approach Using Atomic Orbital Search and Feedback Artificial Tree Control. Prot. Control Mod. Power Syst. 2024, 9, 160–176.

7. Ria, A.; Dini, P. A compact overview on Li-ion batteries characteristics and battery management systems integration for automotive applications. Energies 2024, 17, 5992.

8. Miao, Y.; Gao, Y.; Liu, X.; Liang, Y.; Liu, L. Analysis of State-of-Charge Estimation Methods for Li-Ion Batteries Considering Wide Temperature Range. Energies 2025, 18, 1188.

9. Zhou, Z.; Liu, Y.; You, M.; Xiong, R.; Zhou, X. Two-stage aging trajectory prediction of LFP lithium-ion battery based on transfer learning with the cycle life prediction. Green Energy Intell. Transp. 2022, 1, 100008.

10. Wang, T.; Zhu, Y.; Zhao, W.; Gong, Y.; Zhang, Z.; Gao, W.; Shang, Y. Capacity degradation analysis and knee point prediction for lithium-ion batteries. Green Energy Intell. Transp. 2024, 3, 100171.

11. Liu, J.; Duan, Q.; Qi, K.; Liu, Y.; Sun, J.; Wang, Z.; Wang, Q. Capacity fading mechanisms and state of health prediction of commercial lithium-ion battery in total lifespan. J. Energy Storage 2022, 46, 103910.

12. Warner, J.T. The Handbook of Lithium-Ion Battery Pack Design: Chemistry, Components, Types, and Terminology; Elsevier: Amsterdam, The Netherlands, 2024.

13. Goud, J.S.; Kalpana, R.; Singh, B. An online method of estimating state of health of a Li-ion battery. IEEE Trans. Energy Convers. 2020, 36, 111–119.

14. Stroe, D.I.; Schaltz, E. Lithium-ion battery state-of-health estimation using the incremental capacity analysis technique. IEEE Trans. Ind. Appl. 2019, 56, 678–685.

15. Ouyang, T.; Xu, P.; Lu, J.; Hu, X.; Liu, B.; Chen, N. Coestimation of state-of-charge and state-of-health for power batteries based on multithread dynamic optimization method. IEEE Trans. Ind. Electron. 2021, 69, 1157–1166.

16. Zhang, Q.; Huang, C.G.; Li, H.; Feng, G.; Peng, W. Electrochemical impedance spectroscopy based state-of-health estimation for lithium-ion battery considering temperature and state-of-charge effect. IEEE Trans. Transp. Electrif. 2022, 8, 4633–4645.

17. Ranga, M.R.; Aduru, V.R.; Krishna, N.V.; Rao, K.D.; Dawn, S.; Alsaif, F.; Alsulamy, S.; Ustun, T.S. An unscented Kalman filter-based robust state of health prediction technique for lithium ion batteries. Batteries 2023, 9, 376.

18. Zhang, J.; Jiang, Y.; Li, X.; Huo, M.; Luo, H.; Yin, S. An adaptive remaining useful life prediction approach for single battery with unlabeled small sample data and parameter uncertainty. Reliab. Eng. Syst. Saf. 2022, 222, 108357.

19. Wang, S.; Gao, H.; Takyi-Aninakwa, P.; Guerrero, J.M.; Fernandez, C.; Huang, Q. Improved Multiple Feature-Electrochemical Thermal Coupling Modeling of Lithium-Ion Batteries at Low-Temperature with Real-Time Coefficient Correction. Prot. Control Mod. Power Syst. 2024, 9, 157–173.

20. Li, N.; He, F.; Ma, W.; Wang, R.; Jiang, L.; Zhang, X. An indirect state-of-health estimation method based on improved genetic and back propagation for online lithium-ion battery used in electric vehicles. IEEE Trans. Veh. Technol. 2022, 71, 12682–12690.

21. Zhang, J.; Jiang, Y.; Li, X.; Luo, H.; Yin, S.; Kaynak, O. Remaining useful life prediction of lithium-ion battery with adaptive noise estimation and capacity regeneration detection. IEEE/ASME Trans. Mechatron. 2022, 28, 632–643.

22. Wu, J.; Cui, X.; Meng, J.; Peng, J.; Lin, M. Data-driven transfer-stacking-based state of health estimation for lithium-ion batteries. IEEE Trans. Ind. Electron. 2023, 71, 604–614.

23. Zhou, L.; Zhao, Y.; Li, D.; Wang, Z. State-of-health estimation for LiFePO4 battery system on real-world electric vehicles considering aging stage. IEEE Trans. Transp. Electrif. 2021, 8, 1724–1733.

24. Qian, Q.; Wen, Q.; Tang, R.; Qin, Y. DG-Softmax: A new domain generalization intelligent fault diagnosis method for planetary gearboxes. Reliab. Eng. Syst. Saf. 2025, 260, 111057.

25. Zhang, J.; Tian, J.; Alcaide, A.M.; Leon, J.I.; Vazquez, S.; Franquelo, L.G.; Luo, H.; Yin, S. Lifetime extension approach based on the Levenberg–Marquardt neural network and power routing of DC–DC converters. IEEE Trans. Power Electron. 2023, 38, 10280–10291.

26. Zhang, J.; Tian, J.; Yan, P.; Wu, S.; Luo, H.; Yin, S. Multi-hop graph pooling adversarial network for cross-domain remaining useful life prediction: A distributed federated learning perspective. Reliab. Eng. Syst. Saf. 2024, 244, 109950.

27. Tian, J.; Jiang, Y.; Zhang, J.; Luo, H.; Yin, S. A novel data augmentation approach to fault diagnosis with class-imbalance problem. Reliab. Eng. Syst. Saf. 2024, 243, 109832.

28. Bracale, A.; De Falco, P.; Di Noia, L.P.; Rizzo, R. Probabilistic state of health and remaining useful life prediction for Li-ion batteries. IEEE Trans. Ind. Appl. 2022, 59, 578–590.

29. Lin, M.; You, Y.; Meng, J.; Wang, W.; Wu, J.; Stroe, D.I. Lithium-ion batteries SOH estimation with multimodal multilinear feature fusion. IEEE Trans. Energy Convers. 2023, 38, 2959–2968.

30. Gong, Q.; Wang, P.; Cheng, Z. An encoder-decoder model based on deep learning for state of health estimation of lithium-ion battery. J. Energy Storage 2022, 46, 103804.

31. Cai, L.; Cui, N.; Jin, H.; Meng, J.; Yang, S.; Peng, J.; Zhao, X. A unified deep learning optimization paradigm for lithium-ion battery state-of-health estimation. IEEE Trans. Energy Convers. 2023, 39, 589–600.

32. Bamati, S.; Chaoui, H. Lithium-ion batteries long horizon health prognostic using machine learning. IEEE Trans. Energy Convers. 2021, 37, 1176–1186.

33. Jia, K.; Zhao, L.; Qin, P. An Energy-Based Method for Predicting Battery Aging using Newly Constructed Features in Fractal Gradient-Enhanced LSTM Networks. IEEE Trans. Transp. Electrif. 2025, 11, 11497–11509.

34. Shu, X.; Shen, J.; Li, G.; Zhang, Y.; Chen, Z.; Liu, Y. A flexible state-of-health prediction scheme for lithium-ion battery packs with long short-term memory network and transfer learning. IEEE Trans. Transp. Electrif. 2021, 7, 2238–2248.

35. Liu, K.; Shang, Y.; Ouyang, Q.; Widanage, W.D. A data-driven approach with uncertainty quantification for predicting future capacities and remaining useful life of lithium-ion battery. IEEE Trans. Ind. Electron. 2020, 68, 3170–3180.

36. Zhang, H.; Sun, H.; Kang, L.; Zhang, Y.; Wang, L.; Wang, K. Prediction of Health Level of Multiform Lithium Sulfur Batteries Based on Incremental Capacity Analysis and an Improved LSTM. Prot. Control Mod. Power Syst. 2024, 9, 21–31.

37. Zhang, Z.; Jeong, Y.; Jang, J.; Lee, C.G. A pattern-driven stochastic degradation model for the prediction of remaining useful life of rechargeable batteries. IEEE Trans. Ind. Inform. 2022, 18, 8586–8594.

38. Zhang, J.; Huang, C.; Chow, M.Y.; Li, X.; Tian, J.; Luo, H.; Yin, S. A data-model interactive remaining useful life prediction approach of lithium-ion batteries based on PF-BiGRU-TSAM. IEEE Trans. Ind. Inform. 2023, 20, 1144–1154.

39. Wang, L.; Zhang, W.; Li, W.; Ke, X. DGAT: Dynamic Graph Attention-Transformer network for battery state of health multi-step prediction. Energy 2025, 330, 136876.

40. Li, Z.; Liu, Y.; Zhou, C.; Liu, X.; Pan, X.; Cao, B.; Wu, X. Transformer-based Graph Neural Networks for Battery Range Prediction in AIoT Battery-Swap Services. In Proceedings of the 2024 IEEE International Conference on Web Services (ICWS), Shenzhen, China, 7–13 July 2024; pp. 1168–1176.

41. Xing, C.; Liu, H.; Zhang, Z.; Wang, J.; Wang, J. Enhancing Lithium-Ion Battery Health Predictions by Hybrid-Grained Graph Modeling. Sensors 2024, 24, 4185.

42. Li, Y.; Tu, L.; Zhang, C. A State-of-Health Estimation Method for Lithium Batteries Based on Incremental Energy Analysis and Bayesian Transformer. J. Electr. Comput. Eng. 2024, 2024, 5822106.

43. Lv, X.; Cheng, Y.; Ma, S.; Jiang, H. State of health estimation method based on real data of electric vehicles using federated learning. Int. J. Electrochem. Sci. 2024, 19, 100591.

44. Zhang, Z.; Wang, Y.; Ruan, X.; Zhang, X. A federated transfer learning approach for lithium-ion battery lifespan early prediction considering privacy preservation. J. Energy Storage 2024, 102, 114153.

45. Thelen, A.; Huan, X.; Paulson, N.; Onori, S.; Hu, Z.; Hu, C. Probabilistic machine learning for battery health diagnostics and prognostics—Review and perspectives. npj Mater. Sustain. 2024, 2, 14.

46. Zhang, R.; Ji, C.; Zhou, X.; Liu, T.; Jin, G.; Pan, Z.; Liu, Y. Capacity estimation of lithium-ion batteries with uncertainty quantification based on temporal convolutional network and Gaussian process regression. Energy 2024, 297, 131154.

47. Ke, Y.; Long, M.; Yang, F.; Peng, W. A Bayesian deep learning pipeline for lithium-ion battery SOH estimation with uncertainty quantification. Qual. Reliab. Eng. Int. 2024, 40, 406–427.

48. Shadi, M.R.; Mirshekali, H.; Shaker, H.R. Explainable artificial intelligence for energy systems maintenance: A review on concepts, current techniques, challenges, and prospects. Renew. Sustain. Energy Rev. 2025, 216, 115668.

49. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

50. Tian, J.; Zhang, J.; Luo, H.; Huang, C.; Chow, M.Y.; Jiang, Y.; Yin, S. A Feature Extraction and Analysis Method for Battery Health Monitoring. In Proceedings of the 2024 IEEE 33rd International Symposium on Industrial Electronics (ISIE), Ulsan, Republic of Korea, 18–21 June 2024; pp. 1–6.

51. Huang, S.C.; Tseng, K.H.; Liang, J.W.; Chang, C.L.; Pecht, M.G. An online SOC and SOH estimation model for lithium-ion batteries. Energies 2017, 10, 512.

52. Olalde-Verano, J.I.; Kirch, S.; Pérez-Molina, C.; Martín, S. SambaMixer: State of Health Prediction of Li-Ion Batteries Using Mamba State Space Models. IEEE Access 2025, 13, 2313–2327.

53. Wang, F.; Zhai, Z.; Zhao, Z.; Di, Y.; Chen, X. Physics-informed neural network for lithium-ion battery degradation stable modeling and prognosis. Nat. Commun. 2024, 15, 4332.

54. Chang, C.; Wang, Q.; Jiang, J.; Wu, T. Lithium-ion battery state of health estimation using the incremental capacity and wavelet neural networks with genetic algorithm. J. Energy Storage 2021, 38, 102570.

55. Liu, D.; Pang, J.; Zhou, J.; Peng, Y.; Pecht, M. Prognostics for state of health estimation of lithium-ion batteries based on combination Gaussian process functional regression. Microelectron. Reliab. 2013, 53, 832–839.

56. Jiang, Y.; Zhang, J.; Xia, L.; Liu, Y. State of health estimation for lithium-ion battery using empirical degradation and error compensation models. IEEE Access 2020, 8, 123858–123868.

| Method | Strengths | Limitations |
|---|---|---|
| Coulomb counting | Simple and interpretable charge balance; suitable for real-time implementation at low computational cost when current sensing is reliable | Sensitive to current sensor bias and drift; cumulative integration error; limited ability to reflect dynamic degradation states |
| OCV analysis via incremental capacity analysis | Physically grounded voltage landmarks and robust capacity fade indicators after appropriate smoothing | Requires near-equilibrium segments; reduced applicability under highly dynamic electric vehicle duty cycles |
| OCV-based joint estimation of SOC and SOH | Joint inference reduces propagation of SOC errors into SOH and improves precision through coordinated models | Increased implementation complexity; requires model selection across operating regimes |
| EIS-assisted equivalent circuit modeling | Interpretable parameters combined with frequency-domain sensitivity; adaptable across temperature and SOC after calibration | Requires impedance instrumentation and careful calibration; additional acquisition time and setup effort |
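The Coulomb counting row can be made concrete with a short sketch. It assumes SOH is defined as measured discharge capacity divided by rated capacity, and it uses a rated capacity of 2.0 Ah purely as a placeholder (substitute the cell's datasheet value). The function names are hypothetical. The second case reads the same discharge through a constant 20 mA sensor offset to show how bias accumulates into the capacity estimate, which is the first limitation listed in the table.

```python
import numpy as np

def coulomb_count_ah(time_s, current_a):
    """Integrate current over time with the trapezoidal rule; returns charge in Ah.

    The current magnitude is used because discharge sign conventions differ.
    """
    t = np.asarray(time_s, dtype=float)
    i = np.abs(np.asarray(current_a, dtype=float))
    charge_as = np.sum(0.5 * (i[1:] + i[:-1]) * np.diff(t))   # ampere-seconds
    return charge_as / 3600.0

def soh_from_capacity(capacity_ah, rated_capacity_ah=2.0):
    """SOH as measured capacity over rated capacity (2.0 Ah is an assumed rating)."""
    return capacity_ah / rated_capacity_ah

t = np.arange(0.0, 3241.0, 10.0)            # 54 min discharge sampled every 10 s
ideal = np.full_like(t, -2.0)               # constant 2 A discharge (negative sign convention)
biased = ideal - 0.02                       # same discharge seen through a 20 mA sensor offset

cap_ideal = coulomb_count_ah(t, ideal)      # 2 A * 3240 s / 3600 = 1.8 Ah
cap_biased = coulomb_count_ah(t, biased)    # offset adds 0.02 A * 3240 s / 3600 = 0.018 Ah
print(round(cap_ideal, 3), round(soh_from_capacity(cap_ideal), 3))     # 1.8 0.9
print(round(cap_biased, 3), round(soh_from_capacity(cap_biased), 3))   # 1.818 0.909
```

The offset error grows linearly with discharge duration, so longer cycles or drifting sensors inflate the SOH estimate without any change in the cell itself.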

| Parameter | Configuration |
|---|---|
| Time window length | 10 |
| Number of DynaGPNN-SAM layers | 2 |
| Number of DynaGPNN-SAM neurons | 10 |
| Learning rate | 0.001 |
| Batch size | 16 |
| Loss function | Mean squared error |
| Epochs | 100 |
| Optimizer | Adam |
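The settings above can be captured in a small configuration object, and the Adam update of Section 3.4 written out explicitly. This is a sketch, not the authors' training code: the field names are hypothetical, the Adam constants beta1 = 0.9, beta2 = 0.999, and eps = 1e-8 are the defaults proposed by Kingma and Ba (the table does not list them), and the toy objective fits a single scalar to constant targets under the mean squared error loss.

```python
from dataclasses import dataclass

import numpy as np

@dataclass(frozen=True)
class TrainConfig:
    """Settings from the parameter table; the field names are hypothetical."""
    window_length: int = 10
    num_layers: int = 2
    hidden_units: int = 10
    learning_rate: float = 1e-3
    batch_size: int = 16
    epochs: int = 100

def mse(pred, target):
    """Mean squared error, the training loss listed in the table."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))

def adam_step(theta, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update with bias-corrected first and second moments (t starts at 1)."""
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad ** 2
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    return theta - lr * m_hat / (np.sqrt(v_hat) + eps), m, v

cfg = TrainConfig()
targets = np.ones(cfg.batch_size)            # toy batch: every target equals 1.0
theta, m, v = 0.0, 0.0, 0.0                  # a single scalar "prediction" parameter
losses = []
for t in range(1, 1001):
    grad = float(np.mean(2.0 * (theta - targets)))   # derivative of mean((theta - y)^2)
    theta, m, v = adam_step(theta, grad, m, v, t, cfg.learning_rate)
    if t == 1:
        first_theta = float(theta)
    losses.append(mse(np.full(cfg.batch_size, theta), targets))
```

Because Adam divides by the running gradient magnitude, the first step moves `theta` by almost exactly the learning rate (0.001) regardless of the gradient's scale, which is one reason a fixed rate of 0.001 transfers reasonably across layers.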

| Experiment | RMSE B0005 | RMSE B0006 | RMSE B0007 | RMSE B0018 | MAE B0005 | MAE B0006 | MAE B0007 | MAE B0018 |
|---|---|---|---|---|---|---|---|---|
| Original version | 0.0104 ± 0.0009 ³ | 0.0164 ± 0.0007 | 0.0122 ± 0.0008 | 0.0205 ± 0.0004 | 0.0077 ± 0.0005 | 0.0131 ± 0.0008 | 0.0089 ± 0.0007 | 0.0155 ± 0.0004 |
| M1 ¹ | 0.0171 ± 0.0012 | 0.0240 ± 0.0019 | 0.0141 ± 0.0016 | 0.0262 ± 0.0019 | 0.0170 ± 0.0015 | 0.0197 ± 0.0023 | 0.0106 ± 0.0016 | 0.0205 ± 0.0018 |
| M2 ² | 0.0211 ± 0.0008 | 0.0171 ± 0.0014 | 0.0164 ± 0.0018 | 0.0357 ± 0.0042 | 0.0174 ± 0.0008 | 0.0144 ± 0.0014 | 0.0127 ± 0.0014 | 0.0281 ± 0.0043 |
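The ablation table can be summarized as the relative RMSE increase of each variant over the original version. The sketch uses only the mean RMSE values from the table; the definitions of M1 and M2 sit in footnotes not reproduced in this extract, so they appear only as labels, and the helper name is hypothetical.

```python
BATTERIES = ("B0005", "B0006", "B0007", "B0018")
rmse = {   # mean RMSE values from the ablation table
    "Original version": (0.0104, 0.0164, 0.0122, 0.0205),
    "M1": (0.0171, 0.0240, 0.0141, 0.0262),
    "M2": (0.0211, 0.0171, 0.0164, 0.0357),
}

def relative_increase(variant, reference):
    """Percentage increase of each battery's RMSE relative to the reference model."""
    return {b: round(100.0 * (v - r) / r, 1) for b, v, r in zip(BATTERIES, variant, reference)}

increase = {
    name: relative_increase(vals, rmse["Original version"])
    for name, vals in rmse.items()
    if name != "Original version"
}
for name, per_battery in increase.items():
    print(name, per_battery)
```

Both variants have a higher RMSE than the original version on every battery, from about 4.3% (M2 on B0006) to about 102.9% (M2 on B0005). The smallest gap, 0.0007 for M2 on B0006, is comparable to the ± spreads reported for those two entries.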

| Approach | RMSE B0005 | RMSE B0006 | RMSE B0007 | RMSE B0018 | MAE B0005 | MAE B0006 | MAE B0007 | MAE B0018 |
|---|---|---|---|---|---|---|---|---|
| BP [54] | - | - | - | - | 0.0768 | 0.0759 | 0.0732 | 0.0753 |
| SVM [54] | - | - | - | - | 0.0442 | 0.0435 | 0.0449 | 0.0553 |
| WNN [54] | - | - | - | - | 0.0300 | 0.0362 | 0.0344 | 0.0322 |
| GPR [55] | 0.1303 | 0.2251 | 0.2070 | - | - | - | - | - |
| LSTM [56] | 0.0440 | 0.0550 | - | 0.0260 | - | - | - | - |
| CNN [56] | 0.0200 | 0.0230 | - | 0.0220 | - | - | - | - |
| Fusion model [56] | 0.0191 | 0.0205 | - | 0.0227 | - | - | - | - |
| Combination QGPFR [55] | 0.0180 | 0.2044 | 0.0269 | - | - | - | - | - |
| LGPFR [55] | 0.0171 | 0.0690 | 0.0159 | - | - | - | - | - |
| QGPFR [55] | 0.0150 | 0.0512 | 0.0552 | - | - | - | - | - |
| GA-WNN [54] | - | - | - | - | 0.0181 | 0.0161 | 0.0153 | 0.0167 |
| GCN | 0.0138 | 0.0201 | 0.0175 | 0.0287 | 0.0104 | 0.0173 | 0.0140 | 0.0234 |
| Combination LGPFR [55] | 0.0136 | 0.0686 | 0.0173 | - | - | - | - | - |
| SambaMixer [52] | 0.0227 | 0.0475 | 0.0250 | 0.0340 | 0.0206 | 0.0449 | 0.0230 | 0.0275 |
| PINN4SOH [53] | 0.0145 | 0.0197 | 0.0140 | 0.0282 | 0.0110 | 0.0141 | 0.0095 | 0.0248 |
| Ours | 0.0104 | 0.0164 | 0.0122 | 0.0205 | 0.0077 | 0.0131 | 0.0089 | 0.0155 |
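A quick check on the comparison table: for each battery, the sketch below finds the lowest RMSE among the reported baselines (skipping "-" entries) and the relative reduction achieved by the proposed model. The values are copied from the table; the variable names are hypothetical.

```python
BATTERIES = ("B0005", "B0006", "B0007", "B0018")
# RMSE columns from the comparison table; None marks "-" (not reported).
rmse = {
    "GPR [55]": (0.1303, 0.2251, 0.2070, None),
    "LSTM [56]": (0.0440, 0.0550, None, 0.0260),
    "CNN [56]": (0.0200, 0.0230, None, 0.0220),
    "Fusion model [56]": (0.0191, 0.0205, None, 0.0227),
    "Combination QGPFR [55]": (0.0180, 0.2044, 0.0269, None),
    "LGPFR [55]": (0.0171, 0.0690, 0.0159, None),
    "QGPFR [55]": (0.0150, 0.0512, 0.0552, None),
    "GCN": (0.0138, 0.0201, 0.0175, 0.0287),
    "Combination LGPFR [55]": (0.0136, 0.0686, 0.0173, None),
    "SambaMixer [52]": (0.0227, 0.0475, 0.0250, 0.0340),
    "PINN4SOH [53]": (0.0145, 0.0197, 0.0140, 0.0282),
}
ours = (0.0104, 0.0164, 0.0122, 0.0205)

best = {}
for col, battery in enumerate(BATTERIES):
    reported = [(vals[col], name) for name, vals in rmse.items() if vals[col] is not None]
    value, name = min(reported)              # lowest baseline RMSE for this battery
    best[battery] = (name, value, round(100.0 * (value - ours[col]) / value, 1))

for battery, (name, value, gain) in best.items():
    print(f"{battery}: best baseline {name} ({value:.4f}), proposed lower by {gain}%")
```

The margin over the strongest baseline is largest on B0005 (23.5% below Combination LGPFR [55]) and smallest on B0018 (6.8% below CNN [56]); PINN4SOH [53] is the strongest baseline on B0006 and B0007.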

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gu, X.; Liu, M.; Tian, J. State of Health Estimation for Batteries Based on a Dynamic Graph Pruning Neural Network with a Self-Attention Mechanism. Energies 2025, 18, 5333. https://doi.org/10.3390/en18205333