1. Introduction

The recent advances in artificial intelligence (AI) are transforming how society approaches nearly every facet of human life, from science and engineering to the arts, entertainment, and medicine. AI’s transformative potential is evident in recent breakthroughs such as generative pre-trained transformer (GPT) models, which form the foundation of OpenAI’s ChatGPT and similar systems and are accelerating AI integration into daily life. However, as AI becomes increasingly embedded in both everyday activities and technical fields, a critical question emerges: what are the implications when AI systems produce unintended or flawed outcomes?

Specifically, systematic errors in algorithms, often resulting from biases in training data or flawed development assumptions, can lead to inequitable and unreliable outcomes. This phenomenon, commonly referred to as AI bias, presents a significant challenge to widespread and ethical adoption of AI across various domains. While the consequences of AI bias in non-safety critical applications may be relatively minor, the stakes are considerably higher in safety-critical systems such as power systems operations and control.

1.1. AI Bias in Critical Infrastructure Context

In power systems, biased AI models can lead to significant risks, including compromised system reliability, unfair resource allocation, or sub-optimal decision-making, thereby undermining trust in AI solutions within the power systems community. This concern is particularly relevant given the recent introduction of the EU AI Act [1], which establishes transparency, accountability, and fairness as foundational principles for high-risk AI systems in the EU. If not adequately addressed, these risks could hinder the adoption of AI technologies in essential infrastructure, limiting the realization of their potential benefits.

Evidence of AI bias manifests across multiple fields, providing insights into how similar issues might affect power systems. In healthcare, algorithms have assigned higher kidney function estimates to Black patients than actual values, potentially worsening healthcare inequalities [2]. Similarly, facial recognition systems consistently perform better for lighter-skinned males while underperforming for darker-skinned females [3]. These examples illustrate how AI bias can perpetuate or amplify existing societal inequalities, a concern that extends to energy access and distribution in power systems.

1.2. Power Systems AI Applications and Bias Challenges

AI applications in power systems promise transformative benefits, particularly for managing increasingly complex modern power networks. Data-driven methods are crucial for critical operations including load forecasting, renewable energy integration, grid optimization, fault detection, energy trading, asset management, stability analysis, and distributed energy resource management. However, the unique characteristics of power systems create specific bias vulnerabilities.

Contributing factors to bias in power systems AI include unrepresentative training data, flawed model design assumptions, and user-injected prejudices. Data quality and availability challenges can lead to biased prediction outcomes [4], while ML models, particularly those trained for classification tasks, remain vulnerable to adversarial attacks that can exacerbate bias issues [5]. This vulnerability is particularly concerning in safety-critical power system applications where robust protection is essential.

The efficacy of AI solutions in power systems also depends on the assumptions made during development. Unrealistic assumptions can impede real-world deployment by creating models that fail to reflect operational realities [6]. This challenge is particularly acute in power systems. For instance, the complex, non-linear nature of high-voltage direct current (HVDC) systems and power electronic converters makes developing accurate ML models exceptionally difficult [7,8,9].

Bias in AI solutions for power systems can have significant adverse effects. Biased ML models may lead to sub-optimal power flow control and flawed stability assessments, directly impacting grid reliability [10,11]. Additionally, these systems can result in inequitable energy distribution and inefficient renewable energy source (RES) utilization, undermining clean energy goals and power quality optimization in autonomous smart grids [12], while potentially widening socioeconomic disparities.

AI bias represents one of the key challenges that must be addressed throughout the ML lifecycle, from design and data selection to training and testing, before the full benefits of AI can be realized in the electric power and energy community. Beyond socioeconomic implications, the current AI boom is prompting national and regional authorities to develop regulations for AI use in critical energy infrastructure. This makes addressing bias particularly timely for the electrical energy and power systems (EPES) community, which has traditionally been cautious about widespread AI adoption.

This paper makes three key contributions to the field: (1) it provides a systematic analysis of domain-specific AI bias in power systems through carefully selected use cases that represent diverse operational challenges; (2) it establishes a taxonomy of bias types and their implications specifically relevant to power system applications; (3) it presents targeted, practical mitigation strategies that balance technical performance with fairness considerations unique to the energy domain. Accordingly, the paper aims to (i) present an overview of machine learning methods relevant to the EPES community; (ii) foster awareness of AI bias challenges specific to power systems; (iii) demonstrate through exemplary cases how AI bias manifests in various power system applications; and (iv) propose effective bias mitigation strategies tailored to the energy domain.

To achieve these objectives, we follow a rigorous methodological approach outlined in the next section, which guides our analysis of AI bias across three representative use cases in the power systems domain.

2. Methodology

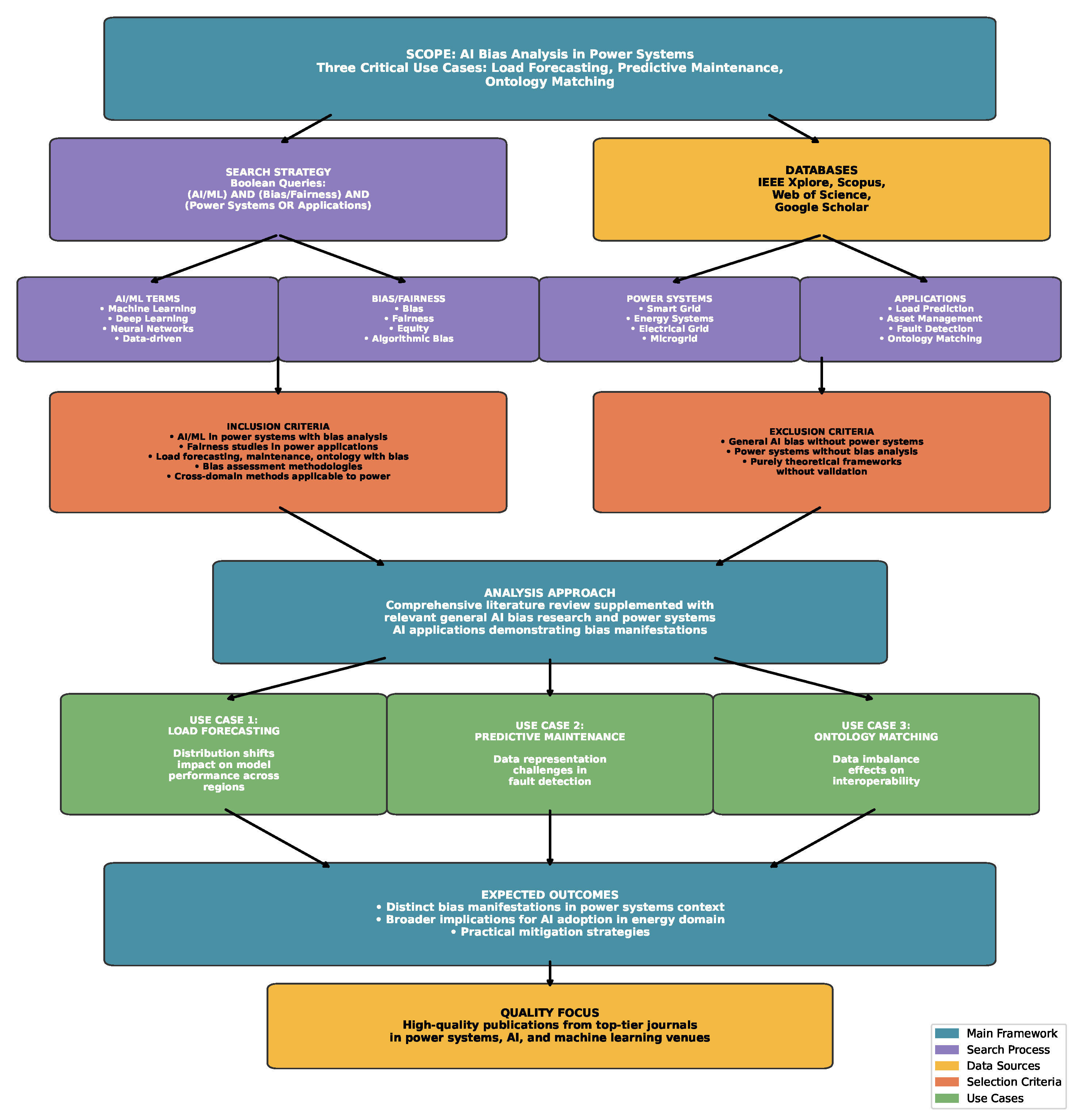

Figure 1 shows an overview of the methodology employed in this research. This systematic approach guides our examination of AI bias manifestations across three representative power systems applications.

Research Framework: Our methodology follows a structured four-phase approach: (1) systematic literature identification and analysis, (2) use case selection based on operational criticality and bias susceptibility, (3) bias characterization and impact assessment, and (4) mitigation strategy development and evaluation.

Use Case Selection Criteria: We select three use cases that represent different operational aspects of power systems: (1) load forecasting as a time series prediction challenge with temporal bias implications, (2) predictive maintenance representing classification tasks with data imbalance issues, and (3) schema matching addressing semantic interoperability with sampling bias challenges. These cases collectively demonstrate the breadth of bias manifestations across different AI application types in power systems.

Scope and Organization: This paper analyzes AI bias manifestations in power systems applications. We follow a systematic approach to identify, classify, and analyze bias types across three critical use cases: load forecasting, predictive maintenance, and ontology matching for system interoperability. Our methodology examines peer-reviewed publications across IEEE Xplore, Scopus, Web of Science, and Google Scholar, combining “AI bias,” “power systems,” “machine learning fairness,” and related keywords using Boolean operators. The analysis focuses on high-quality publications from top-tier journals within the power systems and AI communities and leading machine learning venues.

Search Keywords: Our systematic search employed four thematic keyword clusters: (1) AI/ML terms: “artificial intelligence”, “machine learning”, “deep learning”, “neural networks”, “data-driven”, “predictive analytics”, “algorithm”, “supervised learning”; (2) Bias/Fairness terms: “bias”, “fairness”, “equity”, “discrimination”, “algorithmic bias”, “data bias”, “model bias”, “systematic error”, “fairness metric”, “ethical AI”; (3) Power Systems terms: “power systems”, “electrical grid”, “smart grid”, “energy systems”, “power grid”, “electrical network”, “utility”, “EPES”, “microgrid”, “distribution system”; (4) Application-specific terms: “load prediction”, “demand forecasting”, “asset management”, “fault detection”, “equipment monitoring”, “ontology matching”, “semantic interoperability”, “data integration”.

Search Strategy: We constructed Boolean queries following the pattern (AI/ML terms) AND (Bias/Fairness terms) AND (Power Systems OR Application-specific terms). Given the nascent state of dedicated research on AI bias specifically within power systems contexts, direct searches yield limited results. We therefore adopted a comprehensive approach, supplementing the core domain-specific literature with relevant general AI bias research and power systems AI applications that demonstrate potential bias manifestations. Our analysis draws from high-quality publications across top-tier journals in power systems, AI, and machine learning venues to provide representative coverage of the intersection between these domains.
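The query pattern described above can be illustrated with a minimal sketch. The helper name `or_group` is hypothetical, only a subset of the listed keywords is used for brevity, and the exact operator syntax accepted by each database may differ from the plain AND/OR form assumed here.

```python
# Hypothetical helper: assembles a Boolean search string from keyword clusters.
# Only a few keywords per cluster are shown; real database syntax may differ.

def or_group(terms):
    """Join quoted terms with OR and wrap the result in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"

ai_ml = ["artificial intelligence", "machine learning", "deep learning"]
bias = ["bias", "fairness", "algorithmic bias"]
power = ["power systems", "smart grid", "microgrid"]
application = ["load prediction", "fault detection", "ontology matching"]

# Pattern: (AI/ML) AND (Bias/Fairness) AND (Power Systems OR Application-specific)
query = " AND ".join([
    or_group(ai_ml),
    or_group(bias),
    or_group(power + application),  # one OR group covers both clusters
])
print(query)
```

Merging the power systems and application-specific clusters into a single OR group keeps the query equivalent to the stated pattern while avoiding nested parentheses.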

Inclusion Criteria: (1) AI/ML methods applied to power systems with documented bias analysis. (2) Studies examining fairness, equity, or bias in power system applications. (3) Research on load forecasting, predictive maintenance, or ontology matching with bias considerations. (4) Quantitative or qualitative bias assessment methodologies in power systems contexts. (5) Methods from other fields that could be leveraged in tackling bias in power systems domain.

Exclusion Criteria: (1) General AI bias studies without power systems applications. (2) Power systems research without bias or fairness analysis. (3) Purely theoretical bias frameworks without practical validation.

3. Machine Learning Overview

Understanding the ML landscape in power systems provides essential context for identifying where and how biases emerge in energy applications. Thus, this section establishes the technical foundation for the bias analysis that follows.

While AI encompasses various approaches, including rule-based systems and genetic algorithms, this paper focuses on machine learning (ML) methods prevalent in the EPES community, particularly deep learning applications.

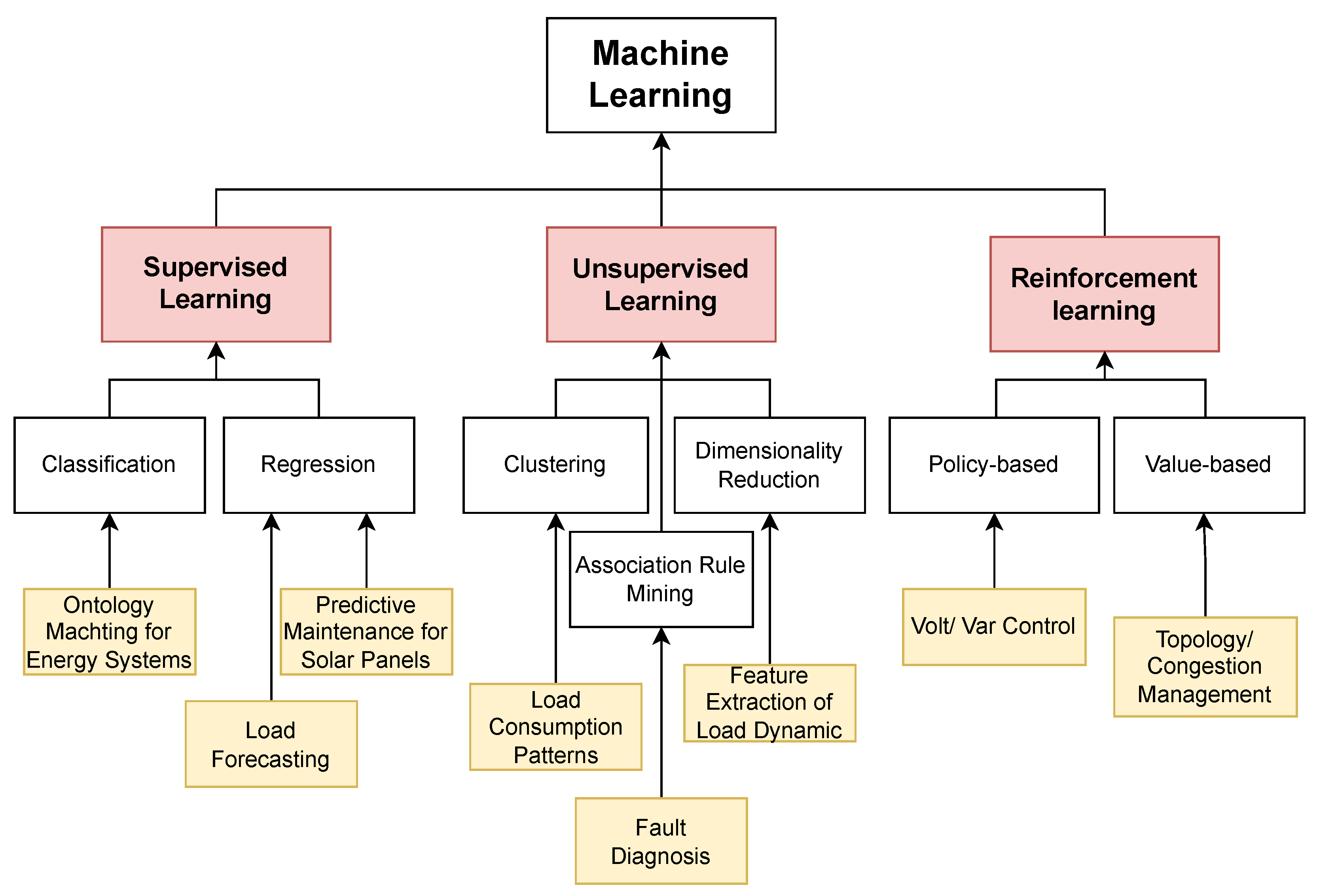

Figure 2 provides an overview of ML approaches with corresponding power system applications.

Power systems utilize supervised learning for regression tasks (load forecasting, remaining useful life prediction for maintenance) and classification tasks (security assessment [13], ontology matching [14]). Unsupervised learning enables pattern discovery in unlabeled data, such as clustering utility customers based on consumption profiles [15,16]. Reinforcement learning facilitates control and management through environment interaction, supporting applications such as topology optimization, voltage control, and demand response [17,18,19].

These ML paradigms create different vulnerability points for bias introduction. Supervised learning models can inherit biases from labeled training data, unsupervised methods may amplify existing patterns in unlabeled datasets, and reinforcement learning systems can develop biased policies through skewed reward mechanisms. The three use cases examined in this paper—load forecasting (supervised regression), predictive maintenance (supervised classification), and schema matching (supervised classification with severe class imbalance)—illustrate how these fundamental ML approaches interact with power systems data to create domain-specific bias challenges.

This technical foundation sets the stage for examining how biases manifest differently across these ML paradigms when applied to critical power systems operations.

4. AI Bias Overview

This section presents an overview of AI bias with a focus on sources, types, how to test for AI bias, and mitigation strategies. However, because AI bias is often confused with random error, clarifying such misconceptions is important. The following defines these two terms and then offers a detailed distinction between them.

In the field of AI, error generally refers to the deviation of an ML model or AI system’s output from an actual observed value (ground truth), which can arise from inaccuracies in data, model assumptions, or inherent randomness. Errors can be either random or systematic mistakes in prediction. AI bias, by contrast, refers to a subset of error involving systematic and consistent errors in an ML model or system’s predictions, decisions, or behaviors that disproportionately affect certain groups, individuals, or outcomes. A crucial point is that, unlike random errors, AI bias is systematic and can persist across similar tasks or domains, resulting in unfair or inequitable outcomes in applications ranging from hiring algorithms to medical diagnostics and power system operations [20,21].

4.1. Distinguishing AI Bias from Error

Although related, AI bias and error differ fundamentally in their nature and implications.

4.1.1. Nature of Occurrence

AI Bias: Bias emerges from the training process or model design. An ML model trained on data under-representing certain groups will consistently underperform for those groups, regardless of overall accuracy.

Error: Errors represent deviations from ground truth that occur even with representative datasets. They can often be reduced through hyperparameter tuning or improved data preprocessing.

4.1.2. Systematic vs. Random Patterns

AI Bias: Bias manifests in systematic patterns of unfairness. In power systems, a load forecasting model trained primarily on urban data may consistently underpredict loads in rural areas.

Error: Errors typically appear as random deviations caused by data noise or model limitations, without consistently favoring or disfavoring any particular group.

4.1.3. Impact and Consequences

AI Bias: Bias raises ethical, legal, and social concerns. In safety-critical applications like power system fault detection, biased models may systematically fail to identify faults in under-represented system configurations, creating serious safety and fairness risks.

Error: Errors affect overall accuracy without inherently disadvantaging specific groups. For example, load prediction errors may lead to sub-optimal dispatching but will not systematically favor urban over rural areas.
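A small numerical sketch can make the distinction between systematic bias and random error concrete. The data below are entirely synthetic and the group names, offset, and noise level are assumptions chosen for illustration: both groups share the same random noise, but the rural group additionally carries a fixed underprediction.

```python
import random
import statistics

random.seed(0)

def simulate_errors(n, offset, noise_sd):
    """Forecast errors (prediction minus actual): fixed offset plus Gaussian noise."""
    return [offset + random.gauss(0.0, noise_sd) for _ in range(n)]

# Assumed synthetic setup: urban errors are pure noise, rural errors carry
# a systematic underprediction of 5 units on top of the same noise.
errors = {
    "urban": simulate_errors(2000, offset=0.0, noise_sd=3.0),
    "rural": simulate_errors(2000, offset=-5.0, noise_sd=3.0),
}

for group, errs in errors.items():
    mean_signed = statistics.fmean(errs)                  # near 0 for random error
    mean_abs = statistics.fmean([abs(e) for e in errs])   # magnitude only
    print(f"{group}: mean signed error = {mean_signed:+.2f}, MAE = {mean_abs:.2f}")

urban_mean = statistics.fmean(errors["urban"])
rural_mean = statistics.fmean(errors["rural"])
```

The mean signed error per group is the quantity that separates the two cases: it averages out toward zero for purely random error but stays near the offset when one group is systematically disadvantaged, which an aggregate accuracy figure alone would not reveal.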

4.1.4. Mitigation Approaches

The mitigation strategies for AI bias and conventional error differ fundamentally in both approach and objective. AI bias mitigation requires targeted interventions including enhancing dataset diversity, implementing fairness-aware algorithms, and conducting comprehensive bias audits [20]. In contrast, error reduction focuses primarily on technical improvements through data quality enhancement, model parameter tuning, and feature engineering without explicit fairness considerations.

AI bias manifests in both explicit and implicit forms. Explicit bias stems from conscious attitudes toward specific groups, while implicit bias arises from unconscious prejudices that subtly influence understanding, actions, and decisions—often reflecting broader structural inequities in society.

4.2. Sources of AI Bias

AI bias can originate in different stages of the ML lifecycle: (i) data collection and pre-processing, (ii) algorithm design, and (iii) user interactions with the AI solution [22]. Since these stages interact with one another, such interactions can exacerbate bias. Consequently, ML operations (MLOps) can be considered a fourth (iv) source of AI bias.

4.2.1. Data Collection

Data collection represents one of the most common sources of AI bias. Data bias occurs when training datasets contain unrepresentative samples or systematic errors. Sampling bias, a specific type of data bias, arises when the training or testing data fails to represent the broader population or target domain [23].

In power systems, data representativeness must account for multiple factors. These include operating conditions (light/heavy loading, secure/emergency states) and topology/geography considerations (network structure, line length, proximity to generation, urban/rural context).

Temporal dimensions also play a crucial role, such as sampling rate, time window, time-of-day, and seasonal variations. Additionally, external influences must be considered, including weather patterns, renewable penetration, e-vehicle adoption, automation level, coupling with other energy systems, regulations, market structures, and population density.

Unrepresentative training data [24] can lead to inaccurate predictions (significant deviations from expected values) or skewed outputs (consistently favoring certain classes or groups). For example, an ML model trained on data from one geographic location may perform poorly when predicting electricity demand in other regions with different consumption patterns.

Other data collection biases include labeling errors from prior decision-makers, measurement inaccuracies affecting disadvantaged groups, and inherent biases from individuals involved in data collection and processing [25]. These biases can cause AI systems to perpetuate existing prejudices, as seen in hiring algorithms with gender bias, predictive policing with racial bias, and energy investment decisions favoring urban areas over rural communities.

4.2.2. Algorithm Design

Algorithm bias stems from design choices or implementation decisions in ML models [26]. This can result from biased assumptions, flawed mathematical formulations, or inadequate consideration of relevant factors. For instance, algorithms prioritizing computational efficiency over fairness may produce less robust models. Poor model design can also lead to predictions based on incorrect contextual relationships, while confirmation bias, where models reinforce pre-existing beliefs, can arise from flawed training approaches or evaluation metrics.

4.2.3. User Interaction

User bias occurs when individuals interact with AI systems in ways that introduce or amplify biases. This happens when users knowingly or unknowingly inject personal prejudices by providing biased training data or interacting with the system in ways that reflect their existing biases [27].

4.2.4. Machine Learning Operations

MLOps refers to the practices and tools used to streamline and automate the deployment, monitoring, and maintenance of ML systems. Although MLOps helps to ensure scalability and reliability in AI applications, it can also inadvertently exacerbate bias by automating and scaling biased models used for load forecasting, fault detection and isolation, grid optimization, and similar tasks. For instance, if training data disproportionately represents urban areas, automated pipelines might perpetuate systematic underperformance in rural regions when deployed, leading to inequitable resource allocation or reliability disparities. Continuous retraining on biased operational data, such as feedback loops from biased fault predictions, can further worsen these disparities. Additionally, the focus on operational efficiency in MLOps often neglects fairness monitoring, allowing issues such as data drift caused by seasonal or demographic changes in energy consumption patterns to go unnoticed. To address these risks, MLOps practices in power systems must incorporate fairness checks, interpretability tools, and proactive bias detection tailored to the sector’s safety-critical nature [20,28]. Against this backdrop, ongoing research projects such as the Common European-scale Energy Artificial Intelligence Federated Testing and Experimentation Facility (EnerTEF) aim at system-level testing of AI systems to ease their adoption in the EPES sector within the EU [29].

4.3. Types of Bias

Having established the primary sources of AI bias, we now examine the specific manifestations of bias that affect power systems applications. Building on the categorization framework from [22], Table 1 presents a comprehensive taxonomy of bias types enriched with power systems-specific examples and mitigation strategies. This taxonomy serves as a diagnostic framework for the detailed use case analyses that follow. As reported in [22,30,31,32,33,34,35,36], various types of AI bias exist in the literature. Additionally, we note that more than one type of bias can be traced to a single source.

The bias types outlined in Table 1 provide the conceptual foundation for understanding how different sources of bias manifest in practice. The following subsections examine how these manifestations can be detected and addressed before exploring specific use cases where multiple bias types often interact to create complex operational challenges.

4.4. Overfitting and Underfitting

Overfitting and underfitting represent two critical model training issues related to AI bias. Overfitting occurs when models learn both general patterns and specific noise or details from training data, resulting in excellent training performance but poor generalization [37]. A typical example is a complex neural network trained for hourly electricity demand prediction that memorizes specific holiday demand patterns rather than learning general relationships.

Conversely, underfitting happens when models are too simplistic to capture underlying data patterns, leading to poor performance on both training and test datasets. This commonly occurs when using neural networks with insufficient layers or neurons to model complex relationships, such as those between transformer sensor readings (temperature, vibration) and equipment health status.
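The two failure modes can be reproduced on synthetic data with a very small model. The sketch below deliberately swaps the neural networks mentioned above for k-nearest-neighbour regression, purely because it needs no external libraries; the data, noise level, and values of k are assumptions for illustration. Here k = 1 memorizes the training set (overfitting), while k equal to the training set size always predicts the global mean (underfitting).

```python
import math
import random

random.seed(1)

def make_data(n):
    """Noisy samples of a smooth periodic curve (synthetic, for illustration)."""
    xs = [random.uniform(0.0, 1.0) for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + random.gauss(0.0, 0.3) for x in xs]
    return xs, ys

def knn_predict(x, train_x, train_y, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k

def mse(xs, ys, train_x, train_y, k):
    """Mean squared error of the k-NN predictor on the points (xs, ys)."""
    return sum((knn_predict(x, train_x, train_y, k) - y) ** 2
               for x, y in zip(xs, ys)) / len(xs)

train_x, train_y = make_data(200)
test_x, test_y = make_data(200)

results = {}
for k in (1, 15, 200):  # too flexible, moderate, too rigid
    results[k] = (mse(train_x, train_y, train_x, train_y, k),
                  mse(test_x, test_y, train_x, train_y, k))
    print(f"k={k:3d}: train MSE = {results[k][0]:.3f}, test MSE = {results[k][1]:.3f}")
```

The pattern to look for is a large gap between training and test error at k = 1, and uniformly high error on both sets at k = 200.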

4.5. Testing for Bias

The significant potential of AI in power systems and the obstacles posed by bias necessitate rigorous testing protocols for ML models deployed in this domain. Bias testing evaluates whether predictions systematically favor or disadvantage certain groups, regions, or system configurations.

The testing process begins with data analysis to identify imbalances such as under-representation of rural grids or specific equipment types that could lead to biased outputs [20]. Model performance is then evaluated across various subsets (geographic regions, customer classes) using fairness metrics like demographic parity or equalized odds to quantify inequities [38].

Scenario testing using extreme weather simulations or rare fault conditions can expose biases in edge cases, while specialized fairness tools help uncover subtle disparities in model behavior. Regular testing combined with diverse stakeholder input ensures equitable treatment across system conditions and user groups, mitigating risks associated with biased AI in critical power applications.
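As a minimal sketch of the subgroup evaluation mentioned above, the following computes a demographic parity gap and an equalized odds gap for a binary fault-detection classifier. The labels, predictions, and region names are made-up toy values, and the function names are hypothetical; established fairness toolkits offer more complete implementations.

```python
def rate(flags):
    """Fraction of True values; returns 0.0 for an empty list."""
    return sum(flags) / len(flags) if flags else 0.0

def group_rates(y_true, y_pred, groups, group):
    """Positive-prediction rate, TPR, and FPR for one subgroup."""
    rows = [(t, p) for t, p, g in zip(y_true, y_pred, groups) if g == group]
    positive_rate = rate([p == 1 for _, p in rows])
    tpr = rate([p == 1 for t, p in rows if t == 1])
    fpr = rate([p == 1 for t, p in rows if t == 0])
    return positive_rate, tpr, fpr

# Toy fault-detection labels (1 = fault) for two hypothetical grid regions.
y_true = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0, 0, 0]
groups = ["urban"] * 6 + ["rural"] * 6

urban = group_rates(y_true, y_pred, groups, "urban")
rural = group_rates(y_true, y_pred, groups, "rural")

# Demographic parity: difference in the rate of positive predictions.
demographic_parity_gap = abs(urban[0] - rural[0])
# Equalized odds: largest difference in TPR or FPR between the groups.
equalized_odds_gap = max(abs(urban[1] - rural[1]), abs(urban[2] - rural[2]))

print(f"demographic parity gap = {demographic_parity_gap:.3f}")
print(f"equalized odds gap     = {equalized_odds_gap:.3f}")
```

In this toy data the classifier detects every urban fault but only one of three rural faults, so both gaps are large even though the two regions have identical fault frequencies.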

Industry initiatives like AI-EFFECT (Artificial Intelligence Experimentation Facility For the Energy Sector) [39] are developing European Testing Experimentation Facilities with transparent benchmarks, certification processes, and automated evaluation criteria. These frameworks enforce data quality, privacy, and integrity standards aligned with the EU AI Act to foster trust in energy-domain AI solutions.

4.6. Bias Mitigation Strategies

Multiple approaches have been developed to address AI bias. This is particularly crucial in power systems, where obtaining data for rare operating conditions can be challenging. As noted by [40], power system ML models require extensive datasets that accurately reflect real-world operations, yet standard datasets often lack comprehensive operational data and rarely include sufficient extreme scenarios for robust training.

Key mitigation strategies include (1) bias-aware algorithms that holistically address various bias types to minimize their impact on system outputs; (2) dataset augmentation techniques that enhance training data diversity to improve representativeness; and (3) user feedback mechanisms that leverage operational insights to identify and correct biases during deployment.
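One very simple way to improve the representativeness of a training set, shown here only as an illustrative sketch, is random oversampling of under-represented groups. The record layout, the `region` key, and the 90/10 split are assumptions; oversampling merely duplicates existing records and does not add new operating conditions, so it is a stand-in for the richer augmentation techniques referred to above.

```python
import random
from collections import Counter

random.seed(42)

def oversample(records, key):
    """Randomly duplicate minority-group records until all groups match the largest."""
    by_group = {}
    for rec in records:
        by_group.setdefault(rec[key], []).append(rec)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Draw the missing records with replacement from the same group.
        balanced.extend(random.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical imbalanced training set: 90 urban and 10 rural measurements.
records = ([{"region": "urban", "load_mw": 50 + i} for i in range(90)]
           + [{"region": "rural", "load_mw": 10 + i} for i in range(10)])

balanced = oversample(records, "region")
counts = Counter(rec["region"] for rec in balanced)
print(counts)
```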

Having established the taxonomy of AI bias types relevant to power systems, Table 2 provides a comprehensive comparison of how the existing literature addresses these biases across the three use cases examined in this paper. This comparative analysis serves as a foundation for the detailed examinations that follow in Section 5, Section 6 and Section 7, illustrating the current state of bias mitigation research and identifying opportunities for advancement in each application domain.

The literature comparison reveals several key insights that guide the subsequent detailed analysis: concept drift dominates load forecasting research, ensemble methods show promise across multiple domains, and class imbalance remains a critical challenge in schema matching applications. These findings inform the targeted mitigation strategies presented in the following sections.

5. AI Bias and Mitigation Strategies in Load/Renewable Energy Generation Forecasting

This section presents a comprehensive analysis of AI bias in load and renewable energy generation forecasting applications, which constitute critical components of modern power system operations. The analysis addresses four interconnected dimensions: (1) the fundamental challenges and operational significance of forecasting in power systems; (2) the identification and characterization of common biases affecting forecasting models, with particular emphasis on their underlying origins and manifestations; (3) the quantitative and qualitative implications of these biases on grid operations, market efficiency, and system reliability; (4) the development and evaluation of effective strategies to mitigate bias in forecasting applications. This systematic examination builds upon recent advances in concept drift detection and adaptive forecasting frameworks to provide theoretically grounded and practically viable mitigation strategies for modern power systems.

Accurate forecasting of electrical load and renewable energy generation represents a fundamental prerequisite for maintaining grid stability and ensuring reliable power supply in modern electrical power systems. Power grid operators frequently employ load and generation forecasting techniques [67] as essential tools for operational planning and real-time decision-making. This has become increasingly critical, as the growing integration of RES in power grids leads to more unstable and volatile electricity generation due to higher dependencies on difficult-to-forecast environmental factors [68]. The conventional approach employed by grid operators involves utilizing previous observations of RES generation, loads, and weather conditions to forecast future RES generation and loads in the grid. However, incorrect load predictions can negatively affect grid operators’ decisions, which can result in significant economic losses and compromise system reliability.

In the EPES community, three distinct forecasting time intervals are commonly considered: long-term forecasting of peak electricity demand, medium-term demand forecasting, and short-term load forecasting (STLF) [69]. Among these, operators typically perform STLF within a time interval of a few minutes to a few days and commonly apply it to optimize grid operations [69] due to its direct relevance to operational decision-making and real-time system management.

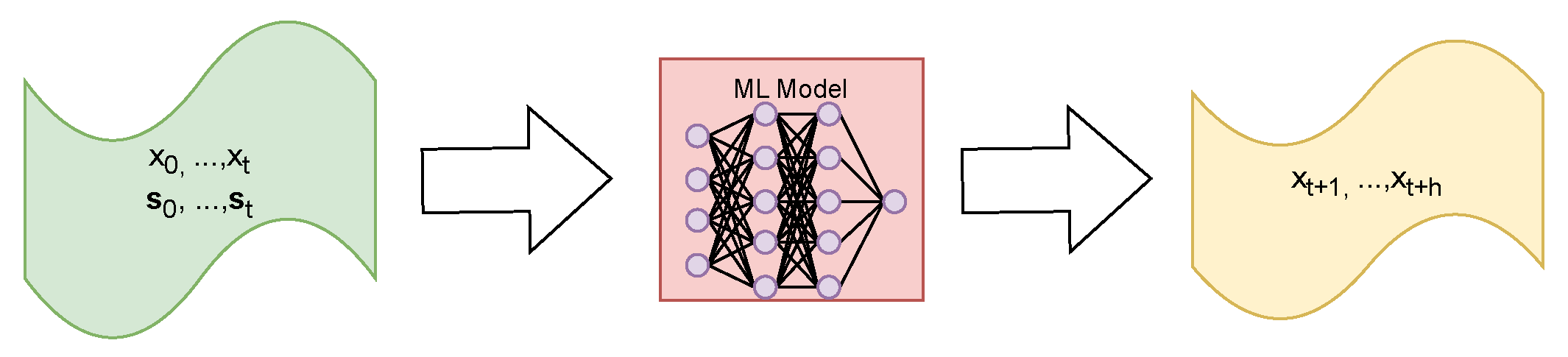

In forecasting tasks, historical data from the time interval [0, t] is usually fed into an algorithm to predict values for the time interval [t+1, t+h] with the forecast horizon h. The fundamental objective of ML model-based forecasting is to learn complex temporal dependencies and patterns from historical data that must be available for the training of the model’s weights. Specifically, to perform load forecasting, time series data from different sensors and previous load measurements are used to predict loads for the next time interval. The combination of the exogenous sensor features and the endogenous load features forms the general input features, x(t).

Figure 3 is a schematic representation of this process.
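The mapping from a history window to a forecast window can be sketched as follows. The function name `make_windows`, the `lookback` parameter (a fixed-length history rather than the full interval [0, t]), and the load values are assumptions for illustration.

```python
def make_windows(series, lookback, horizon):
    """Split a series into (history, future) pairs for supervised forecasting."""
    pairs = []
    for end in range(lookback, len(series) - horizon + 1):
        history = series[end - lookback:end]   # inputs up to and including time t
        future = series[end:end + horizon]     # targets for t+1 .. t+h
        pairs.append((history, future))
    return pairs

hourly_load = [310, 295, 288, 301, 340, 395, 430, 445]  # made-up MW values
pairs = make_windows(hourly_load, lookback=4, horizon=2)

for history, future in pairs:
    print(history, "->", future)
```

Each pair is one training example: the model sees the history and is scored on how well it reproduces the future values. Exogenous features such as weather would be windowed in the same way and concatenated with the load history.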

The primary objective of load forecasting algorithms is to recognize patterns, trends, and factors affecting the load through sophisticated pattern recognition and feature extraction mechanisms. Contemporary forecasting systems achieve prediction of future values based on existing time series data through advanced AI regression algorithms. Given the complex non-linear dependencies of data points and the advancements in AI models, forecasting with traditional and deep neural networks has become a highly researched topic. Among the various approaches, recurrent neural networks (RNNs) with either long short-term memory (LSTM) units or gated recurrent units (GRUs) are among the most widely adopted ML algorithms for load forecasting. While several other approaches exist, the discussion here is restricted to RNN-based methods, although the biases discussed here can equally apply to other ML/DL methods for load forecasting. The fundamental mechanism of RNNs involves processing time series input data by internally memorizing hidden states of previous inputs, thereby capturing temporal dependencies critical for accurate forecasting. Practitioners add LSTM units and GRUs when the memory of the previous state needs to be kept longer [70], enabling the capture of long-term temporal patterns essential for robust forecasting performance.

In general, the input data x(t) for load forecasting models contains historical time series data, which is collected by different sensors distributed throughout the power system infrastructure. Grid operators conventionally collect data from their own electrical grid and other applicable sensor data, forming comprehensive datasets that capture system behavior across multiple operational dimensions. Typically, a dataset comprises the load of the electrical grid over a time frame of a few years annotated with years, months, days, and hours. Categorical variables such as further specifications about days (workdays, holidays, ⋯) are one-hot-encoded to enable effective processing by ML algorithms. Additionally, weather forecasts and measured weather data consisting of temperature, sun hours, wind, and precipitation are often used as auxiliary features when training a load forecasting model, providing critical exogenous information that significantly influences both load patterns and renewable generation capacity.
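The one-hot encoding of day types and its combination with numeric features can be sketched as below. The category set, feature order, and numeric values are assumptions chosen for illustration, not the feature layout of any particular dataset.

```python
DAY_TYPES = ["workday", "weekend", "holiday"]  # assumed category set

def one_hot(value, categories):
    """Return a 0/1 list with a single 1 at the position of value."""
    if value not in categories:
        raise ValueError(f"unknown category: {value}")
    return [1 if value == c else 0 for c in categories]

def build_features(load_mw, temperature_c, hour, day_type):
    """Concatenate numeric features with the one-hot encoded day type."""
    return [load_mw, temperature_c, hour] + one_hot(day_type, DAY_TYPES)

row = build_features(load_mw=412.0, temperature_c=18.5, hour=14, day_type="holiday")
print(row)
```

Raising an error on unseen categories is a deliberate choice in this sketch: silently encoding an unknown day type as all zeros would hide exactly the kind of data gap that can later surface as bias.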

5.1. Biases Related to ML Models for Load Forecasting

Here, we present the primary sources of bias affecting load forecasting models, focusing on data representation issues and concept drift phenomena that compromise prediction accuracy and reliability. The analysis provides both theoretical foundations and empirical evidence to characterize these bias sources and their manifestations in practical forecasting applications.

Among the most prevalent sources of bias in load forecasting applications is data representation bias (for more details, refer to Table 1) in the historical data used for training the models. A primary origin of this bias is the tooling used in the data collection process, which can introduce systematic distortions or inconsistencies in the recorded measurements. As a result, data points can be distorted or missing [71]. This can manifest as just a few data points or the whole data collected from a sensor being compromised. Furthermore, changes in the way data is collected during the measurement time frame can also be software- or hardware-dependent and might lead to biases in the collected data. Particularly challenging are data irregularities due to missing data points that may not be easily observable if certain measurements are only triggered in specific event cases [48], creating systematic gaps in the training data that can significantly affect model learning and generalization capabilities.

Another fundamental origin of bias in this context is concept drift, which represents a critical challenge in time series forecasting applications. Formally, suppose the forecast data follows a distribution, $F_{0,t}(x, y)$, from time 0 up to time $t$ and follows a different distribution from $t+1$ to $\infty$:

$$F_{0,t}(x, y) \neq F_{t+1,\infty}(x, y).$$

Then, according to [41], a concept drift exists at the timestamp $t+1$ if $\exists\, t$ such that

$$P_t(x, y) \neq P_{t+1}(x, y).$$

The concept drift can be explained by a change in the joint probabilities of $x$ and $y$ at time $t$, where $x$ represents historical load and weather data, and $y$ represents the forecast target values over horizon $h$.

The mathematical decomposition into $P_t(x, y) = P_t(x)\,P_t(y \mid x)$ shows that the source of the drift can be $P_t(x)$, $P_t(y \mid x)$, or both [41]. This decomposition reveals that concept drift can originate from changes in the input feature distribution, alterations in the conditional relationship between inputs and targets, or simultaneous changes in both components.
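To make the two drift sources concrete, the following sketch builds a small discrete joint distribution from an input distribution and a conditional distribution, then changes one factor at a time. The categories and probabilities are invented for illustration, and the total variation distance is only one of several possible ways to quantify the change.

```python
def joint(p_x, p_y_given_x):
    """Build P(x, y) = P(x) * P(y | x) for discrete x and y."""
    return {(x, y): p_x[x] * p_y_given_x[x][y]
            for x in p_x for y in p_y_given_x[x]}

def total_variation(p, q):
    """Total variation distance between two distributions on the same keys."""
    return 0.5 * sum(abs(p[k] - q[k]) for k in p)

# x: temperature regime, y: load level (illustrative values only).
p_x_old = {"cold": 0.5, "warm": 0.5}
p_y_given_x_old = {"cold": {"high": 0.8, "low": 0.2},
                   "warm": {"high": 0.3, "low": 0.7}}

# Drift source 1: only the input distribution changes.
p_x_new = {"cold": 0.3, "warm": 0.7}
# Drift source 2: only the input-to-target relationship changes.
p_y_given_x_new = {"cold": {"high": 0.6, "low": 0.4},
                   "warm": {"high": 0.3, "low": 0.7}}

base = joint(p_x_old, p_y_given_x_old)
input_drift = total_variation(base, joint(p_x_new, p_y_given_x_old))
conditional_drift = total_variation(base, joint(p_x_old, p_y_given_x_new))
```

Both changes alter the joint distribution, but only the second one invalidates the mapping a forecasting model has learned, which is why the two cases usually call for different responses.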

Concept drift can be caused by changes in the data distribution over time and can occur due to various factors such as changes in consumer behavior, technological advancements, or environmental conditions. In the specific context of power systems, concept drift is particularly prevalent due to the dynamic nature of energy consumption patterns, evolving grid infrastructure, and changing environmental conditions that directly impact both load patterns and renewable generation characteristics. In the context of load forecasting, concept drift can lead to significant discrepancies between the training and evaluation data distributions, resulting in poor model performance and potentially compromising grid reliability and operational efficiency.

Concept drift manifests in four primary patterns in power systems forecasting, each characterized by distinct temporal dynamics and underlying mechanisms:

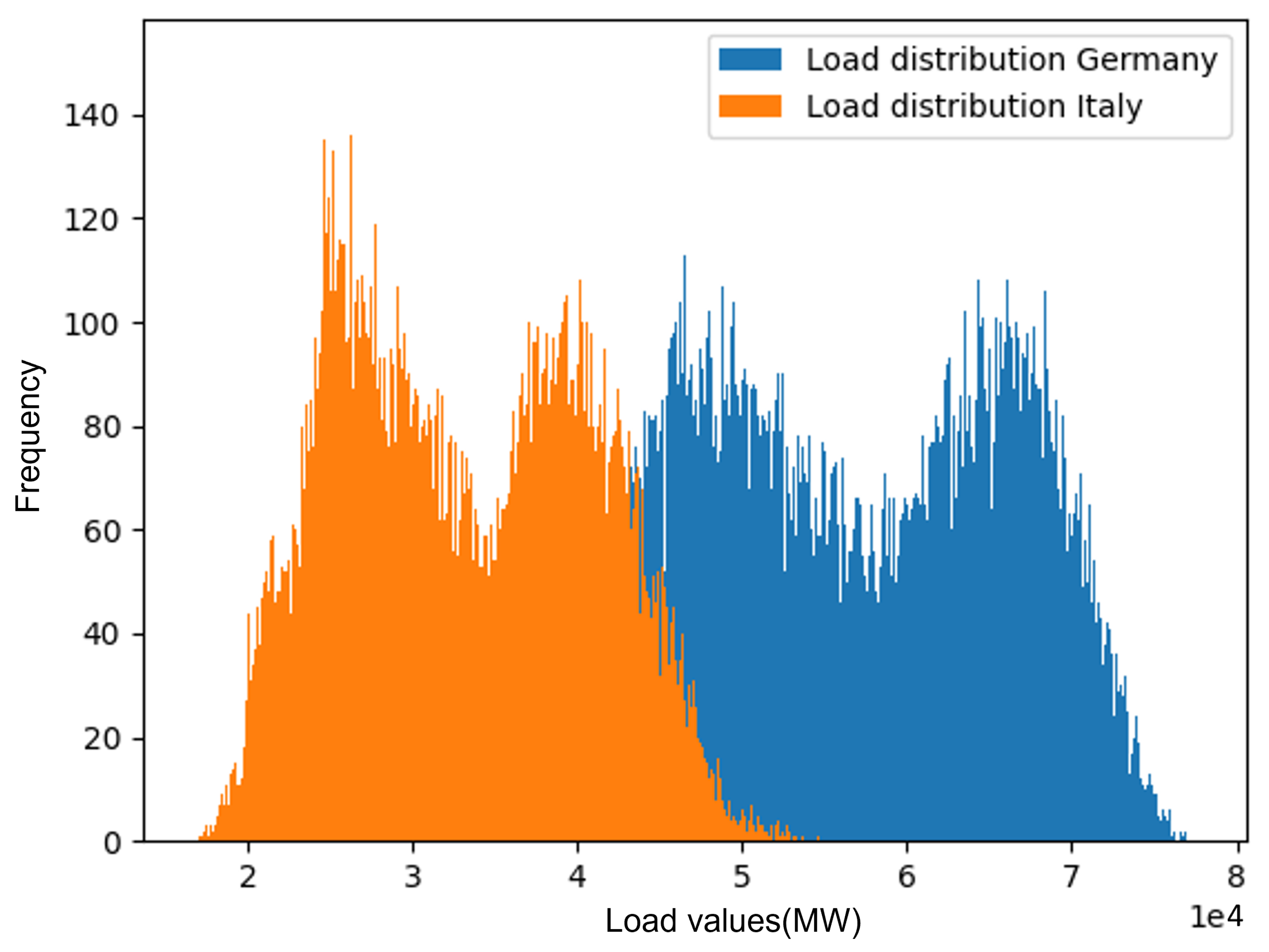

Sudden drift: Abrupt changes in data distribution, such as when forecasting models trained on one region’s data are applied to another region with different load patterns. As shown in Figure 4, the load distributions of Germany and Italy both follow bimodal patterns but with significant shifts due to socioeconomic and environmental factors. This type of drift presents immediate challenges for model adaptation as it occurs without warning and requires rapid response mechanisms.

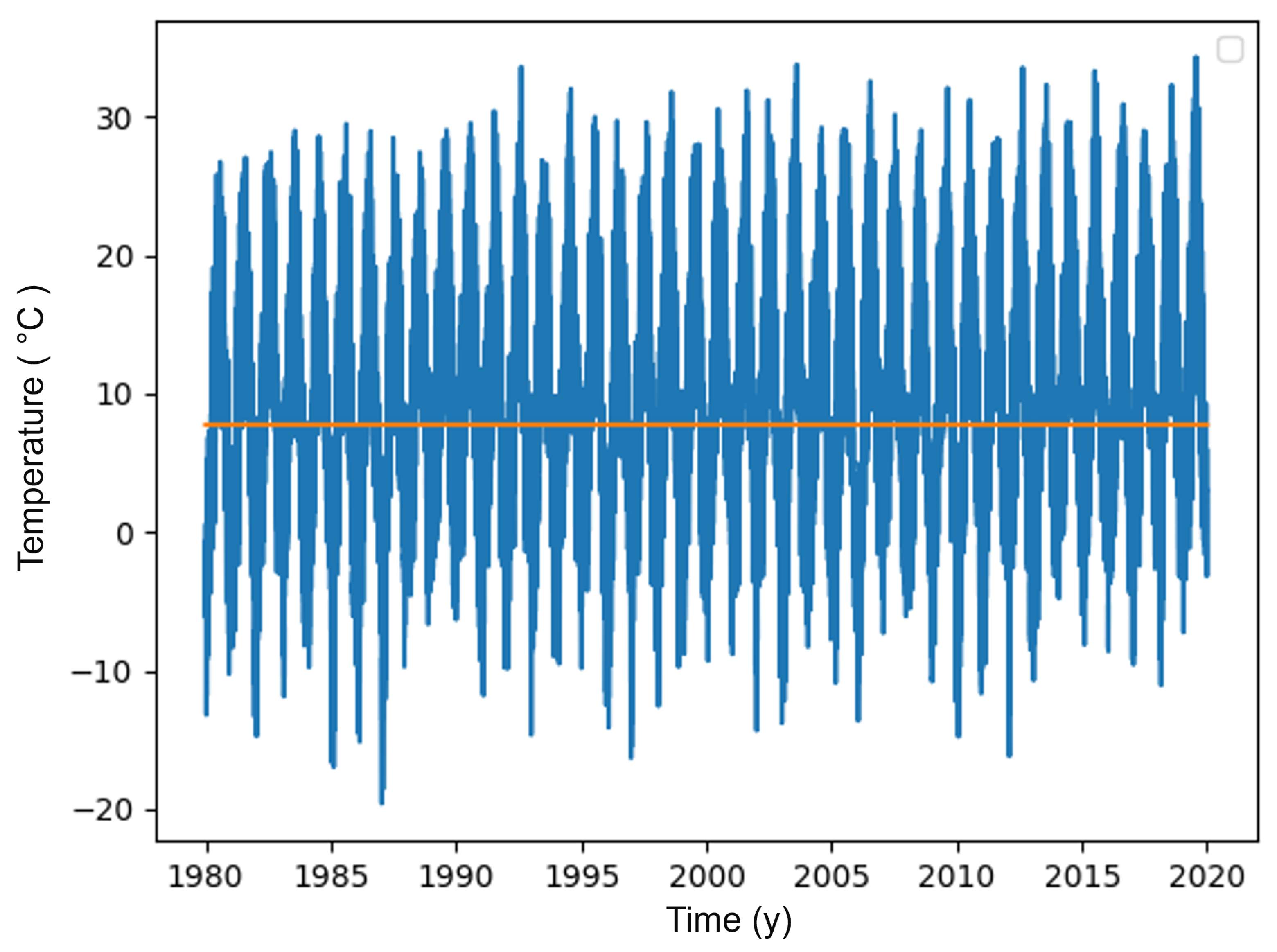

Incremental drift: Slow, gradual changes over time, such as the subtle 0.2 °C average temperature increase over 40 years in Germany shown in Figure 5. These small shifts can significantly impact long-term forecasting models, as their cumulative effect may not be immediately apparent but can lead to substantial prediction errors over extended periods.

Recurring drift: Periodic shifts between distributions that return to previous patterns, typically seen in seasonal weather patterns or workday/weekend load variations. These are often anticipated and encoded in training data, making them more manageable through appropriate feature engineering and model design.

Gradual drift: Progressive replacement of one concept by another with a transition period where both concepts coexist, exemplified by emerging weather patterns that initially appear sporadically before becoming dominant. This type of drift requires sophisticated detection mechanisms and adaptive learning strategies to ensure smooth transitions between concepts.

The impact of these drifts on model performance can be substantial, as demonstrated through empirical analysis of cross-regional forecasting scenarios. Figure 6a shows an ML model that was trained on German load data and evaluated on German load data with the same distribution; it achieved an RMSE of 0.0263 on the test data set, indicating excellent predictive performance under matched conditions. However, when the same model was evaluated on Italian load data, which follow a different distribution, as shown in Figure 6b, the RMSE rose to 71.7954, an increase in error of roughly 273,000%. This striking performance deterioration exemplifies the severe impact of concept drifts on forecasting reliability and underscores the critical importance of bias mitigation strategies.

The RMSE is calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(y_i - \hat{y}_i\right)^2},$$

where $m$ denotes the number of target values, $y_i$ the target value, and $\hat{y}_i$ the predicted value. A good result for the RMSE is close to 0, indicating the target and predicted values are similar. The higher the RMSE value is, the further apart the target and predicted values are. In addition, the RMSE penalizes a larger difference between the target and predicted values more severely due to the squaring operation.
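A short sketch of the RMSE computation may help illustrate the effect of the squaring operation. The toy target and prediction values are assumptions; the last lines only redo the arithmetic on the two RMSE values reported for Figure 6a,b.

```python
import math

def rmse(targets, predictions):
    """Root mean square error between two equally long sequences."""
    if len(targets) != len(predictions) or not targets:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(y - y_hat) ** 2 for y, y_hat in zip(targets, predictions)]
    return math.sqrt(sum(squared) / len(squared))

targets = [1.0, 2.0, 3.0, 4.0]
# Same total absolute error (4.0), distributed differently:
even_errors = rmse(targets, [2.0, 3.0, 4.0, 5.0])      # four errors of 1
one_large_error = rmse(targets, [1.0, 2.0, 3.0, 8.0])  # one error of 4

# Relative increase between the matched and mismatched evaluations.
rmse_matched, rmse_shifted = 0.0263, 71.7954
relative_increase_pct = (rmse_shifted - rmse_matched) / rmse_matched * 100
```

Although both prediction sets have the same total absolute error, the single large deviation doubles the RMSE, which is the penalization property described above.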

This quantitative example, illustrated in Figure 6a,b, demonstrates how distribution shifts render predictions unreliable for operational use and emphasizes the necessity of developing robust bias detection and mitigation frameworks for practical deployment in power systems.

5.2. Implications of AI Bias in Electricity Load Forecasting

Building upon the bias sources identified above, this subsection analyzes how these biases translate into operational and economic impacts for both grid operators and end consumers. The analysis considers both direct and indirect consequences, examining the cascading effects of biased forecasting on system reliability, market efficiency, and stakeholder confidence.

Bias in electricity load forecasting models can substantially affect both grid operators and customers through multiple interconnected pathways. For grid operators, biased forecasts can lead to demand overestimations, which can result in unnecessary power generation and inflated operational costs, or underestimations that increase the risk of grid stress, outages, and reliance on costly emergency measures. These errors can impede the efficient allocation and dispatch of generation assets, thereby jeopardizing the equilibrium between renewable and non-renewable energy sources and potentially compromising sustainability initiatives. Moreover, biased forecast results can distort wholesale electricity markets, creating unfair advantages or disadvantages for certain providers, especially smaller or renewable energy producers. The resulting market inefficiencies can lead to suboptimal resource allocation and reduced overall system efficiency. Persistent underestimation in specific regions can threaten grid reliability, heighten the risk of blackouts, and diminish public trust in the power system infrastructure and its management.

For customers, biased forecasting often translates into higher energy costs, as inefficiencies in grid operations and peak pricing adjustments are passed on to consumers through various pricing mechanisms and tariff structures. The bias can also lead to unequal service quality, disproportionately affecting certain geographic or socioeconomic groups with more frequent outages or higher prices, thereby exacerbating existing inequalities in energy access and affordability. Additionally, these inaccuracies can slow the integration of renewable energy, reducing access to cleaner and often more affordable energy options and impeding progress toward sustainability goals and carbon reduction targets. Over time, recurring forecast errors can trigger price volatility or supply unreliability, further deteriorating consumer confidence in the power system and potentially leading to reduced participation in demand response programs and energy efficiency initiatives.

5.3. Mitigating Bias in Load Forecasting

This subsection presents comprehensive strategies for addressing the biases identified in load forecasting, drawing from recent advances in adaptive learning and drift detection methodologies. The discussion encompasses both proactive and reactive approaches, providing a systematic framework for bias mitigation in operational forecasting systems.

Time series forecasting tools play a vital role in predicting future values using incoming data samples, often processed in real time. However, the distribution of these data samples is typically unknown, creating fundamental challenges for maintaining prediction accuracy under varying operational conditions. To address uncertainties that may arise from out-of-distribution data, detecting data drift becomes essential for ensuring robust and reliable forecasting performance. Recent research has identified three different approaches for detecting changes in the data distribution:

Error rate-based drift detection, which continuously monitors ML model performance [41] to identify degradation patterns indicative of concept drift.

Data distribution-based drift detection, which uses a sliding window approach to sample the distribution of the incoming data stream and calculates the difference to the training data distribution in real time [42], enabling direct comparison of statistical properties.

Multiple-hypothesis test drift detection, which employs more than one hypothesis to detect concept drifts [41] through statistical testing frameworks that provide robust detection capabilities.
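As an illustration of the data distribution-based approach, the sketch below compares a sliding window of incoming values with a reference sample using the two-sample Kolmogorov-Smirnov statistic. The choice of statistic, the class name `WindowDriftDetector`, the window size, and the threshold are assumptions made for the example; the cited works may use different distance measures and decision rules.

```python
from bisect import bisect_right
from collections import deque

def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic: largest gap between the empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for v in a + b:
        cdf_a = bisect_right(a, v) / len(a)
        cdf_b = bisect_right(b, v) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

class WindowDriftDetector:
    """Flags drift when a full sliding window departs from the reference."""

    def __init__(self, reference, window_size, threshold):
        self.reference = list(reference)
        self.window = deque(maxlen=window_size)
        self.threshold = threshold  # assumed, to be tuned per application

    def update(self, value):
        """Add one observation; return True if the window has drifted."""
        self.window.append(value)
        if len(self.window) < self.window.maxlen:
            return False  # not enough data for a comparison yet
        return ks_statistic(self.reference, self.window) > self.threshold

# Reference sample standing in for the training data distribution.
reference = [i / 100 for i in range(100)]
detector = WindowDriftDetector(reference, window_size=20, threshold=0.5)
stable = [detector.update(i / 20) for i in range(20)]         # same range
shifted = [detector.update(2.0 + i / 20) for i in range(20)]  # shifted range
```

The detector stays silent while the window resembles the reference and raises a flag once more than half of the window has been replaced by shifted values.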

Furthermore, Samarajeewa et al. [43] propose an explainable drift detection framework that provides interpretable insights into drift patterns in energy forecasting systems, enabling operators to understand not just when drift occurs but why it happens. This advancement represents a significant step toward transparent and actionable bias detection mechanisms that facilitate informed decision-making in operational environments. In [44], the authors employ an online forecasting process that compares the loss during training with the loss during the online forecasting to detect concept drifts in the data. This approach enables real-time monitoring and adaptive response to emerging drift patterns.
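The idea of comparing online loss with training loss can be sketched as a simple monitor. This is not the procedure of [44]; the class name `LossDriftMonitor`, the squared-error loss, the window size, and the tolerance factor are all assumptions for illustration.

```python
from collections import deque

class LossDriftMonitor:
    """Flags drift when the recent online loss exceeds the training loss."""

    def __init__(self, training_loss, window_size=24, tolerance=2.0):
        self.training_loss = training_loss
        self.tolerance = tolerance            # assumed multiplicative margin
        self.recent = deque(maxlen=window_size)

    def update(self, target, prediction):
        """Record one squared error; return True if drift is suspected."""
        self.recent.append((target - prediction) ** 2)
        online_loss = sum(self.recent) / len(self.recent)
        return online_loss > self.tolerance * self.training_loss

monitor = LossDriftMonitor(training_loss=0.01, window_size=4, tolerance=2.0)
calm = [monitor.update(1.0, 1.05) for _ in range(4)]  # errors near training level
alarm = monitor.update(1.0, 1.5)                      # one much larger error
```

Averaging over a window rather than reacting to a single sample trades detection delay for fewer false alarms; the appropriate balance depends on the operational setting.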

When operators detect concept drifts, they commonly discard the existing ML model and retrain a new one using data sampled from the updated distribution. This approach, while effective, requires substantial computational resources and may introduce temporary performance degradation during the retraining period. When sufficient data or resources for complete retraining are unavailable, the existing model can be fine-tuned with the new dataset through transfer learning techniques, offering a more resource-efficient approach to model adaptation.

Azeem et al. [45] demonstrate the effectiveness of transfer-learning-enabled adaptive frameworks (TLA-LSTM) for handling concept drift in smart grids, achieving 7–15% improvement in forecasting accuracy under different drift scenarios across multiple generation modalities including coal, gas, hydro, and solar power systems. These results demonstrate the practical viability of transfer learning approaches for maintaining forecasting performance under concept drift conditions.

Alternatively, a dual-model strategy can be employed, where the old ML model serves as a stable learner while a new reactive learner is introduced. This approach provides operational continuity while enabling gradual adaptation to new conditions. The reactive learner replaces the stable one if its predictions prove more accurate over a specified time frame, as determined by statistical hypothesis testing across multiple evaluation metrics [41]. Such strategies offer robust safeguards against abrupt performance degradation while facilitating smooth transitions to improved models.
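A minimal sketch of the dual-model strategy is given below. For brevity it replaces the statistical hypothesis test with a plain comparison of summed absolute errors over a window, which is a simplification and an assumption of this example; the class name `DualModelForecaster` and the toy models are hypothetical.

```python
from collections import deque

class DualModelForecaster:
    """Serves a stable model and promotes the reactive one if it does better."""

    def __init__(self, stable, reactive, window_size=48):
        self.stable = stable
        self.reactive = reactive
        self.stable_errors = deque(maxlen=window_size)
        self.reactive_errors = deque(maxlen=window_size)

    def predict(self, x):
        """Operational forecasts always come from the stable learner."""
        return self.stable(x)

    def observe(self, x, target):
        """Score both learners; return True if the reactive one is promoted."""
        self.stable_errors.append(abs(self.stable(x) - target))
        self.reactive_errors.append(abs(self.reactive(x) - target))
        window_full = len(self.stable_errors) == self.stable_errors.maxlen
        # Simplified decision rule standing in for a hypothesis test.
        if window_full and sum(self.reactive_errors) < sum(self.stable_errors):
            self.stable = self.reactive
            self.stable_errors.clear()
            self.reactive_errors.clear()
            return True
        return False

# Toy models: the data now follow y = 3x, which only the reactive model fits.
forecaster = DualModelForecaster(lambda x: 2 * x, lambda x: 3 * x, window_size=3)
promotions = [forecaster.observe(x, 3 * x) for x in (1, 2, 3)]
after_swap = forecaster.predict(4)
```

In practice a fresh reactive learner would be trained after each promotion, and the window length would be chosen to match the time frame over which accuracy is to be compared.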

More recently, Zhao and Shen [46] introduced proactive model adaptation techniques that estimate concept drift before it fully manifests, enabling models to adapt preemptively rather than reactively, thereby maintaining prediction accuracy during transitional periods. This proactive approach represents a significant advancement in bias mitigation, offering the potential to maintain forecasting performance even during drift events.

In load forecasting, recurring concept drifts often arise, presenting both challenges and opportunities for systematic bias mitigation. For instance, these patterns can appear as differences in data distributions between workdays and weekends or holidays, as well as seasonal weather patterns that repeat annually. These predictable shifts are apparent in the data from the outset, enabling proactive mitigation strategies. To address potential bias in such cases, one-hot encoding of such information typically yields the best model performance, as it explicitly captures these known patterns and incorporates them into the learning process. For other recurring concept shifts that are not known prior to implementation, ensemble methods have demonstrated promising results. These methods utilize multiple base ML models to compute results in parallel, applying various voting rules to determine the final prediction [41]. Dynamic ensemble methods further enhance performance by adjusting the weights of base models dynamically, maintaining reliable results even as distribution shifts occur [47], thus providing adaptive responses to evolving operational conditions.
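The dynamic weighting idea can be sketched as follows. The inverse-error weighting rule, the exponential decay, and the class name `DynamicEnsemble` are assumptions chosen for simplicity; they are not taken from [41] or [47], which describe a range of voting and weighting schemes.

```python
class DynamicEnsemble:
    """Weights base models by the inverse of their recent squared error."""

    def __init__(self, models, decay=0.9, eps=1e-9):
        self.models = list(models)
        self.decay = decay   # how quickly old errors are forgotten (assumed)
        self.eps = eps       # guards against division by zero
        self.avg_sq_error = [1.0] * len(self.models)

    def weights(self):
        """Normalized weights; lower recent error gives a larger weight."""
        inverse = [1.0 / (e + self.eps) for e in self.avg_sq_error]
        total = sum(inverse)
        return [w / total for w in inverse]

    def predict(self, x):
        """Weighted average of the base model predictions."""
        return sum(w * m(x) for w, m in zip(self.weights(), self.models))

    def update(self, x, target):
        """Update each model's exponentially weighted squared error."""
        for i, model in enumerate(self.models):
            err = (model(x) - target) ** 2
            self.avg_sq_error[i] = (self.decay * self.avg_sq_error[i]
                                    + (1 - self.decay) * err)

# Toy base models: the first matches the data, the second is biased by +10.
ensemble = DynamicEnsemble([lambda x: x, lambda x: x + 10])
initial_weights = ensemble.weights()
for x in range(20):
    ensemble.update(x, target=x)
final_weights = ensemble.weights()
```

After a few updates the biased model is almost entirely down-weighted, and the same mechanism would shift weight back if the data later moved in its favor.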

Addressing data quality issues requires comprehensive preprocessing strategies. Bias caused by noise in the data can be reduced through data preprocessing techniques such as transformations, normalization, and small distribution adjustments, which help standardize input features and reduce measurement-related inconsistencies. Incomplete or noisy time series data can be addressed by either filtering out irrelevant data or applying imputation strategies, with the choice of approach depending on the nature and extent of data quality issues. In some cases, irregular time intervals can be retained if an ML model capable of handling such irregularities is used [48], providing flexibility in managing diverse data collection scenarios. Sampling bias can be mitigated by removing samples that are not representative of the test cases or by upsampling the most relevant data [49], ensuring that training data adequately represents the operational conditions the model will encounter. Furthermore, the impact of slightly out-of-distribution incoming data streams can be minimized by using deeper neural networks, as their generalization capabilities have been shown to be superior [50], though this approach must be balanced with computational constraints and interpretability requirements in operational settings.
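Two of the preprocessing steps mentioned above, imputation and normalization, can be sketched in a few lines. Linear interpolation and min-max scaling are used here as common examples; they are assumptions of this sketch rather than the specific techniques of the cited works, and the sample series is invented.

```python
def interpolate_missing(series):
    """Fill None gaps linearly; gaps at the edges copy the nearest value."""
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(series)
    for i, v in enumerate(series):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = series[right]
        elif right is None:
            filled[i] = series[left]
        else:
            frac = (i - left) / (right - left)
            filled[i] = series[left] + frac * (series[right] - series[left])
    return filled

def min_max_normalize(series):
    """Scale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]
    return [(v - lo) / (hi - lo) for v in series]

# Hypothetical hourly load readings with missing measurements.
load = [None, 10.0, None, None, 40.0, None]
filled = interpolate_missing(load)
scaled = min_max_normalize(filled)
```

Interpolation is only reasonable for short gaps; for long outages, filtering the affected interval or using a model that tolerates irregular sampling may be the better choice.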

The load forecasting analysis demonstrates how temporal biases and concept drift create systematic prediction errors with severe operational consequences. The next section examines a fundamentally different challenge: predictive maintenance, where data imbalance and representation issues create distinct bias patterns that require alternative mitigation approaches.

6. AI Bias and Mitigation Strategies in Predictive Maintenance of Power Systems Assets

This section analyzes AI bias in the predictive maintenance of power system assets, with a focus on PV plants. The analysis covers four critical aspects: (1) the role and challenges of predictive maintenance in power assets; (2) common biases affecting maintenance models and their sources; (3) how these biases impact asset management and system reliability; (4) effective strategies for reducing bias in maintenance applications.

Considering the growing integration of RES in power grids, the need to maintain power systems assets (PSAs) extends beyond corrective and preventive measures. This is mainly because asset maintenance becomes prohibitively expensive when systems eventually break down or when routine diagnostic activities must be carried out. Conversely, predictive maintenance (PM) utilizes data from sensors in situ to implement diagnostics [51,52,72]. These diagnostics detect patterns in the data that may indicate a deviation from the standard operation of the components to support timely reaction to faults, without the overhead cost of consistent device servicing. Additionally, PM makes remote monitoring of asset installations possible, as illustrated in Figure 7. While this is the preferred choice for optimizing power systems asset management, the reliability of the methods used in PM becomes even more critical.

AI methods are applied in PM to predict q-steps-ahead values from present and past values acquired from the assets [73,74,75]. More precisely, the ML model is optimized to approximate a function $f_\theta$ with a parameter set, $\theta$, which maps past observations of the independent variable $X$ to the target variable $Y$; $f_\theta: X \rightarrow Y$. Such $f_\theta$ is a sequence model, and $\hat{Y}$ is the q-steps-ahead forecast of the model, where $q \geq 1$, and steps are a time measure, e.g., seconds, hours, days, or weeks. Typically, at any time during monitoring, forecasts that deviate from industrially accepted thresholds are flagged as imminent faults.
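The flagging step can be illustrated with a small sketch. It assumes that the monitored quantity is a performance ratio and that values below the threshold indicate a fault; the function name, the threshold, and the forecast values are hypothetical.

```python
def flag_imminent_faults(forecasts, threshold):
    """Return the steps ahead (1-based) whose forecast is below the threshold.

    Assumes that lower values are worse, as for a performance ratio; for
    quantities where high values signal faults the comparison would flip.
    """
    return [step for step, value in enumerate(forecasts, start=1)
            if value < threshold]

# Hypothetical 7-steps-ahead forecast of a daily performance ratio.
forecast = [0.82, 0.79, 0.74, 0.81, 0.69, 0.85, 0.90]
flagged_steps = flag_imminent_faults(forecast, threshold=0.75)
```

Because the decision depends on which side of the threshold a forecast falls, small forecast errors near the threshold can change the outcome, which is one reason error magnitude alone does not characterize PM performance.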

Fault detection in PSAs notably presents a critical scenario that requires a thorough mitigation of AI bias due to the direct impact on the economic cost incurred by power network operators and consumers alike. However, this domain is dominated by various examples of AI bias that forestall large-scale adoption of AI solutions.

6.1. Biases Related to ML Models for Predictive Maintenance

Most of the bias that affects PM emanates from the data collection stage. Moreover, the unfavorable data collection situation makes it difficult to separate the effect of implicit model bias from the compound effect of the available data. In general, three main data bias sources can be identified that affect ML model application to PM in PSAs.

Non-representative measurement data is common in data-driven solutions for PSAs [76]. In particular, the training data provided for PM tasks tend to lack observations that show faults. This is mainly due to concerns about the practicality of destructive and interventional experiments in actual PSAs to generate sufficient fault occurrences.

As a workaround, data points from less accurate or complex simulations tend to be utilized for model training. As a result, the simulated data points do not always capture all potential configurations in PSAs that may cause faults. Hence, after models are trained on the simulated data, actual faults present as out-of-distribution samples to the forecast models, leading to underfitting.

Further, even when faulty samples are provided in the training data, they tend to be dominated by normal samples, which leads to data imbalance [77]. Unlike classification tasks, data imbalance is difficult to correct for time series forecasting [78]. Hence, generalization from limited faulty samples becomes non-trivial in PM.

High uncertainty in the data-generating process manifests especially in RES, where PSA productivity is dependent on highly variable exogenous factors. In particular, weather influence on PV and wind generation, as well as the aging of PSAs, is significant and commonly considered in modeling decisions [79]. However, weather profiles are non-deterministic over the relatively short periods (minutes, hours, days, or weeks) required for PM and are constantly diverging from previously established weather models [80,81]. Therefore, PSA productivity depends strongly on the operating conditions of the electrical components, which in turn depend on the weather.

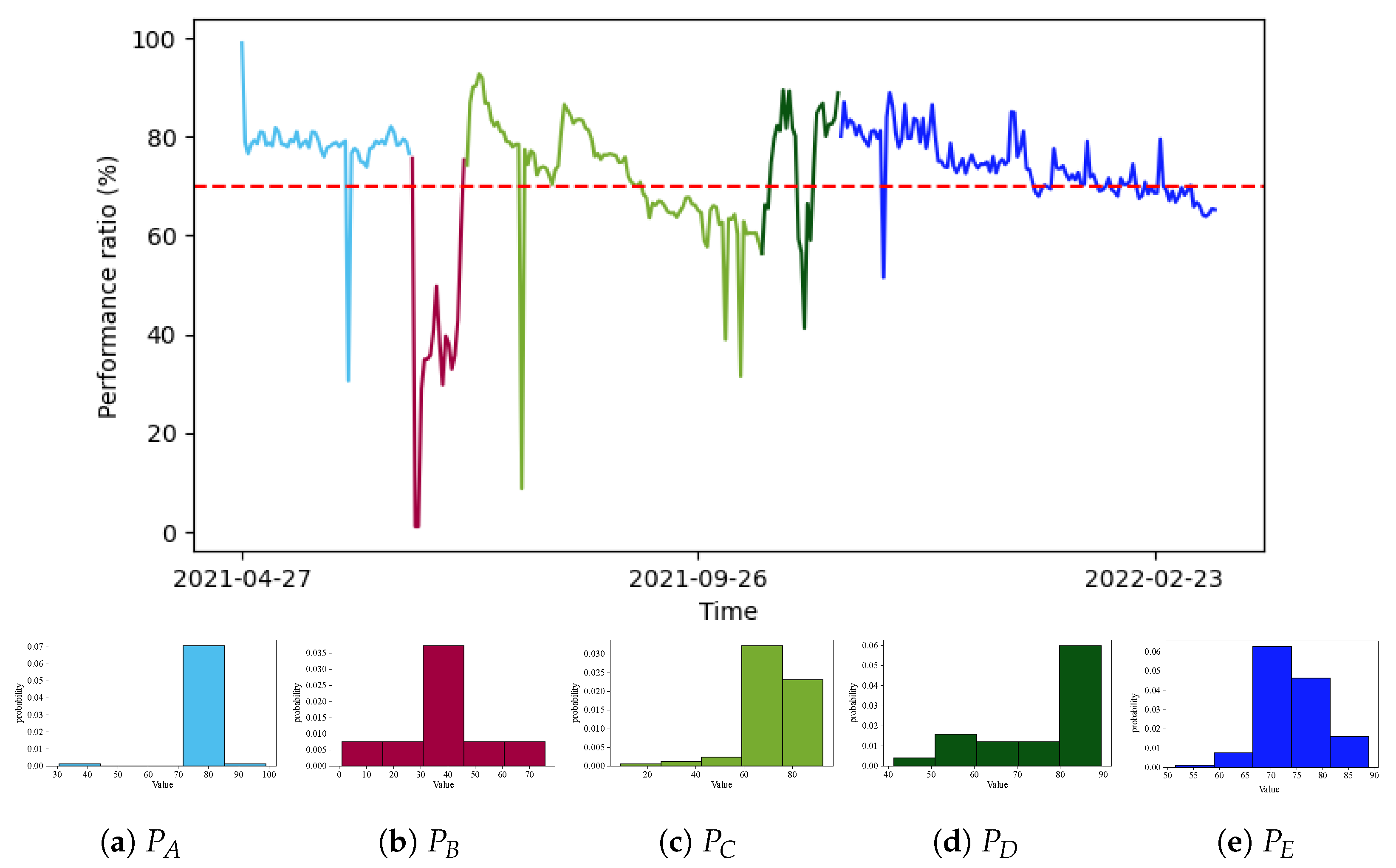

Figure 8 illustrates an example of PV generation with multiple change points in the data [82], where, due to the uncertainty of the factors, the output is void of any discernible trend or seasonality. Figure 8a–e illustrate the different probability masses of the intervals identified in Figure 8 and indicate distribution changes across intervals, such that models trained on these data tend to overfit the multiple change points yet generalize poorly to new samples with a different profile, as illustrated in Table 3.

The presence of a confounded mechanism in PSA operation is an important source of bias in ML models for PM. This applies when the factors that explain the faults in the target variable $Y$ are not captured in the training data, leading to spurious functional dependence assumptions by ML models, i.e., $X \perp\!\!\!\perp Y \mid Z$ (where $\perp\!\!\!\perp$ denotes statistical independence). Here, $Z$ is the confounding variable and explains away $X$ and $Y$; thus, any analysis without it will lead to biased outcomes. This relates to representation, sampling, and algorithmic bias presented in Table 1. In practical settings, the major reason for this bias is the complexity of EPES, such that the root causes of the faults are hidden or unobserved. Therefore, a wrong set of features is utilized for fault detection. For example, holidays and extreme weather conditions over a period of time can act as multiple causes of abnormal power consumption. Similarly, misrepresenting the effect of the causes on the target, i.e., as temporal or static, will lead to biased outcomes. Further, when fault mechanisms follow the temporal causal model, such that the causes of faults occur prior to the faults and predict the faults effectively, the resolution of the monitoring devices can introduce bias in PM. This is a direct consequence of the need to downsample the standard Phasor Measurement Units (PMUs) and Supervisory Control and Data Acquisition (SCADA) measurements in

s to 15 min to 1 h intervals to aid management and storage. The resulting downsampled observations could fail to include the exact time steps that explain faults. In this case, the observations that cause a fault are not captured in the data because of the sampling rate. Therefore, the data will exhibit loose temporal dependence for PM.
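The effect of downsampling on short fault precursors can be illustrated with a synthetic signal. The 1 s base resolution, the 5 s transient, and the two downsampling rules are assumptions chosen for the example and do not describe a particular PMU or SCADA configuration.

```python
def downsample_mean(series, factor):
    """Average consecutive blocks of `factor` samples (partial tail dropped)."""
    return [sum(series[i:i + factor]) / factor
            for i in range(0, len(series) - factor + 1, factor)]

def downsample_pick(series, factor):
    """Keep one sample out of every `factor` samples."""
    return series[::factor]

# One hour of synthetic data at 1 s resolution: nominal level 1.0
# with a 5 s transient that stands in for a fault precursor.
signal = [1.0] * 3600
for t in range(1000, 1005):
    signal[t] = 5.0

coarse_mean = downsample_mean(signal, 900)  # 15 min averages
coarse_pick = downsample_pick(signal, 900)  # one reading every 15 min
```

Averaging dilutes the transient to about 2% above the nominal level, and picking single samples misses it entirely, so a model trained on either coarse series never sees the event that preceded the fault.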

6.2. Effects of Bias in Predictive Maintenance

Applying ML models to data affected by the biases enumerated in Section 6.1 can lead to different sub-optimal solutions for PM, even for complex and large models. In Table 3, the performance of a gated recurrent unit (GRU) [83], long short-term memory (LSTM) [84], a multi-layer perceptron (MLP) [85], and a temporal convolutional network (TCN) [86] trained on a real-world dataset collected from a PV plant [53] with input lags of (1, 2, 3) × 7-day sequences are compared for PM. The configurations of each model were obtained through hyperparameter search and are described in Table 4. The drop-out rate was fixed at

for all the models, and the embedding layer of the TCN was made up of dilated convolution layers.

The values in Table 3 are averaged over 10 independent runs to forecast the next 7 days in each case. The three metrics used to quantify the reliability of the results account for the spread of errors (the root mean square error (RMSE)), binary classification accuracy (the Matthews correlation coefficient ($M_{\mathrm{corr}}$)) [87], and linear relationship strength (the Pearson correlation coefficient ($P_{\mathrm{corr}}$)) between forecasts and ground truth. Model performance is quantitatively assessed through low RMSE values, representing the standard deviation of prediction errors; however, the RMSE alone is insufficient for the PM task, which incorporates a decision threshold (indicated by the red dotted line in Figure 8). The $M_{\mathrm{corr}}$ metric, bounded between $[-1, 1]$, provides a balanced measure of binary classification performance even with class imbalance: a value of $+1$ indicates perfect prediction of the performance ratio relative to the fault threshold, $-1$ signifies complete misclassification (all predictions inverted), and 0 represents performance equivalent to random chance. Complementarily, $P_{\mathrm{corr}}$, also bounded in $[-1, 1]$, quantifies the linear correlation between forecast and ground truth time series, with $+1$ indicating perfect positive correlation, $-1$ perfect negative correlation, and 0 no linear relationship. More formally, the metrics are defined as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2},$$

$$M_{\mathrm{corr}} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}},$$

$$P_{\mathrm{corr}} = \frac{\sum_{i=1}^{N}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{N}\left(y_i - \bar{y}\right)^2}\,\sqrt{\sum_{i=1}^{N}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}},$$

where $y_i$ denotes the actual observed value in the time step $i$, $\hat{y}_i$ represents the corresponding model prediction, $\bar{y}$ and $\bar{\hat{y}}$ are the arithmetic means of ground truth and predictions, respectively, over all $N$ samples, and $N$ is the total number of samples in the evaluation set. In the Matthews correlation coefficient formula, TP (true positive), TN (true negative), FP (false positive), and FN (false negative) represent the confusion matrix elements for binary classification of fault states, derived by applying the threshold to both predictions and ground truth values. From the results, it is worth noting that even with varying input sequences per model, none of the models achieved consistent performance across all metrics. Therefore, it becomes apparent that ML models struggle to attain optimum parameter settings in $\theta$ to extract all the critical patterns from biased training sets that will result in low error on unseen sets of inputs, while being sensitive enough to timely and abrupt changes in the data.
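The two correlation metrics can be sketched as follows. The sketch assumes that values below the threshold are labelled as faults (the positive class) and returns 0 when a denominator vanishes, which is a common convention but an assumption here; the sample values are invented.

```python
import math

def matthews_corr(y_true, y_pred, threshold):
    """MCC after labelling values below `threshold` as faults (positive)."""
    tp = tn = fp = fn = 0
    for y, y_hat in zip(y_true, y_pred):
        actual, predicted = y < threshold, y_hat < threshold
        if actual and predicted:
            tp += 1
        elif not actual and not predicted:
            tn += 1
        elif predicted:
            fp += 1
        else:
            fn += 1
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0

def pearson_corr(y_true, y_pred):
    """Pearson correlation between ground truth and forecasts."""
    n = len(y_true)
    mean_t, mean_p = sum(y_true) / n, sum(y_pred) / n
    cov = sum((a - mean_t) * (b - mean_p) for a, b in zip(y_true, y_pred))
    var_t = sum((a - mean_t) ** 2 for a in y_true)
    var_p = sum((b - mean_p) ** 2 for b in y_pred)
    denom = math.sqrt(var_t * var_p)
    return cov / denom if denom else 0.0

# Hypothetical performance ratios and forecasts with a 0.75 fault threshold.
y_true = [0.90, 0.80, 0.60, 0.50]
y_pred = [0.85, 0.70, 0.65, 0.55]
mcc = matthews_corr(y_true, y_pred, threshold=0.75)
pcc = pearson_corr(y_true, y_pred)
```

In this example the forecasts track the ground truth closely, yet one value falls on the wrong side of the threshold, so the Pearson coefficient stays high while the Matthews coefficient drops noticeably.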

6.3. Implications of AI Bias in Predictive Maintenance

AI bias in predictive maintenance for power system assets can severely impact the reliability and efficiency of grid operations. For instance, inaccurate failure predictions caused by bias may result in missed breakdowns or unnecessary maintenance, increasing downtime and unplanned repairs, which drive up operational costs. Additionally, biased models can misallocate maintenance efforts, focusing excessively on low-risk assets while neglecting high-risk ones, leading to inefficiencies in labor and financial resources and undermining the predictive maintenance strategy’s overall effectiveness.

Bias in ML models can also result in unequal service quality, where certain assets or regions receive disproportionate attention due to skewed data. This imbalance increases the likelihood of asset failures in underserved areas, potentially disrupting operations and customer service. Incorrect predictions can lead to avoidable shutdowns or critical system failures that go unnoticed, amplifying the operational risks.

6.4. Mitigating Bias Related to AI Methods for Predictive Maintenance

From the discussed sources of bias in PM, it is apparent that only marginal bias reduction is possible after data collection. Similarly, the unique configuration of each monitored PSA makes common effective approaches in computer vision and language modeling, such as data augmentation, noise addition, and transfer learning, problematic [88]. Therefore, to mitigate bias, reformulating model architectures is imperative. Such formulations systematically introduce additional parameters and structures to make models robust for PM by reducing the generalization error. Generally, they take the form of regularization and interpretability of the ML models. Four possible approaches for mitigating AI bias for PM are discussed below.

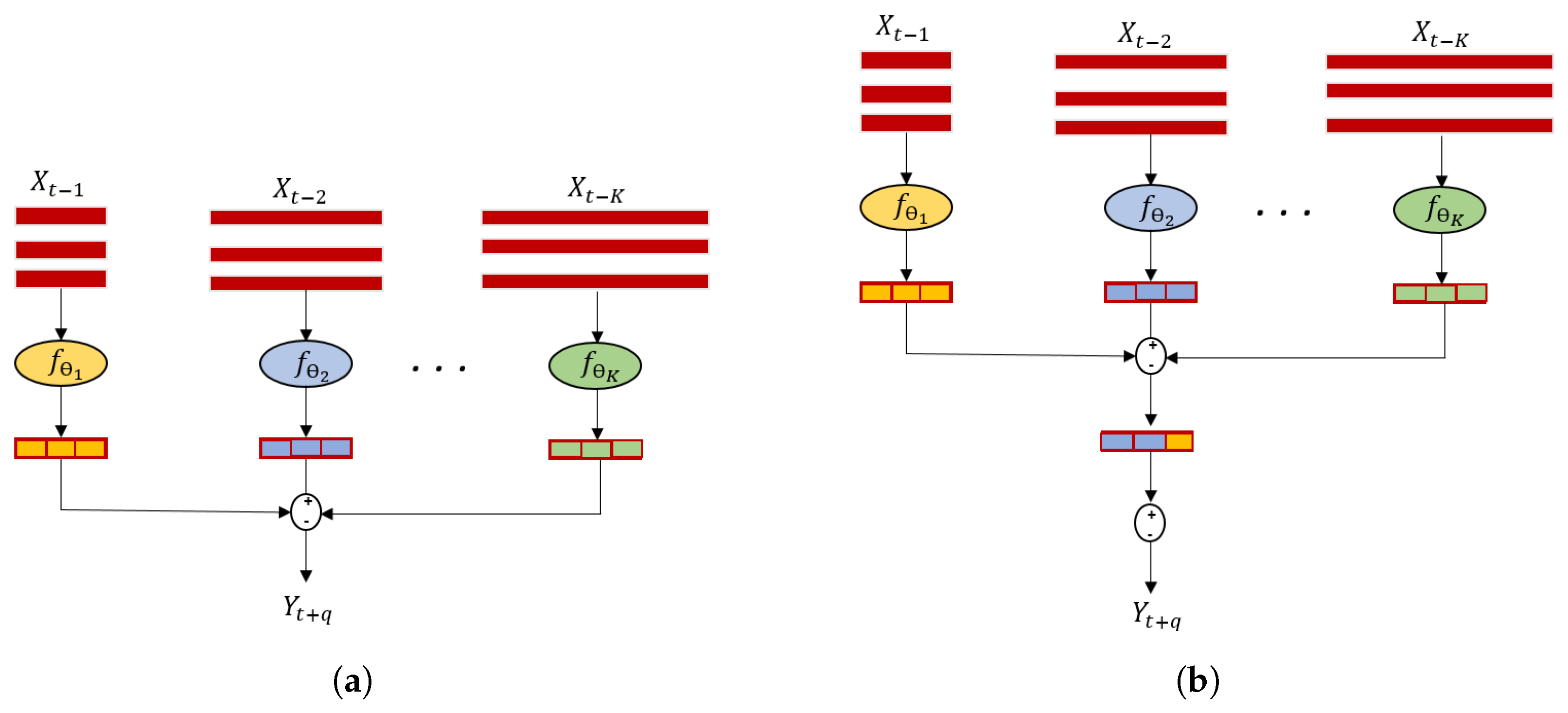

Ensembles of ML models combine the outputs of multiple models to improve model performance. The expected improvement is based on the premise that the models will not make the same error $\epsilon$ on the data, and ensembles have been used in many power-related applications to boost performance [54,55,89]. For instance, for an ensemble of $K$ models with individual errors $\epsilon_1, \dots, \epsilon_K$, the resultant error is the mean of the individual model errors, $\frac{1}{K}\sum_{k=1}^{K}\epsilon_k$. This premise may be fulfilled when models in the ensemble encode different information from the inputs due to the stochastic training process. In other cases, it is not; hence, extra considerations are implemented for effective combination [90].

Varying model input localization can support bias reduction from the uncertainty in the data-generating process. In the simplest case, different time lags ($p$) are used in the input sequence for each model in the ensemble to predict the same target; see Figure 9a. The outputs are then combined as the predictions. With input localization, models can capture patterns when there are significant temporal fluctuations across the samples. Varying input localization is also effective because it reduces the covariance of the errors of the individual models. More precisely, consider the expectation of the squared error:

$$\mathbb{E}\left[\left(\frac{1}{K}\sum_{k=1}^{K}\epsilon_k\right)^2\right] = \frac{1}{K}\,v + \frac{K-1}{K}\,c, \tag{6}$$

where $v$ represents the variance and $c$ represents the covariance of the (zero-mean) errors $\epsilon_k$. When the errors of the ensemble are uncorrelated, $c = 0$, leaving only the first term in Equation (6). Therefore, the desired objective is to make the errors independent, and varying model input localization is one way to implicitly drive the model training toward this objective. Different variants of the combination layer in Figure 9a can be explored to combine the patterns extracted by the individual models. In particular, a mixture of experts advances performance via an advanced gating system which propagates only the most relevant patterns [56,57].
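The expected squared error of an averaged ensemble can be checked numerically. The sketch below assumes zero-mean errors with a common variance $v$ and a common pairwise covariance $c$; it sums the error covariance matrix directly and compares the result with the closed form $v/K + (K-1)c/K$.

```python
def ensemble_expected_sq_error(k, v, c):
    """E[(mean of k errors)^2] from the k x k error covariance matrix."""
    cov = [[v if i == j else c for j in range(k)] for i in range(k)]
    return sum(sum(row) for row in cov) / k ** 2

def closed_form(k, v, c):
    """Closed-form expression v/k + (k - 1)/k * c."""
    return v / k + (k - 1) / k * c

uncorrelated = ensemble_expected_sq_error(5, v=1.0, c=0.0)       # 1/5 of v
partly_correlated = ensemble_expected_sq_error(5, v=1.0, c=0.5)
fully_correlated = ensemble_expected_sq_error(5, v=1.0, c=1.0)   # no gain
```

With uncorrelated errors the expected squared error shrinks in proportion to the ensemble size, whereas perfectly correlated errors leave it unchanged, which is why reducing the error covariance is the practical objective.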

Application of explainable ML models has increasingly been suggested for tasks that affect PSAs. For PM, this goes even further by providing interpretations of the models’ decisions about faults, which improves trust and usability. In particular, for ensemble modeling, optimization may indiscriminately give high relevance to certain models, while end-users obtain just the forecasts without additional information. Figure 9b illustrates a possible solution that adds extra interpretable structures to an ensemble to highlight the relevance of the hidden embedding for the predictions. Such structures are referred to as routing networks augmented with attention mechanisms to weight the influence of the embeddings of each model on the prediction. The routing network can facilitate specialization (to model and input intervals) with sparse (binary) attention [58,59] or apply soft attention to dynamically weight each model embedding to improve the representational capacity [60]. This will in turn help in determining which models are biased based on the output vis-à-vis input localization.
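The soft attention variant can be sketched as a softmax-weighted combination of model embeddings. In a real routing network the relevance scores would be learned; here they are fixed, hypothetical numbers, and the function names are assumptions of this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_attention_combine(embeddings, scores):
    """Weight each model embedding by its attention weight and sum them."""
    weights = softmax(scores)
    dim = len(embeddings[0])
    combined = [sum(w * emb[d] for w, emb in zip(weights, embeddings))
                for d in range(dim)]
    return combined, weights

# Hypothetical embeddings from three ensemble members and their scores.
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
scores = [2.0, 0.0, 0.0]
combined, weights = soft_attention_combine(embeddings, scores)
```

The attention weights can be reported alongside the forecast, giving operators a direct indication of which ensemble member dominated a given prediction.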

Favoring ML models based on causal frameworks such as factor modeling and other architectures that account for the effect of confounded inputs would reduce the effect of confounded mechanisms in data. On the one hand, a cause–effect relationship, $X \rightarrow Y$, would model the observations as the cause of the faults (effect) in $Y$, while following the required criteria for causal sufficiency and faithfulness. On the other hand, the confounded relationship model, $X \leftarrow Z \rightarrow Y$, could take into account hidden causes. These setups can aid in root cause diagnosis and fault location, all from observational data [61,62].
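A small simulation illustrates why an unobserved common cause matters. The linear Gaussian data-generating process, the sample size, and the use of regression residuals as a stand-in for conditioning on $Z$ are assumptions made for this sketch.

```python
import random

def pearson(a, b):
    """Pearson correlation of two equally long sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5

def residuals(values, regressor):
    """Residuals of a least-squares line fit of `values` on `regressor`."""
    n = len(values)
    mv, mr = sum(values) / n, sum(regressor) / n
    slope = (sum((r - mr) * (v - mv) for v, r in zip(values, regressor))
             / sum((r - mr) ** 2 for r in regressor))
    return [(v - mv) - slope * (r - mr) for v, r in zip(values, regressor)]

rng = random.Random(0)                      # fixed seed for repeatability
z = [rng.gauss(0, 1) for _ in range(5000)]  # hidden common cause
x = [zi + rng.gauss(0, 1) for zi in z]      # observed input, driven by z
y = [zi + rng.gauss(0, 1) for zi in z]      # target, also driven by z

marginal = pearson(x, y)                              # ignores z
partial = pearson(residuals(x, z), residuals(y, z))   # accounts for z
```

The observed input and the target appear clearly correlated when the common cause is ignored, but the association essentially vanishes once it is accounted for, so a model trained on the input alone would rely on a spurious dependence.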

While predictive maintenance challenges center on data scarcity and imbalance, our final use case, schema matching, presents a different class of bias problems rooted in semantic heterogeneity and class imbalance. This progression from temporal to classification to semantic challenges illustrates the diverse ways bias manifests across the power systems AI landscape.

7. AI Bias and Mitigation Strategies in Schema Matching in Energy Domain

This section examines AI bias in schema matching for energy-domain interoperability. The analysis addresses four interconnected dimensions: (1) the challenge of semantic heterogeneity in energy data systems; (2) common biases affecting schema matching models and their sources; (3) impacts of these biases on data integration and system interoperability; (4) effective strategies for reducing bias in schema matching applications.

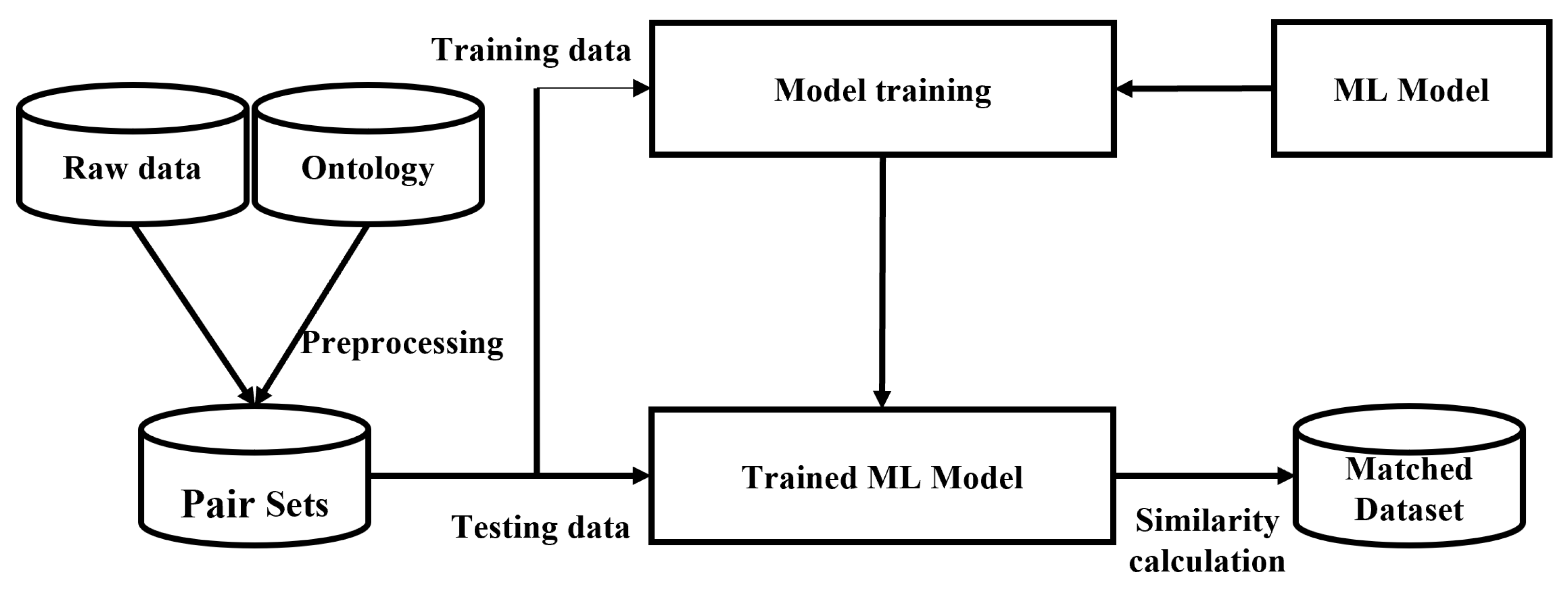

With the development of information and communication technologies in the energy domain, a huge amount of disparate data has become available, and managing heterogeneity among the various data resources has become challenging. For example, the Open Energy Platform [91] provides open data related to the energy domain. Schema matching refers to the mapping of data to an ontology in order to resolve semantic heterogeneity. Ontologies describe domain terms and specify their meanings. A common way to perform schema matching is based on semantic similarity, which can be formally defined as a function, $\mathrm{sim}: S_1 \times S_2 \rightarrow [0, 1]$, where $S_1$ and $S_2$ are sets of entities from two different data schemas, and the output represents the degree of semantic correspondence between them. As illustrated in
Figure 10, the raw data and ontology are paired together as input for model training and prediction. The general preprocessing for energy-domain datasets consists of symbol handling, case normalization, and stemming. The data may contain special symbols, which need to be removed or replaced (e.g., changing Building_energy to Building energy). Additionally, all words should be converted to lowercase. Stemming reduces each word to its root, that is, its basic form without verb tense or plural endings. The training data contains labels that indicate whether the raw data matches the ontology entity. Most energy-domain ontologies (e.g., [92, 93, 94]) contain more entities than the raw data. Therefore, the pair sets contain many unmatched pairs. This leads to a class imbalance problem characterized by a skewed distribution ratio, $\rho = N_{\mathrm{maj}} / N_{\mathrm{min}}$, where $N_{\mathrm{maj}}$ is the number of samples in the majority class (unmatched pairs) and $N_{\mathrm{min}}$ is the number of samples in the minority class (matched pairs). When $\rho \gg 1$, the classifier’s decision boundary becomes biased toward the majority class, resulting in statistically suboptimal classification performance for the minority class despite potentially high overall accuracy.
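The preprocessing, pairing, and imbalance ratio described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column names, ontology entities, and gold matches are hypothetical, `crude_stem` is a deliberately simple stand-in for a real stemmer, and token-set Jaccard overlap is just one possible choice of similarity function, not necessarily the one used in the cited work.

```python
import re
from itertools import product

def crude_stem(word: str) -> str:
    """Very rough suffix stripper; a stand-in for a real stemmer (assumption)."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(label: str) -> list[str]:
    """Apply symbol handling, lowercasing, and stemming, in that order."""
    cleaned = re.sub(r"[^A-Za-z0-9]+", " ", label)  # Building_energy -> Building energy
    return [crude_stem(tok) for tok in cleaned.lower().split()]

def jaccard(a: list[str], b: list[str]) -> float:
    """Token-set overlap in [0, 1]; one simple similarity function (assumption)."""
    sa, sb = set(a), set(b)
    return len(sa & sb) / len(sa | sb) if sa | sb else 0.0

# Hypothetical raw column names and ontology entities (not taken from the paper).
raw_columns = ["Building_energy", "Wind_Turbines"]
ontology_entities = ["building energy", "wind turbine", "solar panel",
                     "heat pump", "power plant"]
gold_matches = {("Building_energy", "building energy"),
                ("Wind_Turbines", "wind turbine")}

# Every raw column is paired with every ontology entity; most pairs are unmatched.
pairs = list(product(raw_columns, ontology_entities))
labels = [1 if pair in gold_matches else 0 for pair in pairs]

n_min = sum(labels)            # matched pairs (minority class)
n_maj = len(labels) - n_min    # unmatched pairs (majority class)
imbalance_ratio = n_maj / n_min

for (raw, entity), y in zip(pairs, labels):
    score = jaccard(preprocess(raw), preprocess(entity))
    print(f"{raw!r:20} {entity!r:20} sim={score:.2f} label={y}")
print(f"imbalance ratio = {n_maj}/{n_min} = {imbalance_ratio:.1f}")
```

Even in this toy setting, each raw column matches only one of five ontology entities, so unmatched pairs already outnumber matched ones four to one. The ratio grows with the size of the ontology.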

7.1. Bias Related to AI Methods for Schema Matching

In the context of schema matching, human bias can significantly affect the quality of matching outcomes. When humans are involved in matching tasks, their cognitive biases may lead to suboptimal decisions, because individuals might rely too heavily on certain types of information, such as attribute names or data types, while neglecting other crucial aspects. Data bias is another major source of bias. More specifically, sampling bias in schema matching is unavoidable because the majority of the pairs are unmatched. This leads to models that perform well on unmatched pairs but poorly on matched ones. In [63], a total of 49,815 pairs were generated, of which 103 pairs were correctly matched, while 49,712 pairs did not match, yielding an imbalance ratio $\rho = 49{,}712 / 103 \approx 483$. This exemplifies a severe class imbalance problem where the minority class (matched pairs) represented only approximately 0.2% of the total dataset. When quantifying classifier performance in such scenarios, standard accuracy metrics become misleading; a naive classifier that predicts “unmatched” for all samples would achieve 99.8% accuracy despite failing entirely on the minority class. Alternative evaluation metrics such as precision ($\mathrm{TP} / (\mathrm{TP} + \mathrm{FP})$), recall ($\mathrm{TP} / (\mathrm{TP} + \mathrm{FN})$), F1-score ($2 \cdot \mathrm{precision} \cdot \mathrm{recall} / (\mathrm{precision} + \mathrm{recall})$), and the area under the precision–recall curve (AUPRC), with TP, FP, and FN denoting true positives, false positives, and false negatives, provide more meaningful assessments of classification performance on imbalanced datasets.
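The gap between accuracy and minority-class metrics can be checked directly with the pair counts reported for [63]. The sketch below is illustrative only: the helper names are hypothetical, and reporting an undefined precision (no positive predictions) as 0.0 is a convention chosen here, not something stated in the source.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for binary labels, where 1 = matched pair."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn

def safe_div(numerator, denominator):
    """Report 0.0 when a metric is undefined (convention assumed here)."""
    return numerator / denominator if denominator else 0.0

def metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }

# Pair counts reported for [63]: 103 matched, 49,712 unmatched.
N_MATCHED, N_UNMATCHED = 103, 49_712
y_true = [1] * N_MATCHED + [0] * N_UNMATCHED

# Naive classifier: predicts "unmatched" (0) for every pair.
y_naive = [0] * len(y_true)
naive = metrics(y_true, y_naive)

print(f"imbalance ratio: {N_UNMATCHED / N_MATCHED:.1f}")
for name, value in naive.items():
    print(f"{name:9s}: {value:.4f}")
```

The naive classifier reaches an accuracy of about 0.998 while its recall and F1-score on matched pairs are both zero, which is why the minority-class metrics are the more informative ones here.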

7.2. Implications of AI Bias in Schema Matching

Bias in ML models used for schema matching in the energy domain can significantly hinder data integration by causing misaligned or incomplete mappings. These issues disrupt the merging of disparate datasets, leading to inconsistencies, reduced data accuracy, and impaired interoperability essential for EPES operations. Such challenges impede the ability to effectively manage and utilize data from multiple sources, undermining the foundation of efficient energy management.

The consequences of biased schema matching extend to decision-making inefficiencies and systemic inequities. Errors in schema alignment can cascade through energy management systems, affecting automated decisions like grid optimization and energy distribution, while also generating flawed insights. Furthermore, biased models may disproportionately allocate resources, favoring specific regions, technologies, or demographics, thereby exacerbating inequities and creating disparities in energy access and pricing, particularly for marginalized communities.

Operational and trust-related challenges further exacerbate the impact of biased schema matching. Misaligned schemas often require costly human intervention to reconcile mismatches, slowing processes and increasing financial burdens on EPES operators. Moreover, visible errors or inconsistencies can seriously erode stakeholder confidence in AI-driven solutions, delaying adoption and innovation. In such scenarios, regulatory and compliance risks can also be significant, as inaccuracies caused by bias can result in non-compliance with industry standards, lead to legal penalties, and hinder progress toward sustainability goals such as renewable energy tracking.

7.3. Mitigating Bias in Schema Matching

A notable example is the “PoWareMatch” system, which integrates human decision-making with deep learning to improve the quality of schema matches [14]. This system acknowledges human cognitive biases and tries to mitigate them by using a quality-aware approach that filters and calibrates human matching decisions. The aim is to combine human insights with algorithmic precision to enhance the overall reliability and accuracy of schema matching processes.

In [63], only random oversampling was chosen to address the class imbalance problem. The minority class is randomly replicated until it is balanced with the majority class. Another random sampling-based approach is random undersampling, which randomly discards samples from the majority class to achieve balance. However, undersampling may discard useful information and result in underfitting, whereas oversampling may result in overfitting on the replicated samples. The oversampling approach in this paper improved the prediction accuracy from

to

. Other approaches, such as cluster-based sampling, informed undersampling, and synthetic sampling with data generation, can be used to mitigate the imbalanced data problem [64].
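The two random resampling strategies can be sketched with the Python standard library. This is a minimal illustration, not the implementation used in [63]: the function names and the toy data are hypothetical, and the code assumes binary labels where 1 (matched) is the non-empty minority class.

```python
import random

def split_by_class(samples, labels):
    """Separate matched (label 1) from unmatched (label 0) samples."""
    matched = [s for s, y in zip(samples, labels) if y == 1]
    unmatched = [s for s, y in zip(samples, labels) if y == 0]
    return matched, unmatched

def random_oversample(samples, labels, seed=0):
    """Replicate minority samples at random until both classes are equal in size."""
    rng = random.Random(seed)
    matched, unmatched = split_by_class(samples, labels)
    extra = rng.choices(matched, k=len(unmatched) - len(matched))
    data = [(s, 1) for s in matched + extra] + [(s, 0) for s in unmatched]
    rng.shuffle(data)
    return data

def random_undersample(samples, labels, seed=0):
    """Keep a random subset of majority samples equal in size to the minority."""
    rng = random.Random(seed)
    matched, unmatched = split_by_class(samples, labels)
    kept = rng.sample(unmatched, k=len(matched))
    data = [(s, 1) for s in matched] + [(s, 0) for s in kept]
    rng.shuffle(data)
    return data

# Hypothetical toy data: 2 matched pairs and 48 unmatched pairs.
samples = [f"pair_{i}" for i in range(50)]
labels = [1] * 2 + [0] * 48

over = random_oversample(samples, labels)
under = random_undersample(samples, labels)

print("oversampled :", len(over), "samples,", sum(y for _, y in over), "matched")
print("undersampled:", len(under), "samples,", sum(y for _, y in under), "matched")
```

Oversampling keeps all 48 unmatched pairs and repeats the 2 matched pairs many times, which illustrates the overfitting risk. Undersampling keeps only 4 pairs in total, which illustrates the information loss. In practice, resampling would normally be applied to the training split only, so that evaluation still reflects the original class distribution.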

8. Conclusions

This paper provides a systematic analysis of AI bias in the power systems domain through three representative use cases: load forecasting, predictive maintenance, and ontology matching for system interoperability. The investigation demonstrates how AI bias manifests in different operational contexts, affecting not only technical performance but also fairness, reliability, and socioeconomic equity in energy distribution and management. The domain-specific analysis framework developed in this paper bridges the gap between abstract bias concepts and practical power system applications, offering a structured approach for bias identification that can be extended to other energy applications.

The proposed taxonomy of bias types relevant to power systems provides a foundation for future research and standardization efforts in the field. The power-specific mitigation strategies presented—emphasizing diverse data collection, transparent algorithm design, and cross-disciplinary stakeholder engagement—offer practical pathways for developing fair and equitable AI solutions that align with the technical and ethical requirements of modern power systems.

Despite the significant advances in bias mitigation represented in this work, challenges remain in achieving comprehensive solutions. Data limitations, algorithm design trade-offs, and evaluation framework inadequacies continue to present obstacles, particularly in resource-constrained environments and evolving grid configurations. Technical integration barriers, stakeholder alignment difficulties, and regional variability further complicate the widespread adoption of bias-aware AI systems in diverse power system contexts.

The path forward requires coordinated efforts among researchers, practitioners, and policymakers to establish robust frameworks for AI bias detection and mitigation. These frameworks must integrate rigorous statistical methodologies for quantifying bias, including formal distributional divergence metrics, hypothesis testing procedures, and causal inference techniques. By building on the structured approach and domain-specific insights presented in this paper, the EPES community can accelerate the responsible adoption of AI technologies, advancing both technical innovation and social responsibility without compromising system security and economic objectives. This work represents an important step toward realizing AI’s full potential in transforming power systems for a more efficient, reliable, and equitable energy future.