1. Introduction

The stable operation of key substation equipment forms the core foundation for ensuring the safe and reliable power supply of the electrical grid. These critical components perform essential functions in power conversion, distribution, and protection, with their operational status directly impacting the stability of the entire power system [1]. However, substation equipment is persistently exposed to complex environments involving electromagnetic interference, temperature and humidity fluctuations, and mechanical stress, making it prone to defects and failures such as aging, loosening, corrosion, flashover, and overheating [2]. Traditional manual inspections, relying on periodic on-site checks by maintenance personnel, are not only inefficient and costly but are also limited by human visual recognition capabilities and environmental conditions. This makes comprehensive, timely, and accurate defect detection challenging, particularly in large substations or during severe weather events [3]. Therefore, leveraging intelligent technologies to achieve automated and high-precision condition monitoring and defect identification for critical substation equipment is of great practical significance for enhancing grid operation and maintenance efficiency and ensuring power supply security.

With the advancement of computer vision technology, intelligent inspection based on visible-light images has become a crucial means for monitoring the condition of substation equipment. Object detection technology, which is capable of automatically identifying equipment in images and locating their regions, serves as a key technical component for achieving automated defect identification [4]. However, existing high-performance object detection models typically exhibit high computational complexity and large parameter sizes, making them difficult to run in real-time on edge computing devices deployed at substation sites. This limitation restricts their practical application efficacy in real inspection scenarios.

Deep learning-based object detection methods have driven significant progress in intelligent detection technology for critical substation equipment in recent years. Traditional detection methods rely on handcrafted features and classifiers (such as SVM [5] and AdaBoost [6]) to identify equipment. However, these approaches are usually designed for specific types of equipment or simple scenarios; when confronted with the complex environments of substations, their robustness and generalization capabilities are limited, often failing to meet high-precision detection requirements. As a data-driven technology, deep learning has become the mainstream solution in the field of object detection due to its powerful feature learning ability and end-to-end optimization framework [7]. Researchers have significantly improved detection performance by applying deep neural networks to substation equipment detection. Nevertheless, existing deep learning methods still face dual challenges when handling complex substation scenes. On the one hand, lightweight models are constrained by their capacity, often struggling to meet stringent inspection requirements for localization accuracy (especially for small and densely packed objects) while guaranteeing real-time performance. On the other hand, the diverse morphology, significant scale variations, and frequent occlusion of substation equipment render generic feature extraction and fusion mechanisms inefficient and insufficiently flexible when addressing such cross-scale and fine-grained localization problems. As a result, balancing model accuracy with inference speed remains persistently difficult.

To address these challenges, this paper proposes SD-FINE (where ‘S’ denotes ‘Substation’ and ‘D-FINE’ refers to our baseline detection method), a lightweight object detection technology specifically designed for critical substation equipment detection. Specifically, to overcome the insufficient localization accuracy of lightweight models, SD-FINE builds upon D-FINE [8] by designing a novel Fine-grained Distribution Refinement (FDR) approach. This transforms the fixed coordinate-point prediction process for bounding boxes in the conventional DETR framework [9] into an iterative refinement of the target position probability distribution. Unlike the predefined weighting function in D-FINE, SD-FINE utilizes intermediate features generated by decoder layers to learn weighting functions. This mechanism significantly enhances the model’s sensitivity to subtle positional variations of equipment, thereby substantially improving localization accuracy. Concurrently, to enhance the cross-scale feature fusion efficiency of lightweight models, this paper designs a novel Efficient Hybrid Encoder. This encoder provides adaptive sampling pathways for feature information at different scales during the fusion process, overcoming the limitations of traditional methods in cross-scale information interaction, such as insufficient flexibility and computational redundancy. It achieves more flexible and efficient lightweight feature extraction. Comprehensive experimental validation on a critical substation equipment detection dataset demonstrates that SD-FINE significantly enhances both the localization accuracy and inference speed for critical equipment while strictly maintaining model lightweightness, offering an efficient and reliable lightweight detection solution for intelligent substation inspection.

2. Related Research

2.1. Object Detection

Object detection, as a core task in computer vision, aims to identify bounding boxes of specific targets in images and determine their categories. Traditional methods often exhibit limitations in robustness and generalization capability under complex scenarios [10]. With the rise of deep learning, detection methods based on Convolutional Neural Networks (CNNs) rapidly became mainstream. Among these, two-stage methods represented by Faster R-CNN [11] first generate candidate regions before classifying each region and regressing bounding boxes. While achieving high accuracy, they suffer from substantial computational overhead and slower speeds. Single-stage methods, exemplified by the YOLO series [12], directly predict target class probabilities and bounding box coordinates densely on feature maps, significantly improving detection speed and achieving a better speed–accuracy balance. However, their post-processing typically relies on Non-Maximum Suppression (NMS) to remove redundant detection boxes. DETR [9] is the first framework to successfully employ a Transformer as the core building block for object detection. Its end-to-end design utilizes learnable object queries to interact with global image features, completely eliminating the need for NMS post-processing. However, DETR’s Transformer decoder incurs high computational costs and slow training convergence, partially offsetting the advantages gained from replacing NMS and limiting its widespread adoption in real-time or resource-constrained scenarios [13]. RT-DETR [14] was developed specifically to address these efficiency bottlenecks in DETR. It introduces an Efficient Hybrid Encoder to improve model speed through intra-scale feature interaction and cross-scale feature fusion. Nevertheless, the design of this Efficient Hybrid Encoder overlooks the intrinsic differences among features at different scales, resulting in insufficiently flexible feature extraction. Furthermore, RT-DETR’s bounding box regression employs a fixed coordinate point prediction mechanism. This approach demonstrates inadequate perception of subtle positional variations in targets within lightweight models, constraining its application potential on resource-constrained edge devices.
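For readers less familiar with the NMS post-processing step that DETR-style detectors avoid, the following is a minimal sketch of greedy NMS in plain Python. The function names `iou` and `nms`, the `(x1, y1, x2, y2)` box format, and the 0.5 IoU threshold are illustrative assumptions and are not taken from any of the cited implementations.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep a box only if it does not overlap a higher-scoring kept box."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two boxes overlap heavily.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)  # [0, 2]
```

Because every candidate is compared against the boxes already kept, the cost grows with the number of dense predictions, which is one reason NMS-free designs are attractive for edge deployment.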

2.2. Object Detection in Substations

The complex environment of substations, particularly the dense distribution and frequent overlapping of critical equipment within large substations, imposes higher demands on existing object detection algorithms. To address the characteristics of this specific scenario, Xu et al. [15] combined a Moving Object Region Extraction Network with a classification network to achieve the detection of foreign objects in substations. Ou et al. [16] improved the feature extraction network of Faster R-CNN to detect five types of electrical equipment in substation infrared images. While such Faster R-CNN-based substation object detection algorithms can identify target objects within substations, the practicality of two-stage methods remains significantly limited. Consequently, Wu et al. [17] proposed ISE-YOLO, designing a global–local fusion module based on the YOLO algorithm and enhancing the detection capability for small substation equipment by fusing coarse and fine-grained features. Tao et al. [18] improved YOLOv8 using GSConv and VoVGSCSP modules, creating a lightweight network named YOLOv8_Adv capable of detecting live equipment in substations. However, both ISE-YOLO and YOLOv8_Adv still rely on NMS post-processing, imposing higher demands on deployment hardware.

Therefore, many researchers have turned their attention to DETR. Among them, Ji et al. [19] proposed PFDAL-DETR, optimizing DETR’s convolutional kernels through a perception field dual attention mechanism. Li et al. [20], considering model lightweighting, replaced the backbone network with ResNet18, reducing computational complexity while enhancing feature interaction efficiency. However, the bounding box regression process in DETR often yields coarse localization results [21]. Furthermore, given the complex backgrounds of substations, the Efficient Hybrid Encoder in RT-DETR lacks flexibility during multi-scale feature fusion, resulting in less robustness.

In summary, while existing research on power grid equipment object detection has made progress, core challenges remain for lightweight models facing the complex substation environment. First, existing lightweight models localize targets through direct bounding box regression, which performs poorly for densely packed targets in substations and makes it difficult to meet high-precision inspection requirements. Second, the cross-scale feature fusion mechanisms designed for the multi-scale characteristics of substation equipment suffer from inefficiency and insufficient flexibility, constraining the simultaneous improvement of accuracy and speed in lightweight models. The SD-FINE technology proposed in this paper aims to specifically address these challenges.

3. Methodology

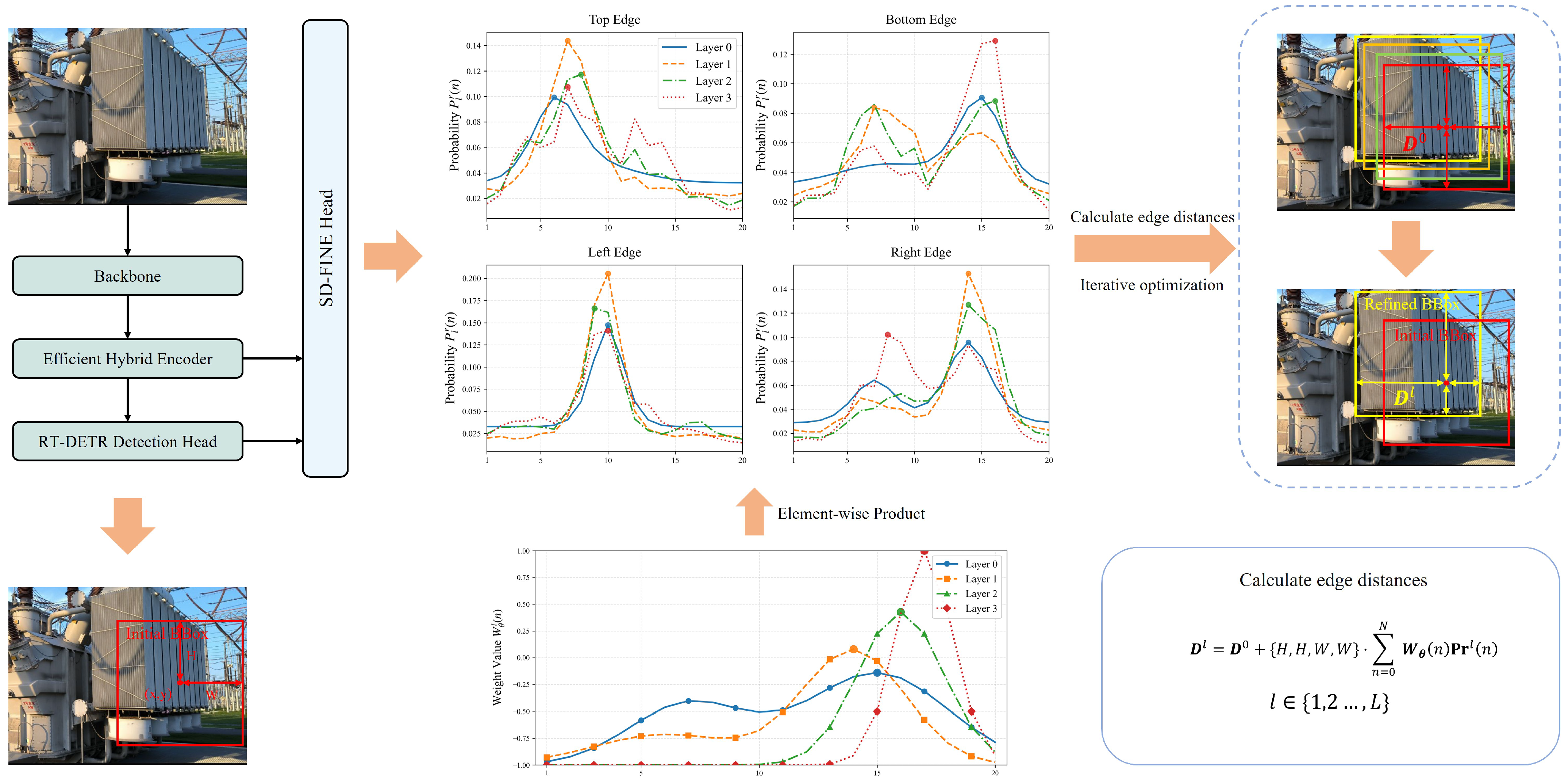

This section details the proposed lightweight object detection methodology for critical substation equipment. As illustrated in Figure 1, the input image is first processed by the Backbone to extract foundational visual features. The region features extracted by the Efficient Hybrid Encoder and the RT-DETR Detection Head are then fed into the SD-FINE Head. The SD-FINE Head is designed to achieve independent distribution representation predictions for the four bounding box sides. It comprises four branches, each consisting of multiple decoder layers, which perform more precise and progressive refinement at each layer. In Figure 1, the four probability distributions at the top represent examples of the independent distributions generated by different decoding layers for the four edges. The graph below shows an example of the weights produced by training the linear layer. The final edge distance is calculated based on these two components.

3.1. Efficient Hybrid Encoder

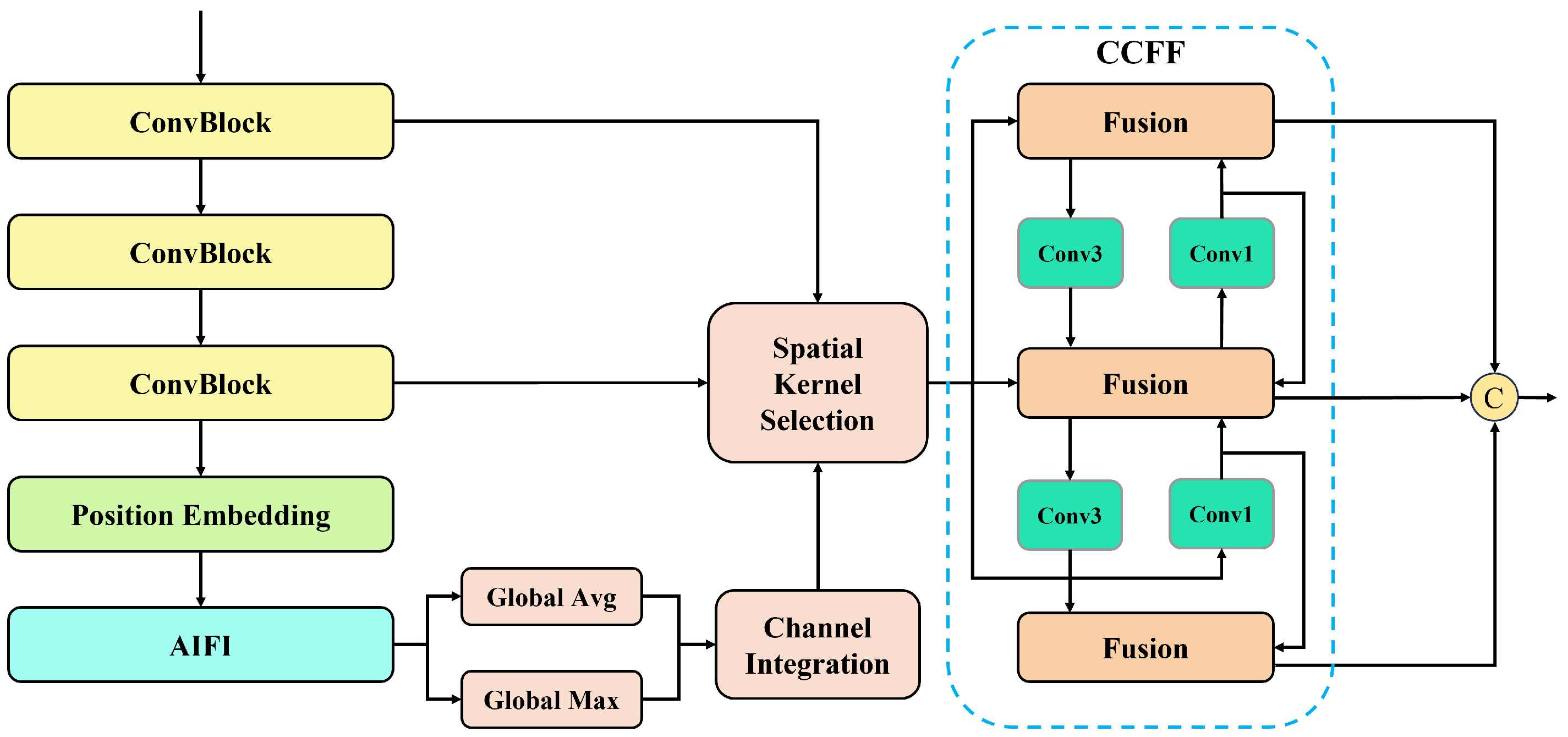

To flexibly extract substation equipment features across different scales, this paper designs a novel Efficient Hybrid Encoder, the structure of which is illustrated in Figure 2. The ConvBlock denotes downsampling operations via convolution to obtain features at different scales. Features at the largest scale undergo Attention-based Intra-scale Feature Interaction (AIFI) after Position Embedding, significantly reducing computational costs. The results from AIFI are processed through Global Average Pooling (Global Avg) and Global Max Pooling (Global Max) to capture spatial relationships between feature maps with different contextual information. This process can be expressed as

$$S = \sigma\left(\mathcal{F}^{1\times 1}\left(\left[\mathcal{P}_{avg}(A);\ \mathcal{P}_{max}(A)\right]\right)\right),$$

where $A$ denotes the result of AIFI, and $\mathcal{P}_{avg}$ and $\mathcal{P}_{max}$ represent the Global Avg and Global Max operations, respectively. $\mathcal{F}^{1\times 1}$ denotes a standard 1 × 1 convolution that preserves the channel dimension, and $\sigma$ is the sigmoid activation function. Specifically, $\mathcal{P}_{avg}$ and $\mathcal{P}_{max}$ obtain the aggregated spatial feature descriptor and the maximum spatial feature descriptor, respectively, and $[\cdot\,;\cdot]$ denotes their concatenation. To enable information interaction between the different spatial descriptors, the concatenated descriptor is processed through a 1 × 1 convolution followed by the sigmoid activation function, implementing Channel Integration. The result of Channel Integration, $S$, represents the spatial relationships between feature maps capturing substation contextual information.

Denoting the features output by the first ConvBlock and the third ConvBlock at two different scales as $X_1$ and $X_3$, respectively, the Spatial Kernel Selection procedure can be expressed as

$$\tilde{X}_i = S \otimes X_i, \quad i \in \{1, 3\},$$

where $\otimes$ denotes the element-wise product, $S$ is the result of Channel Integration, and $\tilde{X}_i$ represents the adaptively weighted multi-scale features. These features are then fed into the CNN-based cross-scale feature fusion (CCFF) module for cross-scale feature fusion. Within CCFF, the fusion module signifies information interaction within the channels of the feature maps. Conv1 and Conv3 represent 1 × 1 and 3 × 3 convolutions, respectively, using the SiLU activation function. The results from the three ‘Fusion’ modules are concatenated along the channel dimension to produce the final output.

Evidently, within the Efficient Hybrid Encoder process, the features at the highest scale undergo AIFI, ensuring model lightweightness. During cross-scale feature fusion, Spatial Kernel Selection effectively weights features at different scales based on spatial descriptors. This approach reduces learning redundancy and enhances model flexibility.
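The descriptor-and-gating idea described in this subsection can be illustrated with a small sketch in plain Python. It is only a toy under stated assumptions, not the encoder itself: pooling is assumed to run across the channel axis so that each descriptor is a spatial map, the gate and the feature being weighted are assumed to share one spatial size, and the helper names `channel_pool`, `conv1x1_sigmoid`, and `weight_feature` are hypothetical.

```python
import math
from typing import List

Map = List[List[float]]   # H x W spatial map
Feature = List[Map]       # C x H x W feature tensor


def channel_pool(x: Feature) -> List[Map]:
    """Average- and max-pool across channels, giving two H x W descriptors."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    avg = [[sum(x[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(x[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    return [avg, mx]  # concatenated descriptor: 2 maps


def conv1x1_sigmoid(desc: List[Map], weight: List[List[float]], bias: List[float]) -> List[Map]:
    """1 x 1 convolution (a per-pixel linear map over channels) followed by sigmoid."""
    h, w = len(desc[0]), len(desc[0][0])
    out: List[Map] = []
    for o, row in enumerate(weight):
        gate = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                z = bias[o] + sum(row[k] * desc[k][i][j] for k in range(len(desc)))
                gate[i][j] = 1.0 / (1.0 + math.exp(-z))
        out.append(gate)
    return out


def weight_feature(x: Feature, gate: Map) -> Feature:
    """Element-wise product of every channel of x with one spatial gate map."""
    return [[[v * g for v, g in zip(row, grow)] for row, grow in zip(ch, gate)] for ch in x]


# Toy AIFI output with 2 channels and a 1 x 2 spatial grid (hypothetical values).
a = [[[1.0, 2.0]], [[3.0, 0.0]]]
desc = channel_pool(a)
# Zero weights and biases make every gate value exactly 0.5, which is easy to check.
gates = conv1x1_sigmoid(desc, weight=[[0.0, 0.0], [0.0, 0.0]], bias=[0.0, 0.0])
weighted = weight_feature(a, gates[0])
print(desc, weighted)
```

In a trained model the 1 × 1 convolution weights would be learned, so the gate would emphasize different spatial positions for different inputs rather than scaling everything uniformly as in this toy case.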

3.2. Fine-Grained Distribution Refinement

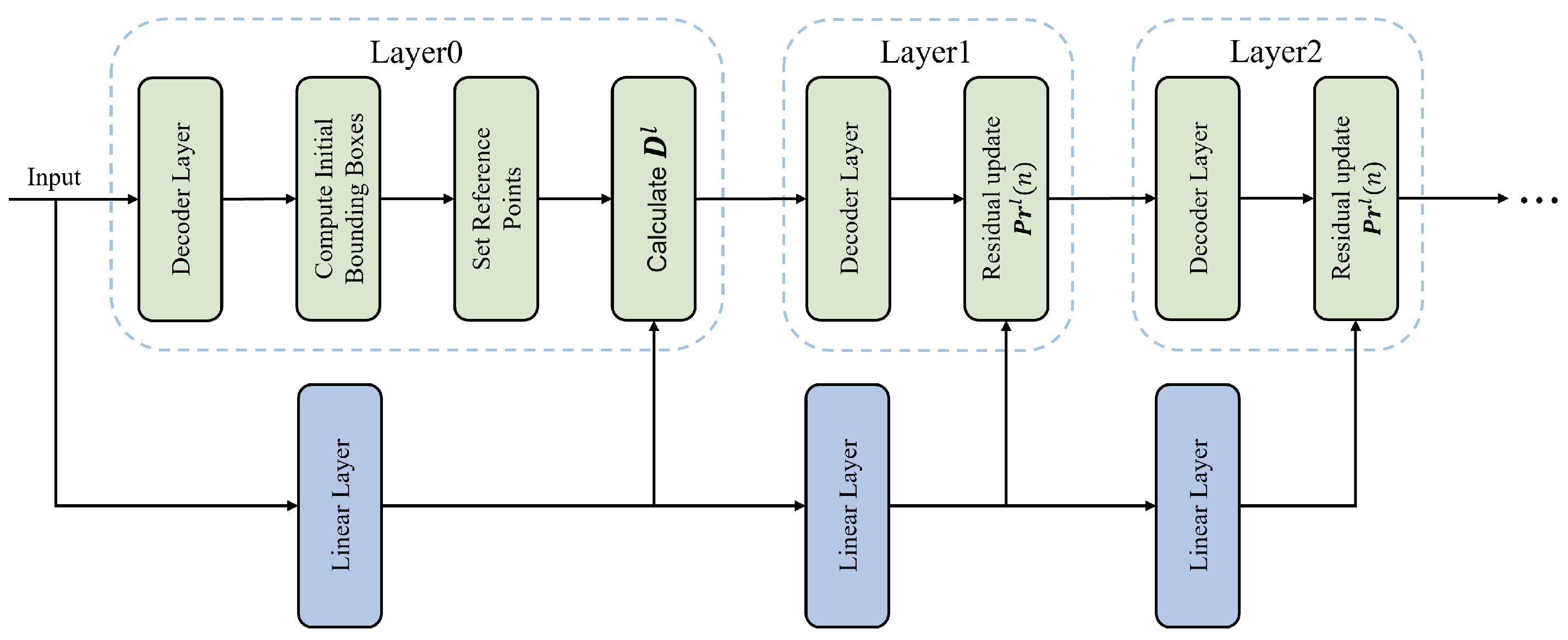

Traditional FDR requires determining four candidate values that are independently distributed, typically achieved through a weight function. Earlier methodologies utilized predefined piecewise linear functions for this weighting. Nevertheless, when encountering diverse target objects, varying contexts, or features from different levels, such a static functional form fails to accommodate the required offset distribution patterns. To overcome this constraint, we introduce a novel FDR methodology that adaptively learns weighting for the marginal probability distributions via a linear layer, and its structure is shown in Figure 3. Formally, the initial bounding box $\mathbf{b}^0 = \{x, y, W, H\}$ is parameterized by its center coordinates $\mathbf{c}^0 = \{x, y\}$ and its distances to the boundaries $\mathbf{d}^0 = \{t, b, l, r\}$ (representing top, bottom, left, and right distances from the center). For the output of the $l$-th decoder layer (with $l \in \{1, 2, \dots, L\}$), the refined boundary distances $\mathbf{d}^l = \{t^l, b^l, l^l, r^l\}$ are computed as follows:

$$\mathbf{d}^l = \mathbf{d}^0 + \{H, H, W, W\} \cdot \sum_{n=1}^{N} W^l(n)\, \mathbf{Pr}^l(n),$$

where $\mathbf{Pr}^l(n) = \{\Pr_t^l(n), \Pr_b^l(n), \Pr_l^l(n), \Pr_r^l(n)\}$ represents four independent distributions for the four edges, $W^l(n)$ is the weight function, and $n$ indexes the discrete $N$ bins, each corresponding to a potential edge offset. This process indicates that each distribution predicts the likelihood of candidate offset values for its corresponding edge, with these candidate values being weighted by the learnable weight function $W^l(n)$.
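To make the refinement step concrete, the following plain-Python sketch decodes refined edge distances from per-edge bin logits. It is a simplified illustration under assumptions: the scaling of the offsets by the initial box height and width follows the D-FINE formulation, and the function names, the three-bin weight vector, and all numeric values are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over one edge's bin logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def refine_distances(
    d0: Sequence[float],                   # initial (top, bottom, left, right) distances
    scales: Sequence[float],               # assumed per-edge scale, e.g. (H, H, W, W)
    edge_logits: Sequence[Sequence[float]],  # one logit vector of length N per edge
    bin_weights: Sequence[float],          # weight W(n) for each of the N bins
) -> List[float]:
    """Return d0 + scale * sum_n W(n) * Pr(n) for each of the four edges."""
    refined = []
    for dist, scale, logits in zip(d0, scales, edge_logits):
        probs = softmax(logits)
        offset = sum(w * p for w, p in zip(bin_weights, probs))
        refined.append(dist + scale * offset)
    return refined


# Hypothetical box of height 20 and width 40, with N = 3 bins.
d0 = [10.0, 10.0, 20.0, 20.0]
scales = [20.0, 20.0, 40.0, 40.0]
bin_weights = [-0.5, 0.0, 0.5]
# The top edge is confident about the last bin; the other edges are undecided.
edge_logits = [[0.0, 0.0, 50.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
refined = refine_distances(d0, scales, edge_logits, bin_weights)
print(refined)
```

With uniform logits the symmetric weights cancel and the edge stays where it was, while a distribution peaked on the last bin moves the top edge outward by roughly half the box height.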

Unlike the original predefined non-uniform step function, $W^l(n)$ in this work is learned dynamically through a lightweight linear layer. This weight function acts on the probability distribution $\mathbf{Pr}^l(n)$, with its values generated by the following formula:

$$\mathbf{W}^l = \mathrm{Linear}_{\theta}\left(\mathbf{f}^l\right),$$

where $\mathbf{f}^l$ is the intermediate feature vector produced by the $l$-th decoder layer, and $\mathrm{Linear}_{\theta}$ denotes a linear transformation layer parameterized by $\theta$. This linear layer outputs a vector $\mathbf{W}^l$ of dimensionality $N$, whose element $W^l(n)$ represents the learnable weight coefficient for the $n$-th bin. The learned weight coefficients $W^l(n)$ are multiplied with the corresponding bin probabilities $\mathbf{Pr}^l(n)$ and summed to compute the layer’s predicted adaptive edge offset. The update mechanism for the refined probability distribution $\mathbf{Pr}^l(n)$ remains unchanged, employing a residual adjustment strategy:

$$\mathbf{Pr}^l(n) = \mathrm{Softmax}\left(\mathrm{logits}^l(n)\right) = \mathrm{Softmax}\left(\Delta\mathrm{logits}^l(n) + \mathrm{logits}^{l-1}(n)\right),$$

where $\mathrm{logits}^{l-1}(n)$ carries the distribution confidence information from the previous layer, and $\Delta\mathrm{logits}^l(n)$ represents the residual logits predicted by the current layer. These are summed and normalized via Softmax to obtain the new distribution.

In summary, within our FDR process, the weights are dynamically generated directly from the current layer’s intermediate feature vector. This enables the model to adaptively adjust its emphasis on different offset bins according to the input image content, target characteristics, and the current optimization stage. Furthermore, the linear layer parameters are learned from data during training, eliminating the need for predefined complex step rules. This grants the model greater flexibility to capture finer and more complex localization patterns, particularly for irregularly shaped or heavily occluded targets.
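The two ingredients summarized above, bin weights produced by a linear layer and a residual update of the bin logits, can be sketched for a single edge in plain Python. This is a minimal sketch under assumptions rather than the trained head: the function names, the 2-dimensional feature vector, the 3 × 2 weight matrix, and the zero bias are hypothetical values chosen so the arithmetic is easy to follow.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(features: Sequence[float], weight: Sequence[Sequence[float]],
           bias: Sequence[float]) -> List[float]:
    """Plain linear layer: one output per row of the weight matrix."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weight, bias)]


def refine_step(features: Sequence[float], weight: Sequence[Sequence[float]],
                bias: Sequence[float], prev_logits: Sequence[float],
                delta_logits: Sequence[float]) -> Tuple[float, List[float]]:
    """One decoder-layer step for one edge.

    Returns the adaptive offset and the updated logits passed to the next layer.
    """
    bin_weights = linear(features, weight, bias)                 # N learned bin weights
    logits = [p + d for p, d in zip(prev_logits, delta_logits)]  # residual update
    probs = softmax(logits)                                      # refined distribution
    offset = sum(w * p for w, p in zip(bin_weights, probs))
    return offset, logits


# Hypothetical layer: feature dimension 2, N = 3 bins.
features = [1.0, 2.0]
weight = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]   # yields bin weights [1, 0, 2]
bias = [0.0, 0.0, 0.0]
offset_uniform, logits1 = refine_step(features, weight, bias, [0.0, 0.0, 0.0], [0.0, 0.0, 0.0])
offset_peaked, logits2 = refine_step(features, weight, bias, logits1, [0.0, 0.0, 50.0])
print(offset_uniform, offset_peaked)
```

Because the bin weights depend on `features`, two layers (or two images) with identical distributions can still produce different offsets, which is the flexibility a fixed weighting function lacks.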

4. Experiments

This section presents our experimental setup and results. The experimental setup includes details of the dataset, training environment, model hyperparameter selections, and evaluation metrics. The results comprise comparisons of evaluation metrics against different methods and concrete prediction outcomes.

4.1. Experimental Setup

The dataset used in the experiment comprises 1309 images of substation equipment, encompassing five critical equipment types: Breakers, Condensers, MainTransformers, Reactors, and StationTransformers. Some relevant information about this equipment is provided in Table 1. All images were captured via drone inspections conducted at substation sites, comprehensively covering diverse equipment conditions, varying environmental factors, and multiple shooting angles. The dataset was partitioned in an 8:2 ratio, allocating 1047 images for training and 262 images for testing to ensure reliable model evaluation.
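The 8:2 partition can be checked with a short sketch. The seeded shuffle, the function name `split_dataset`, and the use of integer image identifiers are assumptions made for illustration; the text does not state how images were assigned to each subset.

```python
import random
from typing import List, Tuple


def split_dataset(image_ids: List[int], train_ratio: float,
                  seed: int = 0) -> Tuple[List[int], List[int]]:
    """Shuffle image ids reproducibly and split them into train and test lists."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)          # the seed value is an arbitrary assumption
    n_train = round(len(ids) * train_ratio)   # 1309 * 0.8 = 1047.2, rounded to 1047
    return ids[:n_train], ids[n_train:]


train_ids, test_ids = split_dataset(list(range(1309)), train_ratio=0.8)
print(len(train_ids), len(test_ids))  # 1047 262
```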

Model training was performed on an NVIDIA A800 80GB Active GPU in a Python 3.10.14 environment using the PyTorch 2.3.0 deep learning framework, running on the CentOS 7.5.1804 operating system. Key hyperparameters included an initial learning rate of

, a final learning rate of

, the AdamW optimizer, 300 training epochs, a batch size of 8, and an input image size of

. For evaluation metrics, we employed Precision ($P$), Recall ($R$), and mean Average Precision (mAP) as core indicators to measure detection performance. Additionally, model parameters (Params), giga floating-point operations (GFLOPs), and test time were incorporated to comprehensively assess computational efficiency and deployment potential, particularly addressing the requirements for lightweight object detection of critical substation equipment. Specifically, $P$ quantifies the accuracy of positive predictions by the model, expressed mathematically as

$$P = \frac{TP}{TP + FP},$$

where $TP$ denotes the number of true positive instances correctly detected by the model, and $FP$ represents the number of negative instances erroneously predicted as positive by the model. $R$ measures the model’s ability to cover actually existing positive samples, and its calculation formula is

$$R = \frac{TP}{TP + FN},$$

where $FN$ represents the number of positive instances that actually exist but were not detected by the model.

mAP is the core evaluation metric for comprehensively assessing the detection accuracy of a model, which is particularly important for multi-class object detection. Its calculation first requires plotting the $P$–$R$ curve for each class at different confidence thresholds; the area under this curve is then computed to obtain the Average Precision (AP) for that class:

$$AP = \int_0^1 P(R)\, \mathrm{d}R.$$

mAP is the average of the AP values across all categories. Params and GFLOPs indicate whether a model meets the requirements of lightweight, real-time deployment at substation edges, in terms of model storage demands and computational resource consumption, respectively. They thus play a critical role in developing efficient lightweight object detection models suitable for complex field environments.
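The metrics defined above can be computed with a few lines of plain Python. The sketch below approximates the area under the precision-recall curve with a step-wise (uninterpolated) sum over ranked detections, which is one common convention; evaluation toolkits often use interpolated variants, so values may differ slightly. The function names and the toy detections are hypothetical.

```python
from typing import List, Sequence, Tuple


def precision(tp: int, fp: int) -> float:
    """P = TP / (TP + FP)."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """R = TP / (TP + FN)."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(detections: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """Step-wise area under the P-R curve for one class.

    detections: (confidence, is_true_positive) pairs; num_gt: ground-truth count.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        r = tp / num_gt
        ap += precision(tp, fp) * (r - prev_recall)  # rectangle under the curve
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: List[float]) -> float:
    """mAP: the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)


# Toy class with two ground-truth objects and three ranked detections.
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
print(ap, mean_average_precision([ap, 1.0]))
```

In this toy case the first detection contributes 1.0 × 0.5 and the third contributes (2/3) × 0.5, giving an AP of 5/6.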

4.2. Experimental Results

To demonstrate the effectiveness of the proposed key equipment detection method for substations, we compared it with five mainstream object detection methods: YOLOv5, YOLOv8 [12], YOLOv10 [21], RT-DETR [14], and D-FINE [8]. To ensure fairness in the comparative experiments, models with parameter counts as close as possible to our method were selected, and consistent hyperparameter settings were maintained.

Table 2 presents the evaluation metrics achieved by our method and the others, with the best metrics highlighted in bold. In terms of model complexity, SD-FINE has the smallest number of parameters (0.577 M), and its computational cost (11.3 GFLOPs) is only marginally higher than the lowest value, achieved by YOLOv8 (10.9 GFLOPs). Regarding detection performance, SD-FINE achieved the best results across all four core detection metrics: its $P$ (93.1%), $R$ (91.4%), mAP@0.5 (93.3%), and mAP@0.5:0.95 (62.7%) all lead the other models. Specifically, SD-FINE’s $P$ is 1.1 percentage points higher than that of the second-best RT-DETR, its $R$ is 0.6 percentage points higher than that of the second-best YOLOv10, its mAP@0.5 is 0.5 percentage points higher than that of the second-best D-FINE, and its mAP@0.5:0.95 is 0.5 percentage points higher than that of the joint second-best RT-DETR and D-FINE. Additionally, it requires the least time to test a single image. These results indicate that SD-FINE achieves the best overall detection performance while maintaining very low model complexity.

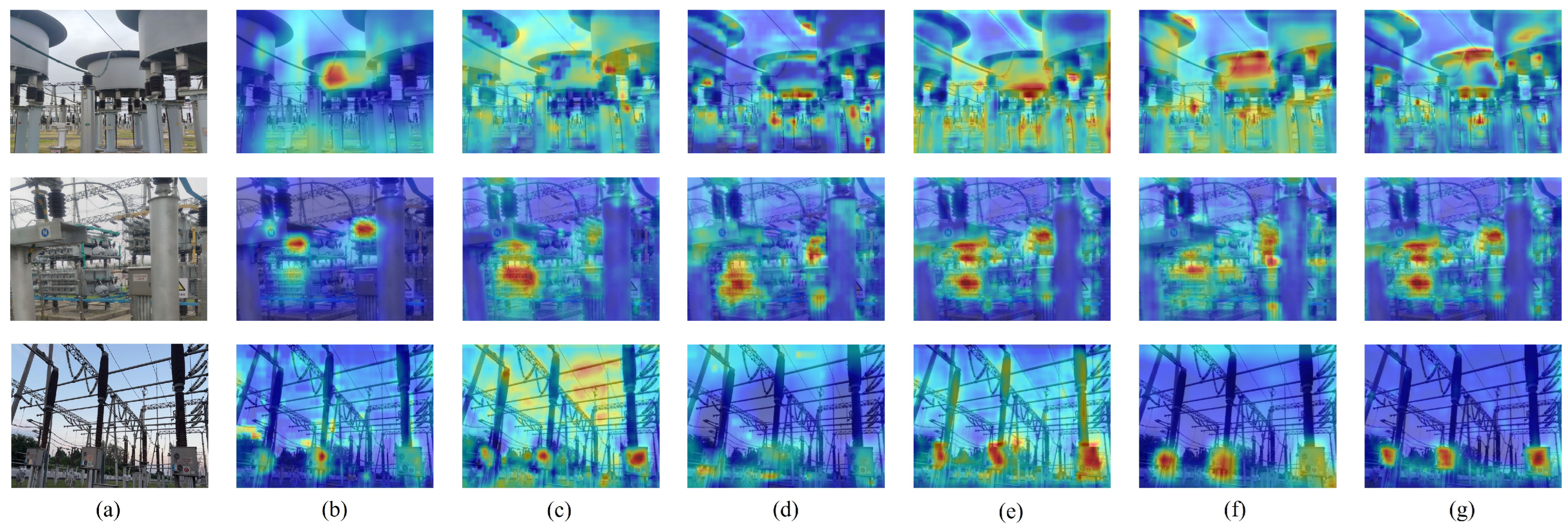

Figure 4 visualizes the heatmaps generated using Grad-CAM++ [22] for different methods on three substation images. The red areas represent regions of interest in the feature maps for the model, with darker colors indicating higher attention. It can be observed that on all three images, our method exhibits the darkest color within the substation equipment regions and demonstrates stronger suppression capability against surrounding interference. Among the other methods, YOLOv5 shows insufficiently deep coloring in the equipment regions, indicating its relatively poor localization capability. While both YOLOv8 and RT-DETR manage to locate the equipment regions, they are susceptible to interference from non-target equipment, as is clearly shown in the third image.

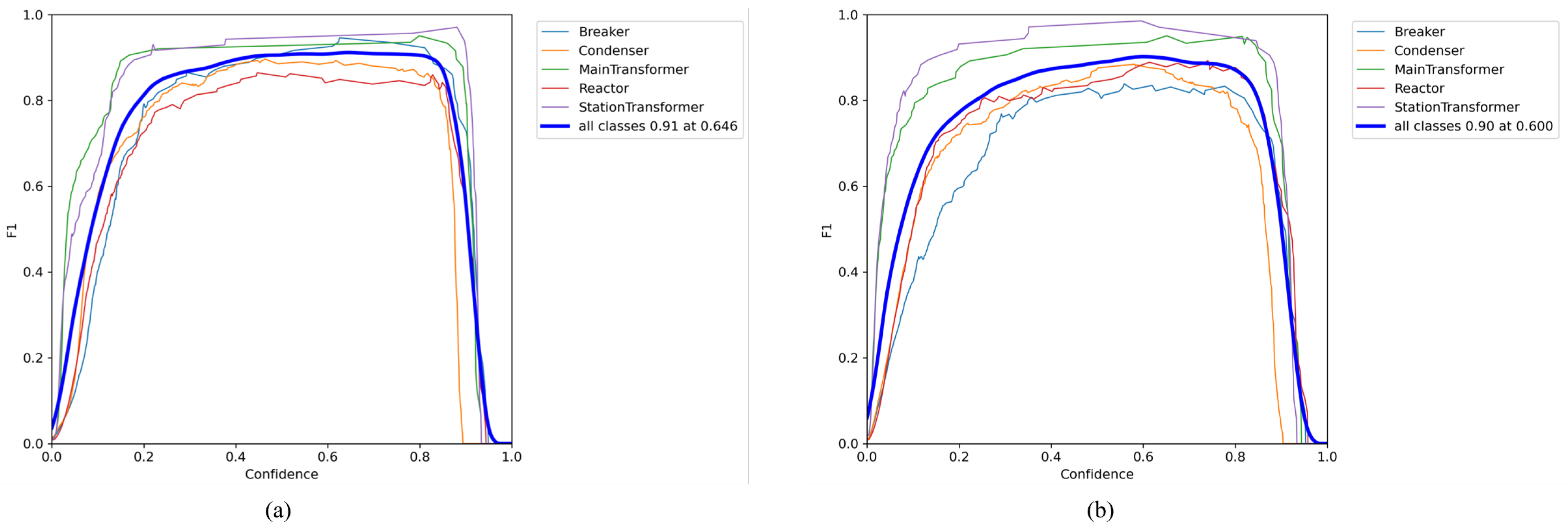

Additionally, Figure 5 compares the F1 curves of SD-FINE and the second-best method, D-FINE. The F1 curve illustrates how the model’s F1 score changes across different confidence thresholds, where the F1 score is the harmonic mean of $P$ and $R$. It can be observed that SD-FINE achieves the highest maximum F1 score of 0.91. Furthermore, the confidence threshold at which the maximum F1 score is reached increases from 0.600 for D-FINE to 0.646 for SD-FINE, indicating that SD-FINE sustains the same F1 score at a higher confidence level.
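The way an F1 curve is read can be sketched as follows: compute the harmonic mean of precision and recall at each confidence threshold and report the threshold where it peaks. The curve points below are hypothetical values for illustration only and are not measurements from Figure 5; the function names are likewise assumptions.

```python
from typing import List, Tuple


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def best_f1(curve: List[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Return (threshold, F1) at the peak of a curve of (threshold, P, R) points."""
    threshold, p, r = max(curve, key=lambda point: f1_score(point[1], point[2]))
    return threshold, f1_score(p, r)


# Hypothetical curve: precision rises and recall falls as the threshold increases.
curve = [(0.3, 0.80, 0.95), (0.6, 0.92, 0.90), (0.9, 0.98, 0.60)]
threshold, peak = best_f1(curve)
print(threshold, round(peak, 3))
```

A peak located at a higher threshold means the detector can discard more low-confidence boxes before its balance of precision and recall starts to degrade.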

Finally, Figure 6 demonstrates the detection results of SD-FINE on photographs captured in actual substation scenarios. Across multiple viewing angles and various operational conditions, SD-FINE successfully identifies most critical electrical equipment while maintaining high detection confidence (the detection area for the breaker is its operating box). However, in the first image, the conservator on the main transformer is mistakenly detected as a reactor. This misidentification occurs primarily due to (1) the shooting angle obscuring distinctive features between the equipment components, and (2) the inherent limitation of the model’s 93.1% precision, which still permits occasional false positives. These observations indicate potential areas for further optimization in our model.

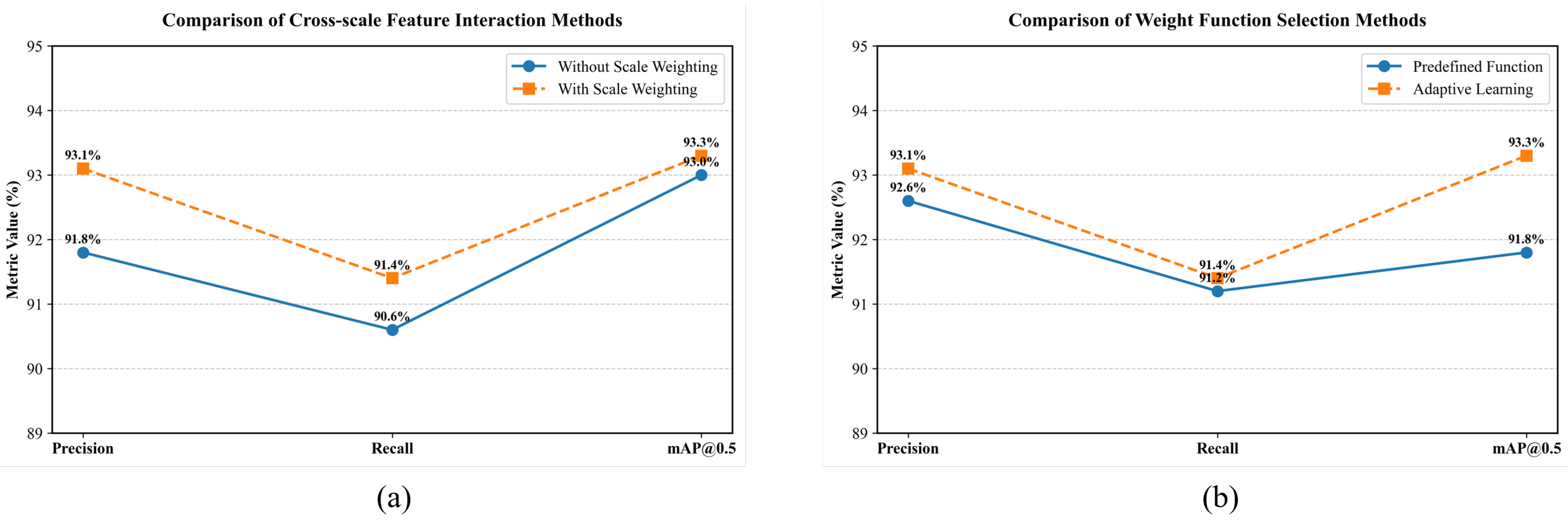

5. Ablation Study

To validate the effectiveness of the innovations in the proposed SD-FINE, we conducted ablation studies on the Efficient Hybrid Encoder and FDR. Specifically, the advantage of our designed Efficient Hybrid Encoder primarily lies in its effective weighting of features at different scales through spatial descriptors. The FDR approach proposed in this paper mainly learns weights for edge probability distributions via linear layers instead of predefined functions.

Figure 7 presents evaluation metrics under different experimental settings. The results demonstrate that scale-weighted feature fusion yields improvements of 1.3%, 0.8%, and 0.3% in $P$, $R$, and mAP@0.5, respectively. Meanwhile, enhancements from the FDR refinement are primarily reflected in mAP, achieving a 1.5% increase.

6. Conclusions

With the advancement of computer vision technology, intelligent inspection based on visible-light images has become a crucial approach for monitoring equipment status in substations. As a key component of automated defect identification, the performance of object detection directly impacts inspection efficiency. However, existing high-performance detection models typically suffer from high computational complexity and large parameter counts, making real-time deployment on substation edge devices challenging. Meanwhile, lightweight models face limitations in positioning accuracy and inefficient cross-scale feature fusion within complex substation scenarios. To address these issues, this paper proposes SD-FINE—a lightweight object detection technique tailored for critical substation equipment detection. Specifically, SD-FINE introduces a novel FDR mechanism that transforms fixed coordinate prediction in traditional bounding boxes into iterative optimization of target position probability distributions. This significantly enhances perception capability for subtle equipment position variations. Furthermore, to improve cross-scale feature fusion efficiency, we design an Efficient Hybrid Encoder that provides adaptive sampling channels during multi-scale feature integration. This overcomes the inflexibility and computational redundancy of conventional methods in cross-scale information exchange, enabling more flexible and efficient lightweight feature extraction.

Experimental validation on a substation critical equipment detection dataset demonstrates that our SD-FINE achieves state-of-the-art comprehensive detection performance while strictly maintaining model lightweightness. Regarding model complexity, SD-FINE has the fewest parameters (0.577 M), with computational load (11.3 GFLOPs) only slightly exceeding the optimal YOLOv8 (10.9 G). In detection performance, SD-FINE leads all four core metrics: P (93.1%, +1.1% over runner-up RT-DETR), R (91.4%, +0.6% over runner-up YOLOv10), mAP@0.5 (93.3%, +0.5% over runner-up D-FINE), and mAP@0.5:0.95 (62.7%, +0.5% over joint runners-up RT-DETR/D-FINE). Consequently, SD-FINE achieves significant improvements in both detection accuracy and speed while maintaining exceptionally low model complexity, providing an efficient and reliable lightweight solution for substation intelligent inspection. This demonstrates the FDR mechanism’s enhancement effect on fine-grained localization and the Efficient Hybrid Encoder’s substantial potential in optimizing cross-scale feature fusion.

Although SD-FINE has achieved leading results in the detection of critical equipment in substations, it occasionally produces some false detections in complex scenarios, such as limited camera angles or extremely small targets (as shown in the first image in Figure 6), indicating that there is still room for improvement in model performance. Therefore, our future work will focus on (1) further optimizing model architecture and training strategies to enhance robustness in extreme scenarios, and (2) constructing a larger-scale, higher-quality visible-light image dataset covering more substation equipment types and complex scenarios.

Author Contributions

Conceptualization, W.S. and S.L.; methodology, W.S., Y.H., S.L., and Q.G.; software, W.S. and Z.Z.; validation, W.S., Y.H., L.X., and Q.G.; formal analysis, W.S. and Y.H.; investigation, W.S., Y.H., and Z.Z.; resources, S.L., Z.Z., and Q.G.; data curation, W.S. and Y.H.; writing—original draft preparation, W.S.; writing—review and editing, W.S., S.L., Y.H., Z.Z., L.X., and Q.G.; visualization, W.S. and L.X.; supervision, S.L. and Y.H.; project administration, S.L. and W.S.; funding acquisition, S.L. and Q.G. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Science and Technology Project Program of State Grid Anhui Electric Power Co., Ltd., Project No. 52120524000B.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to restrictions.

Conflicts of Interest

Authors Wei Sun, Yu Hao, Sha Luo, Zhiwei Zou and Lu Xing were employed by the company State Grid Anhui Electric Power Company Electric Power Research Institute. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Wang, Z.; Lan, X.; Zhou, Y.; Wang, F.; Wang, M.; Chen, Y.; Zhou, G.; Hu, Q. A Two-Stage Corrosion Defect Detection Method for Substation Equipment Based on Object Detection and Semantic Segmentation. Energies 2024, 17, 6404.

2. Li, Z.; Qin, Q.; Yang, Y.; Mai, X.; Ieiri, Y.; Yoshie, O. An enhanced substation equipment detection method based on distributed federated learning. Int. J. Electr. Power Energy Syst. 2025, 166, 110547.

3. Yao, Y.; Wang, X.; Zhou, G.; Wang, Q. A two-stage substation equipment classification method based on dual-scale attention. IET Image Process. 2024, 18, 2144–2153.

4. Wang, J.; Sun, Y.; Lin, Y.; Zhang, K. Lightweight substation equipment defect detection algorithm for small targets. Sensors 2024, 24, 5914.

5. Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Appl. 1998, 13, 18–28.

6. Taherkhani, A.; Cosma, G.; McGinnity, T.M. AdaBoost-CNN: An adaptive boosting algorithm for convolutional neural networks to classify multi-class imbalanced datasets using transfer learning. Neurocomputing 2020, 404, 351–366.

7. Xiang, S.; Chang, Z.; Liu, X.; Luo, L.; Mao, Y.; Du, X.; Li, B.; Zhao, Z. Infrared Image Object Detection Algorithm for Substation Equipment Based on Improved YOLOv8. Energies 2024, 17, 4359.

8. Peng, Y.; Li, H.; Wu, P.; Zhang, Y.; Sun, X.; Wu, F. D-FINE: Redefine regression Task in DETRs as Fine-grained distribution refinement. arXiv 2024, arXiv:2410.13842.

9. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In European Conference on Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

10. Chen, Y.; Du, Z.; Li, A.; Li, H.; Zhang, W. Substation Equipment Defect Detection Based on Improved YOLOv9. In Proceedings of the 2024 IEEE 4th International Conference on Software Engineering and Artificial Intelligence (SEAI), Xiamen, China, 21–23 June 2024; pp. 87–91.

11. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

12. Varghese, R.; Sambath, M. Yolov8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

13. Chen, S.; Sun, P.; Song, Y.; Luo, P. Diffusiondet: Diffusion model for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 19830–19843.

14. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.

15. Xu, L.; Song, Y.; Zhang, W.; An, Y.; Wang, Y.; Ning, H. An efficient foreign objects detection network for power substation. Image Vis. Comput. 2021, 109, 104159.

16. Ou, J.; Wang, J.; Xue, J.; Wang, J.; Zhou, X.; She, L.; Fan, Y. Infrared image target detection of substation electrical equipment using an improved faster R-CNN. IEEE Trans. Power Deliv. 2022, 38, 387–396.

17. Wu, T.; Zhou, Z.; Liu, J.; Zhang, D.; Fu, Q.; Ou, Y.; Jiao, R. Ise-yolo: A real-time infrared detection model for substation equipment. IEEE Trans. Power Deliv. 2024, 39, 2378–2387.

18. Tao, H.; Paul, A.; Wu, Z. Infrared Image Detection and Recognition of Substation Electrical Equipment Based on Improved YOLOv8. Appl. Sci. 2024, 15, 328.

19. Ji, C.; Liu, J.; Zhang, F.; Jia, X.; Song, Z.; Liang, C.; Huang, X. Oil leak detection in substation equipment based on PFDAL-DETR network. J. Real-Time Image Process. 2025, 22, 22.

20. Li, S.; Long, L.; Fan, Q.; Zhu, T. Infrared Image Object Detection of Substation Electrical Equipment Based on Enhanced RT-DETR. In Proceedings of the 2024 4th International Conference on Intelligent Power and Systems (ICIPS), Yichang, China, 6–8 December 2024; pp. 321–329.

21. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J. Yolov10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

22. Chattopadhay, A.; Sarkar, A.; Howlader, P.; Balasubramanian, V.N. Grad-cam++: Generalized gradient-based visual explanations for deep convolutional networks. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 839–847.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).