1. Introduction

As the share of wind energy, a green and renewable source, continues to grow in the global energy mix, improving the operational efficiency, stability, and dispatching capability of wind farms has become an important topic in renewable energy system research. Wind speed forecasting, as a fundamental part of wind power system operation and grid-connected control, plays a crucial role in power system dispatching optimization and operation management. Because wind speed is highly nonlinear, non-stationary, and uncertain, wind speed forecasting has long faced major challenges in modeling accuracy, generalization ability, and real-time performance.

Physical modeling methods are among the earliest approaches used for wind speed forecasting. These methods mainly rely on numerical weather-prediction models, such as the weather research and forecasting (WRF) model and the mesoscale model 5 (MM5) [1], and construct medium- or long-term wind speed-forecasting frameworks on a global or regional scale by systematically modeling the dynamic processes of the wind field, thermodynamic processes, terrain features, and boundary layer disturbances. Although physical modeling methods have good physical consistency and interpretability, they suffer from problems such as complex modeling, high computational cost, sensitivity to boundary conditions, and difficulty in frequent updates, making them unsuitable for short-term or ultra-short-term forecasting tasks [2,3].

Statistical models take the wind speed time series as the modeling object and build formal statistical relationships from historical data to regress or extrapolate trends in the data. This type of method was widely applied in early short-term wind speed forecasting. Common models include the autoregressive moving average model (ARMA) [4], the autoregressive integrated moving average model (ARIMA) [5], Markov autoregressive models [6], and support vector regression (SVR) [7,8,9]. However, these models generally rely on assumptions of stationarity and linearity, making it difficult to handle complex nonlinear wind speed signals, which leads to significant limitations in generalization ability and long-term forecasting accuracy.

In recent years, data-driven intelligent modeling methods have gradually replaced traditional physical modeling and statistical regression models, becoming a research hotspot. Deep learning, with its strong nonlinear modeling and adaptive capabilities, has been widely applied in the field of wind speed forecasting. Structures such as the deep neural network (DNN), convolutional neural network (CNN), long short-term memory (LSTM), and gated recurrent unit (GRU) have been widely used to model the temporal dynamics of wind speed. Noorollahi et al. [10] used an artificial neural network (ANN) to forecast wind speed in both temporal and spatial dimensions. Ak et al. [11] trained multilayer perceptron neural networks using a multi-objective genetic algorithm to realize wind speed interval forecasting, and combined the extreme learning machine with the nearest neighbor method for short-term wind speed prediction. Kadhem et al. [12] developed a wind speed data-forecasting method based on the Weibull distribution and ANN, which exploits the dependence between wind speed and seasonal weather variations to model wind speed data based on seasonal wind changes within a specific time range. Wang et al. [13] proposed a hybrid deep learning method based on wavelet transform, deep belief network, and quantile regression for deterministic and probabilistic wind speed forecasting. Hu et al. [14] used deep learning to extract wind speed patterns from data-rich wind farms and fine-tuned the model with data from newly built wind farms, realizing a knowledge transfer-based wind speed-forecasting method that effectively reduces prediction error. Khodayar et al. [15] introduced a rough deep neural network, built on stacked autoencoders and denoising autoencoders, for ultra-short- and short-term wind speed forecasting. By adding rough neural networks to boost robustness, it achieved lower RMSE and MAE than both conventional deep and shallow models.

However, although these models have made some progress in prediction accuracy, they still suffer from problems such as being prone to local optima, overfitting, slow convergence, and weak generalization ability [16]. Moreover, the inherent non-stationarity and strong disturbance characteristics of wind speed data cause single models to lack prediction stability in the face of severe fluctuations or sudden changes. In response, researchers have gradually introduced data-preprocessing strategies, such as denoising the original wind speed series to reduce sequence randomness, or decomposing the wind speed series into multiple sub-series of different frequencies, and then forecasting the preprocessed wind speed data. Liu et al. [17] proposed a novel multi-step wind speed-forecasting model combining variational mode decomposition (VMD), singular spectrum analysis (SSA), LSTM, and extreme learning machine (ELM). Comparative experiments with eight other models showed that this model performed best and was more robust in multi-step forecasting performance and trend information extraction. Liu et al. [18] proposed a wind speed-forecasting model based on wavelet packet decomposition (WPD), CNN, and CNN-LSTM. Comparative experiments with eight models demonstrated its robustness and superior performance, especially in wind speed mutation cases. Ref. [19] established a hybrid framework for multi-step wind speed forecasting based on WPD, complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN), and ANN, and compared the advantages and disadvantages of different decomposition methods. Santhosh et al. [20] applied ensemble empirical mode decomposition (EEMD) to the original data and used a wavelet neural network for forecasting. Zhang et al. [21] used optimized variational mode decomposition (OVMD) to eliminate redundant noise. Peng et al. [22] first used wavelet transform (WT) to decompose wind speed data into more stationary components. Peng et al. [23] used the wavelet soft threshold denoising (WSTD) method to filter out redundant information in the original wind speed data during the decomposition process. Xu et al. [24] proposed a wind speed-forecasting method based on seasonal and trend decomposition using Loess (STL), which decomposes wind speed series into seasonal, trend, and residual components and applies models such as attention-based LSTM and ARIMA-LSTM to significantly improve forecasting accuracy and responsiveness to extremes.

The above methods have verified the positive role of data-preprocessing techniques in wind speed forecasting. However, because the level of wind speed uncertainty cannot be accurately quantified through time-frequency analysis and mode decomposition, the bias in multi-step forecasting remains large. At present, signal-processing methods for original wind speed series cannot effectively handle medium- and long-term forecasts beyond 4 h. According to the power spectrum of the atmospheric boundary layer, actual wind speed can be divided into two components [25]: the mean wind speed and the turbulent wind speed. Numerous studies have shown that turbulent wind speed has a significant impact on wind power output. Kim et al. [26] found that the wake effect significantly increased turbulence intensity and wind shear, and that the high turbulence intensity and wind shear gradient in the second stage led to an increase in fatigue load, with the damage equivalent load being 30–50% higher than that of the first stage. Siddiqui et al. [27] conducted a complete 3D numerical transient simulation for offshore vertical axis wind turbines under varying turbulence intensity levels of incoming wind. The results showed that when turbulence intensity increased from to , the performance of the wind turbine dropped by approximately to compared with the non-turbulence case. Because of these effects, turbulent wind speed should be considered in wind speed forecasting.
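The split into a mean component and a turbulent component can be illustrated with a small sketch. It assumes the common definition of turbulence intensity as the standard deviation of wind speed within an averaging window divided by the mean speed of that window. The function name `turbulence_intensity`, the window length, and the sample values are illustrative assumptions and are not taken from this study.

```python
from statistics import mean, pstdev

def turbulence_intensity(speeds, window):
    """Return one (mean_speed, turbulence_intensity) pair per window.

    Assumption: non-overlapping windows, population standard deviation,
    and TI = sigma / mean. Trailing samples that do not fill a window
    are ignored.
    """
    results = []
    for start in range(0, len(speeds) - window + 1, window):
        block = speeds[start:start + window]
        u_bar = mean(block)      # mean wind speed of the window
        sigma = pstdev(block)    # spread of the turbulent fluctuation
        ti = sigma / u_bar if u_bar > 0 else float("nan")
        results.append((u_bar, ti))
    return results

# Hypothetical wind speed samples in m/s: a gusty window, then a calm one.
samples = [8.0, 10.0, 12.0, 10.0, 5.0, 5.0, 5.0, 5.0]
pairs = turbulence_intensity(samples, window=4)
```

In this toy series both windows could have similar mean speeds in practice while differing sharply in turbulence intensity, which is the kind of information a mean-only forecast would miss.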

In addition, to further enhance the performance of wind speed forecasting, scholars have devoted efforts to integrating multiple models to achieve complementary advantages of various methods, thereby more comprehensively leveraging the predictive power of hybrid models. Neshat et al. [28] integrated VMD with an improved arithmetic optimization algorithm (IAOA) to process wind speed data and established a hybrid model combining quantum convolutional neural network (QCNN) and bidirectional long short-term memory (BiLSTM) for wind speed prediction. Meanwhile, a fast and efficient hyper-parameters tuner (HPT) was introduced to adjust the parameters and architecture of the hybrid model. Experimental results showed that its accuracy and stability significantly outperformed five existing machine learning models and two hybrid models. Memarzadeh et al. [29] decomposed the original wind speed data using wavelet transform and utilized the crow search algorithm to determine the hyperparameters of LSTM. The results indicated that this hybrid model outperformed the single LSTM model.

Alternatively, some researchers have attempted to incorporate intelligent optimization algorithms to automatically tune the hyperparameters of prediction models, aiming to further improve model performance and forecasting accuracy. These methods perform optimization in the search space, thereby avoiding the limitations of manual parameter setting, and have demonstrated promising potential in wind speed-prediction tasks. Samadianfard et al. [30] applied the whale optimization algorithm (WOA) to a multilayer perceptron (MLP) model, and the results showed that this model outperformed multilayer perceptron-genetic algorithm (MLP-GA) and traditional MLP at all stations, achieving better performance in evaluation metrics such as RMSE. Wang et al. [31], based on regression plot (RP) and SVR, introduced the cuckoo optimization algorithm (COA) to optimize model parameters and constructed a COA-SVR wind speed-forecasting model, which demonstrated stable and superior performance in multi-step forecasting and practical applications in wind farms. Wang et al. [32] proposed a CNN-LSTM ensemble optimized by a multi-objective chameleon swarm algorithm (CSA), achieving notable improvements in ultra-short-term wind speed forecasting.

However, most ensemble systems allocate weights to the outputs of sub-models through single- or multi-objective optimization algorithms and perform linear summation to obtain the final prediction result. Since wind speed is highly unstable and volatile, simple linear summation is not conducive to the organic integration of sub-model predictions, limiting the performance of the ensemble system. The Transformer architecture, owing to the advantage of its global attention mechanism in sequence modeling, shows great potential in wind speed forecasting. Bommidi et al. [33] combined improved complete ensemble empirical mode decomposition with adaptive noise (ICEEMDAN) with Transformer to model the multi-scale fluctuation structure of wind speed. Zha et al. [34] introduced convolutional mechanisms into the Transformer encoder module to enhance the model’s ability to extract local dynamic features. These studies indicate that the Transformer-based dynamic fusion mechanism has better modeling efficiency and generalization ability than traditional weighted ensemble strategies, and is especially suitable for non-stationary time series with high noise and high-frequency disturbances like wind speed. Besides the evolution of modeling architectures, input feature construction is also a key factor affecting wind speed forecasting performance.

Current research has gradually expanded from modeling a single wind speed sequence to collaborative modeling of multiple meteorological factors. Variables such as temperature, air pressure, and wind direction are widely used to enhance the model’s predictive perception capability. Zhu et al. [35] proposed a modeling method that integrates environmental features, background features, and multi-scale spatial information, significantly improving ultra-short-term wind speed-forecasting performance. Yu et al. [36] combined multi-feature fusion strategies with frequency-domain feature-extraction techniques to achieve joint deterministic and probabilistic wind speed forecasting, demonstrating stronger modeling robustness and generalization ability. However, existing studies mostly treat these meteorological variables as static external features, directly concatenating them as model inputs, ignoring their own temporal dynamic characteristics and potential coupled evolutionary relationships with wind speed.

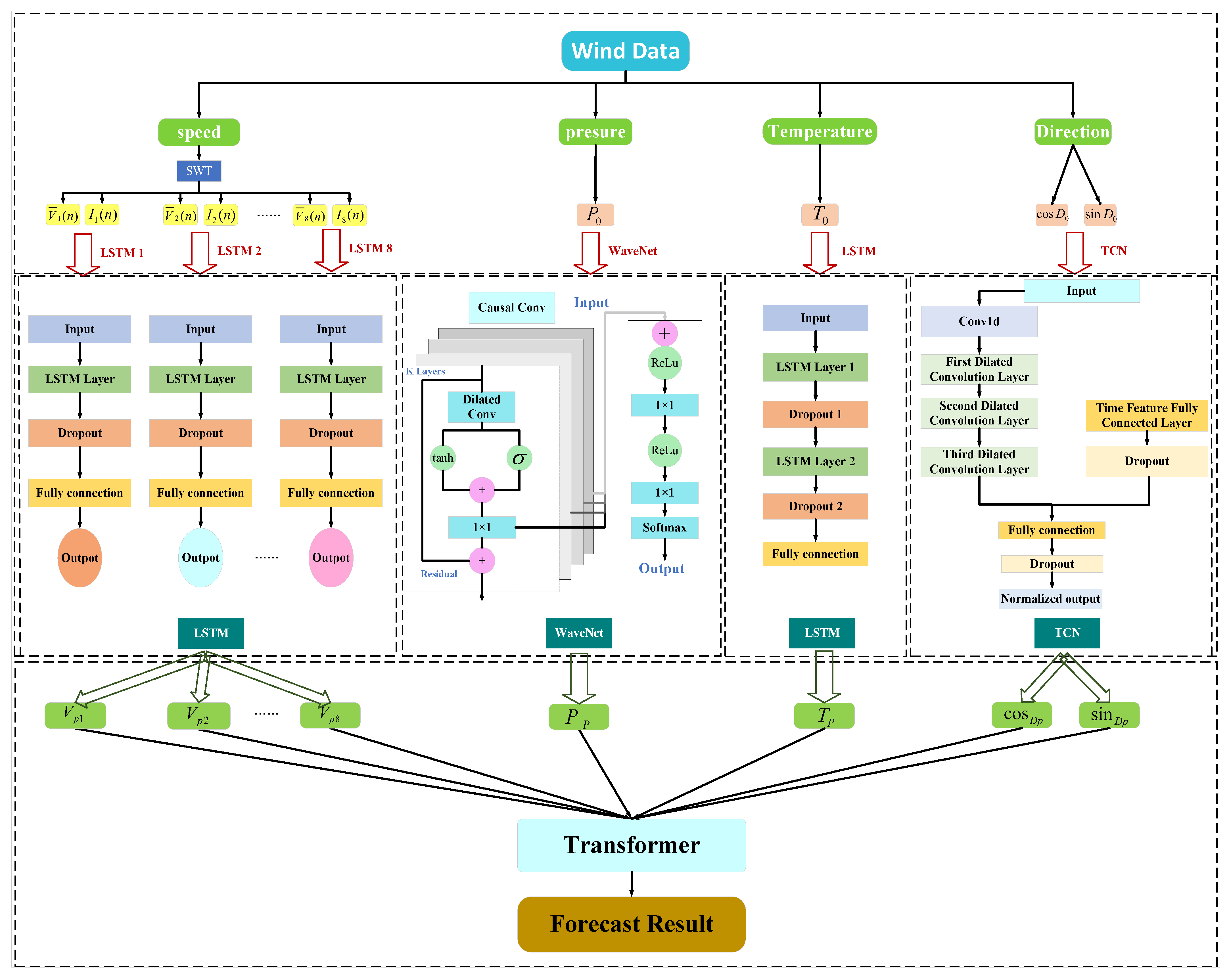

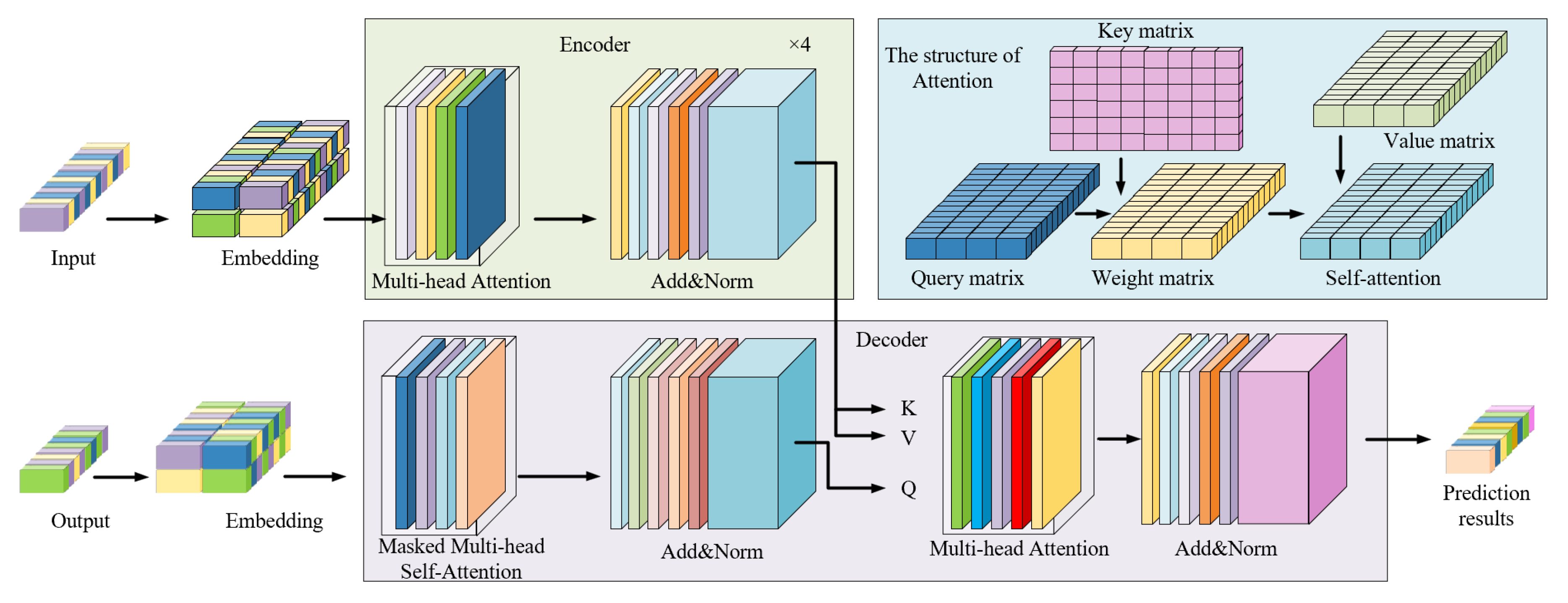

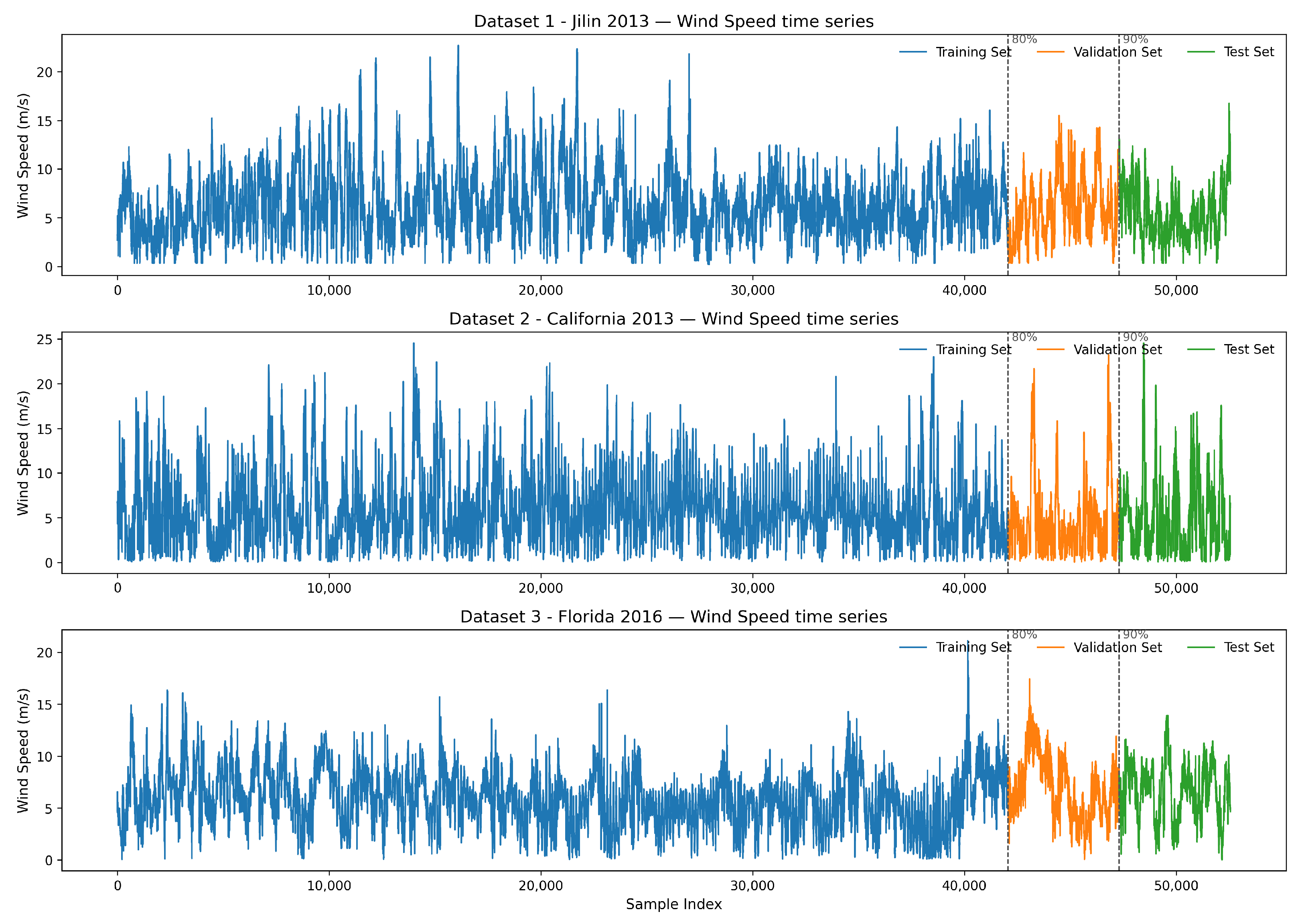

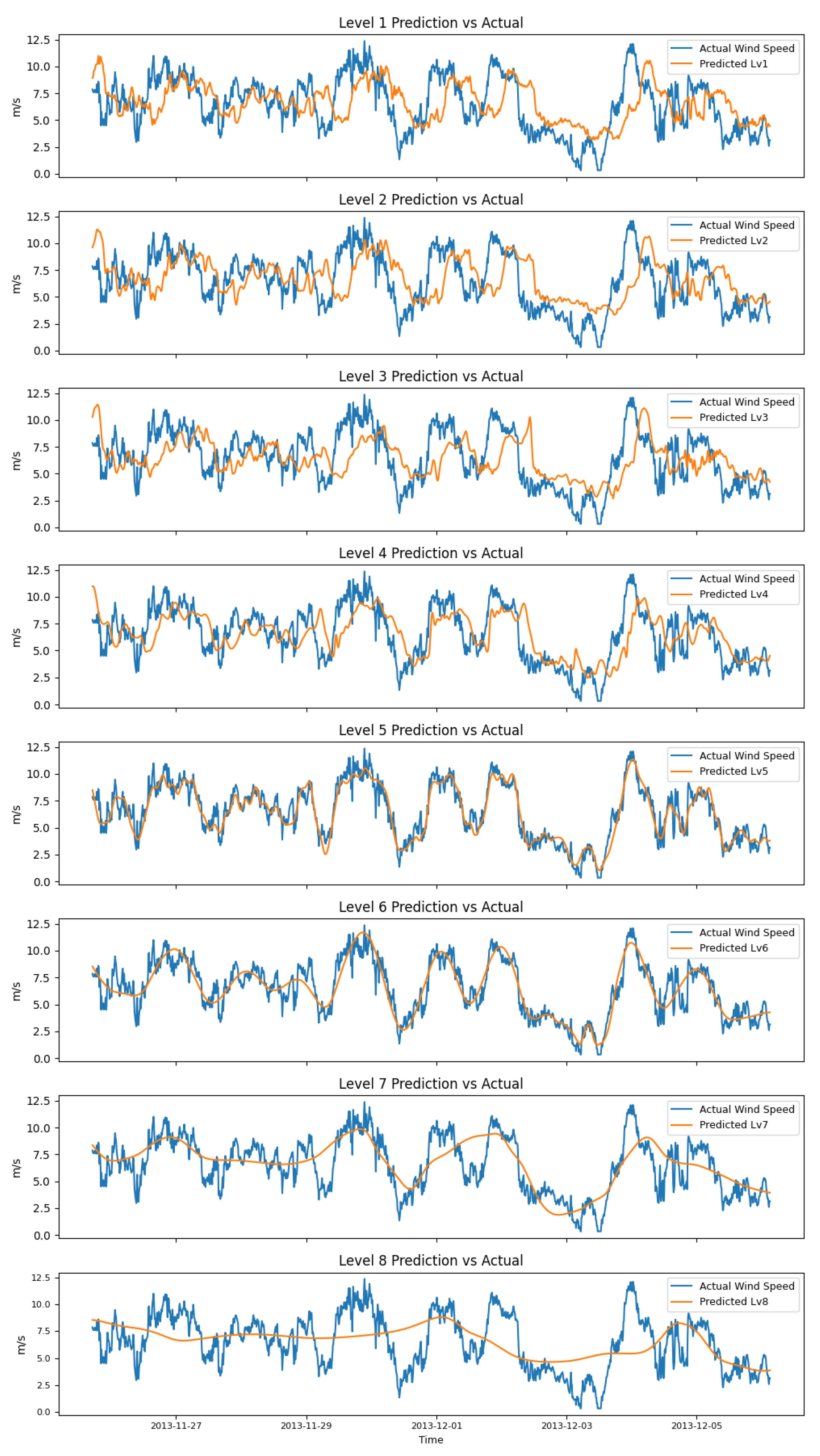

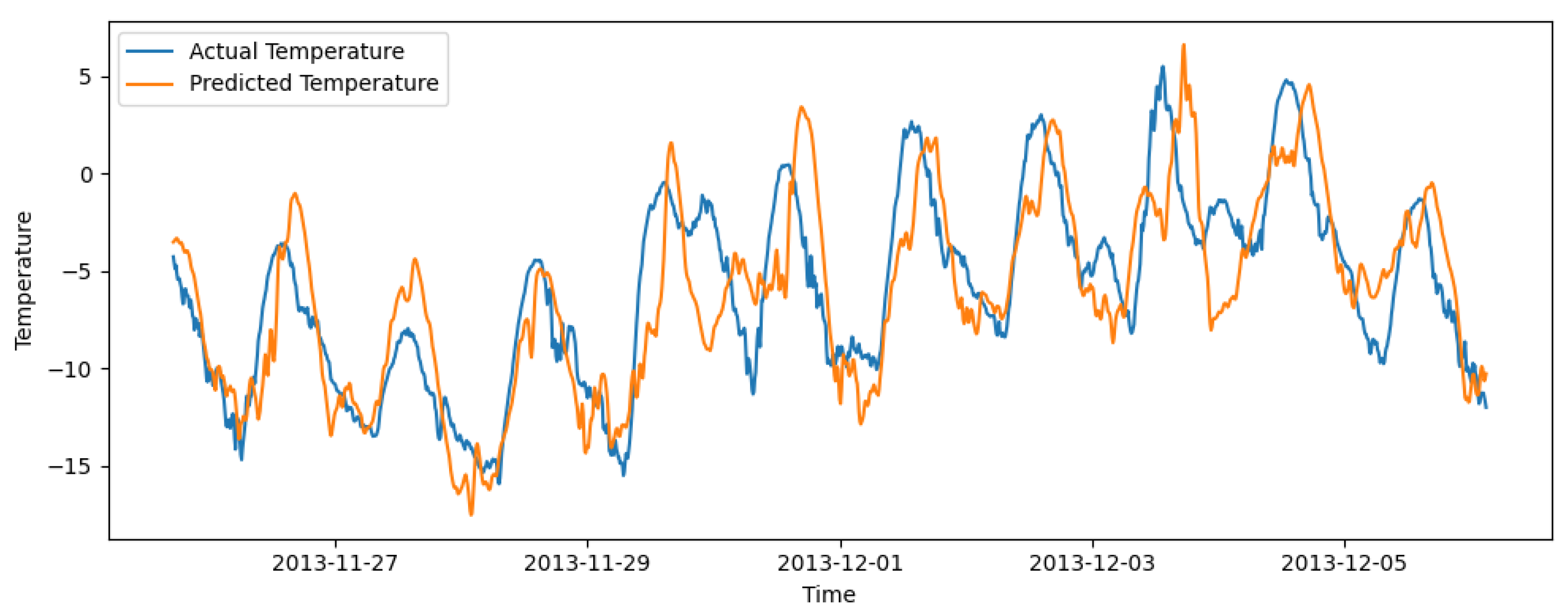

Based on the above literature review and the strengths and limitations of existing models, this study proposes a novel integrated system that exploits both wind speed and other relevant meteorological data, aiming to improve short- and medium-term wind speed-forecasting performance. First, stationary wavelet transform (SWT) with the Sym8 wavelet basis is employed in conjunction with turbulence intensity computation to perform multi-scale decomposition of the original wind speed data, yielding eight sequences representing mean wind speed and turbulent wind speed at different temporal scales. Second, the eight groups of decomposed wind speed and turbulence intensity features are fed into eight independently constructed LSTM sub-models to obtain initial predictions. Third, three meteorological factors (air pressure, temperature, and wind direction) are incorporated and modeled using WaveNet, LSTM, and temporal convolutional network (TCN) architectures, respectively, thereby supplementing the external environmental information relevant to wind speed forecasting. Finally, the eight sub-component wind speed predictions and four meteorological prediction outputs (wind direction contributes two, its sine and cosine) are integrated into a Transformer model. Leveraging the self-attention mechanism, the model captures deep temporal dependencies among multiple variables, enabling nonlinear feature fusion and final wind speed output.
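To make the final fusion step more concrete, the sketch below shows how self-attention mixes sub-model outputs with weights that depend on the inputs themselves, in contrast to a fixed linear weighting. It is a deliberately reduced illustration under several assumptions: a single attention head, no learned query, key, or value projections, and hypothetical normalized forecasts from only three sub-models. It is not the Transformer configuration used in this study.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention_fuse(tokens):
    """Single-head scaled dot-product self-attention without learned projections.

    tokens: one feature vector per sub-model output (all of equal length).
    Returns (fused, weights): fused[i] is the attention-weighted mix of all
    tokens as seen from token i, and weights[i] holds the mixing weights.
    """
    dim = len(tokens[0])
    fused, weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in tokens]
        row = softmax(scores)
        weights.append(row)
        fused.append([sum(w * tok[c] for w, tok in zip(row, tokens))
                      for c in range(dim)])
    return fused, weights

# Hypothetical normalized two-step forecasts from three sub-models.
sub_model_outputs = [[0.61, 0.64], [0.58, 0.60], [0.95, 0.99]]
fused, weights = self_attention_fuse(sub_model_outputs)
```

Each row of `weights` sums to one, but the rows differ from one another and change whenever the sub-model outputs change, which is what separates this kind of fusion from a weight vector fixed once by an optimization algorithm.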

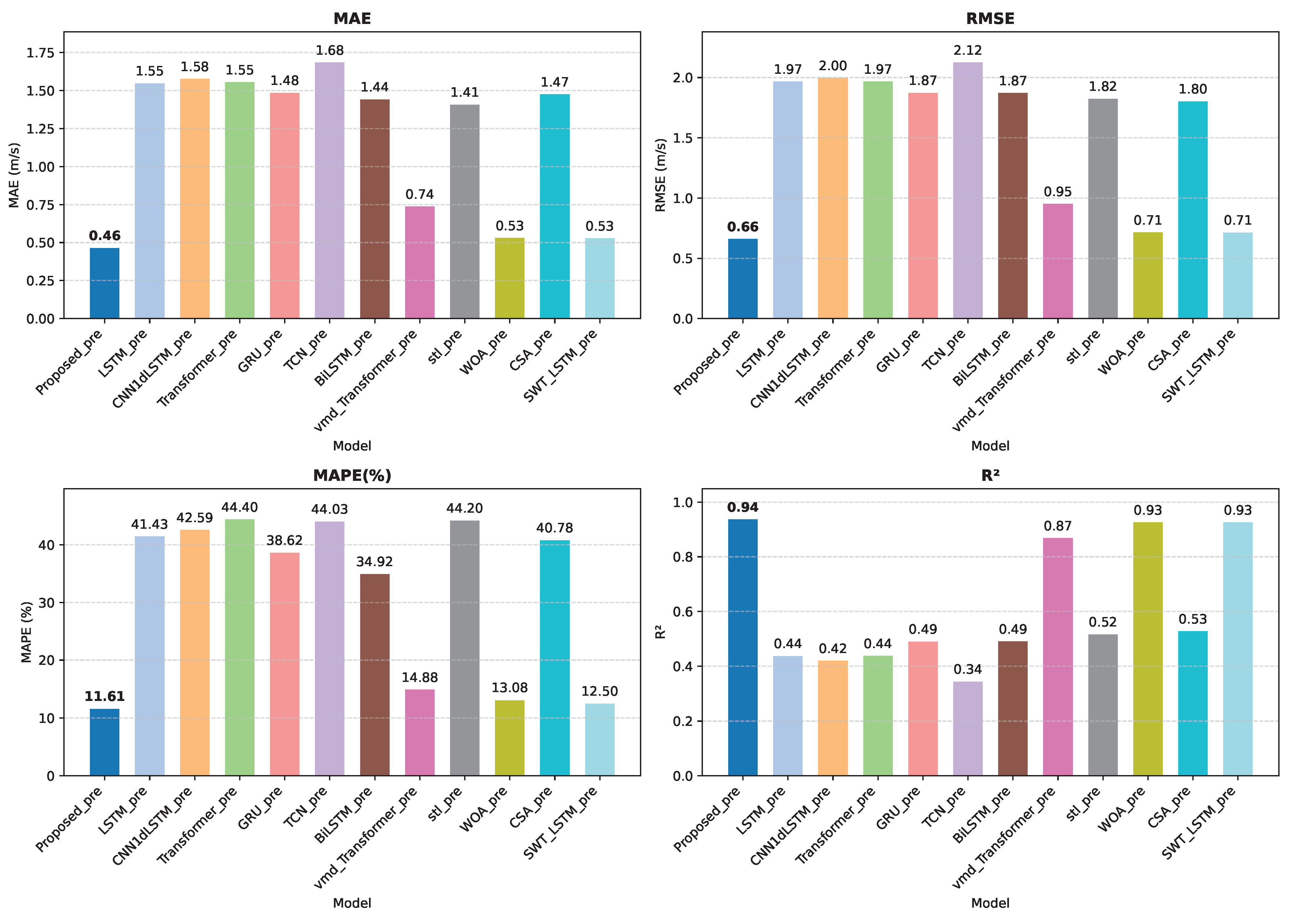

The proposed method is validated from the following perspectives: (1) Comparison with six classical single neural network prediction models; (2) Comparison with two neural network models based on data-preprocessing techniques; (3) Comparison with a model where the nonlinear combination output module in the proposed model is replaced by a linear combination output based on optimization algorithms (such as CSA, WOA); (4) Comparison with a model that uses only the LSTM model based on turbulence intensity and SWT, without incorporating multiple meteorological features and multiple time scales.

The main contributions of this study are as follows:

- (1)

Systematic introduction of multi-scale turbulence intensity as a measure of wind speed uncertainty. Through SWT decomposition, joint modeling of mean wind speed and turbulence intensity features is achieved, significantly enhancing the model’s capability to capture rapid fluctuations in wind speed.

- (2)

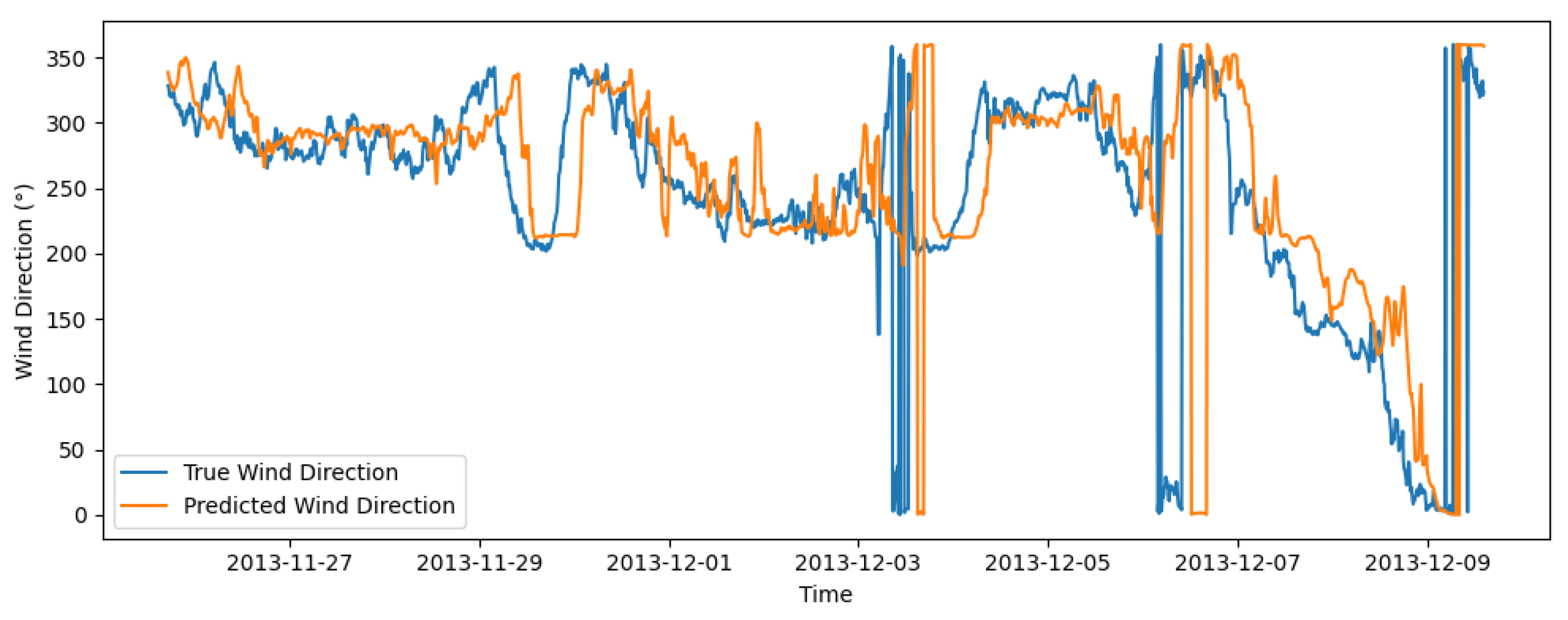

For the first time in wind speed forecasting, independent prediction sub-models were constructed for other meteorological features to extract their evolutionary trend characteristics, and the results were unified with the primary wind speed model within a Transformer architecture. This differs from previous approaches that treated other meteorological factors as static inputs. Additionally, considering the cyclical nature of wind direction, the cosine and sine values of wind direction were innovatively employed as both the input and output of the TCN.

- (3)

Utilization of the self-attention mechanism within the Transformer to enable dynamic, nonlinear fusion of sub-model outputs, effectively addressing the limitations of fixed-weight combinations under imbalanced data conditions and achieving more adaptive prediction integration.

- (4)

Demonstration of strong multi-step forecasting extensibility and structural flexibility, with excellent adaptability to abrupt wind fluctuations and long-term forecasting scenarios, indicating promising engineering applicability and deployment potential.
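The sine and cosine representation of wind direction mentioned in contribution (2) can be sketched as follows. The sketch assumes wind direction is given in degrees; the function names `encode_direction` and `decode_direction` are hypothetical and chosen only for illustration.

```python
import math

def encode_direction(degrees):
    """Map a wind direction in degrees to its (sine, cosine) pair."""
    radians = math.radians(degrees)
    return math.sin(radians), math.cos(radians)

def decode_direction(sine, cosine):
    """Recover a direction in [0, 360) degrees from a (sine, cosine) pair."""
    return math.degrees(math.atan2(sine, cosine)) % 360.0

# 359 degrees and 1 degree are nearly the same direction, yet their raw
# values differ by 358. In the encoded space they are close together.
s1, c1 = encode_direction(359.0)
s2, c2 = encode_direction(1.0)
encoded_gap = math.hypot(s1 - s2, c1 - c2)
```

Predicting the two components and converting back with `atan2` avoids the artificial jump at the 0/360 boundary that a model would otherwise have to learn around.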

The remainder of this paper is organized as follows: Section 2 presents the materials and methods used in the ensemble forecasting system. Section 3 provides the data description, evaluation metrics, and details of the framework of the proposed ensemble system. Section 4 introduces four experiments and the corresponding analyses. Conclusions and future work are provided in Section 5.

5. Conclusions

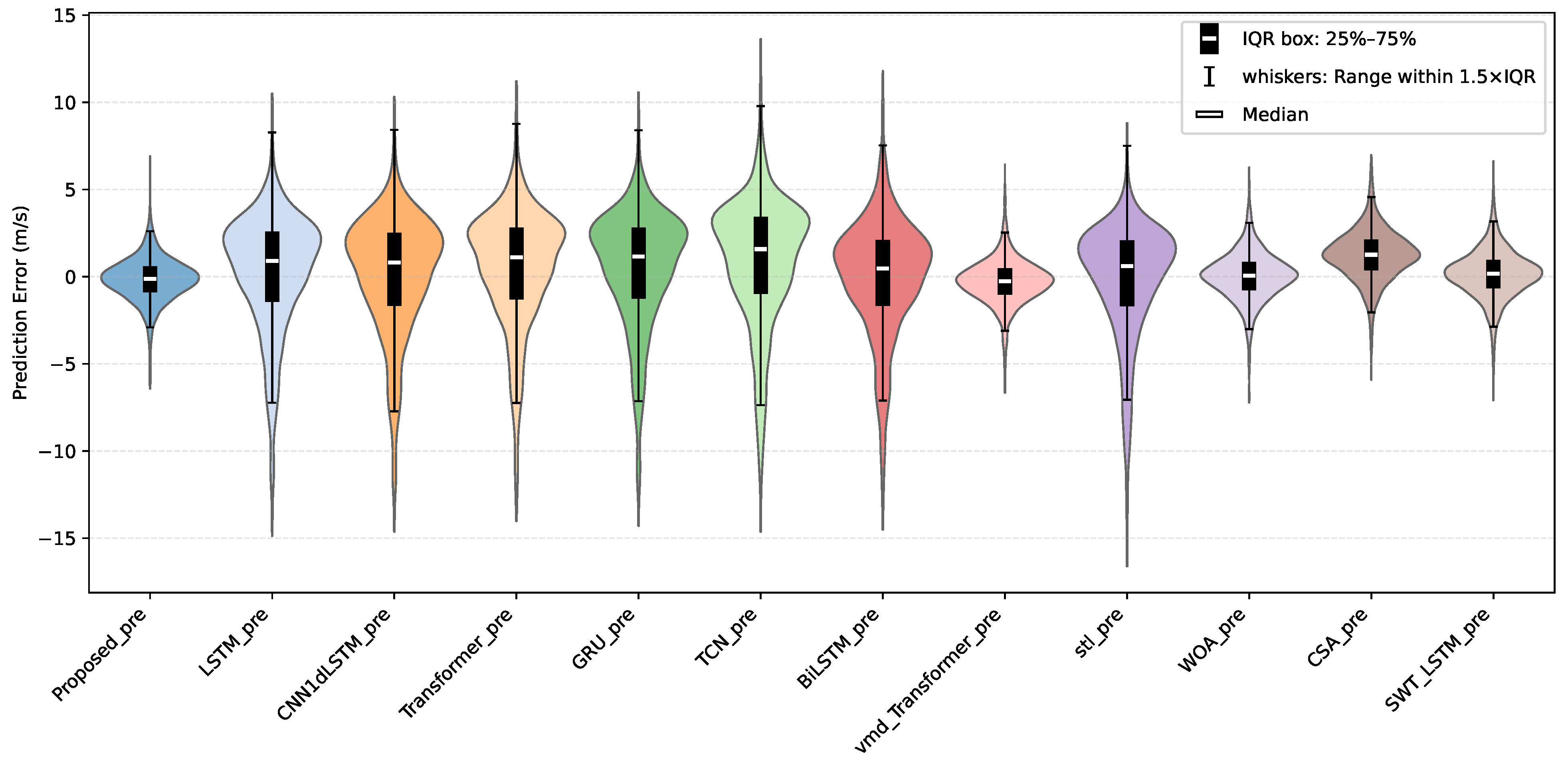

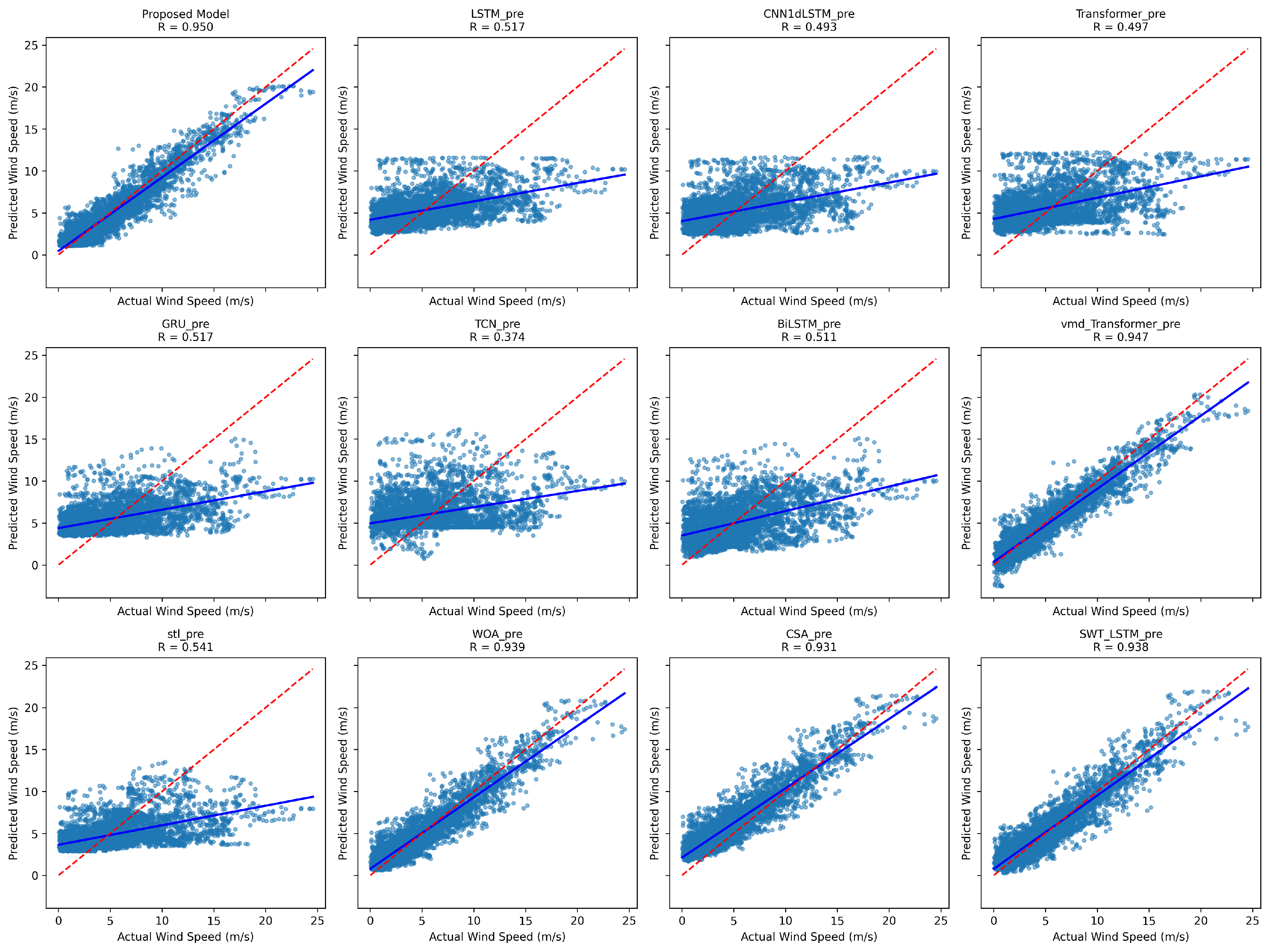

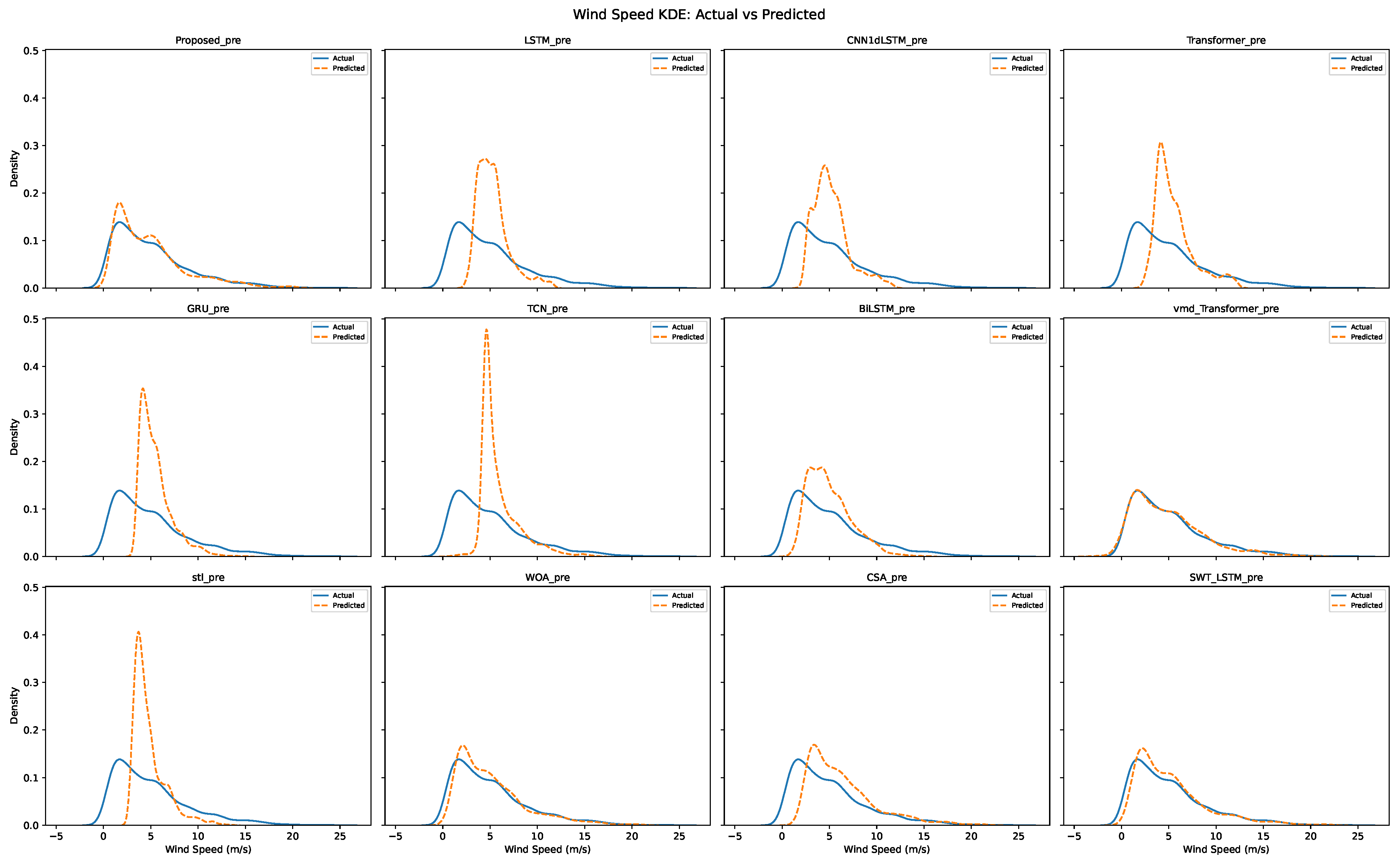

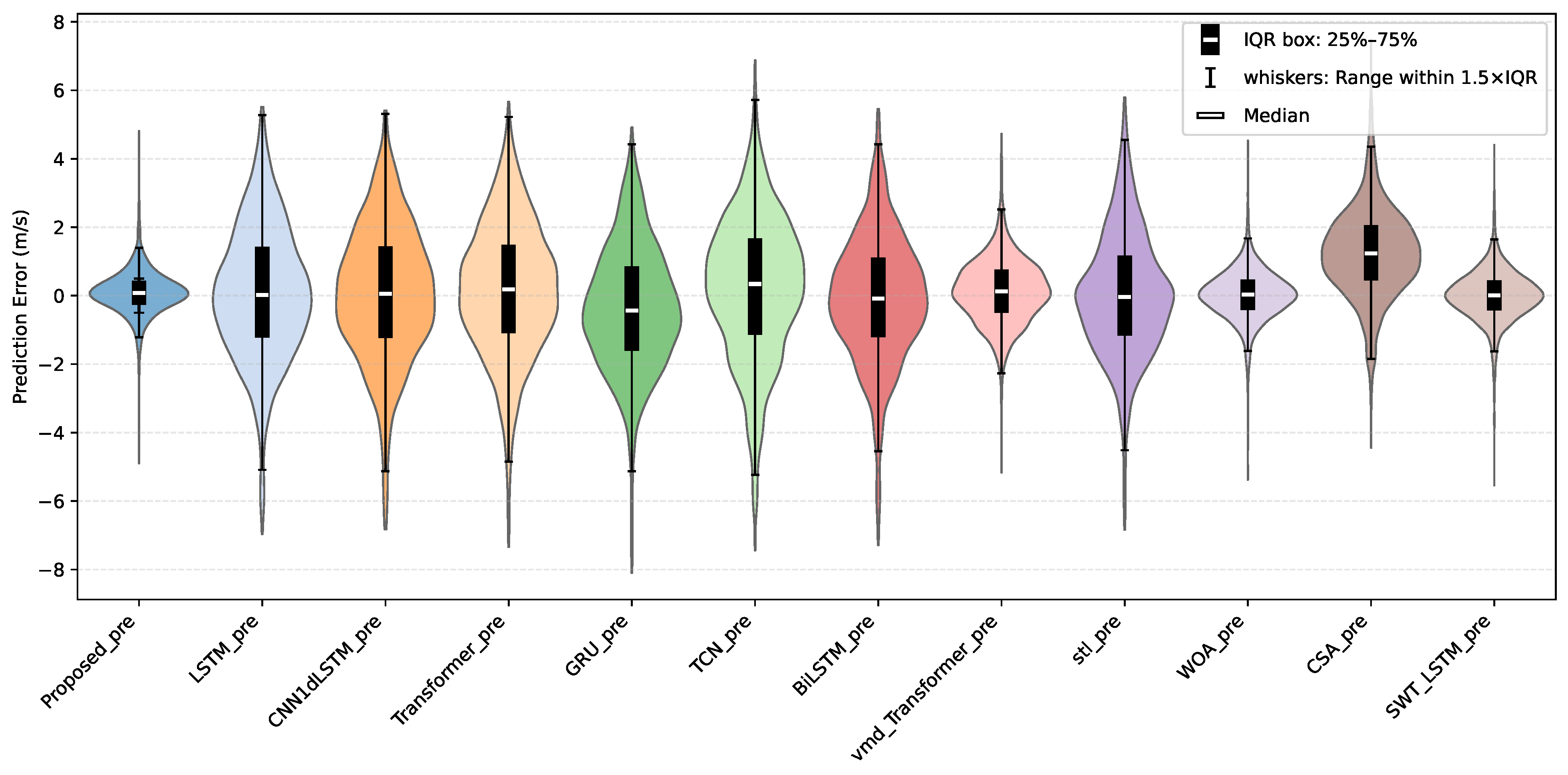

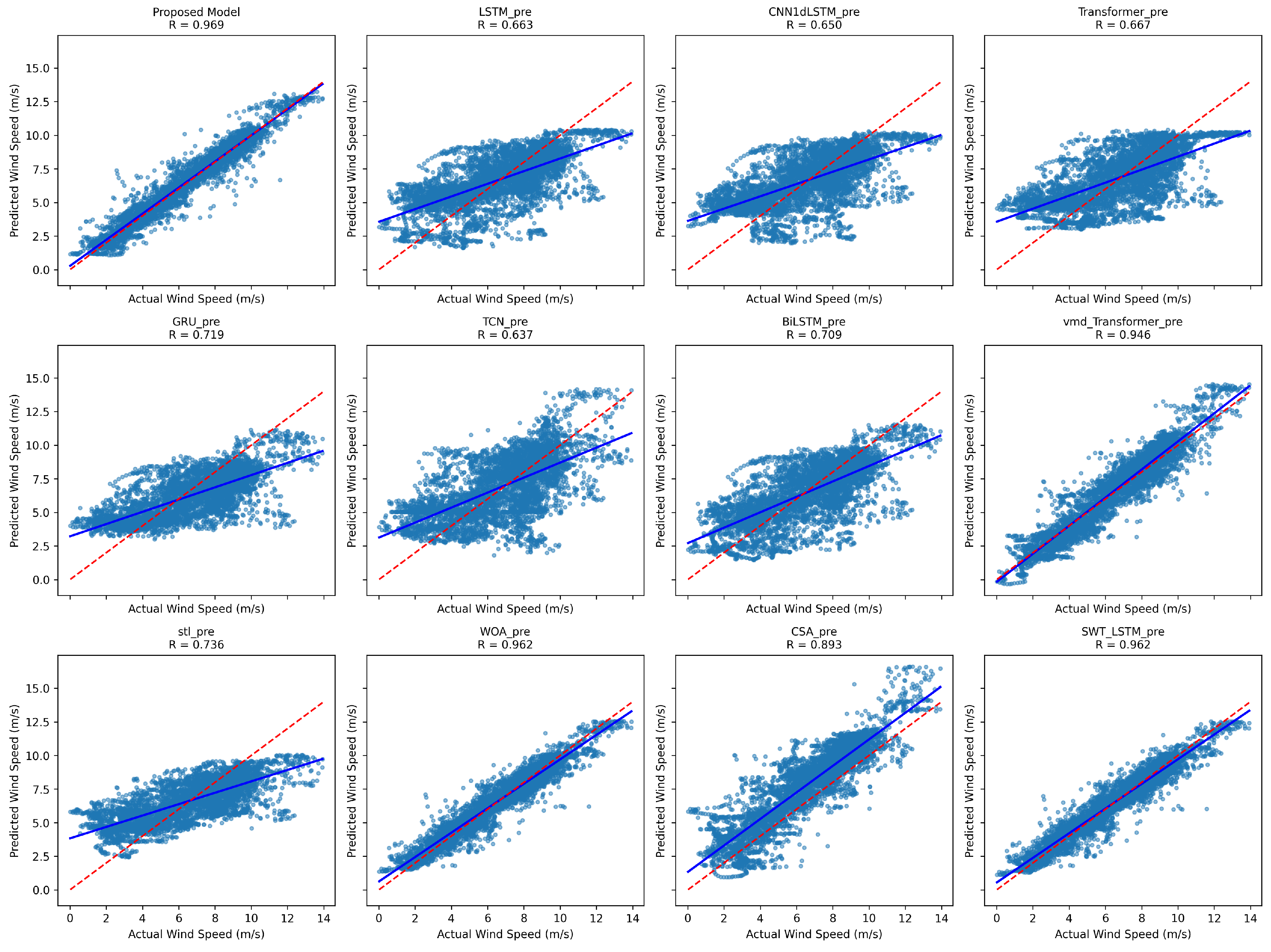

To enhance the accuracy and stability of short- and medium-term wind speed forecasting, this study proposes a forecasting framework that integrates multi-resolution turbulence intensity features with a hybrid deep neural network architecture. Comprehensive experimental results demonstrate that the proposed model achieves significant improvements across multiple evaluation metrics, particularly showing superior adaptability and robustness in capturing high-frequency fluctuations and extreme values.

From a structural perspective, the integration of Stationary Wavelet Transform (SWT) and turbulence intensity-extraction modules enables multi-scale dynamic feature representation, allowing subsequent neural sub-models to capture both the trend and stochasticity of wind speed at varying temporal resolutions. In particular, the introduction of turbulence intensity provides an effective quantification of wind speed non-stationarity. Experimental evidence suggests that this feature significantly contributes to the prediction accuracy, especially during periods of sharp wind fluctuations.

In terms of predictive model design, three distinct architectures (LSTM, WaveNet, and TCN) are employed to model the coupling sequences between wind speed–turbulence and auxiliary meteorological variables at different scales. These models collaboratively extract informative features from both spatial locality and temporal dependency perspectives. Finally, a Transformer-based self-attention mechanism is used for feature fusion, which overcomes the limitations of traditional linear weighted summation by enabling nonlinear and adaptive aggregation of multi-source outputs. The experimental results indicate that the proposed method demonstrates outstanding accuracy and stability in medium-term wind speed prediction, achieving an MAE of 0.6465, an RMSE of 0.8740, a MAPE of , and a coefficient of determination (R²) of 0.9174 on average over the three datasets, suggesting strong potential for engineering applications.
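For readers who want to reproduce this kind of evaluation, the four reported metrics can be computed as in the sketch below. It assumes the standard textbook definitions (MAPE expressed in percent, R² as one minus the ratio of residual to total sum of squares); the function name `forecast_metrics` and the sample values are illustrative and do not come from the datasets in this study.

```python
import math

def forecast_metrics(y_true, y_pred):
    """Compute MAE, RMSE, MAPE (percent), and R2 for paired sequences.

    Assumption: y_true contains no zeros (MAPE is undefined there) and is
    not constant (R2 is undefined there).
    """
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return {"MAE": mae, "RMSE": rmse, "MAPE": mape, "R2": r2}

# Hypothetical observed and forecast wind speeds in m/s.
metrics = forecast_metrics([2.0, 4.0, 6.0], [2.0, 5.0, 5.0])
```

Because MAPE divides by the observed value, it becomes unstable for near-calm conditions, so it is usually read alongside MAE and RMSE rather than on its own.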

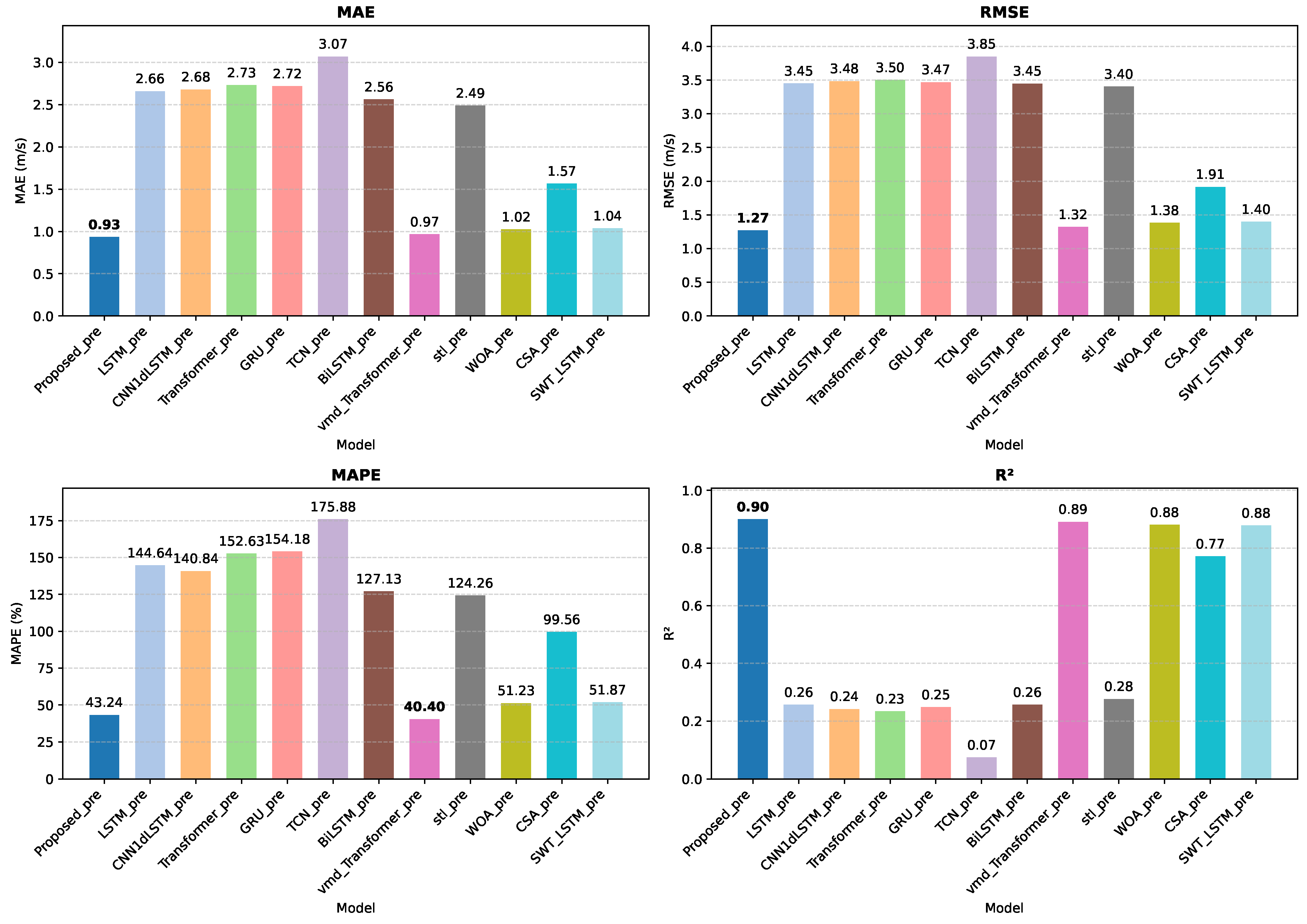

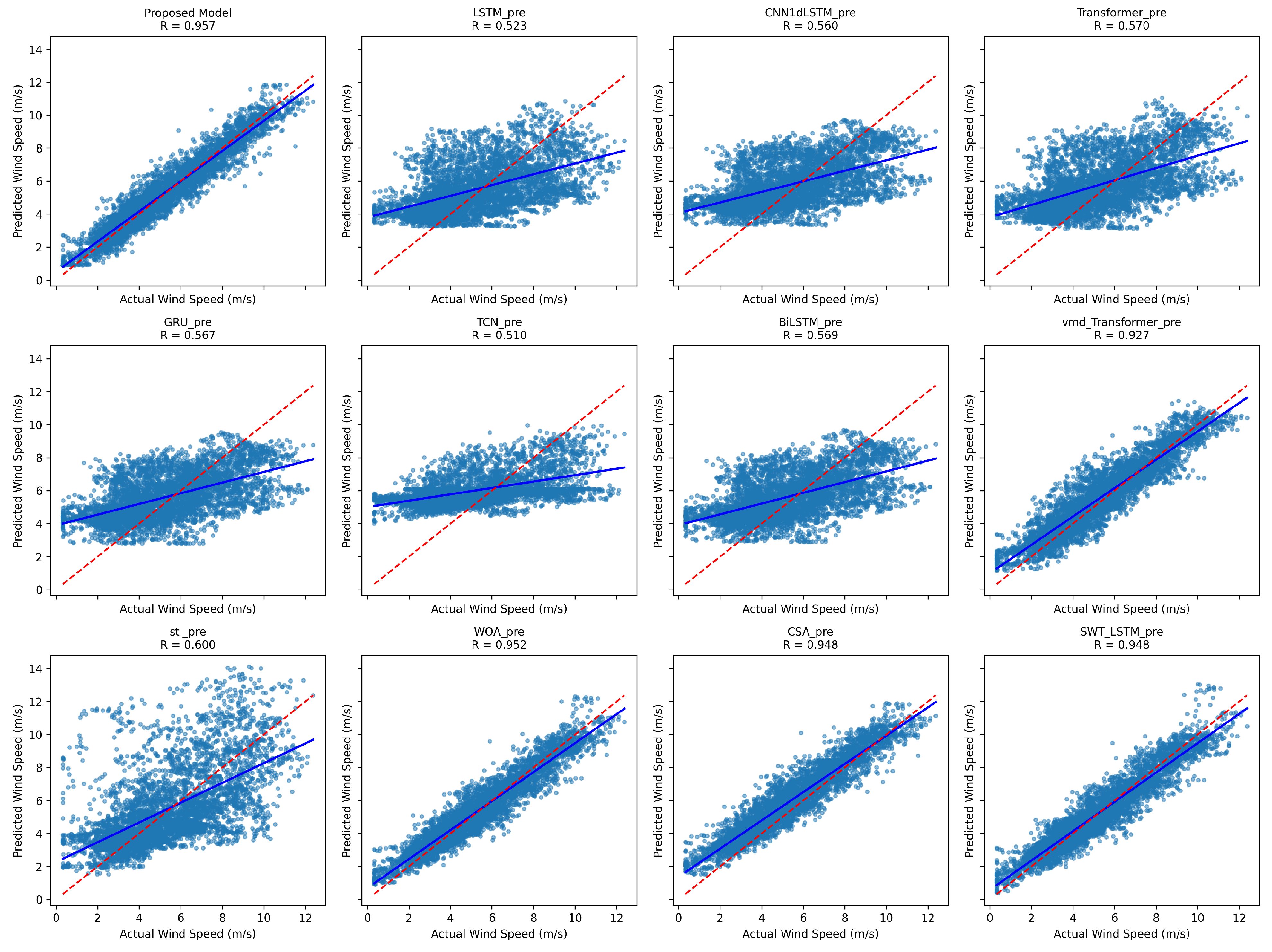

Comparative experiments further validate the performance advantages of the proposed approach. Compared to typical single deep learning models (LSTM, CNN1d-LSTM, Transformer, TCN, GRU, BiLSTM), on average over the three datasets, the proposed model achieves over reductions in MAE and RMSE, and an improvement of more than in R², demonstrating the effectiveness of the hybrid ensemble strategy. Furthermore, in contrast to conventional preprocessing–AI model combinations such as STL-LSTM and VMD-Transformer, the proposed framework reduces MAE and RMSE by approximately , and increases R² by on average over the three datasets, highlighting the advantages of dynamic multi-variable prediction. Additionally, when compared with output-combination methods using traditional optimization algorithms (e.g., WOA, CSA), the proposed model consistently outperforms them across all metrics, confirming the effectiveness of the Transformer-based nonlinear fusion strategy.

Based on the wind speed-forecasting framework proposed in this study, several strategic approaches can be adopted to adapt the model to new locations. First, the multi-resolution decomposition mechanism of the Stationary Wavelet Transform (SWT) naturally accommodates different wind regimes. Second, the Transformer-based fusion mechanism, with its attention weights, can dynamically adjust the contributions of different features according to varying geographical and climatic conditions, thereby autonomously adapting to the relationships between meteorological factors and wind speed at different sites and ensuring strong transferability. Although the outstanding performance of the proposed model on three datasets from distinct geographical and climatic contexts already demonstrates its robustness, further adaptation strategies may be required when applying the model to entirely new locations. For such cases, a two-stage adaptation strategy can be employed. In the first stage, limited fine-tuning with a small amount of local data can be conducted, for instance by fine-tuning the parameters of SWT (e.g., wavelet basis functions and decomposition levels) to optimally capture the dominant fluctuation characteristics of local wind patterns. In the second stage, a continual learning mechanism can be established to progressively incorporate new data and adapt to evolving environmental conditions.

Despite the promising results in model design and forecasting accuracy, several future directions warrant further investigation:

- (1) Expansion of input features: Currently, the model focuses primarily on wind speed and turbulence intensity. Future research could incorporate additional meteorological variables such as boundary layer height, cloud cover, and humidity to improve sensitivity to complex climatic drivers.

- (2) Hyperparameter tuning approach: Some hyperparameters of the deep learning model were determined through empirical settings or trial-and-error methods under the guidance of validation performance, which makes it difficult to ensure that the global optimum is achieved. In future work, we plan to explore more systematic and efficient hyperparameter optimization strategies, including Bayesian optimization and meta-heuristic algorithms such as particle swarm optimization and genetic algorithms, so as to further improve model performance.

- (3) Fusion strategy optimization: Although the Transformer mechanism shows strong performance in feature fusion, its parameter redundancy and computational cost remain concerns. Lightweight attention mechanisms (e.g., Performer, Linformer) could be explored to retain accuracy while reducing training overhead.

- (4) Extreme wind event forecasting: While the model performs well overall, its accuracy in predicting sudden gusts or extreme wind events needs further enhancement. Future efforts may involve integrating extreme value theory or imbalanced learning techniques to improve early-warning performance under extreme conditions.

In conclusion, this study presents a feasible and effective approach for constructing a multi-scale wind speed-forecasting system with deep feature-extraction capabilities. Future research will focus on feature enrichment, multi-site adaptability, fusion optimization, and extreme event forecasting, thereby facilitating the deployment of the proposed model in real-world wind power systems.