Sampling-Based Next-Event Prediction for Wind-Turbine Maintenance Processes

Abstract

1. Introduction

- This paper presents an approach for obtaining sampled logs based on trace importance values. The importance of each trace in the event log is first quantified, and traces with higher importance are then selected to form the sampled log. This reduces the computational resources required for next-event prediction in wind-turbine maintenance processes (WTMPs), improves experimental efficiency, and mitigates the risk of overfitting. Unlike commonly used approaches such as random and stratified sampling, the proposed approach treats the complete trace as the basic unit, which preserves the temporal order and dependencies of events and captures the primary execution paths;

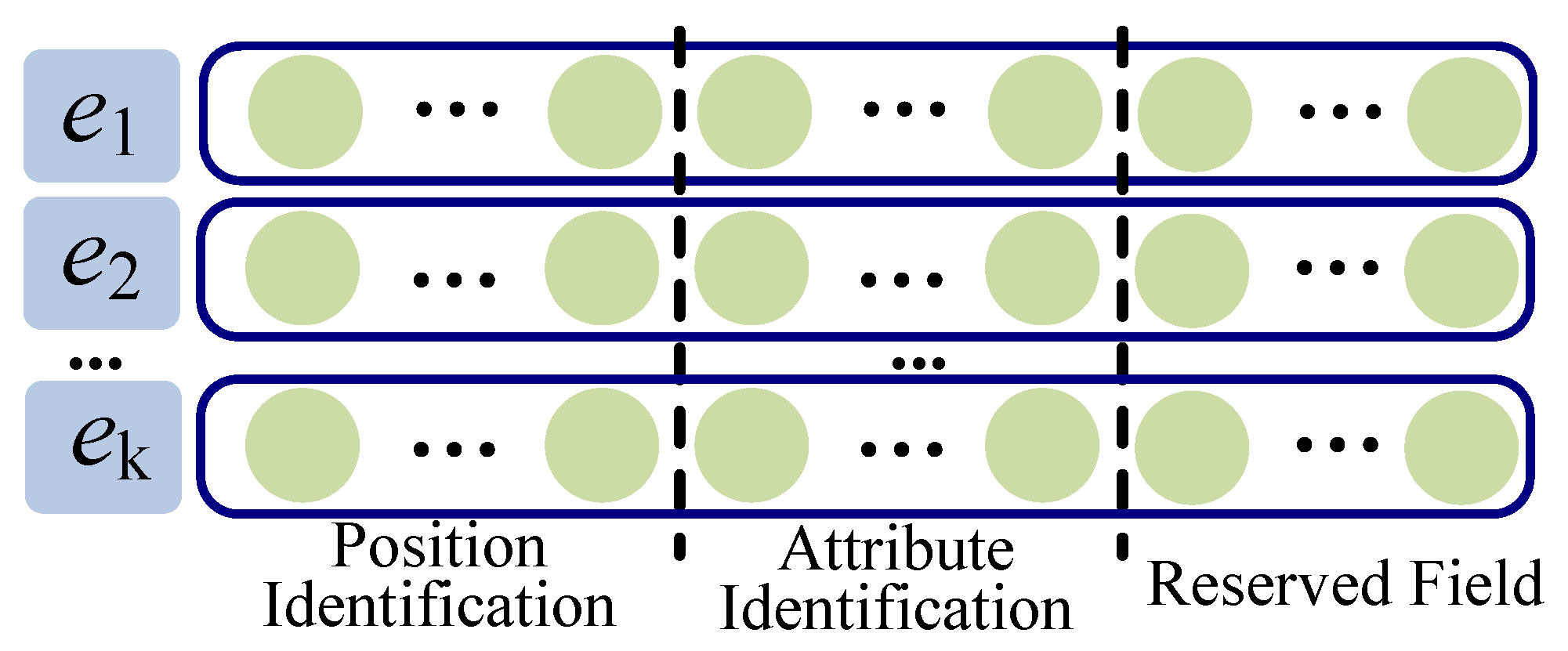

- The approach uses one-hot encoding to encode the trace prefixes: each activity is represented as an independent binary vector, which gives a straightforward and unambiguous representation. Based on these encoded sequences, six prediction models are designed to capture the temporal dependencies between events and the underlying causal structure of the process, thereby improving next-event prediction performance for wind-turbine maintenance. Moreover, one-hot encoding helps the models learn the logical flow and sequence characteristics of WTMPs, which improves generalization and reduces the risk of overfitting.
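The prefix encoding described in the second contribution can be illustrated with a minimal sketch. The function names, the left-padding with all-zero rows, and the `max_len` cut-off are assumptions made for illustration only; the paper does not specify these details here. The activity abbreviations are those of the example event log.

```python
from typing import Dict, List, Tuple

def build_vocabulary(traces: List[List[str]]) -> Dict[str, int]:
    """Map each distinct activity to a column index (sorted for determinism)."""
    activities = sorted({activity for trace in traces for activity in trace})
    return {activity: idx for idx, activity in enumerate(activities)}

def one_hot(activity: str, vocab: Dict[str, int]) -> List[int]:
    """Binary vector with a single 1 at the activity's index."""
    vector = [0] * len(vocab)
    vector[vocab[activity]] = 1
    return vector

def encode_prefixes(
    traces: List[List[str]], vocab: Dict[str, int], max_len: int
) -> List[Tuple[List[List[int]], int]]:
    """Turn every prefix of every trace into (encoded prefix, next-activity index).

    Assumption: prefixes are left-padded with all-zero rows up to max_len,
    and longer prefixes keep only their last max_len events.
    """
    samples = []
    for trace in traces:
        for k in range(1, len(trace)):
            prefix = trace[:k][-max_len:]
            encoded = [one_hot(activity, vocab) for activity in prefix]
            padding = [[0] * len(vocab) for _ in range(max_len - len(encoded))]
            samples.append((padding + encoded, vocab[trace[k]]))
    return samples

# One trace taken from the example event log (activity abbreviations only).
traces = [["WPC", "IWP", "AWP", "ASM", "CON"]]
vocab = build_vocabulary(traces)
samples = encode_prefixes(traces, vocab, max_len=4)
```

Each sample pairs a fixed-size matrix (`max_len` rows, one column per activity) with the index of the activity to be predicted, which is the shape a sequence model typically expects.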

2. Related Work

2.1. Wind-Turbine Maintenance Management

2.2. Business Process Next-Event Prediction

2.3. Business Process Event Log Sampling

3. Preliminaries

3.1. Wind-Turbine Maintenance Process Event Logs

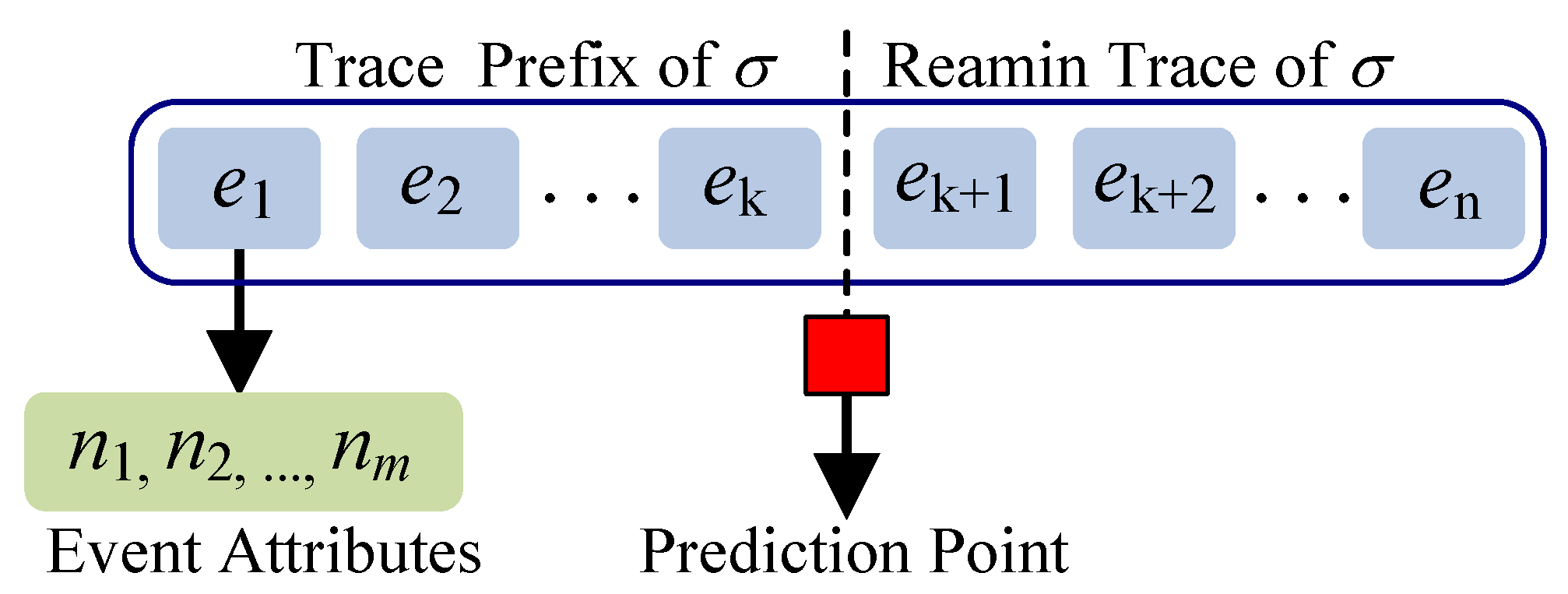

3.2. Next-Event Prediction

4. Sampling-Based Next-Event Prediction for the WTMP

4.1. An Approach Overview

- Phase 1. WTMP Event Log Pre-processing and Sampling. Before the prediction model is constructed, the WTMP event log is divided into a training log and a validation log. A novel event log sampling technique is proposed that extracts a representative sample from the original WTMP training log by quantifying the importance of individual traces. Note that any existing sampling technique can be applied in this phase. Because sampling reduces the size of the training log, the model training time is reduced as well; and

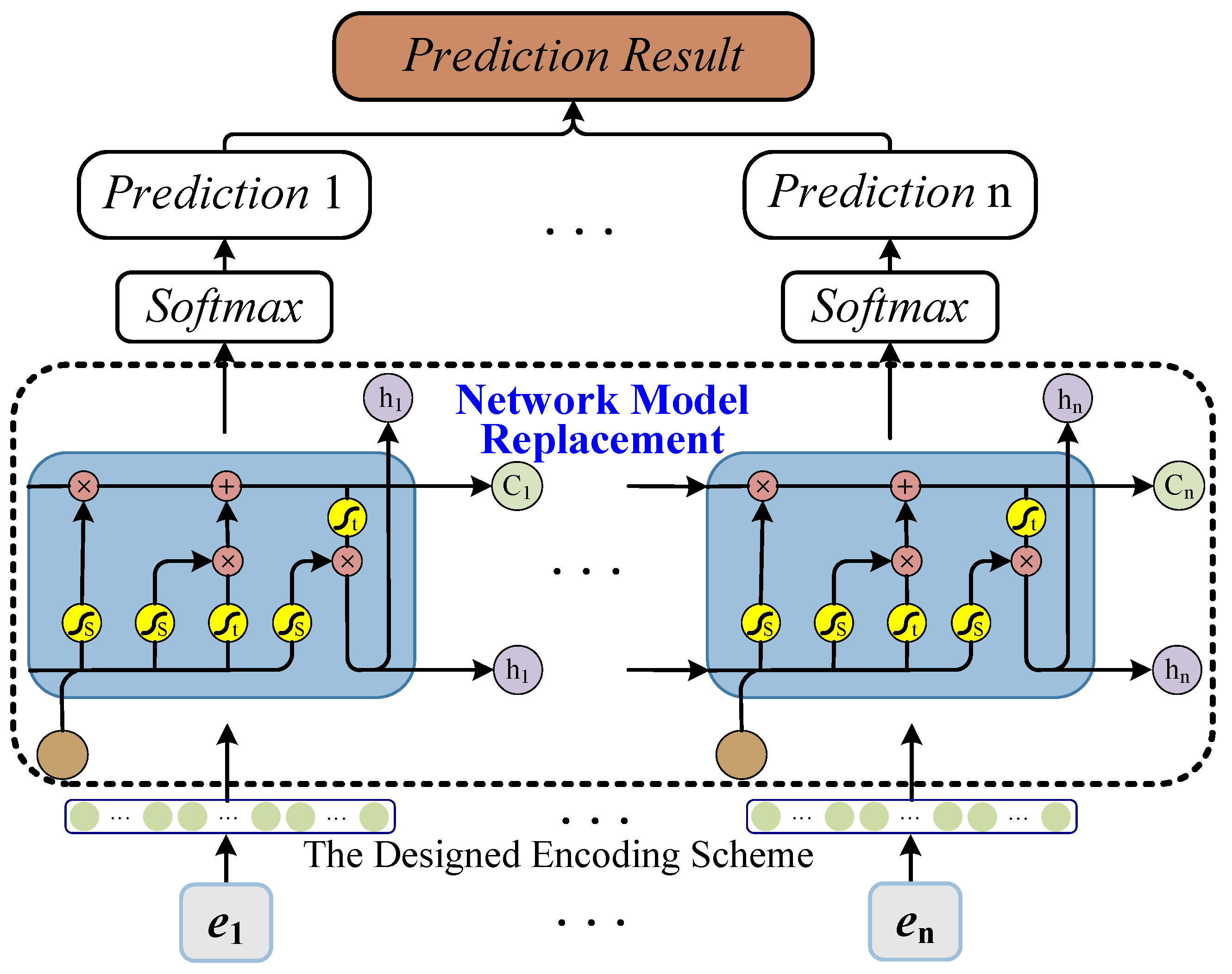

- Phase 2. Model Training and Prediction. The trace prefixes of the sampled logs are encoded using one-hot encoding and fed into six deep-learning models for next-event prediction. Six state-of-the-art deep-learning prediction models (LSTM, GRU, Bi-GRU, Bi-LSTM, RNN, and Transformer) are applied as baselines. Accuracy is used to quantify the prediction quality obtained with the sampled log relative to the original one. As for performance, the sum of the sampling time and the prediction time using the sampled log is compared with the prediction time required when using the original WTMP event log.
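The two comparisons named in Phase 2 can be sketched as follows. This is only an illustration under stated assumptions: the helper names and the percentage-change formula are hypothetical and are not taken from the paper's metric definitions, and all numbers are made-up placeholders rather than experimental results.

```python
from typing import Sequence

def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of next-event predictions that match the true next event."""
    if len(predicted) != len(actual) or len(actual) == 0:
        raise ValueError("predicted and actual must be non-empty and equally long")
    hits = sum(p == a for p, a in zip(predicted, actual))
    return hits / len(actual)

def relative_change(sampled_value: float, original_value: float) -> float:
    """Percentage change of the sampled-log value relative to the original-log value.

    Assumption: a simple relative difference; the paper's exact metric may differ.
    """
    return 100.0 * (sampled_value - original_value) / original_value

# Hypothetical predictions on a validation log (placeholders, not real results).
truth = ["IWP", "AWP", "ASM", "CON"]
acc_original = accuracy(["IWP", "AWP", "ASM", "CON"], truth)
acc_sampled = accuracy(["IWP", "AWP", "CON", "CON"], truth)
accuracy_change = relative_change(acc_sampled, acc_original)

# Efficiency: sampling time plus time on the sampled log vs. time on the original log.
sampling_seconds = 12.0        # placeholder
sampled_log_seconds = 60.0     # placeholder
original_log_seconds = 120.0   # placeholder
sampled_total = sampling_seconds + sampled_log_seconds
time_change = relative_change(sampled_total, original_log_seconds)
```

With these placeholder values, a negative `accuracy_change` means the sampled log lost accuracy, and a negative `time_change` means the sampled pipeline (sampling included) was faster than using the original log.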

4.2. WTMP Event Log Sampling

4.3. Sampling-Based Prediction Model Training and Next-Event Prediction

5. Experimental Evaluation

5.1. Experimental Setup and Baseline

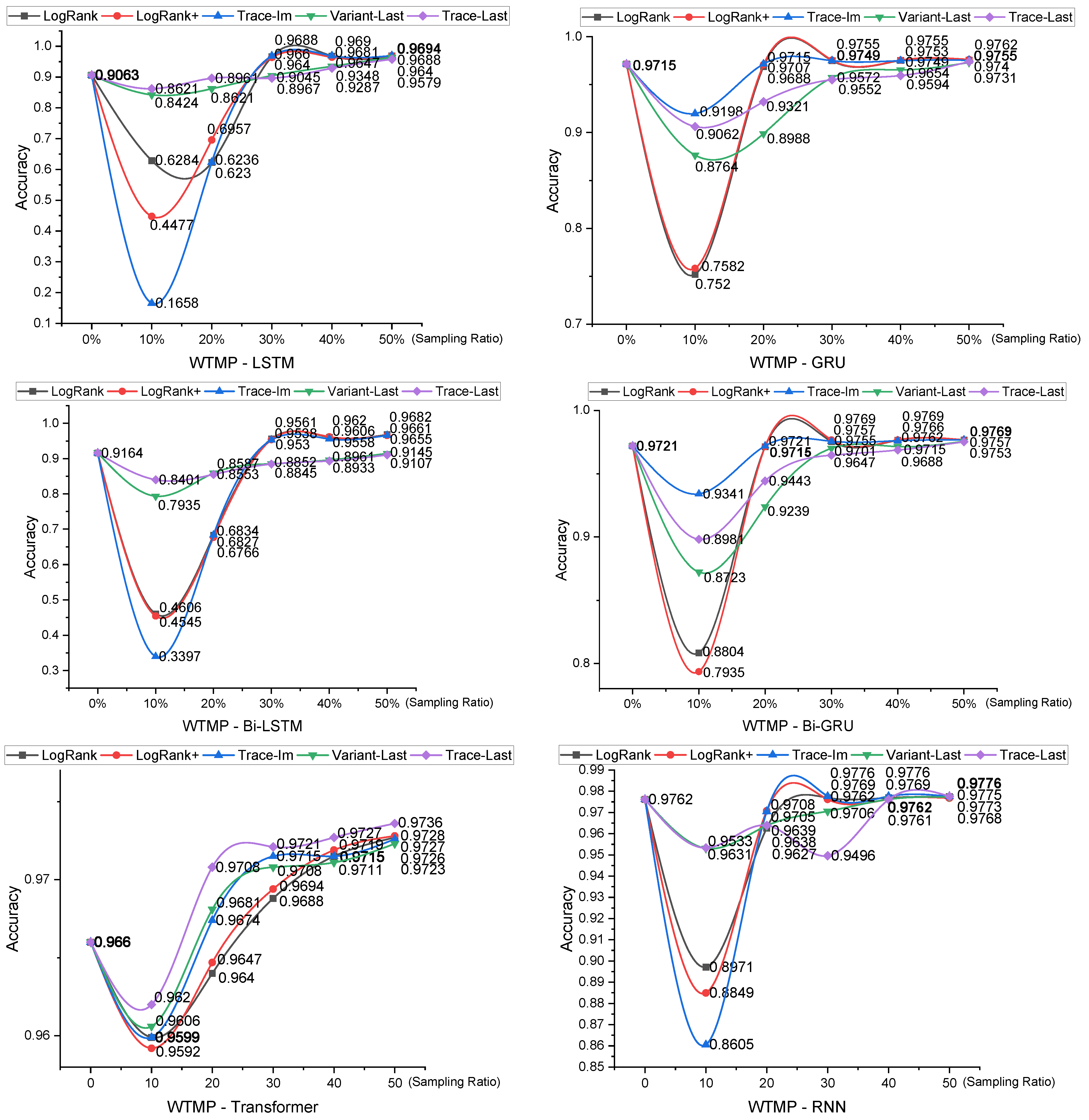

- Trace-Im sampling technique. The proposed Trace-Im technique quantifies the importance of each trace based on its activities and directly-follows relations.

- LogRank sampling technique [29]. LogRank is a well-known log sampling technique that implements a graph-based ranking mechanism. It first abstracts an event log into a graph in which each node represents a trace and each edge represents the similarity between two traces, and then applies the PageRank algorithm to rank all variants iteratively.

- LogRank+ sampling technique [30]. LogRank+ uses the similarity between a trace and the rest of the log as the importance value of that trace.

- Variant-Last sampling technique [31]. The Variant-Last technique randomly selects traces from the original event log, subject to a threshold condition on the number of variants. The trace set is updated continuously until the sampled log contains a specified number of variants.

- Trace-Last sampling technique [31]. The Trace-Last technique randomly selects traces from the original event log until a predetermined number is reached; these traces form the sampled log. This strategy keeps the selected traces diverse and random and prevents the same trace from being selected repeatedly.
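To make the idea of importance-based sampling concrete, the following is a minimal sketch in the spirit of Trace-Im. The scoring function is an assumption made for illustration (summed relative frequencies of a trace's activities and directly-follows pairs); it is not the paper's definition of trace importance, and the names `directly_follows` and `sample_by_importance` are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Trace = List[str]

def directly_follows(trace: Trace) -> List[Tuple[str, str]]:
    """All pairs (a, b) where b occurs immediately after a in the trace."""
    return list(zip(trace, trace[1:]))

def sample_by_importance(log: List[Trace], ratio: float) -> List[Trace]:
    """Keep the top `ratio` share of traces, ranked by an assumed importance score.

    Whole traces are kept as the sampling unit, so event order is preserved.
    """
    activity_freq = Counter(a for trace in log for a in trace)
    df_freq = Counter(pair for trace in log for pair in directly_follows(trace))
    total_activities = sum(activity_freq.values())
    total_pairs = sum(df_freq.values())

    def score(trace: Trace) -> float:
        # Assumed score: how much of the log's behaviour the trace covers.
        activity_part = sum(activity_freq[a] / total_activities for a in set(trace))
        pair_part = 0.0
        if total_pairs:
            pair_part = sum(
                df_freq[p] / total_pairs for p in set(directly_follows(trace))
            )
        return activity_part + pair_part

    keep_count = max(1, round(ratio * len(log)))
    ranked = sorted(range(len(log)), key=lambda i: score(log[i]), reverse=True)
    kept_indices = sorted(ranked[:keep_count])  # restore original log order
    return [log[i] for i in kept_indices]

# Toy log using the activity abbreviations of the example event log.
log = [
    ["WPC", "IWP", "AWP", "ASM", "CON"],
    ["WPC", "IWP", "AWP", "ASM", "CON"],
    ["WPC", "IWP", "AWP", "CON"],
    ["WPC", "CON"],
]
sampled = sample_by_importance(log, ratio=0.5)
```

In this toy log the two complete traces cover the most frequent activities and directly-follows pairs, so they form the 50% sample. A random technique such as Trace-Last would instead draw traces without regard to such a score.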

5.2. Evaluation Metrics

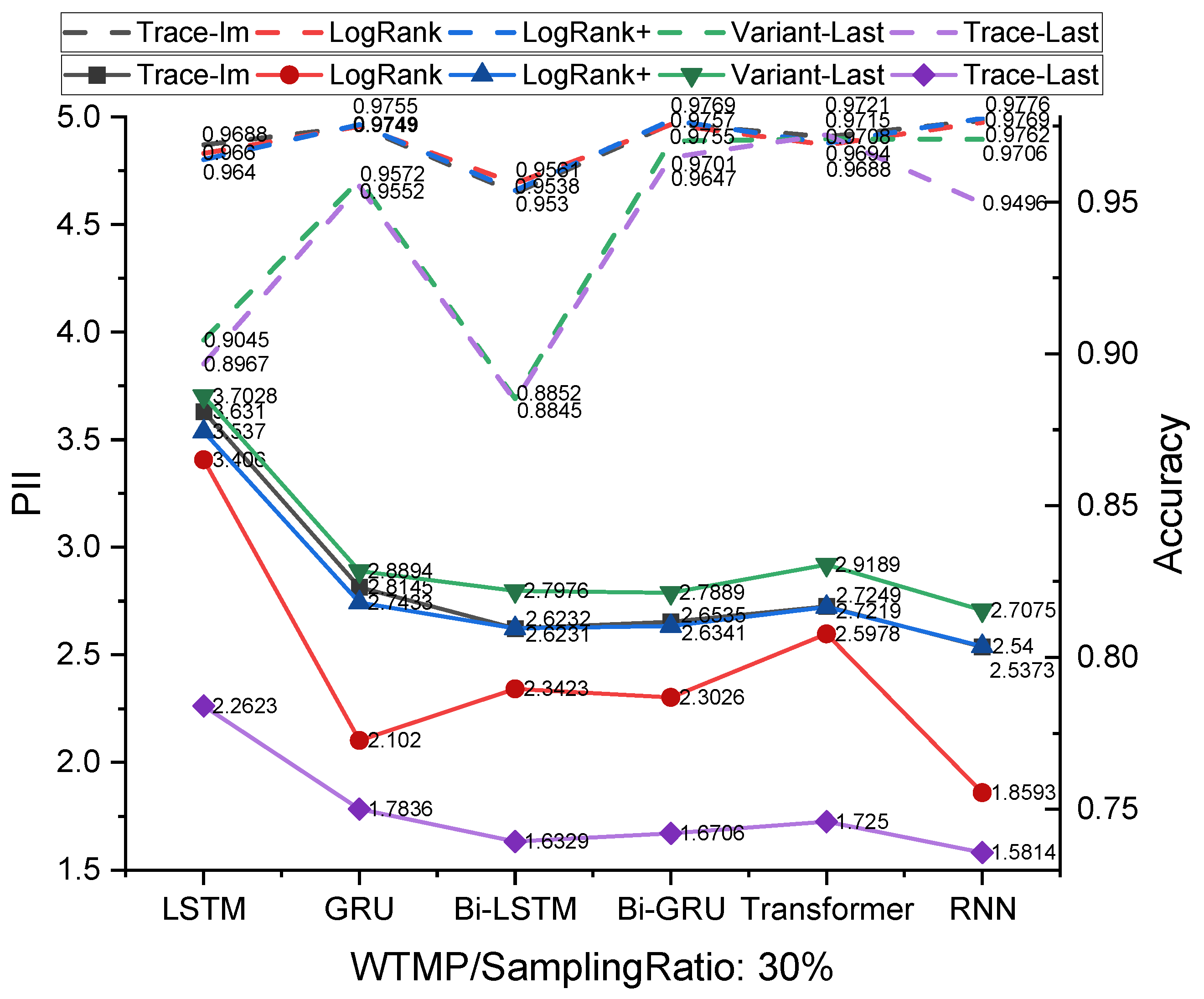

5.3. Experimental Results and Analysis

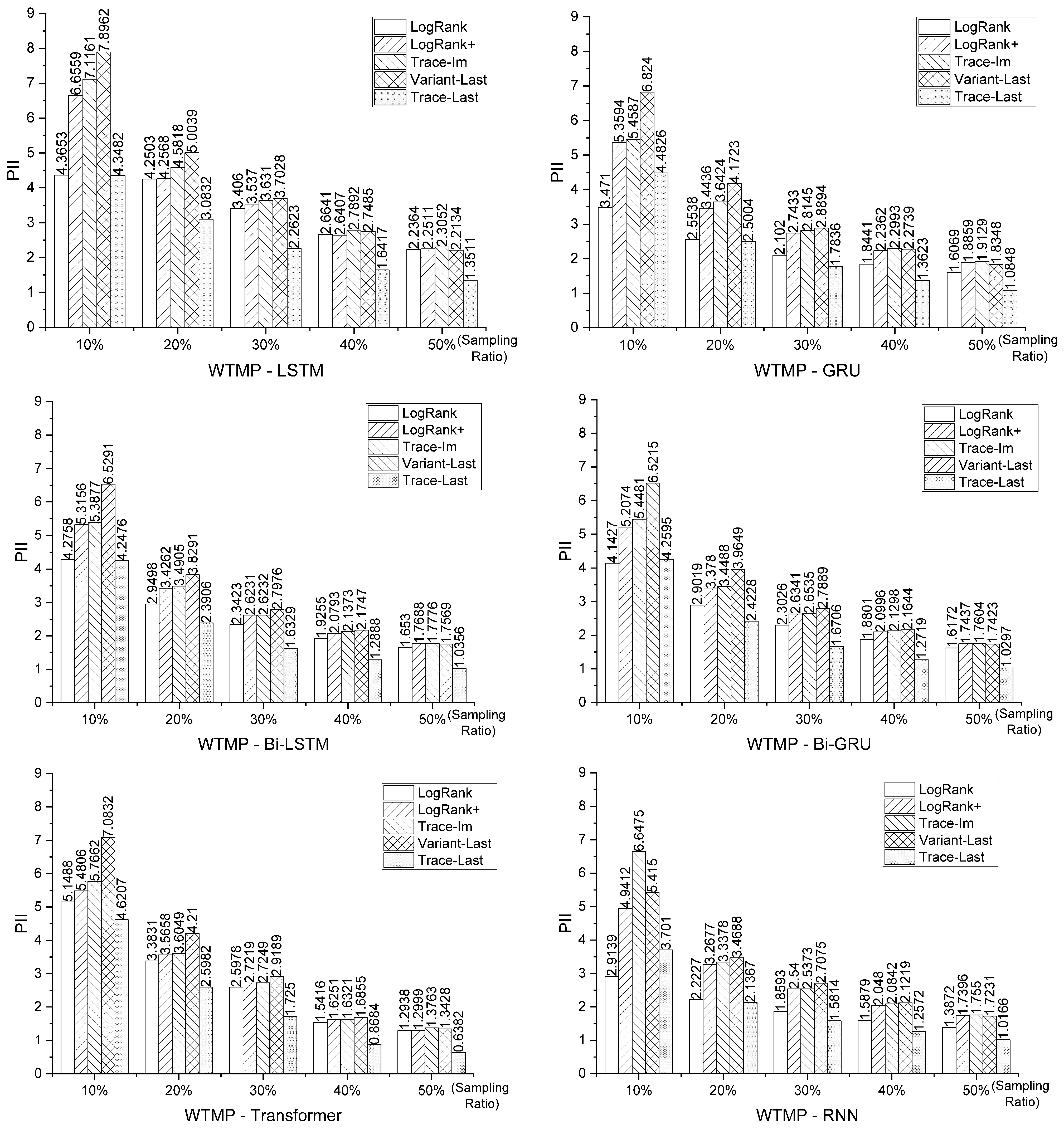

5.3.1. Prediction Accuracy Comparison

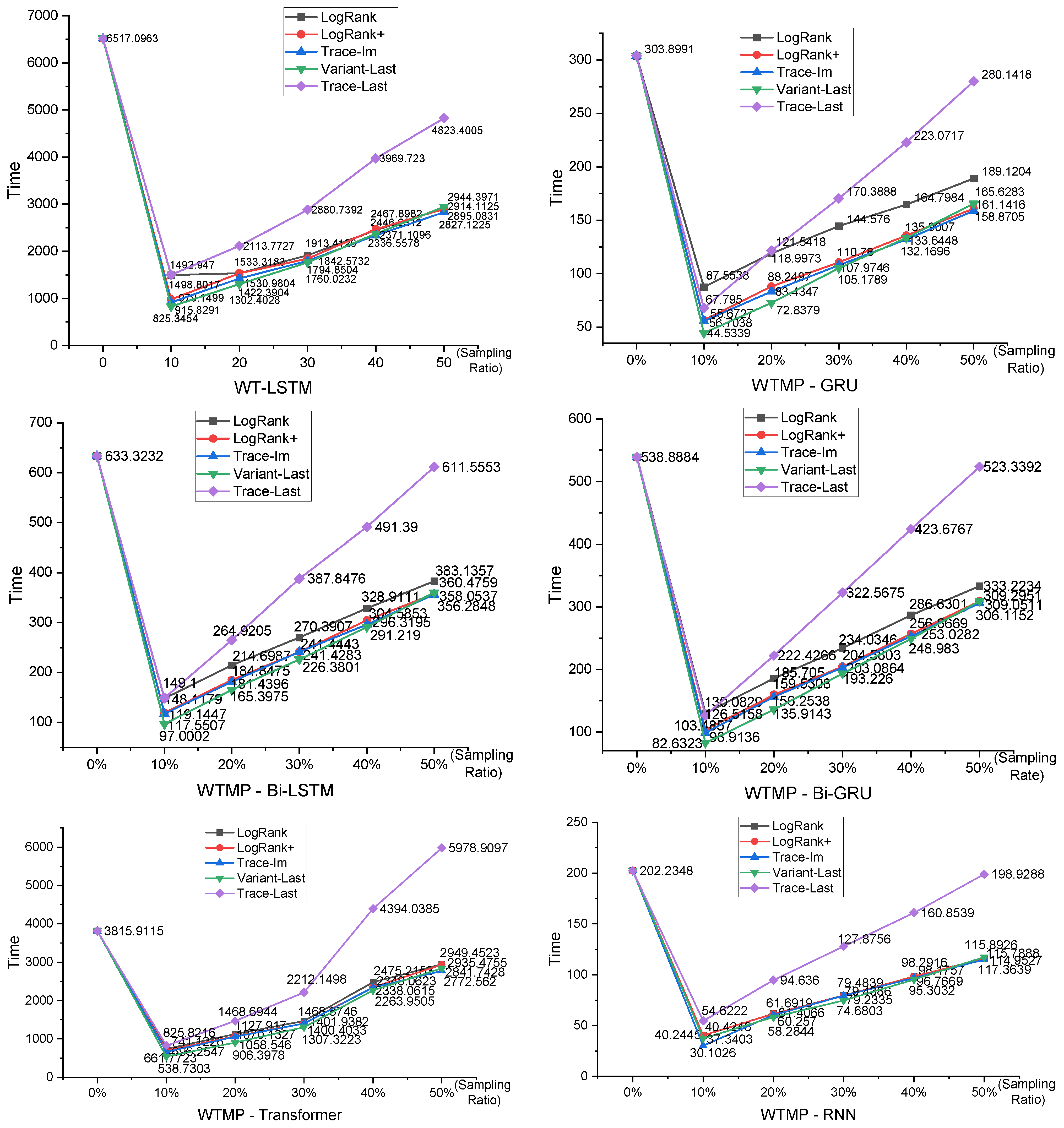

5.3.2. Prediction Efficiency Comparison

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bashir, M.B.A. Principle parameters and environmental impacts that affect the performance of wind turbine: An overview. Arab. J. Sci. Eng. 2022, 47, 7891–7909.

- Márquez-Chamorro, A.E.; Resinas, M.; Ruiz-Cortes, A. Predictive monitoring of business processes: A survey. IEEE Trans. Serv. Comput. 2017, 11, 962–977.

- Maggi, F.M.; Di Francescomarino, C.; Dumas, M.; Ghidini, C. Predictive monitoring of business processes. In Proceedings of the CAiSE 2014, Thessaloniki, Greece, 16–20 June 2014; Springer: Cham, Switzerland, 2014; pp. 457–472.

- Lakshmanan, G.T.; Shamsi, D.; Doganata, Y.N.; Unuvar, M.; Khalaf, R. A Markov prediction model for data-driven semi-structured business processes. Knowl. Inf. Syst. 2015, 42, 97–126.

- Leontjeva, A.; Conforti, R.; Di Francescomarino, C.; Dumas, M.; Maggi, F.M. Complex symbolic sequence encodings for predictive monitoring of business processes. In Proceedings of the BPM 2015, Innsbruck, Austria, 31 August–3 September 2015; Springer: Cham, Switzerland, 2015; pp. 297–313.

- Breuker, D.; Matzner, M.; Delfmann, P.; Becker, J. Comprehensible predictive models for business processes. MIS Q. 2016, 40, 1009–1034.

- Evermann, J.; Rehse, J.R.; Fettke, P. Predicting process behaviour using deep learning. Decis. Support Syst. 2017, 100, 129–140.

- Chen, J.X.; Jiang, D.; Zhang, Y.N. A Hierarchical Bidirectional GRU Model With Attention for EEG-Based Emotion Classification. IEEE Access 2019, 7, 118530–118540.

- Jalayer, A.; Kahani, M.; Pourmasoumi, A.; Beheshti, A. HAM-Net: Predictive Business Process Monitoring with a hierarchical attention mechanism. KBS 2022, 236, 107722.

- Niu, D.; Yu, M.; Sun, L.; Gao, T.; Wang, K. Short-term multi-energy load forecasting for integrated energy systems based on CNN-BiGRU optimized by attention mechanism. Appl. Energy 2022, 313, 118801.

- Elman, J.L. Finding structure in time. Cogn. Sci. 1990, 14, 179–211.

- Vaswani, A.; Shazeer, N.; Parmar, N. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Ruschel, E.; Santos, E.A.P.; Loures, E.d.F.R. Establishment of maintenance inspection intervals: An application of process mining techniques in manufacturing. J. Intell. Manuf. 2020, 31, 53–72.

- Dong, X.; Gao, D.; Li, J.; Li, S. Evaluation model on uncertainty of the wind turbine state. Sustain. Energy Technol. Assess. 2021, 46, 101303.

- Rocchetta, R.; Bellani, L.; Compare, M.; Zio, E.; Patelli, E. A reinforcement learning framework for optimal operation and maintenance of power grids. Appl. Energy 2019, 241, 291–301.

- Garcia Marquez, F.P.; Peinado Gonzalo, A. A comprehensive review of artificial intelligence and wind energy. Arch. Comput. Method Eng. 2022, 29, 2935–2958.

- Gonzalo, A.P.; Benmessaoud, T.; Entezami, M.; Márquez, F.P.G. Optimal maintenance management of offshore wind turbines by minimizing the costs. Sustain. Energy Technol. Assess. 2022, 52, 102230.

- Guo, N.; Liu, C.; Mo, Q.; Cao, J.; Ouyang, C.; Lu, X.; Zeng, Q. Business Process Remaining Time Prediction Based on Incremental Event Logs. IEEE Trans. Serv. Comput. 2025, 18, 1308–1320.

- Gómez Muñoz, C.Q.; García Márquez, F.P.; Arcos, A.; Cheng, L.; Kogia, M.; Papaelias, M. Calculus of the defect severity with EMATs by analysing the attenuation curves of the guided waves. Smart Struct. Syst. 2017, 19, 195–202.

- Yang, Y.; Sørensen, J.D. Cost-optimal maintenance planning for defects on wind turbine blades. Energies 2019, 12, 998.

- Mensah, A.F.; Dueñas-Osorio, L. A closed-form technique for the reliability and risk assessment of wind turbine systems. Energies 2012, 5, 1734–1750.

- Hussain, B.; Afzal, M.K.; Ahmad, S.; Mostafa, A.M. Intelligent traffic flow prediction using optimized GRU model. IEEE Access 2021, 9, 100736–100746.

- Mohammadi Farsani, R.; Pazouki, E. A transformer self-attention model for time series forecasting. JECEI 2020, 9, 1–10.

- Fani Sani, M.; van Zelst, S.J.; van der Aalst, W.M.P. The impact of event log subset selection on the performance of process discovery algorithms. In Proceedings of the ADBIS 2019, Bled, Slovenia, 8–11 September 2019; Springer: Cham, Switzerland, 2019; pp. 391–404.

- Wen, L.; Van Der Aalst, W.M.; Wang, J.; Sun, J. Mining process models with non-free-choice constructs. Data Min. Knowl. Discov. 2007, 15, 145–180.

- Leemans, S.J.; Fahland, D.; van der Aalst, W.M.P. Discovering block-structured process models from event logs containing infrequent behaviour. In Proceedings of the BPM 2013, Beijing, China, 26–30 August 2013; Springer: Cham, Switzerland, 2014; pp. 66–78.

- Berti, A. Statistical sampling in process mining discovery. In Proceedings of the IARIA eKNOW 2017, Nice, France, 19–23 March 2017; pp. 41–43.

- Bauer, M.; Senderovich, A.; Gal, A.; Grunske, L.; Weidlich, M. How much event data is enough? A statistical framework for process discovery. In Proceedings of the CAiSE 2018, Tallinn, Estonia, 11–15 June 2018; Springer: Cham, Switzerland, 2018; pp. 239–256.

- Liu, C.; Pei, Y.; Zeng, Q.; Duan, H. LogRank: An approach to sample business process event log for efficient discovery. In Proceedings of the KSEM 2018, Changchun, China, 17–19 August 2018; Springer: Cham, Switzerland, 2018; pp. 415–425.

- Liu, C.; Pei, Y.; Zeng, Q.; Duan, H.; Zhang, F. LogRank+: A Novel Approach to Support Business Process Event Log Sampling. In Proceedings of the WISE 2020, Amsterdam, The Netherlands, 20–24 October 2020; pp. 417–430.

- Fani Sani, M.; Vazifehdoostirani, M.; Park, G.; Pegoraro, M.; van Zelst, S.J.; van der Aalst, W.M.P. Event log sampling for predictive monitoring. In Proceedings of the ICPM 2021, Eindhoven, The Netherlands, 31 October–4 November 2021; Springer International Publishing: Cham, Switzerland, 2021; pp. 154–166.

- Liu, C.; Huiling, L.; Zhang, S.; Cheng, L.; Zeng, Q. Cross-department collaborative healthcare process model discovery from event logs. IEEE Trans. Autom. Sci. Eng. 2022, 20, 2115–2125.

- Su, X.; Liu, C.; Zhang, S.; Zeng, Q. Sampling business process event logs with guarantees. Concurr. Comput. Pract. Exp. 2024, 36, e8077.

- Guo, N.; Cong, L.; Li, C.; Zeng, Q.; Ouyang, C.; Liu, Q. Explainable and effective process remaining time prediction using feature-informed cascade prediction model. IEEE Trans. Serv. Comput. 2024, 17, 949–962.

| Event | Case ID | Activity | Timestamp | Resource |
|---|---|---|---|---|
| e1 | 102C20200003 | Work Permit form Completion (WPC) | 2020/1/20 10:16:00 | Peter |
| e2 | 102C20200003 | Issuance of Work Permit (IWP) | 2020/1/20 10:18:00 | David |
| e3 | 102C20200003 | Approval for Work Permit (AWP) | 2020/1/20 17:07:00 | Aidan |
| e4 | 102C20200003 | Arrangement of Safety Measures (ASM) | 2020/1/20 17:36:00 | Bertie |
| e5 | 102C20200003 | Confirmation (CON) | 2020/1/20 17:41:00 | Eric |
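As a small illustration of how such event records relate to traces, the sketch below groups the five events of the table by case ID and orders them by timestamp. The tuple layout and the function name `to_traces` are assumptions chosen for this example; the events are deliberately listed out of order to show the effect of sorting.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple

# (event id, case id, activity abbreviation, timestamp, resource), as in the table.
Event = Tuple[str, str, str, str, str]

events: List[Event] = [
    ("e3", "102C20200003", "AWP", "2020/1/20 17:07:00", "Aidan"),
    ("e1", "102C20200003", "WPC", "2020/1/20 10:16:00", "Peter"),
    ("e5", "102C20200003", "CON", "2020/1/20 17:41:00", "Eric"),
    ("e2", "102C20200003", "IWP", "2020/1/20 10:18:00", "David"),
    ("e4", "102C20200003", "ASM", "2020/1/20 17:36:00", "Bertie"),
]

def to_traces(records: List[Event]) -> Dict[str, List[str]]:
    """Group events by case ID and order each group by timestamp."""
    by_case = defaultdict(list)
    for _event_id, case_id, activity, timestamp, _resource in records:
        moment = datetime.strptime(timestamp, "%Y/%m/%d %H:%M:%S")
        by_case[case_id].append((moment, activity))
    # Sorting the (timestamp, activity) pairs yields the activity sequence.
    return {
        case_id: [activity for _moment, activity in sorted(items)]
        for case_id, items in by_case.items()
    }

traces_by_case = to_traces(events)
```

The resulting activity sequence for case `102C20200003` is the trace from which prefixes for next-event prediction would be taken.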

| Model | Input_Size | Hidden_Size | Num_Layers | Dropout | Batch_Size | Learning_Rate | Activation Function |
|---|---|---|---|---|---|---|---|
| LSTM | 20 | 128 | 3 | 0.1 | 64 | 0.001 | softmax |
| GRU | 20 | 128 | 3 | 0.1 | 64 | 0.001 | softmax |
| Bi-LSTM | 20 | 128 | 3 | 0.1 | 64 | 0.001 | softmax |
| Bi-GRU | 20 | 128 | 3 | 0.1 | 64 | 0.001 | softmax |
| RNN | 20 | 128 | 3 | 0.1 | 64 | 0.001 | softmax |
| Transformer | 30 | 256 | 4 | 0.1 | 64 | 0.001 | softmax |

Improvement ratio (%) by sampling technique, sampling ratio, and prediction model:

| Technique | Sampling Ratio | LSTM | GRU | Bi-LSTM | Bi-GRU | Transformer | RNN |
|---|---|---|---|---|---|---|---|
| Trace-Im | 10% | −81.706 | −5.322 | −62.931 | −3.909 | −0.632 | −11.852 |
| Trace-Im | 20% | −31.193 | 0 | −25.426 | 0 | 0.145 | −0.584 |
| Trace-Im | 30% | 6.896 | 0.350 | 3.994 | 0.370 | 0.569 | 0.143 |
| Trace-Im | 40% | 6.918 | 0.350 | 4.300 | 0.422 | 0.569 | 0.143 |
| Trace-Im | 50% | 6.962 | 0.412 | 5.423 | 0.494 | 0.683 | 0.143 |
| LogRank | 10% | −30.663 | −22.594 | −49.738 | −16.84 | −0.632 | −8.103 |
| LogRank | 20% | −31.259 | −0.278 | −25.502 | −0.062 | −0.207 | −1.383 |
| LogRank | 30% | 6.587 | 0.350 | 4.332 | 0.350 | 0.290 | 0.072 |
| LogRank | 40% | 6.819 | 0.391 | 4.823 | 0.463 | 0.569 | 0.072 |
| LogRank | 50% | 6.896 | 0.412 | 5.653 | 0.494 | 0.694 | 0.133 |
| LogRank+ | 10% | −50.601 | −21.956 | −50.404 | −18.373 | −0.704 | −9.353 |
| LogRank+ | 20% | −23.237 | −0.082 | −26.168 | −0.062 | −0.135 | −0.553 |
| LogRank+ | 30% | 6.367 | 0.412 | 4.081 | 0.494 | 0.352 | 0 |
| LogRank+ | 40% | 6.444 | 0.412 | 4.976 | 0.494 | 0.611 | 0 |
| LogRank+ | 50% | 6.962 | 0.484 | 5.358 | 0.494 | 0.704 | 0.062 |
| Variant-Last | 10% | −7.051 | −9.789 | −13.411 | −10.266 | −0.559 | −2.366 |
| Variant-Last | 20% | −4.877 | −7.483 | −6.296 | −4.958 | 0.217 | −1.270 |
| Variant-Last | 30% | −0.199 | −1.472 | −3.405 | −0.206 | 0.497 | −0.574 |
| Variant-Last | 40% | 3.145 | −0.628 | −2.215 | −0.062 | 0.528 | 0 |
| Variant-Last | 50% | 6.367 | 0.165 | −0.207 | 0.370 | 0.652 | 0.112 |
| Trace-Last | 10% | −4.877 | −6.722 | −8.326 | −7.612 | −0.414 | −2.346 |
| Trace-Last | 20% | −1.126 | −4.056 | −6.667 | −2.860 | 0.497 | −1.26 |
| Trace-Last | 30% | −1.059 | −1.678 | −3.481 | −0.761 | 0.631 | −2.725 |
| Trace-Last | 40% | 2.472 | −1.246 | −2.521 | −0.340 | 0.694 | −0.010 |
| Trace-Last | 50% | 5.694 | 0.257 | −0.622 | 0.329 | 0.787 | 0.143 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Liu, C.; Du, Q.; Zeng, Q.; Zhang, J.; Theodoropoulo, G.; Cheng, L. Sampling-Based Next-Event Prediction for Wind-Turbine Maintenance Processes. Energies 2025, 18, 4238. https://doi.org/10.3390/en18164238
