Exploring Augmented Reality HMD Telemetry Data Visualization for Strategy Optimization in Student Solar-Powered Car Racing

Abstract

1. Introduction

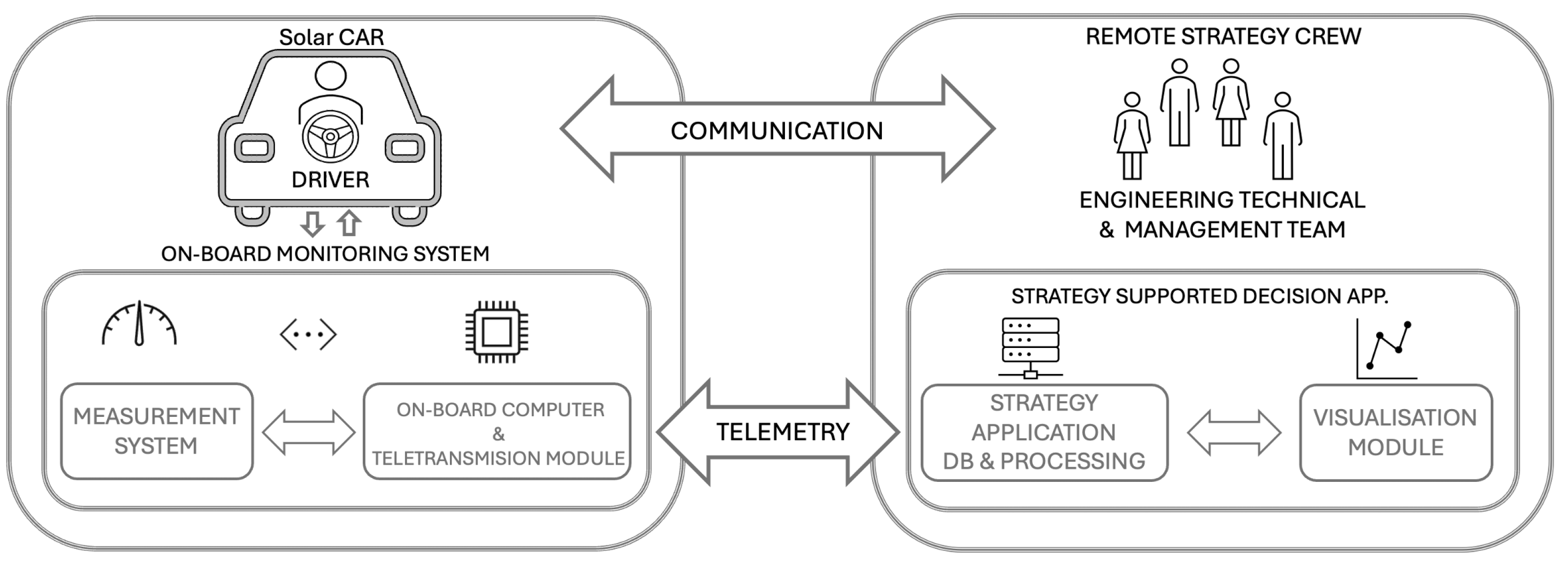

2. Telemetry-Related Work and System Overview

2.1. Telemetric Data Visualization

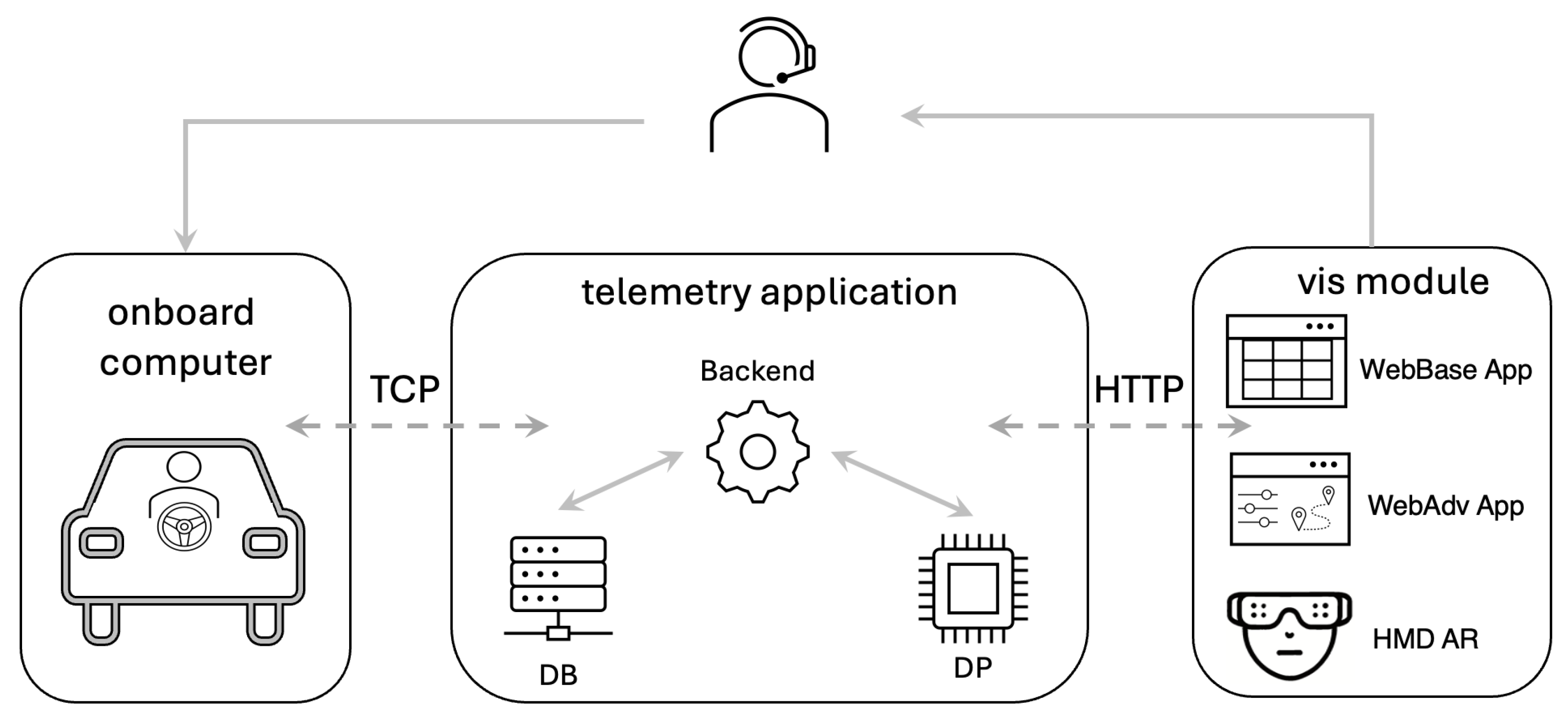

2.2. Telemetric Data Visualization Module for Eagle Two

2.3. System Overview

3. Telemetry Data Visualization Mode Investigation

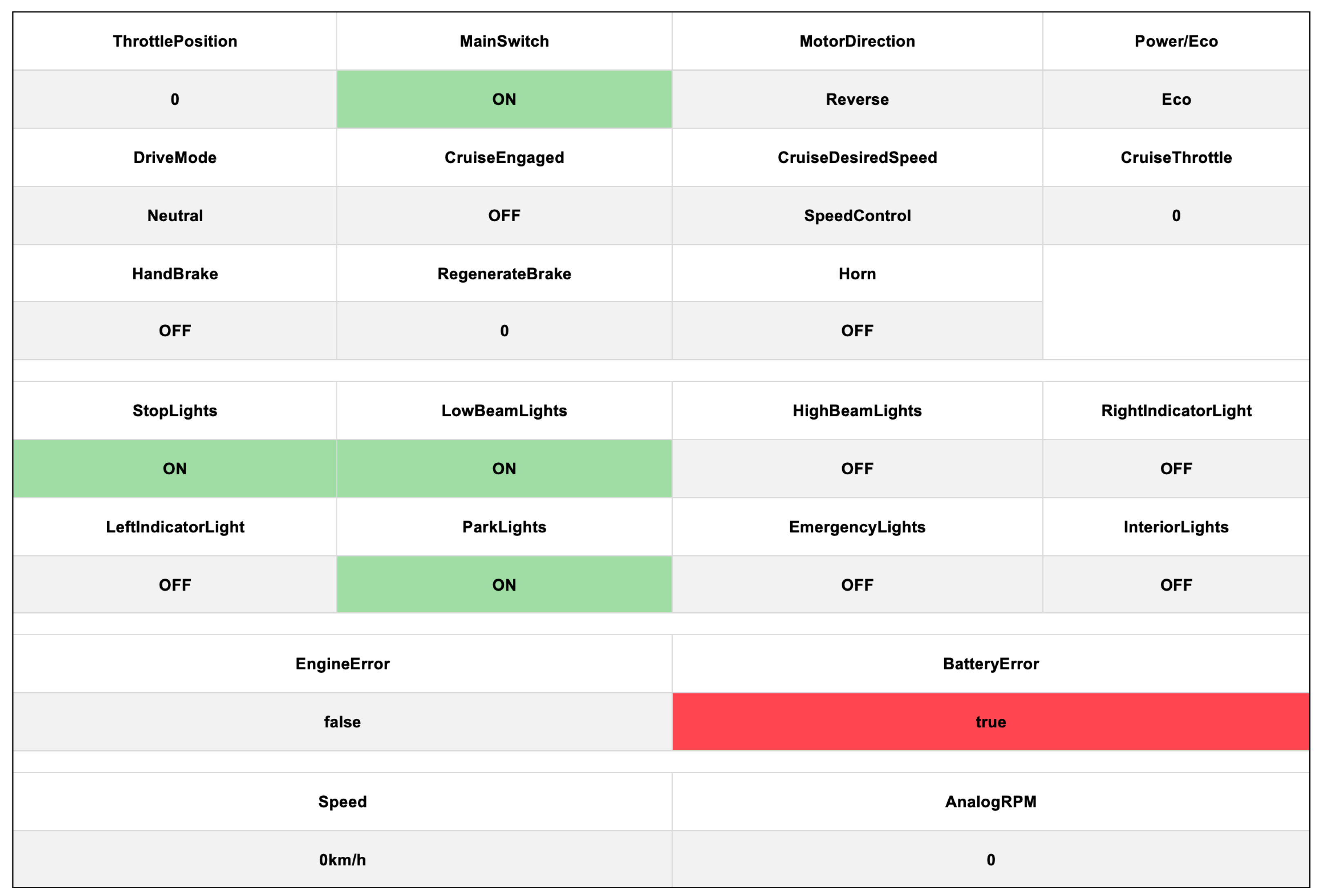

3.1. Basic Web Visualization Mode: WebBase App

3.2. Advanced Web Visualization Mode: WebAdv App

3.3. Augmented Reality Application

4. Evaluation

4.1. Research Questions

- RQ1: Does augmented reality-based data visualization for on-the-fly strategy adjustments in solar car races affect overall performance?

- RQ2: Which aspects of AR use influence performance on the individual standard tasks involved in effective telemetry-based Solar Race Car data analysis?

- RQ3: How does team members' experience level affect how effectively they use the information presented in the different interfaces?

4.2. User Study: Task-Based Evaluation

- A baseline web-based application with a simple, table-like interface (henceforth referred to as WebBase App);

- A more advanced web-based application that adds graphical elements but still uses a standard window view (henceforth referred to as WebAdv App);

- A head-mounted display–based augmented reality holographic interface viewed through HoloLens (henceforth referred to as HMD AR).

- T1 (tire puncture): A sudden drop in air pressure in one of the wheels.

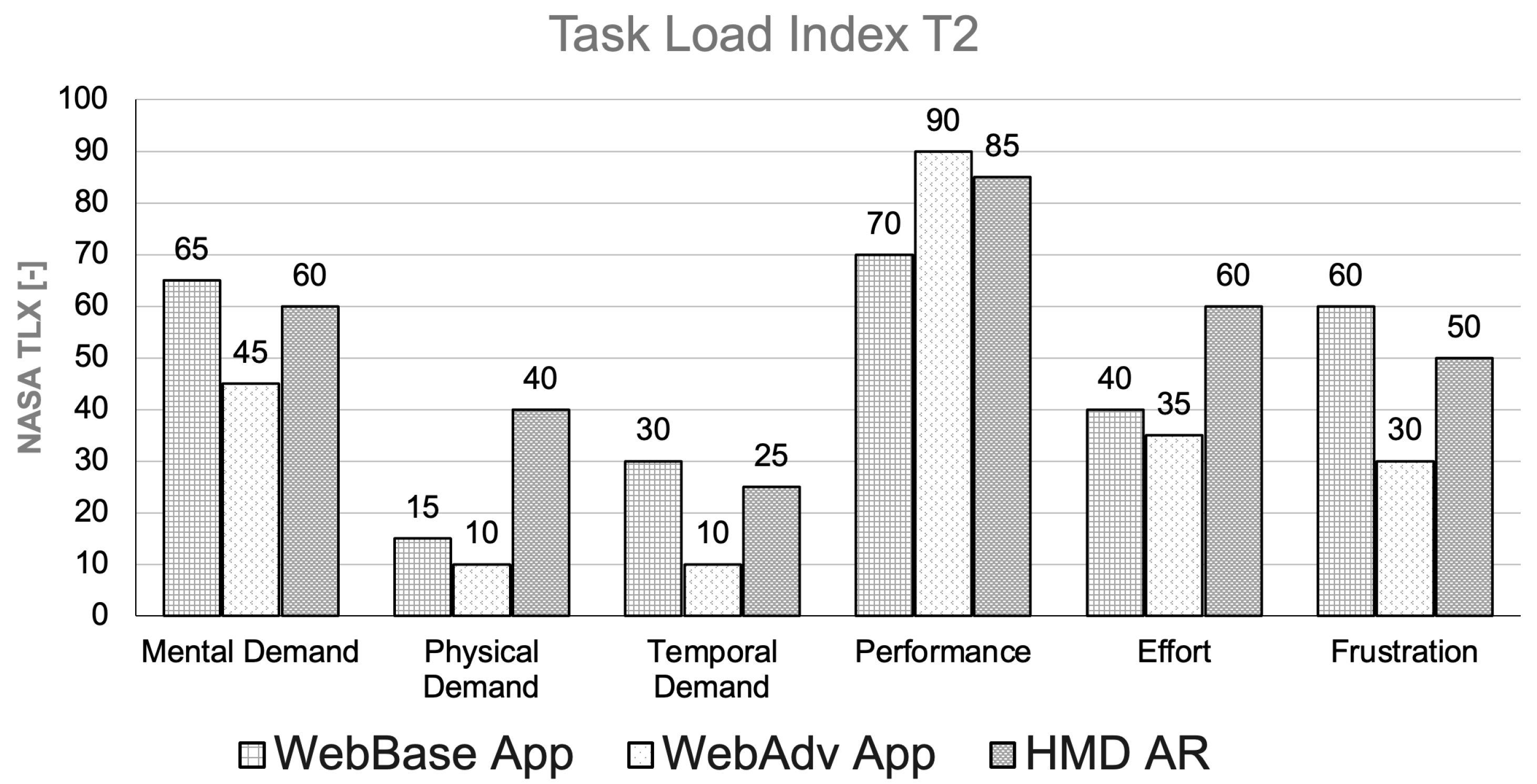

- T2 (battery malfunction): An unexpected voltage drop in one of the battery pack cells.

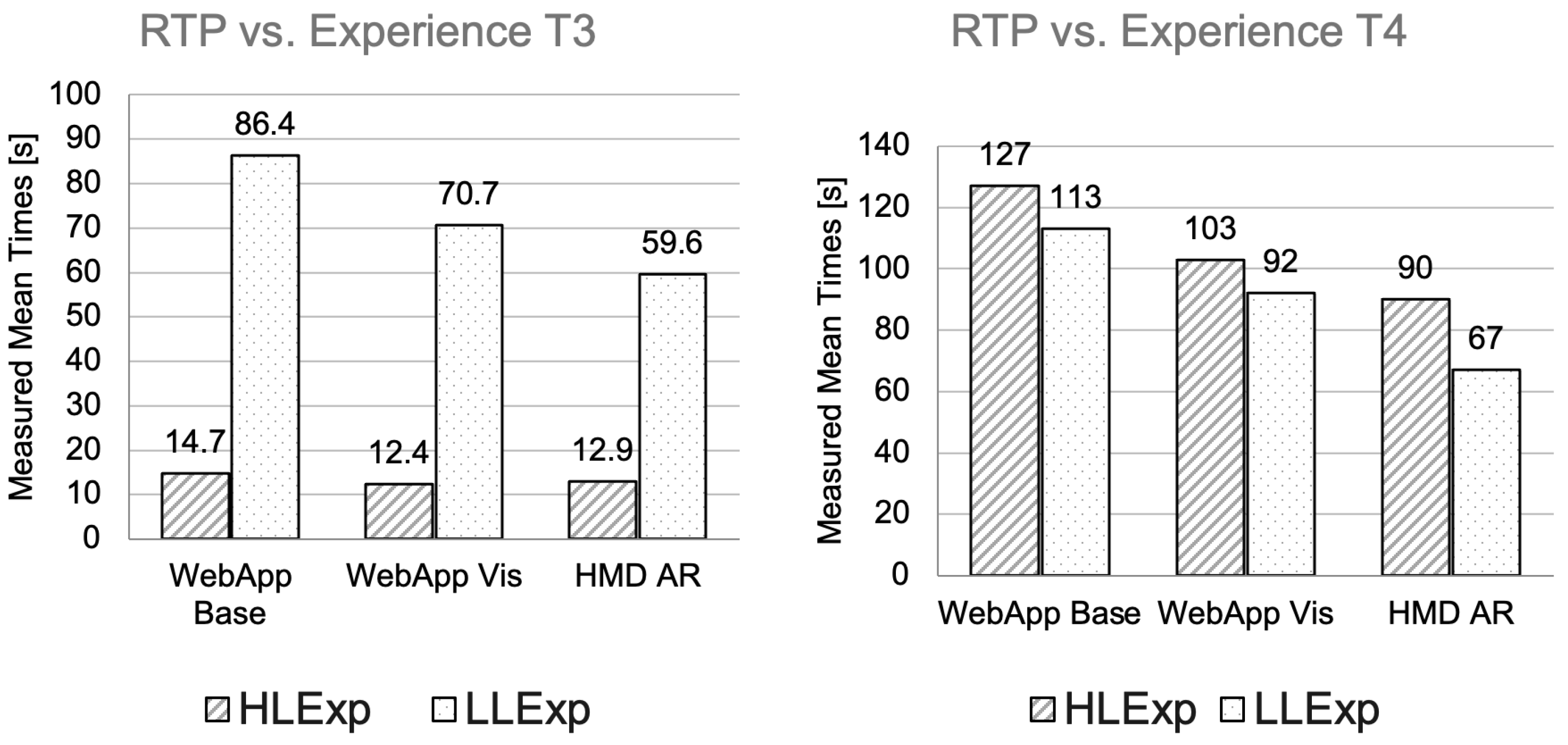

- T3 (optimal speed decision): Determine the best vehicle speed for the remainder of the race based on the current car condition and race situation.

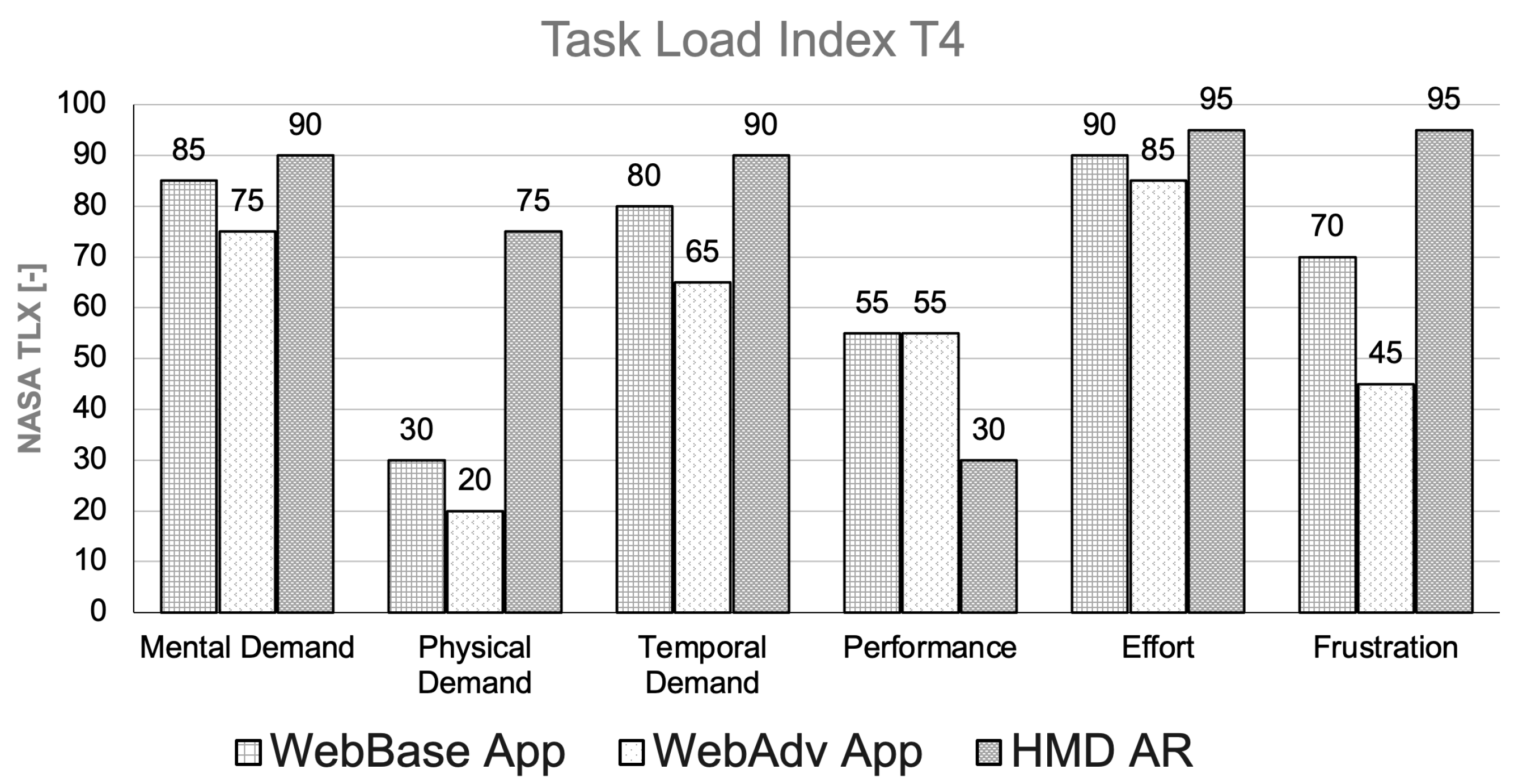

- T4 (charging strategy): Decide when to charge the vehicle during a circuit race, specifically how many laps to complete before charging, taking into account the car’s status and race conditions.
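To make the two fault-detection tasks (T1 and T2) concrete, the sketch below flags the kind of telemetry pattern participants had to notice: a reading in one channel (one wheel's tire pressure, or one battery cell's voltage) that falls sharply between two consecutive samples. It is a minimal illustration, not the Eagle Two module's implementation; the function name `find_sudden_drops`, the consecutive-sample rule, the channel names, and the threshold values are all hypothetical assumptions, and the example readings are invented rather than taken from the study.

```python
from typing import Dict, List


def find_sudden_drops(history: Dict[str, List[float]], max_drop: float) -> List[str]:
    """Return channels whose latest reading fell by more than max_drop
    relative to the previous reading (hypothetical rule for illustration)."""
    flagged = []
    for channel, readings in history.items():
        if len(readings) < 2:
            continue  # at least two samples are needed to measure a drop
        drop = readings[-2] - readings[-1]
        if drop > max_drop:
            flagged.append(channel)
    return flagged


# Invented tire pressures (bar) for four wheels over three samples.
tires = {
    "front_left": [3.0, 3.0, 2.9],
    "front_right": [3.0, 3.0, 3.0],
    "rear_left": [3.0, 2.9, 1.8],  # sudden drop: simulated puncture (T1)
    "rear_right": [3.0, 3.0, 3.0],
}

# Invented cell voltages (V) for a small excerpt of the battery pack.
cells = {
    "cell_01": [3.9, 3.9, 3.9],
    "cell_02": [3.9, 3.9, 3.2],  # unexpected voltage drop (T2)
    "cell_03": [3.9, 3.9, 3.9],
}

print(find_sudden_drops(tires, max_drop=0.5))  # ['rear_left']
print(find_sudden_drops(cells, max_drop=0.3))  # ['cell_02']
```

In the study the detection was performed by the participants looking at the visualizations; a rule like this only illustrates what "sudden drop" means in terms of the underlying data.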

4.3. Participants

4.4. Experimental Setup

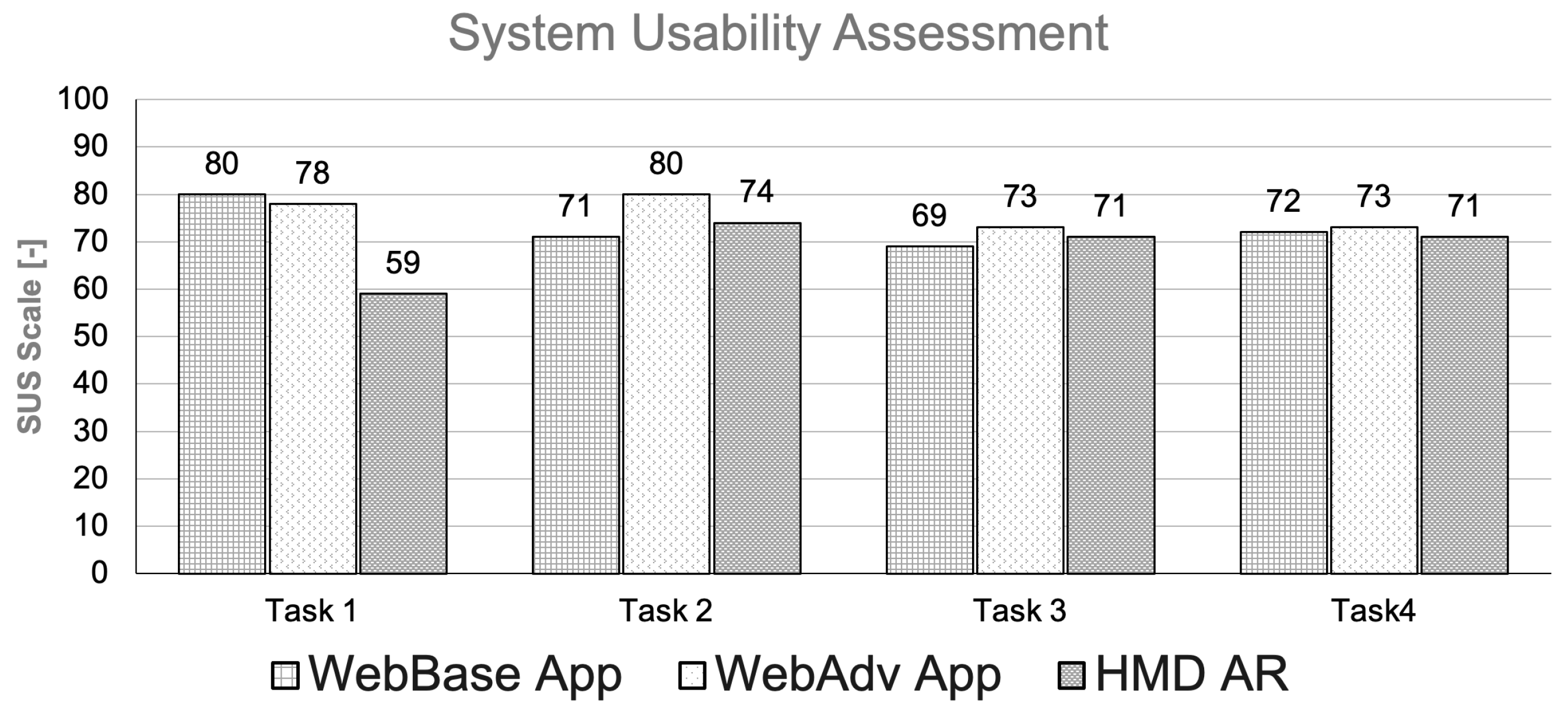

4.5. Quantitative and Qualitative Assessment
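The System Usability Scale (SUS) appears in the Abbreviations. Assuming the usability questionnaire followed the standard SUS scoring rule, each of the ten items is rated from 1 to 5; odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. The sketch below implements that standard rule; the function name `sus_score` is hypothetical and the example responses are invented, not study data.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from ten item ratings on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each SUS response must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # odd items are positively worded
        else:
            total += 5 - rating  # even items are negatively worded
    return total * 2.5


# Invented responses from one hypothetical participant.
print(sus_score([4, 2, 5, 1, 4, 2, 5, 2, 4, 1]))  # 85.0
```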

5. Results and Discussion

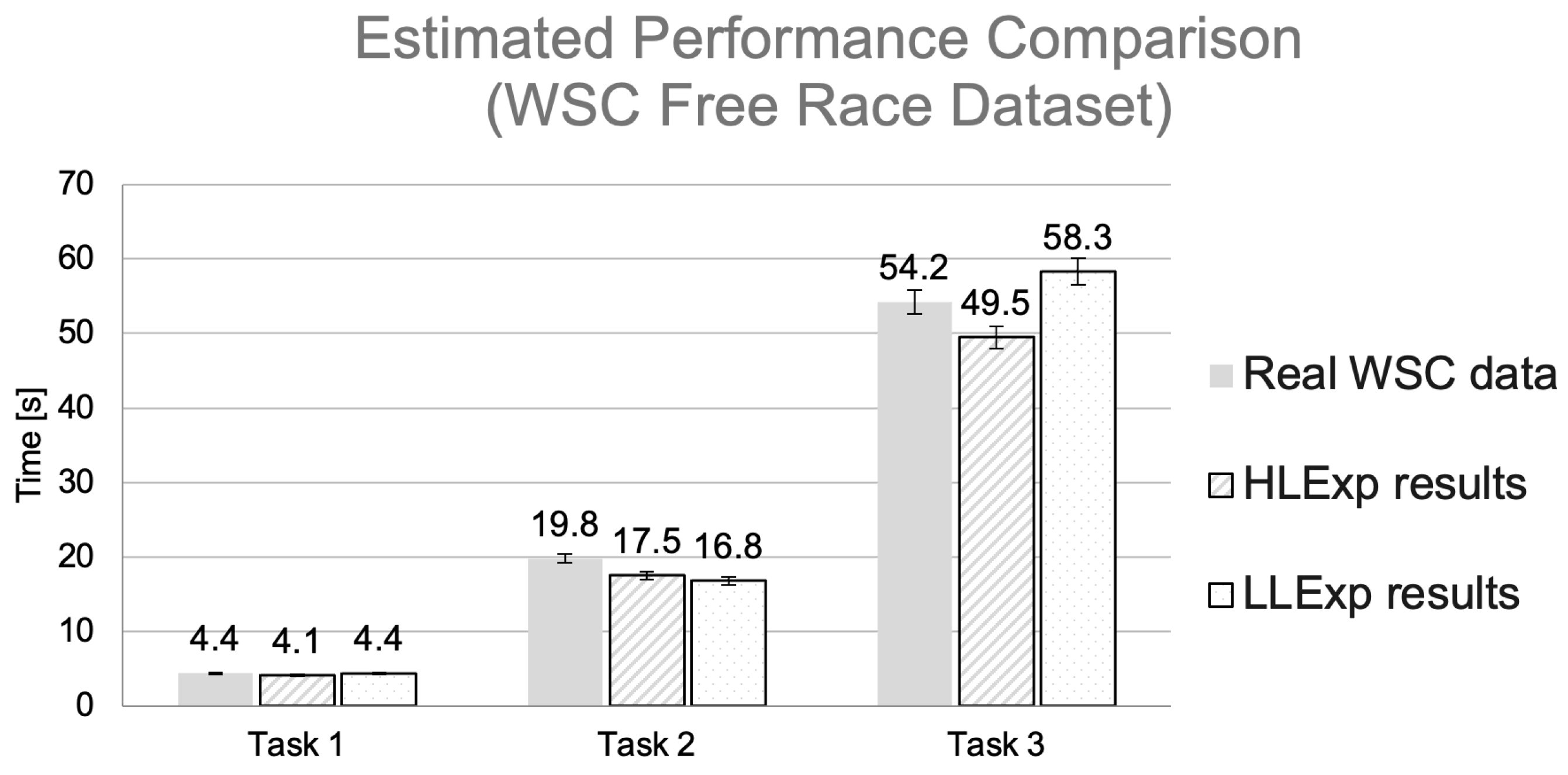

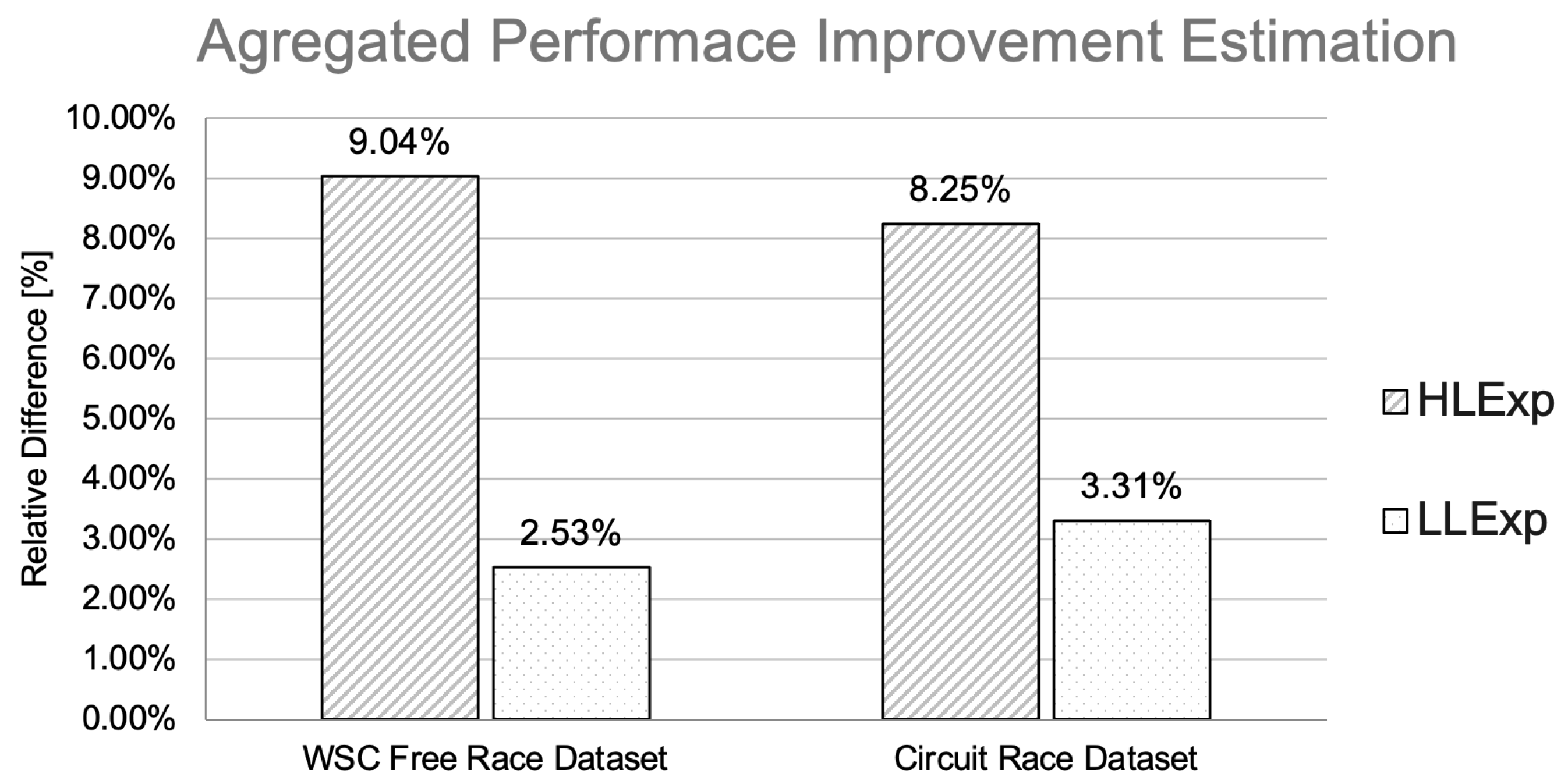

5.1. Prospective Impact on the Overall Performance Variation

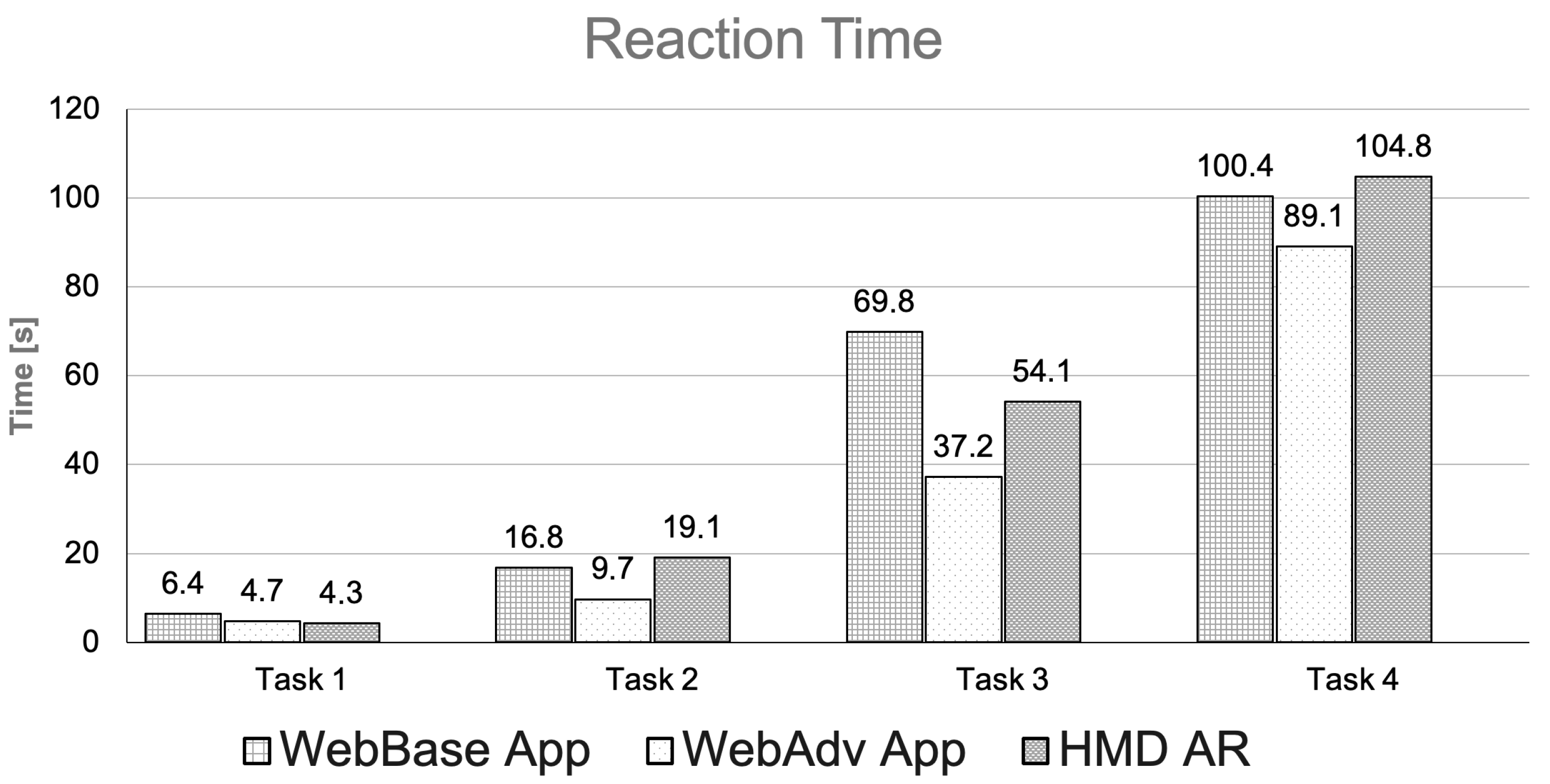

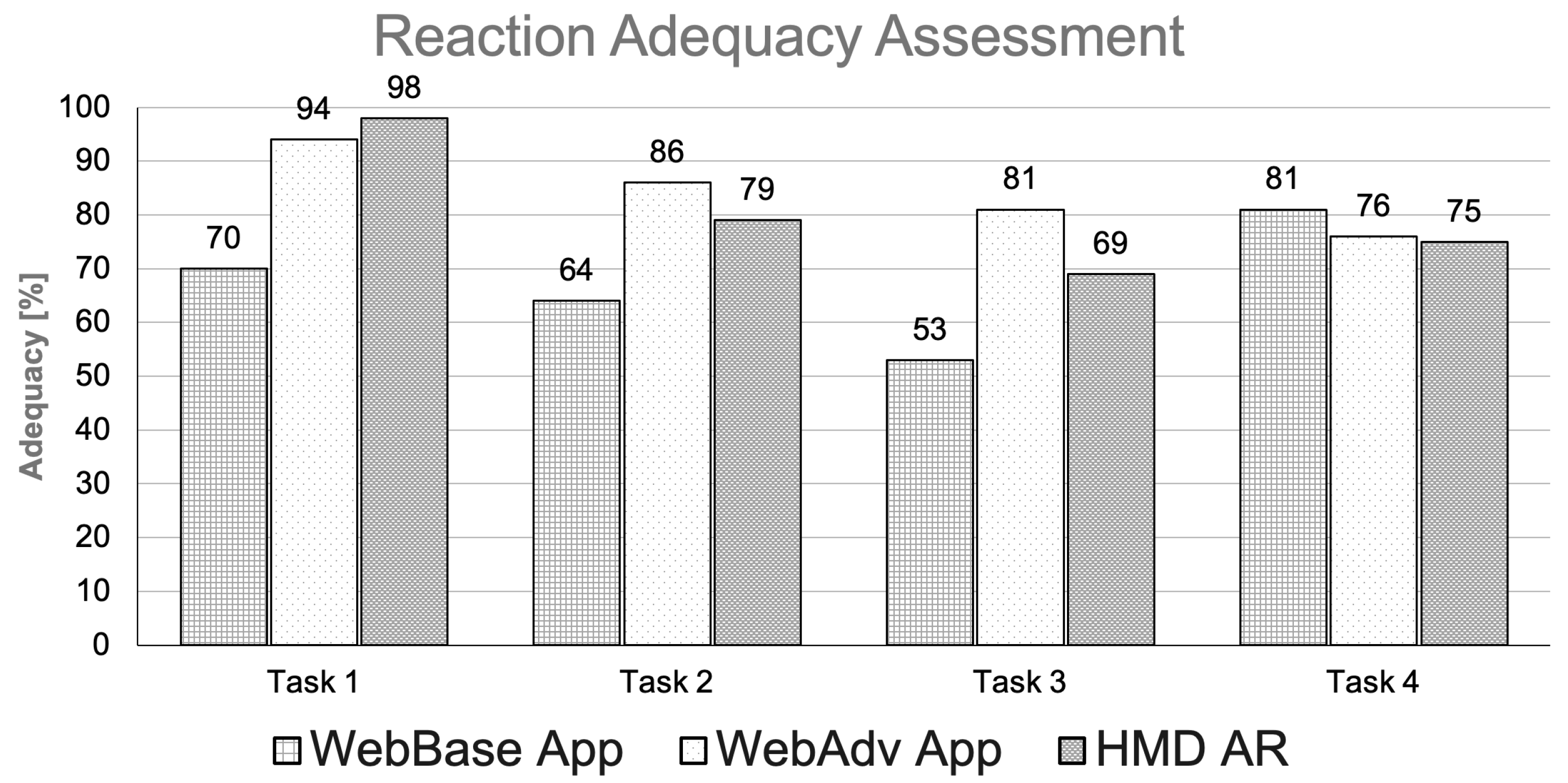

5.2. Detailed Quantitative Results

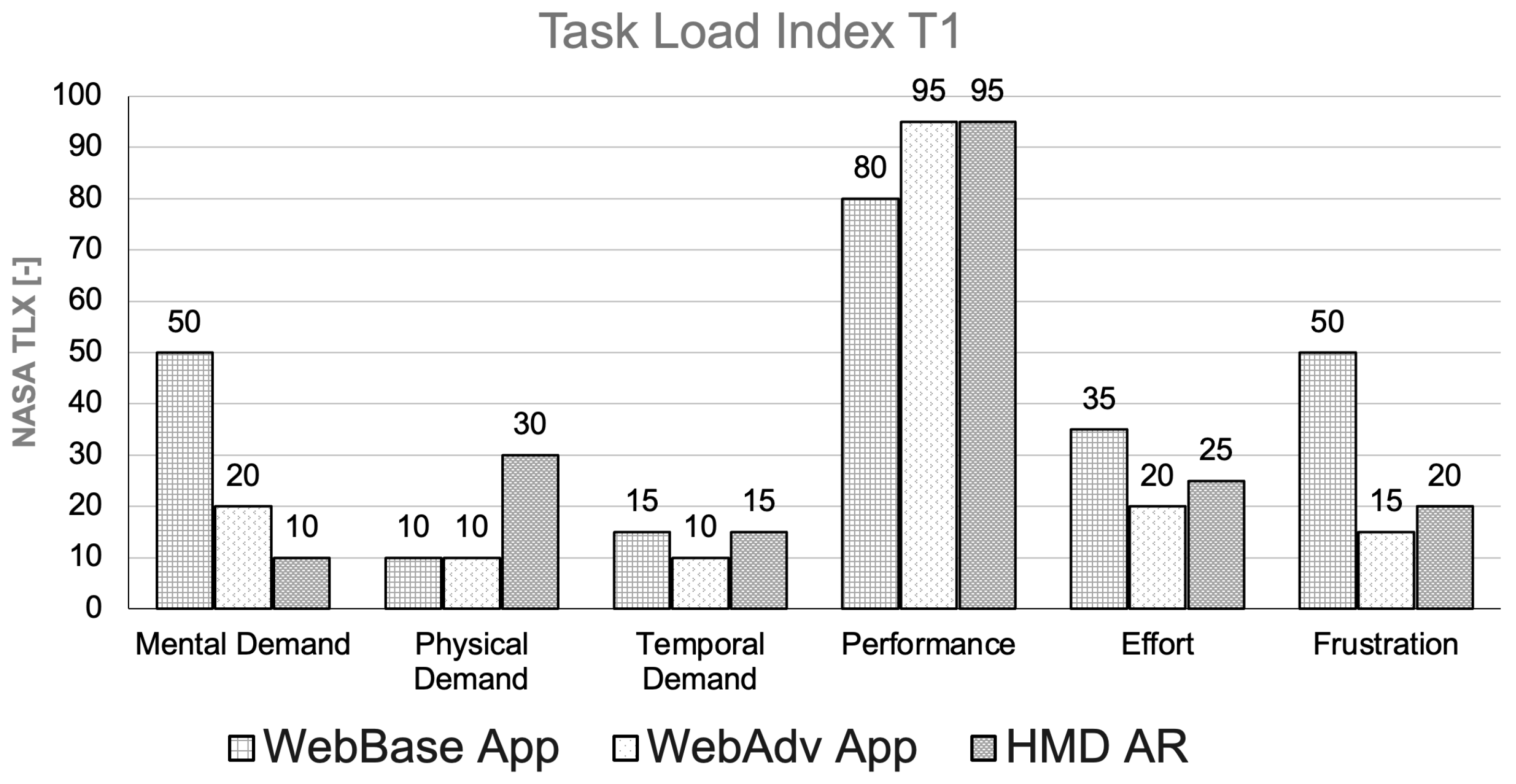

5.3. Cognitive Workload Assessment

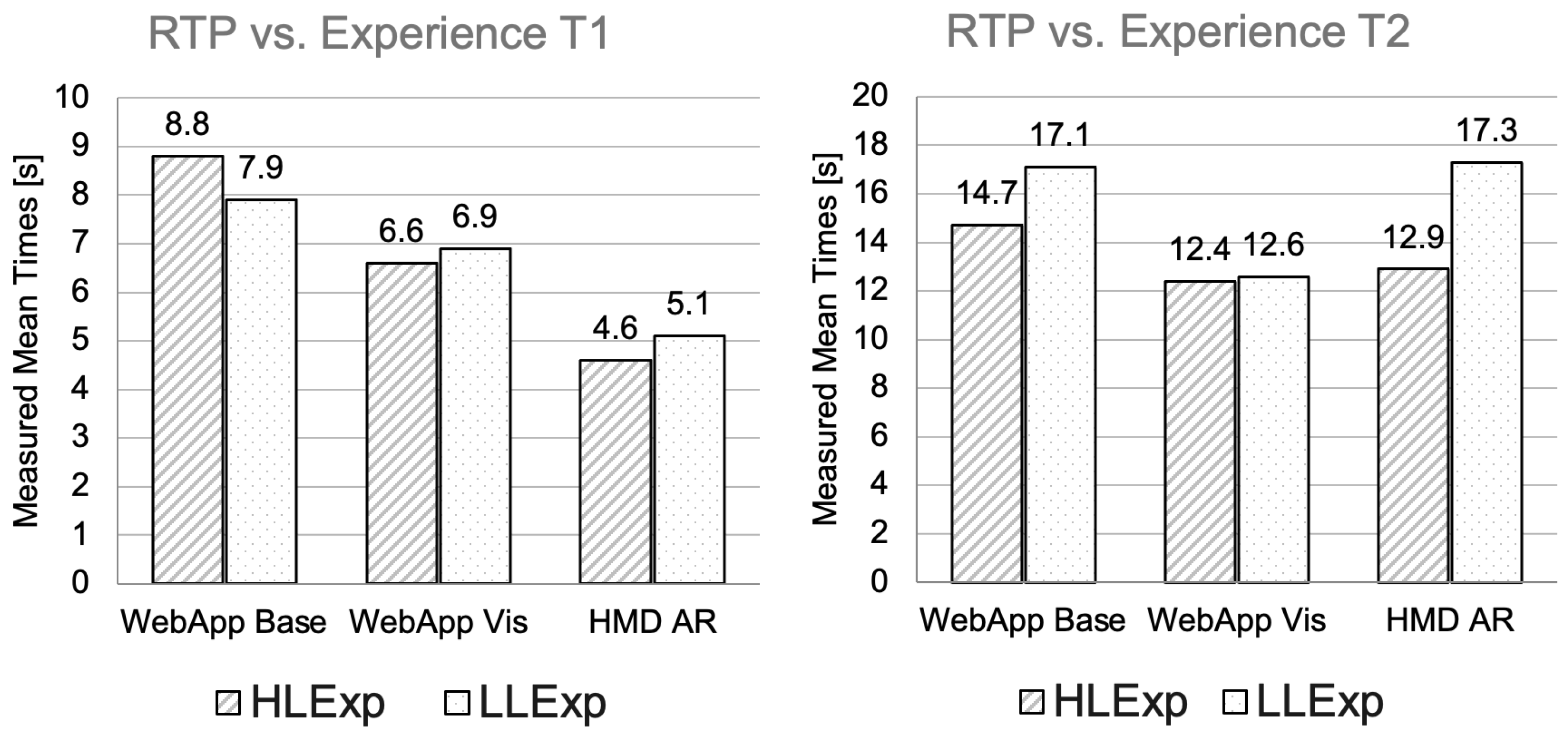
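Cognitive workload is commonly quantified with the NASA Task Load Index (NASA TLX), which appears in the Abbreviations. Assuming the unweighted (Raw TLX) variant, a participant's overall workload is simply the mean of the six subscale ratings, each on a 0 to 100 scale; the weighted variant would instead combine the ratings with weights derived from pairwise comparisons. The sketch below assumes the raw variant; the function name `raw_tlx`, the subscale keys, and the example ratings are hypothetical and invented for illustration.

```python
from statistics import mean
from typing import Mapping

# The six NASA TLX subscales (key names chosen for this sketch).
TLX_SUBSCALES = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Unweighted (Raw TLX) workload: mean of the six 0-100 subscale ratings."""
    missing = [s for s in TLX_SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    for s in TLX_SUBSCALES:
        if not 0 <= ratings[s] <= 100:
            raise ValueError(f"{s} rating must be within 0-100")
    return float(mean(ratings[s] for s in TLX_SUBSCALES))


# Invented ratings from one hypothetical participant.
example = {
    "mental_demand": 60,
    "physical_demand": 20,
    "temporal_demand": 50,
    "performance": 30,
    "effort": 55,
    "frustration": 25,
}
print(raw_tlx(example))  # 40.0
```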

5.4. User’s Professional Experience Level vs. Mode of Telemetry Visualization

5.5. Summary of Results

6. Conclusions

6.1. Limitations

6.2. Directions for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADS | Automated Driving Systems |
| AR | Augmented Reality |
| AVR | Augmented Vehicular Reality |
| CAN | Controller Area Network |
| CFD | Computational Fluid Dynamics |
| CPU | Central Processing Unit |
| EV | Electric Vehicle |
| GPU | Graphics Processing Unit |
| HLExp | High-Level Experienced Users |
| LLExp | Low-Level Experienced Users |
| HMD | Head-Mounted Display |
| HUD | Head-Up Display |
| HVI | Human–Vehicle Interaction |
| HTTP | Hypertext Transfer Protocol |
| MR | Mixed Reality |
| NASA TLX | NASA Task Load Index |
| RA | Reaction Adequacy |
| RT | Reaction Time |
| RTP | Correct-Response Reaction Time Performance |
| SRC | Solar Race Car |
| SUS | System Usability Scale |
| TCP | Transmission Control Protocol |
| TCT | Task Completion Times |
| VR | Virtual Reality |
| WebBase App | Basic web visualization mode |
| WebAdv App | Advanced web visualization mode |
| WSC | World Solar Challenge |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Forysiak, J.; Krawiranda, P.; Fudała, K.; Chaniecki, Z.; Jóźwik, K.; Grudzień, K.; Romanowski, A. Exploring Augmented Reality HMD Telemetry Data Visualization for Strategy Optimization in Student Solar-Powered Car Racing. Energies 2025, 18, 3196. https://doi.org/10.3390/en18123196
