Demand Time Series Prediction of Stacked Long Short-Term Memory Electric Vehicle Charging Stations Based on Fused Attention Mechanism

Abstract

1. Introduction

2. Data Collection and Preprocessing

2.1. Dataset

2.2. Data Preprocessing

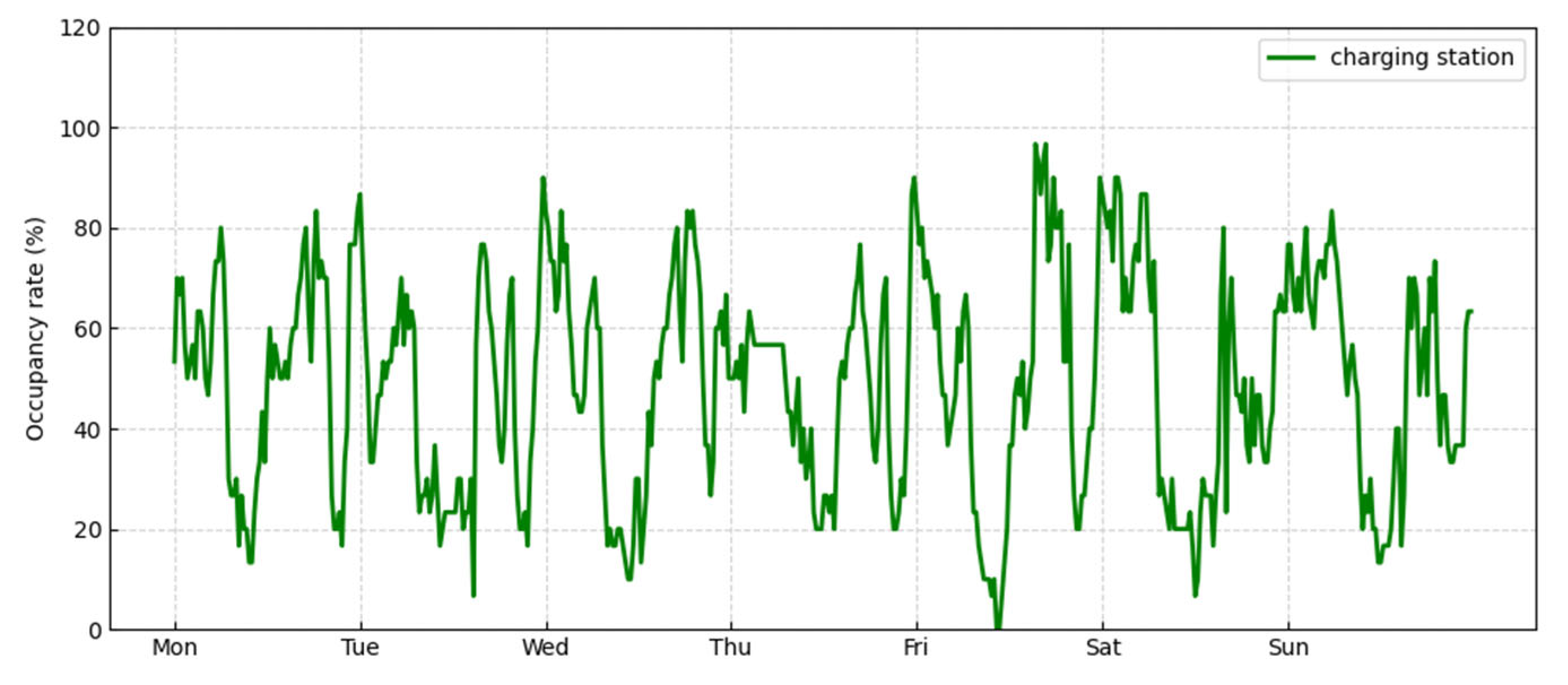
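
This text does not describe the preprocessing steps. A common setup for this kind of series forecasting, assumed here rather than taken from the paper, is to min-max scale the occupancy series and cut it into fixed-length input windows. Each window is paired with the value a chosen number of steps ahead (the multi-step results report horizons of 1, 3, 6, 9 and 12 time steps). The function names, the window length of 4 and the toy series are hypothetical.

```python
from typing import List, Sequence, Tuple


def min_max_scale(series: Sequence[float]) -> Tuple[List[float], float, float]:
    """Scale values to [0, 1]; also return (lo, hi) so predictions can be mapped back."""
    lo, hi = min(series), max(series)
    span = hi - lo
    if span == 0:
        # A constant series carries no scale information; map everything to 0.
        return [0.0 for _ in series], lo, hi
    return [(x - lo) / span for x in series], lo, hi


def make_windows(
    series: Sequence[float], window: int, horizon: int
) -> Tuple[List[List[float]], List[float]]:
    """Pair each run of `window` consecutive values with the value `horizon` steps after it."""
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive")
    inputs, targets = [], []
    for start in range(len(series) - window - horizon + 1):
        inputs.append(list(series[start:start + window]))
        targets.append(series[start + window + horizon - 1])  # horizon=1 -> next value
    return inputs, targets


if __name__ == "__main__":
    occupancy = [3, 5, 4, 6, 8, 7, 9, 10]  # toy counts, not data from the paper
    scaled, lo, hi = min_max_scale(occupancy)
    X, y = make_windows(occupancy, window=4, horizon=3)
    print(X[0], y[0])  # [3, 5, 4, 6] 9
```

Scaling parameters (`lo`, `hi`) would normally be fitted on the training split only, so that no information from the test period leaks into training.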

2.3. Usage Characteristics of Fast-Charging Stations

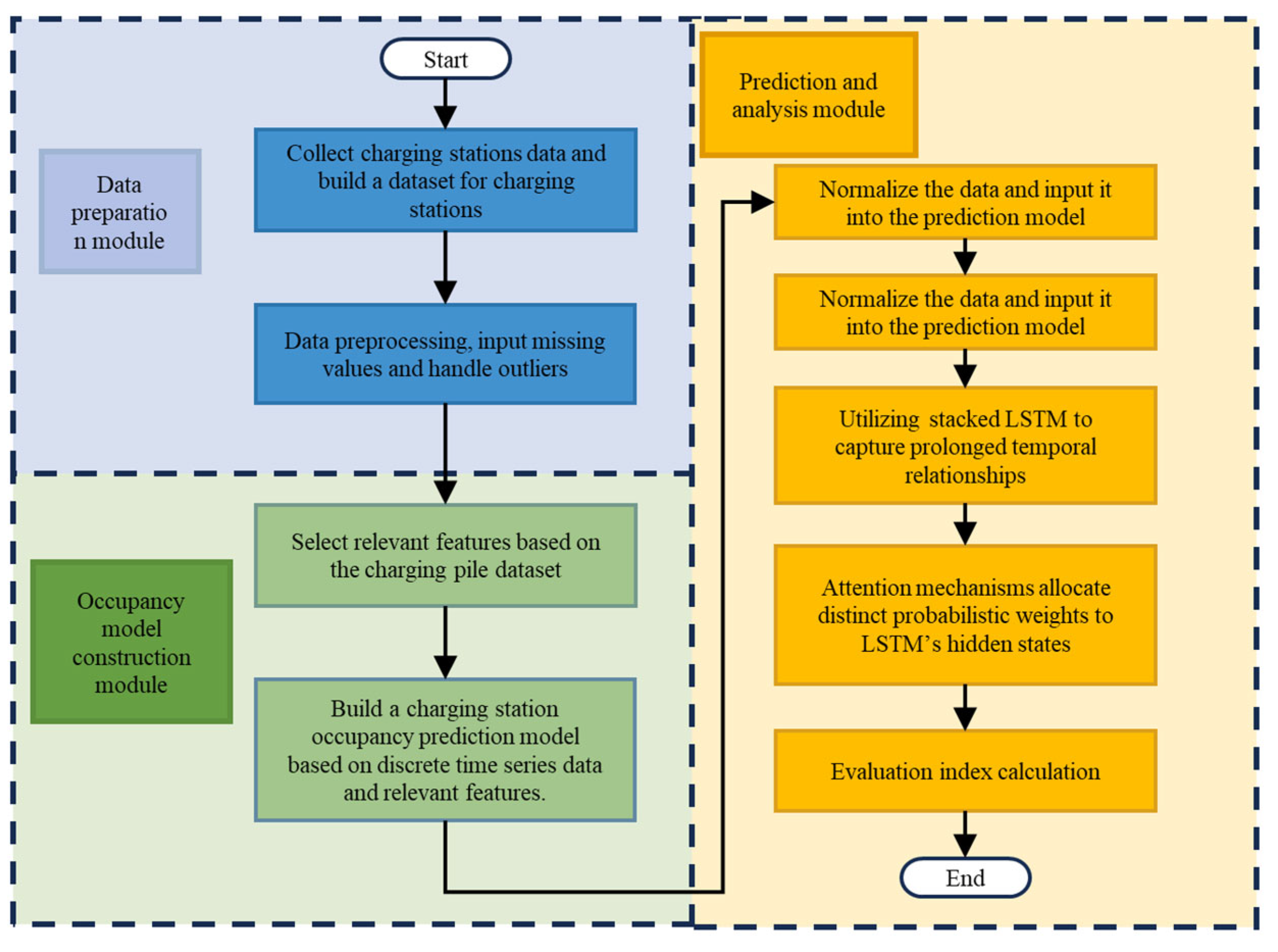

3. Charging Station Occupancy State Prediction Model

3.1. Attention-SLSTM Model

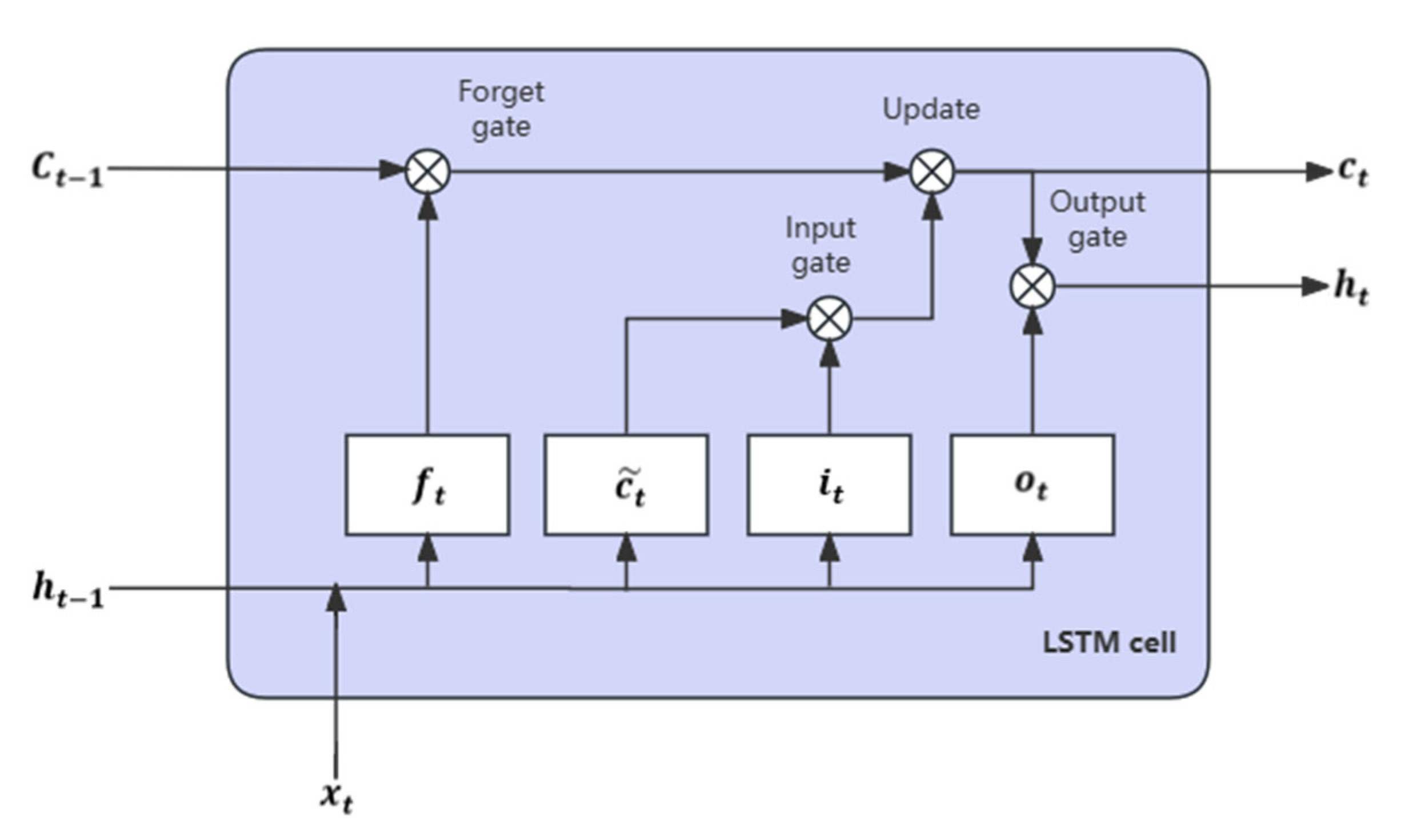

3.2. LSTM
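
For reference, one time step of a standard LSTM cell can be written in plain Python. The sketch assumes the widely used variant with a forget gate (a later extension of the original Hochreiter and Schmidhuber cell). It does not reproduce the authors' layer sizes or weights, and the parameter layout and helper names are hypothetical. In a stacked LSTM, the sequence of hidden states produced by one layer becomes the input sequence of the next.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GateParams = Tuple[Matrix, Matrix, Vector]  # (W: input weights, U: recurrent weights, b: bias)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, U: Matrix, b: Vector, x: Vector, h: Vector) -> Vector:
    """Compute W x + U h + b, one output unit per row."""
    return [
        sum(w * xi for w, xi in zip(W[r], x))
        + sum(u * hi for u, hi in zip(U[r], h))
        + b[r]
        for r in range(len(b))
    ]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """Advance the cell by one time step and return (h_t, c_t)."""
    f = [sigmoid(z) for z in affine(*params["forget"], x, h_prev)]       # forget gate
    i = [sigmoid(z) for z in affine(*params["input"], x, h_prev)]        # input gate
    o = [sigmoid(z) for z in affine(*params["output"], x, h_prev)]       # output gate
    g = [math.tanh(z) for z in affine(*params["candidate"], x, h_prev)]  # candidate values
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]   # keep old + write new
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]                     # expose filtered state
    return h, c


def run_layer(xs: List[Vector], params: Dict[str, GateParams], hidden_size: int) -> List[Vector]:
    """Run one LSTM layer over a sequence from zero initial states; return every h_t."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, params)
        outputs.append(h)
    return outputs


def zero_params(input_size: int, hidden_size: int) -> Dict[str, GateParams]:
    """Hypothetical all-zero weights, useful only for checking shapes and arithmetic."""
    def gate() -> GateParams:
        return ([[0.0] * input_size for _ in range(hidden_size)],
                [[0.0] * hidden_size for _ in range(hidden_size)],
                [0.0] * hidden_size)
    return {name: gate() for name in ("forget", "input", "output", "candidate")}
```

For example, `run_layer(run_layer(xs, p1, 16), p2, 8)` stacks two layers, where `p2` must be built for an input size of 16. Real implementations vectorise these loops. The list version only makes the gate arithmetic explicit.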

3.3. Attention
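
This text does not give the paper's attention formulation. One common way to fuse attention with an LSTM encoder, assumed here, works in three steps: score every hidden state, normalise the scores with a softmax, and pass the weighted sum of hidden states to the output layer as a context vector. The dot-product score against a learned vector `w` is an illustrative choice. Additive (tanh-based) scoring is an equally common alternative.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states: List[Vector], w: Vector) -> Tuple[Vector, Vector]:
    """Return (context vector, attention weights) over a sequence of hidden states."""
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]  # one score per step
    alphas = softmax(scores)
    dim = len(hidden_states[0])
    context = [sum(a * h[d] for a, h in zip(alphas, hidden_states)) for d in range(dim)]
    return context, alphas
```

The weights `alphas` sum to 1, so each one indicates how much its time step contributes to the context vector used for the forecast.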

3.4. Model Evaluation Metrics
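
The result tables report MAE, RMSE and MAPE. This text does not reproduce the formulas, so the sketch assumes the standard definitions: MAE is the mean of |y - ŷ|, RMSE is the square root of the mean of (y - ŷ)², and MAPE is 100 times the mean of |(y - ŷ)/y|, expressed in percent. Raising an error when an actual value is zero is a design choice of this sketch. Implementations differ in how they handle zero targets.

```python
import math
from typing import Sequence


def _check(actual: Sequence[float], predicted: Sequence[float]) -> None:
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error, in the units of the target."""
    _check(actual, predicted)
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error; penalises large errors more than MAE does."""
    _check(actual, predicted)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; undefined when any actual value is 0."""
    _check(actual, predicted)
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined for zero actual values")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)
```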

4. Experiments and Analysis

4.1. Model Parameters and Evaluation Criteria

4.2. Model Parameters and Evaluation Criteria

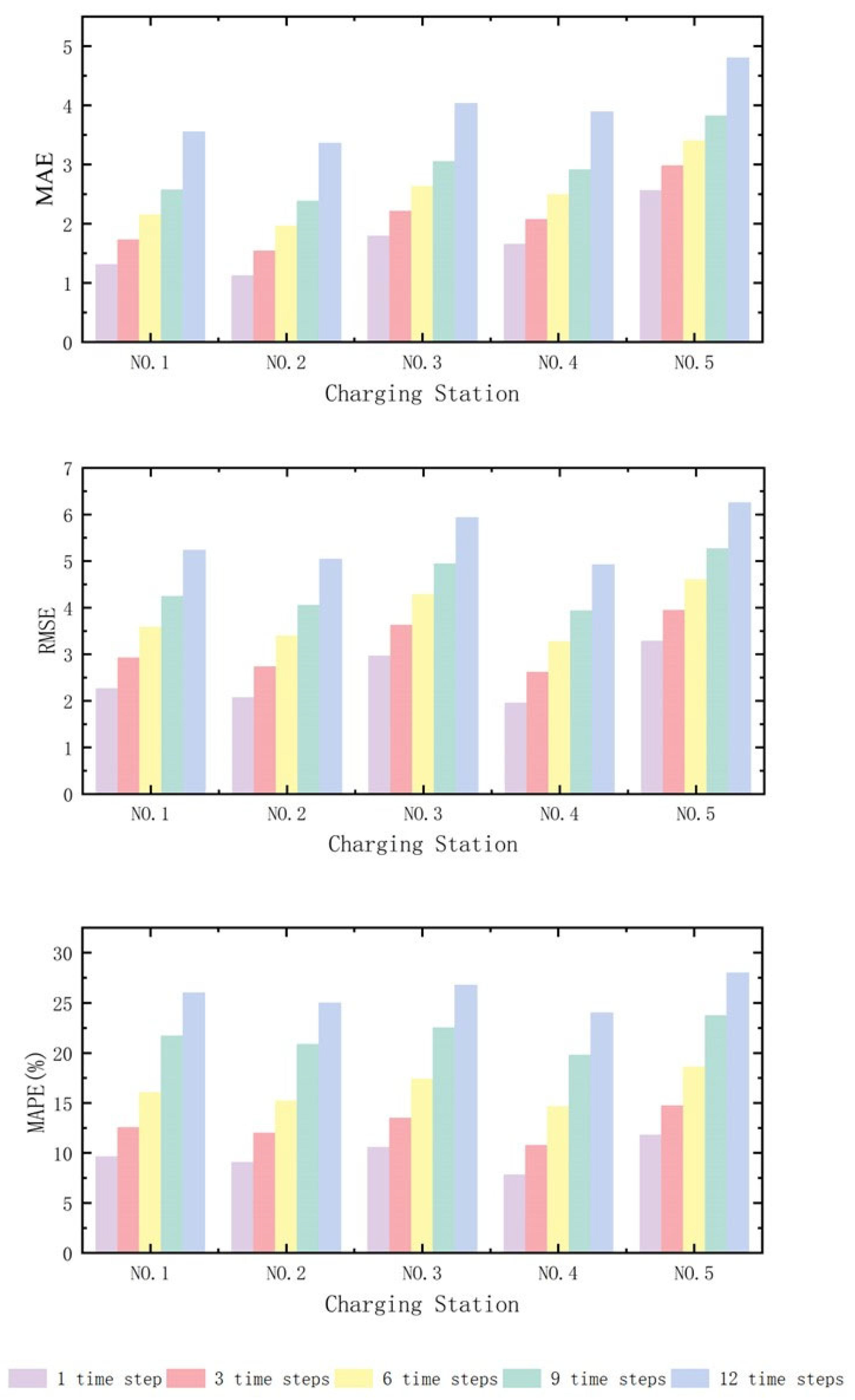

4.3. Results Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Hyperparameter | Value |
|---|---|
| Learning rate | 0.001 |
| Number of training epochs | 20 |
| Regularization | Dropout (0.2) |
| Batch size | 32 |
| Optimizer | Adam |
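
The table specifies Adam with a learning rate of 0.001 and dropout with rate 0.2. For readers unfamiliar with either, the sketch below shows a single Adam update for one scalar parameter and a training-time (inverted) dropout mask. β1 = 0.9, β2 = 0.999 and ε = 1e-8 are the usual library defaults and are assumed here, since the table does not list them. This text also does not say where dropout sits in the network.

```python
import math
import random
from typing import List, Optional, Tuple


def adam_step(param: float, grad: float, m: float, v: float, t: int,
              lr: float = 0.001, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[float, float, float]:
    """One Adam update for a scalar parameter; t is the 1-based step count.

    Returns the updated (param, m, v). beta1, beta2 and eps are assumed defaults.
    """
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # correct the bias from zero initialisation
    v_hat = v / (1 - beta2 ** t)
    return param - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


def inverted_dropout(x: List[float], rate: float = 0.2,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Training-time dropout: zero each unit with probability `rate`, scale survivors by 1/(1-rate)."""
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [xi / keep if rng.random() < keep else 0.0 for xi in x]
```

On the first step, Adam moves each parameter by roughly the learning rate (0.001) in the direction opposite its gradient, whatever the gradient's magnitude. At inference time, dropout is switched off and inputs pass through unchanged, which the 1/(1-rate) rescaling during training makes consistent.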

| Metric | ARIMA | LSTM | Stacked-LSTM | ATT-SLSTM |
|---|---|---|---|---|
| MAE | 2.7891 | 2.1698 | 1.7701 | 1.6860 |
| RMSE | 3.6120 | 2.9963 | 2.6366 | 2.5040 |
| MAPE (%) | 14.5954 | 12.8830 | 11.0680 | 9.7680 |
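
To express the single-step comparison in relative terms, the percentage reduction in each error metric that ATT-SLSTM achieves over each baseline can be computed directly from the reported values. The snippet only re-derives numbers from the table and adds no new results.

```python
from typing import Dict

# Single-step results, copied from the table above (MAPE in %).
RESULTS: Dict[str, Dict[str, float]] = {
    "ARIMA": {"MAE": 2.7891, "RMSE": 3.6120, "MAPE": 14.5954},
    "LSTM": {"MAE": 2.1698, "RMSE": 2.9963, "MAPE": 12.8830},
    "Stacked-LSTM": {"MAE": 1.7701, "RMSE": 2.6366, "MAPE": 11.0680},
    "ATT-SLSTM": {"MAE": 1.6860, "RMSE": 2.5040, "MAPE": 9.7680},
}


def relative_reduction(baseline: str, model: str = "ATT-SLSTM") -> Dict[str, float]:
    """Percentage by which `model` lowers each error metric relative to `baseline`."""
    base, new = RESULTS[baseline], RESULTS[model]
    return {k: 100.0 * (base[k] - new[k]) / base[k] for k in base}


if __name__ == "__main__":
    for name in ("ARIMA", "LSTM", "Stacked-LSTM"):
        print(name, {k: round(v, 2) for k, v in relative_reduction(name).items()})
```

For example, relative to Stacked-LSTM, MAE falls by about 4.75% and MAPE by about 11.75%. Relative to ARIMA, MAE falls by about 39.55%.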

| Model | Metric | 1 Time Step | 3 Time Steps | 6 Time Steps | 9 Time Steps | 12 Time Steps |
|---|---|---|---|---|---|---|
| ARIMA | MAE | 2.789 | 3.215 | 3.677 | 4.107 | 4.712 |
| ARIMA | RMSE | 3.612 | 4.155 | 4.894 | 5.666 | 6.212 |
| ARIMA | MAPE (%) | 14.595 | 18.811 | 22.731 | 25.881 | 30.788 |
| LSTM | MAE | 2.170 | 2.610 | 3.284 | 3.516 | 4.348 |
| LSTM | RMSE | 2.996 | 3.713 | 4.747 | 5.120 | 5.948 |
| LSTM | MAPE (%) | 12.883 | 14.246 | 18.715 | 23.789 | 28.948 |
| Stacked-LSTM | MAE | 1.770 | 2.422 | 2.787 | 3.304 | 4.038 |
| Stacked-LSTM | RMSE | 2.637 | 3.599 | 4.164 | 4.633 | 5.807 |
| Stacked-LSTM | MAPE (%) | 11.068 | 12.946 | 17.841 | 22.801 | 26.881 |
| Attention-SLSTM | MAE | 1.686 | 2.106 | 2.526 | 2.946 | 3.926 |
| Attention-SLSTM | RMSE | 2.504 | 3.164 | 3.824 | 4.484 | 5.474 |
| Attention-SLSTM | MAPE (%) | 9.768 | 12.688 | 16.374 | 21.714 | 25.94 |
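
The multi-step table can be checked programmatically. For every metric and horizon, the snippet below finds the model with the lowest error. It also reports how much a model's error grows between the 1-step and 12-step horizons. It only reads values from the table.

```python
from typing import Dict, List

HORIZONS: List[int] = [1, 3, 6, 9, 12]
# Multi-step results, copied from the table above (MAPE in %).
TABLE: Dict[str, Dict[str, List[float]]] = {
    "ARIMA": {"MAE": [2.789, 3.215, 3.677, 4.107, 4.712],
              "RMSE": [3.612, 4.155, 4.894, 5.666, 6.212],
              "MAPE": [14.595, 18.811, 22.731, 25.881, 30.788]},
    "LSTM": {"MAE": [2.170, 2.610, 3.284, 3.516, 4.348],
             "RMSE": [2.996, 3.713, 4.747, 5.120, 5.948],
             "MAPE": [12.883, 14.246, 18.715, 23.789, 28.948]},
    "Stacked-LSTM": {"MAE": [1.770, 2.422, 2.787, 3.304, 4.038],
                     "RMSE": [2.637, 3.599, 4.164, 4.633, 5.807],
                     "MAPE": [11.068, 12.946, 17.841, 22.801, 26.881]},
    "Attention-SLSTM": {"MAE": [1.686, 2.106, 2.526, 2.946, 3.926],
                        "RMSE": [2.504, 3.164, 3.824, 4.484, 5.474],
                        "MAPE": [9.768, 12.688, 16.374, 21.714, 25.94]},
}


def best_model_per_horizon(metric: str) -> Dict[int, str]:
    """Map each horizon to the model with the lowest value of `metric`."""
    return {h: min(TABLE, key=lambda m: TABLE[m][metric][i]) for i, h in enumerate(HORIZONS)}


def growth_ratio(model: str, metric: str = "MAE") -> float:
    """Ratio of the 12-step error to the 1-step error."""
    values = TABLE[model][metric]
    return values[-1] / values[0]
```

Attention-SLSTM has the lowest value in all fifteen metric/horizon cells. Its MAE grows by a factor of about 2.33 from 1 to 12 steps, versus about 1.69 for ARIMA. The gap to ARIMA therefore narrows at longer horizons (from 1.103 to 0.786 in MAE), even though Attention-SLSTM remains the best model.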

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, C.; Zhou, H.; Chen, X.; Huang, J. Demand Time Series Prediction of Stacked Long Short-Term Memory Electric Vehicle Charging Stations Based on Fused Attention Mechanism. Energies 2024, 17, 2041. https://doi.org/10.3390/en17092041
