Exploring the Preference for Discrete over Continuous Reinforcement Learning in Energy Storage Arbitrage

Abstract

1. Introduction

2. Related Works

2.1. Traditional Optimization-Based Energy Arbitrage

2.2. Discrete RL-Based Energy Arbitrage

2.3. Continuous RL-Based Energy Arbitrage

3. Methods

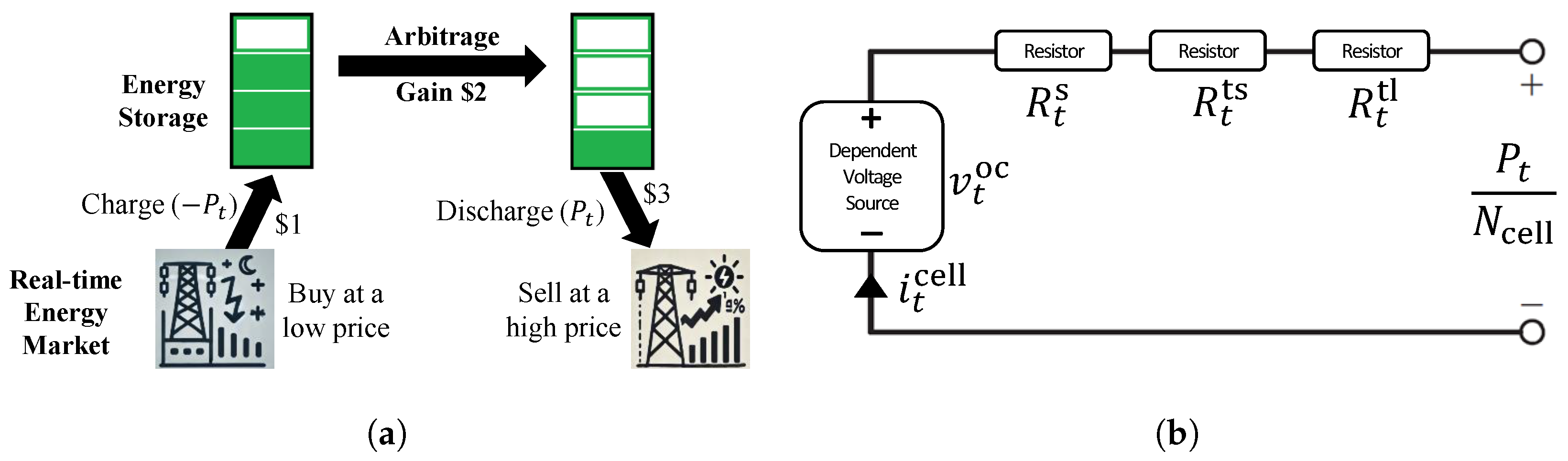

3.1. Energy Arbitrage and Battery Model

3.2. Discrete RL Method: Deep Q-Network (DQN)

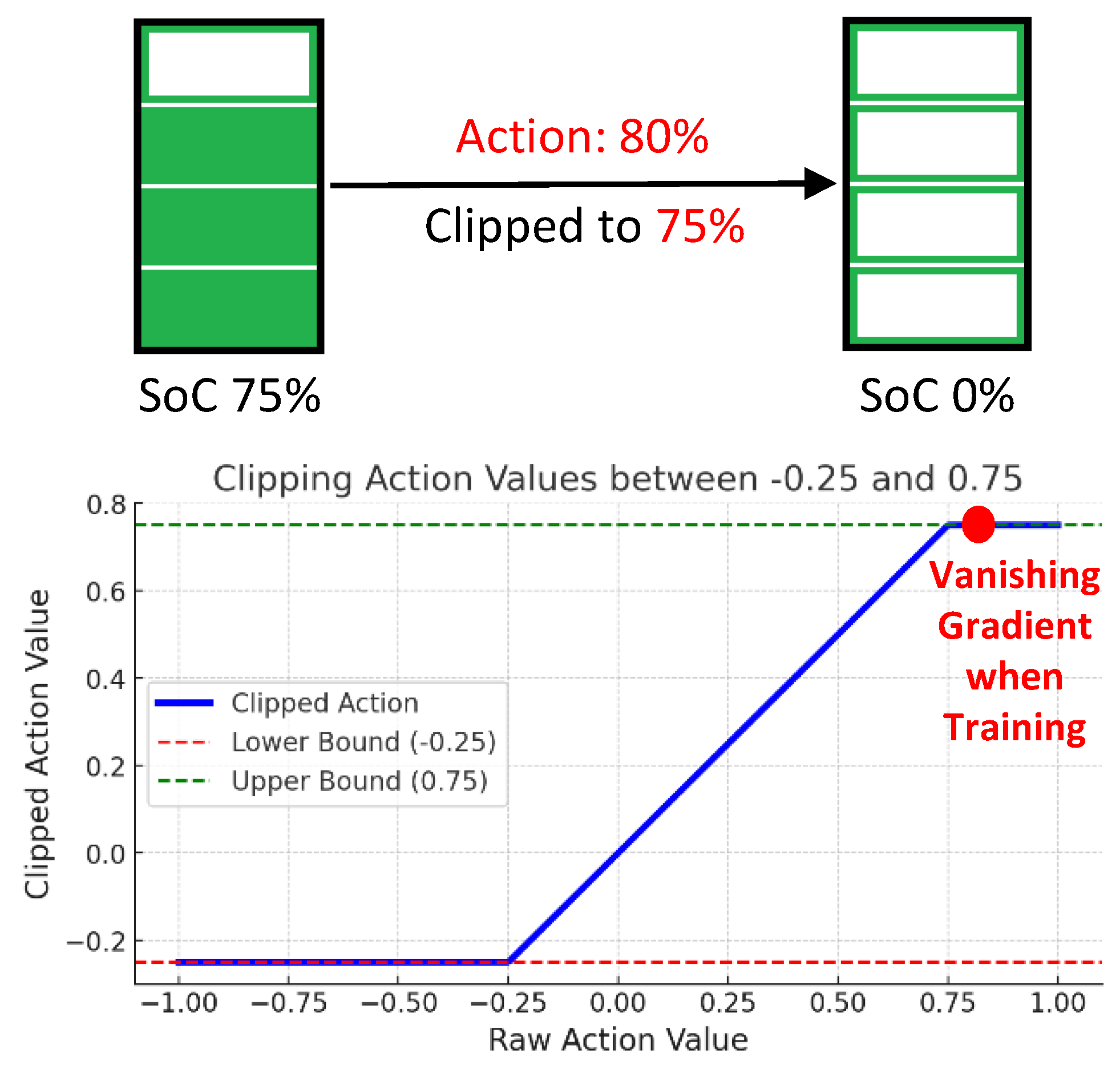

3.3. Continuous RL Method: Advantage Actor–Critic (A2C)

4. Performance Evaluation

4.1. Experimental Setup

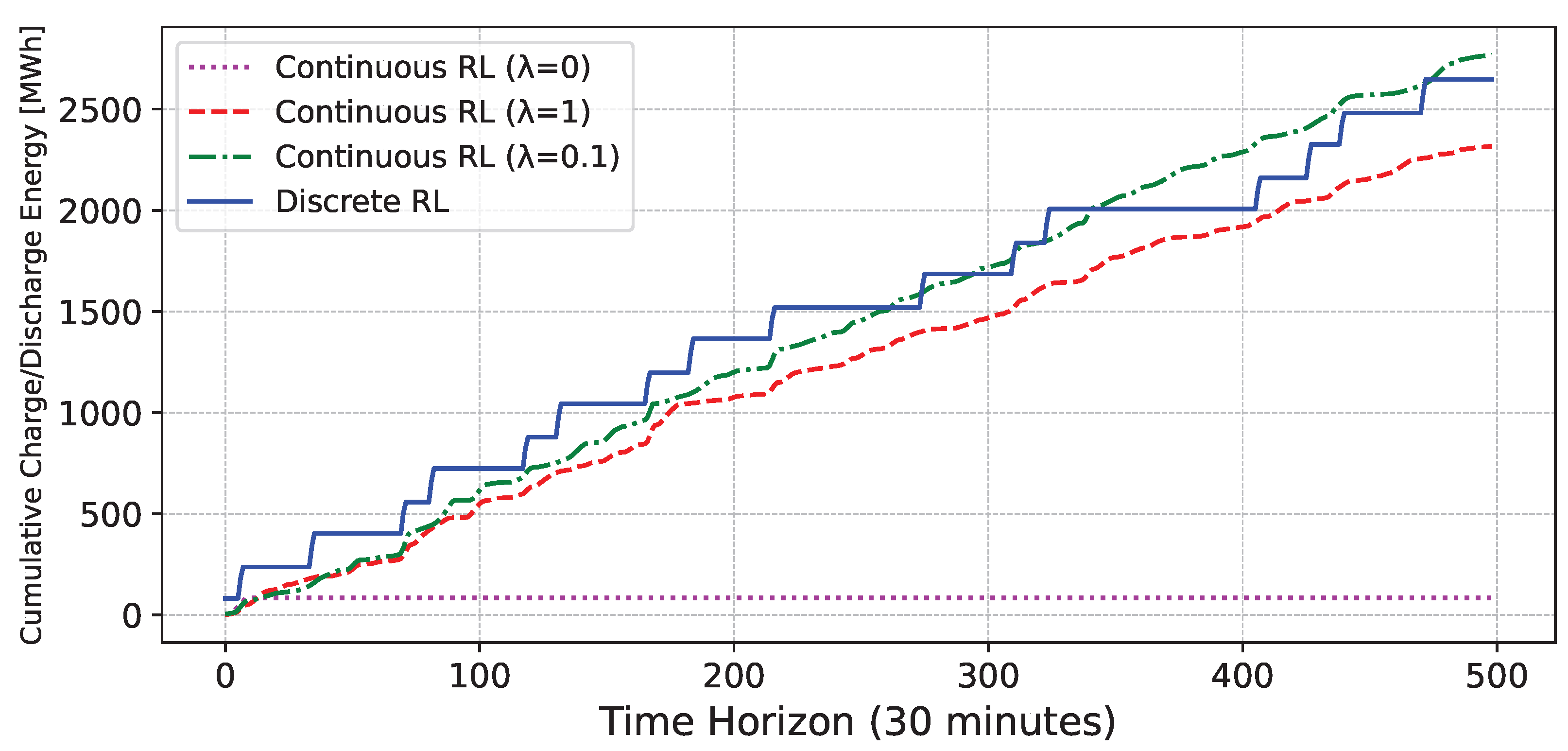

4.2. Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ESS | Energy storage system |
| SoC | State of charge |
| RL | Reinforcement learning |
| DQN | Deep Q-network |
| A2C | Advantage actor–critic |
| PPO | Proximal policy optimization |
| TinyML | Tiny machine learning |

| Hyperparameter | Value |
|---|---|
| Number of hidden layers in LSTM | 2 |
| Number of hidden units in LSTM | 16 |
| Learning rate | 0.001 |
| Discount factor (γ) | 0.99 |
| Minibatch size (discrete RL) | 32 |
| Size of experience replay buffer D (discrete RL) | 50,000 |
| Exploration rate (discrete RL) | 0.1 |
| Number of timesteps per episode (continuous RL) | 128 |
| Exploration standard deviation (continuous RL) | 0.1 |
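As an illustration only, the hyperparameters listed above could be grouped into a small configuration object. The following is a minimal sketch, not the authors' code: the class names, field names, and the `effective_horizon` helper are hypothetical, and only the numeric values come from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SharedHyperparameters:
    """Values the table lists without a method qualifier (names are hypothetical)."""
    lstm_num_layers: int = 2       # number of hidden layers in the LSTM
    lstm_hidden_size: int = 16     # hidden units in the LSTM
    learning_rate: float = 1e-3
    discount_factor: float = 0.99


@dataclass(frozen=True)
class DiscreteRLHyperparameters(SharedHyperparameters):
    """Rows marked "(discrete RL)" in the table."""
    minibatch_size: int = 32
    replay_buffer_size: int = 50_000
    exploration_rate: float = 0.1


@dataclass(frozen=True)
class ContinuousRLHyperparameters(SharedHyperparameters):
    """Rows marked "(continuous RL)" in the table."""
    timesteps_per_episode: int = 128
    exploration_std: float = 0.1


def effective_horizon(discount_factor: float) -> float:
    """Sum of the geometric discount weights, 1 / (1 - gamma).

    This is a common rule of thumb for how many future steps carry
    meaningful weight; it is not a quantity reported in the source.
    """
    return 1.0 / (1.0 - discount_factor)


if __name__ == "__main__":
    discrete = DiscreteRLHyperparameters()
    continuous = ContinuousRLHyperparameters()
    print(discrete)
    print(continuous)
    print(f"effective horizon: {effective_horizon(discrete.discount_factor):.1f} steps")
```

Freezing the dataclasses keeps the two configurations immutable, so a run of either agent cannot accidentally alter the shared values.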

| Method | 30 min Averaged Profit (USD) |
|---|---|
| Continuous RL ( = 0) | 5.369 |
| Continuous RL ( = 1) | 14.569 |
| Continuous RL ( = 0.1) | 22.823 |
| Discrete RL | 32.392 |
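The gap between the methods in the profit table can be expressed as a relative gain. The sketch below uses only the four values from that table. The dictionary keys are hypothetical labels, because the symbol for the parameter varied across the continuous RL rows is missing from the table, and `relative_gain` is an illustrative helper rather than a metric defined in the source.

```python
# 30 min averaged profit (USD), copied from the table.
# Keys are hypothetical labels; the varied parameter is unnamed in the table.
profits_usd = {
    "continuous_rl_param_0": 5.369,
    "continuous_rl_param_1": 14.569,
    "continuous_rl_param_0.1": 22.823,
    "discrete_rl": 32.392,
}


def relative_gain(candidate: float, baseline: float) -> float:
    """Fractional improvement of candidate over baseline."""
    return (candidate - baseline) / baseline


# Best-performing continuous RL configuration in the table.
best_continuous = max(
    value for name, value in profits_usd.items() if name.startswith("continuous")
)

gain_over_best_continuous = relative_gain(profits_usd["discrete_rl"], best_continuous)

if __name__ == "__main__":
    print(f"best continuous RL profit: {best_continuous:.3f} USD")
    print(f"discrete RL gain over it:  {gain_over_best_continuous:.1%}")
```

With these figures, the discrete RL profit is roughly 42% higher than that of the best continuous RL configuration listed.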

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jeong, J.; Ku, T.-Y.; Park, W.-K. Exploring the Preference for Discrete over Continuous Reinforcement Learning in Energy Storage Arbitrage. Energies 2024, 17, 5876. https://doi.org/10.3390/en17235876
