A Comparative Study of Data-Driven Early-Stage End-of-Life Classification Approaches for Lithium-Ion Batteries

Abstract

1. Introduction

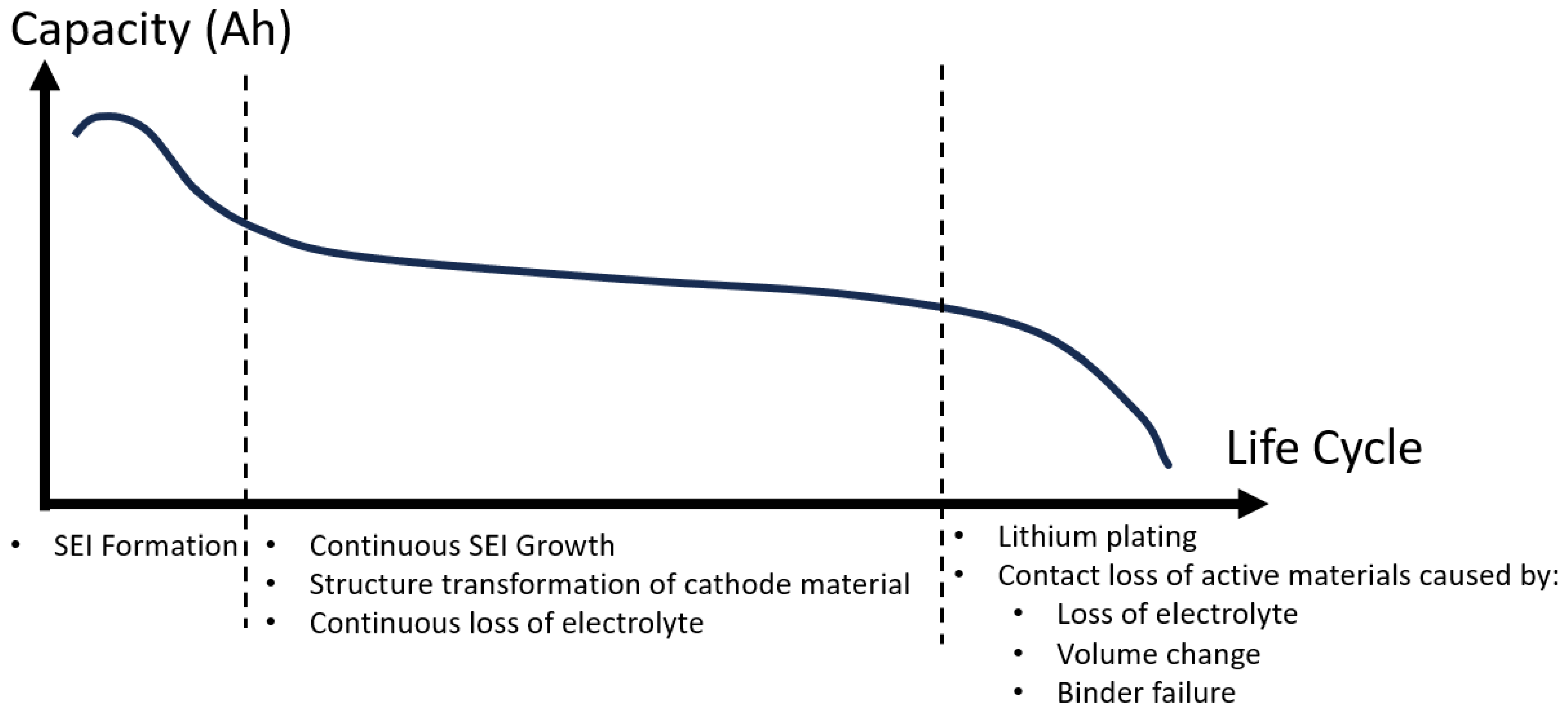

2. Chemical Mechanism for Li-Ion Battery Primary Degradation

2.1. Loss of Lithium Inventory (LLI)

2.2. Loss of Active Material of the Negative Electrode (LAM_NE)

2.3. Loss of Active Material of the Positive Electrode (LAM_PE)

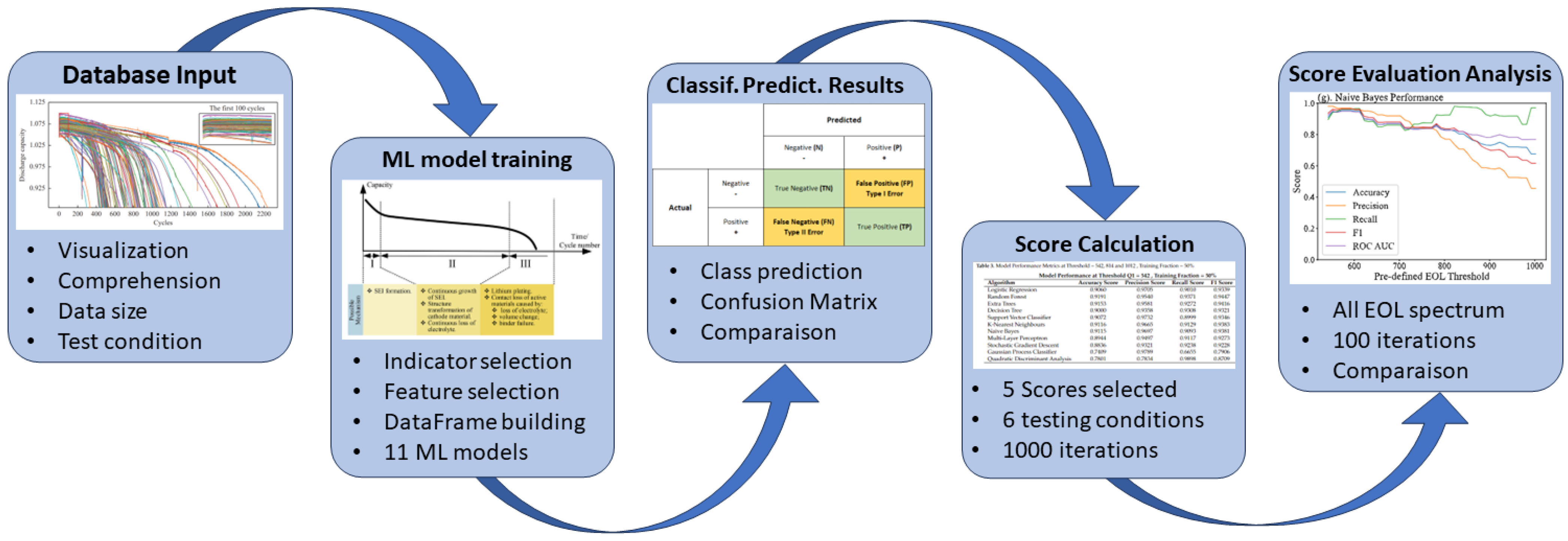

3. Methodology for Battery EOL Classification Estimation

3.1. Selected Database for Classification Training

3.2. Feature Selection

- Cycle index: This feature indicates the real-life cycle count of each cell, serving as the benchmark for our classification algorithm’s threshold.

- Charged/discharged capacity: Capacity fade results from the loss of lithium inventory and the loss of active anode material, the primary degradation modes triggered by the chemical reactions that cause battery aging, as presented in Figure 7a,b.

- Charged/discharged energy and energy efficiency: During charge-discharge cycles in a degraded battery, the increased internal resistance causes more energy to be lost as heat. As a result, less energy is stored during charging and less is available during discharging, leading to an overall energy loss, as presented in Figure 7c,d.

- Average/maximum/minimum temperature: Increased internal resistance and the breakdown of internal components make current flow less efficient, which generates excess heat during operation and manifests as temperature fluctuations, as presented in Figure 7e.

- DC internal resistance: Battery degradation increases the DC internal resistance, primarily through the formation of internal chemical byproducts and the loss of electrode materials. Over time, these processes hinder the flow of ions within the battery, thereby increasing its internal resistance, as presented in Figure 7f.
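Two of the quantities listed above reduce to short computations, sketched here for illustration. The function names, the sample numbers, and the convention that the positive class means "cycle life at or above the threshold" are assumptions made for this sketch, not details taken from the study; only the threshold value 814 appears in the results tables.

```python
def energy_efficiency(charged_energy_wh, discharged_energy_wh):
    """Ratio of energy delivered on discharge to energy stored on charge."""
    if charged_energy_wh <= 0:
        raise ValueError("charged energy must be positive")
    return discharged_energy_wh / charged_energy_wh


def eol_label(cycle_life, threshold):
    """Binary class label: 1 if the cell's cycle life reaches the threshold.

    Assumption: the positive class is the long-lived one; the study may
    use the opposite convention.
    """
    return 1 if cycle_life >= threshold else 0


# Hypothetical cells: (charged Wh, discharged Wh, observed cycle life)
cells = [(3.60, 3.42, 480), (3.58, 3.47, 900), (3.61, 3.25, 1100)]
threshold = 814  # threshold Q2 from the results tables

efficiencies = [round(energy_efficiency(c, d), 3) for c, d, _ in cells]
labels = [eol_label(life, threshold) for _, _, life in cells]
print(efficiencies, labels)
```

A falling efficiency over successive cycles is the kind of trend that the classifier is expected to pick up from the early-cycle data.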

3.3. Data Pre-Treatment

3.4. Data-Driven Approach for EOL Classification

- Logistic regression: This model was chosen as the baseline because of its simplicity, transparency, and interpretability. As a linear model, it shows clearly how each feature contributes to the predicted probability of EOL. Although less expressive than the other models, it serves as a benchmark against which the more complex algorithms are assessed [28,29].

- Random forest classifier: The random forest classifier was selected because it handles non-linearity and complex feature interactions and is resistant to overfitting. It can therefore capture intricate degradation patterns that simpler models may miss. Its ensemble approach offers robustness and the potential for high predictive accuracy [30].

- Extra trees classifier: Like the random forest classifier, the extra trees classifier is an ensemble method, which mitigates overfitting and captures subtle variations in the data. It was included to assess whether its distinct tree-building strategy yields a significant improvement over the random forest model [31].

- Decision tree classifier: The decision tree classifier was included for its simplicity and its ability to partition the feature space into easily interpretable decision rules. Although susceptible to overfitting, it serves as a comparative reference for the trade-off between interpretability and model performance [32].

- Support vector classifier: The support vector classifier is effective for both binary and multiclass classification. Its objective is to find the hyperplane that separates the classes with the maximum margin [30].

- K-nearest neighbors classifier: The K-nearest neighbors classifier is a non-parametric classifier that assigns labels to data points based on the majority class among the k-nearest neighbors in feature space [31].

- Naive Bayes classifier: The naive Bayes classifier is based on Bayes’ theorem; it employs a probabilistic mechanism, assuming independence among features to simplify the computation of conditional probabilities. This mechanism enables efficient model training and quick prediction generation.

- Multi-layer perceptron (MLP) classifier: This is an artificial neural network with one or more hidden layers; it captures complex relationships in data through its layered structure. However, this complexity can make training challenging, requiring significant computational resources and time.

- Stochastic gradient descent (SGD) classifier: The SGD classifier iteratively updates the model parameters by considering a random subset of the training data, enabling it to efficiently handle large datasets while continuously enhancing the model’s performance [33].

- Gaussian process classifier: A probabilistic classifier built on Gaussian processes. It provides a flexible framework that models complex relationships and quantifies uncertainty in the data. It is particularly suited to scenarios where understanding the confidence of predictions and handling non-linear decision boundaries are crucial.

- Quadratic discriminant analysis: A generative modeling approach that captures non-linear (quadratic) decision boundaries by modeling the data point distribution of each class as a Gaussian with its own covariance, offering flexibility in classification tasks.
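Of the classifiers listed above, the K-nearest neighbors rule is compact enough to sketch in full. The following is a minimal standard-library illustration of majority voting among the k nearest points in feature space; the feature values, labels, and the helper name `knn_predict` are hypothetical, and the study itself most likely relied on a machine learning library rather than code of this kind.

```python
from collections import Counter
import math


def knn_predict(train_x, train_y, query, k=3):
    """Label `query` by majority vote among its k nearest training points."""
    # Pair each training label with its Euclidean distance to the query.
    distances = sorted(
        (math.dist(x, query), y) for x, y in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical, already-normalised two-feature samples (not from the study):
# (capacity fade rate, resistance growth rate); label 1 = long-lived cell.
train_x = [(0.10, 0.20), (0.15, 0.25), (0.20, 0.10),
           (0.80, 0.90), (0.85, 0.75), (0.90, 0.80)]
train_y = [1, 1, 1, 0, 0, 0]

print(knn_predict(train_x, train_y, (0.12, 0.22)))  # -> 1
print(knn_predict(train_x, train_y, (0.82, 0.85)))  # -> 0
```

Because the vote depends on distances, features on very different scales (for example temperature and internal resistance) would normally be normalised first.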

3.5. Model Performance Evaluation Score

- Accuracy: The accuracy score measures the ratio of correctly predicted instances to the total number of instances in the database. It is often treated as the headline score for evaluating a classification model. With TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives, the accuracy score is:

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- Precision: Precision measures the ratio of correctly predicted positive instances (TP) to the total number of instances predicted as positive (TP + FP). Precision is useful when the goal is to minimize false positives. The precision score is:

  $$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall (Sensitivity or True Positive Rate (TPR)): Recall measures the ratio of correctly predicted positive instances (TP) to the total number of actual positive instances (TP + FN). It is useful when the goal is to minimize false negatives. The recall score is:

  $$\text{Recall} = \frac{TP}{TP + FN}$$

- F1-Score: The F1-score is the harmonic mean of precision and recall, and therefore balances the two. It is useful when a single overall measure of a model’s performance is required. The F1-score is:

  $$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

- ROC AUC (Receiver Operating Characteristic—Area Under the Curve): ROC AUC is defined as the area under the receiver operating characteristic curve, which is the plot of the true positive rate (TPR) against the false positive rate (FPR) at each threshold setting. This score evaluates a model’s ability to distinguish between classes across different threshold values. It is particularly useful for imbalanced databases [36].
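The scores described above can be computed directly from confusion-matrix counts and classifier scores. The sketch below is illustrative: the counts and scores are made up, returning 0.0 when a denominator is zero is a convention chosen here, and ROC AUC is computed through its equivalent pairwise-ranking form (the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one) rather than by integrating the curve.

```python
def classification_scores(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def roc_auc(labels, scores):
    """ROC AUC as the fraction of positive/negative pairs ranked correctly.

    Ties between a positive and a negative score count as one half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical counts and scores, chosen only to exercise the functions.
result = classification_scores(tp=40, fp=10, tn=45, fn=5)
auc = roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.2])
print(result, auc)
```

With these inputs the accuracy is 0.85, the precision 0.8, and the AUC 0.75, since three of the four positive/negative pairs are ranked correctly.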

4. Results and Analysis

4.1. Classification Prediction Results

4.2. Threshold-Dependent Variability in Model Performance

4.2.1. Logistic Regression Performance

4.2.2. Random Forest Performance

4.2.3. Extra Trees Performance

4.2.4. Decision Tree Performance

4.2.5. Support Vector Classifier Performance

4.2.6. K-Nearest Neighbor Classifier Performance

4.2.7. Naive Bayes Classifier Performance

4.2.8. Multi-Layer Perceptron Classifier Performance

4.2.9. Stochastic Gradient Descent Classifier Performance

4.2.10. Gaussian Process Classifier Performance

4.2.11. Quadratic Discriminant Analysis Classifier Performance

5. Discussion

5.1. Comparative Performance Analysis

5.1.1. Ensemble Methods

5.1.2. Linear Models

5.1.3. Support Vector Classifier and Neural Networks

5.1.4. Decision Tree and K-Nearest Neighbors

5.2. Future Work for Improvement

5.2.1. Feature Engineering

5.2.2. Model Tuning and Optimization

5.2.3. Hybrid and Ensemble Approaches

5.2.4. Computational Cost and Efficiency Analysis

5.3. Future Application to Online Health-Aware EMS Strategies

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Xu, W.; Tan, H. Research on Calendar Aging for Lithium-Ion Batteries Used in Uninterruptible Power Supply System Based on Particle Filtering. World Electr. Veh. J. 2023, 14, 209.

2. Lu, L.; Han, X.; Li, J.; Hua, J.; Ouyang, M. A review on the key issues for lithium-ion battery management in electric vehicles. J. Power Sources 2013, 226, 272–288.

3. Meng, J.; Yue, M.; Diallo, D. A Degradation Empirical-Model-Free Battery End-of-Life Prediction Framework Based on Gaussian Process Regression and Kalman Filter. IEEE Trans. Transp. Electrif. 2023, 9, 4898–4908.

4. Susai, F.A.; Sclar, H.; Shilina, Y.; Penki, T.R.; Raman, R.; Maddukuri, S.; Maiti, S.; Halalay, I.C.; Luski, S.; Markovsky, B.; et al. Horizons for Li-Ion Batteries Relevant to Electro-Mobility: High-Specific-Energy Cathodes and Chemically Active Separators. Adv. Mater. 2018, 30, 1801348.

5. Fang, P.; Zhang, A.; Sui, X.; Wang, D.; Yin, L.; Wen, Z. Analysis of Performance Degradation in Lithium-Ion Batteries Based on a Lumped Particle Diffusion Model. ACS Omega 2023, 8, 32884–32891.

6. Han, X.; Lu, L.; Zheng, Y.; Feng, X.; Li, Z.; Li, J.; Ouyang, M. A review on the key issues of the lithium ion battery degradation among the whole life cycle. eTransportation 2019, 1, 100005.

7. Guo, R.; Lu, L.; Ouyang, M.; Feng, X. Mechanism of the entire overdischarge process and overdischarge-induced internal short circuit in lithium-ion batteries. Sci. Rep. 2016, 6, 30248.

8. Rahimi-Eichi, H.; Ojha, U.; Baronti, F.; Chow, M.-Y. Battery Management System: An Overview of Its Application in the Smart Grid and Electric Vehicles. IEEE Ind. Electron. Mag. 2013, 7, 4–16.

9. Palacín, M. Understanding ageing in Li-ion batteries: A chemical issue. Chem. Soc. Rev. 2018, 47, 4924–4933.

10. Omar, N.; Firouz, Y.; Gualous, H.; Salminen, J.; Kallio, T.; Timmermans, J.-M.; Coosemans, T.; Van den Bossche, P.; Van Mierlo, J. Aging and degradation of lithium-ion batteries. In Rechargeable Lithium Batteries: From Fundamentals to Applications; Woodhead Publishing: Sawston, UK, 2015; Chapter 9; pp. 263–279. ISBN 978-1-78242-090-3.

11. Yu, C.; Zhu, J.; Wei, X.; Dai, H. Research on Temperature Inconsistency of Large-Format Lithium-Ion Batteries Based on the Electrothermal Model. World Electr. Veh. J. 2023, 14, 271.

12. Meng, J.; Boukhnifer, M.; Diallo, D.; Wang, T. Short-Circuit Fault Diagnosis and State Estimation for Li-ion Battery using Weighting Function Self-Regulating Observer. In Proceedings of the 2020 Prognostics and Health Management Conference (PHM-Besançon), Besancon, France, 4–7 May 2020; pp. 15–20.

13. Shao, L.; Zhang, Y.; Zheng, X.; He, X.; Zheng, Y.; Liu, Z. A Review of Remaining Useful Life Prediction for Energy Storage Components Based on Stochastic Filtering Methods. Energies 2023, 16, 1469.

14. Tao, S.; Ma, R.; Chen, Y.; Liang, Z.; Ji, H.; Han, Z.; Wei, G.; Zhang, X.; Zhou, G. Rapid and sustainable battery health diagnosis for recycling pretreatment using fast pulse test and random forest machine learning. J. Power Sources 2024, 597, 234156.

15. Tao, S.; Liu, H.; Sun, C.; Ji, H.; Ji, G.; Han, Z.; Gao, R.; Ma, J.; Ma, R.; Chen, Y.; et al. Collaborative and privacy-preserving retired battery sorting for profitable direct recycling via federated machine learning. Nat. Commun. 2023, 14, 8032.

16. Rauf, H.; Khalid, M.; Arshad, N. Machine learning in state of health and remaining useful life estimation: Theoretical and technological development in battery degradation modelling. Renew. Sustain. Energy Rev. 2022, 156, 111903.

17. Ashok, B.; Kannan, C.; Mason, B.; Ashok, S.D.; Indragandhi, V.; Patel, D.; Wagh, A.S.; Jain, A.; Kavitha, C. Towards Safer and Smarter Design for Lithium-Ion-Battery-Powered Electric Vehicles: A Comprehensive Review on Control Strategy Architecture of Battery Management System. Energies 2022, 15, 4227.

18. Severson, K.A.; Attia, P.M.; Jin, N.; Perkins, N.; Jiang, B.; Yang, Z.; Chen, M.H.; Aykol, M.; Herring, P.K.; Fraggedakis, D.; et al. Data-driven prediction of battery cycle life before capacity degradation. Nat. Energy 2019, 4, 383–391.

19. Che, Y.; Foley, A.; El-Gindy, M.; Lin, X.; Hu, X.; Pecht, M. Joint Estimation of Inconsistency and State of Health for Series Battery Packs. Automot. Innov. 2021, 4, 103–116.

20. Guo, J.; Li, Y.; Pedersen, K.; Stroe, D.-I. Lithium-Ion Battery Operation, Degradation, and Aging Mechanism in Electric Vehicles: An Overview. Energies 2021, 14, 5220.

21. Barre, A.; Deguilhem, B.; Grolleau, S.; Gérard, M.; Suard, F.; Riu, D. A review on lithium-ion battery ageing mechanisms and estimations for automotive applications. J. Power Sources 2013, 241, 680–689.

22. Koleti, U.R.; Rajan, A.; Tan, C.; Moharana, S.; Dinh, T.Q.; Marco, J. A Study on the Influence of Lithium Plating on Battery Degradation. Energies 2020, 13, 3458.

23. Birkl, C.R.; Roberts, M.R.; McTurk, E.; Bruce, P.G.; Howey, D.A. Degradation diagnostics for lithium ion cells. J. Power Sources 2017, 341, 373–386.

24. Tian, H.; Qin, P.; Li, K.; Zhao, Z. A review of the state of health for lithium-ion batteries: Research status and suggestions. J. Clean. Prod. 2020, 261, 120813.

25. Pastor-Fernández, C.; Uddin, K.; Chouchelamane, G.H.; Widanage, W.D.; Marco, J. A Comparison between Electrochemical Impedance Spectroscopy and Incremental Capacity-Differential Voltage as Li-ion Diagnostic Techniques to Identify and Quantify the Effects of Degradation Modes within Battery Management Systems. J. Power Sources 2017, 360, 301–318.

26. Jia, K.; Ma, J.; Wang, J.; Liang, Z.; Ji, G.; Piao, Z.; Gao, R.; Zhu, Y.; Zhuang, Z.; Zhou, G.; et al. Long-Life Regenerated LiFePO4 from Spent Cathode by Elevating the d-Band Center of Fe. Adv. Mater. 2022, 35, 2208034.

27. Zhang, S.; Hosen, M.S.; Kalogiannis, T.; Mierlo, J.V.; Berecibar, M. State of Health Estimation of Lithium-Ion Batteries Based on Electrochemical Impedance Spectroscopy and Backpropagation Neural Network. World Electr. Veh. J. 2021, 12, 156.

28. Kumar, A.; Rao, V.R.; Soni, H. An empirical comparison of neural network and logistic regression models. Mark. Lett. 1995, 6, 251–263.

29. Issitt, R.W.; Cortina-Borja, M.; Bryant, W.; Bowyer, S.; Taylor, A.M.; Sebire, N. Classification Performance of Neural Networks Versus Logistic Regression Models: Evidence From Healthcare Practice. Cureus 2022, 14, 22443.

30. Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support Vector Machine Versus Random Forest for Remote Sensing Image Classification: A Meta-Analysis and Systematic Review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325.

31. Kumar, S.; Khan, Z.; Jain, A. A Review of Content Based Image Classification Using Machine Learning Approach. Int. J. Adv. Comput. Res. (IJACR) 2012, 2, 55.

32. Geurts, P.; Irrthum, A.; Wehenkel, L. Supervised Learning with Decision Tree-Based Methods in Computational and Systems Biology. Mol. Biosyst. 2009, 5, 1593–1605.

33. Osho, O.; Hong, S. An Overview: Stochastic Gradient Descent Classifier, Linear Discriminant Analysis, Deep Learning and Naive Bayes Classifier Approaches to Network Intrusion Detection. Int. J. Eng. Tech. Res. (IJETR) 2021, 10, 294–308.

34. Hossin, M.; Sulaiman, M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process. 2015, 5, 1.

35. Grandini, M.; Bagli, E.; Visani, G. Metrics for Multi-Class Classification: An Overview. arXiv 2020, arXiv:2008.05756.

36. Hand, D.J. Measuring Classifier Performance: A Coherent Alternative to the Area Under the ROC Curve. Mach. Learn. 2009, 77, 103–123.

37. Liu, K.; Peng, Q.; Li, K.; Chen, T. Data-Based Interpretable Modeling for Property Forecasting and Sensitivity Analysis of Li-ion Battery Electrode. Automot. Innov. 2022, 5, 121–133.

38. Liu, X.; Tao, S.; Fu, S.; Ma, R.; Cao, T.; Fan, H.; Zuo, J.; Zhang, X.; Wang, Y.; Sun, Y. Binary multi-frequency signal for accurate and rapid electrochemical impedance spectroscopy acquisition in lithium-ion batteries. Appl. Energy 2024, 364, 123221.

| Parameter | Specification |
|---|---|
| Nominal capacity and voltage | 1.1 Ah, 3.3 V |
| Recommended standard charge method | 1.5 A to 3.6 V CC-CV, 45 min |
| Recommended fast charge current | 4 A to 3.6 V CC-CV, 15 min |
| Maximum continuous discharge | 30 A |
| Recommended charge and cut-off V at 25 °C | 3.6 V to 2 V |
| Operating temperature range | −30 °C to +60 °C |
| Storage temperature range | −50 °C to +60 °C |
| Core cell weight | 39 g |

| Parameter | Definition |
|---|---|
| | Initial value of cycle 0 |
| | Value at cycle 400 |
| | Derivative between cycle 400 − and 400 |
| | Derivative between cycle 400 − 2 and 400 |
| | (with the selected interval, in the following sections) |
| , | Maximal value and its cycle index |
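The statistics in the table above (initial value, value at cycle 400, derivatives over a trailing interval, and the maximum with its cycle index) can be sketched for a single per-cycle measurement as follows. This is an illustration under stated assumptions: the interval length of 50 cycles is hypothetical because the value used in the study is not preserved in the table, the second derivative row is read here as spanning twice the interval, and the capacity trace is synthetic.

```python
def early_cycle_features(series, last_cycle=400, interval=50):
    """Summary statistics of one per-cycle measurement up to `last_cycle`.

    `series[i]` is the measured value at cycle i. `interval` is a
    hypothetical choice, not the value used in the study.
    """
    if len(series) <= last_cycle or interval <= 0 or 2 * interval > last_cycle:
        raise ValueError("series too short or interval out of range")
    window = series[: last_cycle + 1]
    end = window[last_cycle]

    def slope(span):
        # Finite-difference derivative over the last `span` cycles.
        return (end - window[last_cycle - span]) / span

    max_value = max(window)
    return {
        "initial": window[0],
        "at_last": end,
        "slope_1x": slope(interval),
        "slope_2x": slope(2 * interval),  # assumed reading of the table
        "max": max_value,
        "max_cycle": window.index(max_value),
    }


# Synthetic capacity trace (Ah): slight rise for 20 cycles, then linear fade.
capacity = [1.10 + 0.0005 * min(c, 20) - 0.0002 * max(c - 20, 0)
            for c in range(500)]
features = early_cycle_features(capacity)
print(features)
```

Applying the same function to each monitored quantity (capacity, energy, temperature, internal resistance) would yield one fixed-length feature vector per cell, which is the form the classifiers expect.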

Model Performance at Threshold Q1 = 542, Training Fraction = 50%

| Algorithm | Accuracy Score | Precision Score | Recall Score | F1 Score | ROC AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.9060 | 0.9705 | 0.9010 | 0.9339 | 0.9114 |
| Random Forest | 0.9191 | 0.9540 | 0.9371 | 0.9447 | 0.9807 |
| Extra Trees | 0.9153 | 0.9581 | 0.9272 | 0.9416 | 0.9782 |
| Decision Tree | 0.9000 | 0.9358 | 0.9308 | 0.9321 | 0.8724 |
| Support Vector Classifier | 0.9072 | 0.9732 | 0.8999 | 0.9346 | 0.9145 |
| K-Nearest Neighbours | 0.9116 | 0.9665 | 0.9129 | 0.9383 | 0.9115 |
| Naive Bayes | 0.9115 | 0.9697 | 0.9093 | 0.9381 | 0.9138 |
| Multi-Layer Perceptron | 0.8944 | 0.9497 | 0.9117 | 0.9273 | 0.8792 |
| Stochastic Gradient Descent | 0.8836 | 0.9321 | 0.9238 | 0.9228 | 0.8487 |
| Gaussian Process Classifier | 0.7409 | 0.9789 | 0.6655 | 0.7906 | 0.8124 |
| Quadratic Discriminant Analysis | 0.7801 | 0.7834 | 0.9898 | 0.8709 | 0.5946 |

Model Performance at Threshold Q2 = 814, Training Fraction = 50%

| Algorithm | Accuracy Score | Precision Score | Recall Score | F1 Score | ROC AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.8509 | 0.8476 | 0.8614 | 0.8507 | 0.8530 |
| Random Forest | 0.9501 | 0.9363 | 0.9672 | 0.9504 | 0.9874 |
| Extra Trees | 0.9364 | 0.9191 | 0.9589 | 0.9370 | 0.9852 |
| Decision Tree | 0.9139 | 0.9186 | 0.9108 | 0.9124 | 0.9161 |
| Support Vector Classifier | 0.8314 | 0.8377 | 0.8425 | 0.8308 | 0.8346 |
| K-Nearest Neighbours | 0.9297 | 0.9385 | 0.9229 | 0.9282 | 0.9306 |
| Naive Bayes | 0.8296 | 0.7559 | 0.9728 | 0.8492 | 0.8308 |
| Multi-Layer Perceptron | 0.7633 | 0.7353 | 0.8606 | 0.7732 | 0.7666 |
| Stochastic Gradient Descent | 0.7549 | 0.7233 | 0.8231 | 0.7394 | 0.7570 |
| Gaussian Process Classifier | 0.8191 | 0.9628 | 0.6632 | 0.7823 | 0.8188 |
| Quadratic Discriminant Analysis | 0.7522 | 0.7542 | 0.8649 | 0.7718 | 0.7597 |

Model Performance at Threshold Q3 = 1012, Training Fraction = 50%

| Algorithm | Accuracy Score | Precision Score | Recall Score | F1 Score | ROC AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.8600 | 0.7808 | 0.6417 | 0.6948 | 0.7883 |
| Random Forest | 0.9588 | 0.9447 | 0.8936 | 0.9138 | 0.9957 |
| Extra Trees | 0.9438 | 0.9372 | 0.8404 | 0.8794 | 0.9881 |
| Decision Tree | 0.9464 | 0.9008 | 0.8932 | 0.8917 | 0.9189 |
| Support Vector Classifier | 0.8701 | 0.8303 | 0.6403 | 0.7074 | 0.7949 |
| K-Nearest Neighbours | 0.9340 | 0.9091 | 0.8257 | 0.8599 | 0.8987 |
| Naive Bayes | 0.6555 | 0.4255 | 0.9384 | 0.5792 | 0.7495 |
| Multi-Layer Perceptron | 0.7568 | 0.5753 | 0.5034 | 0.4780 | 0.6749 |
| Stochastic Gradient Descent | 0.6721 | 0.3089 | 0.5091 | 0.3438 | 0.6198 |
| Gaussian Process Classifier | 0.8414 | 0.8695 | 0.4443 | 0.5773 | 0.7105 |
| Quadratic Discriminant Analysis | 0.7463 | 0.0243 | 0.0139 | 0.0160 | 0.5039 |

Model Performance at Threshold Q1 = 542, Training Fraction = 25%

| Model | Accuracy Score | Precision Score | Recall Score | F1 Score | ROC AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.9029 | 0.9727 | 0.8949 | 0.9317 | 0.9109 |
| Random Forest | 0.9154 | 0.9518 | 0.9348 | 0.9424 | 0.9787 |
| Extra Trees | 0.9131 | 0.9570 | 0.9257 | 0.9404 | 0.9756 |
| Decision Tree | 0.8978 | 0.9366 | 0.9276 | 0.9307 | 0.8740 |
| Support Vector Classifier | 0.9026 | 0.9738 | 0.8938 | 0.9315 | 0.9116 |
| K-Nearest Neighbours | 0.9092 | 0.9659 | 0.9108 | 0.9369 | 0.9087 |
| Naive Bayes | 0.9103 | 0.9706 | 0.9067 | 0.9371 | 0.9136 |
| Multi-Layer Perceptron | 0.8911 | 0.9509 | 0.9009 | 0.9225 | 0.8833 |
| Stochastic Gradient Descent | 0.8844 | 0.9347 | 0.9215 | 0.9231 | 0.8520 |
| Gaussian Process Classifier | 0.6158 | 0.9776 | 0.4949 | 0.6537 | 0.7306 |
| Quadratic Discriminant Analysis | 0.7410 | 0.7500 | 0.9838 | 0.8491 | 0.5177 |

Model Performance at Threshold Q2 = 814, Training Fraction = 25%

| Model | Accuracy Score | Precision Score | Recall Score | F1 Score | ROC AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.8346 | 0.8428 | 0.8363 | 0.8332 | 0.8366 |
| Random Forest | 0.9275 | 0.9094 | 0.9541 | 0.9289 | 0.9798 |
| Extra Trees | 0.9126 | 0.8950 | 0.9400 | 0.9142 | 0.9753 |
| Decision Tree | 0.8906 | 0.8989 | 0.8856 | 0.8882 | 0.8933 |
| Support Vector Classifier | 0.8277 | 0.8418 | 0.8329 | 0.8265 | 0.8301 |
| K-Nearest Neighbours | 0.8873 | 0.9014 | 0.8765 | 0.8842 | 0.8885 |
| Naive Bayes | 0.8204 | 0.7752 | 0.9095 | 0.8298 | 0.8209 |
| Multi-Layer Perceptron | 0.7534 | 0.7373 | 0.8296 | 0.7554 | 0.7568 |
| Stochastic Gradient Descent | 0.7469 | 0.7119 | 0.8013 | 0.7223 | 0.7497 |
| Gaussian Process Classifier | 0.7300 | 0.9589 | 0.4791 | 0.6333 | 0.7292 |
| Quadratic Discriminant Analysis | 0.7413 | 0.7233 | 0.8594 | 0.7613 | 0.7449 |

Model Performance at Threshold Q3 = 1012, Training Fraction = 25%

| Model | Accuracy Score | Precision Score | Recall Score | F1 Score | ROC AUC |
|---|---|---|---|---|---|
| Logistic Regression | 0.8576 | 0.7843 | 0.6510 | 0.6949 | 0.7902 |
| Random Forest | 0.9305 | 0.9150 | 0.8117 | 0.8504 | 0.9872 |
| Extra Trees | 0.9117 | 0.8913 | 0.7623 | 0.8083 | 0.9721 |
| Decision Tree | 0.9189 | 0.8688 | 0.8161 | 0.8327 | 0.8804 |
| Support Vector Classifier | 0.8547 | 0.7865 | 0.6329 | 0.6744 | 0.7824 |
| K-Nearest Neighbours | 0.8886 | 0.8818 | 0.6691 | 0.7346 | 0.8178 |
| Naive Bayes | 0.6806 | 0.4476 | 0.8628 | 0.5750 | 0.7420 |
| Multi-Layer Perceptron | 0.7388 | 0.5449 | 0.4937 | 0.4502 | 0.6596 |
| Stochastic Gradient Descent | 0.6724 | 0.3225 | 0.5136 | 0.3510 | 0.6216 |
| Gaussian Process Classifier | 0.8099 | 0.8647 | 0.2983 | 0.4308 | 0.6412 |
| Quadratic Discriminant Analysis | - | - | - | - | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Meng, J.; Azib, T. A Comparative Study of Data-Driven Early-Stage End-of-Life Classification Approaches for Lithium-Ion Batteries. Energies 2024, 17, 4485. https://doi.org/10.3390/en17174485
