1. Introduction

In recent years, wind energy has gained significant attention as an environmentally friendly, clean renewable energy. Wind power generation has the potential to alleviate the shortage of conventional energy and mitigate increasing environmental pollution [1,2]. However, due to the strong volatility and randomness of wind power, its integration into the grid on a large scale can adversely impact the stability of the power system [3]. Accurate prediction of wind power can aid power companies, power plants and grid management departments in planning and dispatching wind power generation systems, improving the reliability and stability of power systems and reducing dependence on traditional energy sources [4,5].

Wind power prediction methods mainly include physical methods, statistical methods and combination methods [6]. Physical methods build a model from the complex physical relationships among quantities such as meteorological and geomorphological information; they have a high computational cost and are not suitable for ultra-short-term prediction [7]. Statistical methods build a model that expresses the mapping between input and output by mining the inherent patterns in a large amount of historical data [8]. Commonly used statistical methods include time series analysis [9,10,11], the BP neural network (BPNN) [12,13], support vector regression (SVR) [14,15,16,17] and deep learning networks [18,19]. Wind power is a time series with strong non-linearity. Models such as time series analysis, BPNN and SVR have difficulty extracting the deeper features of a wind power series and cannot cope with its complex trends, so it is difficult to predict wind power accurately with these methods. In contrast, deep learning models have stronger non-linear mapping, self-learning and feature extraction abilities [20]. In particular, the long short-term memory (LSTM) network is suitable for wind power prediction due to its unique network structure [21]. Several LSTM-based wind power prediction models have been proposed in [22,23,24,25,26]. These models use the LSTM network to learn the time series characteristics of wind power data and achieve higher prediction accuracy than linear models, traditional machine learning models and artificial neural networks.

The performance of deep learning networks depends heavily on the learning process, so the optimization algorithm used for network training is crucial [27]. For the stochastic gradient descent method, the learning rate for the next round of training can be adjusted according to the loss change during training and the current learning rate, which improves the training speed and accuracy of the model [28]. However, this method cannot adaptively update every parameter in the network, and the prediction accuracy of the model is also limited. The adaptive moment estimation (Adam) algorithm is an improvement on the root mean square propagation (RMSProp) algorithm [29]. It adaptively updates each parameter in a deep learning network and is one of the most commonly used optimization algorithms for such networks. However, the global learning rate of Adam is a fixed value, and to avoid non-convergence caused by a learning rate that is too large in the later stage of training, the global learning rate is often set very small; therefore, the optimization effect of the Adam algorithm on the network is greatly limited.
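To make the role of the fixed global learning rate concrete, the following is a minimal sketch of one standard Adam update, written with the Python standard library only. The function name `adam_step` and the flat-list representation of parameters are illustrative assumptions, and the default hyperparameters are the usual Adam defaults rather than values taken from this paper.

```python
import math


def adam_step(params, grads, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to flat lists of floats.

    params, grads, m, v: lists of equal length (parameters, gradients,
    first and second moment estimates). t is the 1-based step counter.
    Returns the updated (params, m, v).
    """
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        m_i = beta1 * m_i + (1.0 - beta1) * g        # biased first moment
        v_i = beta2 * v_i + (1.0 - beta2) * g * g    # biased second moment
        m_hat = m_i / (1.0 - beta1 ** t)             # bias-corrected first moment
        v_hat = v_i / (1.0 - beta2 ** t)             # bias-corrected second moment
        # The step is adapted per parameter, but every parameter shares
        # the same fixed global learning rate lr.
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly `lr`; this is why a small fixed `lr` bounds how fast training can progress.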

To further improve the prediction accuracy and modeling efficiency of the model, we propose a loss shrinkage Adam (LsAdam) optimization algorithm by adjusting the global learning rate of the Adam according to the loss change during the model training process and construct an LsAdam–LSTM wind power prediction model.

3. LsAdam–LSTM Wind Power Prediction Model

Loss change is very important in the training process because the direction and magnitude of loss change can reflect the quality of the network learning process. Therefore, to improve the training speed of the wind power prediction model based on LSTM, further improve the prediction accuracy and overcome the limitations of the fixed global learning rate, it is necessary to adaptively adjust the global learning rate through loss change during network training. If the loss decreases after each training epoch, it is reasonable to think that parameter adjustment can gradually make the network converge, and the global learning rate can be appropriately increased to accelerate the training process. In contrast, if the loss increases after each training epoch, it means that the current parameter adjustment is not conducive to the training process, and the global learning rate can be appropriately reduced to stabilize the training process. To ensure faster loss shrinkage after each epoch of training, a gain coefficient based on loss change is used to dynamically adjust the global learning rate, as shown in Equation (1).

$$\eta_{k+1} = g_k \, \eta_k \qquad (1)$$

where $\eta_k$ is the global learning rate at the $k$-th training epoch, $k = 1, 2, \ldots$, and $g_k$ is the gain coefficient at the $k$-th training epoch.

Unlike the Adam algorithm, in which the global learning rate is a fixed value, we use the loss change during training to adjust the global learning rate in real time and construct the LsAdam algorithm, which determines the global learning rate at each training epoch based on Equation (1). Building on the Adam algorithm, the proposed algorithm adaptively adjusts the global learning rate through a gain coefficient that carries the loss change information, which can effectively alleviate the limitations caused by a small fixed global learning rate during training.

To effectively determine the gain coefficient, the loss change before and after each epoch of training needs to be evaluated. To better reflect the magnitude of the loss change during training, we use the relative loss change to measure its change, as shown in Equation (2).

$$\Delta_k = \frac{L_k - L_{k-1}}{L_{k-1}} \qquad (2)$$

where $\Delta_k$ is the relative change of the loss after the $k$-th training epoch compared with the previous epoch, $L_k$ is the loss value of the network after the $k$-th training epoch, and $L_{k-1}$ is the loss value of the network after the $(k-1)$-th training epoch.
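The two quantities described so far, the relative loss change and the multiplicative adjustment of the global learning rate, can be sketched as two small helpers. The function names are hypothetical, and the sign convention (a negative value means the loss fell) is an assumption made for this sketch.

```python
def relative_loss_change(loss_now, loss_prev):
    """Relative change of the loss against the previous epoch.

    Assumed convention: negative when the loss decreased,
    positive when it increased.
    """
    if loss_prev == 0:
        raise ValueError("previous loss must be non-zero")
    return (loss_now - loss_prev) / loss_prev


def next_learning_rate(lr_now, gain):
    """Scale the current global learning rate by a positive gain coefficient."""
    if gain <= 0:
        raise ValueError("gain must be positive")
    return gain * lr_now
```

Using a relative rather than an absolute change keeps the measure comparable between the early epochs, where the loss is large, and the late epochs, where it is small.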

To prevent the learning rate from becoming too large or too small after the gain coefficient is introduced, this coefficient should be negatively correlated with the current global learning rate. To reduce the influence of random factors such as data noise, an insensitive range is set: when the magnitude of the relative loss change does not exceed an insensitivity threshold, the gain coefficient is 1 and the global learning rate is kept constant.

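Therefore, the rules for the value of the gain coefficient must satisfy the following conditions:

The gain coefficient should be bounded and positive.

When the relative loss change is within the insensitive range, the gain coefficient equals 1.

When the loss decreases by more than the threshold, the gain coefficient is greater than 1 and is positively correlated with the magnitude of the decrease.

When the loss increases by more than the threshold, the gain coefficient is less than 1 and is negatively correlated with the magnitude of the increase.

When the loss change is outside the insensitive range in either direction, the gain coefficient is negatively correlated with the current global learning rate.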

To this end, the gain coefficient is determined from the amount of loss change and the current global learning rate. The inverse tangent (arctangent) function is monotonically increasing and bounded, and its range over the non-negative half-axis of the independent variable is $[0, \pi/2)$. Therefore, the arctangent function is used as the basis for constructing the value rules for the gain coefficient. We construct the gain coefficient as shown in Equation (3); then, the update rule of the global learning rate is shown in Equation (4).

where

is the initial global learning rate, which is generally taken as the global learning rate commonly used in the Adam optimization algorithm, such as

;

is the gain coefficient insensitivity threshold, which is taken as

in this paper.

is a constant, which plays the role of controlling the range of values of

and then controlling the change in the global learning rate. To satisfy

, it is necessary to make

. If

is smaller, the change in the global learning rate is larger, and the algorithm has a more obvious optimization effect on the model; if

is larger, the change in the global learning rate is smaller, and the risk of model non-convergence is lower. So,

is taken as

in this paper. To effectively prevent excessive effects on the gain coefficient due to a wide range of

values,

is chosen.

is a constant, which is used to regulate the sensitivity of the gain coefficient to

, and

. A smaller

will make it easier for the global learning rate of the model to reach a smaller value in the later stages of training, which is beneficial to the convergence of the model; a larger

allows the global learning rate to change more significantly according to

, which makes the algorithm have a more obvious acceleration effect. So,

is taken as

in this paper.

In addition, when the current global learning rate is larger than the initial global learning rate, it suppresses increases in the gain coefficient and promotes decreases; when it is smaller than the initial global learning rate, it promotes increases in the gain coefficient and suppresses decreases. As the network model converges, the magnitude of the loss change tends to decrease and the influence of the current global learning rate on the gain coefficient gradually becomes larger, which brings the global learning rate eventually close to its initial value and is conducive to model convergence.
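As an illustration of how an arctangent-shaped gain can meet the stated requirements (bounded and positive, equal to 1 inside the insensitive range, above 1 when the loss falls, below 1 when it rises, and damped by a large current learning rate), here is a stand-in function. It is not the paper's Equation (3): the functional form, the name `gain_coefficient` and the constants `eps`, `a` and `b` are all assumptions chosen only so that the conditions hold.

```python
import math


def gain_coefficient(delta, lr, lr0, eps=0.01, a=2.0, b=10.0):
    """Hypothetical arctangent gain, not the paper's Equation (3).

    delta: relative loss change (negative when the loss fell).
    lr, lr0: current and initial global learning rates (both positive).
    eps: assumed width of the insensitive range.
    a: assumed range constant; a > 1 keeps the gain positive.
    b: assumed sensitivity to the relative loss change.
    """
    if a <= 1.0:
        raise ValueError("a must exceed 1 so the gain stays positive")
    if abs(delta) <= eps:
        return 1.0  # insensitive range: learning rate unchanged
    if delta < 0:
        # Loss fell: gain lies in (1, 1 + 1/a). A learning rate above lr0
        # shrinks the arctangent argument and so damps the boost.
        return 1.0 + (2.0 / math.pi) * math.atan(b * abs(delta) * lr0 / lr) / a
    # Loss rose: gain lies in [1 - 1/a, 1). A learning rate above lr0
    # enlarges the arctangent argument and so deepens the cut.
    return 1.0 - (2.0 / math.pi) * math.atan(b * delta * lr / lr0) / a
```

In this sketch a smaller `a` widens the range of the gain and a larger `b` makes the gain react more strongly to the same loss change, which mirrors the qualitative roles the text assigns to its two constants.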

In the LsAdam algorithm, the global learning rate is kept constant when the loss change is within the threshold range, and when the loss change is beyond the threshold range, the loss change and the current global learning rate will jointly determine the value of the global learning rate in the next training epoch. The process of LsAdam–LSTM is shown in Algorithm 3.

| Algorithm 3 LsAdam–LSTM algorithm |
| --- |
| Require: initial global learning rate, initial parameter vector, accuracy requirement, number of iterations per epoch |
| LSTM model initialization |
| while the accuracy requirement is not met do |
| while the number of iterations per epoch is not reached do |
| Calculate the gradient at step t |
| Update biased first moment estimate |
| Update biased second moment estimate |
| Update unbiased first moment estimate |
| Update unbiased second moment estimate |
| Update change of the LSTM model parameters |
| Update the LSTM model parameters |
| end while |
| Calculate the relative loss change by Equation (2) |
| Update the global learning rate by Equation (4) |
| end while |
| end |
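The control flow of the algorithm, an inner loop of Adam steps followed by an epoch-level update of the global learning rate, can be sketched on a toy problem. This is only an illustration under stated assumptions: a two-parameter quadratic stands in for the LSTM, the `gain` function is a hypothetical arctangent rule rather than the paper's Equation (3), and all names and constants (`train`, `toy_loss`, `toy_grad`, `TARGET`, `eps`, `a`, `b`) are invented for the example.

```python
import math

TARGET = [3.0, -1.0]  # hypothetical optimum of the toy problem


def toy_loss(theta):
    """Quadratic stand-in for the network loss."""
    return sum((p - c) ** 2 for p, c in zip(theta, TARGET))


def toy_grad(theta):
    """Gradient of toy_loss."""
    return [2.0 * (p - c) for p, c in zip(theta, TARGET)]


def gain(delta, lr, lr0, eps=0.01, a=2.0, b=10.0):
    """Hypothetical arctangent gain (not the paper's Equation (3))."""
    if abs(delta) <= eps:
        return 1.0
    if delta < 0:
        return 1.0 + (2.0 / math.pi) * math.atan(b * abs(delta) * lr0 / lr) / a
    return 1.0 - (2.0 / math.pi) * math.atan(b * delta * lr / lr0) / a


def train(loss_fn, grad_fn, theta, lr0=0.01, tol=1e-6, iters_per_epoch=10,
          max_epochs=500, beta1=0.9, beta2=0.999, adam_eps=1e-8):
    """Adam inner loop with a loss-driven global learning rate per epoch."""
    m = [0.0] * len(theta)
    v = [0.0] * len(theta)
    lr, t = lr0, 0
    prev_loss = loss_fn(theta)
    lr_history = [lr]
    for _ in range(max_epochs):
        if prev_loss <= tol:                      # accuracy requirement met
            break
        for _ in range(iters_per_epoch):          # Adam steps within one epoch
            t += 1
            g = grad_fn(theta)
            m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
            v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
            m_hat = [mi / (1 - beta1 ** t) for mi in m]
            v_hat = [vi / (1 - beta2 ** t) for vi in v]
            theta = [p - lr * mh / (math.sqrt(vh) + adam_eps)
                     for p, mh, vh in zip(theta, m_hat, v_hat)]
        loss = loss_fn(theta)
        delta = (loss - prev_loss) / prev_loss    # relative loss change
        lr = gain(delta, lr, lr0) * lr            # epoch-level rate update
        lr_history.append(lr)
        prev_loss = loss
    return theta, prev_loss, lr_history
```

Calling `train(toy_loss, toy_grad, [0.0, 0.0])` returns the final parameters, the final loss and the learning rate recorded after each epoch, which makes it easy to inspect how the rate reacts to the loss.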

The global learning rate of LsAdam–LSTM shows the following overall trend during training. In the early stage of training, the loss generally decreases rapidly, and the global learning rate increases as the loss decreases, which improves the convergence speed of the model. In the middle and late stages of training, the loss decreases more slowly, and the global learning rate gradually decreases. In the final stage of training, the global learning rate gradually stabilizes near the initial global learning rate, which is conducive to the convergence of the model. Owing to this behavior, the proposed model has higher prediction accuracy and a faster convergence speed.

4. Experimental Verification

4.1. Data Preparation

In this work, data from a SCADA system, including wind speed, wind direction and generated power, among others, are used to test the proposed LsAdam–LSTM. The data were sampled at 10 min intervals from the SCADA system of a wind turbine operating in Turkey. We used only the past generated power series to predict the next power output (10 min in the future). One month of observations from April 2018 was used to construct the sample set, with a total of 4320 data points, as shown in Figure 2. When the wind speed is between the cut-in wind speed and the rated wind speed, the output power of the wind turbine increases with wind speed. When the wind speed is between the rated wind speed and the cut-out wind speed, the wind power is approximately the rated power. However, when the wind speed is lower than the cut-in wind speed or higher than the cut-out wind speed, the wind power is zero. Because wind speed fluctuates over a wide range, wind power exhibits strong randomness and intermittency.
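The piecewise relation between wind speed and output power described above can be written as a small function. The speeds used as defaults, the per-unit rated power and the cubic rise between cut-in and rated speed are illustrative assumptions and are not taken from the turbine in this study.

```python
def turbine_power(wind_speed, cut_in=3.0, rated_speed=12.0, cut_out=25.0,
                  rated_power=1.0):
    """Idealized power curve in per-unit of rated power.

    All default values are hypothetical. Speeds are in m/s.
    """
    if wind_speed < cut_in or wind_speed > cut_out:
        return 0.0                      # turbine not producing
    if wind_speed >= rated_speed:
        return rated_power              # output held at rated power
    # Assumed cubic rise between cut-in and rated speed.
    frac = (wind_speed ** 3 - cut_in ** 3) / (rated_speed ** 3 - cut_in ** 3)
    return rated_power * frac
```

Because the curve is steep between the cut-in and rated speeds, modest fluctuations in wind speed in that region translate into large swings in power.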

To evaluate the prediction performance more objectively, the max–min normalization method was used to scale the data to the interval [0, 1], as shown in Equation (5).

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \qquad (5)$$

where $x$ is the original data, $x_{\min}$ is the minimum value in the original data, $x_{\max}$ is the maximum value in the original data, and $x'$ is the scaled data.
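A minimal sketch of max–min normalization to the interval [0, 1] follows. The function name `min_max_scale` is an assumption for illustration, and the guard against a constant series is an addition that the text does not discuss.

```python
def min_max_scale(values):
    """Scale a sequence of numbers linearly to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return [(x - lo) / (hi - lo) for x in values]
```

For example, `min_max_scale([0.0, 500.0, 2000.0])` maps the smallest value to 0, the largest to 1 and the middle value to 0.25.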

For ultra-short-term power prediction, we used the actual power generated over the past 100 min to predict the power output of the next 10 min. To implement the prediction task with deep learning methods, the samples were constructed using a receding (sliding) window approach. The width of the time window was chosen to be 10 intervals, so every sample included 11 data points. As shown in Table 1, the 1st to 10th data points of a sample denote the past actual power, and the last data point is used as the future generated power. For one month of power generation records, a sample set with 4310 samples was obtained. To evaluate the generalization performance of the prediction models, the first 80% of the samples (a total of 3448 samples) were used as the training set, and the remaining 20% (a total of 862 samples) were used as the test set.
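The sample construction and the chronological split can be sketched as follows. The function names and the representation of a sample as a `(window, target)` pair are assumptions for illustration; the window width of 10 and the 80/20 split follow the text.

```python
def make_windows(series, width=10):
    """Build (past window, next value) samples with a sliding window."""
    samples = []
    for start in range(len(series) - width):
        window = series[start:start + width]   # the `width` past values
        target = series[start + width]         # the value one step ahead
        samples.append((window, target))
    return samples


def chronological_split(samples, train_percent=80):
    """Split samples in time order, earliest part first, without shuffling."""
    cut = len(samples) * train_percent // 100
    return samples[:cut], samples[cut:]
```

With a series of 4320 values and a window of 10, `make_windows` yields 4310 samples, and `chronological_split` assigns 3448 to training and 862 to testing, matching the counts reported above.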

4.2. Evaluation Index of Ultra-Short-Term Wind Power Prediction Model

According to the Chinese national standard “Technical requirements for dispatching side forecasting system of wind or photovoltaic power” (GB/T 40607-2021), the mean square error (MSE), mean absolute error (MAE) and correlation coefficient (R) of the predicted power and actual power were used as evaluation indexes. Among them, MSE and MAE can reflect the difference between the predicted power and the actual power, and R can reflect the closeness of the correlation between the predicted power and the actual power. These three evaluation indexes were used to measure prediction performance, and the calculation of each index is shown in Equations (6)–(8).

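$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \qquad (6)$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \qquad (7)$$

$$R = \frac{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)\left(\hat{y}_i - \bar{\hat{y}}\right)}{\sqrt{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2 \sum_{i=1}^{n}\left(\hat{y}_i - \bar{\hat{y}}\right)^2}} \qquad (8)$$

where $n$ is the number of samples, $y_i$ is the $i$-th true power value, $\hat{y}_i$ is the $i$-th predicted power value, $\bar{y}$ is the average value of the true power, and $\bar{\hat{y}}$ is the average value of the predicted power.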
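The three evaluation indexes can be computed with a few lines of plain Python. The sketch below assumes the standard definitions of MSE, MAE and the Pearson correlation coefficient; the function names are illustrative.

```python
import math


def mse(y_true, y_pred):
    """Mean square error between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error between true and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson_r(y_true, y_pred):
    """Pearson correlation coefficient between true and predicted values."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)
```

Lower MSE and MAE indicate smaller errors, while R closer to 1 indicates that the predictions track the shape of the true series more closely.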

4.3. Experiment and Discussion

To verify the ultra-short-term wind power prediction performance, several models were trained and tested on the same wind turbine, including the traditional shallow BP network, the classical machine learning method SVR, Adam–LSTM and the proposed LsAdam–LSTM.

For the deep learning method LSTM, the optimization algorithm used in training strongly affects the final model. To evaluate the improved LsAdam–LSTM algorithm, experiments were carried out using LsAdam and standard Adam, respectively, as the optimization methods for LSTM training, yielding two ultra-short-term wind power prediction models, namely LsAdam–LSTM and Adam–LSTM. To further evaluate the prediction accuracy of the proposed method, comparison experiments were carried out in which the BP neural network and the SVR algorithm were used to build ultra-short-term wind power prediction models.

The parameters of all the algorithms used in the experiments were fine-tuned. The main parameters are as follows. The constructed LSTM network model contained two hidden layers, and each hidden layer contained 64 cells. The activation function was the hyperbolic tangent (tanh) function, and the constants of the LsAdam optimization algorithm were set to the values given in Section 3. When training the LsAdam–LSTM model and the Adam–LSTM model, the maximum number of training epochs was set to 500, the initial global learning rate was set to 0.01, and full batch training was used. MSE was used as the error (loss) in the back-propagation process. To further check the adaptability and stability of the LsAdam–LSTM model, the LsAdam–LSTM model and the Adam–LSTM model were also tested under several other commonly used global learning rates, namely 0.005, 0.02 and 0.03. The structure of the BP neural network model was 10-32-1, the learning rate was 0.1, the number of training epochs was 300, and the activation function was the Sigmoid function. The SVR model used the radial basis function as the kernel function, and the error penalty factor was set to 1.

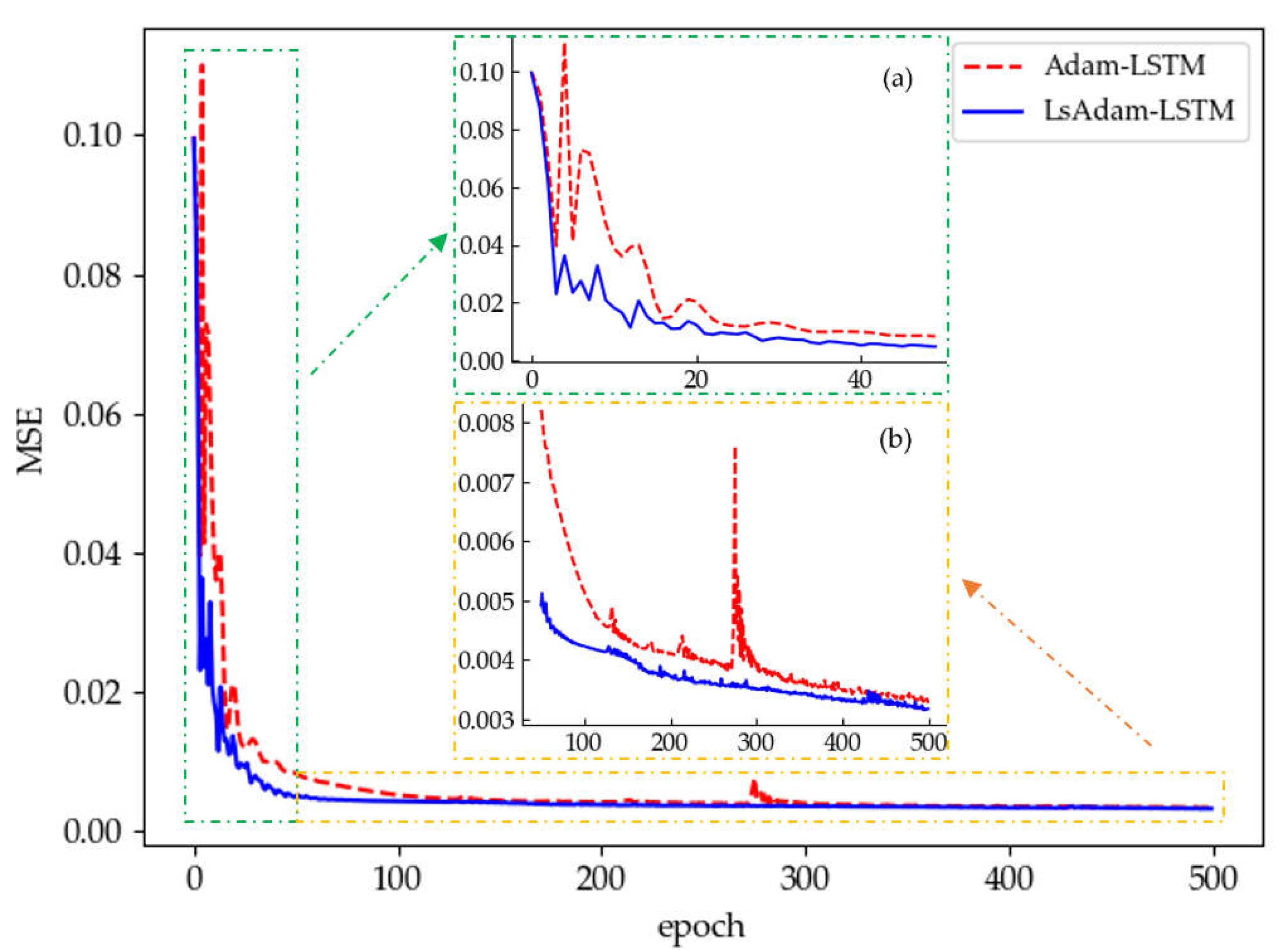

The experiments were implemented with initial global learning rates of 0.005, 0.01, 0.02 and 0.03, and the loss trends were similar in all cases. As shown in Figure 3, where the initial global learning rate was 0.01, the training progress of LsAdam–LSTM was significantly superior to that of Adam–LSTM. Although the loss of both methods decreased overall with the training epochs, the loss of LsAdam–LSTM decreased more sharply in the early stage of training and reached a lower final value. Section (a) in Figure 3 shows the details of the loss change in the early training stage. The training process of LsAdam–LSTM was more stable and the decline in loss was faster. In contrast, the Adam–LSTM had several large fluctuations in the early stage of training, and the loss after the 4th training epoch was even greater than the initial loss. Section (b) in Figure 3 shows the details of the loss change in the middle and late training stages. The loss of LsAdam–LSTM converged to lower values more quickly, and its loss was lower overall than that of the Adam–LSTM throughout training. In addition, the Adam–LSTM underwent relatively drastic fluctuations after about the 270th training epoch, which harmed its convergence.

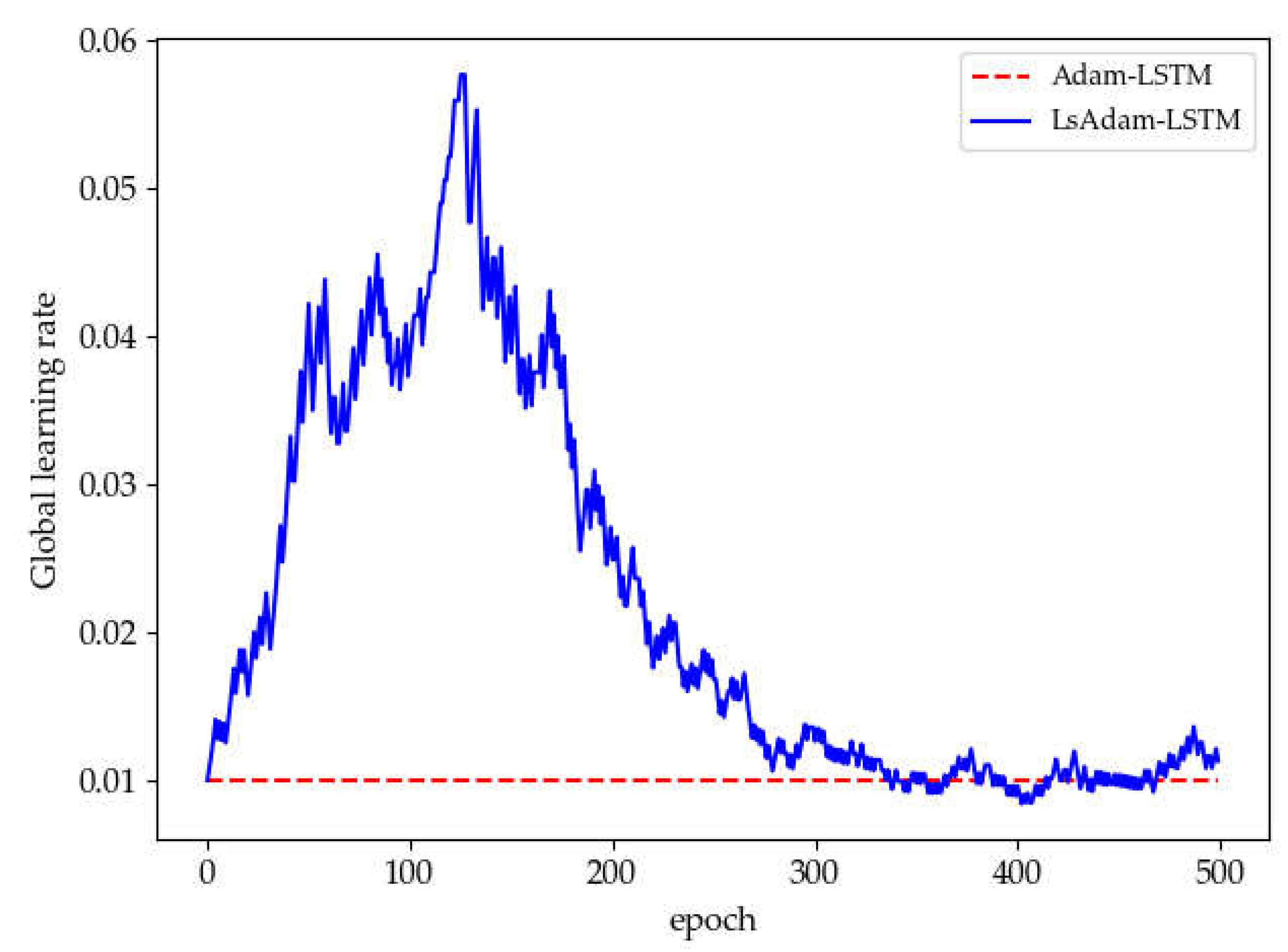

The change in the global learning rate during the training of the two LSTM models is shown in Figure 4. The global learning rate of the LsAdam–LSTM increased first, then decreased and eventually settled around the initial value, while the global learning rate of the Adam–LSTM was a fixed value. Compared with Adam–LSTM, LsAdam–LSTM introduced gain coefficients with loss change information to regulate the global learning rate during the training process. When the loss decreases, the global learning rate is appropriately increased, which, in turn, increases the convergence speed of the model, so the loss of LsAdam–LSTM decreased faster. Conversely, when the loss increases, the global learning rate is appropriately reduced, which prevents larger oscillations during the convergence of the model; thus, the LsAdam–LSTM had a much smoother loss descent process. In this way, the LsAdam–LSTM model formed a closed-loop feedback mechanism between the training loss and the learning rate, which accelerated the shrinkage of the model’s loss.

The performance metrics of the LsAdam–LSTM model and the Adam–LSTM model with the commonly used initial global learning rates are shown in Table 2. For every initial global learning rate, the prediction accuracy of the LsAdam–LSTM model was improved compared to the Adam–LSTM model, and the number of training epochs could be reduced by at least 68. It is shown that the LsAdam–LSTM model could converge faster while maintaining higher prediction accuracy for all the initial global learning rates. Moreover, the variation of the prediction results of the LsAdam–LSTM model on the test set was smoother under different initial global learning rates. This indicates that the proposed model is less sensitive to the initial global learning rate and has strong adaptability and stability. Meanwhile, the results on the test and training sets show that the proposed model has good generalization ability.

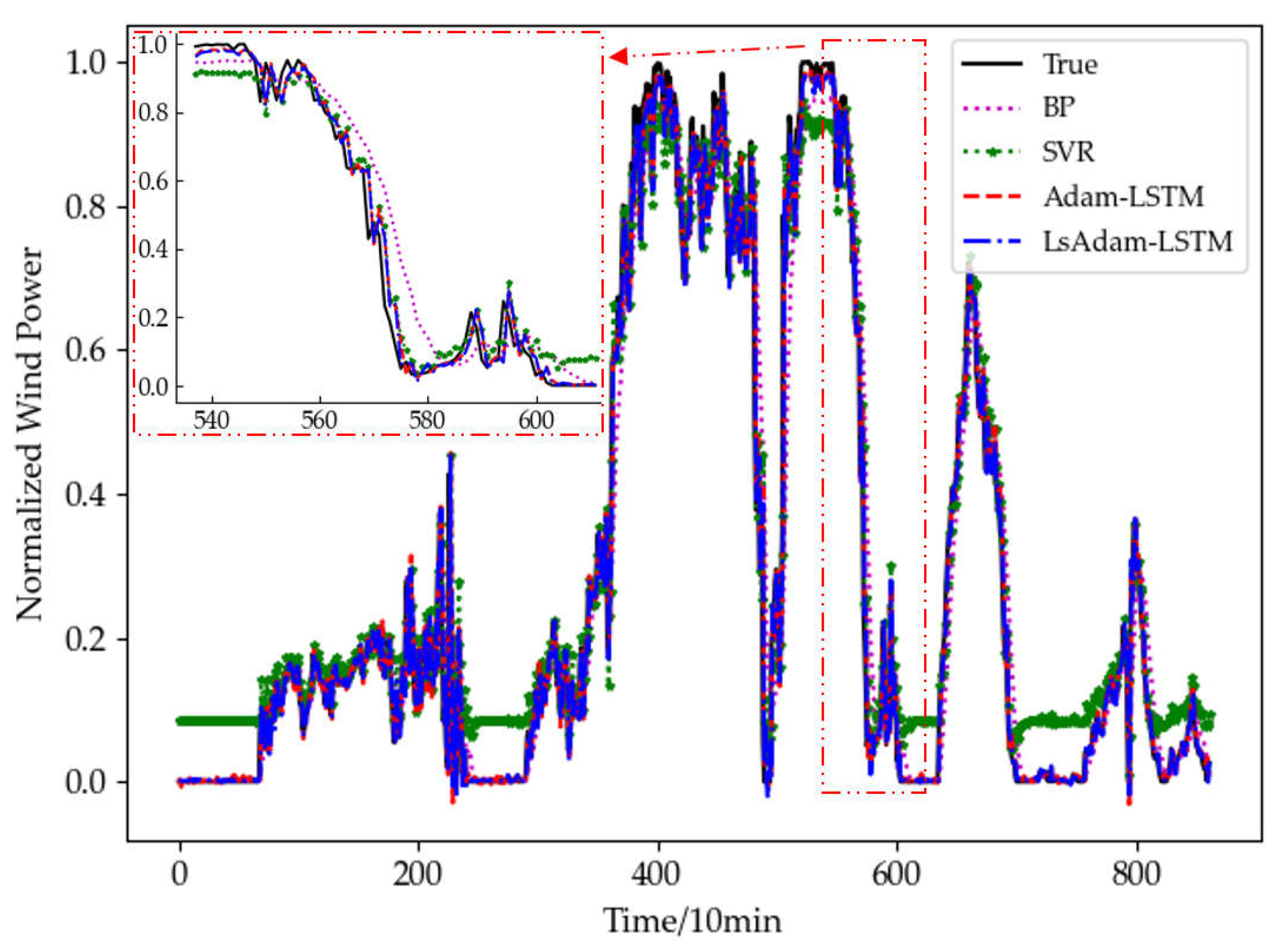

To evaluate the prediction accuracy, we obtained the ultra-short-term wind power prediction models using BP, SVR, Adam–LSTM and LsAdam–LSTM, respectively. The prediction results and local details of the four models on the test set are shown in Figure 5, where the prediction results of both LSTM models are those at an initial global learning rate of 0.01. It can be seen that the prediction results of BP deviate significantly from the true value in almost the entire power range, and the prediction error of SVR is largest near both ends of the power range, while the prediction results of the Adam–LSTM model are improved significantly. The results are improved further with LsAdam–LSTM.

Table 3 shows the prediction performance of all the above models. All of the parameters are the same as those in Figure 5. As for the MSE between the predicted values and true values, the prediction results of LsAdam–LSTM in the training set improved by 62.79%, 48.77% and 4.08% over BP, SVR and Adam–LSTM, respectively. The prediction results in the test set improved by 66.97%, 51.22% and 3.33%, respectively. The prediction accuracy of the LsAdam–LSTM also improved in terms of other evaluation metrics. It can be seen that the prediction results of the LsAdam–LSTM model outperformed the other models on both the training and test sets, which means that the model has higher prediction accuracy and a better generalization ability.
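Percentage improvements of this kind are usually computed as the relative reduction of the error with respect to the baseline. The helper below sketches that calculation under this assumption; the function name is illustrative, and the numbers in the usage note are placeholders rather than values from Table 3.

```python
def relative_improvement(baseline_error, proposed_error):
    """Percentage reduction of an error metric relative to a baseline."""
    if baseline_error == 0:
        raise ValueError("baseline error must be non-zero")
    return 100.0 * (baseline_error - proposed_error) / baseline_error
```

For instance, with a placeholder baseline MSE of 0.02 and a placeholder proposed MSE of 0.01, `relative_improvement(0.02, 0.01)` gives a 50% improvement.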

In summary, the proposed LsAdam–LSTM can predict ultra-short-term wind power more accurately and efficiently. By integrating the loss shrinkage information, the training of LSTM can actually be accelerated with better generalization performance. Compared with Adam, the global learning rate of every epoch can be tuned adaptively, which allows the learning progress to improve continuously. Compared with the traditional machine learning methods BP and SVR, the LsAdam–LSTM model has the nature of deep learning architecture, a stronger learning ability, and results that are significantly improved.