1. Introduction

Noise reduction is one of the most important preprocessing stages for extracting information from images [1]. Devices used for storing and transferring images are not ideal. Components of such devices, such as cameras and their lenses, as well as image vibration, affect image quality. Factors such as transfer channels and environmental parameters decrease the image quality in these devices; low quality increases image processing time and can even lead to wrong decisions in some operational and control applications [2]. Noise reduction is therefore an image restoration technique for enhancing image quality [3].

While images are often corrupted by different noise types whose reduction is necessary for image processing steps, such as object detection [4,5] and feature point matching [6], this paper focuses on a particular type of noise, called impulsive noise, which has a short duration and high energy. Various impulsive noise filtering techniques have been suggested in [7,8,9,10,11].

Many popular filtering approaches, such as median filters [12,13], weighted median filters [14], lower-upper-middle smoothers [15], multistage filters [16], and center-weighted median filters [17], are based on the theory of robust order statistics. A common element of such filters is sample ordering, which pushes corrupted samples, especially impulsive noise, to the borders of the ordered set.
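As a small illustration of the order-statistics idea, the following Python sketch sorts a 3 × 3 window and shows that an impulse ends up at the border of the ordered set, so the median is not affected by it. The window values and the function name are hypothetical and are not taken from the experiments in this paper.

```python
def median_of_window(window):
    """Return the median of a flat list of pixel values (odd length assumed)."""
    ordered = sorted(window)            # order statistics of the window
    return ordered[len(ordered) // 2]   # middle element of the ordered set

# Hypothetical flattened 3x3 gray-level window; 255 is an impulse ("salt").
window = [52, 55, 54, 53, 255, 56, 51, 54, 55]

ordered = sorted(window)
print(ordered[-1])                # 255: the impulse sits at the upper border
print(median_of_window(window))   # 54: the median ignores the impulse
```

A plain mean of the same window would be pulled upward by the impulse, which is one reason median domain filters are preferred for this noise type.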

Two main phases are considered for noise reduction. The first phase uses classification methods to distinguish corrupt pixels from healthy pixels [18]. Using the results obtained in the first phase, various spatial and adaptive filters are applied to the detected corrupt pixels, and afterward, in the second phase, we analyze the results based on image quality. We consider different feature extraction methods, including wavelet transformation and statistical methods, to improve results, and we also use the histogram [19]. For this purpose, we decrease input data dependency in the classifiers by principal component analysis (PCA) and optimize the parameters of the classifiers, especially the time adaptive self-organizing map (TASOM), with a genetic algorithm (GA). The results of the SOM and TASOM networks are compared with each other, and the tests are discussed in calculative and objective terms; we suggest the best filters and classifiers for restoring an image with impulsive noise. This helps deliver a less corrupt image to the next image processing phases, such as pattern recognition, and thus helps researchers decrease the error probability in decision-making systems. Several similar valuable works in this field, such as [20,21,22,23], inspired us to improve our results and led us to our new suggested approaches. We also observed very significant progress in using metaheuristic techniques for classification in [24,25,26,27].
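The detect-then-filter idea described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the detection mask is given by hand here (in the paper it comes from a classifier), the filter is a plain median, and the function name `filter_detected_pixels` is hypothetical.

```python
def filter_detected_pixels(image, mask, radius=1):
    """Replace only the pixels flagged in `mask` by the median of their window."""
    rows, cols = len(image), len(image[0])
    restored = [row[:] for row in image]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue  # healthy pixels are left untouched
            # Collect the window around (r, c), clipped at the image border.
            window = [image[i][j]
                      for i in range(max(0, r - radius), min(rows, r + radius + 1))
                      for j in range(max(0, c - radius), min(cols, c + radius + 1))]
            ordered = sorted(window)
            restored[r][c] = ordered[len(ordered) // 2]
    return restored

# Hypothetical 3x3 image with one impulse in the center and its detection mask.
image = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
mask = [[False, False, False], [False, True, False], [False, False, False]]
print(filter_detected_pixels(image, mask))
```

Filtering only the detected pixels is what keeps the details of healthy regions intact, which a filter applied to every pixel would smooth away.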

Tests were executed on a PC with an Intel Core i3 processor at 2 GHz and 4 GB of memory. The images are 256 × 256 in size and in gray-level form (Table 1). The default filter in the experiments is the median filter, and in the tests we usually used norm and median for extracting features from images.

2. Using Classifiers

In this article, contaminated-pixel detection classifiers are divided into two main domains: (1) SOM domain classifiers and (2) non-SOM domain classifiers. SOM domain classifiers are implemented with different methods and in different versions, and they work in unsupervised form. In contrast, non-SOM domain classifiers, such as the radial basis function neural network, probabilistic neural network, multi-layer perceptron neural network, support vector machine classifier, k-nearest neighbors classifier, and fuzzy c-means classifier, can be used in our work; most of these classifiers work in supervised form. Regarding the filters applied in this research, spatial filters are classified into three groups: (1) linear filters, (2) non-linear filters, and (3) adaptive filters [28].

Linear filters include mean domain filters, and non-linear filters primarily consist of median domain filters. Filters such as the geometric mean, harmonic mean, contra-harmonic mean, and alpha-trimmed mean are categorized as either linear or non-linear filters. Max, min, and pseudo-median filters are considered non-linear filters, and adaptive filters include the MMSE filter and the Wiener filter. We first briefly introduce the TASOM network, then describe its recommended algorithm for carrying out classification, investigate each of the mentioned classifiers, and evaluate their results. Afterward, we inspect the effects of linear, non-linear, and adaptive filters on the TASOM classifiers' results, and then we optimize the TASOM classifiers' parameters using a genetic algorithm. In this research, the aim was to make use of all practical tools in impulsive noise reduction, and in some places, attempts have been made to use a classifier in several forms [29]. We use different densities of corruption to simulate various conditions.
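Corruption at a chosen density can be simulated as in the following Python sketch. It assumes a fixed-value (salt-and-pepper) impulse model, which is only one form of impulsive noise; the function name, the seed, and the flat test image are illustrative assumptions rather than the setup used in the experiments.

```python
import random

def add_salt_and_pepper(image, density, seed=0):
    """Return a corrupted copy of `image` (rows of 0..255 values) and a noise mask.

    `density` is the fraction of pixels replaced by an impulse (0 or 255).
    """
    rng = random.Random(seed)
    rows, cols = len(image), len(image[0])
    positions = [(r, c) for r in range(rows) for c in range(cols)]
    n_corrupt = round(density * rows * cols)
    noisy = [row[:] for row in image]
    mask = [[False] * cols for _ in range(rows)]
    # Pick distinct pixel positions so the requested density is met exactly.
    for r, c in rng.sample(positions, n_corrupt):
        noisy[r][c] = rng.choice((0, 255))
        mask[r][c] = True
    return noisy, mask

# Hypothetical flat 16x16 image corrupted at 20% density.
image = [[128] * 16 for _ in range(16)]
noisy, mask = add_salt_and_pepper(image, density=0.20)
print(sum(map(sum, mask)))  # number of corrupted pixels
```

The returned mask is the ground truth against which a corrupt-pixel detector can be scored.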

Another critical point is that, in noise reduction, we cannot restore the corrupted image entirely to the original one. Even though parameters such as quality and speed help us obtain maximum noise reduction [30], some image details, such as edges and transparency, will be lost in the process.

3. TASOM and Its Classification Algorithm

Learning in SOM networks is based on competition between neurons: the neurons compete with each other in responding to input data.

The winner neuron and its neighboring peers are moved towards the present input. A neighborhood set or neighborhood function specifies each neuron's neighborhood. During network training, this neighborhood gradually shrinks, and the neurons' learning rate also decreases. This decreasing behavior of the training parameters (learning rate and neighborhood function) lets the network converge and reach minimum error. However, the small values of these parameters at the end of training reduce the network's ability to respond to new data. Therefore, the network's parameters need to be modified when it encounters variable and dynamic environments. Each neuron in TASOM has its own learning rate. The learning rates of all neurons are dynamically updated for each input according to a specific criterion: the further a neuron's weights are from the input, the more its learning rate increases, and if the weights are close to the input, the learning rate decreases. For each neuron, a separate neighborhood function and neighborhood set are considered.

Neurons' neighborhood functions are automatically updated. A winner neuron is determined for each new input, and only the winner neuron's neighborhood function is updated; the neighborhood functions of the other neurons remain unchanged. This change also follows a particular criterion. In the TASOM network, a scale vector is used if the input vector distribution is asymmetric. If we want the topological order to be maintained with a small quantization error, we use a non-uniform scale vector. The neurons' topological order then remains established in the input space, and the network also rapidly adapts its learning parameters to intense changes in the input vectors. If consistency is essential and topological order has less significance, we use a uniform scale vector, which embodies scalar values.

4. TASOM Algorithm for Learning the Rates and Size of Adaptive Neighborhood

The TASOM algorithm, with its adaptive learning rates and neighborhood sets, can be summarized in the following seven steps:

Step (1) Initialization: Choose values for the initial weight vectors wj(0) for j = 1, 2, …, N, where N is the number of neurons in the network. The learning-rate parameters ηj(n) should be initialized with values close to 1. The parameters α, β, αs, and βs can take values between 0 and 1. In this paper, the neighboring neurons of each neuron i in a network form the set LNi. For each neuron i in a one-dimensional network, LNi = {i − 1, i + 1}, and the set is defined similarly for each neuron (i1, i2) in a 2-dimensional network.

Step (2) Sampling: Obtain the next input vector X from the input distribution.

Step (3) Similarity Matching: Find the winner neuron i(x) at time n using a minimum Euclidean distance function scaled by the S(n) function.

Step (4) Updating Neighborhood Size: The neighborhood set of the winner neuron i(x) is updated with the following equations:

where β is a fixed parameter between 0 and 1 that specifies the speed with which the neighborhood size can control local neighborhood errors, and Sg controls the compression and topological order of the neuron weights. The neighborhood sets of the other neurons do not change [31]. The g function is an increasing scalar function used for normalizing the weight distance vector. The weights are updated as follows:

Step (5) Updating Learning Rate: The learning rate ηj of each neuron j in the neighborhood set Λi(x)(n + 1) of the winner neuron i(x) is updated according to the following equation:

The learning rates of the other neurons do not change. The f function is a monotonically increasing function used for normalizing the distance between the weight and input vectors.

Step (6) Updating the Scale Vector: Set S(n) = [S1(n), …, SP(n)]^T, adjusted according to the following equations, where E represents the expected value:

This scale vector is non-uniform. The uniform vector is defined as follows:

Step (7) Return to Step (2).
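The core of one training iteration can be sketched in Python as follows. Because the update equations are not reproduced in the text above, this sketch rests on assumptions: each neuron in the winner's neighborhood moves its learning rate toward f(‖x − wj‖ / Sf) at speed α and then moves its weight toward the input by that rate, in the spirit of the TASOM description in [31]. The choice f(z) = z / (1 + z), the fixed neighborhood radius, the order of the rate and weight updates, and all names are illustrative; the adaptive neighborhood update of Step (4) and the scale vector of Step (6) are deliberately left out.

```python
import math

def f(z):
    """Increasing normalizer mapping [0, inf) to [0, 1); an assumed choice."""
    return z / (1.0 + z)

def distance(a, b):
    """Euclidean distance between two equally long vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def tasom_step(weights, rates, x, alpha=0.5, s_f=0.5, radius=1):
    """One simplified update on a 1-D open chain of neurons (in place).

    weights: list of weight vectors; rates: per-neuron learning rates.
    """
    # Step (3): the winner is the neuron with minimum distance to the input.
    winner = min(range(len(weights)), key=lambda j: distance(x, weights[j]))
    lo, hi = max(0, winner - radius), min(len(weights) - 1, winner + radius)
    for j in range(lo, hi + 1):
        d = distance(x, weights[j])
        # Step (5): the rate rises when the weight is far from the input
        # and falls when it is close.
        rates[j] += alpha * (f(d / s_f) - rates[j])
        # Weight update: move toward the input by the neuron's own rate.
        weights[j] = [w + rates[j] * (xi - w) for w, xi in zip(weights[j], x)]
    return winner

# Hypothetical three-neuron chain over two features.
weights = [[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]]
rates = [0.9, 0.9, 0.9]
winner = tasom_step(weights, rates, x=[1.2, 0.9])
print(winner, rates, weights)
```

In this example the neuron farthest from the input ends with the largest learning rate, which matches the qualitative behavior described for TASOM: distant neurons stay plastic while close ones settle.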

To use TASOM as a classifier, we assign a network to each category or class. In this paper, one network was trained using noisy images and another using noiseless images. The networks have a one-dimensional topology in the form of an open chain. In the experimental phase, the feature vector of the new corrupted image is compared with the weight vectors of both networks. The selected category is then the network whose weight vector is closest to the input, as follows:

where H = 1, …, m × n, N = 1, …, m × n, and m × n is the image size; the size of the blocks from which we extract features is 3 × 3, the size of the blocks used for filtering noisy pixels is 3 × 3, and P equals the number of features.

5. TASOM Algorithm for Classification

The data classification algorithm initially works with two neurons [32]. As more data arrive from the environment, neurons are added to the network, and idle neurons are removed from it. The algorithm can be summarized in the following steps:

Step (1) An adaptive network is made in the form of an open linear topology with two neurons.

Step (2) The network is trained with K × C input data vectors, where C is the number of neurons currently in the network and K is a constant; training on this number of input vectors forms one iteration.

Step (3) Any neuron which does not win after one complete iteration is removed from the network.

Step (4) If the distance ‖Wi − Wi+1‖ between two neighboring neurons is greater than the DUW parameter, then a new neuron j is inserted between the two neurons. The parameters of the new neuron are:

Step (5) Every two neighboring neurons i and i + 1 for which ‖Wi − Wi+1‖ is less than the DWD parameter are replaced with a new neuron j, whose weight vector and learning parameters are derived from Equation (12).

Step (6) Network training proceeds to Step (2).

Any neuron having no idle neuron next to it makes up one class. Both DUW and DWD control the accuracy and speed of the classification.
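Steps (4) and (5) can be sketched in Python as one pass over the chain. The parameters of the new neuron are not reproduced in the text, so this sketch assumes that an inserted or merged neuron takes the midpoint of its two parents' weight vectors; the function names are hypothetical, learning parameters are not modeled, and the removal of idle neurons in Step (3) is omitted.

```python
import math

def distance(a, b):
    """Euclidean distance between two equally long vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def midpoint(a, b):
    """Component-wise midpoint of two weight vectors (assumed parameter rule)."""
    return [(ai + bi) / 2.0 for ai, bi in zip(a, b)]

def grow_and_merge(weights, d_uw, d_wd):
    """Apply Steps (4) and (5) once to an open chain of weight vectors."""
    # Step (4): insert a neuron between neighbors that are too far apart.
    grown = [weights[0]]
    for nxt in weights[1:]:
        if distance(grown[-1], nxt) > d_uw:
            grown.append(midpoint(grown[-1], nxt))
        grown.append(nxt)
    # Step (5): replace neighbors that are too close with a single neuron.
    merged = [grown[0]]
    for nxt in grown[1:]:
        if distance(merged[-1], nxt) < d_wd:
            merged[-1] = midpoint(merged[-1], nxt)
        else:
            merged.append(nxt)
    return merged

# Hypothetical 1-D chain; thresholds follow the DUW and DWD values quoted later.
chain = [[0.0], [0.0002], [0.2]]
print(grow_and_merge(chain, d_uw=0.05, d_wd=0.0005))
```

A larger DUW yields fewer insertions and a coarser map, while a larger DWD merges more aggressively, which is how the two thresholds trade accuracy against speed.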

Now we consider, for each pixel in the TASOM classifier, a feature vector of the form |i(x,y)| = [1,p], where p is the number of features. For the classification of pixels, we assign a network to each class: one network is associated with noisy images and the other with a noiseless image. Each network is trained with its own training sample, and both networks have an open-chain topology. In the experimental phase, the new corrupted image is compared with the weight vectors, and the selected class is the network with the closest weight vector to the given input.

Here, m × n is the image size; the block from which we extract features, WD, is 3 × 3, and the filtering block, WF, is also 3 × 3.

The matrices |WN,P| and |WH,P| are the weight matrices of the corrupted and healthy images, respectively, and |IT,P| is the input matrix obtained from the contaminated image. The winner network is selected as follows:

The network with the least distance from the input vector of the test image is chosen as the winner.
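The selection rule can be sketched in Python as a nearest-weight-vector comparison between the two trained networks. The weight values and the two-feature layout below are hypothetical, and plain (unscaled) Euclidean distance is assumed.

```python
import math

def distance(a, b):
    """Euclidean distance between two equally long vectors."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def classify_pixel(feature, noisy_weights, healthy_weights):
    """Label a pixel by the network that owns the closest weight vector."""
    d_noisy = min(distance(feature, w) for w in noisy_weights)
    d_healthy = min(distance(feature, w) for w in healthy_weights)
    return "noisy" if d_noisy < d_healthy else "healthy"

# Hypothetical trained weights over two features per pixel.
noisy_weights = [[120.0, 90.0], [130.0, 110.0]]
healthy_weights = [[125.0, 10.0], [60.0, 8.0]]

print(classify_pixel([128.0, 95.0], noisy_weights, healthy_weights))  # noisy
print(classify_pixel([70.0, 9.0], noisy_weights, healthy_weights))    # healthy
```

Only pixels labeled as noisy would then be passed to the filtering stage.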

6. Using Wavelet Transform in TASOM Classifier

This classifier, known as "TW", uses the wavelet transformation as a feature. We try to optimize the features extracted from image pixels. Deeper analysis levels in the wavelet transformation give less high-frequency information, so it is reasonable to use the first levels of the coefficient matrices, which carry more high-frequency information. To extract a feature for each pixel, we took a 9 × 9 block centered on that pixel and analyzed it with wavelets at three levels; at each level, we have one matrix of approximation coefficients and three matrices of horizontal, vertical, and diagonal detail coefficients. For example, we take Level 1 of the horizontal matrix and carry out feature extraction on it. We carried out this task on the input data and then trained the network. In Figure 1, we used the TASOM network once for a healthy image and once for a corrupted image. We trained two TASOM networks with an image of the size explained before and used the previously mentioned method for detecting corrupt pixels. The size of each block for detecting pixels is 9 × 9, and the size of the level-one coefficient matrix is 16 × 16.
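To make the four coefficient matrices concrete, the following Python sketch computes one analysis level with the Haar wavelet. This is a deliberate simplification: the classifier described here uses a Symlet mother wavelet and three levels, whereas Haar is swapped in because it can be written in a few lines without a wavelet library. The even block size, the sign convention of the detail coefficients, and the function name are assumptions.

```python
def haar_dwt2(block):
    """One-level 2-D Haar transform of an even-sized square block.

    Returns (approximation, horizontal, vertical, diagonal) coefficient matrices.
    """
    half = len(block) // 2
    approx = [[0.0] * half for _ in range(half)]
    horiz = [[0.0] * half for _ in range(half)]
    vert = [[0.0] * half for _ in range(half)]
    diag = [[0.0] * half for _ in range(half)]
    for i in range(half):
        for j in range(half):
            # The four pixels of one 2x2 cell.
            a, b = block[2 * i][2 * j], block[2 * i][2 * j + 1]
            c, d = block[2 * i + 1][2 * j], block[2 * i + 1][2 * j + 1]
            approx[i][j] = (a + b + c + d) / 2.0   # low-pass in both directions
            horiz[i][j] = (a + b - c - d) / 2.0    # difference between rows
            vert[i][j] = (a - b + c - d) / 2.0     # difference between columns
            diag[i][j] = (a - b - c + d) / 2.0     # diagonal difference
    return approx, horiz, vert, diag

# Hypothetical flat 4x4 block with a single impulse.
block = [[7] * 4 for _ in range(4)]
block[1][1] = 255
approx, horiz, vert, diag = haar_dwt2(block)
print(horiz)
```

On a flat block all detail coefficients are zero, so an impulse shows up as an isolated large detail value, which is why first-level detail matrices are informative features for impulse detection.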

In another form, called TWP, we applied the PCA transformation to the wavelet coefficient matrix for each pixel. We carried out the wavelet transformation and extracted the features from the image blocks into a matrix with P columns, corresponding to the number of features, and m × n rows, corresponding to the number of image pixels. We observed better results with this transformation. Here, we obtained one score vector representing the input vector in PCA space [33].

Now It Is Necessary to Describe Calculative Image Quality Factors

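The first criterion, the mean squared error (MSE), measures the error between the main image and the restored image and is defined as follows:

$$\mathrm{MSE} = \frac{1}{m \times n} \sum_{i=1}^{m} \sum_{j=1}^{n} \bigl(N(i,j) - O(i,j)\bigr)^{2}$$

where m and n are the image dimensions, and N and O are the noisy and healthy images.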

This measuring criterion is defined in the following form:

A high value of this criterion indicates a high-quality restored image. The acceptable level for a restored image is between 25 and 50 dB, and if this criterion exceeds 40 dB, the two images are indistinguishable.
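The two criteria can be computed as in the following Python sketch. It assumes that the criterion measured in dB is the peak signal-to-noise ratio (PSNR) with a peak value of 255 for 8-bit gray-level images, which the text does not state explicitly; the function names and the tiny test images are illustrative.

```python
import math

def mse(original, restored):
    """Mean squared error between two equally sized gray-level images."""
    rows, cols = len(original), len(original[0])
    total = sum((original[i][j] - restored[i][j]) ** 2
                for i in range(rows) for j in range(cols))
    return total / (rows * cols)

def psnr(original, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(original, restored)
    if error == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / error)

# Hypothetical 2x2 images that differ in two pixels by 10 gray levels.
original = [[100, 100], [100, 100]]
restored = [[100, 110], [100, 90]]
print(mse(original, restored))             # 50.0
print(round(psnr(original, restored), 2))
```

Because PSNR is a logarithm of the inverse MSE, a lower MSE always gives a higher PSNR, so the two criteria rank restored images identically.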

This criterion is defined in the following form:

7. Classifiers

7.1. Multi-Layer Perceptron

The multi-layer perceptron (MLP) is a robust neural network in the field of function estimation and data classification [34]. In this paper, we use a two-layer network in which each layer has six neurons; the activation function of the first layer is sigmoid, and that of the second layer is linear. The number of training epochs is 100, and the training algorithm is Levenberg-Marquardt. The learning rate is 0.8.

7.2. Radial Basis Function

In this network, as with the MLP network, after the necessary features are extracted from a noisy image, the resulting vector is given to the RBF network for training. Then, another image corrupted with the same noise ratio is given to the network, the necessary output is obtained, and according to that output, we apply filters to the corrupted pixels [35]. The value of the spread parameter, which is the width of the RBF function, equals 6.9. The maximum number of neurons in the hidden layer is 66,000, and 2000 neurons are added to the hidden layer at each step.

7.3. Probabilistic Neural Network

This network is a kind of RBF network used for classification. In this network, the spread parameter value is 0.05. A smaller spread parameter value makes this network closer to the KNN classifier [36].

7.4. K-Nearest Neighbor

In this classifier, the parameter K is three; a larger value of K lengthens the program run-time while improving image quality. For calculating distance, we used the Euclidean method.
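A K-nearest-neighbor vote with K = 3 and Euclidean distance can be sketched in Python as follows. The training vectors, labels, and function name are hypothetical and only illustrate the voting rule, not the feature set used in the experiments.

```python
import math
from collections import Counter

def knn_predict(train_features, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training vectors."""
    by_distance = sorted(
        zip(train_features, train_labels),
        key=lambda pair: math.dist(pair[0], query),  # Euclidean distance
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical two-feature training set.
train_features = [[10, 2], [12, 3], [11, 1], [200, 90], [190, 85], [210, 95]]
train_labels = ["healthy", "healthy", "healthy", "noisy", "noisy", "noisy"]

print(knn_predict(train_features, train_labels, [195, 88]))  # noisy
```

Every query is compared with all training vectors, so the run-time grows with the size of the training set, which is consistent with this classifier having a long overall run-time.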

7.5. Support Vector Machines

This classifier uses the supervised learning method. As before, training and test input data sets of extracted features were created, and after SVM training, we gave new test data to the SVM for evaluation. The SVM's function is quadratic, although other functions, such as polynomial and MLP functions, can also be used.

7.6. Fuzzy C-Means

In this classification technique, each datum belongs to a class with a degree that is specified with a membership function. Similar to previous classifiers, test and training data were used.

7.7. Self-Organized Map

One of the most critical applications of this competitive network is data clustering and classification [37]. In this paper, we use this robust network to separate noisy and non-noisy pixels with two methods:

7.7.1. First Method: Normal SOM

Normal SOM (N-SOM) is a network with only two neurons and one layer representing one class. We gave the training data to the SOM and trained it. The order phase learning rate equals 0.9, and the tuning phase learning rate is 0.02. The number of order phase steps is 1000, and the tuning phase neighborhood distance is 1. The number of epochs is 100, and we used the Euclidean method for calculating the distance between the weight and input matrices; after this stage, we gave the test data to the network and determined their classes.

7.7.2. Second Method: Chain SOM

Using a classifier called chain SOM (Ch-SOM), we first trained one SOM network with an original image and then trained another SOM network with the same image in corrupted form; we then corrupted another image as a test. Both SOM networks have the learning parameters of the previous method, except that the number of neurons is 40, arranged in a [5,11] topology, and the neighborhood shape is hexagonal.

We extracted the weight matrices of the trained networks; the feature vector of each pixel was then compared with these two weight matrices, and the pixel was assigned to the class whose weight vector was closest to its feature vector. Here, each network is representative of a class.

7.8. TASOM, TW, and TWP

In TASOM, according to Table 2, the values of the maximum neuron parameter, K, DUW, DWD, the number of primary neurons, Sf, and Sg are 100, 100, 0.05, 0.0005, 2, 0.5, and 0.5, respectively. In the TW classifier, the mother wavelet is Symlet, the number of analysis levels is 3, and the network parameters are those of TASOM.

8. Spatial and Adaptive Filter Using TASOM Classifier

In all the tests carried out using classifiers, the median filter is our default filter, and we usually use norm and median for extracting features from images. In this section, we tested spatial and adaptive filters on the TASOM classifier and sought the best filter for it in calculative and objective terms. Filters are divided into three main classes: spatial, frequency, and adaptive domain filters. In this part, the size of the detection window is 3 × 3, the size of the filtering window is 5 × 5, the value of T in the Alpha Trimmed filter equals ((m × n) − 1)/3, where m and n are image dimensions, and the order of the Contra Harmonic filter is between −3 and +3.
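Two of the spatial filters named here can be sketched in Python on a single flattened 5 × 5 window. The sketch assumes the common textbook forms: the alpha-trimmed mean drops an equal number of the lowest and highest values before averaging, and the contra-harmonic mean of order Q is the ratio of the sums of the (Q + 1)-th and Q-th powers. The trimming count of 8 simply evaluates (25 − 1)/3 for a 25-pixel window, and the window values and function names are hypothetical.

```python
def alpha_trimmed_mean(window, d):
    """Average the window after dropping the d/2 lowest and d/2 highest values."""
    ordered = sorted(window)
    half = d // 2
    kept = ordered[half:len(ordered) - half]
    return sum(kept) / len(kept)

def contra_harmonic_mean(window, order):
    """Contra-harmonic mean of order Q; assumes strictly positive pixel values."""
    numerator = sum(v ** (order + 1) for v in window)
    denominator = sum(v ** order for v in window)
    return numerator / denominator

# Hypothetical flattened 5x5 windows: one with salt and a dark impulse,
# one with salt only.
mixed = [100] * 22 + [255, 255, 1]
salted = [100] * 23 + [255, 255]

print(alpha_trimmed_mean(mixed, d=8))             # 100.0
print(contra_harmonic_mean(salted, order=0))      # plain arithmetic mean
print(contra_harmonic_mean(salted, order=-3))     # close to 100, salt suppressed
```

A negative order suppresses bright impulses and a positive order suppresses dark ones, so a single contra-harmonic filter cannot remove both kinds at once, whereas trimming handles both ends of the ordered set.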

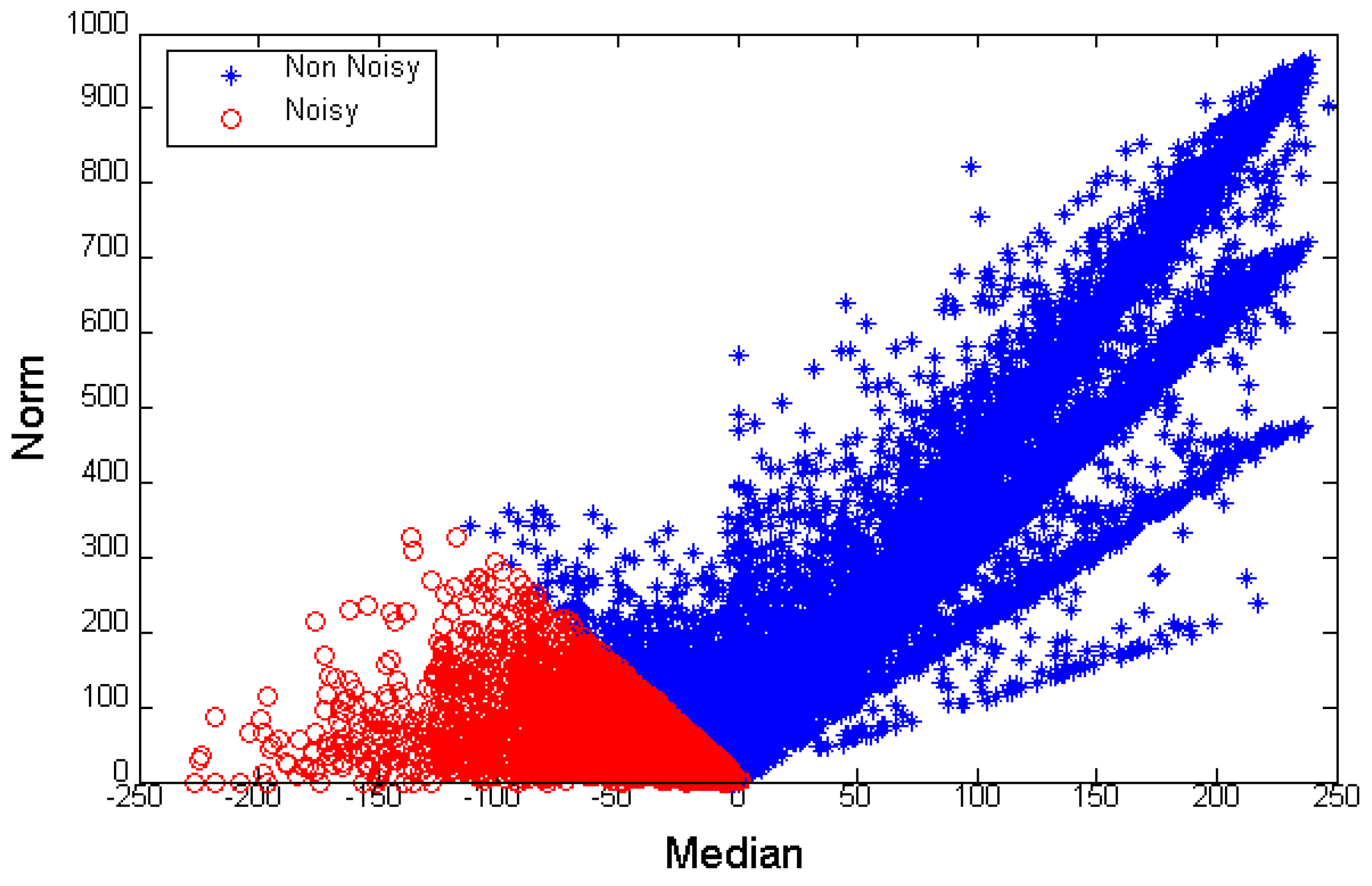

9. Feature Extraction

In this section, an attempt has been made to extract features directly or indirectly from the detection window histogram for each pixel [

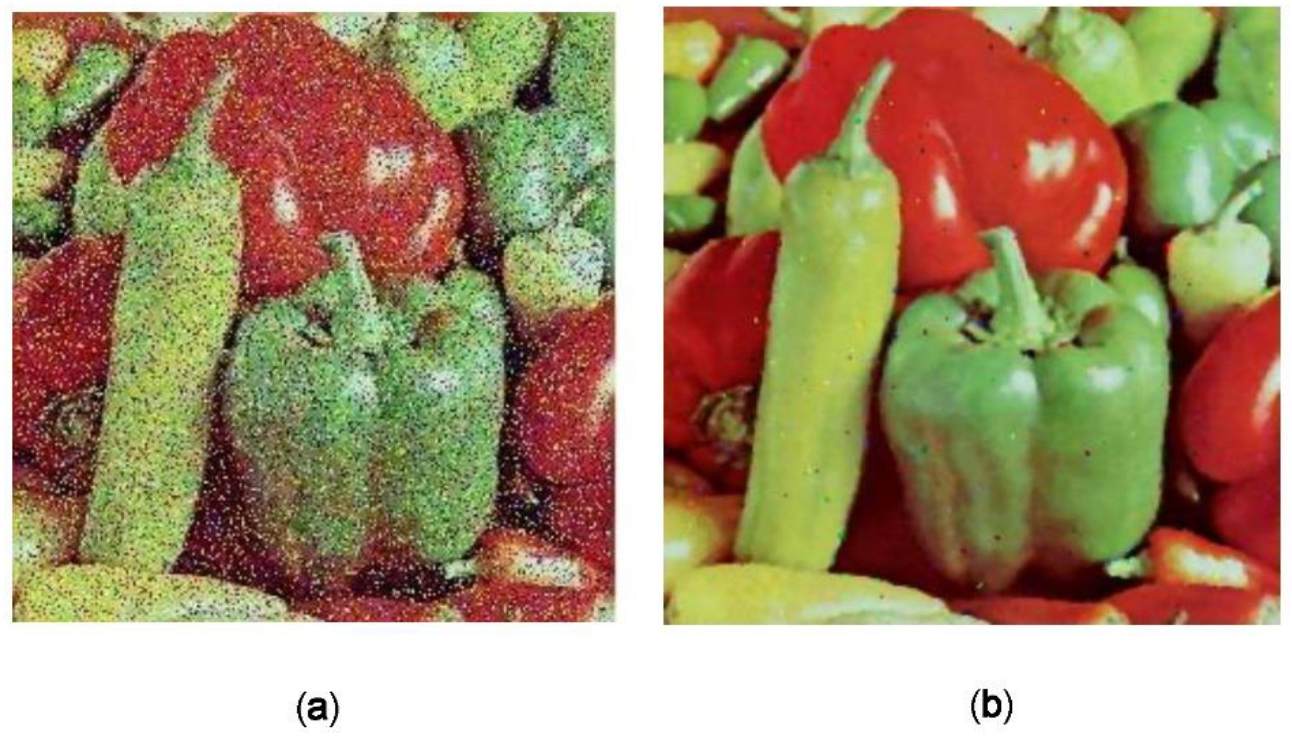

38]. We tried to use statistical features in the detection window and combine different features to obtain an optimum response. We can point out variance, norm, median, entropy, energy, and mean as desirable features; the corrupt image in these tests is Lena for network training, and the image for its test is Peppers. The corruption degree of both mentioned images equals 20%. Working on different combinations of features helps us reach an optimum result. At first, we investigated the dual combination of features, and then we increased their numbers. Then, we separated tests into two parts, comprising direct statistical features and histogram statistical features. Direct statistical features include median and mean, standard deviation, norm, entropy, and energy. Features extracted from the histogram consist of mean, standard deviation, energy, and entropy features [

39].
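The two feature families can be sketched in Python for a single detection window. The sketch assumes the usual definitions: energy is the sum of squared histogram probabilities and entropy is the base-2 Shannon entropy of the normalized histogram. The population standard deviation, the window values, and the function names are illustrative choices.

```python
import math
import statistics
from collections import Counter

def direct_features(window):
    """Median and standard deviation computed directly from pixel values."""
    return statistics.median(window), statistics.pstdev(window)

def histogram_features(window):
    """Energy and entropy of the normalized gray-level histogram."""
    counts = Counter(window)
    probs = [c / len(window) for c in counts.values()]
    energy = sum(p * p for p in probs)
    entropy = -sum(p * math.log2(p) for p in probs)
    return energy, entropy

# Hypothetical flattened 3x3 detection window with one impulse.
window = [50, 50, 50, 50, 255, 50, 50, 50, 50]
print(direct_features(window))
print(histogram_features(window))
```

A perfectly uniform window has energy 1 and entropy 0; an impulse lowers the energy and raises the entropy, which gives the classifier a signal that does not depend on the absolute gray level.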

While investigating the obtained results, we noticed that the best direct (natural) features are median and standard deviation, and in Table 3, among the features obtained from the histogram, the best ones are energy and entropy. We then attempted to combine these four features. As the results of the above test show, combining these four features gives the optimum results.

10. Analysis of Results

According to the results gained from previous tests, adaptive filters such as

Wiener and

MMSE are not appropriate for reducing impulsive noises. In

SOM domain (unsupervised) classifiers, the best result is by

TWP,

TW,

TASOM,

N-SOM, and

Ch-SOM, as the noise density increases. In

Table 4 and

Table 5 and

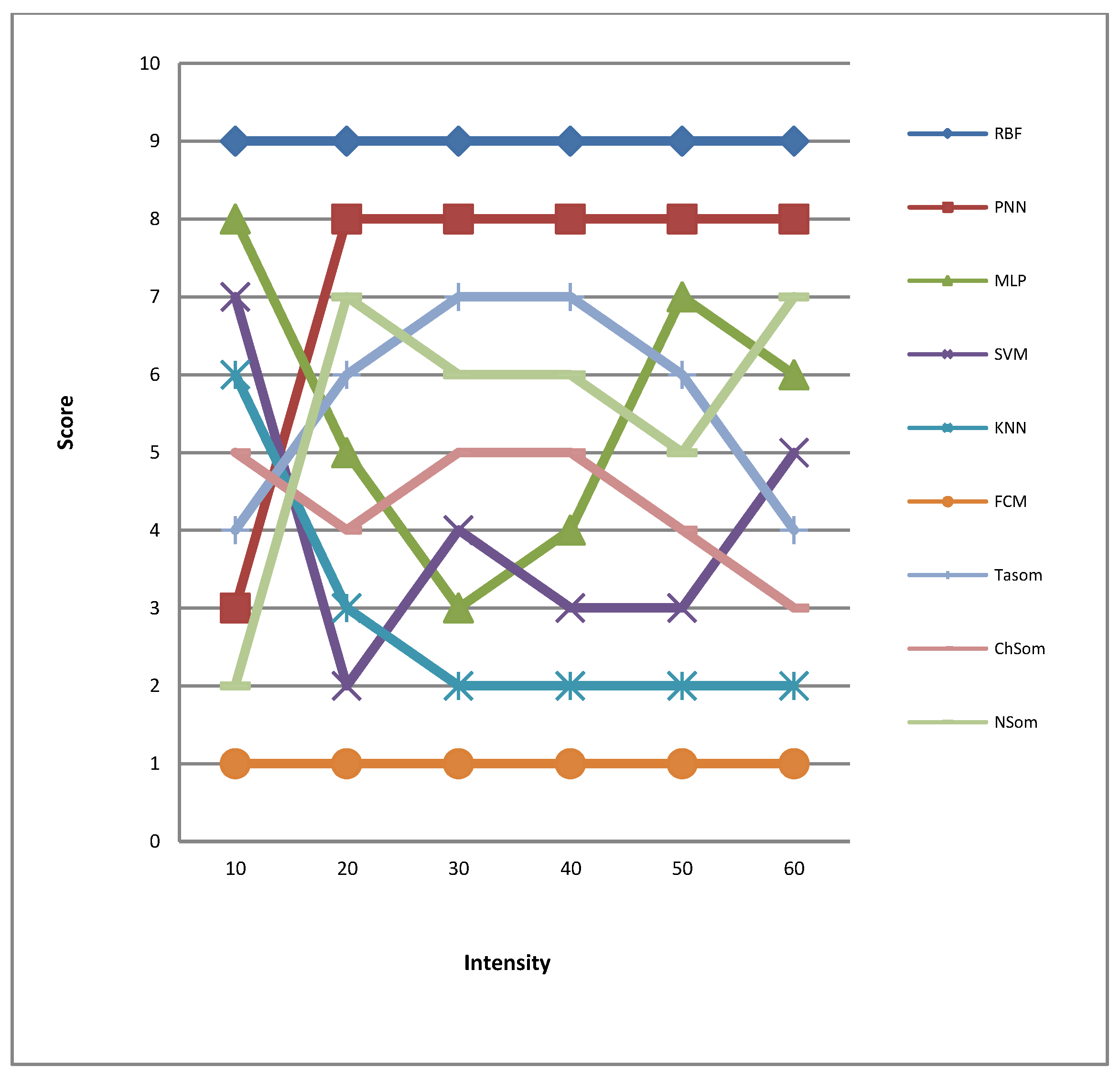

Figure 2, the

TASOM classifier shows better results, with medium densities from 20 to 50%, than those of the others, mainly supervised classifiers; the

Ch-SOM classifier has a usual trend because it is usually located in the middle of ranking and often works better than the

N-SOM classifier. In low densities of noise, the

SVM and

KNN classifiers work better than the others, but they are generally weak. Conversely,

PNN demonstrates acceptable results at high densities and 10% density, and its trend is not satisfactory.

TWP classification from 40% density onwards is stabilized.

TW, similar to

TWP, has a similar routine. The best results are for

RBF,

PNN,

MLP,

SVM,

KNN, and

Fuzzy C-Mean in the supervisory statistical classifier section.

RBF and

PNN classifiers have a uniform and very robust routine.

KNN and

Fuzzy C-Mean classifiers show a uniform and weak routine for all densities, too.

MLP and

SVM classifiers have a uniform and average routine. In

Figure 3 RBF,

PNN,

TWP,

TW,

TASOM,

MLP,

N-SOM,

Ch-SOM,

SVM,

KNN, and

FCM, respectively, amongst all classifiers, have shown better results. According to the above ranking,

RBF and

PNN mostly have shorter general run-times and better results. By the way,

TWP has been assigned to an average rank but has a good result.

Table 6 presents that the most extended general run-time is related to

KNN, and the shortest is related to

SVM. In conclusion, in terms of image quality,

TASOM classifier versions and

TWP and

TASOM classifiers work well. In general terms, according to the results of executed tests,

TASOM classifier versions and specifically the

TASOM classifier are amongst the best existing classifiers.

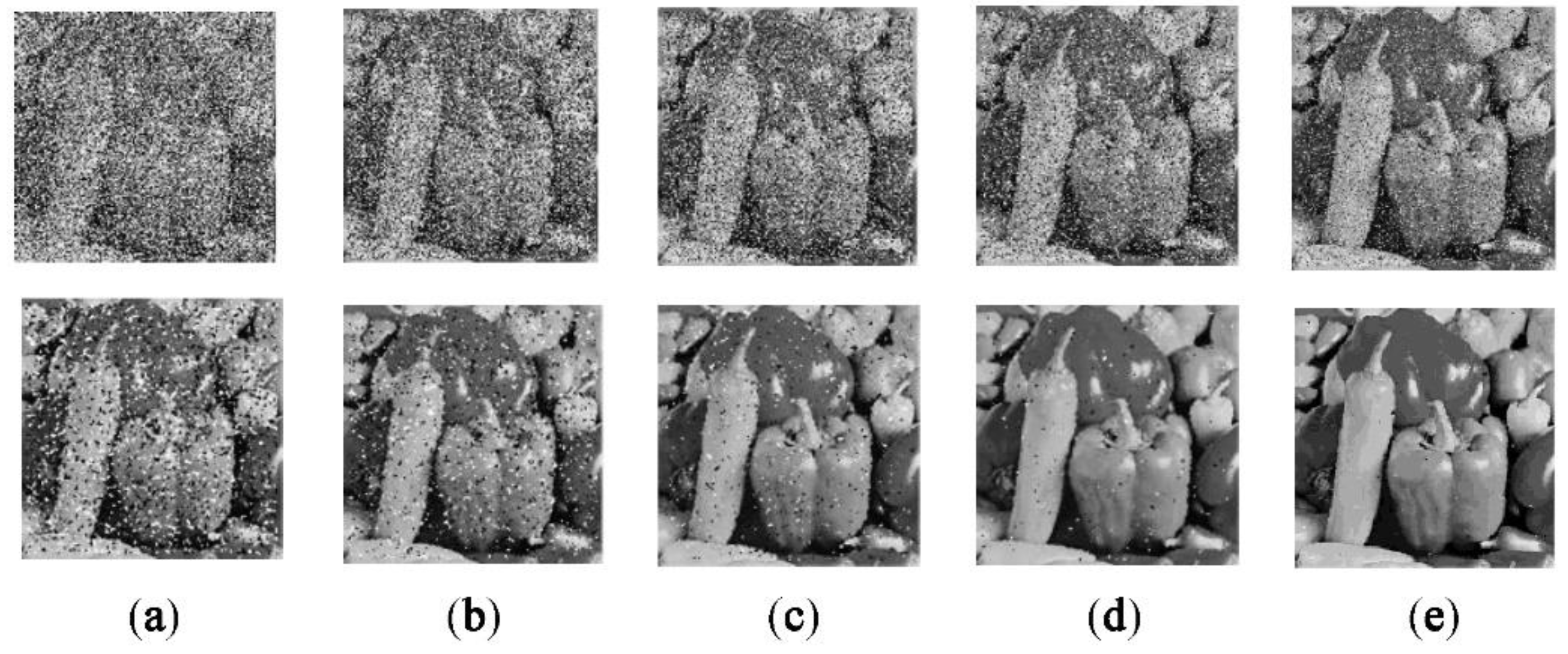

Regarding the spatial filters’ effect on impulsive noise in

Table 7, 12 evaluated filters, including Alpha Trimmed, Median, Mean, Pseudo median, Gaussian, Contra harmonic, Midpoint, Geometric, Harmonic, Min, and Max, have better results than the others. In

Table 8 and

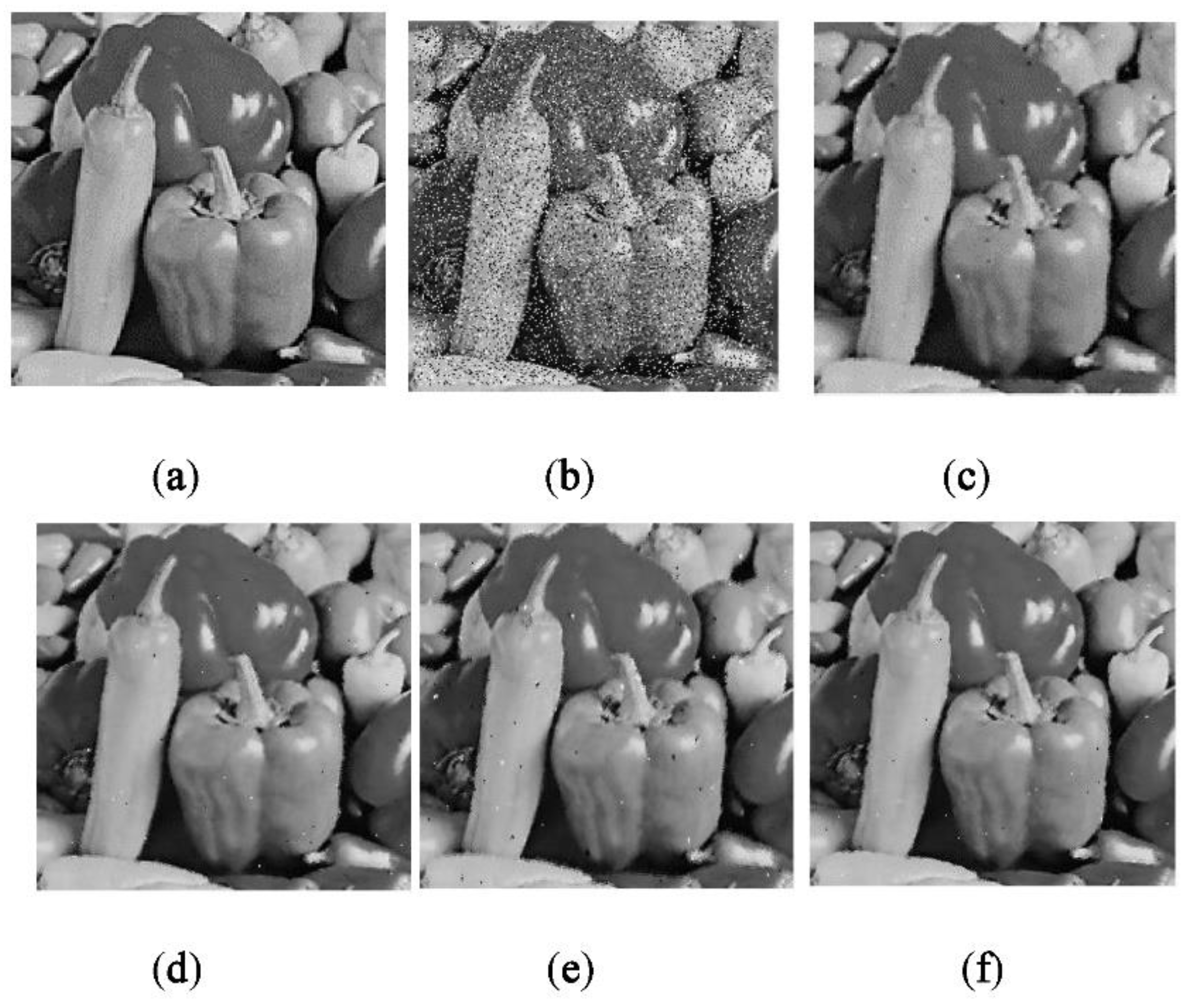

Figure 4, among the four best filters, Alpha trimmed and Median filters maintain image details better than the rest in the four best filters. The Mean filter with an average density gives better results than those with other densities, making the image blurred and a little dim. Max and Min filters are appropriate only for salt or pepper noises, even though they behave very poorly under other conditions. Generally, the results show that median domain filters, such as Alpha Trimmed, Median, and Pseudo Median, perform better in performance and preserve image details than mean domain filters. According to the tests, the best statistical features extracted from images as the input of the classifier without using an image histogram are median and norm, and in the case of using the histogram, they are energy and entropy. In

Table 9, a combination of these best features gives us a satisfactory result. In

Figure 5. the images confirm robustness of our novel proposed classifier in detection of corrupt pixels besides using an appropriate filter.

11. Future Works

There are other types of image noise, such as Gaussian noise and periodic noise in the frequency domain. Finding better tools to suppress such disruptive noise would be an interesting field of research. In future work, we will seek an appropriate way of eliminating these noise types from images.

12. Conclusions

Finally, the results indicate that the TASOM classifier performs better than the other SOM domain classifiers. With regard to learning method, this classifier also works more successfully than the supervised classifiers, except for the RBF and PNN networks. These findings will guide the choice of an appropriate classifier and improve the filtering of impulse noise in contaminated images, restoring them while keeping their details.

Author Contributions

Conceptualization, S.H.H. and A.B. (Ali Bayandour); methodology, S.H.H. and A.B. (Ali Bayandour); software, S.H.H. and A.K.; validation, S.H.H., A.K. and A.B. (Ali Barkhordary); formal analysis, S.H.H. and A.B. (Ali Bayandour); investigation, S.H.H. and A.B. (Ali Bayandour); resources, A.F. and E.G.M.; data curation, S.H.H. and A.F.; writing—original draft preparation, A.B. (Ali Bayandour) and E.G.M.; writing—review and editing, S.H.H., A.K. and A.B. (Ali Barkhordary); visualization, S.H.H. and A.B. (Ali Bayandour); supervision, A.F.; project administration, A.F.; funding acquisition, A.F. All authors have read and agreed to the published version of the manuscript.”

Funding

This paper is supported by the Faculty of Engineering, and Research Management Center of Universiti Malaysia Sabah.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy.

Conflicts of Interest

The authors declare no conflict of interest.

Nomenclature

| Symbol | Description |
| --- | --- |
| TASOM | Time adaptive self-organizing map neural network |
| SOM | Self-organizing map neural network |
| TW | TASOM classifier using Wavelet Transform as feature |
| TWP | TASOM classifier using Wavelet and PCA as feature |
| N-SOM | Normal SOM classifier |
| Ch-SOM | Chain-SOM classifier |
| PCA | Principal Component Analysis |
| GA | Genetic Algorithm |
| PC | Personal Computer |
| MMSE | Minimum Mean Square Error |
| Wj(0) | Weight vector |
| N | Number of Neurons in the network |
| α, β | Controlling local neighborhood errors |
| Sf, Sg | Controlling compression and topological neuron orders |
| DUW, DWD | Controlling classification's accuracy and speed |
| SVM | Support Vector Machine classifier |
| RBF | Radial Basis Function classifier |
| MLP | Multi-Layer Perceptron classifier |
| KNN | K-nearest Neighbors classifier |
| PNN | Probabilistic Neural Network classifier |
| FCM | Fuzzy C-means classifier |

References

1. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, Second Edition; Prentice Hall: Hoboken, NJ, USA, 2001.
2. Vaseghi, S.V. Advanced Digital Signal Processing and Noise Reduction; John Wiley & Sons: Hoboken, NJ, USA, 2008.
3. Jähne, B. Digital Image Processing. 2002. Available online: https://library.uoh.edu.iq/admin/ebooks (accessed on 1 November 2020).
4. Hu, P.; Wang, W.; Zhang, C.; Lu, K. Detecting salient objects via color and texture compactness hypotheses. IEEE Trans. Image Process. 2016, 25, 4653–4664.
5. Naqvi, S.S.; Browne, W.N.; Hollitt, C. Feature quality-based dynamic feature selection for improving salient object detection. IEEE Trans. Image Process. 2016, 25, 4298–4313.
6. Meng, F.; Li, X.; Pei, J. A feature point matching based on spatial order constraints bilateral-neighbor vote. IEEE Trans. Image Process. 2015, 24, 4160–4171.
7. Chou, H.-H.; Hsu, L.-Y. A noise-ranking switching filter for images with general fixed-value impulse noises. Signal Process. 2015, 106, 198–208.
8. Roy, A.; Laskar, R.H. Multiclass SVM based adaptive filter for removal of high density impulse noise from color images. Appl. Soft Comput. 2016, 46, 816–826.
9. Wu, J.; Tang, C. Random-valued impulse noise removal using fuzzy weighted non-local means. Signal Image Video Process. 2014, 8, 349–355.
10. Xu, G.; Tan, J. A universal impulse noise filter with an impulse detector and nonlocal means. Circuits Syst. Signal Process. 2014, 33, 421–435.
11. Ahmed, F.; Das, S. Removal of high-density salt-and-pepper noise in images with an iterative adaptive fuzzy filter using alpha-trimmed mean. IEEE Trans. Fuzzy Syst. 2013, 22, 1352–1358.
12. Ataman, E.; Aatre, V.; Wong, K. A fast method for real-time median filtering. IEEE Trans. Acoust. Speech Signal Process. 1980, 28, 415–421.
13. Pitas, I.; Venetsanopoulos, A.N. Order statistics in digital image processing. Proc. IEEE 1992, 80, 1893–1921.
14. Brownrigg, D.R. The weighted median filter. Commun. ACM 1984, 27, 807–818.
15. Hardie, R.C.; Boncelet, C. LUM filters: A class of rank-order-based filters for smoothing and sharpening. IEEE Trans. Signal Process. 1993, 41, 1061–1076.
16. Arce, G.R. Multistage order statistic filters for image sequence processing. IEEE Trans. Signal Process. 1991, 39, 1146–1163.
17. Ko, S.-J.; Lee, Y.H. Center weighted median filters and their applications to image enhancement. IEEE Trans. Circuits Syst. 1991, 38, 984–993.
18. Dong, Y.; Chan, R.H.; Xu, S. A detection statistic for random-valued impulse noise. IEEE Trans. Image Process. 2007, 16, 1112–1120.
19. Acharya, T.; Ray, A.K. Image Processing: Principles and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2005.
20. Elaziz, M.A.; Ahmadein, M.; Ataya, S.; Alsaleh, N.; Forestiero, A.; Elsheikh, A.H. A Quantum-Based Chameleon Swarm for Feature Selection. Mathematics 2022, 10, 3606.
21. Elsheikh, A.H.; Abd Elaziz, M.; Vendan, A. Modeling ultrasonic welding of polymers using an optimized artificial intelligence model using a gradient-based optimizer. Weld. World 2022, 66, 27–44.
22. Abd Elaziz, M.; Elsheikh, A.H.; Oliva, D.; Abualigah, L.; Lu, S.; Ewees, A.A. Advanced Metaheuristic Techniques for Mechanical Design Problems: Review. Arch. Comput. Methods Eng. 2022, 29, 695–716.
23. Elsheikh, A.H.; Panchal, H.; Ahmadein, M.; Mosleh, A.O.; Sadasivuni, K.K.; Alsaleh, N.A. Productivity forecasting of solar distiller integrated with evacuated tubes and external condenser using artificial intelligence model and moth-flame optimizer. Case Stud. Therm. Eng. 2021, 28, 101671.
24. Abd Elaziz, M.; Dahou, A.; Alsaleh, N.A.; Elsheikh, A.H.; Saba, A.I.; Ahmadein, M. Boosting COVID-19 Image Classification Using MobileNetV3 and Aquila Optimizer Algorithm. Entropy 2021, 23, 1383.
25. Moustafa, E.B.; Hammad, A.H.; Elsheikh, A.H. A new optimized artificial neural network model to predict thermal efficiency and water yield of tubular solar still. Case Stud. Therm. Eng. 2022, 30, 101750.
26. Elsheikh, A.H.; Abd Elaziz, M.; Das, S.R.; Muthuramalingam, T.; Lu, S. A new optimized predictive model based on political optimizer for eco-friendly MQL-turning of AISI 4340 alloy with nano-lubricants. J. Manuf. Process. 2021, 67, 562–578.
27. Elsheikh, A.H.; Shehabeldeen, T.A.; Zhou, J.; Showaib, E.; Abd Elaziz, M. Prediction of laser cutting parameters for polymethyl methacrylate sheets using random vector functional link network integrated with equilibrium optimizer. J. Intell. Manuf. 2021, 32, 1377–1388.
28. Image Restoration Models. In Digital Image Processing; John Wiley & Sons: Hoboken, NJ, USA, 2001; pp. 297–317.
29. Ng, P.-E.; Ma, K.-K. A switching median filter with boundary discriminative noise detection for extremely corrupted images. IEEE Trans. Image Process. 2006, 15, 1506–1516.
30. Zhang, X.; Xiong, Y. Impulse noise removal using directional difference based noise detector and adaptive weighted mean filter. IEEE Signal Process. Lett. 2009, 16, 295–298.
31. Shah-Hosseini, H.; Safabakhsh, R. TASOM: A new time adaptive self-organizing map. IEEE Trans. Syst. Man Cybern. Part B (Cybernetics) 2003, 33, 271–282.
32. Shah-Hosseini, H.; Safabakhsh, R. Automatic multilevel thresholding for image segmentation by the growing time adaptive self-organizing map. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 1388–1393.
33. Shlens, J. A tutorial on principal component analysis. arXiv 2014, arXiv:1404.1100.
34. Arbib, M.A. Brain Theory and Neural Networks; MIT Press: Cambridge, MA, USA, 1995.
35. Graupe, D. Principles of Artificial Neural Networks; World Scientific: Singapore, 2007.
36. Fausett, L. Fundamentals of Neural Networks: Architectures, Algorithms, and Applications; Prentice-Hall, Inc.: Hoboken, NJ, USA, 1994.
37. Simon, H. Neural Networks: A Comprehensive Foundation; Prentice Hall: Hoboken, NJ, USA, 1999.
38. Nixon, M.; Aguado, A.S. Feature Extraction & Image Processing, Second Edition; Academic Press, Inc.: Cambridge, MA, USA, 2008.
39. Szepesvari, C. Image Processing: Low-level Feature Extraction; University of Alberta: Edmonton, AB, Canada, 2007.

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).