An Enhancement Method Based on Long Short-Term Memory Neural Network for Short-Term Natural Gas Consumption Forecasting

Abstract

1. Introduction

2. Methodology

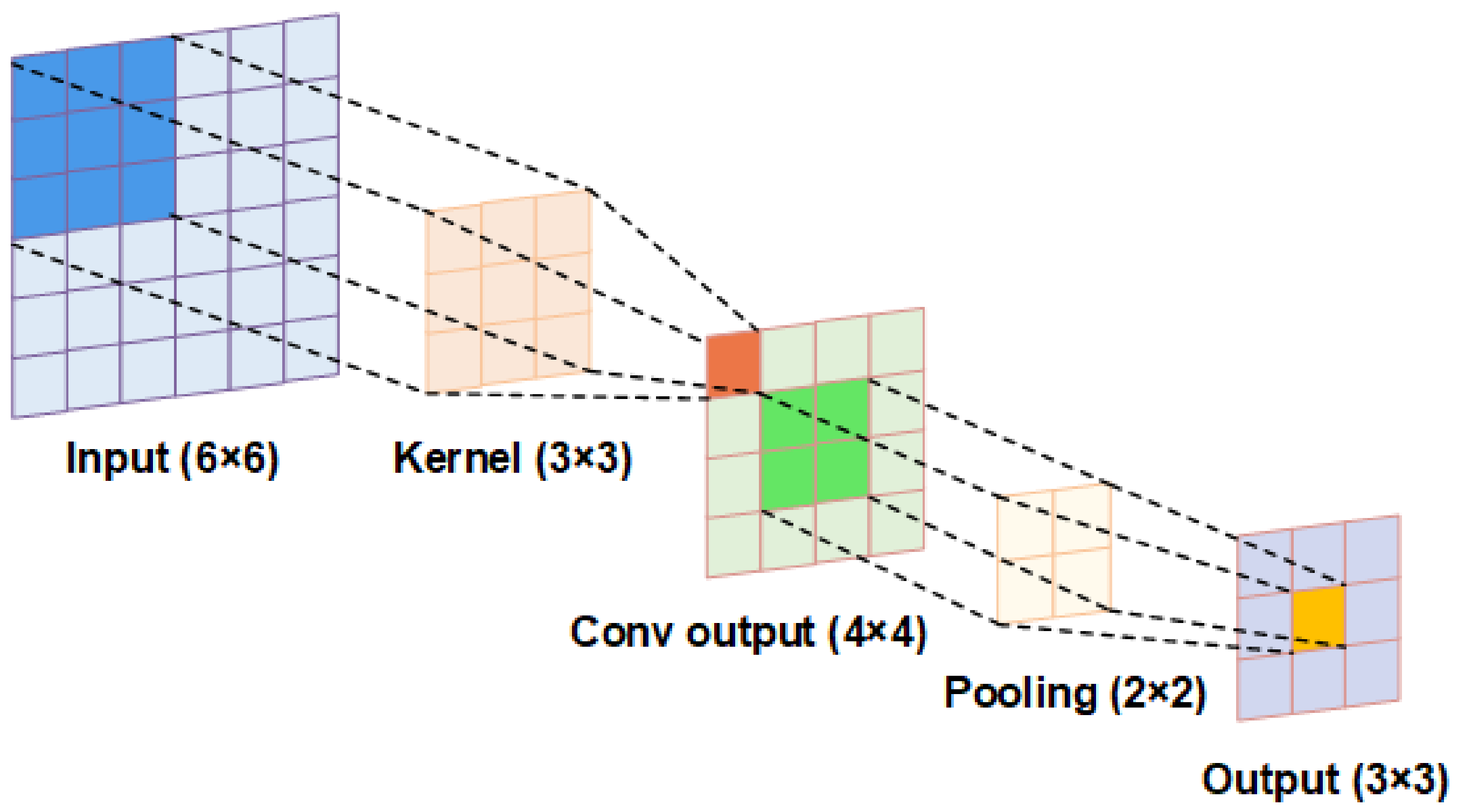

2.1. Convolutional Neural Network

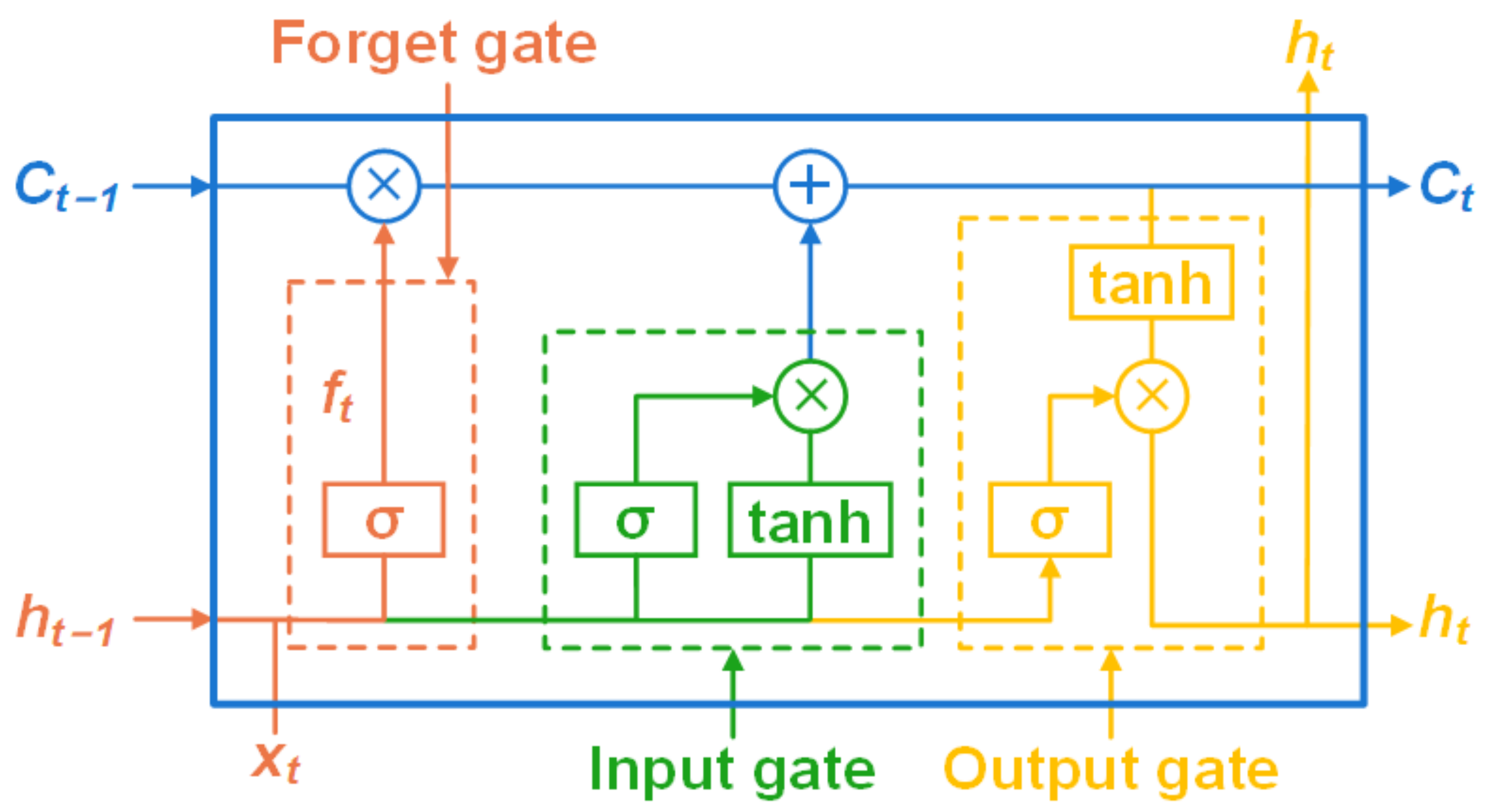

2.2. Long Short-Term Memory

- Forget gate: the forget gate determines how much of the historical information held in the previous cell state is retained.

- Input gate: the input gate makes the memory module selective by using a nonlinear function to determine which portion of the input information will be stored.

- Output gate: the output gate updates the hidden state, combining selective learning with the preservation of historical data. The new cell state and hidden state are then passed to the subsequent time step.

Here, $C_t$, $\tilde{C}_t$, and $C_{t-1}$ represent cell states; $x_t$ and $h_t$ are the vector states at time $t$; and $W$ and $b$ are the weights and biases, respectively.
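For reference, the three gates described above correspond to the standard LSTM formulation, which can be written as follows. This is the textbook form rather than a transcription of this paper's own numbered equations; the gate symbols $f_t$, $i_t$, $o_t$, the sigmoid $\sigma$, the element-wise product $\odot$, and the per-gate subscripts on $W$ and $b$ are assumed notation.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{(forget gate)}\\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{(input gate)}\\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) && \text{(candidate cell state)}\\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t && \text{(cell state update)}\\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{(output gate)}\\
h_t &= o_t \odot \tanh\left(C_t\right) && \text{(hidden state)}
\end{aligned}
```

In this form, $f_t$ scales the previous cell state $C_{t-1}$, $i_t$ scales the candidate $\tilde{C}_t$, and $o_t$ decides how much of the updated cell state is exposed as $h_t$.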

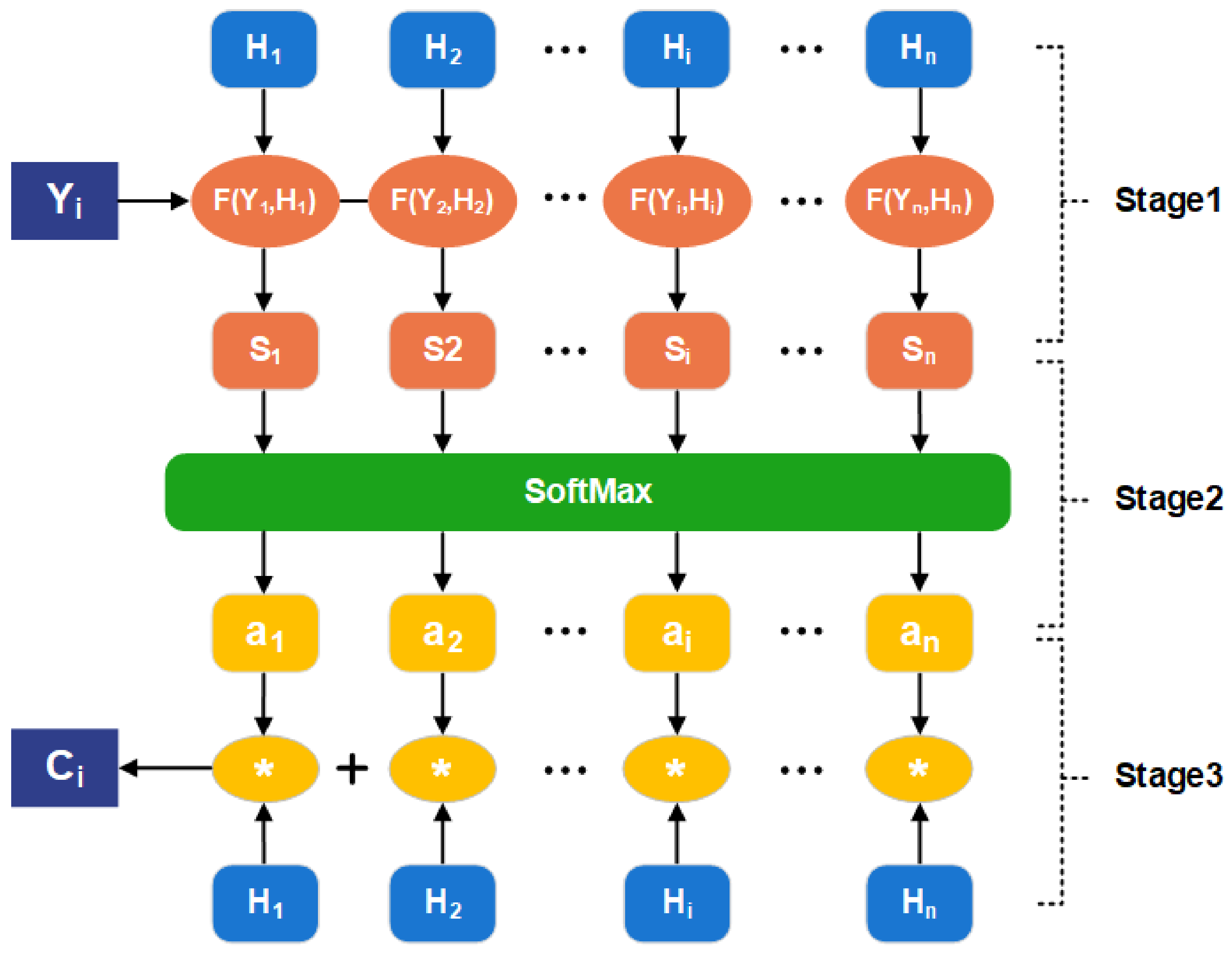

2.3. Attention Mechanism

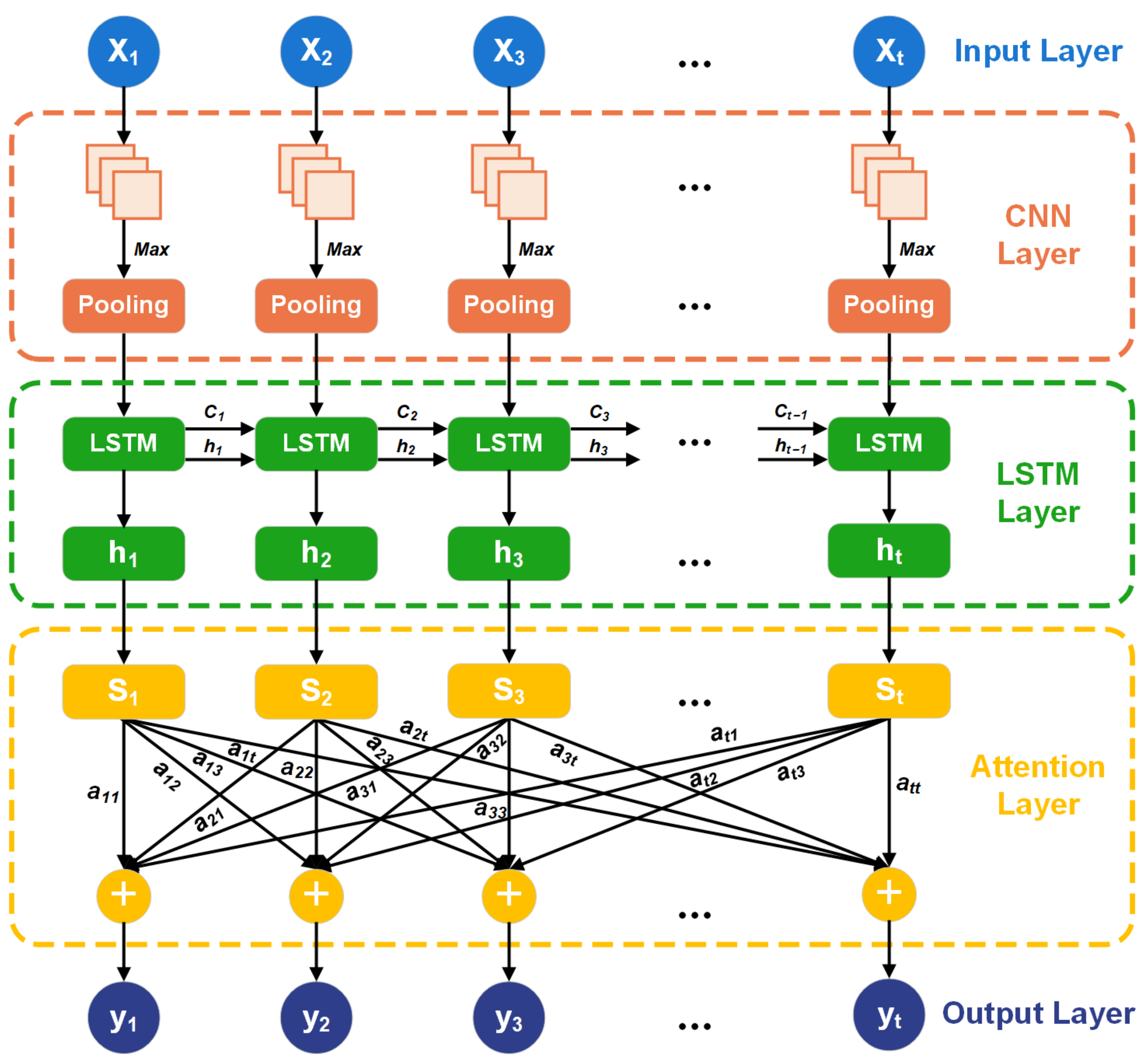

2.4. Strategy of the Proposed Model

3. Results and Discussion

3.1. Evaluation Methods

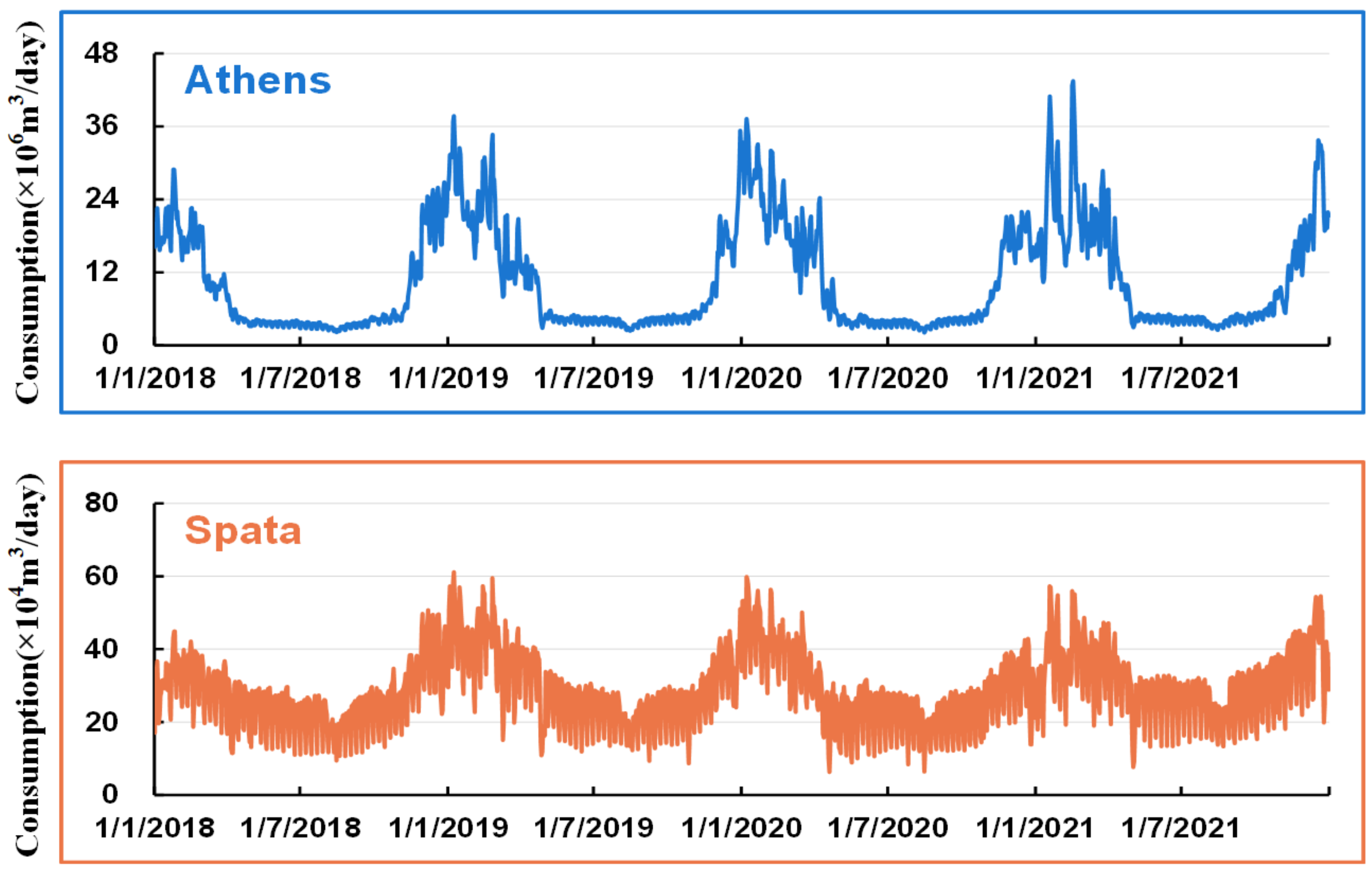
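The three indicators reported in the results tables (MARNE, MAPE, and R²) can be sketched in code as follows. MAPE and R² use their usual definitions. The MARNE form shown here, the mean absolute error normalized by the maximum observed value, is an assumption, because the defining equations are not reproduced in this text. The function names are illustrative.

```python
def marne(y_true, y_pred):
    """Mean absolute range normalized error, in percent.

    ASSUMED definition: mean absolute error divided by the maximum
    observed value. The paper's own equation is not available here.
    """
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
    return 100.0 * mae / max(y_true)


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (requires non-zero y_true)."""
    ratios = [abs((t - p) / t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(ratios) / len(ratios)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy daily consumption values, purely illustrative (not data from the paper).
observed = [100.0, 200.0, 400.0]
forecast = [110.0, 190.0, 380.0]
print(marne(observed, forecast), mape(observed, forecast), r2(observed, forecast))
```

Because MAPE divides by each observation, days with very low consumption dominate it, whereas a range-normalized error such as the assumed MARNE is less sensitive to them. This may be one reason the two indicators differ so much for the same model in the results tables.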

3.2. Data Description

3.3. Experimental Setup

3.4. Factor Selection

3.5. Performance Discussion

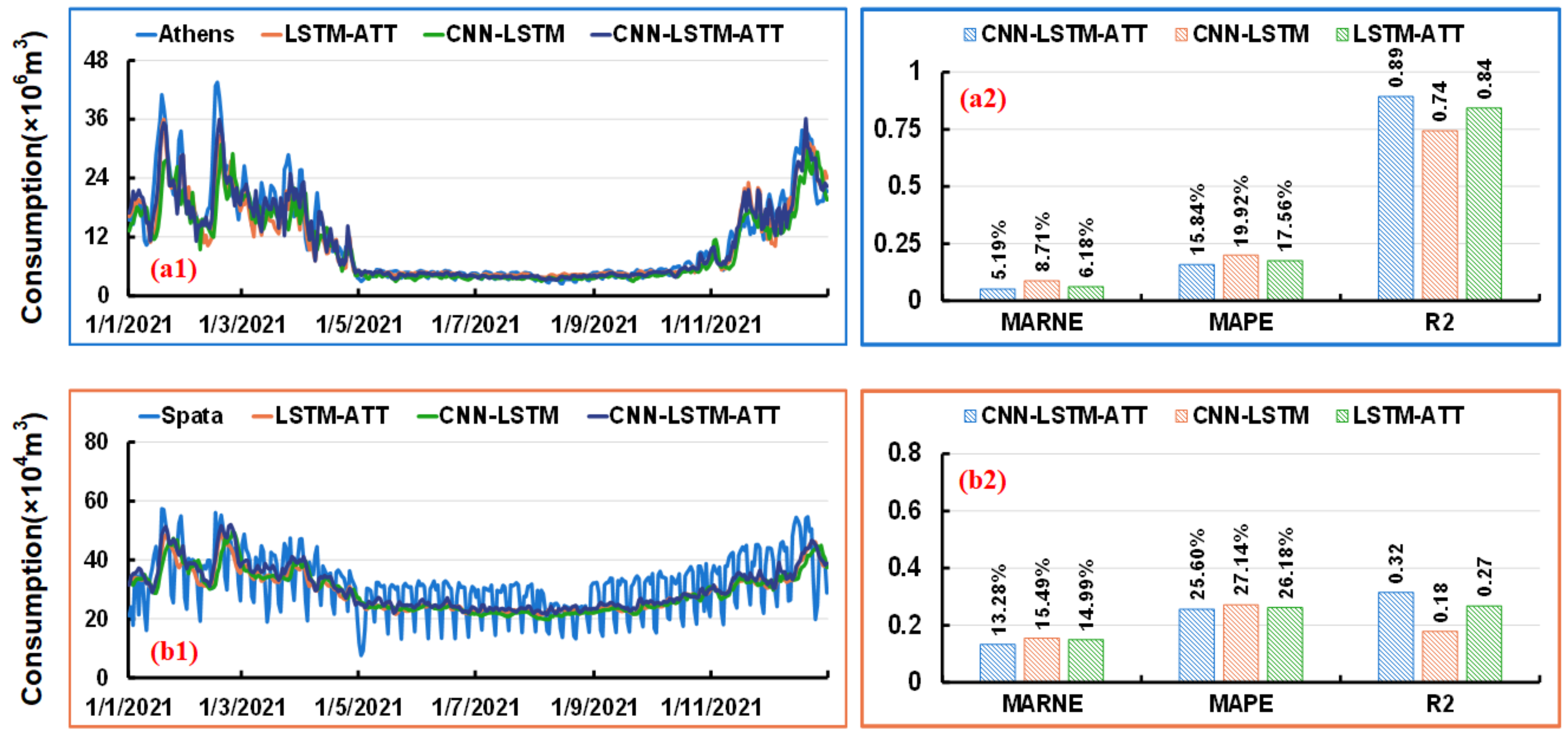

3.5.1. Comparison between Two Enhanced Methods for LSTM

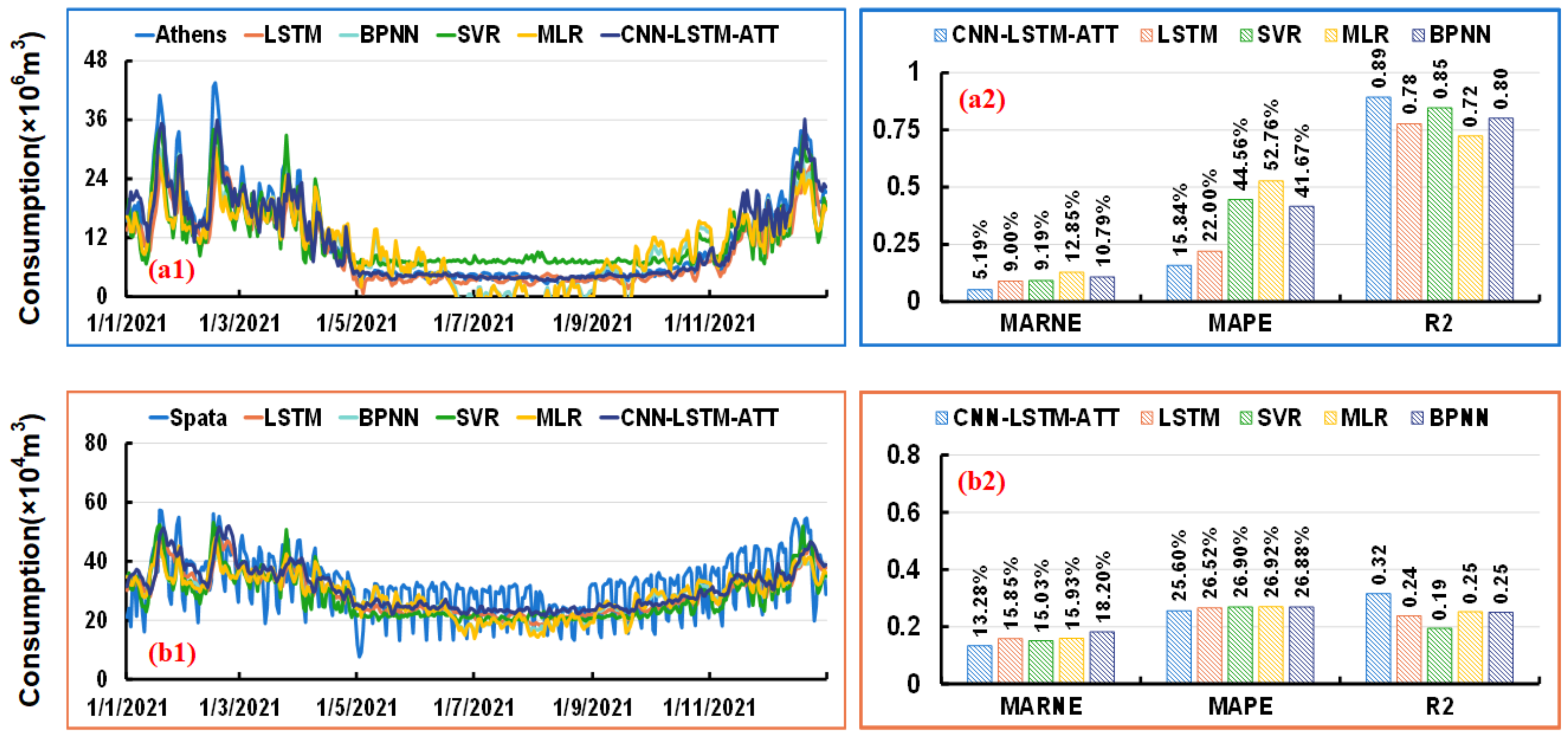

3.5.2. Comparison with Other Forecasting Models

4. Conclusions

- (I) Compared with the two partial enhancement methods for LSTM (CNN-LSTM and LSTM-ATT), the combined approach of CNN, LSTM, and attention mechanism takes advantage of each algorithm and achieves better performance. Compared with CNN-LSTM, the evaluation indicators of the proposed model are improved by more than 20% in Athens and by more than 5% in Spata. Compared with LSTM-ATT, the MARNE of the proposed model is improved by more than 11% on both datasets.

- (II) The proposed enhancement method for LSTM significantly improves forecasting accuracy. Compared with single LSTM, SVR, and MLR, CNN-LSTM-ATT closely fits the peaks and troughs of the consumption curves and exhibits the best performance and robustness. The improvements of the proposed model in MARNE, MAPE, and R² exceed 42%, 27%, and 5% in Athens, respectively. Even on the high-complexity Spata dataset, R² is improved by more than 25%.
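The percentage improvements quoted in these conclusions can be checked against the comparison tables with a short calculation. The sketch below assumes that "improvement" means the relative change with respect to the baseline model (a reduction for the error indicators MARNE and MAPE, an increase for R²). The function and variable names are illustrative.

```python
# Test-set results copied from the comparison tables of this paper:
# (MARNE %, MAPE %, R2) for each method and city.
RESULTS = {
    "CNN-LSTM-ATT": {"Athens": (5.19, 15.84, 0.89), "Spata": (13.28, 25.60, 0.32)},
    "CNN-LSTM": {"Athens": (8.71, 19.92, 0.74), "Spata": (15.49, 27.14, 0.18)},
    "LSTM-ATT": {"Athens": (6.18, 17.56, 0.84), "Spata": (14.99, 26.18, 0.27)},
    "LSTM": {"Athens": (9.00, 22.00, 0.78), "Spata": (15.85, 26.52, 0.24)},
    "SVR": {"Athens": (9.19, 44.56, 0.85), "Spata": (15.03, 26.90, 0.19)},
    "MLR": {"Athens": (12.85, 52.76, 0.72), "Spata": (15.93, 26.92, 0.25)},
}


def relative_improvement(baseline, proposed, higher_is_better=False):
    """Percentage improvement of `proposed` over `baseline` (assumed definition)."""
    change = proposed - baseline if higher_is_better else baseline - proposed
    return 100.0 * change / baseline


def improvements(baseline_name, city, proposed_name="CNN-LSTM-ATT"):
    """Improvement of the proposed model over one baseline, per indicator."""
    base = RESULTS[baseline_name][city]
    prop = RESULTS[proposed_name][city]
    return {
        "MARNE": relative_improvement(base[0], prop[0]),
        "MAPE": relative_improvement(base[1], prop[1]),
        "R2": relative_improvement(base[2], prop[2], higher_is_better=True),
    }


for name in ("CNN-LSTM", "LSTM-ATT", "LSTM", "SVR", "MLR"):
    for city in ("Athens", "Spata"):
        rounded = {k: round(v, 1) for k, v in improvements(name, city).items()}
        print(f"{name:9s} {city:6s} {rounded}")
```

Under this definition the tabulated values reproduce the stated thresholds for CNN-LSTM, LSTM-ATT, and LSTM. The rounded R² values for SVR in Athens (0.85 versus 0.89) give roughly 4.7%, so the 5% figure presumably rests on unrounded results.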

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| City | Sample Entropy | Training Data | Testing Data |
|---|---|---|---|
| Athens | 1.25 | 1 January 2018–31 December 2020 | 1 January 2021–31 December 2021 |
| Spata | 2.10 | 1 January 2018–31 December 2020 | 1 January 2021–31 December 2021 |

| Factor | Statistic | Athens | Spata | Factor | Statistic | Athens | Spata |
|---|---|---|---|---|---|---|---|
| Temperature | Max | −0.86 | −0.65 | Humidity | Max | 0.36 | 0.30 |
| Temperature | Avg | −0.85 | −0.64 | Humidity | Avg | 0.46 | 0.35 |
| Temperature | Min | −0.78 | −0.59 | Humidity | Min | 0.47 | 0.35 |
| Dew Point | Max | −0.80 | −0.60 | Pressure | Max | 0.40 | 0.33 |
| Dew Point | Avg | −0.78 | −0.59 | Pressure | Avg | 0.31 | 0.26 |
| Dew Point | Min | −0.73 | −0.54 | Pressure | Min | 0.20 | 0.18 |

| Method | Athens MARNE | Athens MAPE | Athens R² | Athens Cost | Spata MARNE | Spata MAPE | Spata R² | Spata Cost |
|---|---|---|---|---|---|---|---|---|
| CNN-LSTM-ATT | 5.19% | 15.84% | 0.89 | 40.96 | 13.28% | 25.60% | 0.32 | 17.10 |
| CNN-LSTM | 8.71% | 19.92% | 0.74 | 56.71 | 15.49% | 27.14% | 0.18 | 30.78 |
| LSTM-ATT | 6.18% | 17.56% | 0.84 | 45.04 | 14.99% | 26.18% | 0.27 | 17.61 |

| Method | Athens MARNE | Athens MAPE | Athens R² | Athens Cost | Spata MARNE | Spata MAPE | Spata R² | Spata Cost |
|---|---|---|---|---|---|---|---|---|
| CNN-LSTM-ATT | 5.19% | 15.84% | 0.89 | 40.96 | 13.28% | 25.60% | 0.32 | 17.10 |
| LSTM | 9.00% | 22.00% | 0.78 | 36.59 | 15.85% | 26.52% | 0.24 | 27.14 |
| SVR | 9.19% | 44.56% | 0.85 | 0.37 | 15.03% | 26.90% | 0.19 | 0.38 |
| MLR | 12.85% | 52.76% | 0.72 | 1.38 | 15.93% | 26.92% | 0.25 | 0.28 |
| BPNN | 10.79% | 41.67% | 0.80 | 87.19 | 18.20% | 26.88% | 0.25 | 87.85 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, J.; Wang, S.; Wei, N.; Yang, Y.; Lv, Y.; Wang, X.; Zeng, F. An Enhancement Method Based on Long Short-Term Memory Neural Network for Short-Term Natural Gas Consumption Forecasting. Energies 2023, 16, 1295. https://doi.org/10.3390/en16031295
