Environment-Friendly Power Scheduling Based on Deep Contextual Reinforcement Learning

Abstract

1. Introduction

2. Problem Description

2.1. Objective Function

2.2. Constraints

3. Proposed Methodological Framework

3.1. The Power Scheduling Dynamics as an MDP

3.2. Agent-Based Contextual Simulation Environment Algorithm

- If the committed capacity falls short of the requirement, switch ON unconstrained OFF units in increasing order of the values defined in Equation (10) until the shortage is corrected. If the shortage cannot be fully corrected because too few unconstrained OFF units are available, the state is labeled terminal, which results in an incomplete episode.

- If the committed capacity exceeds the requirement, switch OFF unconstrained ON units in decreasing order of the values defined in Equation (10) until the excess is removed. If the excess cannot be fully adjusted because too few unconstrained ON units are available, the state is terminal and the episode is incomplete.

- If the current capacity does not satisfy future demands, switch ON unconstrained OFF units in decreasing order of the values defined in Equation (10). The current state is also labeled terminal if the future demands cannot be met because the offline units must remain OFF, that is, because too few unconstrained OFF units are available.
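The shortage-correction rule in the list above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `correct_shortage`, its arguments, and the `priority` list (a hypothetical stand-in for the Equation (10) values, which are not reproduced in this text) are assumptions made only for illustration.

```python
from typing import List, Tuple


def correct_shortage(
    status: List[int],       # 1 = ON, 0 = OFF
    capacity: List[float],   # maximum capacity of each unit (MW)
    free: List[bool],        # True if the unit may change status in this period
    priority: List[float],   # hypothetical stand-in for the Equation (10) values
    required: float,         # capacity that must be covered (MW)
) -> Tuple[List[int], bool]:
    """Switch ON unconstrained OFF units, lowest priority value first."""
    status = list(status)  # work on a copy so the caller's list is untouched
    online = sum(c for s, c in zip(status, capacity) if s == 1)

    # Unconstrained OFF units, sorted by increasing priority value.
    candidates = sorted(
        (i for i, s in enumerate(status) if s == 0 and free[i]),
        key=lambda i: priority[i],
    )
    for i in candidates:
        if online >= required:
            break
        status[i] = 1
        online += capacity[i]

    # The state is terminal when the shortage could not be fully corrected.
    terminal = online < required
    return status, terminal


# Illustrative values only (not taken from the article).
fixed, done = correct_shortage(
    status=[1, 0, 0, 0],
    capacity=[455.0, 130.0, 130.0, 80.0],
    free=[True, True, False, True],
    priority=[1.0, 3.0, 2.0, 4.0],
    required=600.0,
)
print(fixed, done)  # [1, 1, 0, 1] False
```

The excess-capacity rule would follow the same pattern with unconstrained ON units switched OFF in decreasing order of the same values.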

3.3. Deep Contextual Reinforcement Learning

4. Demonstrative Example

5. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Indices | |

| : | Number of units. |

| : | Number of emission types. |

| : | . |

| . | |

| : | . |

| Units and Demand Profiles | |

| : | (MW). |

| : | (MW). |

| : | (MW). |

| : | (hour). |

| : | (hour). |

| : | (hour). |

| ,: | . |

| : | (MW). |

| : | Percentage of demand for reserve capacity. |

| Objective Function | |

| : | . |

| : | Quadratic, linear, constant parameters of cost function of . |

| : | Externality cost of emission type ($/g), emission factor of unit for type (g/MW) |

| : | . |

| Others | |

| : | Expected value. |

| : | Indicator function. |

References

1. Asokan, K.; Ashokkumar, R. Emission controlled Profit based Unit commitment for GENCOs using MPPD Table with ABC algorithm under Competitive Environment. WSEAS Trans. Syst. 2014, 13, 523–542.

2. Roque, L.; Fontes, D.; Fontes, F. A multi-objective unit commitment problem combining economic and environmental criteria in a metaheuristic approach. In Proceedings of the 4th International Conference on Energy and Environment Research, Porto, Portugal, 17–20 July 2017.

3. Montero, L.; Bello, A.; Reneses, J. A review on the unit commitment problem: Approaches, techniques, and resolution methods. Energies 2022, 15, 1296.

4. De Mars, P.; O’Sullivan, A. Applying reinforcement learning and tree search to the unit commitment problem. Appl. Energy 2021, 302, 117519.

5. De Mars, P.; O’Sullivan, A. Reinforcement learning and A* search for the unit commitment problem. Energy AI 2022, 9, 100179.

6. Jasmin, E.A.; Imthias Ahamed, T.P.; Jagathy Raj, V.P. Reinforcement learning solution for unit commitment problem through pursuit method. In Proceedings of the 2009 International Conference on Advances in Computing, Control, and Telecommunication Technologies, Bangalore, India, 28–29 December 2009.

7. Jasmin, E.A.T.; Remani, T. A function approximation approach to reinforcement learning for solving unit commitment problem with photo voltaic sources. In Proceedings of the 2016 IEEE International Conference on Power Electronics, Drives and Energy Systems, Trivandrum, India, 14–17 December 2016.

8. Li, F.; Qin, J.; Zheng, W. Distributed Q-learning-based online optimization algorithm for unit commitment and dispatch in smart grid. IEEE Trans. Cybern. 2019, 50, 4146–4156.

9. Navin, N.; Sharma, R. A fuzzy reinforcement learning approach to thermal unit commitment problem. Neural Comput. Appl. 2019, 31, 737–750.

10. Dalal, G.; Mannor, S. Reinforcement learning for the unit commitment problem. In Proceedings of the 2015 IEEE Eindhoven PowerTech, Eindhoven, Netherlands, 29 June–2 July 2015.

11. Qin, J.; Yu, N.; Gao, Y. Solving unit commitment problems with multi-step deep reinforcement learning. In Proceedings of the 2021 IEEE International Conference on Communications, Control, and Computing Technologies for Smart Grids, Aachen, Germany, 25–28 October 2021.

12. Ongsakul, W.; Petcharaks, N. Unit commitment by enhanced adaptive Lagrangian relaxation. IEEE Trans. Power Syst. 2004, 19, 620–628.

13. Nemati, M.; Braun, M.; Tenbohlen, S. Optimization of unit commitment and economic dispatch in microgrids based on genetic algorithm and mixed integer linear programming. Appl. Energy 2018, 210, 944–963.

14. Trüby, J. Thermal Power Plant Economics and Variable Renewable Energies: A Model-Based Case Study for Germany; International Energy Agency: Paris, France, 2014.

15. Zhang, K.; Yang, Z.; Başar, T. Multi-agent reinforcement learning: A selective overview of theories and algorithms. In Handbook of Reinforcement Learning and Control; Vamvoudakis, K.G., Wan, Y., Lewis, F.L., Cansever, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2021; pp. 321–384.

16. Wilensky, U.; Rand, W. An Introduction to Agent-Based Modeling: Modeling Natural, Social, and Engineered Complex Systems with NetLogo; The MIT Press: London, UK, 2015.

17. Sutton, R.; Barto, A. Reinforcement Learning: An Introduction; The MIT Press: London, UK, 2018.

18. Matzliach, B.; Ben-Gal, I.; Kagan, E. Detection of static and mobile targets by an autonomous agent with deep Q-learning abilities. Entropy 2022, 24, 1168.

19. Adam, S.; Busoniu, L.; Babuska, R. Experience replay for real-time reinforcement learning control. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 201–212.

20. Yildirim, M.; Özcan, M. Unit commitment problem with emission cost constraints by using genetic algorithm. Gazi Univ. J. Sci. 2022, 35, 957–967.

| Hour | Optimal Commitments | | | | | Optimal Loads (MW) | | | | | (%) |

|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 0 | 0 | 0 | 0 | 400.0 | 0 | 0 | 0 | 0 | 13.8 |

| 2 | 1 | 0 | 1 | 0 | 0 | 426.5 | 0 | 23.5 | 0 | 0 | 30.0 |

| 3 | 1 | 0 | 1 | 0 | 0 | 450.9 | 0 | 29.1 | 0 | 0 | 21.9 |

| 4 | 1 | 0 | 1 | 0 | 0 | 455.0 | 0 | 45.0 | 0 | 0 | 17.0 |

| 5 | 1 | 0 | 1 | 0 | 0 | 455.0 | 0 | 75.0 | 0 | 0 | 10.4 |

| 6 | 1 | 1 | 1 | 0 | 0 | 455.0 | 36.6 | 58.4 | 0 | 0 | 30.0 |

| 7 | 1 | 1 | 1 | 0 | 0 | 455.0 | 52.0 | 73.0 | 0 | 0 | 23.3 |

| 8 | 1 | 1 | 1 | 0 | 0 | 455.0 | 62.3 | 82.7 | 0 | 0 | 19.2 |

| 9 | 1 | 1 | 1 | 0 | 0 | 455.0 | 72.6 | 92.4 | 0 | 0 | 15.3 |

| 10 | 1 | 1 | 1 | 1 | 0 | 455.0 | 77.7 | 97.3 | 20 | 0 | 22.3 |

| 11 | 1 | 1 | 1 | 1 | 0 | 455.0 | 93.1 | 111.9 | 20 | 0 | 16.9 |

| 12 | 1 | 1 | 1 | 1 | 0 | 455.0 | 103.3 | 121.7 | 20 | 0 | 13.6 |

| 13 | 1 | 1 | 1 | 1 | 0 | 455.0 | 77.7 | 97.3 | 20 | 0 | 22.3 |

| 14 | 1 | 1 | 1 | 0 | 0 | 455.0 | 72.5 | 92.5 | 0 | 0 | 15.3 |

| 15 | 1 | 1 | 1 | 0 | 0 | 455.0 | 62.3 | 82.7 | 0 | 0 | 19.2 |

| 16 | 1 | 1 | 1 | 0 | 0 | 455.0 | 36.6 | 58.4 | 0 | 0 | 30.0 |

| 17 | 1 | 0 | 1 | 0 | 0 | 455.0 | 0 | 45.0 | 0 | 0 | 17.0 |

| 18 | 1 | 0 | 1 | 1 | 0 | 455.0 | 0 | 75.0 | 20 | 0 | 20.9 |

| 19 | 1 | 0 | 1 | 1 | 0 | 455.0 | 0 | 125.0 | 20 | 0 | 10.8 |

| 20 | 1 | 0 | 1 | 1 | 1 | 455.0 | 0 | 130.0 | 55 | 10 | 10.8 |

| 21 | 1 | 0 | 1 | 1 | 0 | 455.0 | 0 | 125.0 | 20 | 0 | 10.8 |

| 22 | 1 | 0 | 1 | 1 | 0 | 455.0 | 0 | 75.0 | 20 | 0 | 20.9 |

| 23 | 1 | 0 | 1 | 0 | 0 | 455.0 | 0 | 45.0 | 0 | 0 | 17.0 |

| 24 | 1 | 0 | 1 | 0 | 0 | 426.4 | 0 | 23.6 | 0 | 0 | 30.0 |
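A schedule of this form can be sanity-checked by confirming that every OFF unit carries zero load and every ON unit carries a positive load. The short Python sketch below does this for hours 1, 10, and 20, transcribed from the table above; the helper name `is_consistent` is hypothetical and the check is only an illustration, not part of the original study.

```python
# Rows transcribed from the schedule table: hour -> (commitments, loads in MW).
rows = {
    1: ([1, 0, 0, 0, 0], [400.0, 0.0, 0.0, 0.0, 0.0]),
    10: ([1, 1, 1, 1, 0], [455.0, 77.7, 97.3, 20.0, 0.0]),
    20: ([1, 0, 1, 1, 1], [455.0, 0.0, 130.0, 55.0, 10.0]),
}


def is_consistent(commit, load):
    """True if ON units have positive load and OFF units have zero load."""
    return all(
        (u == 1 and p > 0) or (u == 0 and p == 0)
        for u, p in zip(commit, load)
    )


# Total dispatched load per hour (MW), rounded to one decimal place.
total_load = {h: round(sum(load), 1) for h, (_, load) in rows.items()}

for hour, (commit, load) in rows.items():
    print(hour, is_consistent(commit, load), total_load[hour])
```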

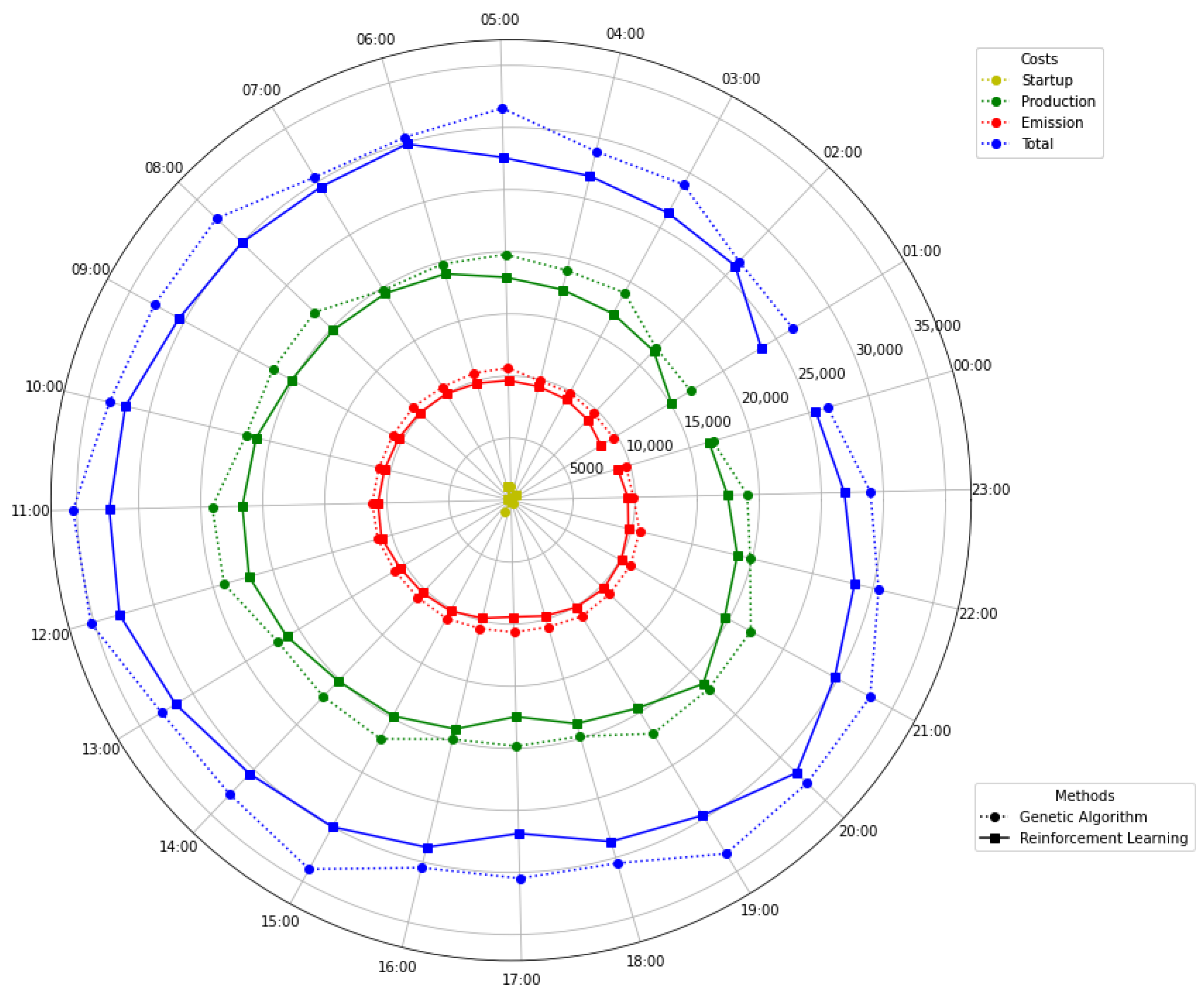

| Hour | Genetic Algorithm [20]: Start-Up | Genetic Algorithm [20]: Production | Genetic Algorithm [20]: Emission | Genetic Algorithm [20]: Total | Proposed RL: Start-Up | Proposed RL: Production | Proposed RL: Emission | Proposed RL: Total |

|---|---|---|---|---|---|---|---|---|

| 1 | 0 | 8466 | 4824 | 13,290 | 0 | 7553 | 4241 | 11,793 |

| 2 | 0 | 8466 | 4828 | 13,294 | 560 | 9061 | 4702 | 14,324 |

| 3 | 60 | 10,564 | 5044 | 15,668 | 0 | 9560 | 5004 | 14,564 |

| 4 | 0 | 10,564 | 5044 | 15,608 | 0 | 9893 | 5170 | 15,063 |

| 5 | 1120 | 11,327 | 5825 | 18,271 | 0 | 10,395 | 5401 | 15,797 |

| 6 | 0 | 11,327 | 5825 | 17,151 | 1100 | 11,427 | 5555 | 18,082 |

| 7 | 0 | 11,327 | 5825 | 17,151 | 0 | 11,930 | 5786 | 17,717 |

| 8 | 60 | 13,425 | 6045 | 19,529 | 0 | 12,267 | 5940 | 18,207 |

| 9 | 0 | 13,425 | 6045 | 19,469 | 0 | 12,604 | 6094 | 18,699 |

| 10 | 340 | 13,523 | 6145 | 20,008 | 340 | 13,591 | 6251 | 20,183 |

| 11 | 30 | 15,621 | 6365 | 22,016 | 0 | 14,099 | 6483 | 20,582 |

| 12 | 0 | 15,621 | 6365 | 21,986 | 0 | 14,439 | 6637 | 21,076 |

| 13 | 0 | 13,523 | 6145 | 19,668 | 0 | 13,591 | 6251 | 19,843 |

| 14 | 30 | 13,425 | 6045 | 19,499 | 0 | 12,604 | 6094 | 18,699 |

| 15 | 1100 | 13,456 | 6045 | 20,601 | 0 | 12,267 | 5940 | 18,207 |

| 16 | 0 | 11,358 | 5825 | 17,182 | 0 | 11,427 | 5555 | 16,982 |

| 17 | 0 | 11,358 | 5825 | 17,182 | 0 | 9893 | 5170 | 15,063 |

| 18 | 0 | 11,358 | 5825 | 17,182 | 170 | 11,213 | 5481 | 16,865 |

| 19 | 340 | 13,554 | 6145 | 20,039 | 0 | 12,059 | 5866 | 17,926 |

| 20 | 0 | 13,554 | 6145 | 19,699 | 60 | 13,862 | 6085 | 20,007 |

| 21 | 0 | 13,554 | 6145 | 19,699 | 0 | 12,059 | 5866 | 17,926 |

| 22 | 0 | 11,358 | 5825 | 17,182 | 0 | 11,213 | 5481 | 16,695 |

| 23 | 60 | 10,564 | 5044 | 15,668 | 0 | 9893 | 5170 | 15,063 |

| 24 | 0 | 8466 | 4824 | 13,290 | 0 | 9061 | 4702 | 13,764 |

| Total | 3140 | 289,178 | 138,013 | 430,331 | 2230 | 275,962 | 134,931 | 413,122 |
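The comparison in the Total row can be made explicit with a few lines of arithmetic. The Python sketch below uses only the totals from the table above and computes the absolute and relative differences between the genetic algorithm [20] and the proposed RL method; the variable names are illustrative assumptions.

```python
# Daily totals taken from the "Total" row of the cost comparison table.
ga = {"start_up": 3140, "production": 289_178, "emission": 138_013, "total": 430_331}
rl = {"start_up": 2230, "production": 275_962, "emission": 134_931, "total": 413_122}

# Absolute and relative reduction of each cost component (GA minus RL).
reduction = {k: ga[k] - rl[k] for k in ga}
reduction_pct = {k: 100.0 * reduction[k] / ga[k] for k in ga}

saving = reduction["total"]
saving_pct = reduction_pct["total"]

for k in ga:
    print(f"{k:>10}: {reduction[k]:>7} ({reduction_pct[k]:.2f}%)")
```

On these totals the overall difference is 17,209, or about 4.0% of the genetic algorithm total. The component columns sum to the reported totals to within one unit, which is consistent with rounding in the table.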

| Number of Units | Start-Up Cost ($) | Production Cost ($) | Emission Cost ($) | Total Cost ($) |

|---|---|---|---|---|

| 10 | 4840 | 545,837 | 270,724 | 821,401 |

| 20 | 9300 | 1,083,979 | 540,476 | 1,633,754 |

| 30 | 14,370 | 1,622,473 | 811,866 | 2,448,710 |

| 40 | 18,980 | 2,160,114 | 1,082,666 | 3,261,760 |

| 50 | 31,660 | 2,744,090 | 1,329,071 | 4,104,821 |

| 80 | 51,320 | 4,369,093 | 2,129,526 | 6,549,939 |

| 100 | 58,960 | 5,456,700 | 2,674,207 | 8,189,867 |
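One way to read the table above is to divide each total cost by the number of units. The Python sketch below does so using only the tabulated totals; the names `totals` and `per_unit` are illustrative, and the calculation is a reader's check rather than an analysis from the original study.

```python
# Number of units -> total cost ($), taken from the table above.
totals = {
    10: 821_401,
    20: 1_633_754,
    30: 2_448_710,
    40: 3_261_760,
    50: 4_104_821,
    80: 6_549_939,
    100: 8_189_867,
}

# Total cost per unit for each system size.
per_unit = {n: cost / n for n, cost in totals.items()}

# Relative spread between the largest and smallest per-unit cost.
spread = (max(per_unit.values()) - min(per_unit.values())) / min(per_unit.values())

for n, value in per_unit.items():
    print(f"{n:>3} units: {value:,.0f} $ per unit")
print(f"relative spread: {100 * spread:.2f}%")
```

The per-unit cost stays within roughly 1% across all system sizes, so on these figures the total cost grows approximately linearly with the number of units.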


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ebrie, A.S.; Paik, C.; Chung, Y.; Kim, Y.J. Environment-Friendly Power Scheduling Based on Deep Contextual Reinforcement Learning. Energies 2023, 16, 5920. https://doi.org/10.3390/en16165920
