Systematic Development of a Multi-Objective Design Optimization Process Based on a Surrogate-Assisted Evolutionary Algorithm for Electric Machine Applications

Abstract

1. Introduction

- Development of a Bayesian optimization-based hyperparameter (HP) tuning process to improve the approximation accuracy of surrogate models (SMs);

- Development of new convergence criteria for the transition from optimization based on finite element analysis (FEA) to SM-based optimization;

- Analysis of the effect of the number of FEA calculations on the approximation accuracy of the SM for different objective functions;

- Comparative analysis of three surrogate modeling techniques: artificial neural network (ANN), Kriging, and support vector regression (SVR);

- Development of a clustering-based technique to reduce calculation time for verifying Pareto fronts predicted by an SM.

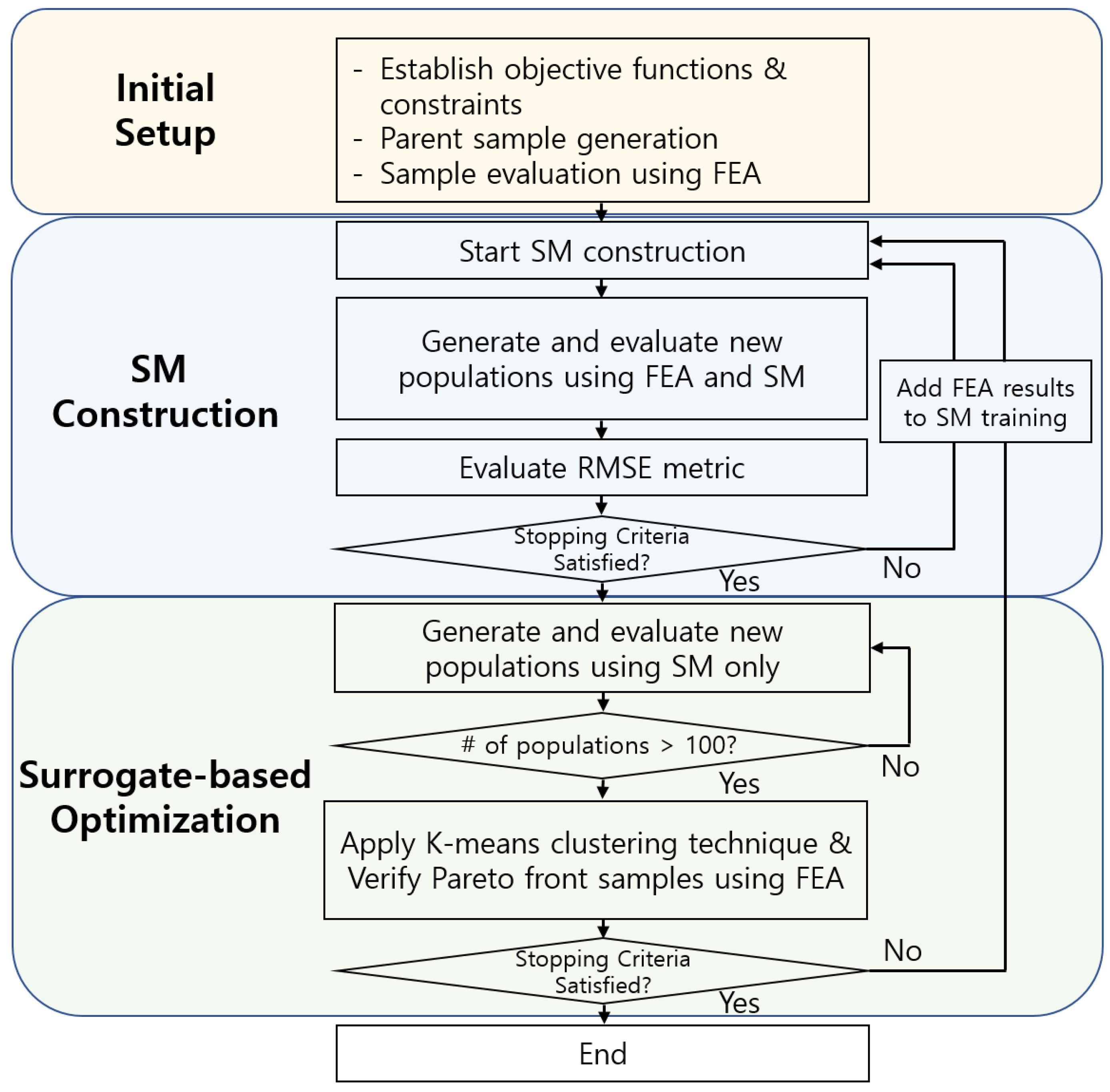

2. Proposed Surrogate-Assisted Design Optimization Process

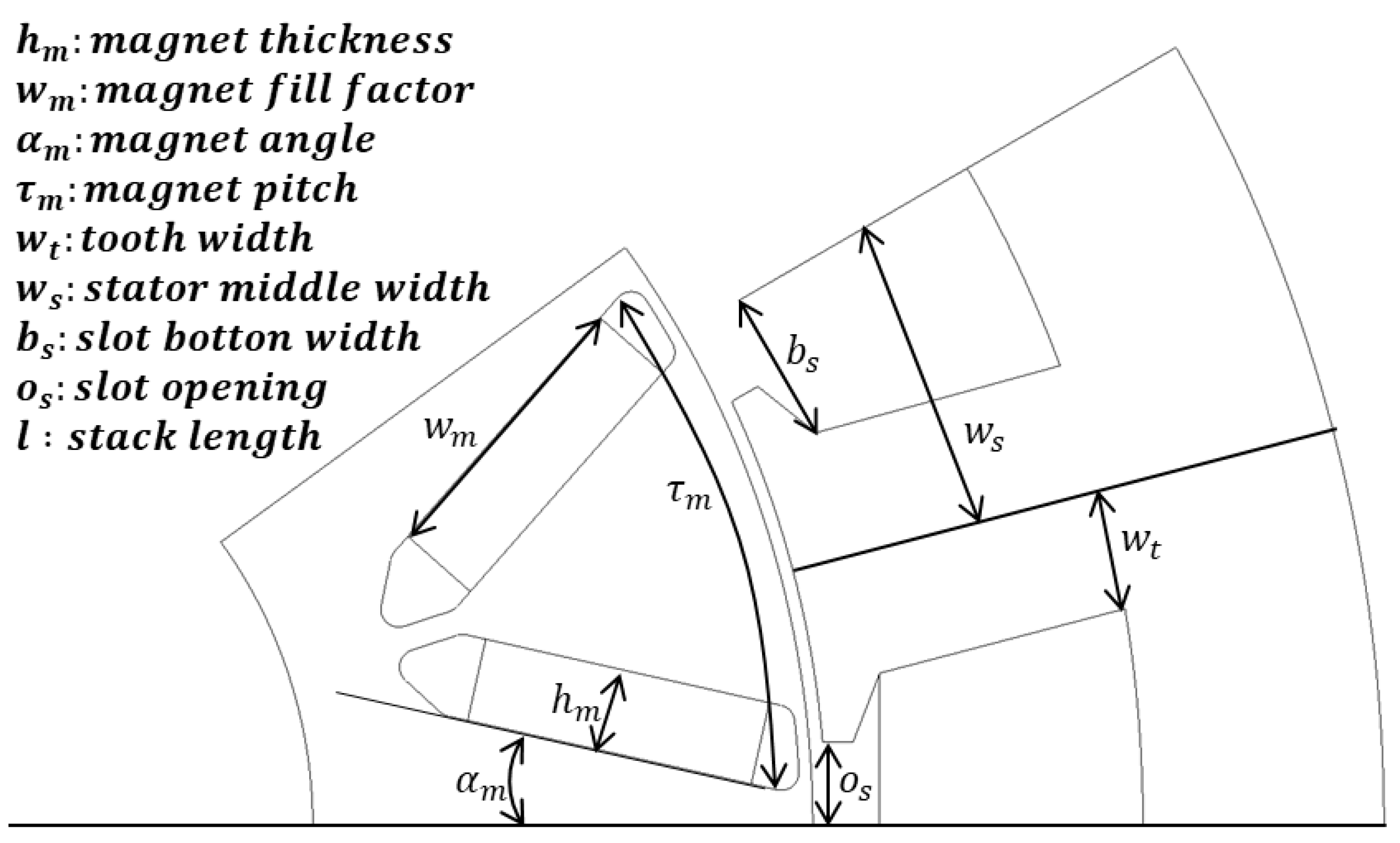

3. Experimental Setup

4. Surrogate Model Construction

4.1. Surrogate Modeling Techniques

4.1.1. Kriging
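
The table of surrogate model parameters lists a correlation matrix and a vector as the Kriging parameters. The sketch below shows how such quantities enter an ordinary Kriging prediction, ŷ(x) = μ + r(x)ᵀR⁻¹(y − 1μ). Here R is the correlation matrix between the training designs and r(x) is the correlation vector between a new design and those designs. The Gaussian correlation function, the fixed correlation parameter `theta`, the small `nugget`, and the toy data are all assumptions for illustration. In practice `theta` is usually estimated from the data, for example by maximum likelihood, rather than fixed by hand.

```python
import numpy as np


def gaussian_corr(xa, xb, theta):
    """Gaussian correlation exp(-sum_k theta_k * (xa_k - xb_k)**2) for all point pairs."""
    diff = xa[:, None, :] - xb[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=2))


def fit_ordinary_kriging(X, y, theta, nugget=1e-10):
    """Precompute the quantities used by the ordinary Kriging predictor."""
    n = X.shape[0]
    # Correlation matrix R between all training designs (tiny nugget for stability).
    R = gaussian_corr(X, X, theta) + nugget * np.eye(n)
    ones = np.ones(n)
    # Generalized least-squares estimate of the constant mean mu.
    mu = (ones @ np.linalg.solve(R, y)) / (ones @ np.linalg.solve(R, ones))
    # Weights R^-1 (y - 1 mu), reused for every prediction.
    weights = np.linalg.solve(R, y - mu * ones)
    return {"X": X, "theta": theta, "mu": mu, "weights": weights}


def predict_ordinary_kriging(model, X_new):
    """Predict y_hat(x) = mu + r(x)^T R^-1 (y - 1 mu) for each row of X_new."""
    # Correlation vectors r(x) between each new design and the training designs.
    r = gaussian_corr(X_new, model["X"], model["theta"])
    return model["mu"] + r @ model["weights"]


# Usage on hypothetical 1-D data: six samples of sin(2*pi*x).
X_train = np.linspace(0.0, 1.0, 6)[:, None]
y_train = np.sin(2.0 * np.pi * X_train[:, 0])
model = fit_ordinary_kriging(X_train, y_train, theta=np.array([10.0]))
y_fit = predict_ordinary_kriging(model, X_train)  # interpolates y_train (up to the nugget)
y_mid = predict_ordinary_kriging(model, np.array([[0.5]]))
```

The nugget keeps R invertible when two designs lie very close together. With it set to zero, the predictor reproduces the training samples exactly.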

4.1.2. Artificial Neural Network

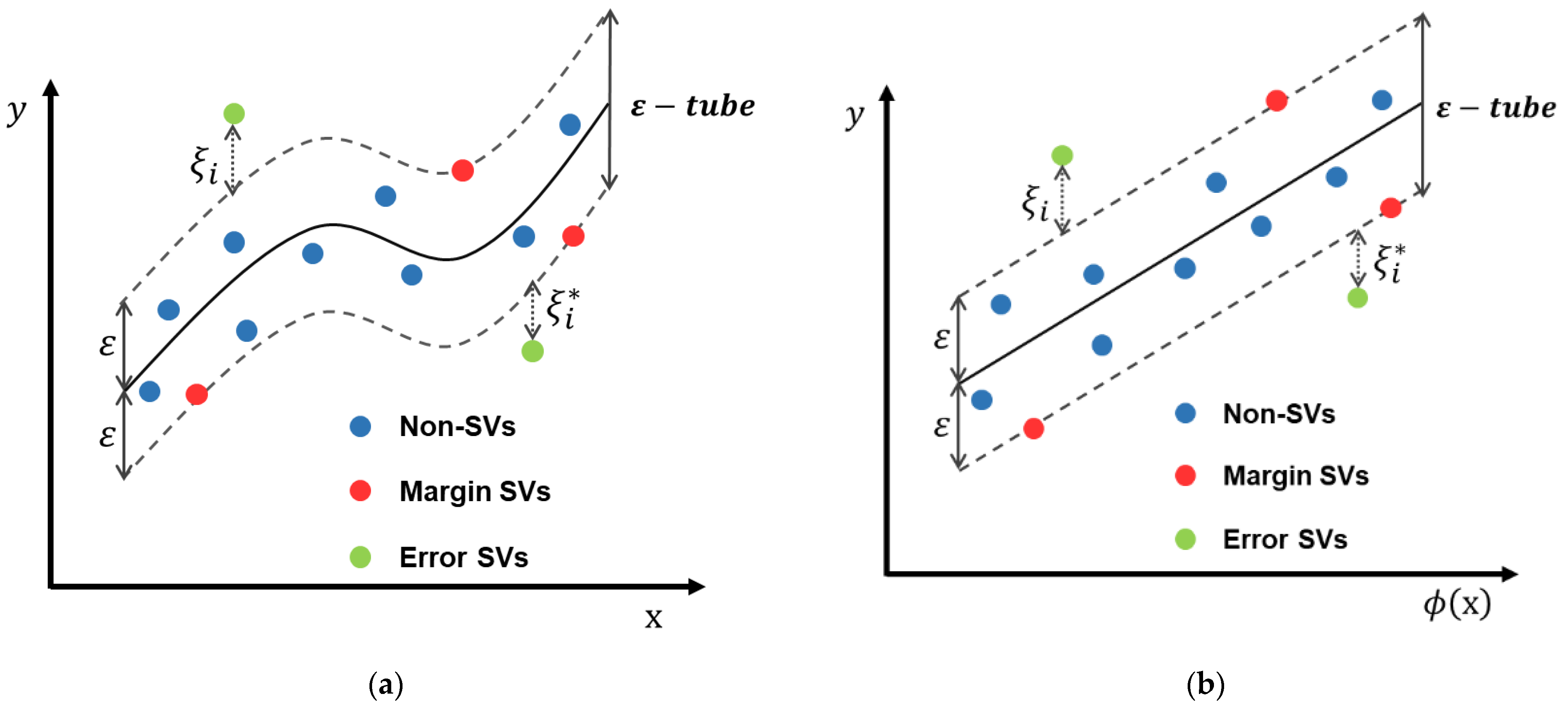
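
The table of surrogate model parameters separates the ANN weights and biases, which training determines, from its hyperparameters: the number of hidden layers, the number of neurons, and the activation function, which are fixed beforehand. The sketch below makes that split concrete for a hypothetical network. It has one hidden layer of 16 tanh neurons and is trained by full-batch gradient descent on the mean-squared error. The architecture, learning rate, epoch count, and toy data are illustrative assumptions, not the network used in this work.

```python
import numpy as np


def init_mlp(n_inputs, n_hidden, seed=0):
    """Random weights and zero biases: the trainable parameters of the ANN."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_inputs), (n_inputs, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, 1)),
        "b2": np.zeros(1),
    }


def forward(params, X):
    """One tanh hidden layer followed by a linear output neuron."""
    hidden = np.tanh(X @ params["W1"] + params["b1"])
    return hidden, hidden @ params["W2"] + params["b2"]


def train_mlp(X, y, n_hidden=16, learning_rate=0.1, epochs=5000, seed=0):
    """Full-batch gradient descent on the mean-squared error."""
    params = init_mlp(X.shape[1], n_hidden, seed)
    y = y.reshape(-1, 1)
    losses = []
    for _ in range(epochs):
        hidden, pred = forward(params, X)
        err = pred - y
        losses.append(float(np.mean(err**2)))
        # Backpropagation: gradients of the MSE with respect to every parameter,
        # all computed from the current parameters before any update.
        grad_out = 2.0 * err / len(X)
        grads = {"W2": hidden.T @ grad_out, "b2": grad_out.sum(axis=0)}
        grad_hidden = (grad_out @ params["W2"].T) * (1.0 - hidden**2)
        grads["W1"] = X.T @ grad_hidden
        grads["b1"] = grad_hidden.sum(axis=0)
        for name, grad in grads.items():
            params[name] -= learning_rate * grad
    return params, losses


# Usage on hypothetical 1-D data; n_hidden and the tanh activation act as the HPs.
X_train = np.linspace(-1.0, 1.0, 40)[:, None]
y_train = np.sin(np.pi * X_train[:, 0])
params, losses = train_mlp(X_train, y_train)
print(f"training MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```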

4.1.3. Support Vector Regression

4.2. Hyperparameters of Surrogate Model
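
Bayesian optimization treats the validation error of an SM as an expensive black-box function of its hyperparameters. A Gaussian process (GP) is fitted to the hyperparameter settings evaluated so far, and an acquisition function chooses the next setting to try. The following is a generic sketch of that technique, not the authors' implementation. It tunes a single hyperparameter, normalized to [0, 1], using a GP with a fixed squared-exponential kernel and the expected improvement (EI) acquisition. The function `validation_error` is a hypothetical stand-in for training an SM (Kriging, ANN, or SVR) and scoring it. The kernel length scale, the EI margin `xi`, the candidate grid, and the iteration counts are likewise assumptions.

```python
import math

import numpy as np


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two sets of 1-D points."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length_scale**2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Posterior mean and standard deviation of a GP fitted to standardized y_obs."""
    y_mean, y_std = y_obs.mean(), y_obs.std() + 1e-12
    y_scaled = (y_obs - y_mean) / y_std
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    k_star = rbf_kernel(x_query, x_obs)
    mean = k_star @ np.linalg.solve(K, y_scaled)
    v = np.linalg.solve(K, k_star.T)
    var = np.clip(1.0 - np.sum(k_star * v.T, axis=1), 1e-12, None)
    return mean * y_std + y_mean, np.sqrt(var) * y_std


def expected_improvement(mean, std, best, xi=0.01):
    """EI for minimization: expected amount by which a candidate beats `best`."""
    improvement = best - mean - xi
    z = improvement / std
    cdf = 0.5 * (1.0 + np.vectorize(math.erf)(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    return improvement * cdf + std * pdf


def validation_error(x):
    """Hypothetical stand-in for training an SM with hyperparameter x and scoring it."""
    return (x - 0.3) ** 2 + 0.1


def bayesian_optimize(objective, n_init=3, n_iter=10, seed=0):
    """Random initial samples, then repeatedly evaluate the EI-maximizing candidate."""
    rng = np.random.default_rng(seed)
    candidates = np.linspace(0.0, 1.0, 201)
    x_obs = rng.uniform(0.0, 1.0, n_init)
    y_obs = np.array([objective(x) for x in x_obs])
    for _ in range(n_iter):
        mean, std = gp_posterior(x_obs, y_obs, candidates)
        ei = expected_improvement(mean, std, y_obs.min())
        x_next = candidates[np.argmax(ei)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, objective(x_next))
    best = int(np.argmin(y_obs))
    return x_obs[best], y_obs[best]


best_x, best_error = bayesian_optimize(validation_error)
print(f"best hyperparameter {best_x:.3f}, validation error {best_error:.4f}")
```

Tuning several hyperparameters at once, such as the number of hidden layers and neurons of an ANN, would need a multivariate kernel and a multidimensional candidate set. The loop itself stays the same.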

4.3. Transition from FEA-Based to Surrogate-Based Optimization

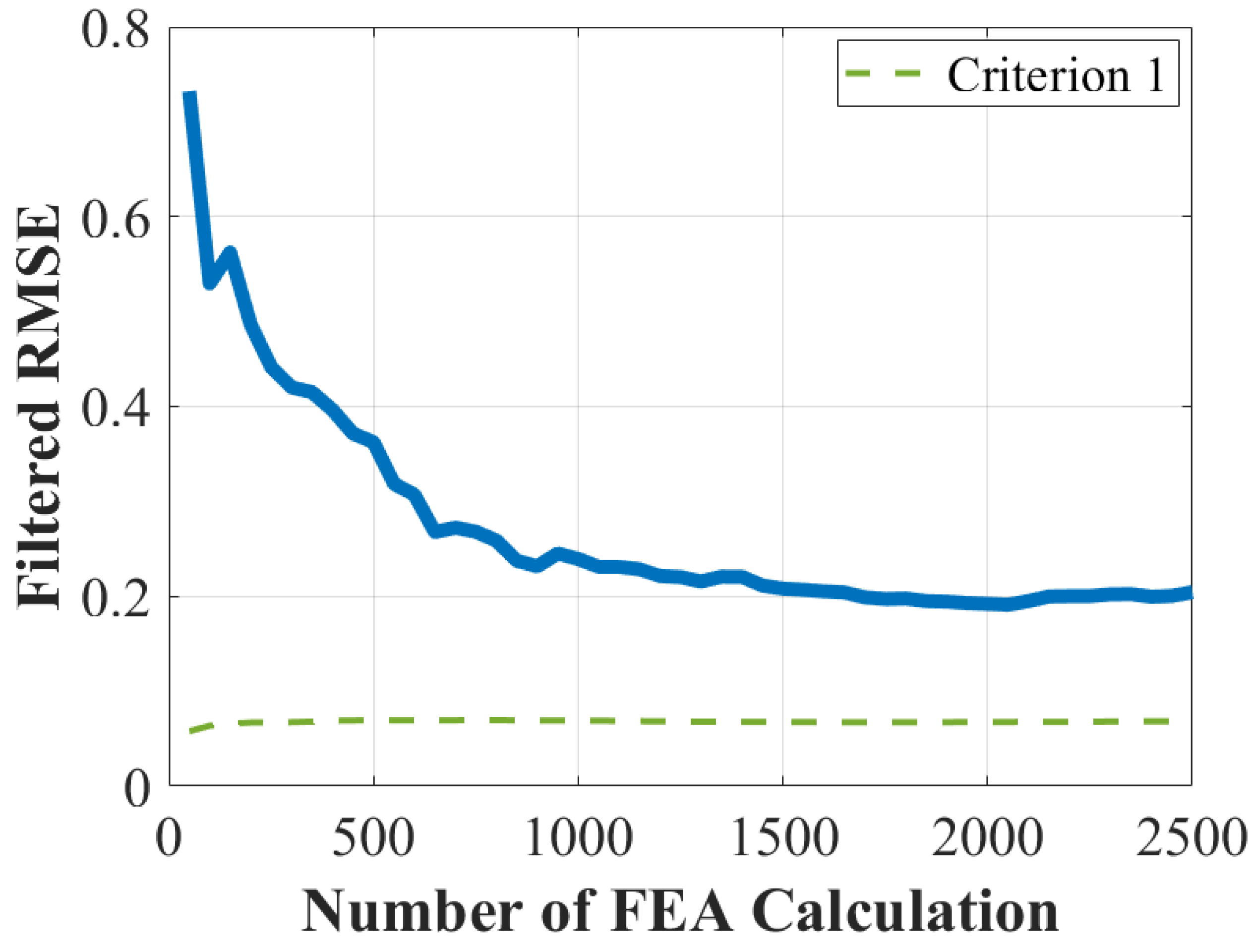

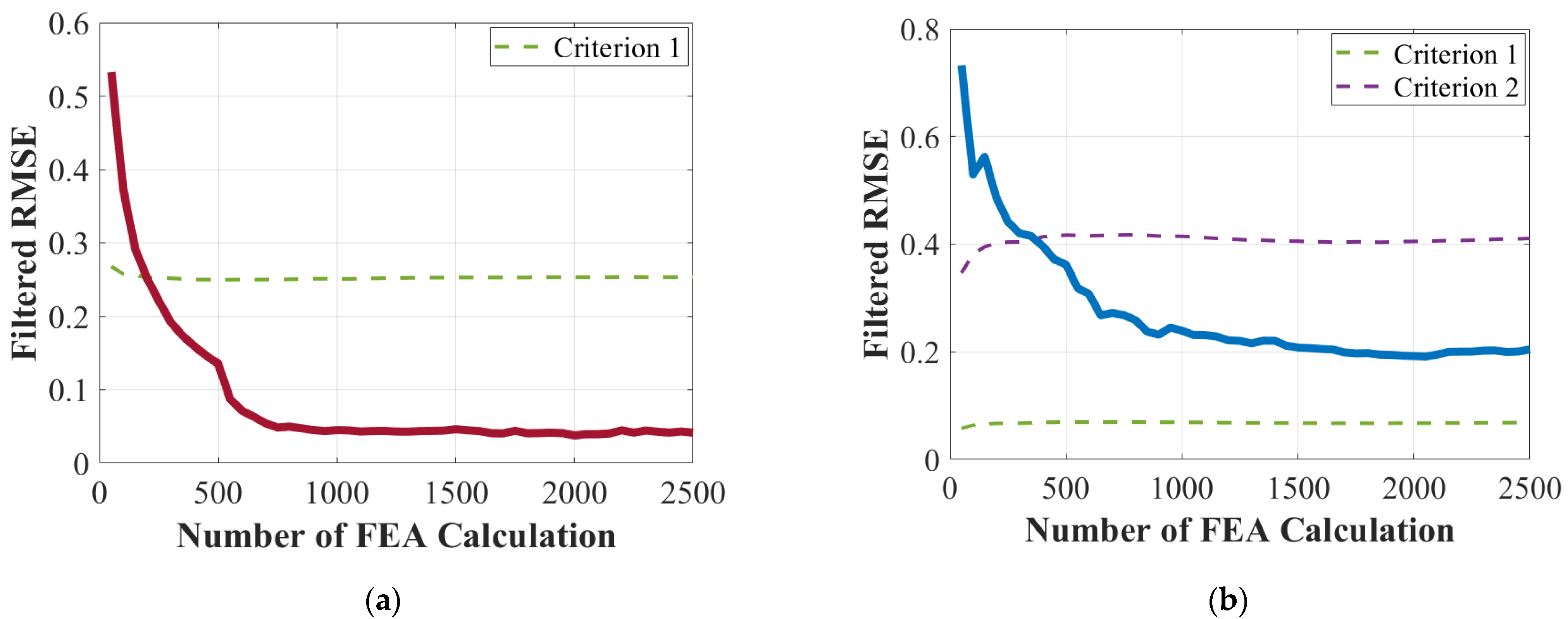

- Criterion 1: Stop if the RMSE is equal to or less than 0.5%;

- Criterion 2: Stop if, after 10 iterations (500 FEA calculations), the sign of the rate of change of the RMSE changes from negative to positive and the RMSE is equal to or less than 3%;

- Criterion 3: Stop if the number of iterations exceeds 50 (2500 FEA calculations).
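
The three criteria can be checked after every iteration of the FEA-based optimization. The sketch below rests on four assumptions. First, each iteration uses 50 FEA calculations, which follows from 500 calculations over 10 iterations. Second, the "rate of change" is the difference between the RMSE values of consecutive iterations. Third, criterion 3 fires once 50 iterations (2500 FEA calculations) have been completed. Fourth, the RMSE is supplied in percent by the caller, because its normalization is not restated here. The helper names `check_transition` and `first_stop` are hypothetical.

```python
from typing import Optional, Sequence, Tuple

FEA_PER_ITERATION = 50  # assumption: 500 FEA calculations per 10 iterations


def check_transition(rmse_history: Sequence[float]) -> Optional[str]:
    """Return the criterion that ends FEA-based optimization, or None to continue.

    rmse_history[i] is the SM's RMSE in percent after iteration i + 1.
    """
    iteration = len(rmse_history)
    if iteration == 0:
        return None
    rmse = rmse_history[-1]
    # Criterion 1: RMSE at or below 0.5 %.
    if rmse <= 0.5:
        return "criterion 1"
    # Criterion 2 (from iteration 10 on): the rate of change of the RMSE,
    # taken here as the difference between consecutive iterations, flips
    # from negative to positive while the RMSE is at or below 3 %.
    if iteration >= 10:
        previous_rate = rmse_history[-2] - rmse_history[-3]
        current_rate = rmse_history[-1] - rmse_history[-2]
        if previous_rate < 0.0 < current_rate and rmse <= 3.0:
            return "criterion 2"
    # Criterion 3 (assumed to fire at 50 iterations = 2500 FEA calculations).
    if iteration >= 50:
        return "criterion 3"
    return None


def first_stop(rmse_history: Sequence[float]) -> Optional[Tuple[int, str]]:
    """Replay a history and report the first iteration at which a criterion fires."""
    for i in range(1, len(rmse_history) + 1):
        reason = check_transition(rmse_history[:i])
        if reason is not None:
            return i, reason
    return None


# Hypothetical RMSE history (%) that keeps falling until iteration 10, then rises.
history = [5.0, 4.0, 3.5, 3.0, 2.8, 2.6, 2.5, 2.4, 2.3, 2.2, 2.25]
stop = first_stop(history)
if stop is not None:
    iteration, reason = stop
    print(f"{reason} after {iteration} iterations ({iteration * FEA_PER_ITERATION} FEA calculations)")
```

Ordering the checks this way means the cheapest exit (criterion 1) is tested first. Criterion 3 only acts as a hard cap when the RMSE neither becomes small nor settles.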

4.4. Data Clustering Technique for Pareto Front Verification
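
Verifying every design on an SM-predicted Pareto front with FEA is costly, so clustering can reduce the number of FEA runs. The sketch below assumes that checking one representative design per cluster is sufficient. It first scales the predicted objective values to [0, 1] and groups them with k-means. For brevity it uses plain Lloyd iterations with random initial centroids rather than any particular initialization scheme. It then selects the design closest to each centroid for FEA recalculation. The number of clusters `k`, the normalization, the helper names, and the synthetic two-objective front are all hypothetical.

```python
import numpy as np


def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's k-means with initial centroids drawn at random from the data."""
    rng = np.random.default_rng(seed)
    centroids = points[rng.choice(len(points), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign every point to its nearest centroid.
        dist = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = np.argmin(dist, axis=1)
        # Move each centroid to the mean of its points (keep it if the cluster is empty).
        updated = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return centroids


def select_designs_for_fea(pareto_objectives, k, seed=0):
    """Indices of the predicted Pareto designs closest to each k-means centroid."""
    # Scale each objective to [0, 1] so that no objective dominates the distance.
    lo = pareto_objectives.min(axis=0)
    hi = pareto_objectives.max(axis=0)
    scaled = (pareto_objectives - lo) / np.where(hi > lo, hi - lo, 1.0)
    centroids = kmeans(scaled, k, seed=seed)
    dist = np.linalg.norm(scaled[:, None, :] - centroids[None, :, :], axis=2)
    # One representative per cluster; the set removes rare duplicates.
    return sorted(set(np.argmin(dist, axis=0).tolist()))


# Hypothetical SM-predicted front with 100 designs and two objectives to minimize.
f1 = np.linspace(0.0, 1.0, 100)
front = np.column_stack([f1, 1.0 - np.sqrt(f1)])
to_verify = select_designs_for_fea(front, k=10)
print(f"FEA needed for {len(to_verify)} of {len(front)} designs: {to_verify}")
```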

5. Optimization Results

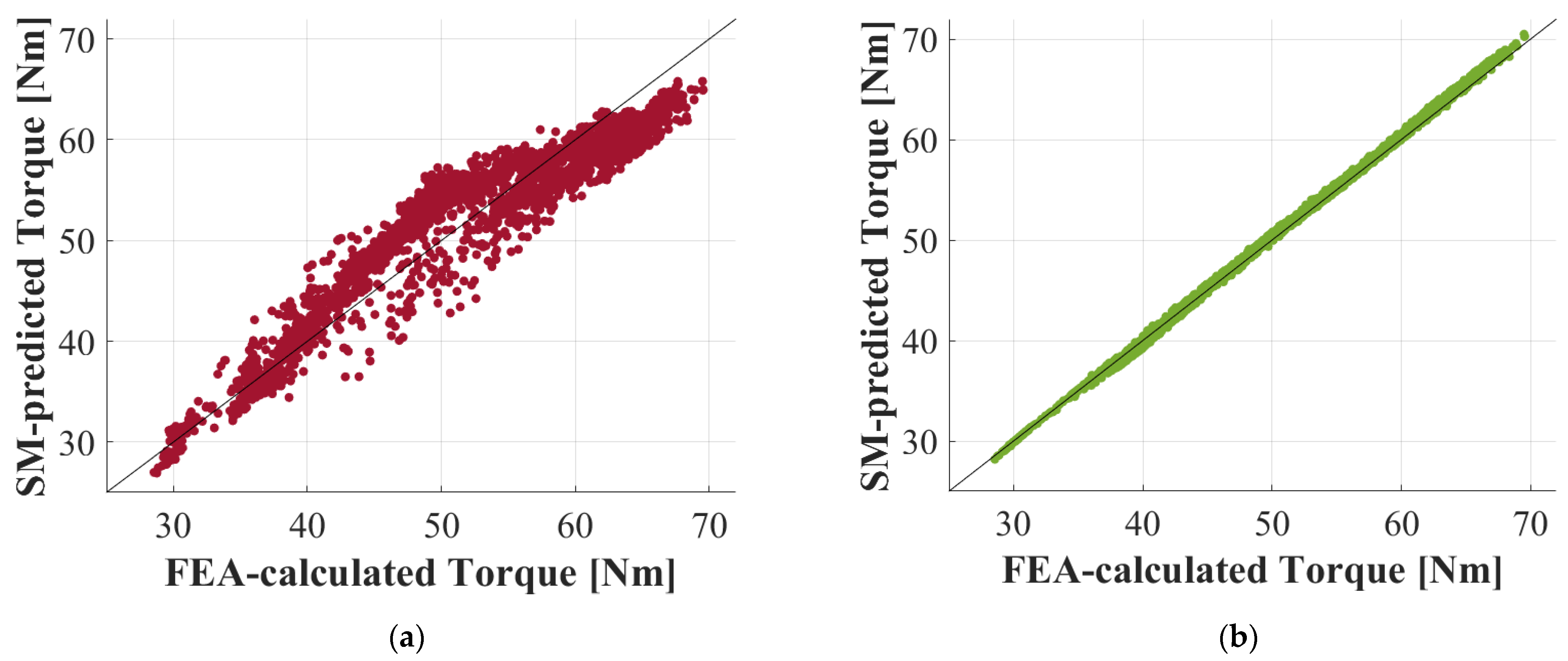

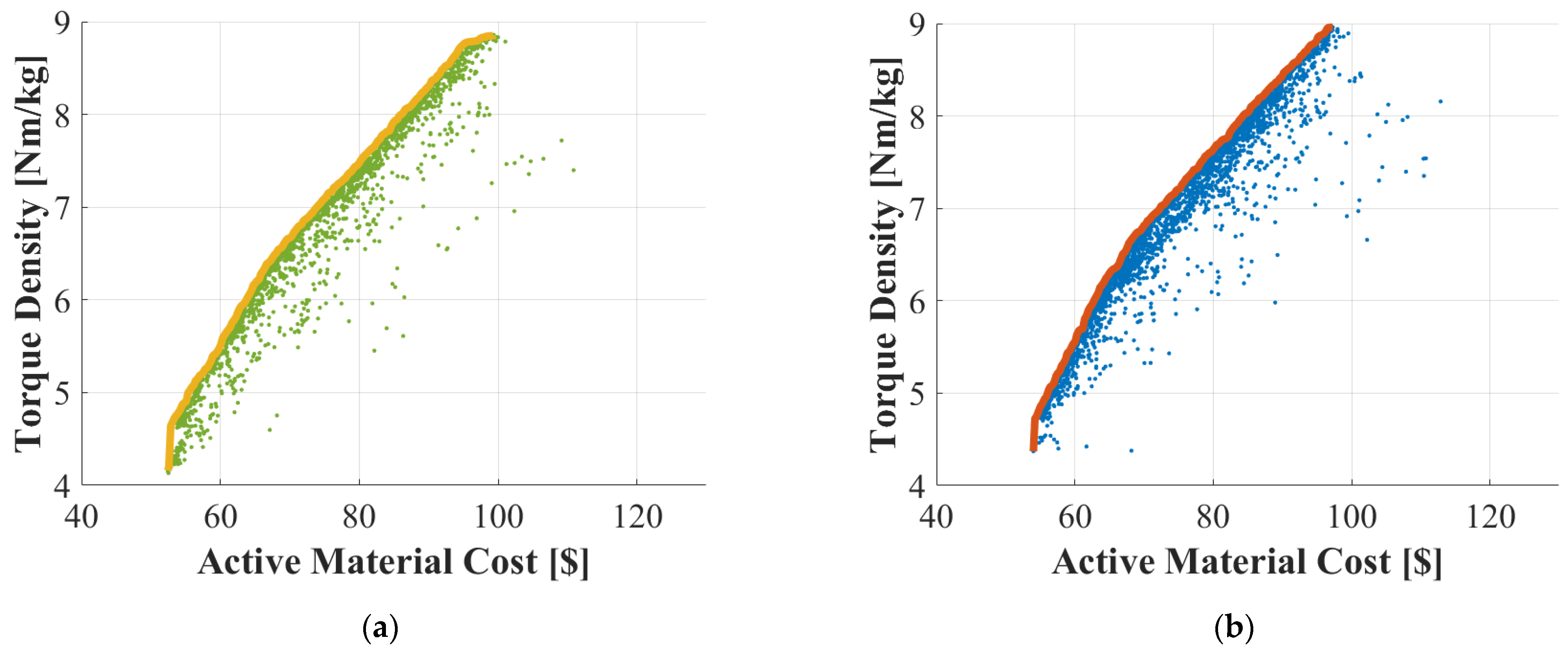

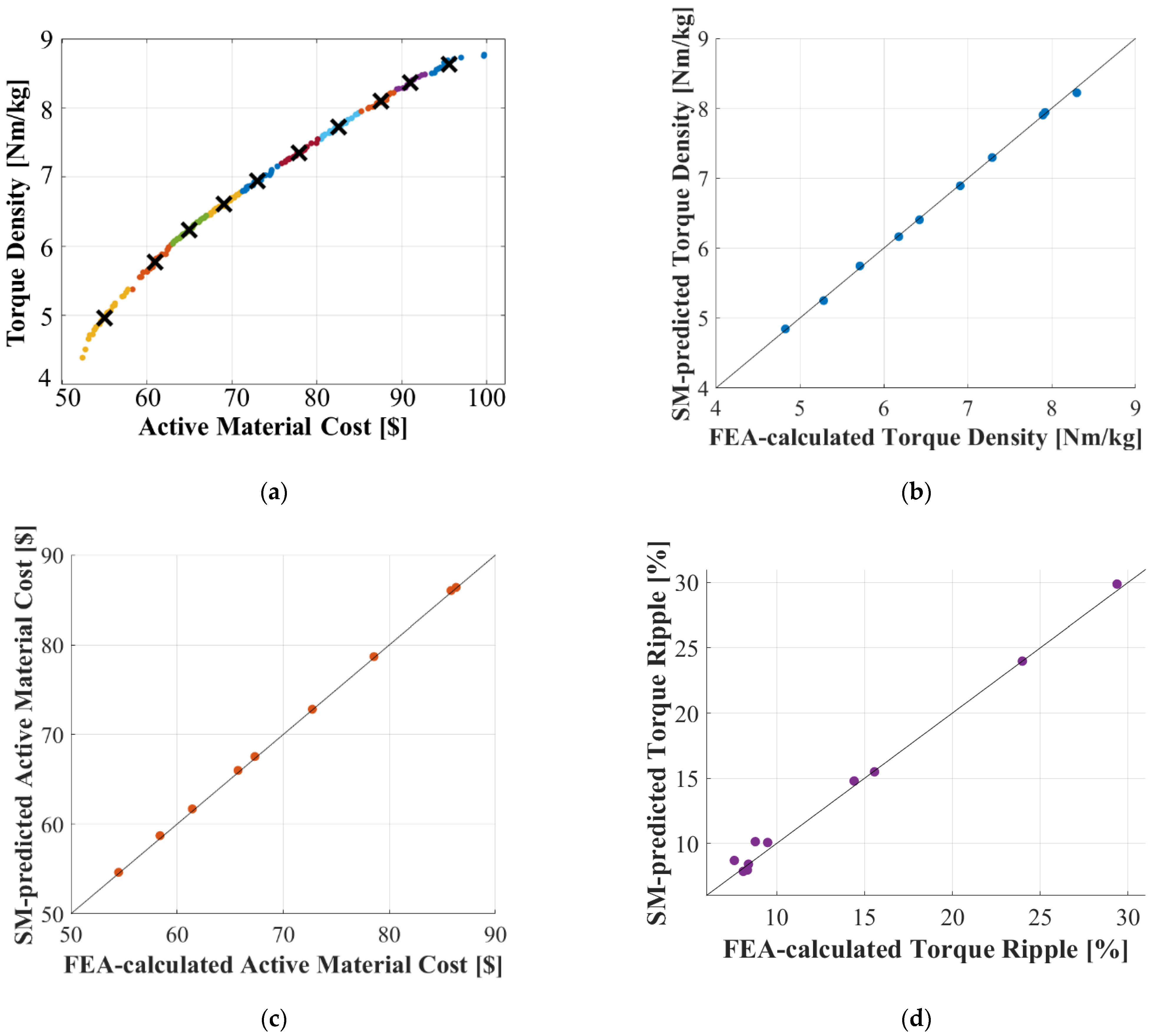

5.1. Performance Comparison between SMs

5.2. Comparative Analysis: FEA-Based vs. SM-Based Optimization Process

6. Conclusions and Future Works

- Comparison of three surrogate modeling techniques: Kriging, ANN, and SVR;

- Introduction of an automated HP tuning process based on Bayesian optimization;

- Development of robust three-step stopping criteria that determine when to switch from FEA-based to SM-based optimization;

- Detailed analysis of the approximation accuracy of the SMs for four objective functions, considering the number of FEA results used for SM training and the effect of HP tuning;

- Reduction of the calculation time required to verify the SM-predicted Pareto front by means of k-means clustering;

- Computational time savings of more than 90% compared with FEA-based optimization, with no loss of accuracy.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Perujo, A.; Ciuffo, B. The Introduction of Electric Vehicles in the Private Fleet: Potential Impact on the Electric Supply System and on the Environment. A Case Study for the Province of Milan, Italy. Energy Policy 2010, 38, 4549–4561.

- Choi, G.; Bramerdorfer, G. Comprehensive Design and Analysis of an Interior Permanent Magnet Synchronous Machine for Light-Duty Passenger EVs. IEEE Access 2022, 10, 819–831.

- Johansson, T.B.; van Dessel, M.; Belmans, R.; Geysen, W. Technique for Finding the Optimum Geometry of Electrostatic Micromotors. IEEE Trans. Ind. Appl. 1994, 30, 912–919.

- Bianchi, N.; Bolognani, S. Design Optimisation of Electric Motors by Genetic Algorithms. IEE Proc. Electr. Power Appl. 1998, 145, 475–483.

- Uler, G.F.; Mohammed, O.A.; Koh, C.-S. Design optimization of electrical machines using genetic algorithms. IEEE Trans. Magn. 1995, 31, 2008–2011.

- Gao, J.; Sun, H.; He, L. Optimization design of Switched Reluctance Motor based on Particle Swarm Optimization. In Proceedings of the 2011 International Conference on Electrical Machines and Systems (ICEMS), Beijing, China, 20–23 August 2011; pp. 1–5.

- Mutluer, M.; Bilgin, O. Design Optimization of PMSM by Particle Swarm Optimization and Genetic Algorithm. In Proceedings of the INISTA 2012—International Symposium on Innovations in Intelligent Systems and Applications, Trabzon, Turkey, 2–4 July 2012.

- Duan, Y.; Harley, R.G.; Habetler, T.G. Comparison of Particle Swarm Optimization and Genetic Algorithm in the design of permanent magnet motors. In Proceedings of the 2009 IEEE 6th International Power Electronics and Motion Control Conference, Wuhan, China, 17–20 May 2009; pp. 822–825.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

- Murata, T.; Ishibuchi, H.; Sanchis, J.; Blasco, X. MOGA: Multi-objective genetic algorithms. In Proceedings of the IEEE International Conference on Evolutionary Computation, Perth, WA, Australia, 29 November–1 December 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 1, pp. 289–294.

- Zhang, Q.; Li, H. MOEA/D: A multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731.

- Li, Y.; Lei, G.; Bramerdorfer, G.; Peng, S.; Sun, X.; Zhu, J. Machine Learning for Design Optimization of Electromagnetic Devices: Recent Developments and Future Directions. Appl. Sci. 2021, 11, 1627.

- Song, J.; Dong, F.; Zhao, J.; Wang, H.; He, Z.; Wang, L. An Efficient Multiobjective Design Optimization Method for a PMSLM Based on an Extreme Learning Machine. IEEE Trans. Ind. Electron. 2019, 66, 1001–1011.

- You, Y.-M. Multi-Objective Optimal Design of Permanent Magnet Synchronous Motor for Electric Vehicle Based on Deep Learning. Appl. Sci. 2020, 10, 482.

- Kim, S.; You, Y. Optimization of a Permanent Magnet Synchronous Motor for e-Mobility Using Metamodels. Appl. Sci. 2022, 12, 1625.

- Zăvoianu, A.-C.; Bramerdorfer, G.; Lughofer, E.; Silber, S.; Amrhein, W.; Klement, E.P. Hybridization of multi-objective evolutionary algorithms and artificial neural networks for optimizing the performance of electrical drives. Eng. Appl. Artif. Intell. 2013, 26, 1781–1794.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Jeyakumar, D.N.; Venkatesh, P.; Lee, K.Y. Application of multi objective evolutionary programming to combined economic emission dispatch problem. In Proceedings of the 2007 International Joint Conference on Neural Networks, Orlando, FL, USA, 12–17 August 2007; pp. 1162–1167.

- El-Nemr, M.; Afifi, M.; Rezk, H.; Ibrahim, M. Finite Element Based Overall Optimization of Switched Reluctance Motor Using Multi-Objective Genetic Algorithm (NSGA-II). Mathematics 2021, 9, 576.

- Jo, S.-T.; Kim, W.-H.; Lee, Y.-K.; Kim, Y.-J.; Choi, J.-Y. Multi-Objective Optimal Design of SPMSM for Electric Compressor Using Analytical Method and NSGA-II Algorithm. Energies 2022, 15, 7510.

- Pereira, L.A.; Haffner, S.; Nicol, G.; Dias, T.F. Multiobjective optimization of five-phase induction machines based on NSGA-II. IEEE Trans. Ind. Electron. 2017, 64, 9844–9853.

- Chekroun, S.; Abdelhadi, B.; Benoudjit, A. A New Approach Design Optimizer of Induction Motor Using Particle Swarm Algorithm. AMSE J. 2014, 87, 89–108.

- Shayanfar, H.; Gharehchopogh, F.S. Farmland fertility: A new metaheuristic algorithm for solving continuous optimization problems. Appl. Soft Comput. 2018, 71, 728–746.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Khodadadi, N.; Mirjalili, S. Mountain Gazelle Optimizer: A New Nature-Inspired Metaheuristic Algorithm for Global Optimization Problems. Adv. Eng. Softw. 2022, 174, 103282.

- Abdollahzadeh, B.; Soleimanian Gharehchopogh, F.; Mirjalili, S. Artificial Gorilla Troops Optimizer: A New Nature-Inspired Metaheuristic Algorithm for Global Optimization Problems. Int. J. Intell. Syst. 2021, 36, 5887–5958.

- Gharehchopogh, F.S. Quantum-inspired metaheuristic algorithms: Comprehensive survey and classification. Artif. Intell. Rev. 2022, 1, 1–65.

- Im, S.Y.; Lee, S.G.; Kim, D.M.; Xu, G.; Shin, S.Y.; Lim, M.S. Kriging SM-Based Design of an Ultra-High-Speed Surface-Mounted Permanent-Magnet Synchronous Motor Considering Stator Iron Loss and Rotor Eddy Current Loss. IEEE Trans. Magn. 2022, 58, 8101405.

- Cressie, N. The origins of kriging. Math. Geol. 1990, 22, 239–252.

- Zhao, L.; Wang, P.; Song, B.; Wang, X.; Dong, H. An efficient kriging modeling method for high-dimensional design problems based on maximal information coefficient. Struct. Multidiscip. Optim. 2019, 61, 39–57.

- Toal, D.J.J.; Bressloff, N.W.; Keane, A.J. Kriging hyperparameter tuning strategies. AIAA J. 2008, 46, 1240–1252.

- Sacks, J.; Welch, W.J.; Mitchell, T.J.; Wynn, H.P. Design and Analysis of Computer Experiments. Statist. Sci. 1989, 4, 409–435.

- Vann, J.; Guibal, D. Beyond ordinary kriging—An overview of non-linear estimation. In Beyond Ordinary Kriging: Non-Linear Geostatistical Methods in Practice; The Geostatistical Association of Australasia: Perth, Australia, 1998; pp. 6–25.

- Fuhg, J.N.; Fau, A.; Nackenhorst, U. State-of-the-art and Comparative Review of Adaptive Sampling Methods for Kriging. In Archives of Computational Methods in Engineering; Leibniz Universität Hannover, Université Paris-Saclay: Hannover, Germany; Paris, France, 2021; Volume 28.

- McCulloch, W.; Pitts, W. A Logical Calculus of Ideas Immanent in Nervous Activity. Bull. Math. Bio. Phys. 1943, 5, 115–133.

- Balázs, C.C. Approximation with Artificial Neural Networks. Master’s Thesis, Eötvös Loránd University, Budapest, Hungary, 2001.

- Smola, A.J.; Schölkopf, B. A tutorial on support vector regression. Statist. Comput. 2004, 14, 199–222.

- Drucker, H.; Burges, C.J.C.; Kaufman, L.; Smola, A.; Vapnik, V. Support vector regression machines. Adv. Neural Inf. Process. Syst. 1997, 9, 155–161.

- Zahedi, L.; Mohammadi, F.G.; Rezapour, S.; Ohland, M.W.; Amini, M.H. Search Algorithms for Automated Hyper-Parameter Tuning. arXiv 2021, arXiv:2104.14677.

- Bergstra, J.; Bengio, Y. Random Search for Hyper-parameter Optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

- Swinburne, R. Bayes’ Theorem. Rev. Philos. Fr. 2004, 194, 2825–2830.

- Wu, J.; Hao, X.C.; Xiong, Z.L.; Lei, H. Hyperparameter Optimization for Machine Learning Models Based on Bayesian Optimization. J. Electron. Sci. Technol. 2019, 17, 26–40.

- Cho, H.; Kim, Y.; Lee, E.; Choi, D.; Lee, Y.; Rhee, W. Basic Enhancement Strategies When Using Bayesian Optimization for Hyperparameter Tuning of Deep Neural Networks. IEEE Access 2020, 8, 52588–52608.

- Zeaiter, M.; Rutledge, D. Preprocessing Methods. In Comprehensive Chemometrics: Chemical and Biochemical Data Analysis; Brown, S.D., Tauler, R., Walczak, B., Eds.; Elsevier: Amsterdam, The Netherlands, 2009; pp. 121–231. ISBN 978-0-444-52701-1.

- Querin, O.M.; Victoria, M.; Alonso, C.; Ansola, R.; Martí, P. Topology Design Methods for Structural Optimization; Elsevier: Amsterdam, The Netherlands, 2017; ISBN 9780081009161.

- Bejarano, L.A.; Espitia, H.E.; Montenegro, C.E. Clustering Analysis for the Pareto Optimal Front in Multi-Objective Optimization. Computation 2022, 10, 37.

- Lloyd, S.P. Least Squares Quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137.

- Redmond, S.J.; Heneghan, C. A method for initialising the K-means clustering algorithm using kd-trees. Pattern Recognit. Lett. 2007, 28, 965–973.

- Han, S.H.; Jahns, T.M.; Soong, W.L.; Guven, M.K.; Illindala, M.S. Torque Ripple Reduction in Interior Permanent Magnet Synchronous Machines Using Stators With Odd Number of Slots Per Pole Pair. IEEE Trans. Energy Convers. 2010, 25, 118–127.

- Sanada, M.; Hiramoto, K.; Morimoto, S.; Takeda, Y. Torque Ripple Improvement for Synchronous Reluctance Motor Using an Asymmetric Flux Barrier Arrangement. IEEE Trans. Ind. Appl. 2004, 40, 1076–1082.

| Parameter | Value |
|---|---|
| Peak Current Density | 15 Arms/mm² |
| Slots/Poles | 12/10 |
| Peak Power/Peak Torque | 15 kW/70 Nm |
| Peak Current | 150 Arms |
| Series Turns | 17 |
| Rotor Diameter | 110 mm |
| Airgap Length | 0.75 mm |
| Magnet Remanence | 1.1 T at 100 °C |
| Magnet Grade | NMX-36EH (Hitachi) |
| Lamination Grade | 35JN300 (JFE) |

| Design Variables and Their Range |

| Type of SM | Parameter(s) | Hyperparameter(s) |
|---|---|---|
| Kriging | Correlation matrix, vector | |
| ANN | Weights, biases | Number of hidden layers, number of neurons, activation function |
| SVR | Support vectors | Regularization hyperparameter, kernel function |

| Objective Function | Kriging | ANN | SVR |
|---|---|---|---|
| Total Mass | 50 | 50 | 50 |
| Active Material Cost | 50 | 50 | 100 |
| Average Torque | 50 | 200 | 350 |
| Torque Ripple | 700 | 950 | 2500 |

| Component | Specification |
|---|---|
| Processing Unit | AMD Ryzen 9 5900X 12-Core Processor, 3.70 GHz |
| Operating System | Windows 11 Pro (64-bit) |
| Random Access Memory | 32 GB DDR4 |
| Data Storage Type | SSD SATA |
| Graphic Card | NVIDIA GeForce GTX 1650 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, M.; Choi, G.; Bramerdorfer, G.; Marth, E. Systematic Development of a Multi-Objective Design Optimization Process Based on a Surrogate-Assisted Evolutionary Algorithm for Electric Machine Applications. Energies 2023, 16, 392. https://doi.org/10.3390/en16010392
