Abstract

Electronic components are basic elements that are widely used in many industrial and technological fields. As technology develops, they are being produced in ever smaller sizes, which makes it difficult to distinguish them quickly. Classifying electronic components quickly and accurately will save labor and time in all areas where these elements are used. Recently, deep learning algorithms have become the preferred approach in product classification studies owing to their high accuracy and speed. In this paper, electronic components were classified with a deep learning method, and a new convolutional neural network (CNN) model is proposed. The model has six convolution layers, four pooling layers, two fully connected layers, a softmax layer, and a classification layer. The network was trained with a mini-batch size of 16, a maximum of 100 epochs, an initial learning rate of 1 × 10−3, and the SGDM (stochastic gradient descent with momentum) optimizer. The layers and training parameters of the CNN model were chosen through trials, selecting the values that gave the highest prediction accuracy. The same data were also classified with the pre-trained networks AlexNet, ShuffleNet, SqueezeNet, and GoogleNet, and their performance metrics were compared with those of the proposed CNN model. The proposed CNN model outperformed the other methods, achieving an accuracy of 98.99%.

1. Introduction

Image classification is the process of assigning images to predetermined labels or classes. Today, it is widely used in many areas, such as industry, traffic alerts, unmanned aerial vehicles, disease detection in healthcare, and plant classification in agriculture. These applications save both labor and time [1,2].

Electronic components are the elements that make up an electrical circuit. They are produced in different sizes and with different technologies, depending on where they are used, and they are widely used in industrial applications. With the development of technology, their size has gradually decreased. The present study addresses the classification of electronic components, which is important in many areas, such as the fields in which the components are used, industrial studies, and the recycling and production of large electronic devices [3,4].

Today, deep learning algorithms are widely used in classification studies, and among them, convolutional neural networks (CNNs) are preferred because of their high performance [5,6]. The dataset used to build a CNN model differs according to the model structure and the parameters used.

Machine learning methods are widely used in data mining, image recognition, and classification studies [7,8]. They include algorithms that learn from labeled data and make decisions based on predictions. Deep learning is a sub-branch of machine learning, and deep learning methods achieve high accuracy in image classification studies. Some of the classification studies carried out in this area are summarized in Table 1.

Table 1.

Literature search of image classification studies using deep learning methods.

The standard datasets used in these studies can be summarized as follows:

The CIFAR-10 dataset (Canadian Institute for Advanced Research, 10 classes) and the CIFAR-100 dataset (Canadian Institute for Advanced Research, 100 classes) are subsets of the Tiny Images dataset. The MNIST database (Modified National Institute of Standards and Technology database) is a large collection of handwritten digits. The Caltech101 dataset contains images from 101 object categories (for example, “helicopter”, “elephant”, and “chair”) and a background category of images that do not belong to any of the 101 object categories [23]. In the present study, the proposed CNN model was compared with the pre-trained networks in the MATLAB R2021a library for the classification of electronic components.

The contributions of this paper can be summarized as follows:

- A new CNN model is proposed for classification studies.

- The performance of the proposed model is compared with that of pre-trained deep learning models.

- The proposed model is fast and reliable and shows high performance.

- The proposed CNN model has fewer learnable parameters than other models, namely, AlexNet, ShuffleNet, SqueezeNet, and ResNet, which shortens the analysis time. This time saving is particularly significant when working with very large datasets.

The second section of this study describes the deep learning method, preprocessing and data augmentation, the dataset used, convolutional neural networks, performance criteria, the pre-trained CNN structures, and the proposed CNN structure. The third section presents the experimental results together with their analysis and interpretation. The fourth section, the Discussion, compares the performance of the proposed model with that of several studies in the literature. The last section summarizes the conclusions of the study and offers suggestions to guide future studies.

2. Materials and Methods

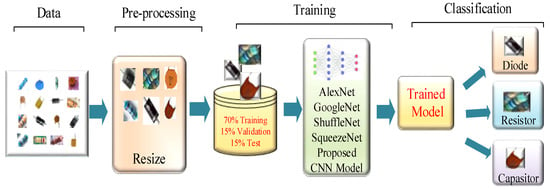

In this study, classification was carried out on an electronic component dataset consisting of three classes. The pre-trained CNN structures AlexNet, ShuffleNet, SqueezeNet, and GoogleNet, as well as the proposed CNN model, were used for classification. The block diagram of the deep learning method used in the study is shown in Figure 1.

Figure 1.

Block diagram of the proposed deep learning method.

The images in the dataset were resized to the input size of each CNN model. The dataset was then randomly divided into 70% training, 15% validation, and 15% test data. Finally, the trained CNN models were used to classify the test data.
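A minimal Python sketch of the 70/15/15 division is given below. It assumes that the split is stratified, that is, applied separately to each class so that all three subsets keep the class proportions; the text states only that the division was random, and the function name `stratified_split` and the fixed seed are illustrative.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_frac=0.70, val_frac=0.15, seed=0):
    """Split sample indices into train/validation/test lists, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)                   # random order within each class
        n_train = round(train_frac * len(indices))
        n_val = round(val_frac * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]      # the remainder becomes test data
    return train, val, test


# Balanced label list (1320 images per class, see Section 2.1).
labels = ["capacitor"] * 1320 + ["diode"] * 1320 + ["resistor"] * 1320
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))
```

With 1320 images per class, each class contributes 924 training, 198 validation, and 198 test images.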

2.1. Proposed Deep Forecasting Method

The method proposed in this study and the methodology applied to the other models are shown in Figure 1. First, the dataset was augmented. Then, in the proposed method, the images were resized from 1600 × 1200 to the input size of 227 × 227. The network was trained with an equal number of images from each class: the capacitor class, the smallest, contains 1320 images, so 1320 images were also randomly selected from each of the resistor and diode classes. Network training was carried out with the newly proposed CNN model, using 70% of the dataset for training, 15% for validation, and 15% for testing. The proposed CNN structure and its parameters are explained in detail in Section 2.7. The trained CNN model was evaluated on test data that the network had not seen before. Many trials were run to find the CNN configuration with the highest performance, and the most appropriate values were selected.
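Balancing the classes by random undersampling can be sketched as follows. The class counts are those of Section 2.3; the helper `undersample` is hypothetical, and drawing the 1320 images from the larger classes without replacement is an assumption.

```python
import random
from collections import Counter, defaultdict


def undersample(labels, seed=0):
    """Keep the same number of randomly chosen samples from every class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    n_min = min(len(indices) for indices in by_class.values())  # smallest class size
    kept = []
    for indices in by_class.values():
        kept += rng.sample(indices, n_min)   # random draw without replacement
    return sorted(kept)


# Class sizes after augmentation (Section 2.3).
labels = ["capacitor"] * 1320 + ["diode"] * 1624 + ["resistor"] * 2388
kept = undersample(labels)
print(Counter(labels[i] for i in kept))
```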

2.2. Preprocessing and Data Augmentation

In some cases, the dataset may be too small for deep CNNs to achieve good results. To build the best possible deep CNN model from limited data and to increase model performance, data augmentation is required. Augmentation artificially generates training images using one processing method or a combination of several, such as flipping, rotation, blurring, relighting, and random cropping [24].

Because the images had varying sizes, they were resized to 224 × 224 pixels, and the mean value of each channel of the processed image (R, G, and B, or grayscale) was subtracted from it. Augmentation was applied to the original dataset of 2666 images, producing an augmented dataset of 5332 images. All models were trained on this dataset augmented by mirroring.
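The two preprocessing steps above can be sketched in plain Python, with an image stored as nested lists of shape height × width × channels. Two assumptions are made: that mirroring means a horizontal flip, and that the channel mean is computed per image (the text does not state whether a per-image or a training-set mean was used). All function names are illustrative.

```python
def mirror(image):
    """Horizontal flip: reverse the pixel order in every row (H x W x C nested lists)."""
    return [row[::-1] for row in image]


def subtract_channel_mean(image):
    """Subtract the mean of each channel (e.g. R, G, B), computed over all pixels of the image."""
    n_channels = len(image[0][0])
    pixels = [px for row in image for px in row]
    means = [sum(px[c] for px in pixels) / len(pixels) for c in range(n_channels)]
    return [[[px[c] - means[c] for c in range(n_channels)] for px in row] for row in image]


def augment_with_mirroring(images):
    """Return the originals plus one mirrored copy of each, doubling the dataset."""
    return images + [mirror(img) for img in images]


img = [[[10, 20, 30], [50, 60, 70]],
       [[90, 100, 110], [130, 140, 150]]]        # tiny 2 x 2 RGB example
print(mirror(img))
print(subtract_channel_mean(img))
print(len(augment_with_mirroring([img] * 2666)))  # 2666 + 2666 mirrored copies = 5332
```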

2.3. Dataset

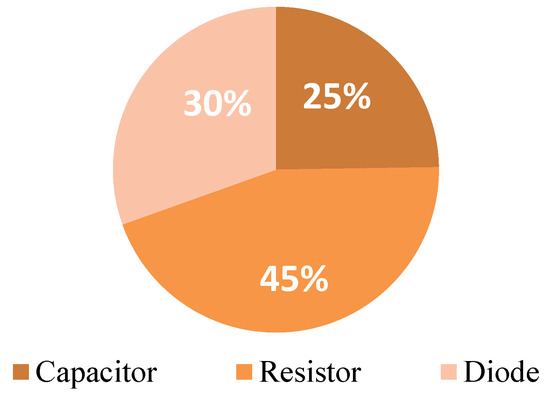

A total of 2666 electronic component images from an open-access database were used. Data augmentation was carried out using mirroring, one of the most widely used augmentation methods. After augmentation, the dataset contained 5332 images in total, distributed over three classes: 1320 capacitors, 1624 diodes, and 2388 resistors. The class distribution of the dataset is given in Figure 2 [23].

Figure 2.

Distribution of dataset class data.

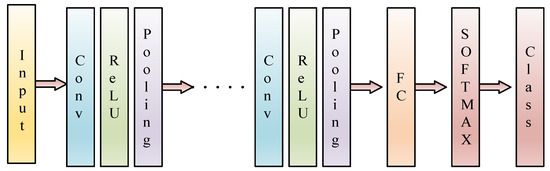

2.4. Convolutional Neural Networks (CNNs)

Convolutional neural networks are a preferred deep learning architecture in many studies because of their high performance [10]. A basic CNN structure consists of convolution, activation, pooling, and fully connected layers, and different models have been developed from various combinations of these layers. A simple CNN structure is shown in Figure 3 [25,26].

Figure 3.

General structure of convolutional neural network.

The convolution layer is the first layer in which the image is examined. Here, a filter smaller than the original image slides over the image and extracts its features. The ReLU layer applies the nonlinear function shown in Equation (1), which sets negative values in the CNN structure to zero and passes positive values unchanged [27].

$$f(x) = \max(0, x) \tag{1}$$

The pooling layer reduces the spatial size of the feature maps. In doing so, it discards less relevant detail and retains the most important features. The two common pooling techniques are maximum pooling and average pooling: maximum pooling takes the maximum value within the filter window, and average pooling takes the average value [10].
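To make the convolution, ReLU, and pooling operations concrete, the sketch below implements them for a single-channel feature map in plain Python. As in most CNN libraries, the "convolution" is computed as a cross-correlation without flipping the kernel; no padding is used, and the 5 × 5 input and 2 × 2 kernel are arbitrary illustrative values, not the filters of the proposed model.

```python
def conv2d(image, kernel, stride=1):
    """'Valid' 2-D convolution (cross-correlation, no kernel flip, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum the products.
            acc = sum(image[i * stride + di][j * stride + dj] * kernel[di][dj]
                      for di in range(kh) for dj in range(kw))
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Equation (1) applied element-wise: negative values become 0."""
    return [[max(0, v) for v in row] for row in feature_map]


def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Max pooling (mode="max") or average pooling (mode="average") over size x size windows."""
    out = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window) if mode == "max" else sum(window) / len(window))
        out.append(row)
    return out


image = [[1, 2, 0, 1, 3],
         [0, 1, 3, 2, 1],
         [2, 0, 1, 0, 2],
         [1, 3, 2, 1, 0],
         [0, 1, 0, 2, 1]]
kernel = [[1, 0],
          [0, -1]]            # illustrative 2 x 2 filter
fm = conv2d(image, kernel)    # 4 x 4 feature map
print(pool2d(relu(fm)))                  # [[0, 3], [3, 0]]
print(pool2d(relu(fm), mode="average"))  # [[0.0, 0.75], [0.75, 0.0]]
```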

In the fully connected (FC) layer, the feature maps produced by the convolution and pooling layers are flattened into a vector, and every element of this vector is connected to every neuron of the layer [28].
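The sketch below shows how pooled feature maps are flattened and passed through a fully connected layer, followed by the softmax function that the proposed model applies before its classification layer. The weights and the three-output layer size (one output per class) are illustrative assumptions, not trained parameters.

```python
import math


def flatten(feature_maps):
    """Flatten a stack of 2-D feature maps (channels x height x width) into one vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def fully_connected(x, weights, biases):
    """Output k is sum_i weights[k][i] * x[i] + biases[k]: every input feeds every output."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b for w_row, b in zip(weights, biases)]


def softmax(z):
    """Turn scores into class probabilities that sum to 1."""
    m = max(z)                                  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


maps = [[[0, 3], [3, 0]],
        [[1, 0], [0, 1]]]                       # two pooled 2 x 2 feature maps
x = flatten(maps)                               # vector of length 8
weights = [[0.1] * 8, [0.0] * 8, [-0.1] * 8]    # illustrative weights, one row per class
biases = [0.0, 0.0, 0.0]
logits = fully_connected(x, weights, biases)
probs = softmax(logits)
print(x, probs)
```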

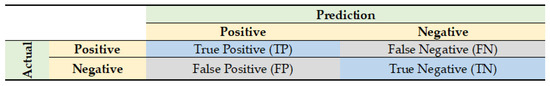

2.5. Performance Metrics

The success of a classification model depends on how many samples it assigns to the correct class and how many to a wrong class. For the test data, these counts are summarized in a confusion matrix, from which the performance criteria of the model are derived. An example of a confusion matrix is given in Figure 4. The definitions and mathematical equations of the performance criteria are summarized in Table 2 [29].

Figure 4.

Confusion matrix [17].

Table 2.

Basic concepts and equations used to evaluate model performance [28].

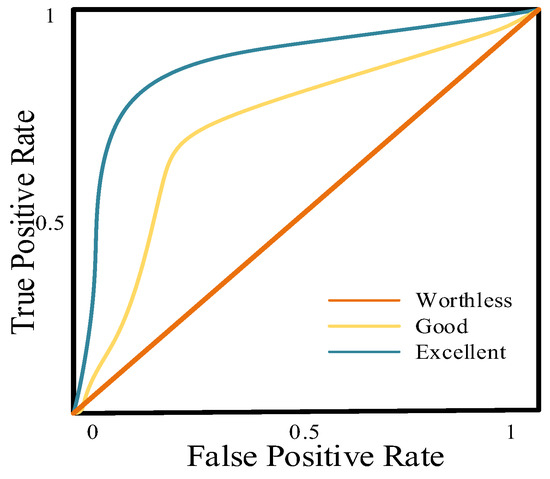

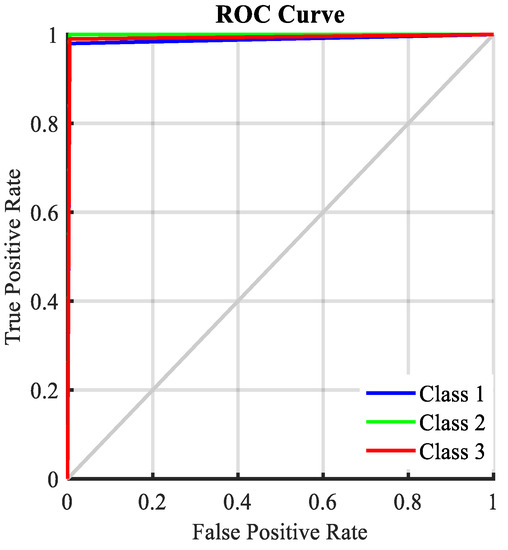
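Because the contents of Table 2 are not reproduced here, the sketch below assumes the standard definitions of the metrics, computed for each class in a one-versus-rest manner from a multi-class confusion matrix and then averaged over the classes (macro-averaging). The averaging scheme and the 3 × 3 example matrix are assumptions for illustration only.

```python
def class_counts(cm, k):
    """TP, FP, FN, TN for class k, where cm[i][j] counts class-i samples predicted as class j."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                       # class k predicted as another class
    fp = sum(row[k] for row in cm) - tp        # other classes predicted as class k
    tn = total - tp - fn - fp
    return tp, fp, fn, tn


def macro_metrics(cm):
    """Accuracy plus macro-averaged sensitivity, specificity, precision, and F-score.

    Assumes every class occurs in the data and is predicted at least once (no zero denominators).
    """
    n = len(cm)
    per_class = {"sensitivity": [], "specificity": [], "precision": [], "f_score": []}
    for k in range(n):
        tp, fp, fn, tn = class_counts(cm, k)
        sensitivity = tp / (tp + fn)           # recall, true positive rate
        precision = tp / (tp + fp)
        per_class["sensitivity"].append(sensitivity)
        per_class["specificity"].append(tn / (tn + fp))
        per_class["precision"].append(precision)
        per_class["f_score"].append(2 * precision * sensitivity / (precision + sensitivity))
    result = {name: sum(vals) / n for name, vals in per_class.items()}
    result["accuracy"] = sum(cm[k][k] for k in range(n)) / sum(sum(row) for row in cm)
    return result


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted class).
cm = [[8, 1, 1],
      [0, 9, 1],
      [1, 0, 9]]
print(macro_metrics(cm))
```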

One of the performance measures used in classification studies is the receiver operating characteristic (ROC) curve. It is one of the most widely used measures for assessing the effectiveness of machine learning algorithms, particularly when datasets are unbalanced, and it shows how well the model separates the classes. The ROC curve is obtained by varying the decision threshold of the classifier and plotting, for each class, the True Positive Rate (TPR) on the y-axis against the False Positive Rate (FPR) on the x-axis. A related measure is the AUC, the area under the ROC curve: the larger the area, the better the model distinguishes the given classes, and the ideal AUC value is 1. An example ROC curve is given in Figure 5 [30].
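For a single class treated as positive (one versus rest), the ROC curve and its AUC can be computed from the model's scores as sketched below: the scores are sorted in decreasing order, the threshold is lowered step by step, and the area is accumulated with the trapezoidal rule. The function names, labels, and scores are made up for illustration.

```python
def roc_points(labels, scores):
    """ROC points (FPR, TPR) for binary labels (1 = positive) and real-valued scores."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos            # assumes both classes are present
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Lower the threshold past every sample that has this exact score.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under a piecewise-linear curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(auc(roc_points(labels, scores)))   # 8/9, about 0.889
```

A perfectly separating classifier, in which every positive scores above every negative, yields an AUC of exactly 1.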

Figure 5.

ROC curve [30].

2.6. Pre-Trained CNN Models

The pre-trained CNNs have been trained on more than one million images from the ImageNet database. They can be retrained on a new dataset, that is, adapted and fine-tuned for a specific classification task. The general features of the CNN models considered in the present study are summarized in Table 3.

Table 3.

General characteristics of pre-trained CNN networks [20,22,31,32].

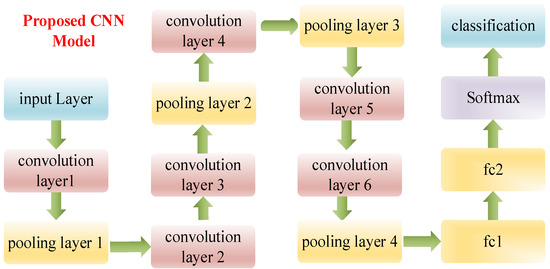

2.7. The Proposed CNN Model

The proposed CNN model for classification consists of thirteen layers: six convolution layers, four pooling layers, two fully connected layers, and an output layer. The filter size was 3 × 3 in the first, second, and third convolution layers; 5 × 5 in the fourth; and 9 × 9 in the fifth and sixth. The filter size of all pooling layers was 2 × 2. The fully connected layers FC1 and FC2 had 750 and 1000 neurons, respectively. These parameters were chosen because they gave the best accuracy in the experiments. The structure of the proposed CNN model is summarized in Figure 6, and the dimensional parameters of its layers are given in Table 4.

Figure 6.

The proposed CNN model architecture.

Table 4.

The proposed CNN model layers.

The first column of Table 4 lists the convolution, pooling, and fully connected layers of the proposed CNN model. Input size: because images are represented as matrices, the input size is the size of the matrix. Filter size: the size of the filters used to map the features of the image. Stride: the number of pixels by which the filter is shifted at each step. Number of filters: also referred to as the number of feature maps; using a different number of filters in each layer helps the network capture different features of the image.
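The relationships among input size, filter size, stride, and number of filters can be expressed with the usual output-size formula floor((W − F + 2P) / S) + 1 and with parameter counts for convolution and fully connected layers. Since the strides, padding, and filter counts of Table 4 are not reproduced here, the stride of 1, zero padding, and 16 filters in the example are hypothetical; only the 227 × 227 input, the 3 × 3 and 2 × 2 filter sizes, and the 750- and 1000-neuron FC layers come from the text, and the FC count assumes FC2 is fed directly by FC1.

```python
def output_size(w, f, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer: floor((W - F + 2P) / S) + 1."""
    return (w - f + 2 * padding) // stride + 1


def conv_params(f, in_channels, n_filters):
    """Learnable parameters of a convolution layer: an F x F x C_in kernel plus a bias per filter."""
    return (f * f * in_channels + 1) * n_filters


def fc_params(n_in, n_out):
    """Learnable parameters of a fully connected layer: one weight per input-output pair plus biases."""
    return (n_in + 1) * n_out


size = output_size(227, 3)               # 3 x 3 convolution, stride 1, no padding (assumed)
size = output_size(size, 2, stride=2)    # 2 x 2 pooling with stride 2 (stride assumed)
print(size)                              # 112
print(conv_params(3, 3, 16))             # 16 filters on an RGB input (hypothetical): 448
print(fc_params(750, 1000))              # FC1 (750) -> FC2 (1000): 751000
```

Counts of this kind are what a comparison of learnable parameters between models, such as the one in the contributions list, rests on.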

3. Results

In the present study, electronic components were classified using a deep learning method. The database contained 5332 images. The original images were 1600 × 1200 pixels and were reduced to 224 × 224 or 227 × 227, depending on the input size of each deep learning model. Before classification, the dataset was divided into three parts: 70% of the images for training, 15% for validation, and 15% for testing. The networks were trained on the training set and tested on the test set.

During training, model performance was measured at the end of each training session. If the test set were used for this measurement in every iteration, the model and its hyperparameters would gradually be fitted to the test set, a situation known as overfitting to the test set. To prevent this, a separate validation set, which was not used for training, was used to monitor performance after each training session and to adjust the hyperparameters. Only after the final model had been selected on the basis of the validation results was it evaluated on the test set, which gives a more reliable measure of its effectiveness. Without the validation set, the test set could have been overfitted.
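The role of the validation set can be illustrated with a toy example in which a one-parameter threshold classifier stands in for the CNN and the candidate thresholds stand in for hyperparameters. The point is the order of operations: the setting is chosen on the validation set alone, and the test set is used only once, at the end. All names and data here are hypothetical.

```python
def accuracy(threshold, data):
    """Accuracy of a toy classifier that predicts class 1 when x >= threshold."""
    return sum((x >= threshold) == (y == 1) for x, y in data) / len(data)


def select_then_test(candidates, val_data, test_data):
    """Pick the setting with the best validation accuracy, then score it once on the test set."""
    best = max(candidates, key=lambda t: accuracy(t, val_data))   # test data never used here
    return best, accuracy(best, test_data)


# Hypothetical (feature, label) pairs.
val_data = [(0.1, 0), (0.3, 0), (0.45, 1), (0.6, 1), (0.8, 1)]
test_data = [(0.2, 0), (0.35, 0), (0.5, 1), (0.9, 1)]
best, test_acc = select_then_test([0.25, 0.4, 0.7], val_data, test_data)
print(best, test_acc)    # 0.4 1.0
```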

The training and test images were randomly selected. The experiments were run on a computer with an Intel Core i7-10750H CPU (2.60 GHz), an NVIDIA Quadro P620 GPU, and 16 GB of RAM, using the deep learning algorithms available in MATLAB R2020a. Example images used for classification are shown in Figure 7. AlexNet, GoogleNet, ShuffleNet, SqueezeNet, and the proposed CNN model were used in the classification study, and the parameters used for training and testing the CNN models are given in Table 5.

Figure 7.

Example images from the classified dataset [23].

Table 5.

Training parameters used in CNN models.

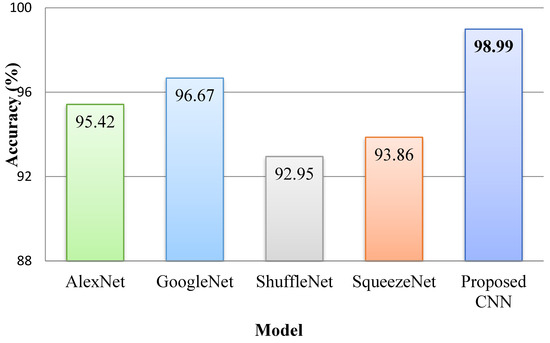
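The SGDM optimizer updates each weight using a velocity term that accumulates past gradients. A minimal sketch of one update, applied to a toy quadratic objective, is given below. The learning rate of 1 × 10−3 is taken from the training parameters stated in the Abstract; the momentum coefficient of 0.9 is an assumption, since it is not stated, and in real training the gradient would be averaged over a mini-batch of 16 images rather than computed analytically.

```python
def sgdm_step(theta, velocity, grad, lr=1e-3, momentum=0.9):
    """One SGD-with-momentum update: v <- momentum * v - lr * grad, then theta <- theta + v."""
    velocity = [momentum * v - lr * g for v, g in zip(velocity, grad)]
    theta = [t + v for t, v in zip(theta, velocity)]
    return theta, velocity


# Toy objective E(theta) = 0.5 * sum(theta_i ** 2); its gradient is theta itself.
theta, velocity = [1.0, -2.0], [0.0, 0.0]
for _ in range(5000):
    theta, velocity = sgdm_step(theta, velocity, grad=theta)
print(theta)   # both values are now very close to the minimum at 0
```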

The accuracy rates of the trained CNN models were 95.42% for AlexNet, 96.67% for GoogleNet, 92.95% for ShuffleNet, 93.86% for SqueezeNet, and 98.99% for the proposed model. Sensitivity is as important a criterion as accuracy. In terms of sensitivity, ShuffleNet showed the lowest performance with 0.938, followed by AlexNet with 0.947, SqueezeNet with 0.949, GoogleNet with 0.970, and the proposed CNN model with 0.986.

Table 6 also lists the precision, specificity, and F-score of each model. In light of these results, the proposed CNN model achieved a higher success rate than all the other CNN models.

Table 6.

CNN models’ performance results.

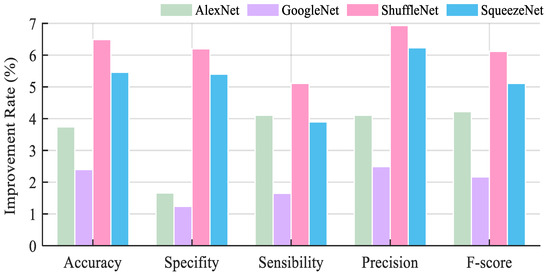

The performance metrics of the proposed method and the pre-trained models were then compared by calculating the percentage by which the proposed model improved on each of the other models.

For example, the accuracy of the proposed method is 98.99%, whereas that of AlexNet is 95.42%. Relative to AlexNet's value, this is an improvement of (98.99 − 95.42)/95.42 × 100 ≈ 3.74%. The improvement rates of the proposed method's other performance metrics were calculated in the same way; they are given in Table 7 and visualized in Figure 8.
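The improvement rates are relative differences, (proposed − baseline) / baseline × 100, rather than differences in percentage points. The short sketch below reproduces the accuracy comparison using the values reported in Section 3; the function name `improvement` is illustrative.

```python
def improvement(proposed, baseline):
    """Relative improvement (%) of the proposed model over a baseline value of the same metric."""
    return (proposed - baseline) / baseline * 100


# Accuracy values (%) reported in Section 3.
baselines = {"AlexNet": 95.42, "GoogleNet": 96.67, "ShuffleNet": 92.95, "SqueezeNet": 93.86}
for name, acc in baselines.items():
    print(f"{name}: {improvement(98.99, acc):.2f}%")
```

Rounded to two decimals, this yields 3.74%, 2.40%, 6.50%, and 5.47%; the last two differ from the reported 6.49% and 5.46% only in the final digit, which is consistent with truncation rather than rounding.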

Table 7.

Improvement percentages of performance criteria metrics of the proposed model.

Figure 8.

Improvement rate (%) of the proposed model in performance criteria.

In terms of accuracy, the proposed method improved on the performance of AlexNet, GoogleNet, ShuffleNet, and SqueezeNet by 3.74%, 2.40%, 6.49%, and 5.46%, respectively. In terms of specificity, the improvements ranged from 1.24% to 6.2%: 1.66%, 1.24%, 6.2%, and 5.4% compared with AlexNet, GoogleNet, ShuffleNet, and SqueezeNet, respectively. In terms of sensitivity, the improvements were 4.11%, 1.65%, 5.11%, and 3.9%; in terms of precision, 4.11%, 2.49%, 6.93%, and 6.23%; and in terms of F-score, 4.22%, 2.17%, 6.12%, and 5.11%, in the same order. The confusion matrix of the proposed model is shown in Figure 9.

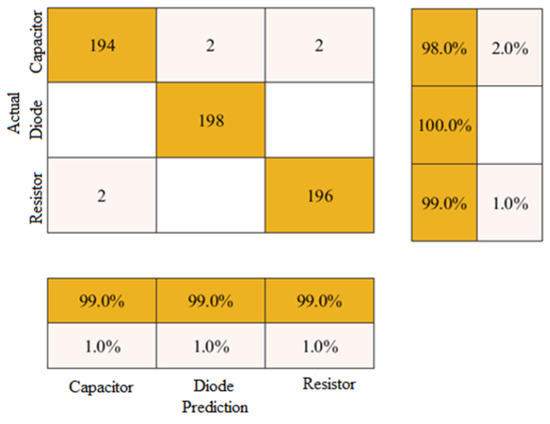

Figure 9.

Confusion matrix of the proposed CNN model.

The confusion matrix of the proposed CNN model shows that it correctly predicted about 99% of the capacitors, diodes, and resistors in the test data. It assigned 194 of the capacitors in the test data to the correct class and misclassified 2 of them. In the other classes, two images were assigned to a wrong class. The ROC curves in Figure 10 indicate that the model classifies nearly perfectly. A comparison of the classification accuracy rates of the pre-trained networks AlexNet, GoogleNet, ShuffleNet, and SqueezeNet and of the proposed CNN method is given in Figure 11.

Figure 10.

ROC curve for each class.

Figure 11.

Classification accuracy rates obtained with CNN models.

4. Discussion

This section compares the present study with previous classification studies. Table 8 lists, for each study, the dataset used, the number of samples, the number of classes, and the classification success rate.

Table 8.

CNN models performance results.

The studies selected in Table 8 use models and datasets with different characteristics. The CIFAR-10 dataset contains 60,000 images in 10 classes, with 6000 images per class (5000 training and 1000 test images).

The CIFAR-100 dataset consists of 60,000 color images of size 32 × 32 in 100 classes, with 600 images per class. The MNIST database has a training set of 60,000 examples and a test set of 10,000 examples. The Fashion-MNIST dataset consists of 10 categories with 7000 images each, with 60,000 images in the training set and 10,000 images in the test set. In ref. [34], red blood cell images were used for disease classification: 400 infected-cell images were increased to 4000 by augmentation, and the 1000 normal-cell images were also augmented, giving 10,000 images in total.

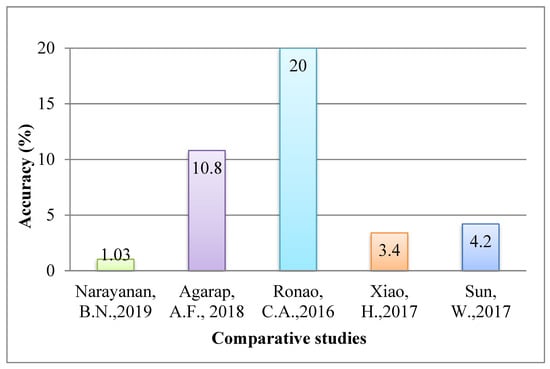

The success rates reported in refs. [21,22,32,33,34] were 97.98%, 89.35%, 82.43%, 95.75%, and 95%, respectively, while the accuracy obtained in the present study was 98.99%. The present study was compared with the studies listed in Table 9, and the resulting improvements in accuracy are given in Figure 12.

Table 9.

The rate of improvement in accuracy (%).

Figure 12.

Percentages of improvement in accuracy rates.

As can be seen in Figure 12, the proposed method improved accuracy by 1.03%, 10.8%, 20.1%, 3.4%, and 4.2% relative to refs. [21,22,32,33,34], respectively.

5. Conclusions

In this study, several deep learning-based approaches were used to classify electronic components, and their classification performances were compared. Only mirroring was used among the augmentation methods. In the proposed method, the data were otherwise used in their original form, and the focus was instead on appropriate fine-tuning strategies. The application of CNN-based architectures to classification was observed to give effective results, and the resulting architectures achieved high accuracy. For the classification of electronic components, the following accuracy values were obtained: 95.42% for AlexNet, 96.67% for GoogleNet, 92.95% for ShuffleNet, 93.86% for SqueezeNet, and 98.99% for the proposed CNN model. Future work will further develop the proposed CNN model, for example by extending it with residual connections, and will aim to reduce its number of parameters. In addition, the running time of the model on large datasets will be measured, and the model will be improved to achieve high performance in a short time.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Yuan, J.; Hou, X.; Xiao, Y.; Cao, D.; Guan, W.; Nie, L. Multi-criteria active deep learning for image classification. Knowl.-Based Syst. 2019, 172, 86–94. [Google Scholar] [CrossRef]

- Cetinic, E.; Lipic, T.; Grgic, S. Fine-tuning Convolutional Neural Networks for fine art classification. Expert Syst. Appl. 2018, 114, 107–118. [Google Scholar] [CrossRef]

- dos Santos, M.M.; da S. Filho, A.G.; dos Santos, W.P. Deep convolutional extreme learning machines: Filters combination and error model validation. Neurocomputing 2019, 329, 359–369. [Google Scholar] [CrossRef]

- Liang, Z. Automatic Image Recognition of Rapid Malaria Emergency Diagnosis: A Deep Neural Network Approach. Master’s Thesis, York University, Toronto, ON, Canada, 2017. Available online: https://yorkspace.library.yorku.ca/xmlui/handle/10315/34319 (accessed on 10 January 2022).

- Han, H.; Li, Y.; Zhu, X. Convolutional neural network learning for generic data classification. Inf. Sci. 2019, 477, 448–465. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Hinton, G. Convolutional deep belief networks on cifar-10. Unpubl. Manuscr. 2010, 40, 1–9. Available online: https://www.cs.toronto.edu/~kriz/conv-cifar10-aug2010.pdf (accessed on 10 January 2022).

- Rasekh, M.; Karami, H.; Wilson, A.D.; Gancarz, M. Performance Analysis of MAU-9 Electronic-Nose MOS Sensor Array Components and ANN Classification Methods for Discrimination of Herb and Fruit Essential Oils. Chemosensors 2021, 9, 243. [Google Scholar] [CrossRef]

- Roy, S.S.; Rodrigues, N.; Taguchi, Y.-H. Incremental Dilations Using CNN for Brain Tumor Classification. Appl. Sci. 2020, 10, 4915. [Google Scholar] [CrossRef]

- Chapelle, O.; Haffner, P.; Vapnik, V.N. Support vector machines for histogram-based image classification. IEEE Trans. Neural Netw. 1999, 10, 1055–1064. [Google Scholar] [CrossRef]

- Marée, R.; Geurts, P.; Piater, J.; Wehenkel, L. A generic approach for image classification based on decision tree ensembles and local sub-windows. In Proceedings of the 6th Asian Conference on Computer Vision, Jeju, Korea, 27–30 January 2004; pp. 860–865. Available online: https://www.semanticscholar.org/paper/A-generic-approach-for-image-classification-based-Mar%C3%A9e-Geurts/134bb1cfe1cd0d37ef1cf091105a4e5a37241898 (accessed on 24 February 2022).

- Milgram, J.; Sabourin, R.; Cheriet, M. Combining model-based and discriminative approaches in a modular two-stage classification system: Application to isolated handwritten digit recognition. In Progress in Computer Vision and Image Analysis; World Scientific, 2010; pp. 181–205. Available online: https://dl.acm.org/doi/10.1145/2598394.2602287 (accessed on 5 December 2021).

- Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random Erasing Data Augmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13001–13008. [Google Scholar]

- David, O.E.; Greental, I. Genetic algorithms for evolving deep neural networks. In Proceedings of the Companion Publication of the 2014 Annual Conference on Genetic and Evolutionary Computation, Vancouver, BC, Canada, 12–16 July 2014; pp. 1451–1452. [Google Scholar]

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional Architecture for Fast Feature Embedding. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 675–678. [Google Scholar]

- Suganuma, M.; Shirakawa, S.; Nagao, T. A genetic programming approach to designing convolutional neural network architectures. In Proceedings of the Genetic and Evolutionary Computation Conference, Berlin, Germany, 15–19 July 2017; pp. 497–504. [Google Scholar]

- Bosch, A.; Zisserman, A.; Munoz, X. Image Classification using Random Forests and Ferns. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 60, 84–90. [Google Scholar] [CrossRef]

- Ciresan, D.C.; Meier, U.; Masci, J.; Gambardella, L.M.; Schmidhuber, J. Flexible, high performance convolutional neural networks for image classification. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011. [Google Scholar]

- McDonnell, M.D.; Vladusich, T. Enhanced image classification with a fast-learning shallow convolutional neural network. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–7. [Google Scholar]

- Springenberg, J.T.; Dosovitskiy, A.; Brox, T.; Riedmiller, M. Striving for simplicity: The all convolutional net. arXiv 2014, arXiv:1412.6806. [Google Scholar]

- Ronao, C.A.; Cho, S.-B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244. [Google Scholar] [CrossRef]

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-mnist: A novel image dataset for benchmarking machine learning algorithms. arXiv 2017, arXiv:1708.07747. [Google Scholar]

- Kaggle. “Kaggle”. Kaggle Data Set. 6 December 2021. Available online: https://www.kaggle.com/datasets (accessed on 10 December 2021).

- Van Dyk, D.A.; Meng, X.-L. The Art of Data Augmentation. J. Comput. Graph. Stat. 2001, 10, 1–50. [Google Scholar] [CrossRef]

- Acikgoz, H. A novel approach based on integration of convolutional neural networks and deep feature selection for short-term solar radiation forecasting. Appl. Energy 2022, 305, 117912. [Google Scholar] [CrossRef]

- Atik, I. A New CNN-Based Method for Short-Term Forecasting of Electrical Energy Consumption in the Covid-19 Period: The Case of Turkey. IEEE Access 2022, 10, 22586–22598. [Google Scholar] [CrossRef]

- Park, Y.; Yang, H.S. Convolutional neural network based on an extreme learning machine for image classification. Neurocomputing 2019, 339, 66–76. [Google Scholar] [CrossRef]

- Hiary, H.; Saadeh, H.; Saadeh, M.; Yaqub, M. Flower classification using deep convolutional neural networks. IET Comput. Vis. 2018, 12, 855–862. [Google Scholar] [CrossRef]

- Samui, P.; Roy, S.S.; Balas, V.E. Handbook of Neural Computation; Academic Press: London, UK, 2017. [Google Scholar]

- Hoo, Z.H.; Candlish, J.; Teare, D. What is an ROC curve? Emerg. Med. J. 2017, 34, 357–359. [Google Scholar] [CrossRef]

- Ucar, F.; Korkmaz, D. COVIDiagnosis-Net: Deep Bayes-SqueezeNet based diagnosis of the coronavirus disease 2019 (COVID-19) from X-ray images. Med. Hypotheses 2020, 140, 109761. [Google Scholar] [CrossRef]

- Narayanan, B.N.; Ali, R.A.; Hardie, R.C. Performance analysis of machine learning and deep learning architectures for malaria detection on cell images. In Proceedings of the Applications of Machine Learning, San Diego, CA, USA, 11–15 August 2019; Volume 11139, p. 111390W. [Google Scholar]

- Agarap, A.F. Deep Learning using Rectified Linear Units (ReLU). arXiv 2018, arXiv:1803.08375. [Google Scholar]

- Sun, W.; Tseng, T.-L.; Zhang, J.; Qian, W. Enhancing deep convolutional neural network scheme for breast cancer diagnosis with unlabeled data. Comput. Med. Imaging Graph. 2017, 57, 4–9. [Google Scholar] [CrossRef] [Green Version]

- Pan, W.D.; Dong, Y.; Wu, D. Classification of Malaria-Infected Cells Using Deep Convolutional Neural Networks. Mach. Learn. Adv. Tech. Emerg. Appl. 2018, 159. [Google Scholar] [CrossRef] [Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).