Leveraging Graph Analytics for Energy Efficiency Certificates

Abstract

1. Introduction

2. State of the Art Analysis

3. Methodology, Architecture, and Implementation

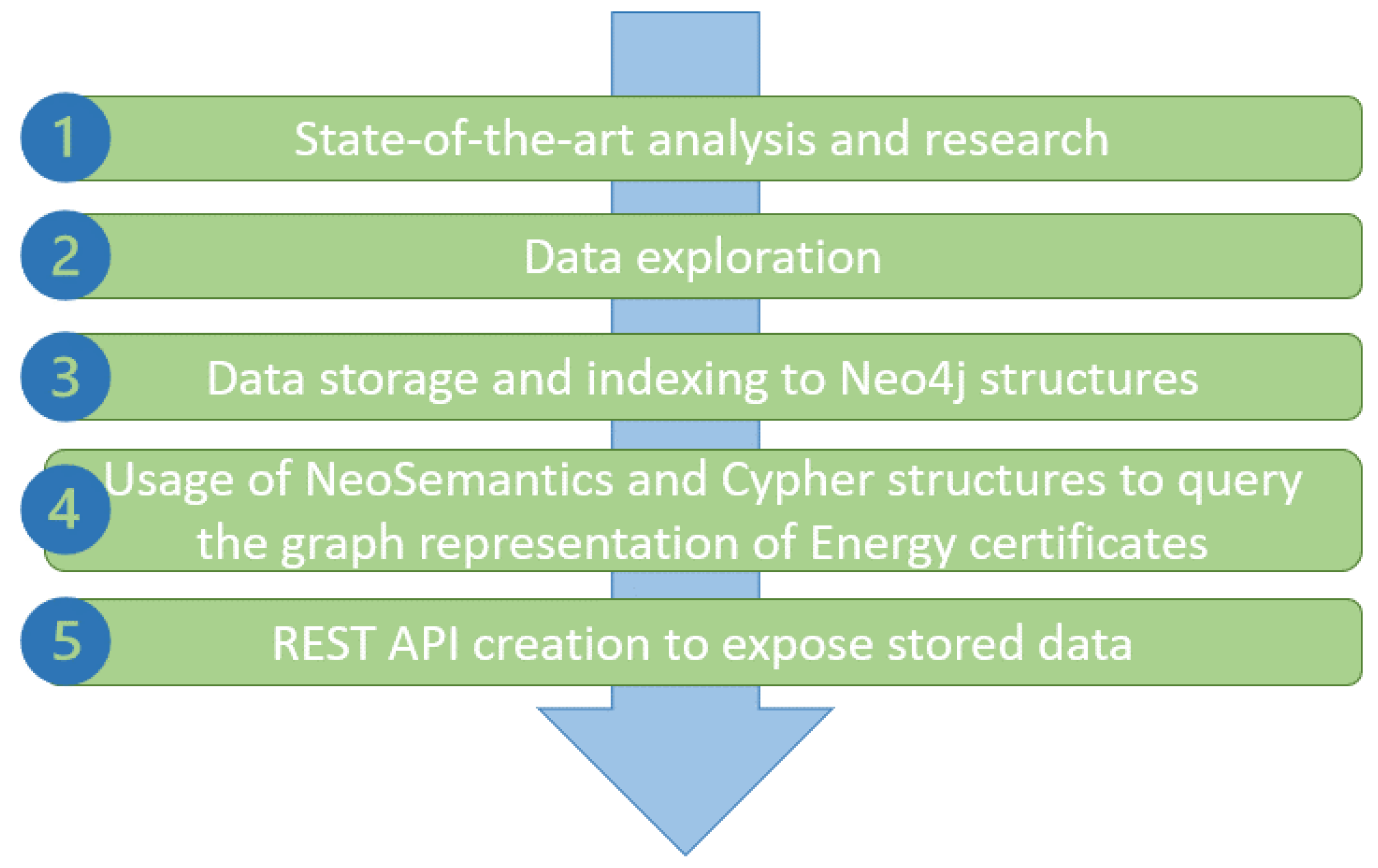

3.1. Methodology

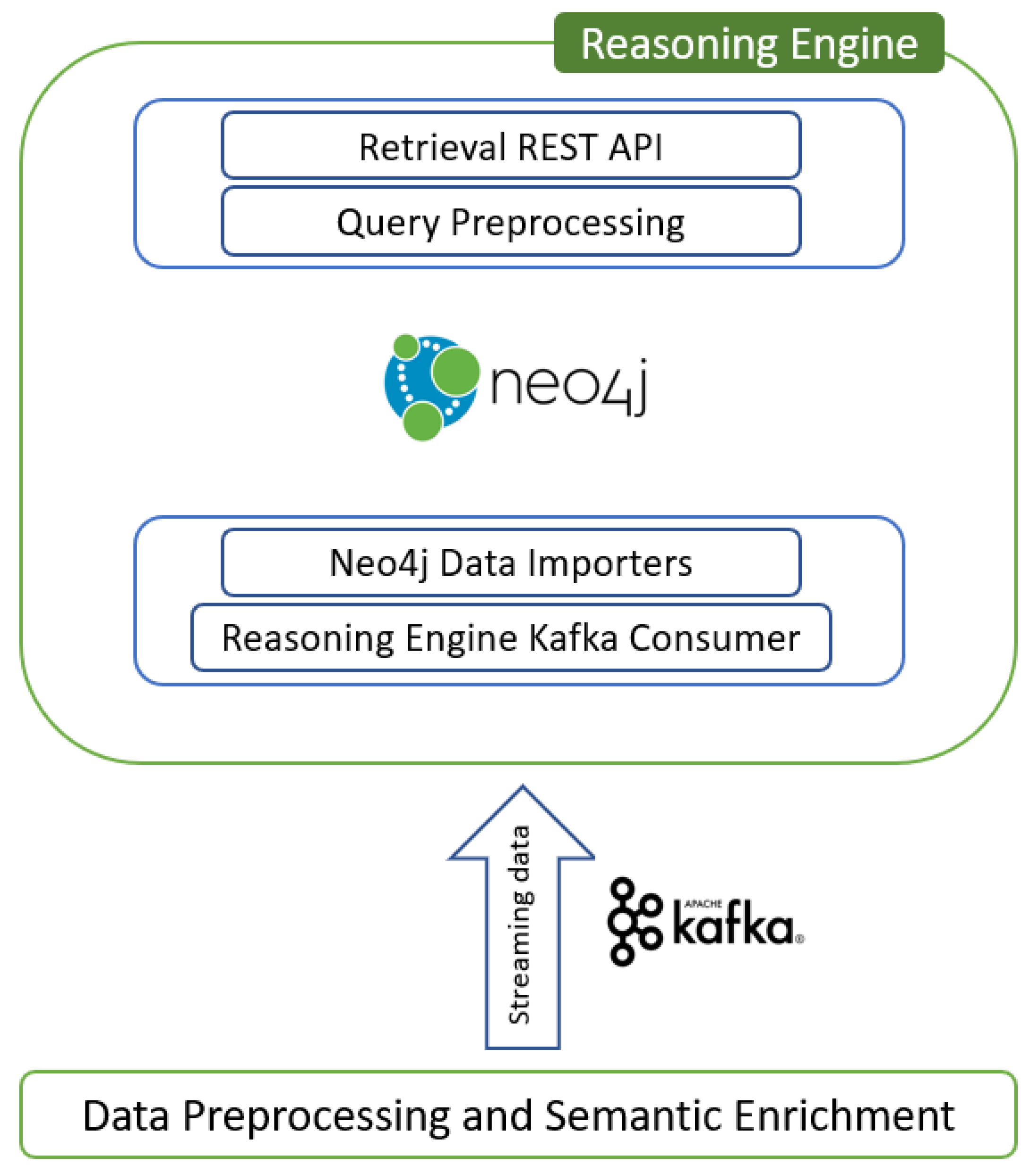

3.2. Architecture

3.3. Implementation

4. Results

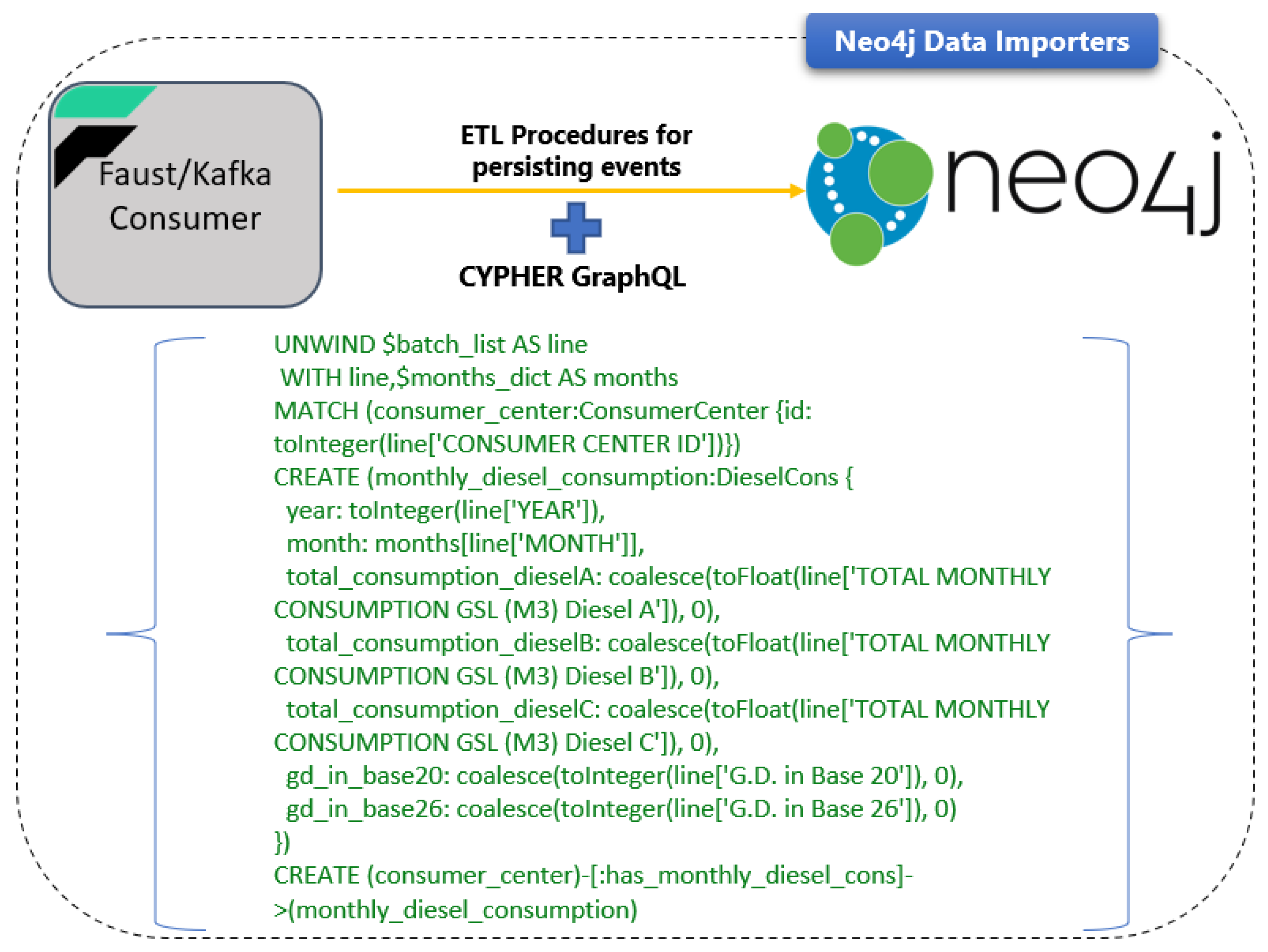

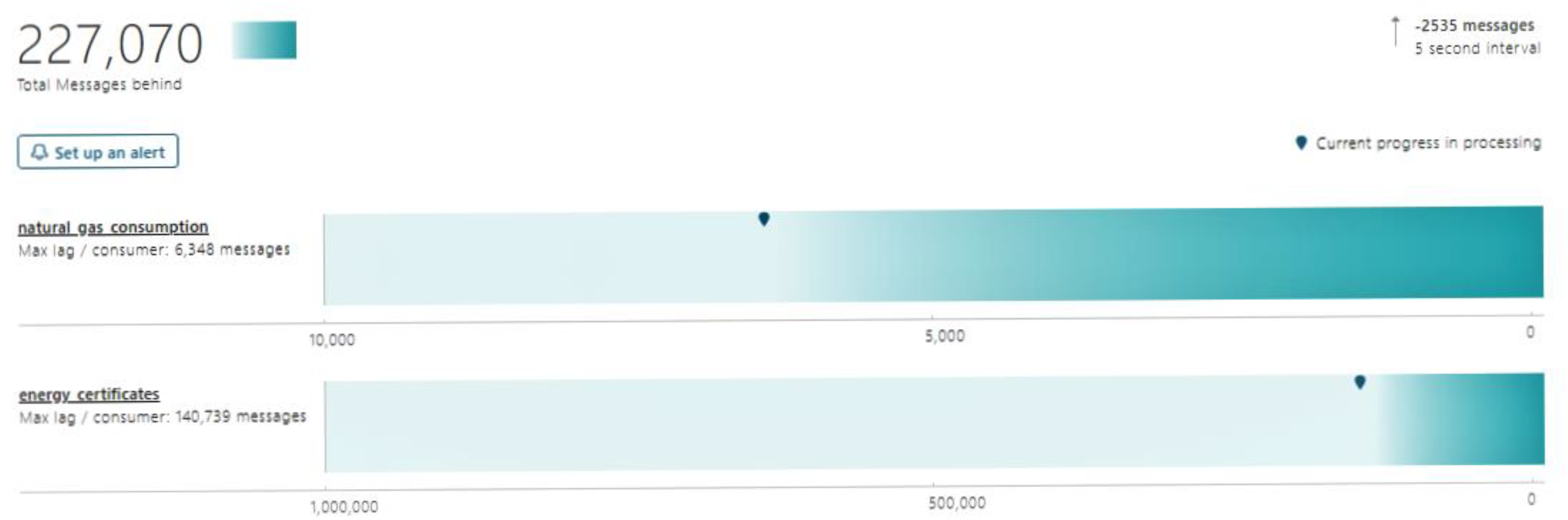

4.1. Data Flow in Reasoning Engine Kafka Consumer

4.2. Graph Creation in Neo4j

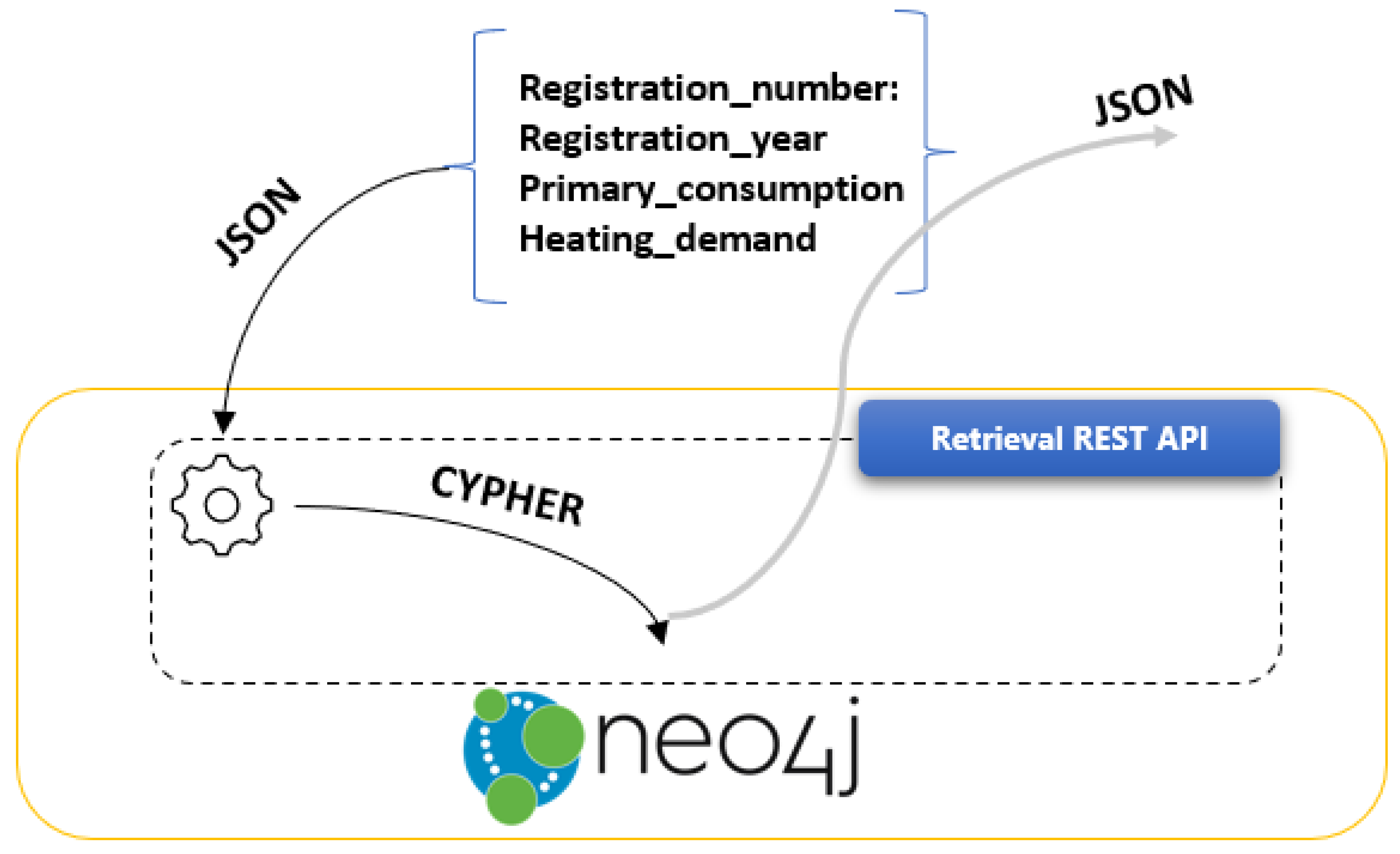

4.3. Retrieve Data from Reasoning Engine

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| Apache | Apache Software Foundation |
| API | Application Programming Interface |
| BDVC | Big Data Value Chain |
| Cypher | Neo4j Graph Database Query Language |
| DL | Deep Learning |
| EREN | Regional Energy Agency in Spain |
| EPC | Energy Performance Certificates |
| GraphQL | Graph Query Language |
| HTTP | Hypertext Transfer Protocol |
| IoT | Internet of Things |
| JSON | JavaScript Object Notation |
| ML | Machine Learning |
| ML/DL | Machine Learning/Deep Learning |
| REST | Representational State Transfer |
| RDF | Resource Description Framework |
| SPARQL | SPARQL Protocol and RDF Query Language |


    curl --location --request POST 'http://reasoning_engine:5000/leif/service' \
      --header 'Content-Type: application/json' \
      --data-raw '{
        "heating_total_consumption": ${heating_total_consumption},
        "heating_co2_emission": ${heating_co2_emission},
        "hot_water_total_consumption": ${hot_water_total_consumption},
        "hot_water_co2_emission": ${hot_water_co2_emission},
        "electricity_total_consumption": ${electricity_total_consumption},
        "electricity_co2_emission": ${electricity_co2_emission}
      }'
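
The request above can also be assembled programmatically. The following is a minimal sketch, assuming that all six fields are numeric and required; the helper name `build_service_request` and the `base_url` parameter are hypothetical, while the endpoint path, header, and field names are taken from the curl command. The function only builds the request and does not send it.

```python
import json
import urllib.request

# Field names copied from the request body of the curl command.
PAYLOAD_FIELDS = (
    "heating_total_consumption",
    "heating_co2_emission",
    "hot_water_total_consumption",
    "hot_water_co2_emission",
    "electricity_total_consumption",
    "electricity_co2_emission",
)


def build_service_request(values, base_url="http://reasoning_engine:5000"):
    """Return an unsent POST request for the /leif/service endpoint.

    Assumption: every field is required and numeric.
    """
    missing = [name for name in PAYLOAD_FIELDS if name not in values]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    body = json.dumps({name: float(values[name]) for name in PAYLOAD_FIELDS})
    return urllib.request.Request(
        url=f"{base_url}/leif/service",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; keeping construction separate from sending makes the payload easy to inspect beforehand.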

| Column | Short Description | Example Value |
|---|---|---|
| registration_number | Building’s registration number | 050190003VI1870TI |
| registration_date | Building’s registration date | 1 August 2017 |
| building_usage | Building’s primary usage | FAMILY HOUSE |
| coordinates | Building’s location (long, lat) | −4.6788, 40.66 |
| address | Building’s address | CALLE DAVID HERRERO 24 05005 |
| municipality | Municipality that the building belongs to | ARENAS DE SAN PEDRO |
| province | Province that the building belongs to | ABRADA |
| primary_consumption_ratio | Building’s primary consumption ratio (total consumption) | 543.0 |
| primary_consumption_rating | Building’s primary consumption label | A |
| co2_emissions_ratio | Building’s CO2 emissions ratio | 61.0 |
| co2_emissions_rating | Building’s CO2 emissions label/rating | E |
| heating_demand_rating | Building’s heating demand rating | E |
| heating_demand_ratio | Building’s heating demand ratio | 111.87 |
| cooling_demand_ratio | Building’s cooling demand ratio | 12.14 |
| cooling_demand_rating | Building’s cooling demand rating | C |
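
One way a row of this table could be mapped onto graph nodes is sketched below. This is an illustration under stated assumptions, not the authors' loading code: the node labels, relationship types, and the `ratio`, `rating`, and `name` properties appear in the Cypher queries in this article, whereas the `registration_number`, `longitude`, and `latitude` properties, the direction of `has_municipality`, and the helper names are hypothetical.

```python
# Parameterised Cypher for one building. Labels and relationship types come
# from the article's queries; the Building properties and the direction of
# has_municipality are assumptions made for this sketch.
MERGE_BUILDING = """
MERGE (b:Building {registration_number: $registration_number})
  ON CREATE SET b.longitude = $longitude, b.latitude = $latitude
MERGE (m:Municipality {name: $municipality})
MERGE (l:Label {rating: $primary_consumption_rating})
MERGE (b)-[:has_municipality]->(m)
MERGE (b)-[:has_primary_cons]->(p:PrimaryCons {ratio: $primary_consumption_ratio})
MERGE (p)-[:has_primary_label]->(l)
"""


def parse_coordinates(text):
    """Split 'long, lat' text into floats.

    Accepts the Unicode minus sign (U+2212) as well as the ASCII hyphen.
    """
    lon, lat = (part.strip().replace("\u2212", "-") for part in text.split(","))
    return float(lon), float(lat)


def row_to_params(row):
    """Convert one table row (a dict of strings) into typed query parameters."""
    lon, lat = parse_coordinates(row["coordinates"])
    return {
        "registration_number": row["registration_number"],
        "municipality": row["municipality"],
        "primary_consumption_rating": row["primary_consumption_rating"],
        "primary_consumption_ratio": float(row["primary_consumption_ratio"]),
        "longitude": lon,
        "latitude": lat,
    }
```

With the official Neo4j Python driver, the statement and parameters could be passed together as `session.run(MERGE_BUILDING, row_to_params(row))`. Using `MERGE` rather than `CREATE` keeps municipality and label nodes shared between buildings, which is what the aggregate queries rely on.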

    curl --location --request POST 'http://reasoning_engine:5000/query' \
      --header 'Content-Type: text/plain' \
      --data-raw 'MATCH (b:Building)-[:has_primary_cons]->(prim_cons:PrimaryCons)-[:has_primary_label]->(l:Label {rating: '\''A'\''}) RETURN avg(prim_cons.ratio) as average_primary_cons'

    [ { "average_primary_cons": 35.88089204257478 } ]
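
The same query can be issued from a script, which avoids the shell quoting around the rating value. The sketch below assumes the `/query` endpoint accepts the Cypher text as a plain-text body and answers with a JSON list of rows, as the request and response above suggest; the helper names `build_query_request` and `first_value` are hypothetical.

```python
import json
import urllib.request

# The query from the curl command, written without shell escaping.
QUERY = (
    "MATCH (b:Building)-[:has_primary_cons]->(prim_cons:PrimaryCons)"
    "-[:has_primary_label]->(l:Label {rating: 'A'}) "
    "RETURN avg(prim_cons.ratio) AS average_primary_cons"
)


def build_query_request(cypher, base_url="http://reasoning_engine:5000"):
    """Return an unsent POST request carrying the Cypher text as its body."""
    return urllib.request.Request(
        url=f"{base_url}/query",
        data=cypher.encode("utf-8"),
        headers={"Content-Type": "text/plain"},
        method="POST",
    )


def first_value(response_text, key):
    """Read one aggregate from a response shaped like a JSON list of rows."""
    rows = json.loads(response_text)
    if not rows:
        raise ValueError("empty result")
    return rows[0][key]
```

Sending the request with `urllib.request.urlopen(build_query_request(QUERY))` and decoding the body would yield the text that `first_value` expects.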

    curl --location --request POST 'http://reasoning_engine:5000/query' \
      --header 'Content-Type: text/plain' \
      --data-raw 'MATCH (b:Building)-[:has_municipality]-(m:Municipality {name: '\''ADRADA (LA)'\''}) MATCH (b)-[:has_primary_cons]->(prim_cons:PrimaryCons)-[:has_primary_label]->(r:Label) RETURN avg(prim_cons.ratio) as abrada_avg_cons'

    [ { "abrada_avg_cons": 349.7089201877938 } ]
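
Because the endpoint receives the query as plain text, a municipality name has to be embedded in the Cypher string itself. The following sketch shows one way to generalise the query above to any municipality while escaping the name; the function names and the `municipality_avg_cons` alias are hypothetical, and the escaping relies on Cypher's backslash escapes for quotes and backslashes in string literals.

```python
def cypher_string_literal(value):
    """Quote a Python string as a single-quoted Cypher string literal."""
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def municipality_average_query(name):
    """Build the average primary-consumption query for one municipality."""
    return (
        "MATCH (b:Building)-[:has_municipality]-(m:Municipality {name: "
        + cypher_string_literal(name)
        + "}) "
        "MATCH (b)-[:has_primary_cons]->(prim_cons:PrimaryCons)"
        "-[:has_primary_label]->(r:Label) "
        "RETURN avg(prim_cons.ratio) AS municipality_avg_cons"
    )
```

Calling `municipality_average_query("ADRADA (LA)")` reproduces the query shown above apart from the result alias. Where the service allows it, passing the name as a query parameter would be preferable to string building.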

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kapsalis, P.; Kormpakis, G.; Alexakis, K.; Askounis, D. Leveraging Graph Analytics for Energy Efficiency Certificates. Energies 2022, 15, 1500. https://doi.org/10.3390/en15041500

Kapsalis P, Kormpakis G, Alexakis K, Askounis D. Leveraging Graph Analytics for Energy Efficiency Certificates. Energies. 2022; 15(4):1500. https://doi.org/10.3390/en15041500

Chicago/Turabian Style: Kapsalis, Panagiotis, Giorgos Kormpakis, Konstantinos Alexakis, and Dimitrios Askounis. 2022. "Leveraging Graph Analytics for Energy Efficiency Certificates" Energies 15, no. 4: 1500. https://doi.org/10.3390/en15041500