1. Introduction

Recent advances in machine learning have led to a growing number of state-of-the-art solutions, in camera image processing and many other applications, being based on neural network architectures. These solutions, however, are often treated as so-called black box methods [1], meaning that, given a particular input, they output some predictions, but the internal data processing is more or less unknown. This approach raises many doubts, especially in the domain of autonomous driving, where the variety of processed scenes is enormous and it is unclear whether a new situation, not tested at the development stage, will be correctly classified. Moreover, this is directly connected to safety issues and regulations, as decisions made based on such results could affect human lives.

To resolve this matter, a new branch of research, called explainable artificial intelligence (XAI), is being developed. The purpose of XAI is to explain how a neural network processes data internally, in a way that makes the decision-making process understandable to humans [2, 3]. Using XAI methods, a neural network-based solution could be more reliably evaluated in the context of safety regulations. Even if such evaluation is not required for a particular application, XAI methods could also help researchers better understand machine learning models and, based on that knowledge, introduce improvements to overall performance.

Explainable artificial intelligence is also considered in autonomous vehicle (AV) systems development [4]. Among other sub-systems, such as localization or path planning, AV perception is crucial for decision making, and all other components rely on it. Perception systems use neural networks to process sensor data and create a model of the environment. Currently, the most common sensor suite used in AV perception systems consists of many cross-domain devices such as cameras, radars, and LiDARs. The XAI methods related to camera image processing and object detection networks are well developed, as they were adopted from other vision tasks. Gradient-based methods [5, 6, 7] prove to be especially useful, as they can show the importance of each input pixel for the final prediction output in the form of a heatmap. Unlike camera images, however, sensor data from radars and LiDARs, which come in the form of a pointcloud, lack such XAI methods.

Addressing the problem of lacking XAI methods, our main contributions in this paper are as follows:

Adaptation of the Gradient-weighted Class Activation Mapping (Grad-CAM) XAI method, used in camera image processing, to the LiDAR object detection domain in order to visualize how input pointcloud data affect the results of a neural network.

In-depth analysis of key components and changes that need to be performed in order to apply the Grad-CAM method to a pointcloud-specific neural network architecture based on voxel-wise pointcloud processing and a single shot detector with a multi-class and multi-feature grid output.

Usage of a Sparsity Invariant convolutional layer, from the LiDAR depth-completion domain, which addresses the sparse nature of voxel-wise processed pointcloud data, for both object detection performance and quality of generated Grad-CAM heatmaps.

Experiments conducted on LiDAR pointcloud data from the popular autonomous driving KITTI [8] dataset and presentation of obtained results for the proposed methods, with a comparison of their pros and cons.

This paper is structured as follows. In Section 2, we go over related work regarding gradient-based methods used for vision tasks and explain neural network architectures for LiDAR pointcloud object detection. In Section 3, we present our approach to adapting those XAI methods to a LiDAR data format and network structure. Section 4 contains a description of our conducted experiments and results, as well as problems encountered and proposed solutions. Finally, we draw our conclusions in Section 5.

3. Proposed Approach

Processing a LiDAR pointcloud with a neural network, as stated in Section 2, requires architectural solutions specific to that type of data. On the other hand, such changes significantly affect the application of the Grad-CAM method. In order to understand those problems, in this section we present the model used to detect objects in a LiDAR pointcloud and compare the original Grad-CAM with our approach.

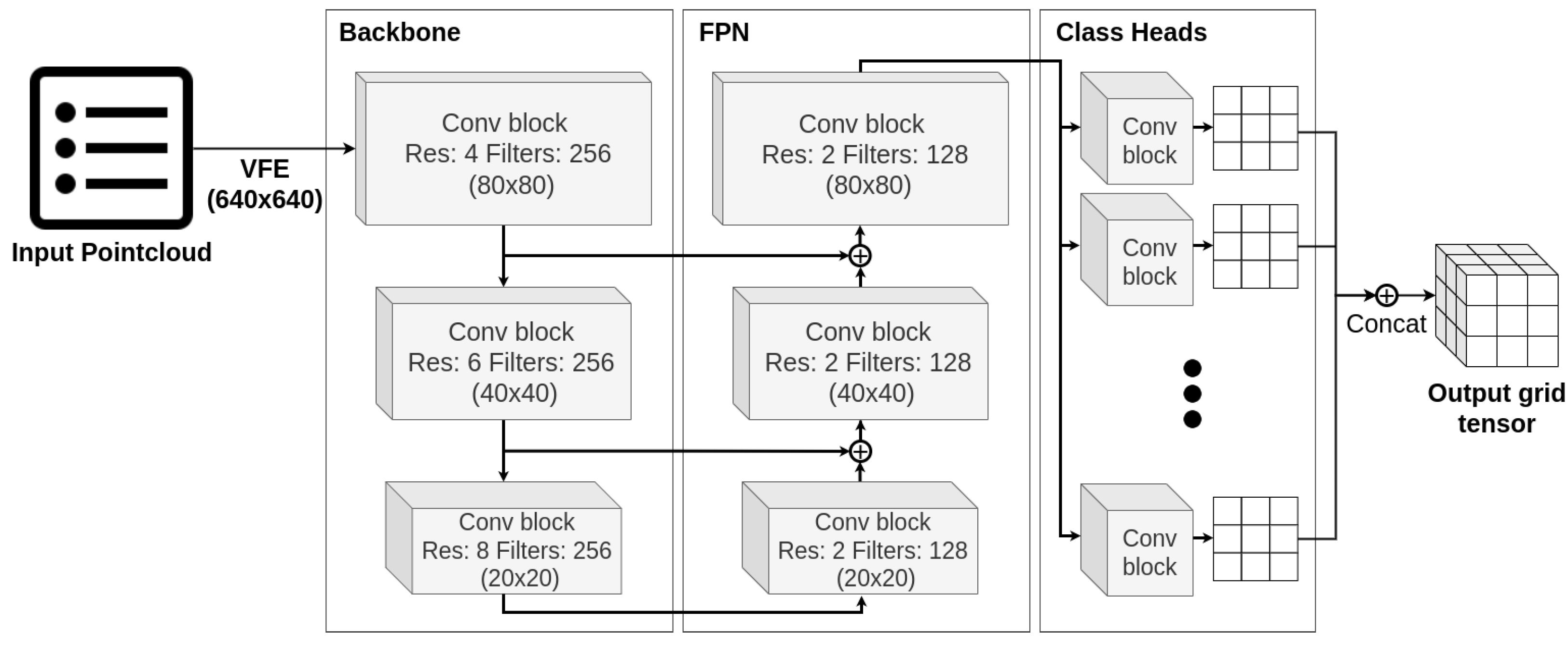

Our proposed LiDAR object detection model is a fully convolutional architecture (Figure 4) that utilizes common mechanisms such as residual blocks and skip connections in a U-shaped network structure, similar to vision models. Gradient-based methods could be applied to this part of the model as they are. The main challenges are posed by the input and the output layers, which are specific to processing pointcloud data.

In order to handle the input data, we apply a voxelization method, assigning each detection point to a corresponding voxel and calculating the voxel features based on their content. Then, stacking voxels along

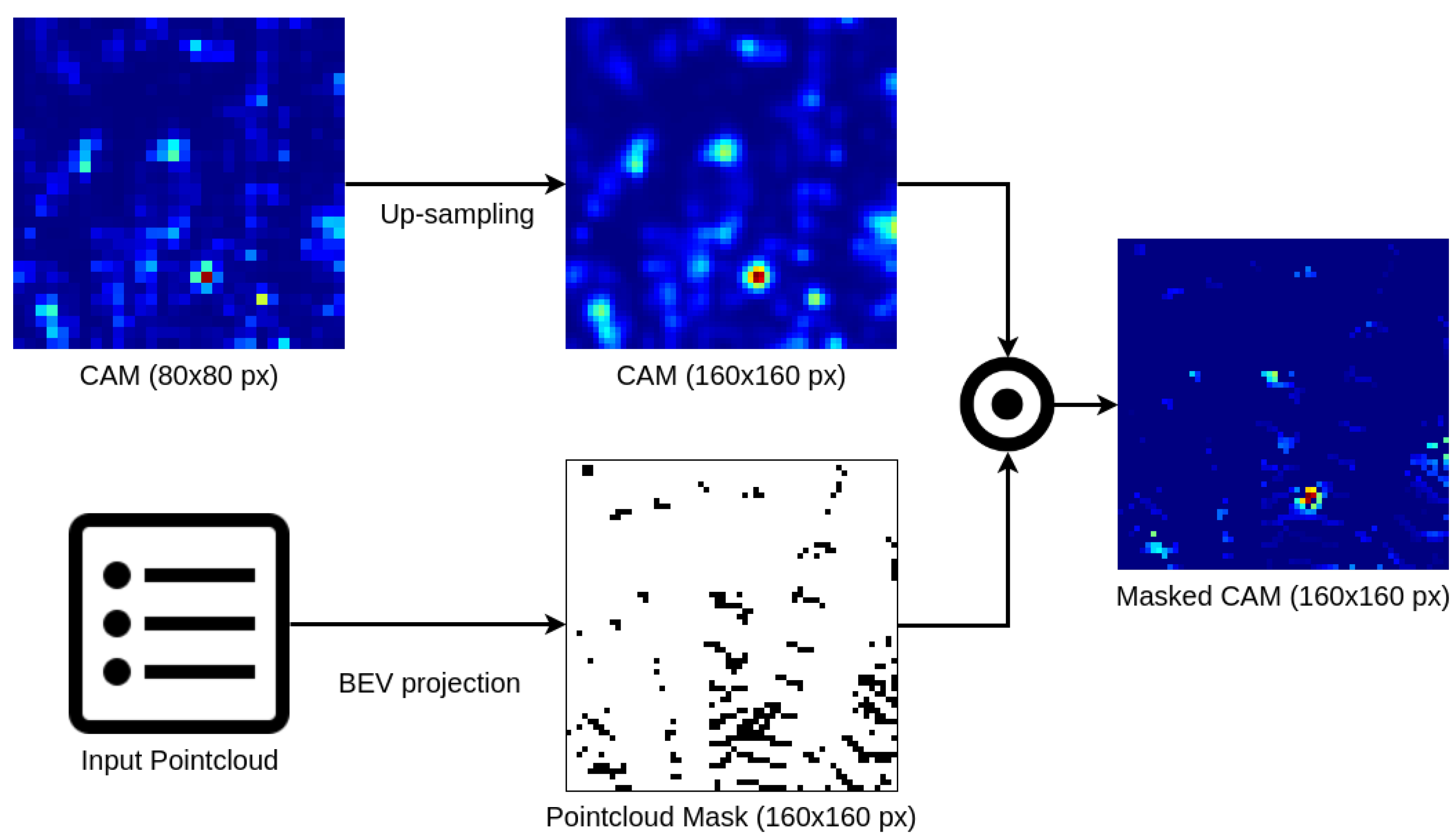

z-axis is performed to reduce tensor dimensions. All those operations enable middle layers to process data in a 2D convolutional matter, at a cost of losing pointwise information inside voxels as well as spatial relations along vertically stacked voxel feature vectors. The consequences of these simplifications affect Class Activation Maps creation, as their domain will be a two dimensional, birds-eye view (BEV) perspective with a grid resolution the same as voxel grid at target convolutional layer. To mitigate the effect of a mismatch between 3D pointcloud data and 2D CAMs, we propose a fused visualization method (

Figure 5). The method is based on an input pointcloud mask, created by casting each point into a BEV with a resolution significantly higher then a CAM size. It is then applied to CAMs by up-sampling them with cubic interpolation and element-wise multiplication with the mask values. This results in high resolution BEV heatmaps with considerably more detailed information around actual LiDAR readings. The size of the mask affects the outcome of such enhancement, thus we describe experiments with different mask resolutions in a following section.
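The fused visualization described above can be illustrated with a minimal NumPy sketch. It is not the implementation used in this work: the function names `bev_mask` and `fuse_cam_with_mask`, the metric ranges, and the axis convention are assumptions made for illustration. To keep the example dependency-free, nearest-neighbour up-sampling stands in for the cubic interpolation mentioned in the text.

```python
import numpy as np

def bev_mask(points, x_range, y_range, size):
    """Binary BEV mask: 1 where at least one point falls into a cell (assumed layout: x, y, z)."""
    mask = np.zeros((size, size), dtype=np.float32)
    # Map metric coordinates to integer cell indices (floor handles negative offsets correctly).
    ix = np.floor((points[:, 0] - x_range[0]) / (x_range[1] - x_range[0]) * size).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / (y_range[1] - y_range[0]) * size).astype(int)
    inside = (ix >= 0) & (ix < size) & (iy >= 0) & (iy < size)
    mask[ix[inside], iy[inside]] = 1.0
    return mask

def fuse_cam_with_mask(cam, mask):
    """Up-sample a low-resolution CAM to the mask size and multiply element-wise."""
    factor = mask.shape[0] // cam.shape[0]  # assumes the mask size is a multiple of the CAM size
    # Nearest-neighbour up-sampling; a simplification of the cubic interpolation in the text.
    upsampled = np.repeat(np.repeat(cam, factor, axis=0), factor, axis=1)
    return upsampled * mask

# Toy example: two LiDAR points, a 2x2 CAM, and an 8x8 mask over an 8 m by 8 m area.
points = np.array([[1.0, 1.0, 0.2], [6.5, 2.0, 0.1]])
cam = np.array([[0.2, 0.8], [0.5, 1.0]])
fused = fuse_cam_with_mask(cam, bev_mask(points, (0.0, 8.0), (0.0, 8.0), 8))
```

Only cells that contain LiDAR points keep a CAM value, which is what concentrates the heatmap around actual readings. A cubic variant could replace the `np.repeat` step with, for example, `scipy.ndimage.zoom(cam, factor, order=3)`.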

Another area in which adapting the Grad-CAM method to a pointcloud processing model poses a challenge is the output of the network. Arguably, it could have the single greatest effect on the final CAMs, as the output and related class scores are fundamental components of calculating activation weights via the backpropagation of the score gradient to a target activation layer. Because the model is designed for object detection in the form of a single shot detector (SSD), the prediction format is vastly more complex than in a simple classification problem, as illustrated in Figure 6.

The main goal of processing the output in the context of a gradient-based XAI method is to obtain a class score value. In the original Grad-CAM use-case for a classification problem, the output of the model is a vector with probability predictions for each class. The class score value is the element of this vector under the index corresponding to a given class. In comparison, the SSD object detection model output is a multi-dimensional tensor, where the first two dimensions correspond to the 2D grid of cells that the whole region of interest is divided into. An optional third dimension represents anchor boxes of different sizes, if a model uses them. The last dimension is a per-cell (or per-anchor) vector of predicted feature values. This multi-feature prediction vector typically consists of fields such as the objectness score, object position within a cell, object size, and classification probabilities for each class. For this most common output format, we propose the calculation of the class score value using the following formula:

$$ y^{c} = \sum_{i} \sum_{j} \sum_{a} p^{c}_{i,j,a} \, \mathbb{1}\left[ o_{i,j,a} > t \right] $$

in which the final class score $y^{c}$ is a sum of class probability predictions $p^{c}$ of every cell in the output grid along the first two spatial grid dimensions $i, j$, as well as the optional anchor dimension $a$. Additionally, cells are filtered by the predicted objectness score $o$, which needs to be higher than a certain threshold $t$ in order for a cell to be taken into consideration.
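As an illustration of the class score described above, the following NumPy sketch sums the class probabilities of all cells whose objectness score exceeds a threshold. The tensor layout (objectness at index 0, four box values, then class probabilities), the default threshold, and the function name `class_score` are assumptions made for this example and not the exact format of our model.

```python
import numpy as np

def class_score(output, class_idx, threshold=0.5, obj_idx=0, cls_offset=5):
    """Sum class probabilities over grid cells and anchors that pass the objectness threshold.

    output: array of shape (H, W, A, F); assumed feature layout is
    [objectness, 4 box values, class probabilities...].
    """
    objectness = output[..., obj_idx]
    class_prob = output[..., cls_offset + class_idx]
    keep = objectness > threshold          # boolean selector over (H, W, A)
    return float(np.sum(class_prob[keep]))

# Toy 2x2 grid, one anchor, two classes.
out = np.zeros((2, 2, 1, 7))
out[0, 0, 0, [0, 5, 6]] = [0.9, 0.8, 0.2]  # confident cell, mostly class 0
out[0, 1, 0, [0, 5, 6]] = [0.6, 0.1, 0.9]  # confident cell, mostly class 1
out[1, 1, 0, [0, 5, 6]] = [0.3, 0.7, 0.0]  # below threshold, ignored
score_class0 = class_score(out, class_idx=0)
score_class1 = class_score(out, class_idx=1)
```

For an actual Grad-CAM computation the same reduction would have to be expressed inside an automatic differentiation framework, so that the scalar score can be backpropagated to the target layer; the threshold then acts as a constant selector and does not contribute to the gradient.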

Multi-class prediction in SSD models can also be achieved with a different approach. In the method above, we assumed a single prediction output, with the class encoded in a probability vector in every cell. Alternatively, a multiple class prediction mechanism could be embedded into the network structure. At some point, near the output layer, the main processing path branches into several identical prediction heads, one per class. Predictions from each head come in a form similar to the previously discussed grid, with the exception of a missing classification probability vector in the features. The classification is no longer required because, as a result of a training process with class labels properly distributed to corresponding heads, each head specializes in detecting one particular class exclusively. Dividing the model output into several class-oriented heads could improve model performance, as convolutional filters are optimized separately for each type of object. It also makes it possible to define per-class anchor boxes. On the other hand, changing the output format affects class score calculation, in particular through the new head dimension, which arises when the head outputs are merged into a single prediction tensor, and through the removal of class probability features. In the case of a multi-head output architecture, we propose the following class score formula:

$$ y^{c} = \sum_{i} \sum_{j} \sum_{a} \sum_{h} o_{i,j,a,h} \, \mathbb{1}\left[ h = c \right] $$

in which, as previously, we sum every cell score along the spatial grid dimensions $i, j$ and the anchor dimension $a$, but for the head dimension $h$ we keep only the entries corresponding to the given class $c$. Addressing the problem of missing class probability features, we decided to use the objectness score $o$, as it substitutes for the classification probability if a given head yields only this particular class of object.
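The multi-head variant can be sketched in the same way. The example below assumes that the head outputs are merged into a single array of shape (H, W, A, heads, F) with the objectness score at feature index 0; both the layout and the function name `multi_head_class_score` are illustrative assumptions.

```python
import numpy as np

def multi_head_class_score(output, head_idx, obj_idx=0):
    """Sum the objectness scores of the head assigned to one class.

    output: array of shape (H, W, A, heads, F); each head predicts a single class,
    so its objectness score stands in for the class probability.
    """
    return float(np.sum(output[..., head_idx, obj_idx]))

# Toy 2x2 grid, one anchor, three heads, five features per prediction.
heads_out = np.zeros((2, 2, 1, 3, 5))
heads_out[0, 0, 0, 1, 0] = 0.9   # head 1 sees an object in cell (0, 0)
heads_out[1, 0, 0, 1, 0] = 0.4   # head 1, weaker response in cell (1, 0)
heads_out[0, 1, 0, 2, 0] = 0.7   # head 2 sees an object in cell (0, 1)
score_head1 = multi_head_class_score(heads_out, head_idx=1)
score_head2 = multi_head_class_score(heads_out, head_idx=2)
```

Selecting a single index along the head axis plays the role of the class filter, so responses of the other heads never enter the score and therefore receive no gradient.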

The class score value is the starting point of the backpropagation algorithm, which returns the gradients of the score with respect to the activations of a chosen convolutional layer. In the Grad-CAM technique, those gradients are the basis for obtaining the weights of activations at a given layer. There are several established methods of calculating the weights from the gradient values. In our adaptation to LiDAR pointcloud data, we tried both the original Grad-CAM and the Grad-CAM++ formulas, and both of them show satisfactory results. As the original method is very elegant in its simplicity, yet sufficient for our needs, we decided to use it as is, without altering it in any way.
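For reference, the original Grad-CAM weighting can be written in a few lines of NumPy. The sketch assumes that the activations and the gradients of the class score with respect to them are already available as arrays of shape (K, H, W); obtaining the gradients requires a framework with automatic differentiation and is outside the scope of this example.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Original Grad-CAM: weights are spatially averaged gradients, CAM is a ReLU of the weighted sum.

    activations, gradients: arrays of shape (K, H, W) for the target layer.
    """
    weights = gradients.mean(axis=(1, 2))                # one weight per activation channel
    cam = np.tensordot(weights, activations, axes=1)     # sum_k w_k * A_k, shape (H, W)
    return np.maximum(cam, 0.0)                          # keep only positive influence

# Toy example with two channels on a 2x2 grid.
acts = np.stack([np.ones((2, 2)), np.array([[1.0, 2.0], [3.0, 4.0]])])
grads = np.stack([np.full((2, 2), 2.0), np.full((2, 2), -1.0)])
cam = grad_cam(acts, grads)
```

In this toy case the channel weights are 2 and -1, so the second channel suppresses the map wherever its activation exceeds twice the first one, and the ReLU clips those cells to zero.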

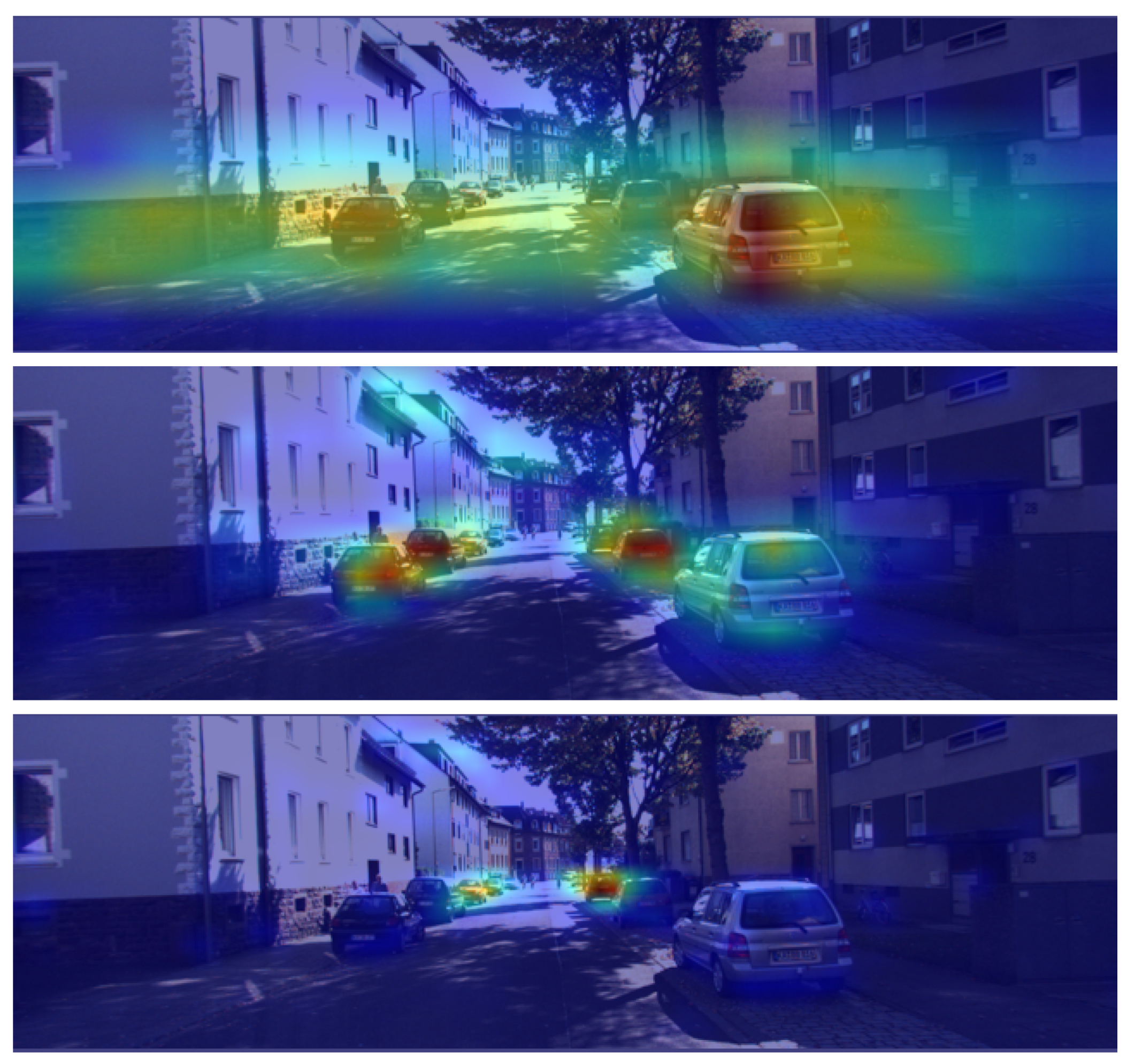

A separate architectural solution is used for detecting objects of the same class that differ significantly in size within the selected region of interest (e.g., due to sensor perspective). For this problem, the model outputs several independent tensors, which vary in grid size, representing different scales of detections. Creating a single CAM in the case of a multi-scale model is quite problematic, as the output, activation, and gradient tensors differ in size for each scale as well. We suggest applying Grad-CAM methods to every scale independently, as discussed above for single-scale models. The independent visualizations for each scale could prove to be more informative than a single merged heatmap. Moreover, rescaling and merging CAMs is a much more approachable task in the image domain; thus, when a single map is required for a multi-scale model, we advise creating CAMs for each scale and merging them as images. Our solution does not require a multi-scale model, as objects in the BEV perspective keep the same size regardless of their position relative to the LiDAR. During our research, we did, however, try multi-scale CAM generation for a vision model (Figure 7), where the same object could have a different size (and best matching anchor) depending on the distance from the camera.
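Merging per-scale CAMs as images can be sketched as follows. The text does not prescribe a merging operator, so the per-scale min-max normalization, the element-wise maximum, and the nearest-neighbour rescaling used here are assumptions chosen only to keep the example short; the function name `merge_multiscale_cams` is hypothetical.

```python
import numpy as np

def merge_multiscale_cams(cams, out_size):
    """Rescale square CAMs of different sizes to out_size and merge them with an element-wise maximum."""
    merged = np.zeros((out_size, out_size))
    for cam in cams:
        factor = out_size // cam.shape[0]          # assumes out_size is a multiple of each CAM size
        up = np.repeat(np.repeat(cam, factor, axis=0), factor, axis=1)
        span = up.max() - up.min()
        # Normalize each scale to [0, 1] so that no scale dominates only because of its value range.
        up = (up - up.min()) / span if span > 0 else np.zeros(up.shape)
        merged = np.maximum(merged, up)
    return merged

coarse = np.array([[0.0, 1.0], [0.0, 0.0]])        # 2x2 CAM from a coarse scale
fine = np.zeros((4, 4))
fine[2, 3] = 2.0                                   # 4x4 CAM from a fine scale
merged = merge_multiscale_cams([coarse, fine], out_size=4)
```

Other operators, such as a mean or a weighted sum, would fit the same structure; which one is most informative depends on the model and is not settled by this sketch.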

The solution proposed above addresses only one of the two parts of the Grad-CAM method, namely the weights of the activations. During our research we noticed that, although some of the CAMs generated with our adapted technique look accurate, others are affected by noise. After further investigation, it turned out that even though the gradient-based weights are properly calculated, the most relevant activations themselves are distorted, mainly in the parts of the grid where no input data are present. We started to examine the root cause, which we believe is the sparse nature of a LiDAR pointcloud after the voxelization process. Because voxel-wise processing is an inseparable step of our network architecture, as well as of the vast majority of LiDAR object detection models, we wanted to address this issue without discarding voxelization altogether. We searched for a solution to the sparsity problem, which led us to incorporate Sparsity Invariant Convolution throughout our model in place of ordinary convolutional layers. As a result, activations are free of the noise generated by empty input data, which significantly improves the quality of the final CAMs, as we show in the next section.
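To make the idea behind a Sparsity Invariant Convolution more concrete, the sketch below implements a single-channel version in NumPy following the commonly published formulation: the input is multiplied by a validity mask, the convolution result is normalized by the number of valid inputs in each window, and the mask is propagated with a max-pooling step. The function name `sparse_conv2d`, the odd square kernel, stride 1, and zero padding are assumptions of this example, not a description of our trained layers.

```python
import numpy as np

def sparse_conv2d(x, mask, kernel, bias=0.0, eps=1e-8):
    """Single-channel sparsity invariant convolution with 'same' zero padding.

    x: (H, W) input, mask: (H, W) with 1 for observed cells and 0 for empty ones,
    kernel: (k, k) with odd k.
    """
    k = kernel.shape[0]
    pad = k // 2
    xp = np.pad(x * mask, pad)     # empty cells contribute nothing to the sum
    mp = np.pad(mask, pad)
    out = np.zeros(x.shape, dtype=float)
    new_mask = np.zeros(mask.shape, dtype=float)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            xw = xp[i:i + k, j:j + k]
            mw = mp[i:i + k, j:j + k]
            # Normalize by the number of valid inputs, so the response does not depend on sparsity.
            out[i, j] = np.sum(xw * kernel) / (mw.sum() + eps) + bias
            new_mask[i, j] = mw.max()  # max pooling of the mask: valid if any input was valid
    return out, new_mask

# A single observed cell in an otherwise empty 5x5 grid.
x = np.zeros((5, 5))
m = np.zeros((5, 5))
x[2, 2], m[2, 2] = 4.0, 1.0
y, m_out = sparse_conv2d(x, m, np.ones((3, 3)))
```

With a bias of zero, windows that contain no observed cell produce exactly zero, which mirrors the behaviour described above: empty regions of the grid no longer generate spurious activations.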

4. Experiments and Results

Explainable AI methods, such as Grad-CAM, present the reasoning behind given model predictions in a form that is more understandable to humans. In order to present our approach and results for a LiDAR pointcloud processing architecture, we first needed to train the actual model that performs the object detection task on the data. We decided to work with a popular, open-source autonomous driving dataset called KITTI [8]. The sensor suite of the car used to collect it consists of two cameras for stereo vision and a rotating LiDAR sensor mounted on top of the roof, and the dataset provides a set of 2D and 3D human-annotated labels for different classes of objects present in the scene. We divided the whole dataset into three subsets: training, validation, and testing, which consist of 4800, 2000, and 500 samples, respectively. We trained the architecture proposed in the previous section for 250 epochs on the training subset, monitoring the progress with the use of validation examples. All presented results, as well as performance metrics, were calculated on test samples. After the training, we verified our solution using a popular object detection metric called Mean Average Precision (mAP). Our model achieves a mAP score of 0.77 and 0.76 for the car and truck classes, respectively, as well as a mAP of 0.53 and 0.51 for the pedestrian and bicycle classes, respectively. Such scores place it in the middle of the official KITTI leaderboard for the BEV object detection task. As the purpose of this work is to adapt the Grad-CAM method, and not to obtain the highest state-of-the-art score in object detection, we consider the performance of the model satisfactory for our purposes.

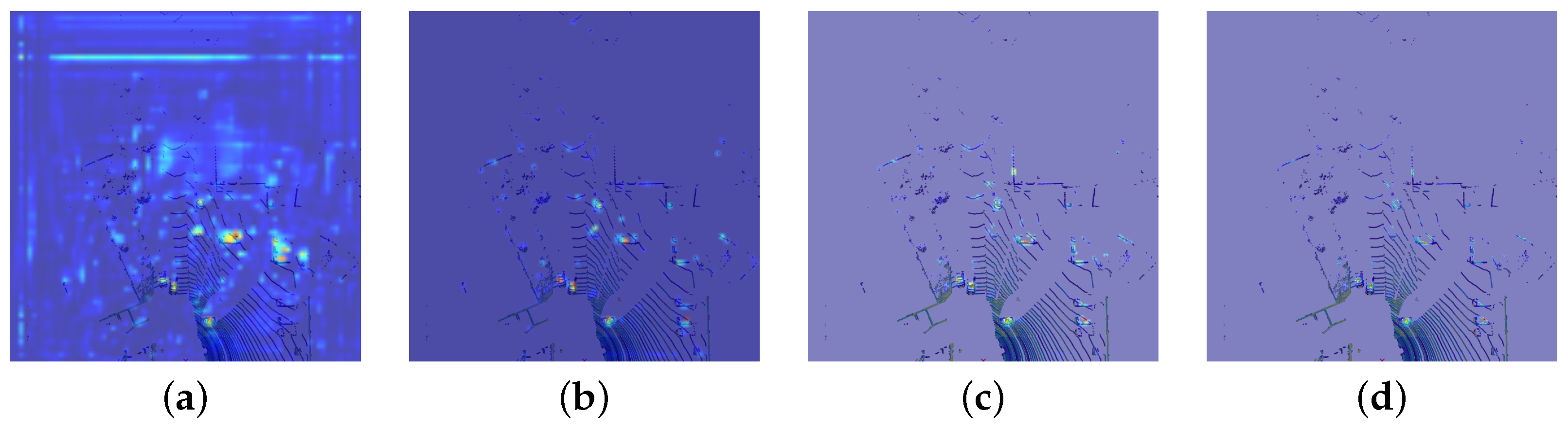

Our initial experiment concerned applying the Grad-CAM method to a LiDAR pointcloud processing network with only the minimal modifications needed for it to work, compared to the original vision application. The activations are obtained during feed-forward inference, so the only missing elements for creating CAMs are the weights. In order to determine the activation weights, we needed to propagate gradients from the class score value to a target convolutional layer. We used the algorithm proposed earlier for the multi-head network output to accumulate a class score across the whole prediction grid, then fed it into the backpropagation algorithm. Then, using the original Grad-CAM formula, we extracted weights from the gradients. We tried different target convolutional layers to calculate CAMs. After checking several possible candidates, the best visual results came from the class head layers near the prediction output. The first Grad-CAM results for pointcloud data (Figure 8) look appropriate, in the sense that the created map highlights objects in a scene, which shows that our method of accumulating a class score across the output grid and generating weights captures the activations responsible for yielding object predictions. On the other hand, we found them lacking in detail compared to the sensor resolution and also burdened with significant noise.

Addressing the low resolution problem, in our next attempt, we applied a pointcloud-based mask to a CAM visualization in order to extend the level of detail of the result. The chosen activation layer, as well as the generated CAM, has a resolution of […], whereas the pointcloud BEV projection could be performed with any given grid size. The pointcloud overlay in the presented results is […], which corresponds to a 12.5 cm by 12.5 cm cell represented by a single pixel. Therefore, we conducted experiments with pointcloud binary masks of sizes ranging from […] to […] (Figure 9). Our main observation regarding pointcloud masks is that, at higher resolutions, CAMs are filtered so strictly to the LiDAR detections that the integrity of object instances is lost and the visualization looks too grainy and thus illegible. On the other hand, a resolution of […] yields no improvement in precision. Hence, we decided to use a […] mask resolution, as it strikes a compromise between readability and the level of detail. There are also other, less visible advantages of applying such a mask. In the CAM domain, before visualization, the values from each weighted activation are very small, and a normalization process is needed to visualize them as an 8-bit image. As the masking is done prior to normalization, some parts of the activation are masked and not taken into account during normalization, which results in a wider value range for the relevant CAM values. This can be observed in the different backgrounds of the mask visualizations as well as near highly confident detections.
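The effect of masking before normalization can be reproduced with a toy example. The values below are invented for illustration, and `to_uint8` is a plain min-max normalization assumed here, not necessarily the exact normalization used for our figures.

```python
import numpy as np

def to_uint8(cam):
    """Min-max normalize a CAM to the 8-bit range used for visualization."""
    lo, hi = cam.min(), cam.max()
    if hi == lo:
        return np.zeros(cam.shape, dtype=np.uint8)
    return np.round((cam - lo) / (hi - lo) * 255).astype(np.uint8)

# Small CAM values; the largest one sits in a cell without any LiDAR points.
cam = np.array([[0.001, 0.002], [0.004, 0.040]])
mask = np.array([[1.0, 1.0], [1.0, 0.0]])

plain = to_uint8(cam)            # the outlier compresses all relevant values towards 0
masked = to_uint8(cam * mask)    # masking first lets relevant values span the full range
```

Without the mask, the three relevant cells occupy only the lowest part of the 8-bit range; with the mask applied first, the strongest relevant cell maps to 255 and the differences between the remaining cells become visible.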

Although using a pointcloud mask also filters out the vast majority of noise in generated CAMs, we do not consider it a solution to that problem. When a part of a CAM that is significantly corrupted by noise happens to be covered with LiDAR detections as well (e.g., trees, bushes), the masking process does not filter it properly. We reviewed our implementation of the class score calculation, gradient backpropagation, and weights generation, but the noise is not always present and some of the generated CAMs are clear. Therefore, we searched for the root cause in the last major element of CAM creation, namely the inferred activations.

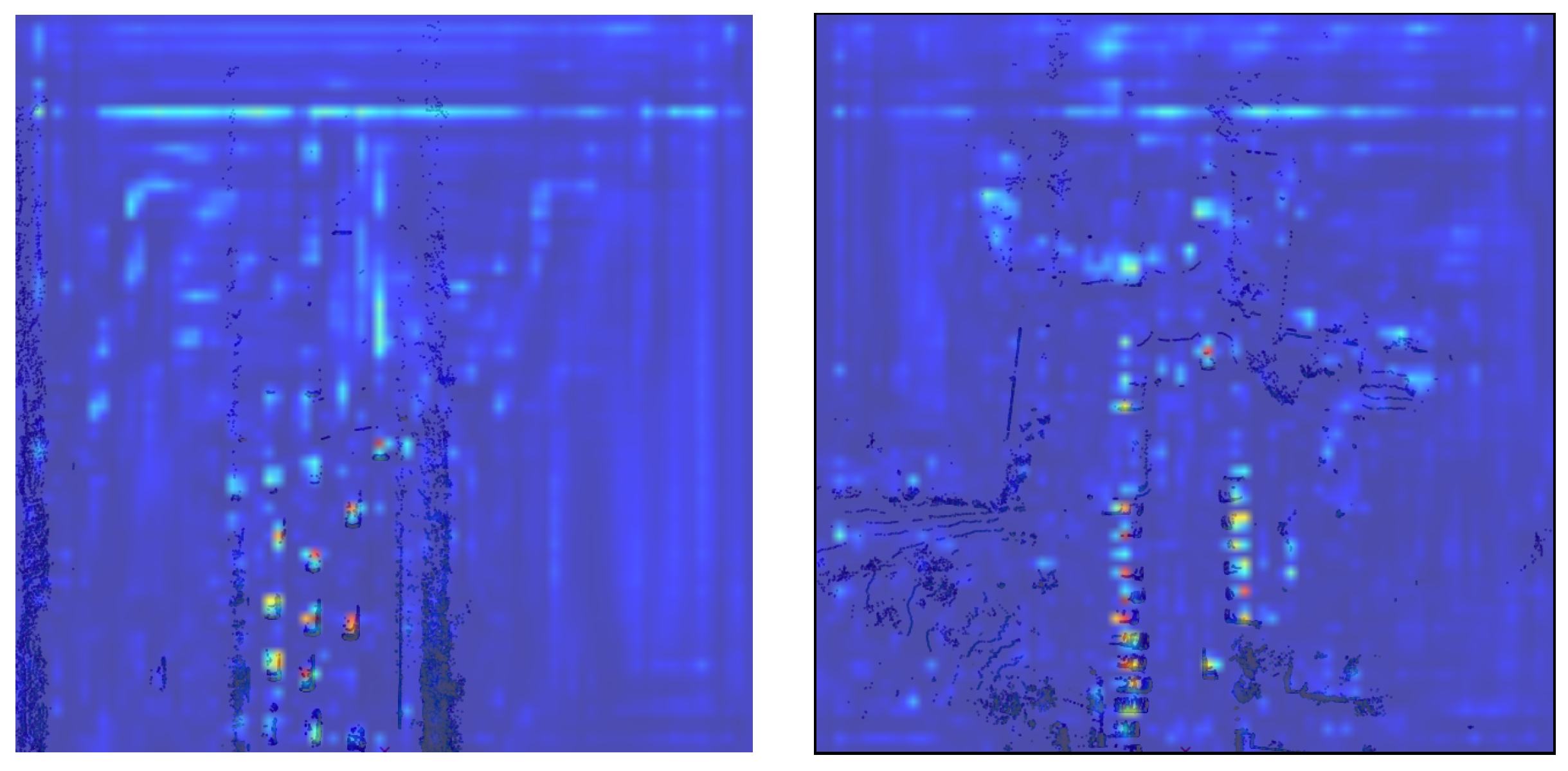

In Figure 10, we show a visualization of a single activation which, based on gradient values, has a significant weight in the CAM creation. Even though the activation values in the middle look vital for the CAM, the noise in the corner outweighs the rest and the outcome is corrupted. We found many cases similar to the presented one, and the common aspect of the noise is that it appears in a part of the grid where input data are empty due to the sparse nature of voxel-wise pointcloud processing. Addressing this problem poses a challenge, as activations are obtained during feed-forward inference, which depends largely on the network structure and trained weights. For our final experiment, we changed every convolutional layer in our network architecture to a Sparsity Invariant Convolution with similar parameters and retrained the whole model. Then, we repeated the activation analysis and found a significant improvement over the previous model. Additionally, in Figure 10, we present the exact same sample generated with a similar CAM method, but with the use of a different model. Sparsity Invariant Convolutions drastically reduced the noise generated by empty input data, and the resulting CAM is clearer, with improved distinction of important regions.

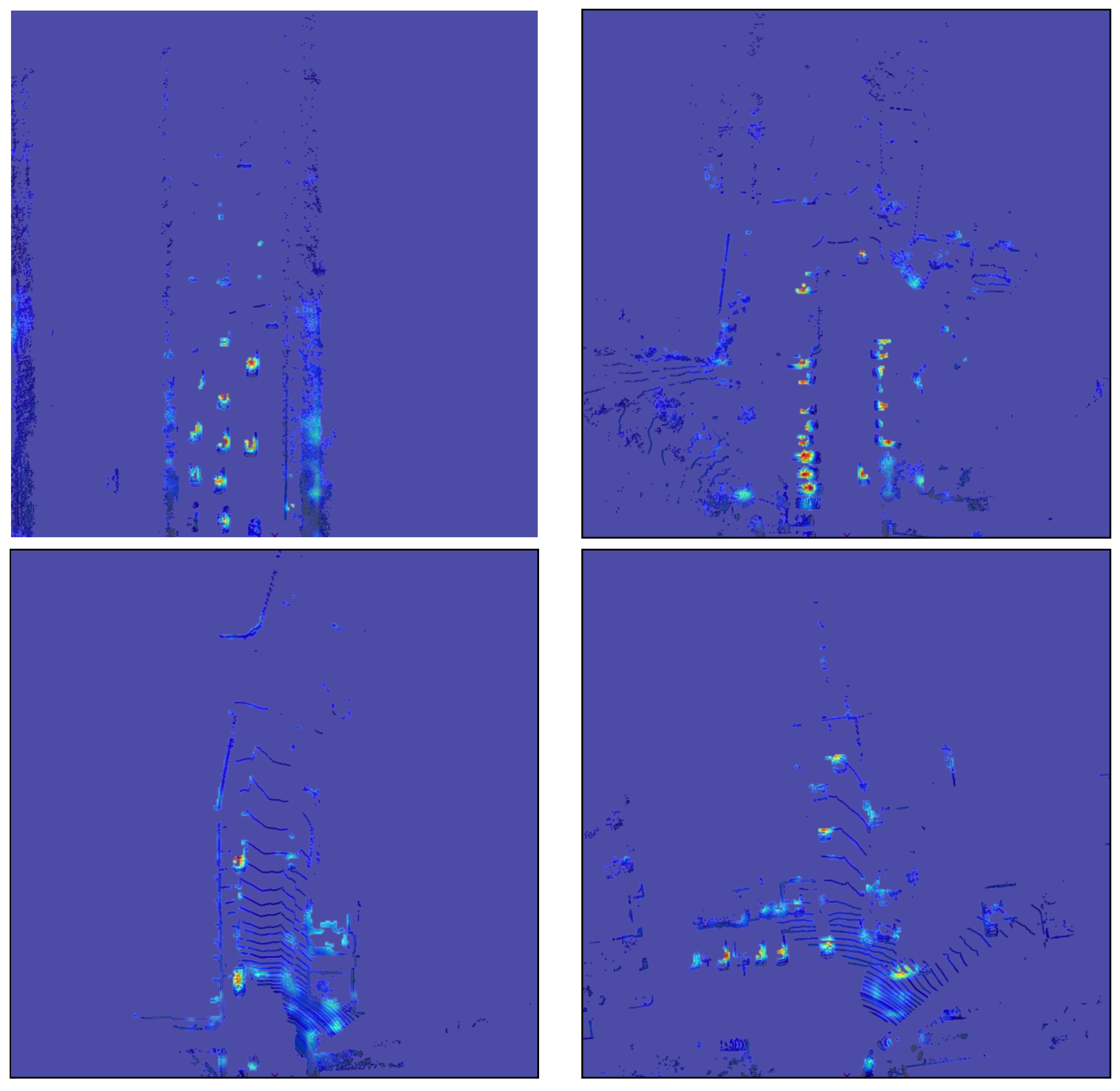

Combining all of our proposed solutions for Grad-CAM adaptation to a LiDAR pointcloud network, in Figure 11 we demonstrate the final result of our work. We use voxel-wise processing and 2D Sparsity Invariant Convolutions to infer the model output, as well as intermediate activations from a target convolutional layer. Then, based on the proposed algorithm, we determine the class score from a multi-head, multi-feature model output tensor. With backpropagation, we find the gradients of the class score with respect to the target layer, and then calculate the activation weights for CAM creation. Finally, we fuse the obtained CAMs with a LiDAR pointcloud mask to enhance them with a higher level of detail. The final result is a high resolution, detailed heatmap of input significance for an object detection task on a LiDAR sensor pointcloud, which is also free of the undesirable noise that could disrupt human visual analysis. In Figure 12, we compare the results of Grad-CAM visualization for different classes of LiDAR object detections, namely car, truck, bicycle, and pedestrian predictions.

5. Conclusions

In this article, we presented an adaptation of a gradient-based XAI method called Grad-CAM from its original camera image domain to LiDAR pointcloud data. Our proposed Grad-CAM solution handles both the new data format and the unique network structure used in LiDAR object detection architectures, and achieves this task with satisfactory results. Using our method, researchers could visualize pointcloud neural network results with respect to the input data to help understand and explain the decision-making process within a trained model, which was previously limited to the image networks domain. Additionally, such visual interpretation could help during the development process, as it highlights problems in a way that is easy for a human to understand.

We encountered one such problem during this research, namely noise in generated CAMs. With the use of activation heatmap visualization, we were able to trace it to the sparsity of the data after voxelization and correct it with Sparsity Invariant Convolutions to obtain even better results. The drawback of this adjustment is the need to replace the existing convolution operations in a network structure and retrain the whole model but, as we have shown, this type of convolutional layer is much more suitable for sparse pointcloud data.

For future work, we would like to investigate the sparse pointcloud data problem even further. Although Sparsity Invariant Convolutions address the problem, they still process dense tensors with many empty values. An interesting outcome might be derived from using a sparse tensor representation with a truly sparse convolution implementation applied to it. The resulting model should also be explainable with our Grad-CAM approach, as long as gradients can propagate back from a class score to a target layer.

Lastly, we presented our adaptation from a camera image domain to LiDAR pointcloud data only. Among automotive sensors, radars are also widely used in production perception systems. Progressively more such systems are being developed with the use of neural networks, which still lack XAI methods such as Grad-CAM. Radar and LiDAR pointcloud data formats differ, but they are more related to each other than to a camera image, thus only moderate changes would need to be performed in order to apply our method to radar networks as well.