Energy-Saving SSD Cache Management for Video Servers with Heterogeneous HDDs

Abstract

1. Introduction

- We propose a new method of using an SSD cache to minimize overall HDD power consumption in video storage systems with heterogeneous HDDs, taking into account the different power characteristics of the HDDs.

- We propose an SSD storage management technique that selects the files to be cached on the SSD with the aim of maximizing the total HDD energy savings resulting from I/O processing.

- We propose an SSD bandwidth management technique that allows the SSD to handle energy-intensive I/O tasks first, thereby saving more energy.

- We extensively evaluate the proposed scheme in terms of SSD size and bandwidth, popularity model, and number of HDDs.

2. Related Work

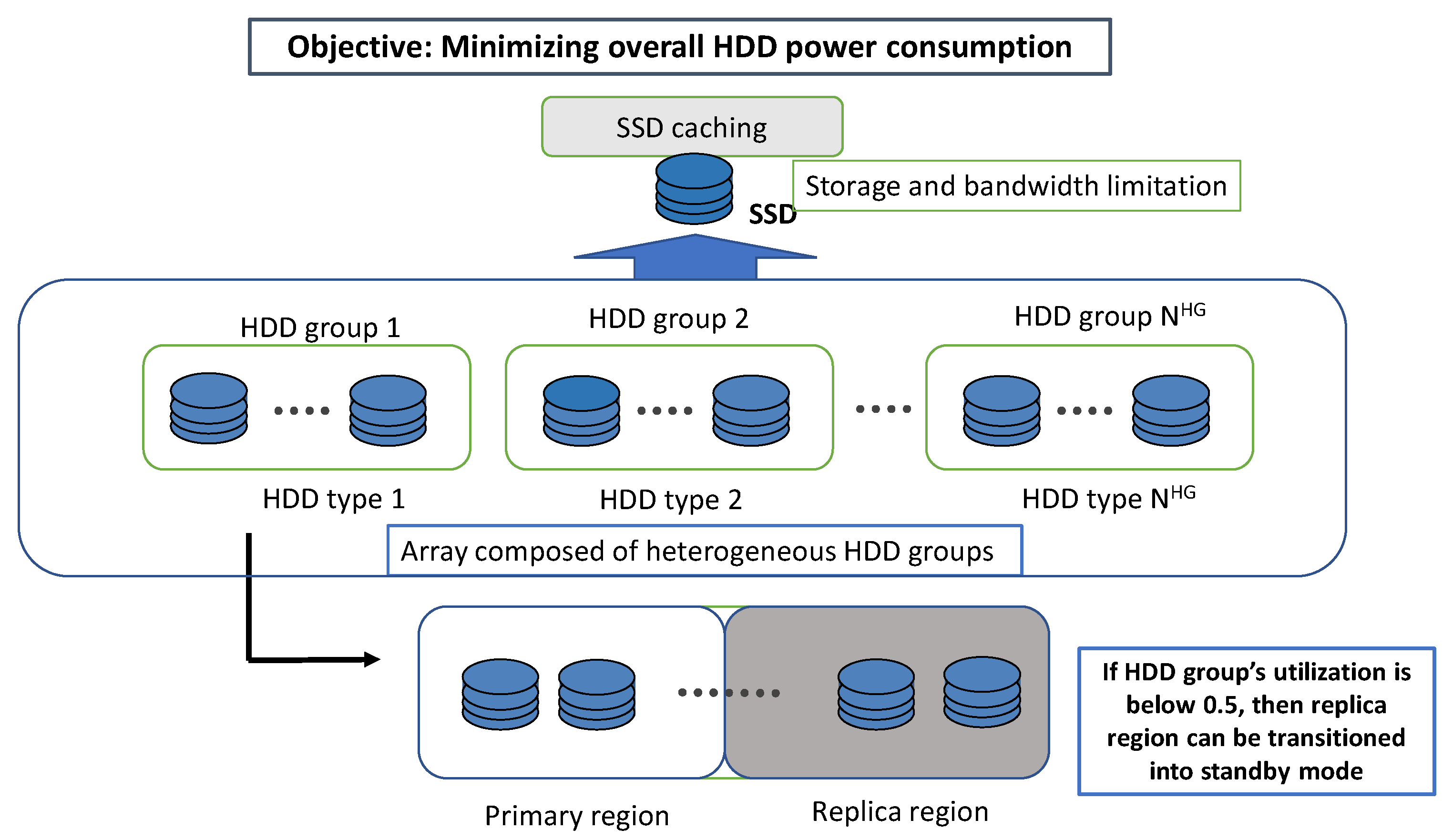

3. System Model

4. SSD Caching Determination

4.1. Algorithm Concept

4.2. SSD Caching Determination
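The notation table lists an energy gain for serving each version from the SSD, the size of each version in MB, a binary variable indicating whether the version is cached, and the SSD size in MB, and the reference list includes Pisinger's work on knapsack problems. Assuming these pieces combine into a 0/1 knapsack (maximize the total energy gain of the cached versions subject to their total size not exceeding the SSD size), a minimal sketch of a textbook dynamic-programming solver is shown below. The function name, argument names, and integer-MB sizes are hypothetical, and this is not presented as the authors' algorithm.

```python
from typing import List, Tuple


def select_cached_versions(gains: List[float], sizes_mb: List[int],
                           ssd_size_mb: int) -> Tuple[float, List[int]]:
    """Hypothetical 0/1 knapsack formulation of SSD caching.

    gains[i]:    assumed energy gain of serving version i from the SSD
    sizes_mb[i]: size of version i in whole MB (positive integer weights)
    ssd_size_mb: SSD capacity in MB
    Returns the maximum total gain and the indices of the versions to cache.
    """
    n = len(gains)
    # best[c]: best total gain achievable with capacity c using items seen so far.
    best = [0.0] * (ssd_size_mb + 1)
    # took[i][c]: True if version i is in the best solution for capacity c
    # once versions 0..i have been considered.
    took = [[False] * (ssd_size_mb + 1) for _ in range(n)]
    for i in range(n):
        w, g = sizes_mb[i], gains[i]
        # Walk capacities downward so each version is cached at most once.
        for c in range(ssd_size_mb, w - 1, -1):
            if best[c - w] + g > best[c]:
                best[c] = best[c - w] + g
                took[i][c] = True
    # Trace the decisions backward to recover which versions were chosen.
    chosen: List[int] = []
    c = ssd_size_mb
    for i in range(n - 1, -1, -1):
        if took[i][c]:
            chosen.append(i)
            c -= sizes_mb[i]
    chosen.reverse()
    return best[ssd_size_mb], chosen
```

For example, `select_cached_versions([60, 100, 120], [10, 20, 30], 50)` returns `(220.0, [1, 2])`. The table holds one entry per MB of SSD capacity, so at the 2 TB default this exact DP would be costly; coarser size units or an approximate knapsack solver would likely be needed in practice.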

5. SSD Bandwidth Management

5.1. Problem Formulation

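- Seek phase: The total seek time of HDD group m is obtained from the seek time of the HDDs in group m and the requests to the group that are not served by the SSD. The energy required in the seek phase is then this total seek time multiplied by the seek power of the HDDs in group m.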

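- Active phase: The total time taken to read data for the requests that remain on HDD group m is the sum of their read times, each of which depends on the size of the requested version and the transfer rate of the HDDs in group m. Therefore, the active energy is this total read time multiplied by the active power of group m.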

- Idle phase: When no HDD activity is occurring, the HDD rotates without reading or seeking, drawing the idle power of group m. The total idle time over one segment period is obtained by subtracting the seek and read times from the total HDD time available in that period. However, if the I/O utilization over all HDDs in the group is less than or equal to 0.5, half of the HDDs can be put into standby mode, halving the idle time. This utilization is evaluated for HDD group m when the requests from the first to the nth elements of its SSD request array are served from the SSD. The energy required in the idle phase is then the idle time multiplied by the idle power of group m.

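- Standby phase: If the I/O utilization of HDD group m is less than or equal to 0.5, half of the HDDs can be put into standby mode. The standby energy of group m is then the standby power of group m multiplied by the time those HDDs spend in standby.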
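The four phases above can be combined into a per-group energy estimate. The sketch below is a minimal model under assumptions the text does not pin down: each request still served by HDD group m costs one seek; read time is request size divided by the group's transfer rate; the disk time available in a segment period is the number of HDDs times the segment length; I/O utilization is busy (seek plus read) time divided by that budget; and, as one reading of "halving the idle time", when utilization is at most 0.5, half of the HDDs stay in standby for the whole period while the other half absorb all seeks and reads. `HddGroup`, `group_energy`, and their fields are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HddGroup:
    # Hypothetical field names; real values would come from drive datasheets.
    num_hdds: int         # number of HDDs in the group
    seek_time: float      # seconds per seek (assumed: one seek per request)
    transfer_rate: float  # MB/s
    p_seek: float         # seek power (W)
    p_active: float       # active (read) power (W)
    p_idle: float         # idle power (W)
    p_standby: float      # standby power (W)


def group_energy(g: HddGroup, hdd_request_sizes_mb: List[float],
                 segment_len: float) -> float:
    """Energy (J) of one HDD group over one segment period.

    hdd_request_sizes_mb holds the sizes of the requests NOT served by the
    SSD, i.e. the ones the HDDs must still seek to and read.
    """
    seek_t = g.seek_time * len(hdd_request_sizes_mb)
    read_t = sum(size / g.transfer_rate for size in hdd_request_sizes_mb)
    budget = g.num_hdds * segment_len  # total disk-seconds in the period
    util = (seek_t + read_t) / budget
    if util > 1.0:
        raise ValueError("HDD group cannot serve this load in one period")
    # Assumption: at utilization <= 0.5, half of the disks stand by for the
    # whole period and the remaining half absorb every seek and read.
    standby_t = budget / 2 if util <= 0.5 else 0.0
    idle_t = budget - standby_t - seek_t - read_t
    return (g.p_seek * seek_t + g.p_active * read_t
            + g.p_idle * idle_t + g.p_standby * standby_t)
```

Under this model, the energy gain of moving requests to the SSD is the difference between `group_energy` with and without them. For a hypothetical two-disk group `HddGroup(2, 0.01, 100.0, 10.0, 8.0, 5.0, 1.0)` with a 1 s period, moving one of two 50 MB requests to the SSD drops utilization from 0.51 to 0.255, lets half the disks stand by, and lowers the estimate from about 13.1 J to about 7.55 J.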

5.2. SSD Request Selection (SRS) Algorithm

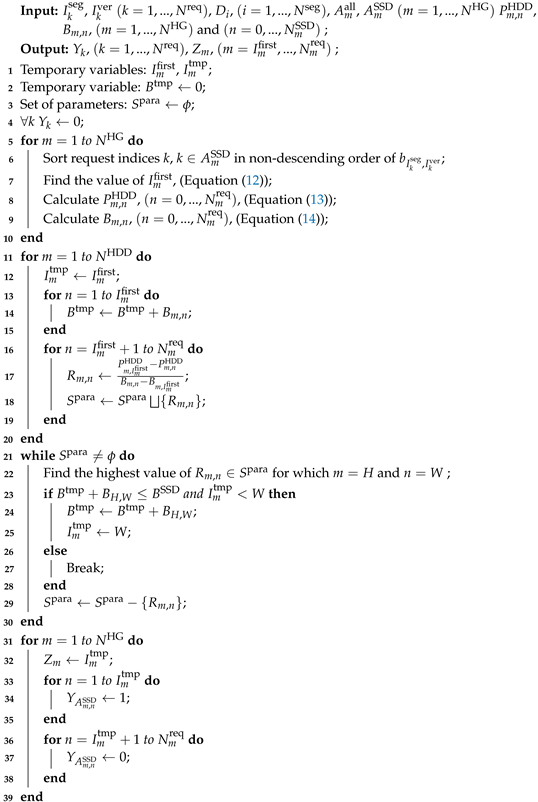

Algorithm 1: SSD request selection (SRS) algorithm.
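The stated goal of SSD bandwidth management is to let the SSD serve energy-intensive I/O first. As a generic illustration of that idea, and not a reconstruction of Algorithm 1, the sketch below ranks candidate requests by estimated energy gain per MB/s of SSD bandwidth and admits them greedily until the SSD bandwidth runs out. The `Request` fields and the per-request gain values are assumptions. In particular, the standby threshold makes real gains depend on which other requests leave the same HDD group, which this fixed-gain greedy ignores.

```python
from typing import List, NamedTuple


class Request(NamedTuple):
    # Hypothetical fields, for illustration only.
    req_id: int
    energy_gain: float  # estimated HDD energy saved if served by the SSD (J)
    bandwidth: float    # SSD bandwidth the request consumes (MB/s), assumed > 0


def greedy_ssd_selection(requests: List[Request],
                         ssd_bandwidth: float) -> List[int]:
    """Admit requests in descending order of energy gain per MB/s,
    skipping any request that no longer fits in the remaining bandwidth."""
    ranked = sorted(requests, key=lambda r: r.energy_gain / r.bandwidth,
                    reverse=True)
    chosen: List[int] = []
    used = 0.0
    for r in ranked:
        if used + r.bandwidth <= ssd_bandwidth:
            chosen.append(r.req_id)
            used += r.bandwidth
    return chosen
```

With three requests whose (gain, bandwidth) pairs are (10, 2), (9, 1), and (4, 2), and 3 MB/s of SSD bandwidth, the sketch admits the second request and then the first, leaving the third on the HDDs.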

6. Experimental Results

6.1. Experimental Setup

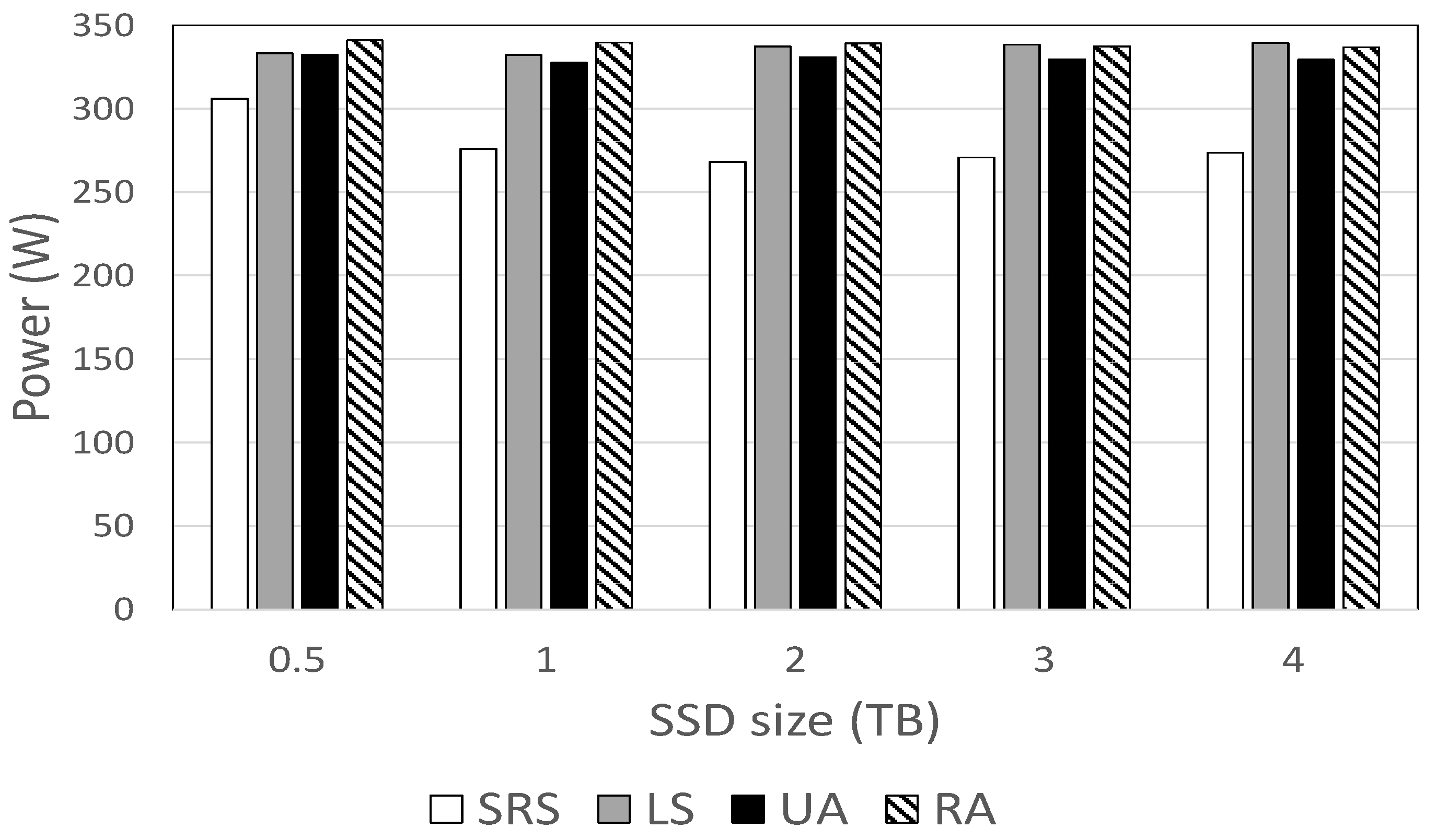

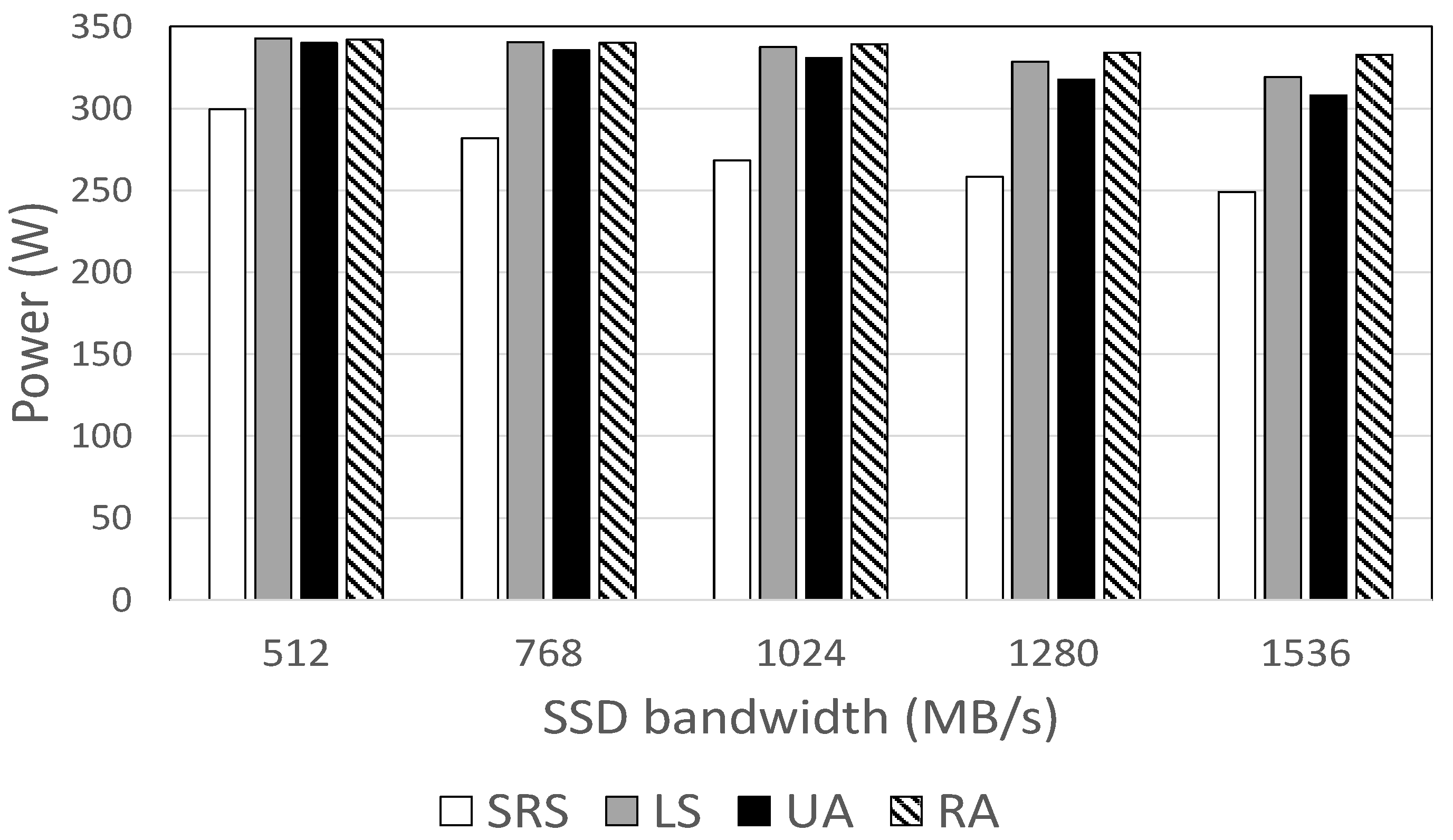

- Lowest bitrate first selection (LS): To reduce the number of seek operations on the HDDs, the requests with the lowest possible bitrates should be served by the SSD [10], so that the SSD can absorb as many requests as possible within its bandwidth. The LS scheme therefore selects requests in ascending order of bitrate for as long as the SSD bandwidth limit is satisfied.

- Random allocation (RA): This method randomly selects the requests to be served by the SSD, subject to the SSD bandwidth limit.

- Uniform allocation (UA): This method visits the HDD groups in turn and selects, one at a time, the request for the lowest-bitrate version from each group, subject to the SSD bandwidth limit.

- HVP: High-bitrate versions are popular.

- LVP: Low-bitrate versions are popular.

- MVP: Medium-bitrate versions are popular.

| Resolution | 1920 × 1080 | 1600 × 900 | 1280 × 720 | 1024 × 600 | 854 × 480 | 640 × 360 | 426 × 240 |
|---|---|---|---|---|---|---|---|
| Bitrate (Mbps) | 15.36 | 10.64 | 9.60 | 4.55 | 3.04 | 1.70 | 0.76 |
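The three baseline schemes can be sketched as follows, using bitrates from the table above. The sketch assumes each request is a hypothetical `(request id, HDD group index, bitrate in Mbps)` tuple, that a stream consumes its bitrate divided by 8 in MB/s of SSD bandwidth, and that RA and UA skip a request that would exceed the remaining bandwidth. These are illustrative readings of the scheme descriptions, not the authors' code.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical request format: (request id, HDD group index, bitrate in Mbps).
Req = Tuple[int, int, float]


def ssd_load(bitrate_mbps: float) -> float:
    """SSD bandwidth (MB/s) consumed by one stream: 8 bits per byte."""
    return bitrate_mbps / 8.0


def lowest_bitrate_first(reqs: List[Req], ssd_bw: float) -> List[int]:
    """LS: admit requests in ascending bitrate while they fit."""
    chosen, used = [], 0.0
    for rid, _, rate in sorted(reqs, key=lambda q: q[2]):
        if used + ssd_load(rate) > ssd_bw:
            break  # every remaining request has an equal or higher bitrate
        chosen.append(rid)
        used += ssd_load(rate)
    return chosen


def random_allocation(reqs: List[Req], ssd_bw: float,
                      seed: Optional[int] = None) -> List[int]:
    """RA: admit requests in random order, skipping any that do not fit."""
    order = list(reqs)
    random.Random(seed).shuffle(order)
    chosen, used = [], 0.0
    for rid, _, rate in order:
        if used + ssd_load(rate) <= ssd_bw:
            chosen.append(rid)
            used += ssd_load(rate)
    return chosen


def uniform_allocation(reqs: List[Req], ssd_bw: float) -> List[int]:
    """UA: visit HDD groups round-robin, each time offering the group's
    lowest-bitrate remaining request; stop when no group can add one."""
    queues: Dict[int, List[Req]] = defaultdict(list)
    for q in sorted(reqs, key=lambda q: q[2]):
        queues[q[1]].append(q)
    nxt = {m: 0 for m in queues}
    chosen, used = [], 0.0
    progress = True
    while progress:
        progress = False
        for m in sorted(queues):
            if nxt[m] < len(queues[m]):
                rid, _, rate = queues[m][nxt[m]]
                if used + ssd_load(rate) <= ssd_bw:
                    chosen.append(rid)
                    used += ssd_load(rate)
                    nxt[m] += 1
                    progress = True
    return chosen
```

With five requests at 15.36, 0.76, 1.70, 3.04, and 4.55 Mbps spread over three HDD groups and 1 MB/s of SSD bandwidth, LS admits the 0.76, 1.70, and 3.04 Mbps requests, whereas UA, rotating across groups, admits the 4.55 Mbps request from the third group instead of the 3.04 Mbps one.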

6.2. HDD Power Consumption Comparison for Different SSD Sizes

6.3. HDD Power Consumption Comparison for SSD Bandwidth

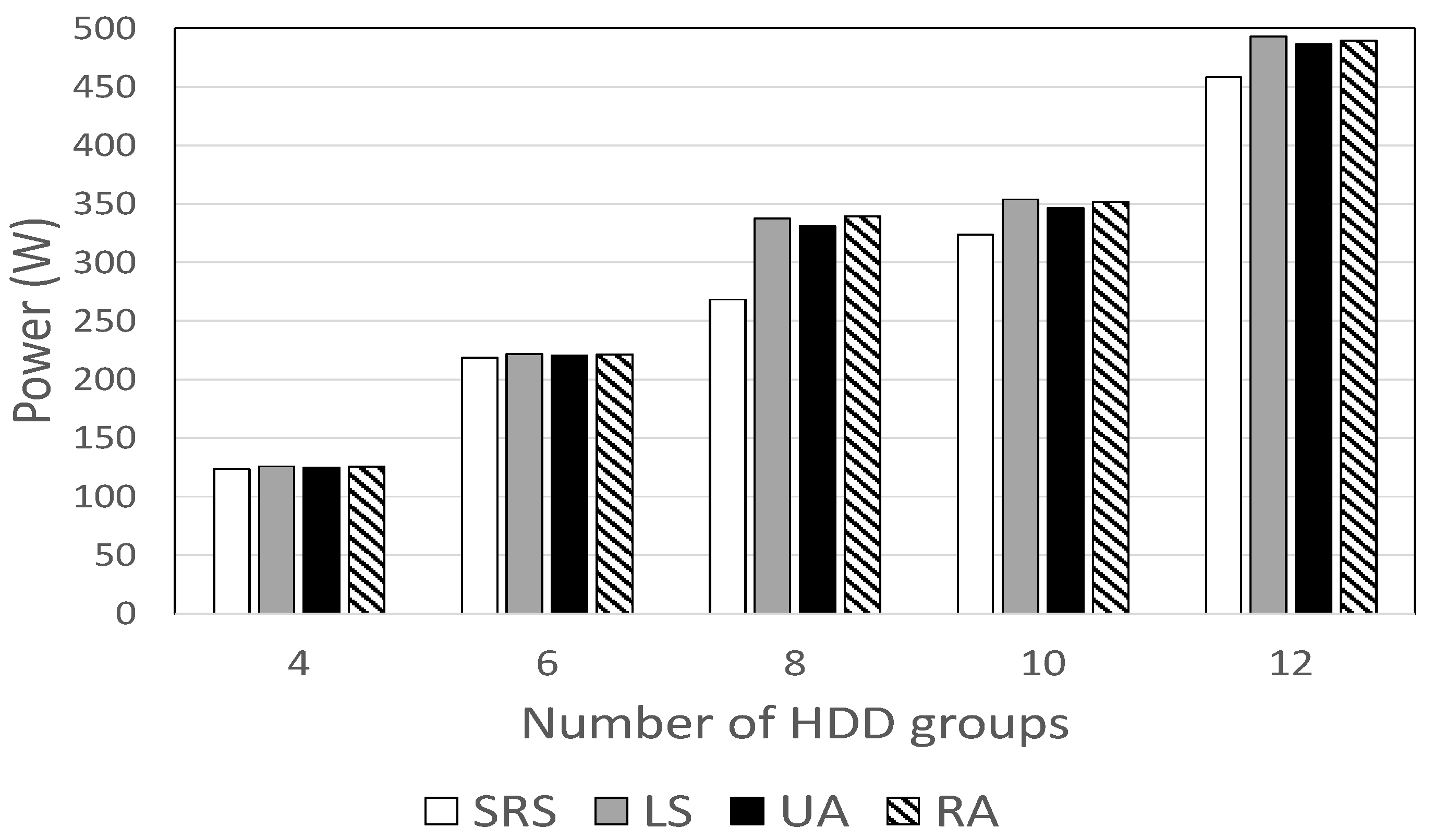

6.4. Effect of the Number of HDD Groups

6.5. Effect of Version Popularity

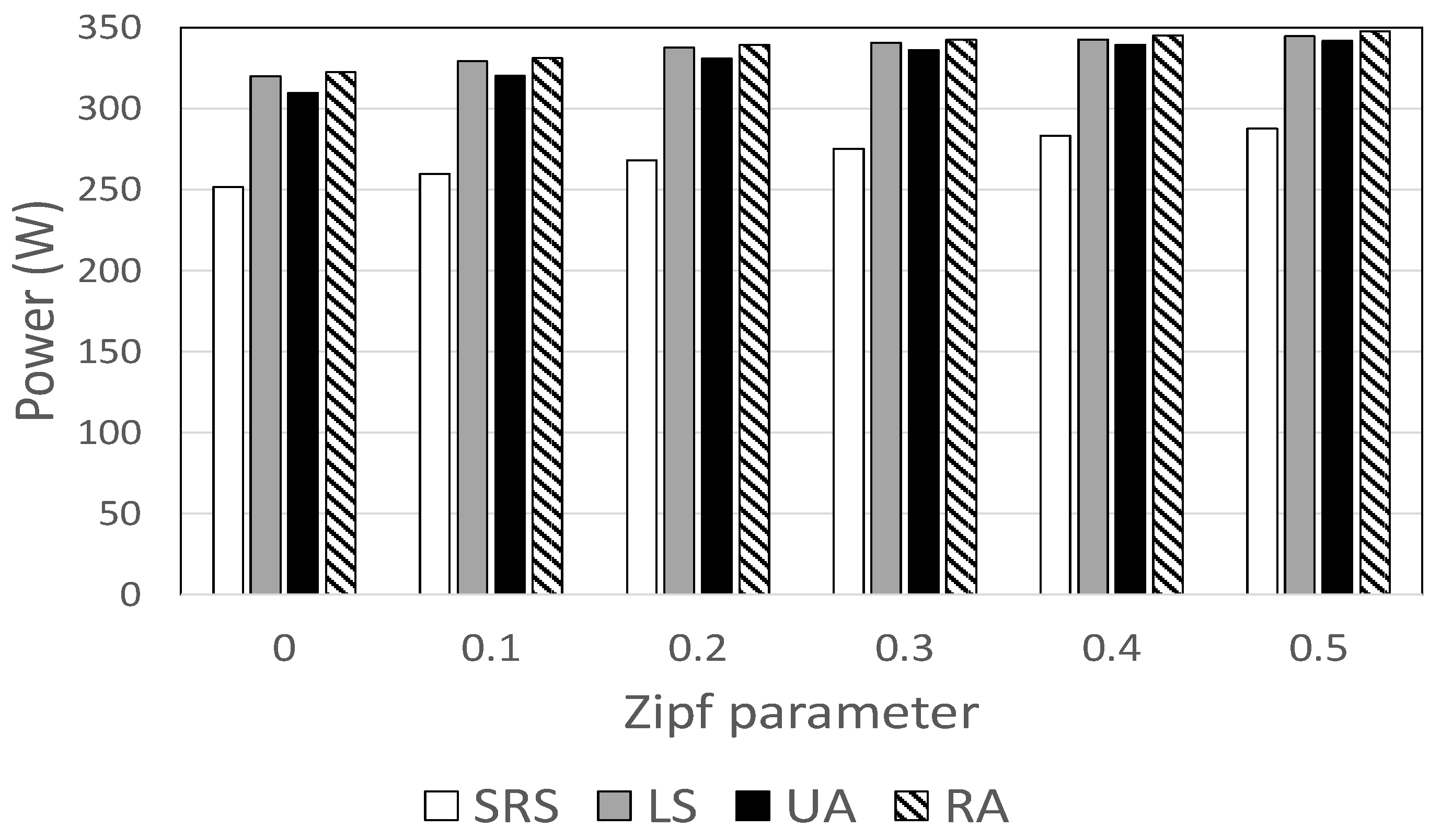

6.6. Effect of Zipf Parameters
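The parameter table gives a default Zipf parameter of 0.271, varied from 0.0 to 0.5. Assuming the Zipf-like form common in VoD studies following Dan et al., in which the ith most popular segment has probability proportional to 1/i^(1 - θ), so that θ = 0 is the most skewed case and larger θ flattens the distribution, a minimal sketch is shown below. The function name `zipf_like_popularity` is hypothetical.

```python
from typing import List


def zipf_like_popularity(num_segments: int, theta: float) -> List[float]:
    """Assumed Zipf-like popularity: rank i (1-based) gets weight
    1 / i**(1 - theta); weights are normalized to sum to 1."""
    if num_segments <= 0:
        raise ValueError("num_segments must be positive")
    weights = [1.0 / i ** (1.0 - theta) for i in range(1, num_segments + 1)]
    total = sum(weights)
    return [w / total for w in weights]
```

For example, with θ = 0 and three segments the probabilities are 6/11, 3/11, and 2/11; with θ = 1 the weights all become 1 and popularity is uniform.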

6.7. Comparison of Power Consumption in Gamma Popularity Distribution

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Available online: https://www.insiderintelligence.com/insights/netflix-subscribers/ (accessed on 12 May 2022).

- Available online: https://fortunelords.com/youtube-statistics/ (accessed on 12 May 2022).

- Krishnappa, D.; Zink, M.; Sitaraman, R. Optimizing the video transcoding workflow in content delivery networks. In Proceedings of the ACM Multimedia Systems Conference, Portland, OR, USA, 18–20 March 2015; pp. 37–48.

- Gao, G.; Wen, Y.; Westphal, C. Dynamic priority-based resource provisioning for video transcoding with heterogeneous QoS. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 1515–1529.

- Mao, H.; Netravali, R.; Alizadeh, M. Neural adaptive video streaming with Pensieve. In Proceedings of the ACM Special Interest Group on Data Communication, New York, NY, USA, 19–23 August 2017; pp. 197–210.

- Spiteri, K.; Urgaonkar, R.; Sitaraman, R. BOLA: Near-optimal bitrate adaptation for online videos. In Proceedings of the IEEE International Conference on Computer Communications, San Francisco, CA, USA, 10–14 April 2016; pp. 1–9.

- Lee, D.; Song, M. Quality-aware transcoding task allocation under limited power in live-streaming system. IEEE Syst. J. 2021, 1–12.

- Available online: https://support.google.com/youtube/answer/2853702 (accessed on 12 May 2022).

- Zhao, H.; Zheng, Q.; Zhang, W.; Du, B.; Li, H. A segment-based storage and transcoding trade-off strategy for multi-version VoD systems in the cloud. IEEE Trans. Multimed. 2017, 19, 149–159.

- Song, M. Minimizing power consumption in video servers by the combined use of solid-state disks and multi-speed disks. IEEE Access 2018, 6, 25737–25746.

- Han, H.; Song, M. QoE-aware video storage power management based on hot and cold data classification. In Proceedings of the ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, Amsterdam, The Netherlands, 15 June 2018; pp. 7–12.

- Kadekodi, S.; Rashmi, K.; Ganger, G. Cluster storage systems gotta have HeART: Improving storage efficiency by exploiting disk-reliability heterogeneity. In Proceedings of the USENIX Conference on File and Storage Technologies, Boston, MA, USA, 25–28 February 2019; pp. 345–358.

- Kadekodi, S.; Maturana, F.; Subramanya, S.; Yang, J.; Rashmi, K.; Ganger, G. PACEMAKER: Avoiding HeART attacks in storage clusters with disk-adaptive redundancy. In Proceedings of the USENIX Symposium on Operating Systems Design and Implementation, Online, 4–6 November 2020; pp. 369–385.

- Matko, V.; Brezovec, B. Improved data center energy efficiency and availability with multilayer node event processing. Energies 2018, 11, 2478.

- Bosten, T.; Mullender, S.; Berber, Y. Power-reduction techniques for data-center storage systems. ACM Comput. Surv. 2013, 45, 3.

- Fernández-Cerero, D.; Fernández-Montes, A.; Velasco, F. Productive efficiency of energy-aware data centers. Energies 2018, 11, 2053.

- Dayarathna, M.; Wen, Y.; Fan, R. Data center energy consumption modeling: A survey. IEEE Commun. Surv. Tutor. 2016, 18, 732–794.

- Yuan, H.; Ahmad, I.; Kuo, C.J. Performance-constrained energy reduction in data centers for video-sharing services. J. Parallel Distrib. Comput. 2015, 75, 29–39.

- Niu, J.; Xu, J.; Xie, L. Hybrid storage systems: A survey of architectures and algorithms. IEEE Access 2018, 6, 13385–13406.

- Li, W.; Baptise, G.; Riveros, J.; Narasimhan, G.; Zhang, T.; Zhao, M. Cachededup: In-line deduplication for flash caching. In Proceedings of the USENIX Conference on File and Storage Technologies, Santa Clara, CA, USA, 27 February–2 March 2016; pp. 301–314.

- Arteaga, D.; Cabrera, J.; Xu, J.; Sundararaman, S.; Machines, P.; Zhao, M. Cloudcache: On-demand flash cache management for cloud computing. In Proceedings of the USENIX Conference on File and Storage Technologies, Santa Clara, CA, USA, 27 February–2 March 2016; pp. 355–369.

- Ryu, M.; Ramachandran, U. Flashstream: A multi-tiered storage architecture for adaptive HTTP streaming. In Proceedings of the ACM Multimedia Conference, New York, NY, USA, 21–25 October 2013; pp. 313–322.

- Manjunath, R.; Xie, T. Dynamic data replication on flash SSD assisted Video-on-Demand servers. In Proceedings of the IEEE International Conference on Computing, Networking and Communications, Maui, HI, USA, 30 January–2 February 2012; pp. 502–506.

- Machida, F.; Hasebe, K.; Abe, H.; Kato, K. Analysis of optimal file placement for energy-efficient file-sharing cloud storage system. IEEE Trans. Sustain. Comput. 2022, 7, 75–86.

- Karakoyunlu, C.; Chandy, J. Exploiting user metadata for energy-aware node allocation in a cloud storage system. J. Comput. Syst. Sci. 2016, 82, 282–309.

- Behzadnia, P.; Yuan, W.; Zeng, B.; Tu, Y.; Wang, X. Dynamic power-aware disk storage management in database servers. In Proceedings of the International Conference on Database and Expert Systems Applications, Porto, Portugal, 5–8 September 2016; pp. 315–325.

- Khatib, M.; Bandic, Z. PCAP: Performance-aware power capping for the disk drive in the cloud. In Proceedings of the USENIX Conference on File and Storage Technologies, Santa Clara, CA, USA, 22–25 February 2016; pp. 227–240.

- Segu, M.; Mokadem, R.; Pierson, J. Energy and expenditure aware data replication strategy. In Proceedings of the IEEE International Conference on Cloud Computing, Milan, Italy, 8–13 July 2019; pp. 421–426.

- Hu, C.; Deng, Y. Aggregating correlated cold data to minimize the performance degradation and power consumption of cold storage nodes. J. Supercomput. 2019, 75, 662–687.

- Park, C.; Jo, Y.; Lee, D.; Kang, K. Change your cluster to cold: Gradually applicable and serviceable cold storage design. IEEE Access 2019, 7, 110216–110226.

- Lee, J.; Song, C.; Kim, S.; Kang, K. Analyzing I/O request characteristics of a mobile messenger and benchmark framework for serviceable cold storage. IEEE Access 2017, 5, 9797–9811.

- Chai, Y.; Du, Z.; Bader, D.; Qin, X. Efficient data migration to conserve energy in streaming media storage systems. IEEE Trans. Parallel Distrib. Syst. 2012, 23, 2081–2093.

- Song, M.; Lee, Y.; Kim, E. Saving disk energy in video servers by combining caching and prefetching. ACM Trans. Multimed. Comput. Commun. Appl. 2014, 10, 15.

- Salkhordeh, R.; Hadizadeh, M.; Asadi, H. An efficient hybrid I/O caching architecture using heterogeneous SSDs. IEEE Trans. Parallel Distrib. Syst. 2019, 30, 1238–1250.

- Tomes, E.; Altiparmak, N. A comparative study of HDD and SSD RAIDs’ impact on server energy consumption. In Proceedings of the IEEE International Conference on Cluster Computing, Honolulu, HI, USA, 5–8 September 2017; pp. 625–626.

- Huang, T.; Chang, D. TridentFS: A hybrid file system for non-volatile RAM, flash memory and magnetic disk. Softw. Pract. Exp. 2016, 46, 291–318.

- Hui, J.; Ge, X.; Huang, X.; Liu, Y.; Ran, Q. E-hash: An energy-efficient hybrid storage system composed of one SSD and multiple HDDs. Springer Lect. Notes Comput. Sci. 2012, 7332, 527–534.

- Chen, C.; Zhang, X.; Zhao, D.X.K.; Zhang, T. Realizing low-cost flash memory based video caching in content delivery systems. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 984–996.

- Zhang, Z.; Chan, S. An approximation algorithm to maximize user capacity for an auto-scaling VoD system. IEEE Trans. Multimed. 2021, 23, 3714–3725.

- Schindler, J.; Griffin, J.; Lumb, C.; Ganger, G. Track-aligned extents: Matching access patterns to disk drive characteristics. In Proceedings of the USENIX Conference on File and Storage Technologies, Monterey, CA, USA, 20–30 January 2002; pp. 175–186.

- Pisinger, D. Algorithms for Knapsack Problems. Ph.D. Thesis, University of Copenhagen, Copenhagen, Denmark, 1995.

- Available online: https://www.seagate.com/www-content/datasheets/pdfs/ironwolf-pro-14tb-DS1914-7-1807US-en_US.pdf (accessed on 12 May 2022).

- Available online: https://www.seagate.com/www-content/datasheets/pdfs/skyhawk-3-5-hddDS1902-7-1711US-en_US.pdf (accessed on 12 May 2022).

- Available online: https://www.westerndigital.com/ko-kr/products/internal-drives/wd-gold-sata-hdd#WD1005FBYZ (accessed on 12 May 2022).

- Dan, A.; Sitaram, D.; Shahabuddin, P. Dynamic batching policies for an on-demand video server. ACM/Springer Multimed. Syst. J. 1996, 4, 112–121.

- Yu, H.; Zheng, D.; Zhao, B.Y.; Zheng, W. Understanding user behavior in large-scale Video-on-Demand systems. In Proceedings of the ACM European Conference on Computer Systems, New York, NY, USA, 18–21 April 2006; pp. 333–344.

- Zhou, Y.; Chen, L.; Yang, C.; Chiu, D. Video popularity dynamics and its implications for replication. IEEE Trans. Multimed. 2015, 17, 1273–1285.

- Available online: https://en.wikipedia.org/wiki/Gamma_distribution (accessed on 12 May 2022).

| Notation | Meaning |
|---|---|
| | Number of video segments |
| | Number of video versions |
| | Number of video requests |
| | jth bitrate version for segment i |
| | bitrate of version |
| | Number of HDDs in HDD group |
| | Number of HDD groups |
| | Segment length (seconds) |
| | Seek time of HDDs in group m |
| | Transfer rate of HDDs in group m |
| | HDD group index of segment i |
| | Time needed to read version in segment |
| | I/O utilization for |
| | Access probability for |
| | Popularity of segment i |
| | Seek, active, idle and standby power for HDDs in group m |
| | Energy gain by serving from SSD |
| | Size of in MB |
| | Variable indicating whether is cached on SSD |
| | Size of SSD in MB |
| | Array of all request indices to HDD group m |
| | Array of requests for SSD among |
| | Number of requests in |
| | Video segment index for request k |
| | Version index for request k |
| | Variable indicating whether request k is served by SSD |
| | Nth element in |
| | I/O Utilization of HDD group m when requests in are served by SSD |
| | Seek and active energy when requests from to are served by SSD |
| | Idle and standby energy when requests from to are served by SSD |
| | Seek and active time when requests from to are served by SSD |
| | Idle and standby time when requests from to are served by SSD |
| | Variable indicating whether requests from to are served by SSD |
| | Lowest value of n that satisfies the condition: |
| | Power for HDD m when requests from to are served by SSD |
| | SSD bandwidth of SSD in MB/s |
| | SSD bandwidth when requests from to are served by SSD |

| Parameter | Default Value | Range Used in the Experiments |
|---|---|---|
| SSD size | 2 TB | 500 GB ∼ 4 TB |
| SSD bandwidth | 1 GB/s | 0.5 GB/s ∼ 1.5 GB/s |
| Version popularity | MVP | HVP, MVP, LVP |
| Zipf parameter | 0.271 | 0.0 ∼ 0.5 |
| Number of the HDD groups | 8 | 4 ∼ 12 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, K.; Song, M. Energy-Saving SSD Cache Management for Video Servers with Heterogeneous HDDs. Energies 2022, 15, 3633. https://doi.org/10.3390/en15103633
