Abstract

Accurate carbon price forecasts are key to managing risk in the carbon financial market. Traditional decomposition strategies do not adequately decompose carbon prices, and the kernel extreme learning machine (KELM) with a single kernel function struggles to adapt to the nonlinearity, nonstationarity, and multiple frequencies of regional carbon prices in China. This study constructs a model, called VMD-ICEEMDAN-RE-SSA-HKELM, to forecast regional carbon prices in China based on the idea of ‘decomposition–reconstruction–integration’. Variational mode decomposition (VMD) is first used to decompose carbon prices, and ICEEMDAN is then used to decompose the residual term, which contains complex information. To reduce the systematic error caused by the larger number of mode components, range entropy (RE) is used to reconstruct the results of the secondary decomposition. A hybrid kernel extreme learning machine (HKELM) optimized by the sparrow search algorithm (SSA) is then used to forecast each subseries of carbon prices. Finally, the carbon price forecast is obtained by linearly superimposing the forecasts of the subseries. Experimental results show that the proposed secondary decomposition strategy is superior to the traditional decomposition strategy, and that the proposed model has significant advantages over a reference group of models.

1. Introduction

The warming climate is a global environmental problem that threatens the sustainable development of human societies. It is mainly caused by excessive carbon emissions, and emissions reduction has thus emerged as the common goal of all countries to mitigate the harmful effects of climate change [1]. The emission reduction of carbon dioxide will contribute enormously to meeting the global climate target [2]. To promote carbon emission reduction, the carbon trading market, based on rights to carbon dioxide emissions, has developed rapidly in recent decades. As the largest developing country and the single largest emitter of greenhouse gases [3,4], China has been attempting to reduce its greenhouse gas emissions. It has established pilot carbon trading markets in Beijing, Tianjin, Shanghai, Chongqing, Guangzhou, Hubei, Shenzhen, and Fujian since 2013. China’s national carbon emissions trading market was officially launched online on 16 July 2021, and is a significant step in helping the country to reduce its carbon footprint and meet its emissions reduction targets. However, the national carbon market remains in its infancy, and needs to be developed in terms of coverage, system design, and market operation. China’s national carbon market still relies on regional carbon markets to continue to provide information and experience [5]. Regional carbon markets are active and help promote regional energy transformation and the development of green and low-carbon industries [6]. The carbon trading market is a powerful means for China to realize the goal of peak carbon dioxide emissions and carbon neutrality. Changes in the price of carbon in the market have an important impact on industry, energy, agricultural development, and stock investment. 
Accurate forecasting of regional carbon market prices in China can not only inform theoretical research on forecasting but also provide an effective basis for regulators to formulate policies on carbon pricing and for investors to avoid risks caused by changes in price.

The remainder of this paper is organized as follows: Section 2 contains the literature review. Section 3 describes the main methods and theories used here, and then details the proposed model for compound forecasting of the price of carbon: the VMD-ICEEMDAN-RE-SSA-HKELM. Section 4 provides an empirical analysis of forecasting regional carbon market prices in China based on the proposed model. Section 5 summarizes the main conclusions of this study and suggests directions for future research in the area.

2. Literature Review

Current methods for forecasting regional carbon prices in China can be divided into three types: traditional econometric models, artificial intelligence (AI)-based methods, and a combination of models. Traditional econometric models, including the generalized autoregressive conditional heteroscedasticity (GARCH) model [7], the structural vector autoregressive model [8], and the E-GARCH model [9], have been widely applied to forecast pilot carbon prices in China. Although the traditional econometric model has strong explanatory power, it cannot adequately describe the nonlinear characteristics of the time series of carbon price. Compared with the traditional statistical model, AI-based methods of forecasting have better capabilities regarding learning, generalization, speed of calculation, and forecasting of nonlinear time series data. They have thus been widely used to forecast carbon prices. Han et al. (2019) introduced the backpropagation neural network (BPNN) to reflect the nonlinear patterns of weekly carbon prices in Shenzhen [10]. A neural network premised on the radial basis function (RBF) was optimized by particle swarm optimization and used to forecast carbon prices. The results showed that it can provide more accurate forecasts than BPNN [11]. Xie et al. (2022) [12] used the long short-term memory network and random forest model to forecast carbon prices in the Hubei and Guangdong carbon markets, and demonstrated the applicability of these models for carbon price forecasting.

Fluctuations in the financial market for carbon prices in China have complex characteristics, such as nonlinearity, nonstationarity, and a wide range, due to the influence of the macro-economy; the prices of coal, oil, and natural gas; trading policies; and trading volumes [13,14]. A single model can only capture limited information regarding carbon prices and cannot adequately describe any complex fluctuations in price. A combination of models with a decomposition algorithm is the most common method for forecasting carbon prices. Signal decomposition can deal with the nonlinearity and nonstationarity of the data on carbon prices from a multi-scale perspective to identify their internal laws and essential characteristics [15]. Li and Lu (2015) [16] used empirical mode decomposition (EMD) and the GARCH model to forecast nonstationary carbon prices and proved that EMD can decompose unsteady data on carbon prices into a steady series. Yao et al. (2017) [17] used EMD and SVM to forecast carbon prices and showed that the forecasting ability of the combined model is better than the ability of AR and SVM alone. However, mode aliasing can easily occur when EMD is used to decompose carbon prices. Considering this problem, EEMD, VMD, and other methods for signal processing have been used to decompose carbon prices. Zhou et al. (2018) developed a hybrid architecture that combines the EEMD, extreme learning machine (ELM), SVM, and PSO-ELM for forecasting the price of carbon, and their results showed that the forecasting accuracy of the combined model was higher than each of the ELM, BPNN, and SVM [18]. LSSVM converges more quickly and is simpler than SVM. Wu and Liu (2020) forecasted carbon prices based on EEMD and LSSVM and verified that EEMD can reduce the complexity regarding fluctuations in the carbon price and yield better forecasts than the single LSSVM [19]. However, EEMD still encounters the problem of residual noise. 
A combination of the complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) and RBFNN yields high accuracy in predicting carbon prices [20]. However, the CEEMDAN method may still incur residual noise, and signal information lags in the early stage of decomposition [21]. Hao et al. (2020) designed a hybrid method that integrates the improved complete ensemble empirical mode decomposition with adaptive noise (ICEEMDAN), the sample entropy (SE) algorithm, and the weighted regularized extreme learning machine to forecast carbon prices. The results showed that ICEEMDAN improves the accuracy of carbon price forecasting more than either EMD or EEMD [22]. Zhou and Chen (2021) designed the ICEEMDAN-SSA-ELM model to forecast carbon prices, and the empirical results showed that the decomposition obtained by ICEEMDAN is more regular than those of CEEMDAN and EEMD. Moreover, the forecasting performance of ELM was better than that of BP and LSSVM [23]. VMD can solve the problem of mode aliasing and decompose carbon prices to render them more stable [24]. Chai et al. (2021) [25] proposed a combination of VMD, SE, and the PSO-ELM model to forecast carbon prices, and showed that VMD has a better decomposition ability than EMD. Sun et al. (2021) proposed a combination of VMD, range entropy, SVM, and the bidirectional long short-term memory model to forecast carbon prices, and showed that VMD yields better decomposition in a shorter time than EEMD and CEEMD, and that range entropy can strike a balance between the complexity of data on carbon prices and systematic forecasting errors [26]. Sun and Huang (2020) proposed a secondary decomposition algorithm based on the EMD-VMD-BP model to forecast carbon prices and showed that it can forecast carbon prices more accurately than EMD [27].
Zhou et al. (2021) used VMD to decompose the first intrinsic mode function (IMF1) generated by EMD and combined it with a kernel-based extreme learning machine (KELM) optimized by SSA to construct the EMD-VMD-SSA-KELM model for forecasting carbon prices. Their results showed that the decomposition of carbon prices based on EMD-VMD can improve the forecasting performance, and that the KELM model optimized by SSA delivers a better forecasting performance than KELM, LSSVM, and ELM [28]. Zhou and Wang (2021) used VMD to decompose IMF1 generated by the CEEMDAN decomposition of initial carbon prices, and then used ELM to forecast carbon prices. Their results showed that the model is effective and stable at forecasting carbon prices [29].

To sum up, significant advances have been made in the literature on forecasting the price of carbon. However, the following shortcomings persist: (1) Existing studies have used KELM with a single kernel to forecast carbon prices, which struggles to adapt to the complex fluctuations in carbon price series. (2) The decomposition of carbon prices in existing research is incomplete, which tends to reduce forecasting accuracy. (3) Previous studies have directly forecasted the mode components of carbon prices after secondary decomposition, but forecasting a large number of modes increases systematic error.

The contributions of this paper are as follows: (1) We introduce a hybrid kernel-based extreme learning machine (HKELM) optimized by SSA to forecast carbon prices, which improves the accuracy of the forecasts. (2) ICEEMDAN is used after VMD to decompose the residual term of the carbon price, which contains complex information. This ensures the full decomposition of nonlinear and nonstationary carbon price series, and improves the accuracy of forecasting the complex residual term. (3) Range entropy (RE) is used to reconstruct the modes of the carbon price to reduce systematic error.

3. Methods

This section briefly introduces the VMD algorithm, ICEEMDAN algorithm, range entropy, and SSA-HKELM model.

3.1. Variational Mode Decomposition

VMD is a method of signal decomposition [30]. It splits the original signal into several more regular, band-limited intrinsic mode functions, abbreviated here as VMF components, and avoids the mode aliasing that arises in EMD, which yields a more accurate decomposition. VMD has a stricter theoretical framework than EMD and has significant advantages in handling signal noise and mode aliasing [31].

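Given the original time series of carbon prices $y(t)$, the VMD algorithm transfers the process of their decomposition to a variational framework for signal-adaptive decomposition by searching for the optimal solution of the constrained variational model. It can decompose the carbon price into a series of VMF components representing information on the frequency of fluctuations in the original time series of carbon prices. The constrained variational problem is as follows:

$$\min_{\{u_k\},\{\omega_k\}} \left\{ \sum_{k=1}^{K} \left\| \partial_t \left[ \left( \delta(t) + \frac{j}{\pi t} \right) * u_k(t) \right] e^{-j\omega_k t} \right\|_2^2 \right\} \quad \text{s.t.} \quad \sum_{k=1}^{K} u_k(t) = y(t) \tag{1}$$

In Equation (1), $K$ is the number of decomposed VMF components of the carbon price by VMD, and $u_k$ and $\omega_k$ represent each VMF and its central frequency, respectively.

To find the optimal solution of the above constrained problem of variational optimization, the quadratic penalty term $\alpha$ is introduced to reduce interference by Gaussian noise, and the Lagrange multiplier $\lambda(t)$ is introduced to increase the strictness of the constraint. Equation (1) is transformed into the following unconstrained optimization problem:

$$L(\{u_k\},\{\omega_k\},\lambda) = \alpha \sum_{k=1}^{K} \left\| \partial_t \left[ \left( \delta(t) + \frac{j}{\pi t} \right) * u_k(t) \right] e^{-j\omega_k t} \right\|_2^2 + \left\| y(t) - \sum_{k=1}^{K} u_k(t) \right\|_2^2 + \left\langle \lambda(t),\, y(t) - \sum_{k=1}^{K} u_k(t) \right\rangle \tag{2}$$

The alternating direction method of multipliers is used to optimize the mode components and center frequency, and search for the saddle point of the augmented Lagrange function. $\hat{u}_k^{n+1}$, $\omega_k^{n+1}$, and $\hat{\lambda}^{n+1}$ are alternately optimized in each iteration. The formulae for them are as follows:

$$\hat{u}_k^{n+1}(\omega) = \frac{\hat{y}(\omega) - \sum_{i \neq k} \hat{u}_i(\omega) + \hat{\lambda}(\omega)/2}{1 + 2\alpha(\omega - \omega_k)^2} \tag{3}$$

$$\omega_k^{n+1} = \frac{\int_0^{\infty} \omega \left| \hat{u}_k(\omega) \right|^2 d\omega}{\int_0^{\infty} \left| \hat{u}_k(\omega) \right|^2 d\omega} \tag{4}$$

$$\hat{\lambda}^{n+1}(\omega) = \hat{\lambda}^{n}(\omega) + \tau \left( \hat{y}(\omega) - \sum_{k=1}^{K} \hat{u}_k^{n+1}(\omega) \right) \tag{5}$$

Once the condition for stopping the iterations, $\sum_{k=1}^{K} \left\| \hat{u}_k^{n+1} - \hat{u}_k^{n} \right\|_2^2 / \left\| \hat{u}_k^{n} \right\|_2^2 < \varepsilon$, is satisfied, the process of carbon price decomposition ends. Then, the original signal of the carbon price is decomposed into $K$ components. In Equation (5), $\tau$ is the tolerance of noise that satisfies the requirements of fidelity of the decomposition of signals of the carbon price. According to the above VMD method, the sum of the VMF data is subtracted from the original time sequence of carbon prices to obtain the residual term of VMD.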
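The three alternating updates and the residual term can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: spectra are plain lists of complex numbers on a discrete frequency grid, the integrals of the center-frequency update are replaced by sums, and all function and parameter names are hypothetical.

```python
def update_mode(y_hat, others_hat, lam_hat, freqs, omega_k, alpha):
    """Mode update: a Wiener-style filter centred on omega_k.

    others_hat is the summed spectrum of all other modes (assumed precomputed).
    """
    return [
        (y - o + lam / 2) / (1 + 2 * alpha * (w - omega_k) ** 2)
        for y, o, lam, w in zip(y_hat, others_hat, lam_hat, freqs)
    ]


def update_center_frequency(u_hat, freqs):
    """Center-frequency update: power-weighted mean frequency of the mode."""
    power = [abs(u) ** 2 for u in u_hat]
    return sum(w * p for w, p in zip(freqs, power)) / sum(power)


def update_multiplier(lam_hat, y_hat, modes_sum_hat, tau):
    """Multiplier update: dual ascent with step tau (the noise tolerance)."""
    return [lam + tau * (y - s) for lam, y, s in zip(lam_hat, y_hat, modes_sum_hat)]


def vmd_residual(y, modes):
    """Residual term: original series minus the sum of all VMFs."""
    return [value - sum(mode[t] for mode in modes) for t, value in enumerate(y)]


# Toy spectrum with energy at 0.1 and 0.3; the mode centred on 0.3 keeps the 0.3 peak.
freqs = [0.0, 0.1, 0.2, 0.3, 0.4]
y_hat = [0j, 1 + 0j, 0j, 2 + 0j, 0j]
zeros = [0j] * len(freqs)
u_hat = update_mode(y_hat, zeros, zeros, freqs, omega_k=0.3, alpha=2000.0)
omega_new = update_center_frequency(u_hat, freqs)
```

A large `alpha` narrows the filter, which is why the component at 0.1 is almost entirely suppressed in the toy example. The residual returned by `vmd_residual` is the series that is passed on to the second decomposition stage.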

3.2. Improved Complete Ensemble Empirical Mode Decomposition with Adaptive Noise

To address the mode aliasing, residual noise, and spurious components produced by the EMD, EEMD, and CEEMDAN algorithms, Colominas et al. (2014) proposed the ICEEMDAN method [32]. It mitigates mode aliasing and reduces the residual noise in the mode components. It has a better ability than the other methods to extract components at different scales in a nonstationary and complex time series.

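Let $E_j(\cdot)$ represent the operator of the $j$-th IMF produced by EMD. $M(\cdot)$ represents the local mean obtained from the upper and lower envelopes of the sequence, $x$ represents the residual term containing complex information after VMD, $w^{(i)}$ represents the $i$-th realization of the added white noise, $i = 1, 2, \ldots, I$, $\beta_j$ is the parameter that controls the white noise energy at the $j$-th stage, and $J$ is the maximum number of iterations. The $j$-th mode component produced by ICEEMDAN is denoted by $\mathrm{IMF}_j$, and $\langle \cdot \rangle$ denotes the average over the $I$ noise realizations. Based on the flow of the EMD algorithm, the steps of the ICEEMDAN algorithm are as follows:

Step 1: The first residue is obtained by computing the local means of the noisy realizations with EMD:

$$r_1 = \left\langle M\left( x + \beta_0 E_1\left(w^{(i)}\right) \right) \right\rangle \tag{6}$$

where $r_1$ is the first residue in Equation (6).

Step 2: Calculate the first mode IMF1 based on Equation (7):

$$\mathrm{IMF}_1 = x - r_1 \tag{7}$$

Step 3: Calculate the second residual as the mean of local means, and define the second mode:

$$\mathrm{IMF}_2 = r_1 - r_2 = r_1 - \left\langle M\left( r_1 + \beta_1 E_2\left(w^{(i)}\right) \right) \right\rangle \tag{8}$$

Step 4: Calculate the $j$-th mode:

$$\mathrm{IMF}_j = r_{j-1} - r_j = r_{j-1} - \left\langle M\left( r_{j-1} + \beta_{j-1} E_j\left(w^{(i)}\right) \right) \right\rangle \tag{9}$$

Step 5: Repeat step 4 until the residual can no longer be decomposed or the maximum number of iterations is reached.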
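The control flow of Steps 1 to 5 can be illustrated with a toy sketch. Two loud assumptions apply: a 3-point moving average stands in for the envelope-based local mean, and repeated application of that average stands in for the EMD operator that extracts the j-th mode of the noise. The noise scale is also simplified to a fixed fraction `eps` of the current residue's standard deviation. None of this is the authors' implementation; it only shows how each mode is the difference between consecutive residues.

```python
import math
import random
import statistics


def local_mean(series):
    """Stand-in for the local mean: 3-point moving average with edge replication.
    Real ICEEMDAN averages the upper and lower spline envelopes instead."""
    padded = [series[0]] + list(series) + [series[-1]]
    return [(padded[t] + padded[t + 1] + padded[t + 2]) / 3 for t in range(len(series))]


def emd_mode(series, j):
    """Stand-in for the j-th EMD mode: detail left after j smoothing passes."""
    current = list(series)
    for _ in range(j - 1):
        current = local_mean(current)
    smooth = local_mean(current)
    return [c - s for c, s in zip(current, smooth)]


def iceemdan_sketch(x, n_modes=3, n_realizations=20, eps=0.2, seed=0):
    rng = random.Random(seed)
    noises = [[rng.gauss(0.0, 1.0) for _ in x] for _ in range(n_realizations)]
    modes, residue = [], list(x)
    for j in range(1, n_modes + 1):
        beta = eps * statistics.pstdev(residue)  # simplified noise-energy rule
        local_means = []
        for w in noises:
            e_j = emd_mode(w, j)
            perturbed = [r + beta * e for r, e in zip(residue, e_j)]
            local_means.append(local_mean(perturbed))
        # New residue: average of the local means over all noise realizations.
        new_residue = [sum(col) / n_realizations for col in zip(*local_means)]
        # j-th mode: previous residue minus new residue.
        modes.append([r - nr for r, nr in zip(residue, new_residue)])
        residue = new_residue
    return modes, residue


x = [math.sin(0.3 * t) + 0.05 * t for t in range(60)]
modes, residue = iceemdan_sketch(x)
```

Because every mode is defined as a difference of consecutive residues, the modes and the final residue always add back up to the input series, whatever local-mean operator is plugged in.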

3.3. Range Entropy

After VMD and ICEEMDAN processing, the original series of carbon prices is decomposed into several, relatively stable, mode series. However, if the decomposed mode components are forecasted directly, error can easily accumulate owing to the large number of forecasts. Therefore, it is necessary to reconstruct the mode components of the carbon price to make the information more concentrated and reduce the cumulative forecasting error. We reconstruct the modes of the carbon price according to the complexity of the time series of each mode. Approximate entropy and SE are common methods used to measure the complexity of time series signals. However, the calculation of the approximate entropy is biased owing to the inherent comparison among its own data segments. The value of the approximate entropy is related to the length of the data, and it has poor consistency. SE is more accurate than approximate entropy. The higher the self-similarity of the sequence, the lower its SE. The more complex the sequence is, the higher the SE [33]. However, the SE is affected by changes in the amplitude of the signal [34]. To solve these problems, Omidvarnia proposed range entropy (RE) [35]. RE is more robust to changes in nonstationary signals than approximate entropy and SE. To improve the objectivity of the reconstruction and reduce the cumulative error in forecasting, this paper uses RE to reconstruct the sequences of modes of the carbon price. Specifically, RE is used to measure the complexity of the signals of each mode of the carbon price, and modes with lower entropy values are merged into a new subseries in each case of decomposition. The steps of the algorithm for range entropy are given below.

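For the time series of each mode component of the carbon price, $\{x(i),\ i = 1, 2, \ldots, N\}$, $N$ is the length of the data.

Step 1: The time series is converted into a group of $m$-dimensional vectors in order, namely, $X(1), X(2), \ldots, X(N-m+1)$, where $X(i) = [x(i), x(i+1), \ldots, x(i+m-1)]$.

Step 2: Calculate the distance between $X(i)$ and $X(j)$, defined as $d[X(i), X(j)]$:

$$d[X(i), X(j)] = \frac{\max_k \left| x(i+k) - x(j+k) \right| - \min_k \left| x(i+k) - x(j+k) \right|}{\max_k \left| x(i+k) - x(j+k) \right| + \min_k \left| x(i+k) - x(j+k) \right|}, \quad k = 0, 1, \ldots, m-1 \tag{10}$$

Step 3: Given the similarity tolerance $r$, for each $i$, count the number of $j$ for which $d[X(i), X(j)] < r$. Then, calculate its ratio to the total number of distances, $N - m$, recorded as $B_i^m(r)$:

$$B_i^m(r) = \frac{1}{N-m}\, \mathrm{num}\left\{ d[X(i), X(j)] < r \right\} \tag{11}$$

In Equation (11), $i, j = 1, 2, \ldots, N-m+1$, $i \neq j$, and $\mathrm{num}\{\cdot\}$ is the number of instances of $d[X(i), X(j)] < r$.

Step 4: Calculate the average value of $B_i^m(r)$:

$$B^m(r) = \frac{1}{N-m+1} \sum_{i=1}^{N-m+1} B_i^m(r) \tag{12}$$

Step 5: Increase the number of dimensions to $m+1$, repeat Steps 1 to 3, and calculate the average value of $B_i^{m+1}(r)$:

$$B^{m+1}(r) = \frac{1}{N-m} \sum_{i=1}^{N-m} B_i^{m+1}(r) \tag{13}$$

Range entropy is defined as:

$$\mathrm{RE}(m, r) = \lim_{N \to \infty} \left\{ -\ln \frac{B^{m+1}(r)}{B^m(r)} \right\} \tag{14}$$

When $N$ is finite, the above is the estimated value of range entropy:

$$\mathrm{RE}(m, r, N) = -\ln \frac{B^{m+1}(r)}{B^m(r)} \tag{15}$$

The value of range entropy is related to $m$ and $r$. In this paper, $m$ is set to 2 and $r$ to 0.5. The advantage of reconstructing the modes of the carbon price using range entropy is that it is not excessively sensitive to changes in the amplitude of the signal. We can then objectively measure the complexity of signals of the time series of the modes of the carbon price after secondary decomposition.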
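The five steps translate almost line by line into Python. The sketch below is a plain, quadratic-time illustration, assuming the range distance is the normalized difference between the largest and smallest element-wise gaps of two templates. The treatment of identical templates (distance set to zero) is an assumption of this sketch, and the function names are hypothetical.

```python
import math
import random


def range_distance(a, b):
    """Range distance between two templates (Step 2)."""
    gaps = [abs(p - q) for p, q in zip(a, b)]
    hi, lo = max(gaps), min(gaps)
    # 0/0 arises only for identical templates; treated as distance 0 (assumption).
    return (hi - lo) / (hi + lo) if hi + lo > 0 else 0.0


def match_fraction(x, dim, r):
    """Average fraction of template pairs closer than r (Steps 1, 3, and 4)."""
    n = len(x)
    templates = [x[i:i + dim] for i in range(n - dim + 1)]
    fractions = []
    for i, a in enumerate(templates):
        count = sum(
            1 for j, b in enumerate(templates)
            if j != i and range_distance(a, b) < r
        )
        fractions.append(count / (n - dim))
    return sum(fractions) / len(fractions)


def range_entropy(x, m=2, r=0.5):
    """Finite-sample range entropy: -ln(B^{m+1}(r) / B^m(r))."""
    return -math.log(match_fraction(x, m + 1, r) / match_fraction(x, m, r))


rng = random.Random(1)
series = [rng.gauss(0.0, 1.0) for _ in range(150)]
re_original = range_entropy(series)
re_scaled = range_entropy([4.0 * v for v in series])  # same series, 4x amplitude
```

Rescaling the series leaves the value unchanged because the distance is a ratio of gaps, which is the amplitude robustness the text attributes to range entropy. The defaults `m=2` and `r=0.5` follow the settings stated above.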

3.4. Hybrid Kernel-Based Extreme Learning Machine Optimized by the Sparrow Search Algorithm

3.4.1. Hybrid Kernel-Based Extreme Learning Machine

The KELM model is a neural network algorithm based on ELM that uses kernel mapping instead of random mapping. It was proposed by Huang [36]. Compared with the ELM algorithm, KELM has a better generalization ability, is well suited to regression forecasting problems, and yields more stable forecasts [37]. KELM is faster than the SVM algorithm and obtains a similar or better forecasting accuracy [38]. Forecasting performance varies with the kernel function used [39]. The standard KELM algorithm with a single kernel function struggles to adapt to the complex fluctuations in each subseries of carbon prices. HKELM, with a hybrid kernel function, has a better learning ability and generalization performance than KELM [40], and can improve forecasting performance [41]. Therefore, we use the HKELM model to forecast each subseries of the carbon price to overcome the defects of KELM and avoid the subjectivity of manually selecting a single kernel function.

The training dataset of each subseries of carbon prices is $\{(x_i, t_i)\}_{i=1}^{N}$, where $x_i$ is the input to the training sample of the subseries of carbon prices. It represents the lagged historical data affecting each subseries. $t_i$ is the output of the forecasting model, and represents each subseries of carbon prices after the decomposition and reconstruction of the carbon price. The standard output of KELM is:

$$f(x) = \begin{bmatrix} K(x, x_1) \\ \vdots \\ K(x, x_N) \end{bmatrix}^{\top} \left( \frac{I}{C} + \Omega \right)^{-1} T \tag{16}$$

In the above, $I$ represents the identity matrix, $\Omega$ represents the kernel matrix with entries $\Omega_{ij} = K(x_i, x_j)$, $T$ represents the target output matrix, and $C$ represents the regularization coefficient. The larger $C$ is, the higher the accuracy of the model, and the smaller $C$ is, the stronger the generalization ability of the model. Therefore, it is important to determine an appropriate value of $C$.

The common kernel functions of KELM are as follows:

- ➀ RBF kernel function: $K_{\mathrm{RBF}}(x, x_i) = \exp\left( -\dfrac{\left\| x - x_i \right\|^2}{a^2} \right)$

- ➁ Poly-kernel function: $K_{\mathrm{poly}}(x, x_i) = \left( x \cdot x_i + c \right)^d$

where a, c, and d are parameters of the kernel functions. The selection of an appropriate kernel function is important for the KELM regression. The RBF kernel function and the poly-kernel function are two common kernel functions. The former is a typical local kernel function with a weak generalization ability and strong learning ability while the latter is a typical global kernel function. The poly-kernel function has a better generalization ability than the RBF kernel function, but its learning ability is poorer [42]. The hybrid kernel function is a combination of the RBF kernel function and the poly-kernel function. It has a better learning ability and generalization performance than both and can forecast complex carbon prices more accurately. The hybrid kernel function is expressed as:

$$K_{H}(x, x_i) = w\, K_{\mathrm{RBF}}(x, x_i) + (1 - w)\, K_{\mathrm{poly}}(x, x_i), \quad 0 \le w \le 1 \tag{17}$$

Because each subseries of carbon prices has prominent linear or nonlinear fluctuations that have a certain persistence, the single KELM algorithm struggles to capture the different characteristics of the complex fluctuation of each subseries. HKELM with the hybrid kernel function can better represent the time series of carbon prices, and we thus use it to forecast each subseries. However, the forecasting of carbon prices based on HKELM is affected by the regularization coefficient $C$; parameters a, c, and d of the kernel function; and the weight $w$ used in the model. To improve the accuracy, it is necessary to optimize each parameter.
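A kernel ELM with a weighted RBF-plus-polynomial kernel fits in a short, dependency-free Python sketch. Several things here are assumptions rather than the authors' code: the RBF kernel is written with width `a` as `exp(-||x - z||^2 / a^2)`, the weight `w` multiplies the RBF part, a small Gaussian-elimination routine replaces a library solver, and all function names are hypothetical.

```python
import math


def rbf_kernel(x, z, a):
    return math.exp(-sum((p - q) ** 2 for p, q in zip(x, z)) / a ** 2)


def poly_kernel(x, z, c, d):
    return (sum(p * q for p, q in zip(x, z)) + c) ** d


def hybrid_kernel(x, z, a, c, d, w):
    """Weighted combination of a local (RBF) and a global (polynomial) kernel."""
    return w * rbf_kernel(x, z, a) + (1 - w) * poly_kernel(x, z, c, d)


def solve(matrix, rhs):
    """Solve matrix @ sol = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * sol[k] for k in range(r + 1, n))
        sol[r] = (aug[r][n] - tail) / aug[r][r]
    return sol


def fit_hkelm(inputs, targets, C, a, c, d, w):
    """Output weights: solve (I / C + Omega) beta = T."""
    n = len(inputs)
    system = [
        [hybrid_kernel(inputs[i], inputs[j], a, c, d, w) + (1.0 / C if i == j else 0.0)
         for j in range(n)]
        for i in range(n)
    ]
    return solve(system, targets)


def predict_hkelm(x, inputs, beta, a, c, d, w):
    """Forecast: kernel row of x against the training inputs, times beta."""
    return sum(hybrid_kernel(x, xi, a, c, d, w) * b for xi, b in zip(inputs, beta))


# Toy regression (t = x^2) with one lagged input per sample.
inputs = [[0.0], [0.5], [1.0], [1.5]]
targets = [0.0, 0.25, 1.0, 2.25]
params = dict(a=1.0, c=1.0, d=2, w=0.5)
beta = fit_hkelm(inputs, targets, C=1e8, **params)
fitted = [predict_hkelm(x, inputs, beta, **params) for x in inputs]
```

With a very large `C` the model nearly interpolates the training targets, while a smaller `C` shrinks the output weights and smooths the fit. That trade-off is why `C`, the kernel parameters, and `w` are all left to the optimizer.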

3.4.2. Sparrow Search Algorithm

Xue and Shen proposed SSA in 2020 [43]. Compared with other intelligent optimization algorithms, such as PSO, it has the advantages of a stronger optimization ability, faster convergence, higher stability, and stronger robustness [44]. We use SSA to optimize the regularization coefficient; the parameters a, c, and d of the kernel function; and the weight of HKELM.

SSA obtains the results of the optimization by imitating the foraging process of sparrows. Accordingly, it divides the sparrow population into two parts: discoverers and joiners. Suppose there are $N$ sparrows in a $D$-dimensional search space, and the position of sparrow $i$ is $X_i = [x_{i,1}, x_{i,2}, \ldots, x_{i,D}]$, $i = 1, 2, \ldots, N$, where $x_{i,j}$ represents the position of the $i$-th sparrow in the $j$-th dimension. Discoverers usually account for 10% to 20% of the total sparrow population. In each iteration, the positions of the discoverers are updated as follows:

$$x_{i,j}^{t+1} = \begin{cases} x_{i,j}^{t} \cdot \exp\left( \dfrac{-i}{\alpha \cdot iter_{\max}} \right), & R_2 < ST \\ x_{i,j}^{t} + Q \cdot L, & R_2 \ge ST \end{cases} \tag{18}$$

In Equation (18), $t$ is the current number of iterations; $iter_{\max}$ is the maximum number of iterations; $\alpha$ is a random number in the interval (0,1]; $Q$ is a random number subject to a standard normal distribution; $L$ represents a matrix of size 1 × D, all elements of which are 1; $R_2$ ∈ [0, 1] is a warning value; and $ST$ ∈ [0.5, 1] is a safe value. When $R_2 < ST$, there are no predators around the foraging location, and discoverers can continue to expand the search scope. When $R_2 \ge ST$, predators have appeared around the foraging location, and the discoverers sound an alarm and lead all sparrows to a safe place for foraging.

Sparrows other than discoverers are all joiners. In the process of foraging, a small number of joiners always pay attention to the dynamics of the discoverers. Once a discoverer finds a better food source, the joiners receive this information from it and follow. The location of the joiners is updated as follows:

$$x_{i,j}^{t+1} = \begin{cases} Q \cdot \exp\left( \dfrac{x_{\mathrm{worst}}^{t} - x_{i,j}^{t}}{i^2} \right), & i > N/2 \\ x_{P}^{t+1} + \left| x_{i,j}^{t} - x_{P}^{t+1} \right| \cdot A^{+} \cdot L, & i \le N/2 \end{cases} \tag{19}$$

In Equation (19), $x_{\mathrm{worst}}^{t}$ is the worst position of the sparrow in D dimensions at the t-th iteration of the population, and $x_{P}^{t+1}$ is its optimal position at the (t + 1)-th iteration. $A$ is a matrix of size 1 × D whose elements are randomly assigned 1 or −1, and $A^{+} = A^{\top}(AA^{\top})^{-1}$. When $i > N/2$, the i-th joiner cannot obtain food. To increase its energy, it needs to fly to other places for feeding. When $i \le N/2$, the i-th joiner randomly finds a position near the current best position for foraging.

Sparrows used for reconnaissance and early warning generally account for 10% to 20% of the total population. For convenience, these sparrows are called vigilant sparrows, and their positions are updated as follows:

$$x_{i,j}^{t+1} = \begin{cases} x_{\mathrm{best}}^{t} + \beta \left| x_{i,j}^{t} - x_{\mathrm{best}}^{t} \right|, & f_i > f_g \\ x_{i,j}^{t} + K \left( \dfrac{\left| x_{i,j}^{t} - x_{\mathrm{worst}}^{t} \right|}{(f_i - f_w) + \varepsilon} \right), & f_i = f_g \end{cases} \tag{20}$$

In Equation (20), $x_{\mathrm{best}}^{t}$ is the globally optimal location; β is a random number drawn from N(0, 1); K represents the direction of the sparrow’s movement and is a random number in the interval [−1, 1]; $f_i$ is the fitness value of the current sparrow; $f_g$ and $f_w$ are the global optimal and worst fitness values, respectively; and $\varepsilon$ is a minimal constant used to avoid the situation where the denominator is zero.

When $f_i > f_g$, the vigilant sparrow is at the edge of the group, while the global optimal position and its surroundings are safe. When $f_i = f_g$, the vigilant sparrow is at the center of the population and has become aware of predators. It moves closer to other sparrows to reduce the risk of predation.

When the SSA is used to optimize the parameters of the HKELM model for each subsequence of carbon prices, the selection of the fitness function is important. Because the root mean-squared error can directly reflect the forecasting performance of the HKELM regression, it is taken as the fitness function in this paper.
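The three update rules can be combined into a compact minimizer. The sketch below follows the discoverer, joiner, and vigilant updates as commonly stated for SSA, with several assumptions made for brevity: one scalar box constraint for all dimensions, the leader taken as the best discoverer after its update, a 1e-50 constant in the vigilant denominator, and a sphere function standing in for the RMSE of an HKELM forecast. Names and default values are hypothetical.

```python
import math
import random


def sparrow_search(fitness, dim, lower, upper, n_sparrows=20, n_iter=50,
                   discoverer_ratio=0.2, vigilant_ratio=0.2, safe_value=0.8, seed=0):
    rng = random.Random(seed)

    def clip(v):
        return min(max(v, lower), upper)

    pop = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_sparrows)]
    fit = [fitness(p) for p in pop]
    start = min(range(n_sparrows), key=fit.__getitem__)
    best, best_fit = pop[start][:], fit[start]
    n_disc = max(1, int(discoverer_ratio * n_sparrows))
    n_vig = max(1, int(vigilant_ratio * n_sparrows))

    for _ in range(n_iter):
        order = sorted(range(n_sparrows), key=fit.__getitem__)  # best first
        pop = [pop[i] for i in order]
        worst = pop[-1][:]
        warning = rng.random()
        # Discoverers: contract the search when safe, otherwise take a random step.
        for i in range(n_disc):
            if warning < safe_value:
                alpha = 1.0 - rng.random()  # random number in (0, 1]
                shrink = math.exp(-(i + 1) / (alpha * n_iter))
                pop[i] = [clip(v * shrink) for v in pop[i]]
            else:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [clip(v + q) for v in pop[i]]
        leader = min(pop[:n_disc], key=fitness)[:]
        # Joiners: the worse half flies elsewhere, the rest forage near the leader.
        for i in range(n_disc, n_sparrows):
            if i + 1 > n_sparrows / 2:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [clip(q * math.exp((wv - v) / (i + 1) ** 2))
                          for v, wv in zip(pop[i], worst)]
            else:
                signs = [rng.choice((-1, 1)) for _ in range(dim)]
                step = sum(abs(v - lv) * s for v, lv, s in zip(pop[i], leader, signs)) / dim
                pop[i] = [clip(lv + step) for lv in leader]
        fit = [fitness(p) for p in pop]
        # Vigilant sparrows: move toward the best position or away from the worst.
        f_best, f_worst = min(fit), max(fit)
        g_best, g_worst = pop[fit.index(f_best)][:], pop[fit.index(f_worst)][:]
        for i in rng.sample(range(n_sparrows), n_vig):
            if fit[i] > f_best:
                b = rng.gauss(0.0, 1.0)
                pop[i] = [clip(gv + b * abs(v - gv)) for v, gv in zip(pop[i], g_best)]
            else:
                k = rng.uniform(-1.0, 1.0)
                denom = (fit[i] - f_worst) + 1e-50
                pop[i] = [clip(v + k * abs(v - wv) / denom) for v, wv in zip(pop[i], g_worst)]
            fit[i] = fitness(pop[i])
        current = min(range(n_sparrows), key=fit.__getitem__)
        if fit[current] < best_fit:
            best, best_fit = pop[current][:], fit[current]
    return best, best_fit


def sphere(p):
    """Toy objective; in the paper's setting this would be the RMSE of an HKELM fit."""
    return sum(v * v for v in p)


best, best_fit = sparrow_search(sphere, dim=2, lower=-5.0, upper=5.0)
```

To tune HKELM this way, each sparrow position would encode the regularization coefficient, the kernel parameters a, c, and d, and the kernel weight, and the fitness function would return the RMSE of the resulting forecast. Note that the contracting discoverer step favors optima near the origin, so the sphere function is a deliberately easy test case.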

3.5. Framework of the Proposed Carbon Price Forecasting Model

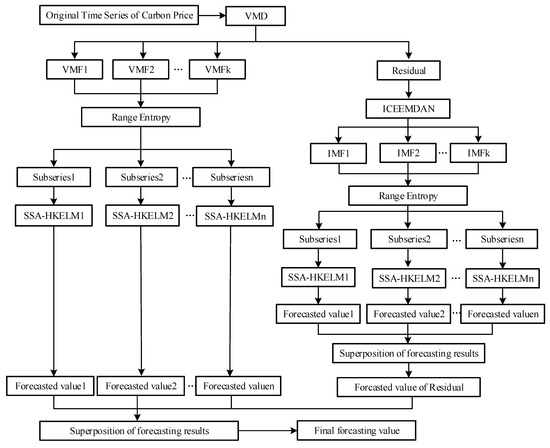

In view of the nonstationary, nonlinear, multi-frequency, and complex fluctuation-related characteristics of regional carbon prices in China, we construct a combined forecasting model based on secondary decomposition and the hybrid kernel-based extreme learning machine. The process diagram of the VMD-ICEEMDAN-RE-SSA-HKELM model is shown in Figure 1.

Figure 1. Flowchart of the proposed model for forecasting the price of carbon, VMD-ICEEMDAN-RE-SSA-HKELM.

According to Figure 1, the steps of modeling are as follows:

- (1) VMD is used to decompose the original sequence of carbon prices to obtain each VMF, and the sum of the VMFs is subtracted from the original time sequence of carbon prices to obtain the residual term of VMD.

- (2) Reconstruction and forecasting are carried out after VMD. We calculate the RE of each VMF, and merge VMF components with lower entropies into a subseries. Each subseries is then forecasted using the SSA-HKELM model, and the forecasts of each VMD-RE subseries are obtained.

- (3) ICEEMDAN is used to decompose the residual term left after VMD has been applied to the original sequence of carbon prices. Range entropy is introduced to measure the complexity of the signals of each IMF of the residual term, and IMF components with lower entropy values are merged into a new subseries. The SSA-HKELM model is then used to separately forecast each subseries of the residual term after applying ICEEMDAN-RE, and these forecasts are superimposed to obtain the final forecast of the residual term of the carbon price.

- (4) The forecasts of each VMF subseries and of the residual term are superimposed to obtain the final forecasts of the carbon price.

4. Empirical Analysis of Price Forecasting of China’s Regional Carbon Markets

4.1. Sample Selection and Criteria for Evaluating Forecasts

We used regional market prices of carbon in China as the research object. Given that the trading volume of Hubei’s carbon market accounts for a large portion of that of the country, data on carbon pricing in this market from 3 January 2017 to 18 October 2021 were used. The sample size was 1158, and the data were obtained from the website of the Hubei Carbon Emission Trading Center (http://www.hbets.cn/list/13.html, accessed on 19 October 2021). All data on carbon prices and the models were processed in MATLAB 2019b. The routine statistical results of all sample data are shown in Table 1.

Table 1. Descriptive statistics of the carbon price.

The standard deviation of the transaction price in Hubei’s carbon market in Table 1 is 8.21, indicating that the data were relatively dispersed. The difference between the maximum and minimum values was about 42 yuan/ton of carbon dioxide, indicating a wide price range. Due to the impacts of policies, the macro-economy, and the market mechanism, the trading price in the carbon market was highly volatile.

Given the characteristics and limitations of the evaluation criteria in practice, it is difficult for any single evaluation index to comprehensively evaluate the advantages and disadvantages of the results of forecasting. To assess the proposed model, we used the root mean-squared error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE). The directional symmetry value (DS) was used to measure the capability of the proposed method to forecast the trend of the carbon price. In Table 2, $\hat{y}_i$ is the forecasted value of the carbon price, $y_i$ is the actual value, and $N$ is the number of data items. The smaller the RMSE, MAE, and MAPE and the larger the DS, the better the forecasts of the model.

Table 2. Evaluation criteria for the proposed model for forecasting the price of carbon.
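The four criteria can be computed as in the sketch below. RMSE, MAE, and MAPE use their standard definitions. For DS, one common definition is assumed: the percentage of steps at which the forecast moves in the same direction as the actual price relative to the previous actual value. The exact form used in Table 2 may differ, and the sample values are purely illustrative.

```python
import math


def rmse(actual, forecast):
    return math.sqrt(sum((y - f) ** 2 for y, f in zip(actual, forecast)) / len(actual))


def mae(actual, forecast):
    return sum(abs(y - f) for y, f in zip(actual, forecast)) / len(actual)


def mape(actual, forecast):
    """Mean absolute percentage error, in percent (actual values must be nonzero)."""
    return 100.0 * sum(abs((y - f) / y) for y, f in zip(actual, forecast)) / len(actual)


def directional_symmetry(actual, forecast):
    """Share of steps (in percent) where forecast and actual move the same way."""
    hits = sum(
        1 for t in range(1, len(actual))
        if (actual[t] - actual[t - 1]) * (forecast[t] - actual[t - 1]) >= 0
    )
    return 100.0 * hits / (len(actual) - 1)


# Illustrative values only, not data from the paper.
actual = [10.0, 12.0, 11.0, 13.0]
forecast = [10.0, 11.0, 12.5, 12.0]
scores = {
    "RMSE": rmse(actual, forecast),
    "MAE": mae(actual, forecast),
    "MAPE": mape(actual, forecast),
    "DS": directional_symmetry(actual, forecast),
}
```

In this toy case the forecast gets the direction right at two of the three steps. DS therefore rewards a model for predicting the trend even when its level errors are not the smallest.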

4.2. Analysis of the Decomposition and Reconstruction of the Market Price of Carbon

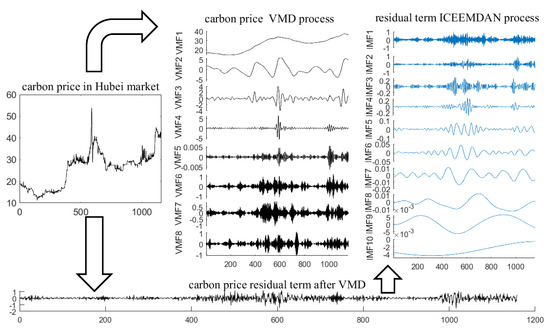

VMD was used to decompose the time series of Hubei’s carbon prices in the sample period. The number of mode components, K, needs to be set in advance, and different values of K lead to significant changes in the accuracy of carbon price forecasting. If K is too small, the accuracy of decomposition decreases, and the complexity of the original sequence cannot be reduced. If K is too large, the series is over-decomposed, which increases the amount of calculation and worsens the decomposition [45,46]. As ICEEMDAN can automatically decompose the data into an appropriate number of modes, we set K by referring to the number of modes produced by ICEEMDAN, which was eight. Eight VMF components were thus obtained. A residual term of the carbon price was obtained after VMD; it was complex, fluctuated violently, and contained rich information on the carbon price. If this information is ignored in the forecasting model, the overall forecast is less accurate. Therefore, the residual term was decomposed by ICEEMDAN, and the resulting modes are shown in the upper-right part of Figure 2, which presents the full VMD-ICEEMDAN decomposition process.

Figure 2. Secondary decomposition of the carbon price using VMD-ICEEMDAN.

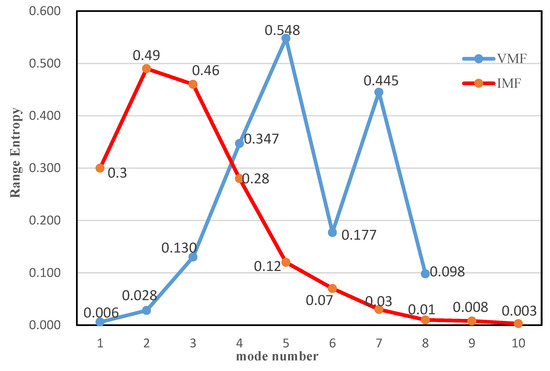

To reduce the cumulative error in the forecasted carbon prices, the RE algorithm was used to analyze the complexity of each VMF and IMF. The results are shown in Figure 3.

Figure 3. Range entropy analysis of the decomposition of the carbon price.

Figure 3 shows (1) an analysis of the range entropy of the results of VMD decomposition (VMFs) of the carbon price. The range entropies of VMF1, VMF2, and VMF8 were low, which indicates that their signals were not complex and had a long memory. They were merged into a new series. Because the range entropy values of VMF3–VMF7 were high with significant differences, the fluctuations in their signals were complicated and required separate forecasting. They were not reconstructed.

Figure 3 also shows (2) an analysis of the range entropy of the results of the decomposition of ICEEMDAN (IMFs) for the residual term of the carbon price. The range entropy values of IMF6–IMF10 were low, which indicates that their signals were not complex and had a long memory. IMF6–IMF10 were thus merged into a new subseries. The entropy values of IMF1 to IMF5 were high, which indicates that their signals were complex. They were not reconstructed but were forecasted separately.

Finally, 12 subseries were obtained as shown in Table 3.

Table 3. Results of the reconstruction of the carbon price.
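The reconstruction rule described above, in which low-entropy modes are summed and the remaining modes are kept separate, can be sketched as follows. The mode indices follow the text (VMF1, VMF2, and VMF8 merged; IMF6 to IMF10 merged). The series themselves are placeholders, since the decomposed data are not reproduced here, and the function name is hypothetical.

```python
def reconstruct(modes, low_entropy):
    """Merge the modes whose indices are in low_entropy; keep the rest separate."""
    merged = [sum(values) for values in zip(*(modes[i] for i in sorted(low_entropy)))]
    kept = [mode for i, mode in enumerate(modes) if i not in low_entropy]
    return [merged] + kept


# Placeholder series standing in for the real modes (zero-based indices below).
vmfs = [[float(k)] * 4 for k in range(1, 9)]     # VMF1..VMF8
imfs = [[float(k)] * 4 for k in range(1, 11)]    # IMF1..IMF10 of the residual term

vmf_subseries = reconstruct(vmfs, {0, 1, 7})         # VMF1, VMF2, VMF8 merged
imf_subseries = reconstruct(imfs, {5, 6, 7, 8, 9})   # IMF6..IMF10 merged
subseries = vmf_subseries + imf_subseries
```

Six subseries come from the VMFs and six from the IMFs of the residual term, which matches the 12 subseries reported. Because merging is a plain sum, the subseries still add up to the original series.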

4.3. Partial Autocorrelation Test of Subseries of the Carbon Price

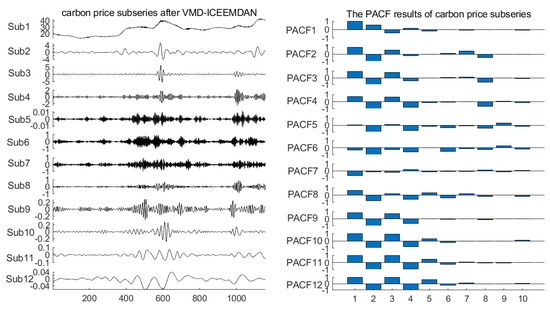

After the decomposition and reconstruction of the original carbon price, the input variables for the forecasting model of each subseries needed to be determined. The partial autocorrelation test was used to analyze the impact of historical data on each subseries of the carbon price at the 95% confidence level (Figure 4).

Figure 4.

Reconstructed results of the carbon price and the results of their PACF test.

According to the results of the PACF test, each subseries of carbon prices was more regular after decomposition and reconstruction, and was affected by its own historical data. In general, recent historical data had a greater impact on the fluctuations in each subseries than longer-term historical data. This shows that each reconstructed subseries had a certain persistence, and that its historical data contained important information for forecasting the price of carbon. Considering the results of the partial autocorrelation test of each subseries, and given that the number of input variables in the HKELM forecasting model affects its accuracy and redundancy, the input variables determined in this paper for the forecasting model of each subseries are shown in Table 4.

Table 4.

Input variables of each forecasting model of the subseries of carbon prices.
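Lag selection by partial autocorrelation can be sketched as below. This is an illustrative implementation, assuming the PACF is computed with the Durbin-Levinson recursion and that a lag is kept when its PACF value lies outside the approximate 95% band of ±1.96/√N; the function names are hypothetical and the paper does not state which routine was used.

```python
import numpy as np

def pacf_levinson(x, max_lag):
    """Sample partial autocorrelations for lags 0..max_lag (Durbin-Levinson)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = len(x)
    acov = np.array([np.dot(x[:n - k], x[k:]) / n for k in range(max_lag + 1)])
    r = acov / acov[0]
    pacf = np.zeros(max_lag + 1)
    pacf[0] = 1.0
    phi_prev = np.zeros(max_lag + 1)
    for k in range(1, max_lag + 1):
        if k == 1:
            phi_kk = r[1]
        else:
            num = r[k] - np.dot(phi_prev[1:k], r[k - 1:0:-1])
            den = 1.0 - np.dot(phi_prev[1:k], r[1:k])
            phi_kk = num / den
        phi = phi_prev.copy()
        phi[k] = phi_kk
        # Update the lower-order coefficients for the order-k model.
        phi[1:k] = phi_prev[1:k] - phi_kk * phi_prev[k - 1:0:-1]
        phi_prev = phi
        pacf[k] = phi_kk
    return pacf

def significant_lags(x, max_lag=10, z=1.96):
    """Lags whose PACF lies outside the approximate 95% confidence band."""
    pacf = pacf_levinson(x, max_lag)
    bound = z / np.sqrt(len(x))
    return [k for k in range(1, max_lag + 1) if abs(pacf[k]) > bound]

def lagged_inputs(x, lags):
    """Build the input matrix [x(t-k) for k in lags] and the target x(t)."""
    x = np.asarray(x, dtype=float)
    p = max(lags)
    X = np.column_stack([x[p - k:len(x) - k] for k in lags])
    return X, x[p:]
```

For a given subseries `s`, `lags = significant_lags(s)` followed by `X, y = lagged_inputs(s, lags)` would give one possible set of model inputs and targets.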

4.4. Analysis of the Forecasted Carbon Price

We used the data on the carbon price in the Hubei market from 3 January 2017 to 10 November 2020 as the training set, with a total of 928 groups of data being used to train the forecasting model. The data on carbon prices from 11 November 2020 to 18 October 2021 were used as the test set, with a total of 222 groups of data. To ensure the accuracy of the SSA-HKELM model, each subseries of carbon prices needed to be normalized. We used the mapminmax function to normalize the input and output variables of each subseries to the interval [−1, 1]. Then, HKELM combining the RBF kernel function and the polynomial kernel function was used to forecast each subseries of carbon prices. The optimal regularization parameters, kernel parameters, and kernel weights of the HKELM model were obtained by the SSA algorithm during model training. The results of the optimization of the parameters of HKELM for each subseries of carbon prices are shown in Table 5.
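The split and normalization can be sketched as follows. This is a minimal illustration, assuming a chronological split and assuming the scaling bounds are taken from the training set only (the text does not say which data the bounds came from); the series is synthetic and the helper names are hypothetical. The mapping is the usual min-max formula y = (hi − lo)(x − x_min)/(x_max − x_min) + lo.

```python
import numpy as np

def fit_minmax(train):
    """Return the (min, max) of the training data, used as scaling bounds."""
    train = np.asarray(train, dtype=float)
    return train.min(), train.max()

def to_range(x, xmin, xmax, lo=-1.0, hi=1.0):
    """Map values linearly so that xmin -> lo and xmax -> hi."""
    return (hi - lo) * (np.asarray(x, dtype=float) - xmin) / (xmax - xmin) + lo

def from_range(y, xmin, xmax, lo=-1.0, hi=1.0):
    """Invert to_range, returning values on the original price scale."""
    return (np.asarray(y, dtype=float) - lo) * (xmax - xmin) / (hi - lo) + xmin

# Synthetic stand-in for one subseries with 928 + 222 = 1150 observations.
rng = np.random.default_rng(0)
subseries = 30.0 + np.cumsum(rng.normal(scale=0.3, size=1150))

train, test = subseries[:928], subseries[928:]   # chronological split
xmin, xmax = fit_minmax(train)
train_scaled = to_range(train, xmin, xmax)
test_scaled = to_range(test, xmin, xmax)         # may fall slightly outside [-1, 1]
restored = from_range(train_scaled, xmin, xmax)
```

Forecasts made on the scaled data would be passed through `from_range` before the subseries forecasts are summed.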

Table 5.

The results of the optimization of the parameters of HKELM for subseries of carbon prices.

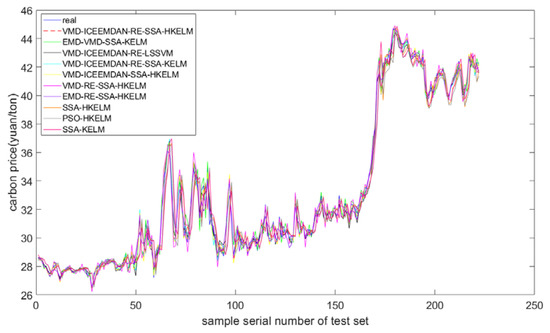
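A kernel ELM with a hybrid kernel can be sketched as below. This is a sketch under stated assumptions, not the configuration used in the paper: it assumes the hybrid kernel is a convex combination w·K_RBF + (1 − w)·K_poly, an RBF kernel of the form exp(−γ‖a − b‖²), a polynomial kernel (a·b + c)^d, and the standard KELM solution β = (Ω + I/C)⁻¹T. The class and parameter names are hypothetical, and the parameters are fixed by hand here rather than tuned by SSA.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    # Squared Euclidean distances between every row of A and every row of B.
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def poly_kernel(A, B, degree, coef0):
    return (A @ B.T + coef0) ** degree

def hybrid_kernel(A, B, w, gamma, degree, coef0):
    # Weighted sum of a local (RBF) kernel and a global (polynomial) kernel.
    return w * rbf_kernel(A, B, gamma) + (1.0 - w) * poly_kernel(A, B, degree, coef0)

class HKELM:
    """Kernel extreme learning machine with an assumed RBF + polynomial kernel."""

    def __init__(self, C=100.0, w=0.5, gamma=1.0, degree=2, coef0=1.0):
        self.C, self.w, self.gamma = C, w, gamma
        self.degree, self.coef0 = degree, coef0

    def _kernel(self, A, B):
        return hybrid_kernel(A, B, self.w, self.gamma, self.degree, self.coef0)

    def fit(self, X, y):
        self.X_train = np.asarray(X, dtype=float)
        omega = self._kernel(self.X_train, self.X_train)
        n = len(self.X_train)
        # Output weights: solve (Omega + I / C) beta = T.
        self.beta = np.linalg.solve(omega + np.eye(n) / self.C, np.asarray(y, dtype=float))
        return self

    def predict(self, X):
        return self._kernel(np.asarray(X, dtype=float), self.X_train) @ self.beta
```

In the procedure described in the text, an optimizer such as SSA would search over the regularization parameter, the kernel parameters, and the weight (here `C`, `gamma`, `degree`, `coef0`, and `w`), for example by minimizing the forecasting error on held-out training data.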

To investigate the forecasting performance of the model, each subseries of carbon prices in the test set was forecasted by using the HKELM model optimized by SSA. The forecasts of the subseries were then linearly superimposed to obtain the final predicted price of carbon. To verify the validity of the proposed model, it was compared with a reference group consisting of single forecasting models, such as SSA-KELM, PSO-HKELM, and SSA-HKELM; combined models based on a single decomposition, such as EMD-RE-SSA-HKELM and VMD-RE-SSA-HKELM; and combined models based on secondary decomposition, such as VMD-ICEEMDAN-SSA-HKELM, VMD-ICEEMDAN-RE-SSA-KELM, VMD-ICEEMDAN-RE-LSSVM, and EMD-VMD-SSA-KELM. The forecasting results of these models for the carbon price of Hubei are shown in Figure 5. They show that the proposed model achieved a high accuracy, which verifies its feasibility for predicting regional carbon prices in China. Table 6 gives a comparison of the models in forecasting carbon prices in the Hubei market.

Figure 5.

Results of the predicted carbon prices in the Hubei market.

Table 6.

Comparison of the models in forecasting carbon prices in the Hubei market.

In Table 6, KELM denotes the model with the RBF kernel only; EMD-RE-SSA-HKELM represents a combined model with only EMD-based single decomposition; VMD-RE-SSA-HKELM represents a model that ignored the residual term of the carbon price after VMD; VMD-ICEEMDAN-SSA-HKELM represents a model that directly forecasted all modes of the secondary decomposition of the carbon price without reconstruction; VMD-ICEEMDAN-RE-SSA-KELM represents the use of KELM with the RBF kernel to forecast each subseries of carbon prices after secondary decomposition and reconstruction; VMD-ICEEMDAN-RE-LSSVM represents the use of the LSSVM model to forecast each subseries of carbon prices after secondary decomposition and reconstruction; EMD-VMD-SSA-KELM is a combined model proposed in the literature [28]; and the model proposed here is denoted by VMD-ICEEMDAN-RE-SSA-HKELM.

Table 6 shows that the RMSE, MAE, and MAPE of the VMD-ICEEMDAN-RE-SSA-HKELM model were 0.2493, 0.1796, and 0.0056, respectively, all smaller than the corresponding errors of the other models. The DS of the proposed model was the highest, at 0.9054. This shows that, for the Hubei carbon market, the proposed model forecasted both the carbon price and its trend more accurately than all of the single and combined models considered.
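The superposition step and the four evaluation indicators can be sketched as follows. This is an illustration under assumptions: MAPE is expressed as a fraction (consistent with the reported 0.0056), and DS uses one common definition, the share of steps where the predicted move from the previous actual price has the same sign as the actual move. The numbers in the example are made up.

```python
import numpy as np

def superimpose(subseries_forecasts):
    """Final forecast = element-wise sum of the subseries forecasts."""
    return np.sum(np.asarray(subseries_forecasts, dtype=float), axis=0)

def rmse(y, yhat):
    y, yhat = np.asarray(y, dtype=float), np.asarray(yhat, dtype=float)
    return float(np.sqrt(np.mean((y - yhat) ** 2)))

def mae(y, yhat):
    y, yhat = np.asarray(y, dtype=float), np.asarray(yhat, dtype=float)
    return float(np.mean(np.abs(y - yhat)))

def mape(y, yhat):
    # Returned as a fraction, not a percentage.
    y, yhat = np.asarray(y, dtype=float), np.asarray(yhat, dtype=float)
    return float(np.mean(np.abs((y - yhat) / y)))

def ds(y, yhat):
    # Assumed definition: fraction of steps with a correctly predicted direction.
    y, yhat = np.asarray(y, dtype=float), np.asarray(yhat, dtype=float)
    actual_move = y[1:] - y[:-1]
    predicted_move = yhat[1:] - y[:-1]
    return float(np.mean(actual_move * predicted_move >= 0))

# Made-up example: two subseries forecasts and the matching actual prices.
forecast = superimpose([[6.0, 7.0, 7.0, 7.0], [4.0, 4.5, 4.5, 4.5]])
actual = np.array([10.0, 11.0, 12.0, 11.0])
scores = {
    "RMSE": rmse(actual, forecast),
    "MAE": mae(actual, forecast),
    "MAPE": mape(actual, forecast),
    "DS": ds(actual, forecast),
}
```

Lower RMSE, MAE, and MAPE indicate smaller level errors, while a DS closer to 1 indicates that the direction of price changes is predicted more often.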

A comparison of the results shows the following: (1) The proposed model (VMD-ICEEMDAN-RE-SSA-HKELM) was superior to VMD-ICEEMDAN-RE-SSA-KELM and VMD-ICEEMDAN-RE-LSSVM in terms of forecasting the price of carbon. HKELM was able to exploit the advantages of each kernel function to describe the fluctuations in the carbon price, which improved the forecasts of the subseries of carbon prices. (2) The forecasting performance of the combined models based on secondary decomposition was better than that of the models based on a single decomposition. A comparison of the EMD-, VMD-, and VMD-ICEEMDAN-based models shows that using ICEEMDAN to decompose the residual term generated by VMD significantly improved the forecasts. The VMD model was superior to the EMD model because it better decomposed the nonlinear and nonstationary carbon prices. For example, it solved the problem of modal aliasing in EMD and reduced the complexity of the series of carbon prices. Moreover, the residual term was decomposed by ICEEMDAN and incorporated into the model. This clarified the trend and frequency of changes in carbon prices, and more comprehensively described the impact of all historical signals of the carbon prices on future trends. (3) The proposed model was superior to VMD-ICEEMDAN-SSA-HKELM because its reconstruction of the modes of the carbon prices based on range entropy reduced the cumulative error in the forecasted prices of carbon. (4) Among the single forecasting models, the SSA-HKELM model was better than the PSO-HKELM model because it was optimized by SSA to obtain the best regularization parameters, kernel parameters, and kernel weights, which avoided the subjective selection of these parameters. (5) The decomposition-based combined models for forecasting the price of carbon were superior to the single models, namely SSA-KELM, PSO-HKELM, and SSA-HKELM. This is because the original trend of the price of carbon was highly complex, such that a single model could not capture its nonlinearity and nonstationarity. (6) Forecasts of the proposed model were significantly better than those of EMD-VMD-SSA-KELM because the secondary decomposition technology of the former fully decomposed the carbon price.

In short, the combined model for forecasting regional carbon prices in China, based on secondary decomposition and HKELM, can thoroughly decompose the complex series of carbon market prices and can significantly improve the accuracy of forecasting both the price of carbon and its trend.

4.5. Robustness

To further verify the robustness of the proposed model, we applied it to daily carbon prices of the Guangzhou Carbon Emission Exchange from 3 January 2017 to 18 October 2021. The results are shown in Table 7. The relative values of the evaluation indicators did not change significantly from those obtained for Hubei, which shows that the proposed model for forecasting regional carbon prices in China is robust across markets.

Table 7.

Comparison of the methods for forecasting the price of carbon in the Guangzhou market.

5. Conclusions

Forecasting regional carbon prices is an important area of research on the financial market for carbon. Given that the traditional model used to predict regional prices in China is flawed, this paper proposed a forecasting model based on secondary decomposition and HKELM, and verified its performance on data on carbon prices from the Hubei market. The VMD method was used to decompose the original carbon price, ICEEMDAN was applied to decompose the residual term after VMD, and range entropy was used to reconstruct sequences with less complex signals than in the original series of data. The HKELM model was used to forecast each subseries, and PACF was used to select the input variables of each subseries of the model. The parameters of the HKELM model were optimized by SSA. Finally, forecasts of each subseries of carbon prices were superimposed to obtain the final forecast for the carbon price in the market. The results of comparative empirical analysis showed that the proposed model is superior to prevalent models in terms of forecasting the price of carbon.

The proposed model has the following advantages in comparison with prevalent models: (1) ICEEMDAN decomposes the complex residual term of the carbon price after VMD into several more regular subsequences to improve the accuracy of forecasting. The proposed secondary decomposition strategy is superior to the traditional decomposition strategy. (2) The use of HKELM to forecast each subseries of carbon prices helps determine the characteristics of fluctuation and the internal laws of different subseries of carbon prices to improve the accuracy and stability of the forecasts. (3) The complexity of the signal of each mode of carbon prices was analyzed using range entropy, and modes with less complex signals were reconstructed into new sequences to reduce the forecasting error and the workload required to forecast the price of carbon.

However, this study still has certain limitations. Firstly, although the proposed model is highly accurate, the trend of carbon prices in regional markets in China is determined by a number of complex factors, such as the supply and demand relationship in the carbon market; the prices of coal, crude oil, and natural gas; the macro-economic environment; climate change; and major public disasters. Such factors that are influential for the carbon market should be incorporated into the proposed model in future research in the area to improve forecasts of the carbon price. Secondly, this article only considered empirical research on the regional carbon price in China. Research on the carbon market price can be expanded and applied to the EU carbon price to verify the wide applicability of the model.

Author Contributions

Conceptualization, B.H. and Y.C.; methodology, B.H.; software, B.H.; validation, B.H. and Y.C.; formal analysis, B.H.; data curation, B.H.; writing—original draft preparation, B.H. and Y.C.; writing—review and editing, B.H. and Y.C.; visualization, B.H.; supervision, Y.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the major projects of the National Social Science Fund to study and explain the spirit of the Fourth Plenary Session of the 19th CPC Central Committee, grant number 20ZDA084.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sun, H.; Samuel, C.A.; Amissah, J.C.K.; Taghizadeh-Hesary, F.; Mensah, I.A. Non-linear nexus between CO2 emissions and economic growth: A comparison of OECD and B&R countries. Energy 2020, 212, 118637.

- Sun, H.; Edziah, B.K.; Sun, C.; Kporsu, A.K. Institutional quality and its spatial spillover effects on energy efficiency. Socio-Econ. Plan. Sci. 2021, 101023.

- Li, X.; Li, Z.; Su, C.; Umar, M.; Shao, X. Exploring the asymmetric impact of economic policy uncertainty on China’s carbon emissions trading market price: Do different types of uncertainty matter? Technol. Forecast. Soc. Chang. 2022, 178, 121601.

- Zheng, J.; Mi, Z.; Coffman, D.; Milcheva, S.; Shan, Y.; Guan, D.; Wang, S. Regional development and carbon emissions in China. Energy Econ. 2019, 81, 25–36.

- Huang, W.; Wang, Q.; Li, H.; Fan, H.; Qian, Y.; Klemeš, J. Review of recent progress of emission trading policy in China. J. Clean. Prod. 2022, 349, 131480.

- Liu, B.; Sun, Z.; Li, H. Can Carbon Trading Policies Promote Regional Green Innovation Efficiency? Empirical Data from Pilot Regions in China. Sustainability 2021, 13, 52891.

- Ren, C.; Lo, A.Y. Emission trading and carbon market performance in Shenzhen, China. Appl. Energy 2017, 193, 414–425.

- Zeng, S.; Nan, X.; Liu, C.; Chen, J. The response of the Beijing carbon emissions allowance price (BJC) to macroeconomic and energy price indices. Energy Policy 2017, 106, 111–121.

- Zhang, Y.; Liu, Z.; Xu, Y. Carbon price volatility: The case of China. PLoS ONE 2018, 13, e0205317.

- Han, M.; Ding, L.; Zhao, X.; Kang, W. Forecasting carbon prices in the Shenzhen market, China: The role of mixed-frequency factors. Energy 2019, 171, 69–76.

- Huang, Y.; Hu, J.; Liu, H.; Liu, S. Research on price forecasting method of China’s carbon trading market based on PSO-RBF algorithm. Syst. Sci. Control. Eng. 2019, 7, 40–47.

- Xie, Q.; Hao, J.; Li, J.; Zheng, X. Carbon price prediction considering climate change: A text-based framework. Econ. Anal. Policy 2022, 74, 382–401.

- Liu, J.; Wang, P.; Chen, H.; Zhu, J. A combination forecasting model based on hybrid interval multi-scale decomposition: Application to interval-valued carbon price forecasting. Expert Syst. Appl. 2022, 191, 116267.

- Fan, J.; Todorova, N. Dynamics of China’s carbon prices in the pilot trading phase. Appl. Energy 2017, 208, 1452–1467.

- Zhu, B. A novel multiscale ensemble carbon price prediction model integrating empirical mode decomposition, genetic algorithm and artificial neural network. Energies 2012, 5, 355–370.

- Li, W.; Lu, C. The research on setting a unified interval of carbon price benchmark in the national carbon trading market of China. Appl. Energy 2015, 155, 728–739.

- Yao, Y.; Lv, J.; Zhang, C. Price formation mechanism and price forecast of Hubei carbon market. Stat. Decis. 2017, 19, 166–169.

- Zhou, J.; Yu, X.; Yuan, X. Predicting the carbon price sequence in the Shenzhen Emissions Exchange using a multiscale ensemble forecasting model based on ensemble empirical mode decomposition. Energies 2018, 11, 1907.

- Wu, Q.; Liu, Z. Forecasting the carbon price sequence in the Hubei emissions exchange using a hybrid model based on ensemble empirical mode decomposition. Energy Sci. Eng. 2020, 8, 2708–2721.

- Lu, H.; Ma, X.; Huang, K.; Azimi, M. Carbon trading volume and price forecasting in China using multiple machine learning models. J. Clean. Prod. 2020, 249, 119386.

- Jiang, P.; Liu, Z. Variable weights combined model based on multi-objective optimization for short-term wind speed forecasting. Appl. Soft. Comput. 2019, 82, 105587.

- Hao, Y.; Tian, C.; Wu, C. Modelling of carbon price in two real carbon trading markets. J. Clean. Prod. 2020, 244, 118556.

- Zhou, J.; Chen, D. Carbon Price forecasting based on improved CEEMDAN and extreme learning machine optimized by sparrow search algorithm. Sustainability 2021, 13, 94896.

- Sun, G.; Chen, T.; Wei, Z.; Sun, Y.; Zang, H.; Chen, S. A carbon price forecasting model based on variational mode decomposition and spiking neural networks. Energies 2016, 9, 54.

- Chai, S.; Zhang, Z.; Zhang, Z. Carbon price prediction for China’s ETS pilots using variational mode decomposition and optimized extreme learning machine. Ann. Oper. Res. 2021.

- Sun, S.; Jin, F.; Li, H.; Li, Y. A new hybrid optimization ensemble learning approach for carbon price forecasting. Appl. Math. Model. 2021, 97, 182–205.

- Sun, W.; Huang, C. A carbon price prediction model based on secondary decomposition algorithm and optimized back propagation neural network. J. Clean. Prod. 2020, 243, 118671.

- Zhou, J.; Wang, S. A carbon price prediction model based on the secondary decomposition algorithm and influencing factors. Energies 2021, 14, 1328.

- Zhou, J.; Wang, Q. Forecasting carbon price with secondary decomposition algorithm and optimized extreme learning machine. Sustainability 2021, 13, 58413.

- Dragomiretskiy, K.; Zosso, D. Variational mode decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544.

- Li, H.; Jin, F.; Sun, S.; Li, Y. A new secondary decomposition ensemble learning approach for carbon price forecasting. Knowl.-Based Syst. 2021, 214, 106686.

- Colominas, M.A.; Schlotthauer, G.; Torres, M.E. Improved complete ensemble EMD: A suitable tool for biomedical signal processing. Biomed. Signal Process. Control. 2014, 14, 19–29.

- Jia, S.; Ma, B.; Guo, W.; Li, Z.S. A sample entropy based prognostics method for lithium-ion batteries using relevance vector machine. J. Manuf. Syst. 2021, 61, 773–781.

- Ru, Y.; Li, J.; Chen, H.; Li, J. Epilepsy Detection Based on Variational Mode Decomposition and Improved Sample Entropy. Comput. Intell. Neurosci. 2022, 2022, 6180441.

- Omidvarnia, A.; Mesbah, M.; Pedersen, M.; Jackson, G. Range entropy: A bridge between signal complexity and self-similarity. Entropy 2018, 20, 962.

- Huang, G.; Zhou, H.; Ding, X.; Zhang, R. Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B-Cybern. 2012, 42, 513–529.

- Zhang, T.; Tang, Z.; Wu, J.; Du, X.; Chen, K. Multi-step-ahead crude oil price forecasting based on two-layer decomposition technique and extreme learning machine optimized by the particle swarm optimization algorithm. Energy 2021, 229, 120797.

- Qi, X.; Li, K.; Yu, X.; Zhang, Z.; Lou, J. Transformer top oil temperature interval prediction based on kernel extreme learning machine and bootstrap method. Proc. CSEE 2017, 37, 5821–5828.

- Zhou, C.; Yin, K.; Cao, Y.; Intrieri, E.; Ahmed, B.; Catani, F. Displacement prediction of step-like landslide by applying a novel kernel extreme learning machine method. Landslides 2018, 15, 2211–2225.

- Hou, Z.; Lao, W.; Wang, Y.; Lu, W. Hybrid homotopy-PSO global searching approach with multi-kernel extreme learning machine for efficient source identification of DNAPL-polluted aquifer. Comput. Geosci. 2021, 155, 104837.

- Li, T.; Qian, Z.; He, T. Short-Term Load Forecasting with Improved CEEMDAN and GWO-Based Multiple Kernel ELM. Complexity 2020, 2020, 1209547.

- Lv, L.; Wang, W.; Zhang, Z.; Liu, X. A novel intrusion detection system based on an optimal hybrid kernel extreme learning machine. Knowl.-Based Syst. 2020, 195, 105648.

- Xue, J.K.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control. Eng. 2020, 8, 22–34.

- Xin, J.; Chen, J.; Li, C.; Lu, R.; Li, X.; Wang, C.; Zhu, H.; He, R. Deformation characterization of oil and gas pipeline by ACM technique based on SSA-BP neural network model. Measurement 2022, 189, 110654.

- Niu, M.; Hu, Y.; Sun, S.; Liu, Y. A novel hybrid decomposition-ensemble model based on VMD and HGWO for container throughput forecasting. Appl. Math. Model. 2018, 57, 163–178.

- Zhang, Y.; Pan, G.; Chen, B.; Han, J.; Zhao, Y.; Zhang, C. Short-term wind speed prediction model based on GA-ANN improved by VMD. Renew. Energy 2020, 156, 1373–1388.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).