Seven Different Lighting Conditions in Photogrammetric Studies of a 3D Urban Mock-Up

Abstract

1. Introduction

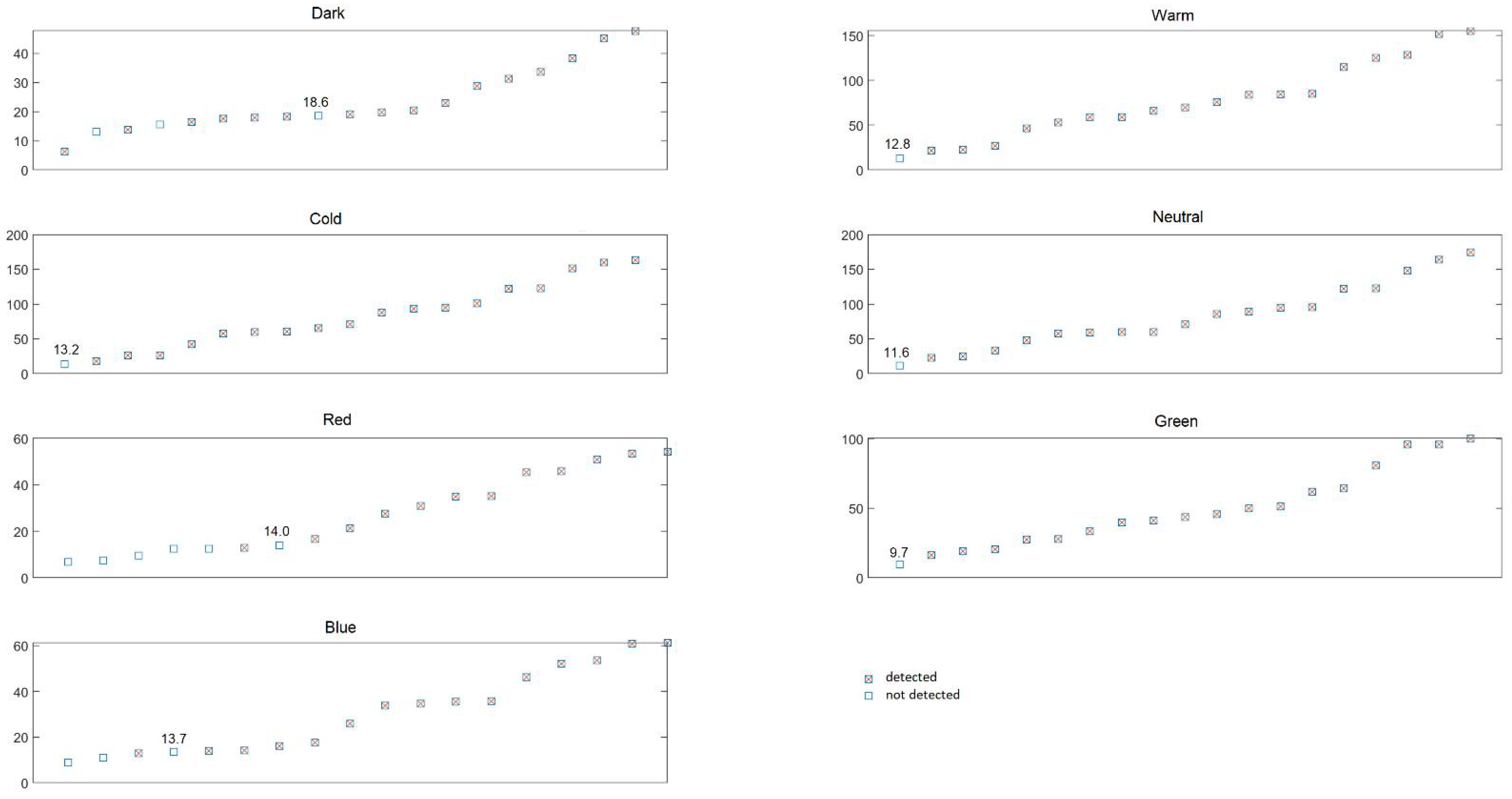

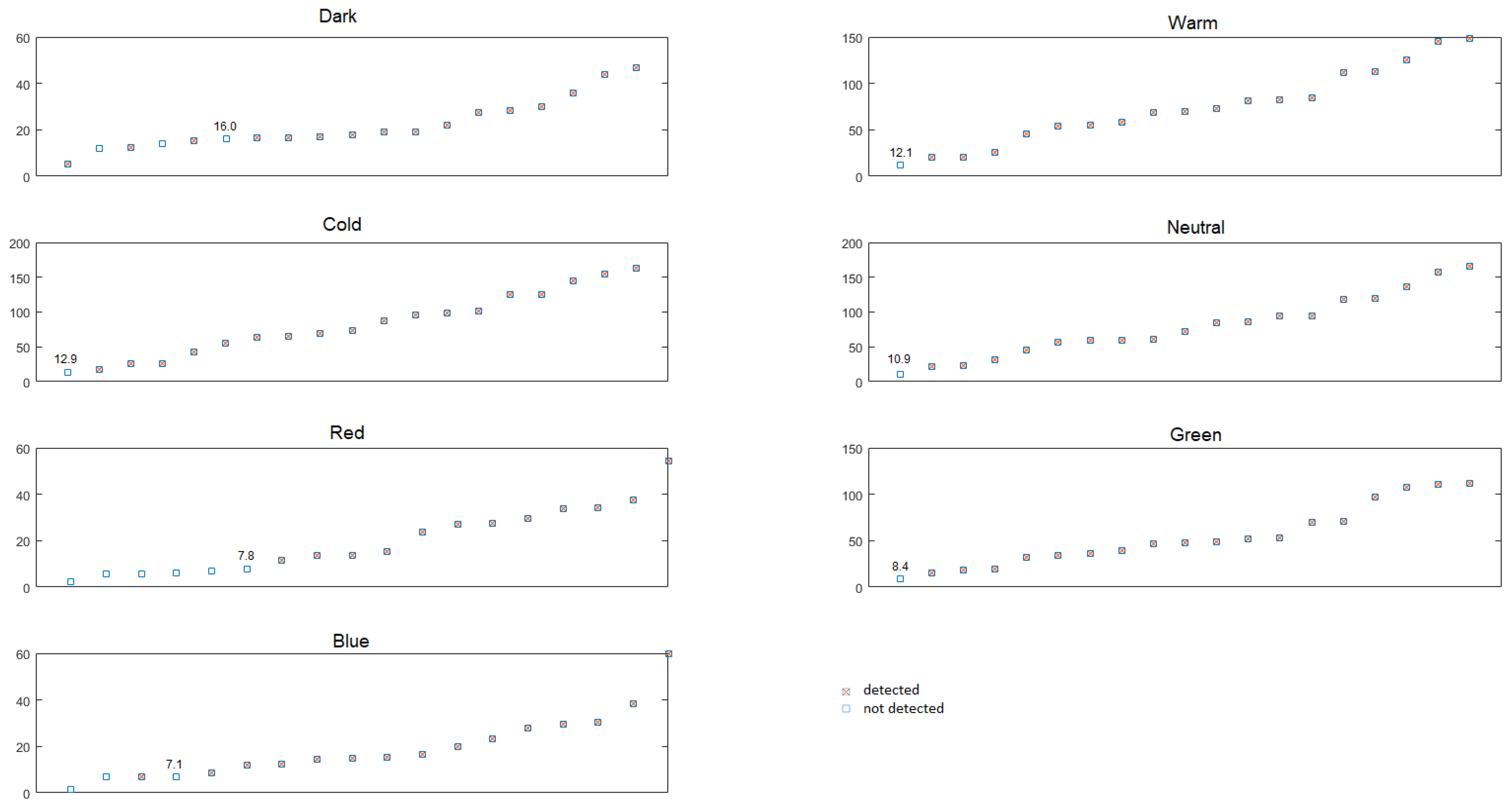

- the general quality of the study depending on the type of scene lighting. The studied scene was illuminated with white (warm, cold, neutral), red, green, and blue light, as well as without additional lighting,

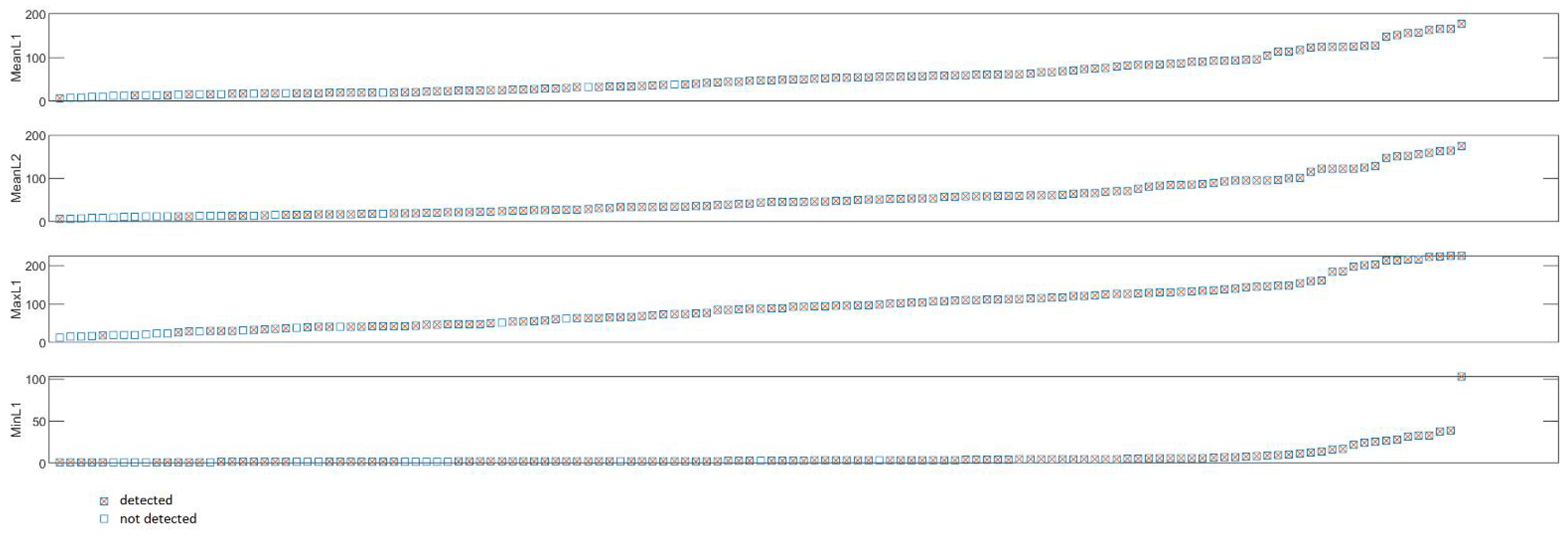

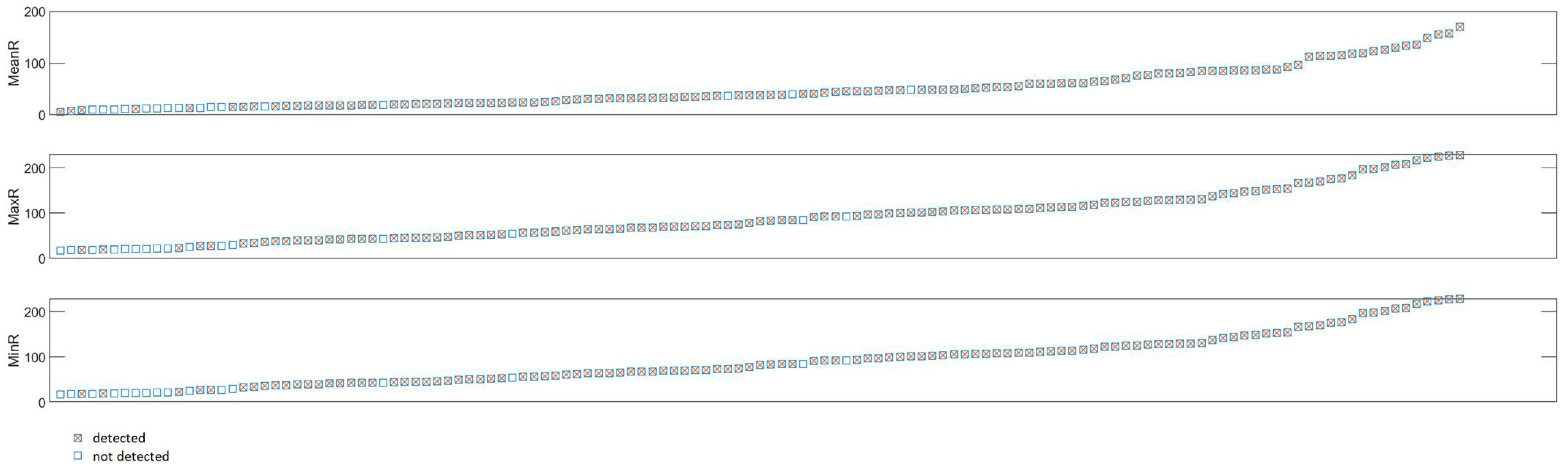

- the dependency between the detectability of F-points and the features that determine the luminosity of F-point discs,

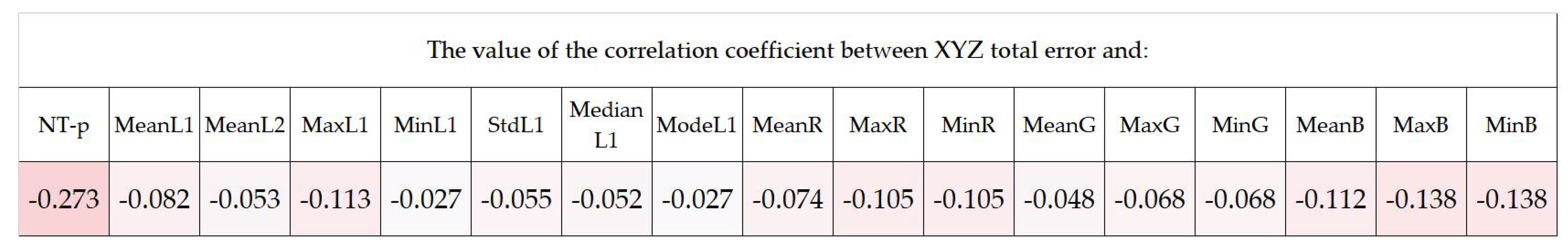

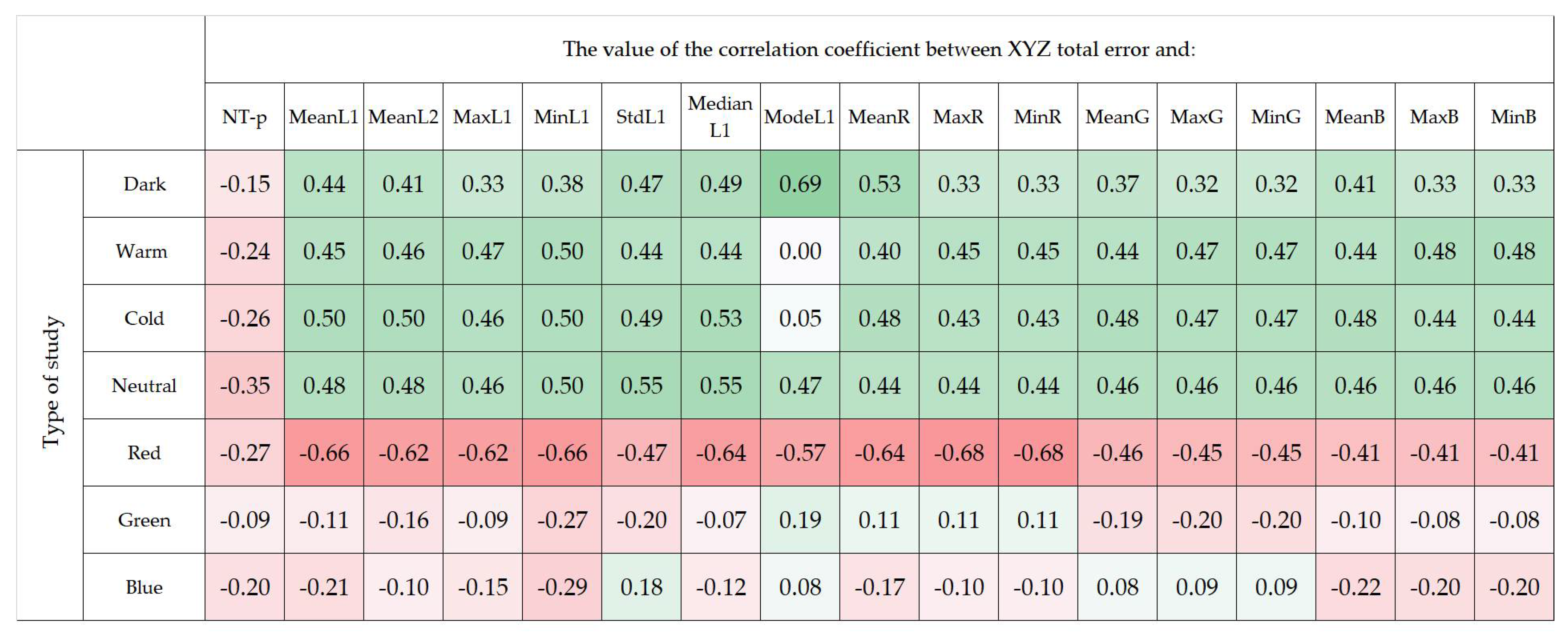

- the dependency between the F-point’s XYZ total error and the features that determine the luminosity of F-point discs,

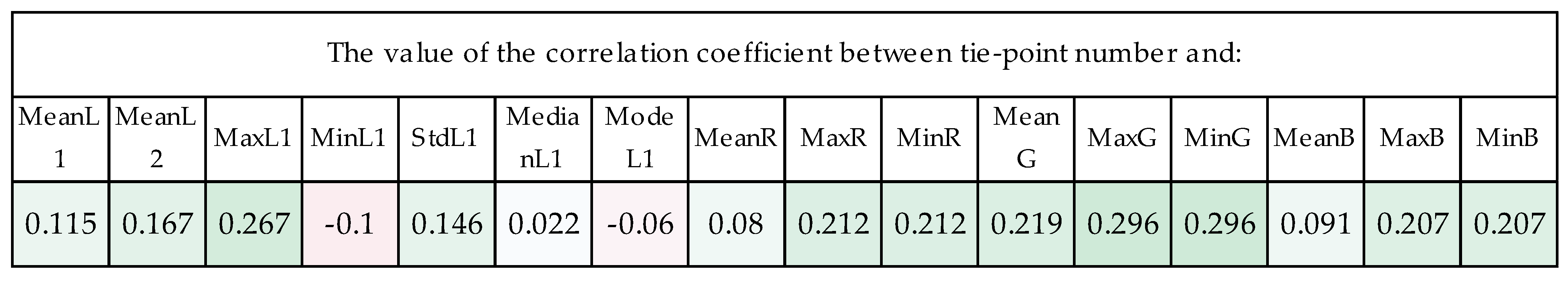

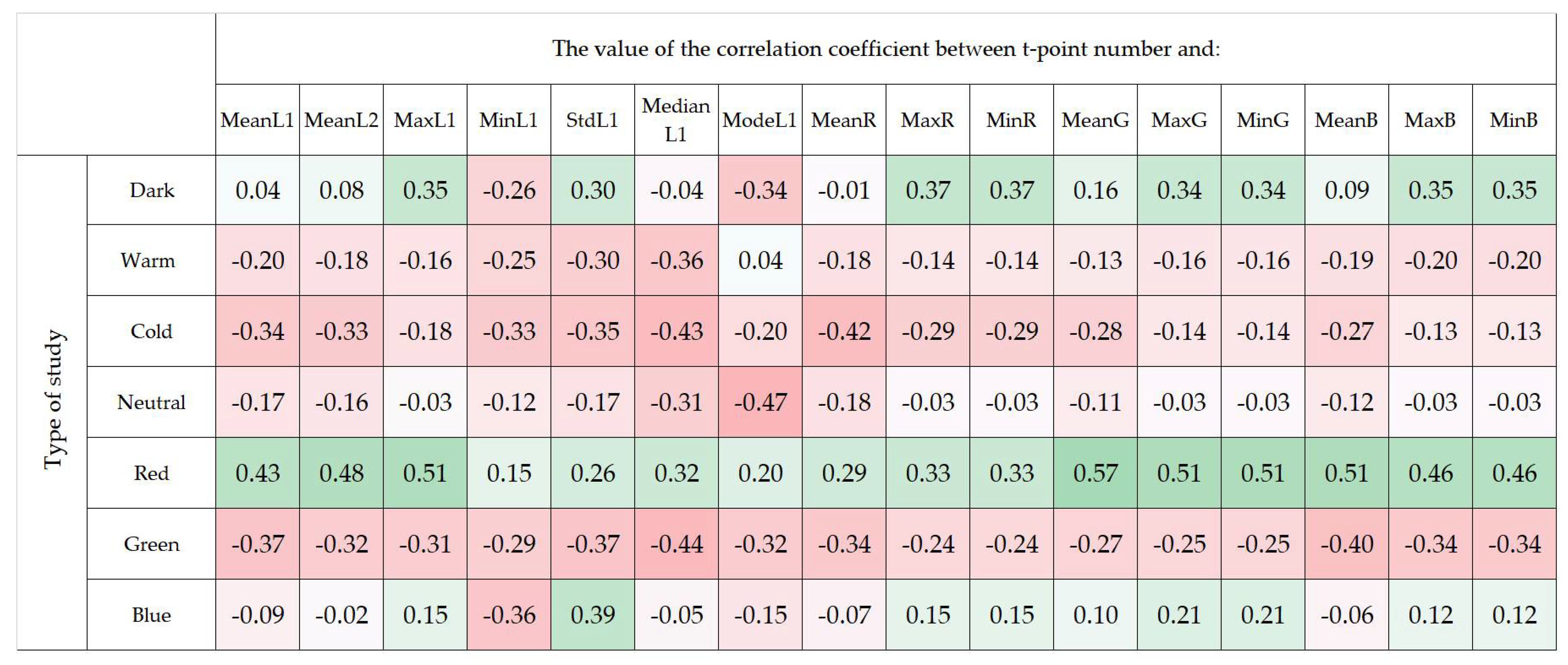

- the dependency between the number of tie-points (points of the analysed object that appear in two or more images) in the F-point’s vicinity and the features that determine the luminosity of F-point discs.

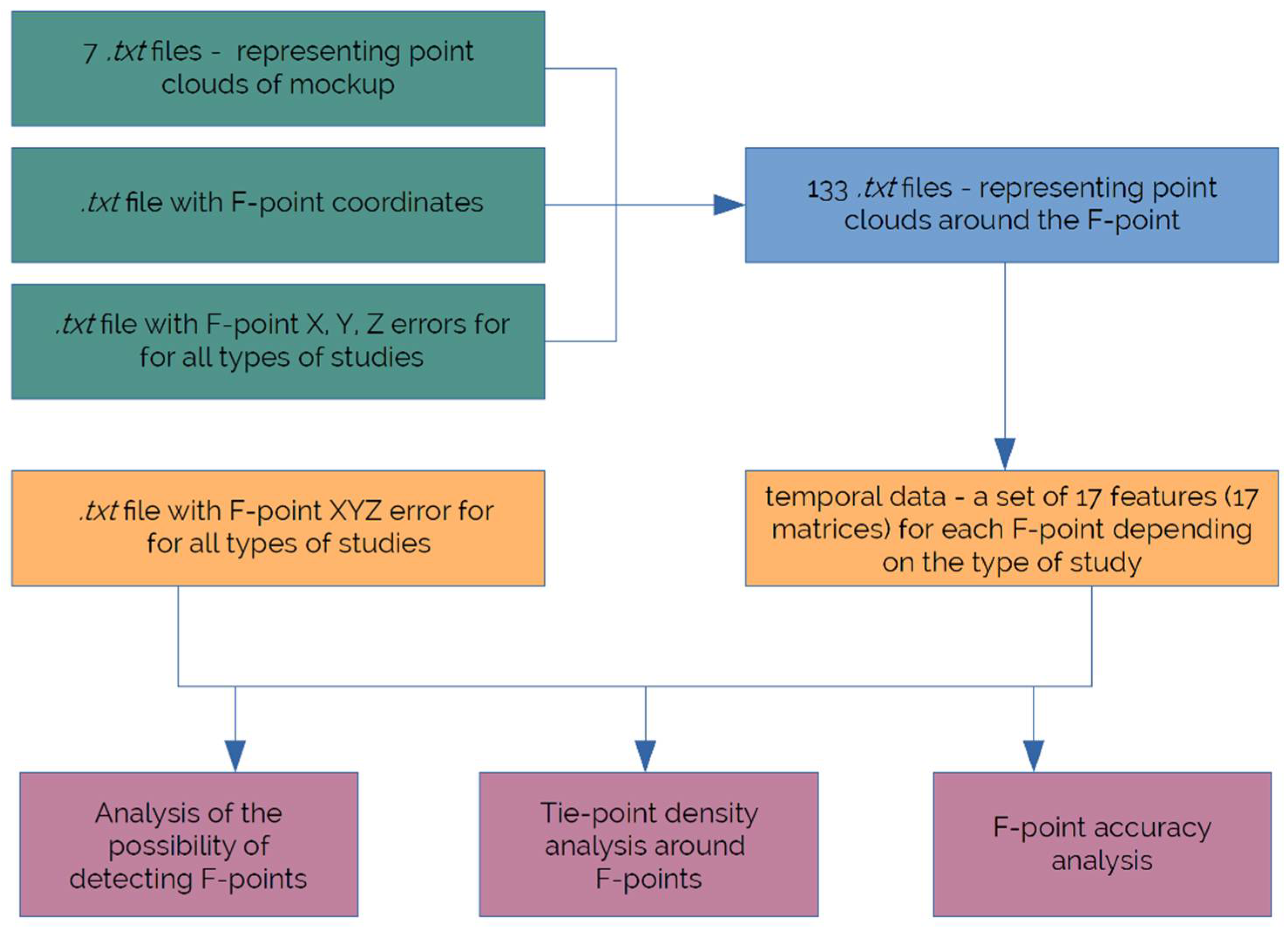

2. Materials and Methods

2.1. Research Procedure and Metrics

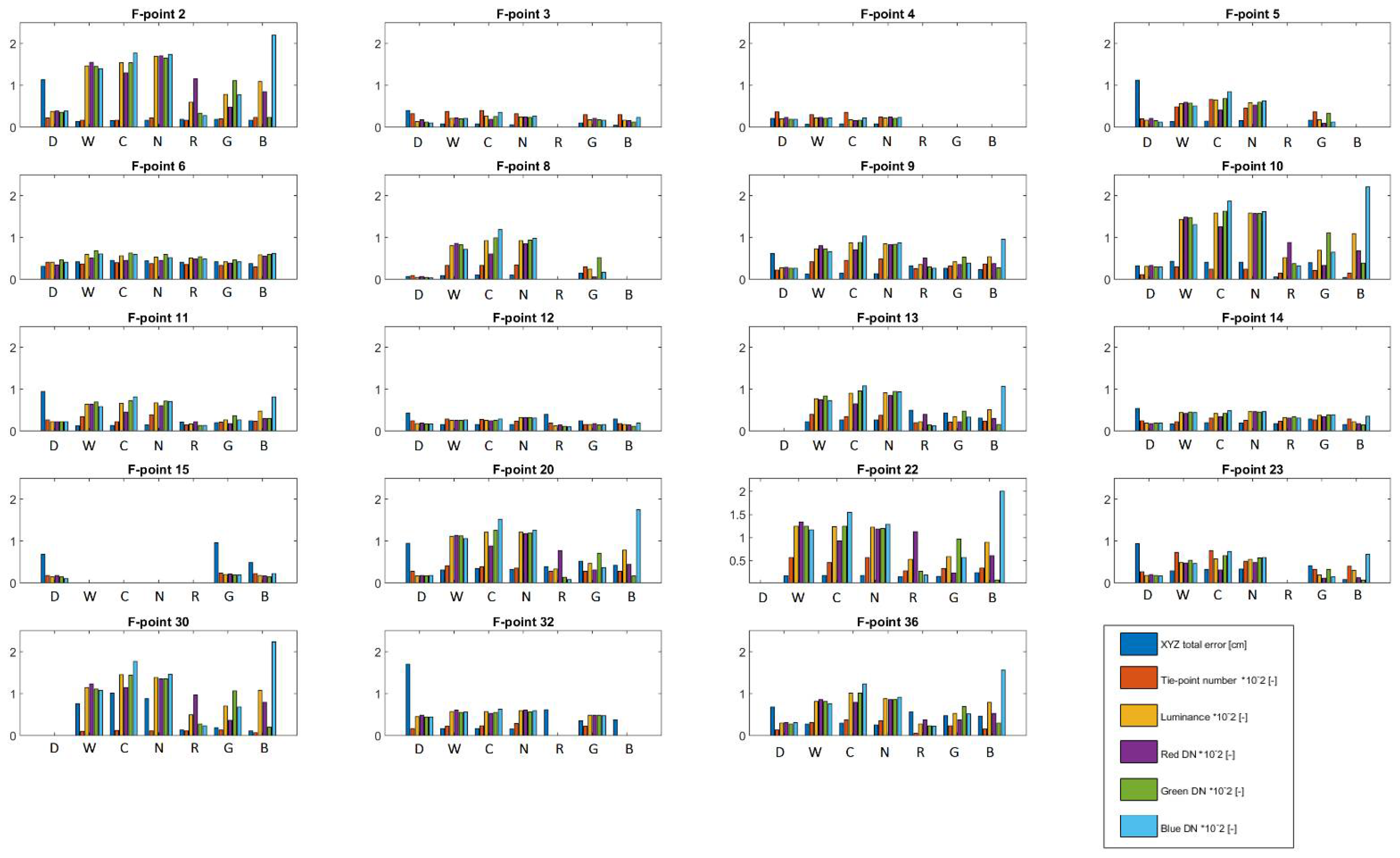

- NT-p is the number of tie-points extracted from the vicinity of the studied F-point;

- MeanL1 is the mean luminosity type 1, computed over all extracted tie-points; the luminosity of a single tie-point was determined using Formula (3);

- MaxL1 is the maximum luminosity type 1;

- MaxR is the maximum spectral response R recorded by the digital camera for the given points;

- MaxG is the maximum spectral response G recorded by the digital camera for the given points;

- MaxB is the maximum spectral response B recorded by the digital camera for the given points;

- MinL1 is the minimum luminosity type 1;

- MinR is the minimum spectral response R recorded by the digital camera for the given points;

- MinG is the minimum spectral response G recorded by the digital camera for the given points;

- MinB is the minimum spectral response B recorded by the digital camera for the given points;

- MeanR is the mean spectral response R recorded by the digital camera for the given points;

- MeanG is the mean spectral response G recorded by the digital camera for the given points; and

- MeanB is the mean spectral response B recorded by the digital camera for the given points.

- StdL1 is the standard deviation of luminosity type 1;

- MedianL1 is the median of luminosity type 1; and

- ModeL1 is the mode of luminosity type 1.
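The metrics listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: Formula (3) is not reproduced in this text, so the luminosity weighting used here (the Rec. 709 luma coefficients) is an assumption, as are the function names, the use of the population standard deviation for StdL1, the rounding applied before taking the mode, and the sample RGB values.

```python
from statistics import mean, median, mode, pstdev

# ASSUMPTION: Rec. 709 luma weights stand in for the paper's Formula (3),
# which is not reproduced in this text and may differ.
WEIGHTS = (0.2126, 0.7152, 0.0722)


def luminosity(rgb):
    """Weighted sum of the R, G, B responses of a single tie-point."""
    r, g, b = rgb
    return WEIGHTS[0] * r + WEIGHTS[1] * g + WEIGHTS[2] * b


def tie_point_metrics(rgb_points):
    """Compute the per-F-point metrics from the RGB values of its tie-points."""
    if not rgb_points:
        raise ValueError("at least one tie-point is required")
    lum = [luminosity(p) for p in rgb_points]
    metrics = {
        "NT-p": len(rgb_points),
        "MeanL1": mean(lum),
        "MaxL1": max(lum),
        "MinL1": min(lum),
        # ASSUMPTION: population standard deviation; the source does not say which.
        "StdL1": pstdev(lum),
        "MedianL1": median(lum),
        # ASSUMPTION: luminosities are rounded to integers before taking the mode.
        "ModeL1": mode(round(v) for v in lum),
    }
    # Per-channel maximum, minimum and mean spectral responses.
    for index, channel in enumerate("RGB"):
        values = [p[index] for p in rgb_points]
        metrics["Max" + channel] = max(values)
        metrics["Min" + channel] = min(values)
        metrics["Mean" + channel] = mean(values)
    return metrics


# Hypothetical 8-bit RGB responses for three tie-points near one F-point.
sample = [(200, 180, 160), (100, 90, 80), (50, 60, 70)]
result = tie_point_metrics(sample)
print(result["NT-p"], result["MaxR"], result["MinB"], result["MeanG"])
```

Keeping the weighting in a single constant makes it easy to swap in the actual coefficients of Formula (3) once they are known.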

2.2. Impact Coefficients and Correlation Assessment

- the possibility of its use (minimum requirement: two compared variables were determined at least on the interval scale, and, in our case, on the ratio scale);

- the prevalence of this coefficient in photogrammetric analyses (it enables a larger group of recipients to understand the relationships presented here); and

- a visual assessment of the figures (e.g., graphs representing the data), which in many cases showed a linear relationship.

- Perfect (coefficient value is near ±1);

- High (coefficient value lies between ±0.50 and ±1);

- Medium (coefficient value lies between ±0.30 and ±0.49);

- Low (coefficient value lies between 0 and ±0.29); and

- No correlation (coefficient value is near 0).
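The scale above can be turned into a small classifier, sketched here in Python. The function names are hypothetical, and two choices are assumptions rather than part of the source: the tolerance `near` that decides what counts as "near" ±1 or 0, and the treatment of values falling between the listed bands (for example 0.495), which are assigned to the lower band. The sample data are the tie-point counts and MeanL2 CP values reported per lighting sequence in the results tables.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(sxx * syy)


def correlation_strength(r, near=0.01):
    """Map a coefficient onto the five-level scale.

    ASSUMPTION: `near` is a hypothetical tolerance for "near +/-1" and
    "near 0"; the source does not quantify it.
    """
    magnitude = abs(r)
    if magnitude >= 1 - near:
        return "Perfect"
    if magnitude <= near:
        return "No correlation"
    if magnitude >= 0.50:
        return "High"
    if magnitude >= 0.30:
        return "Medium"
    return "Low"


# Values per sequence (Dark, Warm, Cold, Neutral, Red, Green, Blue).
tie_points = [24582, 128221, 132876, 129253, 43204, 60716, 40351]
mean_l2_cp = [21.3, 113.0, 120.2, 118.8, 28.4, 62.5, 31.7]

r = pearson_r(tie_points, mean_l2_cp)
print(round(r, 3), correlation_strength(r))
```

Because the label for coefficients very close to ±1 depends on the chosen tolerance, it is worth reporting the coefficient itself alongside the category.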

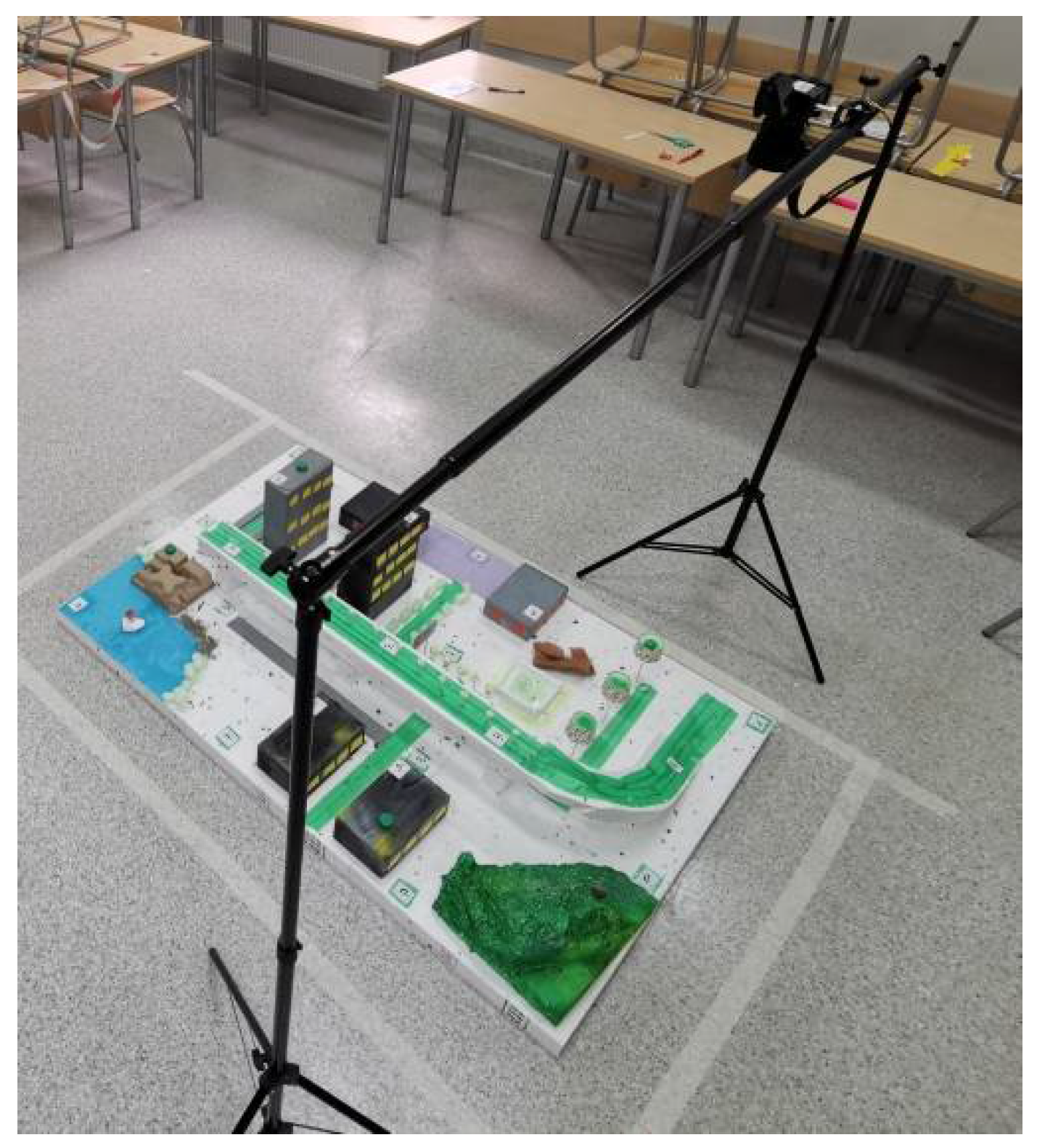

2.3. Object of Analyses

2.4. Hardware and Software

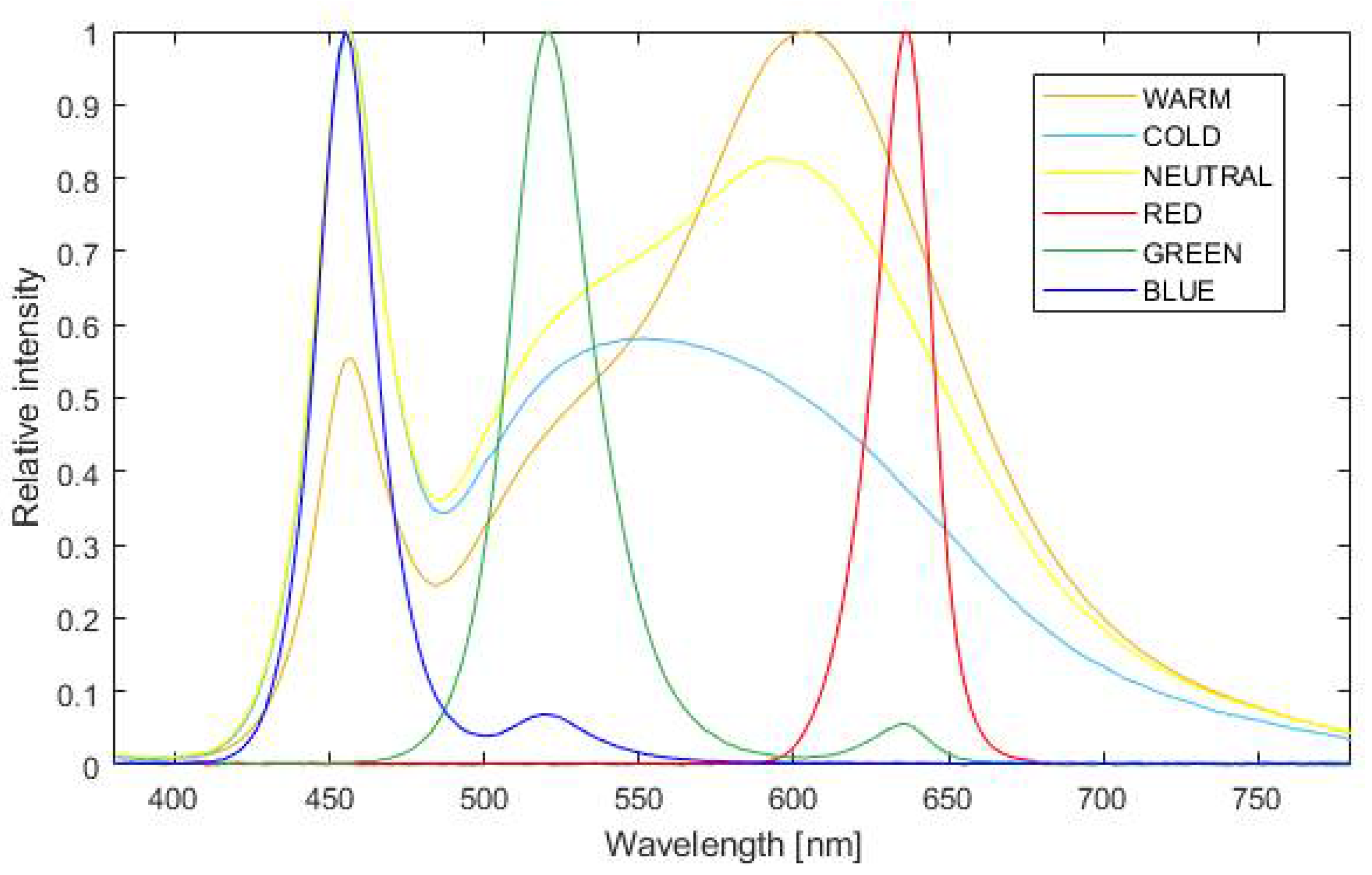

2.5. Data Acquisition

- illuminance (E), the total luminous flux incident on a unit of surface area;

- correlated colour temperature (CCT), used in the specification of white light sources to describe the dominant colour tone from warm (orange), through neutral, to cold (blue);

- colour rendering index (CRI), the ability of a light source to render the colours of a surface faithfully when compared to a reference light source (usually an incandescent source). The CRI ranges from 0 to 100. The higher the CRI, the better the representation of the illuminated objects’ colours;

- spectral power distribution (SPD), which describes the power per unit of surface area per unit of wavelength of the illumination (radiation efficiency); and

- peak wavelength (λp), the wavelength at which the spectral distribution of the light reaches its highest peak.

3. Results

3.1. Photometric Values of the Illuminated Scenes

3.2. Overall Study Results

3.3. Analysis of the F-Points’ Detection Ability

3.4. Analysis of the Tie-Points’ Density in the F-Points’ Vicinity and Accuracy Analysis

4. Limitations of the Study

5. Conclusions

Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Type | Model | Purpose of Use |
|---|---|---|
| Total Station | Leica TCRP 1201 | measurement of the F-points’ x, y, z coordinates |
| Camera | Nikon D5300 with the Nikkor AF-S 50 mm lens | image acquisition |
| Spectrometer | UPRtek MK350D | measurement of the additional light sources’ photometric values |
| Additional Light Sources | LED SPECTRUM SMART 13W E27 LED RGBW | mock-up illumination |
| Software | Agisoft Metashape Professional | photogrammetric elaboration |
| Software | Matlab R2020b | analytical elaboration |

| Sequence Number (n) | Sequence Name | Lighting Parameters: E, CCT, CRI, λp | Description |
|---|---|---|---|
| 1 | DARK | see Table 3 | no artificial lighting |
| 2 | WARM | see Table 3 | illumination using “white” light commonly known as warm |
| 3 | COLD | see Table 3 | illumination using “white” light commonly known as cold |
| 4 | NEUTRAL | see Table 3 | illumination using “white” light commonly known as natural |
| 5 | RED | see Table 3 | illumination using red light |
| 6 | GREEN | see Table 3 | illumination using green light |
| 7 | BLUE | see Table 3 | illumination using blue light |

| Parameter | Warm | Cold | Neutral | Red | Green | Blue |
|---|---|---|---|---|---|---|
| E [lx] | 28,750 | 28,580 | 29,160 | 306 | 911 | 334 |
| CCT [K] | 3066 | 5961 | 4383 | 0 | 7841 | 0 |
| CRI [Ra] | 83.65 | 87.20 | 88.35 | 0.00 | 0.00 | 0.00 |
| λp [nm] | 603 | 455 | 454 | 635 | 520 | 454 |

| Parameter Name | Dark | Warm | Cold | Neutral | Red | Green | Blue |
|---|---|---|---|---|---|---|---|
| Number of images | 43 | 43 | 43 | 43 | 43 | 43 | 43 |
| Recording altitude [m] | 1.30 | 1.35 | 1.34 | 1.35 | 1.33 | 1.33 | 1.34 |
| Ground resolution [mm/pix] | 0.1000 | 0.0994 | 0.0992 | 0.0993 | 0.1000 | 0.0996 | 0.0997 |
| Coverage area [m²] | 2.00 | 2.43 | 2.44 | 2.43 | 2.22 | 2.32 | 2.36 |
| Camera stations | 42 | 43 | 43 | 43 | 43 | 43 | 43 |
| Tie points | 24,582 | 128,221 | 132,876 | 129,253 | 43,204 | 60,716 | 40,351 |
| Projections | 58,154 | 317,335 | 331,564 | 330,151 | 98,604 | 142,292 | 96,208 |
| Reprojection error [pix] | 0.926 | 0.611 | 0.601 | 0.586 | 0.649 | 0.663 | 0.654 |
| Number of detected F-points | 16 | 18 | 18 | 18 | 13 | 18 | 16 |
| XY error [cm] | 0.28 | 0.10 | 0.11 | 0.11 | 0.14 | 0.14 | 0.17 |
| XYZ total error [cm] | 0.80 | 0.28 | 0.33 | 0.31 | 0.36 | 0.38 | 0.29 |

XYZ total error (cm) per F-point and type of case (NaN: F-point not detected)

| F-Point | Dark | Warm | Cold | Neutral | Red | Green | Blue | Number of Cases with F-Point |
|---|---|---|---|---|---|---|---|---|
| 2 | 1.14 | 0.14 | 0.16 | 0.17 | 0.20 | 0.20 | 0.17 | 7 |
| 3 | 0.40 | 0.08 | 0.08 | 0.06 | NaN | 0.11 | 0.05 | 6 |
| 4 | 0.21 | 0.07 | 0.08 | 0.08 | NaN | NaN | NaN | 4 |
| 5 | 1.13 | 0.13 | 0.15 | 0.16 | NaN | 0.18 | NaN | 6 |
| 6 | 0.31 | 0.43 | 0.45 | 0.45 | 0.41 | 0.42 | 0.38 | 7 |
| 8 | 0.07 | 0.09 | 0.10 | 0.11 | NaN | 0.15 | NaN | 5 |
| 9 | 0.61 | 0.13 | 0.14 | 0.14 | 0.32 | 0.27 | 0.24 | 7 |
| 10 | 0.32 | 0.43 | 0.41 | 0.41 | 0.06 | 0.40 | 0.05 | 7 |
| 11 | 0.95 | 0.13 | 0.14 | 0.15 | 0.21 | 0.21 | 0.24 | 7 |
| 12 | 0.43 | 0.16 | 0.16 | 0.15 | 0.40 | 0.25 | 0.29 | 7 |
| 13 | NaN | 0.23 | 0.26 | 0.27 | 0.50 | 0.43 | 0.31 | 6 |
| 14 | 0.54 | 0.17 | 0.20 | 0.19 | 0.18 | 0.30 | 0.16 | 7 |
| 15 | 0.68 | NaN | NaN | NaN | NaN | 0.95 | 0.48 | 3 |
| 20 | 0.94 | 0.31 | 0.34 | 0.33 | 0.39 | 0.52 | 0.43 | 7 |
| 22 | NaN | 0.17 | 0.18 | 0.18 | 0.15 | 0.16 | 0.24 | 6 |
| 23 | 0.94 | 0.30 | 0.32 | 0.33 | NaN | 0.41 | 0.08 | 6 |
| 30 | NaN | 0.76 | 1.01 | 0.89 | 0.13 | 0.20 | 0.11 | 6 |
| 32 | 1.70 | 0.16 | 0.17 | 0.16 | 0.62 | 0.35 | 0.38 | 7 |
| 36 | 0.68 | 0.28 | 0.30 | 0.26 | 0.57 | 0.48 | 0.47 | 7 |

| Parameter | Dark | Warm | Cold | Neutral | Red | Green | Blue |
|---|---|---|---|---|---|---|---|
| E [lx] | - | 28,750 | 28,580 | 29,160 | 306 | 911 | 334 |
| CCT [K] | - | 3066 | 5961 | 4383 | 0 | 7841 | 0 |
| CRI [Ra] | - | 83.65 | 87.20 | 88.35 | 0.00 | 0.00 | 0.00 |
| Tie points [-] | 24,582 | 128,221 | 132,876 | 129,253 | 43,204 | 60,716 | 40,351 |
| Projections [-] | 58,154 | 317,335 | 331,564 | 330,151 | 98,604 | 142,292 | 96,208 |
| MeanL2 CP [-] | 21.3 | 113.0 | 120.2 | 118.8 | 28.4 | 62.5 | 31.7 |
| F error [pix] | 57 | 8.3 | 7.6 | 7.6 | 22 | 15 | 19 |
| Cx error [pix] | 63 | 11 | 9.3 | 9.4 | 35 | 21 | 27 |
| Cy error [pix] | 62 | 11 | 9.9 | 10 | 32 | 22 | 26 |
| B1 error | 5.6 | 1.5 | 1.3 | 1.3 | 3.8 | 2.7 | 3.3 |
| B2 error | 5.3 | 1.4 | 1.3 | 1.3 | 3.2 | 2.4 | 3 |
| K1 error | 0.00330 | 0.00070 | 0.00064 | 0.00065 | 0.00160 | 0.00120 | 0.00140 |
| K2 error | 0.07300 | 0.02000 | 0.01800 | 0.01900 | 0.04300 | 0.03300 | 0.03700 |
| K3 error | 0.66000 | 0.19000 | 0.17000 | 0.17000 | 0.39000 | 0.30000 | 0.34000 |
| P1 error | 0.00074 | 0.00008 | 0.00007 | 0.00007 | 0.00026 | 0.00015 | 0.00020 |
| P2 error | 0.00069 | 0.00007 | 0.00006 | 0.00007 | 0.00023 | 0.00014 | 0.00018 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bobkowska, K.; Burdziakowski, P.; Szulwic, J.; Zielinska-Dabkowska, K.M. Seven Different Lighting Conditions in Photogrammetric Studies of a 3D Urban Mock-Up. Energies 2021, 14, 8002. https://doi.org/10.3390/en14238002
