Improving Energy Efficiency on SDN Control-Plane Using Multi-Core Controllers

Abstract

1. Introduction

1.1. SDN on Data Center Networks (DCN)

1.2. Contributions

- An evaluation of the effect of processor power on controller energy consumption.

- A parallel implementation of the Ryu controller that improves performance on multicore processors.

- A novel energy-reducing solution for SDN controllers that is not tied to any topology, requires no knowledge of the current topology, needs no special hardware, and may be applied to any number of controllers in a hierarchical or centralized organization.
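To make the idea of a parallel controller concrete, the following is a minimal sketch of one possible way to spread switches across worker cores: each switch is pinned to a worker by its datapath ID. The modulo policy and the names `worker_for` and `shard_switches` are assumptions made for illustration only; they are not taken from the paper or from the Ryu API.

```python
from collections import defaultdict


def worker_for(dpid: int, n_workers: int) -> int:
    """Map a switch's datapath ID to a worker index (hypothetical policy)."""
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    # Modulo keeps every event of a given switch on the same worker,
    # so per-switch state never has to be shared between cores.
    return dpid % n_workers


def shard_switches(dpids, n_workers):
    """Group datapath IDs by the worker that would handle them."""
    shards = defaultdict(list)
    for dpid in dpids:
        shards[worker_for(dpid, n_workers)].append(dpid)
    return dict(shards)
```

With two workers, `shard_switches([1, 2, 3, 4, 5], 2)` places the odd datapath IDs on worker 1 and the even ones on worker 0. A real controller would also need to balance load when switches generate uneven traffic, which this sketch does not attempt.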

2. Related Work

3. Materials and Methods

3.1. Energy Consumption on SDN

3.2. Energy Consumption on the Control Plane
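As a hedged illustration of how processor energy can be turned into an average power figure, the sketch below works from two readings of a cumulative energy counter in microjoules, such as the one RAPL exposes. It assumes the counter wraps back to zero after reaching a known maximum range; the function name and parameters are hypothetical, and the paper's own measurement scripts may differ.

```python
def average_power_w(e_start_uj: int, e_end_uj: int,
                    dt_s: float, max_range_uj: int) -> float:
    """Average power in watts between two cumulative energy readings."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    delta_uj = e_end_uj - e_start_uj
    if delta_uj < 0:
        # Assumption: the counter wrapped exactly once during the interval.
        delta_uj += max_range_uj
    # Microjoules to joules, then joules per second.
    return delta_uj / 1e6 / dt_s
```

The sampling interval has to be short enough that the counter cannot wrap more than once, otherwise the result underestimates the real consumption.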

3.3. Impact of Controller Energy Consumption on a Data Center Network

3.4. Multi-Core and Single-Core

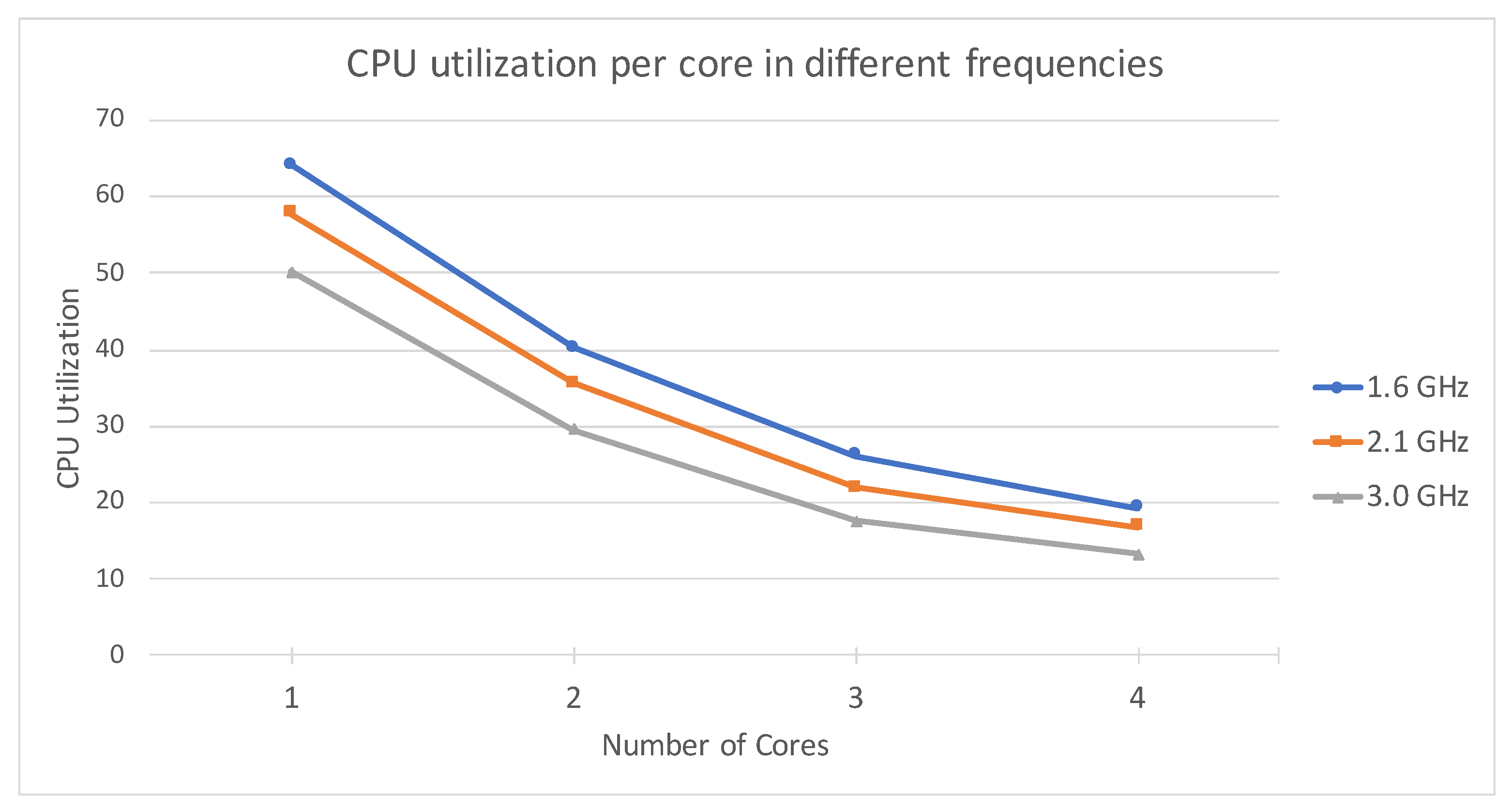

3.5. Comparing Multi-Core and Single-Core Energy Consumption

3.6. Proposed Method—Parallel SDN Controller for a Multicore Platform

Algorithm 1: Simple multicore switch

3.7. Working Frequency versus Parallelism

3.8. Capping Processor Frequency
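On Linux, the cpufreq interface cited in the references exposes per-CPU policy files under sysfs, and writing to `scaling_max_freq` (in kHz) limits the highest frequency the governor may select. The sketch below is one possible way to apply such a cap from Python; the helper name `cap_frequency` and the `root` parameter are illustrative assumptions, and writing to the real sysfs tree requires root privileges.

```python
from pathlib import Path

# Standard sysfs location of the per-CPU cpufreq policy files on Linux.
CPU_ROOT = Path("/sys/devices/system/cpu")


def _read_khz(path: Path) -> int:
    """Read a frequency value in kHz from a cpufreq sysfs file."""
    return int(path.read_text().strip())


def cap_frequency(cpu: int, khz: int, root: Path = CPU_ROOT) -> int:
    """Clamp khz to the hardware range and write it as the policy maximum.

    Returns the value actually written. `root` is overridable so the
    function can be exercised against a fake directory tree.
    """
    cpufreq = root / f"cpu{cpu}" / "cpufreq"
    lowest = _read_khz(cpufreq / "cpuinfo_min_freq")
    highest = _read_khz(cpufreq / "cpuinfo_max_freq")
    capped = max(lowest, min(khz, highest))
    (cpufreq / "scaling_max_freq").write_text(f"{capped}\n")
    return capped
```

Calling `cap_frequency(0, 2_000_000)` would ask the kernel to keep CPU 0 at or below 2 GHz, provided that value lies within the range reported by the hardware. The same effect can be obtained with the `cpupower` tool listed in the references.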

4. Simulations and Results

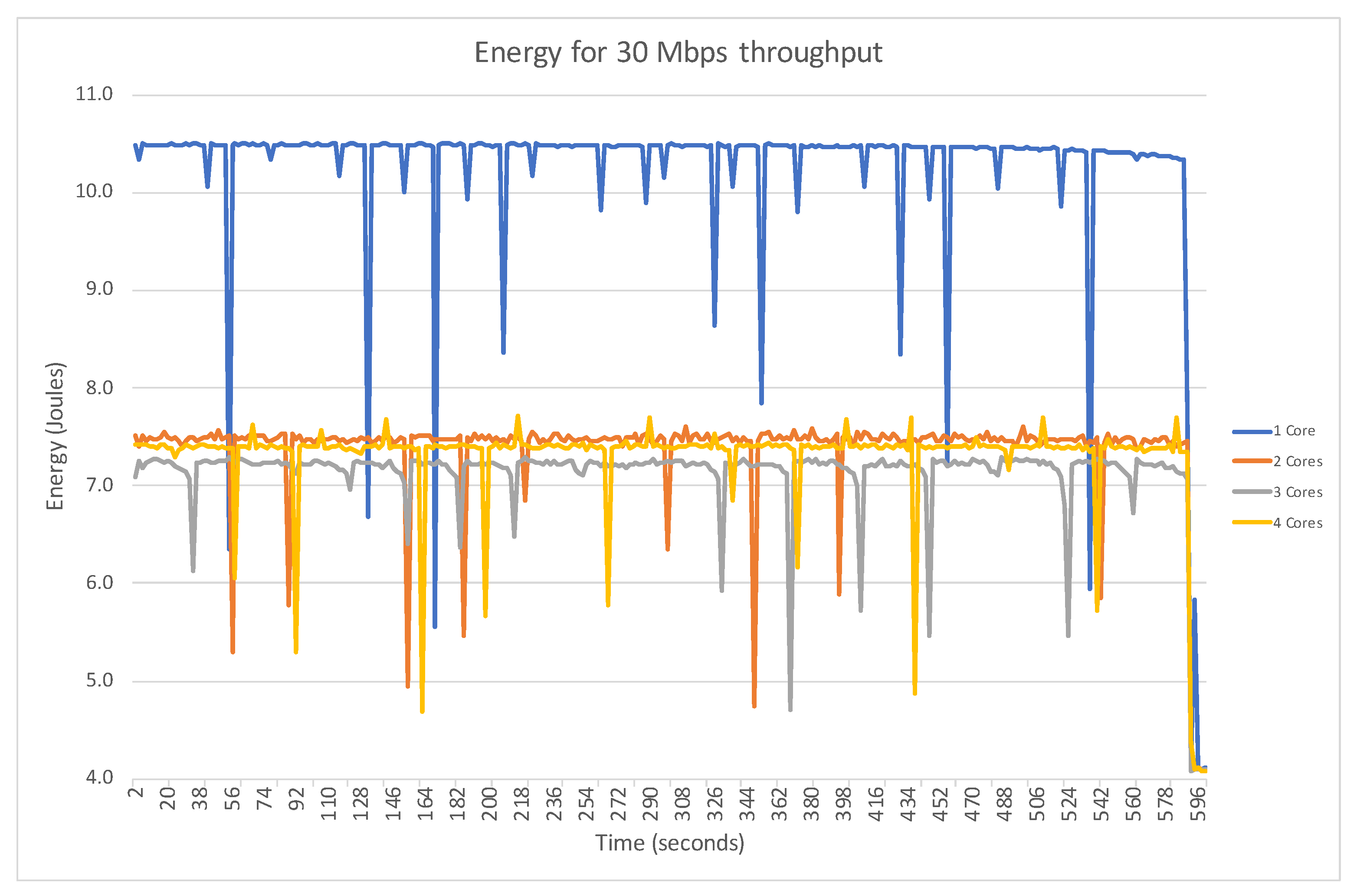

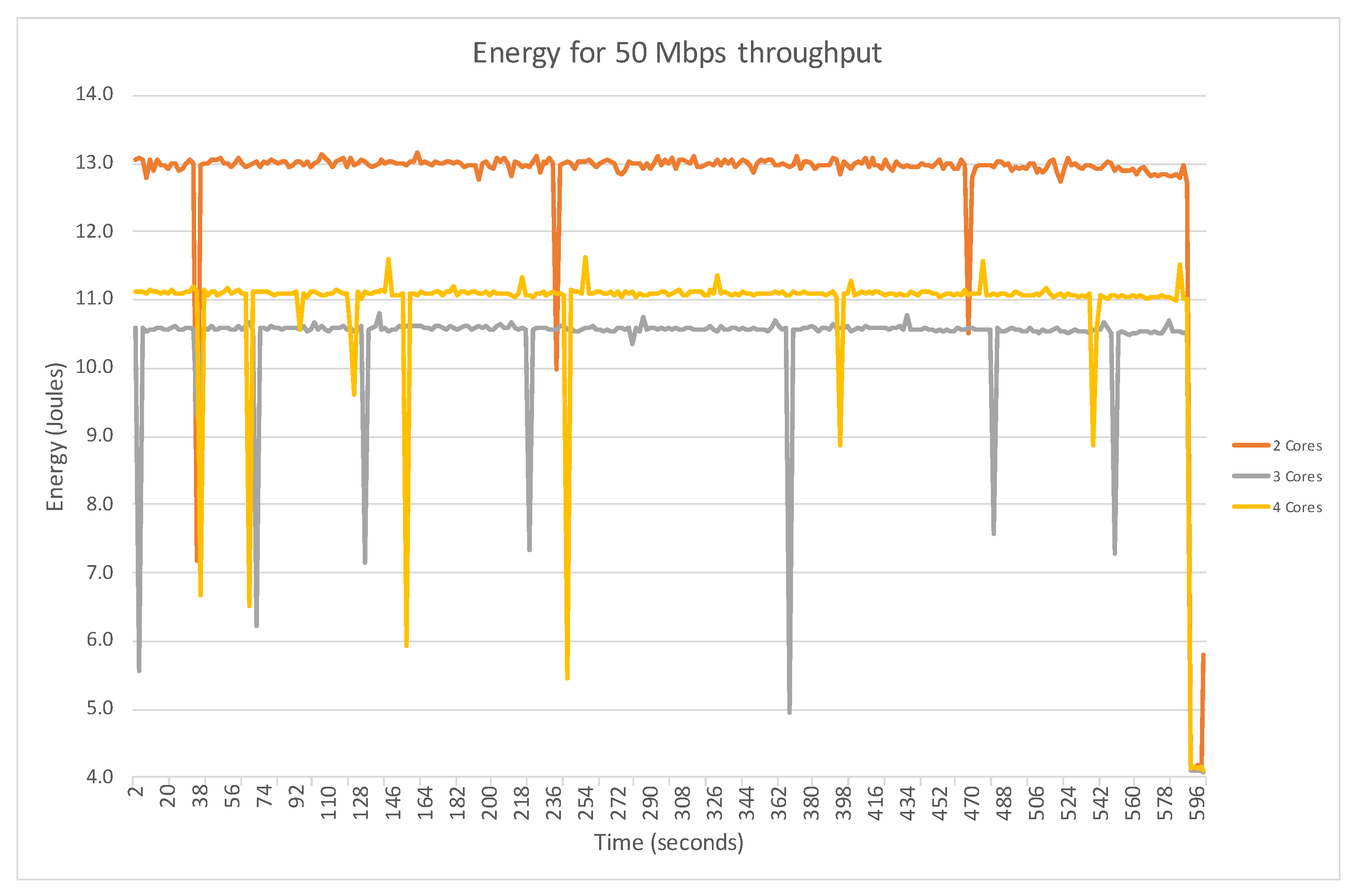

4.1. Evaluation for Constant Throughput (Iperf)

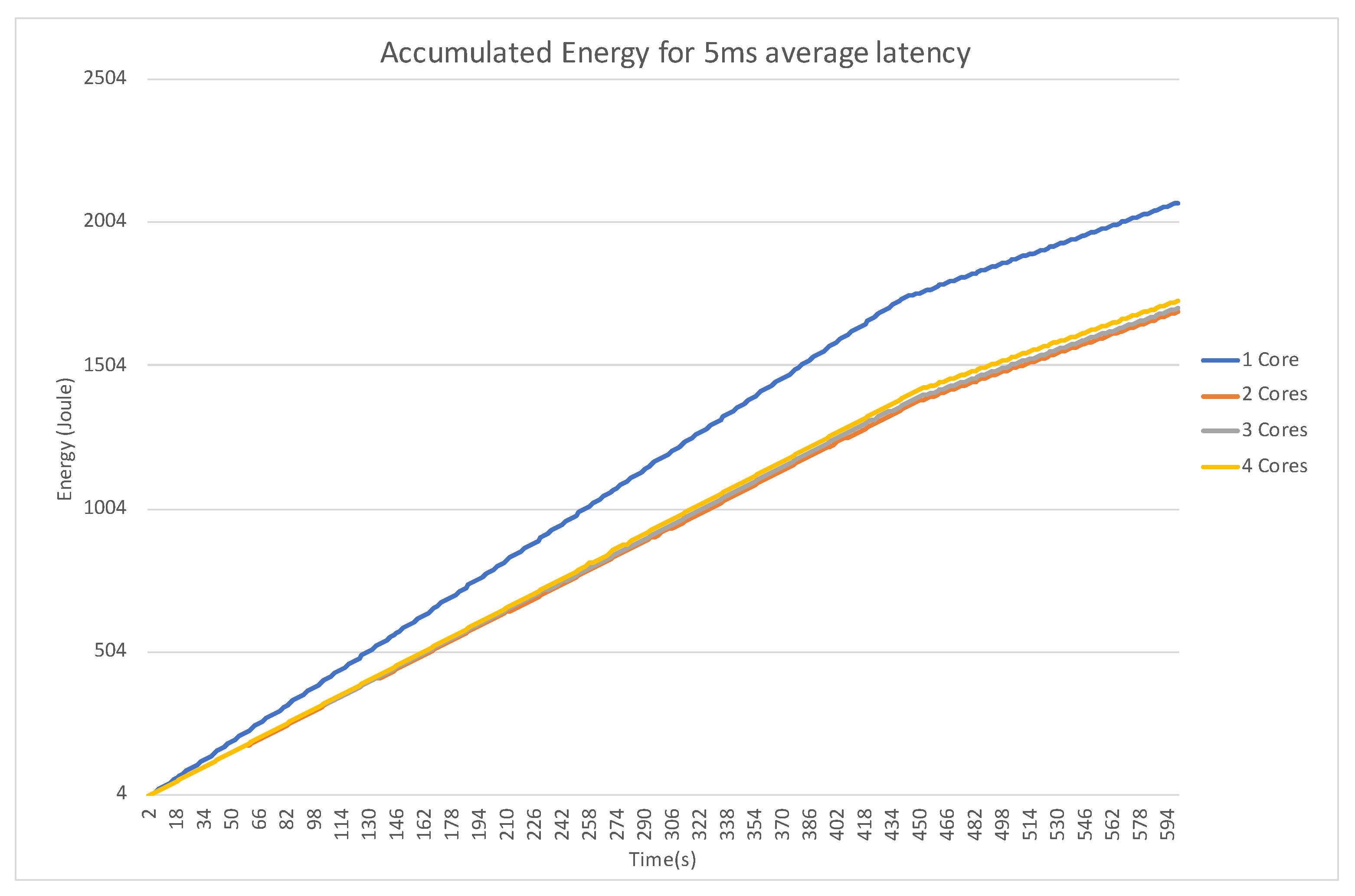

4.2. Evaluation for Maximum Latency Threshold (ICMP)

5. Conclusions and Future Work

Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SDN | Software Defined Network |
| DC | Data Center |
| ICT | Information and Communications Technology |
| PUE | Power Usage Effectiveness |
| NPUE | Network Power Usage Effectiveness |
| CNEE | Communication Network Energy Efficiency |
| TCAM | Ternary Content-Addressable Memory |
| SLA | Service Level Agreement |
| API | Application Programming Interface |
| REST | Representational State Transfer |
| CPU | Central Processing Unit |
| RAPL | Running Average Power Limit |
| MSR | Model-Specific Register |
| OS | Operating System |
| ICMP | Internet Control Message Protocol |

References

1. Lis, A.; Sudolska, A.; Pietryka, I.; Kozakiewicz, A. Cloud Computing and Energy Efficiency: Mapping the Thematic Structure of Research. Energies 2020, 13, 4117.

2. Van Heddeghem, W.; Lambert, S.; Lannoo, B.; Colle, D.; Pickavet, M.; Demeester, P. Trends in worldwide ICT electricity consumption from 2007 to 2012. Comput. Commun. 2014, 50, 64–76.

3. Abts, D.; Marty, M.R.; Wells, P.M.; Klausler, P.; Liu, H. Energy proportional datacenter networks. ACM SIGARCH Comput. Archit. News 2010, 38, 338–347.

4. Masanet, E.; Shehabi, A.; Lei, N.; Smith, S.; Koomey, J. Recalibrating global data center energy-use estimates. Science 2020, 367, 984–986.

5. Fiandrino, C.; Kliazovich, D.; Bouvry, P.; Zomaya, A.Y. Performance and Energy Efficiency Metrics for Communication Systems of Cloud Computing Data Centers. IEEE Trans. Cloud Comput. 2017, 5, 738–750.

6. Kreutz, D.; Ramos, F.M.V.; Esteves Verissimo, P.; Esteve Rothenberg, C.; Azodolmolky, S.; Uhlig, S. Software-Defined Networking: A Comprehensive Survey. Proc. IEEE 2015, 103, 14–76.

7. Assefa, B.G.; Özkasap, Ö. A survey of energy efficiency in SDN: Software-based methods and optimization models. J. Netw. Comput. Appl. 2019, 137, 127–143.

8. Rodrigues, B.B.; Riekstin, A.C.; Januario, G.C.; Nascimento, V.T.; Carvalho, T.C.M.B.; Meirosu, C. GreenSDN: Bringing energy efficiency to an SDN emulation environment. In Proceedings of the 2015 IFIP/IEEE International Symposium on Integrated Network Management (IM), Ottawa, ON, Canada, 11–15 May 2015; pp. 948–953.

9. Assefa, B.G.; Ozkasap, O. RESDN: A Novel Metric and Method for Energy Efficient Routing in Software Defined Networks. IEEE Trans. Netw. Serv. Manag. 2020, 17, 736–749.

10. Priyadarsini, M.; Kumar, S.; Bera, P.; Rahman, M.A. An energy-efficient load distribution framework for SDN controllers. Computing 2020, 102, 2073–2098.

11. Ruiz-Rivera, A.; Chin, K.W.; Soh, S. GreCo: An Energy Aware Controller Association Algorithm for Software Defined Networks. IEEE Commun. Lett. 2015, 19, 541–544.

12. Yonghong, F.; Jun, B.; Jianping, W.; Ze, C.; Ke, W.; Min, L. A Dormant Multi-Controller Model for Software Defined Networking. China Commun. 2014, 11, 45–55.

13. Usmanyounus, M.; Islam, S.; Won Kim, S. Proposition and real-time implementation of an energy-aware routing protocol for a software defined wireless sensor network. Sensors 2019, 19, 2739.

14. Xu, G.; Dai, B.; Huang, B.; Yang, J.; Wen, S. Bandwidth-aware energy efficient flow scheduling with SDN in data center networks. Future Gener. Comput. Syst. 2017, 68, 163–174.

15. Fernández-Fernández, A.; Cervelló-Pastor, C.; Ochoa-Aday, L. Energy Efficiency and Network Performance: A Reality Check in SDN-Based 5G Systems. Energies 2017, 10, 2132.

16. Son, J.; Dastjerdi, A.V.; Calheiros, R.N.; Buyya, R. SLA-Aware and Energy-Efficient Dynamic Overbooking in SDN-Based Cloud Data Centers. IEEE Trans. Sustain. Comput. 2017, 2, 76–89.

17. Zhao, Y.; Iannone, L.; Riguidel, M. On the performance of SDN controllers: A reality check. In Proceedings of the 2015 IEEE Conference on Network Function Virtualization and Software Defined Network (NFV-SDN), San Francisco, CA, USA, 18–21 November 2015; pp. 79–85.

18. Yao, G.; Bi, J.; Li, Y.; Guo, L. On the Capacitated Controller Placement Problem in Software Defined Networks. IEEE Commun. Lett. 2014, 18, 1339–1342.

19. Bannour, F.; Souihi, S.; Mellouk, A. Adaptive distributed SDN controllers: Application to Content-Centric Delivery Networks. Future Gener. Comput. Syst. 2020, 113, 78–93.

20. Heinzelman, W.; Chandrakasan, A.; Balakrishnan, H. Energy-efficient communication protocol for wireless microsensor networks. In Proceedings of the 33rd Annual Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2000; Volume 1, p. 10.

21. Aujla, G.S.; Kumar, N.; Zomaya, A.Y.; Ranjan, R. Optimal Decision Making for Big Data Processing at Edge-Cloud Environment: An SDN Perspective. IEEE Trans. Ind. Inform. 2018, 14, 778–789.

22. Hu, Y.; Luo, T.; Beaulieu, N.C.; Deng, C. The Energy-Aware Controller Placement Problem in Software Defined Networks. IEEE Commun. Lett. 2017, 21, 741–744.

23. Shehabi, A.; Smith, S.J.; Sartor, D.A.; Brown, R.E.; Herrlin, M.; Koomey, J.G.; Masanet, E.R.; Horner, N.; Azevedo, I.L.; Lintner, W. United States Data Center Energy Usage Report. Berkeley Lab. 2016, 1, 65.

24. Amdahl, G.M. Validity of the Single Processor Approach to Achieving Large Scale Computing Capabilities. In Proceedings of the Spring Joint Computer Conference, Atlantic City, NJ, USA, 18–20 April 1967; Association for Computing Machinery: New York, NY, USA, 1967; pp. 483–485.

25. Gustafson, J.L. Reevaluating Amdahl’s Law. Commun. ACM 1988, 31, 532–533.

26. Barros, C.; Silveira, L.; Valderrama, C.; Xavier-de Souza, S. Optimal processor dynamic-energy reduction for parallel workloads on heterogeneous multi-core architectures. Microprocess. Microsyst. 2015, 39, 418–425.

27. McKeown, N.; Anderson, T.; Balakrishnan, H.; Parulkar, G.; Peterson, L.; Rexford, J.; Shenker, S.; Turner, J. OpenFlow: Enabling Innovation in Campus Networks. ACM SIGCOMM Comput. Commun. Rev. 2008, 38, 69.

28. Ryu. Component-Based Software Defined Networking Framework. Available online: https://ryu-sdn.org/ (accessed on 10 January 2021).

29. CPUPower. The Cpupower Software Package. Available online: https://www.kernel.org/doc/readme/tools-power-cpupower-README (accessed on 10 January 2021).

30. Linux. CPU Performance Scaling. Available online: https://www.kernel.org/doc/html/v4.14/admin-guide/pm/cpufreq.html (accessed on 10 January 2021).

31. Linux. CPU Hotplug in the Kernel. Available online: https://www.kernel.org/doc/html/latest/core-api/cpu_hotplug.html (accessed on 10 January 2021).

32. Intel. Intel 64 and IA-32 Architectures Software Developer’s Manual Volume 3: System Programming Guide, 1st ed.; Intel Corporation: Santa Clara, CA, USA, 2019; Volume 3, pp. 471–517. Available online: https://software.intel.com/content/www/us/en/develop/download/intel-64-and-ia-32-architectures-sdm-combined-volumes-3a-3b-3c-and-3d-system-programming-guide.html (accessed on 5 January 2021).

33. IPerf. iPerf—The Ultimate Speed Test Tool for TCP, UDP and SCTP. Available online: https://iperf.fr/ (accessed on 10 January 2021).

34. Popoola, O.; Pranggono, B. On energy consumption of switch-centric data center networks. J. Supercomput. 2018, 74, 334–369.

35. Li, D.; Shang, Y.; Chen, C. Software defined green data center network with exclusive routing. In Proceedings of the IEEE INFOCOM 2014—IEEE Conference on Computer Communications, Toronto, ON, Canada, 27 April–2 May 2014; pp. 1743–1751.

36. Najm, M.; Salman, A.; Kamal, A. Network Resource Management Optimization for SDN based on Statistical Approach. Int. J. Comput. Appl. 2017, 177, 5–13.

37. Sahay, R.; Blanc, G.; Zhang, Z.; Debar, H. ArOMA: An SDN based autonomic DDoS mitigation framework. Comput. Secur. 2017, 70, 482–499.

38. Lantz, B.; Heller, B.; McKeown, N. A network in a laptop: Rapid prototyping for software-defined networks. In Proceedings of the Ninth ACM SIGCOMM Workshop on Hot Topics in Networks, Monterey, CA, USA, 20–21 October 2010; ACM Press: New York, NY, USA, 2010; pp. 1–6.

39. Ferguson, A.D.; Gribble, S.; Hong, C.Y.; Killian, C.; Mohsin, W.; Muehe, H.; Ong, J.; Poutievski, L.; Singh, A.; Vicisano, L.; et al. Orion: Google’s Software-Defined Networking Control Plane. In Proceedings of the NSDI 2021: 18th USENIX Symposium on Networked Systems Design and Implementation, Boston, MA, USA, 12–14 April 2021.

40. Cisco. Cisco Application Policy Infrastructure Controller Data Sheet. Available online: https://www.cisco.com/c/en/us/products/collateral/cloud-systems-management/application-policy-infrastructure-controller-apic/datasheet-c78-739715.html (accessed on 25 April 2021).

41. Oliveira, T. Set of Scripts to Evaluate Ryu Multi-Core Energy Consumption. Available online: https://doi.org/10.5281/zenodo.4653312 (accessed on 31 March 2021).

| Reference | Multi-Controller | Controller’s Energy | Fault-Tolerant | Partial Topology | Strategy |
|---|---|---|---|---|---|
| [20] | n | y | y | y | routing |
| [21] | y | y | y | n | load balancing |
| [22] | y | y | y | n | controller-placement |
| [16] | y | n | y | n | application-aware |
| [14] | n | n | n | n | routing |
| [15] | y | n | y | n | routing |
| [13] | n | n | n | n | routing |
| [10] | y | y | y | n | load balancing |
| [9] | n | n | n | n | routing |
| Our proposal | y | y | y | y | parallel-processing |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Oliveira, T.F.; Xavier-de-Souza, S.; Silveira, L.F. Improving Energy Efficiency on SDN Control-Plane Using Multi-Core Controllers. Energies 2021, 14, 3161. https://doi.org/10.3390/en14113161
