Electrical Energy Demand Forecasting Model Development and Evaluation with Maximum Overlap Discrete Wavelet Transform-Online Sequential Extreme Learning Machines Algorithms

Abstract

1. Introduction

2. Theoretical Background

2.1. Online Sequential Extreme Learning Machine Model (OS-ELM)
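As a hedged illustration of how OS-ELM learns sequentially, the NumPy sketch below follows the recursive least-squares formulation of Liang et al. (2006). An initial block fixes the output weights (β = H*T, with H the hidden-layer output matrix), and each later chunk of data updates β without retraining on earlier samples. The class name `OSELM`, the sigmoid activation, the uniform [-1, 1] initialisation of the input weights and hidden-node thresholds (the c_i of the symbol list), and the scalar regression target are assumptions made for illustration. They are not the settings used for the POE and MPOE models.

```python
import numpy as np


class OSELM:
    """Minimal online sequential ELM regressor (illustrative sketch, assumed settings)."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = np.random.default_rng(seed)
        # Random input weights and hidden-node thresholds; fixed after creation.
        self.a = rng.uniform(-1.0, 1.0, size=(n_inputs, n_hidden))
        self.c = rng.uniform(-1.0, 1.0, size=n_hidden)
        self.beta = None  # output weights
        self.P = None     # running inverse of H^T H

    def hidden(self, X):
        """Hidden-layer output matrix H (sigmoid activation is an assumption)."""
        return 1.0 / (1.0 + np.exp(-(np.asarray(X, dtype=float) @ self.a + self.c)))

    def init_fit(self, X0, y0):
        """Initial batch phase; needs at least n_hidden rows so H0^T H0 is invertible."""
        H0 = self.hidden(X0)
        self.P = np.linalg.inv(H0.T @ H0)
        self.beta = self.P @ H0.T @ np.asarray(y0, dtype=float)

    def partial_fit(self, X, y):
        """Sequential phase: recursive least-squares update for one new chunk."""
        H = self.hidden(X)
        PHt = self.P @ H.T
        gain = np.linalg.inv(np.eye(H.shape[0]) + H @ PHt)
        self.P = self.P - PHt @ gain @ PHt.T
        residual = np.asarray(y, dtype=float) - H @ self.beta
        self.beta = self.beta + self.P @ H.T @ residual

    def predict(self, X):
        return self.hidden(X) @ self.beta


# Usage: an initial block of 40 samples, then chunks of 20 arriving sequentially.
rng = np.random.default_rng(42)
X = rng.uniform(-2.0, 2.0, size=(200, 3))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1]
model = OSELM(n_inputs=3, n_hidden=5)
model.init_fit(X[:40], y[:40])
for start in range(40, 200, 20):
    model.partial_fit(X[start:start + 20], y[start:start + 20])
```

The recursion is algebraically equivalent to a batch least-squares fit on all the data seen so far. The sequential predictions should therefore match a one-shot fit that uses the same hidden layer, which makes a convenient sanity check.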

2.2. Maximum Overlap Discrete Wavelet Transform (MODWT)
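To make the decomposition concrete, here is a minimal NumPy sketch of the MODWT pyramid algorithm as described by Percival and Walden (2000). At level j, the level-(j-1) scaling coefficients are circularly filtered with the MODWT wavelet and scaling filters. These are the orthonormal filters divided by √2, with their taps spread 2^(j-1) samples apart. The output is the wavelet coefficients (WC1, WC2, ...) and the final scaling coefficients (SC). Only the four-tap Daubechies db2 filter is hard-coded here. The sym2 filter is generally tabulated with the same four coefficients, which may explain why the best Ipswich filter is listed as "db2/sym2" in the model-development table. The fk and coif filters would need their own coefficient tables, which are not reproduced. The function names `modwt` and `wavelet_from_scaling` are illustrative assumptions.

```python
import numpy as np

# Orthonormal Daubechies db2 scaling (low-pass) filter; its taps sum to sqrt(2).
_S3 = np.sqrt(3.0)
DB2_SCALING = np.array([1 + _S3, 3 + _S3, 3 - _S3, 1 - _S3]) / (4 * np.sqrt(2.0))


def wavelet_from_scaling(scaling):
    """Quadrature-mirror wavelet (high-pass) filter: h_l = (-1)^l * g_{L-1-l}."""
    L = len(scaling)
    return np.array([(-1) ** l * scaling[L - 1 - l] for l in range(L)])


def modwt(x, scaling=DB2_SCALING, level=2):
    """Return ([W_1, ..., W_J], V_J) from the MODWT pyramid algorithm (circular boundary)."""
    x = np.asarray(x, dtype=float)
    g = np.asarray(scaling, dtype=float) / np.sqrt(2.0)  # MODWT scaling filter
    h = wavelet_from_scaling(scaling) / np.sqrt(2.0)    # MODWT wavelet filter
    v = x.copy()
    details = []
    for j in range(1, level + 1):
        step = 2 ** (j - 1)  # filter taps are spread 2^(j-1) samples apart
        w_j = np.zeros_like(v)
        v_j = np.zeros_like(v)
        for l in range(len(g)):
            shifted = np.roll(v, step * l)  # shifted[t] = v[(t - step*l) mod N]
            w_j += h[l] * shifted
            v_j += g[l] * shifted
        details.append(w_j)
        v = v_j
    return details, v


# Usage: a two-level decomposition of a short illustrative series.
series = np.array([3.0, 5.0, 4.0, 8.0, 6.0, 7.0, 9.0, 12.0])
W, V = modwt(series, level=2)
```

Because the filtering is circular and the filters are orthonormal, the squared norms of all wavelet coefficient vectors plus that of the final scaling vector add up to the squared norm of the input. This gives a quick check for any filter substituted into the sketch. Circular filtering also wraps the end of the series around to its start. In a real forecasting setting, the boundary-affected coefficients therefore need care so that future values do not leak into the inputs.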

3. Data and Methods in the Training Period

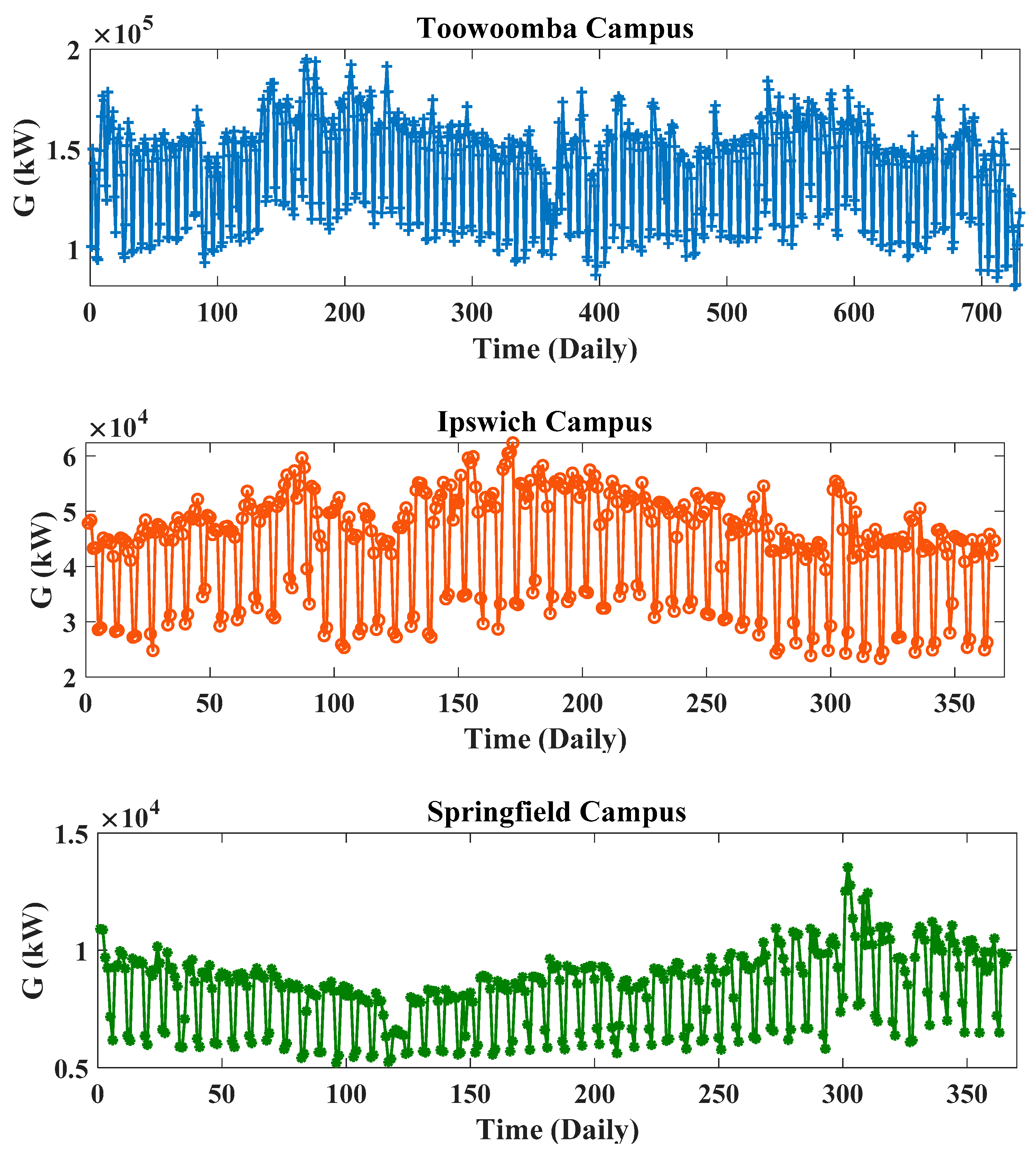

3.1. Study Area and Data

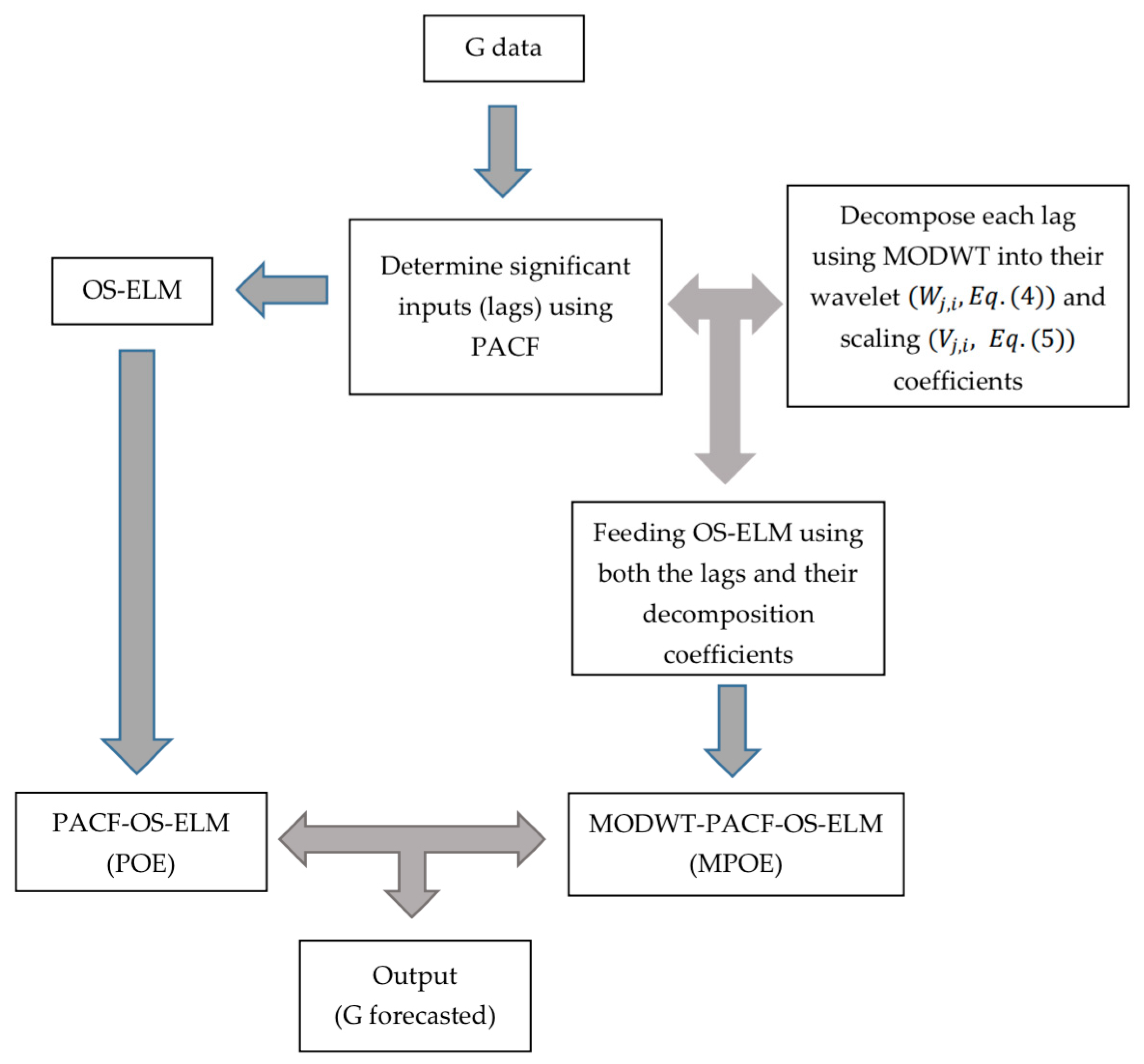

3.2. Forecast Model Development and Validation
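The model names (POE = PACF-OS-ELM, MPOE = MODWT-PACF-OS-ELM) indicate that the partial autocorrelation function guides the choice of lagged inputs. One common rule keeps the lags whose sample PACF lies outside the approximate 95% band ±1.96/√N. That rule is an assumption here, not a detail taken from the paper. The sketch below computes the sample PACF with the Durbin–Levinson recursion in plain NumPy. `sample_acf`, `pacf` and `significant_lags` are hypothetical helper names. For an MPOE-style model, the same routine would presumably be applied to each MODWT component rather than to the raw demand series.

```python
import numpy as np


def sample_acf(x, nlags):
    """Biased sample autocorrelations r_0, ..., r_nlags."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    n = x.size
    denom = np.dot(x, x)
    return np.array([np.dot(x[: n - k], x[k:]) / denom for k in range(nlags + 1)])


def pacf(x, nlags):
    """Sample PACF via the Durbin-Levinson recursion; pacf[0] is 1 by convention."""
    r = sample_acf(x, nlags)
    out = np.zeros(nlags + 1)
    out[0] = 1.0
    phi = np.array([])  # AR coefficients phi_{k-1,1..k-1} from the previous order
    for k in range(1, nlags + 1):
        if k == 1:
            phi_kk = r[1]
            phi = np.array([phi_kk])
        else:
            num = r[k] - np.dot(phi, r[k - 1:0:-1])
            den = 1.0 - np.dot(phi, r[1:k])
            phi_kk = num / den
            phi = np.append(phi - phi_kk * phi[::-1], phi_kk)
        out[k] = phi_kk
    return out


def significant_lags(x, nlags, z=1.96):
    """Lags whose |PACF| exceeds z / sqrt(N) (approximate 95% band for z = 1.96)."""
    bound = z / np.sqrt(len(x))
    values = pacf(x, nlags)
    return [k for k in range(1, nlags + 1) if abs(values[k]) > bound]


# Usage: a simulated AR(1) series, for which lag 1 should dominate the PACF.
rng = np.random.default_rng(7)
noise = rng.normal(size=2000)
ar1 = np.zeros(2000)
for t in range(1, 2000):
    ar1[t] = 0.8 * ar1[t - 1] + noise[t]
lags = significant_lags(ar1, nlags=10)
```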

4. Model Evaluation and Results in the Testing Period

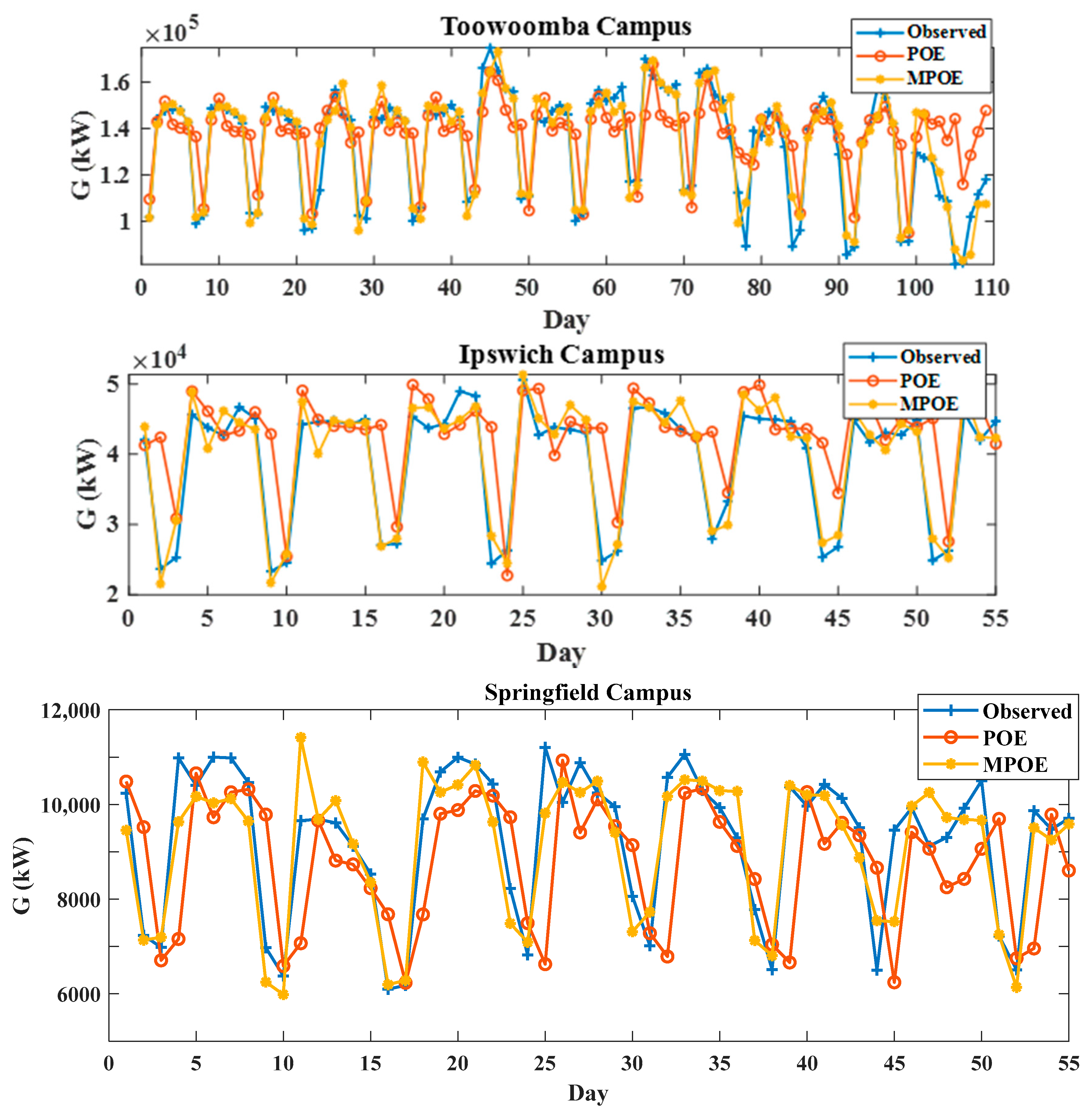

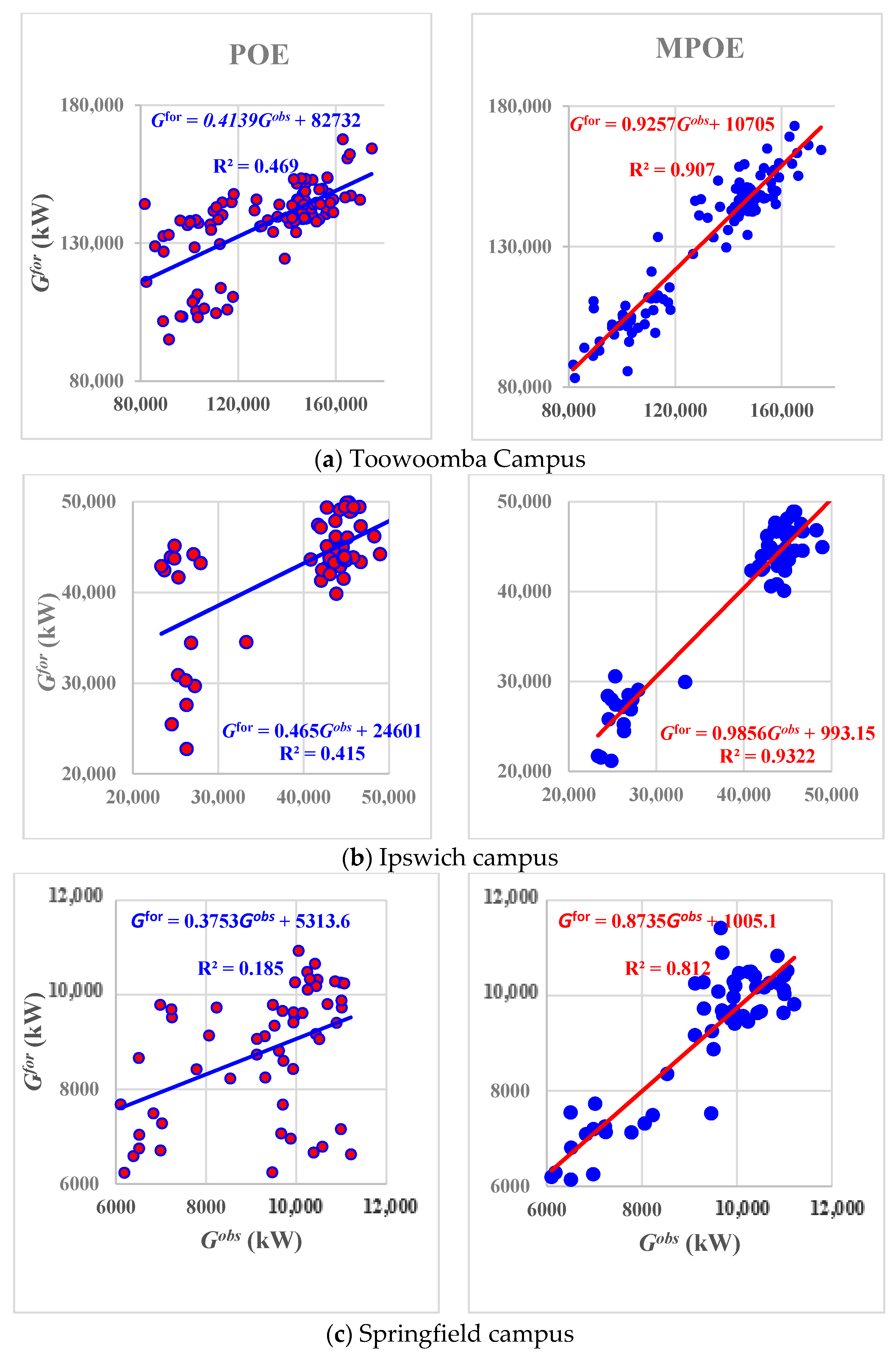

4.1. Model Prediction Quality

4.2. Results and Discussion

5. Challenges and Future Work

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Acronyms

| Symbol/Acronym | Definition |
|---|---|
| ci | Threshold of the ith hidden node |
| | Coiflets wavelet filter |
| | Daubechies wavelet filter |
| | SFLM activation function |
| | Fejér–Korovkin wavelet filter |
| | jth level scaling filter |
| | jth level wavelet filter |
| int(.) | Nearest integer function |
| k | Number of hidden nodes in SFLM |
| kW | Kilowatts |
| r | Pearson's correlation coefficient |
| | Symlets wavelet filter |
| | Weight vectors linking the ith hidden node with the input nodes (SFLM) |
| ANN | Artificial neural network |
| CRO | Coral reef optimization |
| DWT | Discrete wavelet transform |
| DWT-MRA | Discrete wavelet transform–multiresolution analysis |
| ECMWF | European Centre for Medium-Range Weather Forecasts |
| ELM | Extreme learning machine |
| ENS | Nash–Sutcliffe model efficiency coefficient |
| | Absolute forecast error statistics |
| G | Electricity demand (kW) |
| | ith forecasted value of G (kW) |
| | Mean of forecasted G values (kW) |
| POE | PACF-OS-ELM |
| | Coefficient of determination |
| RMSE | Root-mean-square error |
| RRMSE | Relative root-mean-square error (%) |
| SC | Scaling coefficient (MODWT) |
| SFLM | Single-layer feed-forward neural network |
| | ith observed value of G (kW) |
| | Mean of observed G values (kW) |
| GGA | Grouping genetic algorithm |
| H | Hidden-layer output matrix of the SFLM |
| H* | Generalized (Moore–Penrose) inverse of H |
| IIS | Iterative input selection |
| J | Decomposition level |
| Lj | Width of the jth level filters |
| LM | Legates and McCabe's index |
| M | Hidden neuron size |
| MAE | Mean absolute error |
| MAPE | Mean absolute percentage error (%) |
| MARS | Multivariate adaptive regression spline |
| MLR | Multiple linear regression |
| MODIS | Moderate Resolution Imaging Spectroradiometer (NASA) |
| MODWT | Maximum overlap discrete wavelet transform |
| MODWT-MRA | Maximum overlap discrete wavelet transform–multiresolution analysis |
| MPOE | MODWT-PACF-OS-ELM |
| MRA | Multiresolution analysis |
| N | Number of values in a data series |
| OS-ELM | Online sequential extreme learning machine |
| PACF | Partial autocorrelation function |
| SVR | Support vector regression |
| | MODWT scaling coefficients |
| | MODWT wavelet coefficients |
| WC1, WC2 | MODWT wavelet coefficients |
| WI | Willmott's index |
| WT | Wavelet transforms |
| | Weight vectors linking the ith hidden node with the output node (SFLM) |


| Station | Data Period (dd-mm-yyyy) | 15-min Records: Total | 15-min Records: No. Zeros | Daily Data Points: Total | Daily Data Points: Training (70%) | Daily Data Points: Validation (15%) | Daily Data Points: Testing (15%) | Daily Minimum (kW) | Daily Maximum (kW) | Daily Mean (kW) |
|---|---|---|---|---|---|---|---|---|---|---|
| Toowoomba campus (Main feed) | 01-01-2013 to 31-12-2014 | 70,080 | 60 | 730 | 512 | 109 | 109 | 81,579.70 | 195,037 | 141,328 |
| Ipswich campus (Main feed) | 01-09-2015 to 31-08-2016 | 35,136 | 30 | 366 | 256 | 55 | 55 | 23,336 | 62,378.06 | 43,716.96 |
| Springfield campus (A Block) | 01-09-2015 to 31-08-2016 | 35,136 | 0 | 366 | 256 | 55 | 55 | 5,214 | 13,536.80 | 8,310.05 |

| Station | Model | No. Hidden Neurons | No. Wavelet/Scaling Filters | No. Wavelet Levels | No. Models Developed | Best Model: Training r | Best Model: Training RMSE (kW) | Best Model: Validation r | Best Model: Validation RMSE (kW) | Best Model: Wavelet/Scaling Filter | Best Model: Wavelet Level | Best Model: Hidden Neuron Size |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Toowoomba campus (Main feed) | POE | 100 | n/a (non-wavelet model) | n/a | 100 | 0.70 | 17,715.42 | 0.74 | 16,284.72 | n/a (non-wavelet model) | n/a | 9 |
| Toowoomba campus (Main feed) | MPOE | 100 | 30 | 9 | 27,000 | 0.96 | 7,260.42 | 0.94 | 8,026.74 | fk8 | 2 | 90 |
| Ipswich campus (Main feed) | POE | 100 | n/a (non-wavelet model) | n/a | 100 | 0.68 | 6,944.43 | 0.67 | 7,543.30 | n/a (non-wavelet model) | n/a | 10 |
| Ipswich campus (Main feed) | MPOE | 100 | 30 | 8 | 24,000 | 0.97 | 2,476.19 | 0.90 | 4,279.08 | db2/sym2 | 3 | 54 |
| Springfield campus (A Block) | POE | 100 | n/a (non-wavelet model) | n/a | 100 | 0.65 | 1,036.28 | 0.61 | 1,641.08 | n/a (non-wavelet model) | n/a | 4 |
| Springfield campus (A Block) | MPOE | 100 | 30 | 8 | 24,000 | 0.95 | 441.64 | 0.89 | 1,164.65 | fk14 | 5 | 76 |

| Station | Model | WI | ENS | RMSE (kW) | MAE (kW) | MAPE (%) | RRMSE (%) | LM |
|---|---|---|---|---|---|---|---|---|
| Toowoomba campus (Main feed) | POE | 0.76 | 0.42 | 18,030.44 | 12,812.32 | 11.31 | 13.58 | 0.39 |
| Toowoomba campus (Main feed) | MPOE | 0.98 | 0.91 | 7,267.62 | 5,400.99 | 4.31 | 5.47 | 0.74 |
| Ipswich campus (Main feed) | POE | 0.75 | 0.23 | 7,564.84 | 4,860.76 | 16.29 | 19.29 | 0.36 |
| Ipswich campus (Main feed) | MPOE | 0.98 | 0.93 | 2,337.80 | 1,980.87 | 5.46 | 5.96 | 0.74 |
| Springfield campus (A Block) | POE | 0.67 | -0.10 | 1,612.92 | 1,142.36 | 12.49 | 17.43 | 0.11 |
| Springfield campus (A Block) | MPOE | 0.95 | 0.80 | 692.78 | 540.30 | 5.84 | 7.49 | 0.58 |
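Every column of the testing-period table can be computed from paired observed and forecasted demand (G). The sketch below uses the standard textbook definitions: Willmott's index of agreement in its original 1981 form, Nash–Sutcliffe efficiency, Legates and McCabe's index with absolute deviations, and RRMSE expressed relative to the observed mean. It is an assumption that the authors used exactly these variants (Willmott's later refined index, for example, differs). The name `evaluate` is hypothetical.

```python
import numpy as np


def evaluate(observed, forecast):
    """Error metrics for one model over a testing period (assumed standard definitions)."""
    o = np.asarray(observed, dtype=float)
    f = np.asarray(forecast, dtype=float)
    err = f - o
    o_mean = o.mean()
    rmse = np.sqrt(np.mean(err ** 2))
    return {
        # Willmott's index of agreement (original form)
        "WI": 1.0 - np.sum(err ** 2) / np.sum((np.abs(f - o_mean) + np.abs(o - o_mean)) ** 2),
        # Nash-Sutcliffe efficiency: 1 is perfect, below 0 is worse than the observed mean
        "ENS": 1.0 - np.sum(err ** 2) / np.sum((o - o_mean) ** 2),
        "RMSE": rmse,
        "MAE": np.mean(np.abs(err)),
        "MAPE": 100.0 * np.mean(np.abs(err / o)),  # requires non-zero observations
        "RRMSE": 100.0 * rmse / o_mean,
        # Legates and McCabe's index with absolute deviations
        "LM": 1.0 - np.sum(np.abs(err)) / np.sum(np.abs(o - o_mean)),
    }
```

The RRMSE definition is consistent with the table. Dividing each model's RMSE by its RRMSE gives nearly the same implied testing-period mean demand for POE and MPOE at each site: about 132,800 kW at Toowoomba, 39,200 kW at Ipswich and 9,250 kW at Springfield. The negative ENS of the Springfield POE model means its forecasts were, on this measure, less accurate than simply predicting the observed mean.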

| Station | Wilcoxon Signed-Rank Test (p Value) | t-Test (p Value) |
|---|---|---|
| Toowoomba campus (Main feed) | 0.00001 | 0.00001 |
| Ipswich campus (Main feed) | 0.00076 | 0.00053 |
| Springfield campus (A Block) | 0.00018 | 0.00043 |
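The table does not state which paired samples were compared. Given that the symbol list defines absolute forecast error statistics, one plausible reading is that the absolute errors of POE and MPOE over the testing period were tested against each other. Under that assumption, both tests are available in SciPy: `scipy.stats.wilcoxon` for the non-parametric signed-rank test and `scipy.stats.ttest_rel` for a paired t-test. Using the paired form of the t-test is itself an assumption. The arrays below are synthetic placeholders, not the study's data, and `compare_models` is a hypothetical helper name.

```python
import numpy as np
from scipy import stats


def compare_models(observed, forecast_a, forecast_b):
    """p values of paired tests on the absolute errors of two models (assumed setup)."""
    o = np.asarray(observed, dtype=float)
    abs_err_a = np.abs(np.asarray(forecast_a, dtype=float) - o)
    abs_err_b = np.abs(np.asarray(forecast_b, dtype=float) - o)
    wilcoxon_p = stats.wilcoxon(abs_err_a, abs_err_b).pvalue
    ttest_p = stats.ttest_rel(abs_err_a, abs_err_b).pvalue
    return wilcoxon_p, ttest_p


# Synthetic example: model B tracks the observations more closely than model A.
rng = np.random.default_rng(0)
obs = 100.0 + 10.0 * rng.standard_normal(109)
fc_a = obs + 12.0 * rng.standard_normal(109)
fc_b = obs + 3.0 * rng.standard_normal(109)
p_wilcoxon, p_ttest = compare_models(obs, fc_a, fc_b)
```

Reporting both tests is useful because the signed-rank test does not assume normally distributed error differences, whereas the t-test does.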

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Al-Musaylh, M.S.; Deo, R.C.; Li, Y. Electrical Energy Demand Forecasting Model Development and Evaluation with Maximum Overlap Discrete Wavelet Transform-Online Sequential Extreme Learning Machines Algorithms. Energies 2020, 13, 2307. https://doi.org/10.3390/en13092307