1. Introduction

The concept of efficiency, defined as the output obtained per unit of input, forms the basis of performance evaluation. Energy research projects are no exception: they consume resources, such as research funding and personnel, to produce outputs, such as papers, patents, and technology transfers. Evaluating the relationship between input and output in energy research projects is therefore important, because the results are used to assess the efficiency of the organization and its constituent units, which in turn enables the optimal distribution of resources.

However, there are many pitfalls to evaluating the performance of projects or the organizations carrying out such projects. One of these is the issue of variety. In research, both input and output take diverse forms, resulting in the need to select a performance evaluation method that is the most conducive to the development of the organization.

In the current performance evaluation system, most energy research projects are evaluated by multiplying each of the diverse research outputs by its weight value, summing the weighted results, and dividing this total by the research expenses incurred.

Unfortunately, this weighting method poses the following problems.

First, in this method, each output is multiplied by its weight value to yield an individual score, and the individual scores are then summed into an overall score. Because all outputs, regardless of type, are represented by this single score, the producer tends to focus on achieving a high total, which opens the door to output bias. Output bias is the tendency to concentrate on outputs that are easier to produce, rather than expending effort on more difficult ones, in order to reach a given total score. A single total score is therefore problematic: it dilutes the distinctive characteristics of individual outputs, invites output bias (skewing), and diminishes the diversity of research performance.

Second, because the evaluation of output is based on weighted scores, the various outputs must be held to the same standard of measurement. With existing measurement techniques, it is difficult to find a rational method of evaluation when outputs are expressed in diverse units, such as number, size, and monetary amount, or when an output spans so broad a range of values that appropriate weight values are hard to assign, as is the case with royalty income.

Therefore, in this study, a variant of data envelopment analysis (DEA) known as super-efficiency (SE) was used to evaluate the performance of energy research projects. DEA has been widely used as an efficiency assessment method in energy efficiency studies [1,2,3,4,5,6,7,8,9,10,11,12,13,14] because it offers several key advantages. When DEA is used to analyze efficiency, weight values are calculated automatically within the model itself. Additionally, the method allows the use of variables based on differing standards of measurement [8,15]. However, DEA typically presents the following problem when applied to the ranking of decision-making units (DMUs). As a method for analyzing the efficiency of DMUs, DEA primarily separates efficient DMUs from inefficient ones [16]. Inefficient DMUs receive scores that can be used to rank them, but efficient DMUs all receive a score of 1, which makes it difficult to discern a hierarchy among them [6,9,10,13]. This would not be an issue if the goal were only to ascertain the efficiency or inefficiency of DMUs, or to divide DMUs into groups and obtain their mean values. However, if every DMU in the evaluated group must be ranked, a complete ranking becomes impossible because all efficient DMUs tie for first place.

Therefore, this study proposes DEA-SE analysis, a form of DEA analysis, as an alternative to the evaluation system currently being used to analyze the efficiency of energy research projects. As DEA-SE is a variant of DEA, it can be applied directly without converting input and output into homogenized variables. It is also free from such technicalities as weight values and measurement units, and enables the accurate determination of ranking, which was impossible using typical DEA techniques [6,9,10,13].

Accordingly, in this study, actual research project data from a national energy research institute in Korea were used to analyze the performance of energy research projects, and the analysis results obtained using a weighting method were compared with those obtained using the proposed SE method.

The following section examines the performance evaluation method currently used for energy research projects, and the new performance evaluation method proposed in this study as an alternative. In Section 3, the various methods examined in Section 2 are subjected to comparative verification. Finally, Section 4 summarizes the conclusions and implications of this study.

2. Research Methodology and Data

2.1. Research Performance Evaluation Using Weighting Method

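The performance evaluation method currently used at the national energy research institute in Korea is a simple efficiency measurement system consisting of a single input variable, research funding, and a single output variable, the sum of the paper score, patent score, and technology transfer score, as shown in Equation (1):

$$E = \frac{S_{\text{paper}} + S_{\text{patent}} + S_{\text{transfer}}}{F} \qquad (1)$$

where $E$ is the evaluation score of a project; $S_{\text{paper}}$, $S_{\text{patent}}$, and $S_{\text{transfer}}$ are its weighted paper, patent, and technology transfer scores; and $F$ is its research funding.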

Broadly speaking, performance evaluation by the weighting method has two inherent problems.

First, because the various outputs are represented by a single variable, acquired by adding together the weighted values of all outputs, the distinctive characteristics of individual outputs are obscured. Given this characteristic, there is a possibility of focusing on the output with the highest weight score, or the output that can most easily achieve the target score. That is, once a target score to be achieved has been set, the researcher may choose the most easily achievable of the three variables constituting the total value and focus on producing relevant results, thus making it easier to attain the targeted total score. The problem inherent in this method is that performance output is focused on a single area, thus deterring the future development of research institutions driven by the diversity of output.

Second, since all output scores consist of the number of papers, patents, or technology transfers multiplied by the respective weight values, the determination of the weight values becomes problematic when the variables involved have diverse measurement criteria, such as percentage, monetary amount, and so on. This not only makes the output measurements unsuitable for use but also poses a limitation on the measures of output that may be used in the future, thus constraining the variety of output measurements.

To resolve such problems, this study proposes an SE analysis method based on DEA.
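The weighting calculation described in this subsection, and the output bias it permits, can be sketched in a few lines of Python. The weight values, project figures, and function name below are hypothetical and chosen only for illustration; the institute's actual weights are those reported in Table 1.

```python
# Hypothetical weights per unit of output (NOT the institute's actual values).
WEIGHTS = {"paper": 10.0, "patent": 20.0, "transfer": 30.0}

def weighting_score(counts, funding_usd, weights=WEIGHTS, per=100_000):
    """Weighted output total divided by funding, expressed per `per` US$."""
    total = sum(weights[k] * counts.get(k, 0) for k in weights)
    return total * per / funding_usd

# Two hypothetical projects with identical funding.
only_papers = weighting_score({"paper": 6}, 200_000)
mixed = weighting_score({"paper": 1, "patent": 1, "transfer": 1}, 200_000)

# Both projects receive the same single score, so the score alone cannot
# tell a paper-only project from one with diversified output.
print(only_papers, mixed)
```

Because the two projects are indistinguishable under the single score, a researcher aiming at a target total has no incentive to pursue the harder outputs, which is the skewing effect described above.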

2.2. Literature Review Using DEA and DEA-SE

The DEA method has been widely used to study energy efficiency as an efficiency assessment method [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. DEA is recognized in the literature as a powerful method, more suitable for performance measurement activities than traditional econometric methods, such as regression analysis and simple ratio analysis [8,15].

It was used by Thakur et al. [1] to assess the efficiency of Indian state-owned electric utilities, by Mukherjee [2] to measure the energy efficiency of India’s manufacturing sector, and by Honma and Hu [3] to measure regional total-factor energy efficiency. DEA methodologies were proposed and used by Sueyoshi et al. [4] in evaluating the variegated performance of coal-fired power plants and by Shi et al. [5] in evaluating China’s regional industrial energy efficiency.

More recently, Yang et al. [6] measured the environmental efficiency of China with an environmental super-efficiency data envelopment analysis model, using data from 30 provinces for the period 2000–2010. They found regional disparities in environmental efficiency across the 30 provinces: during the period studied, the eastern areas were the most efficient in production, the western areas ranked last, and the central areas ranked in between. Additionally, they proposed that policies should be established to further promote production efficiency.

Chodakowska and Nazarko [7] presented the concept of environmental efficiency analysis based on data envelopment analysis in the presence of both desirable and undesirable results, and proposed an integration of the environmental DEA method with the concept of technological competitors. As a result, the possibility of applying DEA to technological competition was presented in the form of a classification and benchmarking of the European countries. They also indicated that European countries are highly diversified with regard to the efficiency of environmental performance.

Mardani et al. [8] reviewed DEA models with regard to energy efficiency. They reviewed and summarized 144 scholarly papers published in 45 high-ranking journals between 2006 and 2015 to provide a comprehensive review of DEA applications in energy efficiency. They showed that DEA holds strong promise as an evaluative tool for future analyses of energy efficiency issues in which the production function between inputs and outputs is virtually absent or extremely difficult to acquire.

Li et al. [9] examined three methods, namely super-efficiency data envelopment analysis, the technique for order preference by similarity to an ideal solution (TOPSIS), and complex proportional assessment (COPRAS), to evaluate the system priority and analyze the sensitivity under different decision-making scenarios. They indicated that when decision-makers consider only cost factors, traditional systems are the best choice, and that the photovoltaic storage system was the best system in many decision-making scenarios because of its comprehensive advantages in cost, energy efficiency, and environmental benefit.

Bae et al. [10] examined the carbon capture, utilization, and storage research performance of 31 countries from the knowledge spillover perspective by using super-efficiency. In their study, the numbers of patents and research articles from 2000 to 2016 were used as input variables, and the numbers of research article citations, patent citations, and registrations of triadic patent families as of 2017 were used as output variables to analyze efficiency. According to the results, the US, European countries, Japan, Australia, and New Zealand showed relatively high efficiency. The results can also provide meaningful information to policymakers for identifying ex post research and development (R&D) activities and for planning.

Chodakowska and Nazarko [11] conducted a literature review of relevant research papers published in the period 2016–2020 for the assessment of the sustainable development goals of the European Union. For their analysis, they used hard data from the International Energy Agency and the results of an EU survey regarding the influence of the socio-economic environment on CO2 emissions in EU countries. As a result, they proposed hybrid rough set DEA and rough set network DEA models that integrate both approaches. Their study demonstrated that a multifaceted and objective assessment is possible by merging concepts from set theory and operational research.

Ilyas et al. [12] evaluated the energy efficiency of pastoral and barn dairy farming systems in New Zealand by applying a data envelopment analysis approach. The constant returns to scale (CCR) and variable returns to scale (BCC) DEA models were employed to determine the technical, pure technical, and scale efficiencies of the two dairy systems. Based on the results, they recommended energy auditing and the use of renewable energy and precision agricultural technology to improve energy efficiency in both dairy systems.

Song et al. [13] used the super-efficiency DEA method to measure energy and power efficiency based on panel data from 30 provinces and cities in China from 2009 to 2017. Their results showed that China’s efficiency level takes the supply-side structural reform as its turning point and presents a volatile upward trend. They proposed that the results can offer a reference for the sustainable comprehensive utilization of China’s energy and power, and provide empirical evidence for other countries seeking to improve energy and power efficiency from the perspectives of theory and policy.

Xu et al. [14] analyzed the overall situation of related literature published in 2011–2019 and introduced the definition, measurement, and evaluation variables of energy efficiency. In addition, they reviewed the current DEA model and DEA extension models and applications based on different scenarios. They also proposed further research, such as energy efficiency issues in enterprises and energy efficiency based on a complex data environment.

Results obtained from this review show that the DEA and DEA-SE methods are appropriate for the evaluation of energy efficiency [1,2,3,4,5,6,7,8,9,10,11,12,13,14].

2.3. Research Model

Research on measuring multi-input multi-output efficiency began with the work of Koopmans [17] and Debreu [18], who drew on the concept of Pareto optimality to define efficiency. However, such a definition, while offering a systematic method for discriminating between efficient and inefficient states, failed to provide information on degrees of efficiency. To redress this limitation, Farrell [19] proposed a method for evaluating efficiency by measuring how far the subject of analysis lies from the efficient group. Based on this methodology, the DEA CCR model, which uses linear programming for a frontier analysis of inputs and outputs, was developed by Charnes et al. [20]. This was followed by the DEA BCC model of Banker et al. [21]. The designations CCR and BCC are taken from the names of the respective researchers. The DEA CCR model posits constant returns to scale (CRS), whereas the BCC model posits variable returns to scale (VRS), as shown in Equation (2). The efficiency score is derived from multiple inputs and multiple outputs. In short, the DEA model evaluates the performance of DMUs that produce multiple outputs from a combination of multiple inputs.

DEA CCR (BCC) input-oriented model [20,21]:
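For reference, the input-oriented model can be written in its standard envelopment form as below. The notation is the conventional textbook one and is an assumption here; it may differ from the symbols used in the original Equation (2). DMU $o$ is the unit under evaluation, $x_{ij}$ and $y_{rj}$ are the $i$-th input and $r$-th output of DMU $j$, $\lambda_j$ are the intensity weights, and $\theta$ is the efficiency score.

```latex
\begin{aligned}
\min_{\theta,\,\lambda} \quad & \theta \\
\text{s.t.} \quad
  & \sum_{j=1}^{n} \lambda_j x_{ij} \le \theta\, x_{io}, && i = 1,\dots,m, \\
  & \sum_{j=1}^{n} \lambda_j y_{rj} \ge y_{ro},          && r = 1,\dots,s, \\
  & \lambda_j \ge 0,                                     && j = 1,\dots,n, \\
  & \sum_{j=1}^{n} \lambda_j = 1 \quad \text{(BCC only; omitted under CCR)}.
\end{aligned}
```

Without the convexity constraint the frontier exhibits constant returns to scale (CCR); adding it yields variable returns to scale (BCC).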

DEA categorizes DMUs into efficient and inefficient DMUs. Efficient DMUs are assigned an efficiency score of 1 and the remaining inefficient DMUs take a value that is less than 1. However, even DEA, which can handle multiple inputs and outputs as well as variables with differing measurement criteria, has one crucial disadvantage when applied to the evaluation of energy research projects. Because DEA assigns an efficiency score of 1 to all efficient DMUs, it becomes impossible to assign a hierarchy among them. Hence, ranking is possible only for inefficient DMUs. This limits the efficacy of DEA as an evaluation system for the institute, which must rank all energy research projects, both efficient and inefficient, for the purpose of policy decision-making.
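The tie among efficient DMUs can be reproduced with a minimal sketch of the input-oriented CCR model solved as a linear program. The four DMUs, their data, and the function name `dea_ccr_input` are hypothetical; the solver call is `scipy.optimize.linprog`, and the formulation is the standard envelopment form rather than code from this study.

```python
import numpy as np
from scipy.optimize import linprog

def dea_ccr_input(X, Y, o):
    """Input-oriented CCR efficiency of DMU o.

    X has shape (n_dmu, n_inputs); Y has shape (n_dmu, n_outputs).
    Decision vector is [theta, lambda_1, ..., lambda_n].
    """
    n, m = X.shape
    s = Y.shape[1]
    c = np.zeros(n + 1)
    c[0] = 1.0  # minimise theta
    # Inputs:  sum_j lambda_j * x_ij - theta * x_io <= 0
    A_in = np.hstack([-X[o].reshape(m, 1), X.T])
    # Outputs: -sum_j lambda_j * y_rj <= -y_ro
    A_out = np.hstack([np.zeros((s, 1)), -Y.T])
    res = linprog(c,
                  A_ub=np.vstack([A_in, A_out]),
                  b_ub=np.concatenate([np.zeros(m), -Y[o]]),
                  bounds=[(0, None)] * (n + 1),
                  method="highs")
    return res.fun

# Hypothetical DMUs B, C, D, E: two inputs, one output normalised to 1.
X = np.array([[1.0, 4.0], [2.0, 2.0], [4.0, 1.6], [3.0, 3.0]])
Y = np.ones((4, 1))
scores = [dea_ccr_input(X, Y, o) for o in range(len(X))]
for name, sc in zip("BCDE", scores):
    print(name, round(sc, 4))
```

In this toy data set B, C, and D all lie on the frontier and receive the same score, so only the inefficient DMU E can be placed in a ranking.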

Super-efficiency was proposed by Andersen and Petersen [22] as a way to identify a hierarchy among DMUs, and has since been used in the studies of Zhu [23], Seiford and Zhu [24], Lovell and Rouse [25], and Chen [26]. The main purpose of SE is to determine a ranking among efficient DMUs. The significance of the SE method is therefore that it reassesses efficient DMUs and expresses their efficiencies as values of 1 or more, thus enabling a hierarchical evaluation of efficient DMUs.

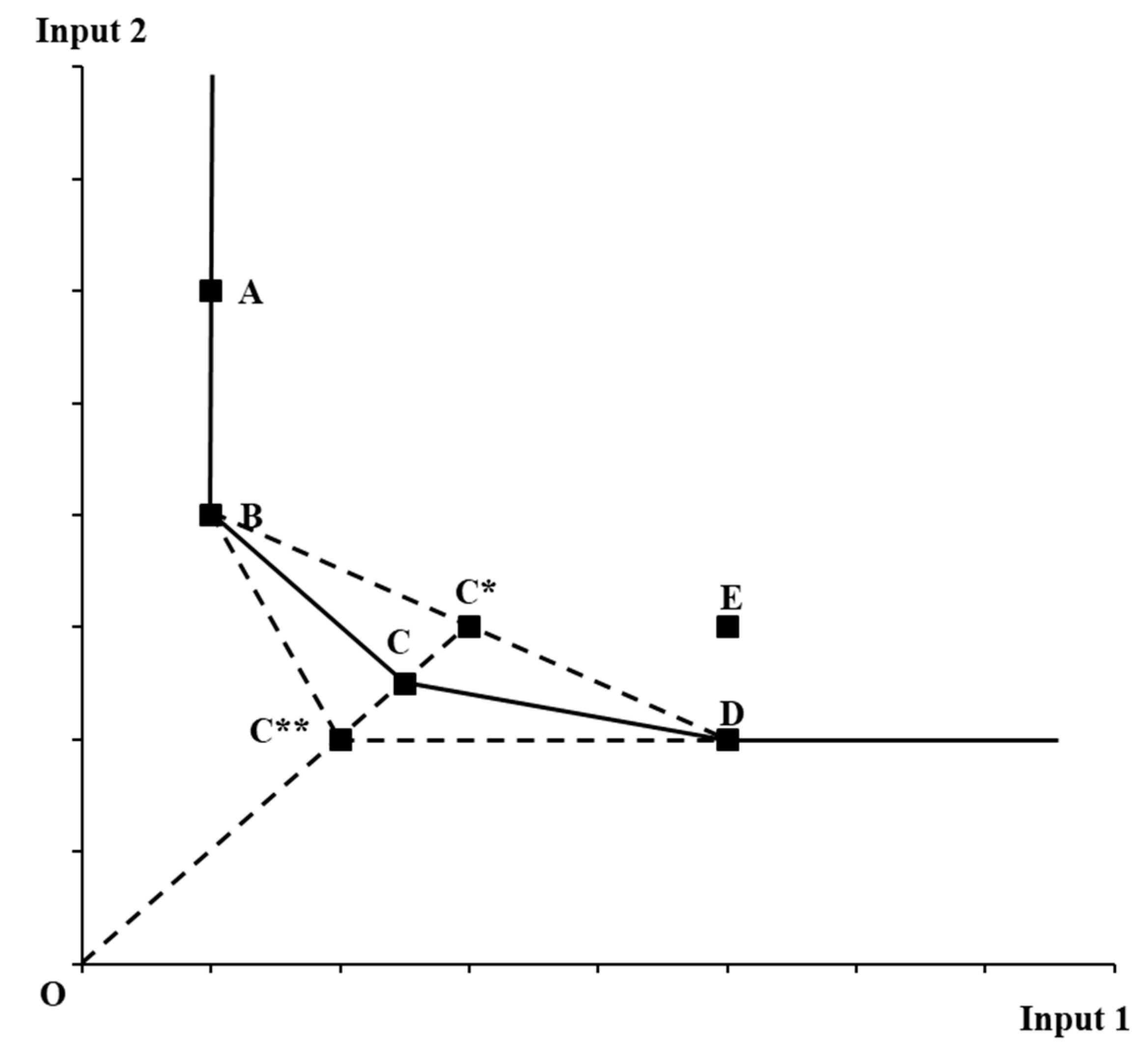

Figure 1 explains the concept of SE. Of the three DMUs B, C, and D, if we assume that only DMU B and DMU D exist and DMU C does not, the frontier line becomes segment BD. However, because DMU C does exist, the frontier line changes from segment BD to segment BCD. That is, DMU C caused the frontier line to change by the distance of CC*. The SE method adds CC*, the margin of efficiency change, and measures the ratio of OC*/OC as the efficiency of DMU C. If DMU-C** exists instead of DMU-C, the frontier line changes from the segment BD to the segment BC**D. In this case, the DEA-SE value becomes the distance of segment OC* divided by segment OC**. This gives efficient DMUs a value of 1 or more, and makes it possible to determine the ranking of efficient DMUs.
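The geometric idea can be checked numerically with a small sketch. It assumes that Figure 1 is drawn in input space (two inputs per unit of output); the coordinates of B, C, D, and an extra inefficient unit E, as well as the function name, are hypothetical. The only change from an ordinary CRS envelopment program is that the DMU under evaluation is removed from the reference set.

```python
import numpy as np
from scipy.optimize import linprog

def super_efficiency_crs(X, Y, o):
    """Input-oriented CRS super-efficiency of DMU o.

    Same linear program as ordinary DEA, except that DMU o is excluded
    from the reference set, so an efficient DMU can score above 1.
    """
    keep = [j for j in range(X.shape[0]) if j != o]
    Xr, Yr = X[keep], Y[keep]
    n, m = Xr.shape
    s = Yr.shape[1]
    c = np.zeros(n + 1)
    c[0] = 1.0  # minimise theta
    A_in = np.hstack([-X[o].reshape(m, 1), Xr.T])
    A_out = np.hstack([np.zeros((s, 1)), -Yr.T])
    res = linprog(c,
                  A_ub=np.vstack([A_in, A_out]),
                  b_ub=np.concatenate([np.zeros(m), -Y[o]]),
                  bounds=[(0, None)] * (n + 1),
                  method="highs")
    return res.fun if res.success else float("nan")

# Hypothetical coordinates: inputs per unit of output.
X = np.array([[1.0, 4.0], [2.0, 2.0], [4.0, 1.6], [3.0, 3.0]])  # B, C, D, E
Y = np.ones((4, 1))
se = [super_efficiency_crs(X, Y, o) for o in range(len(X))]
for name, sc in zip("BCDE", se):
    print(name, round(sc, 4))
```

With C removed, the frontier falls back to segment BD, and the ray through C meets it at C* = (8/3, 8/3), so the score of C is OC*/OC = 4/3. The three efficient units now receive distinct scores and can be ranked, while the inefficient unit E keeps its ordinary score.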

In this study, the DEA-SE CRS input-oriented model by Seiford and Zhu [23] is used to analyze the efficiency of energy projects. The input-oriented model analyzes how efficiently input is used to produce output, and the institute makes decisions on the utilization of its limited resources as input based on such analysis. CRS was assumed on the premise that doubling a project's input would double its output.

The DEA-SE model used in this study is delineated as follows.

DEA super-efficiency CRS [23] (VRS) input-oriented model:
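In its standard envelopment form, the super-efficiency model is an input-oriented DEA program in which the DMU under evaluation, $o$, is excluded from the reference set. The notation below is the conventional one and is an assumption; it may differ from the symbols of the original equation. Here $x_{ij}$ and $y_{rj}$ are the $i$-th input and $r$-th output of DMU $j$, and $\theta^{SE}$ is the super-efficiency score.

```latex
\begin{aligned}
\min_{\theta^{SE},\,\lambda} \quad & \theta^{SE} \\
\text{s.t.} \quad
  & \sum_{j=1,\, j \ne o}^{n} \lambda_j x_{ij} \le \theta^{SE} x_{io}, && i = 1,\dots,m, \\
  & \sum_{j=1,\, j \ne o}^{n} \lambda_j y_{rj} \ge y_{ro},             && r = 1,\dots,s, \\
  & \lambda_j \ge 0,                                                   && j \ne o, \\
  & \sum_{j=1,\, j \ne o}^{n} \lambda_j = 1 \quad \text{(VRS only; omitted under CRS)}.
\end{aligned}
```

Because DMU $o$ can no longer serve as its own benchmark, an efficient DMU obtains $\theta^{SE} \ge 1$, while the score of an inefficient DMU is unchanged.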

2.4. Variables

The input variable takes into account not only research funding but also research personnel and the research period, and the output variable encompasses royalty income, which has recently emerged as an important factor as a research project’s output. In short, the DEA-SE method takes research funding, research personnel, and research period as input variables, and the paper score, patent score, technology transfer score, and royalty income as output variables.

2.4.1. Input Variables

Like any other organization, the research organization uses such representative economic resources as capital and labor to produce research output. At present, however, research funding is the only variable being used to measure performance. This study saw labor, represented as the number of research personnel, and time, represented as the period of research, as scarce resources of sorts and added them to the input variables. Research personnel and research period are finite resources that cannot be used in infinite amounts. Additionally, such resources are frequently accompanied by the concept of opportunity cost. In carrying out energy research projects, the participation rate and period of research are limited by the conditions of the research institute. Because research is performed within this limited environment, some projects must inevitably be given up, and such factors as research funding, research personnel, and research period are generally regarded as having paramount importance in implementing a research project. Therefore, in this study, research period is measured as months, and research personnel is measured as the number of people participating in a given project.

2.4.2. Output Variables

For the weighting method, three types of output are used to measure performance. Each of these three encompasses a number of subordinate outputs. These subordinate outputs are multiplied by the corresponding weight value assigned by the institute, and the sum of these scores becomes the performance score for the output type in question.

The paper score is composed largely of the quality of output and the regional characteristics of the journal. Accordingly, published papers are divided into SCI/SSCI, SCIE, international/domestic publication papers, and international/domestic conference proceedings, and multiplied by the weight value for the corresponding category to yield the paper score.

The patent score is divided into international and domestic registrations. Each category of output is multiplied by its given weight value to yield the overall patent score.

The technology transfer score only has a single subordinate measure of performance: the number of transfers. Hence, weight values assigned to the number of technology transfers are applied in calculating the overall score.

In addition to these three existing performance variables, this study introduces technology transfer-related income, that is, royalty income. Royalty income is currently used as a key performance variable in evaluating the entire institute rather than individual projects. This study, however, introduces the royalties attributable to specific projects as an output variable in the evaluation of individual energy research projects. The weighting scores of the output variables for the weighting method are shown in Table 1.

2.4.3. Data Collection

The following criteria were applied in collecting data for the empirical analysis.

First, all energy research projects were classified according to their time of completion. This is because at the institute, policy performance evaluations for energy research projects are undertaken by year according to the completion date of the projects in question. Second, projects with a total budget under 10,000 US$ were excluded from consideration. Such projects mostly concern testing or energy-related events and thus are not intended purely for research. Third, this study used only projects completed in 2007 or earlier. The reason is that the institute's data were collected at the end of 2010, and performance related to SCI, SSCI, or patent registration has a lengthy gestation period of a year or more. Accordingly, data on 874 projects covering the period 2005–2007 were collected. These 874 samples were subjected to a regression analysis to examine the relationship between input and output variables.

To analyze the differences between the weighting method and the SE method, a case analysis was carried out for the 27 energy research projects on energy materials and 293 energy research projects in the entire research division completed in 2007.

These variables are summarized in Table 2.

2.4.4. Regression Analysis

In many cases, output is predetermined by policy. The types of output currently used for performance evaluation are papers, patents, and technology transfers. These are the factors currently regarded as the most critical for performance evaluation at the institute. In particular, royalty income is a measure of performance that is being actively promoted by policy. Although it is not yet in widespread use due to the difficulty of measurement, once this problem is resolved, royalty income may become more generally used to measure performance at the institute.

In performance evaluations under the weighting method, only a single input variable and a single output variable are used. Specifically, “research funding” is used for input and “performance score of research outputs” is used as the measure for output. This is due to several reasons, including the following.

First, at present, the evaluation method adopted at the national energy institute in Korea cannot use measurements based on disparate criteria without converting or adjusting them in some way. Output variables currently in use at the national energy institute in Korea, such as papers, patents, and technology transfers, can all be measured by counting their number. Hence, the performance score can be derived simply by multiplying the number of papers, patents, and technology transfers by the relevant weight value. Likewise, research funding as the sole input variable used for evaluation can be applied directly without processing.

Second, it is impossible to ascertain the degree of impact that input has on output. However, research funding, as an input factor, is judged to be the most important and can reasonably be expected to affect output. On the other hand, other input variables, such as the number of participating personnel and the period of research, while thought to affect output, have little statistical evidence to support their use.

Third, because DEA is not a statistical method of analysis, particular circumspection is needed in selecting input and output variables. If the wrong variables are used as input or output, their impact will be directly reflected in the evaluation method and this can lead to completely divergent results.

Therefore, this study addressed these problems by performing a regression analysis on new input variables, investigating the correlations among them, and selecting the variables that are statistically significant.

For this purpose, the study employed regression analysis, as shown in Equation (4). The regression analysis was carried out in three stages, represented by Models 1 to 3. First, in Model 1, the relationship between the performance score and research funding, which are, respectively, the output and input currently used at the institute, was examined. Next, Model 2 was Model 1 with the addition of research personnel. Lastly, Model 3 was Model 2 with the addition of the research period. The relationship between the input and output was examined using the three models.
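The three-stage procedure can be sketched as a sequence of nested ordinary least squares fits. The data below are synthetic and generated only for illustration (the sample size of 874 matches the text, but none of the values come from the institute), the variable and function names are hypothetical, and explanatory power is assumed here to mean the plain coefficient of determination.

```python
import numpy as np

def ols_r2(X, y):
    """Fit y = b0 + X b by least squares; return (coefficients, R^2)."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    ss_res = float(resid @ resid)
    ss_tot = float(((y - y.mean()) ** 2).sum())
    return beta, 1.0 - ss_res / ss_tot

rng = np.random.default_rng(0)
n = 874  # same sample size as in the text; the values themselves are synthetic
fund = rng.gamma(2.0, 100.0, n)                     # research funding
personnel = 2 + 0.02 * fund + rng.normal(0, 2, n)   # research personnel
period = rng.uniform(6, 36, n)                      # research period (months)
score = 5 + 0.05 * fund + 0.8 * personnel + 0.2 * period + rng.normal(0, 20, n)

models = {
    "Model 1": [fund],
    "Model 2": [fund, personnel],
    "Model 3": [fund, personnel, period],
}
r2 = {}
for name, cols in models.items():
    _, r2[name] = ols_r2(np.column_stack(cols), score)
    print(name, round(r2[name], 3))
```

Because the models are nested, the coefficient of determination cannot decrease from Model 1 to Model 3; what matters in practice is whether the added coefficients are statistically significant.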

The regression analysis model that incorporates these variables is delineated as follows.

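Simple regression model between inputs and outputs:

$$PS_i = \beta_0 + \beta_1 RF_i + \beta_2 RP_i + \beta_3 RT_i + \varepsilon_i \qquad (4)$$

where $PS_i$ is the performance score of project $i$, $RF_i$ is its research funding, $RP_i$ is its number of research personnel, $RT_i$ is its research period in months, and $\varepsilon_i$ is the error term. Model 1 includes only $RF_i$, Model 2 adds $RP_i$, and Model 3 adds $RT_i$.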

2.5. Research Procedure

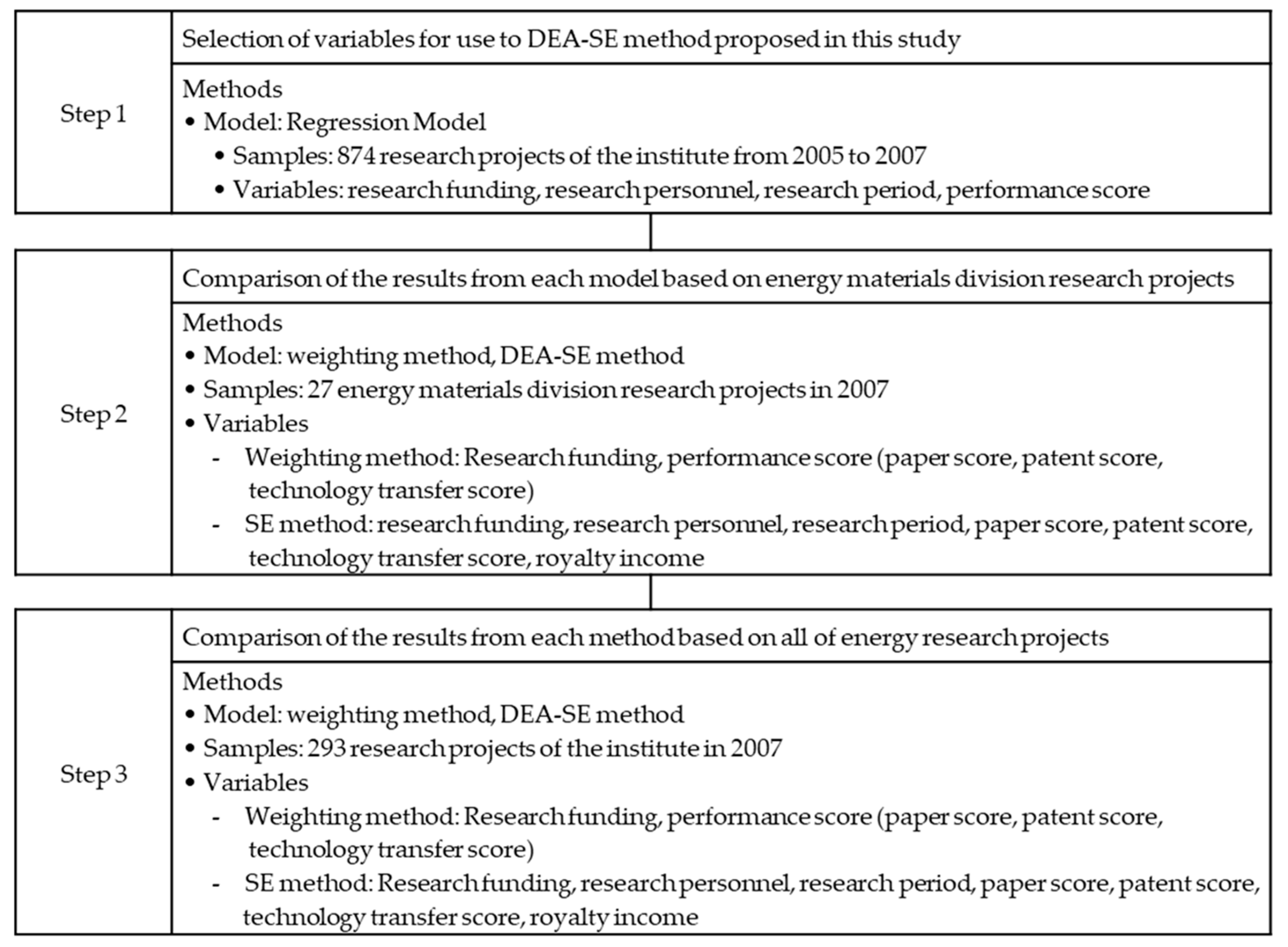

This study was carried out as shown in Figure 2.

First, regression analysis was performed to investigate correlations between input variables and performance score using 874 research projects of the institute from 2005 to 2007.

Next, the weighting method currently in use was applied to rank the performance of the 27 research projects completed by the energy materials division in 2007. Then, the SE method proposed in this study was applied to the same 27 research projects, and the results were compared with those of the weighting method.

Lastly, the effects of the differences between the two methods on the performance of the 8 research divisions were compared and analyzed using the 293 research projects completed at the institute in 2007.

3. Results of Empirical Analysis

3.1. Results of Regression Analysis for DEA-SE Method

A total of 874 projects were used in the regression analysis for the DEA-SE method. The descriptive statistics for this sample are shown in Table 3 below.

Table 4 shows the correlations among the variables used in this study. Multicollinearity, which can affect the analytical results, was not observed. The highest correlation, between research funding and research personnel, was 0.554 and was statistically significant at the 1% level. Given these figures, no significant problem was found among the variables.

Because the DEA-SE analysis is not a statistical analysis method, it requires meticulous and precise assumptions. In this study, the correlations were analyzed and only those variables shown to have a statistically significant effect on output were used as input variables.

Table 5 shows the results of regression analysis.

As shown in Table 5, the coefficients of the independent variables in Models 1, 2, and 3 were significant at the 1% level. This shows that not only research funding but also the number of research personnel and the research period affect the output.

In addition, the explanatory power of the model increased as variables were added. In Model 2, where the number of research personnel was added, the explanatory power increased by about 0.014, from 0.121 to 0.135. In Model 3, where the research period was added to Model 2, it increased by about 0.009, from 0.135 to 0.144.

This result shows that, in addition to research funding, the number of research personnel and the research period, which have been neglected until now, are critical variables with regard to the output. Therefore, in the present study, the DEA-SE analysis was performed using research funding, the number of research personnel, and the research period as input variables.

In the regression analysis, the variance inflation factor (VIF) values were between 1.00 and 1.46, indicating that multicollinearity among the independent variables is not a problem. The Durbin–Watson statistic was between 1.59 and 1.62, indicating that autocorrelation does not pose a problem in the regression models.
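Both diagnostics follow directly from their textbook definitions, as the sketch below illustrates. The function names and the small demonstration arrays are hypothetical and unrelated to the study's data: the VIF of a regressor is 1/(1 − R²) from regressing it on the other regressors, and the Durbin–Watson statistic is the sum of squared successive residual differences divided by the sum of squared residuals.

```python
import numpy as np

def vif(X):
    """VIF of each column of X: 1 / (1 - R_k^2), where R_k^2 comes from
    regressing column k on the remaining columns plus an intercept."""
    out = []
    for k in range(X.shape[1]):
        y = X[:, k]
        A = np.column_stack([np.ones(len(y)), np.delete(X, k, axis=1)])
        beta, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ beta
        r2 = 1.0 - float(resid @ resid) / float(((y - y.mean()) ** 2).sum())
        out.append(1.0 / (1.0 - r2))
    return out

def durbin_watson(resid):
    """Sum of squared successive differences over the sum of squares."""
    resid = np.asarray(resid, dtype=float)
    return float((np.diff(resid) ** 2).sum() / (resid ** 2).sum())

# Two uncorrelated regressors: each VIF is at its minimum value of 1.
X_demo = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
vif_demo = vif(X_demo)
# Perfectly alternating residuals illustrate negative autocorrelation.
dw_demo = durbin_watson([1.0, -1.0, 1.0, -1.0])
print(vif_demo, dw_demo)
```

A VIF close to 1 means a regressor is nearly uncorrelated with the others, and a Durbin–Watson value near 2 suggests no first-order autocorrelation.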

3.2. Results of Energy Materials Division

Table 6 lists the evaluation scores for the DMUs, which are the energy research projects in the energy materials division completed in 2007. The weighting method’s evaluation score is derived by dividing the sum of the paper, patent, and technology transfer scores by the research funding and converting the result to a figure per 100,000 US$ of research funding.

The weighting method’s performance evaluation scores of DMUs range between 0 and 110 points. Using these scores to rank the DMUs results in the highest ranking being given to DMU-1, whose performance score is 110 points. The lowest performance was shown by DMU-10, 11, 16, 25, and 26 with evaluation scores of 0; these ranked at the bottom. However, even among those with scores of 0, DMUs that used the least amount of resources were ranked higher, showing more efficient use of the limited resources available within the organization. Conversely, DMU-11, which scored 0 points despite having used the largest amount of resources, was assigned the lowest ranking.

In the SE method results, scores of the 27 DMUs range from 0 to 4.178. There were four DMUs with efficiencies greater than 1. DMU-1, with an efficiency score of 4.178, was ranked first, followed by DMU-8 (2.562), DMU-6 (1.259), and DMU-5 (1.231). The lowest rankings were assigned to DMU-10, 11, 16, 25, and 26. DMU-11 ranked lowest among the DMUs with an efficiency score of 0.

In the comparison analysis results of the weighting method and the DEA-SE method, a positive number indicates a higher ranking for the DEA-SE method than for the weighting method and a negative number indicates a lower ranking for the DEA-SE method than for the weighting method. Among the 27 DMUs, 11 DMUs showed discrepancies stemming from differences between the DEA-SE method and the weighting method. The sum of the absolute values of differences between the DEA-SE method and the weighting method is 28.
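The rank comparison just described amounts to ranking the DMUs under each method and subtracting. The sketch below uses five hypothetical DMUs with made-up scores (not the values in Table 6); breaking ties by original order is an assumption for illustration, not the institute's tie-break rule.

```python
def rank_desc(scores):
    """Rank 1 = highest score; ties are broken by original order."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0] * len(scores)
    for pos, i in enumerate(order, start=1):
        ranks[i] = pos
    return ranks

# Hypothetical scores for five DMUs under each method.
weighting = [50.0, 40.0, 30.0, 20.0, 0.0]
dea_se = [3.0, 0.8, 1.2, 2.5, 0.0]

w_rank = rank_desc(weighting)
s_rank = rank_desc(dea_se)

# Positive = ranked higher under DEA-SE than under the weighting method.
diff = [w - s for w, s in zip(w_rank, s_rank)]
changed = sum(1 for d in diff if d != 0)
total_abs = sum(abs(d) for d in diff)
print(diff, changed, total_abs)
```

The count of non-zero differences and the sum of their absolute values correspond to the two summary figures reported for the 27 DMUs.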

In particular, in the case of DMU-1, 6, 7, and 8, all of which show patent and/or technology transfer performance, DMU-6, 7, and 8 were ranked higher with the DEA-SE method than with the weighting method. DMU-1 retained its top ranking with the DEA-SE method, but with a relatively greater margin of difference from its immediate successor than was seen with the weighting method. Consequently, it was possible to verify the effect of output related to patents and technology transfers, which was obscured in the weighting method due to its inclusion in a single unified output score. Such a result can have a valid effect on the future production of research results, by promoting more diversified output through the prioritization of DMUs with higher efficiency in distributing the institute’s finite resources.

3.3. Analytical Results by Research Division

Table 7 shows the efficiency evaluation rankings of the research divisions according to both the weighting method and the DEA-SE method. When using the weighting method, the energy materials division was ranked first with a score of 24.7752 and the energy policy division was ranked eighth with a score of 1.6820. When using the DEA-SE method, the converting and storage division was ranked first with a score of 0.4767 and the energy policy division was eighth with a score of 0.0403.

The energy policy division was ranked eighth under both methods and thus was shown to be the least efficient. However, the energy policy division, by its very nature, is not very conducive to the production of tangible results, such as papers, patents, and technology transfers. The energy materials division was ranked first using the weighting method but only third using the DEA-SE method. Instead, the converting and storage division, which ranked fourth in the weighting method, took the top ranking in the DEA-SE method.

Differences in the results obtained from the weighting method and the DEA-SE method can be inferred from the analytical results shown in Table 8 and Table 9.

Table 8 shows the average score per project of each performance score by division for every 100,000 US$ in funding, and Table 9 presents the average score per project of each performance score by division. The weighting method uses the score for every 100,000 US$ in funding, as shown in Table 8, while the DEA-SE method uses the performance score itself, as shown in Table 9, without any conversion.

As can be seen in Table 8, with regard to energy research projects, the entire institute’s average scores per 100,000 US$ of research funding were 10.88 points for papers, 0.91 for patents, and 0.03 for technology transfers. This result shows that the impact of the patent score or technology transfer score on the overall performance score was minimal, and that skewing of the output has occurred. Using the weighting method, the energy materials division was assigned the highest ranking among the various research divisions. Because the average paper score per 100,000 US$ of funding for this division is 22.92, and thus higher than in the other divisions, the performance score derived by the weighting method, which combines paper, patent, and technology transfer scores into one, was greatly affected by the paper score. The influence of paper scores can be seen from how the paper score ranking is reflected in the weighting method ranking, except in the converting and storage division.
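The dominance of the paper score can be made concrete with a short calculation. It uses the institute-wide averages reported above and assumes that the overall weighting-method score is the simple sum of the three component scores. The variable names are illustrative.

```python
# Institute-wide average scores per 100,000 US$ of research funding.
avg_per_100k_usd = {
    "papers": 10.88,
    "patents": 0.91,
    "technology_transfers": 0.03,
}

# Assumption: the overall score is the sum of the three component scores.
total = sum(avg_per_100k_usd.values())
shares = {name: value / total for name, value in avg_per_100k_usd.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%} of the combined score")
```

Under this assumption, papers account for roughly 92% of the combined score, so a division's rank under the weighting method is determined almost entirely by its paper score.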

When the DEA-SE method was applied, the converting and storage division took the topmost ranking due to the marked ascendency of its patent score, together with the relative superiority of its technology transfer score, as shown in Table 9.

As shown in Table 9, the converting and storage division rose three places using the DEA-SE method, and had better performance in the patent score and technology transfer score compared to the other divisions. The energy environment and energy materials divisions, which fell in ranking, showed better performance in the paper score but were weaker in the patent score and technology transfer score. The new energy division had a higher paper score than the converting and storage division but recorded lower patent and technology transfer scores, thus ranking second under the DEA-SE method.

Therefore, these results show the skewing of output caused by the output preference and the weight setting. On the other hand, evaluation based on the DEA-SE method reflected the characteristics of each research division, and showed that a higher ranking may be achieved by focusing on output that cannot be obtained in other divisions. From the research institute’s perspective, this approach offers the advantage of diversifying output. Additionally, such results indicate that the use of the DEA-SE method will contribute to the balanced production of output.

Table 10 verifies the differences in the rankings in the weighting method and Table 11 verifies the differences in the rankings in the DEA-SE method. The analytical results shown in these tables identify the variables that affect the rankings in the individual methods. With regard to the weighting method rankings and the DEA-SE method rankings, the 94 DMUs having a performance score of zero were removed, and the other 199 samples were used in the analysis. After removing the median value, the lower 99 samples (Group 1) and the higher 99 samples (Group 2) were compared using the Mann–Whitney U test, which is a nonparametric method for comparing two samples.
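The two-group comparison can be sketched with a plain-Python Mann-Whitney U test. This is a minimal illustration using the normal approximation without tie or continuity corrections, not the procedure the study necessarily ran. In practice a library routine such as `scipy.stats.mannwhitneyu` would normally be used. The group values are hypothetical.

```python
import math


def mann_whitney_u(group1, group2):
    """Two-sided Mann-Whitney U test (normal approximation).

    Tied values receive average ranks. The variance ignores tie and
    continuity corrections, so the p-value is only approximate.
    """
    n1, n2 = len(group1), len(group2)
    pooled = sorted(
        (value, label)
        for label, values in enumerate((group1, group2))
        for value in values
    )
    rank_sum1 = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        rank_sum1 += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    u1 = rank_sum1 - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return u, p_value


# Hypothetical paper scores for the lower-ranked and higher-ranked groups.
group1_scores = [1.0, 2.0, 3.0, 4.0, 5.0]
group2_scores = [6.0, 7.0, 8.0, 9.0, 10.0]

u_stat, p_value = mann_whitney_u(group1_scores, group2_scores)
print(u_stat, p_value)
```

A small p-value for a variable suggests that its distribution differs between the low-ranked and high-ranked groups, which is how the variables affecting the rankings are identified.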

The results of the ranking difference verification by the weighting method, shown in Table 10, indicate that the factors affecting the rankings include the research fund, the number of researchers, and the paper score at a significance level of 1% and the patent score at a significance level of 5%. Therefore, the input variables had a greater effect when the research fund and the number of researchers were smaller in the high-ranked Group 2, while the output variables had a greater effect on the ranking when the paper score and the patent score were higher.

The interpretation of the results shows that a higher performance is obtained when a smaller amount of research fund is used and a higher paper score is achieved.

However, as shown in Table 11, when the DEA-SE method is used, the weight of the individual variables is automatically calculated. Therefore, the actual factors affecting the ranking difference between the two groups are not the input variables but the output variables. Notably, in the DEA-SE method, the input variables did not have an effect, but all four output variables showed an effect at a significance level of 1%. In addition, technology transfer, which did not show an effect in the weighting method, and the additional variable of royalty income both showed an effect on the ranking in the DEA-SE method.

Therefore, the ranking was not affected by the input variables, such as the research fund, the number of researchers, and the research period, but the overall accomplishment was increased as research accomplishments were obtained from various sections; in other words, as more research accomplishments that were not produced from other sections were obtained.

The DEA-SE method gave more differences in the paper score and the patent score. This means that maintaining the same ranking in the DEA-SE method as in the weighting method requires more accomplishments. Therefore, the DEA-SE method may be used to prevent the skewing of outputs and to provide a strong incentive for the production of various outputs.

4. Conclusions and Implications

This study proposed DEA-SE as a type of efficiency analysis methodology suited for evaluating the performance of energy research projects. To evaluate this, the weighting method and the DEA-SE method were employed to assess the efficiency of 293 energy research projects. The results obtained through the various methods were then compared and the differences were analyzed.

The findings may be summarized as follows.

First, the results showed that there are many ranking differences between the weighting method and the DEA-SE method. In the analysis of 27 energy materials projects, the absolute ranking differences between the weighting method and the DEA-SE method summed to 28. Additionally, the results by research division showed a shift in ranking of three places for converting and storage, one for energy environment, and two for energy materials.

Second, evaluation based on the DEA-SE method showed that a higher ranking may be achieved by focusing on output that cannot be obtained in other divisions. In the comparison analysis of 27 DMUs in the energy materials division, among DMU-1, 6, 7, and 8, which have a patent score, technology transfer score, and royalty income higher than 0, DMU-6, 7, and 8 were ranked higher with the DEA-SE method than with the weighting method, while DMU-1 retained its top ranking. In the analysis of 293 research projects by research division, the energy materials division was ranked first using the weighting method but only third using the DEA-SE method. Instead, the converting and storage division, which ranked fourth in the weighting method, took the top ranking in the DEA-SE method. The converting and storage division had better performance in the patent score and technology transfer score compared to the other divisions. The energy environment and energy materials divisions showed better performance in the paper score but were weaker in the patent score and technology transfer score.

These results indicate that there is skewing among the subordinate variables, such as the paper score, patent score, and technology transfer score, that compose the performance score in the weighting method. Accordingly, the most prominent ranking increases in the DEA-SE method occurred for projects whose patent and/or technology transfer scores were high relative to their paper scores. Because the weighting method evaluates performance using the sum of all three performance variables, the impact of patent or technology transfer scores on the overall score remained minimal. Such results demonstrate the problems inherent in the uniform weighting system of the research division.

In conclusion, evaluating research performance using a single total score of weighted values leads to skewing toward output types that are more easily achievable. To resolve this problem, it is necessary to use the DEA-SE method in performance evaluation. When the DEA-SE method was used, DMUs with output that cannot be obtained in other divisions, such as patents, technology transfers, and royalty income, achieved a higher ranking than under the weighting method. Therefore, if the DEA-SE method is employed in DMU performance evaluation, the various DMUs will strive harder to produce output other than published papers. Additionally, from the research institute’s perspective, this approach offers the advantage of diversifying output. Accordingly, the DEA-SE method is more appropriate for a balanced evaluation of research project performance.

This study was a case study of the performance evaluation of energy research projects, and it proposes the DEA-SE method. The DEA-SE method is commonly applied to DMU performance evaluation. However, compared to the general DEA-CRS (VRS) method, the DEA-SE method has not been extensively studied. The significance of this study lies first in it being one of the few that have conducted performance evaluation using actual data. Second, it employed regression analysis for the application of DEA-SE. Third, it proposed the DEA-SE method as an alternative to the weighting method. Fourth, it presented empirical analysis results for the differences between the weighting method and the DEA-SE method. As such, it made significant contributions in terms of policy development.

Based on the above research results, this study proposes three implications as follows.

First, the DEA-SE method makes it possible to derive ranking information for DMUs with inputs and outputs that have diverse standards of measurement. With the DEA-SE method, output types that could not be measured in terms of their number, such as the monetary value of technology transfers, can be incorporated. It likewise allows for the use of input types measured in different units, such as research personnel and research period. Therefore, the DEA-SE method will be a viable alternative for organizations that are reluctant to adopt DEA due to policy issues, such as the difficulty of assigning rankings.

Second, using the DEA-SE method, including the DEA method, for performance evaluation can minimize the problems relating to weighting and contribute to the production of more balanced output [15]. There are many difficulties involved in weighting output to induce a balanced mix of output types. The question of how much weight to assign in order to provide incentive for particular types of output harbors such policy risks as opposition from the organization’s members, the need to assign rational weight values, and the possibility of skewing toward other output types. In DEA, each DMU is free to choose any combination of inputs and outputs in order to maximize its relative efficiency [15]. The relative efficiency or the efficiency score is the ratio of the total weighted output to the total weighted input [15]. Therefore, the DEA-SE method does not require the setting of weights between variables having different measurement units. That is, performance evaluators do not have to set weights between variables such as papers and the number of commercialized products. They only have to set weights for quantifications within the same category, such as the SCI score and domestic conference proceeding score within papers.
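The ratio described above can be written out as follows. This is a sketch that assumes the standard constant-returns ratio form of DEA, with the super-efficiency variant obtained by excluding the evaluated DMU from the constraints. The symbols are assumptions introduced here: $x_{ij}$ is input $i$ of DMU $j$, $y_{rj}$ is output $r$ of DMU $j$, $v_i$ and $u_r$ are the weights chosen for the evaluated DMU $o$, and there are $m$ inputs, $s$ outputs, and $n$ DMUs.

```latex
\[
\theta_o \;=\; \max_{u,\,v}\;
\frac{\sum_{r=1}^{s} u_r\, y_{ro}}{\sum_{i=1}^{m} v_i\, x_{io}}
\quad \text{subject to} \quad
\frac{\sum_{r=1}^{s} u_r\, y_{rj}}{\sum_{i=1}^{m} v_i\, x_{ij}} \;\le\; 1
\;\; (j = 1, \dots, n;\; j \neq o),
\qquad u_r \ge 0,\; v_i \ge 0 .
\]
```

In the standard model, the constraint also covers $j = o$, which caps every score at 1. Dropping that one constraint is what allows efficient DMUs to score above 1 and therefore be ranked against each other. Because the weights $u_r$ and $v_i$ are chosen separately for each DMU, the evaluator does not fix weights across variables with different units.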

Third, the DEA-SE method proposed in this study is better suited to the evaluation of research performance because it uses a more diversified range of input and output measurements. It takes into account variables heretofore excluded from performance evaluation, such as royalty income, research period, and research personnel. In the case of the DEA-SE model proposed by this study, the input variables cover not only research fund but also research personnel and the research period. Project supervisors will attempt to reduce research personnel and shorten the research period to achieve a higher DEA-SE performance score. As such, by investing the saved resources elsewhere, the team as a whole will be able to achieve higher efficiency than before [10].

This study has some limitations. It was based on data collected in 2010. The main reason for using past data was the sensitivity of the data, which came from the actual performance evaluation of the institute. The results can be disclosed for the institute as a whole, but detailed results by department were difficult to disclose to the public immediately. Data by department can be disclosed now that some time has passed and changes have been made to department names and members, which lessens the impact of making the data public. Since the proposed model is already in wide use, it is convenient to apply. However, it is limited in reflecting the unique characteristics of the institute. In future work, the proposed model can be further developed into a rational model that reflects the institute’s unique characteristics. Methods other than DEA should also be explored to allow for rational performance evaluation that takes into account characteristics unique to the institute.