Abstract

Data centers (DCs) have become increasingly important in recent years, and highly efficient and reliable operation and management of DCs is now required. The heat density of racks and information and communication technology (ICT) equipment is predicted to rise further, so maintaining an appropriate temperature environment in the server room, where large amounts of heat are generated, is crucial for ensuring continuous service. It is especially important to predict changes in the rack intake temperature when the computer room air conditioner (CRAC) is shut down, which can cause a rapid rise in temperature. However, it is quite difficult to predict the rack temperature accurately, which in turn makes it difficult to determine the impact on service in advance. In this research, we propose a model that predicts the rack intake temperature after the CRAC is shut down. Specifically, we use machine learning to construct a gradient boosting decision tree model with data from the CRAC, ICT equipment, and rack intake temperature. Experimental results demonstrate that the proposed method achieves high prediction accuracy: the coefficient of determination was 0.90 and the root mean square error (RMSE) was 0.54. Our model makes it possible to evaluate the impact on service and determine whether action to maintain the temperature environment is required. We also clarify the effect of the explanatory variables and the training data on the model accuracy.

1. Introduction

Information and communication technology (ICT) systems have become an important tool for supporting the infrastructure of social life. Consequently, the role of data centers (DCs) in managing information is becoming increasingly important [1]. However, the development of cloud computing, the virtualization of ICT, the trend toward higher heat density of ICT equipment, and the variety of cooling methods have led to complicated environments in which various factors must be considered [2,3]. Even in such complicated environments, more reliable DC operation is required. In particular, regarding the temperature condition of the server room, there is a recommended temperature for the intake air of ICT devices [4]. When temperature management is not done properly, hot spots may occur, resulting in poor service quality and service interruption. Therefore, the DC operator and a DC user often have a service level agreement (SLA) that stipulates that the rack intake temperature be kept below a certain level, and thus there is a high demand for proper temperature management [4]. However, as new technologies are introduced, appropriate temperature management becomes difficult in complicated environments that change dynamically in both space and time. In particular, a sudden rise in temperature when the computer room air conditioner (CRAC) is shut down has a great impact on the ICT equipment in the server room and also affects the continuity of the DC service. The importance of temperature management is also evident from the demand for measures that suppress such sudden rises, such as aisle containment and thermal storage systems [5,6,7,8,9]. Therefore, we focused on technology that predicts the rack intake temperature in advance with high accuracy, which enables appropriate temperature management in the server room.

There are various approaches to predicting temperature. One is to calculate the temperature at a representative point by means of a heat balance equation. Although this approach can predict representative points, it has difficulty calculating the intake temperature of individual racks. Another approach is to use a transient system simulation tool and a computational fluid dynamics (CFD) tool [10,11,12,13,14,15,16]. While this approach can simulate the temperature in the server room, it is difficult to apply to many rooms because the modeling and calculation time would be enormous. Furthermore, due to variations in the characteristics of each server room, the simulation results and the actual measurements often deviate from each other, and tuning is thus required for each model.
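As an illustration of the heat balance approach mentioned above, a minimal lumped form can be written as follows. This is a generic textbook-style sketch, not an equation taken from this study; all symbols are assumptions introduced here: $T$ is the representative room temperature, $C$ is an effective thermal capacity of the room air and equipment, $Q_{\mathrm{ICT}}$ is the heat generated by the ICT equipment, and $Q_{\mathrm{CRAC}}$ is the cooling capacity of the CRAC.

```latex
\begin{align}
  C \,\frac{dT}{dt} &= Q_{\mathrm{ICT}}(t) - Q_{\mathrm{CRAC}}(t), \\
  T(t) &= T(0) + \frac{Q_{\mathrm{ICT}}}{C}\, t
  \qquad \text{(after shutdown, } Q_{\mathrm{CRAC}} = 0,\ Q_{\mathrm{ICT}} \text{ constant)}.
\end{align}
```

Because such a balance yields a single temperature for the whole room, it cannot by itself resolve differences between the intake temperatures of individual racks.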

In recent years, studies on the resistance of ICT equipment to high temperatures have been underway. In this work, however, we aim to manage the room temperature environment so that it satisfies the environmental requirements of existing ICT equipment, and we conduct our research and development with a view to deployment in settings close to the actual operating environment. To address the issues described above, we propose a model that predicts the rack temperature after the CRAC is shut down. Our model is constructed by machine learning with data from the server room and can learn from this data by itself with high accuracy. In this study, as a case study in a server room, we evaluate the prediction performance and clarify the influence of the training data and explanatory variables on the model accuracy.

2. Conditions of Verification Data

2.1. Verification Room

An overview of the verification room is provided in Figure 1 and Table 1. We focus on a server room in which no aisle containment is installed, as the temperature change in this case is faster than that when an aisle containment is installed [7]. We focus on predicting the rack intake temperature after the CRAC is shut down.

Figure 1.

Floor plan of verification room.

Table 1.

Specifications of verification server room and computer room air conditioner (CRAC).

2.2. Verification Data

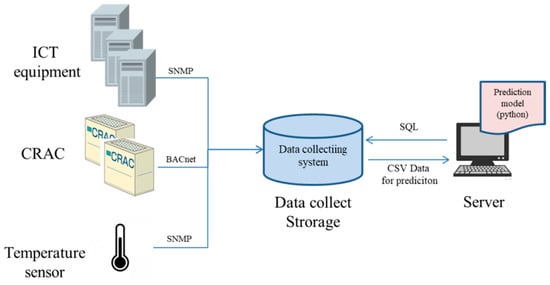

In recent years, Data Center Infrastructure Management (DCIM) systems, which support efficient operation through integrated management of the various facilities and equipment in data centers, have attracted attention [16,17,18]. We use a DCIM system as an onsite data collecting system installed inside the server room (Figure 2). The data collecting system connects to the CRAC, the rack intake temperature sensors, and the rack power distribution units (PDUs) via a local area network (LAN), and obtains and stores the data of these facilities and devices. The data resolution of the CRAC and the temperature sensors is one minute, and that of the rack PDUs is five minutes. A prediction model can utilize any of the data in the data collecting system, but in this study, we use only the rack intake temperature, the power consumption of the ICT equipment, and the cooling capacity of the CRAC as verification data. The rack intake temperature is defined as the value of the temperature sensor at the height of the rack intake surface (1.5 m).

Figure 2.

System configuration.

To construct and evaluate the prediction model, we conducted an experiment in which a CRAC was stopped for n minutes and then restarted. The experiment took place from 15 December 2019 to 28 February 2020 and was conducted a total of 60 times, with four different operation patterns and four CRAC stop times (2, 5, 10 and 20 min). The experimental patterns for each air conditioner are listed in Table 2. In some of the experiments with the 20 min stop pattern, the rack intake temperature exceeded its upper limit (35 °C); in some of these cases, the experiment was stopped after about 15 min.

Table 2.

CRAC operation patterns and stop times.

3. Appearance of Verification Data

3.1. Basic Aggregation of CRAC Data

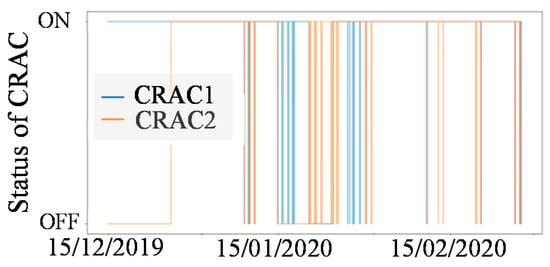

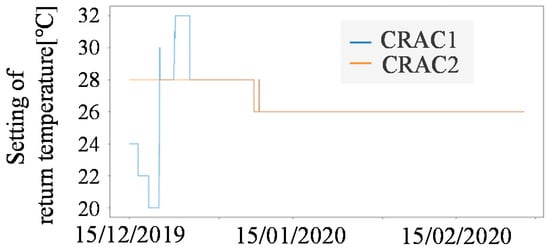

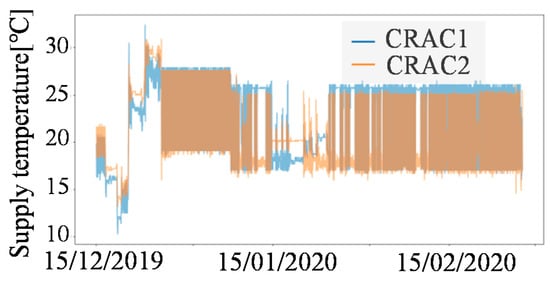

During the experimental period, the return temperature of each CRAC was fixed at 26 °C. In addition, the power consumption and supply temperature of each CRAC changed steadily in accordance with the on/off switching of CRAC1 and CRAC2 (Figure 3, Figure 4, Figure 5 and Figure 6).

Figure 3.

Status of CRAC.

Figure 4.

Setting of return temperature.

Figure 5.

Power consumption of CRAC.

Figure 6.

Supply temperature of CRAC.

3.2. Basic Aggregation of ICT Equipment Power

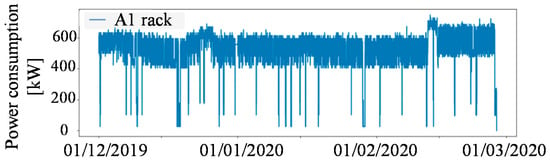

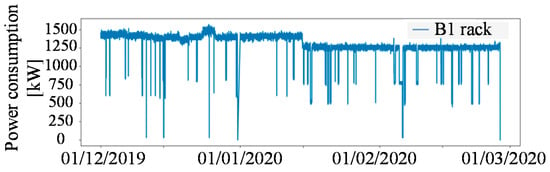

We calculated the power consumption of the ICT equipment for each rack because it is related to the intake temperature of the rack. The amount of rack power consumption varied from rack to rack (Figure 7 and Figure 8). We also calculated the correlation coefficients between the racks within each row; these were low (Table 3), which indicates that the power consumption trends differed from rack to rack. Some of the racks were not used because they had no equipment mounted on them or the mounted equipment was not in operation.

Figure 7.

Power consumption of A1 rack.

Figure 8.

Power consumption of B1 rack.

Table 3.

Range of correlation coefficient in rack row.

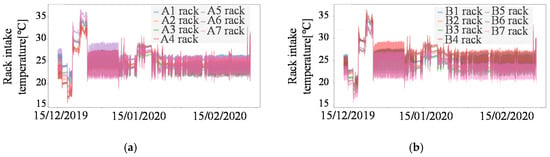

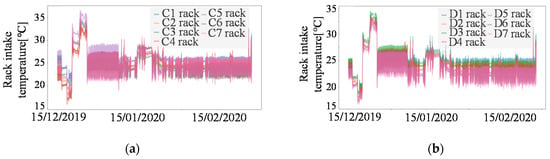

3.3. Basic Aggregation of Rack Intake Temperature

Figure 9 and Figure 10 show the time-series changes of the rack intake temperature of each rack. The temperature trends were similar across racks, but a comparison of the racks within each row shows that the temperature values differed from rack to rack.

Figure 9.

Time-series change of rack intake temperature in each rack row. (a) Rack intake temperature in A rack row. (b) Rack intake temperature in B rack row.

Figure 10.

Time-series change of rack intake temperature in each rack row. (a) Rack intake temperature in C rack row. (b) Rack intake temperature in D rack row.

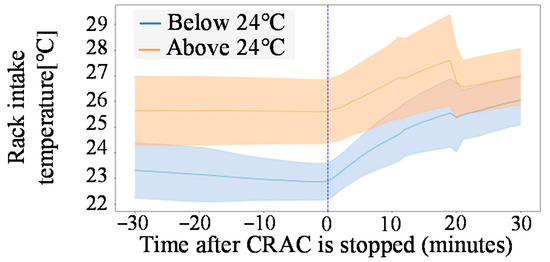

Further, the larger the difference between the outlet temperature of the air conditioner and the rack intake air temperature, the greater the demand for the cooling capacity, and the greater the slope of the increase in the rack intake air temperature after the air conditioner was stopped. As an example, Figure 11 shows the time-series changes of the rack intake temperature when the CRAC return temperature was set to 26 °C and the measured rack intake temperature was classified as either above or below 24 °C. We can see here that the temperature change after the CRAC was shut down depended on the rack intake temperature at the time of stopping. We can also see that the gradient of the temperature rise was larger when the rack intake temperature at the time of stopping was lower than when it was high.

Figure 11.

Time-series changes of rack intake temperature classified as above or below 24 °C (center line: mean; upper line: mean + standard deviation (SD); lower line: mean − SD).

4. Construction of Prediction Model

4.1. Objective of Prediction and Operation Conditions

As the objective variables in this study, we used the time-series changes of the rack intake temperature after the CRAC was stopped. We also examined two different operation conditions: one where the CRAC suddenly stopped while only one CRAC was operating and the other where one CRAC suddenly stopped while two CRACs were operating. The prediction interval was set to one minute, and the model predicted from one minute after the CRAC was stopped to the restart of the CRAC.

From the basic aggregation of the rack intake temperature, we found that the rising gradient of the rack intake temperature depends on the rack intake temperature at the time of stopping. Consequently, if the absolute rack intake air temperature after a shutdown is predicted directly, the prediction accuracy may be poor immediately after the CRAC is stopped. Therefore, we defined the objective variable as the temperature rise relative to the rack intake air temperature at the time the CRAC was stopped.
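The choice of objective variable described above can be sketched as follows. This is a minimal illustration, assuming one-minute readings for a single rack held in a plain list; the function names `temperature_rise` and `restore_absolute` and the `stop_index` argument are hypothetical and are not taken from the study.

```python
def temperature_rise(intake_temps_c, stop_index):
    """Return the rise in rack intake temperature relative to the stop-time value.

    intake_temps_c: one-minute readings for a single rack (deg C).
    stop_index: index of the reading taken when the CRAC stopped (assumed input).
    """
    baseline = intake_temps_c[stop_index]
    # Targets start one minute after the stop, matching the one-minute prediction interval.
    return [t - baseline for t in intake_temps_c[stop_index + 1:]]


def restore_absolute(baseline_c, predicted_rises):
    """Convert predicted rises back to absolute intake temperatures (deg C)."""
    return [baseline_c + rise for rise in predicted_rises]
```

Predicting the rise rather than the absolute value means the stop-time temperature is needed only to convert predictions back to absolute temperatures, for example to compare them with an SLA limit.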

4.2. Method of Prediction Model

In a previous study, we examined a model that predicts the rack intake temperature 30 min ahead in response to changes in the ICT equipment in the server room, and confirmed that highly accurate prediction is possible with two methods: a gradient boosting decision tree (GBDT) and a state space model [19]. Based on those results, we initially considered these two methods as candidates in the present research as well.

The state space model, which is often used for analyzing time-series data, updates the model sequentially according to the state at the previous time step [20]. However, we considered it unsuitable as a model for predicting the temperature after the air conditioner is shut down, for the following reasons.

- Since the prediction for the previous time step is used to compute the next predicted value, errors carry over and the accuracy is likely to decrease. Also, it is difficult to incorporate the explanatory variables.

- The basic aggregation of the rack intake temperature did not confirm a relationship between the objective variable before and after the CRAC shutdown.

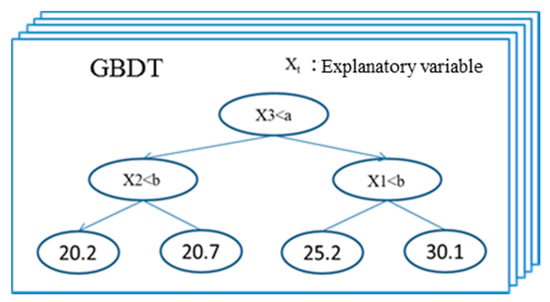

For this reason, we opted to use GBDT, a machine learning method (Figure 12) [21]. The explanatory variables are listed in Table 4. Three hyperparameters, the “number of leaves”, “weight of L2 regularization”, and “number of trees”, were optimized by grid search in Python, and the other parameters were left at their default values.
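To make the roles of the three tuned hyperparameters concrete, the following is a toy GBDT for squared error written in pure Python, together with an exhaustive grid search. It is a sketch under stated assumptions and not the implementation used in this study, which does not name its library: leaf-wise tree growth, the fixed learning rate, the single validation split, and all names (`fit_gbdt`, `grid_search`, `lam`, and so on) are assumptions made for illustration.

```python
import itertools


def _best_split(X, residuals, rows, lam):
    """Return (gain, feature, threshold, left_rows, right_rows), or None if no gain."""
    def score(subset):
        if not subset:
            return 0.0
        g = sum(residuals[i] for i in subset)
        return g * g / (len(subset) + lam)  # L2 weight `lam` damps small leaves

    best = None
    for f in range(len(X[0])):
        values = sorted({X[i][f] for i in rows})
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [i for i in rows if X[i][f] <= thr]
            right = [i for i in rows if X[i][f] > thr]
            gain = score(left) + score(right) - score(rows)
            if gain > 0 and (best is None or gain > best[0]):
                best = (gain, f, thr, left, right)
    return best


def _fit_tree(X, residuals, num_leaves, lam):
    """Grow one tree leaf-wise: always split the leaf with the largest gain."""
    root = {"rows": list(range(len(X)))}
    leaves = [root]
    while len(leaves) < num_leaves:
        splits = [(leaf, _best_split(X, residuals, leaf["rows"], lam)) for leaf in leaves]
        splits = [(leaf, s) for leaf, s in splits if s is not None]
        if not splits:
            break
        leaf, (_, f, thr, left, right) = max(splits, key=lambda item: item[1][0])
        leaf.update(feature=f, threshold=thr, left={"rows": left}, right={"rows": right})
        leaves = [l for l in leaves if l is not leaf] + [leaf["left"], leaf["right"]]
    for leaf in leaves:
        total = sum(residuals[i] for i in leaf["rows"])
        leaf["value"] = total / (len(leaf["rows"]) + lam)  # L2-shrunk leaf value
    return root


def _predict_tree(node, row):
    while "feature" in node:
        branch = "left" if row[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["value"]


def fit_gbdt(X, y, num_leaves=4, lam=1.0, n_trees=50, learning_rate=0.1):
    """Fit a squared-error GBDT; each tree is fitted to the current residuals."""
    base = sum(y) / len(y)
    preds = [base] * len(y)
    trees = []
    for _ in range(n_trees):
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        tree = _fit_tree(X, residuals, num_leaves, lam)
        trees.append(tree)
        preds = [p + learning_rate * _predict_tree(tree, row) for p, row in zip(preds, X)]
    return {"base": base, "trees": trees, "learning_rate": learning_rate}


def predict_gbdt(model, X):
    lr = model["learning_rate"]
    return [model["base"] + lr * sum(_predict_tree(t, row) for t in model["trees"])
            for row in X]


def rmse(y_true, y_pred):
    return (sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)) ** 0.5


def grid_search(X_train, y_train, X_val, y_val, grid):
    """Try every combination in `grid`; keep the one with the lowest validation RMSE."""
    best_score, best_params = None, None
    for num_leaves, lam, n_trees in itertools.product(
            grid["num_leaves"], grid["lam"], grid["n_trees"]):
        model = fit_gbdt(X_train, y_train, num_leaves, lam, n_trees)
        score = rmse(y_val, predict_gbdt(model, X_val))
        if best_score is None or score < best_score:
            best_score = score
            best_params = {"num_leaves": num_leaves, "lam": lam, "n_trees": n_trees}
    return best_score, best_params
```

In practice an established GBDT library would replace the toy learner, and the score inside the search would typically come from cross-validation rather than a single validation split.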

Figure 12.

Image of gradient boosting decision tree (GBDT).

Table 4.

Explanatory variables.

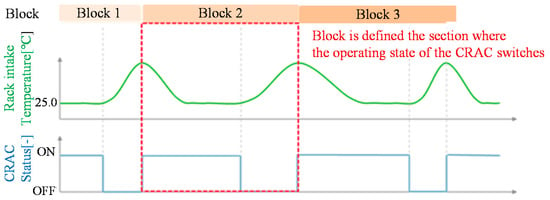

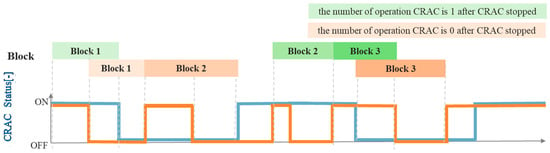

In this study, in order to predict from the time when the CRAC is stopped to the time it starts up again (maximum: 20 min), we define each section delimited by a switch in the operating state of the CRAC as a block, and the blocks generated by each experiment are used for the construction and evaluation of the model. Figure 13 and Figure 14 outline how the blocks are created for each number of operating CRACs.
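One way to derive such blocks from the logged data is sketched below. It assumes that a block is a run of consecutive one-minute samples with an unchanged CRAC operating state, represented here as a hypothetical `(crac1_on, crac2_on)` tuple per minute; the function names are illustrative and the exact block definition used in the study is given in Figure 13 and Figure 14.

```python
def split_into_blocks(states):
    """Split a per-minute CRAC state sequence into runs of constant state.

    states: list of hashable states, e.g. (crac1_on, crac2_on) tuples of 0/1.
    Returns a list of (start, end_exclusive, state) tuples.
    """
    blocks = []
    start = 0
    for i in range(1, len(states) + 1):
        # Close the current block at the end of the data or when the state switches.
        if i == len(states) or states[i] != states[start]:
            blocks.append((start, i, states[start]))
            start = i
    return blocks


def stop_blocks(blocks):
    """Keep blocks that begin with a shutdown (fewer CRACs running than in the previous block)."""
    return [cur for prev, cur in zip(blocks, blocks[1:]) if sum(cur[2]) < sum(prev[2])]
```

Each tuple returned by `stop_blocks` then marks the samples from a shutdown until the next switch of the operating state, which is the interval the model is asked to predict.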

Figure 13.

Image of blocks (case where only one CRAC is operating).

Figure 14.

Image of blocks (case where both CRACs are operating).

4.3. Approach of Prediction Model Construction

We examined several approaches for modeling the prediction of the rack intake temperature after the CRAC is stopped, as shown in Table 5 (the larger the model number, the finer the model granularity). We expected that a finer-grained model would be better suited to each server room and would therefore be more accurate, but also that the amount of sample data available to each model would decrease as the granularity becomes finer. On the basis of these viewpoints, we compared the accuracy of each model using the evaluation indices described in the next section.

Table 5.

Outline of each model.

5. Evaluation Index

We evaluated the model accuracy using the four indices listed below. Each evaluation was performed using 5-fold cross-validation. Temperature changes within the same block tend to be similar, so if samples from one block were spread across several folds, prediction would become artificially easy and a fair evaluation would not be possible. Therefore, all samples from one block were allocated to the same fold. Similarly, we assume that a fair evaluation cannot be performed if the dataset is biased. Therefore, the blocks were distributed evenly across the folds with respect to the number of operating air conditioners after the stop and the CRAC that was stopped (Table 6).
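The fold allocation described above can be sketched as a round-robin assignment of whole blocks within each stratum. This is one possible scheme under assumptions, not necessarily the procedure behind Table 6; the dictionary keys `id`, `stopped_crac`, and `n_running_after` are hypothetical names.

```python
from collections import defaultdict


def assign_folds(blocks, n_folds=5):
    """Assign each block to one fold, balancing the folds within each stratum.

    blocks: list of dicts with keys 'id', 'stopped_crac', 'n_running_after'.
    Returns {block_id: fold_index}; all samples of a block share its fold.
    """
    strata = defaultdict(list)
    for block in blocks:
        strata[(block["stopped_crac"], block["n_running_after"])].append(block["id"])

    folds = {}
    offset = 0
    for key in sorted(strata):
        for j, block_id in enumerate(strata[key]):
            folds[block_id] = (offset + j) % n_folds
        # Continue the rotation so that leftovers do not always land in fold 0.
        offset += len(strata[key])
    return folds
```

Because the split is made at the block level, samples from one shutdown event never appear in both the training and evaluation folds.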

Table 6.

Dataset of block for 5-fold cross-validation.

1. Coefficient of determination (R2):

R2 is used as an index showing the explanatory power of the predicted value of the objective variable.

2. Correct rate:

The correct rate is defined as the ratio of the number of predictions that fall within ±0.5 °C of the measured value to the total number of data points.

3. Root mean square error (RMSE):

The accuracy of various methods is evaluated by RMSE, which is commonly used as an index for numerical prediction.

4. Max peak error:

In server room temperature prediction, a large deviation between the measured value and the predicted value is a serious problem. Therefore, we define the max peak error as the largest error among the cases in which the measured value is larger than the predicted value.
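The four indices above can be computed as in the following sketch. The treatment of the ±0.5 °C boundary as inclusive and the reading of the max peak error as the largest value of measured minus predicted (zero if the model never under-predicts) are assumptions, as are the function names.

```python
import math


def r2_score(y_true, y_pred):
    """Coefficient of determination."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def correct_rate(y_true, y_pred, tol=0.5):
    """Share of predictions within +/- tol deg C of the measured value."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if abs(t - p) <= tol)
    return hits / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def max_peak_error(y_true, y_pred):
    """Largest under-prediction (measured minus predicted); 0.0 if there is none."""
    return max(0.0, max(t - p for t, p in zip(y_true, y_pred)))
```

For example, with measured values `[1.0, 2.0, 3.0, 4.0]` and predictions `[1.0, 2.5, 2.0, 4.0]`, the third point is under-predicted by 1.0 °C, so the max peak error is 1.0 while the correct rate is 0.75.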

6. Results for Model Granularity

6.1. Accuracy Evaluation of Each Model (All Experimental Patterns)

Table 7 shows the evaluation index values of each model for the 60 experimental patterns. For evaluation indices 1, 2, and 3, all models had similarly good values. For the max peak error (evaluation index 4), however, the results of Models 3–5 were worse than those of Models 1 and 2. This demonstrates that Models 1 and 2 had good evaluation values as a whole, and that the relationship between the prediction accuracy and the fineness of the model granularity was not significant. We examine the detailed features of each model in the following subsections.

Table 7.

Evaluation index value of each model (all experiment patterns = 60 patterns).

6.2. Accuracy Evaluation by the Quantity of CRACs after Stopping CRAC

The evaluation index values classified by the quantity of CRACs after the CRAC is stopped are shown in Table 8 and Table 9. In the case of one CRAC suddenly stopping while only one CRAC was operating, Model 1 had the best evaluation index value among all models. In contrast, in the case of one CRAC suddenly stopping while two CRACs were operating, we found that Model 1 had worse evaluation index values compared to the other models. This indicates that the evaluation index values for Model 1 differed depending on the number of stopped air conditioners.

Table 8.

Evaluation index value of each model (the case where one CRAC suddenly stops while only one CRAC is operating).

Table 9.

Evaluation index value of each model (the case where one CRAC suddenly stops while both CRACs are operating).

In addition, since the evaluation index values of Models 2–4 did not differ greatly with the number of operating CRACs after one CRAC is stopped, we conclude that Models 2–4 are robust against differences in the number of operating CRACs after one CRAC is stopped.

6.3. Accuracy Evaluation by Stopping CRAC (CRAC1 or CRAC2)

The evaluation index values classified by which CRAC was stopped (CRAC1 or CRAC2) are shown in Table 10 and Table 11. In Model 5, there was a significant difference in the evaluation index values depending on which CRAC was stopped. In the other models, this difference was small. These results demonstrate that Models 1–4 are robust against differences in which CRAC is stopped (CRAC1 or CRAC2).

Table 10.

Evaluation index value extracting only the data of each model where CRAC1 stopped.

Table 11.

Evaluation index value extracting only the data of each model where CRAC2 stopped.

6.4. Accuracy Evaluation by Stopping Time of CRAC

The evaluation index values classified by the stopping time of the CRAC (less than 10 min, or 10 min or more) are shown in Table 12 and Table 13. Table 12 shows that Model 1 predicted the time-series changes of the rack intake temperature for stop times of less than 10 min with higher accuracy than the other models. On the other hand, as shown in Table 13, its prediction accuracy decreased for stop times of 10 min or more. The evaluation index values of Model 5 also varied depending on the stopping time of the CRAC. Models 2–4 were robust in that there was no significant difference in their evaluation values with respect to the stopping time of the CRAC.

Table 12.

Evaluation index value extracted only for results where the stop time of CRAC is less than 10 min.

Table 13.

Evaluation index value extracted only for results with a stop time of 10 min or more.

6.5. Comprehensive Evaluation of Each Model

In the above subsections, we performed evaluations on five different models. In the following, we examine only Model 2, for the following reasons.

- As discussed in Section 6.1 and Section 6.2, Model 2 had the same good evaluation index value as other models.

- As discussed in Section 6.3 and Section 6.4, Model 2 had consistently good evaluation index values regardless of the number of air conditioners stopped, which CRAC was stopped, and the stopping time of the CRAC.

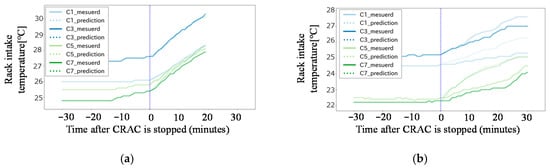

Figure 15 shows the time-series changes of the measured and predicted rack intake temperatures of the C1, C3, C5, and C7 racks after the CRAC is stopped, where the predictions were made with Model 2. As we can see in Figure 15a, the predicted values closely followed the measured values. In Figure 15b, some of the predicted rack intake air temperatures exceeded the measured values by approximately 1 °C, but the predictions still captured the overall trend.

Figure 15.

Time-series changes of rack intake temperature in C rack row. (a) Rack intake temperature in C rack row (in the case of one CRAC suddenly stopping while only one CRAC is operating). (b) Rack intake temperature in C rack row (in the case of one CRAC suddenly stopping while two CRACs are operating).

7. Consideration of Factors Related to Model Accuracy

7.1. Effect of Explanatory Variables

Optimization of the explanatory variables is crucial from the viewpoints of accuracy improvement and calculation cost. Therefore, here, we provide a detailed consideration of the explanatory variables.

First, in order to consider the effect of each explanatory variable on accuracy, we calculated the feature importance. Table 14 shows the feature importance of each explanatory variable in the prediction in the case of one CRAC suddenly stopping while two CRACs are operating. We found that explanatory variables such as rack position, rack intake temperature one minute before stopping, and CRAC2 cooling capacity one minute before stopping are important in this verification case. These results demonstrate that each explanatory variable has a different influence on accuracy.

Table 14.

Feature importance of each explanatory variable.

Next, to clarify the effect on accuracy when changing each explanatory variable, we examined two cases.

- The same verification was performed using only one explanatory variable.

- The same verification was performed with one explanatory variable removed.
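The two cases above amount to re-running the evaluation on different feature subsets. A minimal sketch of how those subsets could be enumerated is shown below; the variable names in the usage example and the `evaluate` callback, which stands for whatever routine trains and scores a model on a given feature list, are hypothetical.

```python
def ablation_feature_sets(variables):
    """Return {label: feature list} for the full set, each single variable,
    and each set with one variable removed."""
    sets = {"all": list(variables)}
    for name in variables:
        sets["only:" + name] = [name]
        sets["without:" + name] = [v for v in variables if v != name]
    return sets


def run_ablation(variables, evaluate):
    """Apply a caller-supplied evaluate(features) -> score to every feature set."""
    return {label: evaluate(features)
            for label, features in ablation_feature_sets(variables).items()}
```

For instance, `run_ablation(["rack_position", "intake_temp_1min_before"], evaluate)` would return one score for the full set and two scores per variable, which can then be compared against the full-set score.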

Table 15 lists the evaluation index values when only one of the explanatory variables is used. We found that the values were worse when there was only one explanatory variable. We also found that using only the rack intake temperature one minute before gave better values than using any other single explanatory variable.

Table 15.

Evaluation index values when only one of the explanatory variables is used.

Table 16 lists the evaluation values when one of the explanatory variables was deleted. We found that the value when the rack intake air temperature was excluded was generally lower than when all the explanatory variables were used. We also found that the value when other explanatory variables were excluded was not significantly different from the case where all the explanatory variables were used.

Table 16.

Evaluation values when one of the explanatory variables was deleted.

From these results, we conclude that the rack intake temperature one minute before is very important for the rack intake temperature prediction accuracy after the CRAC is stopped.

7.2. Effect of Training Data

When operating our proposed model, training data is required. When using a machine learning model, the relationship between accuracy and the quality and quantity of the training data is key. Therefore, in this section, we examine the influence on accuracy from four viewpoints.

7.2.1. Effect of the Number of Samples of Training Data

If we can determine the effect of the number of training data samples on accuracy, we will better understand how many samples need to be collected. To examine this effect, we investigated how the evaluation index values changed when the ratio of training datasets used, taken from the partitions other than the evaluation partition in the five-fold split, was changed. Table 17 shows each evaluation index value when the number of training datasets was reduced. We can see here that the R2, correct rate, and RMSE became worse as the number of datasets decreased. In addition, R2 was 0.8 or more when the ratio of datasets was about 0.4, which indicates that prediction with high accuracy was possible in this case. On the other hand, when the ratio of datasets was reduced to 0.2, R2 became less than 0.8, which means the prediction accuracy was not high. The correct rate and RMSE also worsened significantly when the ratio of datasets was 0.2.

Table 17.

Evaluation index value when the number of training datasets was changed.

From these results, in this verification room, highly accurate predictions were obtained when the training data amounted to 40% of the number of evaluation data samples in the target room. However, the larger the dataset, the more accurate the results. Therefore, it is better to prepare the same number of samples as the evaluation data when possible.
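The reduction of the training data examined in this subsection can be sketched as a reproducible subsampling of training blocks. The sketch assumes that the ratio is applied to the number of available training blocks and that blocks are drawn at random; the study does not state how the reduced datasets were selected, and the function name and `seed` argument are hypothetical.

```python
import random


def subsample_training_blocks(train_block_ids, ratio, seed=0):
    """Return a reproducible random subset containing `ratio` of the training blocks."""
    ids = sorted(train_block_ids)
    keep = max(1, round(len(ids) * ratio))  # always keep at least one block
    return sorted(random.Random(seed).sample(ids, keep))
```

Repeating the evaluation for ratios such as 0.2, 0.4, and 1.0 with the evaluation fold held fixed gives the kind of comparison reported in Table 17.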

7.2.2. Effect of Limiting CRAC (CRAC1, CRAC2)

In many cases, multiple CRACs are installed in the server room. Depending on whether it is necessary to collect the training data of each CRAC, the number of datasets to be prepared changes greatly. Therefore, to clarify the effect of limiting the number of CRAC datasets, we evaluated the accuracy when the data of each CRAC was excluded from the training dataset, and examined to what extent the creation of training data affects the accuracy for each CRAC.

Table 18 shows the evaluation values when the CRAC1 dataset was excluded from the training data. We found that the accuracy evaluation value was smaller when the training data was excluded compared to when it was not excluded, but there was no significant change. Also, comparing the evaluation index values of each CRAC, we can see that there was no significant difference.

Table 18.

Evaluation values when the CRAC1 dataset was excluded from the training data.

Next, Table 19 shows the evaluation index values when the CRAC2 dataset was excluded from the training data. We found that the evaluation index values were worse than the result without exclusion. Also, comparing the accuracy evaluation values of each CRAC, we can see that there was no significant difference. Although this change was slight, it possibly stems from the fact that we performed more than five experiments for CRAC2 and the number of samples was higher. Therefore, in this target room, even when only the training data for CRAC2 was used, it was possible to make predictions with similar accuracy regardless of whether CRAC1 or CRAC2 was stopped. This suggests that the effort of preparing training datasets can possibly be reduced. However, in a larger server room with more CRACs than in our evaluation, the conditions would differ greatly, so further study is necessary to determine whether the same result holds.

Table 19.

Evaluation values when the CRAC2 dataset was excluded from the training data.

7.2.3. Effect of Stopping Time

It is very difficult to collect training data on the stopping time of the CRAC in a server room when a service is actually being provided because this would affect the service. In addition, the longer the air conditioner is stopped, the higher the temperature will be, so it is crucial to understand the air conditioner stop time required for the training data and model construction.

Therefore, to examine the stopping time required in the training data, we examined how the evaluation index values change when datasets with a long stop time are excluded from the training dataset (Table 20). We found that the evaluation values worsened when only datasets with shorter air conditioner stop times were used. When only the datasets of less than ten minutes were used, the R2 fell below 0.8, which means an accurate prediction could not be made. We assume there are two major reasons for this.

Table 20.

Evaluation index values when datasets with long stopping times were excluded from the training data.

First, the number of samples used for training had an effect. For experiments with a short air conditioner downtime, the number of samples that could be acquired in one case was small. Therefore, the number of datasets that could be used in this verification decreased sharply as the downtime became shorter.

Second, extrapolation seemed to be difficult, which led to a decrease of the evaluation index values. We conclude that it is difficult to predict events that are not included in the training data.

7.2.4. Effect of Data Acquisition Time before CRAC Is Stopped

In our model, we use some of the data before CRAC is stopped as training data. We assumed that the required specifications for the data collection system, the implementation method of the prediction program, and the usage method of the prediction would change depending on how much previous information is required to achieve highly accurate prediction. Therefore, we examined the influence on accuracy by changing the time before stopping, which can be used as learning data.

Table 21 shows the accuracy evaluation values when the time before stopping of the data that can be used as training data was changed. When the data from 1 min before stopping was used, all evaluation indices showed their best values. Also, when only data from more than one hour before stopping was used as the training data, the values were worse than with the data from 1 or 30 min before. We found that there was no significant difference in the coefficient of determination among the cases using data from more than one hour before. These results demonstrate that it is necessary to use the immediately preceding data in order to achieve highly accurate prediction.
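Changing how far back the usable data lies corresponds to changing the lag at which the explanatory variables are read. A minimal sketch, assuming one-minute series stored as lists and a known stop index, is given below; the function name, the series names in the usage note, and the key format are hypothetical.

```python
def features_before_stop(series_by_name, stop_index, lag_minutes):
    """For each one-minute series, pick the value observed `lag_minutes` before the stop."""
    index = stop_index - lag_minutes
    if index < 0:
        raise ValueError("not enough history before the stop")
    return {f"{name}_lag{lag_minutes}": values[index]
            for name, values in series_by_name.items()}
```

Calling this with `lag_minutes=1`, `30`, or `60` on series such as `intake_temp` or `crac2_cooling` would produce the alternative inputs compared in Table 21, and it also makes explicit how much history the data collection system must retain.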

Table 21.

Evaluation index values by the time before stopping of the data available for training.

8. Conclusions

In this study, we have proposed and evaluated a machine learning model that predicts the time-series change of the rack intake air temperature after a CRAC has stopped. Our main contributions are as follows.

- We showed that a robust model can be constructed by utilizing the machine learning model.

- We clarified the effect of each explanatory variable on the accuracy.

- In order to construct this prediction model, we found it is necessary to prepare the same number of training datasets as the number of validation datasets.

- In the training dataset, the effect on accuracy of limiting CRAC was small.

- When using this prediction model, we found it is necessary to use the immediately preceding data.

Note that this study is a case study of a single server room. In the future, we will study the applicability of the model to various server rooms, as well as to racks and ICT devices with load fluctuations that were not present during the training period.

Author Contributions

Both authors contributed to this work as follows: Conceptualization, K.S. and M.K.; Methodology, K.S.; Software, K.S. and T.A.; Validation, K.S. and M.K.; Formal Analysis, K.S.; Investigation, K.S. and M.K.; Resources, M.K.; Data Curation, K.S. and T.A.; Writing–Original Draft Preparation, K.S.; Writing–Review & Editing, K.S. and K.M.; Visualization, K.S.; Supervision, M.S. and T.W.; Project Administration, M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Andrae, A.; Edler, T. On global electricity usage of communication technology: Trends to 2030. Challenges 2015, 6, 117–157.

2. ASHRAE TC 9.9. Datacom Equipment Power Trends and Cooling Application Second Edition; American Society of Heating Refrigerating and Air-Conditioning Engineers Inc.: Atlanta, GA, USA, 2012.

3. Geng, H. Data Center Handbook; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2015.

4. ASHRAE TC 9.9. Data Center Power Equipment Thermal Guidelines and Best Practices; American Society of Heating Refrigerating and Air-Conditioning Engineers Inc.: Atlanta, GA, USA, 2016; Available online: https://tc0909.ashraetcs.org/documents/ASHRAE_TC0909_Power_White_Paper_22_June_2016_REVISED.pdf (accessed on 3 August 2020).

5. Niemann, J.; Brown, K.; Avelar, V. Impact of Hot and Cold Aisle Containment on Data Center Temperature and Efficiency; Schneider Electric White Paper; Schneider Electric’s Data Center Science Center: Foxboro, MA, USA, 2017.

6. Tsukimoto, H.; Udagawa, Y.; Yoshii, A.; Sekiguchi, K. Temperature-rise suppression techniques during commercial power outages in data centers. In Proceedings of the 2014 IEEE 36th International Telecommunications Energy Conference (INTELEC), Vancouver, BC, Canada, 28 September–2 October 2014.

7. Tsuda, A.; Mino, Y.; Nishimura, S. Comparison of ICT equipment air-intake temperatures between cold aisle containment and hot aisle containment in datacenters. In Proceedings of the 2017 IEEE International Telecommunications Energy Conference (INTELEC), Broadbeach, QLD, Australia, 22–26 October 2017.

8. Garday, D.; Housley, J. Thermal Storage System Provides Emergency Data Center Cooling; Intel Corporation: Santa Clara, CA, USA, 2007.

9. Lin, M.; Shao, S.; Zhang, X.S.; VanGilder, J.W.; Avelar, V.; Hu, X. Strategies for data center temperature control during a cooling system outage. Energy Build. 2014, 73, 146–152.

10. Sakaino, H. Local and global dimensional CFD simulations and analyses to optimize server-fin design for improved energy efficiency in data centers. In Proceedings of the Fourteenth Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems, Orlando, FL, USA, 27–30 May 2014.

11. Winbron, E.; Ljung, A.; Lundström, T. Comparing performance metrics of partial aisle containments in hard floor and raised floor data centers using CFD. Energies 2019, 12, 1473.

12. Lin, P.; Zhang, S.; VanGilder, J. Data Center Temperature Rise during a Cooling System Outage; Schneider Electric White Paper; Schneider Electric’s Data Center Science Center: Foxboro, MA, USA, 2013.

13. Kummert, M.; Dempster, W.; McLean, K. Simulation of a data center cooling system in an emergency situation. In Proceedings of the Eleventh International IBPSA Conference, Glasgow, Scotland, 27–30 July 2009.

14. Zavřel, V.; Barták, M.; Hensen, J.L.M. Simulation of data center cooling system in an emergency situation. Future 2014, 1, 2.

15. Andrew, S.; Tomas, E. Thermal performance evaluation of a data center cooling system under fault conditions. Energies 2019, 12, 2996.

16. Demetriou, D.; Calder, A. Evolution of data center infrastructure management tools. ASHRAE J. 2019, 61, 52–58.

17. Brown, K.; Bouley, D. Classification of Data Center Infrastructure Management (DCIM) Tools; Schneider Electric White Paper; Schneider Electric’s Data Center Science Center: Foxboro, MA, USA, 2014.

18. Sasakura, K.; Aoki, T.; Watanabe, T. Temperature-rise suppression techniques during commercial power outages in data centers. In Proceedings of the 2017 IEEE International Telecommunications Energy Conference (INTELEC), Broadbeach, QLD, Australia, 22–26 October 2017.

19. Sasakura, K.; Aoki, T.; Watanabe, T. Study on the prediction models of temperature and energy by using DCIM and machine learning to support optimal management of data center. In Proceedings of the ASHRAE Winter Conference 2019, Atlanta, GA, USA, 12–16 January 2019.

20. Harvey, A.; Koopman, S. Diagnostic checking of unobserved-components time series models. J. Bus. Econ. Stat. 1992, 10, 377–389.

21. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System; ACM: San Francisco, CA, USA, 2016.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).