1. Introduction

With the rapid development of the economy, the demand for electricity is increasing rapidly. To meet this demand, renewable energy and distributed generation have become an important part of the electricity supply. The randomness of these sources can disturb the power grid, so thermal power generation must be planned accurately according to renewable generation and social electricity consumption in order to balance generation and consumption. The high randomness of renewable generation therefore calls for more flexible and intelligent scheduling technology [1]. At the same time, the rapid increase in both the type and quantity of electricity consumption leads to drastic variation in the electrical load, which severely worsens the regional load imbalance. As an important function of the energy management system, accurate electrical load forecasting plays an important role in efficient dispatching and improved grid quality [2].

As a classical time series forecasting problem, short-term load forecasting (STLF) has attracted much attention from both academia and industry since the 1950s, and various methods have been proposed [3,4,5,6,7,8,9,10,11,12,13]. The existing algorithms can be roughly classified into two categories: statistical methods and machine learning methods. Statistical methods include the similar day method and regression-type methods. The similar day method [3] searches for historical electrical load segments with the same attribute values (e.g., climate, day of the week, and date) as the segment to be predicted, and then makes a prediction by weighting the selected historical load segments. Although simple and effective, it depends on the correct choice of influencing factors and degrades severely when important factors are missing. Regression-type methods analyze the correlation between variables statistically. When applied to electricity load prediction, electricity loads are regarded as the dependent variable, while external factors such as temperature and date are treated as independent variables; regression methods then model the correlation between loads and external variables [4,5]. Regression-type models, including the autoregressive moving average (ARMA) model [6] and many enhanced models [7,8], take the non-stationarity of time series and the influence of external factors into consideration, but they are not designed for tasks with strong non-stationarity and numerous external factors.

Machine learning methods mainly include shallow networks and deep learning methods. Shallow neural network models are data-driven adaptive methods, which simulate the relationship between input and output through a nonlinear mapping to learn the patterns hidden in the electrical load [10,11,12]. Among the numerous shallow neural network models, ensemble learning models usually perform very well: they combine multiple prediction algorithms and achieve enhanced prediction accuracy with a mild increase in complexity [13,14]. Recently, deep learning (DL) models have been deployed widely owing to their excellent capability for feature extraction, gradual abstraction, and self-adaptive learning without prior knowledge [15]. DL-based STLF methods mainly fall into three categories: recurrent neural networks (RNN), convolutional neural networks (CNN), and deep belief networks (DBN). As a typical RNN model, the long short-term memory (LSTM) network partly solves the vanishing and exploding gradient problems of RNN and achieves state-of-the-art performance [16,17,18,19,20,21]. CNN regards time series as images and reduces the number of parameters in the deep network by sharing convolution kernel parameters. Comparative studies have shown that CNN-based models can achieve forecasting performance comparable to LSTM given sufficient training samples [22,23,24]. DBN usually adopts an autoencoder structure to pre-train the network, which eases the training of deep networks [25,26].

It is well known that the electrical load features: (i) significant randomness induced by external factors, together with stationarity [5,27]; (ii) obvious quasi-periodicity over spans ranging from year, season, and month to week [27]; (iii) complicated load patterns related to temperature (specifically, the electrical load is sensitive to slight temperature changes in the higher/lower temperature zones, but insensitive in the mild temperature zone) [7]; and (iv) data imbalance, i.e., far fewer samples, with much more complicated load patterns, in the higher/lower temperature zones than in the mild temperature zone.

Considering the above features, the existing regression-based STLF algorithms can model the short-term stationarity of electrical loads but cannot characterize the randomness, which limits their forecasting performance. Shallow neural networks, due to their limited learning capability, have difficulty distilling the complicated patterns of the electrical load. Although they present state-of-the-art STLF performance, LSTM-based models cannot avoid the vanishing and exploding gradient problems in deep network training, and thus place a strict constraint on the length of the input sequence. Consequently, LSTM models cannot fully exploit quasi-periodicity information over large time spans. At the same time, the data imbalance problem of fewer samples in the sensitive temperature zones further degrades LSTM models.

To cope with the above problems of LSTM, this paper proposes a deep ensemble learning model based on LSTM within an active learning [28,29] framework, under the following principles:

(i) The STLF network is designed within an active learning framework to actively select the key samples from the historical dataset, thus mitigating the constraint that LSTM places on the length of the input sequence, so that the quasi-periodicity of loads over large time spans can be fully exploited. The active learning framework consists of a selector and a predictor. The selector selects the key samples whose patterns are most similar to the current load segment to be predicted, in order to fully explore the information hidden in the various periodicities of electrical load segments. At the same time, it can solve the problem of data imbalance and thus further improve the STLF performance. The predictor is an ensemble deep learning network composed of an LSTM and a multilayer perceptron (MLP). By integrating the patterns that the LSTM extracts from the short-term load segment with the information hidden in the segments chosen by the selector, it improves the forecasting performance.

(ii) An LSTM-based deep learning model is developed to effectively extract the complicated patterns hidden in short-term electrical loads.

(iii) Under the principle of ensemble learning, the predictor integrates LSTM and MLP to improve the STLF performance with only a small increase in complexity.

Compared with existing STLF models, the proposed model has the following advantages:

(i) The selector of the active learning framework selects several key load segments whose patterns are highly similar to the current load segment to be forecasted, so the predictor can exploit information over any time span. The proposed framework therefore not only fully utilizes LSTM’s excellent capability to model complicated patterns, but also remedies its weak learning ability on series with large time spans.

(ii) The active selection of historical samples can effectively overcome the performance degradation caused by the data imbalance in electrical loads.

(iii) The ensemble deep learning model integrating LSTM and MLP improves the prediction performance with a mild increase in the training complexity of the deep learning model.

The rest of the paper is organized as follows. Section 2 statistically analyzes the quasi-periodicity of electrical loads over various time spans and the effects of external factors, laying a foundation for the structure design of the proposed model and the selection of influencing factors. In Section 3, the proposed model within the active learning framework is detailed. In Section 4, the proposed model is evaluated on an open dataset and compared with several mainstream STLF models. Finally, the paper is concluded in Section 5.

2. Statistical Analysis of Load

The electrical load pattern is heavily influenced by both internal factors (e.g., users’ consumption pattern) and external factors (e.g., weather, days of the week, and special events), which makes it uncontrollable. On the one hand, the electrical load patterns show randomness due to the influence of random factors (such as weather change, special events, etc.). On the other hand, it also behaves regularly due to the relative certainty of users’ power consumption pattern. In general, the electrical loads can be decomposed into trend term, periodic term, and stochastic term [

5,

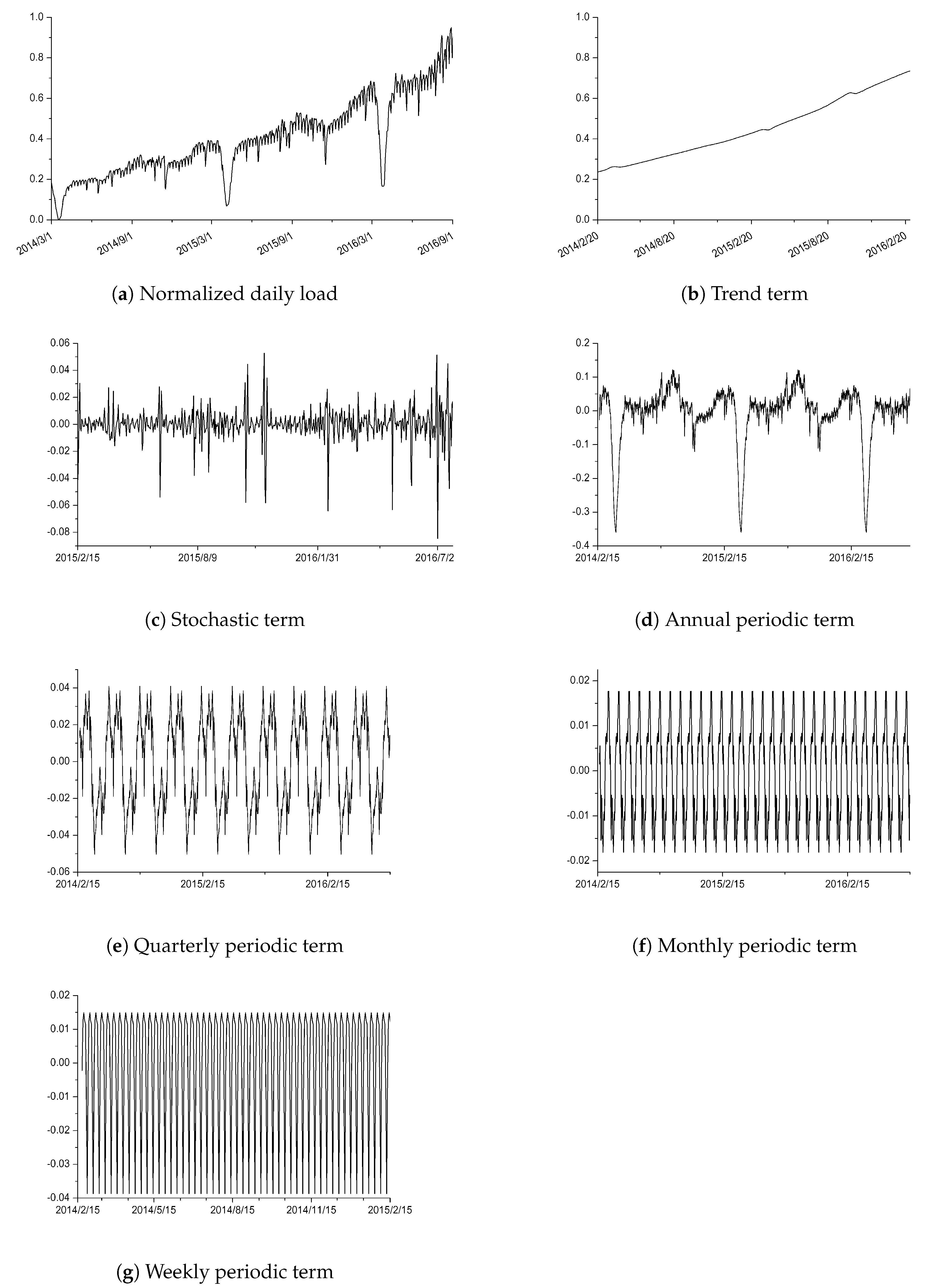

27]. As shown in

Figure 1, the normalized daily electrical load of Hangzhou city in eastern China from 1 January 2014 to 31 March 2017 (

Figure 1a) is decomposed into trend term (

Figure 1b), stochastic term (

Figure 1c) and periodic term, which can be further decomposed into annual periodic term (

Figure 1d), quarterly periodic term (

Figure 1e), monthly periodic term (

Figure 1f) and weekly periodic term (

Figure 1g).
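The decomposition into trend, periodic, and stochastic terms can be illustrated with a small sketch. The text does not state which decomposition method was used, so the version below is only an assumption: the trend is a centered moving average, and a single periodic term is the mean profile over one period (weekly here). The function names `moving_average`, `periodic_component`, and `decompose`, as well as the window and period values, are hypothetical.

```python
import numpy as np

def moving_average(x, window):
    """Centered moving average; the edges are padded by reflection."""
    pad = window // 2
    padded = np.pad(x, pad, mode="reflect")
    kernel = np.ones(window) / window
    return np.convolve(padded, kernel, mode="valid")[: len(x)]

def periodic_component(x, period):
    """Zero-mean average profile over one period, repeated along the series."""
    phase = np.arange(len(x)) % period
    profile = np.array([x[phase == p].mean() for p in range(period)])
    profile -= profile.mean()
    return profile[phase]

def decompose(load, trend_window=365, period=7):
    """Split a daily load series into trend + periodic + stochastic terms."""
    load = np.asarray(load, dtype=float)
    trend = moving_average(load, trend_window)
    detrended = load - trend
    periodic = periodic_component(detrended, period)
    stochastic = detrended - periodic  # whatever the two terms do not explain
    return trend, periodic, stochastic
```

Annual, quarterly, and monthly terms could be extracted in the same way by calling `periodic_component` successively with longer periods on the remaining residual.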

After statistical analysis of power load, we can observe that:

(i) Figure 1d–g clearly show that the electricity load has quasi-periodicity over various time spans, i.e., the load patterns show great similarity over years, seasons, months, and weeks.

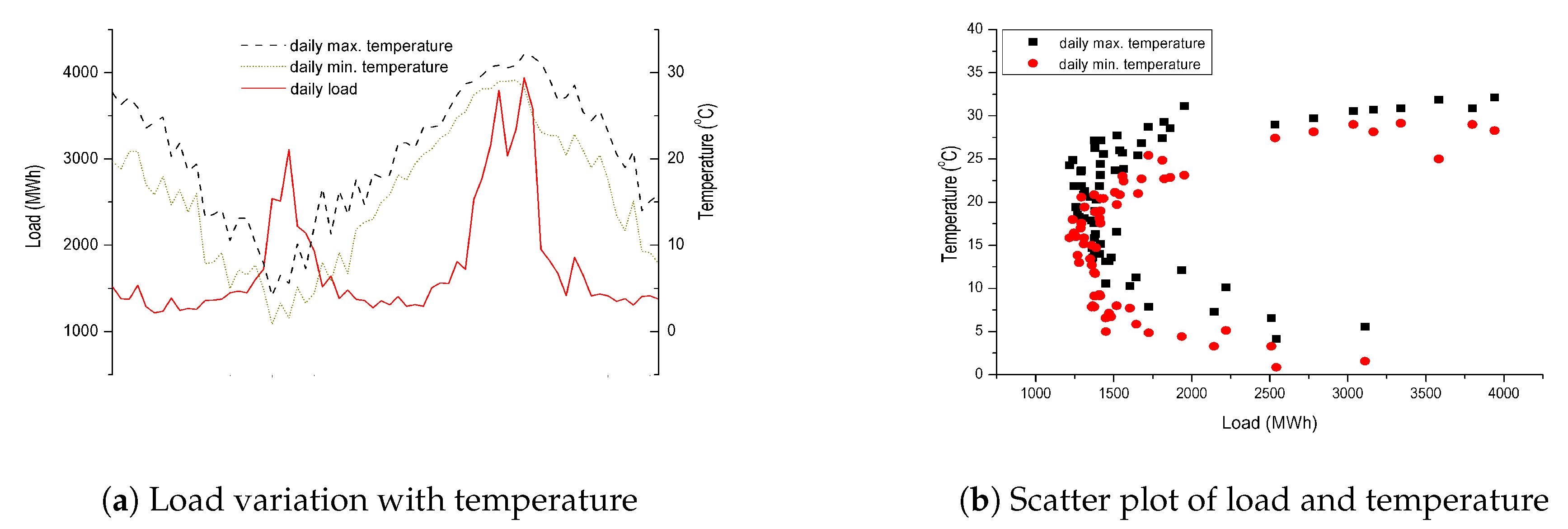

(ii) Electricity consumption is closely related to temperature. The daily electricity consumption in megawatt hours (MWh) and the temperature in degrees Celsius (°C) are presented in Figure 2a, from which it is clear that the electricity consumption in summer and winter is much higher than that in spring and autumn. More specifically, the variation of load with the daily maximum/minimum temperature is illustrated in Figure 2b as a scatter plot, from which we can see that the load increases significantly in both the high temperature zone (daily minimum temperature higher than 27 °C) and the low temperature zone (daily maximum temperature lower than 6 °C).

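Further, we use the Pearson correlation coefficient to analyze the correlation between temperature and electrical load, which is defined by

$$r = \frac{\sum_{n=1}^{N} (x_n - \bar{x})(T_n - \bar{T})}{\sqrt{\sum_{n=1}^{N} (x_n - \bar{x})^2}\,\sqrt{\sum_{n=1}^{N} (T_n - \bar{T})^2}} \tag{1}$$

where $x_n$ is the daily load at the $n$th day, $\bar{x}$ is the mean of the daily load sequence, $T_n$ and $\bar{T}$ are the average daily temperature and its mean, respectively, and $N$ is the number of days.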

Then, Student’s t-test is used to test the null hypothesis that the population correlation coefficient is 0. If the t-test is significant, the null hypothesis is rejected and temperature and electrical load are considered linearly dependent; otherwise, they are considered linearly independent. The Pearson correlation test results, listed in Table 1, show that a high linear dependence exists between daily temperature and load. Therefore, temperature data will help to improve the prediction.
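As an illustration of the correlation test, the following sketch computes the Pearson coefficient and the corresponding t statistic with $N-2$ degrees of freedom. The function names `pearson_r` and `t_statistic` are hypothetical, and the sketch assumes the two series have equal length and that $|r| < 1$.

```python
import math
import numpy as np

def pearson_r(load, temp):
    """Pearson correlation coefficient between two equally long series."""
    load = np.asarray(load, dtype=float)
    temp = np.asarray(temp, dtype=float)
    dl = load - load.mean()
    dt = temp - temp.mean()
    return float((dl * dt).sum() / math.sqrt((dl ** 2).sum() * (dt ** 2).sum()))

def t_statistic(r, n):
    """t statistic for H0 'population correlation is 0' (n - 2 degrees of freedom).

    Undefined for |r| == 1, where the denominator vanishes.
    """
    return r * math.sqrt((n - 2) / (1.0 - r * r))
```

The statistic is then compared with the critical value of the t distribution at the chosen significance level. If SciPy is available, `scipy.stats.pearsonr` returns the coefficient together with a p-value directly.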

(iii) Data imbalance problem. It can also be observed from the scatter plot in Figure 2b that there are fewer samples in the higher/lower temperature zones than in the mild temperature zone.

(iv) Randomness induced by special events. As can be seen in Figure 1a, the load reaches its lowest point in February every year, which is due to the great reduction of electricity consumption during the Spring Festival holiday. In addition, there was an unusual drop in August 2016 due to the strict power restrictions on enterprises during the G20 Summit. These special events have a great impact on the load pattern, so using loads with similar patterns as training samples can improve the accuracy of prediction.

According to the above features of electrical load, we can improve the prediction performance of STLF by: (a) fully exploiting the quasi-periodicity with various periods of electrical load; (b) accurately learning and representing the complicated load patterns; (c) overcoming the data imbalance problem of electrical load; and (d) exploring the effect on load by external factors such as temperature, day of week, and special events.

3. Deep Ensemble Learning Model Based on LSTM within Active Learning Framework

Taking these characteristics into consideration, an enhanced deep ensemble learning model is developed with the following features: (a) An LSTM-based deep learning model is used to explore the complicated patterns hidden in the electrical load. (b) The selector of the active learning framework actively selects several key samples with the most similar patterns to distill quasi-periodicity information, and eliminates the negative effect of data imbalance. (c) An ensemble model integrating LSTM and MLP greatly improves the model capacity of the deep neural network with only a small penalty in complexity.

3.1. Improved Active Learning Framework

Active learning [28,29] was proposed for model training with sufficient data but few labeled samples. Its main idea is to select from the unlabeled dataset the key samples that affect the learning performance most, and then label these key samples manually. The theoretical basis of active learning is that the model usually depends on only a small number of key samples, which cover most of the useful information. If these key samples are selected to train the model, all other samples can be ignored, so that model training is much more efficient. An active learning system usually consists of a learner and a selector. The learner typically employs a supervised learning algorithm to train the model on labeled data, and the selector selects the key samples that are passed to the learner after labeling. The crux of active learning is how to select the key samples. Compared with passive learning methods, which train the model on all given samples, active learning converges more rapidly and overcomes the data imbalance problem.

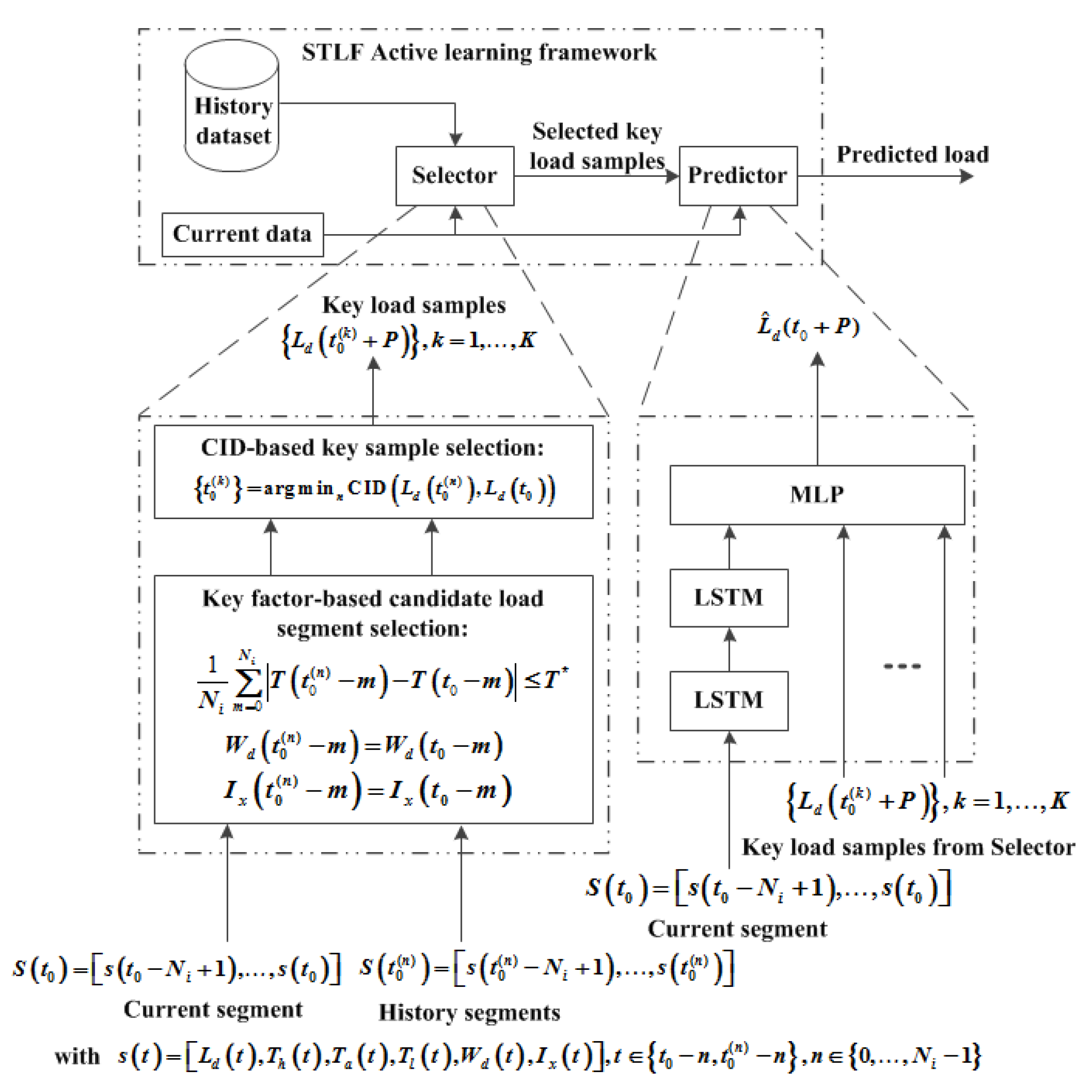

Inspired by the idea of actively selecting key samples to accelerate model training and mitigate data imbalance, an active learning framework is developed, whose structure is shown in the upper part of Figure 3. It is well known that an artificial neural network produces similar outputs when given similar inputs. For STLF, load shape similarity is chosen as the selection criterion, so the key samples are those whose curve shape is similar to that of the segment to be forecasted. These key samples are fed to the predictor for training the STLF model, together with the current load segment and its attributes, such as temperature and whether the day is a holiday.

3.2. The Selector Based on Load Shape Similarity

The selector actively selects the load segments most similar to the current load segment as training samples, so it is very important to employ a suitable similarity measure. In this paper, the similarity of load patterns is based on the similarity of load curves, whose theoretical basis is as follows: (a) The trend of the load will continue in the short term, that is, loads that have been alike over the previous several days are highly likely to behave similarly in the near future. (b) The load pattern is strongly affected by several external factors such as temperature, day of the week, and special events. When these key external factors are the same, their effects on the load will be similar.

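There are many metrics for measuring the similarity of curve shapes, such as the Euclidean distance, the Fréchet distance [30], and the Hausdorff distance [31]. For a good balance between performance and computational complexity, the complexity-invariant distance (CID) is adopted, which is much easier to compute than the Fréchet and Hausdorff distances. CID is a modified Euclidean distance, which weights the Euclidean distance by the curve complexity factor (CCF) [32]. The CCF comes from a physical intuition: if a curve is stretched into a straight line, a more complicated curve produces a longer line than a simple one. The CID of two daily load curves $\mathbf{p}$ and $\mathbf{q}$ is defined by

$$\mathrm{CID}(\mathbf{p}, \mathbf{q}) = \mathrm{ED}(\mathbf{p}, \mathbf{q}) \times \mathrm{CCF}(\mathbf{p}, \mathbf{q}) \tag{2}$$

where $\mathrm{ED}(\mathbf{p}, \mathbf{q})$ is the Euclidean distance between curves $\mathbf{p}$ and $\mathbf{q}$ of length $N$, and $\mathrm{CCF}(\mathbf{p}, \mathbf{q})$ is the CCF defined by

$$\mathrm{CCF}(\mathbf{p}, \mathbf{q}) = \frac{\max\left(\mathrm{CE}(\mathbf{p}), \mathrm{CE}(\mathbf{q})\right)}{\min\left(\mathrm{CE}(\mathbf{p}), \mathrm{CE}(\mathbf{q})\right)} \tag{3}$$

where $\mathrm{CE}(\mathbf{p}) = \sqrt{\sum_{n=1}^{N-1} (p_{n+1} - p_n)^2}$.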
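The CID computation can be sketched in a few lines. The function names `complexity` and `cid` are hypothetical, and the small constant `eps` is an added safeguard (not part of the definition) that avoids division by zero when one curve is perfectly flat.

```python
import numpy as np

def complexity(curve):
    """Complexity estimate: root of the summed squared successive differences."""
    diffs = np.diff(np.asarray(curve, dtype=float))
    return float(np.sqrt(np.sum(diffs ** 2)))

def cid(p, q, eps=1e-12):
    """Complexity-invariant distance between two curves of equal length."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    if p.shape != q.shape:
        raise ValueError("curves must have the same length")
    ed = float(np.linalg.norm(p - q))          # Euclidean distance
    cp, cq = complexity(p), complexity(q)
    ccf = max(cp, cq) / max(min(cp, cq), eps)  # curve complexity factor, >= 1
    return ed * ccf
```

Because the CCF is never smaller than 1, two curves with equal Euclidean distance to a reference are ranked so that the one whose complexity is closer to the reference counts as more similar.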

Similarity metrics for external factors are also vital for the selection of key samples. Since the load pattern in the higher/lower temperature zones differs greatly from that in the mild temperature zone, the similarity metric depends on the daily temperature. Specifically, if the absolute difference between the mean daily temperatures of two load segments is less than a threshold $\delta$, the two load segments are considered highly similar. In the case shown in Figure 2a, the electrical load fluctuates sharply even when the temperature changes mildly in the sensitive temperature zones, while it is much less sensitive to temperature changes in the mild temperature zone. Therefore, the value of the threshold $\delta$ should differ between these two kinds of temperature zones. In the above example, the threshold is 0.5 and 5 for the sensitive and insensitive temperature zones, respectively. It should be noted that both the sensitive temperature zones and the thresholds are determined by statistical analysis of the load and the corresponding temperature data.
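The zone-dependent threshold can be sketched as follows. The zone boundaries (daily minimum above 27 °C, daily maximum below 6 °C) and the thresholds (0.5 and 5) are the example values quoted in the text for the Hangzhou data. Deciding the zone from the current day's minimum and maximum temperature is an assumption of this sketch, and the function names are hypothetical.

```python
def temperature_threshold(t_min, t_max, hot_min=27.0, cold_max=6.0,
                          delta_sensitive=0.5, delta_mild=5.0):
    """Return the similarity threshold for the zone the day falls in."""
    # Assumption: a day is 'sensitive' if it lies in the high or low zone.
    sensitive = t_min > hot_min or t_max < cold_max
    return delta_sensitive if sensitive else delta_mild

def temperature_similar(mean_a, mean_b, t_min, t_max):
    """True if two mean daily temperatures differ by less than the threshold."""
    return abs(mean_a - mean_b) < temperature_threshold(t_min, t_max)
```

With these values, a 1 °C gap disqualifies a candidate on a hot summer day but is easily tolerated on a mild spring day.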

3.3. Deep Ensemble Learning Predictor based on LSTM and MLP

Full utilization of the load’s quasi-periodicity information can enhance STLF. For a long period, it is not necessary to input the whole time series; only the most similar segments are needed as training samples. Because the selected load segments are highly correlated with the current one, they should not be merged into one longer series as input to the LSTM, since LSTM models trained on such similar series would themselves be very similar. At the same time, the training complexity of LSTM models increases much faster than the input length. Therefore, it is more reasonable to adopt ensemble learning.

An LSTM is employed to learn the inner temporal variation pattern of the load, and the $K$ key daily loads chosen by the selector are combined with its output to feed the predictor, so as to extract the information contained in quasi-periodicity of any period. The structures of both the Selector and the Predictor are depicted in the lower part of Figure 3, where the input of the LSTM, $\mathbf{S}$, is a segment of $M$ consecutive days. The $m$th element of $\mathbf{S}$, $\mathbf{s}_m$, is a six-tuple vector consisting of the daily electrical load $x_m$, daily maximum temperature $T^{\max}_m$, daily mean temperature $T^{\mathrm{mean}}_m$, daily minimum temperature $T^{\min}_m$, day of the week $w_m$, and date type $h_m$. Among them, $h_m$ distinguishes weekday, weekend, long holiday (such as National Day and Spring Festival in China, or Easter, Christmas, etc.) and major event (such as the Olympics, OPEC, G20 and other events that greatly affect the load patterns).
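To make the input format concrete, the sketch below slices a table of daily records into overlapping segments of consecutive days, each day being a six-tuple. The column order in `FEATURES`, the numeric coding of day of the week and date type, and the function name `build_segments` are assumptions for illustration only.

```python
import numpy as np

# Assumed column order of the six-tuple for each day.
FEATURES = ("load", "t_max", "t_mean", "t_min", "day_of_week", "date_type")

def build_segments(records, m):
    """Slice daily records (num_days, 6) into segments of m consecutive days.

    Returns an array of shape (num_days - m + 1, m, 6).
    """
    records = np.asarray(records, dtype=float)
    if records.ndim != 2 or records.shape[1] != len(FEATURES):
        raise ValueError("records must have shape (num_days, 6)")
    if m < 1 or m > len(records):
        raise ValueError("segment length must be between 1 and num_days")
    return np.stack([records[i:i + m] for i in range(len(records) - m + 1)])
```

Each slice along the first axis is one candidate LSTM input; the load of the day that follows the segment would serve as its training target.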

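The Selector chooses $K$ samples, $\mathbf{S}^{(1)}, \ldots, \mathbf{S}^{(K)}$, and outputs their daily load elements at the corresponding forecast day, $x^{(1)}, \ldots, x^{(K)}$. The MLP combines the outputs of both the LSTM and the Selector to estimate $\hat{x}$, the daily load at the day to be forecasted. The training process is listed below: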

Step 1: Data preprocessing.

Step 2: Initializing model parameters

Initial parameters of the LSTM network: number of LSTM layers L, number of input nodes, number of hidden nodes, and number of output nodes;

Number of the most similar historical load segments: K;

MLP parameters: number of fully connected layers Q, number of hidden layer nodes, activation function, and cost function;

Step 3: Model training and parameter adjustment

Key sample selection by Selector;

Back-propagation through time (BPTT) is used to train the LSTM network;

Error Back Propagation (BP) is deployed to train the MLP network;

According to the training error curve and test error curve, the network is iteratively trained until the performance converges.
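The data flow through the predictor can be sketched as a plain NumPy forward pass. This is only an illustration under stated assumptions: a single LSTM layer, a one-hidden-layer MLP with sigmoid activation and a linear output, and hypothetical parameter names (`W`, `U`, `b`, `W1`, `b1`, `W2`, `b2`). Training by BPTT and BP is not shown; in practice a framework such as TensorFlow would handle it.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_last_hidden(segment, W, U, b):
    """Run one LSTM layer over a segment (M, D) and return the last hidden state.

    W: (4H, D), U: (4H, H), b: (4H,), gate order: input, forget, cell, output.
    """
    hidden_size = U.shape[1]
    h = np.zeros(hidden_size)
    c = np.zeros(hidden_size)
    for x in segment:
        i, f, g, o = np.split(W @ x + U @ h + b, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h

def predict(segment, selected_loads, params):
    """Combine the LSTM summary with the K selected loads through an MLP."""
    h = lstm_last_hidden(segment, params["W"], params["U"], params["b"])
    features = np.concatenate([h, selected_loads])           # shape (H + K,)
    hidden = sigmoid(params["W1"] @ features + params["b1"])  # MLP hidden layer
    return float(params["W2"] @ hidden + params["b2"])        # linear output
```

The design point illustrated here is that the key samples bypass the LSTM entirely: only the recent segment passes through the recurrent layer, so the LSTM input length stays short regardless of how far back the selected samples lie.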

The procedure of key sample selection by Selector is listed below.

Input: $\mathbf{S}$, the current segment, whose $m$th element is the six-tuple $\mathbf{s}_m$;

Output: the daily loads at the corresponding forecast days of the selected K samples.

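Step 1: Selection of candidate samples from the dataset that meet the following three conditions

Condition 1: the absolute difference between the mean daily temperatures of the candidate and the current segment is less than the threshold $\delta$, where $\delta$ takes the sensitive-zone value for the sensitive temperature zones and the mild-zone value for the mild temperature zone;

Condition 2: the candidate has the same day of the week as the current segment;

Condition 3: the candidate has the same date type as the current segment.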

Step 2: Calculation of the CID between each candidate segment and the input segment according to Equation (2).

Step 3: Choice of key samples

Selecting the K candidate segments with the minimum CID as the key samples;

Outputting their daily loads at the corresponding forecast days.
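The selection procedure above can be sketched as a filter followed by a top-K ranking. The dictionary keys, the function names, and in particular the reading of Conditions 2 and 3 as "same day of the week" and "same date type" are assumptions of this sketch, since the extracted text does not preserve the exact conditions.

```python
import numpy as np

def cid(p, q, eps=1e-12):
    """Complexity-invariant distance (Euclidean distance times complexity ratio)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    cp = np.sqrt(np.sum(np.diff(p) ** 2))
    cq = np.sqrt(np.sum(np.diff(q) ** 2))
    return float(np.linalg.norm(p - q) * max(cp, cq) / max(min(cp, cq), eps))

def select_key_samples(current, candidates, k, delta):
    """Return the target-day loads of the k candidates closest to `current`.

    Each sample is a dict with keys 'loads', 't_mean', 'day_of_week',
    'date_type'; candidates additionally carry 'target_load'.
    """
    # Step 1: keep candidates whose external factors match (assumed conditions).
    eligible = [c for c in candidates
                if abs(c["t_mean"] - current["t_mean"]) < delta
                and c["day_of_week"] == current["day_of_week"]
                and c["date_type"] == current["date_type"]]
    # Steps 2 and 3: rank by CID and keep the k nearest.
    eligible.sort(key=lambda c: cid(c["loads"], current["loads"]))
    return [c["target_load"] for c in eligible[:k]]
```

If fewer than `k` candidates pass the filter, this sketch simply returns fewer loads; a real predictor with a fixed input width would need a padding or fallback rule.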

4. Experiment and Result Discussion

4.1. Experiment Settings

The proposed model was evaluated on an open dataset, which contains the daily load (https://www.torontohydro.com) and weather records (http://climate.weather.gc.ca) of Toronto, Canada, from June 2002 to July 2016. Cross-validation was adopted for performance evaluation, i.e., the dataset was partitioned into two disjoint subsets: a training dataset and a test dataset. For a fair performance comparison, the test data were not used in model training. The ratio of training data to test data was 7:3.
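A 7:3 partition can be sketched as below. The text does not say whether the split was chronological or random; this sketch assumes a chronological split, which keeps future days out of the training set, and the function name `chronological_split` is hypothetical.

```python
def chronological_split(samples, train_ratio=0.7):
    """Split time-ordered samples into an earlier training part and a later test part."""
    cut = int(round(len(samples) * train_ratio))
    return samples[:cut], samples[cut:]
```

For example, `chronological_split(list(range(10)))` puts the first seven items in the training set and the last three in the test set.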

Several classic STLF algorithms were also simulated for reference, including MLP, ARIMA, SVR, and LSTM. The simulations were run in Python using TensorFlow (GPU version) and scikit-learn.

4.2. Hyper-parameters Optimization

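The hyper-parameters of the STLF models are optimized with respect to the STLF performance measured by the mean absolute percentage error (MAPE), which is defined by

$$\mathrm{MAPE} = \frac{1}{N} \sum_{n=1}^{N} \left| \frac{\hat{x}_n - x_n}{x_n} \right| \times 100\% \tag{4}$$

where $\hat{x}_n$ is the forecasted value of the electrical load $x_n$ at the $n$th day, and $N$ is the number of forecasted days.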
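A direct implementation of the MAPE is shown below as a minimal sketch. The function name `mape` is hypothetical, and rejecting zero actual loads is an added safeguard, since the measure is undefined there.

```python
import numpy as np

def mape(actual, forecast):
    """Mean absolute percentage error, in percent."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    if np.any(actual == 0):
        raise ValueError("MAPE is undefined when an actual load is zero")
    return float(np.mean(np.abs((forecast - actual) / actual)) * 100.0)
```

For actual loads of 100 and 200 with forecasts of 110 and 180, both relative errors are 10%, so the MAPE is 10.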

Since the training complexity and the number of training samples required increase sharply with the number of LSTM layers, the number of layers is selected to balance MAPE against complexity. In other words, when adding layers improves the MAPE only slightly at the cost of a sharp increase in complexity, fewer layers are employed.

The procedure of hyper-parameters and thresholds optimization consists of two phases.

Phase 1: Optimizing standard LSTM model.

The initial settings of the STLF models are based on the statistical analysis of the dataset, and the hyper-parameter optimization procedure is based on cross-validation.

Through statistical analysis of daily load, typical period of seven is observed. Then, the initial number of input nodes is set to be seven while the number of layers iterates from one. After several rounds of performance evaluation for hyper-parameters adjustment, the settings of the standard LSTM model are determined as , for seven days forecasting and for other days forecasting, and .

Phase 2: Optimizing the proposed model. The LSTM network in the proposed model has the same setting as standard LSTM, and the optimization of MLP network settings follows four steps.

The first step is to choose thresholds for the different temperature zones. According to the scatter plot of daily load against temperature, the high, low, and mild temperature zones are defined. Since the mild temperature zone spans a large temperature range, its average temperature variation is calculated, giving 4.26 °C within one week and 5.91 °C within two weeks, and the initial threshold $\delta$ for the mild temperature zone is chosen on this basis. After computing the ratio between daily load variation and temperature variation in the three temperature zones, it is found that the ratio in the sensitive temperature zones is about ten times that in the mild temperature zone, from which the initial value of $\delta$ for the sensitive temperature zones is chosen.

The second step is to initialize the number of key samples. Counting the daily segments with high similarity, as defined by the thresholds for the different temperature zones, shows that the minimum number of highly similar daily load segments within the high temperature zone is 6, and the initial value of K is chosen accordingly.

The third step is to initialize the number of layers and the activation function of the MLP. The number of layers Q is increased from its initial value, and the activation function is chosen between the sigmoid function and the linear function.

The final step is to optimize the hyper-parameters iteratively via cross-validation by changing parameter settings such as K, Q, and the activation function. The final model settings are listed in Table 2.
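The iterative adjustment of K, Q, and the activation function can be approximated by an exhaustive search over a small grid. This is a sketch under assumptions: the paper describes iterative tuning rather than a grid search, and `evaluate` is a hypothetical callback that trains the model with the given settings and returns its validation MAPE.

```python
from itertools import product

def grid_search(evaluate, k_values, q_values, activations):
    """Return (best_score, best_settings) with the lowest validation error."""
    best = None
    for k, q, act in product(k_values, q_values, activations):
        score = evaluate(k, q, act)  # e.g., validation MAPE for this setting
        if best is None or score < best[0]:
            best = (score, {"K": k, "Q": q, "activation": act})
    return best
```

Starting the grid around the initial values obtained in the earlier steps keeps the number of training runs small.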

4.3. Results and Analysis

MAPE was used to evaluate the forecasting performance of STLF. Table 3 lists the simulation results, from which we can observe:

The proposed model outperforms all reference STLF models in all simulated scenarios, which verifies the effectiveness of the proposed model.

LSTM holds better performance than the other reference models. However, as the prediction time span increases, LSTM degrades significantly. This indicates that the length of input load segment is not enough to accurately predict the power load after seven days, such that longer input segment is needed, which further verifies the advantage of the proposed model.

MLP model shows a similar trend as LSTM, that is, as the prediction time span increases, the predicted performance degrades rapidly. Its performance is limited by both insufficient input load segment and the limited learning capability.

Although ARIMA model is enhanced for non-stationary series by difference operation, its learning capability is insufficient for electrical load featuring typical non-stationarity such that it is difficult to accurately model complicated and diverse patterns.

SVR shows good learning ability, and its MAPE performance degrades slowly as the prediction time span increases. The main reason is that it learns from all data but retains only the key samples (support vectors), and thus has an ability of active sample selection similar to active learning.

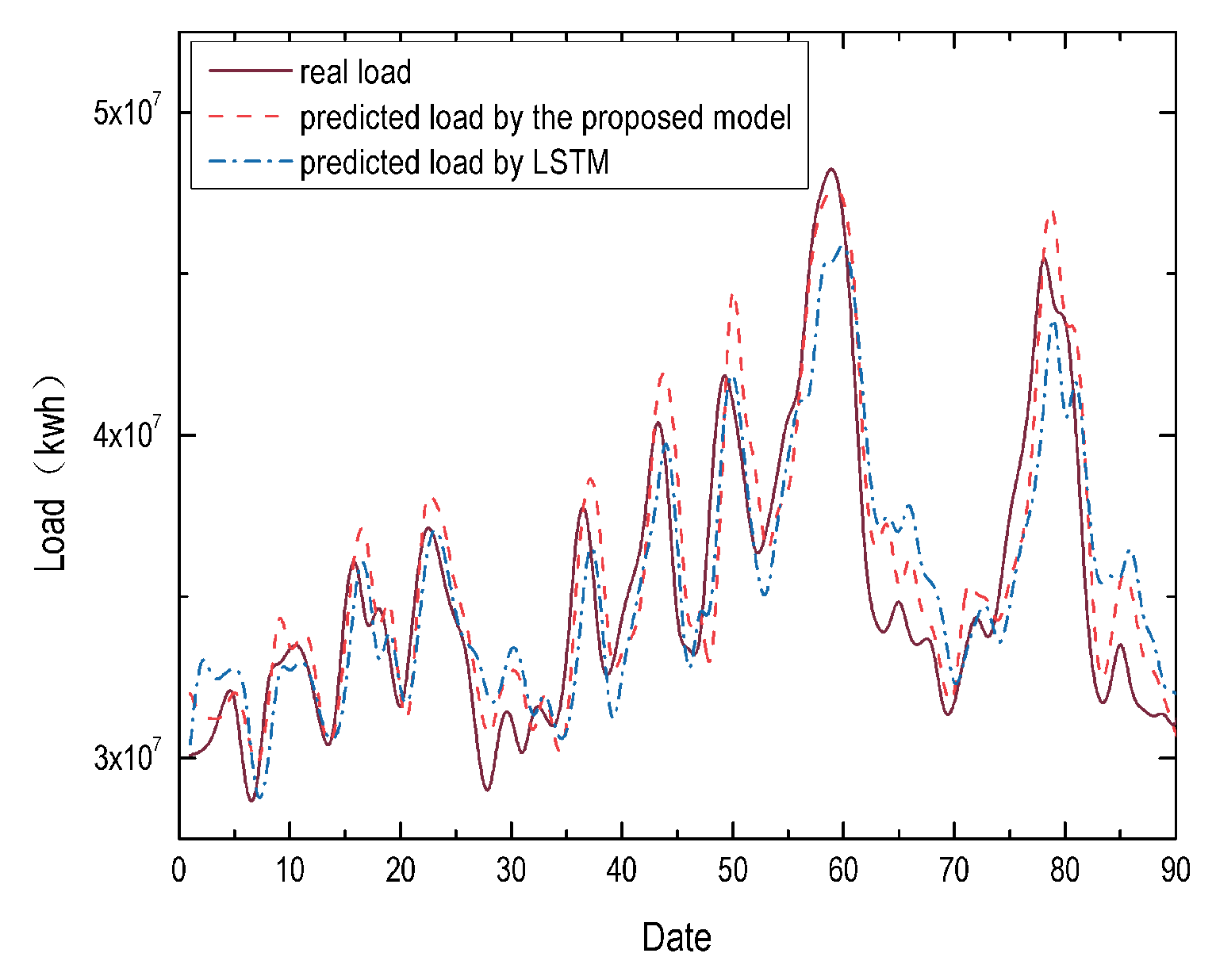

Since the load fluctuates sharply in sensitive temperature zones, it is necessary to evaluate the performance in different seasons.

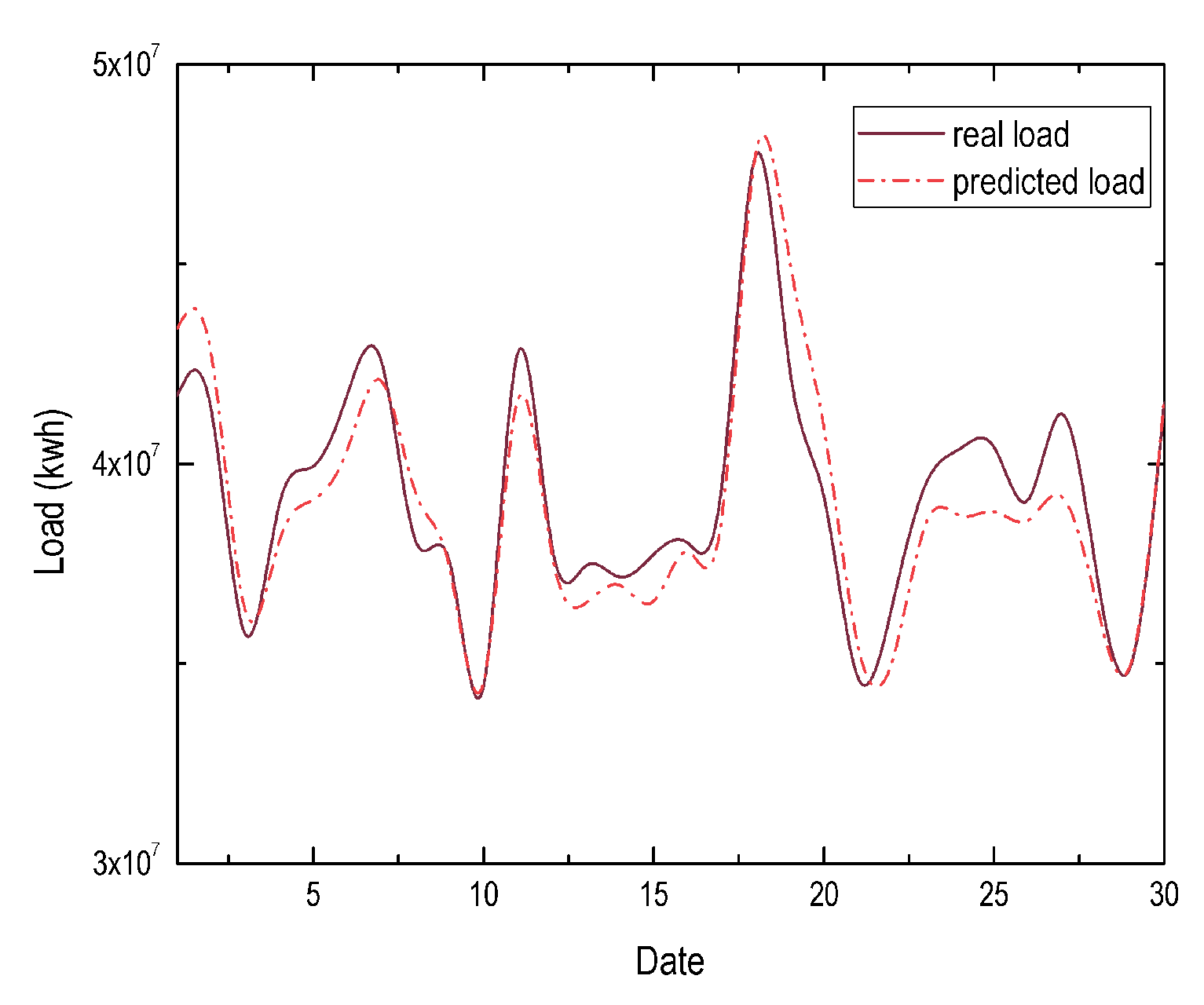

Figure 4 and

Figure 5 present the STLF results in spring and summer, and the results show that the proposed model achieves MAPE of

in spring and

in summer, which are much lower than all reference models. Specifically, the MAPE results of ARIMA, MLP, SVR and LSTM are

, and

in spring, and

, and

in summer, respectively. These results also verify the load patterns in sensitive temperature zones are more complicated, and the proposed model can learn complicated patterns much better.