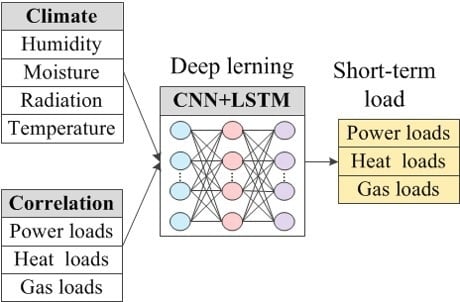

Short-Term Load Forecasting for CCHP Systems Considering the Correlation between Heating, Gas and Electrical Loads Based on Deep Learning

Abstract

1. Introduction

2. Literature Review

- (1)

- The heating, gas, and electrical loads of a CCHP system are highly coupled. Although much of the literature focuses on load forecasting, we have found no work that forecasts multiple loads while accounting for their coupling. To our knowledge, this is the first network designed to forecast these loads while considering the coupling between them.

- (2)

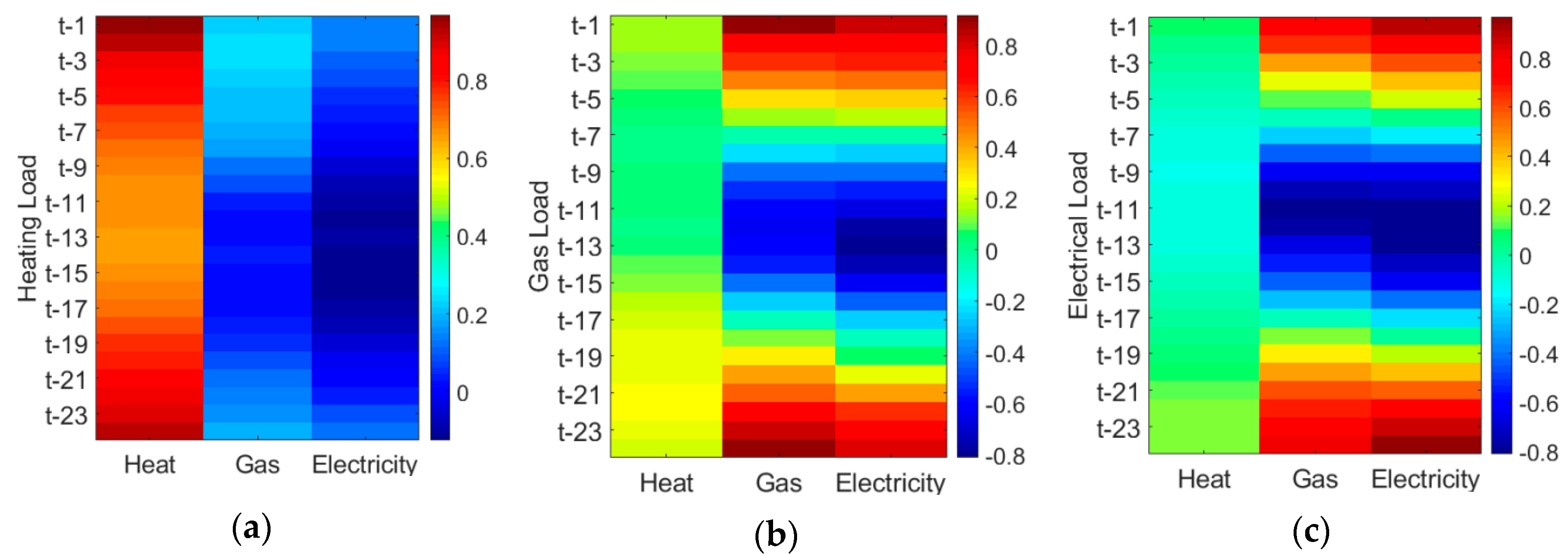

- The Pearson correlation coefficient is used to measure the temporal correlation between historical and current loads, which motivates the use of an LSTM network.

- (3)

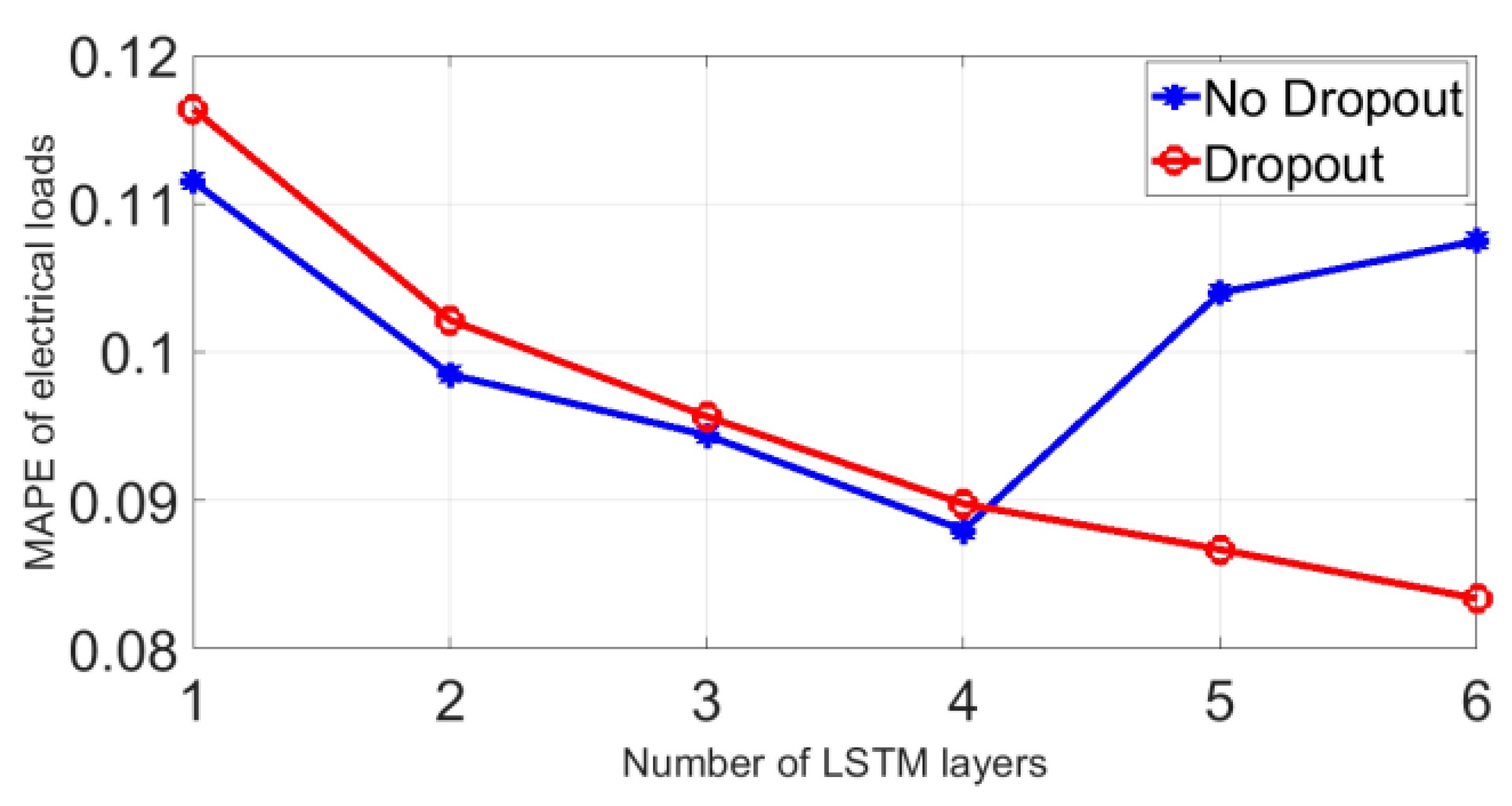

- Conv1D and MaxPooling1D layers are used to extract the inherent features that affect heating, gas, and electrical loads. To prevent over-fitting, dropout is added between LSTM layers. An LSTM network, which can take the influence of previous information into account, is adopted to forecast these loads.
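Contribution (2) uses the Pearson correlation coefficient between current and historical loads to justify an LSTM. A minimal NumPy sketch, assuming the load is stored as a 1-D array of hourly values; `lag_pearson` is a hypothetical helper and the sinusoidal series is synthetic, not the paper's data. A coefficient close to 1 at a given lag means the load at time t is strongly (linearly) related to the load that many steps earlier.

```python
import numpy as np

def lag_pearson(load, lag):
    """Pearson correlation between load[t] and load[t - lag] (hypothetical helper)."""
    load = np.asarray(load, dtype=float)
    if not 0 < lag < len(load) - 1:
        raise ValueError("lag must be between 1 and len(load) - 2")
    current = load[lag:]    # loads at time t
    history = load[:-lag]   # loads at time t - lag
    return float(np.corrcoef(current, history)[0, 1])

# Synthetic hourly series with a daily cycle (illustrative data only).
hours = np.arange(24 * 14)
load = 100 + 20 * np.sin(2 * np.pi * hours / 24)

for lag in (1, 12, 24):
    print(lag, round(lag_pearson(load, lag), 3))
```

For this purely periodic series the coefficient is 1 at a lag of 24 h and -1 at 12 h; real load data would give values between these extremes.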

3. Analysis of Temporal Correlation

4. Deep Learning Framework for Forecasting Short-Term Loads

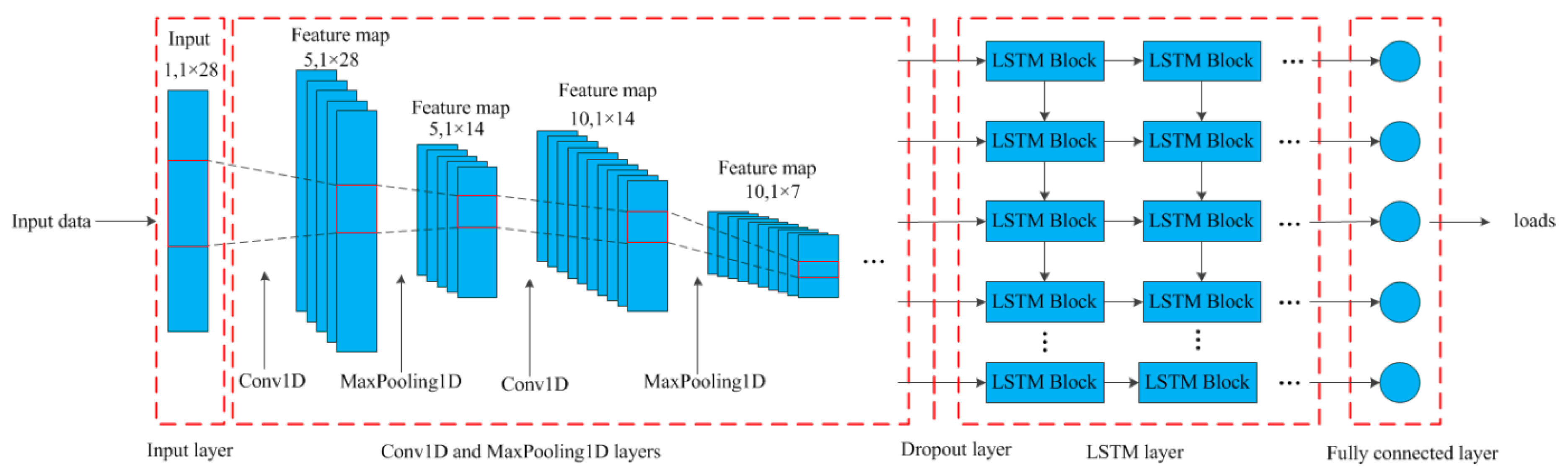

4.1. Conv1D Layer and MaxPooling1D Layer

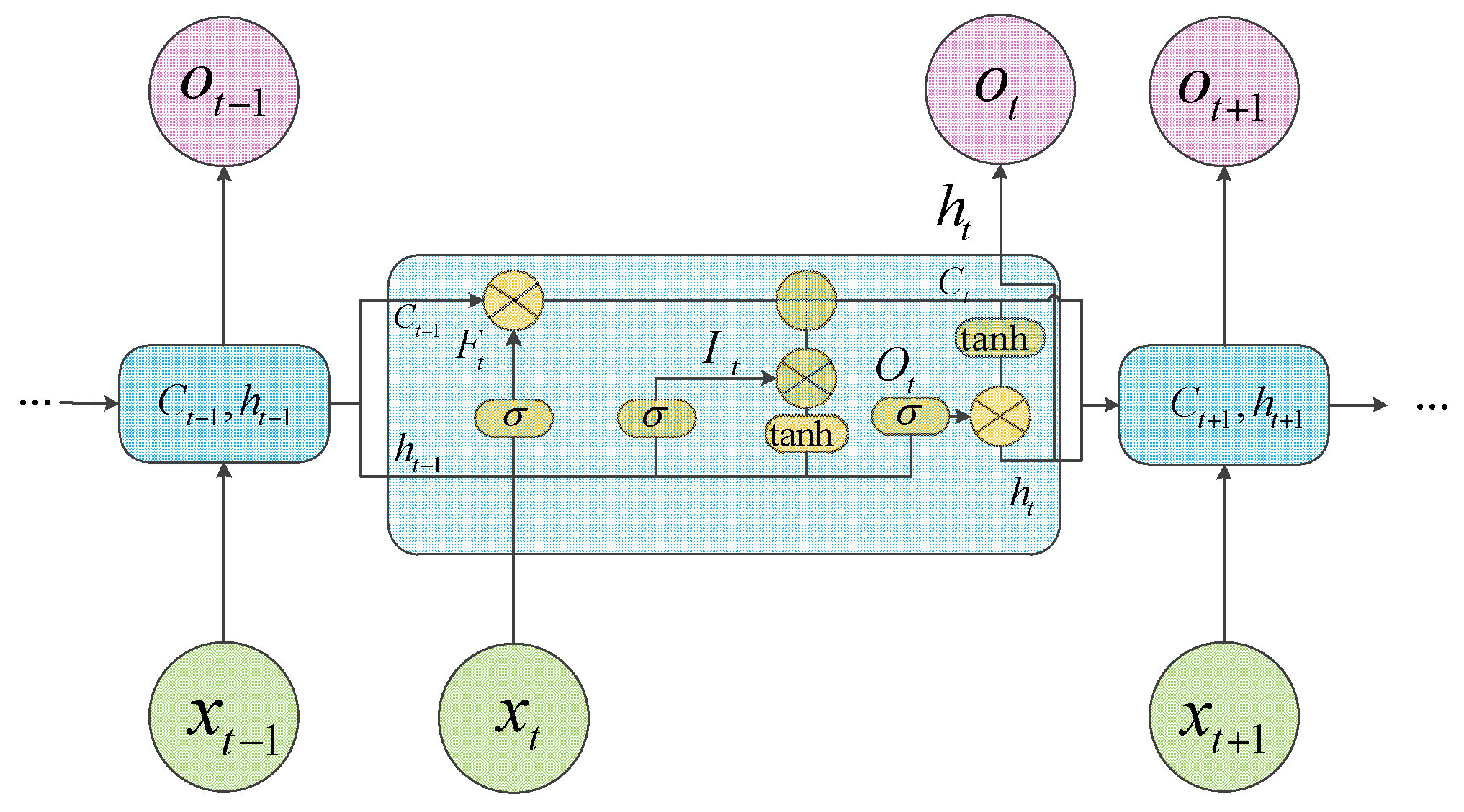
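Contribution (3) and step (3) of Section 4.4 describe Conv1D layers that extract features, with max pooling that reduces the data. The NumPy sketch below spells out both operations for one input window of shape (time_steps, features), assuming stride 1, 'same' zero padding, ReLU activation and a pool size of 2 as in the program listing. `conv1d_same` and `max_pool1d` are hypothetical names, and the kernels are random rather than trained.

```python
import numpy as np

def conv1d_same(x, kernels, bias):
    """1-D convolution (cross-correlation) with 'same' zero padding, stride 1, then ReLU.

    x: (time_steps, in_channels); kernels: (kernel_size, in_channels, filters).
    """
    k = kernels.shape[0]
    pad_left = (k - 1) // 2
    pad_right = k - 1 - pad_left
    xp = np.pad(x, ((pad_left, pad_right), (0, 0)))   # zero-pad the time axis
    out = np.empty((x.shape[0], kernels.shape[2]))
    for t in range(x.shape[0]):
        window = xp[t:t + k]                            # (kernel_size, in_channels)
        out[t] = np.tensordot(window, kernels, axes=([0, 1], [0, 1])) + bias
    return np.maximum(out, 0.0)                         # ReLU

def max_pool1d(x, pool_size=2):
    """Non-overlapping max pooling along time; a leftover partial window is dropped."""
    n = x.shape[0] // pool_size
    return x[:n * pool_size].reshape(n, pool_size, x.shape[1]).max(axis=1)

rng = np.random.default_rng(0)
x = rng.random((8, 5))                                  # 8 time steps, 5 input features
h = conv1d_same(x, rng.normal(size=(3, 5, 10)), np.zeros(10))
p = max_pool1d(h, pool_size=2)
print(x.shape, h.shape, p.shape)                        # (8, 5) (8, 10) (4, 10)
```

The convolution maps 5 input features to 10 filter outputs per time step, and pooling halves the time axis, which is the data reduction that lowers the computational cost.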

4.2. LSTM Layer
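The LSTM's ability to carry previous information forward comes from its gated cell state. A minimal NumPy forward pass of one LSTM layer is sketched below, using the widely used formulation with input, forget and output gates and a candidate cell state. The packed weight layout, the gate order [i, f, g, o] and the random weights are assumptions of this sketch. Returning the full sequence is what allows LSTM layers to be stacked, as described in step (4) of Section 4.4.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_layer(x_seq, W, U, b, return_sequences=False):
    """Forward pass of one LSTM layer.

    x_seq: (time_steps, input_dim); W: (input_dim, 4*units);
    U: (units, 4*units); b: (4*units,). Gate blocks are ordered [i, f, g, o].
    """
    units = U.shape[0]
    h = np.zeros(units)   # hidden state
    c = np.zeros(units)   # cell state (long-term memory)
    outputs = []
    for x_t in x_seq:
        z = x_t @ W + h @ U + b
        i = sigmoid(z[:units])                  # input gate
        f = sigmoid(z[units:2 * units])         # forget gate
        g = np.tanh(z[2 * units:3 * units])     # candidate cell state
        o = sigmoid(z[3 * units:])              # output gate
        c = f * c + i * g                       # keep part of the old memory, add new
        h = o * np.tanh(c)                      # hidden state passed to the next step
        outputs.append(h)
    return np.stack(outputs) if return_sequences else h

rng = np.random.default_rng(1)
time_steps, input_dim, units = 6, 10, 16
x_seq = rng.random((time_steps, input_dim))
W = rng.normal(scale=0.1, size=(input_dim, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

seq = lstm_layer(x_seq, W, U, b, return_sequences=True)  # can feed another LSTM layer
last = lstm_layer(x_seq, W, U, b)                         # final hidden state only
print(seq.shape, last.shape)                              # (6, 16) (16,)
```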

4.3. Dropout Layer
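Dropout limits over-fitting by randomly zeroing a fraction of activations during training. The sketch below implements inverted dropout, the variant used by Keras: surviving units are scaled by 1/(1 - rate) during training so the expected activation is unchanged, and the layer is the identity at inference. The function name is hypothetical; the rate of 0.25 mirrors the program listing.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate`, rescale the rest."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return x                                # identity at inference time
    keep = rng.random(x.shape) >= rate          # keep each unit with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(42)
activations = np.ones((1000, 16))
dropped = dropout(activations, rate=0.25, rng=rng)
print(float((dropped == 0).mean()))   # fraction of dropped units, close to 0.25
print(float(dropped.mean()))          # mean activation, close to 1.0
```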

4.4. Framework for Multiple Load Forecasting Based on Deep Learning

- (1)

- The input data include historical loads and environmental factors such as moisture content, humidifying capacity, dry bulb temperature, and total radiation. Min-max normalization is used to scale all input data to the range from 0 to 1.

- (2)

- The next step is to determine the network structure and its parameters, such as the number of LSTM layers, the number of units in each LSTM layer, the number of CNN layers, the kernel size, the pooling size, the number of epochs, and the batch size.

- (3)

- The input data are fed to the Conv1D layers, with a MaxPooling1D layer between the two Conv1D layers. The pooling layer takes the maximum value of the filter outputs within each pooling window, retaining useful features while reducing the computational cost through data reduction.

- (4)

- In the LSTM layers, each time step is fed to the corresponding LSTM block. Because an LSTM layer can output a full sequence, LSTM layers can be stacked and their number adjusted freely.
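Steps (1) and (4) imply that the inputs are min-max scaled and arranged as (samples, time_steps, features) sequences before entering the Conv1D and LSTM layers. A minimal NumPy sketch under these assumptions: the minimum and maximum are fitted on the training portion only (a common practice the text does not specify), each sample predicts the next-step load from the preceding `time_steps` rows, and `minmax_fit`, `minmax_transform`, `minmax_inverse` and `make_windows` are hypothetical helper names.

```python
import numpy as np

def minmax_fit(train):
    """Column-wise minimum and maximum of the training data."""
    return train.min(axis=0), train.max(axis=0)

def minmax_transform(x, col_min, col_max):
    """Scale each column to [0, 1] with the fitted bounds; constant columns map to 0."""
    span = np.where(col_max > col_min, col_max - col_min, 1.0)
    return (x - col_min) / span

def minmax_inverse(x_scaled, col_min, col_max):
    """Map scaled values (e.g. model predictions) back to physical units."""
    return x_scaled * (col_max - col_min) + col_min

def make_windows(features, target, time_steps):
    """Build (samples, time_steps, n_features) inputs and next-step targets."""
    if len(features) <= time_steps:
        raise ValueError("need more rows than time_steps")
    X = np.stack([features[t - time_steps:t] for t in range(time_steps, len(features))])
    y = target[time_steps:]
    return X, y

rng = np.random.default_rng(0)
data = rng.random((100, 6)) * 50      # synthetic: 4 environmental factors + 2 loads
train = data[:80]
col_min, col_max = minmax_fit(train)
scaled = minmax_transform(data, col_min, col_max)
X, y = make_windows(scaled, scaled[:, 5], time_steps=24)
print(X.shape, y.shape)               # (76, 24, 6) (76,)
```

Because the bounds come from the training rows, scaled validation or test values may fall slightly outside [0, 1]; `minmax_inverse` converts sigmoid outputs back to load units.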

4.5. Indicators for Evaluating Results
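The results in Section 5 are reported as MAPE expressed as a fraction (0.056 corresponds to the 5.6% quoted in Section 5.5). The standard definition is MAPE = (1/n) Σ |y_i - ŷ_i| / |y_i|. A minimal sketch follows; the guard against zero actual values is an addition of this sketch, since MAPE is undefined there.

```python
import numpy as np

def mape(actual, forecast):
    """Mean absolute percentage error as a fraction (0.056 means 5.6%)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    if np.any(actual == 0):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return float(np.mean(np.abs((actual - forecast) / actual)))

actual = [100.0, 200.0, 400.0]
forecast = [110.0, 190.0, 400.0]
print(mape(actual, forecast))   # (0.10 + 0.05 + 0.00) / 3, i.e. about 0.05
```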

5. Case Study

5.1. Experimental Environment and Parameters

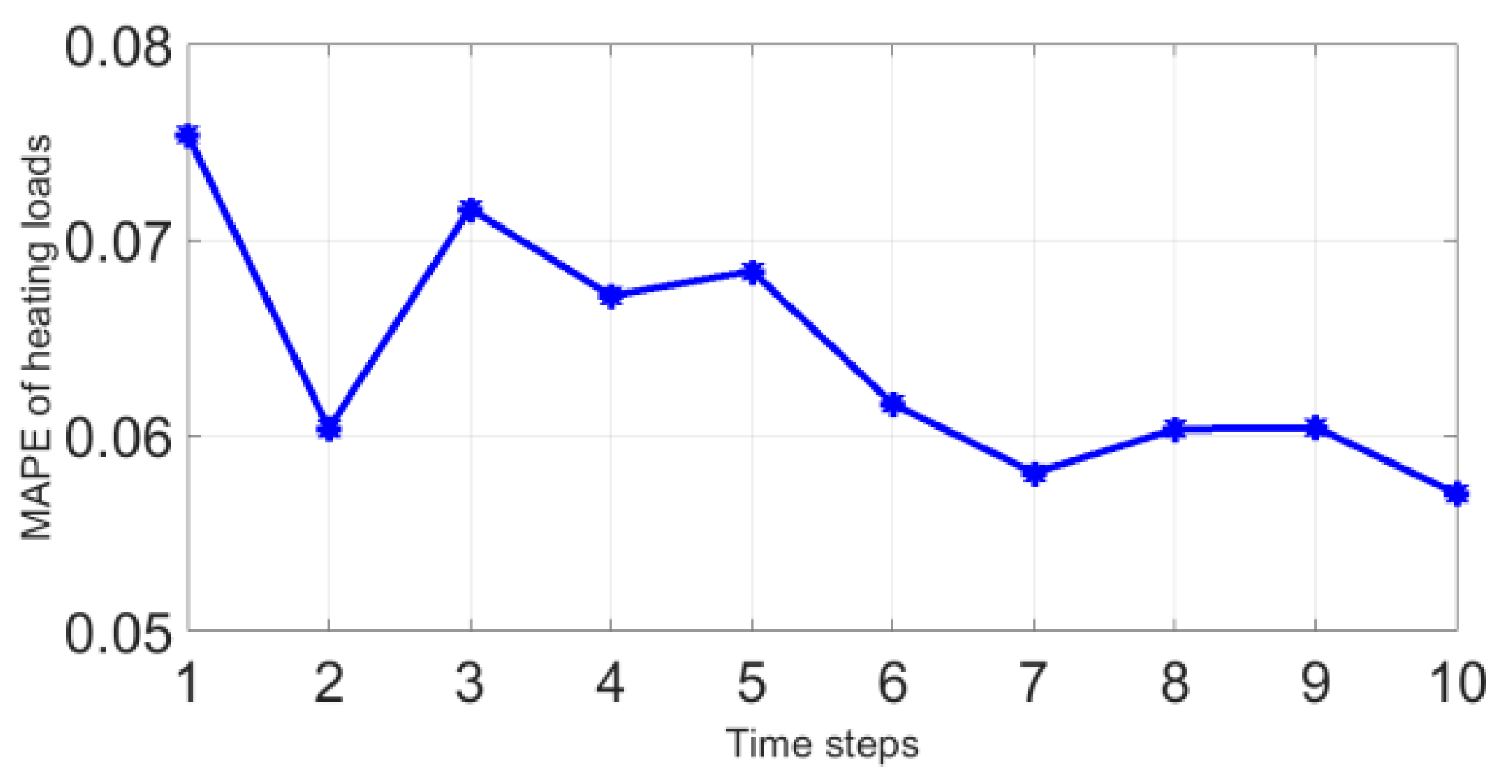

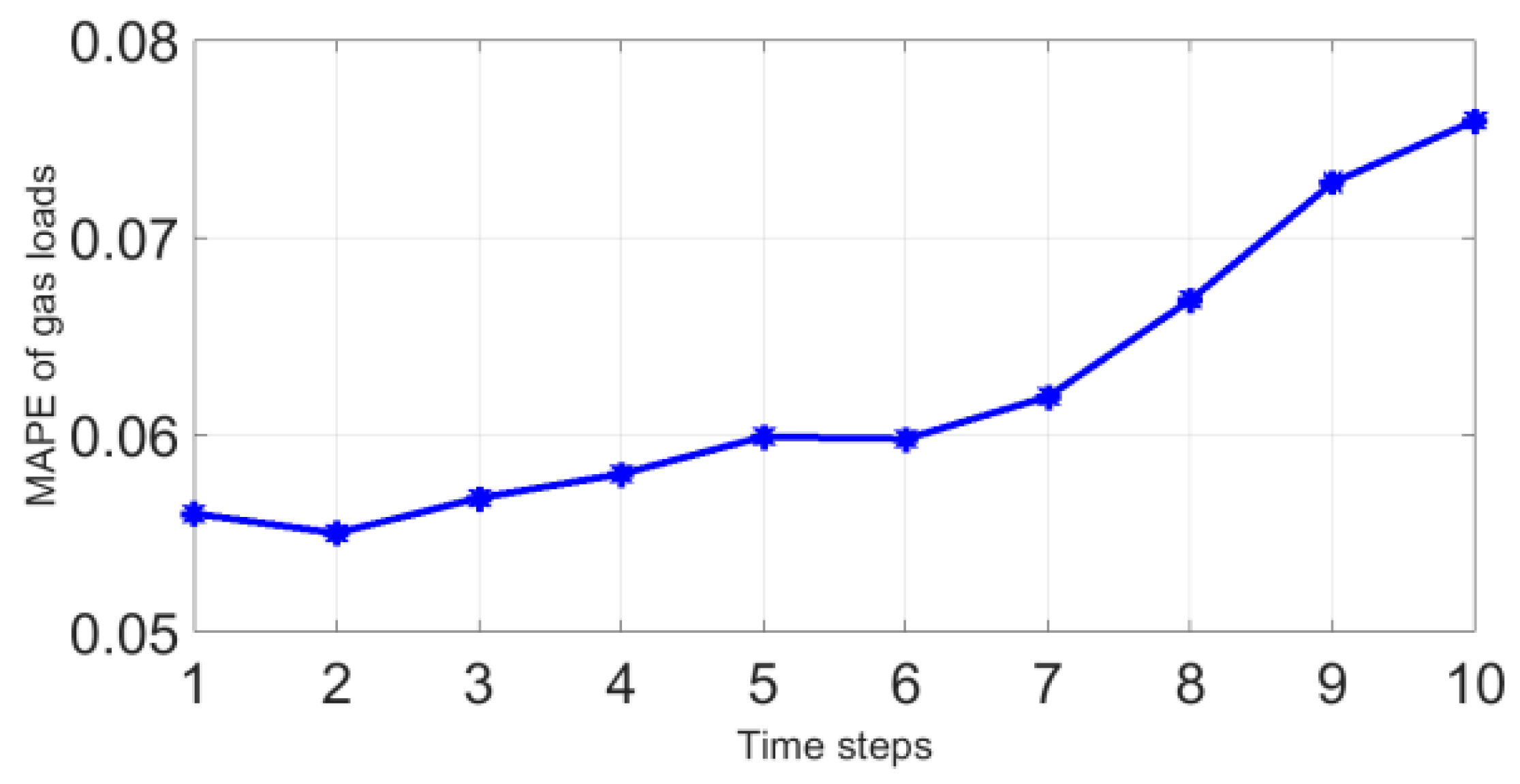

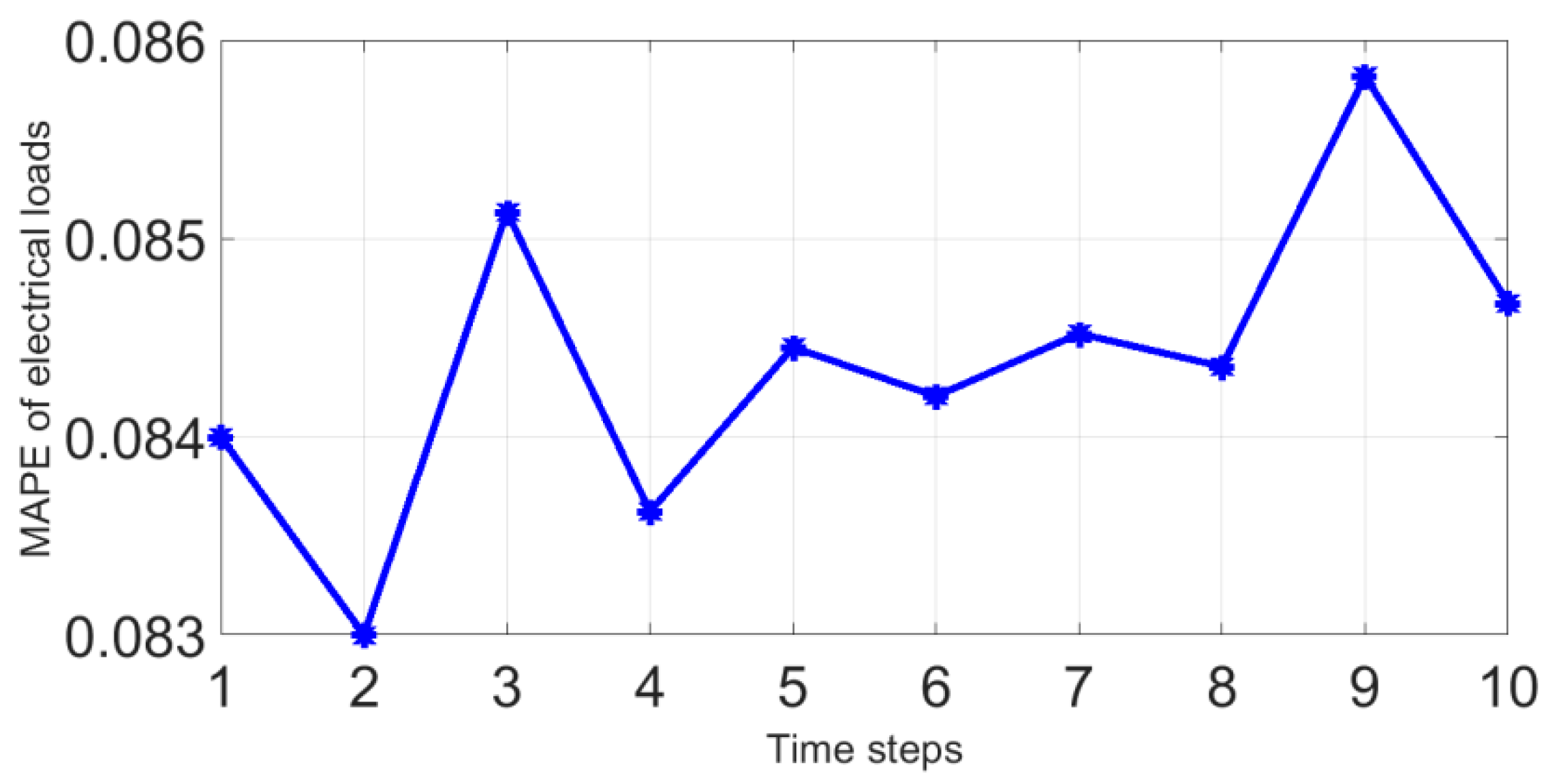

5.2. Performance in Different Time Steps

5.3. The Influence of the Coupling of Heating, Gas and Electrical Loads on the Results

- (1)

- In terms of heating loads, the forecasting accuracy of Case 2 is clearly higher than that of Case 1, which shows that the heating loads have a strong temporal correlation and that taking this correlation into account improves prediction accuracy. Comparing the MAPE of Case 2 and Case 5 shows that adding the gas load as an input reduces the accuracy of heating load forecasting, and comparing Case 2 and Case 6 leads to the same conclusion for the electrical load. This is because the coupling between the heating load and the other loads is weak, which is consistent with the conclusions of the previous analysis. Adding the gas and electrical loads introduces interfering features into the input, which degrades prediction accuracy.

- (2)

- As far as the gas load is concerned, Case 3 shows that the gas load also has a strong temporal correlation. Comparing Case 3 and Case 5 shows that the correlation between the gas load and the heating load is very weak: adding the heating load as an input decreases the forecasting accuracy of the gas load. In contrast, the coupling between the gas load and the electrical load is very strong, and adding the electrical load as an input (Case 7) improves accuracy.

- (3)

- The result of Case 4 in Table 4 shows that the electrical load has a strong temporal correlation. Adding the heating load as an input (Case 6) decreases the forecasting accuracy of the electrical load, whereas adding the gas load (Case 7) improves it. Overall, the best input for forecasting the electrical load consists of the environmental factors and the gas and electrical loads.
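The case comparisons above can be checked mechanically against the three MAPE tables. The sketch copies the reported values and, for each load, picks the input set with the lowest MAPE. It also computes the relative error reduction gained by adding the load's own history to the environmental features (Case 1 versus Case 2, 3 or 4); this relative-reduction figure is an illustrative metric, not one reported in the paper.

```python
# MAPE values copied from the case-comparison tables (Section 5.3).
mape_by_case = {
    "heating": {"Case 1": 0.145, "Case 2": 0.057, "Case 5": 0.065, "Case 6": 0.062, "Case 8": 0.073},
    "gas": {"Case 1": 0.158, "Case 3": 0.060, "Case 5": 0.772, "Case 7": 0.055, "Case 8": 0.064},
    "electrical": {"Case 1": 0.143, "Case 4": 0.086, "Case 6": 0.092, "Case 7": 0.083, "Case 8": 0.085},
}
# Case that adds each load's own history to the environmental features.
own_history = {"heating": "Case 2", "gas": "Case 3", "electrical": "Case 4"}

for load, cases in mape_by_case.items():
    best = min(cases, key=cases.get)
    gain = 1 - cases[own_history[load]] / cases["Case 1"]
    print(f"{load}: lowest MAPE with {best} ({cases[best]:.3f}); "
          f"own history cuts MAPE by {gain:.1%} versus Case 1")
```

It reports Case 2 for the heating load and Case 7 for both the gas and electrical loads, matching the discussion above.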

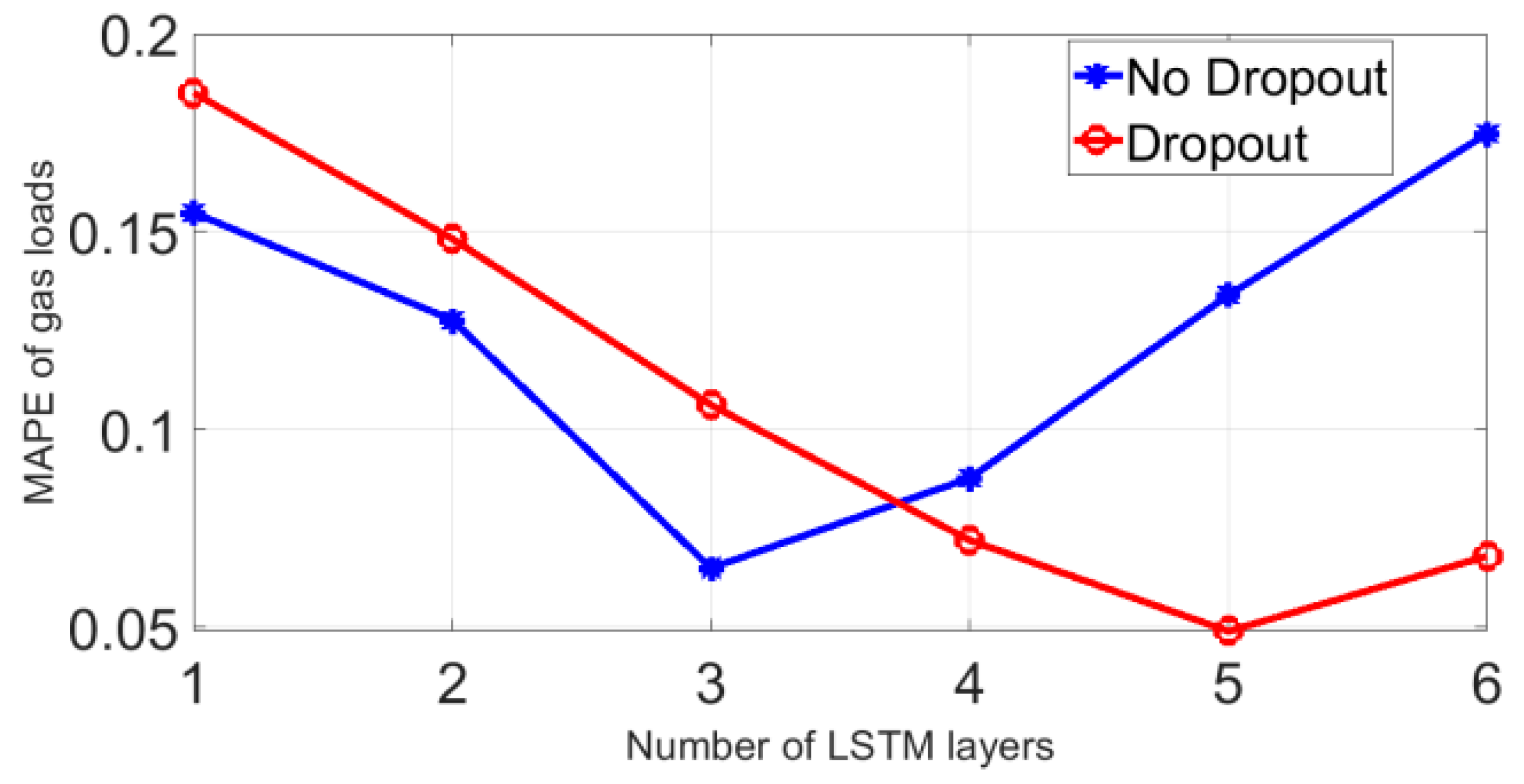

5.4. The Performance of the Dropout Layers

5.5. Benchmarking of Short-Term Load Forecasting Methods

- (1)

- The MAPE of the electrical load is greater than those of the heating and gas loads, indicating that the electrical load is more volatile than the other loads, whereas the heating and gas loads are more regular and easier to predict. On this data set, the MAPE of the proposed CNN-LSTM approach is 5.6%, 5.5%, and 8.2% for the heating, gas, and electrical loads, respectively.

- (2)

- ARIMA has the worst performance because it predicts the load from the trend of the historical series without considering environmental factors; when the environment changes drastically, its accuracy at inflection points is very poor. The BP network and SVM are less accurate because the limitations of their models make it impossible to pre-learn complex data through unsupervised training, so they perform relatively poorly compared with deep learning networks such as CNN and LSTM. CNN effectively extracts features from the input data, and its forecasting accuracy is higher than that of the BP network and SVM. However, CNN cannot capture the temporal correlation of heating, gas, and electrical loads, which limits its accuracy. A hybrid CNN-LSTM model both extracts features from the input data effectively and accounts for the temporal correlation of the loads. Among all the methods compared, CNN-LSTM achieves the highest forecasting accuracy.

- (3)

- Figures 12, 13, and 14 compare the actual loads with the loads forecast by the different methods on a randomly selected day, 11 December 2015. As the figures show, the proposed approach performs well at peaks and troughs. Taking the heating load as an example, it is highly volatile and uncertain during peak and trough periods, which prevents traditional algorithms from predicting it accurately there. In contrast, the proposed approach accurately captures the morning peak at 7:00 a.m. and the afternoon trough at 4:00 p.m., further demonstrating its advantage.
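The ranking in this section can be quantified as the relative MAPE reduction that CNN-LSTM achieves over each baseline. The sketch copies the values from the algorithm comparison table; `relative_reduction` is an illustrative metric chosen for this sketch, not one used in the paper.

```python
# MAPE values (heating, gas, electrical) copied from the algorithm comparison table.
benchmark = {
    "BP": (0.067, 0.064, 0.099),
    "SVM": (0.065, 0.062, 0.096),
    "ARIMA": (0.071, 0.067, 0.131),
    "CNN": (0.062, 0.060, 0.092),
    "LSTM": (0.060, 0.057, 0.088),
}
cnn_lstm = (0.056, 0.055, 0.082)
loads = ("heating", "gas", "electrical")

def relative_reduction(baseline, proposed):
    """Fraction by which `proposed` lowers the baseline MAPE."""
    return (baseline - proposed) / baseline

for name, row in benchmark.items():
    cells = ", ".join(
        f"{load} {relative_reduction(b, p):.1%}" for load, b, p in zip(loads, row, cnn_lstm)
    )
    print(f"CNN-LSTM vs {name}: {cells}")
```

Every reduction is positive, and the largest margins are against ARIMA, consistent with ARIMA having the worst performance.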

6. Conclusions

- (1)

- For heating, gas, and electrical loads, there is a strong temporal correlation between current and historical loads. The case study shows that taking historical load series into account reduces the prediction error for heating, gas, and electrical loads.

- (2)

- The coupling between heating loads and the other loads is weak: taking gas or electrical loads as inputs lowers the forecasting accuracy for heating loads. The coupling between gas and electrical loads is very strong: adding electrical loads as input improves the forecasting accuracy for gas loads and, similarly, adding gas loads improves the forecasting accuracy for electrical loads.

- (3)

- The dropout layer mitigates over-fitting to a certain extent and improves the accuracy of predicting heating, gas, and electrical loads. However, it cannot completely prevent over-fitting when the network has too many layers.

- (4)

- Compared with other algorithms (BP network, SVM, ARIMA, CNN, and LSTM), the proposed approach has higher forecasting accuracy and can accurately predict the load during peak and trough periods.

- (1)

- Future work could look for a technique that completely eliminates over-fitting.

- (2)

- Other deep learning frameworks, such as generative adversarial networks (GAN) [39], restricted Boltzmann machines (RBM) [40,41,42], hidden Markov models [43], dilated convolutional neural networks [44,45], and graphical models [46], could also be used to forecast heating, gas, and electrical loads. These frameworks are widely used in image recognition, signal processing, and image generation, and how to apply them to load forecasting requires further research. Generally speaking, the role of a CNN is to extract features from the input data; different tasks can then be accomplished by feeding the extracted features to classifiers, predictors, or generators. For example, a GAN generator built from convolutional layers can model power load profiles, and a GAN discriminator built from convolutional layers can classify power loads.

- (3)

Author Contributions

Funding

Conflicts of Interest

References

- Wei, M.; Yuan, W.; Fu, L.; Zhang, S.; Zhao, X. Summer performance analysis of coal-based cchp with new configurations comparing with separate system. Energy 2018, 143, 104–113.

- Wu, J.; Wang, J.; Wu, J.; Ma, C. Exergy and exergoeconomic analysis of a combined cooling, heating, and power system based on solar thermal biomass gasification. Energies 2019, 12, 2418.

- Wegener, M.; Isalgué, A.; Malmquist, A.; Martin, A. 3e-analysis of a bio-solar cchp system for the andaman islands, india—A case study. Energies 2019, 12, 1113.

- Zheng, X.; Wu, G.; Qiu, Y.; Zhan, X.; Shah, N.; Li, N.; Zhao, Y. A minlp multi-objective optimization model for operational planning of a case study cchp system in urban china. Appl. Energy 2018, 210, 1126–1140.

- Wu, B.; Li, K.; Ge, F.; Huang, Z.; Yang, M.; Siniscalchi, S.M.; Lee, C. An end-to-end deep learning approach to simultaneous speech dereverberation and acoustic modeling for robust speech recognition. IEEE J. Sel. Top. Signal Process. 2017, 11, 1289–1300.

- Heo, Y.J.; Kim, S.J.; Kim, D.; Lee, K.; Chung, W.K. Super-high-purity seed sorter using low-latency image-recognition based on deep learning. IEEE Robot. Autom. Lett. 2018, 3, 3035–3042.

- Yan, H.; Wan, J.; Zhang, C.; Tang, S.; Hua, Q.; Wang, Z. Industrial big data analytics for prediction of remaining useful life based on deep learning. IEEE Access 2018, 6, 17190–17197.

- Rachmadi, M.F.; Valdés-Hernández, M.D.C.; Agan, M.L.F.; Di Perri, C.; Komura, T. Segmentation of white matter hyperintensities using convolutional neural networks with global spatial information in routine clinical brain MRI with none or mild vascular pathology. Comput. Med. Imaging Graph. 2018, 66, 28–43.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Neural Inf. Process. Syst. 2012, 141, 1097–1105.

- Holden, D.; Komura, T.; Saito, J. Phase-functioned neural networks for character control. ACM Trans. Graph. 2017, 36, 42.

- Mousas, C.; Newbury, P.; Anagnostopoulos, C. Evaluating the covariance matrix constraints for data-driven statistical human motion reconstruction. In Proceedings of the Spring Conference on Computer Graphics, New York, NY, USA, 28–30 May 2014; pp. 99–106.

- Mousas, C.; Newbury, P.; Anagnostopoulos, C.-N. Data-Driven Motion Reconstruction Using Local Regression Models. In Proceedings of the International Conference on Artificial Intelligence Applications and Innovations, Rhodos, Greece, 19–21 September 2014; pp. 364–374.

- Suk, H.; Wee, C.Y.; Lee, S.W.; Shen, D. State-space model with deep learning for functional dynamics estimation in resting-state fMRI. NeuroImage 2016, 129, 292–307.

- Kong, W.; Dong, Z.Y.; Jia, Y.; Hill, D.J.; Xu, Y.; Zhang, Y. Short-term residential load forecasting based on lstm recurrent neural network. IEEE Trans. Smart Grid 2019, 10, 841–851.

- Khodayar, M.; Wang, J. Spatio-temporal graph deep neural network for short-term wind speed forecasting. IEEE Trans. Sustain. Energy 2019, 10, 670–681.

- Kouzelis, K.; Bak-Jensen, B.; Mahat, P.; Pillai, J.R. A simplified short term load forecasting method based on sequential patterns. In Proceedings of the IEEE PES Innovative Smart Grid Technologies, Istanbul, Turkey, 12–15 October 2014; pp. 1–5.

- Wang, Z.-X.; Li, Q.; Pei, L.-L. A seasonal gm(1,1) model for forecasting the electricity consumption of the primary economic sectors. Energy 2018, 154, 522–534.

- Zhao, J.; Liu, X. A hybrid method of dynamic cooling and heating load forecasting for office buildings based on artificial intelligence and regression analysis. Energy Build. 2018, 174, 293–308.

- Barman, M.; Dev Choudhury, N.B.; Sutradhar, S. A regional hybrid goa-svm model based on similar day approach for short-term load forecasting in assam, india. Energy 2018, 145, 710–720.

- Chia, Y.Y.; Lee, L.H.; Shafiabady, N.; Isa, D. A load predictive energy management system for supercapacitor-battery hybrid energy storage system in solar application using the support vector machine. Appl. Energy 2015, 137, 588–602.

- Li, K.; Xie, X.; Xue, W.; Dai, X.; Chen, X.; Yang, X. A hybrid teaching-learning artificial neural network for building electrical energy consumption prediction. Energy Build. 2018, 174, 323–334.

- Singh, P.; Dwivedi, P. Integration of new evolutionary approach with artificial neural network for solving short term load forecast problem. Appl. Energy 2018, 217, 537–549.

- Dedinec, A.; Filiposka, S.; Dedinec, A.; Kocarev, L. Deep belief network based electricity load forecasting: An analysis of macedonian case. Energy 2016, 115, 1688–1700.

- Kuan, L.; Zhenfu, B.; Xin, W.; Xiangrong, M.; Honghai, L.; Wenxue, S.; Zijian, Z.; Zhimin, L. Short-term chp heat load forecast method based on concatenated lstms. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 99–103.

- Zhang, F.; Xi, J.; Langari, R. Real-time energy management strategy based on velocity forecasts using v2v and v2i communications. IEEE Trans. Intell. Transp. Syst. 2017, 18, 416–430.

- Wang, L.; Scott, K.A.; Xu, L.; Clausi, D.A. Sea ice concentration estimation during melt from dual-pol sar scenes using deep convolutional neural networks: A case study. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4524–4533.

- Kim, T.-Y.; Cho, S.-B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

- Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323.

- Swapna, G.; Kp, S.; Vinayakumar, R. Automated detection of diabetes using CNN and CNN-LSTM network and heart rate signals. Procedia Comput. Sci. 2018, 132, 1253–1262.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Network. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016; pp. 1–16.

- Panchal, G.; Ganatra, A.; Shah, P.; Panchal, D. Determination of over-learning and over-fitting problem in back propagation neural network. Int. J. Soft Comput. 2011, 2, 40–51.

- Xu, H.; Deng, Y. Dependent evidence combination based on shearman coefficient and pearson coefficient. IEEE Access 2018, 6, 11634–11640.

- Abdeljaber, O.; Avci, O.; Kiranyaz, S.; Gabbouj, M.; Inman, D.J. Real-time vibration-based structural damage detection using one-dimensional convolutional neural networks. J. Sound Vib. 2017, 388, 154–170.

- Qing, X.; Niu, Y. Hourly day-ahead solar irradiance prediction using weather forecasts by lstm. Energy 2018, 148, 461–468.

- Graves, A.; Schmidhuber, J. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Netw. 2005, 18, 602–610.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Sawaguchi, S.; Nishi, H. Slightly-slacked dropout for improving neural network learning on FPGA. ICT Express 2018, 4, 75–80.

- Zhang, Y.-D.; Pan, C.; Sun, J.; Tang, C. Multiple sclerosis identification by convolutional neural network with dropout and parametric ReLU. J. Comput. Sci. 2018, 28, 1–10.

- Rekabdar, B.; Mousas, C.; Gupta, B. Generative Adversarial Network with Policy Gradient for Text Summarization. In Proceedings of the 13th IEEE International Conference on Semantic Computing, Newport Beach, CA, USA, 30 January–1 February 2019; pp. 204–207.

- Ngiam, J.; Khosla, A.; Kim, M.; Nam, J.; Lee, H.; Ng, A.Y. Multimodal deep learning. In Proceedings of the 28th International Conference on International Conference on Machine Learning, Bellevue, WA, USA, 28 June–2 July 2011; pp. 689–696.

- Mousas, C.; Anagnostopoulos, C. Learning Motion Features for Example-Based Finger Motion Estimation for Virtual Characters. 3D Res. 2017, 8, 25.

- Nam, J.; Herrera, J.; Slaney, M.; Smith, J.O. Learning Sparse Feature Representations for Music Annotation and Retrieval. In Proceedings of the 13th International Society for Music Information Retrieval Conference, Porto, Portugal, 8–12 October 2012; pp. 565–570.

- Abdelhamid, O.; Mohamed, A.; Jiang, H.; Penn, G. Applying Convolutional Neural Networks concepts to hybrid NN-HMM model for speech recognition. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing, Kyoto, Japan, 25–30 March 2012; pp. 4277–4280.

- Rekabdar, B.; Mousas, C. Dilated Convolutional Neural Network for Predicting Driver’s Activity. In Proceedings of the 21st International Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018; pp. 3245–3250.

- Li, R.; Si, D.; Zeng, T.; Ji, S.; He, J. Deep convolutional neural networks for detecting secondary structures in protein density maps from cryo-electron microscopy. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine, Shenzhen, China, 15–18 December 2016; pp. 41–46.

- Saito, S.; Wei, L.; Hu, L.; Nagano, K.; Li, H. Photorealistic Facial Texture Inference Using Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2326–2335.

- Amato, F.; Castiglione, A.; Moscato, V.; Picariello, A.; Sperli, G. Multimedia summarization using social media content. Multimed. Tools Appl. 2018, 77, 17803–17827.

- Hu, D.; Li, J.; Liu, Y.; Li, Y. Flow Adversarial Networks: Flowrate Prediction for Gas-Liquid Multiphase Flows across Different Domains. IEEE Trans. Neural Netw. Learn. Syst. 2019, 1–13.

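Program: Part of the code for building the CNN-LSTM network

```python
# Part of the code for building the CNN-LSTM network (Keras).
# Input_num, trainX, trainY, valid3DX, validY and testX are defined earlier.
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv1D, MaxPooling1D, Dropout, LSTM, Dense

# 1. Define the CNN-LSTM network
model = Sequential()
model.add(Conv1D(filters=10, kernel_size=3, padding='same', strides=1,
                 activation='relu', input_shape=(1, Input_num)))
# padding='same' keeps the time axis at length 1; with the default 'valid'
# padding, a pool of size 2 cannot be applied to a single time step.
model.add(MaxPooling1D(pool_size=2, padding='same'))
model.add(Dropout(rate=0.25))
model.add(Conv1D(filters=20, kernel_size=3, padding='same', strides=1,
                 activation='relu'))
model.add(MaxPooling1D(pool_size=2, padding='same'))
model.add(Dropout(rate=0.25))
# Stacked LSTM layers: every layer except the last returns the full sequence
model.add(LSTM(units=24, return_sequences=True))
model.add(LSTM(units=16, return_sequences=True))
model.add(LSTM(units=32, return_sequences=True))
model.add(LSTM(units=16, return_sequences=True))
model.add(LSTM(units=16, return_sequences=True))
model.add(LSTM(units=16))
# Sigmoid output lies in [0, 1], matching min-max scaled targets
model.add(Dense(units=1, kernel_initializer='normal', activation='sigmoid'))

# 2. Compile the CNN-LSTM network (mean absolute error loss, Adam optimizer)
model.compile(loss='mae', optimizer='adam')

# 3. Fit the CNN-LSTM network
history = model.fit(trainX, trainY, epochs=100, batch_size=50,
                    validation_data=(valid3DX, validY), verbose=2, shuffle=False)

# 4. Predict the loads
Predicted_Load = model.predict(testX)
```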

| Scenario | Input of Network |
|---|---|
| Case 1 | Environmental features |
| Case 2 | Environmental features, heating loads |
| Case 3 | Environmental features, gas loads |
| Case 4 | Environmental features, electrical loads |
| Case 5 | Environmental features, heating and gas loads |
| Case 6 | Environmental features, heating and electrical loads |
| Case 7 | Environmental features, gas and electrical loads |
| Case 8 | Environmental features, heating, gas and electrical loads |

| Heating load | Case 1 | Case 2 | Case 5 | Case 6 | Case 8 |
|---|---|---|---|---|---|
| MAPE | 0.145 | 0.057 | 0.065 | 0.062 | 0.073 |

| Gas load | Case 1 | Case 3 | Case 5 | Case 7 | Case 8 |
|---|---|---|---|---|---|
| MAPE | 0.158 | 0.060 | 0.772 | 0.055 | 0.064 |

| Electrical load | Case 1 | Case 4 | Case 6 | Case 7 | Case 8 |
|---|---|---|---|---|---|
| MAPE | 0.143 | 0.086 | 0.092 | 0.083 | 0.085 |

| Algorithm | Heating Load (MAPE) | Gas Load (MAPE) | Electrical Load (MAPE) |
|---|---|---|---|
| BP | 0.067 | 0.064 | 0.099 |
| SVM | 0.065 | 0.062 | 0.096 |
| ARIMA | 0.071 | 0.067 | 0.131 |
| CNN | 0.062 | 0.060 | 0.092 |
| LSTM | 0.060 | 0.057 | 0.088 |
| CNN-LSTM | 0.056 | 0.055 | 0.082 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, R.; Guo, W.; Gong, X. Short-Term Load Forecasting for CCHP Systems Considering the Correlation between Heating, Gas and Electrical Loads Based on Deep Learning. Energies 2019, 12, 3308. https://doi.org/10.3390/en12173308
