Data-Driven Model-Free Adaptive Control Based on Error Minimized Regularized Online Sequential Extreme Learning Machine

Abstract

1. Introduction

2. REOS-ELM

3. Dynamic Linearization Technique and the New Updating Formula for REOS-ELM

3.1. Dynamic Linearization Technique

3.2. The Updating Formula Based on Dead-Zone Characteristics

4. The MFAC Method Based on EMREOS-ELM

4.1. Initialization Phase

- and are two random values, , and set .

- Set , , and . denotes the maximum number of hidden nodes, and and denote the minimum number of hidden nodes.

- Measure the output and of system (10).

- Assign random parameters of hidden nodes where , .

- Using the first sample data , initialize and , andandDefine
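The initialization steps above can be illustrated with a minimal sketch. It is not the authors' exact formulation, because the original equations are not available in this text. It assumes a sigmoid activation, uniform random hidden-node parameters in [-1, 1], and the usual regularized least-squares start used in regularized online sequential ELM. The names `init_hidden_nodes`, `hidden_output`, `init_regularized`, and `lam` are hypothetical.

```python
import numpy as np

def init_hidden_nodes(n_inputs, n_hidden, rng):
    """Draw random input weights and biases (assumed uniform in [-1, 1])."""
    weights = rng.uniform(-1.0, 1.0, size=(n_inputs, n_hidden))
    biases = rng.uniform(-1.0, 1.0, size=n_hidden)
    return weights, biases

def hidden_output(x, weights, biases):
    """Sigmoid hidden-layer output (assumed activation) for one sample x."""
    return 1.0 / (1.0 + np.exp(-(x @ weights + biases)))

def init_regularized(h0, t0, lam):
    """Regularized least-squares start from the first sample.

    h0: hidden output, shape (n_hidden,); t0: scalar target;
    lam: regularization factor (assumed positive).
    Returns P0 = (H0^T H0 + lam*I)^-1 and beta0 = P0 H0^T t0.
    """
    H0 = h0.reshape(1, -1)
    P0 = np.linalg.inv(H0.T @ H0 + lam * np.eye(H0.shape[1]))
    beta0 = (P0 @ H0.T * t0).ravel()
    return P0, beta0

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W, b = init_hidden_nodes(n_inputs=3, n_hidden=5, rng=rng)
    h0 = hidden_output(np.array([0.1, -0.2, 0.3]), W, b)
    P0, beta0 = init_regularized(h0, t0=0.7, lam=1e-2)
    print(P0.shape, beta0.shape)
```

The regularization term keeps the matrix invertible even though a single sample gives a rank-one `H0.T @ H0`, which is why such a start can work from the first sample alone.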

4.2. Parameter Learning

- Using the kth sample data , calculate , andwhen , and , thenwhen , or or , a new re-learning process begins.

- Measure the output of system (10).

- Set , , and calculate where and are two values that are important for adjusting the network structure. Appropriate values of and can improve the tracking performance of the system, whereas values that are too small or too large make EMREOS-ELM ineffective. The values and can be chosen based on the performance requirements of the system.

- When the tracking error does not meet the system requirements, the network structure is adjusted. This adjustment of the network structure is the core of the proposed EMREOS-ELM. If then execute (II); otherwise execute (I).

- (I)

Using the kth training data and , update the output weightsandwhereand - , , , and go to Section 4.2.

- (II)

- Go to Section 4.3.
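As a rough illustration of the output-weight update in step (I), the sketch below uses the standard recursive least-squares form of online sequential ELM, gated by a dead zone. The paper's own updating formula is not recoverable from this text. Freezing the estimate when the prediction error is small is one common reading of a dead-zone characteristic and is an assumption here, as are the names `dead_zone_update` and `dead_zone`.

```python
import numpy as np

def dead_zone_update(P, beta, h, t, dead_zone):
    """One recursive least-squares step, skipped inside the dead zone.

    P: (L, L) symmetric matrix, beta: (L,) output weights,
    h: (L,) hidden-layer output for the current sample, t: scalar target.
    """
    error = t - h @ beta
    if abs(error) <= dead_zone:
        # Assumed dead-zone behavior: keep the current estimate unchanged.
        return P, beta, error
    Ph = P @ h
    # P_new = P - P h h^T P / (1 + h^T P h); the outer product relies on P being symmetric.
    P_new = P - np.outer(Ph, Ph) / (1.0 + h @ Ph)
    beta_new = beta + P_new @ h * error
    return P_new, beta_new, error

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    L, lam = 4, 0.1
    P, beta = np.eye(L) / lam, np.zeros(L)
    for _ in range(30):
        h, t = rng.normal(size=L), rng.normal()
        P, beta, err = dead_zone_update(P, beta, h, t, dead_zone=0.05)
    print(beta)
```

Without the dead zone, and starting from `P = I / lam` and `beta = 0`, this recursion reproduces the batch regularized least-squares solution. The dead zone trades that exactness for robustness by ignoring samples whose error is already small.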

4.3. The Adjustment of Network Structure

- Set , and assign random parameters of the th hidden node .

- Adjusting the network structure is equivalent to adding a new column to , and Then

- The pseudo inverse of the new iswhereandand

- Calculate , and

- Initialize and where denotes the identity matrix.

- Set as a random number, and .

- Set , , and go to Section 4.2.
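The step of adding a column to the hidden-layer output matrix and updating its pseudo-inverse can be sketched with the classical block (Greville-type) formula used in error-minimized ELM. This is an assumption about the general technique, not a reconstruction of the paper's missing equations. The function name `pinv_append_column` is hypothetical, and the sketch assumes the new column is not already in the column space of the existing matrix.

```python
import numpy as np

def pinv_append_column(H, H_pinv, h_new):
    """Pseudo-inverse of [H, h_new] computed from the pseudo-inverse of H.

    H: (N, L), H_pinv: (L, N), h_new: (N,).
    Assumes the residual (I - H H^+) h_new is non-zero.
    """
    residual = h_new - H @ (H_pinv @ h_new)    # (I - H H^+) h_new
    D = residual / (residual @ residual)       # pseudo-inverse of the residual vector
    U = H_pinv - np.outer(H_pinv @ h_new, D)   # H^+ (I - h_new D)
    return np.vstack([U, D])                   # shape (L + 1, N)

if __name__ == "__main__":
    rng = np.random.default_rng(2)
    H = rng.normal(size=(10, 3))
    h_new = rng.normal(size=10)
    updated = pinv_append_column(H, np.linalg.pinv(H), h_new)
    direct = np.linalg.pinv(np.column_stack([H, h_new]))
    print(np.allclose(updated, direct))
```

The incremental form avoids recomputing the full pseudo-inverse each time a hidden node is added, which matters when the structure is adjusted online.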

4.4. Stability Analysis

- (1)

- where , and and are the maximum eigenvalue and the minimum eigenvalue of the matrix , respectively.

- (2)

- (a)

- (b)

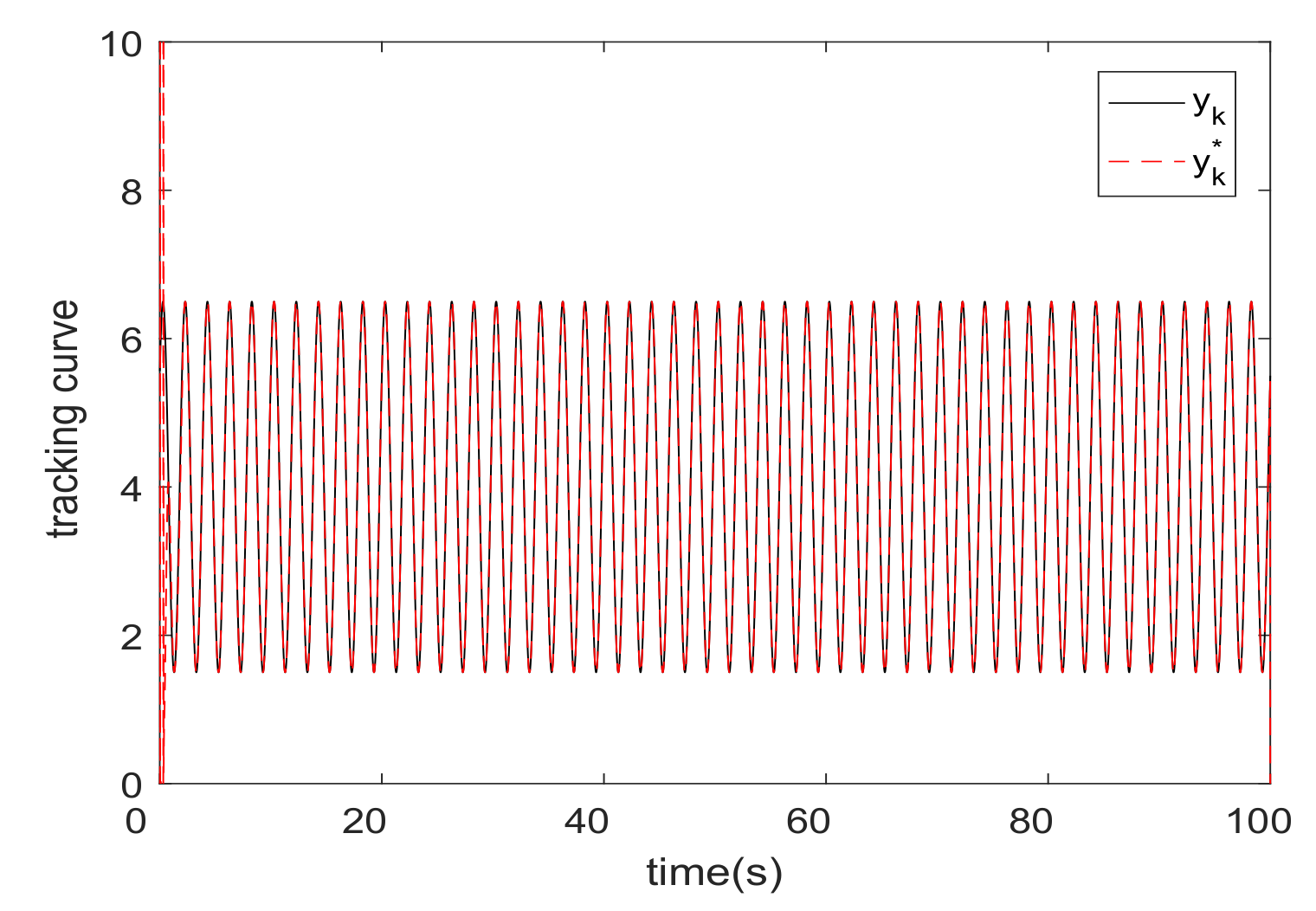

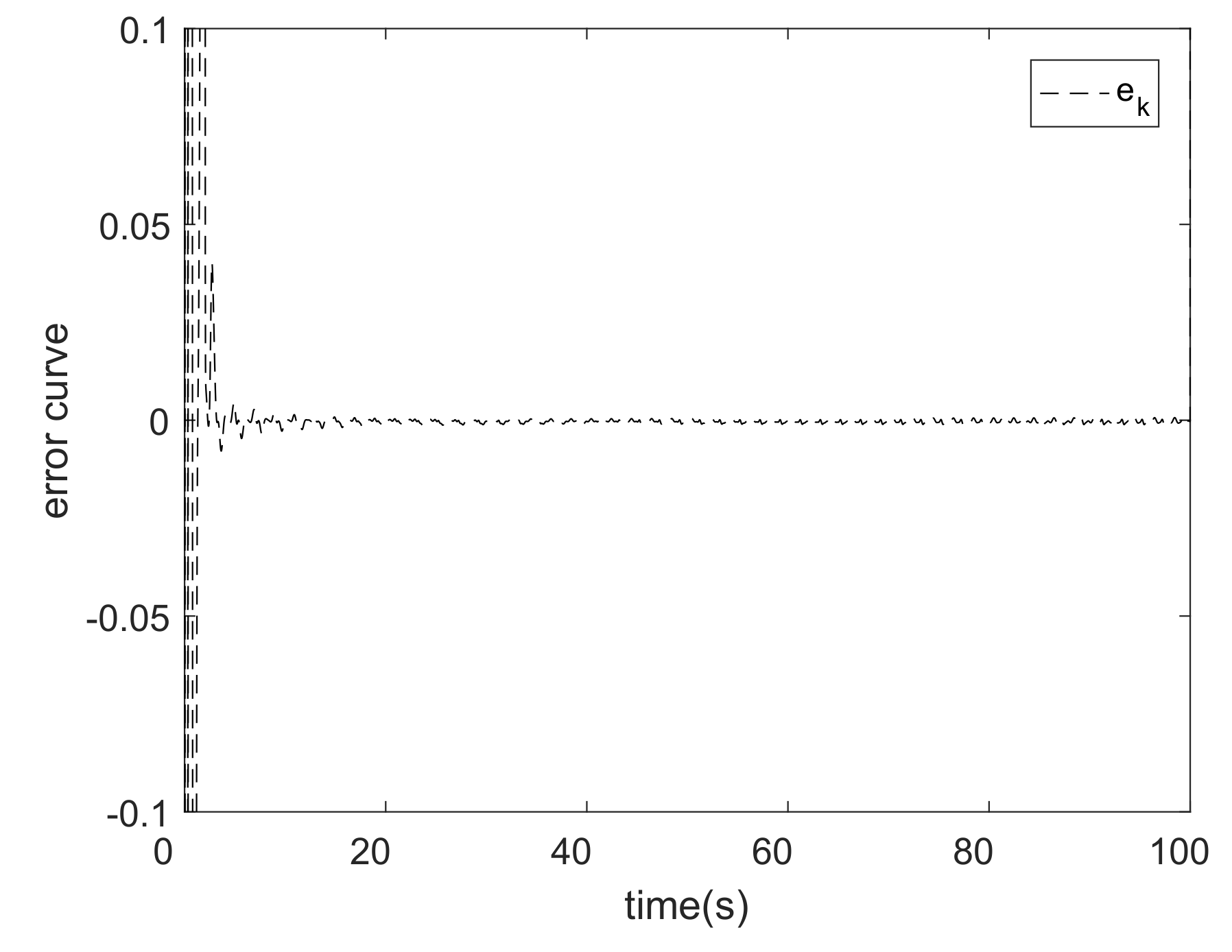

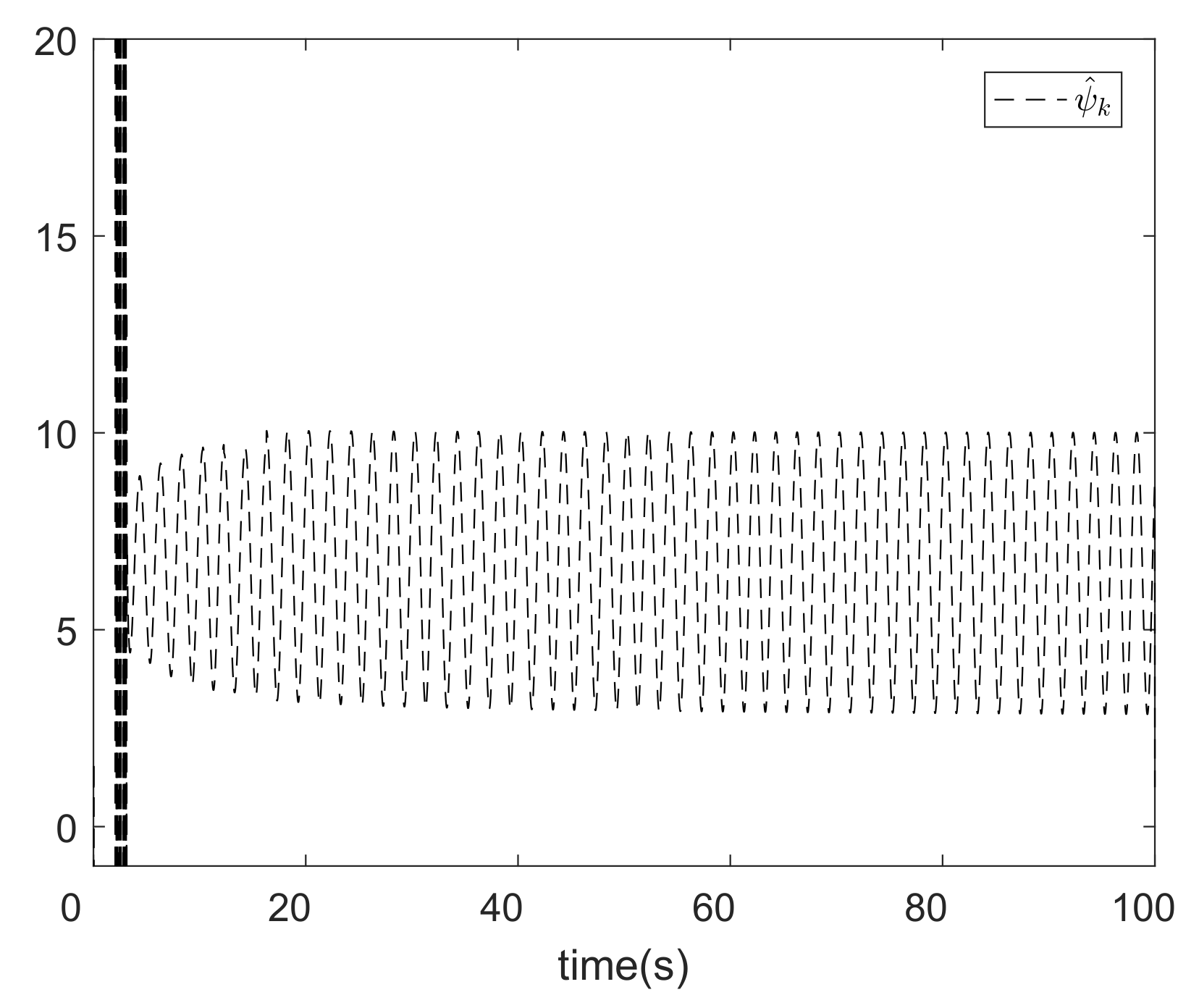

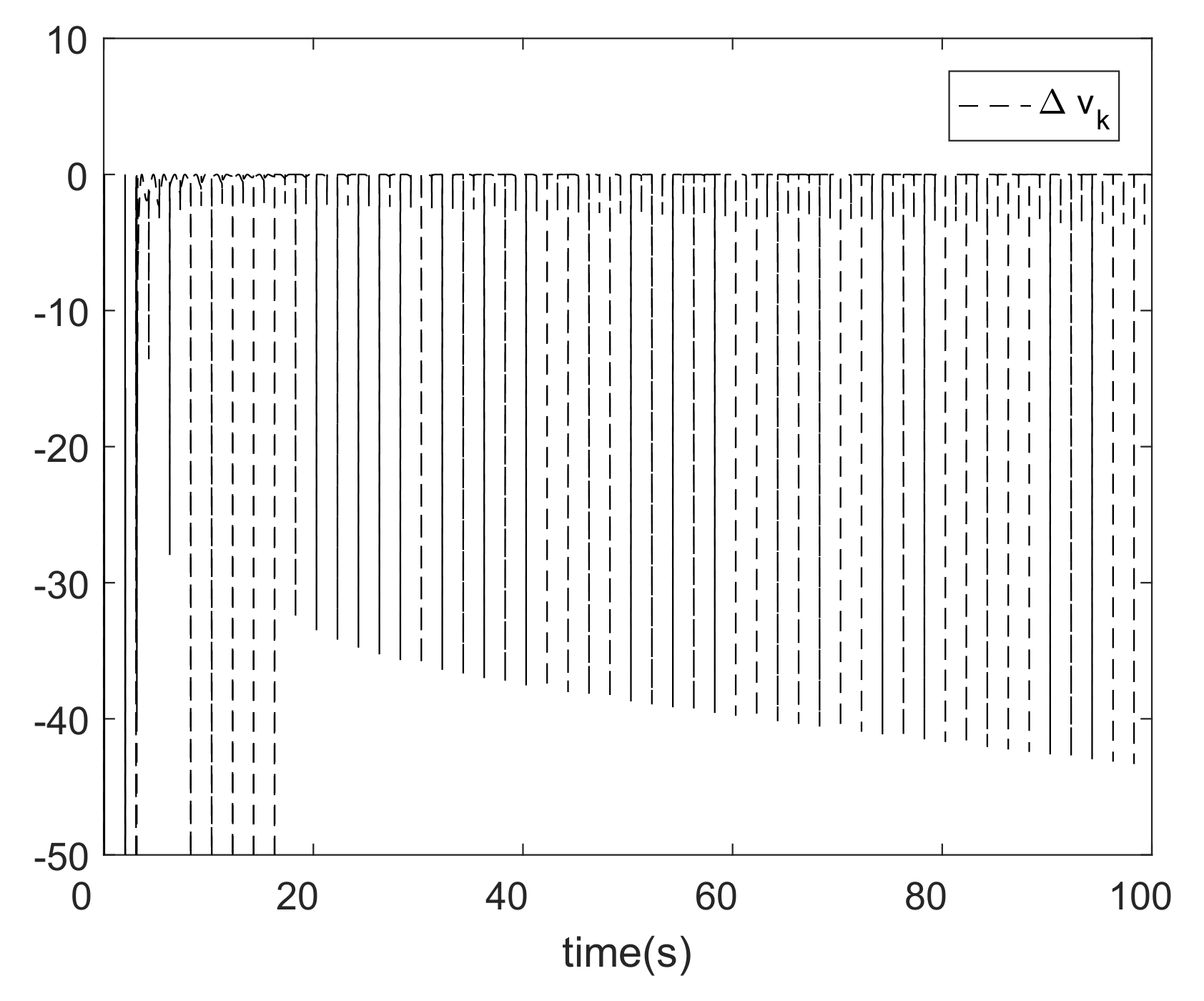

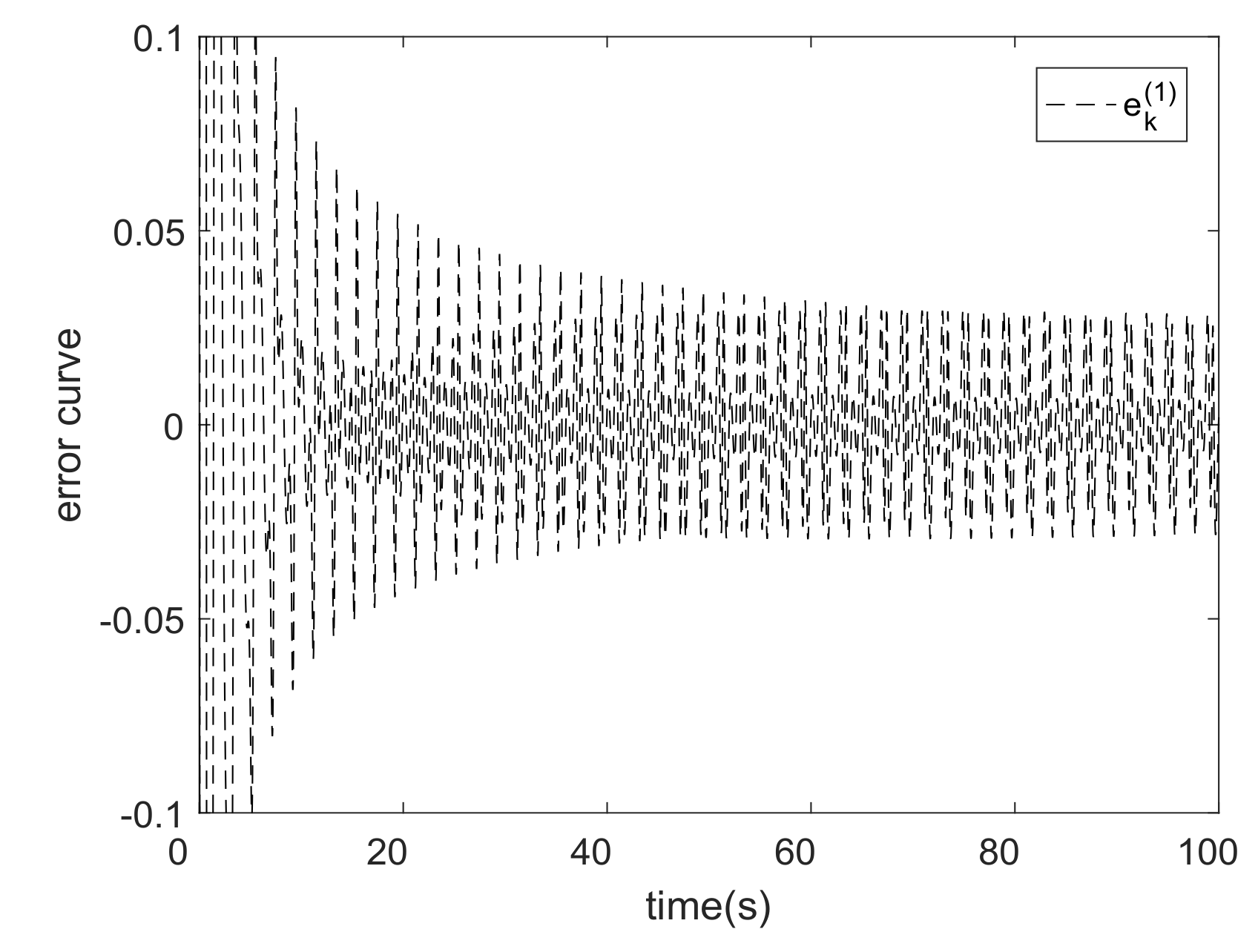

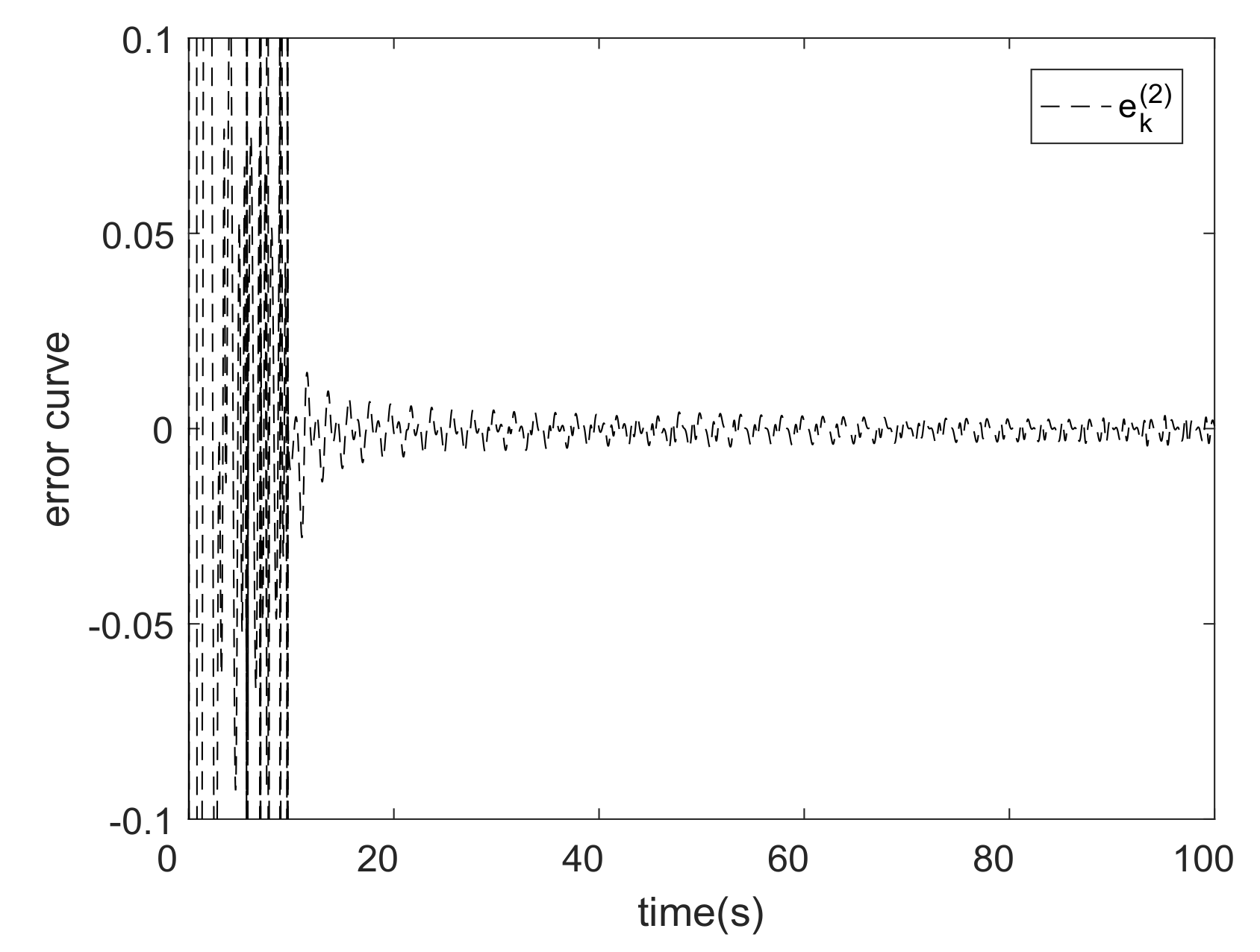

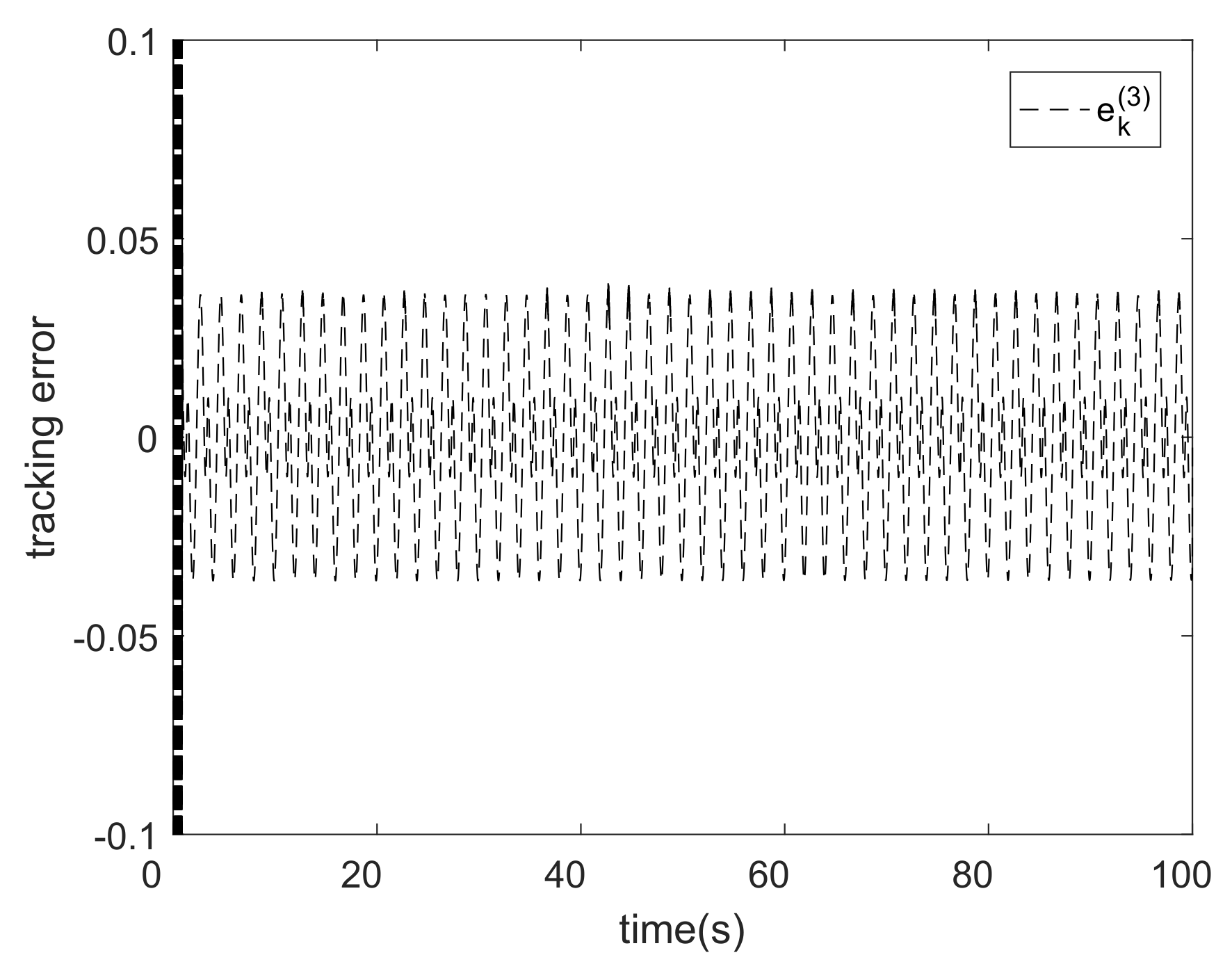

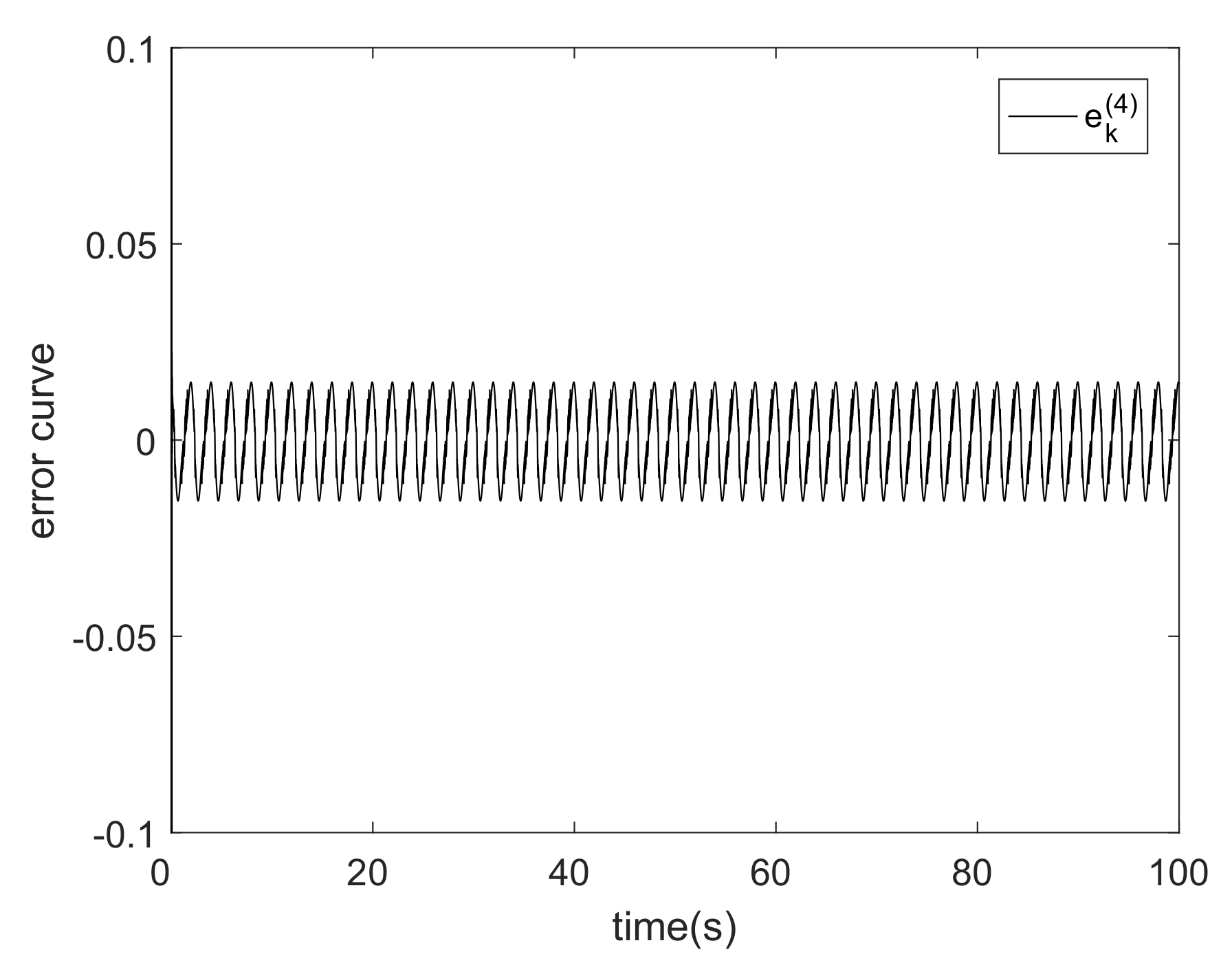

5. Analysis of Experimental Results

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MFAC | model-free adaptive control |
| I/O | input/output |
| DDC | data-driven control |
| PID | proportional-integral-derivative |
| UC | unfalsified control |
| RBFNN | radial basis function neural network |
| EMREOS-ELM | error minimized regularized online sequential extreme learning machine |
| ELM | extreme learning machine |
| OS-ELM | online sequential extreme learning machine |
| REOS-ELM | regularized online sequential extreme learning machine |
| EMOS-ELM | error minimized online sequential extreme learning machine |


| | The MFAC Algorithm Based on REOS-ELM (L = 8) | The MFAC Algorithm Based on REOS-ELM (L = 20) | The MFAC Algorithm Based on EMREOS-ELM | RBFNN (L = 9) | The MFAC Algorithm Based on RLS | The MFAC Algorithm Based on IREOS-ELM |
|---|---|---|---|---|---|---|
| 1 | 0.150672 | 0.002515 | 0.001562 | 0.550053 | 4.449964 | 0.012889 |
| 2 | 0.019459 | 0.040914 | 0.001705 | 0.502426 | 2.534129 | 0.433985 |
| 3 | 0.703282 | 0.014989 | 0.005451 | 0.564361 | 2.274406 | 0.016331 |
| 4 | 0.172610 | 0.150750 | 0.027222 | 0.504968 | 1.909120 | 0.017123 |
| 5 | 0.083149 | 0.244061 | 0.004806 | 0.402855 | 1.824217 | 0.007621 |
| 6 | 0.696841 | 0.009112 | 0.006475 | 0.411857 | 2.548398 | 0.004997 |
| 7 | 1.568039 | 0.051220 | 0.014730 | 0.591908 | 2.222298 | 0.182836 |
| 8 | 0.322919 | 0.024096 | 0.00295 | 0.584721 | 1.839572 | 0.009986 |
| 9 | 0.051606 | 0.576331 | 0.00295 | 0.524870 | 1.799829 | 0.048388 |
| 10 | 0.158293 | 0.678617 | 0.003559 | 0.696158 | 2.231448 | 0.001695 |
| 11 | 0.055251 | 0.004448 | 0.014255 | 0.551650 | 2.409947 | 0.015570 |
| 12 | 0.008733 | 0.004131 | 0.019071 | 0.493070 | 1.913370 | 0.046355 |
| 13 | 0.376944 | 0.008184 | 0.004649 | 0.553147 | 2.946602 | 0.012967 |
| 14 | 0.297452 | 0.01459 | 0.002863 | 0.633631 | 1.906357 | 0.054175 |
| 15 | 0.132094 | 0.329650 | 0.002116 | 0.730532 | 2.456959 | 0.016178 |
| 16 | 0.099835 | 0.194384 | 0.036917 | 0.779908 | 3.446312 | 0.017376 |
| 17 | 0.222907 | 0.002091 | 0.011495 | 0.431327 | 1.952456 | 0.049845 |
| 18 | 0.021288 | 0.002629 | 0.005704 | 0.561705 | 2.045576 | 0.049984 |
| 19 | 0.481443 | 0.004517 | 0.025093 | 0.582250 | 2.380352 | 0.007150 |
| 20 | 0.018625 | 0.212689 | 0.001724 | 0.664968 | 2.012102 | 0.016439 |
| Average value | 0.282072 | 0.128496 | 0.009729 | 0.565818 | 2.354829 | 0.051094 |

| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| The MFAC algorithm based on EMREOS-ELM | 9 | 9 | 10 | 11 | 10 | 14 | 9 | 9 | 10 | 10 | 10 | 10 | 9 | 11 | 10 | 11 | 11 | 11 | 10 | 9 |
| The MFAC algorithm based on IREOS-ELM | 10 | 11 | 10 | 9 | 12 | 11 | 11 | 11 | 10 | 10 | 12 | 10 | 9 | 11 | 10 | 14 | 11 | 9 | 9 | 10 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, X.; Ma, H. Data-Driven Model-Free Adaptive Control Based on Error Minimized Regularized Online Sequential Extreme Learning Machine. Energies 2019, 12, 3241. https://doi.org/10.3390/en12173241
