Non-Intrusive Load Monitoring System Based on Convolution Neural Network and Adaptive Linear Programming Boosting

Abstract

1. Introduction

2. Related Work

3. Three-Step Non-Intrusive Load Monitoring System

| Algorithm 1: TNILM |
|---|
| Requirement: X, the current of the appliances; Y, the type of the appliances; L0, the learning rate of the 1D-CNN; L1, the length of the interval window of the 1D-CNN; L2, the number of decision trees in ALPBoost; L3, the learning rate of ALPBoost. The experiments in this paper use the default values L0 = 0.001, L1 = 10, L2 = 100, and L3 = 0.1. |
| 1: Set the depth of the 1D-CNN; |
| 2: Update the parameters of the 1D-CNN and ALPBoost; |
| 3: Apply the 1D-CNN to extract features from single-appliance and multiple-appliance data; |
| 4: Apply ALPBoost to identify the types of the appliances in operation; |
| 5: Evaluate the accuracy of the method according to (8) in Section 3.3. If the accuracy does not meet the requirement, return to Step 2; otherwise, output the result and terminate. |
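
The control flow of Algorithm 1 can be sketched as a loop over Steps 2 to 5. This is a minimal Python sketch under stated assumptions, not the authors' implementation: the hooks `extract_features`, `classify`, `accuracy`, and `update_params` are hypothetical placeholders for the 1D-CNN, ALPBoost, Eq. (8), and the update process of Algorithm 3; the accuracy requirement `required_accuracy`, the safety cap `max_rounds`, and the default depth are assumed values. Only L0 to L3 and their defaults come from the text.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class TNILMParams:
    """Hyperparameters named as in Algorithm 1, with the paper's default values."""
    lr_cnn: float = 0.001   # L0: learning rate of the 1D-CNN
    window_len: int = 10    # L1: length of the 1D-CNN interval window
    n_trees: int = 100      # L2: number of decision trees in ALPBoost
    lr_boost: float = 0.1   # L3: learning rate of ALPBoost
    depth: int = 3          # 1D-CNN depth D (hypothetical value; set in Step 1)


def run_tnilm(
    X: Sequence,
    Y: Sequence,
    extract_features: Callable,  # hypothetical hook for Step 3 (1D-CNN)
    classify: Callable,          # hypothetical hook for Step 4 (ALPBoost)
    accuracy: Callable,          # hypothetical hook for Step 5 (Eq. (8))
    update_params: Callable,     # hypothetical hook for Step 2 (Algorithm 3)
    required_accuracy: float = 0.9,  # assumed accuracy requirement
    max_rounds: int = 20,            # assumed cap on the number of Step 2-5 loops
) -> Tuple[List, TNILMParams, float]:
    params = TNILMParams()  # Step 1 (and the first pass of Step 2): start from the defaults
    predictions: List = []
    acc = 0.0
    for _ in range(max_rounds):
        features = extract_features(X, params)        # Step 3: feature extraction
        predictions = classify(features, Y, params)   # Step 4: appliance identification
        acc = accuracy(predictions, Y)                 # Step 5: accuracy check
        if acc >= required_accuracy:
            break                                      # requirement met: output and stop
        params = update_params(params, acc)            # otherwise return to Step 2
    return predictions, params, acc
```

Passing the stages in as callables keeps the loop independent of how the 1D-CNN and ALPBoost are actually implemented; `max_rounds` only guards against a non-terminating loop, which Algorithm 1 itself does not address.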

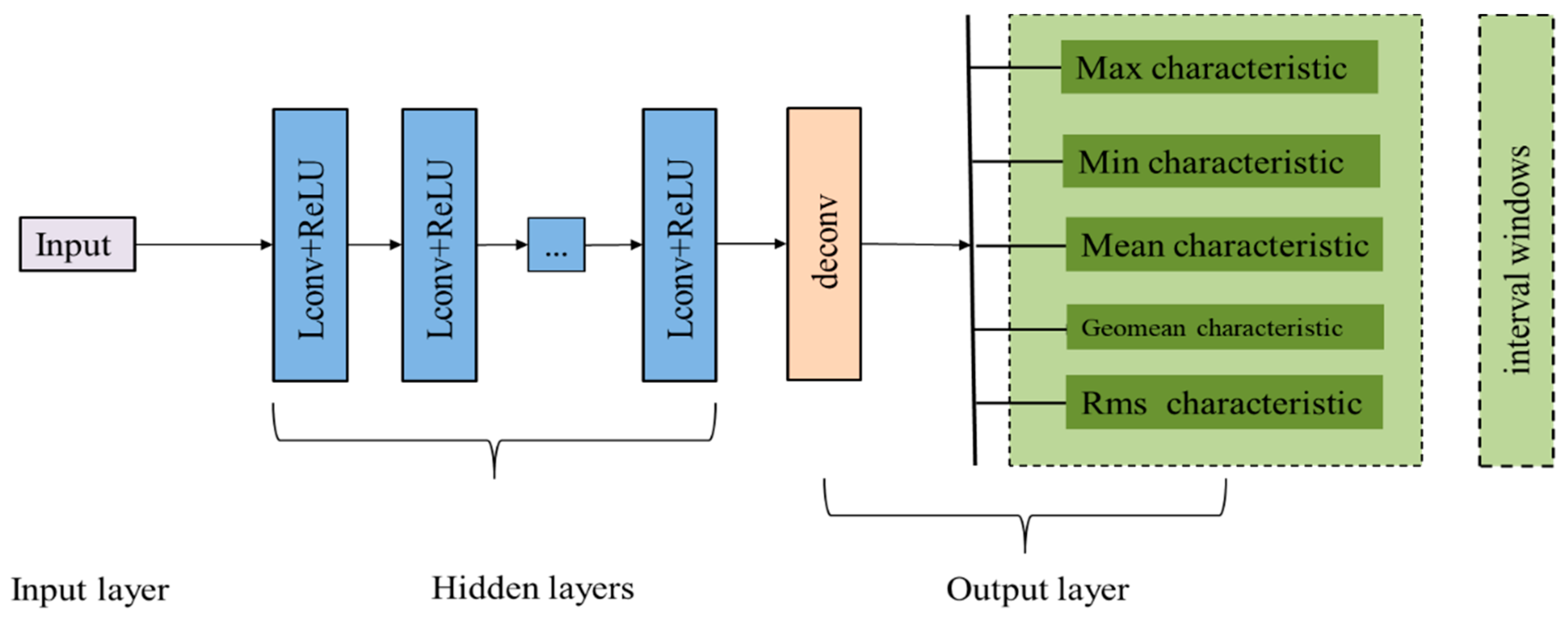

3.1. 1D Convolutional Neural Network

- For the input layer, the input data are the raw current of the appliances measured by a meter over a period of time, covering both single-appliance and multiple-appliance operation. The current data must be recorded while the appliances are in use;

- For each hidden layer, the 1D convolution values are computed by the function Lconv with a one-dimensional convolution kernel and then passed through the ReLU activation function;

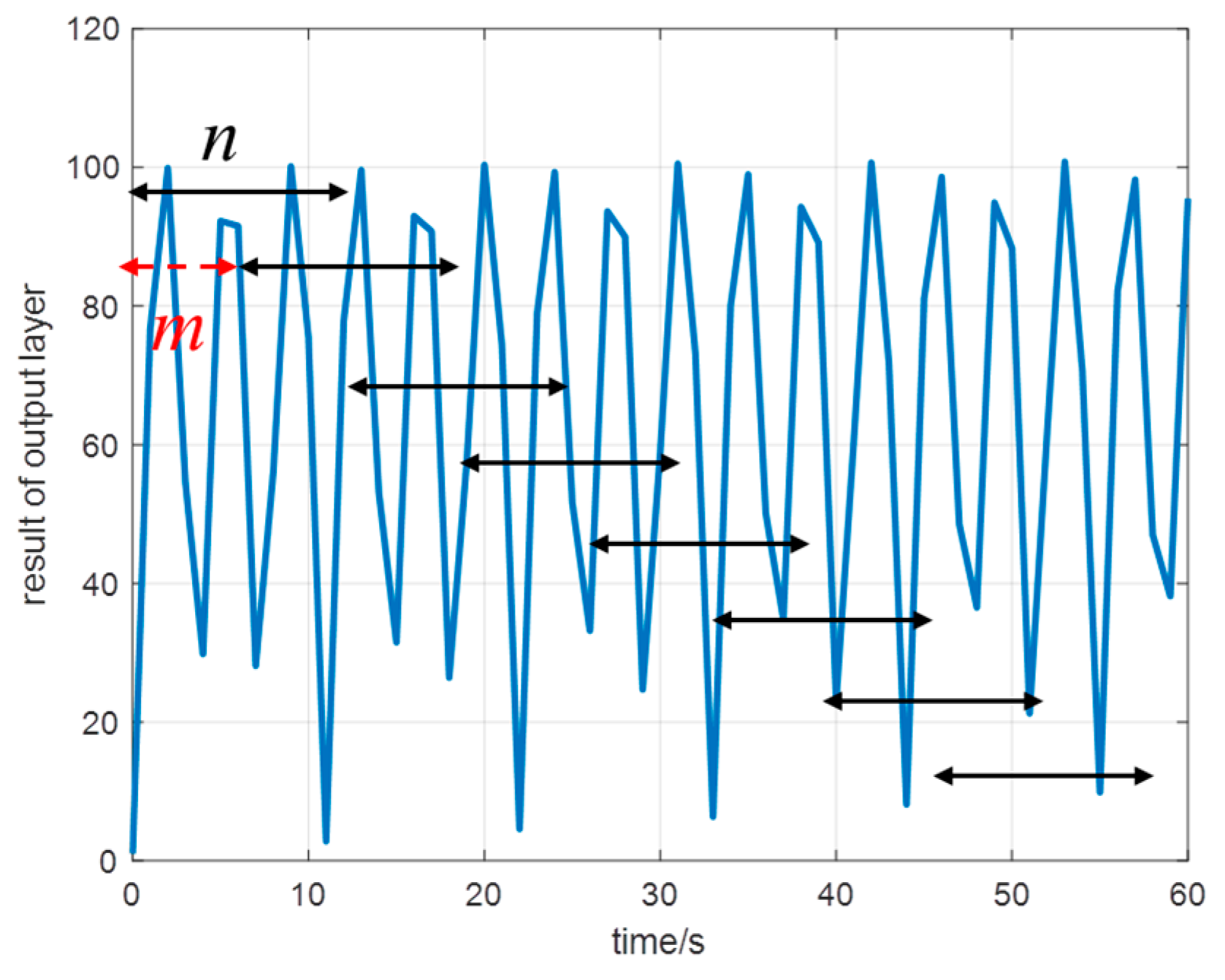

- For the output layer, interval windows are applied to the output of the hidden layers to extract transient features. The optimal window width is obtained by the smoothing technique in [45]. In the hidden layers, the 1D convolution function Lconv applies conv(·), the convolution operator, to the vectors u and v, which denote the convolution kernel and the appliance current, respectively; its result is the convolution value. The stride, i.e., the step size of the convolution, is set to 1.

- For the input, the kernel is

- For the second hidden layer, the kernel is

- For the Dth hidden layer, the kernel is a 1 × j vector, i.e., one row and j columns.
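
The hidden-layer computation described above (1D convolution of the current with a kernel at stride 1, ReLU, then interval windows on the last layer's output) can be sketched with NumPy. Assumptions: `np.convolve` in its default 'full' mode is used because it matches the MATLAB-style conv(u, v) notation; the kernels are fixed example values rather than learned weights; and taking the maximum of each non-overlapping window is a hypothetical feature choice, since the text does not state which statistic is extracted. The window length default of 10 follows L1 in Algorithm 1; the optimal width from [45] is not reproduced.

```python
import numpy as np


def conv_layer(v: np.ndarray, u: np.ndarray) -> np.ndarray:
    """One hidden layer: convolve current v with kernel u (stride 1), then ReLU."""
    l_conv = np.convolve(u, v)       # 'full' mode: length len(u) + len(v) - 1
    return np.maximum(l_conv, 0.0)   # ReLU activation


def cnn_features(current, kernels, window_len: int = 10) -> np.ndarray:
    """Pass the current through D = len(kernels) hidden layers, then window it.

    Each kernel is a 1 x j vector (hypothetical values here, not learned).
    Taking the maximum of each non-overlapping window is an assumed feature.
    """
    h = np.asarray(current, dtype=float)
    for u in kernels:
        h = conv_layer(h, np.asarray(u, dtype=float))
    n_windows = len(h) // window_len                 # incomplete tail is dropped
    windows = h[: n_windows * window_len].reshape(n_windows, window_len)
    return windows.max(axis=1)                       # one feature per interval window
```

Because 'full' convolution lengthens the signal by j − 1 samples per layer, a 'valid' or 'same' mode would be the alternative if fixed-length layer outputs are needed; the text does not say which boundary handling was used.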

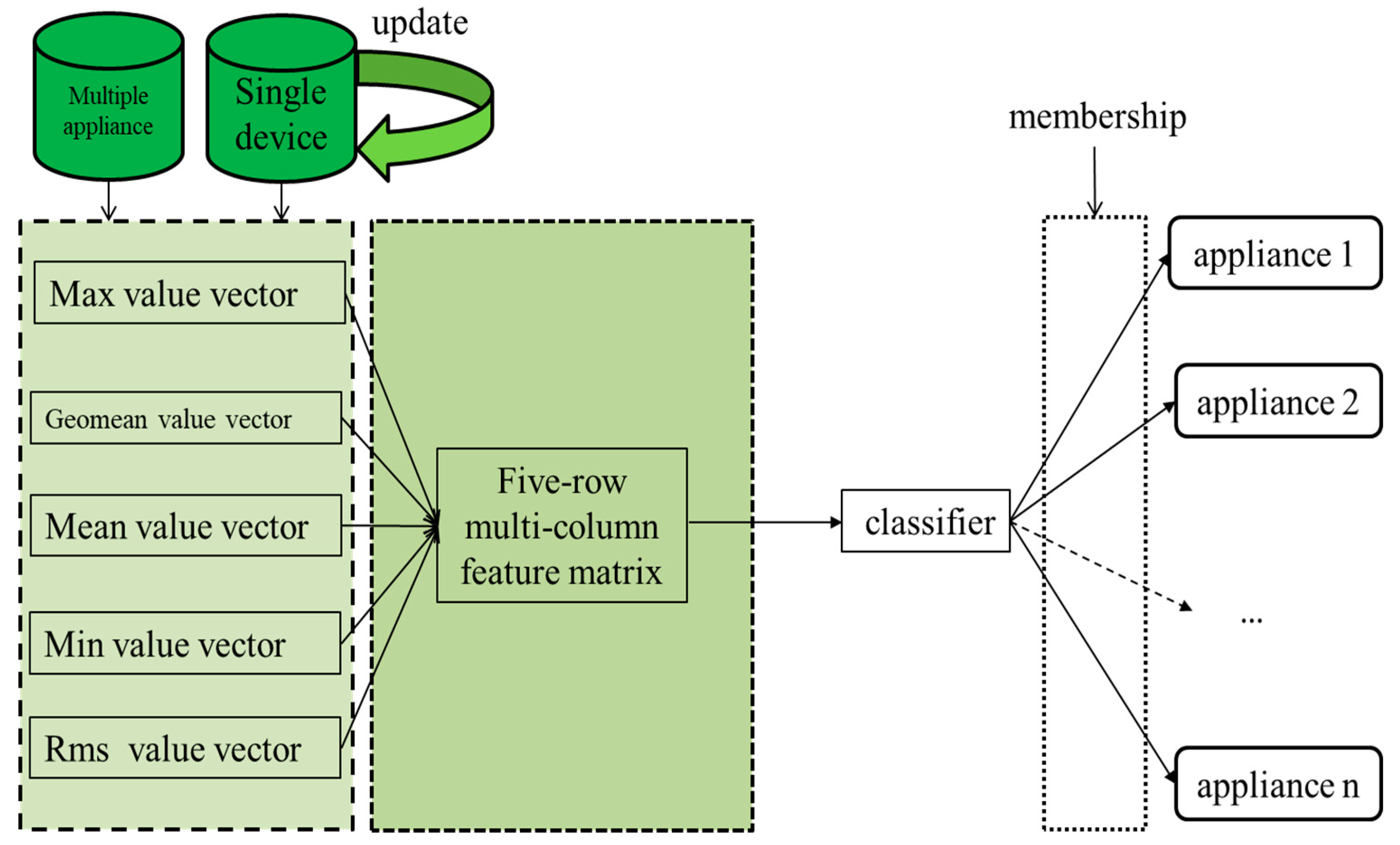

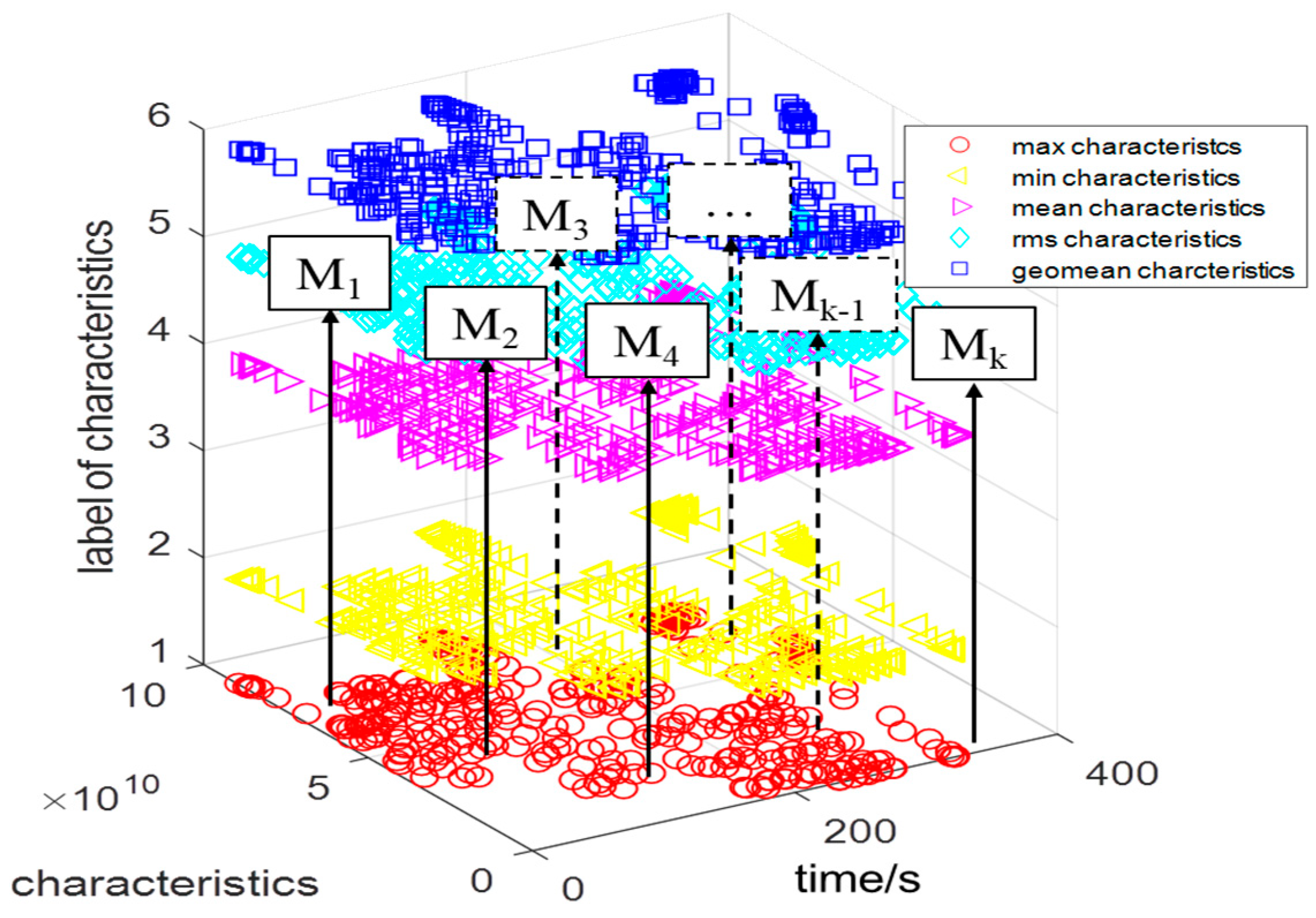

3.2. Adaptive Linear Programming Boosting

- Replacing fixed weights with adaptive weights in Part I (Initialization) of Algorithm 2;

- Replacing fixed thresholds with adaptive thresholds for single-appliance and multiple-appliance identification in Part II (Iteration) of Algorithm 2;

- Adding two steps in Part III (Identification) of Algorithm 2 to determine the appliance type; the details are presented in Algorithm 2.
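
For reference, the three changes above are made to standard LPBoost [28]. In the soft-margin formulation of Demiriz et al., with binary labels $y_i \in \{-1, 1\}$, candidate weak hypotheses $h_j$, mixing weights $a_j$, slack variables $\xi_i$, and misclassification cost $D$, the primal linear program and its dual over the sample weights $u_i$ are as follows. This is the textbook baseline, not ALPBoost itself: the adaptive weights and thresholds, and the handling of the third label 0 used in Algorithm 2, are not given in closed form in this section and are therefore not reproduced.

$$
\begin{aligned}
\max_{a,\,\xi,\,\rho}\quad & \rho - D\sum_{i=1}^{l}\xi_i \\
\text{s.t.}\quad & y_i\sum_{j=1}^{J} a_j h_j(x_i) + \xi_i \ge \rho, \quad i = 1,\dots,l,\\
& \sum_{j=1}^{J} a_j = 1,\quad a_j \ge 0,\quad \xi_i \ge 0,
\end{aligned}
$$

$$
\begin{aligned}
\min_{u,\,\beta}\quad & \beta \\
\text{s.t.}\quad & \sum_{i=1}^{l} u_i y_i h_j(x_i) \le \beta, \quad j = 1,\dots,J,\\
& \sum_{i=1}^{l} u_i = 1,\quad 0 \le u_i \le D.
\end{aligned}
$$

Column generation adds, at each iteration, the hypothesis that maximizes $\sum_{i} u_i y_i h(x_i)$ and stops once that maximum no longer exceeds $\beta$; the final classifier is $f(x) = \operatorname{sign}\big(\sum_{j} a_j h_j(x)\big)$, where the $a_j$ are the Lagrange multipliers of the dual constraints. This fixed stopping rule appears to be what Algorithm 2 replaces with the adaptive thresholds $\theta_j$.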

| Algorithm 2: ALPBoost |
|---|
| Input: training set X = {x1, x2, …, xl}, xi ∈ X; training labels Y = {y1, y2, …, yl}, yi ∈ {−1, 0, 1} |
| Output: classification function f: X → {−1, 0, 1} |
| I: Initialization |
| 1: Construct the normalized weights for n = 1, 2, …, l; |
| 2: Construct the objective function h(xn; w); |
| 3: Initialize the objective function value to 0; |
| 4: Initialize the iteration count: J ← 1; |
| II: Iteration, with adaptive convergence thresholds θj (j = 1, 2, …, N), where N is the number of iterations: if the convergence criterion based on h(xn; w) is at most θj, then break; |
| 1: Update the objective function hJ; |
| 2: Update the iteration count: J ← J + 1; |
| 3: Update the objective function value; |
| 4: Update the dual variables with the solution of the ALPBoost dual problem; |
| 5: α ← Lagrangian multipliers of the solution to the ALPBoost dual problem; |
| III: Identification |
| 1: Construct the classification function: mn ← count(sign(·) = 1); |
| 2: If mn ≥ Mn, set the membership of the appliance to 1; |
| 3: If x satisfies the membership condition of appliance j, then x is identified as appliance j. |
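
Part III of Algorithm 2 can be read as a vote count followed by a threshold test. A minimal sketch, assuming one trained ensemble per appliance whose weak-learner outputs on a sample x are already available: `votes[j]` holds those outputs for appliance j and `thresholds[j]` plays the role of Mn. Both names and the one-ensemble-per-appliance layout are hypothetical, since the text does not spell them out. Returning every appliance whose count reaches its threshold is what would let several appliances be reported for one multiple-appliance sample.

```python
from typing import Dict, List, Sequence


def sign(value: float) -> int:
    """Sign function returning -1, 0 or 1."""
    return (value > 0) - (value < 0)


def identify(votes: Dict[str, Sequence[float]], thresholds: Dict[str, int]) -> List[str]:
    """Return the appliances whose positive-vote count m_n reaches M_n."""
    detected = []
    for appliance, outputs in votes.items():
        m_n = sum(1 for h in outputs if sign(h) == 1)   # m_n <- count(sign(.) = 1)
        membership = 1 if m_n >= thresholds[appliance] else 0
        if membership == 1:
            detected.append(appliance)                  # x is identified as this appliance
    return detected
```

For example, with three weak-learner outputs per appliance and a threshold of 2, an appliance whose learners output (0.8, 0.3, −0.1) is reported, while one with (−0.7, 0.1, −0.2) is not.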

3.3. Parameter Update

| Algorithm 3: update process |

4. Experiment Results

4.1. Single–Appliance Identification of TNILM

4.2. Multiple–Appliance Identification of TNILM

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Pérez-Lombard, L.; Ortiz, J.; Pout, C. A review on buildings energy consumption information. Energy Build. 2008, 40, 394–398.

2. Bamberger, Y.; Baptista, J.; Belmans, R.; Buchholz, B.M.S.; Chebbo, M.; Doblado, M.; Efthymiou, V.; Gallo, L.; Handschin, E.; Hatziargyriou, N.; et al. Vision and Strategy for Europe's Electricity Networks of the Future; Office for Official Publications of the European Communities: Luxembourg, 2006.

3. Chui, K.T.; Lytras, M.D.; Visvizi, A. Energy sustainability in smart cities: Artificial intelligence, smart monitoring, and optimization of energy consumption. Energies 2018, 11, 2869.

4. Zeifman, M.; Roth, K. Nonintrusive appliance load monitoring: Review and outlook. IEEE Trans. Consum. Electron. 2011, 57, 76–84.

5. Du, S.; Li, M.; Han, S.; Jonathan, S.; Lim, H. Multi-pattern data mining and recognition of primary electric appliances from single non-intrusive load monitoring data. Energies 2019, 12, 992.

6. Chang, H.H. Non-Intrusive demand monitoring and load identification for energy management systems based on transient feature analyses. Energies 2012, 5, 4569–4589.

7. Froehlich, J.; Larson, E.; Gupta, S.; Cohn, G.; Reynolds, M.; Patel, S. Disaggregated end-use energy sensing for the smart grid. IEEE Pervasive Comput. 2010, 10, 28–39.

8. Bergman, D.C.; Jin, D.; Juen, J.P.; Tanaka, N.; Gunter, C.A. Distributed Non-Intrusive Load Monitoring, Innovative Smart Grid Technologies; University of Illinois at Urbana-Champaign: Champaign, IL, USA, 2011; pp. 1–8.

9. Chang, H.H.; Lian, K.L.; Su, Y.C.; Lee, W.J. Power-spectrum-based wavelet transform for nonintrusive demand monitoring and load identification. IEEE Trans. Ind. Appl. 2014, 50, 2081–2089.

10. Cominola, A.; Giuliani, M.; Piga, D.; Castelletti, A.; Rizzoli, A.E. A hybrid signature-based iterative disaggregation algorithm for non-intrusive load monitoring. Appl. Energy 2017, 185, 331–344.

11. Patel, S.N.; Robertson, T.; Kientz, J.A.; Reynolds, M.S.; Abowd, G.D. At the Flick of a Switch: Detecting and Classifying Unique Electrical Events on the Residential Power Line; Ubicomp: Copenhagen, Denmark, 2007; Volume 4717, pp. 271–288.

12. Gillis, J.M.; Alshareef, S.M.; Morsi, W.G. Nonintrusive load monitoring using wavelet design and machine learning. IEEE Trans. Smart Grid 2017, 7, 320–328.

13. Lin, Y.H.; Tsai, M.S. Development of an improved time-frequency analysis-based nonintrusive load monitor for load demand identification. IEEE Trans. Instrum. Meas. 2014, 63, 1470–1483.

14. Chen, F.; Dai, J.; Wang, B.; Sahu, S.; Naphade, M.; Lu, C.T. Activity analysis based on low sample rate smart meters. In Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 21–24 August 2011; pp. 240–280.

15. Rahimi, S.; Chan, A.D.C.; Goubran, R.A. Nonintrusive load monitoring of electrical devices in health smart homes. In Proceedings of the 2012 IEEE International Instrumentation and Measurement Technology Conference, Graz, Austria, 13–16 May 2012; Volume 8443, pp. 2313–2316.

16. Srinivasan, D.; Ng, W.S.; Liew, A.C. Neural-network-based signature recognition for harmonic source identification. IEEE Trans. Power Deliv. 2005, 21, 398–405.

17. Chang, H.H.; Chen, K.L.; Tsai, Y.P.; Lee, W.J. A new measurement method for power signatures of nonintrusive demand monitoring and load identification. IEEE Trans. Ind. Appl. 2012, 48, 764–771.

18. Yang, H.T.; Chang, H.H.; Lin, C.L. Design a neural network for features selection in non-intrusive monitoring of industrial electrical loads. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2007, 32, 582–595.

19. Hassan, T.; Javed, F.; Arshad, N. An empirical investigation of V-I trajectory based load signatures for non-intrusive load monitoring. IEEE Trans. Smart Grid 2014, 5, 870–878.

20. Gupta, S.; Reynolds, M.S.; Patel, S.N. ElectriSense: Single-point sensing using EMI for electrical event detection and classification in the home. In Proceedings of the ACM International Conference on Ubiquitous Computing, New York, NY, USA, 26–29 September 2010; pp. 139–148.

21. Liu, Y.; Chen, M. A review of nonintrusive load monitoring and its application in commercial building. In Proceedings of the IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems, Hong Kong, China, 4–7 June 2014; pp. 623–629.

22. Saitoh, T.; Aota, Y.; Osaki, T.; Konishi, R.; Sugahara, K. Current sensor based non-intrusive appliance recognition for intelligent outlet. In Proceedings of the International Technical Conference on Circuits Systems, Computers and Communications (ITC-CSCC 2008), Tottori, Japan, 6–9 July 2008.

23. Zoha, A.; Gluhak, A.; Imran, M.A.; Rajasegarar, S. Non-Intrusive load monitoring approaches for disaggregated energy sensing: A survey. Sensors 2012, 12, 16838–16866.

24. Kolter, J.Z.; Batra, S.; Ng, A.Y. Energy disaggregation via discriminative sparse coding. In Proceedings of the International Conference on Neural Information Processing Systems; Curran Associates: Copenhagen, Denmark, 26–29 September 2010; pp. 1153–1161.

25. Shao, H.; Marwah, M.; Ramakrishnan, N. A Temporal Motif Mining Approach to Unsupervised Energy Disaggregation: Applications to Residential and Commercial Buildings. In Proceedings of the Twenty-Seventh AAAI Conference on Artificial Intelligence; AAAI Press: Menlo Park, CA, USA, 2013; pp. 1327–1333.

26. Kim, H.S. Unsupervised Disaggregation of Low Frequency Power Measurements. In Proceedings of the Eleventh SIAM International Conference on Data Mining, SDM 2011, Mesa, AZ, USA, 28–30 April 2011; pp. 747–758.

27. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. arXiv 2014, arXiv:1409.4842v1, 1–9.

28. Demiriz, A.; Bennett, K.P.; Shawe-Taylor, J. Linear Programming Boosting via Column Generation. Mach. Learn. 2002, 46, 225–254.

29. Ahmadi, H.; Martí, J.R. Load decomposition at smart meters level using eigenloads approach. IEEE Trans. Power Syst. 2015, 30, 3425–3436.

30. Watkins, A.; Timmis, J.; Boggess, L. Artificial immune recognition system (AIRS): An immune-inspired supervised learning algorithm. Genet. Program. Evolvable Mach. 2004, 5, 291–317.

31. Parson, O.; Ghosh, S.; Weal, M.; Rogers, A. Non-Intrusive Load Monitoring Using Prior Models of General Appliance Types. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 356–362.

32. Saitoh, T.; Osaki, T.; Konishi, R.; Sugahara, K. Current sensor based home appliance and state of appliance recognition. SICE J. Control Meas. Syst. Integr. 2010, 3, 86–93.

33. Yang, G.; Huang, T.S. Human Face Detection in Complex Background. Pattern Recognit. 1994, 27, 53–63.

34. Tsai, M.S.; Lin, Y.H. Modern development of an adaptive non-intrusive appliance load monitoring system in electricity energy conservation. Appl. Energy 2012, 96, 55–73.

35. Johnson, M.J.; Willsky, A.S. Bayesian nonparametric hidden semi-Markov models. J. Mach. Learn. Res. 2012, 14, 673–701.

36. Parson, O.; Ghosh, S.; Weal, M.; Rogers, A. An unsupervised training method for non-intrusive appliance load monitoring. Artif. Intell. 2014, 217, 1–19.

37. Lin, Y.H.; Tsai, M.S. Non-Intrusive load monitoring by novel neuro-fuzzy classification considering uncertainties. IEEE Trans. Smart Grid 2014, 5, 2376–2384.

38. Du, L.; Restrepo, J.A.; Yang, Y.; Harley, R.G.; Habetler, T.G. Nonintrusive, self-organizing, and probabilistic classification and identification of plugged-in electric loads. IEEE Trans. Smart Grid 2013, 4, 1371–1380.

39. Kolter, J.Z.; Johnson, M.J. REDD: A public data set for energy disaggregation research. SustKDD 2011, 9.

40. Guo, Z.; Wang, Z.J.; Kashani, A. Home appliance load modeling from aggregated smart meter data. IEEE Trans. Power Syst. 2014, 30, 254–262.

41. Wang, Z.; Zheng, G. Residential appliances identification and monitoring by a nonintrusive method. IEEE Trans. Smart Grid 2012, 3, 80–92.

42. Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 818–833.

43. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the International Conference on Neural Information Processing Systems; Curran Associates: Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

44. Haykin, S.; Kosko, B. Gradient Based Learning Applied to Document Recognition; Wiley-IEEE Press: Hoboken, NJ, USA, 2009; pp. 306–351.

45. Härdle, W.; Müller, M.; Sperlich, S.; Werwatz, A. Nonparametric and Semiparametric Models; Springer-Verlag: Berlin/Heidelberg, Germany, 2004.

46. Quinlan, J.R. Bagging, boosting, and C4.5. In Proceedings of the Thirteenth National Conference on Artificial Intelligence, Portland, OR, USA, 4–8 August 1996; pp. 725–730.

47. Ridi, A.; Gisler, C.; Hennebert, J. A Survey on Intrusive Load Monitoring for Appliance Recognition. In Proceedings of the 2014 22nd International Conference on Pattern Recognition (ICPR); IEEE Comput. Soc. 2014, 94, 3702–3707.

48. Rätsch, G.; Onoda, T.; Müller, K.R. Soft margins for AdaBoost. Mach. Learn. 2001, 42, 287–320.

49. Liaw, A.; Wiener, M. Classification and regression by random forest. R News 2002, 2, 18–22.

50. Schapire, R.E. Improved boosting algorithms using confidence-rated predictions. Mach. Learn. 1999, 37, 297–336.

| Method Category | Learning Type | Algorithm Category | Application Scenario | Accuracy |
|---|---|---|---|---|
| Mathematical optimization | | Factorial Hidden Markov Models [10,24,26,39] | Household appliances | 70–95% |
| Mathematical optimization | | 0–1 multidimensional knapsack algorithm [13] | Several common household appliances | 85–90% |
| Pattern recognition | Supervised learning | KNN [14,15,33] | Common household appliances | 78–100% |
| Pattern recognition | Supervised learning | Neural network [9,15,16,17,18,35] | Common household appliances | 70–100% |
| Pattern recognition | Supervised learning | SVM or AdaBoost [19,20,21,22] | Common household appliances | 85–99% |
| Pattern recognition | Unsupervised learning | Hidden Markov Models [24,26,39,40] | Several common household appliances | 52–98% |
| Pattern recognition | Unsupervised learning | Self-organizing map (SSOM)/Bayesian [38]; fuzzy C-means clustering [37]; integrated mean-shift clustering [41] | Several common household appliances | 70–85.5% |

| No | Appliance | Training Accuracy (%) | Testing Accuracy (%) |
|---|---|---|---|
| 1 | Fan | 97.1 | 95.8 |
| 2 | Microwave | 97.3 | 93.6 |
| 3 | Kettle | 100 | 100 |
| 4 | Laptop | 95.5 | 93.3 |
| 5 | Incandescent | 96.3 | 94.0 |
| 6 | Energy saving lamp | 96.4 | 93.2 |
| 7 | Printer | 98.0 | 95.5 |
| 8 | Water dispenser | 100 | 98.8 |
| 9 | Air conditioner | 98.5 | 95.1 |
| 10 | Hair dryer | 100 | 100 |
| 11 | TV | 97.5 | 94.2 |

| No | Classifier | Training Accuracy (%) | Testing Accuracy (%) |
|---|---|---|---|
| 1 | SVM | 90.2 | 87.3 |
| 2 | KNN | 92.5 | 90.6 |
| 3 | Random Forest | 93.4 | 91.6 |
| 4 | AdaBoostM2 (tree) [48] | 95.5 | 92.8 |
| 5 | LPBoost (tree) | 96.1 | 93.7 |
| 6 | ALPBoost | 97.7 | 95.4 |

| Type | Actual Appliances | Predicted Appliances | Hypothetical Accuracy (%) |
|---|---|---|---|
| 1 | Kettle, Printer | Kettle, Printer | 100 |
| 2 | Fan, Microwave, Laptop | Fan, Microwave, Laptop | 100 |
| 3 | Energy saving lamp, Water dispenser, Hair dryer | No12, Water dispenser, Hair dryer | 66.7 |
| 4 | Laptop, Incandescent, Water dispenser, Hair dryer, TV | Laptop, No12, Water dispenser, Hair dryer, TV | 80.0 |
| 5 | Kettle, Laptop, Incandescent, Printer, Air conditioner | Kettle, Laptop, Incandescent, Printer, Air conditioner | 100 |
| 6 | No0, Microwave, Hair dryer | No12, Microwave, Hair dryer | 80 |
| 7 | No0, Laptop, Incandescent, Water dispenser, Air conditioner | No0, Laptop, No12, Water dispenser, Air conditioner | 80 |
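
The text does not define "Hypothetical Accuracy". One reading consistent with most rows is the fraction of appliance positions whose predicted label equals the real one: row 3 has 2 of 3 correct (66.7%) and rows 4 and 7 have 4 of 5 (80.0%). The sketch below implements that assumed definition; the function name `hypothetical_accuracy` is hypothetical. Under this reading, row 6 would give 2 of 3 (66.7%) rather than the tabulated 80, so the authors' exact metric may differ.

```python
from typing import Sequence


def hypothetical_accuracy(real: Sequence[str], predicted: Sequence[str]) -> float:
    """Percentage of positions where the predicted appliance equals the real one."""
    if len(real) != len(predicted):
        raise ValueError("real and predicted lists must have the same length")
    hits = sum(r == p for r, p in zip(real, predicted))
    return round(100.0 * hits / len(real), 1)
```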

| Type | N | Training Accuracy (%) | Testing Accuracy (%) |
|---|---|---|---|
| 1 | 2 | 95.6 | 92.9 |
| 2 | 3 | 94.2 | 91.7 |
| 3 | 5 | 92.4 | 90.8 |
| 4 | Total | 94.1 | 91.8 |
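
The "Total" row matches the unweighted mean of the three type rows, which suggests (the text does not state it) that each type was weighted equally: (95.6 + 94.2 + 92.4)/3 ≈ 94.1 and (92.9 + 91.7 + 90.8)/3 = 91.8. A quick check of that arithmetic:

```python
# Per-type accuracies from the table (N = 2, 3, 5).
training = [95.6, 94.2, 92.4]
testing = [92.9, 91.7, 90.8]

# Unweighted mean over the three types, rounded to one decimal as in the table.
total_training = round(sum(training) / len(training), 1)
total_testing = round(sum(testing) / len(testing), 1)
print(total_training, total_testing)
```

The same pair of values appears as the ALPBoost row of the multiple-appliance classifier comparison.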

| No | Classifier | Training Average Accuracy (%) | Testing Average Accuracy (%) |
|---|---|---|---|
| 1 | SVM | 85.6 | 82.1 |
| 2 | KNN | 82.3 | 80.5 |
| 3 | Random Forest | 89.6 | 85.4 |
| 4 | AdaBoostM2 | 90.8 | 88.7 |
| 5 | LPBoost | 92.3 | 90.5 |
| 6 | ALPBoost | 94.1 | 91.8 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Min, C.; Wen, G.; Yang, Z.; Li, X.; Li, B. Non-Intrusive Load Monitoring System Based on Convolution Neural Network and Adaptive Linear Programming Boosting. Energies 2019, 12, 2882. https://doi.org/10.3390/en12152882
