1. Introduction and Background

Due to the decreasing trends of feed-in tariffs (a premium rate paid for electricity fed back into the electricity grid from a designated renewable electricity generation source) for solar-generated electricity in many countries (including Australia), there has been an accelerated interest and need for versatile energy management systems (EMS) for end-users to increase the generation of electricity and the capacity for power transmission from various regions, both remote and metropolitan, to meet the rising consumer energy demands [

1]. EMS are able to monitor, control and optimize the transmission and use of solar and conventional energies [

2]. However, the prediction error on the power output from a solar power system can cause a negative effect on the system profitability. Considering this, an accurate predictive tool for solar can help reduce the uncertainty of power generation (solar photovoltaic), as well as to increase the conversion efficiency (solar thermal) in the future. Such tools can be used to explore and evaluate the sustainability of long-term solar-powered energy installations in all regions, irrespective of their location.

The magnitude of power generated by a solar photovoltaic (PV) system and conversion efficiency (solar air heater, solar water heater, solar concentrator) is largely a function of the global solar radiation (

GSR) [

3]. However, stochastic components of solar energy variability depend on the cloud coverage characteristics, as well as factors including the aerosol, dust particles, smoke and airborne pollutants that are largely difficult to measure on an ongoing basis and, therefore, must be derived from remotely-sensed data products. In addition to this, the intermittency and randomness in atmospheric variables and a lack of data for remote or regional sites make the prediction of long-term availability of

GSR to support a future solar PV, as well as a solar thermal system quite challenging. Although

GSR is one of the most commonly-monitored meteorological variables, measurement stations remain sparse, particularly in the Southern Hemisphere [

4]. Even if a measurement station has been set up, the measured data can be unreliable and questionable, due to a lack of regular maintenance and issues with calibration of the instruments in a regional or remote location [

5]. To surmount these issues, the opportunity to adopt satellite-derived predictors to estimate long-term

GSR presents an alternative and viable avenue for future exploration of solar energy.

To explore solar energy potentials, many techniques have been developed to predict

GSR, which can be largely categorized as follows: (I) empirical models with simple mathematical equations: linear, quadratic and polynomial equations to emulate the links between

GSR and its related meteorological variables; (II) remote sensing retrieval that is based on images from satellites used to predict

GSR [

6]; (III) soft computing or data-driven models that apply artificial intelligence techniques to model the erratic behaviour of

GSR received on the Earth’s surface. The requirement for any predictive model for solar applications is that it must be an appropriately representative model developed, calibrated and validated to extract intrinsic features related to

GSR prediction.

Data-driven models are becoming increasingly promising tools for electrical power [

7,

8] and solar radiation prediction [

9,

10,

11,

12,

13,

14]. Single hidden layer (SHL) neural networks, using the artificial neural network (ANN) as a black-box tool, have been designed for both short-term [

15] and long-term prediction of

GSR [

13,

16]. A recent study in Australia designed an ANN model at four locations using the European Centre for Medium Range Weather Forecasting (ECMWF) reanalysis data as an input [

10]. In spite of the acceptable performance in this study, inputs were selected from a limited set of meteorological variables from weather stations (e.g., latitude, rainfall, sunshine duration, humidity, temperature) and, therefore, did not consider additional predictors, such as those available in satellite data repositories that could possibly influence

GSR.

To address the potential problems associated with the inadequacy of data, the opportunity to use satellite products from the National Aeronautics and Space Administration (NASA), Goddard Online Interactive Visualization and Analysis Infrastructure (GIOVANNI) repository is an alternative avenue to generate

GSR forecasts, particularly feeding the model with important variables such as land surface temperature, cloud-free days, aerosol optical depth and cloud temperature that are highly likely to moderate the amount of solar radiation received at the Earth’s surface. In fact, recent studies have utilized land surface temperature with other satellite-derived variables to model long-term

GSR in regional Queensland and over the Australian sub-continent [

9,

13], although none have used a sophisticated method (e.g., deep learning algorithms).

Recently, to address potential limitations of ANNs, particularly arising from the algorithm being a single hidden layer neuronal system, a number of newer neural network techniques such as deep learning (DL) have also been implemented [

17] and shown to generate a superior accuracy compared to a single hidden layer model. Deep learning is designed to use a neural network structure similar to the ANN to represent inputs and target data. These models use multiple feature extraction layers and learn the complex relationships within the data more efficiently. These DL methods have been widely implemented in medical imaging, speech recognition and natural language processing, autonomous driving and computer vision. However, there have been only a few prior studies that have employed a DL model for

GSR prediction, especially using satellite-derived predictor datasets.

To address the limitations of single hidden layer neuronal models, this paper adopts the deep neural network (DNN) and deep belief network (DBN), the two fundamental categories of DL algorithms, coupled with satellite-derived data to predict long-term

GSR, where monthly averaged daily values are modelled for solar cities in Australia. These solar cities have previously been established as potential future sites for solar energy projects that have a low cloud cover and limited aerosol concentrations and are thus well suited for solar energy. To provide a sound context for developing DNN and DBN models to predict the

GSR, the merits of DL models include the capability to extract much deeper and naturally inherent data features within a predictor-target matrix, mainly to provide more accurate predictions [

18]. For example, a DNN approach is able to boost the predictive power of the ANN model by deepening and replicating its hidden layers and also leveraging its internal structures to model the

GSR accurately. Moreover, a DBN model [

19] is able to avoid the problem of overfitting and also avoiding the learning mode being halted when a local optima emerges in a feature space. The merits of deep learning models can, therefore, help address the unavoidable drawbacks of conventional approaches, e.g., an ANN model [

20].

Many studies are currently using deep learning for time series forecasts [

21,

22,

23,

24]. Some results reveal a DBN model’s superiority over a linear autoregressive and a conventional back-propagation neural network (i.e., ANN) model. Furthermore, literature on

GSR prediction problems using deep learning approaches has rather been limited to short-term forecast horizons (i.e., minutes and hours), and these studies have used deep learning based on long short term memory network or convolutional neural networks. However, a longer forecast horizon (i.e., weekly or monthly model) can be useful for exploring the long-term prospects of solar energy [

25], leading to better policy, implementation of new solar powered sites and expansion of solar energy systems in remote and regional locations where solar radiation may be in abundance [

9,

10,

13].

A literature review, particularly the related review articles [

26,

27,

28], shows that the current literature is relatively scarce, and even non-existent, in terms of prior studies conducted to predict monthly

GSR using deep learning approaches. From a practical point of view, the future planning for an electricity grid certainly requires the prediction of solar radiation a few months ahead of time [

29]; therefore, a monthly predictive model is particularly desirable. That model can be useful for agricultural crop growth [

30], production of algal-derived biofuels [

31], and key decisions made for many applications, where the estimation of long-term solar radiation may be required.

The aims of this study are as follows: (1) to design and implement a deep learning (DL) approach using deep belief network (DBN) and deep neural network (DNN) algorithms and to evaluate its relative success in estimating the long-term daily average monthly GSR using remotely-sensed MODIS-derived products as the DL model’s input variables. Here, we consider the application study site as Australia’s solar cities, namely: Blacktown [33.77°S, 150.90°E], Adelaide [34.92°S, 138.59°E], Townsville [19.25°S, 146.81°E] and Central Victoria [36.74°S, 144.28°E], all four of which are situated in the dry sub-tropic region and are relatively enriched with solar exposure. The next aims of the study are: (2) to apply wrapper and filter-based feature selection techniques on the MODIS satellite data in order to select the optimum predictor variables for these prescribed DL models; (3) to adopt the Gaussian Emulation Machine approach to perform a sensitivity analysis of MODIS variables to deduce their relative influence on GSR prediction; (4) to benchmark the deep learning models (i.e., DBN and DNN) with a multitude of competing data-driven approaches, namely: single hidden layer (ANN), and ensemble models (random forest regression (RF), extreme gradient boosting regression (XGBR), Gradient Boosting Machine (GBM), and decision tree (DT).

By testing the developed models over Australia’s solar cities, this paper aims to provide valuable contributions to exploring the utility of a deep learning approach of improving other previous studies (e.g., [

10,

11,

12,

13]) where single hidden layer neuronal systems have been used. The novelty lies in the incorporation of MODIS-derived predictors to foster new insights for estimating long-term solar energy for any region that does not have atmospheric monitoring systems. More importantly, it can rely on remote sensing data for

GSR prediction. These models can promote solar energy in remote or regional areas where satellites can be employed for long-term evaluation.

3. Data, Importance and Context of the Study

This study employs monthly averaged daily

GSR records to develop a prediction model using DBN and DNN for four solar cities of Australia: Blacktown [33.77°S, 150.90°E], Adelaide [34.92°S, 138.59°E], Townsville [19.25°S, 146.81°E] and Central Victoria [36.74°S, 144.28°E]. Although the potential for use of solar energy in these regions remains high, deep learning-based models for

GSR are not easily available. Furthermore, in most states in Australia, the electricity is provided through power plants located in the central and southern areas, and because of this, there are huge transmission and distribution costs and losses [

54]. Hence, there is a potential to harness locally available solar energy, particularly in remote sites where solar forecast models are actively being tested [

9,

10,

11,

12,

13], although the studies are using simplistic models rather than deep learning approaches.

Other than focusing on solar city sites with abundant solar radiation, this study purposely adopts MODIS satellite variables to model

GSR since historical data related to the target variable (

GSR) play a key role in helping evaluate solar energy availability. Remote sensing data have already been identified as a practical predictor for solar problems [

55], so in this view, the coupling of a deep learning model with satellite-derived products is a major improvement over the use of station-based data mainly because the acquisition of satellite imagery can be feasible for inaccessible sites with no measurement infrastructure as long as a footprint is identified. For long-term forecast horizons (e.g., monthly), satellite data remain abundant for a diverse range of spatial and temporal resolutions and, recently, have been adopted in global solar radiation prediction problems [

9,

13]. Although recent studies have considered solar radiation models trained with MODIS datasets, these were limited to cloud-free predictor variables and land surface temperature. Considering this, significant MODIS data have not been used in previous studies [

56], although a recent study [

12] has estimated solar radiation using MODIS-derived predictors without a deep learning model.

3.1. MODIS Satellite-Derived Predictor Data

To design a deep learning model for

GSR prediction over long time horizons, monthly predictor data have been extracted from 1 March 2000–2018 from NASA’s Goddard Online Interactive Visualization and Analysis Infrastructure (GIOVANNI) repository.

Table 1 lists the predictors. The objective variable (i.e., integer values of land surface daily global solar radiation) were downloaded from a ground-based source, the Scientific Information for Land Owners (SILO) database. The Long Paddock SILO database is operated by Queensland Government Department of Environment and Science in the Department of Science, Information Technology, Innovation and the Arts (DSITIA). This data cover each of the four solar cities [

57].

The GIOVANNI data offer a fast and flexible method to explore links between physical, chemical and biological parameters useful for inter-comparing multiple satellite sensors and algorithms [

58]. Since only a relatively short investment of time and effort is required to become familiar with the GIOVANNI system, a main advantage is its ease-of-use so that researchers who are unfamiliar with remote sensing can use the system to determine their data needs applicable to their topic area [

59]. Missions, instruments, or projects providing data products available in GIOVANNI are useful for

GSR modelling as they include the Atmospheric Infrared Sounder (AIRS), Tropical Rainfall Measuring Mission (TRMM), Ozone Measuring Instrument (OMI), Moderate Resolution Imaging Spectroradiometer (MODIS), Modern Era Retrospective-analysis for Research and Applications (MERRA) project and North American Land Data.

In this paper, data from a MODIS instrument on-board Terra (EOS AM) and Aqua (EOS PM) satellites have been utilized. These satellite (MODIS) meteorological data are widely and freely available for public access [

60] and, therefore, useful for solar energy exploration and modelling in a diverse range of sites.

3.2. Data Preparation, Feature Selection and Sensitivity Analysis

Before the

GSR model was developed, all inputs were normalized in the range of (0, 1) [

9]. Normalization was done to have the same range of values for each of the inputs to the models. This normalization procedure guarantees stable convergence of weight and biases [

61,

62].

where

X, Xmin and

Xmax represent input data and minimum and maximum values, respectively.

After this, the data were segregated into training and testing sets. Since there is no rule for data segregation, we used an earlier researcher’s approach [

63,

64] to divide into 80% (training) and 20% (testing) sub-sets, but 10% of the training data were separated again for the purpose of model validation, mainly to eliminate issues related to a model bias through a cross-validation process.

In this paper, a total of five filter- and 10 wrapper-based feature selection (FS) algorithms were employed to extract the most important MODIS-derived predictors related to the target (i.e.,

GSR).

Table 2a,b outlines the FS algorithms. By removing irrelevant, noisy or redundant features from the original space, FS can alleviate the problem of overfitting, improve the performance [

65] and save time and space costs that are normally an issue of consideration in a deep learning algorithm [

66]. Importantly, through an FS strategy, we can also get deeper insights into the MODIS and

GSR data by analysing the importance of all and the most relevant features that can affect the future sustainability of solar energy.

For this study, FS divided into two categories, filters and wrappers, has been used. The filter method was used as a pre-processing step using criteria that did not involve any learning, and by doing that, it did not consider the effect of a selected feature subset on the performance of the algorithm [

67,

68]. Wrapper methods, on the other hand, were used to evaluate a subset of features according to the accuracy of a predictor [

65], where search strategies were used to yield nested subsets of variables, and the variable selection was based on the performance of a learned algorithm [

69]. In accordance with

Table 2a,b, this study had multiple FS algorithms to select the most optimal predictors of long-term

GSR carefully.

Other than incorporating the FS strategy, we also performed a sensitivity test to examine the statistical relationships between

GSR and its selected variables. To estimate

GSR in a region with limited predictors, a solar engineer may be interested in checking the importance of a given set of predictors that effectively contribute to a predictive model. This information is useful for decision making in solar power plant design, especially in selecting the most appropriate predictors for

GSR and enhancing the understanding of the correct measurements to obtain when those data are used. In this study, we employed a global sensitivity analysis method using the Gaussian Emulation Machine (GEM-SA) software [

70]. For detailed information on this technique, readers can consult [

71]. To deduce which of the MODIS-derived inputs produced a substantial effect on the target variable (

GSR), two GEM-SA parameters were used: the main effect (

ME) and the total effect (

TE). The

ME enumerates the influence of just one parameter varying in its own in relation to

GSR, while the

TE comprises the

ME plus any variance due to possible interactions between that parameter and all of the other inputs varying at the same time [

72].

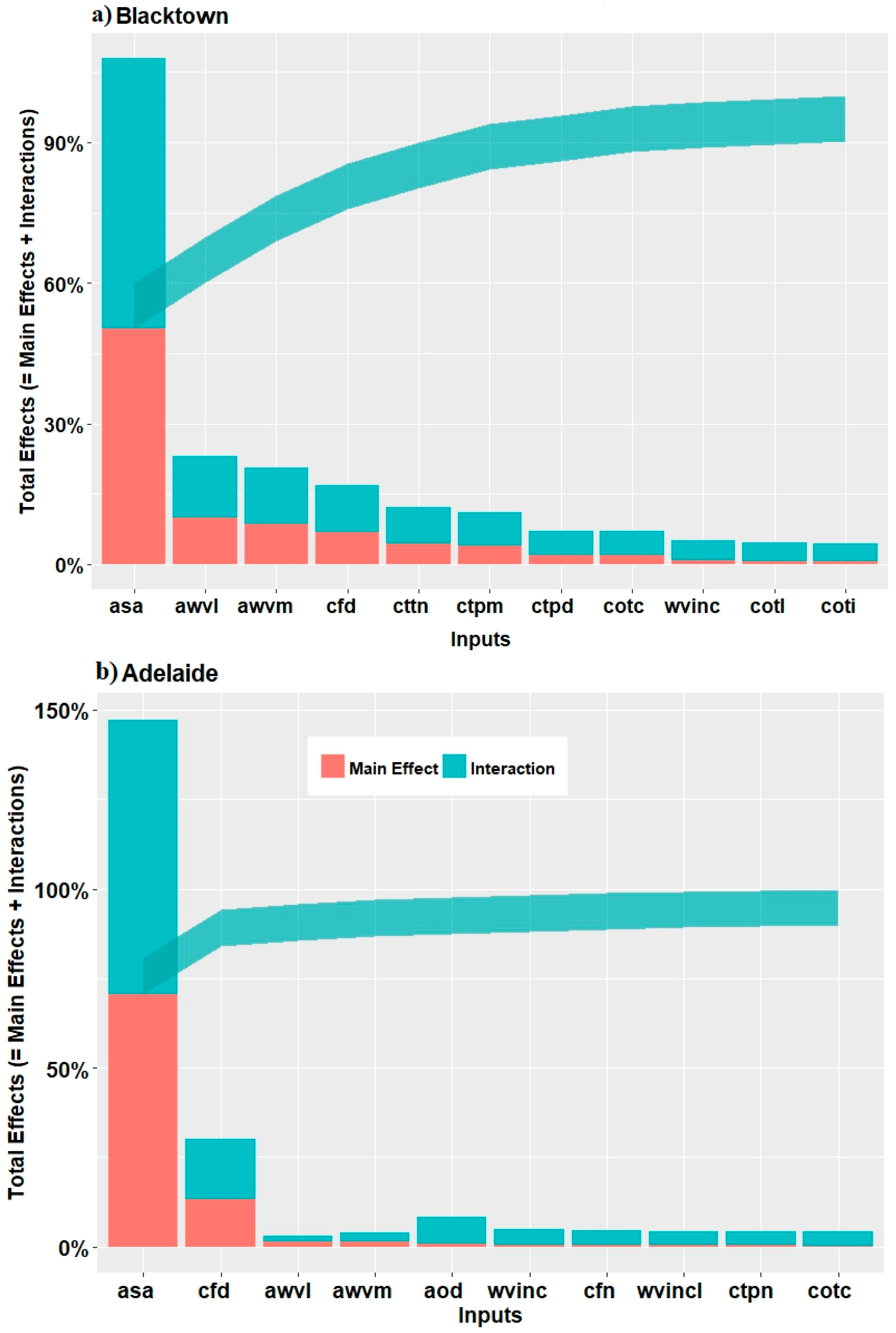

Figure 2 is a “Lowry plot” and shows the relative contribution to the total variance in

GSR, from each selected MODIS input. Notably, the vertical bars show

ME and

TE for each input ranked in order of their main importance, whilst the lower and upper bounds show the cumulative sum of the main and total effect, respectively. This analysis shows that almost 72%, 50% and 27% of the variance in

GSR was due to the

asa variable (i.e., aerosol scattering angle) for the Adelaide, Blacktown and Townsville study sites, respectively. In contrast, for the case of Central Victoria, almost 40% of the total variance in

GSR was due to

awvm (i.e., medium atmospheric water vapour) compared to about 23% due to

asa. For Adelaide, however, the second highest contribution was derived from day-time cloud fraction (

cfd ≈ 27%). It can therefore be concluded that for Adelaide, these input variables are important and are likely to affect the performance of the deep learning models if they are neglected. Similarly, for Central Victoria, the low atmospheric water vapour

(awvl), aerosol scattering angle (

asa), and day-time cloud fraction (

cfd) were found to be the second, third and fourth highest contributors analysed by the GEM-SA method, whereas the other MODIS input variables appeared to have a negligible effect (<5%).

In contrast to the above results, for the case of Blacktown, all of the other MODIS-derived inputs had a negligible effect on GSR with less than 10% of the total variance. It can therefore be concluded that to include 90% of the variance, the first six MODIS parameters (asa, awvl, awvm, cfd and cttn) are required in modelling GSR for Blacktown. Similarly, the first 10 MODIS parameters (asa, awvm cfm, awvl, cfd, ctpm, awvh, cotc and dbael) are required for Townsville to include 90% of the variance. The effect of MODIS inputs on the target variable can be easily identified with the GEM-SA method. As revealed in this analysis, it should be noted that the most important MODIS inputs are not the same for all four locations; hence, a sensitivity analysis of FS-based inputs is necessary to identify more clearly the role of these predictors in modelling the objective variable.

3.3. Deep Learning Predictive Model Design

In this study, deep learning was implemented in Python with the Keras Deep Learning library together with Theano [

73] used for modelling

GSR in a computer with an Intel core

i7 processor @ 3.3 GHz and with 16 GB RAM memory.

3.3.1. Deep Belief Networks

After a feature selection process and sensitivity analysis of MODIS-derived predictors, a DBN model architecture was designed. This study followed the notion that there is no theoretical basis to set a correct number of layers in a deep learning model. Indeed, insufficient hidden layers means that there could be no proper feature space, resulting in an under-fitted model, but too many layers can lead to the issues of over-fitting, as well as an “ill-posed” problem with higher computational costs [

74]. Considering these, a trial and error method was adopted to determine the optimal structure of a DBN model, selected carefully from a total of 12 different neuronal architectures.

For the DBN model, this study used back-propagation for all trained models, but the activation functions were switched between rectified linear unit (ReLU) and the sigmoid equation with a regularization parameter used for fine tuning. The finer details of DBM models are as follows.

- (1)

Back-propagation was used to adjust weights, using the derivative chain principle on model errors that were propagated from the last to the first layer. The two parameters implemented were the batch size [

2,

5] and epochs (100, 200), where training samples were divided into groups of the same size. Notably, the batch size refers to the samples in each group fed to the network before weight updates are performed, whereas epochs are related to the iterations of fine-tuning. Generally, the network can undergo fine-tuning with a smaller batch size or a larger number of epochs [

75] including a large iteration set of 1000 in this study.

- (2)

To avoid overfitting, a least absolute shrinkage and selection operator (i.e.,

L2 or lasso regularization) was used to update the cost function by adding a regularization term [

76], such that the weights were reduced, to assume a neural network with a smaller weight matrix, leading to a cost-efficient DBN model. This is likely to reduce the overfitting [

77], so in this study, we used the

L2 regularization as 0.01.

- (3)

The learning rate for stacked restricted Boltzmann machine (RBM) and back-propagation were fixed to 0.01 and 0.001 for the DBN model design following earlier studies [

78].

In accordance with

Table 2, the input nodes were selected by the feature selection algorithm, and hidden layers were deduced by trial and error with analysis of the influence on training performance. As a result, one hidden layer was used at first, and increased up to two layers with variable layers and neurons to optimize the predictive model. This resulted in 12 distinct DBN architectures where the DBN

10 model was the optimal model.

Table 3 lists the effect of feature selection in designing the optimal DBN model, where the relative root mean square (

RRMSE %) generated for a selected study site, Adelaide in the model training phase, is illustrated. Evidently, MODIS-based predictors acquired through the particle swarm optimization (PSO) algorithm yielded the lowest

RRMSE (≈ 2.98%) in the training DBN

10 model, as identified in

Table 3.

Similarly (not shown here), the MODIS-derived predictors analysed by the genetic algorithm with DBN11 (RRMSE ≈ 3.25%), analysed with step feature selection for DBN2 (RRMSE ≈ 3.79%) and the relief algorithm with DBN2 (RRMSE ≈ 3.71%), yielded the lowest RRMSE compared to the other DBN models for Blacktown, Townsville and Central Victoria, respectively. In addition, the increase in neurons in the hidden layers above 50 was seen to increase training errors for Adelaide, with RRMSE being elevated by 65%, 174%, 81%, 62%, 45%, 55%, 19% and 21% for DBN2, DBN3, DBN4, DBN5, DBN6, DBN7, DBN8 and DBN9, respectively (not shown here). In this study, a total of 180 DBN architectures were developed to generate the optimal GSR predictive model.

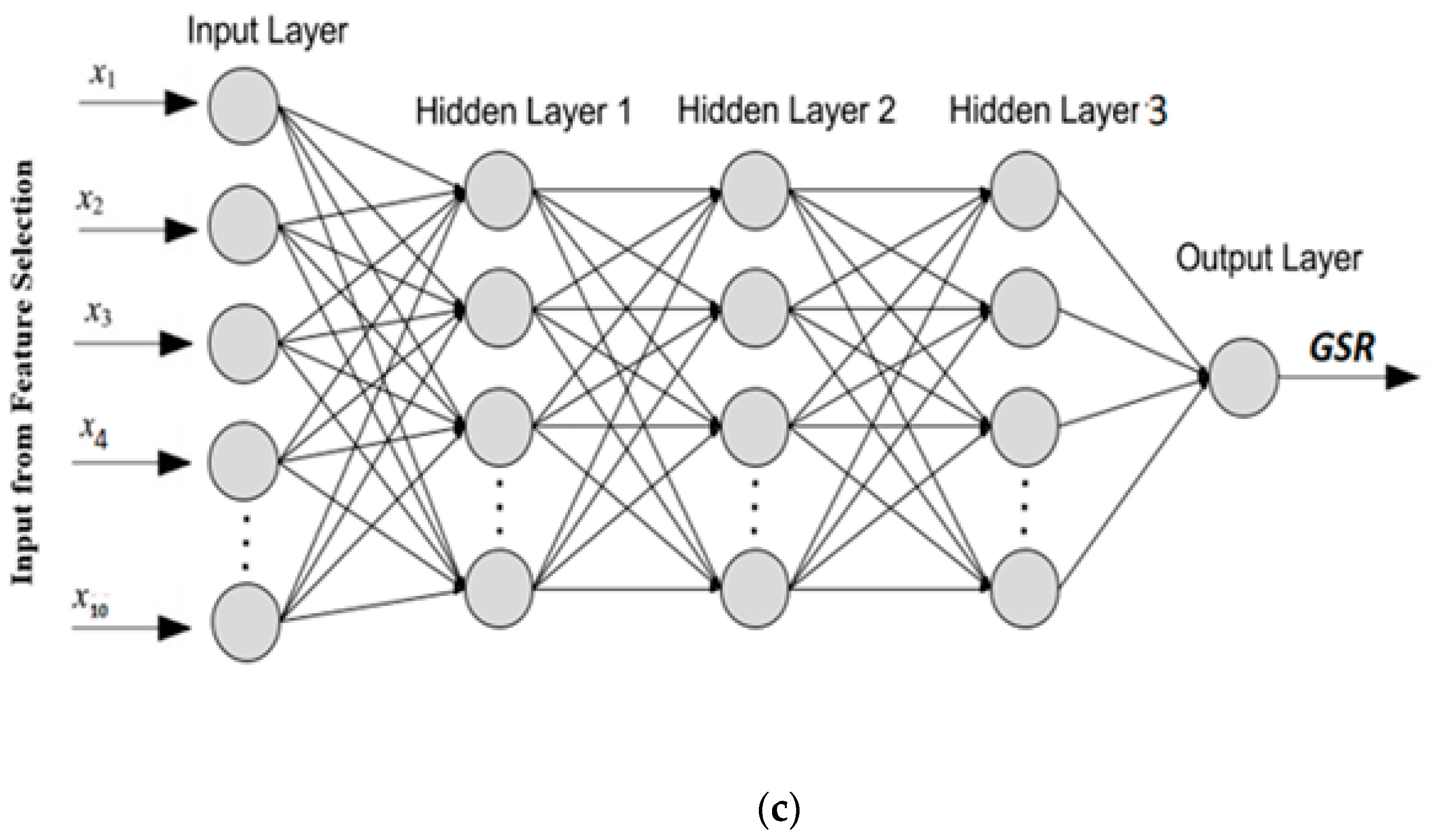

3.3.2. Deep Neural Network

To design a competing deep learning approach for

GSR prediction, the next objective model, DNN with 3 hidden layers, 1 input layer where MODIS-derived predictor variables were incorporated from the FS process and 1 output layer corresponding to the target (i.e.,

GSR), was designed. As with the case of DBN (

Section 3.3.1, there appeared to be no preferred method to optimize a DNN model, so a trial and error approach was implemented, where the number of neurons in the hidden layer, activation function, batch size, and number of neurons were tested randomly to satisfy the most accurate

GSR model.

Specifically, the modelling experiments were executed 10 times for the same DNN configuration to attain the best result, with the various steps as follows.

In each trial and error, DNN was trained using popular algorithms: AdaGrad, RMSProp, AdaDelta, Adam, Adamax, Nadam and

SGD. It is noteworthy that the Adam algorithm is normally quite popular [

79], given it is an enhanced combination of

RMSProp and the

moments techniques [

80]. In this study, we utilized all seven algorithms to determine the optimal DNN architecture.

To avoid overfitting, three measures were employed. First, we added

L2 regularization to penalize the weights in the deep neural network. Second, the dropout technique was used to omit the subset of hidden units at each iteration of a training procedure [

81]. Third, early stopping was applied by monitoring the validation performance with the last 10% of the training dataset, in accord with earlier studies [

82].

In total, six distinct DNN model architectures with different hyperparameters were developed.

Table 4 shows the architecture for one study site (Central Victoria), where the best model designed with the

Adam and

SGD algorithms was shown to generate the lowest

RRMSE.

3.3.3. Comparison Models

To benchmark the objective deep learning model (i.e., DBN and DNN), this study used the

Scikit package [

83] to design a Python-based predictive model for

GSR with XGBoost [

84], gradient boosting regression [

85], decision tree [

86] and the random forest regressor [

87]. For the tuning of the regression model’s hyperparameters, the study used a grid search [

88] package where several parameters like the maximum depth of the tree (

max_depth), the number of samples required to split an internal node (

min_samples_split), the number of features to consider when looking for the best split (

max_features) and the others were tuned.

Table 5a,b shows a full list of parameters tuned by the grid search method with 10-fold cross-validation, where the optimal parameter for each of the study sites, yielding the lowest

RRMSE, is shown.

For the ANN model, MATLAB 2017b software was utilized [

89]. In this study, ANN with various hidden neurons in its hidden layer (1–50) was used with the Levenberg–Marquardt back-propagation algorithm (

trainlm) [

90] including the hyperbolic tangent and logarithmic sigmoid as activation functions, tested in hidden and output layers, respectively. The ANN model with the low

RRMSE and high correlation coefficient (

r) was selected.

3.4. Model Performance Criteria

To evaluate the performance of the proposed deep learning models against their comparative counterparts, statistical metrics were employed. Commonly-used metrics like

RMSE,

MAE and Pearson’s correlation coefficient (

r), together with skill score metrics (

) defined in Equation (7) are as follows.

where

refers to the error (

RMSE) obtained in the predicted results employed to assess model performance. Here,

is the

RMSE of a persistence model. The persistence model, which is also called the naive predictor, considers that the

GSR at

t + 1 equals the

GSR at

t. The interpretation of this metric is that a value of

close to zero will indicate that the performance of the model is similar to that of the persistence model.

By contrast, if this metric is a positive value, the models under study are likely to outperform the persistence model (which is the baseline), whereas if attains a negative value, then the persistence model is likely to be better than the models under study. This study also utilized the normalized performance indicators based on the Nash–Sutcliffe coefficient (ENS), Willmott’s index (WI) and the Legate’s and McCabe’s index (LM), which provide advanced assessment of models relative to the ENS and WI values.

In addition to these metrics, this study considered absolute percentage bias (

APB) and Kling–Gupta efficiency (

KGE) as key performance indicators for

GSR prediction. The optimal value of

APB is 0.0, with low-magnitude values indicating accurate model simulation; whereas,

KGE is a model evaluation criterion that can be decomposed into the contribution of the mean, variance and correlation on model performance [

91]. A

KGE value of unity is considered as the perfect fit. Similarly, underestimation bias and overestimation bias of models are represented by positive and negative values of

APB, respectively.

The mathematical derivation is as follows:

where

and

are the predicted (i.e., estimated) and measured values, respectively, and

refers to the average value of the respective data in the tested set.

4. Results and Discussion

In this section, the results generated by the DBN and DNN algorithms within the testing phase are presented to ascertain the appropriateness of the two deep learning methods used for

GSR prediction, tested at diverse sites including Australia’s solar cities. These were also benchmarked against single hidden layer (i.e., ANN) and ensemble models (random forest regression (RF), extreme gradient boosting regression (XGBoost), Gradient Boosting Machine (GBM) and decision trees (DT). It is noteworthy that the results are only presented for DNN

10 and DBN

2, the two optimized models in accordance with

Table 4 and

Table 5.

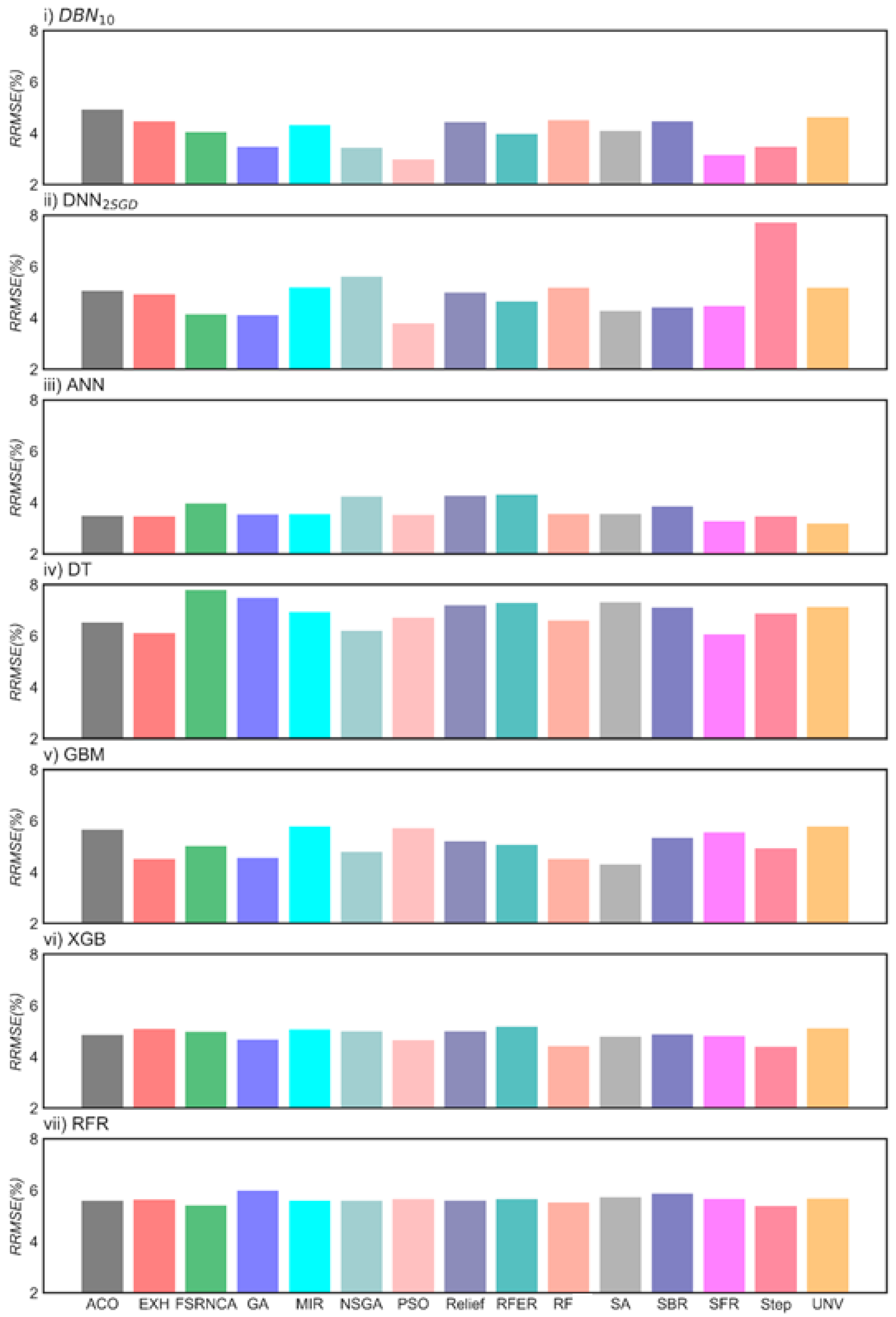

Figure 3 shows this for Adelaide, where the optimal model was selected on the basis of the lowest

RRMSE. It should be noted that for this location, the optimized models (DBN

10 and DNN

2) (where SGD was used as the back-propagation algorithm) with input selected by the PSO approach were seen to yield a better result. Similarly, for the ANN model, the inputs screened by the nondominated sorting genetic algorithm (NSGA) feature selection approach appeared to be better, and similarly for the DT, GBM, RF and XGB models, inputs screened by the sequential forward selection (SFR), SA, sequential backward selection (SBR), step and NSGA appeared to yield a relatively low

RRMSE compared to other feature selection methods.

The model designation is as follows: DBN10 = Deep Belief Network 10, DNN2SGD = Deep Neural Network 2 with SGD as back-propagation, ANN = neural network, DT = decision tree, RF = random forest regression, GBM = gradient boosting machine and XGBR= extreme gradient boosting regression.

In this study, a feature selection (FS) and sensitivity analysis process utilizing a Lowry plot (

Figure 2) were combined to deduce the best FS method for

GSR prediction. Note that the nomenclature of any model is designated as FS-(model name), for example, for the Adelaide study site, the model names are designated as PSO-DBN

10, PSO-DNN

2SGD, PSO-ANN, PSO-DT, PSO-GBM, PSO-RF and PSO-XGBR. The number that appears after the respective model name, for example, DBN

10, is used to represent Deep Belief Network Model Number 10, as deduced from

Table 3. Similarly, the subscript (SGD, AdaGrad, Adam) in the model names represent the back-propagation algorithm that was used in training the neural network model. For the ANN, however, only one back-propagation algorithm (LM), the most popular algorithm, was used, so the subscript is not mentioned.

Table 6 shows the training root mean squared error generated for different FS algorithms integrated with deep learning and its respective comparative algorithms, required to select the critical MODIS-derived predictors. In accordance with this evaluation, for the DBN

10 model, the GA appeared to be the best FS algorithm for the study site Blacktown, whereas the relief algorithm was the best for the case of Central Victoria, and the PSO algorithm was the best for both the Adelaide and Townsville study sites. However, when the DNN

2 model was evaluated, root mean squared errors were slightly higher for each FS algorithm compared to those obtained from DBN

10, but these predictive errors remained much lower than all single hidden layer and ensemble models, thus confirming the superiority of deep learning over the less sophisticated models. When both deep learning models (i.e., DBN

10 and DNN

2) were evaluated individually, there appeared to be a clear consensus that the DBN

10 model exceeded the performance of DNN

2, used with its best FS approach.

Table 7 compares deep learning models

vs. the counterpart models in the testing phase, measured by the correlation coefficient (

r), root mean squared error (

RMSE), mean absolute error (

MAE) and skill score (

RMSEss). As mentioned earlier, only the optimally-trained models with the lowest

MAE and

RMSE, the highest

r and the

RMSEss values are shown. Between the deep learning and comparative (SHL and ensemble) models, the DBN model yielded better

GSR predictions for all four solar cities. This is evident, for example, when comparing the DBN accuracy statistics (i.e.,

r ≈ 0.994

, RMSE ≈ 0.546 MJ·m

−2·day

−1, MAE ≈ 0.450 MJ·m

−2·day

−1 and

RMSEss ≈ 0.824 for Blacktown GA-DBN

10) with the equivalent ANN and GBM models result statistics (

r ≈ 0.989

, RMSE ≈ 0.739 MJ m

−2·day

−1, M

AE ≈ 0.536 MJ·m

−2·day

−1 and RM

SEss ≈ 0.739 for Blacktown GA-ANN and

r ≈ 0.988,

RMSE ≈ 0.664 MJ·m

−2·day

−1,

MAE ≈ 0.568 0 MJ·m

−2·day

−1 and

RMSEss ≈ 0.787 for Blacktown GA-GBM). Comparatively better results for DBN

10 models were also seen for all of the other solar cities, confirming the reliability of this deep learning approach, as a viable estimator

GSR, with implications for long-term solar energy assessments.

In conjunction with the statistical score metrics, the relative prediction errors were used to show the alternative “goodness-of-fit” for the predicted in relation to the observed

GSR data.

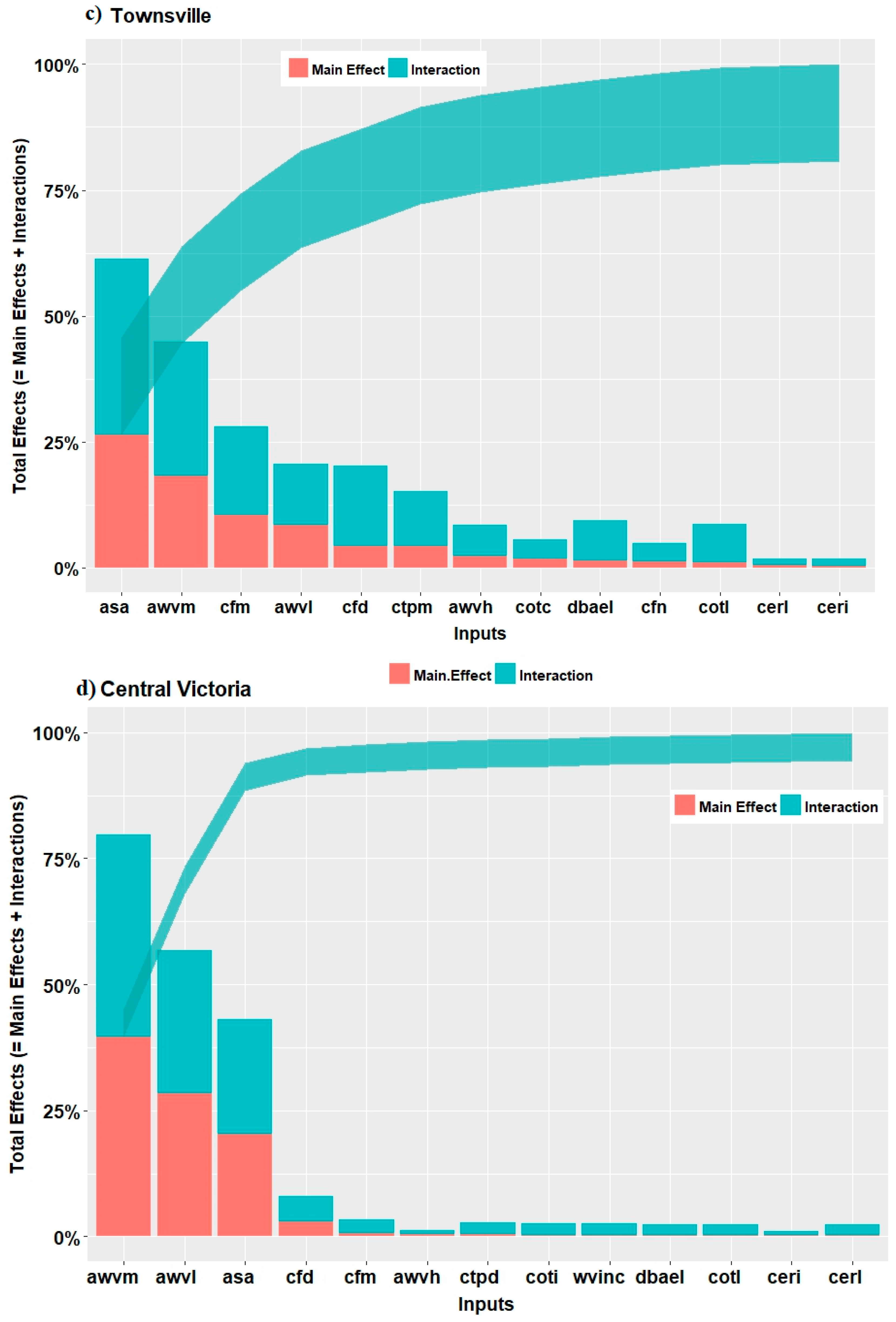

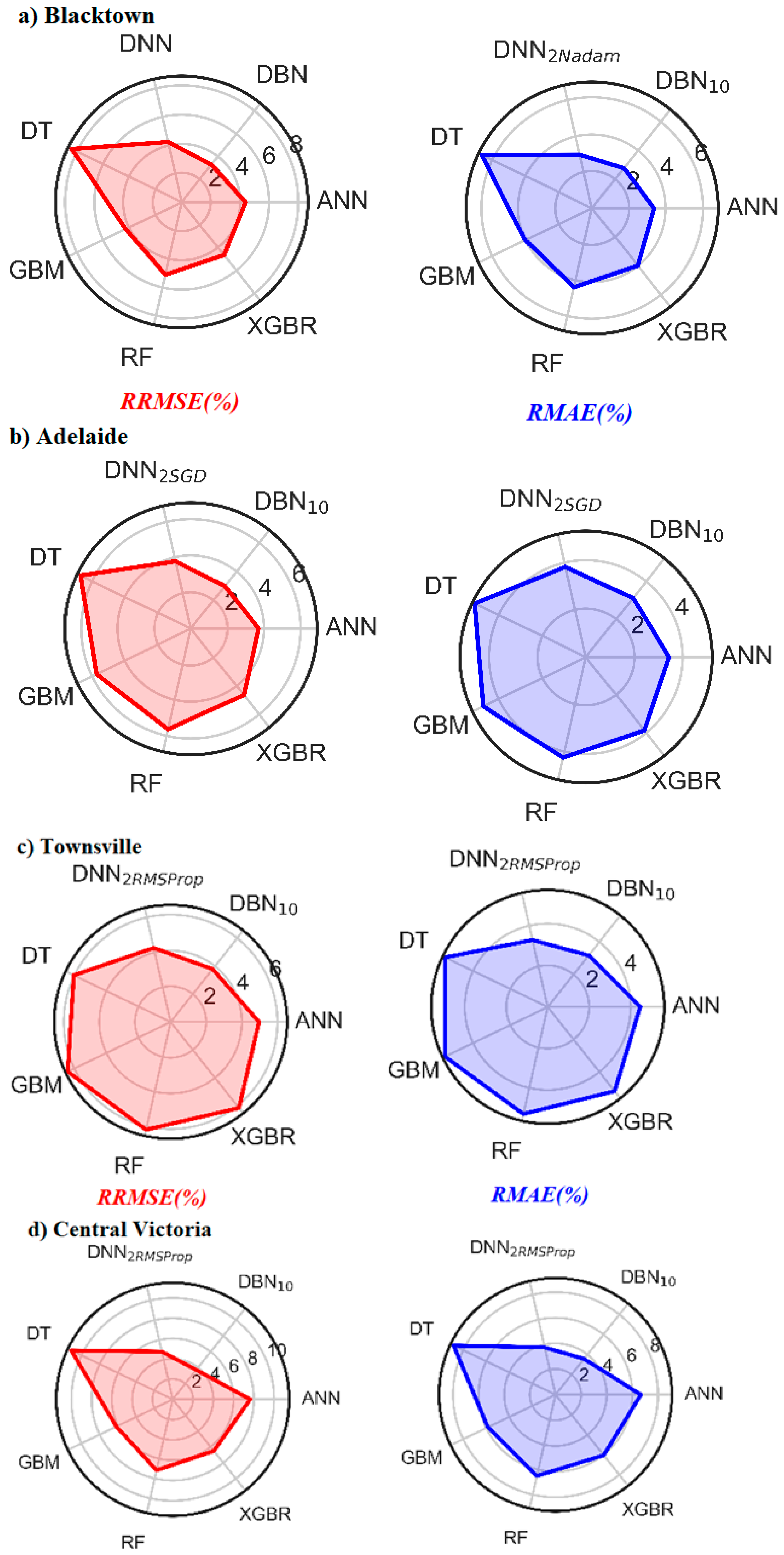

Figure 4 shows the radar plots in the model’s testing phase for DNN

2 and DBN

10 in terms of the

RRMSE (%) and

RMAE % values. Note that these percentage errors were also used as alternative metrics to enable the model comparison at geographically-diverse sites [

92]. It can be seen that the DBN model yielded high precision (with the lowest

RRMSE and

RMAE) followed by the comparative (SHL and ensemble) models.

For the optimal DBN model, the RRMSE and RMAE were found to be 3.279/2.763%, 2.989/3.124%, 3.713/3.572% and 3.792/3.175% for the study site Blacktown (GA-DBN10), Adelaide (PSO-DBN10), Central Victoria (Relief-DBN10), and Townsville (PSO-DBN10), respectively. On the other hand, the other deep learning model lagged behind the accuracy of DBN with 4.240/2.970%, 3.774/3.825%, 4.830/3.781% and 4.256/3.175% for Blacktown (GA-DNN2Nadam), Adelaide (PSO-DNN2SGD), Central Victoria (Relief-DNN2SGD) and Townsville (PSO-DNN2RMSProp), showing relatively good performance, compared to the SHL and ensemble models.

The SHL and ensemble models’ performances were lower than those of the DBN and DNN models, except for the study site Adelaide, where the PSO-ANN model (RRMSE/RMAE) was lower (3.702/3.442%) than the DNN model (RRMSE/RMAE ≈ 3.774/3.825%). In accordance with these outcomes, the relative measures concurred on the suitability of deep learning for GSR prediction at all four solar cities selected across Australia.

It is of interest to this study that a numerical quantification of model performance using Willmott’s index (

WI), Nash–Sutcliffe (

ENS) and Legates–McCabe’s index (

LM) was made where these metrics should ideally be unity for a perfect model. Subsequently, these results (

Table 8) indicated that deep learning is able to attain a dramatic improvement in comparison to SHL and the ensemble model (

Table 8).

The highest magnitude of WI ≈ 0.997, ENS ≈ 0.995 and LM ≈ 0.933 was registered for the Blacktown study site for a GA-DBN10 model. Intriguingly, the lowest value of WI ≈ 0.943, ENS ≈ 0.891 and LM ≈ 0.689 was registered at the Townsville study site for the PSO-RF model. Further, the GA-DBN10 model noted the increment in WI, ENS and LM by 5.2%, 9.06% and 21.02%, respectively, compared to the counterpart (SHL and ensemble) models for the Blacktown study site. A similar trend was also demonstrated for the other solar cities. Hence, it is evident that a deep learning model has a better potential to predict GSR over long-term periods.

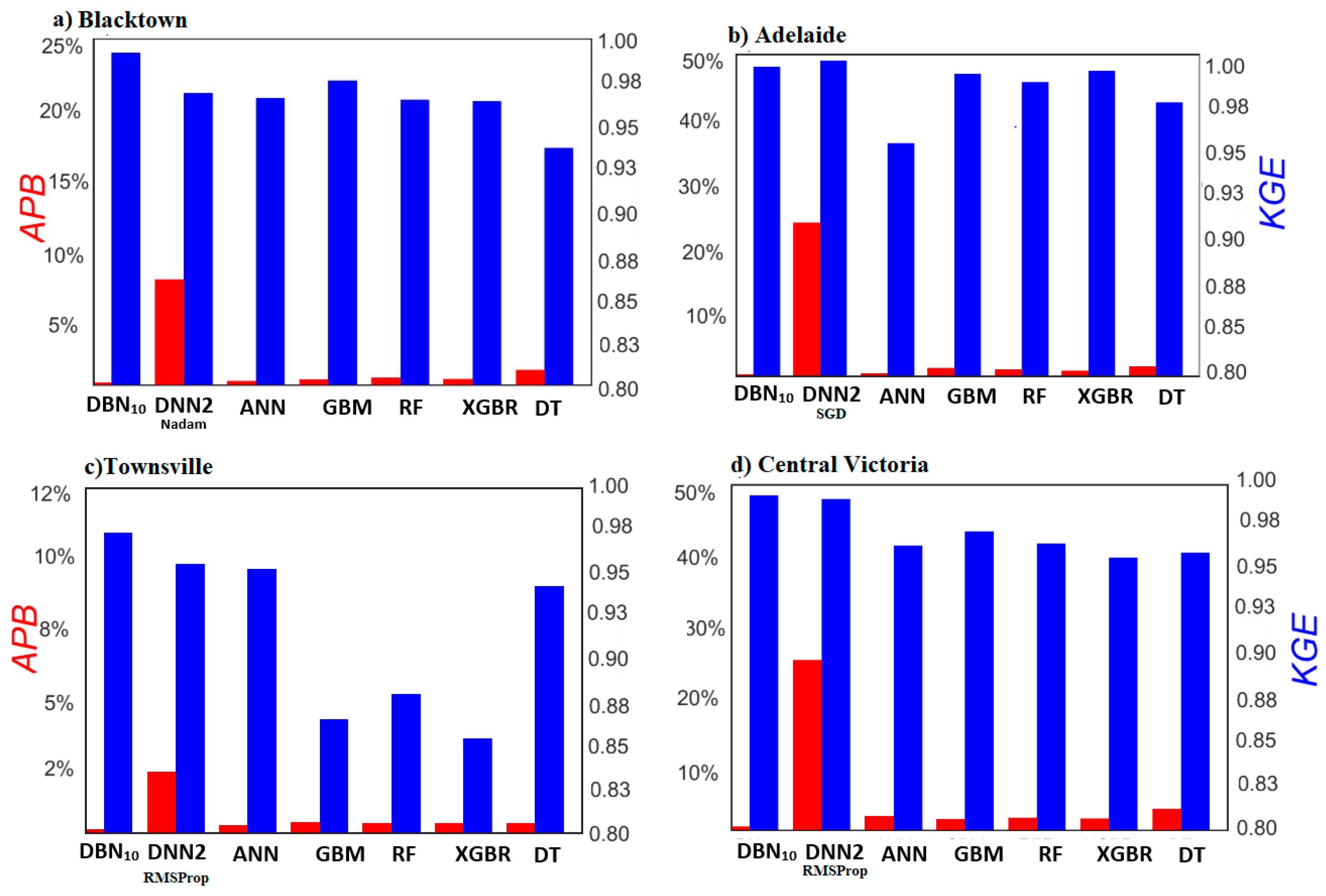

In this paper, a comprehensive evaluation of the deep learning approach for

GSR predictions was made in terms of the absolute percentage bias (

APB, %) and Kling–Gupta efficiency (

KGE) in the testing phase (

Figure 5). The evaluation of

KGE and

APB for these solar cities showed that the DBN

10 model constituted the best performing approach.

For example, KGE ≥ 0.99 and APB ≤ 0.025 for the case of Blacktown (GA-DBN10), Adelaide (PSO-DBN10), Central Victoria (Relief-DBN10) and Townsville (PSO-DBN10). Indeed, this plot shows that the magnitude of KGE was oriented toward unity, and the magnitude of APB was oriented toward zero for all deep learning models for all solar cities in consideration. Concurrent with the earlier findings, the deep learning model can be considered as a trustworthy and powerful tool for the prediction of long-term GSR, at least by the evidence generated so far.

Further insights were gained by checking the correspondence between the predicted and actual

GSR. Comparing the prescribed deep learning models with an earlier study using wavelet support vector machine models (W-SVM) [

14] applied in Australia, it became evident that the precision of the present model was relatively good for prediction of daily averaged monthly global solar radiation. In fact, in that study, their W-SVM model for the Townsville study produced a regression line equation

GSRpred =

0.849 ×

GSRobs +

3.02, whereas the present deep learning model generated

GSRpred =

0.969 ×

GSRobs +

0.678 and

GSRpre =

0.939 ×

GSRobs +

1.196 for DBN (PSO-DBN

10) and DNN (PSO-DNN

2RMSProp), respectively. It is therefore clear that the prescribed approaches exceeded the performance of earlier studies.

To assess a model’s stability for predicting

GSR, the spread of the prediction errors is illustrated with the help of a violin plot [

92] (

Figure 6). In plotting this, all of the sites’ actual and predicted

GSR were considered. It should be noted that a violin plot is a synergistic combination of a box plot and density trace that is rotated and placed on each side to show the distribution shape of these data. The interquartile range is represented by a thick black bar in the centre, whereas 95% confidence intervals are represented by the thin black line or whisker, and the median is represented by the dot.

The shape of the violin displays the frequencies of the values. As can be seen on the figure (

Figure 6) with a wider section of the plot, the prediction error (

PE) generated by the DBN model had a high probability of a value of zero as compared to the benchmark models. Likewise, the median error (white dot) for a deep learning model was lower than that of comparative (SHL and ensemble) models, and the shape of distribution (extremely thin on each end and wide in the middle) indicates that the

PE of DBN were highly concentrated around the median. Overall, it is noteworthy that the DBN model enjoyed superior performance relative to its comparative (SHL and ensemble) models tested for all four Australian solar cities.

To draw a more conclusive argument on the suitability of the deep learning model for

GSR prediction,

Table 9 shows the prediction error (%), with its respective normalized frequency of the datum points, in each error bracket tested for each of the four solar cities. The normalized frequency is presented as a percentage of the predicted points in each error bracket in terms of the total data points in the testing period. Consistent with the earlier results, the most accurate prediction was obtained by using a deep learning model as the

PE (%) attained the maximum value for the lowest range (e.g., Townsville ≈ 81 % (DBN and DNN) within [0 ⩽ |PE| < 4]) compared to the comparative (SHL and ensemble) models (e.g., Townsville ≈ 72.3 % (XGBR) within [0 ⩽ |PE| < 4]).

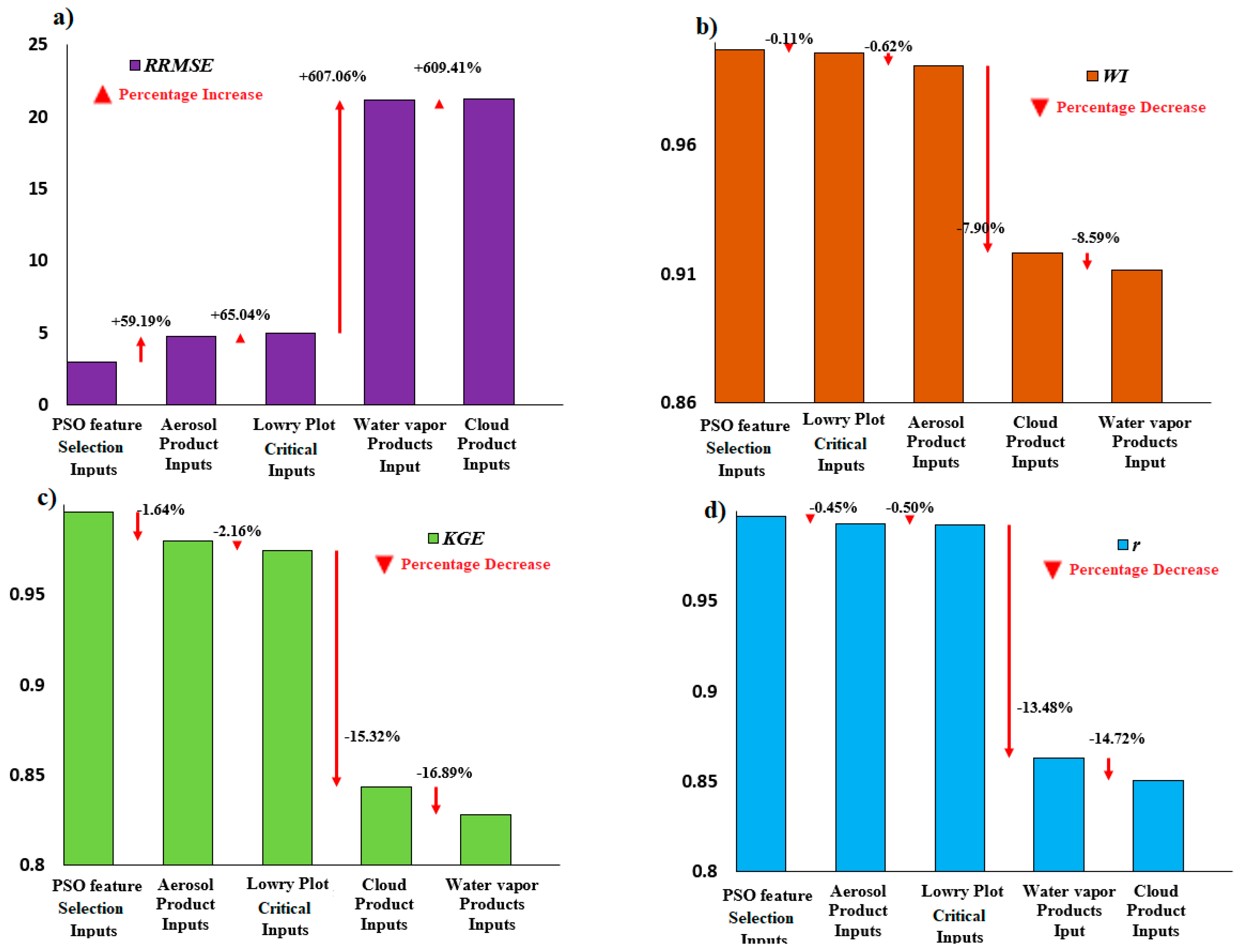

In

Figure 7, the sensitiveness of clouds, water vapour and aerosols (i.e., the three main contributors to the fluctuations in global solar radiation) including these GEM-SA critical parameters (Lowry plot,

Figure 2) as the predictors of

GSR are explored more closely for the case of the Adelaide study site. The four DBN models were tested with the cloud parameters (i.e.,

cfd, cfn, cotc, cotl, ctpm and

cttd), aerosol parameters (

asa and

aod), atmospheric water vapour (

awvl and

awvm) and GEM-SA critical parameters (

asa,

cfd, awvl, and

awvm) to arrive at conclusive arguments. The model results were compared with the original model PSO-DBN

10 (

Table 3).

From the graph (

Figure 7), it is deduced that the PSO feature selection gave the best prediction with the lowest

RRMSE and the highest values of

KGE,

WI and

r. When only aerosol products from the MODIS data repository were used as a potential input, the

RRMSE appeared to increase by 65%, whereas the

WI,

KGE and

r-values decreased by 66.8%, 67% and 66.9%, respectively. Similarly, with the cloud and water vapour product as a potential input, the

RRMSE increased much greater than 600%, and

KGE,

WI and

r decreased by more than 70%.

Furthermore, with only four critical parameters from GEM-SA (Lowry plot, asa, cfd, awvl and awvm) as an input, the RRMSE was lower than that of the aerosol, cloud and water vapour product as a potential input. Therefore, it is evident that the cloud and aerosol products were very important predictors and should not be neglected, for GSR predictions at the selected study sites.

6. Conclusions

Deep learning models were developed for Australia’s solar cities (i.e., Adelaide, Blacktown, Townsville, and Central Victoria) to estimate long-term GSR. These cities are heterogeneously distributed and represent a significant variation in their climatic conditions. In order to predict the monthly averaged daily GSR as an output, publicly-available MODIS satellite data (aerosol, cloud and water vapour) from GIOVANNI were extracted as the most relevant predictors. Fifteen different wrapper and filter-based feature selection algorithms were applied, with sensitivity analysis of all MODIS-derived predictors using GEM-SA to select the optimum input for the prediction of GSR. The data were segregated 80% for training, and 20% data were used for testing. A total of 180 deep belief networks (12 DBN models, 15 feature selections) and 630 deep neural network models were developed for each site. The developed models were benchmarked with single hidden layer and ensemble models including neural network, gradient boosting machine, extreme gradient boosting regression, decision tree and random forest regression models.

A holistic evaluation via statistical metrics and diagnostic plots revealed that the DBN model generated superior prediction in comparison with the benchmark models (viz., ANN, GBM, XGBR, DT and RF). The site comparison showed that the DBN model had the best performance at Blacktown (

Table 7) with the lowest

RRMSE ≈ 2.988% and

RMAE ≈ 2.76% and highest

r ≈ 0.994 and

RMSEss ≈ 0.824 in predicting

GSR. Similarly, the DBN model outperformed all the benchmark models for all sites (

Figure 5) in terms of absolute percentage bias and Kling–Gupta efficiency (e.g.,

KGE ≈ 0.992 and

APB ≈ 0.027 for Blacktown using the DBN model,

KGE ≈ 0.855 and

APB ≈ 0.049 for Townsville using the XGBR model). Furthermore, the regression plot of actual versus predicted

GSR demonstrated that, with a slope closer to unity and an intercept closer to zero, the DBN was best in

GSR estimation and even outperformed the previous study [

93] using a W-SVM model for

GSR estimation at Townsville. In addition to this, the sensitivity analysis of the predictor variables demonstrated that aerosol, cloud, and water vapour parameters as input parameters played a significant role in the prediction of

GSR (

Figure 7). This is a clearly understandable finding, as cloud and aerosol have obvious noticeable effects on sky brightness during daylight hours.

The findings of this study ascertained that with appropriate feature selection (such as PSO, GA and GEM-SA for sensitivity analysis), the deep learning model effectively captured the nonlinear dynamics and interactions amongst the input parameters and GSR in generating optimally-combined and -stabilized predictions for all four study sites. The DL model yielded good results for estimating monthly averaged daily GSR, either better than or comparable to many previous studies reported in the literature. One can conclude that the method derived here can be implemented as a suitable alternative and be successfully applied to similar regions.