Monitoring and Identification of Road Construction Safety Factors via UAV

Abstract

1. Introduction

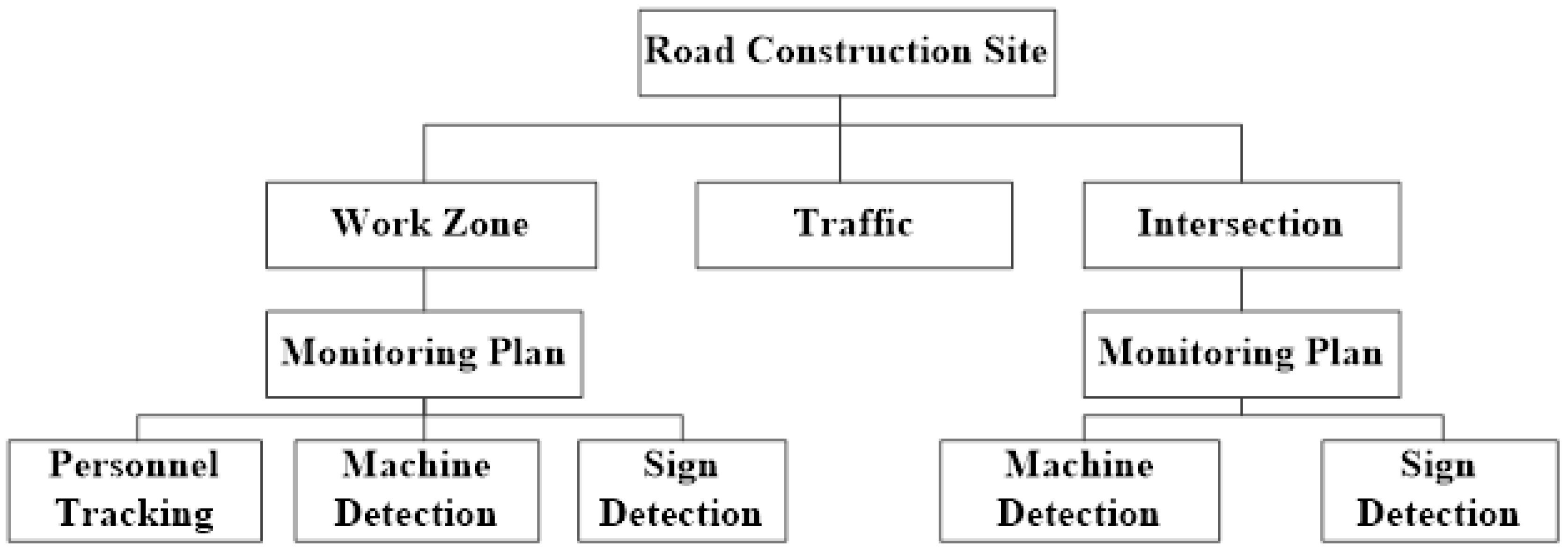

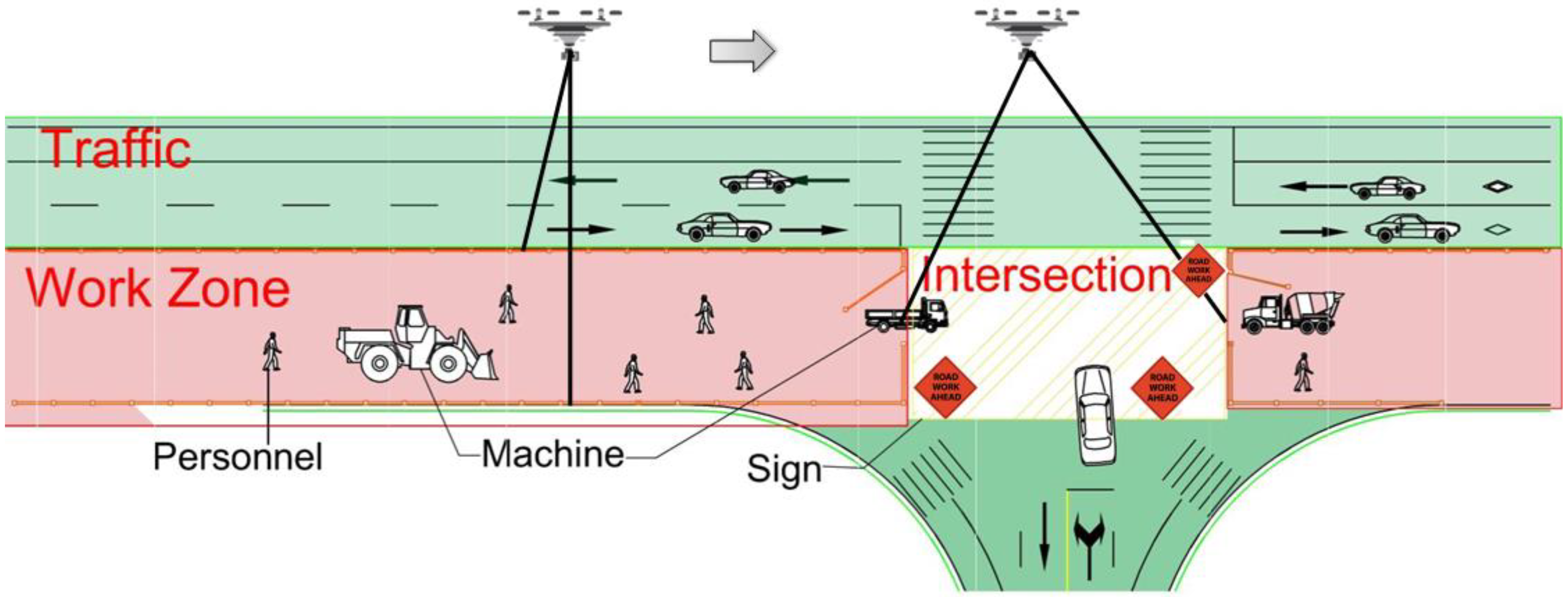

2. Monitoring Framework

2.1. Partition of Construction Site

2.1.1. Work Zone Monitoring Plan

2.1.2. Intersection Monitoring Plan

2.2. UAV Settings

2.2.1. Flight Altitude

2.2.2. Flight Speed

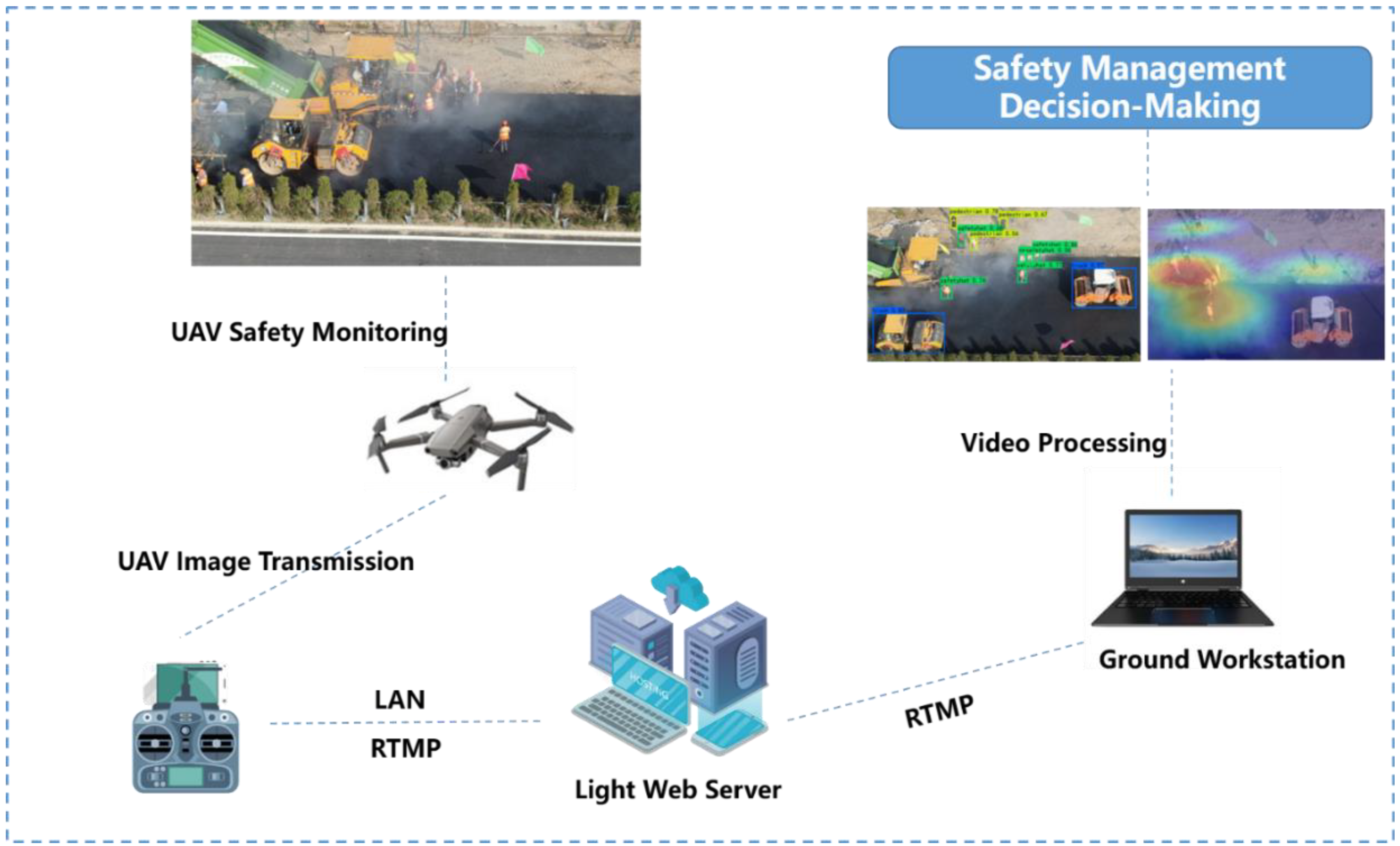

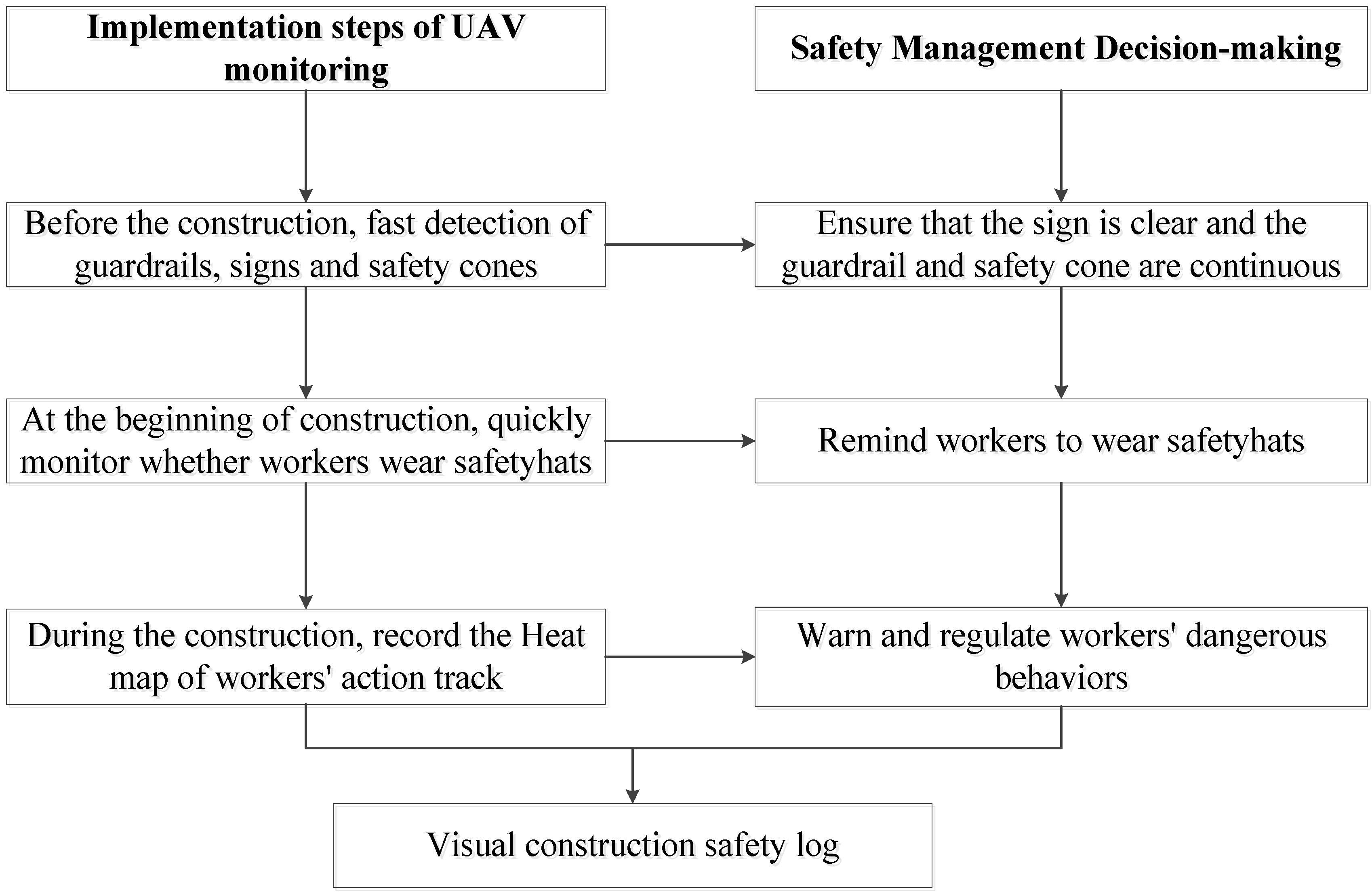

2.3. UAV Monitoring and Safety Management Decision-Making

3. Deep Learning Algorithms

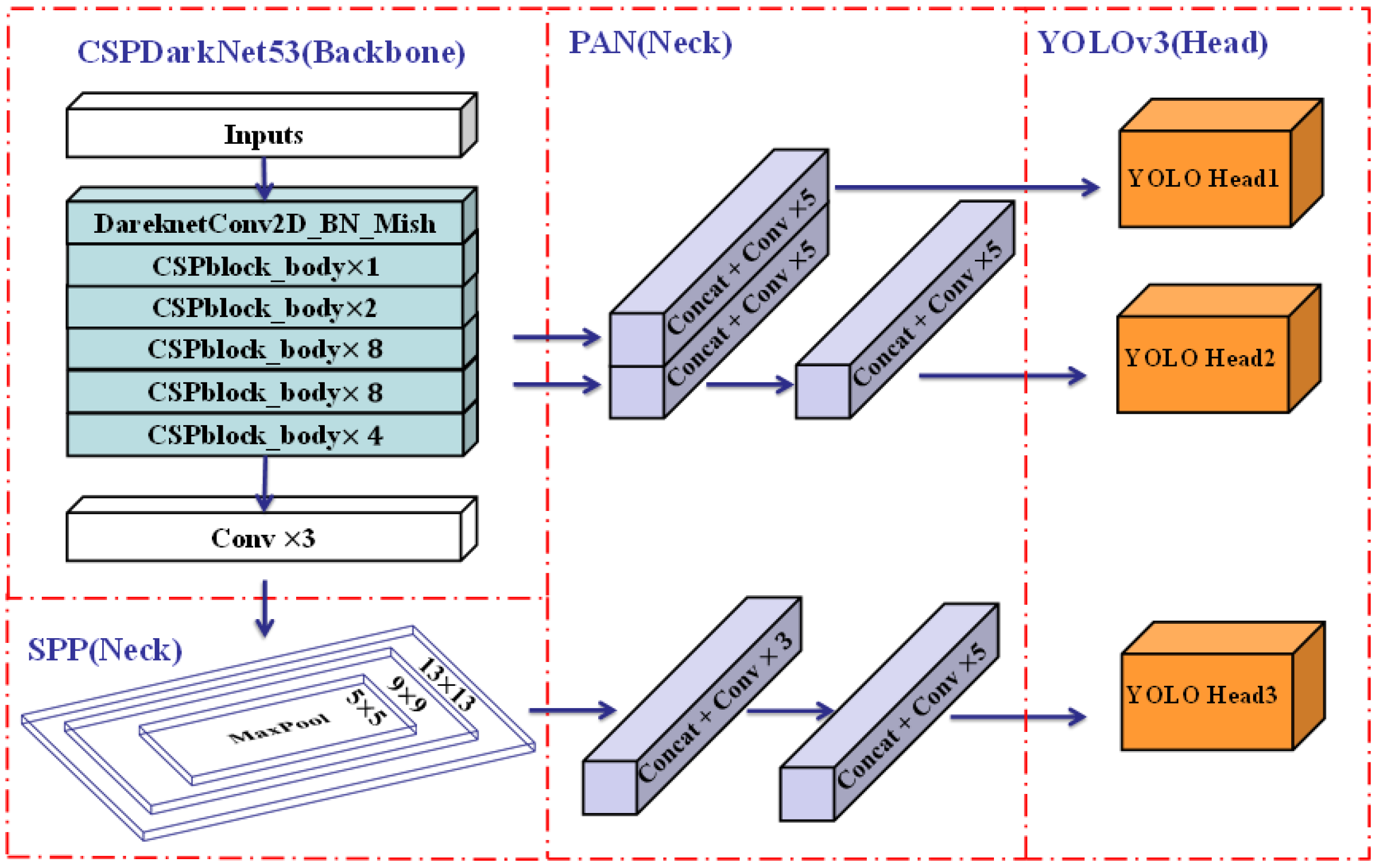

3.1. Object Detection Based on YOLOv4

3.2. Tracking Heat Map Generation Based on YOLOv4-DeepSORT
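One way to build a tracking heat map is to accumulate the image positions of tracked objects over a video into a coarse grid. The following is a minimal sketch under stated assumptions, not the authors' implementation. The input format is hypothetical: one `(track_id, x1, y1, x2, y2)` pixel box per tracked object per frame, of the kind a DeepSORT-style tracker reports. The function name `track_heat_map`, the cell size and the `per_track` option are illustrative choices. With `per_track=False` the map weights cells by dwell time (frames spent there). With `per_track=True` it counts distinct tracked objects passing through each cell.

```python
def track_heat_map(tracks, frame_w, frame_h, cell=32, per_track=False):
    """Accumulate tracked box centres into a grid and normalise it to [0, 1].

    tracks: iterable of (track_id, x1, y1, x2, y2) pixel boxes, one entry per
        tracked object per frame (hypothetical tracker output format).
    per_track: if True, each track adds at most 1 to a given cell (distinct
        objects); if False, every frame adds 1 (dwell time).
    """
    cols = -(-frame_w // cell)  # ceiling division
    rows = -(-frame_h // cell)
    grid = [[0.0] * cols for _ in range(rows)]
    seen = set()  # (track_id, row, col) already counted when per_track=True
    for track_id, x1, y1, x2, y2 in tracks:
        cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
        if not (0 <= cx < frame_w and 0 <= cy < frame_h):
            continue  # ignore centres outside the frame
        r, c = int(cy // cell), int(cx // cell)
        if per_track:
            if (track_id, r, c) in seen:
                continue
            seen.add((track_id, r, c))
        grid[r][c] += 1.0
    peak = max(max(row) for row in grid)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid


if __name__ == "__main__":
    demo = [(1, 0, 0, 10, 10), (1, 2, 2, 12, 12), (2, 40, 40, 60, 60)]
    print(track_heat_map(demo, 64, 64, cell=32))
```

To display the result, the normalised grid can be scaled to 0 to 255, upsampled to the frame size and coloured, for example with OpenCV's `cv2.applyColorMap`, before being overlaid on a video frame.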

4. Training and Evaluation

4.1. Dataset

4.2. Training Parameters

4.2.1. Pre-Trained Weights

4.2.2. Anchor Size

4.2.3. Parameters

4.3. Evaluation Metrics
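Detectors of the YOLO family are commonly evaluated with precision P = TP / (TP + FP), recall R = TP / (TP + FN) and average precision (AP), the area under the precision-recall curve. mAP is the mean of AP over classes. The sketch below implements these standard definitions, using all-point interpolation for AP. It assumes detections have already been matched one-to-one to ground-truth boxes at some IoU threshold (0.5 is a common choice). The matching step, the threshold and the function names are assumptions for illustration, not a statement of the exact protocol used in this study.

```python
def precision_recall(tp, fp, fn):
    """Precision TP/(TP+FP) and recall TP/(TP+FN); 0.0 when a denominator is zero."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r


def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP for a single class.

    scores: confidence of each detection.
    is_tp: True if that detection matched a not-yet-matched ground-truth box
        at the chosen IoU threshold (matching is assumed done beforehand).
    n_gt: number of ground-truth boxes of this class.
    """
    if n_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    # Precision envelope: make precision non-increasing as recall grows.
    for j in range(len(precisions) - 2, -1, -1):
        precisions[j] = max(precisions[j], precisions[j + 1])
    # Sum rectangle areas under the envelope wherever recall increases.
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap
```

For example, three detections with scores 0.9, 0.8 and 0.7, of which the first and third are true positives against two ground-truth boxes, give AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833.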

5. Field Validation

5.1. General Information

5.2. Object Detection Results

5.3. Tracking Heat Map Results

5.4. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Gannapathy, V.R.; Subramaniam, S.K.; Diah, A.M.; Suaidi, M.K.; Hamidon, A.H. Risk factors in a road construction site. Proceedings of the World Academy of Science, Engineering and Technology 2008, 46, 640–643.

- Subramaniam, S.K.; Ganapathy, V.R.; Subramonian, S.; Hamidon, A.H. Automated traffic light system for road user’s safety in two lane road construction sites. WSEAS Trans. Circuits Syst. 2010, 2, 71–80.

- Dobromirov, V.; Meike, U.; Evtiukov, S.; Bardyshev, O. Safety of transporting granular road construction materials in urban environment. Transp. Res. Procedia 2020, 50, 86–95.

- Nkurunziza, D. Investigation into Road Construction Safety Management Techniques. Open J. Saf. Sci. Technol. 2020, 10, 81–90.

- Glendon, A.I.; Litherland, D.K. Safety climate factors, group differences and safety behaviour in road construction. Saf. Sci. 2001, 39, 157–188.

- Cooke, T.; Lingard, H.; Blismas, N. Multi-level safety climates: An investigation into the health and safety of workgroups in road construction. In Proceedings of the 14th Rinker International Conference, Gainesville, FL, USA, 9–11 March 2008; pp. 349–361.

- Zadobrischi, E.; Dimian, M. Inter-Urban Analysis of Pedestrian and Drivers through a Vehicular Network Based on Hybrid Communications Embedded in a Portable Car System and Advanced Image Processing Technologies. Remote Sens. 2021, 13, 1234.

- Park, J.W.; Kim, K.; Cho, Y.K. Framework of automated construction-safety monitoring using cloud-enabled BIM and BLE mobile tracking sensors. J. Constr. Eng. Manag. 2017, 143, 05016019.

- Xu, Q.; Chong, H.Y.; Liao, P.C. Collaborative information integration for construction safety monitoring. Autom. Constr. 2019, 102, 120–134.

- Wu, J.; Peng, L.; Li, J.; Zhou, X.; Zhong, J.; Wang, C.; Sun, J. Rapid safety monitoring and analysis of foundation pit construction using unmanned aerial vehicle images. Autom. Constr. 2021, 128, 103706.

- Seo, J.; Han, S.; Lee, S.; Kim, H. Computer vision techniques for construction safety and health monitoring. Adv. Eng. Inform. 2015, 29, 239–251.

- Wu, Y.; Zhao, L.Y.; Jiang, Y.X.; Li, W.; Wang, Y.S.; Zhao, H.; Wu, W.; Zhang, X.J. Research and Application of Intelligent Monitoring System Platform for Safety Risk and Risk Investigation in Urban Rail Transit Engineering Construction. Adv. Civ. Eng. 2021, 2021, 9915745.

- Liu, W.; Meng, Q.; Li, Z.; Hu, X. Applications of Computer Vision in Monitoring the Unsafe Behavior of Construction Workers: Current Status and Challenges. Buildings 2021, 11, 409.

- Zhu, J.; Zhong, J.; Ma, T.; Huang, X.; Zhang, W.; Zhou, Y. Pavement distress detection using convolutional neural networks with images captured via UAV. Autom. Constr. 2022, 133, 103991.

- Bu, T.; Zhu, J.; Ma, T. A UAV Photography–Based Detection Method for Defective Road Marking. J. Perform. Constr. Facil. 2022, 36, 04022035.

- Ahmed, F.; Mohanta, J.C.; Keshari, A.; Yadav, P.S. Recent Advances in Unmanned Aerial Vehicles: A Review. Arab. J. Sci. Eng. 2022, 47, 7963–7984.

- Yu, T.; Deng, B.; Gui, J.; Zhu, X.; Yao, W. Efficient Informative Path Planning via Normalized Utility in Unknown Environments Exploration. Sensors 2022, 22, 8429.

- Mehta, P.; Gupta, R.; Tanwar, S. Blockchain envisioned UAV networks: Challenges, solutions, and comparisons. Comput. Commun. 2020, 151, 518–538.

- Qadir, Z.; Ullah, F.; Munawar, H.S.; Al-Turjman, F. Addressing disasters in smart cities through UAVs path planning and 5G communications: A systematic review. Comput. Commun. 2021, 168, 114–135.

- Li, X.; Tan, J.; Liu, A.; Vijayakumar, P.; Kumar, N.; Alazab, M. A Novel UAV-Enabled Data Collection Scheme for Intelligent Transportation System Through UAV Speed Control. IEEE Trans. Intell. Transp. Syst. 2020, 22, 2100–2110.

- Xu, Y.; Yu, G.; Wu, X.; Wang, Y.; Ma, Y. An Enhanced Viola-Jones Vehicle Detection Method from Unmanned Aerial Vehicles Imagery. IEEE Trans. Intell. Transp. Syst. 2016, 18, 1845–1856.

- Yuan, C.; Zhang, Y.; Liu, Z. A survey on technologies for automatic forest fire monitoring, detection, and fighting using unmanned aerial vehicles and remote sensing techniques. Can. J. For. Res. 2015, 45, 783–792.

- Aloqaily, M.; Al Ridhawi, I.; Guizani, M. Energy-Aware Blockchain and Federated Learning-Supported Vehicular Networks. IEEE Trans. Intell. Transp. Syst. 2021, 23, 22641–22652.

- Zhan, H.; Liu, Y.; Cui, Z.; Cheng, H. Pedestrian detection and behavior recognition based on vision. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 771–776.

- Deng, Z.; Sun, H.; Zhou, S.; Zhao, J.; Lei, L.; Zou, H. Multi-scale object detection in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2018, 145, 3–22.

- Pérez-Hernández, F.; Tabik, S.; Lamas, A.; Olmos, R.; Fujita, H.; Herrera, F. Object Detection Binary Classifiers methodology based on deep learning to identify small objects handled similarly: Application in video surveillance. Knowl.-Based Syst. 2020, 194, 105590.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Net. Learn. Syst. 2019, 30, 3212–3232.

- Han, J.; Zhang, D.; Cheng, G.; Liu, N.; Xu, D. Advanced Deep-Learning Techniques for Salient and Category-Specific Object Detection: A Survey. IEEE Signal Process. Mag. 2018, 35, 84–100.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: High Quality Object Detection and Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498.

- Cheng, G.; Han, J.; Zhou, P.; Xu, D. Learning Rotation-Invariant and Fisher Discriminative Convolutional Neural Networks for Object Detection. IEEE Trans. Image Process. 2018, 28, 265–278.

- Liu, H.; Fan, K.; Ouyang, Q.; Li, N. Real-Time Small Drones Detection Based on Pruned YOLOv4. Sensors 2021, 21, 3374.

- Shi, Q.; Li, J. Objects detection of UAV for anti-UAV based on YOLOv4. In Proceedings of the 2020 IEEE 2nd International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Weihai, China, 14–16 October 2020; pp. 1048–1052.

- Parico, A.I.B.; Ahamed, T. Real time pear fruit detection and counting using YOLOv4 models and deep SORT. Sensors 2021, 21, 4803.

- Wang, Q.; Zhang, Q.; Liang, X.; Wang, Y.; Zhou, C.; Mikulovich, V.I. Traffic Lights Detection and Recognition Method Based on the Improved YOLOv4 Algorithm. Sensors 2022, 22, 200.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Zhang, X.; Hao, X.; Liu, S.; Wang, J.; Xu, J.; Hu, J. Multi-target tracking of surveillance video with differential YOLO and DeepSort. In Proceedings of the Eleventh International Conference on Digital Image Processing (ICDIP 2019), Guangzhou, China, 10–13 May 2019; International Society for Optics and Photonics: Bellingham, WA, USA, 2019; Volume 11179, p. 111792L.

- Chan, Z.Y.; Suandi, S.A. City tracker: Multiple object tracking in urban mixed traffic scenes. In Proceedings of the 2019 IEEE International Conference on Signal and Image Processing Applications (ICSIPA), Kuala Lumpur, Malaysia, 17–19 September 2019; pp. 335–339.

- Doan, T.N.; Truong, M.T. Real-time vehicle detection and counting based on YOLO and DeepSORT. In Proceedings of the 2020 12th International Conference on Knowledge and Systems Engineering (KSE), Can Tho, Vietnam, 12–14 November 2020; pp. 67–72.

- Rezaei, M.; Azarmi, M. DeepSOCIAL: Social Distancing Monitoring and Infection Risk Assessment in COVID-19 Pandemic. Appl. Sci. 2020, 10, 7514.

- Chen, X.; Li, Z.; Yang, Y.; Qi, L.; Ke, R. High-Resolution Vehicle Trajectory Extraction and Denoising from Aerial Videos. IEEE Trans. Intell. Transp. Syst. 2020, 22, 3190–3202.

- Ke, R.; Li, Z.; Tang, J.; Ke, R. Real-time traffic flow parameter estimation from UAV video based on ensemble classifier and optical flow. IEEE Trans. Intell. Transp. Syst. 2018, 20, 54–64.

- Wang, J.; Guo, H.; Li, Z.; Song, A.; Niu, X. Quantile Deep Learning Model and Multi-objective Opposition Elite Marine Predator Optimization Algorithm for Wind Speed Prediction. Appl. Math. Model. 2022, in press.

- Baidya, R.; Jeong, H. YOLOv5 with ConvMixer Prediction Heads for Precise Object Detection in Drone Imagery. Sensors 2022, 22, 8424.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468.

- Zhu, J. Road-Construction Dataset. 2022. Available online: https://github.com/zjq2007333/road_construction.git (accessed on 9 November 2022).

| Parameter | Value |
|---|---|
| Sensor size | 12.8 mm × 9.6 mm CMOS |
| Focal length | 10 mm (fixed) |
| Resolution | 5472 × 3648 px |
| Weight | 907 g |
| Max. flight time | 31 min |

| Monitoring Area | Altitude (m) | Speed (km/h) | Shooting Area (m × m) |
|---|---|---|---|
| Division | 15 | 50 | 19.2 × 14.4 |
| Work Zone | 10 | 18 | 12.8 × 9.6 |
| Cross Area | 15 | 0 | 19.2 × 14.4 |
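The shooting areas above follow from the camera parameters by similar triangles. For a camera pointing straight down, ground size / altitude = sensor size / focal length. At 15 m this gives 12.8 mm × 15 m / 10 mm = 19.2 m and 9.6 mm × 15 m / 10 mm = 14.4 m, and at 10 m it gives 12.8 m × 9.6 m, matching the table. The minimal sketch below reproduces those rows. It assumes an ideal pinhole camera at nadir over flat ground, with no lens distortion or gimbal tilt. The function name `ground_footprint` is illustrative.

```python
# Camera values taken from the camera-parameter table.
SENSOR_W_MM, SENSOR_H_MM = 12.8, 9.6
FOCAL_MM = 10.0


def ground_footprint(altitude_m, sensor_w_mm=SENSOR_W_MM,
                     sensor_h_mm=SENSOR_H_MM, focal_mm=FOCAL_MM):
    """Ground area (width, height) in metres seen by a nadir pinhole camera.

    Similar triangles: ground size / altitude = sensor size / focal length.
    """
    scale = altitude_m / focal_mm  # metres on the ground per millimetre on the sensor
    return sensor_w_mm * scale, sensor_h_mm * scale


if __name__ == "__main__":
    for area, alt in [("Division", 15), ("Work Zone", 10), ("Cross Area", 15)]:
        w, h = ground_footprint(alt)
        print(f"{area}: {alt} m -> {w:.1f} m x {h:.1f} m")
```

Because the footprint scales linearly with altitude, lowering the work-zone flight from 15 m to 10 m shrinks each side of the imaged area by one third.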

| Parameter | Value |
|---|---|
| Pre-trained weights | YOLOv4_weight |
| Anchor sizes [w, h], scale 1 | [8, 29], [15, 27], [11, 43] |
| Anchor sizes [w, h], scale 2 | [16, 51], [18, 68], [23, 64] |
| Anchor sizes [w, h], scale 3 | [111, 77], [325, 157], [232, 325] |
| Freeze training epochs | 50 |
| Unfreeze training epochs | 250 |
| Batch size (freeze) | 4 |
| Batch size (unfreeze) | 2 |
| Learning rate | 10⁻² |
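YOLO-family detectors usually derive custom anchors by clustering the (width, height) of the training boxes with k-means under the distance d = 1 − IoU. The nine anchors in the training-parameter table are listed in ascending order of area, three per detection scale. A clustering like this produces exactly that layout once its centroids are sorted. The following is a minimal sketch of that standard procedure, not necessarily how the authors obtained their values. The function names, the quantile-based initialisation and the input format (a list of (w, h) box sizes in pixels) are assumptions.

```python
def iou_wh(a, b):
    """IoU of two boxes described only by (w, h), both centred at the same point."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(boxes, k=9, max_iter=100):
    """k-means on (w, h) pairs with distance 1 - IoU; returns k anchors sorted by area."""
    if len(boxes) < k:
        raise ValueError("need at least k boxes")
    # Deterministic start: k boxes taken at evenly spaced area quantiles.
    by_area = sorted(boxes, key=lambda b: b[0] * b[1])
    n = len(by_area)
    centroids = [by_area[int((i + 0.5) * n / k)] for i in range(k)]
    for _ in range(max_iter):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            # Smallest 1 - IoU distance is the same as largest IoU.
            nearest = max(range(k), key=lambda i: iou_wh(b, centroids[i]))
            clusters[nearest].append(b)
        # Update: mean width and height per cluster; an empty cluster keeps its centroid.
        updated = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if updated == centroids:
            break  # centroids stopped moving
        centroids = updated
    return sorted(centroids, key=lambda a: a[0] * a[1])


def split_scales(anchors, n_scales=3):
    """Group area-sorted anchors into equal groups, smallest detection scale first."""
    per = len(anchors) // n_scales
    return [anchors[i * per:(i + 1) * per] for i in range(n_scales)]
```

Given the (w, h) of every labelled box in a training set, `split_scales(kmeans_anchors(boxes, k=9))` returns three groups of three anchors in the same layout as the table. The values would then be rounded to integers for the detector configuration.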

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, C.; Zhu, J.; Bu, T.; Gao, X. Monitoring and Identification of Road Construction Safety Factors via UAV. Sensors 2022, 22, 8797. https://doi.org/10.3390/s22228797


Chicago/Turabian Style: Zhu, Chendong, Junqing Zhu, Tianxiang Bu, and Xiaofei Gao. 2022. "Monitoring and Identification of Road Construction Safety Factors via UAV" Sensors 22, no. 22: 8797. https://doi.org/10.3390/s22228797
