1. Introduction

Double-entry bookkeeping has shaped financial record-keeping for centuries and supports consistent tracking of monetary flows between accounts (Sangster, 2016). Growing transactional complexity and rising regulatory expectations, however, expose its limits in transparency and auditability. Triple-Entry (TE) accounting introduces a cryptographic third entry that records contextual metadata, thereby strengthening verifiability (Sarin, 2022; Sgantzos et al., 2023; Grigg, 2005, 2024; Faccia & Mosteanu, 2019; Cai, 2021).

Prior proposals show important progress yet stop short of an operational solution. Esersky’s inventory-centred “Russian Triple-Entry” targeted synchronisation across books (Faccia et al., 2020). Ijiri’s Momentum Accounting added a third axis of “force” for economic measurement (Ijiri, 1986). Grigg’s approach sought to eliminate reconciliation through shared cryptographic receipts (Grigg, 2005, 2024). The Resources-Events-Agents (REA) model shifted attention to enterprise-level shared ledgers (McCarthy, 1982). X-Accounting integrated blockchain and AI as a triple-axis model for accountability and auditing (Faccia et al., 2020; Faccia & Mosteanu, 2019; Faccia et al., 2021). Each of these strands faces adoption hurdles, gaps in accrual integration, or weak compatibility with current enterprise resource planning (ERP) and accounting information system (AIS) infrastructures (Groblacher & Mizdrakovič, 2019).

A persistent gap remains. TE proposals enrich records, yet the literature offers no systematic method to transform those records into analytical features for oversight at scale. Traditional audit techniques struggle with the volume, velocity, and heterogeneity of TE data (Chowdhury, 2021; Petratos, 2024; Ibanez et al., 2023; Cai, 2021). Some studies frame TE as an audit tool (Dixit et al., 2024; Qadir & Muhamed, 2022), while others place it within distributed ledger ecosystems and decentralised finance (DeFi) contexts (Schuldt & Peskes, 2023). However, the field lacks a framework that links TE to machine learning workflows for anomaly detection, compliance monitoring, and forecasting (see Table 1 for comparative analyses).

This study addresses the gap with a conceptual framework that integrates TE with machine learning. The framework sets out how cryptographically sealed financial entries and contextual metadata are transformed into structured features for supervised and unsupervised analysis, time-series modelling, and rules-based monitoring (Chowdhury, 2021; Petratos, 2024; Ibanez et al., 2023; Cai, 2021). The contribution bridges accounting design and computational methods, advancing theory and supporting practice for auditors, regulators, and system designers (Sarin, 2022; Sgantzos et al., 2023; Grigg, 2005, 2024; McCarthy, 1982; Faccia & Mosteanu, 2019; Faccia et al., 2021; Groblacher & Mizdrakovič, 2019; Petratos, 2024; Ibanez et al., 2023; Dixit et al., 2024; Qadir & Muhamed, 2022; Schuldt & Peskes, 2023; Cai, 2021).

The study pursues three research objectives. First, it defines a TE data representation that supports feature engineering and scalable analysis. Second, it maps tasks such as fraud detection, anomaly identification, compliance checks, and forecasting to suitable model families and evaluation metrics. Third, it positions TE alongside alternatives for transparency and privacy and clarifies where privacy-preserving computation complements the framework (Zou et al., 2019; Inder, 2023; Alkan, 2021; Dai & Vasarhelyi, 2017; Bónson & Bednárová, 2019; Fullana & Ruiz, 2021; Rizal Batubara et al., 2019; Shrestha & Kim, 2019; Elommal & Manita, 2021).

The scope focuses on enriched transactional records that include identifiers, timestamps, counterparties, and contractual references in addition to debits and credits. The framework targets organisational settings that adopt permissioned or public ledgers, with emphasis on transparency and continuous assurance. Privacy-preserving computation appears as a complement where required by governance or law (Zou et al., 2019; Inder, 2023; Bónson & Bednárová, 2019; Fullana & Ruiz, 2021; Rizal Batubara et al., 2019; Shrestha & Kim, 2019; Elommal & Manita, 2021).

The remainder of this paper proceeds as follows:

Section 2 outlines TE foundations and data elements.

Section 3 links TE to advanced analytics and machine learning.

Section 4 details the framework from feature engineering to modelling and evaluation.

Section 5 compares TE with multiparty computation for privacy-preserving analysis.

Section 6 discusses implications, limitations, and organisational impact and then identifies directions for future research.

Smart contracts execute agreed logic on a distributed platform and write outcomes to a ledger (Zou et al., 2019). TE records the third entry on a distributed ledger and secures an immutable transaction history (Inder, 2023). TE therefore supplies verifiable records, while smart contracts automate execution and embed controls in code. Table 2 contrasts the two concepts in terms of scope, verification, immutability, transparency, automation, conditionality, governance, fraud prevention, auditing, and limitations (Inder, 2023). Combining them reduces manual reconciliation and strengthens audit trails for permissioned participants (Inder, 2023).
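To make this division of labour concrete, the following Python sketch mimics a smart contract that embeds a control in code and writes its outcome to a ledger. It is a hypothetical, single-process illustration: the `Ledger` class, the `settle_on_delivery` function, and the delivery-and-credit-limit rule are assumptions for exposition, not features of any particular smart-contract platform, and a real deployment would run such logic on a distributed platform under consensus.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Hypothetical ledger, used append-only by convention in this sketch."""
    entries: list = field(default_factory=list)

    def append(self, entry: dict) -> None:
        # Store a copy so later changes to the caller's dict do not alter history.
        self.entries.append(dict(entry))


def settle_on_delivery(ledger: Ledger, tx_id: str, amount: float,
                       delivery_confirmed: bool, credit_limit: float) -> str:
    """Illustrative contract logic: settle only if delivery is confirmed and the
    amount is within the agreed limit; record the outcome in every case."""
    if not delivery_confirmed:
        outcome = "held: delivery not confirmed"
    elif amount > credit_limit:
        outcome = "rejected: amount exceeds agreed limit"
    else:
        outcome = "settled"
    ledger.append({"tx_id": tx_id, "amount": amount, "outcome": outcome})
    return outcome


ledger = Ledger()
settle_on_delivery(ledger, "T1", 900.0, True, 1000.0)    # settled
settle_on_delivery(ledger, "T2", 1500.0, True, 1000.0)   # rejected: over the limit
settle_on_delivery(ledger, "T3", 200.0, False, 1000.0)   # held: no delivery yet
```

Because every outcome, including rejections and holds, is written to the ledger, the record itself documents which control fired for each transaction.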

TE improves trust by recording three perspectives on each transaction (those of the transacting parties and that of a neutral ledger), which increases the traceability of value flows across linked transactions (Groblacher & Mizdrakovič, 2019). TE aligns with distributed ledgers that support independent verification without the need for central authorities (Cai, 2021). Broader reporting also benefits when contextual data extends beyond monetary effects to environmental, social, and relationship dimensions, with potential relevance to ESG reporting (Cai, 2021; Groblacher & Mizdrakovič, 2019). Alternatives exist, yet many reduce visibility or shift assurance to third parties rather than relying on shared verification (Alkan, 2021; Dai & Vasarhelyi, 2017; Bónson & Bednárová, 2019; Fullana & Ruiz, 2021; Rizal Batubara et al., 2019). Table 3 sets out the advantages and limitations of Triple-Entry Accounting, which are summarised below.

Advantages include stronger transparency and auditability through an additional verification layer, earlier error and fraud detection via visible inconsistencies, and alignment with distributed ledgers for multiparty processes (Cai, 2021; Alkan, 2021). Limitations include compliance cost, operational complexity, synchronisation risk across three records, privacy exposure in highly transparent settings, and immature reporting standards for enriched data (Cai, 2021). Simpler entities may gain little from full TE adoption (Cai, 2021).

TE supports decentralised finance and ledger systems by adding an independent cryptographic record for each transaction. This strengthens assurance without central authorities and aligns with immutable, shared data structures (Faccia & Mosteanu, 2019; Inder, 2023; Cai, 2021). Value provenance, accountability, and verifiability improve when TE entries link financial amounts to contextual attributes that span chains of events (Faccia & Mosteanu, 2019; Inder, 2023; Cai, 2021).

Transparency enables independent validation of ordering and inclusion of transactions in settings without a central authority (Cai, 2021). Immutability relies on public rules and visible histories that enable continuous verification and validation. Transparent ledgers facilitate auditability, compliance monitoring, and the detection of errors or fraud. Equal visibility supports censorship resistance and accountability through public scrutiny of code, execution, and state (Cai, 2021; Rizal Batubara et al., 2019).
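Independent validation of ordering and inclusion typically rests on hash-linking. The sketch below is a minimal illustration that assumes SHA-256 over canonical JSON and a single in-memory chain with no consensus layer. It shows how any participant holding the history can recompute the links and detect an altered, removed, or reordered entry. The names `build_chain`, `verify_chain`, and `GENESIS` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    # Canonical JSON (sorted keys, compact separators) so every verifier hashes identical bytes.
    data = json.dumps({"prev": prev_hash, "payload": payload},
                      sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def build_chain(payloads):
    """Link each entry to its predecessor by embedding the predecessor's hash."""
    chain, prev = [], GENESIS
    for p in payloads:
        h = entry_hash(prev, p)
        chain.append({"prev": prev, "payload": p, "hash": h})
        prev = h
    return chain


def verify_chain(chain) -> bool:
    """Recompute every link from the genesis value; any edit, gap or reordering breaks it."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or entry_hash(prev, block["payload"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


chain = build_chain([{"tx": "T1", "amount": 100}, {"tx": "T2", "amount": 250}])
print(verify_chain(chain))            # True
chain[0]["payload"]["amount"] = 999   # tamper with history
print(verify_chain(chain))            # False
```

Verification needs only the public rule (the hash function and serialisation) and the visible history, which is what allows scrutiny without a central authority.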

Multiple approaches offer partial assurance with trade-offs. Compliance reporting delivers minimal disclosure for regulatory purposes and restricts information scope (Alkan, 2021). Private or restricted ledgers preserve confidentiality yet weaken independent verification (Alkan, 2021; Dai & Vasarhelyi, 2017). Selective disclosure releases aggregates rather than granular records (Alkan, 2021; Fullana & Ruiz, 2021). Third-party attestation shifts trust to external auditors (Dai & Vasarhelyi, 2017). Proprietary formats and manual reconciliation hinder interoperability and reduce timeliness (Alkan, 2021). Anonymised metadata lowers linkability yet reduces context. Cryptographic and systems techniques, including zero-knowledge proofs, privacy committees, off-chain data availability, homomorphic encryption, differential disclosure, and sampling, support verification without full transparency, under varying assumptions about trust and cost (Bónson & Bednárová, 2019; Fullana & Ruiz, 2021; Rizal Batubara et al., 2019; Shrestha & Kim, 2019; Elommal & Manita, 2021). TE remains the most direct route to transparency and shared integrity, while privacy-preserving methods complement TE when confidentiality requirements apply (Bónson & Bednárová, 2019; Fullana & Ruiz, 2021; Rizal Batubara et al., 2019; Shrestha & Kim, 2019; Elommal & Manita, 2021).

This paper contributes to the literature by clarifying the methodological pathway that links triple-entry (TE) records with machine learning (ML) processes. Previous studies have focused either on conceptual TE models or on blockchain-based implementations, but none have systematically shown how enriched accounting data can be operationalised as ML features for oversight, anomaly detection, and forecasting. The proposed framework adds value in three ways. First, it translates TE records into structured inputs for supervised, unsupervised, and time-series models, which has not been demonstrated in prior work. Second, it integrates TE with multiparty computation (MPC), showing how privacy-preserving methods complement transparency-oriented accounting models. Third, it outlines practical implications for auditors, regulators, and system designers, providing a roadmap that bridges theoretical innovation with applied oversight. These contributions advance the literature from abstract discussions of TE towards concrete analytical workflows that are testable in empirical settings.

2. Foundations of Triple-Entry Accounting

Traditional double-entry bookkeeping has provided a reliable structure for financial record-keeping; however, it omits critical contextual information, such as the parties involved, the timing of events, and the rationale behind transactions. This limitation restricts its ability to support auditability, fraud detection, and compliance in increasingly complex financial environments. Triple-Entry (TE) accounting addresses these gaps by introducing a third entry that records cryptographically sealed contextual metadata alongside debit and credit entries. This additional entry creates an immutable link between financial records and their non-financial attributes, offering a unified and verifiable view of each transaction. By incorporating identifiers, timestamps, contractual references, and other metadata, TE transforms bookkeeping into a richer dataset that is transparent, auditable, and resistant to manipulation.

The significance of TE extends beyond theoretical improvements. The enriched records provide a foundation for systematic analysis through advanced computational methods. Contextual attributes allow financial systems to detect patterns, trace asset provenance, and establish more robust audit trails, which are not possible in traditional frameworks. These features are essential for enhancing accountability and transparency, particularly in distributed ledger environments.

The value of TE accounting, therefore, lies not only in creating secure and immutable records but also in its potential for integration with machine learning. The structured combination of financial and contextual data offers a suitable substrate for algorithms that automate oversight, identify anomalies, and forecast financial trajectories. This capability makes TE more than an accounting innovation: it becomes a data architecture designed for continuous assurance. Section 3 builds on this foundation by examining how advanced analytics can transform TE records into actionable intelligence.

2.1. Limitations of Traditional Double-Entry Bookkeeping

Traditional double-entry bookkeeping, although a mainstay of accounting practice, has several limitations that TE accounting seeks to address (Chowdhury, 2021; Hambiralovic & Karlsson, 2018). First, it lacks transparency: it records only monetary exchanges between accounts and omits the contextual details needed to verify transactions and detect anomalies. Second, because financial data are not connected to non-financial information, the resulting view of transactions is fragmented, which impedes comprehensive analysis and audit trails. This fragmentation obscures potential fraud and errors and complicates compliance, since regulators cannot readily assess transactions without their context. Third, the narrow viewpoint restricts strategic analysis by overlooking interconnected attributes, such as location, product, and customer data, that are essential for deeper operational insight. Finally, the system cannot trace asset provenance across successive transactions, which undermines the clarity and integrity of historical records, weakens independent verification, and increases the risk of unnoticed anomalies.
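TE can close the provenance gap by letting each record reference the earlier transactions that funded it. The sketch below assumes a hypothetical `inputs` field holding those references and walks them backwards to reconstruct an asset's chain of custody. The record layout and the `provenance` helper are illustrative assumptions, not a prescribed TE schema.

```python
# Hypothetical TE records: "inputs" lists the earlier transaction(s) each transfer relies on.
records = {
    "T1": {"asset": "lot-42", "from": "Supplier", "to": "Wholesaler", "inputs": []},
    "T2": {"asset": "lot-42", "from": "Wholesaler", "to": "Retailer", "inputs": ["T1"]},
    "T3": {"asset": "lot-42", "from": "Retailer", "to": "Customer", "inputs": ["T2"]},
}


def provenance(tx_id, records):
    """Return transaction IDs ordered from origin to tx_id by following 'inputs' links."""
    seen, order = set(), []

    def visit(t):
        if t in seen:  # guards against revisiting shared ancestors
            return
        seen.add(t)
        for parent in records[t]["inputs"]:
            visit(parent)
        order.append(t)  # post-order: ancestors are appended before descendants

    visit(tx_id)
    return order


path = provenance("T3", records)
# For a single linear chain, the holders are the first sender followed by each recipient.
custody = [records[path[0]]["from"]] + [records[t]["to"] for t in path]
print(path)                  # ['T1', 'T2', 'T3']
print(" -> ".join(custody))  # Supplier -> Wholesaler -> Retailer -> Customer
```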

2.2. Overview of Triple-Entry Accounting: Principles and Framework

The principles and framework of TE accounting represent a significant advance on traditional double-entry systems (Groblacher & Mizdrakovič, 2019; Rahmawati et al., 2023). By extending the debit-and-credit paradigm, TE accounting introduces a third “entry” that captures contextual metadata for each transaction, recording the “why” behind financial flows (Sarin, 2022). In this framework, financial elements are recorded conventionally as debits and credits, while non-financial attributes, such as the parties involved, transaction locations, item descriptions, and tags, are defined as metadata fields. Each transaction is uniquely identifiable through shared reference keys, which link financial and contextual entries in a form that is both immutable and transparent. The model therefore strengthens auditability and transparency and supports deeper analytics by providing a unified, verifiable view of financial and contextual activity. By integrating financial and non-financial data in a structured and immutable manner, TE strengthens compliance and audit processes and provides a resilient foundation for advanced analytics in accounting practice.

TE accounting extends traditional accounting by adding a third entry to each transaction, and specific operational mechanisms define how it is implemented. Besides the debit and credit entries characteristic of double-entry accounting, the framework includes a third entry that is cryptographically sealed to add a layer of transparency and security. It is therefore necessary to explain how this data is captured, stored, and linked to financial transactions.

Non-financial metadata can encompass a wide range of information, including timestamps, transaction details, and external data such as contracts or agreements, and it enhances the understanding of financial transactions within TE accounting (Spilnyk et al., 2020). Captured at the time of the transaction, this data provides the context needed to interpret the significance and implications of financial entries. It can be collected through manual input, automated data feeds, or integration with external systems, giving a comprehensive view of each transaction’s context and purpose.

Secure storage of non-financial metadata alongside financial data is essential for maintaining a complete transaction record (Sgantzos et al., 2023). It ensures that each transaction is documented together with its contextual information. TE relies on secure databases or blockchain technology to preserve the integrity and immutability of the combined dataset, yielding a reliable and transparent record of transactional history.

Linking financial transactions to their associated non-financial metadata is essential for understanding the transactional context and history (Sunde & Wright, 2023). TE connects each financial entry with its pertinent metadata through unique identifiers or cryptographic hashes, which bind the financial and non-financial components into a cohesive and transparent representation of the transaction. Making these capture, storage, and linking mechanisms explicit clarifies how TE accounting works in practice and where its benefits arise.
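A minimal sketch of such a linkage follows, under stated assumptions. The debit line, the credit line, and the metadata entry share one reference key, and a SHA-256 digest over a canonical JSON serialisation of all three acts as the seal. The function and field names, accounts, and reference values are hypothetical. A bare hash reveals alteration only if the seal itself is kept somewhere tamper-evident (for example, on a shared ledger) or is digitally signed by the parties, which this sketch omits.

```python
import hashlib
import json


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys, compact separators) so every party hashes identical bytes.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def seal_te_record(ref, debit_account, credit_account, amount, metadata):
    """Build a hypothetical TE record whose debit, credit and metadata entries
    share one reference key, sealed by a SHA-256 digest over all three."""
    body = {
        "debit": {"ref": ref, "account": debit_account, "debit": amount},
        "credit": {"ref": ref, "account": credit_account, "credit": amount},
        "metadata": {**metadata, "ref": ref},  # the shared key wins over any metadata 'ref'
    }
    return {**body, "seal": _digest(body)}


def check_seal(record) -> bool:
    """True only if all three entries share one key and the seal still matches."""
    body = {k: record[k] for k in ("debit", "credit", "metadata")}
    same_key = len({entry["ref"] for entry in body.values()}) == 1
    return same_key and _digest(body) == record["seal"]


rec = seal_te_record("TX-0001", "Inventory", "Accounts payable", 1200.0,
                     {"counterparty": "Supplier A", "timestamp": "2024-03-01T10:15:00Z",
                      "contract": "PO-1001"})
print(check_seal(rec))                          # True
rec["metadata"]["counterparty"] = "Supplier B"
print(check_seal(rec))                          # False: metadata altered after sealing
```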

3. The Role of Advanced Analytics in Triple-Entry Accounting

The analytical potential of TE accounting lies in the structured metadata it produces. Unlike traditional records, TE creates machine-readable datasets that combine financial values with contextual attributes such as time, counterparties, and contractual references. This makes TE a natural candidate for machine learning (ML) applications in oversight and transparency.

Existing studies have already demonstrated the role of ML in auditing and financial analysis, showing how classification, clustering, and anomaly detection can identify irregularities and support predictive decision-making (Murdoch & Detsky, 2013; Cho et al., 2020; Zhang et al., 2022; Petratos, 2024). Applying these approaches to TE records extends their scope: contextual metadata becomes a predictor variable that enhances the detection of fraudulent behaviour, strengthens compliance monitoring, and improves financial forecasting.

To operationalise this integration, TE records are preprocessed into features suitable for ML algorithms. Numeric transaction data is normalised, categorical attributes are encoded, and textual descriptions are vectorised. The prepared datasets can then be analysed using supervised learning for fraud detection and compliance classification, unsupervised learning for clustering transactions and detecting anomalies, and time-series methods for forecasting financial flows. These techniques support oversight of distributed and complex ledgers at a scale beyond the capacity of manual monitoring.
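The three preprocessing steps can be sketched in plain Python as follows. The specific choices are assumptions for illustration: min-max scaling for normalisation, one-hot encoding for categorical attributes, and bag-of-words counts for vectorising descriptions. The record fields and values are hypothetical. In practice, scikit-learn's `MinMaxScaler`, `OneHotEncoder`, and `CountVectorizer` provide tested equivalents that also retain the fitted parameters for transforming new data.

```python
import re

# Hypothetical TE records: a numeric amount, a categorical attribute and a free-text description.
records = [
    {"amount": 120.0, "region": "EU", "description": "office supplies invoice"},
    {"amount": 980.0, "region": "US", "description": "consulting services invoice"},
    {"amount": 450.0, "region": "EU", "description": "software licence renewal"},
]


def min_max(values):
    """Rescale numbers to [0, 1]."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    return [(v - lo) / span for v in values]


def one_hot(values):
    """One binary column per distinct category (sorted for a stable column order)."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def bag_of_words(texts):
    """Word-count vectors over a shared, sorted vocabulary."""
    tokenised = [re.findall(r"[a-z]+", t.lower()) for t in texts]
    vocab = sorted({w for toks in tokenised for w in toks})
    return vocab, [[toks.count(w) for w in vocab] for toks in tokenised]


amounts = min_max([r["amount"] for r in records])
regions, region_vecs = one_hot([r["region"] for r in records])
vocab, text_vecs = bag_of_words([r["description"] for r in records])

# One feature row per TE record: [normalised amount] + region one-hot + word counts.
features = [[a] + rv + tv for a, rv, tv in zip(amounts, region_vecs, text_vecs)]
```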

The potential benefits of this analytical integration are substantial. Fraud detection models can flag suspicious activities earlier than conventional audit processes. Clustering models help regulators and auditors identify unusual transaction patterns. Forecasting models help predict cash flows or compliance risks, enhancing the predictive capacity of accounting oversight. These outcomes matter not only because they are technically feasible but also because of their implications for accountability in digital finance.

3.1. Motivation for Applying Machine Learning and Data Mining

Several considerations motivate applying ML and data mining to TE accounting data. ML can uncover hidden patterns and relationships within large and diverse transaction datasets, yielding insights that manual review would likely miss. Predictive models provide a methodical way to anticipate outcomes, assess risks, identify anomalies, and estimate future financial trajectories from historical metadata trends. ML also automates monitoring and anomaly detection: algorithms provide continuous oversight, flag irregularities promptly, and reduce manual effort. This proactive monitoring extends to core auditing and governance tasks, supporting continuous rather than periodic checks against policy and fostering a culture of ongoing compliance. Moreover, ML-generated patterns and predictions give managers evidence-based support for strategic decisions across organisational domains. Finally, given the large data volumes inherent in TE accounting, ML can extract intelligence from interconnected datasets at a scale that traditional tools cannot match.

ML classifiers and rules also streamline compliance by systematically checking for violations and reducing the cost of oversight through manual review. By highlighting outliers, ML strengthens forensic capabilities against fraud and error and surfaces both intentional and unintentional reporting discrepancies. Its iterative learning process also builds organisational knowledge over time, as feedback loops improve model accuracy on both recurring and novel transactions. In TE accounting, algorithm selection is therefore important, and clustering and classification algorithms are particularly relevant. Clustering algorithms, such as K-means or hierarchical clustering, suit TE data because they identify patterns and group transactions by similarity (Li et al., 2024). This capability supports anomaly detection: transactions that fit poorly within any group can indicate irregularities or potential fraud. Classification algorithms, such as decision trees or Support Vector Machines (SVMs), categorise transactions into distinct classes based on historical patterns and data features (Elommal & Manita, 2021). They can assign labels such as legitimate or suspicious, helping to identify potentially fraudulent transactions or unusual patterns that deviate from the norm.
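The following sketch illustrates how K-means groups transactions and how cluster cohesion can be checked with the silhouette score. It is a plain implementation of Lloyd's algorithm with deterministic initialisation (the first k points), chosen for reproducibility rather than quality. The two-dimensional feature vectors (for instance, a normalised amount and a normalised time of day) are hypothetical. Production work would normally use a library implementation with better initialisation, such as scikit-learn's `KMeans` with k-means++.

```python
import math


def kmeans(points, k, iterations=20):
    """Lloyd's algorithm; the first k points serve as initial centroids (deterministic, illustrative)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


def silhouette(points, labels):
    """Mean silhouette coefficient s = (b - a) / max(a, b); needs at least two clusters."""
    scores = []
    for i, p in enumerate(points):
        own = [math.dist(p, q) for j, q in enumerate(points) if labels[j] == labels[i] and j != i]
        if not own:
            scores.append(0.0)  # usual convention for a singleton cluster
            continue
        a = sum(own) / len(own)  # mean distance within own cluster
        b = min(  # mean distance to the nearest other cluster
            sum(math.dist(p, q) for j, q in enumerate(points) if labels[j] == c) / labels.count(c)
            for c in set(labels) if c != labels[i]
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)


# Hypothetical features per transaction: (normalised amount, normalised hour of day).
points = [(0.05, 0.10), (0.90, 0.85), (0.08, 0.12), (0.10, 0.05), (0.88, 0.92), (0.95, 0.90)]
labels, centroids = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 0, 1, 1]
print(round(silhouette(points, labels), 2))
```

A silhouette close to 1 indicates compact, well-separated groups; individual points with low or negative silhouette values are natural candidates for closer review.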

Moreover, the interpretability and explainability of these algorithms are crucial in the financial domain, as they provide insights into the reasoning behind classification or clustering decisions, which is essential for auditing and compliance purposes (Zhang et al., 2022).
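To illustrate why interpretability matters, the sketch below fits the simplest decision tree, a single split (a decision stump), and returns the learned rule as readable text that an auditor can inspect. It is a deliberately simplified toy. The features, labels, and helper names are hypothetical, and it counts misclassifications rather than using an impurity measure such as Gini, as full tree learners typically do. It also considers only "greater than" splits.

```python
def fit_stump(rows, labels, feature_names):
    """Fit a depth-1 decision tree (decision stump): choose the feature and threshold
    whose rule 'value > threshold => suspicious' misclassifies the fewest rows."""
    best = None
    for f, name in enumerate(feature_names):
        for threshold in sorted({row[f] for row in rows}):
            errors = sum((row[f] > threshold) != y for row, y in zip(rows, labels))
            if best is None or errors < best[0]:  # ties keep the earlier candidate
                best = (errors, f, name, threshold)
    errors, f, name, threshold = best
    rule = f"flag as suspicious if {name} > {threshold}"
    return (lambda row: row[f] > threshold), rule, errors


# Hypothetical training data: (amount, hour of day, new counterparty flag) with audit labels.
feature_names = ["amount", "hour", "new_counterparty"]
rows = [(120, 10, 0), (95, 14, 0), (4800, 3, 1), (150, 11, 0), (5200, 2, 1), (300, 16, 0)]
labels = [False, False, True, False, True, False]

predict, rule, errors = fit_stump(rows, labels, feature_names)
print(rule)    # flag as suspicious if amount > 300
print(errors)  # 0
```

The returned rule string is the model in its entirety, which is what makes the decision traceable during an audit; deeper trees keep this property, but their rules grow longer.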

By applying clustering and classification algorithms to TE data, organisations can analyse and interpret financial data more effectively, improving risk management, fraud detection, and decision-making about financial transactions.

3.2. Benefits of Transparency, Insights and Fraud Detection

Empowering TE accounting with ML yields advantages in transparency, insight, and fraud detection. Integrating ML into the accounting framework increases visibility into the full transaction context, because financial and metadata records are interconnected. ML algorithms further enhance transparency by automatically identifying anomalies and outliers (Awosika et al., 2024). The combination also strengthens compliance and governance through real-time monitoring, alerts, and classification and rules that pinpoint regulatory issues at a detailed level. Strategic decision-making benefits as data-driven predictive patterns and associations provide objective guidance and reveal optimisation opportunities across various dimensions. Continuous organisational learning is supported by feedback loops that refine models and knowledge as data is analysed iteratively, informing long-term planning and decision-making. Automated fraud and error detection identifies unusual profiles and transactions that exceed set thresholds, reducing oversight costs while supporting forensic investigation. Predictive models forecast potential risks, liabilities, and problem areas, allowing preemptive controls that strengthen risk mitigation compared with reactive, post-event approaches. Ultimately, combining TE accounting with ML enhances credibility with stakeholders through intelligent oversight and minimises disruptions from compliance issues or reporting inaccuracies, reinforcing its strategic benefits across governance, compliance, and decision-making.
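Threshold-based detection against unusual profiles can be sketched as follows. The sketch assumes that each counterparty's historical amounts form its baseline profile and flags a new transaction when its z-score exceeds a chosen threshold (3 here, an arbitrary illustrative value). The history, the threshold, and the helper names are hypothetical. Each flag carries a reason string to support forensic follow-up.

```python
from statistics import mean, stdev

# Hypothetical history of past amounts per counterparty.
history = {
    "Supplier A": [1000, 1050, 980, 1020, 990, 1010],
    "Supplier B": [200, 210, 190, 205, 195],
}


def build_profiles(history):
    """Baseline per counterparty: mean and sample standard deviation of past amounts."""
    return {cp: (mean(v), stdev(v)) for cp, v in history.items() if len(v) >= 2}


def flag(tx, profiles, z_threshold=3.0):
    """Return (flagged, reason) for a new transaction compared with its counterparty profile."""
    if tx["counterparty"] not in profiles:
        return True, "no profile: first transactions with this counterparty"
    mu, sigma = profiles[tx["counterparty"]]
    if sigma == 0:
        z = 0.0 if tx["amount"] == mu else float("inf")
    else:
        z = (tx["amount"] - mu) / sigma
    if abs(z) > z_threshold:
        return True, f"amount is {z:.1f} standard deviations from the profile mean"
    return False, "within profile"


profiles = build_profiles(history)
print(flag({"counterparty": "Supplier A", "amount": 1030}, profiles))     # (False, 'within profile')
print(flag({"counterparty": "Supplier A", "amount": 5000}, profiles)[0])  # True
print(flag({"counterparty": "Supplier C", "amount": 10}, profiles)[0])    # True
```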

4. A Machine Learning Approach

The integration of machine learning with Triple-Entry (TE) accounting requires a structured methodology that transforms enriched financial and contextual data into analytical features suitable for algorithmic processing. The purpose is not to provide a generic overview of machine learning but to demonstrate how specific techniques can operationalise TE accounting to achieve transparency, compliance, and oversight. The approach involves several stages. Raw TE data, composed of financial records and contextual metadata, is first prepared through feature engineering techniques. Suitable algorithms are then applied, depending on the analytical objectives such as fraud detection, anomaly identification, compliance monitoring, or forecasting. Finally, results are evaluated using metrics aligned with each task, and insights are mapped to applications in accounting, auditing, and regulatory reporting.

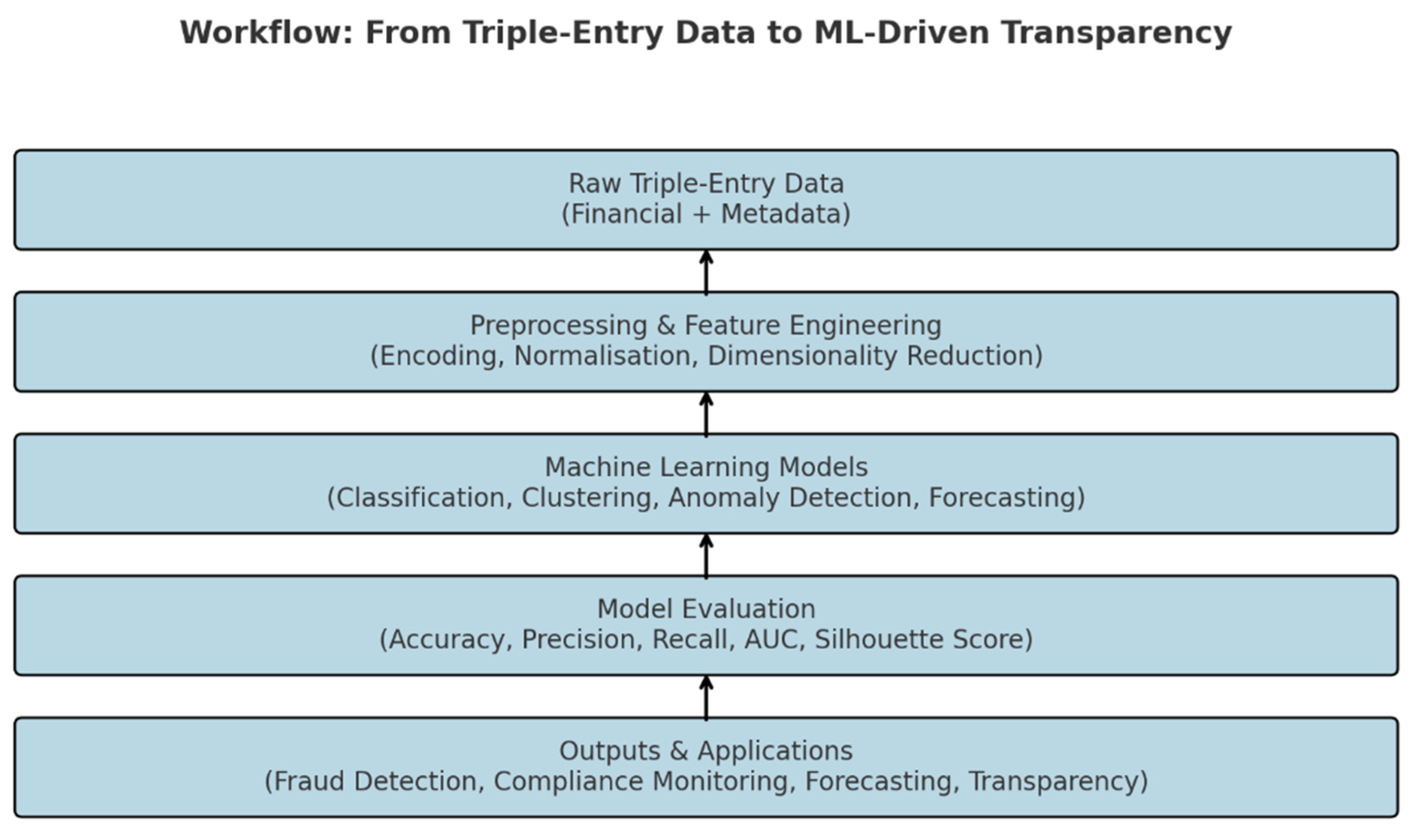

Figure 1 illustrates this workflow, showing the pathway from raw TE data to practical outcomes. It highlights the transformation from input features through modelling and evaluation to the delivery of outputs, such as enhanced fraud detection and continuous auditing. This visual representation clarifies the process and links technical steps to research and practical objectives.

The methodology for representing TE data as features converts financial records into target (output) variables and reshapes metadata attributes into predictive input features. Categorical variables are managed through dimensional modelling techniques, and data irregularities are addressed during feature encoding. Different algorithms then serve different purposes. Classification models such as logistic regression (Bekoe et al., 2018), decision trees (Nawaiseh & Abbod, 2021), and neural networks (Sanchez-Serrano et al., 2020) predict transaction types or outcomes. Clustering algorithms such as K-means (Sahoo & Sahoo, 2021), hierarchical clustering (Thiprungsri, 2019), and Density-Based Spatial Clustering of Applications with Noise (DBSCAN) (Bhattacharya & Lindgreen, 2020) identify natural segments of similar records. Association rule mining techniques (Bhattacharya & Lindgreen, 2020), such as Apriori (Ioannou et al., 2021) and Equivalence Class Transformation (ECLAT) (Tsapani et al., 2020), uncover correlations. Anomaly detection algorithms, including Isolation Forests (Bakumenko & Elragal, 2022), Local Outlier Factor (LOF) (Kuna et al., 2014), and One-Class Support Vector Machine (OCSVM) (Wilfred, 2021), detect outliers. Predictive modelling methods, such as linear or logistic regression (Nawaiseh & Abbod, 2021), Recurrent Neural Networks (RNNs) (Jan, 2021), and time-series analysis, are used for forecasting. Models are evaluated on realistic train/test splits with metrics tailored to each problem: accuracy, precision, and recall for classification (Nawaiseh & Abbod, 2021); the silhouette score for clustering (Suppiah & Arumugam, 2023; Byrnes, 2019); confidence and support for association rules (Suppiah & Arumugam, 2023); and F-score (Miharsi et al., 2024) and Receiver Operating Characteristic (ROC) Area Under the Curve (AUC) (Carrington et al., 2022) for anomaly detection. Strategies to counter overfitting and class imbalance are applied throughout. This systematic approach aims to transform TE data into actionable intelligence by matching a diverse set of ML algorithms to each analytical objective.
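The evaluation metrics named above can be computed directly, which makes their definitions explicit. The sketch below computes precision, recall, and F1 from binary predictions, and ROC AUC as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative (ties count as one half). The labels, scores, and the 0.5 decision threshold are invented for illustration. scikit-learn's `precision_recall_fscore_support` and `roc_auc_score` offer equivalent, tested implementations.

```python
def precision_recall_f1(y_true, y_pred):
    """Binary precision, recall and F1 (positive class = 1/True)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t]
    neg = [s for t, s in zip(y_true, scores) if not t]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical audit outcomes (1 = confirmed irregular) and model anomaly scores.
y_true = [1, 0, 0, 1, 0, 0, 1, 0]
scores = [0.9, 0.2, 0.4, 0.7, 0.1, 0.8, 0.3, 0.05]
y_pred = [s >= 0.5 for s in scores]  # illustrative decision threshold

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
auc = roc_auc(y_true, scores)
```

Here precision, recall, and F1 are each 2/3 and the AUC is 0.8. With rare fraud cases, precision and recall are usually more informative than accuracy, since a model that flags nothing can still score high accuracy.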

4.1. Methodology for Representing Triple-Entry Data as Features

In the proposed methodology, financial attributes such as debit and credit amounts serve as target or label variables, while metadata fields (for example, account identifiers, location, timestamps, and transaction descriptions) are converted into predictive features. Dimensional modelling is used to manage categorical variables, and feature encoding techniques such as one-hot encoding or embeddings handle textual and categorical inputs. Normalisation makes debit and credit amounts numerically comparable, and missing values are treated with imputation strategies to keep the dataset consistent. Feature selection is crucial for reducing dimensionality and eliminating redundancy. Filter methods based on statistical tests such as analysis of variance (ANOVA), or wrapper methods such as recursive feature elimination, retain the attributes with the greatest predictive value. The prepared inputs are then passed to machine learning models chosen according to the research objective: classification and anomaly detection for fraud-related analysis, clustering for pattern discovery, and time-series models for forecasting. Evaluation uses metrics appropriate to the chosen algorithm. For instance, classification tasks are evaluated using precision, recall, and F1-score; clustering is assessed using the silhouette score; and anomaly detection is evaluated using the area under the ROC curve. The methodology therefore creates a consistent link between TE accounting data and machine learning outputs, ensuring that the technical process supports transparency and auditability.

In more detail, financial records are designated as target or label variables, so that debit and credit amounts can be predicted or classified as the model output. Fields such as account and transaction IDs serve as unique identifiers (Ibanez et al., 2021). Metadata attributes are converted into predictive features: categorical variables such as location are one-hot encoded, dates are transformed into integer time deltas or into periods that are then one-hot encoded, and text fields are processed with methods such as TF-IDF⁸ or word embeddings⁹. Dimensional modelling manages categorical variables by creating separate dimension tables for textual fields such as product or customer, extracting categorical variables from these tables, and applying one-hot encoding (Sarwar et al., 2023). Feature engineering structures the inputs appropriately: related fields are grouped into composite features where meaningful, numeric ranges such as debit amounts are normalised, and missing values or sparse attributes are handled explicitly. Feature selection techniques then reduce dimensionality (Chowdhury, 2021) by identifying unimportant or correlated inputs through methods such as ANOVA (Surana & Bhanawat, 2022), with recursive feature elimination applied to improve algorithm performance. This structured approach enables ML algorithms to uncover patterns and relationships within TE data for classification, clustering, and prediction. The workflow is described in Figure 1.
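The encoding and selection steps described above can be sketched in standard-library Python. This is a minimal illustration under stated assumptions, not the paper's implementation. The record layout (`TERecord` and its fields) and all helper names are hypothetical. Mean imputation and min-max scaling are only one choice among the imputation and normalisation strategies mentioned. Only the ANOVA filter is shown; recursive feature elimination is omitted.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean
from typing import Optional


@dataclass
class TERecord:
    """Hypothetical TE record layout; field names are illustrative assumptions."""
    transaction_id: str       # unique identifier, kept out of the feature vector
    location: str             # categorical metadata
    booked_on: date           # timestamp metadata
    debit: Optional[float]    # None marks a missing amount
    credit: Optional[float]


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = mean(observed) if observed else 0.0
    return [fill if v is None else v for v in values]


def min_max(values):
    """Rescale to [0, 1]; a constant column maps to 0."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def encode(records, reference_day):
    """One-hot location, integer day delta, then imputed and normalised amounts."""
    locations = sorted({r.location for r in records})
    debits = min_max(impute_mean([r.debit for r in records]))
    credits = min_max(impute_mean([r.credit for r in records]))
    header = [f"location={loc}" for loc in locations] + ["days", "debit", "credit"]
    rows = []
    for r, d, c in zip(records, debits, credits):
        one_hot = [1.0 if r.location == loc else 0.0 for loc in locations]
        rows.append(one_hot + [float((r.booked_on - reference_day).days), d, c])
    return header, rows


def anova_f(values, labels):
    """One-way ANOVA F-statistic of one numeric feature across class labels."""
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(y, []).append(v)
    k, n = len(groups), len(values)
    if k < 2 or n <= k:
        raise ValueError("need at least two classes and more samples than classes")
    grand = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups.values())
    ss_within = sum((v - mean(g)) ** 2 for g in groups.values() for v in g)
    if ss_within == 0:
        # Perfect separation gives an unbounded F; a constant column scores 0.
        return float("inf") if ss_between > 0 else 0.0
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def select_top_k(header, rows, labels, k):
    """Filter-style selection: keep the k columns with the highest F-score."""
    scores = {name: anova_f([row[i] for row in rows], labels)
              for i, name in enumerate(header)}
    return sorted(scores, key=scores.get, reverse=True)[:k]
```

Consider three records booked in LON, NYC and LON on 1, 3 and 5 January 2024. Their debits are 100, missing and 300, and their credits are missing, 50 and 150. For these records, `encode(records, date(2024, 1, 1))` returns the NYC row as `[0.0, 1.0, 2.0, 0.5, 0.0]`. That row holds the one-hot location, a two-day delta, the imputed debit and the observed credit after scaling.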

Figure 1.

Workflow for transforming Triple-Entry (TE) data into ML-driven transparency. The process begins with raw financial and contextual records, which are preprocessed and engineered into features. Selected ML models (classification, clustering, anomaly detection, forecasting) are then applied, evaluated with appropriate metrics, and used to support applications such as fraud detection, compliance monitoring, forecasting, and transparency in financial reporting.
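The evaluation metrics named earlier for classification and anomaly detection can be illustrated as follows. The sketch assumes binary labels in which 1 marks a flagged (for example, fraudulent) transaction, and the function names are illustrative. ROC AUC is computed through its pairwise-ranking interpretation rather than by tracing the curve. The silhouette score for clustering is not covered.

```python
def precision_recall_f1(y_true, y_pred):
    """Precision, recall and F1 for binary labels (1 = flagged transaction)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win; anomaly scores are assumed to be higher for
    more suspicious transactions.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```

With labels `[1, 0, 1, 0, 0]` and predictions `[1, 0, 0, 1, 0]`, precision, recall and F1 are all 0.5. With anomaly scores `[0.9, 0.1, 0.4, 0.6, 0.2]`, five of the six positive-negative pairs are ranked correctly, so the AUC is 5/6.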


5. The Relation Between Triple-Entry Accounting and Multiparty Computation (MPC)

Multiparty Computation (MPC), also called secure multiparty computation and commonly built on primitives such as oblivious transfer, aims to develop techniques that enable parties to jointly compute a function over their inputs while keeping those inputs private (Evans et al., 2018). MPC fits well with blockchains because it enables privacy-preserving, decentralised computation in a transparent and incentive-compatible manner, all of which are important requirements for many blockchain use cases (Zhou et al., 2021). TE accounting and MPC can be complementary technologies. TE alone cannot replace the cryptographic security guarantees that MPC-based solutions provide for multiparty computations on blockchains, as shown in Table 4. MPC is still needed for that core functionality (Ibanez et al., 2021; McCallig et al., 2019; Cao et al., 2024). The difference between the two lies in their core functionalities. MPC enables secure computation among multiple parties without exposing their private inputs, thereby ensuring a high level of confidentiality. Because it rests on well-established cryptographic principles, MPC offers security guarantees even when some parties collude or act maliciously. This holds provided that the protocol is designed for that adversary model and that the number of corrupted parties stays within its threshold.
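The core MPC idea of computing on private inputs can be illustrated with additive secret sharing, one of the simplest MPC building blocks. This is a swapped-in illustration: it is neither oblivious transfer nor a protocol from the cited works. In this sketch all parties are simulated in one process, and the prime modulus and function names are assumptions. Security holds only against honest-but-curious parties, so the sketch does not protect against parties that deviate from the protocol. That stronger guarantee requires additional machinery.

```python
import secrets

PRIME = 2**61 - 1  # Mersenne prime used as the field modulus (an assumption)


def share(value, n_parties):
    """Split value into n_parties random shares that sum to value modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    """Recombine all shares; any n-1 of them are uniformly random and reveal nothing."""
    return sum(shares) % PRIME


def secure_sum(private_inputs):
    """Simulate n parties jointly summing their inputs; only the total is revealed."""
    n = len(private_inputs)
    # dealt[i][j] is the share of party i's input that is sent to party j.
    dealt = [share(x, n) for x in private_inputs]
    # Each party j adds up the shares it received, seeing only random-looking values.
    partial = [sum(dealt[i][j] for i in range(n)) % PRIME for j in range(n)]
    return reconstruct(partial)
```

For example, three institutions holding 1200, 350 and 75 obtain `secure_sum([1200, 350, 75]) == 1625` without any of them seeing another's figure.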

In contrast, TE accounting, a system designed for the immutable and auditable recording of financial transactions, lacks the comprehensive security guarantees inherent in MPC. TE accounting excels at maintaining transaction records on a decentralised ledger, but it does not by itself enable secure distributed computation of the kind MPC provides. The two can therefore be combined into a robust framework. MPC protocols offer a secure avenue for computing transactions and business logic, and the outcomes are then documented on-chain in a triple-entry format for enhanced transparency. Integrating zero-knowledge proofs (Sun et al., 2021), generated via MPC, with TE accounting could provide mathematical verification of transaction validity. Moreover, MPC could enforce regulatory compliance logic on-chain, channelling the results into TE accounting systems for governance and oversight. In short, the strengths are complementary. MPC handles confidential computation, while TE accounting records the outputs immutably, ensuring transparency and accountability.
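The combination described above, in which MPC computes a result and TE records it, can be sketched as a hash-chained receipt log. This is an assumption-laden illustration, and the receipt fields and function names are hypothetical. An HMAC with a shared key stands in for the public-key signatures that a real triple-entry receipt would carry. Anyone holding the HMAC key could forge a tag, so the sketch proves integrity only among key holders.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # hypothetical starting hash for an empty chain


def _encode(prev_hash, payload):
    # Canonical JSON so that every party hashes byte-identical input.
    body = {"prev": prev_hash, "payload": payload}
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def make_receipt(prev_hash, payload, key):
    """Hash-chained receipt for an MPC result; HMAC stands in for a digital signature."""
    encoded = _encode(prev_hash, payload)
    return {
        "prev": prev_hash,
        "payload": payload,
        "hash": hashlib.sha256(encoded).hexdigest(),
        "tag": hmac.new(key, encoded, hashlib.sha256).hexdigest(),
    }


def verify_chain(receipts, key):
    """Recompute every link and tag; an edited or reordered receipt breaks the chain."""
    prev = GENESIS
    for r in receipts:
        encoded = _encode(r["prev"], r["payload"])
        if r["prev"] != prev or hashlib.sha256(encoded).hexdigest() != r["hash"]:
            return False
        expected = hmac.new(key, encoded, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, r["tag"]):
            return False
        prev = r["hash"]
    return True
```

Each party to the transaction would keep a copy of the same receipts. Changing a recorded pass/fail result, or reordering the receipts, makes `verify_chain` return `False`.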

The Potential of Integrating Triple-Entry Accounting with Multiparty Computation (MPC)

MPC can significantly enhance triple-entry accounting on blockchain networks through various applications, such as privacy-preserving audits and compliance checks, secure record linkage, regulatory calculations, fraud detection, dispute resolution, encrypted query execution, and risk modelling. Privacy-preserving audits and compliance checks can be conducted using MPC, allowing sensitive financial data, such as transactions and Know-Your-Customer (KYC) records, to undergo auditing or compliance logic checks without disclosing the underlying information. The pass/fail results can then be securely recorded in the TE system. MPC also enables secure record linkage, facilitating the linking of related records, such as transactions, across different parties or systems in a privacy-preserving manner. This linkage supports functionalities such as netting and reconciliation within the TE logs.
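Privacy-preserving netting, one of the linkage uses mentioned above, can be sketched with additive secret sharing. In this hypothetical setup, `owes[i][k]` is the amount party i owes party k, and only party i knows it. The protocol runs in three steps:

1. Each party splits its row of obligations into random shares and hands one share to every participant.
2. Each share-holder computes, locally, its share of every party's net position. Subtraction is linear, so this step needs no interaction.
3. Only the final net positions are opened.

The function names and the modulus are assumptions. The sketch simulates all parties in one process and assumes honest-but-curious participants.

```python
import secrets

PRIME = 2**61 - 1  # field modulus; |net positions| must stay well below PRIME // 2


def share(value, n):
    """Split value into n additive shares modulo PRIME."""
    parts = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    parts.append((value - sum(parts)) % PRIME)
    return parts


def to_signed(x):
    """Map a field element back to a signed integer (net positions may be negative)."""
    return x - PRIME if x > PRIME // 2 else x


def private_netting(owes):
    """Return each party's net position (receivable minus payable), opening only the nets."""
    n = len(owes)
    # sh[i][k][j]: party j's share of owes[i][k]
    sh = [[share(owes[i][k], n) for k in range(n)] for i in range(n)]
    # Holder j computes its share of every party's net position locally.
    net_sh = [
        [
            (sum(sh[i][k][j] for i in range(n)) - sum(sh[k][m][j] for m in range(n))) % PRIME
            for k in range(n)
        ]
        for j in range(n)
    ]
    # Opening: sum the holders' shares for each party k.
    return [to_signed(sum(net_sh[j][k] for j in range(n)) % PRIME) for k in range(n)]
```

Suppose party A owes B 100, B owes C 40 and C owes A 30. The opened net positions are then -70, 60 and 10, which sum to zero as netting requires.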

Furthermore, MPC can power fraud detection, enabling complex ML/AI models to run privately on transaction data, with the findings reported anonymously in a triple-entry format. Regulatory calculations, such as prudential standards and capital adequacy ratios, can be computed privately with MPC on financial data before public reporting. In disputes, MPC protocols can be used to verify facts or compute ledger states privately, supporting resolution while minimising data disclosure. Encrypted queries executed through MPC allow users to query the triple-entry ledger and extract private analytics without revealing the queries or their results. Finally, for risk modelling, MPC can be used to compute counterparty risk measures, run stress tests, and perform portfolio analyses privately, improving risk assessment across the network. MPC can thus transform privacy-preserving audits in TE accounting. It provides a secure means to verify transactions and maintain compliance without exposing sensitive financial information. For instance, a consortium of financial institutions could adopt MPC protocols for secure audit trail verification, validating transaction histories across multiple parties without revealing individual transaction details. Likewise, compliance logic checks on KYC records can be performed without disclosing personal information, ensuring regulatory compliance while safeguarding privacy (Chen et al., 2021). In another scenario, MPC enables the secure linkage of cross-institutional transaction records for collaborative analysis without sharing sensitive details, supporting functions such as netting and reconciliation while preserving data privacy (Li et al., 2024). Similarly, a financial consortium can use MPC to run fraud detection models privately and report the findings anonymously in a triple-entry format. Irregularities are then identified without compromising the privacy of individual transactions. These examples illustrate how MPC can support privacy-preserving audits and compliance checks in TE accounting systems while maintaining data confidentiality, audit integrity, and regulatory compliance.
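The private regulatory calculation mentioned above can be illustrated with a consortium-level capital ratio. The sketch rests on several assumptions: a hypothetical function name, integer amounts, public risk weights expressed in basis points, and a simplified ratio of total capital to risk-weighted assets rather than the full prudential rules. Each institution secret-shares its capital and exposures. Multiplying a share by a public weight is a local operation, so share-holders can compute shares of the aggregate risk-weighted assets without interaction. Only the two aggregates are opened, which is itself a disclosure the consortium would need to accept.

```python
import secrets
from fractions import Fraction

PRIME = 2**61 - 1  # field modulus; weighted totals must stay below it


def share(value, n):
    """Split value into n additive shares modulo PRIME."""
    parts = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    parts.append((value - sum(parts)) % PRIME)
    return parts


def aggregate_capital_ratio(capital, exposures, weights_bp):
    """Aggregate capital / aggregate risk-weighted assets, opening only the two totals.

    capital[i]: institution i's eligible capital (integer, private)
    exposures[i][c]: institution i's exposure in asset class c (integer, private)
    weights_bp[c]: public risk weight for class c in basis points (10_000 = 100%)
    """
    n, n_classes = len(capital), len(weights_bp)
    cap_sh = [share(c, n) for c in capital]                       # cap_sh[i][j]
    exp_sh = [[share(e, n) for e in row] for row in exposures]   # exp_sh[i][c][j]
    # Holder j computes its shares of both aggregates locally.
    cap_total = [sum(cap_sh[i][j] for i in range(n)) % PRIME for j in range(n)]
    rwa_bp = [
        sum(weights_bp[c] * exp_sh[i][c][j] for i in range(n) for c in range(n_classes)) % PRIME
        for j in range(n)
    ]
    total_capital = sum(cap_total) % PRIME
    total_rwa = Fraction(sum(rwa_bp) % PRIME, 10_000)  # basis points back to currency units
    if total_rwa == 0:
        raise ValueError("aggregate risk-weighted assets are zero")
    return Fraction(total_capital) / total_rwa
```

Take capital of 80 and 40, exposures of (500, 300) and (200, 100), and risk weights of 100% and 50%. The aggregate risk-weighted assets are then 900, and the function returns `Fraction(2, 15)`, about 13.3%.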

6. Discussion

The framework developed in this study highlights how the integration of machine learning with Triple-Entry accounting advances the transparency and auditability of financial systems.

Unlike earlier proposals that emphasise TE’s conceptual novelty or blockchain compatibility, this framework directly links TE to ML-driven audit processes. Prior literature stops short of demonstrating this operational integration, often treating TE as a theoretical extension without specifying how data can be transformed for algorithmic use. The added value of this study lies in demonstrating the full chain from data representation to model evaluation, positioning TE not merely as a recording system but as a data architecture optimised for computational oversight.

The contribution of this work lies in clarifying the technical pathway from enriched accounting records to analytical outputs that support fraud detection, compliance monitoring, and predictive assessment. It is not a generic review but a structured proposal that demonstrates how TE data can be operationalised through ML-driven processes. The claims made in this section rest on two levels of evidence. First, they reflect the structured review of existing literature on TE, blockchain, and ML applications in auditing and compliance. Second, they derive from the conceptual model outlined in Section 4, which demonstrates how TE records can be transformed into ML features, processed by suitable algorithms, and evaluated using clear metrics. Distinguishing between these sources avoids conflating general industry observations with the specific contribution of this paper. From a practical standpoint, the framework indicates that anomaly detection models applied to TE datasets have direct implications for fraud prevention and continuous oversight. Similarly, clustering and classification methods give regulators and auditors tools for detecting irregularities in complex distributed records at a scale beyond what manual monitoring can handle. The integration with multiparty computation extends these applications into privacy-preserving environments, supporting compliance checks and secure collaborative analytics.

At the same time, limitations must be acknowledged. The framework remains conceptual and has not been tested empirically or through simulation. The findings should therefore be interpreted as a foundation for future studies rather than as validated outcomes. Nevertheless, the framework provides a roadmap that can guide researchers and practitioners in designing pilot implementations, case studies, and experimental prototypes to test the proposed integration in real-world settings.

Empowering TE accounting with ML opens a range of possibilities and raises several considerations. The analytical benefits are substantial. TE accounting generates extensive structured financial data and metadata that can be harnessed for advanced analytics using ML and AI, yielding insights and applications that enhance transparency, decision-making, and governance. Privacy concerns, however, must be addressed. Techniques such as anonymisation and secure MPC are needed to safeguard individual privacy while still enabling ML on aggregated TE data. Regulatory considerations also shape the integration of ML with TE accounting, particularly across jurisdictions. Regulators play a central role in setting standards and guidelines on data privacy, model governance, and related matters to ensure that ML is used ethically and compliantly with sensitive financial records. Depending on their stringency and their adaptability to new technology, these regulations can either facilitate or hinder adoption.

Furthermore, applying analytics to TE data in conjunction with alternative data sources could enhance financial inclusion. It would allow better credit risk assessment for individuals with limited traditional credit histories, thereby broadening financial access. The introduction of ML into TE accounting could also spur new business models, with startups offering TE accounting and analytics services built on technologies such as blockchain. Such innovation could significantly improve compliance, auditing practices, and risk management within organisations. As this transformation progresses, professional practice in accounting, auditing, and related fields is likely to evolve substantially. While certain job roles may change, overall demand for skilled professionals may increase as advanced analytics capabilities are integrated, requiring new skill sets within these industries. Model security is paramount in this data-driven environment. Robust defences against adversarial attacks on ML systems are needed to prevent manipulation of the risk and fraud analyses derived from TE data. Explainability is equally critical, so that stakeholders can understand and validate the analyses these systems generate, fostering trust and transparency in decision-making. A thorough understanding of regulatory frameworks across jurisdictions, and adherence to them, will therefore be essential when integrating ML with TE accounting.

6.1. Key Implications and Organisational Impact

Empowering TE accounting with ML carries significant implications for organisations:

- Transparency and trust. Advanced analytics can increase transparency and provide deeper insights, strengthening accountability and stakeholder trust.
- Compliance and governance. ML can automatically detect anomalies and non-compliant patterns, reinforcing internal controls and risk management.
- Auditing. Continuous monitoring and customised alerts streamline auditing, reducing manual effort for auditors and enabling proactive issue identification.
- Predictive decision-making. Forecasting trends, risks, and anomalies supports a shift from reactive to preventive strategies.
- Automation and efficiency. Tasks such as reconciliations can be automated end-to-end with ML-powered workflow tools, freeing resources for more strategic work.
- Data-driven culture. Broad data access and self-service analytics support evidence-based strategies and discussions.
- Workforce reskilling. Employees require training in new tools, analytics skills, and revised work methods, and some jobs may be disrupted.
- Transition costs. Upfront investment in platforms, reskilling initiatives, and change management requires adequate budgets and organisational support.
- Vendor management. Outsourcing certain capabilities introduces risks to data security and model reliability that demand robust governance frameworks.
- Centralisation versus decentralisation. Decentralised structures can enhance collaboration, whereas centralised control often eases compliance.

Understanding these implications is essential for realising the full potential of ML-empowered TE accounting in the modern business environment.

6.2. Addressing Key Challenges in Data and Systems: Quality, Scalability and Beyond

Empowering TE accounting with ML poses key challenges that require careful consideration and practical solutions. Data quality is a critical issue, as TE data can be noisy, incomplete, or erroneous. Data cleaning, imputation, and validation techniques, including ML-based ones, are essential to improve data quality and reliability. Scalability is another hurdle, given the large volume of TE data. Distributed ML platforms that process large datasets efficiently on cloud infrastructure can address scalability concerns. For instance, consider a leading financial services firm that implemented a distributed ML system on cloud servers. The system allowed the firm to process large volumes of transaction data in real time, improving operational efficiency and scalability. Model bias and fairness are significant challenges, as ML models may treat certain groups unfairly. Techniques such as adversarial debiasing during model training can help mitigate bias and promote fair outcomes (Singh et al., 2021; Faccia, 2019). A successful example is a fintech company's use of adversarial debiasing in a credit scoring system, which resulted in more equitable lending decisions and reduced bias. Privacy and security concerns around sensitive financial data call for stringent access controls and privacy-preserving techniques, such as federated learning or differential privacy, whenever the data are used for ML. Collaboration and partnerships with regulators can provide oversight and guidance, ensuring compliance with data privacy regulations. As noted above, the interpretability of ML models is crucial for validation and recourse, which requires making clear how insights are derived. Explainable AI methods improve model interpretability and transparency. For instance, a global audit firm integrated explainable AI techniques into its fraud detection models, improving model transparency and auditability. Integration challenges arise when combining TE data, ML platforms, and analytics tools, requiring meticulous system design and effective change management.

Microservices can streamline integration and ease transitions. In one case study, a multinational corporation adopted a microservices¹⁸ architecture for its ML-powered TE accounting system and achieved improved agility and scalability. The absence of standardised benchmarks makes model performance difficult to evaluate. Industry consortia can play a key role in establishing benchmarking standards and facilitating performance evaluations. For example, the establishment of a benchmarking consortium in the financial services sector led to standardised metrics for evaluating ML models in transaction processing. Moreover, the shortage of skills spanning accounting, data science, and compliance underscores the need for a talent pool with multidisciplinary expertise. Training programmes and collaborations with academic institutions are instrumental in bridging these gaps and developing a workforce adept at managing ML-empowered TE accounting. Addressing these challenges with strategic solutions, informed by successful case studies, is fundamental to realising the full potential of this integration within organisations.

7. Conclusions

This paper develops a framework that links Triple-Entry (TE) accounting with machine learning (ML) to operationalise transparency and oversight in financial reporting. The main contribution is methodological: a structured pathway is outlined, from enriched TE records through feature engineering and model selection to applications such as fraud detection, compliance monitoring, and forecasting. A critical comparison with multiparty computation (MPC) further clarifies how privacy-preserving mechanisms complement TE in distributed environments.

The study is qualitative and conceptual. It draws on existing literature and synthesises approaches into a unified model but does not include empirical testing or simulation. This limitation restricts immediate applicability, although the framework establishes a foundation for future experiments and pilot projects.

Practical implications are evident for auditors, regulators, and system designers. The proposed workflow supports continuous auditing, improves anomaly detection in distributed ledgers, and provides regulatory bodies with technical directions for embedding transparency in digital finance. Future research should test the framework using real or simulated datasets, evaluate algorithmic performance on TE records, and explore sector-specific applications in supply chain management, insurance, and capital markets. Developing scalable, privacy-aware ML architectures remains an essential next step for translating this conceptual contribution into operational practice.