1. Introduction

Imagine it is the year 2030: every major hedge fund now offers consumers the ability to invest in its custom Generative AI (GenAI) fund, which makes trading decisions at a lower cost than traditional funds. These GenAI-run funds are built on top of both commercial and bespoke large language models (LLMs) to manage every stage of stock investment, from stock price prediction to full portfolio management. Investment firms, banks, and hedge funds want to enjoy the benefits of deploying GenAI, such as reduced staffing costs, increased productivity, and improved data insights (Aggarwal et al., 2025).

There are also drawbacks to deploying GenAI for stock investment: If all the GenAI-run funds are heavily coordinated, they could all give a “sell” signal simultaneously, triggering a crash like in 1929. If they all provide a “buy” signal, the GenAI-run funds could inadvertently cause a global bubble like the dotcom bubble of the early 2000s. Additionally, following patterns of investment in conventional funds, consumers could invest in several GenAI funds. However, if these consumers are not informed about the coordination between the GenAI-run funds’ stock investment decisions, they might falsely assume that spreading money across GenAI-run funds will reduce investment risk to safeguard against market volatility. These are examples of systemic risks that are becoming more urgent to solve as GenAI models like ChatGPT, DeepSeek, and Claude are deployed across more industries and countries. The systemic risk introduced by deploying GenAI models makes critical global systems like the stock market more fragile and vulnerable to rapid fluctuations.

This is not a hypothetical scenario. This year, the Bank of England recognized systemic risk as a critical issue when it stated that AI deployed in the financial sector could lead to a market crisis (Makortoff, 2025). GenAI has already introduced systemic and firm-level risk. Because all current LLMs are built using the transformer architecture (Vaswani et al., 2023) and trained on the same limited financial data, such as stock prices and economic indicators, investment decisions based on LLMs are also likely to be similar. GenAI and LLMs make machine learning techniques for performing investment actions more accessible to firms with fewer resources and less technical expertise. Decisions made by GenAI introduce coordinated, homogeneous actions into global industries, thereby increasing systemic risk, which makes the entire industry and the system around it less resilient.

Two types of risk impact the development of financial models and trading strategies: endogenous and exogenous risk (Usman et al., 2024). Existing risk analysis for GenAI focuses on endogenous risk from model performance or design and its impact, such as consumer harm or bias. The less-studied second type of risk, exogenous risk, stems from external factors like macroeconomic changes, natural disasters, or sudden regulatory changes. Exogenous risk factors create unpredictable and volatile market conditions with negative consequences for the reliability of LLM predictions. Exogenous risk also creates a fragile stock market that is influenced by hype cycles and bandwagon investing, in which many investors invest heavily in the same field without proper valuation studies, such as the over-investment in cryptocurrency in 2020 (Ascenta Wealth, 2023). However, current AI risk research focuses only on endogenous risk, and there is little quantitative research to help regulators and industry firms understand the exogenous risk introduced by deploying GenAI into complex global systems.

Homogeneous outcomes from LLMs used to perform stock price predictions are an example of algorithmic bandwagon investing because similar price predictions cause multiple models to simultaneously buy or sell the same stocks. Modern Portfolio Theory uses the mathematical concepts of covariance and correlation to reduce risk in stock portfolio management. This research uses them to determine the level of homogeneity between the stock prices predicted by different LLMs. If most LLMs consistently produce stock price predictions that are positively covariant and correlated with the predictions from other LLMs, then this demonstrates that LLMs have the capability to introduce more systemic risk to the financial industry through coordinated buy/sell actions. Coordinated actions algorithmically manipulate the stock market while making the system more fragile against shocks like policy or industry changes.

Research Goals

Current GenAI benchmarks use accuracy to measure comparative performance of LLMs in the finance industry (endogenous risk) but lack a system-level view to measure coordinated buy and sell actions triggered by positively covariant stock price predictions (exogenous risk). This research aims to bridge the gap in exogenous risk measurement for the deployment of GenAI in the stock trading industry.

This research provides a concrete, quantitative framework to understand how the covariance relationship between LLMs can introduce different levels of systemic risk to the financial industry when applied to a diverse set of stocks, industries, listed exchange countries, and time frames. Originally, this research hoped to determine whether there is a set of Generative AI models that can be deployed as a group to reduce systemic risk. Accordingly, this research does not measure the accuracy of stock price predictions from LLMs (endogenous risk from model performance), only the relationship between them (exogenous risk to firms and the finance industry). This research also identifies potential data-driven technical, cultural, and regulatory mechanisms for governing GenAI deployment to prevent systemic risk, using the stock trading industry as a case study.

The research goals are achieved through a combination of quantitative research and qualitative analysis in which

I design and conduct an experiment to calculate the covariance and correlation between stock price predictions from a geographically diverse set of large language models (LLMs) to understand the relationship between model outputs across geopolitically important regions and industries for multiple investment time frames, reported in Section 4;

Based on these calculations, Section 5 explains how the observed positively correlated stock price predictions from LLMs make algorithmic market manipulation more likely and introduce systemic risk across regions and industries, especially for short time frames;

Finally, policy recommendations are offered for how international governance bodies, governments, and private companies can collaborate through multi-level policy initiatives to reduce the systemic risk of GenAI deployment to preserve a resilient global financial system.

2. Literature Review

As trading frequencies increase and exchanges continue to grow their listed options, machine learning (ML) and artificial intelligence (AI) are increasingly used to efficiently predict stock values and inform portfolio management using a type of Generative AI (GenAI) called large language models (LLMs). This section introduces research about how LLMs can be used to accurately manage stock portfolios, the growing international regulations for AI, and current methods for measuring AI risk.

2.1. Large Language Models for Stock Trading

In the finance industry, BloombergGPT, which was trained on Bloomberg’s large proprietary archive of historical financial data, was the first well-known use of LLMs for financial tasks (S. Wu et al., 2023). Similarly, FinGPT provides an open-source LLM trained for financial tasks (H. Yang et al., 2023). Realizing the potential of proprietary LLMs and possessing the development resources, large hedge funds are devoting internal resources to developing their own LLMs for stock portfolio management. In fact, the popular LLM DeepSeek was the outcome of research conducted by the Chinese quantitative trading fund High-Flyer (Baptista, 2025).

AI portfolio management assists traders in balancing risk and return through predictive modeling (Gerlein et al., 2016). Recently, LLMs such as ChatGPT and proprietary financial models like BloombergGPT have been used to extract meaningful insights from numerical market data, financial reports, specialized domain language, and sentiment analysis of news articles (Carta et al., 2021; L. Yang et al., 2022). LLMs used for stock trading can also leverage large amounts of historical and real-time data to develop more efficient trading strategies (Kumar et al., 2024). Empirical studies show that AI-managed portfolios often outperform human-managed funds due to their ability to process large datasets and identify optimal investment strategies (Miner et al., 2024).

The performance of LLMs for stock price prediction and portfolio management is further enhanced with graph network techniques that represent the relationships and interdependence between assets. For example, integrating graph neural networks (GNNs) (

Chen et al., 2023) or Decoupled Graph Diffusion Neural Networks (DGDNNs) (

You et al., 2024) with LLMs increased the accuracy of the model’s stock price predictions. Integrating hierarchical transformer models enabled the development of a model that can accurately adapt to rapidly changing market conditions (

L. Yang et al., 2022).

For high-impact use cases like finances, providing explanations can increase consumer trust so that they can understand how the AI model made a decision (

Theis et al., 2023). However, the complexity of LLMs and lack of insight into the model functionality has historically made their outputs difficult to interpret (

M. Wang et al., 2024). Some research generates explanations for stock predictions, making LLMs increasingly valuable for industry use (

Koa et al., 2024).

2.2. Risk in Artificial Intelligence

Two types of risk impact the development of financial models and trading strategies (

Usman et al., 2024):

First, endogenous risk comes from within the model design such as overfitting, skewed or stale data, bias, and model instability. Endogenous risks can cause AI trading strategies to perform poorly in real world conditions in comparison to backward-looking training (

X. Zhang et al., 2022), leading to unreliable predictions and unintended financial consequences. In addition, training models on stale and outdated data can lead to inaccurate predictions and inefficient trading decisions (

Tian & Nagayasu, 2023), but risk-reduction by frequent model monitoring and retraining is costly (

Stackpole, 2024).

To manage endogenous risk, AI engineers use techniques like adversarial testing, ensemble modeling, and explainability frameworks (

Bier, 2025;

X. Zhang et al., 2022). Risk reduction best practices have also been developed at universities and corporations for engineers to understand and prevent harmful outcomes from LLMs (

Flores, 2024;

Schwartz, 2024;

Stackpole, 2024). While some research emphasizes the importance of robust validation to help mitigate AI-related risks in financial markets (

Vuković et al., 2025), current benchmarks and evaluation processes focus on endogenous risk, model accuracy to actual stock price changes, and profitability of model actions.

The second, less studied risk is exogenous risk. Exogenous risk comes from external factors like macroeconomic changes, political instability, natural disasters, and sudden regulatory changes. These factors create unpredictable and volatile market conditions with negative consequences for the accuracy and reliability of LLM predictions. Stock market changes are influenced by the actions of dedicated humans working as financial analysts, market predictors, investment portfolio managers, and traders. When LLMs replace humans in these roles, they become influential actors who can shape the stability and movement of the market. When the macroeconomic environment is also impacted by several LLM-based portfolio managers acting independently, research shows that both firm-level risk and industry-wide systemic risk may increase because of similar trading behaviors between the LLMs (Babina et al., 2024; Tian & Nagayasu, 2023). While AI may reduce some risk by providing more accurate stock price predictions, the financial market may become more fragile, volatile, and prone to crisis or crash because of correlated trading strategies across the LLMs (Danielsson et al., 2019). These findings suggest that AI-driven trading models, when widely adopted, may create self-reinforcing feedback loops that exacerbate financial crises rather than mitigate them. Traditional metrics for evaluating risk exposure, like Value at Risk or the Sharpe Ratio (Meyer, 2015), provide valuable insights but fail to capture systemic risk to the industry. New counterfactual risk assessments propose evaluating AI decision-making under hypothetical scenarios to reduce exogenous risk (Green, 2020).

2.3. Current AI Evaluation Methods

Current AI evaluation methods only focus on endogenous risk by evaluating LLMs in stock trading based on their accuracy on established benchmark tests. While LLMs often outperform traditional statistical methods in identifying trends and anomalies in stock prices (

Liang et al., 2023), model accuracy still depends on data quality, feature selection, and model robustness. LLMs still suffer from overfitting and lack of real-time adaptability that make their outputs less reliable in volatile financial markets (

Y. Chang et al., 2023;

Sawada et al., 2023;

X. Wang et al., 2024;

Q. Ye et al., 2023). Because current benchmarking methods are insufficient for an era of rapid AI development (Eriksson et al., 2025), new holistic benchmarks have been developed to ensure LLM stock predictions are robust enough to meet the demands of real-world conditions and complex tasks (McIntosh et al., 2024; Zhuang et al., 2024). PIXIU was developed as a benchmark specifically for the finance industry to test LLMs’ ability to process and follow prompts across a variety of financial tasks, document types, and industry-specific data (Xie et al., 2023). One study showed that while LLMs can accurately predict stock market movement, they do not produce trading strategies that align with traditional human investment behavior (Henning et al., 2025).

Current AI evaluation methods lack a framework for measuring the exogenous (systemic) risk to larger systems introduced by LLMs.

2.4. GenAI Governance

In general, existing AI regulations share the common principles of transparency, fairness, and security, which can all help mitigate systemic risk. For example, financial institutions are increasingly required to implement human oversight in AI-run investment portfolios by developing failure modes to disrupt concerning trends in AI decisions (

Raji et al., 2022). Because many jurisdictions acknowledge that bias in AI models can lead to unfair trading advantages or discriminatory financial practices, fairness audits and algorithmic transparency measures have been proposed (

W. Wu et al., 2020). Regulations like the European AI Act, Securities and Exchange Commission (SEC) guidelines in the United States, and China’s AI governance policies all emphasize algorithmic accountability, although with varying levels of enforcement mechanisms and oversight capabilities (

Sheehan & Du, 2022).

Though China and the United States have produced the most commercially available LLMs, China has much more centralized and comprehensive AI governance regulations. The EU was the first jurisdiction to have a comprehensive AI Act, despite having only one commercial LLM, Mistral from France. The EU’s determination to regulate demonstrates its desire to govern and control emerging technologies before they become integrated into society and more difficult to change (MacKenzie & Wajcman, 1999). By passing the comprehensive EU AI Act, the EU positioned itself as a thought leader in the AI governance space, while the United States focuses primarily on technical leadership. Recently, DeepSeek’s release and China’s technical rise have challenged the United States’ leadership (LeadLeo Research Institute, 2024; Tipranks, 2025). As an early thought leader, the EU can have more negotiating power against powerful international firms like OpenAI and Google, and the region will be less vulnerable to these firms’ profit-driven whims. In this way, the EU is using regulation as a method of control against imported LLMs that will often lack European cultural understanding. The European AI Act, especially paired with existing data use restrictions from GDPR, imposes significant compliance costs, which may discourage rapid AI innovation in favor of slower timelines and ethical development (Hoffmeister, 2024). Because firms must simultaneously use resources for compliance-related legal fees and product development, early regulations like the EU AI Act can create barriers to entry for smaller firms developing new models. In this way, the AI Act can serve as an import tax to prevent foreign models from being adopted in Europe.

Continued Challenges for AI Risk Mitigation

Although many regions share AI governance principles, global AI governance to mitigate risk for the financial sector still faces several challenges for regulators (Lawrence et al., 2023): First, legal systems are slow and fail to keep up with rapidly evolving AI innovation, leading to inconsistent enforcement (Dixon, 2022). Small government offices make oversight of large internet companies, each potentially with many incidents of noncompliance, difficult to sustain. While regulatory offices could employ their own AI tools to help increase efficiency, staffing and financial limitations will hinder governance efforts at scale. Scaling up is the largest challenge for regulators and auditors going forward, who need to audit not only LLM producers but also LLM users, which already include over 50% of firms with over 5000 employees (Eastwood, 2024).

Second, regulation still does not require the real-time adaptive risk monitoring that could hinder unintentional market manipulation from correlated GenAI trading decisions that could spiral market fluctuations into full crashes (

H. Zhang et al., 2024).

Third, audits for AI systems are complicated because AI can be viewed by policy makers as a black box without advanced explanatory components (Y. Li & Goel, 2024). Algorithmic registries in China and the California data broker registry continue to suffer from lag because update timelines for registries and models align more with annual political timelines than with new product releases, which can happen multiple times a year (OpenAI, 2025). Even the EU AI Act, which requires explainability and documentation of financial decisions made by AI, still struggles with enforcement capacity, and fines levied for noncompliance remain minimal against technology giants like Meta (Gotsch & Puchan, 2024).

Fourth, the opportunity cost of policies for risk mitigation may hinder rapid AI innovation because regulation imposes significant compliance costs (Hoffmeister, 2024). Because there are no global standards and regulations for AI in the financial sector, firms operating across multiple jurisdictions must navigate a diverse and complex set of AI compliance requirements or choose not to participate in a given market. However, large banks and other multinational corporations in the financial sector are more accustomed to navigating complex international regulatory environments and already have large legal departments to help adapt their practices to local requirements.

2.5. Current Gap

As AI adoption expands in stock trading, developing new risk-aware performance metrics that assess both exogenous and endogenous risks will be crucial to protecting global financial stability. Current AI regulation fails to keep up with the rapid pace of innovation and focuses only on endogenous risks like consumer harm, without providing frameworks to improve financial resilience.

Without a robust understanding of the exogenous risks of AI deployment in stock trading, AI software could manipulate the market, causing an AI-driven crash similar to the 2008 financial crisis. Data-driven AI governance and regulation will be crucial for protecting critical global systems from unpredictable and drastic changes that can impact the financial stability and livelihood of every person in the world.

This paper fills the gap in two ways. First, unlike most previous research about widespread deployment of LLMs in the financial sector, it centers exogenous risk by providing a metric based on the covariance of LLM stock price predictions to measure the systemic risk from deploying LLMs for portfolio management. Second, it demonstrates the need for cultural changes and AI regulation that keep pace with innovation, especially in the absence of proven technical solutions to reduce systemic risk. The metric can then inform international collaboration to create global governance on AI in the financial industry that reduces the risk of market manipulation and ensures a stable financial system.

3. Materials and Methods

This section defines the methods and data used to understand the systemic risk associated with broadly deploying LLMs for stock portfolio management across the financial sector. Investors distribute risk among a portfolio using covariance between stocks (Banton, 2023). Similarly, having a metric to measure the relationship between outputs of GenAI models can help understand and reduce systemic risk of deploying a portfolio of GenAI models across an industry, like stock portfolio management. This experiment measures risk by calculating the covariance between stock price predictions from various large language models (LLMs) listed in Table 1.

There are six main steps in completing this experiment:

- (1) Determine a set of LLMs and stocks listed to be used to predict prices;

- (2) Determine time frame for stock price predictions;

- (3) Build a database of financial indicators to provide context to stock predictions;

- (4) Design a simple prompt to use financial indicators and past stock prices to predict future stock prices;

- (5) Calculate the covariance and correlation coefficient between the saved results from the LLMs to identify correlated LLM outputs for stock price prediction;

- (6) Automate the prompting process across a set of LLMs and save results from prompts.

This project investigates eight (8) LLMs, each for 11 stocks, over five (5) different time periods, producing 440 tasks input to LLMs, which output stock price predictions. Data was collected between 18 and 27 March 2025.
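The task count follows from the Cartesian product of the three experimental dimensions. The sketch below is a minimal illustration in Python, assuming that the five "time periods" correspond to the five time frames named in Section 3.2; the model identifiers are hypothetical placeholders rather than the models in Table 1, and `enumerate_tasks` is an illustrative helper name.

```python
from itertools import product

# Hypothetical placeholder identifiers; the actual models are those in Table 1.
MODELS = [f"model_{i}" for i in range(1, 9)]

# Ticker symbols as given in Section 3.1 (nine stocks plus two index funds).
STOCKS = ["GM", "1211.HK", "MBGYY", "AMZN", "BABA", "IFNNY",
          "T", "DTE.DE", "0941.HK", "SPX", "FXI"]

# Assumption: the five "time periods" are the five time frames of Section 3.2.
TIME_FRAMES = ["1 day", "1 week", "1 month", "1 quarter", "1 year"]


def enumerate_tasks(models, stocks, time_frames):
    """Return one (model, stock, time_frame) tuple per prediction task."""
    return list(product(models, stocks, time_frames))


tasks = enumerate_tasks(MODELS, STOCKS, TIME_FRAMES)
print(len(tasks))  # 8 * 11 * 5 = 440
```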

3.1. LLM and Stock Selection

Training and deploying LLMs is a computationally, energy-, and talent-intensive task. This means that only a few well-resourced companies in developed countries have succeeded in developing commercially viable LLMs. However, the financial sector has global reach. For this reason, I selected a set of eight LLMs with geographically diverse origins that represented not only the major players in generative AI, but countries with large impact in the financial sector based on stock trading volume (

World Bank, 2022). These countries have more resources for research and development, so LLM-based stock portfolio management is likely to be implemented there first.

As shown in Table 2, stocks are selected to represent three unique sectors (automobile, technology, and communications) across the US, China, and Europe to provide diversity in listed exchange and location of company headquarters. These three industries were selected because each is a focal point of geopolitical competition. If coordinated action from LLMs impacts the stock market in these industries, it impacts not just firms’ profitability but also regional competitiveness and soft power in the global sphere. For automobiles, the rise of electric vehicles produced by Chinese companies like BYD threatens the profitability of traditional car companies from the United States and Europe (Hoskins, 2025). In technology, there is high competition in e-commerce and semiconductors, which can both be impacted by rising tariffs (Liao, 2019; Samuel, 2025). In communications, there is competition to roll out 5G networks and increase market share (China rolls out ‘one of the world’s largest’ 5G networks, 2019), which can be influenced by political tension, as when the Chinese company Huawei was excluded from European 5G network infrastructure (Cerulus & Wheaton, 2022). Two index funds were also selected to represent larger market trends rather than individual firm stock performance.

For the automobile industry, General Motors (GM), BYD (1211.HK), and Mercedes Benz (MBGYY) were selected. For the technology industry, Amazon (AMZN), Alibaba (BABA), and Infineon (IFNNY) were selected. For the communications industry, AT&T (T), Deutsche Telekom (DTE.DE), and China Mobile (0941.HK) were selected. The two index funds are the S&P 500 (SPX) and the iShares China Large-Cap ETF (FXI).

3.2. Time Frame Selection

Typically, investments kept for under five years are considered short-term investments that provide faster liquidity and more risk (timevsrisk, 2010), while those kept for over five years are considered long-term investments that provide more stability. Because short-term trading and short sales are thought to be major influences in the 2008 financial crisis (Boehmer et al., 2011), this research analyzes stock pricing across five short investment time frames (1 day, 1 week, 1 month, 1 quarter, and 1 year), each for 5 periods. All time frames are retroactive. By analyzing stock price predictions at these short-term frequencies, I measure the potential risk of a future financial crisis caused by correlated outputs from LLM-based stock portfolio managers. Sample dates are shown in Table 3.

3.3. Financial Indicators Database

Financial (or economic) indicators provide insights into the macroeconomic environment that could influence changes in stock prices over time. From the large variety of financial indicators used in previous studies to supply context for stock price prediction (

Lin & Lobo Marques, 2024), this paper uses four: Consumer Confidence Index, Unemployment Rate, Long-Term Interest Rate, and Inflation Rate. These financial indicators are chosen because they provide insight into each region’s economic conditions that can impact consumer spending and investment behavior, which then impacts company performance and stock prices. Data for financial indicators are collected from OECD data (

OECD, 2024).

3.4. Prompt Design and Engineering

The prompt design is simple because the goal of this research is to measure the relationship between the responses of unmanipulated and undiversified LLM outputs. The prompts contain two sections: the role, which defines the context and the expected format for inputs and outputs, and the task.

The role is given to the LLM at the beginning of the conversation to guide future responses. The task provides the financial indicators for the time period, stock symbol, country, and target date for the model to use for its stock price predictions. Each task produces one stock price prediction.

The role:

Your role is a stock portfolio manager in charge of predicting future stock prices based on a previous stock price and financial indicators for the country the stock is listed in. The previous price for a single stock and financial indicators will be given to you in the following format. For example:

Stock Symbol: ABC

Previous Price: 234.4

Date Time: 2017-09-14

Country: China

Unemployment Rate (%): 3.0

Consumer Confidence Index (points): 100.0

Long Term Interest Rate (%): 4.1

Inflation Rate (%): 1.3

Your task is to predict the opening price for the stock on the specified date. Please format the output as a row of csv data. For example:

Stock, PredictedPrice, DateTime

ABC, 240.8, 2017-09-15

An example task:

Predict the opening price of AMZN stock on 2022-10-11 given the following stock, previous price, and financial indicators. If 2022-10-11 is a weekend, give a prediction for the last closing price.

Stock Symbol: AMZN

Previous Price: 114.08

Date Time: 2022-07-11

Country: US

Unemployment Rate (%): 3.50

Consumer Confidence Index (points): 96.25

Long Term Interest Rate (%): 2.90

Inflation Rate (%): 8.0028
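The task prompt above follows a fixed template, so it can be generated from a record of stock and indicator values, and the one-row CSV reply can be parsed back into a structured prediction. The following is a minimal sketch under stated assumptions: the function names `build_task_prompt` and `parse_prediction` and the dictionary keys are hypothetical, and the parser assumes the model replies in the CSV format requested by the role, optionally preceded by the header row.

```python
import csv
import io


def build_task_prompt(record, target_date):
    """Format one task prompt from a dict of stock and indicator values."""
    return (
        f"Predict the opening price of {record['symbol']} stock on {target_date} "
        "given the following stock, previous price, and financial indicators. "
        f"If {target_date} is a weekend, give a prediction for the last closing price.\n"
        f"Stock Symbol: {record['symbol']}\n"
        f"Previous Price: {record['previous_price']}\n"
        f"Date Time: {record['previous_date']}\n"
        f"Country: {record['country']}\n"
        f"Unemployment Rate (%): {record['unemployment_rate']}\n"
        f"Consumer Confidence Index (points): {record['consumer_confidence']}\n"
        f"Long Term Interest Rate (%): {record['long_term_interest_rate']}\n"
        f"Inflation Rate (%): {record['inflation_rate']}"
    )


def parse_prediction(response_text):
    """Return (symbol, predicted_price, date) from a CSV-formatted reply."""
    rows = csv.reader(io.StringIO(response_text.strip()), skipinitialspace=True)
    for row in rows:
        # Skip blank lines, malformed rows, and the optional header row.
        if len(row) != 3 or row[0].strip().lower() == "stock":
            continue
        symbol, price, date = (field.strip() for field in row)
        return symbol, float(price), date
    raise ValueError("no prediction row found")


# Values copied from the example task; kept as strings to preserve formatting.
example_record = {
    "symbol": "AMZN", "previous_price": "114.08", "previous_date": "2022-07-11",
    "country": "US", "unemployment_rate": "3.50", "consumer_confidence": "96.25",
    "long_term_interest_rate": "2.90", "inflation_rate": "8.0028",
}
prompt = build_task_prompt(example_record, "2022-10-11")
prediction = parse_prediction("Stock, PredictedPrice, DateTime\nABC, 240.8, 2017-09-15")
```

Keeping the indicator values as strings avoids silently changing how numbers such as 3.50 are rendered in the prompt.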

3.5. Covariance and Correlation Calculations

Covariance measures whether two variables move together in the same trend. Correlation measures the strength of their relationship or trend. For example, if the correlation coefficient is 0, there is no linear relationship between the two variables’ trends, but if the correlation coefficient is 1 or −1, then the variables have a perfectly linear or inverse linear relationship, respectively. In Modern Portfolio Theory, covariance describes the relationship between the returns of two stocks held in a portfolio and the amount of risk in the portfolio. Calculating the covariance between stocks helps determine which stocks to include or exclude from the portfolio to balance the risk of loss (Banton, 2023). A balanced portfolio will have a mix of stocks that are positively and negatively covariant with other stocks in the portfolio, so that a drop in one stock does not mean there will likely be a drop in other correlated stocks, which would severely hurt the portfolio value.

I borrow this idea of portfolio balancing but apply it to the larger stock trading system. For example, assume the “portfolio” to be balanced for risk is the larger stock trading system and each “asset” held is an LLM-based stock portfolio manager. The risk of flooding the portfolio with highly covariant “assets” is that the LLMs give very similar responses. This means that if they all predict high values for future stock prices, several actors will likely initiate a buy of the stock, potentially increasing the risk of a bubble. Likewise, if the LLMs all predict low values for future stock prices, several actors will likely initiate selling of the stock, potentially increasing the risk of a market crash.

By measuring the correlation coefficient between the output of LLMs in a “portfolio” of the larger stock trading system, I measure the risk of unintentional market manipulation caused by widespread deployment of LLMs within a broader, dynamic system. Equations are shown below:
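In their standard sample form, covariance and the Pearson correlation coefficient can be written as follows. The notation is an assumption made for illustration: $x_i$ and $y_i$ denote the predictions of two LLMs for the same task $i$ out of $n$ tasks, $\bar{x}$ and $\bar{y}$ are their sample means, and $\sigma_X$ and $\sigma_Y$ are their sample standard deviations. The $n-1$ normalization is likewise assumed; the correlation coefficient is the same under either the $n$ or the $n-1$ convention, provided it is applied consistently.

```latex
\begin{align}
\operatorname{Cov}(X, Y) &= \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) \\
\rho_{X,Y} &= \frac{\operatorname{Cov}(X, Y)}{\sigma_X \, \sigma_Y},
\qquad -1 \le \rho_{X,Y} \le 1
\end{align}
```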

3.6. Automated LLM Prompting System

The methodology described in the previous subsections is automated using Azure Machine Learning Studio and Python 3 code to organize the historical stock prices and financial indicators into tables, create the prompts with the correct dates and data, query LLM API endpoints, record the LLM stock price predictions, perform covariance calculations, and record the responses and calculations in tables for analysis.

Figure 1 shows the flow of data across three sections:

The first section, “Prepare Supporting Data”, generates the Stock Database (DB) by querying the StockData.org API for the selected historical prices for stocks and dates for the experiment, and also creates a database for the financial indicators data.

The second section, “Query LLMs for Prediction”, uses the data in the Stock DB and Indicator DB to programmatically construct the “task” prompts. Then, using the API keys for each LLM, which are stored securely in an Azure Key Vault, the code in an Azure Notebook calls the API for each LLM with both the “role” and “task” prompts. The stock predictions returned from these calls are stored in the Stock Predictions DB.
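The query step can be pictured as a loop over models and task prompts. The sketch below is illustrative only and makes several assumptions: each vendor API is hidden behind a hypothetical callable that takes the role and task text and returns the reply text, no real SDK or endpoint is used, and `query_all_models` is a made-up helper name rather than the code used in the study.

```python
def query_all_models(clients, role_prompt, task_prompts):
    """Send every task prompt to every model and collect the raw responses.

    clients: dict mapping a model name to a callable(role, task) -> str.
    Each callable is a hypothetical wrapper around one vendor's API.
    """
    records = []
    for model_name, ask in clients.items():
        for task_id, task in enumerate(task_prompts):
            try:
                response = ask(role_prompt, task)
            except Exception as error:  # keep going if one endpoint fails
                response = None
                print(f"{model_name} failed on task {task_id}: {error}")
            records.append(
                {"model": model_name, "task_id": task_id, "response": response}
            )
    return records


# Stand-in callables used in place of real endpoints.
def fake_model(role, task):
    return "ABC, 240.8, 2017-09-15"


def broken_model(role, task):
    raise RuntimeError("endpoint unavailable")


results = query_all_models(
    {"model_a": fake_model, "model_b": broken_model},
    role_prompt="role text",
    task_prompts=["task 1", "task 2"],
)
```

Recording a failed call as `None` instead of aborting keeps the remaining predictions aligned by model and task, which matters later when predictions are compared pairwise.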

The final section, “Analysis”, has code that uses the values from the Stock Predictions DB to calculate the covariance in multiple ways: pairwise for each model and stock, averaged for each model by industry, and averaged for each model by the country where the stock is listed. The results of the covariance calculations are reported in Section 4.
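The pairwise calculation in the “Analysis” step can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used in the study: the table layout (one row per date, one column per model), the model names, and the prices are all hypothetical, and pandas is assumed as the tabular library.

```python
import pandas as pd

# Hypothetical predicted prices for one stock: one row per date, one column per model.
predictions = pd.DataFrame(
    {
        "model_a": [100.0, 102.0, 101.0, 105.0, 107.0],
        "model_b": [100.0, 101.5, 101.0, 104.0, 106.5],
        "model_c": [100.0, 99.0, 100.5, 98.0, 97.0],
    },
    index=pd.date_range("2024-01-01", periods=5, freq="D"),
)

# Convert predicted prices to period-over-period returns; the first row has no
# previous value and is dropped.
returns = predictions.pct_change().dropna()

# Pairwise sample covariance and Pearson correlation between every pair of models.
cov_matrix = returns.cov()
corr_matrix = returns.corr()

print(cov_matrix)
print(corr_matrix)
```

In this toy data, `model_a` and `model_b` move together while `model_c` moves against them, so the matrices contain both positive and negative entries. Grouping the same calculation by industry or listing country would require additional columns that are not shown here.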

4. Results

This section reports the results of the investigation to understand the relationship (reported as covariance and correlation) between stock price predictions from large language models (LLMs) from the United States, Canada, France, and China. Investors distribute risk among a portfolio using covariance between stocks (Banton, 2023). Similarly, having a metric to measure the covariance between LLMs’ stock price predictions can help industry firms and regulatory bodies understand and reduce the systemic risk of deploying a portfolio of GenAI models across the stock trading industry.

The results of this paper do not measure the accuracy of LLMs’ stock prediction (endogenous risk to model and firm performance), only the relationship between them (exogenous risk to the industry).

The correlation is evaluated between the eight selected LLMs overall across all variables, then by each stock, by industry (automobile, telecommunications, technology, and index funds), by listed country for the stock, and by time frame (daily, weekly, monthly, quarterly, and yearly). The goal is to understand how the covariance and correlation relationships between LLMs can introduce different levels of systemic risk to the financial industry when applied to stocks, industries, listed exchange countries, and time frames. Consistently positive covariance and correlation between stock price predictions from LLMs introduces more systemic risk to the financial industry because similar price predictions from the models lead to coordinated buy/sell actions. Coordinated actions algorithmically manipulate the stock market while making the system less resilient and more fragile against shocks like policy or industry changes.

4.1. How to Read the Graphs

The values reported are interpreted as follows: A positive covariance means that the models are likely to predict stock price movement in the same direction (if model A predicts a stock price increase, model B also predicts the stock price will increase over the same time period). A negative covariance means that the models are likely to predict stock price movement in opposite directions (if model A predicts stock price will increase, model B predicts the stock price will decrease over the same time period). A value of zero covariance means the models are independent for that time period, meaning that their stock price predictions have no relationship. Similarly, the correlation coefficient expresses the strength of the relationship between +1 (completely aligned), 0 (no relationship, independent), and −1 (completely inversely related).

To read the heatmaps in this section, dark purple indicates a positive relationship between models (model A predicts stock price increase, model B also predicts increase) and light pink indicates a negative relationship (model A predicts stock price increase, model B predicts decrease).

4.2. Overall Model to Model Covariance Comparison

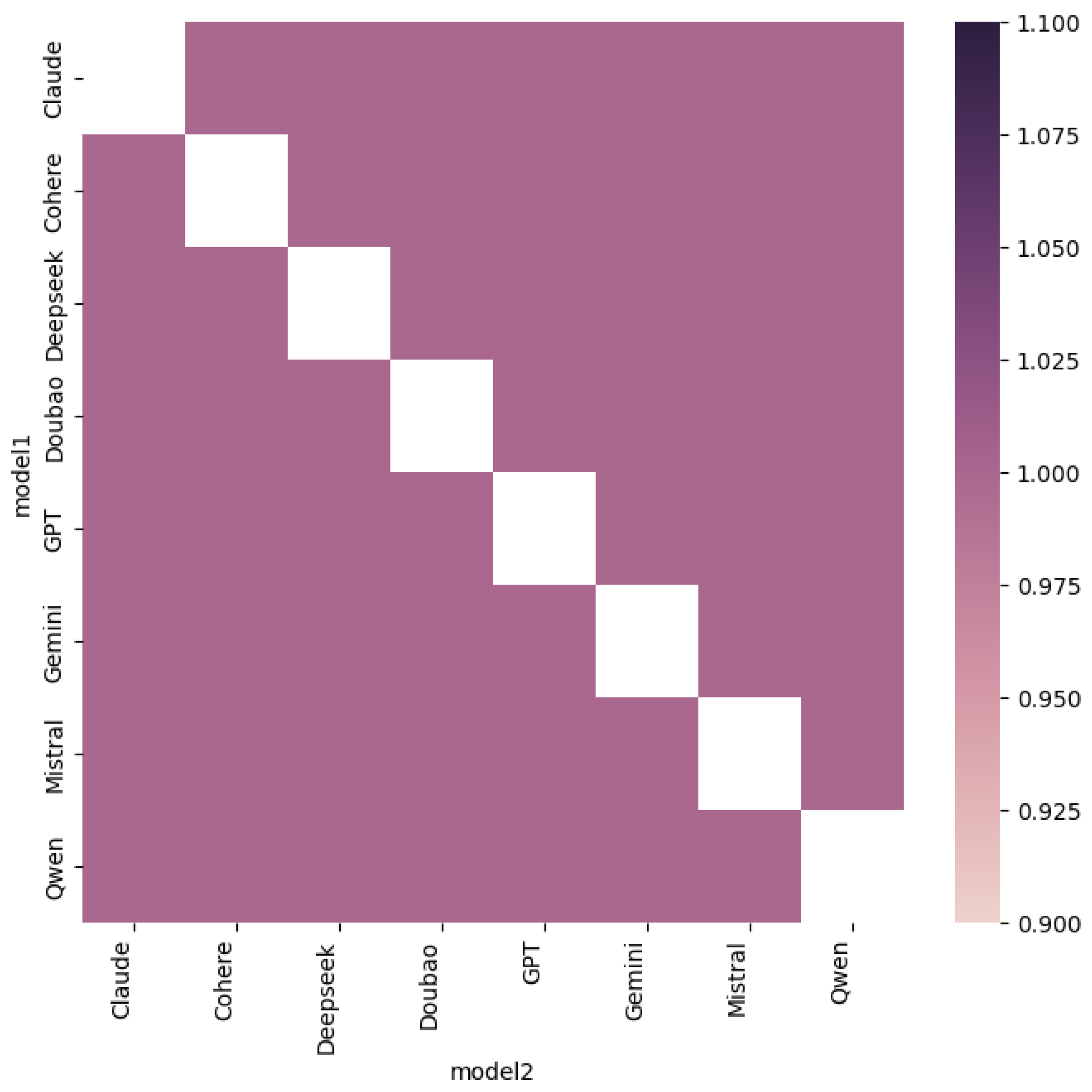

First, I evaluate the overall covariance relationship between the LLMs by comparing stock predictions across industries, time frames, and countries. The heatmap shows that the median covariance (calculated using the return across all time frames and all stocks) is positive for every pair of LLMs. Based on Figure 2, none of the models are consistently inversely covariant overall; all of the median covariances are positive (dark pink). Having observed that the models are mostly positively covariant, meaning they predict the same direction of stock price change, I then calculate the correlation coefficient to understand the strength of the positive relationship between pair-wise sets of models.

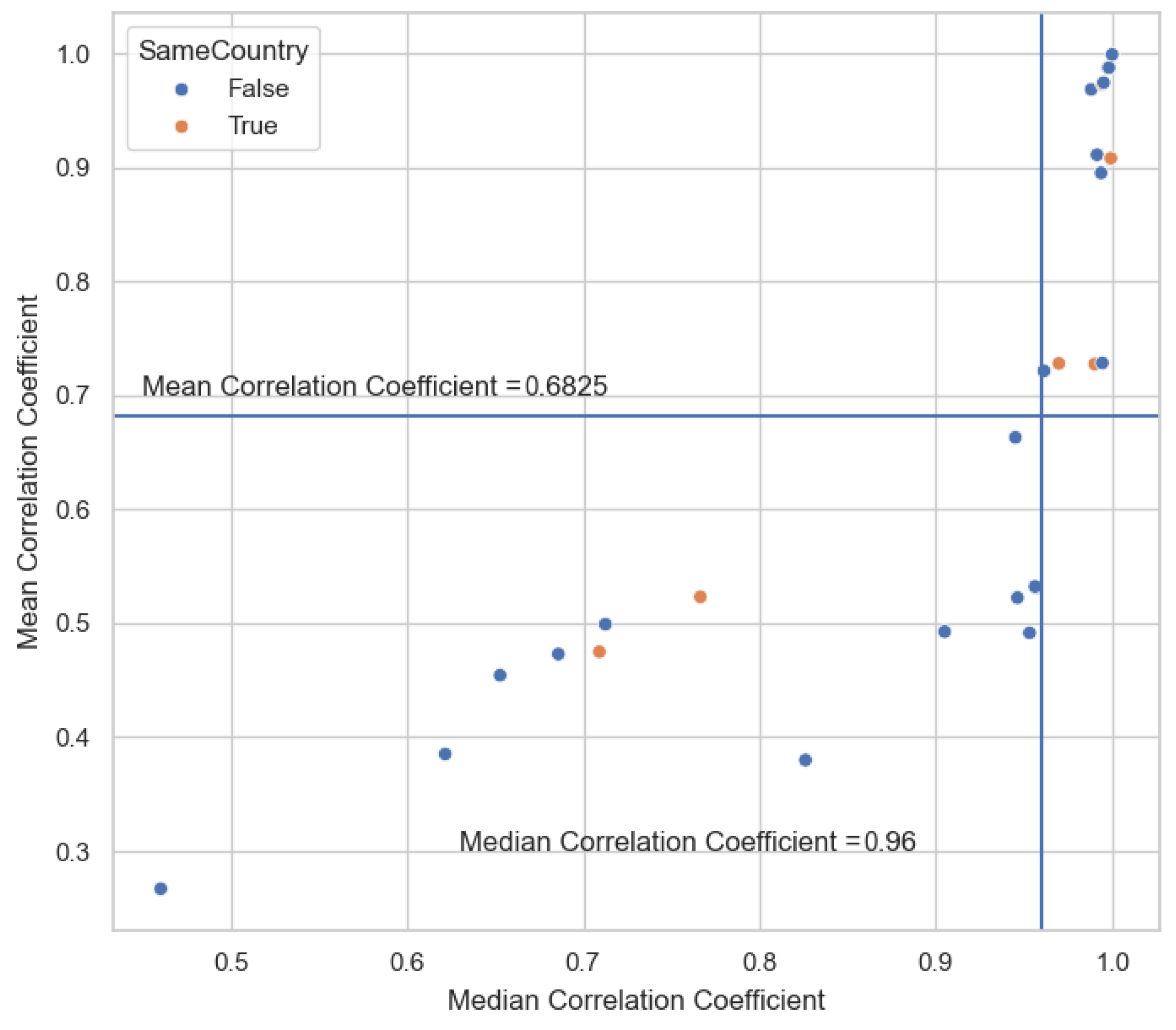

By analyzing the minimum and maximum correlation coefficient between models, I uncover a more nuanced view of the strength of the relationship between model stock price predictions. According to the median values in Figure 3, Claude has the least correlated stock price predictions with any other model. However, all of the relationships between Claude and the seven other models are still positively correlated. According to the mean correlation coefficient values, Claude, Mistral, and Qwen (in order from lowest to highest) have the weakest correlation with the predictions from other models. However, the lowest mean pair-wise model correlation is 0.27 between Claude and Mistral. Even the weakest relationship is still positively correlated.

Notably, DeepSeek and GPT have the strongest possible positive median correlation (1.0), which may give credence to allegations that DeepSeek used GPT to train its models (

Olcott & Criddle, 2025). However, the minimum correlation coefficient realized between DeepSeek and GPT is 0.13, meaning that their stock price predictions can disagree at times. In comparison, even the least similar stock price predictions for a few pairs of models (DeepSeek + Gemini, Cohere + Gemini, Cohere + DeepSeek, Cohere + Doubao, and Doubao + Gemini) are always highly positively correlated (above 0.75).

Can choosing LLMs that are developed in different countries lower the correlation between the models? Based on Figure 4, stock price predictions from LLMs developed in different countries are still positively correlated.

These results show that even among the least correlated models, in general, if one LLM predicts a stock price increase, all LLMs will predict a stock price increase. In practice, this could trigger simultaneous “buy” signals from any GenAI-run stock portfolio manager.

The rest of this chapter analyzes subsets of stock price predictions from the eight selected LLMs to determine if the correlation between models changes based on stock, industry, country where the stock is listed, or time frame.

4.3. Per Stock Comparison

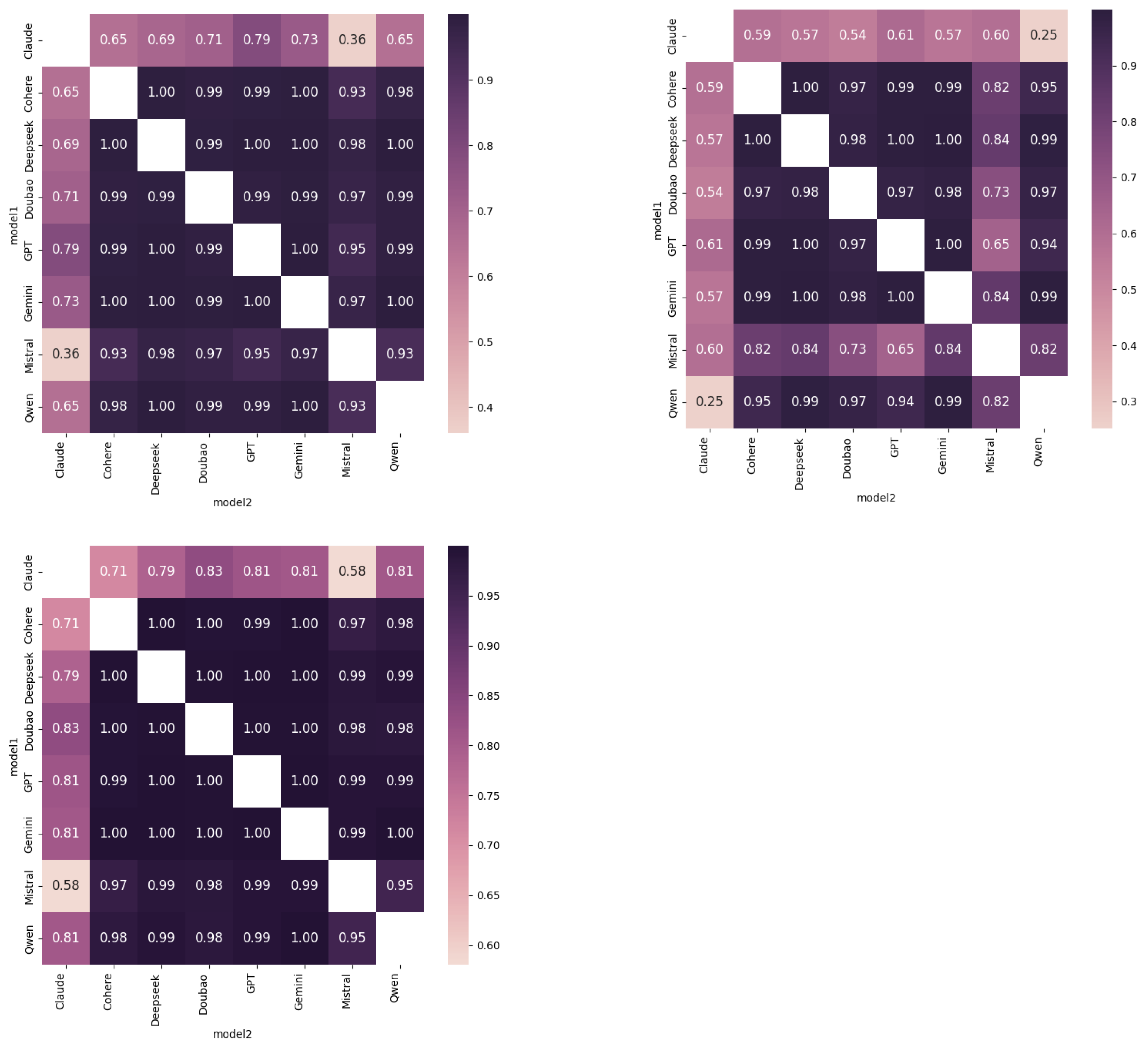

For each of the nine stocks,

Figure 5 displays the correlation between stock price predictions for each of the eight selected LLMs. As in the previous section, dark purple indicates high positive correlation and light pink indicates low or negative correlation. Across stocks, most of the model predictions are positively correlated with a few notable exceptions and trends:

For stock price predictions for AMZN (Amazon), 1211.HK (BYD), DTE.DE (Deutsche Telekom), 0941.HK (China Mobile), and T (AT&T), Claude has the weakest correlation with all other LLMs. Still, most of the correlations are positive. Only for DTE.DE does Claude have multiple 0 or near 0 correlation coefficients, which signals near independence of stock price predictions with most other models (Cohere, DeepSeek, GPT, Gemini, and Qwen).

Across the nine stocks, only one pair of models (Claude and Mistral) has a negative median correlation for the stocks IFNNY (Infineon) and MGBYY (Mercedes Benz). In both cases, the negative correlation is weak (−0.06 and −0.09, respectively). Another pair (Qwen and Mistral) has a larger negative correlation (−0.46) for stock price predictions for BABA (Alibaba). A negative median does not mean that the models are always negatively correlated, only that half of the correlations across all measured time frames were below these numbers, and half above.
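The distinction between a negative median and a consistently negative relationship can be illustrated with a short sketch. The per-time-frame values below are hypothetical and chosen only so that the median matches the −0.06 reported above; they are not the measured correlations.

```python
import statistics

# Hypothetical correlation coefficients for one pair of models on one stock,
# one value per time frame (illustrative numbers, not results from the study).
corr_by_time_frame = {
    "daily": 0.85,
    "weekly": 0.40,
    "monthly": -0.06,
    "quarterly": -0.50,
    "yearly": -0.10,
}

# The median is the middle value once the coefficients are sorted.
median_corr = statistics.median(corr_by_time_frame.values())

# Time frames in which the pair is still positively correlated.
positive_frames = [name for name, value in corr_by_time_frame.items() if value > 0]

print(median_corr)
print(positive_frames)
```

Here the median is weakly negative even though the pair is strongly positively correlated in the daily time frame, which is why the median should be read alongside the minimum and maximum values.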

Because index funds contain a collection of individual stocks, their change in price is influenced by a larger set of factors. Perhaps for this reason, there is more diversity of correlation between the models’ price predictions for index funds in comparison to individual stocks. As shown in Figure 6, Claude is still the model with the weakest correlation to the other LLMs, and Qwen also demonstrates a consistently weaker correlation, though to a lesser degree. Continuing the trend from the individual stocks analysis, Claude and Mistral have notably lower correlation with each other than with other models. In addition, Qwen and Claude have the only negative median correlation (a weak −0.05) for the S&P 500 Index (SPX). All other correlations are still positive, despite the wider spread.

This per-stock analysis of model correlation shows that even among the least correlated models, in general, if one LLM predicts a stock price increase, all LLMs will predict a stock price increase. Depending on the stock in question, only the combination of Claude + Qwen, Claude + Mistral, Mistral + Qwen could on average predict conflicting stock price movements based on their negative correlation coefficient. In practice, even these pairs may only trigger different buy or sell actions for IFNNY, MGBYY, SPX, or BABA based on the relationship between their stock price predictions.

4.4. Industry Comparison

Across industries, the median correlation relationship between models is positive and strong (above 0.7). Continuing the trend from the previous sections, as shown in Figure 7, Claude has the weakest positive correlation with the other models, with the smallest positive correlations with Mistral (0.36 in the Automobile industry) and Qwen (0.25 in the Communications industry), respectively only 55% and 46% as strong as the next weakest relationship in that industry.

Figure 8 demonstrates that the median correlation values for each industry are bimodal. All industries have a mode group between 0.9 and 1 (nearly linear relationship). Then, Technology has another modal group around 0.8, Automobile around 0.7, and Communications around 0.6. None of the LLMs have a typical negative correlation for the three industries of interest, meaning that if LLMs were used to manage even focused industry-specific stock portfolios, they would all give similar price prediction trends.

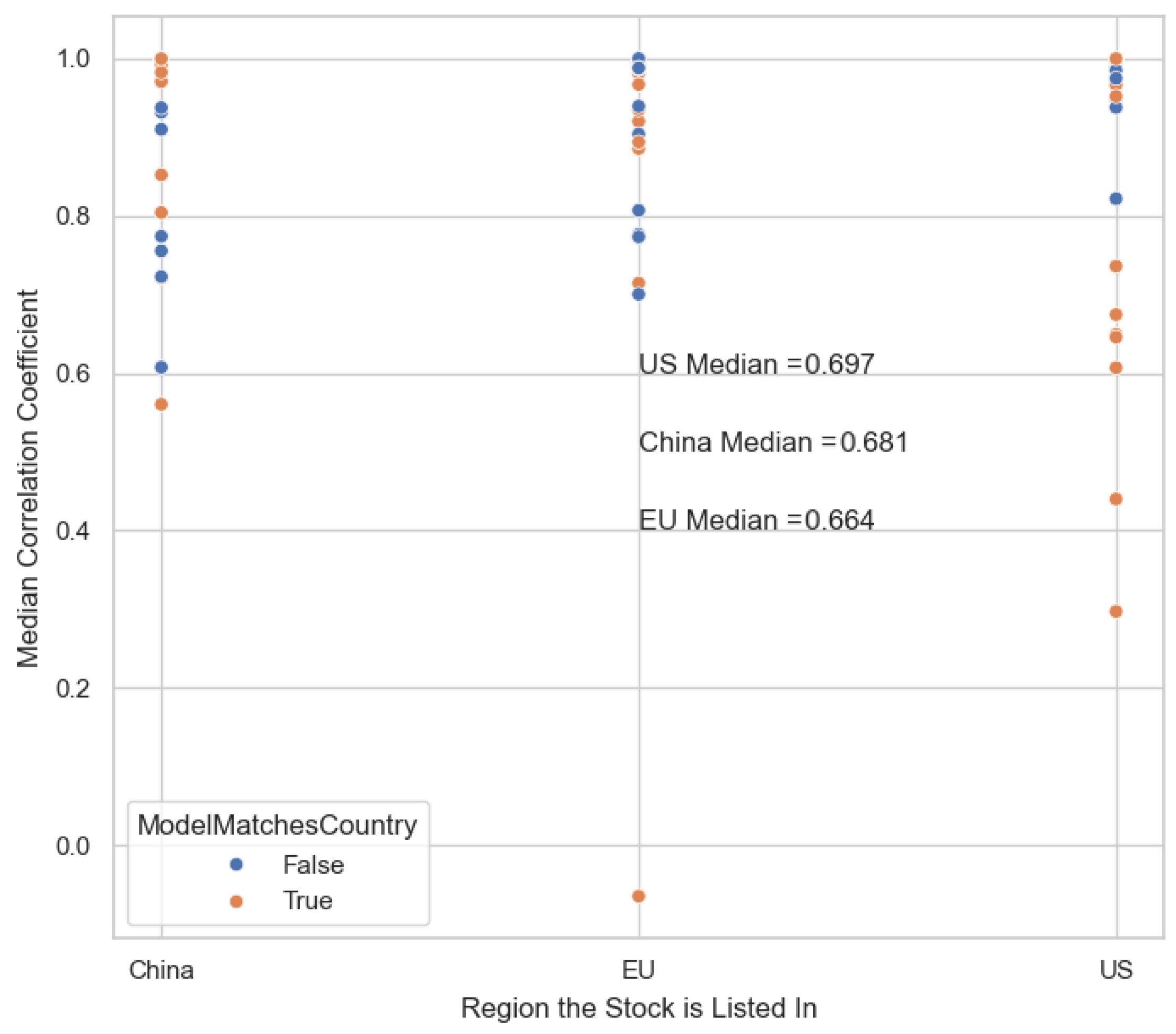

4.5. Stock Listed Region Comparison

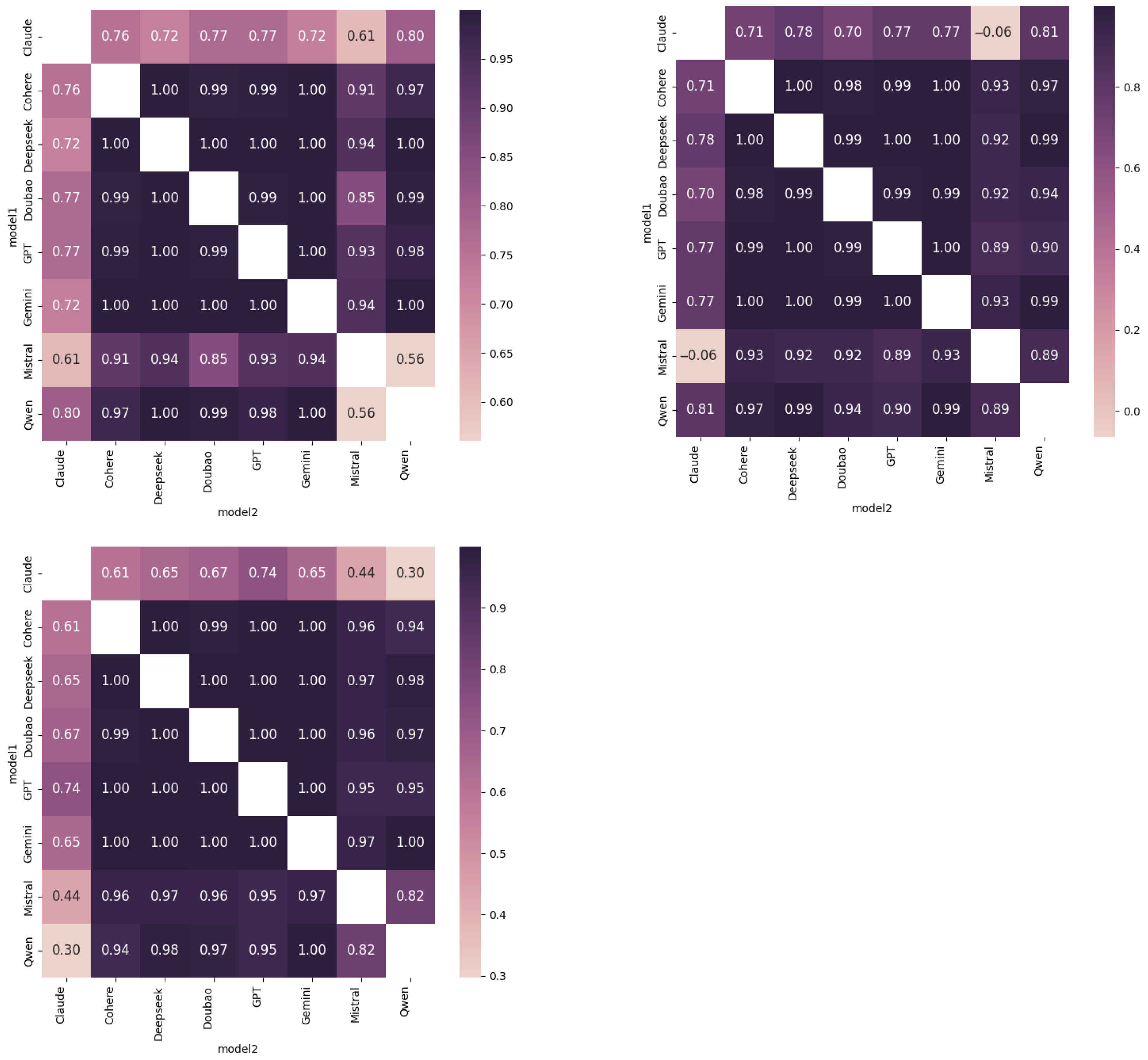

The correlation between LLM stock price predictions varies only a little within a region and is largely positive. Claude still has the weakest correlation with all other LLMs for stocks listed in China and the US, though all but one of those relationships is still positive. Except for Mistral and Claude’s relationship, which is the only median negative relationship in this section (−0.06), LLMs had the strongest positive relationship when predicting stock prices for the EU. This suggests that the stock portfolios heavily invested in European business and stock markets are most vulnerable to algorithmic market manipulation by GenAI-run stock portfolio management.

Figure 9 and Figure 10 show the distribution of median correlations across regions. Orange circles are pairs of LLMs where at least one of the models was developed in the same region as the stock is listed in. Based on the cluster of orange points around one in the “China” column, when at least one model is developed in China, that pair of models is more likely to have a high positive correlation if the stock they are predicting the price for is also listed in China. The United States has an opposite trend, where the orange markers have a wider spread among lower positive correlations. These inverse trends might be explained because the stocks and stock markets in the United States are older and have more historical data to interpret in comparison to Chinese stock markets which are only 29 years old (Shanghai and Shenzhen opened in 1990) (R. Chang, 2021).

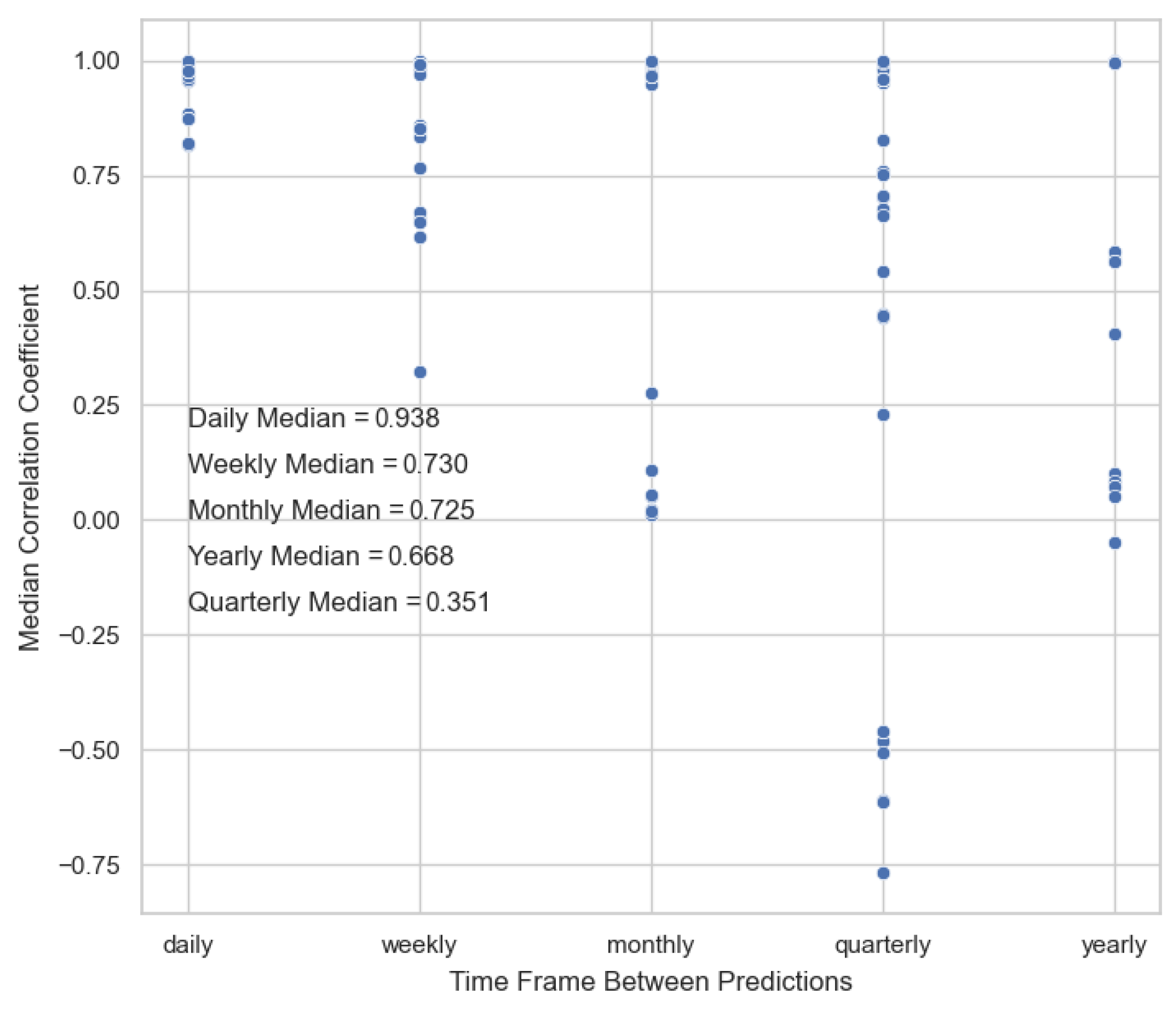

4.6. Impact of Time Frame

The time frame between predictions simulates the different time scales on which individual investors and firms can choose to trade. This research investigates the relationship between LLMs’ stock price predictions across five time frames: daily, weekly, monthly, quarterly (three months), and yearly.

As shown in Figure 11, daily predictions had the strongest positive correlation between all LLMs, with even Claude reporting only median correlations above 0.82. This can be interpreted to mean that the models are more consistent with each other in predicting stock price changes over short time frames, when fewer external factors can impact stock price. Strong positive correlation for all models in daily predictions also means that short-term trades and day-trading activities using LLMs pose a higher risk to the stability of the financial system.

Median correlations between models’ weekly predictions were also generally above 0.8 except for those involving Mistral. For all models except Qwen (0.32), Mistral still had a relatively strong positive correlation relationship (above 0.62).

Median correlations between models’ monthly predictions were generally very strongly positive (above 0.95), with the notable exception of Claude, which had all positive but weak (under 0.1) median correlations. For monthly price predictions, perhaps Claude is less accurate than the other models or has a hard time reasoning over a variable number of days (a month can be 28 to 31 days).

Median correlations between models’ quarterly predictions were more varied. Even typically strongly positively correlated pairs like Doubao and GPT are lowest here at 0.83 (in comparison to 1.0, 1.0, 0.97, and 1.0 in other time frames). There is still a strong positive correlation, but less than other time frames. In the quarterly time frame, Mistral has a medium to strong negative correlation with all other models (ranging from −0.48 to −0.77).

Figure 12 shows the largest spread for correlation is in the quarterly time frame, demonstrating that perhaps models had the least confidence and consistency in their predictions.

Finally, median correlations between models’ yearly predictions were nearly all 1.0 except for those involving Claude or Qwen. In this time frame, Claude generally still had a medium strength positive correlation to all models except Qwen. Qwen had a weak positive to weak negative median correlation to every other model (ranging from −0.05 to 0.1).

In general, correlation between the LLMs’ stock price predictions is stronger as the time frame becomes shorter.

5. Discussion

The Results section demonstrated that in general and across each subset of data by individual stock, country, industry, and time frame, the outputs of LLMs’ stock price predictions are positively covariant. This means that if one LLM predicts an increase in stock price, in most situations, most other LLMs will also predict an increase in stock price to some degree. This section discusses how the observed correlation between LLM-based stock predictions can impact the stock trading industry.

5.1. The Impact of LLMs’ Positive Correlation on Stock Trading

Modern portfolio theory (MPT) (Markowitz, 1952) uses covariance and correlation coefficients to optimize portfolio construction by quantifying the relationship between asset returns and diversifying risk. In MPT, the covariance matrix measures how stock returns move together, allowing investors to combine assets with low or negative correlations to reduce overall portfolio volatility without sacrificing expected returns (

Elton & Gruber, 1997). For instance, including uncorrelated assets (for example, pairing technology stocks with utilities) can lower portfolio risk more effectively than simply adding more securities (

Bodie et al., 2021). The correlation coefficient (ranging from −1 to +1) further refines this analysis: a coefficient near −1 indicates strong diversification potential, while +1 implies parallel movements that offer no risk reduction. For example, during economic downturns, traditional equities often decline while safe-haven assets like gold or government bonds may appreciate, providing a natural hedge (

Thuy et al., 2024).
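The role of the correlation coefficient can be made concrete with the standard textbook expression for the variance of a two-asset portfolio. The symbols are introduced here for illustration and are assumptions: $w_1$ and $w_2$ are non-negative portfolio weights summing to one, $\sigma_1$ and $\sigma_2$ are the standard deviations of the two assets' returns, and $\rho_{12}$ is their correlation coefficient.

$$
\sigma_p^2 = w_1^2 \sigma_1^2 + w_2^2 \sigma_2^2 + 2\, w_1 w_2\, \rho_{12}\, \sigma_1 \sigma_2
$$

When $\rho_{12} = +1$, this reduces to $\sigma_p = w_1 \sigma_1 + w_2 \sigma_2$, the weighted average of the individual risks, so diversification offers no reduction. When $\rho_{12} = -1$, it reduces to $\sigma_p = \lvert w_1 \sigma_1 - w_2 \sigma_2 \rvert$, which can be driven to zero by a suitable choice of weights.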

By considering the stock investment industry as its own “portfolio”, I build from MPT to use the LLMs as the assets that will impact the stability and risk of the “portfolio”. To minimize systemic risk in the stock investment industry, firms and individual traders should deploy a mix of covariant and inversely covariant LLMs when developing LLM-based stock portfolio management.

This research set out to discover which, if any, LLMs had inversely covariant outputs in stock price predictions. Instead, the research discovered that, of the eight selected LLMs (Claude, Cohere, DeepSeek, Doubao, GPT, Gemini, Mistral, and Qwen), all were positively covariant across the three sectors studied (automobile, technology, and communications). Because the predictions are positively covariant despite the diversity of the selected stocks in age, trading volume, primary product, and geographical region, I believe LLM stock price predictions would still be positively covariant even in a larger study observing more industries.

Positive covariance across industries means that if one LLM predicts an increase in stock price, in most situations, most other LLMs will also predict an increase in stock price to some degree. Not only are the models consistently positively correlated, but the strength of the correlation relationship is usually very strong (over 0.9). If one LLM gives a “buy” signal because the stock is predicted to increase, then most LLMs are also likely to give a “buy” signal. Likewise, if one LLM gives a “sell” signal because the stock price is predicted to decrease, then most LLMs are likely to also give a “sell” signal. As LLMs become influential actors in the stock market, these coordinated signals could trigger algorithmic market manipulation, creating crashes and bubbles based on similar stock price predictions.

5.2. Existing Technical Solutions Cannot Offset Systemic Risk

Portfolio managers can exploit inverse covariance or negative correlation coefficients to construct more resilient portfolios by strategically pairing assets that move in opposing directions under market stress (Markowitz, 1952), that is, pairs of stocks that are inversely correlated.

In technical systems, AI engineers can mitigate the risks of model regressions and single-point failures by employing ensemble methods, where multiple AI models either implement majority voting or select the most confident prediction as the final output, meaning that the result is not dependent on the performance of a single model (

Dietterich, 2000;

Zhou, 2012). In ensemble learning, the aggregation of diverse models reduces variance and prevents overreliance on any single predictor (

Dietterich, 2000), analogous to how holding uncorrelated stocks minimizes exposure to sector-specific downturns. Just as investors diversify across inversely correlated assets to hedge against risk, combining diverse AI models, such as neural networks, decision trees, and Bayesian classifiers, reduces reliance on any single model’s weaknesses (

Sagi & Rokach, 2018). For instance, if one model performs poorly due to data drift or adversarial attacks, others in the ensemble can compensate, maintaining system resilience without sacrificing performance (Ganaie et al., 2022). Previous research shows that ensembles improve accuracy and reduce variance by 15–30% compared to individual models (Opitz & Maclin, 1999), underscoring their role as a “diversification strategy” for AI systems. This diversification principle, whether applied to financial assets or machine learning models, demonstrates how combining uncorrelated components can create systems that are more resilient to uncertainty and variability.
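A minimal sketch of majority voting shows why ensemble diversity matters. The signals, the "true" outcomes, and the helper names `majority_vote` and `ensemble_accuracy` are all hypothetical and introduced only for illustration; this is not an implementation from the cited works.

```python
from collections import Counter


def majority_vote(signals):
    """Return the most common signal among the ensemble members."""
    return Counter(signals).most_common(1)[0][0]


def ensemble_accuracy(model_signals, truth):
    """Share of periods in which the majority vote matches the true outcome."""
    # zip(*model_signals) regroups the signals by period instead of by model.
    votes = [majority_vote(period) for period in zip(*model_signals)]
    return sum(vote == actual for vote, actual in zip(votes, truth)) / len(truth)


# Hypothetical correct signal for four periods.
truth = ["buy", "sell", "buy", "sell"]

# Diverse ensemble: each model is wrong once, but in a different period.
diverse = [
    ["sell", "sell", "buy", "sell"],  # wrong in period 0
    ["buy", "buy", "buy", "sell"],    # wrong in period 1
    ["buy", "sell", "sell", "sell"],  # wrong in period 2
]

# Perfectly correlated ensemble: every model makes the same mistake.
correlated = [["sell", "sell", "buy", "sell"]] * 3

print(ensemble_accuracy(diverse, truth))
print(ensemble_accuracy(correlated, truth))
```

Each individual model is right in three of four periods in both cases. The diverse ensemble outvotes every individual error, while the correlated ensemble repeats the shared error, so voting adds nothing when the members agree.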

Initially, this research set out to determine which set of LLMs would have inversely correlated outcomes. If a set of LLMs was consistently inversely correlated, then my recommendation would be for firms to create ensembles of these LLMs to offset the risk of algorithmic market manipulation. Alternatively, I could recommend that regulators set up a registry of the LLMs used by firms to perform stock trading and determine the optimal distribution of large language models by trading volume that should act in the market without increasing systemic risk. However, the research showed that none of the LLMs are consistently inversely correlated with each other, so no such risk-reducing ensemble model can be developed under current conditions. Therefore, reducing systemic risk requires nontechnical solutions, such as maintaining human oversight to disrupt failures or undesirable trends in AI-run systems (Raji et al., 2022).

5.3. The Relationship Between Accuracy and Positive Correlation

This research showed that five of the eight studied LLMs had median correlation close to 1 (Cohere, DeepSeek, Doubao, Gemini, and GPT). These models have strong positive correlation. When I cross-reference these models to benchmarks for mathematical reasoning, shown in Table 4, these same highly correlated models also rank higher in accuracy on benchmarks. There may be a relationship between accuracy and correlation, which could mean more accurate models are likely to predict similar changes in stock price. Because firms are more likely to choose accurate models to build their GenAI-run stock portfolio managers, the popularity of these highly correlated, accurate models could, in turn, introduce more systemic risk if there is model degradation or a sudden market shift. Future research could investigate the relationship between accuracy and correlation, and whether the relationship impacts the level of systemic risk introduced into the financial industry. If true, the risk of accidental algorithmic market manipulation could be higher when accurate models are widely deployed.

Likewise, the models that are least correlated across the scenarios of interest rank lowest on mathematical reasoning benchmarks. The source of their low correlation is not an intrinsic feature of the models that can be exploited to mitigate risks in ensemble models, but rather a byproduct of low accuracy, which could change if future model releases improve performance.

Table 4 compares each LLM’s median correlation coefficient to all other models with the model’s performance on the MATH Level 5 advanced reasoning benchmark (

Epoch AI, 2024). Models that were noted as exceptions to the high positive correlation in certain scenarios, such as Qwen and Mistral, have low MATH Level 5 scores (0.672 and 0.513, respectively). This confirms that the low positive correlation and occasional inverse correlations are more related to the models’ poor accuracy than demonstrating a true inverse relationship that can be exploited for ensemble models to reduce systemic risks.

5.4. Special Impact on Technology Stocks

After DeepSeek was released in January 2025, Nvidia, a semiconductor firm in the United States, experienced the biggest single-day loss in Wall Street history (

Saul, 2025). Companies in the technology industry, such as Microsoft, Google, and Broadcom, also suffered large stock losses because DeepSeek’s release signified a change in the geopolitical climate and global AI race (

Feakin, 2025). Afterwards, some experts claimed that the market had “overreacted”. In this scenario, GenAI-run stock investment could be partially to blame for two reasons: First, according to

Figure 8, the LLMs investigated in this research have the highest correlation (and risk of coordinated buy/sell actions) in the tech industry. Second, previous research has shown that LLMs tend to exacerbate and trigger cascading losses or gains in response to sudden stock movement (

Lin & Lobo Marques, 2024).

These two factors could mean that the same technology (LLMs) that is driving global innovation competition also has the potential for widespread negative impact on the industry that created it. Deploying LLMs for stock investment could become the 21st century Frankenstein’s Monster as we enter an era of increased geopolitical risks, rising tariffs, a semiconductor trade war, and global AI competition. In the United States and China, the benefits from large private and government investments into developing Generative AI could be wiped out by small, unexpected changes in the market that are then amplified by GenAI-run stock investment software whose coordinated action could lead to market manipulation. A sudden decline of stock prices for critical technology firms could greatly impact consumer confidence, decrease individuals’ savings and investment plans, and diminish a country’s ability to innovate in the future because of lost capital and research capacity.

5.5. Correlation Is Not Static

Just as the correlation between stocks can change over time based on historical price trends and external factors that impact stock price, LLM correlation is also not a static relationship. LLM correlation as calculated in this research is based on a set of stock price predictions given minimal prompt engineering and market knowledge through financial indicators mentioned in

Section 3. The same LLMs could display different correlation relationships if given more background information to reason on or if this experiment was replicated in a different industry like sports betting or illness diagnosis.

Within the same industry, LLM correlation can also change depending on the time frame of interest, as shown in Section 4, or based on model updates. Now that the LLM industry globally has reduced options with fewer investments into developing new foundation models in favor of application-level investment, large corporations with existing LLMs are spending more resources on improving their existing models to remain competitive. This means that the models with low correlation due to poor accuracy will likely be improved with additional training and effort. When this happens, correlation spread will reduce and model outputs for stock price predictions will become even more positively correlated. To have an up-to-date understanding of systemic risk, correlation metrics should be recalculated with each model update release, with some models releasing 15 times per year (

OpenAI, 2025).

The dynamic nature of model correlation has two main consequences: First, model correlation is dependent on training data and the industry of interest. Second, model correlation can change based on model updates, especially those that improve accuracy. These consequences signal that model correlation should be calculated separately for each industry interested in deploying LLMs while reducing systemic risk and that correlation metrics should be recalculated regularly to capture changes to model performance.

5.6. Growing Accessibility of LLM-Based Stock Investment

The emergence of large language models (LLMs) has significantly democratized access to artificial intelligence by providing intuitive, natural language interfaces that lower technical barriers to implementation (

Bommasani et al., 2022). In the financial sector, LLMs are being increasingly adopted for diverse applications including sentiment analysis of market news, automated report generation, and even algorithmic trading strategies (

S. Wu et al., 2023). With the introduction of LLMs, even low-resource firms can use commercially available LLMs to make predictions or perform investment decisions without needing to hire expensive technical engineers to create bespoke systems. Previously, the integration between quantitative trading algorithms and AI was largely performed by specialized engineers and firms with larger research, strategy, and risk management departments. Now, GenAI has democratized access to AI and more firms than before can deploy LLMs as-is.

This accessibility is further enhanced by companies like Manus AI, which eliminate traditional integration hurdles by operating directly through web browsers without requiring complex API connections (

manus, 2025). Such innovations enable even small-scale investors and financial advisors to leverage sophisticated AI capabilities that were previously only available to institutional players with substantial technical resources (

Babina et al., 2024). By allowing users to interact with financial data and execute trades through natural language prompts, no-code solutions like Manus promise to further democratize quantitative finance while potentially introducing new risks related to oversight and system robustness (

Financial Stability Board, 2024). As these tools become more prevalent, they may reshape market dynamics by giving retail traders access to capabilities that were once the exclusive domain of hedge funds and investment banks.

5.7. Future Implications of Agentic AI for Stock Investment

Agentic AI is a system built on GenAI that requires limited supervision to perform more advanced reasoning and iterative planning to autonomously solve complex, multi-step problems (

Pounds, 2025). Since 2024, companies around the world, including investment firms, have adopted agentic AI to achieve more advanced AI reasoning. However, the types of external tools and data sources that the agentic AI systems have access to depend on the connections provided through the Model Context Protocol (MCP), which has its own limitations (

Model Context Protocol, 2024). While well-resourced investment firms can afford to purchase additional data to power their agentic AI, most firms will still be using open-source or publicly available data. Because agentic AI does not automatically increase the diversity of data sources, investment firms using agentic AI can still receive highly correlated and coordinated investment decisions. Therefore, even with multi-step reasoning and planning abilities, investment decisions based on agentic AI can still introduce the significant systemic risk identified in this paper to the stock investment industry.

5.8. The Need for Policy Intervention

This research serves as an early warning sign for how large language models (LLMs) interact with and manipulate dynamic environments, like the stock market. The high correlation between LLMs’ stock price predictions across industries, countries, and time frames demonstrates the potential for GenAI-run stock portfolio managers to produce algorithmic market manipulation that could trigger stock market crashes or bubbles as the LLMs all simultaneously produce similar “buy” or “sell” signals.

Given the consistent positive correlation between LLM stock price predictions, technical mechanisms, such as firms creating ensemble models of multiple LLMs, are an insufficient and intractable solution to reduce systemic risk.

The next section discusses what cultural and regulatory mechanisms can be considered to reduce systemic, exogenous risk in the financial industry that is introduced by widespread deployment of GenAI-run stock portfolio managers. Mitigating systemic risk in the financial industry requires evaluating current systems that govern AI, identifying opportunities and gaps for improvement, and identifying mechanisms to prevent LLM-based collusion or other undesirable market manipulation. To achieve these goals, international and local governance bodies must implement policy interventions to protect critical global systems like the financial industry.

6. Recommendations

6.1. Overview of Policy Options

Each of the key stakeholders in the previous section has a vested interest in the stability of the global financial market and protecting the system from exogenous risk introduced by the deployment of large language models (LLMs) for stock trading. Because the global financial system impacts each stakeholder, each should participate within its capacity in the development of a system of regulation and policies to encourage resilience while enabling further innovation in AI within their jurisdiction.

6.1.1. Technical Standards as Norm Setters

The development of technical standards for AI and related technologies is an alternative pathway for shaping global AI governance policy and norms, especially in industries where interoperability and compliance costs present significant challenges. Technical standards can reduce fragmentation by establishing common frameworks for AI deployment, thereby lowering the high costs of cross-market compliance that currently exist (

Taeihagh, 2021). Early adopters of these standards may bear disproportionate costs in implementation, but their investment can yield long-term benefits in market efficiency and regulatory compliance across regions (

OECD, 2023). Standard-setting bodies, such as the Institute of Electrical and Electronics Engineers (IEEE) and the International Organization for Standardization (ISO), have historically created alignment across industry firms and global regions to achieve technical standards through multilateral negotiations (

Abbott & Snidal, 2000). However, global AI governance is further fragmented by the rise of competing standards, partially driven by geopolitical tensions between the U.S., EU, and China. This fragmentation mirrors the competing, fragmented technology development and supply chains caused by previous 5G and semiconductor regulations (

Cheng & Zeng, 2022).

The development of AI governance and regulation is comparable to the development of cybersecurity governance. At an international level, the United Nations Group of Governmental Experts (UNGGE) spent over 20 years developing norms for state behavior in cyberspace, continuously debating the definition of an illegal cyber operation (

CyberPeace Institute, 2023). Similarly, AI regulation for the financial industry may be delayed by parallel debates about the definitions of illegal trading behaviors, along with how to prove and attribute incidents of algorithmic collusion or manipulative high-frequency trading patterns (

Financial Stability Board, 2024). However, AI governance in the financial industry may progress comparatively quickly because there are well-established legal frameworks governing acceptable trading behaviors (

Blair, 2013). While technical standards bodies can expedite consensus on interoperability and risk thresholds, their effectiveness depends on whether major economies can set aside growing geopolitical tensions to agree on shared rules rather than diverge into competing economic systems (

De Gregorio & Radu, 2022;

Kello, 2021).

6.1.2. Recommendation Against Industry Self-Regulation

Without multi-level policy intervention, the financial sector would need to combat exogenous risk introduced by LLMs through self-regulation. However, in the financial sector, self-regulation has historically led to increased systemic risk. Without sufficient oversight, the financial sector acts on self-interest by prioritizing short-term profits over long-term stability, creating environments with moral hazard (

Acharya, 2009). Insufficient oversight enabled the risky (and reckless) lending and trading practices that led to the 2008 financial crisis (

National Commission on the Causes of the Financial and Economic Crisis, 2011). Self-regulation methods often lack industry-wide enforcement mechanisms and are vulnerable to lobbying efforts that produce weak standards (

Blair, 2013). Over-reliance on self-regulation can amplify, rather than mitigate, exogenous risk in a trading sector already made more fragile by widespread AI deployment.

6.1.3. Recommendation Against Prohibition

Policies that prohibit AI development and deployment in the stock trading market would reinforce global inequalities in the short term and also be ineffective in risk management in the long term. Historical examples of near-prohibition legislation are the nuclear test ban (

CTBTO Preparatory Commission, 1996) and nuclear arms prohibition (

Arms Control Association, 2024). These policies enabled large, wealthy countries like the US and Russia to continue to own nuclear weapons within limitations but prevented similar investment in nuclear technology from smaller countries with fewer resources that might have developed them later. If parallel prohibitory legislation were enacted against AI, emerging economies outside of North America, Europe, and China would lose their ability to compete through innovation in AI, leaving them further behind in the digital divide (

Mulgan, 2015). If interpreted as an attempt to consolidate technological and geopolitical power, international AI prohibitions could also exacerbate geopolitical relations already strained by tariff wars and supply chain decoupling (

Lee, 2018).

In addition to the negative impact on international relations, outright bans on AI use are unlikely to succeed. Just as covert nuclear programs were developed outside of the nuclear arms control treaties, AI would likely continue to be used and developed for stock trading but would go unreported. Advocates for a temporary pause of AI development in the US (

Jyoti Narayan & Mukherjee, 2023) were unsuccessful and were undermined when a main proponent of the pause, Elon Musk, later launched his own GenAI venture, xAI (

Hammond, 2023). Instead of preventing risks, prohibitions would encourage covert and unregulated deployment of AI.

6.1.4. Recommendation Against Over-Reliance on International Organizations

In isolation, international organizations cannot effectively govern the use of GenAI in stock trading because they lack enforcement mechanisms. Without state-level policies that align with the standards and international law set by international organizations, the standards lack actionable impact. The OECD and UN have both released responsible AI development principles (

OECD, 2023;

UN, 2022), but with limited impact on the commercial development of AI systems because policy and research fields of AI are disconnected (

Zeigermann & Ettelt, 2023).

The 2023 Bletchley Park AI Safety Summit showed promise with its multilateral declaration on frontier AI risks (

AI Safety Summit 2023: The Bletchley Declaration, 2023). However, subsequent summits revealed fractures, as seen when the US and UK refused to sign the agreement at the 2025 Paris summit based on disagreement on risk prioritization (

Tremayne-Pengelly, 2025). Across sectors, international organizations are hindered by lack of support, inconsistent funding, and declining faith in their ability to produce policy outcomes. For example, nearly all of the UN Sustainable Development Goals have shown limited progress or have regressed despite years of investment (

UN, 2024). Low confidence in international organizations has also been exacerbated by declining US funding as it shifts towards protectionism (

Johnstone & Lincoln, 2022), leaving AI governance without the credibility that comes from the support of a large, powerful country. Over-reliance on international organizations to lead AI governance will produce policy theater rather than enforceable regulation.

6.1.5. Benefits of Multi-Level Policy Intervention

To avoid these three policy pitfalls, I recommend multi-level policy intervention. A multi-level approach can build on the AI development norms of transparency, safety, and security set by international organizations like the UN or the AI Safety Summit series. This paper recommends collaboration between the private and public sectors. In the private sector, industry-specific norms are set by industry organizations, such as the European Systemic Risk Board (ESRB) and Financial Stability Board (FSB), while investment firms comply with the norms to increase their own resilience and commercial differentiation. At the same time, the public sector should enact regulations that give enforcement bodies like the SEC the capacity to levy fines and punishments against non-compliant investment firms. With this multi-level approach, the risk of AI deployment in stock trading can be mitigated.

6.2. Recommended Private and Public Policy Interventions

Private companies, states, and international organizations struggle to find a sustainable balance between enabling innovation and encouraging the development of GenAI systems that are transparent, fair, and secure. Each government’s policies reflect its national priorities, such as protecting consumer privacy, developing innovation ecosystems, or earning geopolitical power, among other competing priorities. In addition, though the financial market is a global system, each jurisdiction governs the use of GenAI with a different level of oversight, reporting requirements, and enforcement capabilities. The result is the inconsistent GenAI governance landscape described in this section.

The following policy recommendations are based on the benefits of multi-level policy intervention in comparison to self-regulation, prohibition, or reliance on international law alone. The policy recommendations also assume collaboration between the public and private sectors in each of the regions investigated in the technical portion of this research (the United States, Europe, and China). Any policy enacted to govern the use of Generative AI in stock trading should enable local enforcement bodies to levy fines and increase staffing to expand capacity for audits.

6.2.1. Intervention by Industry Organizations

Industry organizations like the Financial Stability Board (FSB) should leverage their soft power to establish norms for GenAI models used in stock trading. These organizations can host Responsible AI training for member firms, develop risk mitigation frameworks, define principles for GenAI in stock trading, and evangelize best practices for risk monitoring and coordinated crisis response if models have an adverse impact on stock trading stability. As neutral third parties, these industry organizations can build on existing guidelines from the UN, OECD AI Principles, and AI Safety Summits while creating industry-specific definitions of transparency, fairness, and security designed for the finance sector.

For example, transparency could be defined as requiring trading firms to inform clients when their investment portfolio is managed by GenAI, disclosing how GenAI models make and execute trade decisions, and explaining potential tax implications of automated trading in plain language. Fairness standards could build on the OECD’s AI fairness standards (

OECD, 2024) to disincentivize algorithmic discrimination, such as offering lower-quality or less accurate models to low-fee clients. Finally, AI security can be encouraged if industry organizations mandate compliance with encryption and data processing safeguards for member firms, as is currently carried out with initiatives like the (

Financial Stability Board, 2025).

Using these tactics, industry organizations can encourage member firms to follow standards to increase stability in the stock trading market even after the widespread deployment of GenAI (

George et al., 2023).

6.2.2. Intervention by Investment Firms

Currently, investment firms, such as Bridgewater and Harvest Fund, differentiate themselves in the market with their unique trading strategies and cultures. While all firms trade according to clients’ risk appetite, some firms invest more heavily in sectors, such as energy or transportation, or focus on different time horizons. These heterogeneous trading strategies currently used by investment firms encourage stability and reduce systemic risk in the stock trading market because they discourage coordinated trading actions among large firms that have unique goals (

Gai et al., 2011). As investment firms make the transition to GenAI-run investment portfolios, firms should digitize their unique firm cultures and trading strategies as they train AI models as a method to reduce systemic risks in the sector. Just as new hires are socialized into a firm’s mission, GenAI models should be trained on proprietary datasets reflecting distinct investment philosophies, risk tolerances, and sector preferences. Larger investment firms with more resources should also allocate a portion of research and development funds to work with academics to further develop culture-preserving training processes for LLMs (

C. Li et al., 2024;

Tenzer et al., 2024).
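One way an investment firm or industry organization might check whether strategy heterogeneity survives the transition to GenAI is to track how closely firms' trade signals move together. The sketch below is an assumption-laden illustration, not a method used in this paper: the firm names and signal values are hypothetical, signals are coded as +1 (buy) and -1 (sell), and the mean pairwise Pearson correlation stands in as a simple proxy for coordination.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("sequences must have equal length of at least 2")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def mean_pairwise_correlation(signals):
    """Average Pearson correlation over all pairs of firms' signal series."""
    pairs = list(combinations(signals.values(), 2))
    if not pairs:
        raise ValueError("need signals from at least two firms")
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical daily signals: +1 = buy, -1 = sell.
    signals = {
        "firm_a": [1, -1, 1, 1, -1],
        "firm_b": [1, -1, 1, 1, -1],   # identical to firm_a
        "firm_c": [-1, 1, -1, -1, 1],  # opposite of firm_a
    }
    print(mean_pairwise_correlation(signals))
```

A value near 1 would indicate that firms are issuing nearly identical signals, the condition under which simultaneous buy or sell decisions become a concern. Values near zero or below suggest that distinct strategies are being preserved.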

6.2.3. Current and Recommended Policy in the United States

In the United States, the Securities and Exchange Commission (SEC) has oversight and enforcement capabilities concerning AI-run investment portfolios. Part of the SEC’s purpose is to prevent market manipulation by automated systems and monitor compliance with existing federal financial laws. The Biden administration’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence emphasized AI accountability, requiring financial firms to document and audit AI decisions (

Executive order on the safe, secure, and trustworthy development and use of artificial intelligence, 2023). Like the EU AI Act, President Biden’s Executive Order focuses on mitigating endogenous risk for specific AI use cases.

Since President Trump overturned Biden’s order (

Fact sheet: President Donald J. Trump takes action to enhance America’s AI leadership, 2025), the United States again lacks a comprehensive national policy regulating AI development. This shift away from regulation in favor of rapid (potentially high-risk) innovation requires self-governance in the financial industry, a sector discredited following the 2008 financial crisis in the United States. The 2008 financial crisis demonstrated the inadequate regulation, oversight, and risk management in trading firms, which led the SEC and CFTC to adjust regulations (

Cheng & Zeng, 2022). In the private sector, the Financial Stability Board (FSB) led industry self-regulation by creating initiatives with firms to develop best practices for financial models based on AI (

Financial Stability Board, 2024). This year, a 10-year ban on state-level regulation of AI was proposed but failed to pass the Senate (

Ochs & Zagger, 2025). Even though the ban failed, without federal AI regulation to manage systemic risk, AI governance depends on a patchwork of state and local legislation, such as California’s regulation for AI training (

Kourinian, 2024), or relies on re-interpretations of previous regulations to fit novel AI use cases.

American technology companies have adopted their own internal responsible AI practices in attempts at self-regulation. For example, Microsoft (

Microsoft, 2024), Meta (

Connect 2024: The responsible approach we’re taking to generative AI, 2025), OpenAI (