Abstract

Intraday high-frequency data of stock returns exhibit not only typical characteristics (e.g., volatility clustering and the leverage effect) but also a cyclical pattern of return volatility that is known as intraday seasonality. In this paper, we extend the stochastic volatility (SV) model to such intraday high-frequency data and develop an efficient Markov chain Monte Carlo (MCMC) sampling algorithm for Bayesian inference of the proposed model. Our modeling strategy is two-fold. First, we model the intraday seasonality of return volatility as a Bernstein polynomial and estimate it simultaneously with the stochastic volatility. Second, we incorporate skewness and excess kurtosis of stock returns into the SV model by assuming that the error term follows a family of generalized hyperbolic distributions, including variance-gamma and Student’s t distributions. To improve the efficiency of the MCMC implementation, we apply an ancillarity-sufficiency interweaving strategy (ASIS) and generalized Gibbs sampling. As a demonstration of our new method, we estimate intraday SV models with 1 min return data of a stock price index (TOPIX) and conduct model selection among various specifications with the widely applicable information criterion (WAIC). The results show that the SV model with the skew variance-gamma error is the best among the candidates.

1. Introduction

It is well documented that (a) probability distributions of stock returns are heavy-tailed (both tails of the probability density function decay to zero much more slowly than those of the normal distribution, and as a result, the kurtosis of the distribution exceeds 3), (b) they are often asymmetric around the mean (the skewness of the distribution is either positive or negative), (c) they exhibit volatility clustering (positive autocorrelation in the day-to-day variance of returns) and (d) they exhibit the leverage effect (the current volatility and the previous return are negatively correlated, so that downturns in the stock market tend to precede sharper spikes in volatility). In financial risk management, it is imperative to develop a statistical model that can capture these characteristics of stock returns, because they are thought to be related to steep drops and rebounds in stock prices during periods of financial turmoil. Without factoring them into risk management, financial institutions might unintentionally take on higher risk and, as a result, face grave consequences, as was observed during the Global Financial Crisis.

As a time-series model with the aforementioned characteristics, a family of models called the stochastic volatility (SV) model has been developed in the field of financial econometrics. The standard SV model is a simple state-space model in which the measurement equation specifies the distribution of stock returns with a time-varying variance (volatility) and the system equation is an AR(1) process of the latent log volatility. In the standard setting, both measurement and system errors are assumed to be Gaussian and negatively correlated in order to incorporate the leverage effect into the model. The standard SV model can explain three stylized facts (a heavy-tailed distribution, volatility clustering and the leverage effect), but it cannot make the distribution of stock returns asymmetric. Furthermore, although in theory the standard SV model incorporates the heavy-tail behavior of stock returns, many empirical studies have demonstrated that it is insufficient to explain extreme fluctuations of stock prices caused by large shocks in financial markets.

The SV model was pioneered by Taylor (1982), and numerous studies related to it have been conducted since. Jacquier et al. (1994, 2004) introduced Markov chain Monte Carlo (MCMC) algorithms for the Bayesian analysis of SV models, and Ghysels et al. (1996) survey and develop statistical inference for the SV model, including a Bayesian approach. Building on the plain-vanilla SV model, researchers have developed numerous variants designed to capture all of these aspects of stock returns sufficiently well. A direct way to introduce a more heavy-tailed distribution into the SV model is to assume that the error term of the measurement equation follows a distribution with much heavier tails than the normal distribution. The Student’s t distribution is a popular choice (Berg et al. 2004; Omori et al. 2007; Nakajima and Omori 2009; Nakajima 2012 among others). In the literature, asymmetry in stock returns is handled by assuming that the error term follows an asymmetric distribution (Nakajima and Omori 2012; Tsiotas 2012; Abanto-Valle et al. 2015 among others). In particular, the generalized hyperbolic (GH) distribution proposed by Barndorff-Nielsen (1977) has recently drawn increasing attention among researchers (e.g., Nakajima and Omori 2012), since it encompasses a broad family of heavy-tailed distributions such as the variance-gamma and Student’s t distributions, as well as their skewed variants such as the skew variance-gamma and skew Student’s t distributions.

As an alternative to the SV model, the realized volatility (RV) model (e.g., Andersen and Bollerslev 1997, 1998) is often applied to the evaluation of daily volatility. A naive RV estimator is defined as the sum of squared intraday returns. It converges to the daily integrated volatility as the time interval of returns becomes shorter. Due to this property, RV is well suited to foreign exchange markets, which operate continuously 24 h a day, though this may not be the case for stock markets. Most stock markets close at night, and some of them, including the Tokyo Stock Exchange, have lunch breaks when no transactions take place. It is well known that the naive RV estimator is biased for such stock markets. Nonetheless, since RV is a convenient tool for volatility estimation, researchers have developed various improved estimators of RV as well as robust estimators of its standard error. For example, Mykland and Zhang (2017) proposed a general nonparametric method called the observed asymptotic variance for assessing the standard error of RV.

Traditionally, empirical studies with the SV model as well as the RV model focused on the daily volatility of asset returns. However, the availability of high-frequency tick data and the advent of high-frequency trading (HFT), a general term for algorithmic trading that makes full use of high-performance computing and high-speed communication technology, have shifted the focus of research on volatility from close-to-close daily volatility to intraday volatility over very short intervals (e.g., 5 min or shorter). This shift paved the way for a new type of SV model. In addition to the traditional stylized facts on daily volatility, intraday volatility is known to exhibit a cyclical pattern during trading hours. On a typical trading day, volatility tends to be high immediately after the market opens and gradually declines toward the middle of the trading hours. In the late trading hours, volatility rises again as the closing time nears. This U-shaped pattern in volatility is called intraday seasonality in the literature (see Chan et al. 1991 among others). Although it is crucial to take intraday seasonality into consideration when estimating any intraday volatility model, only a few studies (e.g., Stroud and Johannes 2014; Fičura and Witzany 2015a, 2015b) explicitly incorporate it into their volatility models.

In this paper, we propose to embed intraday seasonality directly into the SV model by approximating the U-shaped seasonal pattern with a linear combination of Bernstein polynomials. In order to capture skewness and excess kurtosis in high-frequency stock returns, we employ two distributions (variance-gamma and Student’s t) and their skewed variants (skew variance-gamma and skew Student’s t) from the family of GH distributions as the distribution of stock returns in the SV model. Complicated SV models are generally difficult to estimate efficiently in their primitive form, and numerous studies have addressed this problem. Omori and Watanabe (2008) introduce a block sampler for asymmetric SV models, which samples disturbances from their conditional posterior distribution simultaneously. As another approach to efficient computation, Virbickaite et al. (2019) designed a sequential Monte Carlo (SMC) algorithm for a Bayesian semi-parametric SV model. The ancillarity-sufficiency interweaving strategy (ASIS) proposed by Yu and Meng (2011) is highly effective in improving the sampling efficiency of MCMC. We discuss ASIS in detail in Section 2. Since the proposed SV model is analytically intractable, we develop an efficient MCMC sampling algorithm for full Bayesian estimation of all parameters and state variables (latent log volatilities in our case) in the model.

The rest of this paper is organized as follows. In Section 2, we introduce a reparameterized Gaussian SV model with leverage and intraday seasonality and derive an efficient MCMC sampling algorithm for its Bayesian estimation. In addition, we show the conditional posterior distributions and prepare for application of ASIS. In Section 3, we extend the Gaussian SV model to the case of variance gamma and Student’s t error as well as their skewed variants. In Section 4, we report the estimation results of our proposed SV models with 1 min return data of TOPIX. Finally, conclusions are given in Section 5.

2. Stochastic Volatility Model with Intraday Seasonality

Consider the log difference of a stock price in a short interval (say, 1 or 5 min). We divide trading hours evenly into T periods and normalize them so that the length of the trading hours is equal to 1; that is, the length of each period is and the time stamp of the t-th period is . Note that the market opens at time 0 and closes at time 1 in our setup. Let denote the stock return in the t-th period (at time in the trading hours) and consider the following stochastic volatility (SV) model of with intraday seasonality:

and

It is well known that the estimate of the correlation coefficient is negative in most stock markets. This negative correlation is often referred to as the leverage effect. Note that the stock volatility in the t-th period (the natural logarithm of the conditional standard deviation of ) is

where is the filtration that represents all available information at time . Hence, the stock volatility in the SV model (1) is decomposed into two parts: a linear combination of covariates and the unobserved AR(1) process . In this paper, we regard as the intraday seasonal component of the stock volatility, though it can be interpreted as any function of covariates in a different situation. On the other hand, is supposed to capture volatility clustering. We call the latent log volatility since it is unobservable.
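To make the decomposition concrete, the Python sketch below simulates returns from an SV model of this type. It is a hypothetical illustration, not a transcription of equation (1): it takes from the text only that the log conditional standard deviation is the seasonal term plus the latent log volatility, and it assumes that h_t follows a zero-mean stationary AR(1) process h_{t+1} = φh_t + η_t, that (ε_t, η_t) are bivariate normal with correlation ρ, that the time stamps are t/T, and that the seasonal function is an arbitrary U-shape. All parameter values are made up.

```python
import numpy as np

def simulate_sv_seasonal(T, phi, sigma, rho, seasonal, seed=0):
    """Simulate y_t = exp(s(tau_t) + h_t) * eps_t (hypothetical SV sketch).

    Assumptions for illustration: tau_t = t / T, h_{t+1} = phi * h_t + eta_t,
    Var(eps_t) = 1, Var(eta_t) = sigma**2, Corr(eps_t, eta_t) = rho.
    """
    rng = np.random.default_rng(seed)
    tau = np.arange(1, T + 1) / T                      # normalized time stamps in (0, 1]
    cov = np.array([[1.0, rho * sigma], [rho * sigma, sigma**2]])
    eps, eta = rng.multivariate_normal(np.zeros(2), cov, size=T).T
    h = np.empty(T)
    h[0] = rng.normal(0.0, sigma / np.sqrt(1.0 - phi**2))   # draw from the stationary law
    for t in range(T - 1):
        h[t + 1] = phi * h[t] + eta[t]                 # eta_t is correlated with eps_t: leverage
    log_sd = seasonal(tau) + h                         # seasonal part + latent log volatility
    return tau, h, np.exp(log_sd) * eps

# Hypothetical U-shaped seasonal component: higher near the open and the close.
u_shape = lambda tau: 1.5 * (tau - 0.5) ** 2 - 0.1
tau, h, y = simulate_sv_seasonal(T=150, phi=0.95, sigma=0.2, rho=-0.4, seasonal=u_shape)
```

With ρ < 0, a negative return shock ε_t tends to coincide with a positive η_t and hence a higher h_{t+1}, which is the leverage effect described in the Introduction.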

Although the intraday seasonal component is likely to be a U-shaped function of time stamps (the stock volatility is higher right after the opening or near the closing, but it is lower in the middle of the trading hours), we have no information about the exact functional form of the intraday seasonality. To allow a flexible functional form, we assume that is a Bernstein polynomial

where is called a Bernstein basis polynomial of degree n:

According to the Weierstrass approximation theorem, the Bernstein polynomial (2) can approximate any continuous function on as n goes to infinity. In practice, however, the number of observations T is finite. Thus, we need to choose a finite n via a model selection procedure. We will discuss this issue in Section 4.
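The Bernstein basis and its approximation property can be checked numerically. The sketch below uses the standard definition b_{k,n}(τ) = C(n, k) τ^k (1 − τ)^(n−k), k = 0, 1, ..., n. In the model the coefficients are estimated, so the columns returned by `bernstein_basis` play the role of covariates; `bernstein_approx` uses the classical coefficients f(k/n) only to illustrate the convergence behind the approximation theorem. Both function names are ours.

```python
import numpy as np
from scipy.special import comb

def bernstein_basis(tau, n):
    """(len(tau), n + 1) matrix with entries b_{k,n}(tau) = C(n, k) tau**k (1 - tau)**(n - k)."""
    tau = np.asarray(tau, dtype=float)[:, None]
    k = np.arange(n + 1)[None, :]
    return comb(n, k) * tau**k * (1.0 - tau) ** (n - k)

def bernstein_approx(f, tau, n):
    """Classical Bernstein approximation of f on [0, 1] with coefficients f(k / n)."""
    coef = f(np.arange(n + 1) / n)
    return bernstein_basis(tau, n) @ coef

tau = np.linspace(0.0, 1.0, 151)
X = bernstein_basis(tau, n=5)
print(np.allclose(X.sum(axis=1), 1.0))        # the basis functions sum to one
f = lambda x: np.abs(x - 0.5)                  # continuous but non-smooth U-shape
for n in (5, 10, 50):
    print(n, np.max(np.abs(bernstein_approx(f, tau, n) - f(tau))))   # max error shrinks with n
```

Because the basis functions sum to one at every τ, a separate intercept would be perfectly collinear with the full set of basis columns.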

Although the parameterization of the SV model in (1) is widely applied in the literature, we propose an alternative parameterization that facilitates MCMC implementation in non-Gaussian SV models. By replacing the covariance matrix in (1) with

we obtain an alternative formulation of the SV model:

Since in (4) the variance of is no longer equal to one, the interpretation of and in (4) is slightly different from the original one in (1). Nonetheless, the SV model (4) has essentially the same characteristics as (1). Since the correlation coefficient in (3) is

the sign of always coincides with the correlation coefficient and the leverage effect exists if . To distinguish in (4) from the correlation parameter in (1), we call the leverage parameter in this paper.

Note that the inverse of (3) is

and the determinant of (3) is . Using

we can easily show that the SV model (4) is equivalent to

where

In the alternative formulation of the SV model (5), we can interpret as a common shock that affects both the stock return and the log volatility and as an idiosyncratic shock that affects only.
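The claim that the sign of the leverage parameter always matches the correlation can be checked in a simple common-shock setup. The sketch assumes, for illustration only, that the return shock is u_t = γη_t + ξ_t with η_t ~ N(0, σ²) and ξ_t ~ N(0, 1) independent. Under that assumption Corr(u_t, η_t) = γσ/√(1 + γ²σ²), whose sign is that of γ, and Var(u_t) = 1 + γ²σ², which is not equal to one. This is our hypothetical illustration; the exact form of (3) through (5) may differ.

```python
import numpy as np

def implied_correlation(gamma, sigma):
    """Corr(u, eta) for u = gamma * eta + xi, eta ~ N(0, sigma**2), xi ~ N(0, 1) independent."""
    return gamma * sigma / np.sqrt(1.0 + gamma**2 * sigma**2)

rng = np.random.default_rng(1)
gamma, sigma, n = -0.8, 0.3, 200_000
eta = rng.normal(0.0, sigma, n)    # common shock: enters both the return and the log volatility
xi = rng.normal(0.0, 1.0, n)       # idiosyncratic shock: enters the return only
u = gamma * eta + xi
print(implied_correlation(gamma, sigma), np.corrcoef(u, eta)[0, 1])
print(u.var())                      # close to 1 + gamma**2 * sigma**2
```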

The likelihood for the SV model (5) given the observations , and the latent log volatility is

where

and . Since follows a stationary AR(1) process, the joint probability distribution of is , where

is a tridiagonal matrix, and it is positive definite as long as . Thus, the joint p.d.f. of is

The prior distributions for in our study are

Then the joint posterior density of for the SV model (5) is

where is the prior density of the parameters in (10).
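The tridiagonal precision matrix of a stationary AR(1) process, mentioned in the joint distribution of the latent log volatility above, can be verified numerically. The sketch assumes a zero-mean AR(1) process h_{t+1} = φh_t + η_t with η_t ~ N(0, σ²) and writes the precision matrix explicitly; the paper's symbol for this matrix and its scaling by σ² may differ.

```python
import numpy as np

def ar1_precision(n, phi, sigma):
    """Tridiagonal precision matrix of (h_1, ..., h_n), n >= 2, for a zero-mean
    stationary AR(1) process h_{t+1} = phi * h_t + eta_t, eta_t ~ N(0, sigma**2)."""
    diag = np.full(n, 1.0 + phi**2)
    diag[0] = diag[-1] = 1.0                       # first and last states
    off = np.full(n - 1, -phi)
    return (np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)) / sigma**2

def ar1_covariance(n, phi, sigma):
    """Stationary covariance sigma**2 * phi**|i - j| / (1 - phi**2)."""
    lags = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    return sigma**2 * phi**lags / (1.0 - phi**2)

Q = ar1_precision(6, phi=0.9, sigma=0.2)
print(np.allclose(Q @ ar1_covariance(6, 0.9, 0.2), np.eye(6)))   # True
print(np.all(np.linalg.eigvalsh(Q) > 0))                          # True: positive definite for |phi| < 1
```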

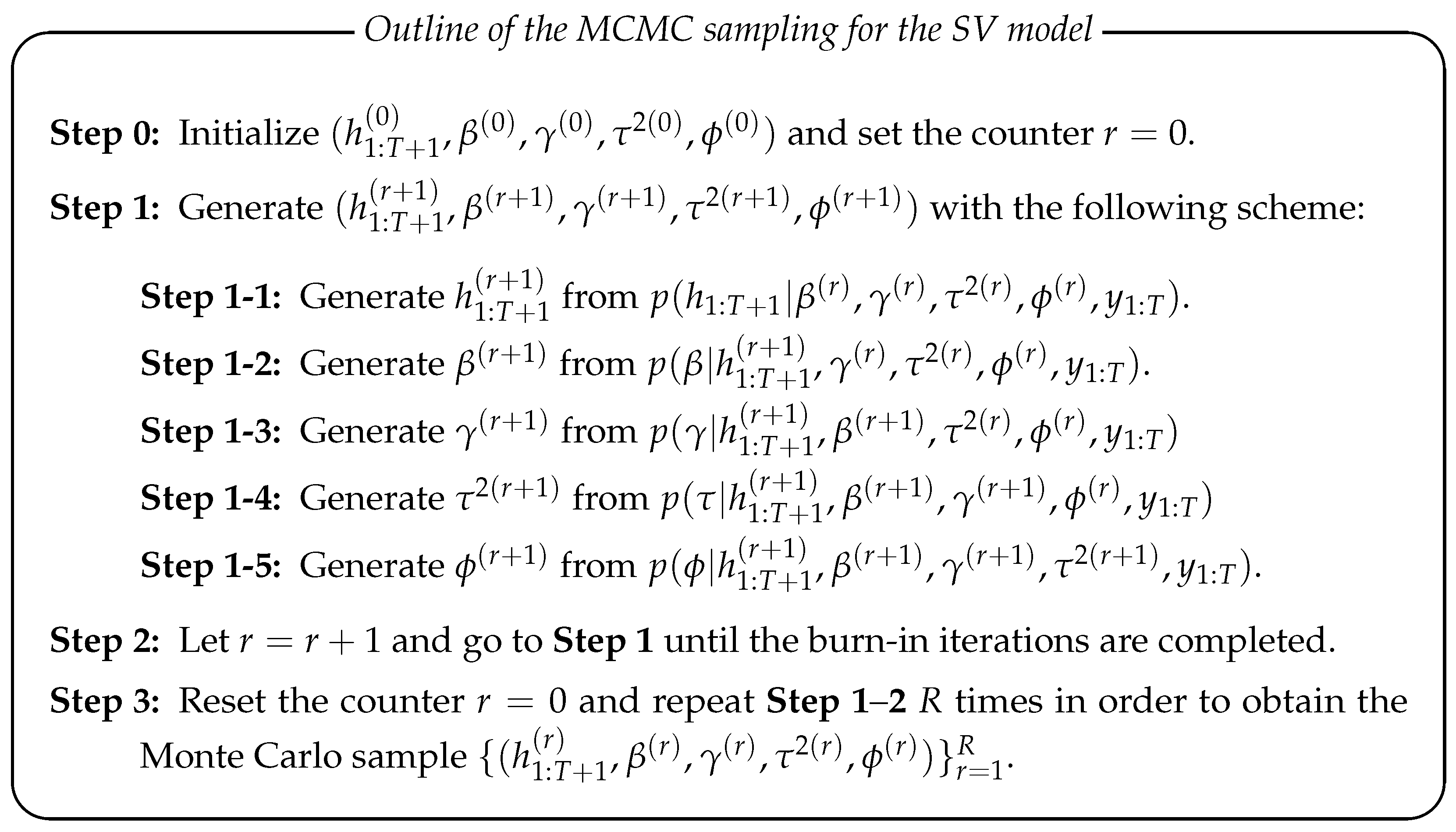

Since analytical evaluation of the joint posterior distribution (11) is impractical, we apply an MCMC method to generate a random sample from the joint posterior distribution (11) and numerically evaluate the posterior statistics necessary for Bayesian inference with Monte Carlo integration. The outline of the standard MCMC sampling scheme for the posterior distribution (11) is given as follows:

Although the above MCMC sampling scheme is ubiquitous in the literature on the SV model, the generated Monte Carlo sample tends to exhibit strong positive autocorrelation. To improve the efficiency of MCMC implementation, Yu and Meng (2011) proposed an ancillarity-sufficiency interweaving strategy (ASIS). In the literature on the SV model, Kastner and Frühwirth-Schnatter (2014) applied ASIS to an SV model with the Gaussian error for daily US-dollar/Euro exchange rate data. Their SV model included neither intraday seasonality nor the leverage effect, since they applied it to daily exchange rate data, which exhibit no leverage effect in most cases. We extend the algorithm developed by Kastner and Frühwirth-Schnatter (2014) to facilitate the convergence of the sample path in the SV model (5). The basic principle of ASIS is to construct MCMC sampling schemes for two different but equivalent parameterizations of a model with missing/latent variables ( in our case) and to generate the parameters alternately with each of them.

According to Kastner and Frühwirth-Schnatter (2014), the SV model (5) is in a non-centered parameterization (NCP). On the other hand, we may transform as

and rearrange the SV model (5) as

The above SV model (13) is in a centered parameterization (CP).

The posterior distribution in the CP form (13) is equivalent to the one in the NCP form (5) in the sense that they give us the same posterior distribution of . Let us verify this claim. The likelihood for the SV model (13) given the observations and the latent log volatility is

where

Note that the joint p.d.f. of is

where . With the prior of in (10), the joint posterior density of for the SV model (13) is obtained as

Note that is unchanged between the NCP form (11) and the CP form (17). Although the latent variables are transformed with (12), the “marginal” posterior p.d.f. of is unchanged, because

where the Jacobian .

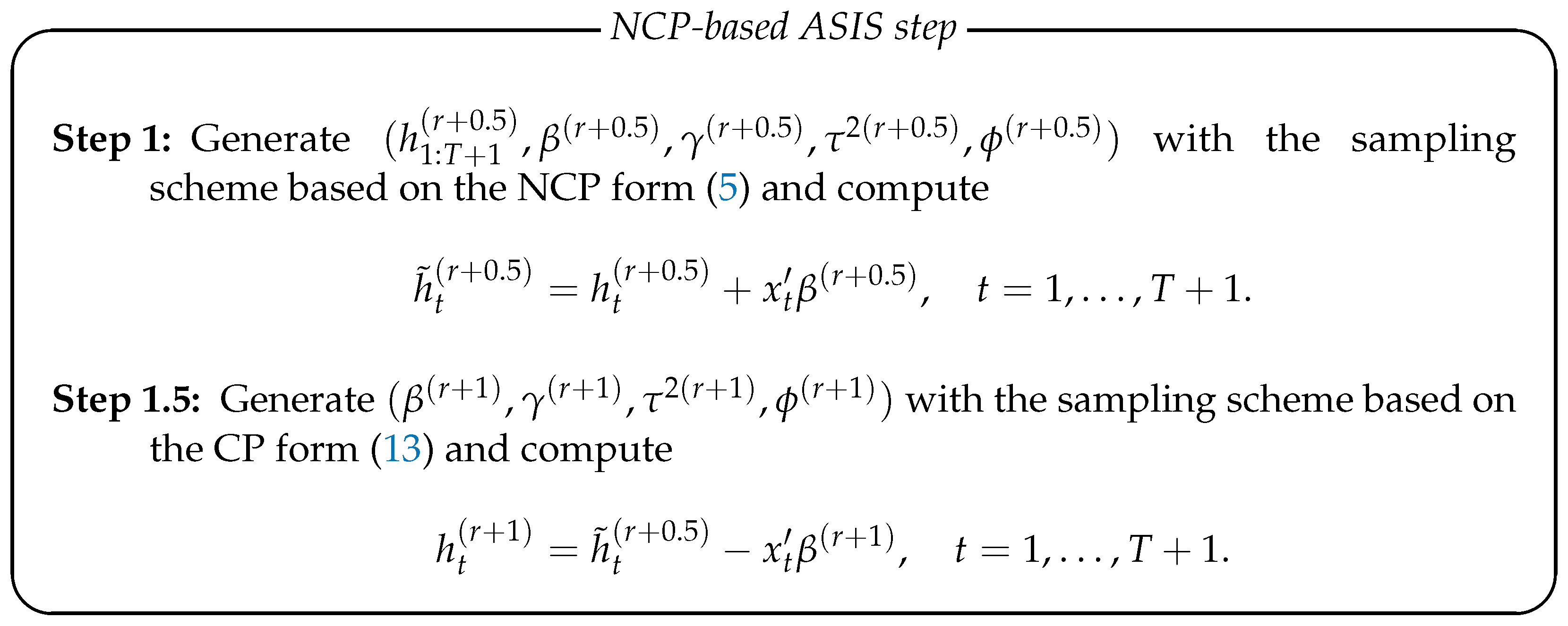

With this fact in mind, we can incorporate ASIS into the MCMC sampling scheme by replacing Step 1 with

Note that we generate a new latent log volatility from its conditional posterior distribution in the NCP form (11) only once at the beginning of Step 1. This is the reason we call it the NCP-based ASIS step. After this update, we merely shift the location of by (Step 1) or by (Step 1.5). In ASIS, these shifts are applied with probability 1 even if not all elements of are updated at the beginning of Step 1, which is highly probable in practice because we need to use the MH algorithm to generate . Although we also utilize the MH algorithm to generate , as explained later, the acceptance rate of in the MH step is much higher than that of in our experience. Thus, we expect that both and will be updated more often than itself. As a result, the above ASIS step may improve the mixing of the sample sequence of . Conversely, we may apply the following CP-based ASIS step:

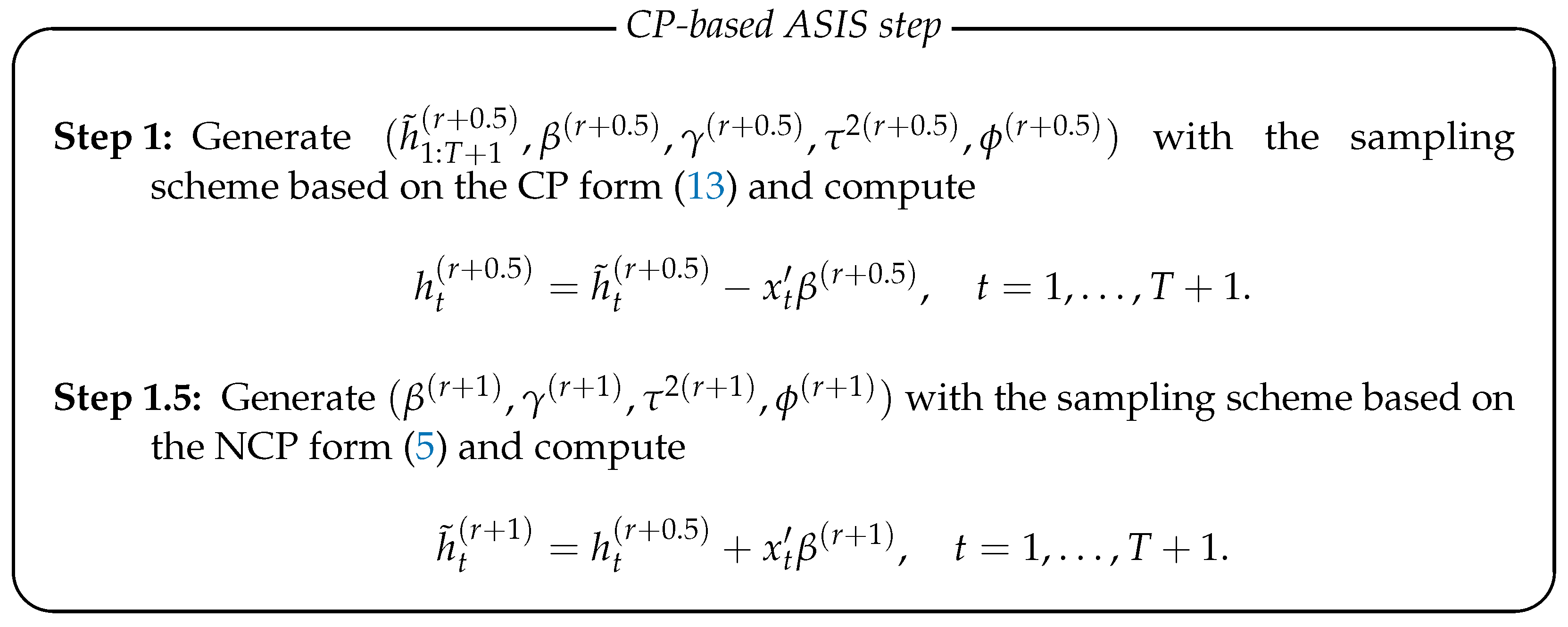

In the CP-based ASIS step, we generate from its conditional posterior distribution in the CP form (17) once. The rest is the same as in the NCP-based ASIS step except that the order of sampling is reversed.
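The mechanics of interweaving can be seen in a toy model in which everything is Gaussian. The sketch below is not the SV sampler: it is a hypothetical two-level normal model in which θ plays the role of the centered (CP) latent variable and u = θ − μ the non-centered (NCP) one, mirroring the location shift (12). Each iteration draws the latent variables in the CP form, updates μ given θ, shifts to the NCP form (a transformation with unit Jacobian) and updates μ again given u.

```python
import numpy as np

def gibbs_toy(y, V, n_iter, asis=True, seed=0):
    """Toy two-level normal model (illustration only, not the SV model):
        y_i = theta_i + e_i, e_i ~ N(0, 1);  theta_i = mu + u_i, u_i ~ N(0, V);
    flat prior on mu. theta is the centered (CP) latent variable and
    u = theta - mu the non-centered (NCP) one. Returns the draws of mu."""
    rng = np.random.default_rng(seed)
    n = len(y)
    mu, draws = 0.0, np.empty(n_iter)
    prec = 1.0 + 1.0 / V                         # conditional precision of each theta_i
    for it in range(n_iter):
        # Latent step in the CP form: theta_i | mu, y_i.
        theta = rng.normal((y + mu / V) / prec, np.sqrt(1.0 / prec))
        # Sufficient-augmentation update: mu | theta ~ N(mean(theta), V / n).
        mu = rng.normal(theta.mean(), np.sqrt(V / n))
        if asis:
            # Shift to the NCP form (unit Jacobian) and redraw mu given u:
            # y_i - u_i = mu + e_i, so mu | u, y ~ N(mean(y - u), 1 / n).
            u = theta - mu
            mu = rng.normal((y - u).mean(), np.sqrt(1.0 / n))
            # The implied theta = u + mu is redrawn at the next iteration anyway.
        draws[it] = mu
    return draws

def lag1_acf(x):
    x = x - x.mean()
    return np.dot(x[:-1], x[1:]) / np.dot(x, x)

y = np.random.default_rng(42).normal(1.0, 1.0, size=50)
print(lag1_acf(gibbs_toy(y, V=0.01, n_iter=5000, asis=False)))   # CP-only Gibbs: close to 1
print(lag1_acf(gibbs_toy(y, V=0.01, n_iter=5000, asis=True)))    # interweaving: close to 0
```

In this toy model the CP-only sampler has lag-1 autocorrelation 1/(1 + V), which is close to 1 when V is small, whereas the interweaving draws of μ turn out to be independent draws from the posterior N(ȳ, (1 + V)/n). In the SV model the improvement is not guaranteed to be this large.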

In the NCP form, the conditional posterior distributions for are

where

In the CP form, the conditional posterior distributions for are

where

Derivations of the conditional posterior distributions are shown in Appendix A.

3. Extension: Skew Heavy-Tailed Distributions

3.1. Mean-Variance Mixture of the Normal Distribution

It is a well-known stylized fact that probability distributions of stock returns are almost always heavy-tailed (the probability density decays to zero much more slowly than that of the normal distribution) and often have non-zero skewness (they are not symmetric around the mean). Although the introduction of stochastic volatility and leverage makes the distribution of skew and heavy-tailed, it may not be sufficient to capture those characteristics of real data. For this reason, instead of the normal distribution, we introduce a skew heavy-tailed distribution into the SV model.

In our study, we suppose that in (5) is expressed as a mean-variance mixture of the standard normal distribution:

where stands for the generalized inverse Gaussian distribution with the probability density:

where

and is the modified Bessel function of the second kind. The family of generalized inverse Gaussian distributions includes

- exponential distribution (),

- gamma distribution (),

- inverse gamma distribution (),

- inverse Gaussian distribution ()
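The GIG density can be evaluated with the modified Bessel function of the second kind. The sketch below uses the common (λ, χ, ψ) parameterization with χ > 0 and ψ > 0, which may differ from the paper's own parameterization, evaluates the density on the log scale to avoid overflow near zero, and checks numerically that it integrates to one.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import kv

def gig_pdf(x, lam, chi, psi):
    """Density of GIG(lam, chi, psi) at x > 0 (chi > 0, psi > 0):
        f(x) = (psi/chi)**(lam/2) / (2 K_lam(sqrt(chi*psi)))
               * x**(lam - 1) * exp(-(chi/x + psi*x) / 2),
    with K_lam the modified Bessel function of the second kind (scipy.special.kv)."""
    log_norm = 0.5 * lam * np.log(psi / chi) - np.log(2.0 * kv(lam, np.sqrt(chi * psi)))
    log_f = log_norm + (lam - 1.0) * np.log(x) - 0.5 * (chi / x + psi * x)
    return np.exp(log_f)

# Sanity check: the density integrates to one for several parameter values.
for lam, chi, psi in [(-0.5, 2.0, 3.0), (1.0, 0.5, 1.0), (3.0, 1.0, 4.0)]:
    print(lam, chi, psi, quad(gig_pdf, 0.0, np.inf, args=(lam, chi, psi))[0])
```

The inverse Gaussian case corresponds to λ = −1/2, while the gamma (including exponential) and inverse gamma members arise as limits χ → 0 with λ > 0 and ψ → 0 with λ < 0, respectively; in those limits the normalizing constant above must be replaced by its limiting value.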

Under the assumption (26), the distribution of belongs to the family of generalized hyperbolic distributions proposed by Barndorff-Nielsen (1977), which includes many well-known skew heavy-tailed distributions such as

- skew variance gamma (VG) distribution(),

- skew t distribution (),

where . In general, the skew VG distribution is a mean-variance mixture of the standard normal distribution with . To make the estimation easier, we set and so that the skew VG distribution has only two free parameters (. Thus, we have two additional parameters in the SV model. Since determines whether the distribution of is symmetric or not while determines how heavy-tailed the distribution is, we call the asymmetry parameter and the tail parameter, respectively. In our study, we use the above skew heavy-tailed distributions and their symmetric special cases as alternatives to the normal distribution. To distinguish each model specification, we use the following abbreviations:

| Abbreviation | Model |
| --- | --- |
| SV-N | stochastic volatility model with the normal error |
| SV-G | stochastic volatility model with the VG error |
| SV-SG | stochastic volatility model with the skew VG error |
| SV-T | stochastic volatility model with the Student-t error |
| SV-ST | stochastic volatility model with the skew t error |

In this setup, the SV model with heavy-tailed error is formulated as

It is straightforward to show that the conditional probability density of given is given by

where ,

and

Since it is impractical to evaluate the multiple integral in (29), we generate along with and form their joint posterior distribution. In this setup, the likelihood used in the posterior simulation is

We suppose that the prior distributions for and are

As for the other parameters, we keep the same ones as in (10).
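The role of the asymmetry and tail parameters in a normal mean-variance mixture can be illustrated by simulation. The sketch draws ε = βW + √W z with an illustrative normalization of our own, W ~ Gamma(ν/2, rate ν/2) for the (skew) VG case and W ~ InvGamma(ν/2, scale ν/2) for the (skew) t case, which may differ from the paper's choice of the fixed GIG parameters. Setting β = 0 gives the symmetric special cases.

```python
import numpy as np

def sample_mixture_error(n, beta, nu, kind, seed=0):
    """Draw n errors eps = beta * W + sqrt(W) * z with z ~ N(0, 1) independent of W,
    a normal mean-variance mixture. Illustrative mixing laws (assumed normalization):
      kind="vg": W ~ Gamma(shape=nu/2, rate=nu/2)       -> (skew) variance-gamma
      kind="t":  W ~ InvGamma(shape=nu/2, scale=nu/2)   -> (skew) Student's t
    beta = 0 gives the symmetric special cases (VG and Student's t)."""
    rng = np.random.default_rng(seed)
    g = rng.gamma(shape=nu / 2.0, scale=2.0 / nu, size=n)   # Gamma with rate nu/2
    w = g if kind == "vg" else 1.0 / g                       # the reciprocal is inverse gamma
    return beta * w + np.sqrt(w) * rng.standard_normal(n)

def sample_skew_kurt(x):
    """Sample skewness and (non-excess) kurtosis."""
    d = x - x.mean()
    m2 = np.mean(d**2)
    return np.mean(d**3) / m2**1.5, np.mean(d**4) / m2**2

for kind in ("vg", "t"):
    for beta in (0.0, 0.5):
        print(kind, beta, sample_skew_kurt(sample_mixture_error(200_000, beta, 12.0, kind)))
```

With ν = 12 the symmetric t case is exactly Student's t with 12 degrees of freedom (kurtosis 3.75), and a positive β produces positive skewness in both families.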

3.2. Conditional Posterior Distributions

3.2.1. Latent Log Volatility

Our sampling scheme for is basically the same as before. We first approximate the log likelihood with the second-order Taylor expansion around the mode and construct a proposal distribution of with the approximated log likelihood. Then, we apply a multi-move MH sampler for generating from the conditional posterior distribution. The only differences lie in the functional forms of and .

where is the indicator function. Each diagonal element of is

and the off-diagonal element is

For the NCP form, we use and in (A3). For the CP form, we replace them with and in (A23).

3.2.2. Regression Coefficients

The sampling scheme for is the same as before. For the NCP form, and are given by

respectively. For the CP form, the conditional posterior distribution of are given by

where

3.2.3. Leverage Parameter

The conditional posterior distribution of is given by

For the NCP form, we use and in (A3). For the CP form, we replace them with and in (A23).

3.2.4. Random Scale

Using the Bayes theorem, we obtain the conditional posterior distribution of as

where

For the NCP form, we use and in (A3). For the CP form, we replace them with and in (A23).
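The generalized Gibbs sampler of Liu and Sabatti (2000) rescales all random scales jointly by a common factor c drawn from a density proportional to c^(n−1) π(c·w), where c^n is the Jacobian and dc/c the Haar measure of the multiplicative group. The distribution of c used in the paper is not legible in this text, so the sketch below applies the move to a toy target of our own, a product of GIG densities with a common ψ, for which c is itself GIG. It is an illustration of the technique, not the paper's step.

```python
import numpy as np
from scipy.stats import geninvgauss

def rgig(lam, chi, psi, rng, size=None):
    """Draw from GIG(lam, chi, psi), density ∝ x**(lam-1) * exp(-(chi/x + psi*x)/2),
    by rescaling scipy's geninvgauss(p, b), density ∝ x**(p-1) * exp(-b*(x + 1/x)/2)."""
    return geninvgauss.rvs(lam, np.sqrt(chi * psi), scale=np.sqrt(chi / psi),
                           size=size, random_state=rng)

def scale_move(w, a, chi, psi, rng):
    """Generalized Gibbs scale move (Liu and Sabatti 2000) for the toy target
        pi(w) ∝ prod_i w_i**(a-1) * exp(-(chi_i / w_i + psi * w_i) / 2).
    Under w -> c * w, c has density ∝ c**(n-1) * pi(c * w), which here is
    GIG(n * a, sum_i chi_i / w_i, psi * sum_i w_i)."""
    c = rgig(len(w) * a, np.sum(chi / w), psi * np.sum(w), rng)
    return c * w

rng = np.random.default_rng(3)
a, psi = 2.0, 2.0
chi = np.array([0.5, 1.0, 1.5, 2.0])
w = rgig(a, chi, psi, rng)             # exact independent draws of each w_i
w_new = scale_move(w, a, chi, psi, rng)
```

The move leaves the target invariant, so applying it after the ordinary Gibbs update can only add a joint rescaling direction that single-site updates explore slowly.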

To improve the performance of the MCMC algorithm, we apply the generalized Gibbs sampler of Liu and Sabatti (2000) to after we generate them from the conditional posterior distribution (41). This step is simple: we multiply each of by a random number c that is generated from

3.2.5. Asymmetry Parameter

Using the Bayes theorem, we obtain the conditional posterior distribution of as

For the NCP form, we use and in (A3). For the CP form, we replace them with and in (A23).

3.2.6. Tail Parameter

The explicit form of the conditional posterior density of is not available. Therefore, we apply the MH algorithm for generating . Note that the gamma density for SV-SG in (31) is identical to the inverse gamma density for SV-ST in (31) as a function of if we exchange with . Since we use the same gamma prior for in either case, the resultant conditional posterior density should be the same in both SV-SG and SV-ST. Therefore, it suffices to derive the MH algorithm for SV-ST.

The sampling strategy for is basically the same as for , which was originally proposed by Watanabe (2001). We first consider the second-order Taylor expansion of the log conditional posterior density of :

with respect to in the neighborhood of , i.e.,

where

and is the polygamma function of order s. Note that if . See Theorem 1 in Watanabe (2001) for the proof. By applying the completing-the-square technique to (46), we obtain the proposal distribution for the MH algorithm:

where

If we use the mode of as , always holds due to the global concavity of . Thus, is effectively identical to .
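The MH step for the tail parameter can be sketched as follows. The sketch assumes, for illustration, that the random scales satisfy W_t ~ InvGamma(ν/2, scale ν/2) i.i.d. and that ν has a Gamma(a, rate b) prior; the parameter names a and b are ours. It locates the mode with Brent's root-finding on the gradient (a substitute for whatever search the paper uses), builds the normal proposal from the second-order Taylor expansion at the mode, and accepts or rejects with the independence MH ratio.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import digamma, gammaln, polygamma

def log_post_nu(nu, w, a, b):
    """Log conditional posterior of nu up to a constant (assumed model, see text)."""
    T = len(w)
    return (T * (0.5 * nu * np.log(0.5 * nu) - gammaln(0.5 * nu))
            - 0.5 * nu * np.sum(np.log(w) + 1.0 / w)
            + (a - 1.0) * np.log(nu) - b * nu)

def grad_nu(nu, w, a, b):
    T = len(w)
    return (0.5 * T * (np.log(0.5 * nu) + 1.0 - digamma(0.5 * nu))
            - 0.5 * np.sum(np.log(w) + 1.0 / w) + (a - 1.0) / nu - b)

def hess_nu(nu, w, a, b):
    # Negative for every nu > 0 when a >= 1, because polygamma(1, x) > 1 / x.
    T = len(w)
    return 0.5 * T * (1.0 / nu - 0.5 * polygamma(1, 0.5 * nu)) - (a - 1.0) / nu**2

def find_mode(w, a, b):
    hi = 1.0
    while grad_nu(hi, w, a, b) > 0:       # widen the bracket until the gradient turns negative
        hi *= 2.0
    return brentq(grad_nu, 1e-8, hi, args=(w, a, b))

def mh_step_nu(nu_cur, w, a, b, rng):
    """Independence MH step with proposal N(mode, -1 / hess(mode))."""
    m = find_mode(w, a, b)
    sd = np.sqrt(-1.0 / hess_nu(m, w, a, b))
    nu_prop = rng.normal(m, sd)
    if nu_prop <= 0.0:
        return nu_cur                      # outside the support: reject
    log_q = lambda x: -0.5 * ((x - m) / sd) ** 2   # proposal log density up to a constant
    log_alpha = (log_post_nu(nu_prop, w, a, b) - log_post_nu(nu_cur, w, a, b)
                 + log_q(nu_cur) - log_q(nu_prop))
    return nu_prop if np.log(rng.uniform()) < log_alpha else nu_cur
```

Because the log posterior is globally concave under these assumptions, the gradient has a single root, so the bracketing search always finds the mode.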

4. Empirical Study

As an application of our proposed models to real data, we analyze high-frequency data of the Tokyo Stock Price Index (TOPIX), a market-cap-weighted stock index based on all domestic common stocks listed in the Tokyo Stock Exchange (TSE) First Section, which is provided by Nikkei Media Marketing. We use the data in June 2016, when the referendum on the UK’s withdrawal from the EU (Brexit) was held on the 23rd of the month. The result of the Brexit referendum was announced during the trading hours of the TSE on that day, and the news caused the Japanese stock market to plunge. The Brexit referendum is arguably one of the biggest financial events in recent years, so this sample allows us to analyze its effect on the volatility of the Japanese stock market. Another reason for this choice is that Japan has no public holidays in June, so all weekdays are trading days. There are five weeks in June 2016. Since the first week of June 2016 includes 30 and 31 May and the last week includes 1 July, we also include these days in the sample period.

The morning session of the TSE starts at 9:00 a.m. and ends at 11:30 a.m., while the afternoon session starts at 12:30 p.m. and ends at 3:00 p.m., so both sessions last for 150 min. We treat the morning session and the afternoon session as if they were separate trading hours and normalize the time stamps so that they take values within . As a result, corresponds to 9:00 a.m. for the morning session, while it corresponds to 12:30 p.m. for the afternoon session. In the same manner, corresponds to 11:30 a.m. for the morning session, while it corresponds to 3:00 p.m. for the afternoon session. In this empirical study, we estimate the Bernstein polynomial of the intraday seasonality in each session by allowing in (2) to differ from session to session.

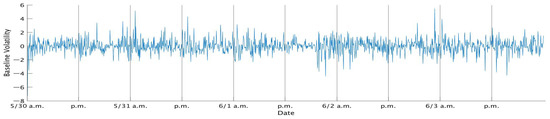

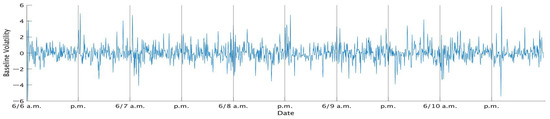

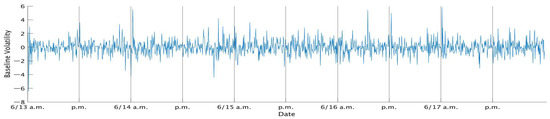

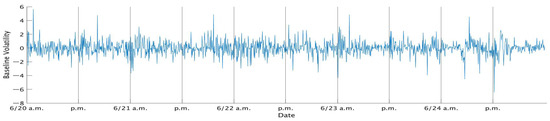

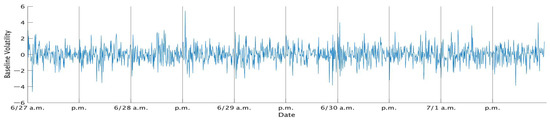

We sample prices every minute and compute 1 min log differences of prices as 1 min stock returns. Thus, the number of observations per session is 150. Furthermore, we concatenate all series of 1 min returns within each week. As a result, the total number of observations per week is 1500 (150 returns × 2 sessions × 5 trading days). In addition, to simplify the interpretation of the estimation results, we standardize each week-long series of 1 min returns so that the sample mean is 0 and the sample variance is 1. Table 1 shows the descriptive statistics of the standardized 1 min returns of TOPIX in each week, while Figure 1, Figure 2, Figure 3, Figure 4 and Figure 5 show the time series plots of the standardized 1 min returns for each week.
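The return construction and weekly standardization described above can be sketched as follows. The session structure (151 one-minute prices per 150 min session, so that no return spans the lunch break or the overnight gap) and the use of the ddof=0 variance are our assumptions, and the prices are simulated random walks, not TOPIX data.

```python
import numpy as np

def weekly_standardized_returns(session_prices):
    """Concatenate 1 min log returns over the sessions of one week and standardize.

    session_prices: list of 1-D arrays of positive prices sampled every minute,
    one array per session (morning and afternoon treated separately). A 150 min
    session sampled at both ends has 151 prices and yields 150 returns.
    """
    r = np.concatenate([np.diff(np.log(p)) for p in session_prices])
    return (r - r.mean()) / r.std()     # sample mean 0, sample variance 1 (ddof=0)

# Hypothetical prices: 5 trading days x 2 sessions, each a 151-point random walk.
rng = np.random.default_rng(0)
sessions = [1500.0 * np.exp(np.cumsum(rng.normal(0.0, 5e-4, 151))) for _ in range(10)]
z = weekly_standardized_returns(sessions)
print(z.size)   # 1500
```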

Table 1.

Descriptive statistics of standardized TOPIX 1 min returns in June 2016.

Figure 1.

Standardized returns of the TOPIX data in the first week of June 2016.

Figure 2.

Standardized returns of the TOPIX data in the second week of June 2016.

Figure 3.

Standardized returns of the TOPIX data in the third week of June 2016.

Figure 4.

Standardized returns of the TOPIX data in the fourth week of June 2016.

Figure 5.

Standardized returns of the TOPIX data in the fifth week of June 2016.

We consider five candidates (SV-N, SV-G, SV-SG, SV-T, SV-ST) in the SV model (28) and set the prior distributions as follows:

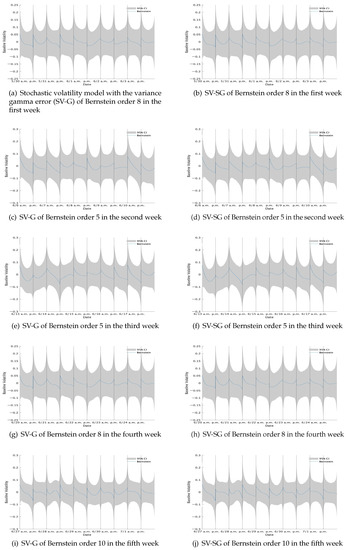

We vary the order of the Bernstein polynomial from 5 to 10. In sum, we try 30 different model specifications for the SV model (28). In the MCMC implementation, we generate 10,000 draws after the first 5000 draws are discarded as the burn-in period. To select the best model among the candidates, we employ the widely applicable information criterion (WAIC, Watanabe 2010). We compute the WAIC of each model specification with the formula by Gelman et al. (2014). The results are reported in Table 2, Table 3, Table 4, Table 5 and Table 6. According to these tables, SV-G or SV-SG is the best model in all weeks. This may be a notable finding, since the SV model with the variance-gamma error has hardly been applied in previous studies.
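The WAIC formula of Gelman et al. (2014) can be computed from a matrix of pointwise log-likelihoods evaluated at the posterior draws. The sketch takes that (S, n) matrix as given; for an SV model the value depends on how the pointwise likelihood is defined (for example, whether it conditions on the latent log volatility), and the paper's choice is not reproduced here. The numbers in the usage example are made up.

```python
import numpy as np
from scipy.special import logsumexp

def waic(log_lik):
    """WAIC on the deviance scale, following Gelman et al. (2014).

    log_lik: (S, n) array with log p(y_i | theta^(s)) for S posterior draws and
    n observations. Returns -2 * (lppd - p_waic); smaller values are preferred."""
    S = log_lik.shape[0]
    # log pointwise predictive density: sum_i log( (1/S) sum_s p(y_i | theta^(s)) )
    lppd = np.sum(logsumexp(log_lik, axis=0) - np.log(S))
    # effective number of parameters: sum_i Var_s[ log p(y_i | theta^(s)) ]
    p_waic = np.sum(np.var(log_lik, axis=0, ddof=1))
    return -2.0 * (lppd - p_waic)

# Hypothetical usage with S = 4 draws and n = 3 observations.
ll = np.log(np.array([[0.20, 0.10, 0.50],
                      [0.25, 0.12, 0.45],
                      [0.22, 0.08, 0.55],
                      [0.18, 0.11, 0.50]]))
print(waic(ll))
```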

Table 2.

Widely applicable information criterion (WAIC) values of TOPIX returns (week 1).

Table 3.

WAIC values of TOPIX returns (week 2).

Table 4.

WAIC values of TOPIX returns (week 3).

Table 5.

WAIC values of TOPIX returns (week 4).

Table 6.

WAIC values of TOPIX returns (week 5).

For the selected models, we compute the posterior statistics (posterior means, standard deviations, 95% credible intervals and inefficiency factors) of the parameters and report them in Table 7, Table 8, Table 9, Table 10 and Table 11. The inefficiency factor measures how much the autocorrelation of the MCMC sample inflates the variance of the posterior mean estimate relative to independent draws (see e.g., Chib 2001). In these tables, the 95% credible intervals of the leverage parameter and the asymmetry parameter contain 0 for all specifications. Thus, we may conclude that the error distribution of 1 min returns of TOPIX is not asymmetric. In addition, most of the marginal posterior distributions of are concentrated near 1, even though the uniform prior is assumed for . This suggests that the latent log volatility is strongly persistent, which is consistent with findings of previous studies on stock markets. Regarding the tail parameter , its marginal posterior distribution is centered around 2–6 in most models, which indicates that the excess kurtosis of the error distribution is high.
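A common estimator of the inefficiency factor is 1 + 2 Σ_k w(k/B) ρ_k, where ρ_k is the sample autocorrelation at lag k and w is a lag window. The sketch below uses the Parzen window with a user-chosen bandwidth B; the window and bandwidth used for the tables are not stated in this text, so both are assumptions.

```python
import numpy as np

def parzen_window(x):
    """Parzen lag window; zero for |x| > 1."""
    x = np.abs(x)
    return np.where(x <= 0.5, 1.0 - 6.0 * x**2 + 6.0 * x**3,
                    np.where(x <= 1.0, 2.0 * (1.0 - x) ** 3, 0.0))

def inefficiency_factor(draws, bandwidth=100):
    """Estimate 1 + 2 * sum_{k=1}^{B} w(k / B) * rho_k, where rho_k is the sample
    autocorrelation at lag k and w the Parzen window with bandwidth B."""
    x = np.asarray(draws, dtype=float)
    x = x - x.mean()
    denom = np.dot(x, x)
    lags = np.arange(1, bandwidth + 1)
    rho = np.array([np.dot(x[:-k], x[k:]) / denom for k in lags])
    return 1.0 + 2.0 * np.sum(parzen_window(lags / bandwidth) * rho)

# Example: an AR(1) chain with coefficient 0.5 has inefficiency factor (1 + 0.5) / (1 - 0.5) = 3.
rng = np.random.default_rng(0)
chain = np.zeros(10_000)
for t in range(1, chain.size):
    chain[t] = 0.5 * chain[t - 1] + rng.standard_normal()
print(inefficiency_factor(chain))
```

A value near 1 indicates nearly independent draws; a value of 3 means roughly three times as many MCMC draws are needed to match the precision of independent sampling.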

Table 7.

Estimation results for TOPIX returns (week 1).

Table 8.

Estimation results for TOPIX returns (week 2).

Table 9.

Estimation results for TOPIX returns (week 3).

Table 10.

Estimation results for TOPIX returns (week 4).

Table 11.

Estimation results for TOPIX returns (week 5).

As for the intraday seasonality, the estimates of themselves are not of direct interest. Instead, we show the posterior mean and the 95% credible interval of the Bernstein polynomial in Figure 6. These figures show that some of the trading days exhibit the well-known U-shaped curve of intraday volatility, but others slant upward or downward. At the opening on the day of the Brexit referendum (23 June), the market was highly volatile, but the volatility gradually declined. During the afternoon session, the volatility remained stable.

Figure 6.

Intraday seasonality with Bernstein polynomial approximation.

5. Conclusions

In this paper, we extended the standard SV model into a more general formulation so that it could capture key characteristics of intraday high-frequency stock returns such as intraday seasonality, asymmetry and excess kurtosis. Our proposed model uses a Bernstein polynomial of time stamps as the intraday seasonal component of the stock volatility, and the coefficients in the Bernstein polynomial are estimated simultaneously with the rest of the parameters in the model. To incorporate asymmetry and excess kurtosis into the standard SV model, we assume that the error distribution of stock returns in the SV model belongs to a family of generalized hyperbolic distributions. In particular, we focus on two sub-classes of this family: the skew Student’s t distribution and the skew variance-gamma distribution. Furthermore, we developed an efficient MCMC sampling algorithm for Bayesian inference on the proposed model by utilizing all without a loop (AWOL), ASIS and the generalized Gibbs sampler.

As an application, we estimated the proposed SV models with 1 min return data of TOPIX in various specifications and conducted model selection with WAIC. The model selection procedure chose the SV model with the variance-gamma-type error as the most suitable one. The estimated parameters indicated strong excess kurtosis in the error distribution of 1 min returns, though asymmetry was not supported, since the 95% credible intervals of both the leverage parameter and the asymmetry parameter contained zero. Furthermore, our proposed model successfully extracted intraday seasonal patterns in the stock volatility with Bernstein polynomial approximation, though the shape of the intraday seasonal component was not necessarily U-shaped.

Author Contributions

M.N. had full access to the data in the study and takes responsibility for the accuracy and integrity of the data and its analyses. Study concept and design: All authors. Acquisition, analysis, or interpretation of data: All authors. Drafting of the manuscript: M.N. Critical revision of the manuscript for important intellectual content: T.N. Statistical analysis: All authors. Administrative, technical or material support: All authors. Study supervision: T.N. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Japan Society for the Promotion of Science (JSPS) KAKENHI Grant Number 19K01592.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Conditional Posterior Distributions

In Appendix A, we derive the conditional posterior distribution of the latent log volatility and that of each parameter in the SV model for both NCP and CP.

Appendix A.1. NCP Form

Appendix A.1.1. Latent Log Volatility $h_{1:T+1}$

The conditional posterior density of the latent log volatility is

We apply the Metropolis-Hastings (MH) algorithm to generate from (A1). To derive a suitable proposal distribution for the MH algorithm, we first consider the second-order Taylor approximation of in the neighborhood of :

where is the gradient vector of :

and is the Hessian matrix of times :

which is a band matrix.

Let us derive the explicit form of each element in and . By defining

the log density of (7) is rewritten as

Note that

where . Each element of is derived as

for ,

for , and

for . The diagonal element in is given as

for ,

for , and

for . Furthermore, the first off-diagonal element of is derived as

for . In summary,

Since the log prior density of is

the conditional posterior density of (A1) can be approximated by

where C is the normalizing constant of the conditional posterior density and

By completing the square in (A9), we have

where

Therefore the right-hand side of (A8) is approximately proportional to the pdf of the following normal distribution:

Recall that both and V are tridiagonal matrices. Thus, is also tridiagonal. Since the Cholesky factor of a tridiagonal positive definite matrix is lower bidiagonal and can be computed in time linear in the dimension, and the resulting triangular systems can be solved by substitution at the same cost, is readily generated from (A11) with

where L is a lower triangular matrix obtained by the Cholesky decomposition as

The above algorithm, called all without a loop (AWOL) by Kastner and Frühwirth-Schnatter (2014), has been applied to Gaussian Markov random fields (e.g., Rue 2001) and state-space models (e.g., Chan and Jeliazkov 2009; McCausland et al. 2011).
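A minimal sketch of an AWOL-style draw follows. It assumes the Gaussian is written in canonical form with a tridiagonal precision matrix `Omega` (stored as its main diagonal and first off-diagonal) and a linear term `b`, so that its mean is `Omega^{-1} b`; this parameterization and all function names are our assumptions rather than the notation of (A11). Plain Python loops are used for readability; a production sampler would call a compiled banded routine.

```python
import numpy as np


def tridiag_cholesky(diag, off):
    """Cholesky factor L (lower bidiagonal) of a symmetric positive definite tridiagonal matrix.

    diag: main diagonal (length n); off: first off-diagonal (length n - 1).
    Returns (d, e): the diagonal and sub-diagonal of L, where Omega = L @ L.T.
    """
    n = len(diag)
    d, e = np.empty(n), np.empty(n - 1)
    d[0] = np.sqrt(diag[0])
    for t in range(1, n):
        e[t - 1] = off[t - 1] / d[t - 1]
        d[t] = np.sqrt(diag[t] - e[t - 1] ** 2)  # NaN here signals Omega is not positive definite
    return d, e


def forward_solve(d, e, b):
    """Solve L y = b by forward substitution in O(n)."""
    y = np.empty(len(b))
    y[0] = b[0] / d[0]
    for t in range(1, len(b)):
        y[t] = (b[t] - e[t - 1] * y[t - 1]) / d[t]
    return y


def backward_solve(d, e, y):
    """Solve L.T x = y by backward substitution in O(n)."""
    x = np.empty(len(y))
    x[-1] = y[-1] / d[-1]
    for t in range(len(y) - 2, -1, -1):
        x[t] = (y[t] - e[t] * x[t + 1]) / d[t]
    return x


def awol_draw(diag, off, b, rng):
    """Draw from N(Omega^{-1} b, Omega^{-1}) for tridiagonal Omega; returns (draw, mean)."""
    d, e = tridiag_cholesky(diag, off)
    mean = backward_solve(d, e, forward_solve(d, e, b))  # Omega^{-1} b = L^{-T} L^{-1} b
    z = rng.standard_normal(len(diag))
    return mean + backward_solve(d, e, z), mean  # L^{-T} z has covariance Omega^{-1}
```

Every step touches only the two non-zero bands, so the whole vector is drawn in linear time without forming a dense matrix.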

Expecting the approximation (A8) to be sufficiently accurate, we use (A11) as the proposal distribution in the MH algorithm. In practice, however, we need to address two issues:

1. The choice of is crucial to make the approximation (A8) workable.
2. The acceptance rate of the MH algorithm tends to be too low when is a high-dimensional vector.
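As we read it, the proposal (A11) is built around an expansion point that does not depend on the current draw, so the MH step takes the independence-sampler form sketched below. The sketch is generic: `log_target`, `propose` and `log_proposal` are hypothetical callables standing in for the log of (A1) up to a constant and for the Gaussian proposal, and the one-dimensional toy target in the usage is ours.

```python
import numpy as np


def independence_mh_step(x_current, log_target, propose, log_proposal, rng):
    """One Metropolis-Hastings step whose proposal ignores the current state.

    log_target and log_proposal may omit normalizing constants,
    because they cancel in the acceptance ratio.
    """
    x_new = propose(rng)
    log_alpha = (log_target(x_new) - log_target(x_current)
                 + log_proposal(x_current) - log_proposal(x_new))
    if np.log(rng.uniform()) < log_alpha:
        return x_new, True
    return x_current, False


# Toy usage (ours): target N(1, 1), proposal N(0, 2^2).
rng = np.random.default_rng(0)
log_target = lambda x: -0.5 * (x - 1.0) ** 2
log_proposal = lambda x: -0.5 * (x / 2.0) ** 2
propose = lambda g: g.normal(0.0, 2.0)

x, draws, accepted = 0.0, [], 0
for _ in range(20_000):
    x, acc = independence_mh_step(x, log_target, propose, log_proposal, rng)
    draws.append(x)
    accepted += acc
```

The acceptance rate depends on how closely the proposal matches the target, which is why the accuracy of (A8) and the dimension of the block both matter.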

We address the former issue by using the mode of the conditional posterior density as . The mode is found by the following recursion:

- Step 1: Initialize and set the counter .
- Step 2: Update by .
- Step 3: Let and go to Step 2 unless is less than the preset tolerance level.

In our experience, this recursion usually converges within a few iterations.
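Repeatedly expanding at the current point and moving to the mean of the resulting Gaussian approximation is a Newton-Raphson recursion. The sketch below applies it to a deliberately simplified toy posterior that we assume for illustration only: the basic SV likelihood y_t = exp(h_t/2) ε_t without leverage, seasonality or non-Gaussian errors, combined with a zero-mean stationary AR(1) prior on h. The step-halving safeguard is our addition and not part of the recursion above, and a dense solve is used for brevity where the tridiagonal structure would normally be exploited.

```python
import numpy as np


def ar1_precision(n, phi, tau2):
    """Precision matrix of a zero-mean stationary AR(1) with innovation variance tau2."""
    diag = np.full(n, 1.0 + phi**2)
    diag[0] = diag[-1] = 1.0
    off = np.full(n - 1, -phi)
    return (np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)) / tau2


def log_post(h, y, Q):
    """Toy log posterior of h up to a constant: basic SV likelihood plus AR(1) prior."""
    return np.sum(-0.5 * h - 0.5 * y**2 * np.exp(-h)) - 0.5 * h @ Q @ h


def find_mode(y, Q, tol=1e-8, max_iter=100):
    """Newton-Raphson search for the mode of log_post, started at h = 0."""
    h = np.zeros(len(y))
    for _ in range(max_iter):
        w = 0.5 * y**2 * np.exp(-h)
        grad = -0.5 + w - Q @ h                 # gradient of log_post
        neg_hess = np.diag(w) + Q               # minus the Hessian: tridiagonal, positive definite
        step = np.linalg.solve(neg_hess, grad)  # Newton step
        # Safeguard (our addition): halve the step until log_post does not decrease;
        # "not (a >= b)" also rejects steps that produce NaN.
        t, current = 1.0, log_post(h, y, Q)
        while not (log_post(h + t * step, y, Q) >= current) and t > 1e-10:
            t *= 0.5
        h = h + t * step
        if np.max(np.abs(t * step)) < tol:
            break
    return h
```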

We address the latter issue by applying a so-called block sampler. In the block sampler, we randomly partition into several sub-vectors (blocks), draw each block from its conditional proposal distribution given the remaining blocks, and accept or reject the draw with the MH algorithm. Without loss of generality, suppose the proposal distribution (A11) is partitioned as

where and (we ignore the dependence on for brevity), is the block to be updated in the current MH step, and contains the elements that were either updated in previous MH steps or are to be updated in subsequent ones. It is well known that the conditional distribution of given is

Note that the inverse of the covariance matrix in (A12) is

Furthermore, if we let denote the upper-right block of , we have

Therefore the conditional distribution of given in (A13) is rearranged as

Recall that is tridiagonal and so is by construction. Thus, we can apply the AWOL algorithm:

to generate from (A14). In essence, our approach is an AWOL variant of the block sampler proposed by Omori and Watanabe (2008).
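The conditional moments used by the block sampler can be checked numerically. For x ~ N(mu, Omega^{-1}) written with precision matrix Omega, the block x_a given the rest x_b has precision Omega_aa and mean mu_a - Omega_aa^{-1} Omega_ab (x_b - mu_b). The sketch below implements this together with a hypothetical scheme that cuts the index set into contiguous blocks at random knots; the exact partitioning rule of the block sampler is not reproduced here. When Omega is tridiagonal and a block is contiguous, Omega_ab has at most two non-zero entries and Omega_aa is itself tridiagonal, so the AWOL draw applies within each block.

```python
import numpy as np


def random_blocks(n, n_blocks, rng):
    """Hypothetical partition: split indices 0..n-1 into contiguous blocks at random knots."""
    knots = np.sort(rng.choice(np.arange(1, n), size=n_blocks - 1, replace=False))
    return np.split(np.arange(n), knots)


def block_conditional(mu, Omega, idx, x):
    """Mean and precision of x[idx] given the other components, for x ~ N(mu, inv(Omega))."""
    rest = np.setdiff1d(np.arange(len(mu)), idx)
    Omega_aa = Omega[np.ix_(idx, idx)]
    Omega_ab = Omega[np.ix_(idx, rest)]
    cond_mean = mu[idx] - np.linalg.solve(Omega_aa, Omega_ab @ (x[rest] - mu[rest]))
    return cond_mean, Omega_aa  # the conditional precision is simply the diagonal block
```

Working with the precision matrix avoids inverting the full covariance, which for a tridiagonal Omega would be dense.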

Appendix A.1.2. Regression Coefficients β

The sampling scheme for the regression coefficients is almost identical to the one for the log volatility . Let denote given and the parameters other than . In the same manner as (A2), consider the second-order Taylor approximation of in the neighborhood of :

where is the gradient vector of and is the Hessian matrix of times . Since , we have

Therefore, and are obtained as

With the prior , the conditional posterior density of can be approximated by

By completing the square as in (A16), the proposal distribution for the MH algorithm is derived as

where

The search algorithm for is the same as .

Since the dimension of is considerably smaller than , it is not necessary to apply the block sampler in our experience.

Appendix A.1.3. Leverage Parameter γ

Since we use the standard conditionally conjugate prior distribution for , the conditional posterior distribution is given by

Appendix A.1.4. Variance $\tau^2$

Since we use the standard conditionally conjugate prior distribution for , the conditional posterior distribution is given by
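For reference, a standard conditionally conjugate variance update looks as follows, under assumptions we state explicitly: given everything else, the relevant residuals e_1, ..., e_n are i.i.d. N(0, τ²) and the prior is τ² ~ IG(a0, b0), so that τ² | e ~ IG(a0 + n/2, b0 + Σ e_t²/2). Which quantities play the role of the residuals depends on the NCP or CP parameterization and on the leverage term, as given by the equations of this appendix; the function below is a hypothetical helper.

```python
import numpy as np


def draw_variance_ig(resid, a0, b0, rng):
    """Draw tau^2 from IG(a0 + n/2, b0 + sum(resid**2)/2); also return the posterior parameters."""
    resid = np.asarray(resid, dtype=float)
    a_post = a0 + 0.5 * resid.size
    b_post = b0 + 0.5 * np.sum(resid**2)
    # If G ~ Gamma(shape=a, rate=b), then 1/G ~ IG(a, b); NumPy parameterizes by scale = 1/rate.
    draw = 1.0 / rng.gamma(shape=a_post, scale=1.0 / b_post)
    return draw, a_post, b_post
```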

Appendix A.1.5. AR(1) Coefficient ϕ

Once the state variables are generated, the conditional posterior density of is given by

By completing the square, we have

With the above expression in mind, we use the following truncated normal distribution:

as the proposal distribution for in the MH algorithm.
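A minimal sketch of this MH step is given below for a simplified setting that we assume for illustration: a zero-mean AR(1) state x_{t+1} = ϕ x_t + τ η_t with stationary initial distribution x_1 ~ N(0, τ²/(1 - ϕ²)) and a flat prior on (-1, 1). Completing the square in ϕ then gives a normal kernel with mean Σ_{t=1}^{T-1} x_t x_{t+1} / Σ_{t=2}^{T-1} x_t² and variance τ² / Σ_{t=2}^{T-1} x_t², and the factor left over is sqrt(1 - ϕ²), so the acceptance probability is min{1, sqrt(1 - ϕ*²) / sqrt(1 - ϕ²)}. With a different prior on ϕ, its density ratio would also enter the acceptance probability. The simple rejection sampler for the truncated normal is our stand-in.

```python
import numpy as np


def draw_truncnorm(mean, sd, lower, upper, rng, max_tries=10_000):
    """Truncated normal by naive rejection; adequate when most of the mass lies inside (lower, upper)."""
    for _ in range(max_tries):
        z = rng.normal(mean, sd)
        if lower < z < upper:
            return z
    raise RuntimeError("rejection sampler failed; use an inverse-CDF sampler instead")


def update_phi(phi, x, tau2, rng):
    """One MH update of phi given the AR(1) path x (toy model with a flat prior on (-1, 1))."""
    s_xx = np.sum(x[1:-1] ** 2)           # sum_{t=2}^{T-1} x_t^2
    m = np.sum(x[:-1] * x[1:]) / s_xx     # sum_{t=1}^{T-1} x_t x_{t+1} / s_xx
    sd = np.sqrt(tau2 / s_xx)
    phi_prop = draw_truncnorm(m, sd, -1.0, 1.0, rng)
    # Target / proposal is proportional to sqrt(1 - phi^2); the proposal itself cancels.
    log_alpha = 0.5 * (np.log1p(-phi_prop**2) - np.log1p(-phi**2))
    if np.log(rng.uniform()) < log_alpha:
        return phi_prop
    return phi
```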

Appendix A.2. CP Form

Appendix A.2.1. Latent Log Volatility

The sampling scheme from the conditional posterior distribution of :

is based on the MH algorithm, which is similar to the case of the NCP form. To construct the proposal distribution of , we consider the second-order Taylor approximation of in the neighborhood of as in (A2). We first derive the explicit form of each element in and . By defining

the log density of in (15) is rewritten as

Since the log prior density of is

the conditional posterior density of (A22) can be approximated by

where C is the normalizing constant of the conditional posterior density and

By completing the square in (A26), we have

where

Therefore, the right-hand side of (A25) is approximately proportional to the pdf of the following normal distribution:

which we use as the proposal distribution in the MH algorithm. We obtain in (A28) with the same search algorithm as in the case of the NCP form and apply the block sampler to improve the acceptance rate in the MH algorithm.

Appendix A.2.2. Regression Coefficients β

By ignoring the terms that do not depend on , we can rearrange the density of in (15) as

where

By defining and , we have

Then the conditional posterior distribution of is given by

By completing the square, we have the conditional posterior distribution of as

where

Since ,

where .
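Because this conditional posterior is Gaussian, a draw can be produced in the usual way for a Gaussian linear model with known, possibly time-varying, error variances and a normal prior N(b0, B0). The sketch below is hypothetical: the response `z`, design `X`, variances `noise_var` and the prior parameters stand in for the quantities obtained after the rearrangement above and are not the paper's definitions.

```python
import numpy as np


def draw_beta_gaussian(X, z, noise_var, b0, B0, rng):
    """Draw beta from its Gaussian full conditional in z = X beta + e, e_t ~ N(0, noise_var[t])."""
    w = 1.0 / np.asarray(noise_var, dtype=float)          # observation precisions
    prec_prior = np.linalg.inv(B0)
    prec_post = prec_prior + X.T @ (w[:, None] * X)        # posterior precision
    rhs = prec_prior @ b0 + X.T @ (w * z)
    L = np.linalg.cholesky(prec_post)                      # prec_post = L @ L.T
    mean = np.linalg.solve(L.T, np.linalg.solve(L, rhs))   # prec_post^{-1} rhs
    draw = mean + np.linalg.solve(L.T, rng.standard_normal(len(b0)))
    return draw, mean
```

Sampling through the Cholesky factor of the posterior precision mirrors the AWOL idea used for the log volatility, although here the matrix is small and dense.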

Appendix A.2.3. Leverage Parameter γ

Replacing and in (19) with and , respectively, we have

Appendix A.2.4. Variance $\tau^2$

It is straightforward to show that the conditional posterior distribution of is

Appendix A.2.5. AR(1) Coefficient ϕ

Replacing in the derivation of (A21) with , we have

We use (A34) as the proposal distribution for in the MH algorithm.

References

- Abanto-Valle, C. A., V. H. Lachos, and Dipak K. Dey. 2015. Bayesian estimation of a skew-Student-t stochastic volatility model. Methodology and Computing in Applied Probability 17: 721–38.

- Andersen, Torben G., and Tim Bollerslev. 1997. Intraday periodicity and volatility persistence in financial markets. Journal of Empirical Finance 4: 115–58.

- Andersen, Torben G., and Tim Bollerslev. 1998. Answering the skeptics: Yes, standard volatility models do provide accurate forecasts. International Economic Review 39: 885–905.

- Barndorff-Nielsen, Ole. 1977. Exponentially decreasing distributions for the logarithm of particle size. Proceedings of the Royal Society A. Mathematical, Physical and Engineering Sciences 353: 401–19.

- Berg, Andreas, Renate Meyer, and Jun Yu. 2004. DIC as a model comparison criterion for stochastic volatility models. Journal of Business and Economic Statistics 22: 107–20.

- Chan, Joshua C. C., and Ivan Jeliazkov. 2009. Efficient simulation and integrated likelihood estimation in state space models. International Journal of Mathematical Modelling and Numerical Optimisation 1: 101–20.

- Chan, Kalok, Kakeung C. Chan, and G. Andrew Karolyi. 1991. Intraday volatility in the stock index and stock index futures markets. The Review of Financial Studies 4: 657–84.

- Chib, Siddhartha. 2001. Markov chain Monte Carlo methods: Computation and inference. In Handbook of Econometrics. Edited by James J. Heckman and Edward E. Leamer. Amsterdam: North Holland, pp. 3569–649.

- Fičura, Milan, and Jiri Witzany. 2015a. Estimating Stochastic Volatility and Jumps Using High-Frequency Data and Bayesian Methods. Available online: https://ssrn.com/abstract=2551807 (accessed on 8 December 2020).

- Fičura, Milan, and Jiri Witzany. 2015b. Applications of Mathematics & Statistics in Economics. Available online: http://amse2015.cz/doc/Ficura_witzany.pdf (accessed on 8 December 2020).

- Gelman, Andrew, Jessica Hwang, and Aki Vehtari. 2014. Understanding predictive information criteria for Bayesian models. Statistics and Computing 24: 997–1016.

- Ghysels, Eric, Andrew C. Harvey, and Eric Renault. 1996. Stochastic volatility. In Handbook of Statistics. Edited by G. S. Maddala and Calyampudi Radhakrishna Rao. Amsterdam: Elsevier Science, pp. 119–91.

- Jacquier, Eric, Nicholas G. Polson, and Peter E. Rossi. 1994. Bayesian analysis of stochastic volatility models. Journal of Business & Economic Statistics 12: 371–89.

- Jacquier, Eric, Nicholas G. Polson, and Peter E. Rossi. 2004. Bayesian analysis of stochastic volatility models with fat-tail and correlated errors. Journal of Econometrics 122: 185–212.

- Kastner, Gregor, and Sylvia Frühwirth-Schnatter. 2014. Ancillarity-sufficiency interweaving strategy (ASIS) for boosting MCMC estimation of stochastic volatility models. Computational Statistics & Data Analysis 76: 408–23.

- Liu, Jun S., and Chiara Sabatti. 2000. Generalised Gibbs sampler and multigrid Monte Carlo for Bayesian computation. Biometrika 87: 353–69.

- McCausland, William J., Shirley Miller, and Denis Pelletier. 2011. Simulation smoothing for state-space models: A computational efficiency analysis. Computational Statistics & Data Analysis 55: 199–212.

- Mykland, Per A., and Lan Zhang. 2017. Assessment of Uncertainty in High Frequency Data: The Observed Asymptotic Variance. Econometrica 85: 197–231.

- Nakajima, Jouchi. 2012. Bayesian analysis of generalized autoregressive conditional heteroskedasticity and stochastic volatility: Modeling leverage, jumps and heavy-tails for financial time series. Japanese Economic Review 63: 81–103.

- Nakajima, Jouchi, and Yasuhiro Omori. 2009. Leverage, heavy-tails and correlated jumps in stochastic volatility models. Computational Statistics & Data Analysis 53: 2335–53.

- Nakajima, Jouchi, and Yasuhiro Omori. 2012. Stochastic volatility model with leverage and asymmetrically heavy-tailed error using GH skew Student’s t-distribution. Computational Statistics & Data Analysis 56: 3690–704.

- Omori, Yasuhiro, Siddhartha Chib, Neil Shephard, and Jouchi Nakajima. 2007. Stochastic volatility with leverage: Fast and efficient likelihood inference. Journal of Econometrics 140: 425–49.

- Omori, Yasuhiro, and Toshiaki Watanabe. 2008. Block sampler and posterior mode estimation for asymmetric stochastic volatility models. Computational Statistics & Data Analysis 52: 2892–910.

- Rue, Håvard. 2001. Fast sampling of Gaussian Markov random fields. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 63: 325–38.

- Stroud, Jonathan R., and Michael S. Johannes. 2014. Bayesian modeling and forecasting of 24-hour high-frequency volatility. Journal of the American Statistical Association 109: 1368–84.

- Taylor, Stephen John. 1982. Financial returns modelled by the product of two stochastic processes: A study of the daily sugar prices 1961–1975. In Time Series Analysis: Theory and Practice 1. Edited by O. D. Anderson. Amsterdam: North Holland, pp. 203–26.

- Tsiotas, Georgios. 2012. On generalised asymmetric stochastic volatility models. Computational Statistics & Data Analysis 56: 151–72.

- Virbickaitė, Audronė, Hedibert F. Lopes, M. Concepción Ausín, and Pedro Galeano. 2019. Particle learning for Bayesian semi-parametric stochastic volatility model. Econometric Reviews 38: 1007–23.

- Watanabe, Sumio. 2010. Asymptotic equivalence of Bayes cross validation and widely applicable information criterion in singular learning theory. Journal of Machine Learning Research 11: 3571–94.

- Watanabe, Toshiaki. 2001. On sampling the degree-of-freedom of Student’s-t disturbances. Statistics & Probability Letters 52: 177–81.

- Yu, Yaming, and Xiao-Li Meng. 2011. To center or not to center: That is not the question: An ancillarity-sufficiency interweaving strategy (ASIS) for boosting MCMC efficiency. Journal of Computational and Graphical Statistics 20: 531–70.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).