Abstract

To model multivariate, possibly heavy-tailed data, we compare the multivariate normal model (N) with two versions of the multivariate Student model: the independent multivariate Student (IT) and the uncorrelated multivariate Student (UT). After recalling some facts about these distributions and models, known but scattered in the literature, we prove that the maximum likelihood estimator of the covariance matrix in the UT model is asymptotically biased and propose an unbiased version. We provide implementation details for an iterative reweighted algorithm to compute the maximum likelihood estimators of the parameters of the IT model. We present a simulation study comparing the bias and root mean squared error of the resulting estimators of the regression coefficients and covariance matrix under several scenarios for the data-generating process, correctly specified or not. We propose a graphical tool and a test based on the Mahalanobis distance to guide the choice between the competing models. We also present an application to vectors of financial asset returns.

1. Introduction

Many applications involving models for multivariate data highlight the limitations of the classical multivariate Gaussian model, mainly its inability to model heavy tails. It is then natural to turn to a more flexible family of distributions, for example the multivariate Student distribution.

In one dimension, the generalized Student distribution encompasses the Gaussian distribution as a limit when the number of degrees of freedom (or shape parameter) tends to infinity, and allows for heavier tails when the shape parameter is small. As we will see, a first difficulty in higher dimensions is that there are several kinds of multivariate Student distributions; see, for example, () and, more recently, (). A useful summary of the properties of the multivariate Student distribution used later in this paper, and of its comparison with the multivariate Gaussian, can be found in ().

Before going further, let us mention that it is not easy to obtain a clear overview of the results on Student regression models, for at least three reasons. First, this topic is scattered, with some papers in the statistical literature and others in the econometrics literature, sometimes without cross-referencing. Second, the word “multivariate” is sometimes misleading since, as we will see, the multivariate Student distribution is used to define a univariate regression model. Third, the distinction between the UT and IT models (see below) is not always clearly stated in the papers. Other, minor, sources of confusion are that some authors only fit the distribution without covariates and that some authors treat the degrees of freedom as fixed, whereas others estimate them. Our first purpose here is to guide the reader through this literature and to gather, with a common notation, the results concerning the maximum likelihood estimators of the parameters in the multivariate UT and IT models.

In the present paper, we consider a multivariate dependent vector and a linear regression model with different assumptions on the error term distribution. The most common and convenient assumption is the Gaussian distribution. For a Gaussian vector, the assumption of independent coordinates is equivalent to the assumption of uncorrelated coordinates. Such an equivalence no longer holds for a multivariate Student distribution. We thus consider two cases: uncorrelated (UT) Student error vectors on the one hand and independent Student (IT) error vectors on the other hand.

The purpose of this paper is to contribute to the study of the UT and IT models and to their comparison. First, for the UT model, we extend to the multivariate case the results of () for the derivation of the maximum likelihood estimators and Zellner’s formula ( ()) for the bias of the covariance matrix estimator, and we prove that this bias does not vanish asymptotically. For the multivariate IT model, in the same spirit as (), we provide details for the implementation of an iterative reweighted algorithm to compute the maximum likelihood estimators of the parameters. We devise a simulation study to measure the impact of misspecification on the bias, variance, and mean squared error of the parameter estimates under several data-generating processes (Gaussian, UT, and IT) and try to answer the question: what are the consequences of a wrong specification? Finally, we introduce a new procedure for model selection based on the knowledge of the distribution of the Mahalanobis distances under the different data-generating processes (DGP).

One application attracted our attention in the finance literature. The work in () identified the Student distribution with between three and five degrees of freedom, concentrated around four, as the typical distribution for the log-returns of world stock indices. They embedded the Student t in the class of generalized hyperbolic distributions, itself a subclass of the normal/independent family. For bivariate returns, the work in () compared a multivariate Student IT model with an alternative model obtained by a more complex mixing representation, from the point of view of asymptotic tail dependence. The work in () stressed that the choice of distribution matters when optimizing a portfolio. They found that the Student UT model performs best in the class of symmetric generalized hyperbolic distributions. The work in () advocated using a multivariate IT model, with a few fixed values of the degrees of freedom parameter, for fitting the joint distribution of stock returns and showed that this model outperforms the multivariate Gaussian.

In Section 2, after recalling the univariate results, we extend the results of () for the derivation of the maximum likelihood estimators and their properties in the UT model and propose an iterative implementation for the IT model. We present the results of the simulation study in Section 3 and the model selection strategy in Section 4, using a toy example and a dataset from finance. Section 5 summarizes the findings and gives recommendations.

2. Multivariate Regression Models

2.1. Literature Review

In order to define a Student regression model, even in the univariate case (single dependent variable), one needs to use the multivariate Student distribution to describe the joint distribution of the vector of observations for the set of statistical units. There are mainly two options, which were described in () for the case of univariate regression. Indeed, the equivalence between independence and uncorrelatedness of the components of a Gaussian vector no longer holds for a multivariate Student vector. One option, which we will call the IT model (for independent t-distribution) in the sequel, considers that the components of the random disturbance vector of the regression model are independent with the same marginal Student distribution. The second option, which we will call the UT model (for uncorrelated t-distribution), postulates a joint multivariate Student distribution for the vector of disturbances. Note that in both models, the marginal distribution of each component is still a univariate Student distribution.

The work in () introduced a univariate Student regression model of the type UT with known degrees of freedom and studied the corresponding maximum likelihood and Bayesian estimators (with some adapted priors). The work in () considered the case of univariate Student regression with the UT model and with unknown degrees of freedom and derived an estimator of the degrees of freedom and subsequent estimators of the other parameters. However, () showed that this estimator was not consistent. Using one possible representation of the multivariate Student distribution, () embedded univariate Student regression with the UT model in a larger family of regression models (with normal/independent error distributions) and developed EM algorithms to compute their maximum likelihood estimates, as in ().

In the framework of the spherical error distribution, which includes the Student error model as a special case, the work in () proved an extension to the multivariate case of Zellner’s result stating that inference about the parameters corresponds closely to that under normal theory. Motivated by a financial application, the work in () used a multivariate UT Student regression model with moment estimators instead of maximum likelihood and allowing the degrees of freedom to be unknown.

The univariate IT model was introduced in () and compared to the UT model in ().

Concerning multivariate IT Student distributions, there was first a collection of results or applications for the case without regressors. The work in () used a representation of the multivariate IT Student distribution to derive an algorithm of the EM type for computing the maximum likelihood parameter estimators. They used the framework of normal mixture distributions, in which the Student distribution can be expressed as a combination of a Gaussian random variable and an inverse gamma random variable. More recently, the work in () proposed a more robust extension, replacing maximum likelihood by a kind of M-estimation method based on the minimization of a q-entropy criterion. For the multivariate Student IT model, the work in () derived the normal equations for the maximum likelihood estimators and their asymptotic properties with known degrees of freedom, in a framework that encompasses our multivariate Student regression case. The work in () illustrated this multivariate IT model on several examples. The work in () considered the framework of normal/independent error distributions (also known as normal variance mixtures) and derived the EM algorithm for the maximum likelihood estimators in a model with covariates. The works in () and () developed extensions of the EM algorithm for the multivariate IT model with known or unknown degrees of freedom, with or without covariates, and with or without missing data. The work in () fitted a multivariate IT distribution to multiparty electoral data. The work in () drew attention to the fact that maximum likelihood inference can encounter problems of unbounded likelihood when the number of degrees of freedom is unknown and has to be estimated. Before using the multivariate Student distribution, it is wise to read (), which explains some traps to be avoided.
One difficulty indeed is to be aware that some authors parametrize the multivariate Student distribution using the covariance matrix, while others use the scatter matrix, sometimes with the same notation for either one.

We consider the following version of the p-variate Student distribution, denoted by $t_p(\mu, \Sigma, \nu)$, with $\mu$ the p-vector of means, $\Sigma$ the $p \times p$ covariance matrix, and $\nu > 2$ the degrees of freedom. It is defined, for a p-vector $y$, by the probability density function:

$$f(y) = \frac{\Gamma\left(\frac{\nu+p}{2}\right)}{\Gamma\left(\frac{\nu}{2}\right)\left[\pi(\nu-2)\right]^{p/2}|\Sigma|^{1/2}}\left[1 + \frac{(y-\mu)^\top\Sigma^{-1}(y-\mu)}{\nu-2}\right]^{-\frac{\nu+p}{2}}, \qquad (1)$$

where $^\top$ denotes the transpose operator and $\Gamma$ is the usual Gamma function.

Note that the assumption $\nu > 2$ implies the existence of the first two moments of the distribution and that the above density function is parametrized in terms of the covariance matrix. In most of the literature on multivariate Student distributions, the density is rather parametrized as a function of the scatter matrix $\frac{\nu-2}{\nu}\Sigma$. Using the covariance matrix parametrization facilitates the comparison with the Gaussian distribution. We first recall some results in the univariate regression context.
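As a sanity check of the covariance parametrization, the NumPy sketch below (our own illustration, not the authors' code; function names and parameter values are hypothetical) evaluates the log-density recalled above and verifies two things: for a very large $\nu$ it approaches the Gaussian log-density, and draws built from the usual mixture representation, with scatter matrix $\frac{\nu-2}{\nu}\Sigma$ divided by $\sqrt{W/\nu}$ where $W \sim \chi^2_\nu$, have a covariance matrix close to $\Sigma$.

```python
import math
import numpy as np

def mvt_logpdf_cov(y, mu, Sigma, nu):
    """Log-density of the p-variate Student distribution parametrized by its
    covariance matrix Sigma (assumption: nu > 2, covariance parametrization)."""
    y, mu = np.asarray(y, float), np.asarray(mu, float)
    p = mu.size
    diff = y - mu
    quad = diff @ np.linalg.solve(Sigma, diff)          # (y-mu)' Sigma^{-1} (y-mu)
    _, logdet = np.linalg.slogdet(Sigma)
    return (math.lgamma((nu + p) / 2) - math.lgamma(nu / 2)
            - 0.5 * p * math.log(math.pi * (nu - 2)) - 0.5 * logdet
            - 0.5 * (nu + p) * math.log1p(quad / (nu - 2)))

def rmvt_cov(n, mu, Sigma, nu, rng):
    """Draw n vectors with covariance Sigma: Gaussian draws with scatter matrix
    (nu-2)/nu * Sigma, divided by sqrt(W/nu) with one W ~ chi-square(nu) per draw."""
    Psi = (nu - 2) / nu * np.asarray(Sigma, float)
    Z = rng.multivariate_normal(np.zeros(len(mu)), Psi, size=n)
    W = rng.chisquare(nu, size=n)
    return np.asarray(mu, float) + Z / np.sqrt(W / nu)[:, None]

# Usage: the density approaches the Gaussian one for a very large nu ...
Sigma = np.array([[1.0, 0.5], [0.5, 2.0]])
y, mu = np.array([0.3, -1.0]), np.zeros(2)
diff = y - mu
gauss = (-np.log(2 * np.pi) - 0.5 * np.linalg.slogdet(Sigma)[1]
         - 0.5 * diff @ np.linalg.solve(Sigma, diff))
student_large_nu = mvt_logpdf_cov(y, mu, Sigma, nu=1e6)

# ... and simulated draws with nu = 6 have a covariance matrix close to Sigma.
draws = rmvt_cov(200_000, mu, Sigma, nu=6.0, rng=np.random.default_rng(1))
emp_cov = np.cov(draws, rowvar=False)
```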

2.2. Univariate Regression Case Reminder

In the univariate regression case and for a sample of size n, we have a one-dimensional dependent variable $y_i$, $i = 1, \ldots, n$, whose values are stacked in a vector $y$, and K explanatory variables defining an $n \times K$ design matrix $X$ including the constant.

The regression model is written as $y = X\beta + \varepsilon$, where $\beta$ is a K-dimensional vector of parameters and the error term $\varepsilon$ is an n-dimensional vector. If we consider that the design matrix $X$ is fixed with rank K, or look at the distribution of $y$ conditional on $X$, the usual assumptions are the following. The errors $\varepsilon_i$, $i = 1, \ldots, n$, are independent and identically distributed (i.i.d.) with expectation zero and equal variance $\sigma^2$. In this context, it is well known that the least squares estimator of $\beta$ is equal to:

$$\hat\beta = (X^\top X)^{-1}X^\top y, \qquad (2)$$

while the classical estimator of $\sigma^2$ is $s^2 = \hat\varepsilon^\top\hat\varepsilon/(n-K)$, where $\hat\varepsilon = y - X\hat\beta$. These estimators are unbiased. In the case of a Gaussian error distribution, $\hat\beta$ coincides with the maximum likelihood estimator of $\beta$, while the maximum likelihood estimator of $\sigma^2$ is equal to $s^2$ multiplied by $(n-K)/n$ and is only asymptotically unbiased. In the Gaussian case, the $\varepsilon_i$ being independent is equivalent to their being uncorrelated. However, this property no longer holds for a Student distribution, so one should distinguish the case of uncorrelated errors from the case of independent errors. The case where the errors $\varepsilon_i$, $i = 1, \ldots, n$, follow a joint n-dimensional Student distribution with diagonal covariance matrix $\sigma^2 I_n$ (equal variances) is called the UT model: its coordinates are uncorrelated, but not independent. Interestingly, the maximum likelihood method for the UT model with known degrees of freedom leads to the least squares estimator (2) of $\beta$ ( ()). This property holds for more general distributions, as long as the likelihood is a decreasing function of $(y - X\beta)^\top(y - X\beta)$. Concerning the error variance, with the covariance parametrization (1) and $\nu$ known, the maximum likelihood estimator is $\hat\sigma^2_{UT} = \frac{\nu}{\nu-2}\,\frac{\hat\varepsilon^\top\hat\varepsilon}{n}$, and it is biased even asymptotically (). For the independent case, we assume that the errors $\varepsilon_i$, $i = 1, \ldots, n$, are i.i.d. with a univariate Student distribution with known degrees of freedom. The maximum likelihood estimators belong to the class of M-estimators, which are studied in detail in Chapter 7 of (). These estimators are defined through implicit equations and can be computed using an iterative reweighted algorithm.
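A minimal NumPy sketch of these univariate quantities, on simulated data with hypothetical parameter values: it computes the least squares estimator (2), the unbiased $s^2$, the Gaussian maximum likelihood estimator of $\sigma^2$, and the UT maximum likelihood estimator of $\sigma^2$, which under the covariance parametrization and with $\nu$ known we derive as $\frac{\nu}{\nu-2}\,\hat\varepsilon^\top\hat\varepsilon/n$ (our own computation, stated as an assumption). The errors are drawn i.i.d. here only to have some data; the formulas do not depend on how the data were generated.

```python
import numpy as np

rng = np.random.default_rng(0)
n, K, nu = 200, 2, 5.0                                    # hypothetical sizes and degrees of freedom
X = np.column_stack([np.ones(n), rng.normal(size=n)])     # design matrix with a constant
beta_true = np.array([1.0, 2.0])                          # hypothetical coefficients
y = X @ beta_true + rng.standard_t(nu, size=n)

# Least squares estimator (2): also the Gaussian and the UT maximum likelihood estimator
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta_hat
rss = resid @ resid

s2 = rss / (n - K)                        # unbiased estimator of sigma^2
sigma2_gauss_ml = rss / n                 # Gaussian MLE = s2 * (n - K) / n
# assumption (our derivation): UT MLE under the covariance parametrization, nu known
sigma2_ut_ml = nu / (nu - 2) * rss / n
```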

In what follows, we consider the case of a multivariate dependent variable and propose to gather and complete the results from the literature. As we will see, the results derived in the multivariate case are very similar to their univariate counterpart. In particular, the maximum likelihood estimator of the error covariance matrix is biased for the uncorrelated Student model, while there is a need to define an iterative algorithm for the independent Student model.

2.3. The Multivariate Regression Model

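Let us consider a sample of size n, and for $i = 1, \ldots, n$, let us denote the L-dimensional dependent vector by:

$$Y_i = (y_{i1}, \ldots, y_{iL})^\top.$$

For K explanatory variables, the design matrix $X_i$ is of size $L \times LK$ and is given by:

$$X_i = I_L \otimes x_i^\top,$$

for $i = 1, \ldots, n$, with the K-vector $x_i = (x_{i1}, \ldots, x_{iK})^\top$, $I_L$ the identity matrix with dimension L, and ⊗ the usual Kronecker product. The parameter of interest is an LK-vector given by:

$$\beta = (\beta_1^\top, \ldots, \beta_L^\top)^\top,$$

where $\beta_l = (\beta_{l1}, \ldots, \beta_{lK})^\top$, for $l = 1, \ldots, L$, and the L-vector of errors is denoted by:

$$\varepsilon_i = (\varepsilon_{i1}, \ldots, \varepsilon_{iL})^\top,$$

for $i = 1, \ldots, n$. We consider the linear model:

$$Y_i = X_i\beta + \varepsilon_i, \qquad (3)$$

with $E(\varepsilon_i) = 0$ and $\mathrm{Var}(\varepsilon_i) = \Sigma$. Using matrix notations, we can write Model (3) as:

$$Y = X\beta + \varepsilon, \qquad (4)$$

with the nL-vectors:

$$Y = (Y_1^\top, \ldots, Y_n^\top)^\top, \qquad \varepsilon = (\varepsilon_1^\top, \ldots, \varepsilon_n^\top)^\top,$$

and the $nL \times LK$ matrix:

$$X = (X_1^\top, \ldots, X_n^\top)^\top.$$

In what follows, we make different assumptions on the distribution of $\varepsilon$ and recall (for Gaussian and IT) or derive (for UT) the maximum likelihood estimators of the parameter $\beta$ and of the common covariance matrix $\Sigma$ of the $\varepsilon_i$.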
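To make the stacking concrete, the following NumPy sketch (an illustration with hypothetical dimensions, assuming the block form $X_i = I_L \otimes x_i^\top$) builds the $nL \times LK$ design and checks numerically that generalized least squares with any covariance $I_n \otimes \Sigma$ coincides with equation-by-equation least squares, because all equations share the same regressors. Here $\beta$ stacks the K coefficients of each equation, which is the column-major vectorization of the $K \times L$ coefficient matrix. The identity is algebraic, so arbitrary responses suffice.

```python
import numpy as np

def stacked_design(x, L):
    """Stack the L x LK blocks X_i = I_L kron x_i' into an (nL) x (LK) matrix."""
    return np.vstack([np.kron(np.eye(L), xi[None, :]) for xi in x])

rng = np.random.default_rng(2)
n, K, L = 30, 2, 3                                        # hypothetical dimensions
x = np.column_stack([np.ones(n), rng.normal(size=n)])     # row i is x_i'
Ymat = rng.normal(size=(n, L))                            # row i is Y_i' (arbitrary data)
Sigma = np.array([[2.0, 0.5, 0.1],
                  [0.5, 1.0, 0.3],
                  [0.1, 0.3, 1.5]])                       # hypothetical covariance matrix

Xs = stacked_design(x, L)                                 # shape (n*L, L*K)
Ys = Ymat.reshape(-1)                                     # (Y_1', ..., Y_n')'
Omega_inv = np.kron(np.eye(n), np.linalg.inv(Sigma))      # inverse of I_n kron Sigma
beta_gls = np.linalg.solve(Xs.T @ Omega_inv @ Xs, Xs.T @ Omega_inv @ Ys)

B_ols, *_ = np.linalg.lstsq(x, Ymat, rcond=None)          # K x L, column l is beta_l
beta_ols = B_ols.reshape(-1, order="F")                   # (beta_1', ..., beta_L')'
```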

2.4. Multivariate Normal Error Vector

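Let us first consider Model (4) with independent and identically distributed error vectors $\varepsilon_i$, $i = 1, \ldots, n$, following a multivariate normal distribution with an L-vector of means equal to zero and an $L \times L$ covariance matrix $\Sigma$. This model is denoted by N, and the subscript N is used to denote the error terms $\varepsilon_{N,i}$, $i = 1, \ldots, n$, and the parameters $\beta_N$ and $\Sigma_N$ of the model. The maximum likelihood estimators of $\beta_N$ and $\Sigma_N$ are:

$$\hat\beta_N = (X^\top X)^{-1}X^\top Y, \qquad \hat\Sigma_N = \frac{1}{n}\sum_{i=1}^n \hat\varepsilon_{N,i}\,\hat\varepsilon_{N,i}^\top,$$

where $\hat\varepsilon_{N,i} = Y_i - X_i\hat\beta_N$ (see, e.g., Theorem 8.4 from ()).

The estimator $\hat\beta_N$ is an unbiased estimator of $\beta_N$, while the bias of $\hat\Sigma_N$ is equal to $-\frac{K}{n}\Sigma_N$ and tends to zero when n tends to infinity (see, e.g., Theorems 8.1 and 8.2 from ()).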
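The bias of the Gaussian covariance estimator comes from $E\big(\sum_i \hat\varepsilon_{N,i}\hat\varepsilon_{N,i}^\top\big) = (n-K)\Sigma$, so that $E(\hat\Sigma_N) = \frac{n-K}{n}\Sigma$. The small Monte Carlo sketch below (NumPy; sizes, $\Sigma$ and the number of replications are hypothetical) checks this. With $X_i = I_L \otimes x_i^\top$, the matrix of residuals is $M E$ with $M = I_n - x(x^\top x)^{-1}x^\top$, so the regression coefficients do not need to be simulated.

```python
import numpy as np

rng = np.random.default_rng(3)
n, K, L, R = 20, 3, 2, 20_000                    # small n makes the bias visible
Sigma = np.array([[1.0, 0.4], [0.4, 2.0]])       # hypothetical covariance matrix
x = np.column_stack([np.ones(n), rng.normal(size=(n, K - 1))])
M = np.eye(n) - x @ np.linalg.solve(x.T @ x, x.T)            # residual-maker matrix

E = rng.multivariate_normal(np.zeros(L), Sigma, size=(R, n))  # R x n x L Gaussian errors
resid = M @ E                                                  # broadcasts over replications
Sigma_hat = np.einsum("rni,rnj->rij", resid, resid) / n        # Gaussian MLE per replication
mean_Sigma_hat = Sigma_hat.mean(axis=0)
expected = (n - K) / n * Sigma                                 # i.e. a bias of -(K/n) * Sigma
```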

For data such as financial data, it is well known that the Gaussian distribution does not fit the error term well. Student distributions are known to be more appropriate because they have heavier tails than the Gaussian. As in the univariate case, for Student distributions, the independence of coordinates is not equivalent to their uncorrelatedness, and we consider below two types of Student distributions for the error term. In Section 2.5, the error vector $\varepsilon$ is assumed to follow a Student distribution with nL dimensions and the block diagonal covariance matrix $I_n \otimes \Sigma$. More precisely, we assume that the error vectors $\varepsilon_i$, $i = 1, \ldots, n$, are identically distributed and uncorrelated but not independent. In Section 2.6, however, we consider independent and identically distributed error vectors $\varepsilon_i$, $i = 1, \ldots, n$, with an L-dimensional Student distribution.

2.5. Uncorrelated Multivariate Student (UT) Error Vector

Let us consider Model (4) with uncorrelated and identically distributed error vectors $\varepsilon_i$, $i = 1, \ldots, n$, such that the nL-vector $\varepsilon$ follows a multivariate Student distribution with known degrees of freedom $\nu$ and covariance matrix $I_n \otimes \Sigma$. The matrix $\Sigma$ is the common covariance matrix of the $\varepsilon_i$, $i = 1, \ldots, n$. This model is denoted by UT, and the subscript UT is used to denote the error terms $\varepsilon_{UT,i}$, $i = 1, \ldots, n$, and the parameters $\beta_{UT}$, $\Sigma_{UT}$, and $\nu$ of the model. This model generalizes the model proposed by () to the case of multivariate $Y_i$'s. We derive the maximum likelihood estimators of $\beta_{UT}$ and $\Sigma_{UT}$ in Proposition 1 and give the bias of the covariance estimator in Proposition 2. The proofs of the propositions are given in Appendix A.

Proposition 1.

The maximum likelihood estimators of $\beta_{UT}$ and $\Sigma_{UT}$ are given by:

where .
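The form of these estimators can be sketched as follows (our own short derivation, not the proof of Appendix A), assuming the covariance parametrization of the Student density of Section 2.1, $X_i = I_L \otimes x_i^\top$, and $\nu$ known. Writing $S(\beta) = \sum_{i=1}^n (Y_i - X_i\beta)(Y_i - X_i\beta)^\top$, the UT log-likelihood is, up to a constant,

$$\ell(\beta, \Sigma) = -\frac{n}{2}\log|\Sigma| - \frac{\nu + nL}{2}\log\left(1 + \frac{\mathrm{tr}\{\Sigma^{-1}S(\beta)\}}{\nu - 2}\right).$$

For any $\Sigma$, $\ell$ decreases with $\mathrm{tr}\{\Sigma^{-1}S(\beta)\}$, which is minimized by generalized least squares, itself equal to least squares here because all equations share the same regressors; hence $\hat\beta_{UT} = \hat\beta_N$. Setting the derivative with respect to $\Sigma^{-1}$ to zero gives

$$n\,\Sigma = \frac{(\nu + nL)\,S}{\nu - 2 + \mathrm{tr}(\Sigma^{-1}S)},$$

so $\Sigma = cS$ with $\mathrm{tr}(\Sigma^{-1}S) = L/c$, hence $c\,n(\nu - 2) + nL = \nu + nL$, that is,

$$\hat\Sigma_{UT} = \frac{\nu}{\nu - 2}\,\frac{1}{n}\sum_{i=1}^n \hat\varepsilon_i\hat\varepsilon_i^\top, \qquad \hat\varepsilon_i = Y_i - X_i\hat\beta_{UT}.$$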

The next proposition gives the bias of the maximum likelihood estimators and generalizes Zellner’s result ( (), p. 402) to the multivariate UT model. The maximum likelihood estimator of $\beta_{UT}$ coincides with the least squares and method of moments estimators and is unbiased. This is no longer the case for the maximum likelihood estimator of $\Sigma_{UT}$, which is biased even asymptotically. This gives an example of a maximum likelihood estimator that is not asymptotically unbiased in a context where the random variables are not independent. It illustrates that the independence assumption is crucial to derive the usual properties of maximum likelihood estimators. Note that the method of moments estimator is a consistent estimator of (see ()).

Proposition 2.

The estimator $\hat\beta_{UT}$ is unbiased for $\beta_{UT}$. The estimator $\hat\Sigma_{UT}$ is biased for $\Sigma_{UT}$ even asymptotically. More precisely,

A consequence of Proposition 2 is that an asymptotically unbiased estimator of is given by .
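The asymptotic bias can be illustrated by simulation. The NumPy sketch below relies on our own derivation under the covariance parametrization, namely that with $\nu$ known the UT maximum likelihood estimator of $\Sigma$ equals $\frac{\nu}{\nu-2}\,\frac{1}{n}\sum_i\hat\varepsilon_i\hat\varepsilon_i^\top$, so that its expectation is $\frac{\nu}{\nu-2}\,\frac{n-K}{n}\,\Sigma$; the corrected estimator in the code, $\frac{\nu-2}{\nu}$ times the UT estimator, is one asymptotically unbiased choice under that assumption. Sizes, $\Sigma$, and $\nu = 10$ (which keeps the Monte Carlo variance moderate) are hypothetical. Under the UT DGP a single chi-square mixing variable is shared by the whole sample, so the bias only appears on average over many replicated samples.

```python
import numpy as np

rng = np.random.default_rng(4)
n, K, L, R, nu = 50, 2, 2, 4000, 10.0                 # hypothetical settings
Sigma = np.array([[1.0, 0.3], [0.3, 0.5]])            # hypothetical covariance matrix
x = np.column_stack([np.ones(n), rng.normal(size=n)])
M = np.eye(n) - x @ np.linalg.solve(x.T @ x, x.T)     # residual-maker matrix

Psi = (nu - 2) / nu * Sigma                            # scatter matrix so that Cov(eps_i) = Sigma
Z = rng.multivariate_normal(np.zeros(L), Psi, size=(R, n))
W = rng.chisquare(nu, size=R)                          # ONE mixing variable per replicated sample
eps = Z / np.sqrt(W / nu)[:, None, None]               # UT errors: uncorrelated, not independent
resid = M @ eps
S = np.einsum("rni,rnj->rij", resid, resid)

# assumption (our derivation): UT MLE under the covariance parametrization
Sigma_ut = nu / (nu - 2) * S / n
Sigma_corrected = (nu - 2) / nu * Sigma_ut             # one asymptotically unbiased version

ratio = Sigma_ut.mean(axis=0) / Sigma                  # elementwise, near nu/(nu-2)*(n-K)/n
target = nu / (nu - 2) * (n - K) / n                   # equals 1.2 for these settings
```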

2.6. Independent Multivariate Student Error Vector

Let us consider Model (4), using the notations of Section 2.3, with i.i.d. error vectors $\varepsilon_i$, $i = 1, \ldots, n$, following an L-dimensional Student distribution with mean zero, covariance matrix $\Sigma$, and known degrees of freedom $\nu$. We denote this model by IT and the parameters of the model by $\beta_{IT}$ and $\Sigma_{IT}$. The IT model is a particular case of () where the B matrix in Expression (2.1) in () is equal to zero.

Following (), we derive the maximum likelihood estimators for the IT model.

Proposition 3.

The maximum likelihood estimators of $\beta_{IT}$ and $\Sigma_{IT}$ in the IT regression model satisfy the following implicit equations:

These estimators are consistent estimators of $\beta_{IT}$ and $\Sigma_{IT}$ (see Theorem 3.2 in ()). In order to compute them, we propose to implement the following iterative reweighted algorithm, in the same spirit as in () for the univariate case (see also ()).

Step 0: Let:

Step k→ Step (), :

The process is iterated until convergence. Note that this algorithm is given in detail in Section 7.8 of () for a general class of univariate regression M-estimators. It is also sometimes called IRLS for iteratively-reweighted least squares and can be seen as a particular case of the EM algorithm ( ()).
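One possible implementation of this iterative reweighted algorithm is sketched below in NumPy. It is our own derivation under the covariance parametrization of Section 2.1 and may differ in details from the steps above. Setting the score of the IT log-likelihood to zero gives the fixed-point equations $\beta = \big(\sum_i w_i X_i^\top\Sigma^{-1}X_i\big)^{-1}\sum_i w_i X_i^\top\Sigma^{-1}Y_i$ and $\Sigma = \frac{1}{n}\sum_i w_i\hat\varepsilon_i\hat\varepsilon_i^\top$, with weights $w_i = \frac{\nu+L}{\nu-2+\hat\varepsilon_i^\top\Sigma^{-1}\hat\varepsilon_i}$. With $X_i = I_L \otimes x_i^\top$ and weights common to all equations, the $\beta$-step reduces to weighted least squares equation by equation. The function `it_irls`, its arguments, and the simulated data are hypothetical.

```python
import numpy as np

def it_irls(Y, x, nu, tol=1e-8, max_iter=1000):
    """Iteratively reweighted ML fit of the multivariate IT regression (our sketch).

    Y: (n, L) responses, x: (n, K) regressors incl. constant, nu > 2 known.
    Returns the K x L coefficient matrix (column l = beta_l), Sigma, iterations used."""
    n, L = Y.shape
    # Step 0: Gaussian maximum likelihood (least squares) starting values
    B = np.linalg.lstsq(x, Y, rcond=None)[0]
    E = Y - x @ B
    Sigma = E.T @ E / n
    for k in range(1, max_iter + 1):
        # weights (assumption: covariance parametrization); large distances get small weights
        d2 = np.einsum("ij,jk,ik->i", E, np.linalg.inv(Sigma), E)
        w = (nu + L) / (nu - 2 + d2)
        # beta-step: weighted least squares with the same weights for all L equations
        xw = x * w[:, None]
        B_new = np.linalg.solve(x.T @ xw, xw.T @ Y)
        # Sigma-step with the updated residuals
        E = Y - x @ B_new
        Sigma_new = (E * w[:, None]).T @ E / n
        change = max(np.abs(B_new - B).max(), np.abs(Sigma_new - Sigma).max())
        B, Sigma = B_new, Sigma_new
        if change < tol:
            break
    return B, Sigma, k

# Usage on simulated IT data with hypothetical parameters.
rng = np.random.default_rng(5)
n, nu = 3000, 4.0
x = np.column_stack([np.ones(n), rng.normal(size=n)])
B_true = np.array([[1.0, -1.0], [2.0, 0.5]])          # hypothetical K x L coefficients
Sigma_true = np.array([[1.0, 0.3], [0.3, 2.0]])       # hypothetical covariance matrix
Z = rng.multivariate_normal(np.zeros(2), (nu - 2) / nu * Sigma_true, size=n)
Y = x @ B_true + Z / np.sqrt(rng.chisquare(nu, size=n) / nu)[:, None]
B_hat, Sigma_hat, n_iter = it_irls(Y, x, nu)
```

Updating $\beta$ and then $\Sigma$ with the same weights is the usual expectation/conditional-maximization scheme for the Student distribution, so each iteration should not decrease the likelihood.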

Table 1 gathers the likelihoods and thus summarizes the three models of interest.

Table 1.

Distribution of the error vector in the Gaussian, UT, and IT models.
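In compact form, and in our own notation, with $t_p(\mu, \Sigma, \nu)$ denoting the p-variate Student distribution with mean $\mu$, covariance matrix $\Sigma$, and $\nu$ degrees of freedom, the three error specifications compared in Table 1 can be summarized as:

$$\text{N: } \varepsilon_i \overset{\text{i.i.d.}}{\sim} N_L(0, \Sigma), \qquad \text{UT: } \varepsilon \sim t_{nL}(0,\, I_n \otimes \Sigma,\, \nu), \qquad \text{IT: } \varepsilon_i \overset{\text{i.i.d.}}{\sim} t_L(0, \Sigma, \nu).$$

In all three models $\mathrm{Var}(\varepsilon) = I_n \otimes \Sigma$; they differ in their tails and in the dependence between the $\varepsilon_i$, which are independent under N and IT but only uncorrelated under UT.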

3. Simulation Study

3.1. Design

This study aims at comparing the properties of the estimators of and as defined in the previous section for the multivariate Gaussian (N), the uncorrelated multivariate Student (UT), and the independent multivariate Student (IT) error distributions, under several scenarios for the DGP. Note that for the UT model, we used the asymptotically unbiased estimator to estimate . We considered a variety of degrees of freedom for the Student IT and UT models with a focus on values between three and five. We used the function rmvt from the R package mvnfast to simulate the Student distributions. For a sample size and a number of replications N = 10,000, we simulated an explanatory variable following a Gaussian distribution . The parameter vector and the covariance matrix are respectively chosen to be:

Note that similar results are obtained with other choices of parameters.
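The three DGP differ only in how a chi-square mixing variable $W$ enters: not at all for N, one per observation for IT, and one for the whole sample for UT. The sketch below is our own NumPy illustration, not the authors' code (they used rmvt from the R package mvnfast), and the values of n, the coefficients, and $\Sigma$ are hypothetical. It scales the Gaussian draws by the scatter matrix $\frac{\nu-2}{\nu}\Sigma$ so that each row has covariance $\Sigma$.

```python
import numpy as np

def simulate_errors(dgp, n, Sigma, nu=None, rng=None):
    """Draw an (n, L) matrix of errors, each row with covariance Sigma, under the
    'N', 'UT' or 'IT' data-generating process (nu > 2 for the Student cases)."""
    rng = np.random.default_rng() if rng is None else rng
    L = Sigma.shape[0]
    if dgp == "N":
        return rng.multivariate_normal(np.zeros(L), Sigma, size=n)
    Z = rng.multivariate_normal(np.zeros(L), (nu - 2) / nu * Sigma, size=n)
    if dgp == "IT":
        W = rng.chisquare(nu, size=n)[:, None]   # one mixing variable per row: independent rows
    elif dgp == "UT":
        W = rng.chisquare(nu)                    # shared by all rows: uncorrelated, not independent
    else:
        raise ValueError("dgp must be 'N', 'UT' or 'IT'")
    return Z / np.sqrt(W / nu)

# Usage with hypothetical settings: a constant plus one Gaussian regressor, L = 2.
rng = np.random.default_rng(6)
n = 100
x = np.column_stack([np.ones(n), rng.normal(size=n)])
B = np.array([[1.0, 2.0], [0.5, -0.5]])          # hypothetical K x L coefficients
Sigma = np.array([[1.0, 0.5], [0.5, 1.0]])       # hypothetical covariance matrix
datasets = {}
for dgp in ("N", "UT", "IT"):
    datasets[dgp] = x @ B + simulate_errors(dgp, n, Sigma, nu=3.0, rng=rng)

# Large-sample check of the IT covariance (nu = 8 keeps the fourth moment finite).
big = simulate_errors("IT", 200_000, Sigma, nu=8.0, rng=rng)
```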

For each DGP, we calculate a number of Monte Carlo performance measures of the estimators proposed in Section 2. The performances are measured by the Monte Carlo relative bias (RB) and the mean squared error (MSE), which are defined for an estimator of a parameter by:

We also compute a relative root mean squared error (RRMSE) with respect to a baseline estimator as:

In our case, the baseline estimator is the maximum likelihood estimator (MLE) corresponding to the DGP. For example, in Table 2, the RRMSE of the IT estimator for the Gaussian DGP is the square root of the ratio of the MSE of the IT estimator, with the given degrees of freedom, to the MSE of the Gaussian MLE. Note that when the estimator is the baseline estimator itself, its RRMSE is equal to one.
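A small sketch of these Monte Carlo measures in NumPy, assuming the usual definitions RB $= (\bar{\hat\theta} - \theta)/\theta$ and MSE $= \frac{1}{N}\sum_r(\hat\theta_r - \theta)^2$, and taking the RRMSE as the square root of the ratio of the MSE to that of the baseline estimator, as its name indicates. The arrays hold one row per replication and one column per parameter; the toy numbers are made up.

```python
import numpy as np

def relative_bias(est, theta):
    """est: (N, p) estimates over N replications; theta: (p,) true values."""
    return (est.mean(axis=0) - theta) / theta

def mse(est, theta):
    return ((est - theta) ** 2).mean(axis=0)

def rrmse(est, baseline_est, theta):
    """Relative root MSE with respect to the baseline (here: the MLE of the DGP)."""
    return np.sqrt(mse(est, theta) / mse(baseline_est, theta))

# Toy usage with made-up estimates of a single parameter theta = 1 (N = 3 replications).
theta = np.array([1.0])
est = np.array([[1.1], [0.9], [1.2]])
baseline = np.array([[1.05], [0.95], [1.0]])
rb = relative_bias(est, theta)
m = mse(est, theta)
r = rrmse(est, baseline, theta)
```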

Table 2.

Relative bias and relative root mean squared error of the estimators of () for the corresponding DGP (Gaussian, UT, and IT).

3.2. Estimators of the Parameters

Table 3 reports the bias and the MSE of the Gaussian MLE , the UT MLE (), and the IT MLE () when the model is well specified, i.e., under the corresponding DGP. The bias and MSE of the estimators of are small and comparable under the Gaussian and the UT DGP, but smaller under the IT DGP. Note that, in our implementation, the results of the algorithm for the IT estimators are very similar to those obtained with the function heavyLm from the R package heavy.

Table 3.

Bias and MSE of the maximum likelihood estimators of for the corresponding DGP (Gaussian, UT, and IT).

In Table 2, we turn to misspecification and report the corresponding relative values RB and RRMSE of the same estimators and the same DGP as in Table 3, for all possible combinations of DGP and estimation method. The results indicate that the RB of are all very small. If the DGP is Gaussian and the estimator is IT, the RRMSE of coordinates of is about . However, if the DGP is IT and the estimator is Gaussian, the RRMSE of coordinates of is higher (from 1.46 to 1.48). Hence, for the Gaussian DGP, we do not lose much efficiency by using the IT estimator with three degrees of freedom. Conversely, we lose much more efficiency when using for the IT DGP with three degrees of freedom.

In order to consider more degrees of freedom (3, 4, and 5), we now drop the bias and focus on the RRMSE. Table 4 indicates that the RRMSE of is very similar and close to one, with a maximum of , except for the case of the N estimator under the IT DGP, where it can reach . The work in () provided theoretical asymptotic efficiencies of the Student versus the Gaussian estimators, expressed as a ratio of asymptotic variances, and the values obtained in Table 2 are very similar to these asymptotic values.
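For the regression coefficients, such a variance ratio can be sketched from the Fisher information of the location parameter of the multivariate Student distribution (our own computation, not necessarily the expression of the cited work). Under an IT DGP, both asymptotic covariance matrices are proportional to $\Sigma \otimes \big(\sum_i x_i x_i^\top\big)^{-1}$, with factor 1 for the Gaussian estimator and $\frac{\nu-2}{\nu}\cdot\frac{\nu+L+2}{\nu+L}$ for the IT maximum likelihood estimator, so the ratio is $\frac{\nu(\nu+L)}{(\nu-2)(\nu+L+2)}$. Assuming $L = 2$, as in the bivariate examples, its square root for $\nu = 3$ is about 1.46, in line with the RRMSE of 1.46 to 1.48 reported above for the Gaussian estimator under the IT DGP with three degrees of freedom.

```python
import math

def variance_ratio(nu, L):
    """Asymptotic variance of the Gaussian estimator of the regression coefficients
    divided by that of the IT MLE, under an IT DGP with nu > 2 degrees of freedom in
    dimension L (our own derivation from the Student location Fisher information)."""
    return nu * (nu + L) / ((nu - 2) * (nu + L + 2))

# Square roots, comparable to an RRMSE, for a bivariate response (L = 2).
for nu in (3, 4, 5, 8, 20):
    print(nu, round(math.sqrt(variance_ratio(nu, L=2)), 3))
```

The ratio decreases towards one as $\nu$ grows, consistent with the IT model approaching the Gaussian one.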

Table 4.

The relative root mean squared errors (RRMSE) of .

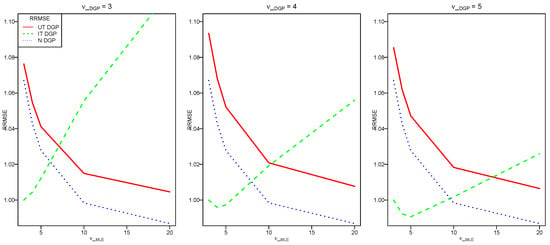

Figure 1 shows the performance, in terms of RRMSE, of the IT estimators under different DGP as a function of the degrees of freedom of the IT estimator (). The considered DGP are the Gaussian, UT, and IT DGP with the degrees of freedom (respectively, , ) on the left (respectively, middle, right) plot. Overall, the RRMSE of for the IT DGP first decreases and then increases, while for the Gaussian and the UT DGP, the RRMSE decreases as the degrees of freedom of the IT estimator increase. The maximum RRMSE of is around 1.09 under the UT DGP and around 1.08 under the Gaussian DGP. It then decreases to one as the degrees of freedom of the IT estimator increase to twenty under the Gaussian and the UT DGP; thus, the risk under misspecification is not very high. The curve is U-shaped under the IT DGP with a minimum when . The worst performance is when is small and is large. The RRMSE of with is similar to the one with .

Figure 1.

The RRMSE of the IT estimator of for the UT DGP in solid line, for the IT DGP in dashed line, and for the Gaussian DGP in dotted line with (respectively, ) on the left (respectively, middle, right) plot.

3.3. Estimators of the Variance Parameters

Table 5 reports the biases and the MSE of for the Gaussian DGP, the UT () DGP, and the IT () DGP. The bias and the MSE of are very similar and small in all cases. The MSE of the Gaussian estimators and are small under the Gaussian DGP, but higher under the UT and IT DGP. The biases and MSE of the IT estimators and are small under the IT DGP, but high under the Gaussian and the UT DGP. Table 5 also indicates that no method estimates the variances well under the UT DGP.

Table 5.

The bias and the MSE of .

As before, we now consider misspecified cases and focus on relative bias in Table 6. We observe that the relative bias for is negligible in all situations. The RB for and are also quite small (less than around 5%) when using the Gaussian estimator for all DGP. This is also true when using the IT estimator for the IT DGP with the same degrees of freedom . There are some biases for and if the DGP is Gaussian or UT and the estimator is IT. For this estimator, the relative bias of is around 100% for the Gaussian DGP, 96% for the UT DGP with and , and 22% for the UT DGP with and . The RB for and are also quite high (up to 50%) for the IT estimator when the DGP is IT with . To summarize, in terms of the RB of the variance estimators, the Gaussian estimator yields better results than the IT estimator.

Table 6.

The RB of with .

Finally, Table 7 presents the RRMSE in the same cases. It shows that the RRMSE of varies from 0.94 to 1.09 for all DGP except for the case of the IT DGP with the Gaussian estimator, where it ranges between 1.42 and 3.21. Moreover, if the DGP is Gaussian and the estimator is IT, or if the DGP is IT and the estimator is Gaussian, the RRMSE of and are high, in particular for or : we lose a lot of efficiency in these misspecified cases. To conclude, we have seen from Table 6 that the RB of and are smaller for the Gaussian estimator than for the IT estimator. However, in terms of RRMSE, there is no clear advantage in using the Gaussian estimator rather than the IT estimator.

Table 7.

The RRMSE of in the Gaussian DGP, the UT DGP (), and the IT DGP ().

It should be noted that for $\nu \le 4$, the Student distribution has no finite fourth-order moment, which may explain why the covariance estimators have a large MSE.

In order to allow the reproducibility of the empirical analyses contained in the present and the following sections, some Supplementary Material is available at the following link: http://www.thibault.laurent.free.fr/code/jrfm/.

4. Selection between the Gaussian and IT Models

In this section, we propose a methodology to choose between the Gaussian and independent Student models and to select the degrees of freedom of the Student model in a short list of possibilities. Following the warnings of () and the empirical results of (), (), and (), we decided to focus on a small selection of degrees of freedom and to fit our models without estimating this parameter, leaving the choice to a second step of model selection. Indeed, there is a limited number of interesting values, which lie between three and eight (for larger values, the distribution gets close to the Gaussian). The work in () (p. 883) proposed a likelihood ratio test for the univariate case. In what follows, we use the fact that the distribution of the Mahalanobis distances is known under the two DGP, which allows us to build a Kolmogorov–Smirnov test and to use Q-Q plots. One advantage of this approach is that the Mahalanobis distance is a one-dimensional variable, whereas the original observations have L dimensions. Unfortunately, this technique does not apply to the UT model, for which the n observations are a single realization of the multivariate distribution.

4.1. Distributions of Mahalanobis Distances

For an L-dimensional random vector $Y$ with mean $\mu$ and covariance matrix $\Sigma$, the squared Mahalanobis distance is defined by:

$$D^2(Y) = (Y - \mu)^\top\Sigma^{-1}(Y - \mu).$$

If $Y_1, \ldots, Y_n$ is a sample of size n from the L-dimensional Gaussian distribution $N_L(\mu, \Sigma)$, the squared Mahalanobis distance of observation i, denoted by $D_i^2$, follows a $\chi^2_L$ distribution. If $\mu$ and $\Sigma$ are unknown, then the squared Mahalanobis distance of observation i can be estimated by:

$$\hat D_i^2 = (Y_i - \bar Y)^\top S^{-1}(Y_i - \bar Y),$$

where $\bar Y = \frac{1}{n}\sum_{i=1}^n Y_i$ and $S$ is the sample covariance matrix. The work in () (see also ()) proved that this squared distance follows a Beta distribution, up to a multiplicative constant:

where L is the dimension of $Y_i$. For large n, this Beta distribution can be approximated by the chi-square distribution $\chi^2_L$. According to () (p. 172), already provides a sufficiently large sample for this approximation, which is the case in all our examples below. If we now assume that $Y_1, \ldots, Y_n$ is a sample of size n from the L-dimensional Student distribution with mean $\mu$, covariance matrix $\Sigma$, and $\nu > 2$ degrees of freedom, then the squared Mahalanobis distance of observation i, denoted by $D_i^2$ and properly scaled, follows a Fisher distribution (see ()):

$$\frac{\nu}{(\nu - 2)L}\,D_i^2 \sim F(L, \nu).$$
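The Student scaling follows from the mixture representation under the covariance parametrization: writing $Y - \mu = \Psi^{1/2}Z/\sqrt{W/\nu}$ with $\Psi = \frac{\nu-2}{\nu}\Sigma$, one gets $D^2 = \frac{\nu-2}{\nu}\,L\,F$ with $F \sim F(L, \nu)$, that is, $\frac{\nu}{(\nu-2)L}D^2 \sim F(L, \nu)$ (our own derivation). For $L = 2$ both reference laws have closed-form CDFs, $1 - e^{-x/2}$ for $\chi^2_2$ and $1 - (1 + 2x/\nu)^{-\nu/2}$ for $F(2, \nu)$, which the NumPy sketch below uses to check these laws by simulation with known $\mu$ and $\Sigma$ (hypothetical values).

```python
import numpy as np

def chi2_cdf_2(x):
    """CDF of the chi-square distribution with 2 degrees of freedom."""
    return 1.0 - np.exp(-x / 2.0)

def f_cdf_2(x, nu):
    """CDF of the Fisher distribution F(2, nu)."""
    return 1.0 - (1.0 + 2.0 * x / nu) ** (-nu / 2.0)

def squared_mahalanobis(Y, mu, Sigma):
    """Row-wise (Y_i - mu)' Sigma^{-1} (Y_i - mu)."""
    D = Y - mu
    return np.einsum("ij,jk,ik->i", D, np.linalg.inv(Sigma), D)

rng = np.random.default_rng(7)
n, L, nu = 100_000, 2, 4.0                              # hypothetical settings
mu = np.array([0.5, -0.2])
Sigma = np.array([[1.0, 0.6], [0.6, 2.0]])
grid = np.array([0.25, 0.5, 1.0, 2.0, 5.0])

# Gaussian sample: D^2 should follow a chi-square(L) distribution
d2_gauss = squared_mahalanobis(rng.multivariate_normal(mu, Sigma, size=n), mu, Sigma)
ecdf_gauss = (d2_gauss[:, None] <= grid).mean(axis=0)
gap_gauss = np.abs(ecdf_gauss - chi2_cdf_2(grid)).max()

# Student sample with covariance Sigma: nu * D^2 / ((nu - 2) * L) should follow F(L, nu)
Z = rng.multivariate_normal(np.zeros(L), (nu - 2) / nu * Sigma, size=n)
Y_t = mu + Z / np.sqrt(rng.chisquare(nu, size=n) / nu)[:, None]
d2_t = squared_mahalanobis(Y_t, mu, Sigma)
scaled = nu * d2_t / ((nu - 2) * L)
ecdf_t = (scaled[:, None] <= grid).mean(axis=0)
gap_student = np.abs(ecdf_t - f_cdf_2(grid, nu)).max()
```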

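If $\mu$ and $\Sigma$ are unknown, then the squared Mahalanobis distance of observation i can be estimated by:

$$\hat D_i^2 = (Y_i - \hat\mu)^\top\hat\Sigma^{-1}(Y_i - \hat\mu),$$

where $\hat\mu$ and $\hat\Sigma$ are the MLE of $\mu$ and $\Sigma$. Note that in the IT model, these estimators no longer coincide with the sample mean and the sample covariance matrix. To our knowledge, there is no result about the distribution of $\hat D_i^2$.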

In the elliptical distribution family, the distribution of Mahalanobis distances characterizes the distribution of the observations. Thus, in order to test the normality of the data, we can test whether the Mahalanobis distances follow a chi-square distribution. Similarly, testing the Student distribution is equivalent to testing whether the scaled Mahalanobis distances follow the Fisher distribution. There are two difficulties with this approach. The first one is that the estimated Mahalanobis distances are not a sample from the chi-square (respectively, the Fisher) distribution, because the estimation of the parameters introduces dependence between them. The second one is that, in our case, we not only estimate the mean and the covariance matrix, but we are in a regression framework where the mean is a linear combination of regressors whose coefficients we estimate. In what follows, we ignore these two difficulties and consider that, for large n, the estimated Mahalanobis distances behave as if the mean and the covariance matrix were known.

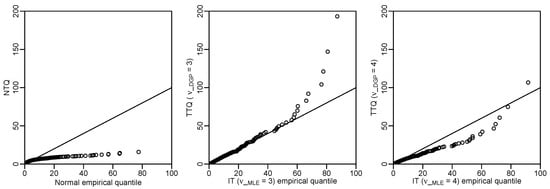

We propose to implement several Kolmogorov–Smirnov tests in order to test different null hypotheses: Gaussian, Student with three degrees of freedom, and Student with four degrees of freedom. As an exploratory tool, we also propose drawing Q-Q plots of the Mahalanobis distances with respect to the chi-square and the Fisher distributions ().
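A minimal sketch of these tests for the bivariate case $L = 2$, in NumPy only (function names and simulated data are hypothetical). It computes the Kolmogorov–Smirnov statistic of the squared distances against a reference CDF and an asymptotic p-value from the Kolmogorov distribution, ignoring, as in the text, the effect of estimated parameters; here the parameters are treated as known. It also returns the quantile pairs for a Q-Q plot, using the closed-form quantile functions $-2\log(1-p)$ of $\chi^2_2$ and $\frac{\nu}{2}\big((1-p)^{-2/\nu} - 1\big)$ of $F(2, \nu)$. In practice, a library routine such as `scipy.stats.kstest` would more likely be used.

```python
import functools
import numpy as np

def ks_statistic(sample, cdf):
    """Two-sided Kolmogorov-Smirnov statistic of a sample against a continuous CDF."""
    u = cdf(np.sort(np.asarray(sample, dtype=float)))
    n = u.size
    i = np.arange(1, n + 1)
    return float(max((i / n - u).max(), (u - (i - 1) / n).max()))

def ks_pvalue(stat, n, terms=100):
    """Asymptotic p-value P(K > sqrt(n) * stat), K following the Kolmogorov distribution."""
    lam = np.sqrt(n) * stat
    if lam < 0.2:        # the alternating series converges slowly there and p is ~1
        return 1.0
    k = np.arange(1, terms + 1)
    p = 2.0 * np.sum((-1.0) ** (k - 1) * np.exp(-2.0 * k**2 * lam**2))
    return float(min(max(p, 0.0), 1.0))

def qq_pairs(sample, ppf):
    """Sorted sample and theoretical quantiles at plotting positions (i - 0.5) / n."""
    n = len(sample)
    probs = (np.arange(1, n + 1) - 0.5) / n
    return np.sort(sample), ppf(probs)

def chi2_2_cdf(x):
    return 1.0 - np.exp(-x / 2.0)

def f2_cdf(x, nu):
    return 1.0 - (1.0 + 2.0 * x / nu) ** (-nu / 2.0)

def f2_ppf(p, nu):
    return nu / 2.0 * ((1.0 - p) ** (-2.0 / nu) - 1.0)

# Usage: squared distances of a simulated bivariate Student sample (nu = 3, zero mean and
# a hypothetical Sigma treated as known), tested against two null hypotheses.
rng = np.random.default_rng(8)
n, L, nu = 2000, 2, 3.0
Sigma = np.array([[1.0, 0.5], [0.5, 1.0]])
Z = rng.multivariate_normal(np.zeros(L), (nu - 2) / nu * Sigma, size=n)
eps = Z / np.sqrt(rng.chisquare(nu, size=n) / nu)[:, None]
d2 = np.einsum("ij,jk,ik->i", eps, np.linalg.inv(Sigma), eps)

p_gauss = ks_pvalue(ks_statistic(d2, chi2_2_cdf), n)             # Gaussian null: chi-square(2)
scaled = nu * d2 / ((nu - 2) * L)                                  # Student null: F(2, nu)
p_student = ks_pvalue(ks_statistic(scaled, functools.partial(f2_cdf, nu=nu)), n)
emp_q, theo_q = qq_pairs(scaled, functools.partial(f2_ppf, nu=nu))
```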

4.2. Examples

This section illustrates some applications of the proposed methodology for selecting a model. We use a real dataset from finance and three simulated datasets with the same DGP as in Section 3.

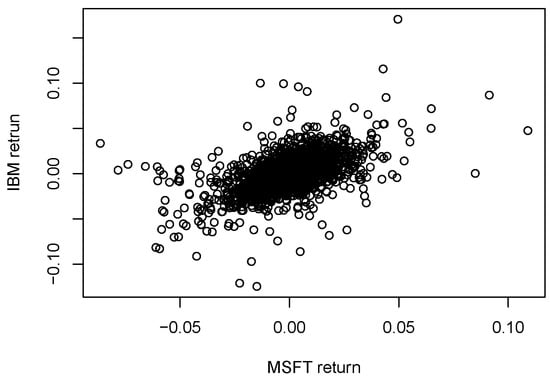

The real dataset consists of the daily closing share prices of IBM and MSFT, downloaded from Yahoo Finance for the period from 3 January 2007 to 27 September 2018 using the quantmod package in R. It contains observations. Let $P_t$ be the vector of daily share prices of IBM and MSFT on day t and $r_t$ the log-price increment (return) (see ()) over a one-day period; then:

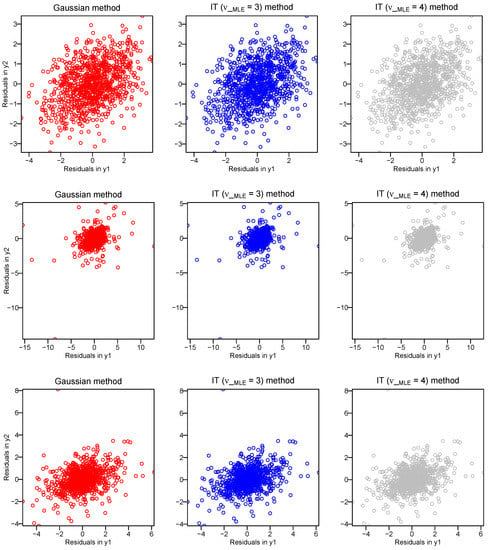
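The return computation amounts to a first difference of log prices. In the NumPy sketch below, the prices are made-up numbers rather than the IBM and MSFT data (which were downloaded in R with quantmod), and we assume that no percentage scaling is applied.

```python
import numpy as np

def log_returns(prices):
    """prices: (T, L) daily closing prices, one column per asset.
    Returns the (T - 1, L) matrix of log-price increments log P_t - log P_{t-1}
    (assumption: no percentage scaling)."""
    P = np.asarray(prices, dtype=float)
    return np.diff(np.log(P), axis=0)

# Usage with made-up prices for two assets over three days.
prices = np.array([[100.0, 50.0],
                   [110.0, 50.0],
                   [99.0, 55.0]])
r = log_returns(prices)
```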

The three other datasets are simulated using the same model as in Section 3 with the Gaussian DGP, the IT DGP with $\nu = 3$, and the IT DGP with $\nu = 4$, with sample size . Figure 2 (respectively, Figure 3) displays the scatterplots of the financial data (respectively, the three toy datasets).

Figure 2.

Financial data: scatterplot of returns.

Figure 3.

Toy data: scatterplots of residuals in the Gaussian DGP (respectively, the IT DGP with $\nu = 3$, the IT DGP with $\nu = 4$) on the first row (respectively, the second row, the third row).

We compute the Gaussian and the IT estimators as in Section 3. We then calculate the squared Mahalanobis distances of the residuals and use a Kolmogorov–Smirnov test to decide between the models. For the financial data, there is no predictor. We test the Gaussian (respectively, the Student with three degrees of freedom, the Student with four degrees of freedom) null hypothesis. When testing one of the null hypotheses, we use the estimator corresponding to the null. Moreover, when the null hypothesis is Student, we use the corresponding degrees of freedom for computing the maximum likelihood estimator. We reject the null hypothesis if the p-value is smaller than . Note that we could adjust the level of by taking into account multiple testing.

Table 8 shows the p-values of these tests. For the simulated data, at the level, we do not reject the Gaussian assumption when the DGP is Gaussian. Similarly, we do not reject the Student distribution with three (respectively, four) degrees of freedom when the DGP is the IT model with three (respectively, four) degrees of freedom. For the financial data, we do not reject the Student distribution with three degrees of freedom, but we do reject the Gaussian distribution and the Student distribution with four degrees of freedom.

Table 8.

All datasets: p-values of the Mahalanobis distance tests under each null hypothesis, computed with the corresponding estimators.

For the financial data, Figure 4 shows Q-Q plots. The horizontal axis gives the empirical quantiles of the Mahalanobis distances computed with the normal (respectively, the IT with three degrees of freedom, the IT with four degrees of freedom) estimators. The vertical axis gives the theoretical quantiles of the Mahalanobis distances under the normal (respectively, the IT with three degrees of freedom, the IT with four degrees of freedom) model. These Q-Q plots are consistent with the test results in Table 8: the IT model with three degrees of freedom fits our financial data well.
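The coordinates of such a Q-Q plot can be sketched by pairing the sorted squared distances with theoretical quantiles. The sketch below works with squared distances and assumes the conventional plotting positions (i - 0.5)/n. It uses the same reference laws as the tests: chi-square with p degrees of freedom, or p times F(p, ν). Drawing is left to any plotting library, with the empirical quantiles on the horizontal axis as in Figure 4. The function name is hypothetical.

```python
import numpy as np
from scipy import stats


def qq_coordinates(d2, p, nu=None):
    """Return (empirical, theoretical) quantiles for squared Mahalanobis distances.

    Reference law: chi-square(p) if nu is None, else p * F(p, nu).
    Plotting positions (i - 0.5) / n are an assumed, conventional choice.
    """
    emp = np.sort(np.asarray(d2, dtype=float))
    n = emp.size
    probs = (np.arange(1, n + 1) - 0.5) / n
    if nu is None:
        theo = stats.chi2(df=p).ppf(probs)
    else:
        theo = p * stats.f(dfn=p, dfd=nu).ppf(probs)
    return emp, theo


emp, theo = qq_coordinates([3.0, 1.0, 2.0], p=2)
# emp is [1.0, 2.0, 3.0]; theo[1] is the chi-square(2) median, 2 * log(2).
```

Points close to the 45-degree line indicate that the hypothesized distribution fits.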

Figure 4.

Financial data: Q-Q plots of the Mahalanobis distances for the normal, IT (three degrees of freedom), and IT (four degrees of freedom) estimators.

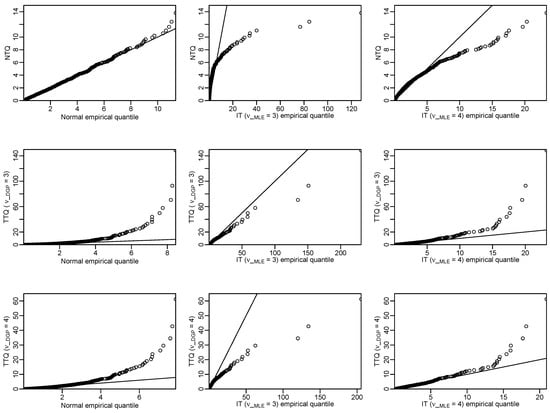

Figure 5 displays the Q-Q plots for the toy DGPs: the Gaussian DGP in the first column, the IT DGP with three degrees of freedom in the second column, and the IT DGP with four degrees of freedom in the third column. The first row compares the empirical quantiles to the quantiles of the normal case, the second row to those of the Student case with three degrees of freedom, and the third row to those of the Student case with four degrees of freedom. The Q-Q plots on the diagonal confirm that the fit is good when the model is correct, while the off-diagonal Q-Q plots reveal a clear deviation from the hypothesized model.

Figure 5.

Toy data: Q-Q plots of the Mahalanobis distances of the residuals, with the empirical quantiles for the normal (respectively, the IT with three degrees of freedom, the IT with four degrees of freedom) case plotted against the theoretical quantiles for the normal (respectively, the IT with three degrees of freedom, the IT with four degrees of freedom) case in the first row (respectively, the second row, the third row).

To summarize the findings of this study, the Gaussian distribution may be overused in applications because of its simplicity. We have seen that using the Student distribution instead is only slightly more complex and remains feasible, and that this choice can be tested. Concerning the two Student models, the UT model is simpler to fit than the IT model. However, it has limitations because it treats the whole sample as a single realization. This restricts the properties of the maximum likelihood estimators and prevents the use of tests against the other two models.
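The single-realization issue of the UT model can be illustrated with a small simulation. In this Python sketch, the names and parameters are hypothetical. A UT sample is generated with one chi-square mixing variable shared by all rows. The IT model, by contrast, draws one mixing variable per row. Conditionally on the shared variable, the rows are i.i.d. Gaussian, so a Mahalanobis distance test fitted on one sample sees data that look Gaussian with an inflated covariance and cannot separate UT from N.

```python
import numpy as np
from scipy import stats


def sample_ut(n, sigma, nu, rng):
    """UT sample: one chi-square(nu) mixing variable shared by all n rows.

    Rows are uncorrelated but not independent; the n x p sample is a single
    draw from a multivariate Student distribution.
    """
    p = sigma.shape[0]
    z = rng.multivariate_normal(np.zeros(p), sigma, size=n)
    w = rng.chisquare(nu)  # scalar: the same scaling for every row
    return z / np.sqrt(w / nu)


rng = np.random.default_rng(1)
sigma = np.array([[1.0, 0.5], [0.5, 2.0]])
x_ut = sample_ut(2000, sigma, nu=3, rng=rng)

# Gaussian fit and chi-square KS test on the single UT sample.
r = x_ut - x_ut.mean(axis=0)
s_hat = r.T @ r / len(x_ut)
d2 = np.einsum("ij,ji->i", r, np.linalg.solve(s_hat, r.T))
p_gauss_ut = stats.kstest(d2, stats.chi2(df=2).cdf).pvalue  # typically not small
```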

5. Conclusions

We have compared three models: the multivariate Gaussian model and two multivariate Student models (uncorrelated or independent). We have derived some theoretical properties of the Student UT model and proposed a simple iterative reweighted algorithm to compute the maximum likelihood estimators in the IT model. Our simulations show that using a multivariate Student IT model instead of a multivariate Gaussian model for heavy-tailed data is simple. It can also be viewed as a safeguard against misspecification, in the sense that there is more to lose by fitting a Gaussian model when the DGP is Student than in the reverse situation. We have also proposed graphical tools and a test to choose between the Gaussian and the IT models, and the IT model fits our financial dataset quite well. Work remains to improve the model selection procedure, because the parameters are estimated and hence the hypothesized distribution is only approximate. Our algorithm for the IT model can also be adapted to the case of missing data. We intend to allow different degrees of freedom for each coordinate. It may also be relevant to consider an alternative estimation method by generalizing the one proposed in () to the multivariate regression case. Finally, another perspective is to consider multivariate errors-in-variables models, which allow measurement errors to be incorporated in both the response and the explanatory variables. A possible approach is proposed in ().

Supplementary Materials

To allow reproducibility of the empirical analyses in this paper, Supplementary Material is available at the following link: http://www.thibault.laurent.free.fr/code/jrfm/.

Author Contributions

T.H.A.N., C.T.-A. and A.R.-G., Methodology, analysis, review, and editing; T.H.A.N., writing, original draft preparation; C.T.-A. and A.R.-G. supervision and validation; T.L. and T.H.A.N., data curation.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| EM | Expectation-maximization |
| MLE | Maximum likelihood estimator |
| N | Normal (Gaussian) model |
| IT | Independent multivariate Student |
| UT | Uncorrelated multivariate Student |
| RB | Relative bias |
| MSE | Mean squared error |
| RRMSE | Root relative mean squared error |
| DGP | Data-generating process |

Appendix A

Proof of Proposition 1.

Using Expression (1), the joint density function of is:

Therefore, the logarithm of is:

To maximize with respect to , we follow the same argument as in Theorem 8.4 of () for the Gaussian case and obtain that the minimum of is attained at:

Moreover, taking the partial derivative of (A1) with respect to , we obtain:

Let:

We have:

Solving and letting , we have:

The expression of in (A3) can be simplified by noting that:

Replacing the expression of from (A4) into , we get:

Finally,

Proof of Proposition 2.

The property is immediate. In order to facilitate the derivation of the proof for , we write Model (4) as:

where:

Let and . We have , and following (), Theorem 8.2,

Since , for , and:

References

- Bilodeau, Martin, and David Brenner. 1999. Theory of Multivariate Statistics (Springer Texts in Statistics). Berlin: Springer, ISBN 978-0-387-22616-3.

- Croux, Christophe, Mohammed Fekri, and Anne Ruiz-Gazen. 2010. Fast and robust estimation of the multivariate errors in variables model. Test 19: 286–303.

- Dempster, Arthur P., Nan M. Laird, and Donald B. Rubin. 1978. Iteratively Reweighted Least Squares for Linear Regression when Errors are Normal/Independent Distributed. Multivariate Analysis V 5: 35–37.

- Dogru, Fatma Zehra, Y. Murat Bulut, and Olcay Arslan. 2018. Double Reweighted Estimators for the Parameters of the Multivariate t distribution. Communications in Statistics-Theory and Methods 47: 4751–71.

- Fernandez, Carmen, and Mark F. J. Steel. 1999. Multivariate Student-t Regression Models: Pitfalls and Inference. Biometrika 86: 153–67.

- Fung, Thomas, and Eugene Seneta. 2010. Modeling and Estimating for Bivariate Financial Returns. International Statistical Review 78: 117–33.

- Fraser, Donald Alexander Stuart. 1979. Inference and Linear Models. New York: McGraw Hill, ISBN 9780070219106.

- Fraser, Donald Alexander Stuart, and Kai Wang Ng. 1980. Multivariate regression analysis with spherical error. Multivariate Analysis 5: 369–86.

- Gnanadesikan, Ram, and Jon R. Kettenring. 1972. Robust estimates, residuals, and outlier detection with multiresponse data. Biometrics 28: 81–124.

- Hofert, Marius. 2013. On Sampling from the Multivariate t Distribution. The R Journal 5: 129–36.

- Hu, Wenbo, and Alec N. Kercheval. 2009. Portfolio optimization for Student t and skewed t returns. Quantitative Finance 10: 129–36.

- Huber, Peter J., and Elvezio M. Ronchetti. 2009. Robust Statistics. Hoboken: Wiley, ISBN 9780470129906.

- Johnson, Norman L., and Samuel Kotz. 1972. Student multivariate distribution. In Distributions in Statistics: Continuous Multivariate Distributions. Michigan: Wiley Publishing House, ISBN 9780471443704.

- Kan, Raymond, and Guofu Zhou. 2017. Modeling non-normality using multivariate t: implications for asset pricing. China Finance Review International 7: 2–32.

- Katz, Jonathan N., and Gary King. 1999. A Statistical Model for Multiparty Electoral Data. American Political Science Review 93: 15–32.

- Kelejian, Harry H., and Ingmar R. Prucha. 1985. Independent or Uncorrelated Disturbances in Linear Regression. Economics Letters 19: 35–38.

- Kent, John T., David E. Tyler, and Yehuda Vardi. 1994. A curious likelihood identity for the multivariate t-distribution. Communications in Statistics-Simulation and Computation 23: 441–53.

- Kotz, Samuel, and Saralees Nadarajah. 2004. Multivariate t Distributions and Their Applications. Cambridge: Cambridge University Press, ISBN 9780511550683.

- Lange, Kenneth, Roderick J. A. Little, and Jeremy Taylor. 1989. Robust Statistical Modeling Using the t-Distribution. Journal of the American Statistical Association 84: 881–96.

- Lange, Kenneth, and Janet S. Sinsheimer. 1993. Normal/Independent Distributions and Their Applications in Robust Regression. Journal of Computational and Graphical Statistics 2: 175–98.

- Liu, Chuanhai, and Donald B. Rubin. 1995. ML estimation of the t distribution using EM and its extensions, ECM and ECME. Statistica Sinica 5: 19–39.

- Liu, Chuanhai. 1997. ML Estimation of the Multivariate t Distribution and the EM Algorithm. Journal of Multivariate Analysis 63: 296–312.

- Maronna, Ricardo Antonio. 1976. Robust M-Estimators of Multivariate Location and Scatter. The Annals of Statistics 4: 51–67.

- McNeil, Alexander J., Rüdiger Frey, and Paul Embrechts. 2005. Quantitative Risk Management: Concepts, Techniques and Tools. Vol. 3, Princeton: Princeton University Press.

- Platen, Eckhard, and Renata Rendek. 2008. Empirical Evidence on Student-t Log-Returns of Diversified World Stock Indices. Journal of Statistical Theory and Practice 2: 233–51.

- Prucha, Ingmar R., and Harry H. Kelejian. 1984. The Structure of Simultaneous Equation Estimators: A generalization Towards Nonnormal Disturbances. Econometrica 52: 721–36.

- Roth, Michael. 2013. On the Multivariate t Distribution. Report Number: LiTH-ISY-R-3059. Linkoping: Department of Electrical Engineering, Linkoping University.

- Seber, George Arthur Frederick. 2008. Multivariate Observations. Hoboken: John Wiley & Sons, ISBN 9780471881049.

- Singh, Radhey. 1988. Estimation of Error Variance in Linear Regression Models with Errors having Multivariate Student t-Distribution with Unknown Degrees of Freedom. Economics Letters 27: 47–53.

- Small, N. J. H. 1978. Plotting squared radii. Biometrika 65: 657–58.

- Sutradhar, Brajendra C., and Mir M. Ali. 1986. Estimation of the Parameters of a Regression Model with a Multivariate t Error Variable. Communications in Statistics-Theory and Methods 15: 429–50.

- Zellner, Arnold. 1976. Bayesian and Non-Bayesian Analysis of the Regression Model with Multivariate Student-t Error Terms. Journal of the American Statistical Association 71: 400–5.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).