Breaking the Code: Considerations for Effectively Disseminating Mass Notifications in Healthcare Settings

Abstract

1. Introduction

2. Materials and Methods

2.1. Sampling and Data Collection

2.2. Survey Design

2.2.1. Demographic Information

2.2.2. Code Identification

2.2.3. Experience with Hospital Emergency Codes

2.3. Ethical Considerations

2.4. Data Analysis

2.5. Software

3. Results

3.1. Sample Characteristics

3.2. Facility Codes

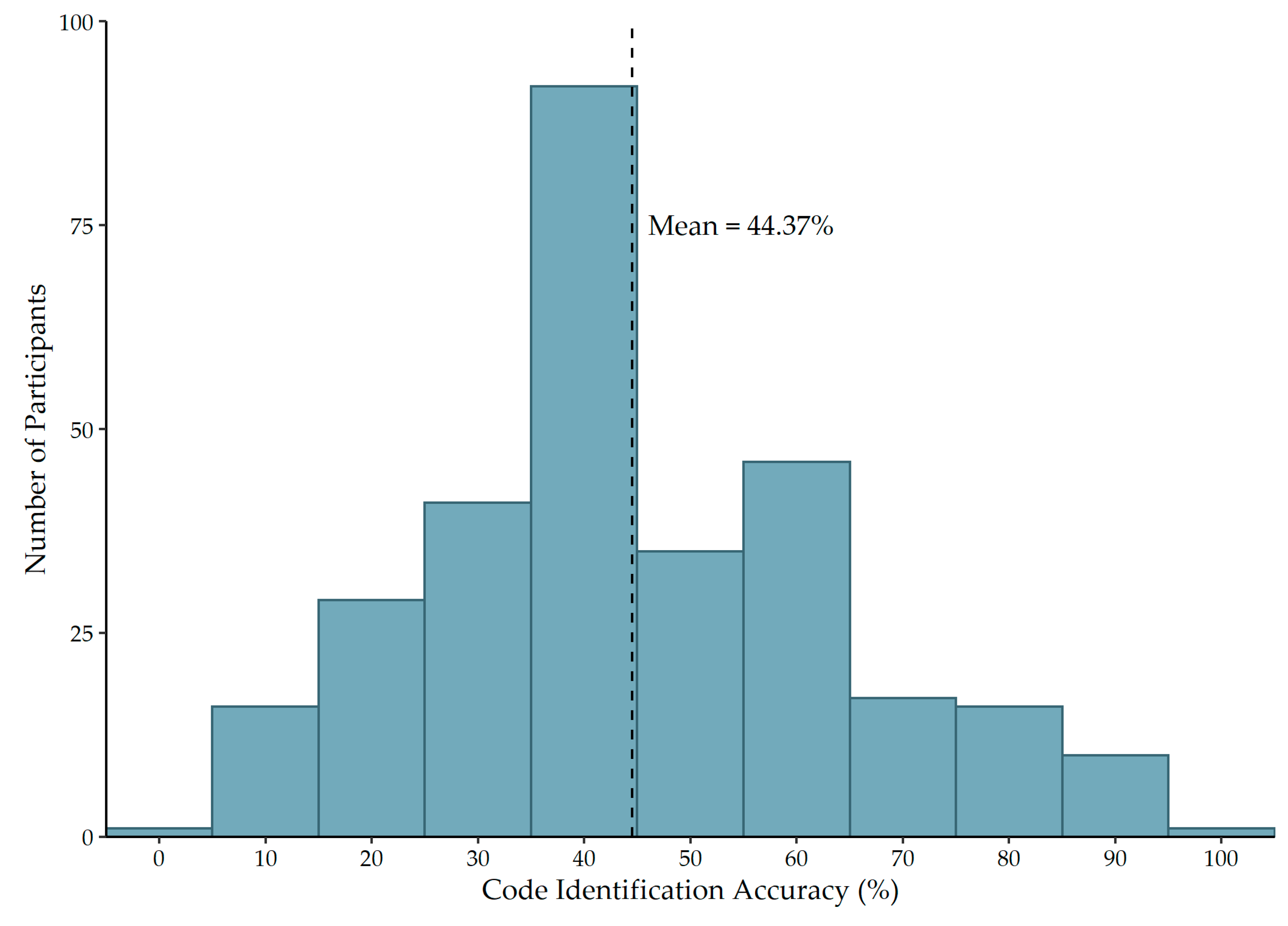

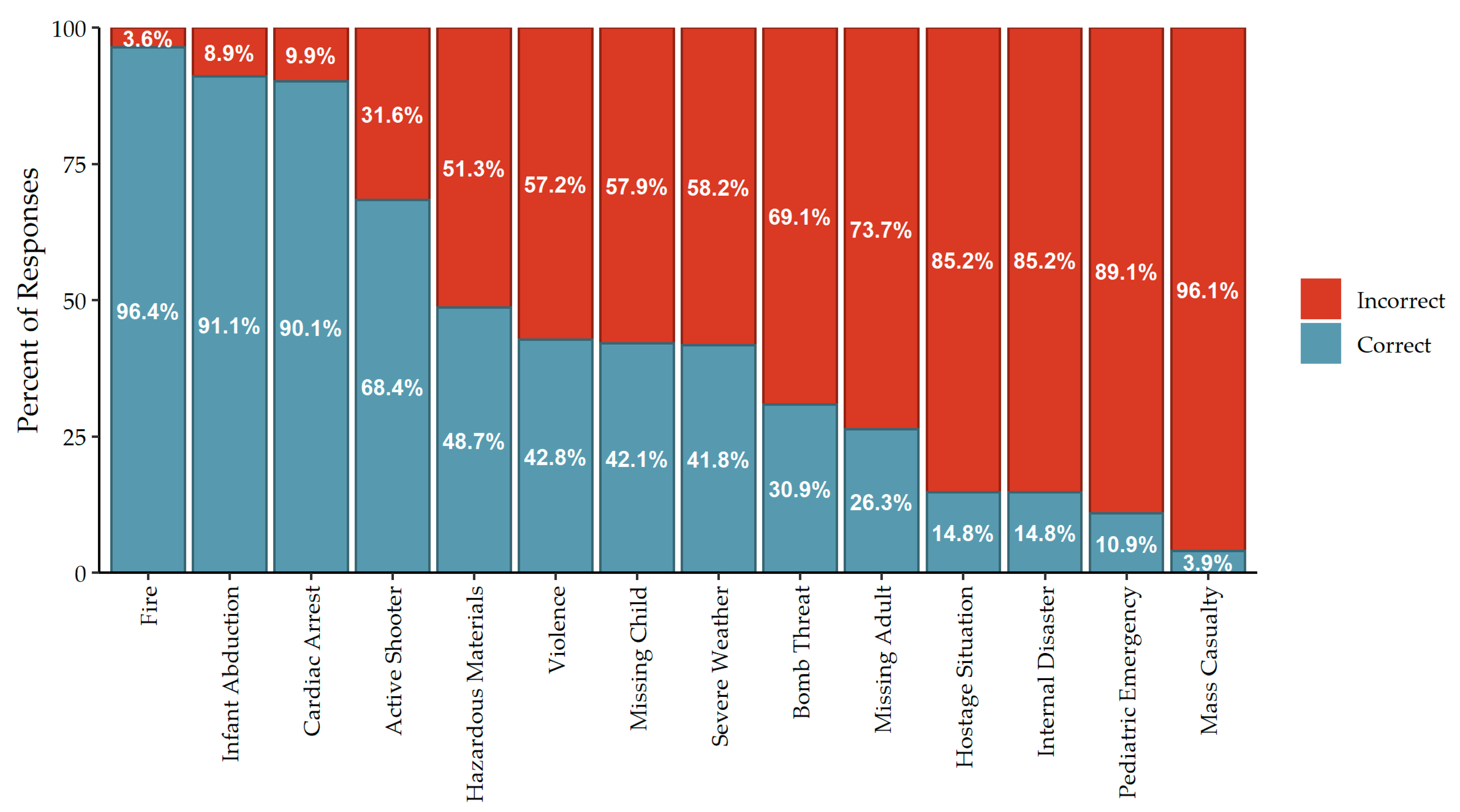

3.3. Code Identification Accuracy

3.4. Code Identification Score Associations

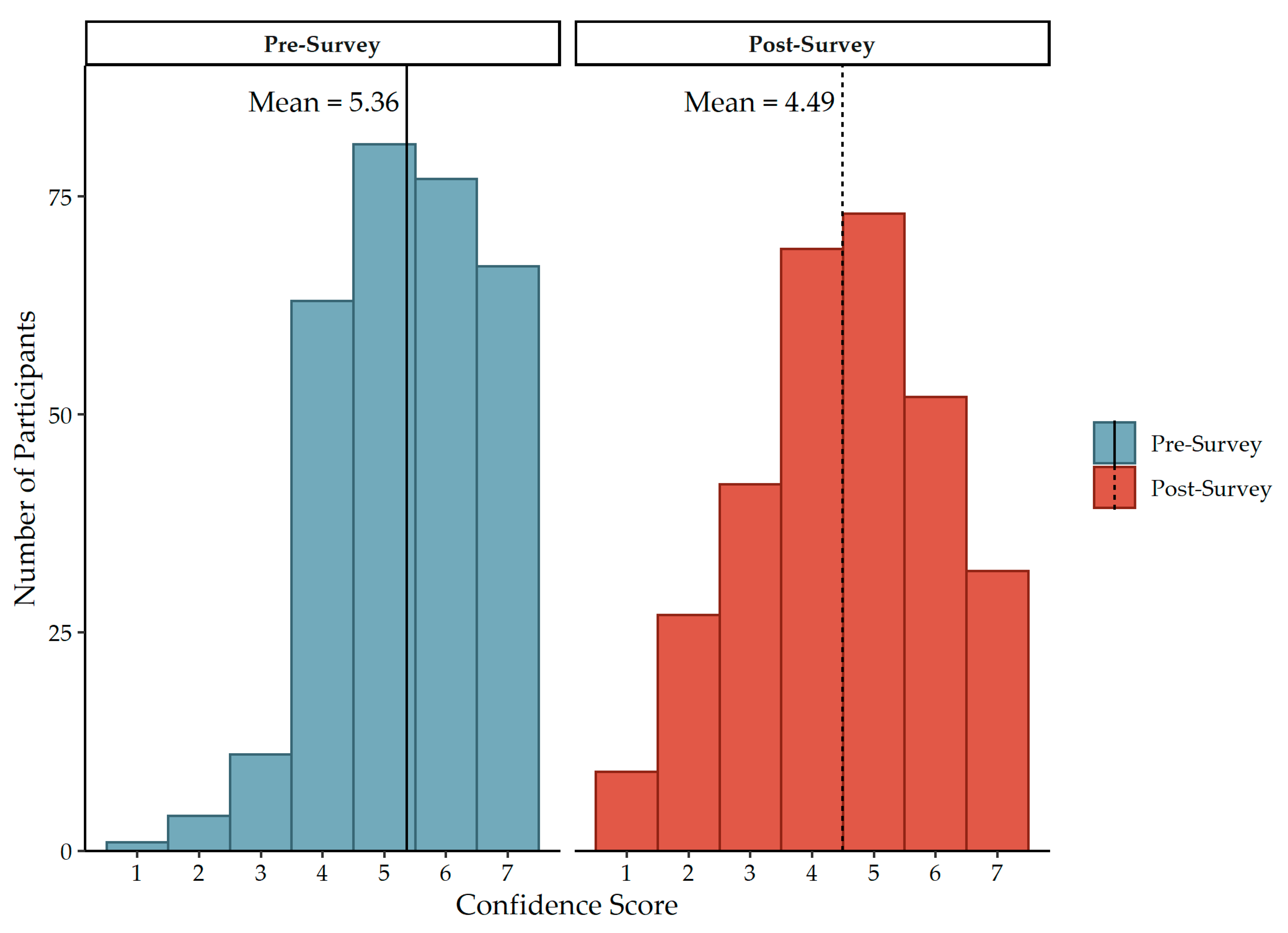

3.5. Confidence Scores

3.6. Change in Confidence Score Associations

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Facility A, N = 99¹ | Facility B, N = 21¹ | Facility C, N = 59¹ | Facility D, N = 78¹ | Facility E, N = 47¹ | Overall, N = 304¹ |
|---|---|---|---|---|---|---|
| Position Type | | | | | | |
| Clinical | 74 (75%) | 14 (67%) | 39 (66%) | 49 (63%) | 20 (43%) | 196 (64%) |
| Non-clinical | 25 (25%) | 7 (33%) | 20 (34%) | 29 (37%) | 27 (57%) | 108 (36%) |
| Typical Shift | | | | | | |
| Day Shift | 88 (89%) | 19 (90%) | 56 (95%) | 71 (91%) | 45 (96%) | 279 (92%) |
| Night Shift | 1 (1.0%) | 1 (4.8%) | 0 (0%) | 2 (2.6%) | 1 (2.1%) | 5 (1.6%) |
| Equal times on both shifts | 10 (10%) | 1 (4.8%) | 3 (5.1%) | 5 (6.4%) | 1 (2.1%) | 20 (6.6%) |
| Facility Experience | | | | | | |
| 0–2 years | 28 (28%) | 4 (19%) | 14 (24%) | 31 (40%) | 13 (28%) | 90 (30%) |
| 2–5 years | 30 (30%) | 8 (38%) | 12 (20%) | 13 (17%) | 14 (30%) | 77 (25%) |
| 5–8 years | 8 (8.1%) | 0 (0%) | 8 (14%) | 9 (12%) | 7 (15%) | 32 (11%) |
| >8 years | 33 (33%) | 9 (43%) | 25 (42%) | 25 (32%) | 13 (28%) | 105 (35%) |
| Healthcare Experience | | | | | | |
| 0–2 years | 15 (15%) | 1 (4.8%) | 7 (12%) | 21 (27%) | 7 (15%) | 51 (17%) |
| 2–5 years | 26 (26%) | 5 (24%) | 11 (19%) | 11 (14%) | 14 (30%) | 67 (22%) |
| 5–8 years | 10 (10%) | 2 (9.5%) | 8 (14%) | 9 (12%) | 8 (17%) | 37 (12%) |
| >8 years | 48 (48%) | 13 (62%) | 33 (56%) | 37 (47%) | 18 (38%) | 149 (49%) |
| Total Facilities in Career | | | | | | |
| 1 facility | 31 (31%) | 6 (29%) | 34 (58%) | 28 (36%) | 24 (51%) | 123 (40%) |
| 2–3 facilities | 44 (44%) | 9 (43%) | 16 (27%) | 20 (26%) | 17 (36%) | 106 (35%) |
| 4–5 facilities | 10 (10%) | 3 (14%) | 5 (8.5%) | 21 (27%) | 3 (6.4%) | 42 (14%) |
| >5 facilities | 14 (14%) | 3 (14%) | 4 (6.8%) | 9 (12%) | 3 (6.4%) | 33 (11%) |

| | Facility A, N = 99¹ | Facility B, N = 21¹ | Facility C, N = 59¹ | Facility D, N = 78¹ | Facility E, N = 47¹ | Overall, N = 304¹ |
|---|---|---|---|---|---|---|
| Knowledge of Code Activation Procedures | | | | | | |
| No | 32 (32%) | 5 (24%) | 14 (24%) | 24 (31%) | 19 (40%) | 94 (31%) |
| Yes | 67 (68%) | 16 (76%) | 45 (76%) | 54 (69%) | 28 (60%) | 210 (69%) |
| Witnessed Code Confusion | | | | | | |
| No | 53 (54%) | 12 (57%) | 44 (75%) | 50 (64%) | 37 (79%) | 196 (64%) |
| Yes | 46 (46%) | 9 (43%) | 15 (25%) | 28 (36%) | 10 (21%) | 108 (36%) |
| Worked at a Facility with Different Color Codes | | | | | | |
| No | 55 (56%) | 14 (67%) | 44 (75%) | 50 (64%) | 34 (72%) | 197 (65%) |
| Yes | 31 (31%) | 5 (24%) | 12 (20%) | 22 (28%) | 10 (21%) | 80 (26%) |
| Maybe | 13 (13%) | 2 (9.5%) | 3 (5.1%) | 6 (7.7%) | 3 (6.4%) | 27 (8.9%) |
| Code Type Preference | | | | | | |
| Color Codes | 52 (53%) | 16 (76%) | 45 (76%) | 58 (74%) | 28 (60%) | 199 (65%) |
| Plain Language | 47 (47%) | 5 (24%) | 14 (24%) | 20 (26%) | 19 (40%) | 105 (35%) |
| Code Exceptions for Plain Language | | | | | | |
| No | 35 (35%) | 2 (9.5%) | 5 (8.5%) | 9 (12%) | 13 (28%) | 64 (21%) |
| Yes | 64 (65%) | 19 (90%) | 54 (92%) | 69 (88%) | 34 (72%) | 240 (79%) |
| Training at Orientation | | | | | | |
| No | 28 (28%) | 8 (38%) | 11 (19%) | 29 (37%) | 20 (43%) | 96 (32%) |
| Yes | 71 (72%) | 13 (62%) | 48 (81%) | 49 (63%) | 27 (57%) | 208 (68%) |
| Training During Drills/Exercises | | | | | | |
| No | 42 (42%) | 6 (29%) | 29 (49%) | 36 (46%) | 22 (47%) | 135 (44%) |
| Yes | 57 (58%) | 15 (71%) | 30 (51%) | 42 (54%) | 25 (53%) | 169 (56%) |
| Annual Training | | | | | | |
| No | 62 (63%) | 7 (33%) | 17 (29%) | 38 (49%) | 25 (53%) | 149 (49%) |
| Yes | 37 (37%) | 14 (67%) | 42 (71%) | 40 (51%) | 22 (47%) | 155 (51%) |
| Time Since Last Training | | | | | | |
| <1 month | 23 (23%) | 4 (19%) | 6 (10%) | 11 (14%) | 7 (15%) | 51 (17%) |
| 1–6 months | 21 (21%) | 11 (52%) | 27 (46%) | 34 (44%) | 20 (43%) | 113 (37%) |
| 6–12 months | 25 (25%) | 5 (24%) | 18 (31%) | 20 (26%) | 15 (32%) | 83 (27%) |
| >1 year | 30 (30%) | 1 (4.8%) | 8 (14%) | 13 (17%) | 5 (11%) | 57 (19%) |

| | Facility A | Facility B | Facility C | Facility D | Facility E |
|---|---|---|---|---|---|
| Active Shooter | Color Code Black | Color Code Silver | Color Code Silver | Color Code Silver | Color Code Silver |
| Bomb Threat | Color Code Gray | - | Color Code Gray | Color Code Black | - |
| Cardiac Arrest | Color Code Blue | Color Code Blue | Color Code Blue | Color Code Blue | Color Code Blue |
| Fire | Color Code Red | Color Code Red | Color Code Red | Color Code Red | Color Code Red |
| Hazardous Materials | Color Code Orange | Color Code Orange | Color Code Orange | Color Code Orange | Color Code Orange |
| Hostage Situation | - | Color Code Silver | Color Code Gray | Color Code Silver | Color Code Silver |
| Infant Abduction | Color Code Pink | Color Code Pink | Color Code Pink | Color Code Pink | Color Code Pink |
| Internal Disaster | - | Code Triage B | Code Triage | Code [Facility] | Code Triage B |
| Mass Casualty | Code Triage | Code Triage A | Code Triage | Code Triage | Code Triage A |
| Missing Adult | Color Code Gold | Code E | Color Code Gray | - | - |
| Missing Child | Color Code Gold | Color Code Pink | Color Code Pink | Color Code Pink | Color Code Pink |
| Pediatric Emergency | - | - | - | - | - |
| Severe Weather | Color Code Green | Color Code Gray | Plain Language | Plain Language | Color Code Gray |
| Violence | Code Strong | Plain Language: “Security Stat” | Color Code Gray | Color Code Gray | Plain Language: “Security Stat” |

| | n (%) | Code Identification Score (%): Mean (SD)¹ | Code Identification Score (%): F | Code Identification Score (%): p-Value² | Change in Confidence Score: Mean (SD)¹ | Change in Confidence Score: F | Change in Confidence Score: p-Value² |
|---|---|---|---|---|---|---|---|
| Facility | | | 33 | <0.001 | | 2 | 0.094 |
| A | 99 (33%) | 32.8 (14.8) | | | −0.90 (1.23) | | |
| B | 21 (6.9%) | 60.9 (19.8) | | | −0.29 (0.64) | | |
| C | 59 (19%) | 58.4 (17.4) | | | −1.03 (1.02) | | |
| D | 78 (26%) | 48.9 (15.5) | | | −0.77 (1.28) | | |
| E | 47 (15%) | 37.2 (16.6) | | | −1.02 (1.11) | | |
| Position Type | | | 1 | 0.3 | | 0.82 | 0.4 |
| Clinical | 196 (64%) | 45.3 (19.4) | | | −0.91 (1.14) | | |
| Non-clinical | 108 (36%) | 43.0 (19.0) | | | −0.79 (1.22) | | |
| Typical Shift | | | 2.3 | 0.1 | | 0.05 | >0.9 |
| Day Shift | 279 (92%) | 45.1 (19.3) | | | −0.87 (1.14) | | |
| Night Shift | 5 (1.6%) | 47.1 (17.2) | | | −0.80 (0.84) | | |
| Equal times on both shifts | 20 (6.6%) | 35.7 (17.5) | | | −0.80 (1.54) | | |
| Facility Experience | | | 3 | 0.031 | | 0.31 | 0.8 |
| 0–2 years | 90 (30%) | 40.2 (17.2) | | | −0.94 (1.27) | | |
| 2–5 years | 77 (25%) | 43.9 (19.6) | | | −0.86 (1.13) | | |
| 5–8 years | 32 (11%) | 49.6 (18.1) | | | −0.72 (1.08) | | |
| >8 years | 105 (35%) | 47.1 (20.4) | | | −0.86 (1.13) | | |
| Healthcare Experience | | | 3.8 | 0.011 | | 0.83 | 0.5 |
| 0–2 years | 51 (17%) | 40.5 (15.8) | | | −1.08 (1.38) | | |
| 2–5 years | 67 (22%) | 40.3 (16.8) | | | −0.85 (1.12) | | |
| 5–8 years | 37 (12%) | 51.4 (21.5) | | | −0.70 (1.05) | | |
| >8 years | 149 (49%) | 46.1 (20.2) | | | −0.85 (1.13) | | |
| Total Facilities in Career | | | 2.4 | 0.07 | | 1.2 | 0.3 |
| 1 facility | 123 (40%) | 48.0 (19.5) | | | −0.92 (1.14) | | |
| 2–3 facilities | 106 (35%) | 42.3 (18.1) | | | −0.71 (1.19) | | |
| 4–5 facilities | 42 (14%) | 43.0 (20.7) | | | −0.95 (1.21) | | |
| >5 facilities | 33 (11%) | 40.5 (19.0) | | | −1.09 (1.07) | | |
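As a rough check on how the F column relates to the group rows in the table above, the sketch below recomputes the Facility F statistic for Code Identification Score from each facility's reported n, mean, and SD. It assumes the reported F comes from a one-way ANOVA, which this excerpt does not state. The function name `anova_f_from_summary` is illustrative only, and because the inputs are rounded, the result can only approximate the reported value.

```python
from typing import Sequence, Tuple


def anova_f_from_summary(groups: Sequence[Tuple[int, float, float]]) -> float:
    """One-way ANOVA F statistic from per-group (n, mean, sd) summaries.

    Assumption: sd is the sample standard deviation (n - 1 denominator).
    """
    total_n = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / total_n

    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    # Within-group sum of squares, recovered from each group's SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)

    df_between = len(groups) - 1
    df_within = total_n - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# (n, mean, SD) of Code Identification Score for facilities A to E, from the table.
facility_groups = [
    (99, 32.8, 14.8),
    (21, 60.9, 19.8),
    (59, 58.4, 17.4),
    (78, 48.9, 15.5),
    (47, 37.2, 16.6),
]

f_stat = anova_f_from_summary(facility_groups)
print(f"Facility F statistic: {f_stat:.1f}")
```

With these rounded inputs the result lands close to the reported F of 33, which is consistent with (but does not prove) a one-way ANOVA on the facility groups.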

| | n (%) | Code Identification Score (%): Mean (SD)¹ | Code Identification Score (%): F | Code Identification Score (%): p-Value² | Change in Confidence Score: Mean (SD)¹ | Change in Confidence Score: F | Change in Confidence Score: p-Value² |
|---|---|---|---|---|---|---|---|
| Knowledge of Code Activation Procedures | | | 32 | <0.001 | | 0.13 | 0.7 |
| No | 94 (31%) | 35.6 (14.6) | | | −0.90 (1.30) | | |
| Yes | 210 (69%) | 48.5 (19.8) | | | −0.85 (1.10) | | |
| Witnessed Code Confusion | | | 0.07 | 0.8 | | 0.19 | 0.7 |
| No | 196 (64%) | 44.3 (18.4) | | | −0.85 (1.10) | | |
| Yes | 108 (36%) | 44.9 (20.8) | | | −0.91 (1.28) | | |
| Worked at a Facility with Different Color Codes | | | 2.6 | 0.078 | | 1.7 | 0.2 |
| No | 197 (65%) | 46.3 (19.2) | | | −0.80 (1.12) | | |
| Yes | 80 (26%) | 41.2 (19.5) | | | −0.93 (1.27) | | |
| Maybe | 27 (8.9%) | 41.0 (17.6) | | | −1.22 (1.09) | | |
| Code Type Preference | | | 4.7 | 0.031 | | 0.5 | 0.5 |
| Color Codes | 199 (65%) | 46.2 (19.4) | | | −0.83 (1.14) | | |
| Plain Language | 105 (35%) | 41.2 (18.6) | | | −0.93 (1.21) | | |
| Code Exceptions for Plain Language | | | 18 | <0.001 | | 0.8 | 0.4 |
| No | 64 (21%) | 35.6 (17.1) | | | −0.98 (1.40) | | |
| Yes | 240 (79%) | 46.9 (19.1) | | | −0.84 (1.10) | | |
| Training at Orientation | | | 5.8 | 0.017 | | 0.49 | 0.5 |
| No | 96 (32%) | 40.6 (17.7) | | | −0.94 (1.19) | | |
| Yes | 208 (68%) | 46.3 (19.7) | | | −0.84 (1.16) | | |
| Training During Drills/Exercises | | | 4.1 | 0.044 | | 2.5 | 0.12 |
| No | 135 (44%) | 42.0 (19.4) | | | −0.99 (1.23) | | |
| Yes | 169 (56%) | 46.5 (19.0) | | | −0.78 (1.10) | | |
| Annual Training | | | 18 | <0.001 | | 4.6 | 0.033 |
| No | 149 (49%) | 39.8 (17.2) | | | −1.01 (1.23) | | |
| Yes | 155 (51%) | 49.0 (20.1) | | | −0.73 (1.08) | | |
| Time Since Last Training | | | 2.2 | 0.093 | | 1.4 | 0.2 |
| <1 month | 51 (17%) | 43.7 (22.9) | | | −0.67 (1.11) | | |
| 1–6 months | 113 (37%) | 47.7 (19.6) | | | −0.80 (1.18) | | |
| 6–12 months | 83 (27%) | 43.6 (17.2) | | | −0.94 (1.10) | | |
| >1 year | 57 (19%) | 40.1 (17.2) | | | −1.09 (1.26) | | |

| | Univariate: Beta | Univariate: 95% CI¹ | Univariate: p-Value | Multivariate: Beta | Multivariate: 95% CI¹ | Multivariate: p-Value |
|---|---|---|---|---|---|---|
| Facility | | | | | | |
| A | — | — | | — | — | |
| B | 28.1 | 20.5, 35.7 | <0.001 | 25.6 | 18.3, 33.0 | <0.001 |
| C | 25.6 | 20.4, 30.8 | <0.001 | 21.7 | 16.4, 27.0 | <0.001 |
| D | 16.1 | 11.4, 20.9 | <0.001 | 15.9 | 11.1, 20.8 | <0.001 |
| E | 4.48 | −1.12, 10.1 | 0.12 | 3.92 | −1.46, 9.31 | 0.15 |
| Position Type | −2.35 | −6.87, 2.18 | 0.31 | | | |
| Typical Shift | | | | | | |
| Day Shift | — | — | | — | — | |
| Night Shift | 2.06 | −14.9, 19.0 | 0.81 | 3.18 | −10.4, 16.8 | 0.65 |
| Equal times on both shifts | −9.37 | −18.1, −0.671 | 0.036 | −3.84 | −11.1, 3.42 | 0.3 |
| Facility Experience | | | | | | |
| 0–2 years | — | — | | — | — | |
| 2–5 years | 3.72 | −2.08, 9.52 | 0.21 | 5.85 | 0.028, 11.7 | 0.05 |
| 5–8 years | 9.39 | 1.70, 17.1 | 0.017 | 4.09 | −4.17, 12.3 | 0.33 |
| >8 years | 6.98 | 1.62, 12.4 | 0.011 | 2.28 | −4.39, 8.95 | 0.5 |
| Healthcare Experience | | | | | | |
| 0–2 years | — | — | | — | — | |
| 2–5 years | −0.178 | −7.10, 6.74 | 0.96 | −3.66 | −10.7, 3.37 | 0.31 |
| 5–8 years | 10.9 | 2.84, 18.9 | 0.008 | 3.72 | −5.16, 12.6 | 0.41 |
| >8 years | 5.59 | −0.447, 11.6 | 0.071 | 0.302 | −7.31, 7.91 | 0.94 |
| Total Facilities in Career | | | | | | |
| 1 facility | — | — | | — | — | |
| 2–3 facilities | −5.65 | −10.6, −0.682 | 0.027 | −2.36 | −6.76, 2.04 | 0.29 |
| 4–5 facilities | −4.94 | −11.6, 1.76 | 0.15 | −6.72 | −12.9, −0.579 | 0.033 |
| >5 facilities | −7.49 | −14.8, −0.143 | 0.047 | −6.29 | −13.0, 0.411 | 0.067 |
| Knowledge of Code Activation Procedures | 12.8 | 8.37, 17.3 | <0.001 | 9.07 | 5.01, 13.1 | <0.001 |
| Witnessed Code Confusion | 0.629 | −3.90, 5.16 | 0.79 | | | |
| Worked at a Facility with Different Color Codes | | | | | | |
| No | — | — | | — | — | |
| Yes | −5.18 | −10.2, −0.200 | 0.042 | −1.62 | −6.22, 2.98 | 0.49 |
| Maybe | −5.33 | −13.0, 2.37 | 0.18 | 0.726 | −5.84, 7.29 | 0.83 |
| Code Type Preference | −5.01 | −9.53, −0.482 | 0.031 | 0.435 | −3.36, 4.23 | 0.82 |
| Code Exceptions for Plain Language | 11.3 | 6.11, 16.4 | <0.001 | 4.32 | −0.246, 8.89 | 0.065 |
| Training at Orientation | 5.67 | 1.05, 10.3 | 0.017 | 4.2 | 0.316, 8.09 | 0.035 |
| Training During Drills/Exercises | 4.48 | 0.147, 8.82 | 0.044 | 2.12 | −1.52, 5.76 | 0.26 |
| Annual Training | 9.15 | 4.94, 13.4 | <0.001 | 0.781 | −3.01, 4.57 | 0.69 |
| Time Since Last Training | | | | | | |
| <1 month | — | — | | | | |
| 1–6 months | 4.03 | −2.30, 10.4 | 0.21 | | | |
| 6–12 months | −0.066 | −6.74, 6.61 | 0.98 | | | |
| >1 year | −3.6 | −10.8, 3.64 | 0.33 | | | |
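One way to read the univariate Beta column for a categorical predictor is as a difference in mean Code Identification Score against the reference level (the row marked with a dash). The sketch below illustrates this for Facility, under the assumption that each univariate model is an ordinary least-squares regression with dummy coding and Facility A as the reference; this excerpt does not state the model specification. The helper `mean_differences` is hypothetical, and the group means are the rounded values reported in the score table earlier in the document.

```python
from typing import Dict

# Mean Code Identification Score (%) by facility, as reported (rounded).
facility_means: Dict[str, float] = {
    "A": 32.8, "B": 60.9, "C": 58.4, "D": 48.9, "E": 37.2,
}

# Univariate betas for Facility as reported in the regression table.
reported_betas: Dict[str, float] = {"B": 28.1, "C": 25.6, "D": 16.1, "E": 4.48}


def mean_differences(means: Dict[str, float], reference: str) -> Dict[str, float]:
    """Difference between each level's mean and the reference level's mean."""
    ref_mean = means[reference]
    return {
        level: mean - ref_mean
        for level, mean in means.items()
        if level != reference
    }


diffs = mean_differences(facility_means, reference="A")
for level, diff in diffs.items():
    # Small gaps are expected because the published means are rounded.
    print(f"Facility {level}: mean difference {diff:.2f}, reported beta {reported_betas[level]}")
```

Under that assumption, each mean difference agrees with the reported univariate beta to within rounding. The multivariate betas cannot be recovered this way, since they are adjusted for the other predictors.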

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Harris, C.; Zerylnick, J.; McCarthy, K.; Fease, C.; Taylor, M. Breaking the Code: Considerations for Effectively Disseminating Mass Notifications in Healthcare Settings. Int. J. Environ. Res. Public Health 2022, 19, 11802. https://doi.org/10.3390/ijerph191811802
