Data-Driven Threat Analysis for Ensuring Security in Cloud Enabled Systems

Abstract

1. Introduction

2. Related Works

2.1. Threat Analysis Model and Standards

2.2. Cloud Threats

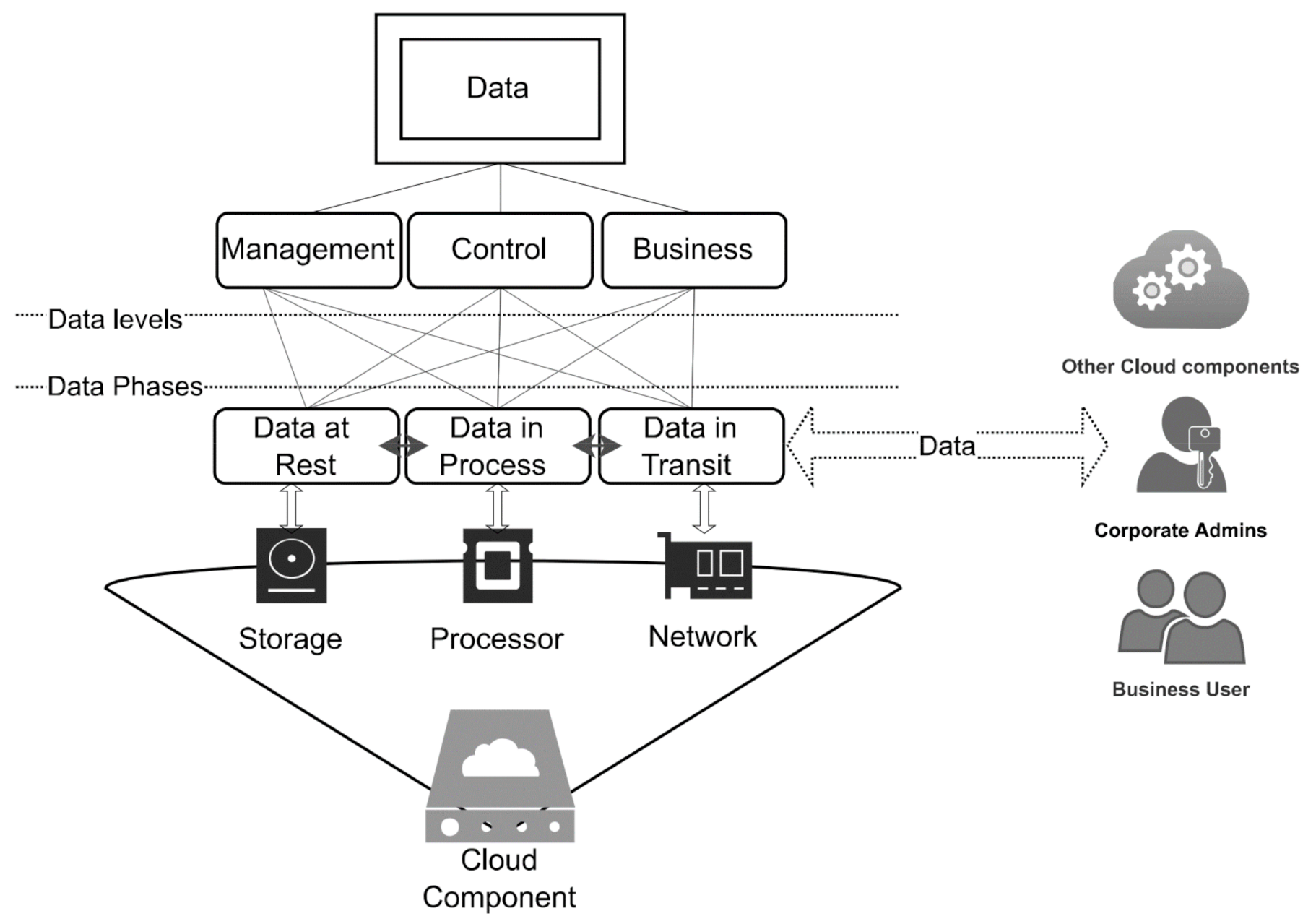

3. Abstraction of Data Level

- Management Data-level (mD) is data that the organisation generates when managing cloud components, such as the cloud admin portal or cloud computing resources. The data could include authentication or access methodology.

- Control Data-level (cD) is any data that supports technology functions, such as application programming interface (API) calls, routing information, application inquiries, configuration updates, logs, action events, or data backup and replication. Control data can be purely operational or can carry business meaning, such as an API request for customer information or identity validation. In either case, the data needs to be communicated between local and cloud systems.

- Business Data-level (bD) refers to any data related to business services that business users generate to access organisation cloud services such as emails.

- Data is considered at Rest (Dr) when it is stored in CSP infrastructure or in a remote server [19]. At this point the data has reached the final stage of its life cycle in the cloud, where it is saved for long-term use [20]. It can still be attacked, modified, or erased while held at a remote location under the control of the system's security.

- Data in Process (Dp) refers to data at rest that have been accessed by a user or service for a brief period of time, for example during the execution of an application. Although such data are not inherently suspicious, a malicious service, software, or hardware component might change or leak them. A security breach may be signalled if data are obtained through unauthorised access to system resources via an Advanced Persistent Threat or malicious hardware components. For example, an Amazon AWS data leak attack was reportedly caused by a rogue microchip on Supermicro servers utilised by AWS [20].

- Data in Transit (Dt) refers to data that are being shared with other systems or users. Data in transit are particularly vulnerable in cloud computing systems, especially when moving through unsecured networks or to an application programming interface (API) that lets programs connect with one another. Business data and operation data, such as admin management identity, are transmitted via the internet in a cloud environment. Data in transit are vulnerable to a variety of cyberattacks, including Man-In-The-Middle (MITM) attacks [21]. According to [22], this data phase should be protected regardless of the increased regulatory focus on data security, since a data breach can affect multiple levels of a business, for example through sensitive data exposure that results in reputational harm or financial penalties.
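The three data levels and three data phases above can be combined into a small data model. The following is a minimal sketch, not part of d-TM itself: the class names `DataLevel`, `DataPhase`, and `DataState` are hypothetical, and only the abbreviations (mD, cD, bD, Dr, Dp, Dt) come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class DataLevel(Enum):
    """Data levels, keyed by the abbreviations used in the text."""
    MANAGEMENT = "mD"
    CONTROL = "cD"
    BUSINESS = "bD"


class DataPhase(Enum):
    """Data phases, keyed by the abbreviations used in the text."""
    AT_REST = "Dr"
    IN_PROCESS = "Dp"
    IN_TRANSIT = "Dt"


@dataclass(frozen=True)
class DataState:
    """Hypothetical pairing of one data level with one data phase."""
    level: DataLevel
    phase: DataPhase

    def label(self) -> str:
        return f"({self.level.value}, {self.phase.value})"


# Example: admin credentials travelling to the cloud portal.
admin_login = DataState(DataLevel.MANAGEMENT, DataPhase.IN_TRANSIT)
print(admin_login.label())
```

Keeping level and phase as separate enumerations means that each of the nine combinations can be addressed individually when threats are later assigned to assets.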

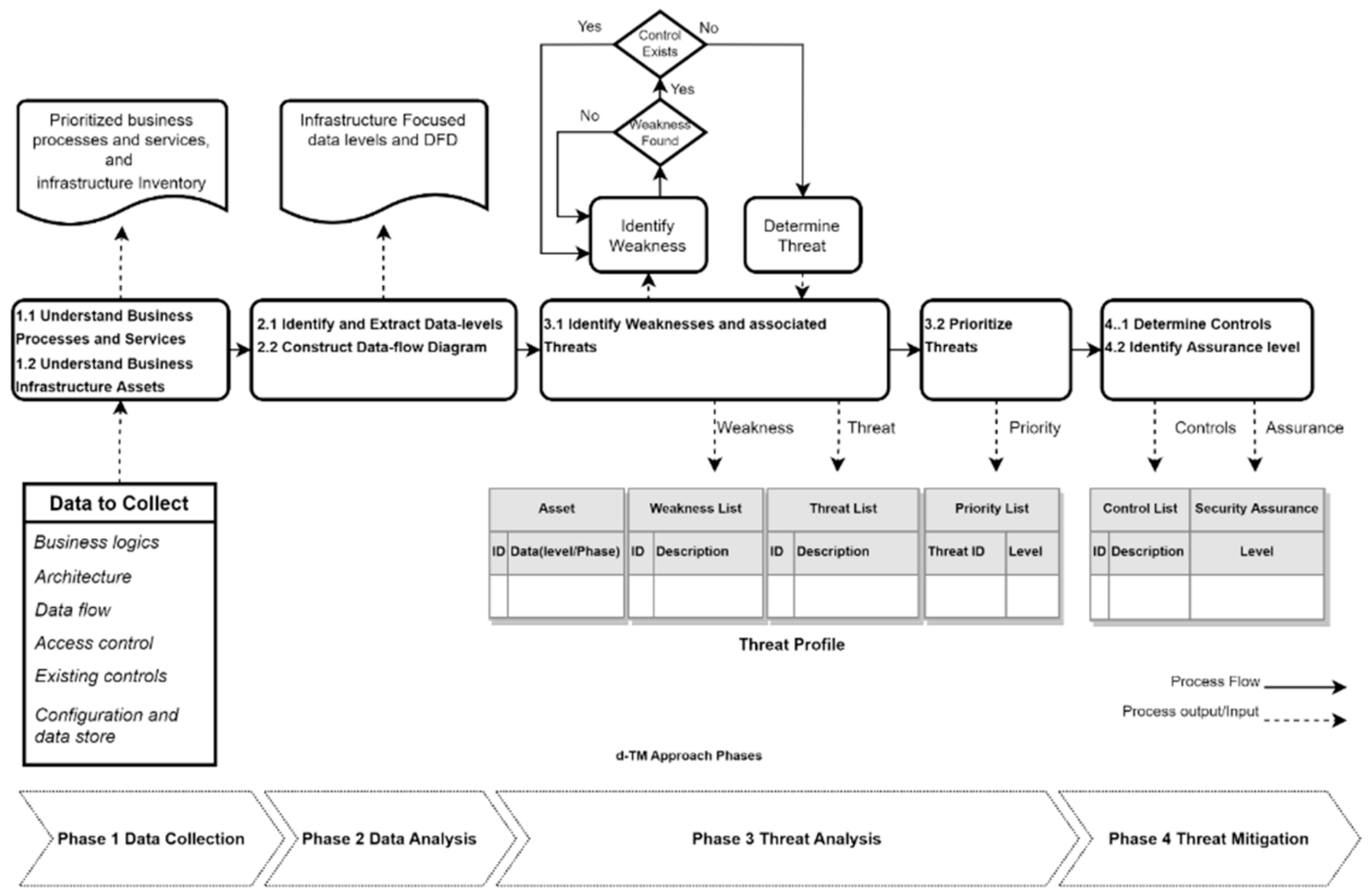

4. The Data Driven Threat Analysis

4.1. Justification for Using Data-Driven Approach

4.2. d-TM Deployed Concepts

4.2.1. Threat Layers in Cloud Computing

- The first layer is the Agent, which covers the tools that cloud users need to access data or services, such as web browsers. The web browser is used to access any cloud service, and a compromised or vulnerable browser could lead to severe threats to data. The browser could hold sensitive information, i.e., session details, encryption keys, or saved credentials. This information could be compromised while saved or processed by rogue software or plugins [23], and also while in transit by network attacks such as MITM [24]. It is important to keep the user agent secured because the user could be a cloud admin who accesses the cloud portal for day-to-day operations, and stolen admin credentials could lead to a cloud takeover.

- The second layer is the Network, which aims to identify any device that interacts with data before they reach the business cloud service or server. This layer could include physical or virtual routers, switches, firewalls, or load balancers. Business data, for instance, are in a transit state at this stage and could be affected by any configuration manipulation that leads to data leakage. This kind of impact is not limited to attackers: a cloud admin could also disrupt data through a misconfiguration. The network is eventually connected to a compute resource that provides access to the hosted service.

- The third layer is Compute, which represents the platform, software, or operating system that hosts the business application, for example, Linux OS. In cloud technology, the compute layer could have a stack of technologies such as virtual machines or containers. Each compute virtualization technology should be assessed differently. For example, a virtual machine deployment runs a host OS such as Linux and stacks a number of guest virtual machines with different operating systems on top, whereas container-based systems run a single OS with different runtimes [25]. Each deployment should be secured against attack, since any manipulation of the host OS drivers could disrupt all guest VMs or containers. Once data are received by the compute layer, the next action is to hand them over to the installed business service.

- The fourth layer is the Application, which includes a single piece of software or a set of software. At this stage, data move from the transit phase to being processed or stored. The application layer could be exposed to many threats because it interfaces directly with business users and external networks, unlike the compute and network layers, which are not meant to interact with business users.

- The final layer is Storage, which describes the final destination for data, where the dataset moves from the process state to the at-rest state. Storage can be local (attached disks) or remote (network storage). When data are stored in remote storage, they are also exposed to threats while in transit to the network storage and while being processed by it.
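The ordering of the five layers along the data path can be sketched as follows. This is an illustrative sketch only: the `Layer` enumeration and the `data_path` helper are hypothetical names, and the assumption that data always cross the layers in the listed order is a simplification of the description above.

```python
from enum import IntEnum


class Layer(IntEnum):
    """The five d-TM layers, numbered in the order they are listed."""
    AGENT = 1
    NETWORK = 2
    COMPUTE = 3
    APPLICATION = 4
    STORAGE = 5


def data_path(start: Layer, end: Layer) -> list[Layer]:
    """Return the layers crossed from start to end, inclusive (hypothetical helper)."""
    step = 1 if end >= start else -1
    return [Layer(i) for i in range(start, end + step, step)]


# A request from the user's browser down to stored data crosses every layer.
for layer in data_path(Layer.AGENT, Layer.STORAGE):
    print(layer.name)
```

Listing the crossed layers in order gives the analyst a checklist of places where a given piece of data could be exposed.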

4.2.2. Threat Actors

- Business-User represents any human who is legitimately accessing the organisation’s resources and is looking for a specific service benefit.

- Business-Operator represents any human who has legitimate access to resources for administrative duties such as updating, maintaining, or troubleshooting.

- Business-Systems represent any service or process that is legitimately connected or able to connect to the organisation’s resources for business or administrative support, such as IoT.

- Threat-Actor represents any of the types (user, operator, or system) with the intention of abusing or disrupting business operations, or of gaining illegitimate access to data.

4.2.3. Common Security Knowledge Base

4.3. Threat Analysis Process

4.3.1. Phase 1 Data Collection

- High (H): mission-critical services that offer core functions to the business; consequently, the firm cannot function without them.

- Medium (M): supporting services to the core function, where business interruption is limited and can last for a short length of time.

- Low (L): a service that provides support to fundamental functions and allows the organisation to operate with minimum effect for an extended period of time.

- Non-critical (NC): a service that supports the business but has no influence on core functions, such as a customer satisfaction system.

- Asset Type: Information needed to characterise the asset, such as its name, model, and so on. In addition, each asset should be mapped to one of the five d-TM layers which are the agent (Agt), network (Net), compute (Cmp), application (App), and storage (Stg).

- Asset Administration: The access mechanism used by admins to operate the asset; the security analyst needs to determine the protocols, tools, access conditions, and required privilege to operate the asset.

- Asset Dependency: Inherent dependencies among the assets for service delivery. This property helps in understanding potential attacks that exploit a weakness in a dependent system, which might result in a sequential failure or a cascading breach. d-TM follows the MITIGATE classification [29] for dependency types and access mechanisms of assets; hence, a dependency is determined by two factors: Dependency Type and Access. The dependency type defines the relationship between two assets, and there are five types (host, exchange, storage, control, or process). The access mechanism represents how an asset communicates with another asset, and there are three types (direct, local, and remote).
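The asset properties above, and the cascading effect of a dependency, can be illustrated with a short sketch. The `Asset` and `Dependency` classes and the `impacted_by` helper are hypothetical names introduced for illustration; only the five dependency types, the three access mechanisms, and the layer abbreviations come from the text.

```python
from dataclasses import dataclass, field

DEPENDENCY_TYPES = {"host", "exchange", "storage", "control", "process"}
ACCESS_TYPES = {"direct", "local", "remote"}


@dataclass(frozen=True)
class Dependency:
    target: str    # id of the asset that is depended upon
    dep_type: str  # one of DEPENDENCY_TYPES
    access: str    # one of ACCESS_TYPES

    def __post_init__(self) -> None:
        if self.dep_type not in DEPENDENCY_TYPES:
            raise ValueError(f"unknown dependency type: {self.dep_type}")
        if self.access not in ACCESS_TYPES:
            raise ValueError(f"unknown access mechanism: {self.access}")


@dataclass
class Asset:
    asset_id: str
    layer: str  # Agt, Net, Cmp, App, or Stg
    dependencies: list[Dependency] = field(default_factory=list)


def impacted_by(assets: dict[str, Asset], failed: str) -> set[str]:
    """Ids of assets that depend, directly or transitively, on `failed`."""
    impacted: set[str] = set()
    frontier = {failed}
    while frontier:
        newly_hit = {
            a.asset_id
            for a in assets.values()
            if a.asset_id != failed
            and a.asset_id not in impacted
            and any(d.target in frontier for d in a.dependencies)
        }
        impacted |= newly_hit
        frontier = newly_hit
    return impacted


# Illustrative inventory: an application hosted on a compute node behind a firewall.
inventory = {
    "Net3": Asset("Net3", "Net"),
    "Cmp0": Asset("Cmp0", "Cmp", [Dependency("Net3", "exchange", "local")]),
    "App0": Asset("App0", "App", [Dependency("Cmp0", "host", "direct")]),
}
print(sorted(impacted_by(inventory, "Net3")))
```

Walking the dependencies in reverse shows which assets inherit a breach or failure of a single component, which is the cascading impact the dependency property is meant to expose.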

4.3.2. Phase 2 Data Analysis

4.3.3. Phase 3 Threat Analysis

- Very High: A very high impact on business continuity and critical business services which requires immediate action.

- High: A significantly high threat to business continuity in which critical business services are interrupted; it requires attention within a specific time frame.

- Medium: An intermediate threat to business continuity, and no critical business services are interrupted due to its impact. Some supporting business services are impacted, but the business can run for longer. Additionally, the mitigating action should be applied within a timeframe of a year.

- Low: A low threat to business continuity, and no critical business services are interrupted due to its impact; applying a control is optional. Some supporting business services are impacted, and the business can run normally.

- Very Low: A significantly low threat to business continuity, and no critical business services and supporting services are interrupted due to its impact. Additionally, applying action could be optional or ignored.

- Metric 1: Threat-Occurrence represents the probability of a particular threat occurring for an asset with three scales:

  - High (H): The organisation experiences this threat twice or more in a one-year timeframe. Industry researchers forecast this threat as a top-rated attack for similar businesses within one year;

  - Medium (M): The organisation experiences this threat once in a one-year timeframe. Industry researchers forecast this threat as a medium-rated attack for similar businesses within one year;

  - Low (L): The organisation experiences this threat once or not at all in a two-year timeframe. Industry researchers forecast this threat as a medium to low-rated attack on similar businesses within the last two years.

- Metric 2: Attacker-Gain represents the goal behind the attack, including curiosity, personal gain, personal fame, or national interests, with three scales: high, medium, and low.

- Metric 1: Attacker-Capability refers to overall attacker capabilities such as skills, knowledge, opportunities, and resources that the attacker incorporates to exploit a weakness. Capabilities could be estimated as high, medium or low.

  - High: A sophisticated level of expertise and knowledge with adequate resources to generate opportunities for continuous attacks.

  - Medium: Moderate level of expertise and knowledge with reasonable resources to provide a considerable ability to generate multiple opportunities and continuous attacks.

  - Low: A low level of expertise and knowledge with limited resources and the ability for attack.

- Metric 2: Access-Complexity determines the level of complexity needed to exploit a particular weakness, where each organisation has different levels of access and controls. Attackers often estimate the level of complexity of any attack to find the easiest and success-guaranteed approach to compromise organisation data. Likewise, security analysts evaluate existing access mechanisms and implement security controls for identified gaps; it is important to understand the access complexity that could reduce the likelihood of exploiting existing weaknesses. Access complexity can be estimated as high, medium or low. Table 3 represents the correlation matrix for the likelihood of threat complexity for a particular asset.

  - Multi-level Access: The attacker requires a special access condition, and this condition requires a high level of effort and expertise that could include a multi-stage attack.

  - Single-level Access: The attacker requires a somewhat special access condition, and this condition requires a medium level of effort and expertise.

  - Direct: The attacker requires no special access condition.

- High (H): The expected impact of the mission-critical services that provide core functions to business is High; the business cannot run without it.

- Medium (M): The expected impact to the business is medium, where the supporting service is impacted; the business could run for some time.

- Low (L): The expected impact to the business is low, where businesses run with minimal impact.

4.3.4. Phase 4 Threat Mitigation

- Completeness (Ct): The identified controls are relevant to tackling the threat and related weaknesses. Controls are evaluated specifically in terms of the level of coverage that results in the elimination or reduction of threat impact. Controls can provide none, partial, or full coverage to a specific threat, whereas control capabilities are designed to mitigate threats to specific data levels at specific phases, such as attacks to management data levels in the transit phase.

  - High (H = 3): Control provides the necessary features to mitigate the threat’s likelihood without requiring any additional enhancements or supporting controls.

  - Medium (M = 2): Control provides some features to reduce the threat likelihood; however, additional enhancements or supporting controls are required.

  - Low (L = 1): Control provides a significantly minimal feature to reduce the likelihood of the threat; however, additional enhancements or supporting controls are required.

- Effectiveness (Ef): Effectiveness is the ability of security controls to effectively protect, detect, and respond to threats. This implies it must be capable of preventing the threat from occurring in the first place, recognizing it when it does, and successfully responding to limit the impact.

  - High (H = 3): Control aims to prevent the occurrence of an attack, as well as to detect and respond, when necessary, without the need for any further enhancements or supporting controls.

  - Medium (M = 2): Control aims to provide two essential roles, such as protecting and detecting the occurrence of attack, with no response. However, further enhancements or supporting controls are required.

  - Low (L = 1): Control aims to provide a single essential role such as detecting the occurrence of attack, with no protection and response. However, further enhancements or supporting controls are required.

- Complexity (Cx): When it is difficult to implement or operate control, a number of challenges to the organisation’s security team become evident. To avoid major changes to the overall system, control should be smoothly integrated into organisational systems. Furthermore, operation complexity presents a challenge when the team is unable to operate the control due to a lack of knowledge or because the control provides a complex workflow to apply actions.

  - High (H = 1): Control can integrate seamlessly into organisation infrastructure and the team have the skills to implement and operate the control.

  - Medium (M = 2): Control can be integrated into organisation infrastructure with minimal changes, and the team have no skills to implement and operate the control.

  - Low (L = 3): Integrating control in organisation infrastructure is a complex procedure and the team lack the skills to implement and operate the control.
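The numeric values attached to the three control criteria can be used to compare candidate controls. The sketch below is an assumption-laden illustration: the per-criterion values are copied from the lists above, but the `control_score` function and its simple summation are hypothetical, since the text here does not state how the three values are combined.

```python
# Values as listed for Completeness (Ct) and Effectiveness (Ef).
COVERAGE_SCORE = {"H": 3, "M": 2, "L": 1}
# Values as listed for Complexity (Cx).
COMPLEXITY_SCORE = {"H": 1, "M": 2, "L": 3}


def control_score(ct: str, ef: str, cx: str) -> int:
    """Sum the three criterion values (ASSUMED aggregation, not from the source)."""
    return COVERAGE_SCORE[ct] + COVERAGE_SCORE[ef] + COMPLEXITY_SCORE[cx]


# Hypothetical candidate controls rated as (Ct, Ef, Cx).
candidates = {
    "control-a": ("H", "M", "L"),
    "control-b": ("M", "M", "H"),
}
ranked = sorted(candidates, key=lambda name: control_score(*candidates[name]), reverse=True)
for name in ranked:
    print(name, control_score(*candidates[name]))
```

Any other aggregation, such as a weighted sum or a product, could be substituted in `control_score` without changing the rest of the sketch.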

5. Evaluation

- To understand the applicability of using d-TM;

- To identify any issues relating to the implementation of d-TM.

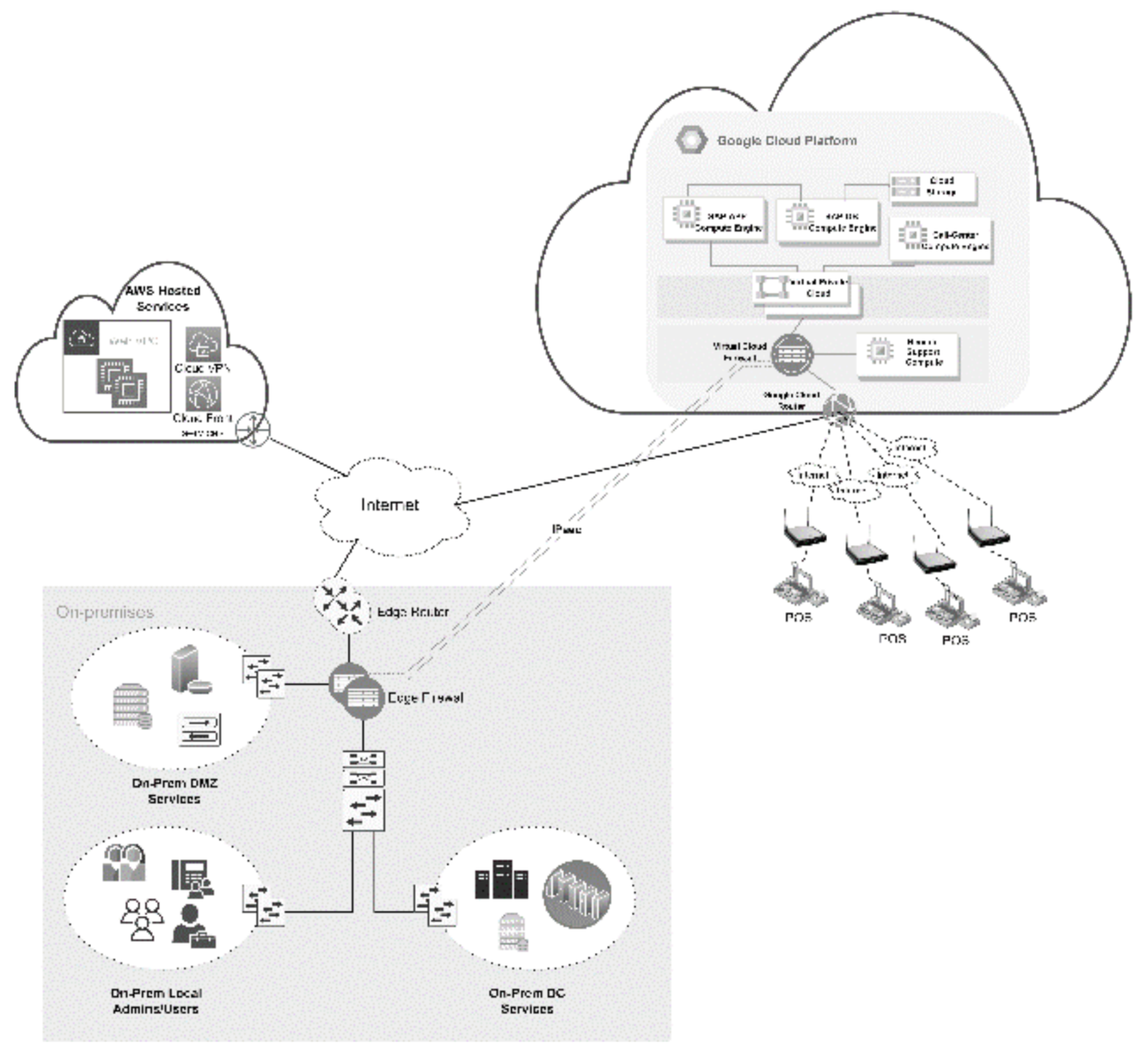

5.1. Case Study Scenario

5.2. d-TM Implementation

5.2.1. Phase 1 Data Collection

5.2.2. Phase 2 Data Analysis

- Business User (aka USR), which refers to any staff of sales, finance, or restaurant front-end representatives that use Point-of-Sales machines. This actor has limited privilege and is able to access the SAP application for business-related activities such as registering or monitoring sales orders.

- Business Operator (aka OPR), which refers to any IT staff working for the organisation who access infrastructure or services for administration activities such as troubleshooting. This actor has a high privilege that provides full control of the asset.

- Business System (aka SYS) which refers to any system-to-system relationships, such as the SAP application and SAP DB as well as CSP automation controllers and organisation compute. It is a system or process of exchanging data using API calls for instance. The system may have full or restricted privileges, depending on its role. For example, the CSP console has full privilege over organisation compute hosted in the cloud.

5.2.3. Phase 3 Threat Analysis

- The browser runs with the default configuration.

- Some commercial plugins are installed that are not related to business.

- A single profile is used by multiple users to access the Windows operating system.

- The PoS machine uses an insecure wireless network, which is shared with restaurant staff for personal use.

- The PoS machine data stored in the Windows operating system are accessible by restaurant staff.

5.2.4. Phase 4 Threat Mitigation

6. Discussion

6.1. d-TM Process

6.2. Threats and Control

- Assets: A total of five assets were investigated and regarded as significant components in the cloud data path. Data were created either by the business user at the PoS machine or by the system administrator using a corporate laptop to access the cloud. The assets are Agt0, Agt1, Net0, Net3 and App0.

- Weaknesses: A total of seven weaknesses were determined out of the five assets. The seven weaknesses were investigated to reveal the implied threats to data.

- Threats: A total of seven threats were identified out of the five assets assessed. The threats targeted data at infrastructure components in different layers, such as agent, network, and application.

- Critical threats: A total of four threats were categorised as critical out of seven identified threats. The four threats required immediate attention in the case study. Denial of service, XSS, and social engineering represented critical threats due to weaknesses in two assets (Net3, App0).

- Data: Two of the five cloud assets represent a high risk to data. The threats mainly target two data levels, the management and business data levels, and data are at risk in two phases, in transit and in process.

- Controls: Four controls were identified to mitigate the critical threats. The controls cover authentication improvement, input validation, and service assurance.

6.3. Comparing with the Existing Works

6.4. Limitations of d-TM

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Cloud Computing Market Size, Share and Global Market Forecast to 2026|COVID-19 Impact Analysis|Markets and Markets. Available online: https://www.marketsandmarkets.com/Market-Reports/cloud-computing-market-234.html (accessed on 1 June 2022).

2. Alouffi, B.; Hasnain, M.; Alharbi, A.; Alosaimi, W.; Alyami, H.; Ayaz, M. A Systematic Literature Review on Cloud Computing Security: Threats and Mitigation Strategies. IEEE Access 2021, 9, 57792–57807.

3. Cloud Security Challenges in 2020. Available online: https://cloudsecurityalliance.org/blog/2020/02/18/cloud-security-challenges-in-2020/ (accessed on 1 June 2022).

4. Abdulsalam, Y.S.; Hedabou, M. Security and privacy in cloud computing: Technical review. Future Internet 2022, 14, 11.

5. UcedaVelez, T.; Morana, M.M. Risk Centric Threat Modeling: Process for Attack Simulation and Threat Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2015.

6. Sequeiros, J.B.F.; Chimuco, F.T.; Samaila, M.G.; Freire, M.M.; Inácio, P.R.M. Attack and system modeling applied to IoT, cloud, and mobile ecosystems: Embedding security by design. ACM Comput. Surv. (CSUR) 2020, 53, 1–32.

7. Draft NIST Special Publication 800-154 Guide to Data-Centric System. Available online: http://csrc.nist.gov/publications (accessed on 22 February 2022).

8. Woodage, J.; Shumow, D. An Analysis of NIST SP 800-90A. In Proceedings of the Advances in Cryptology - EUROCRYPT 2019, 38th Annual International Conference on the Theory and Applications of Cryptographic Techniques, Darmstadt, Germany, 19–23 May 2019; Ishai, Y., Rijmen, V., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11477.

9. Vidal, C.; Choo, K.K.R. Situational Crime Prevention and the Mitigation of Cloud Computing Threats. In Proceedings of the Security and Privacy in Communication Networks, SecureComm 2017 International Workshops, ATCS and SePrIoT, Niagara Falls, ON, Canada, 22–25 October 2017; Lin, X., Ghorbani, A., Ren, K., Zhu, S., Zhang, A., Eds.; Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer: Cham, Switzerland, 2018; Volume 239.

10. Islam, S.; Fenz, S.; Weippl, E.; Kalloniatis, C. Migration goals and risk management in cloud computing: A review of state of the art and survey results on practitioners. Int. J. Secur. Softw. Eng. (IJSSE) 2016, 7, 44–73.

11. Abdurachman, E.; Gaol, F.L.; Soewito, B. Survey on threats and risks in the cloud computing environment. Procedia Comput. Sci. 2019, 161, 1325–1332.

12. Alhebaishi, N.; Wang, L.; Singhal, A. Threat Modeling for Cloud Infrastructures. ICST Trans. Secur. Saf. 2019, 5, 156246.

13. Manzoor, S.; Vateva-Gurova, T.; Trapero, R.; Suri, N. Threat Modeling the Cloud: An Ontology Based Approach. In Proceedings of the Information and Operational Technology Security Systems, IOSec 2018, CIPSEC Project, Heraklion, Crete, Greece, 13 September 2018; Fournaris, A., Lampropoulos, K., Marín Tordera, E., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11398.

14. Mitsis, G.; Apostolopoulos, P.A.; Tsiropoulou, E.E.; Papavassiliou, S. Intelligent dynamic data offloading in a competitive mobile edge computing market. Future Internet 2019, 11, 118.

15. Al-Mohannadi, H.; Awan, I.; al Hamar, J. Analysis of adversary activities using cloud-based web services to enhance cyber threat intelligence. Serv. Oriented Comput. Appl. 2020, 14, 175–187.

16. Jianxing, Y.; Haicheng, C.; Shibo, W.; Haizhao, F. A novel risk matrix approach based on cloud model for risk assessment under uncertainty. IEEE Access 2021, 9, 27884–27896.

17. Akinrolabu, O.; Nurse, J.R.C.; Martin, A.; New, S. Cyber risk assessment in cloud provider environments: Current models and future needs. Comput. Secur. 2019, 87, 101600.

18. Awaysheh, F.M.; Aladwan, M.N.; Alazab, M.; Alawadi, S.; Cabaleiro, J.C.; Pena, T.F. Security by Design for Big Data Frameworks Over Cloud Computing. IEEE Trans. Eng. Manag. 2021, 1–18.

19. Chaudhry, S.A.; Yahya, K.; Al-Turjman, F.; Yang, M.-H. A secure and reliable device access control scheme for IoT based sensor cloud systems. IEEE Access 2020, 8, 139244–139254.

20. The Big Hack: How China Used a Tiny Chip to Infiltrate U.S. Companies - Bloomberg. Available online: https://www.bloomberg.com/news/features/2018-10-04/the-big-hack-how-china-used-a-tiny-chip-to-infiltrate-america-s-top-companies (accessed on 8 May 2022).

21. Cheng, C.Y.; Colbert, E.; Liu, H. Experimental study on the detectability of man-in-the-middle attacks for cloud applications. In Proceedings of the 2019 3rd IEEE International Conference on Cloud and Fog Computing Technologies and Applications, Cloud Summit 2019, Washington, DC, USA, 8–10 August 2019; pp. 52–57.

22. Beata Berecki. How to Protect Data in Motion?|Endpoint Protector. 2019. Available online: https://www.endpointprotector.com/blog/how-to-protect-data-in-motion/ (accessed on 27 January 2022).

23. CAPEC - CAPEC-94: Adversary in the Middle (AiTM) (Version 3.7). Available online: https://capec.mitre.org/data/definitions/94.html (accessed on 1 June 2022).

24. What Is MITM (Man in the Middle) Attack|Imperva. Available online: https://www.imperva.com/learn/application-security/man-in-the-middle-attack-mitm/ (accessed on 1 June 2022).

25. Containers vs. Virtual Machines|Microsoft Docs. Available online: https://docs.microsoft.com/en-us/virtualization/windowscontainers/about/containers-vs-vm (accessed on 1 June 2022).

26. CAPEC - Common Attack Pattern Enumeration and Classification (CAPEC™). 2022. Available online: https://capec.mitre.org/ (accessed on 18 January 2022).

27. CAPEC - CAPEC-103: Clickjacking (Version 3.7). Available online: https://capec.mitre.org/data/definitions/103.html (accessed on 1 June 2022).

28. CAPEC - CAPEC-61: Session Fixation (Version 3.7). Available online: https://capec.mitre.org/data/definitions/61.html (accessed on 1 June 2022).

29. Buhl, R.; MITIGATE. Multidimensional, IntegraTed, RIsk Assessment Framework and Dynamic, Collaborative Risk ManaGement Tools for Critical Information infrAstrucTurEs. 2017. Available online: https://cordis.europa.eu/project/id/653212 (accessed on 10 June 2022).

30. Shostack, A. Threat Modeling: Designing for Security; John Wiley & Sons: Hoboken, NJ, USA, 2014.

31. CWE - Common Weakness Enumeration. 2022. Available online: https://cwe.mitre.org/index.html (accessed on 18 January 2022).

32. IBM Security. IBM: 2021 X-Force Threat Intelligence Index. 2021. Available online: https://www.cert.hu/sites/default/files/xforce_threat_intelligence_index_2021_90037390usen.pdf (accessed on 10 June 2022).

33. SAP S/4HANA Cloud ERP Software Solution. Available online: https://www.sap.com/products/s4hana-erp.html (accessed on 1 June 2022).

34. Fortinet Provides Advanced Security for SAP Solutions on Google Cloud. Available online: https://www.fortinet.com/content/dam/fortinet/assets/solution-guides/sb-fortinet-provides-advanced-security-for-sap-workloads-on-google-cloud.pdf (accessed on 12 April 2022).

35. CWE - CWE-1000: Research Concepts (4.6). 2022. Available online: https://cwe.mitre.org/data/definitions/1000.html (accessed on 22 January 2022).

36. CAPEC - CAPEC List Version 3.7. Available online: https://capec.mitre.org/data/index.html (accessed on 10 June 2022).

37. CAPEC - CAPEC-63: Cross-Site Scripting (XSS) (Version 3.7). Available online: https://capec.mitre.org/data/definitions/63.html (accessed on 1 June 2022).

38. Joint Task Force. National Institute of Standards and Technology Special Publication 800-53, Revision 5: Security and Privacy Controls for Information Systems and Organisations. NIST Spec. Publ. 2020.

39. Babu, S.; Bansal, V.; Telang, P. Top 10 Cloud Risks That Will Keep You Awake at Night. Available online: https://docplayer.net/2298521-Top-10-cloud-risks-that-will-keep-you-awake-at-night.html (accessed on 10 June 2022).

40. Alexander, C.A.; Wang, L. Cybersecurity, Information Assurance, and Big Data Based on Blockchain. In Proceedings of the IEEE Southeastcon 2019, Huntsville, AL, USA, 11–14 April 2019.

41. Giannoutakis, K.M.; Spanopoulos-Karalexidis, M.; Filelis Papadopoulos, C.K.; Tzovaras, D. Next Generation Cloud Architectures. In The Cloud-to-Thing Continuum; Lynn, T., Mooney, J., Lee, B., Endo, P., Eds.; Palgrave Studies in Digital Business & Enabling Technologies; Palgrave Macmillan: Cham, Switzerland, 2020.

| Business Service (Bs) | Actor | d-TM Layers | mD | cD | bD | Dr | Dp | Dt |

|---|---|---|---|---|---|---|---|---|

| Bsx | Business-user (USR) | Agtx | ✓ | ● | ● | ● | ||

| Netx | ✓ | ● | ||||||

| Cmpx | ✓ | ● | ||||||

| Appx | ✓ | ○ 1 | ● | ● | ||||

| Stgx | ✓ | ○ 1 | ○ 1 | ○ 1 | ||||

| Bsx | Business-Operator (OPR) | Agtx | ✓ | ● | ● | ● | ||

| Netx | ✓ | ○ 1 | ○ 2 | ○ 2 | ||||

| Cmpx | ✓ | ○ 1 | ○ 2 | ○ 2 | ||||

| Appx | ✓ | ○ 1 | ○ 2 | ○ 2 | ||||

| Stgx | ✓ | ○ 1 | ○ 1,2 | ○ 1,2 | ||||

| Bsx | Business-System (SYS) | Agtx | ✓ | ● | ● | ● | ||

| Netx | ✓ | ● | ||||||

| Cmpx | ✓ | ● | ||||||

| Appx | ✓ | ○ 1 | ● | ● | ||||

| Stgx | ✓ | ○ 1 | ○ 1 | ○ 1 |

| Bt: Attacker-Gain Scale (rows) vs. Threat-Occurrence Likelihood (columns) | High | Medium | Low |
|---|---|---|---|
| High | H | H | M |
| Medium | H | M | L |
| Low | M | L | L |
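The Bt matrix above can be read as a simple lookup. The following minimal sketch encodes it as a dictionary; the names `BT_MATRIX` and `threat_likelihood` are hypothetical, and the cell values are copied from the table.

```python
# Keys are (attacker_gain, threat_occurrence); values are the resulting Bt level.
BT_MATRIX = {
    ("H", "H"): "H", ("H", "M"): "H", ("H", "L"): "M",
    ("M", "H"): "H", ("M", "M"): "M", ("M", "L"): "L",
    ("L", "H"): "M", ("L", "M"): "L", ("L", "L"): "L",
}


def threat_likelihood(attacker_gain: str, threat_occurrence: str) -> str:
    """Look up Bt for one asset and threat (hypothetical helper)."""
    return BT_MATRIX[(attacker_gain, threat_occurrence)]


print(threat_likelihood("H", "L"))
```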

| Tc: Attacker-Capability Levels (rows) vs. Access-Complexity Levels (columns) | Multi-Level | Single-Level | Direct |
|---|---|---|---|
| High | M | L | L |
| Medium | H | M | L |
| Low | H | H | M |
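The Tc matrix can be encoded in the same way. This is a minimal sketch with hypothetical names (`TC_MATRIX`, `threat_complexity`, and the lower-case access labels); the cell values are taken from the table above.

```python
# Column order of the access-complexity levels in the table.
ACCESS_LEVELS = ("multi", "single", "direct")

# One row per attacker-capability level, cells in ACCESS_LEVELS order.
TC_MATRIX = {
    "H": ("M", "L", "L"),
    "M": ("H", "M", "L"),
    "L": ("H", "H", "M"),
}


def threat_complexity(capability: str, access: str) -> str:
    """Look up Tc from attacker capability and access complexity (hypothetical helper)."""
    return TC_MATRIX[capability][ACCESS_LEVELS.index(access)]


print(threat_complexity("L", "multi"))
```

Under this matrix a highly capable attacker facing direct access yields the lowest complexity, while a low-capability attacker facing multi-level access yields the highest.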

| Threats Priority: Bt (rows) | Bi High, Tc Low | Bi High, Tc Medium | Bi High, Tc High | Bi Medium, Tc Low | Bi Medium, Tc Medium | Bi Medium, Tc High | Bi Low, Tc Low | Bi Low, Tc Medium | Bi Low, Tc High |
|---|---|---|---|---|---|---|---|---|---|
| High | VH | VH | H | H | H | M | M | M | L |
| Medium | VH | H | M | H | M | L | M | L | VL |
| Low | H | M | M | M | L | L | L | VL | VL |
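The threat priority matrix combines the three values (Bt, Tc, Bi) into a single priority. The sketch below assumes the column grouping shown in the table, with Bi ordered High, Medium, Low and, within each Bi group, Tc ordered Low, Medium, High; the names `PRIORITY_ROWS` and `threat_priority` are hypothetical.

```python
BI_ORDER = ("H", "M", "L")  # column groups, left to right
TC_ORDER = ("L", "M", "H")  # columns inside each Bi group

# One row per Bt level; nine cells per row, copied from the table.
PRIORITY_ROWS = {
    "H": "VH VH H H H M M M L".split(),
    "M": "VH H M H M L M L VL".split(),
    "L": "H M M M L L L VL VL".split(),
}


def threat_priority(bt: str, tc: str, bi: str) -> str:
    """Return the priority cell for (Bt, Tc, Bi) (hypothetical helper)."""
    column = BI_ORDER.index(bi) * len(TC_ORDER) + TC_ORDER.index(tc)
    return PRIORITY_ROWS[bt][column]


print(threat_priority("M", "M", "H"))
```

Flattening the two-level header into a single column index keeps the lookup a direct transcription of the table, so any change to the matrix only requires editing `PRIORITY_ROWS`.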

| Process ID | Process Name and Description | Relevant Service ID | Service Name and Description | Service Criticality |
|---|---|---|---|---|
| Bp0 | Sales Operation: This process is critical to the company’s business continuity, where sales operations are the main source of revenue for the company. | Bs0 | SAP Platform: This system provides a platform for sales representatives performing day-to-day sales tasks such as sales, checkout, balance, and purchasing. On the other hand, it provides management with information about all sales data and needs for planning and supporting the sales process. | H |

| Bs0 asset details: Assetid (Name/Role, Brand name, SW version) | Asset Administration: Mgmt. (port, agent, access, privilege) | Asset Dependency: Dep. (asset, type, access) |
|---|---|---|
| Agt0 (Web Browser, Google Chrome, 101.0.4951.54) | - | - |
| Agt1 (SSH Terminal, Putty, 0.74) | - | - |
| Net0 (PoS Internet Modem, ZNID, S3.1.135) | Mgmt. (443, Agt0, W/LAN, Local Admin) | Dep. (Internet link, Exchange, Direct) |
| Net3 (Cloud vFW, Fortigate-VM, 5.4) | Mgmt. ((443, GCP-Console, GCP-Shell), Agt0, LAN, Security Admin group) | Dep. (GCP GW, Exchange, Local Network); Dep. (Jump-Server, Exchange, Local Network); Dep. (SAP Application, Exchange, Local Network); Dep. (GCP Cloud Portal, Control, Local Network) |
| Cmp0 (Cloud Compute-1, SUSE Linux, 15) | Mgmt. ((22, GCP-Console, GCP-Shell), (Agt1, Agt0), Remote (Internet), GCP Admin group) | Dep. (SAP Application, Process, Direct); Dep. (Cloud vFW, Exchange, Local Network); Dep. (Cloud DNS, Process, Remote Network); Dep. (GCP Cloud Portal, Control, Local Network) |
| App0 (SAP Application-1, Netweaver, 7.5) | Mgmt. ((443, GCP-Console), Agt0, Remote (Internet), GCP Admin group) | Dep. (SAP DB, (Process, Store), Local Network); Dep. (Cloud Compute-1, Host, Direct); Dep. (GCP Cloud Portal, Control, Local Network) |

| Service (Actor, Asset, Data-Level) | Data Phases Information |
|---|---|
| Bs0 (USR, Agt0, bD) | (Dr): The browser saves session information locally, such as certificates, cookies, and history. Data are also stored in a single user profile shared by all employees. (Dp): Non-business open-source plugins are installed. (Dt): Data are sent over wireless systems used for both business and non-business purposes. |
| Bs0 (USR, Net0, bD) | (Dt): The PoS modem runs with default settings except for the wireless setting (WPA2 encryption). The admin page is accessible from any wireless SSID over HTTP with locally stored credentials. |
| Bs0 (USR, Net3, bD) | (Dt): The cloud vFW external interface eth0 is configured with a public IP address and basic settings. Data volume is not restricted. Traffic from any source is allowed to the SAP app on its IP address and port 443. However, the admin console is not accessible from the internet. |
| Bs0 (USR, App0, bD) | (Dp): The SAP application runs with system privileges and authenticates users with basic user names and passwords. It exchanges data with the SAP DB over multiple ports during processing. (Dt): SAP application data are sent to business users over HTTPS and forwarded to Net3 over a private VN. SAP application authentication data are sent over the LDAP protocol. |
| Bs0 (OPR, Agt1, mD) | (Dr): Identification information for the GCP compute instance and the cloud vFW, such as IP address, port, and username, is saved in PuTTY for easy access. The private key file for the cloud compute instance is stored locally on the system admin machine. (Dp): PuTTY is installed as a trusted system-level process and does not require authentication to run. (Dt): PuTTY sends data over SSH or SFTP, using the IP address as the identifier. |
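
Each row key in the table above packs four fields into one string: service, actor, asset, and data level. The following sketch shows one way to split such a key into its parts. The `ServiceKey` type and `parse_service_key` function are hypothetical names introduced for illustration only.

```python
import re
from typing import NamedTuple


class ServiceKey(NamedTuple):
    service: str     # e.g. "Bs0"
    actor: str       # e.g. "USR" or "OPR"
    asset: str       # asset ID from the inventory, e.g. "Agt0"
    data_level: str  # "mD", "cD" or "bD"


# Matches "Bs0 (USR, Agt0,bD)" with or without spaces after the commas.
_KEY = re.compile(r"^\s*(\w+)\s*\(\s*(\w+)\s*,\s*(\w+)\s*,\s*(\w+)\s*\)\s*$")


def parse_service_key(text: str) -> ServiceKey:
    """Split a 'Service (Actor, Asset, Data-Level)' key into its fields."""
    match = _KEY.match(text)
    if match is None:
        raise ValueError(f"not a service key: {text!r}")
    return ServiceKey(*match.groups())
```

For example, `parse_service_key("Bs0 (OPR, Agt1,mD)")` yields a key whose `actor` is `"OPR"` and whose `data_level` is `"mD"`.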

| Assetid (Data-Level, Data-Phase) | Weaknesses | Threats | Criticality (Bt, Tc, Bi) |
|---|---|---|---|
| Agt0 ((bD, mD), (Dr, Dp, Dt)) | CWE-494: Download of Code Without Integrity Check [35] | CAPEC-662: Adversary in the Browser (AiTB) [36] | (M, M, L) → L |
| Agt0 ((bD, mD), (Dr)) | CWE-921: Storage of Sensitive Data in a Mechanism without Access Control [35] | CAPEC-196: Session Credential Falsification through Forging [36] | (L, L, M) → L |
| Agt1 (mD, Dr) | CWE-922: Insecure Storage of Sensitive Information [35] | CAPEC-529: Malware-Directed Internal Reconnaissance [36] | (M, M, H) → H |
| Net0 (mD, Dt) | CWE-319: Cleartext Transmission of Sensitive Information [35] | CAPEC-102: Session Sidejacking [36] | (M, L, L) → VL |
| Net0 (mD, Dp) | CWE-284: Improper Access Control [35] | CAPEC-1: Accessing Functionality Not Properly Constrained by ACLs [36] | (M, M, L) → L |
| Net3 (mD, Dp) | CWE-308: Use of Single-factor Authentication [35] | CAPEC-151: Identity Spoofing [36] | (H, M, H) → VH |
| Net3 (bD, Dt) | CWE-770: Allocation of Resources Without Limits or Throttling [35] | CAPEC-125: Flooding [36] | (H, H, H) → VH |
| App0 ((bD, mD), Dp) | CWE-308: Use of Single-factor Authentication [35] | CAPEC-151: Identity Spoofing [36] | (H, M, H) → VH |
| App0 (bD, Dp) | CWE-20: Improper Input Validation [35] | CAPEC-63: Cross-Site Scripting (XSS) [37] | (H, H, H) → VH |
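
The control-selection table that follows covers only the four threats rated VH. The sketch below shows that prioritisation step as a simple filter over the recorded criticality levels. It uses the levels exactly as given in the table and does not attempt to recompute them from (Bt, Tc, Bi). The five-step scale, including the M level that no row in the table uses, and the `at_or_above` helper are assumptions made for illustration.

```python
# Assumed ordering of criticality levels, lowest to highest.
SCALE = ["VL", "L", "M", "H", "VH"]

# (asset ID, threat, recorded criticality) transcribed from the table above.
THREATS = [
    ("Agt0", "CAPEC-662", "L"),
    ("Agt0", "CAPEC-196", "L"),
    ("Agt1", "CAPEC-529", "H"),
    ("Net0", "CAPEC-102", "VL"),
    ("Net0", "CAPEC-1", "L"),
    ("Net3", "CAPEC-151", "VH"),
    ("Net3", "CAPEC-125", "VH"),
    ("App0", "CAPEC-151", "VH"),
    ("App0", "CAPEC-63", "VH"),
]


def at_or_above(threats, floor):
    """Return (asset, threat) pairs whose criticality is at least `floor`."""
    cutoff = SCALE.index(floor)
    return [(asset, capec) for asset, capec, level in threats
            if SCALE.index(level) >= cutoff]
```

With a floor of `"VH"` the filter returns the Net3 and App0 threats; lowering the floor to `"H"` would also bring in CAPEC-529 on Agt1.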

| Assetid (Data-Level, Data-Phase) | Threats (Criticality) | Controls | Assurance Level (Ct, Ef, Cx) → OAL |
|---|---|---|---|
| Net3 (mD, Dp) | CAPEC-151 (VH) | IA-2(1)-Identification and Authentication [38] | (H, H, M) → 8 (H) |
| Net3 (bD, Dt) | CAPEC-125 (VH) | SC-5(3)-Denial-of-Service Protection [38] | (H, M, L) → 8 (H) |
| App0 ((bD, mD), Dp) | CAPEC-151 (VH) | IA-2(1)-Identification and Authentication [38] | (H, H, M) → 8 (H) |
| App0 (bD, Dp) | CAPEC-63 (VH) | SI-10(5)-Information Input Validation [38] | (H, H, L) → 9 (H) |
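
The numeric OAL values in the table above are consistent with a simple additive score. The sketch below is an inference from the four rows, not a formula stated in this section: it assumes H = 3, M = 2, L = 1, and that the third factor (Cx) counts inversely, so a lower Cx raises the score. The function name is hypothetical.

```python
# Assumed numeric weights for the qualitative ratings.
SCORE = {"L": 1, "M": 2, "H": 3}


def overall_assurance(ct: str, ef: str, cx: str) -> int:
    """Score an assurance triple (Ct, Ef, Cx).

    Assumption: Ct and Ef add directly, while Cx is inverted (4 - score).
    This reproduces the table rows but is not confirmed by the source.
    """
    return SCORE[ct] + SCORE[ef] + (4 - SCORE[cx])


# The three distinct triples that appear in the table.
ROWS = {
    ("H", "H", "M"): 8,  # IA-2(1) on Net3 and App0
    ("H", "M", "L"): 8,  # SC-5(3) on Net3
    ("H", "H", "L"): 9,  # SI-10(5) on App0
}

for triple, expected in ROWS.items():
    assert overall_assurance(*triple) == expected
```

The mapping from the numeric score to the qualitative band, such as the (H) shown next to 8 and 9, is not derivable from these rows and is left out.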

| Factors\Models | PASTA | STRIDE | d-TM |
|---|---|---|---|
| Threat Modelling Methodology | Attack-Centric | Threat-Centric | Data-Centric |
| Stages | 7 | N/A | 4 |
| Consideration of Business | Yes | No | Yes |
| Threats Criticality and Control | Yes | No | Yes |
| Identification of Attack Surface | Attacks to: | Threats to: | Threat to Data in: |
| Data Area of Focus | Single category, three phases | Single category | Three categories (Management/Control/Business), three phases |
| Threat Modelling Components | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alwaheidi, M.K.S.; Islam, S. Data-Driven Threat Analysis for Ensuring Security in Cloud Enabled Systems. Sensors 2022, 22, 5726. https://doi.org/10.3390/s22155726
