Vision-Based Module for Herding with a Sheepdog Robot

Abstract

1. Introduction

2. Related Works

3. System Architecture

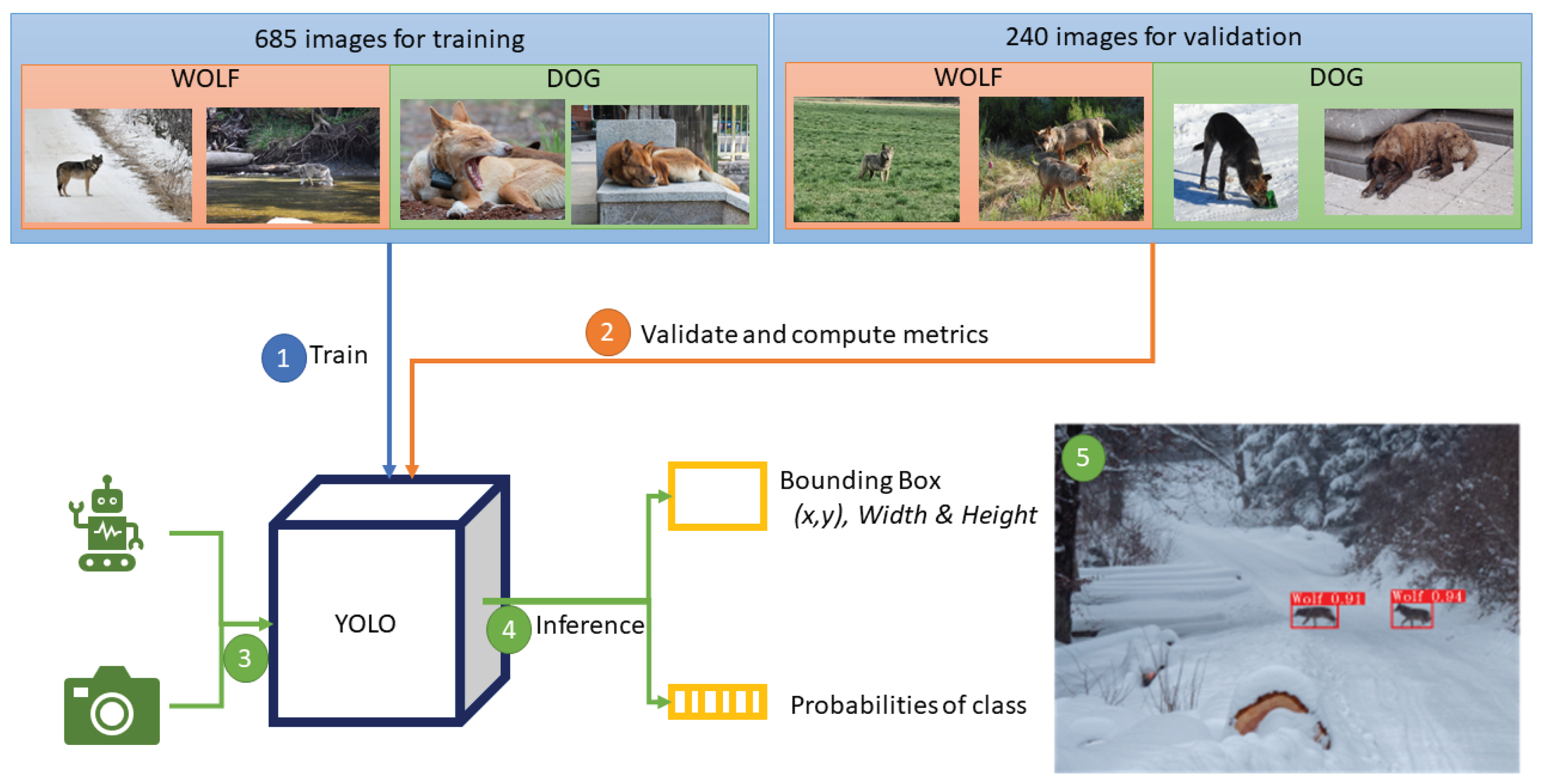

4. Vision Module

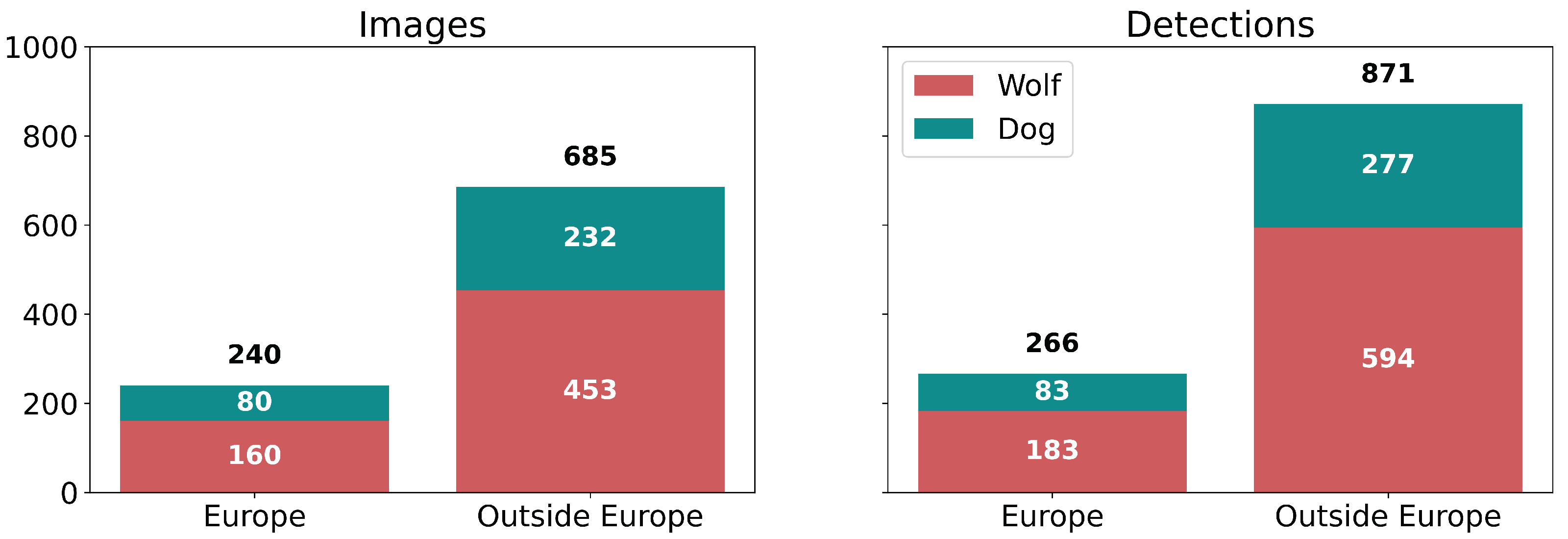

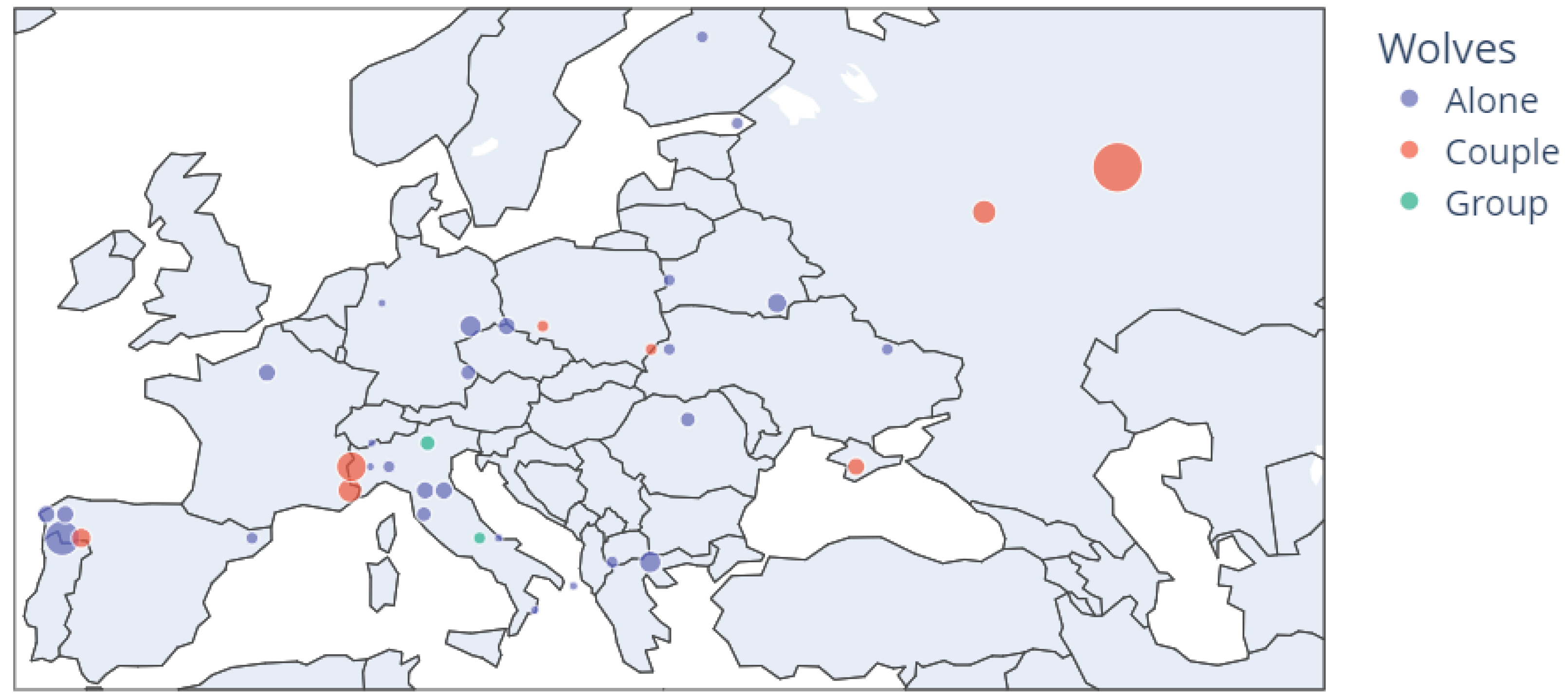

4.1. Data Acquisition and Labelling

4.2. Object Detection Architectures

- True Positives (TP) are those objects detected by the model with an IoU greater than the considered threshold;

- False Positives (FP) are the detected objects whose IoU is less than the fixed threshold;

- False Negatives (FN) stand for those objects that are not detected;

- True Negatives (TN) are the number of objects detected by the model when actually the image does not have such objects.

- Precision: measures how many of the objects predicted as positive are correct: $\text{Precision} = \frac{TP}{TP + FP}$

- Recall: measures how many of the objects actually present are detected: $\text{Recall} = \frac{TP}{TP + FN}$

- COCO metric (mAP@[0.5:0.95]): evaluates 10 IoU thresholds between 50% and 95% in steps of 5% and averages the mean Average Precision (mAP) over them: $\text{mAP}_{[0.5:0.95]} = \frac{1}{10}\sum_{t \in \{0.50, 0.55, \ldots, 0.95\}} \text{mAP}_{t}$

- PASCAL VOC metric (mAP@0.5): evaluates mAP at an IoU threshold of 50%.
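The quantities listed above can be illustrated with a minimal Python sketch. It is not the authors' evaluation code: the box layout `(x_min, y_min, x_max, y_max)` and the function names `iou`, `precision`, `recall` and `coco_map` are assumptions introduced here for illustration only.

```python
from typing import Sequence, Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]

# The 10 IoU thresholds used by the COCO-style metric: 0.50, 0.55, ..., 0.95.
COCO_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP); returns 0.0 when nothing was predicted."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """TP / (TP + FN); returns 0.0 when there are no ground-truth objects."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def coco_map(map_per_threshold: Sequence[float]) -> float:
    """Average of the mAP values obtained at each of the 10 IoU thresholds."""
    if len(map_per_threshold) != len(COCO_THRESHOLDS):
        raise ValueError("expected one mAP value per COCO IoU threshold")
    return sum(map_per_threshold) / len(map_per_threshold)
```

Under these assumptions, a detection would count as a true positive when `iou(prediction, ground_truth)` reaches the chosen threshold, and the PASCAL VOC-style figure corresponds to the single mAP value computed at the first threshold, 0.50.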

4.3. SSD

4.4. YOLO

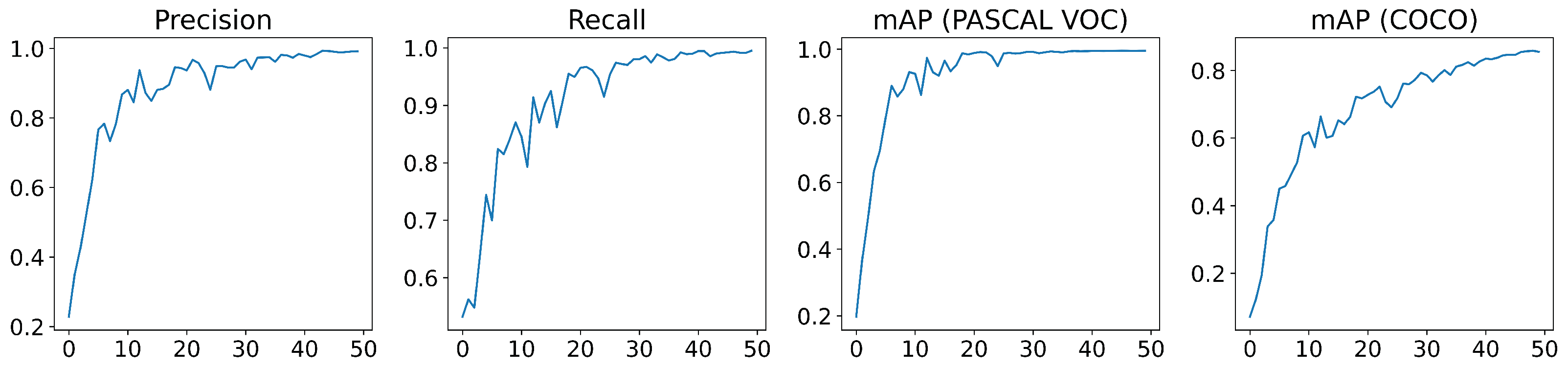

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| COCO | Common Objects in Context |
| FPS | Frames per second |
| IoU | Intersection over Union |
| LoRa | Long-Range Radio |
| mAP | mean Average Precision |
| PAZ | Perception for Autonomous Systems |
| PLF | Precision Livestock Farming |
| ROS | Robot Operating System |
| SGD | Stochastic Gradient Descent |
| SSD | Single-Shot MultiBox Detector |
| UGVs | Unmanned Ground Vehicles |
| VF | Virtual Fencing |
| VOC | Visual Object Classes |
| YOLO | You Only Look Once |

References

- Delaby, L.; Finn, J.A.; Grange, G.; Horan, B. Pasture-Based Dairy Systems in Temperate Lowlands: Challenges and Opportunities for the Future. Front. Sustain. Food Syst. 2020, 4, 543587.

- Campos, P.; Mesa, B.; Álvarez, A. Pasture-Based Livestock Economics under Joint Production of Commodities and Private Amenity Self-Consumption: Testing in Large Nonindustrial Privately Owned Dehesa Case Studies in Andalusia, Spain. Agriculture 2021, 11, 214.

- Lessire, F.; Moula, N.; Hornick, J.L.; Dufrasne, I. Systematic Review and Meta-Analysis: Identification of Factors Influencing Milking Frequency of Cows in Automatic Milking Systems Combined with Grazing. Animals 2020, 10, 913.

- Yu, H.; Li, Y.; Oshunsanya, S.O.; Are, K.S.; Geng, Y.; Saggar, S.; Liu, W. Re-introduction of light grazing reduces soil erosion and soil respiration in a converted grassland on the Loess Plateau, China. Agric. Ecosyst. Environ. 2019, 280, 43–52.

- Herlin, A.; Brunberg, E.; Hultgren, J.; Högberg, N.; Rydberg, A.; Skarin, A. Animal Welfare Implications of Digital Tools for Monitoring and Management of Cattle and Sheep on Pasture. Animals 2021, 11, 829.

- Schillings, J.; Bennett, R.; Rose, D.C. Exploring the Potential of Precision Livestock Farming Technologies to Help Address Farm Animal Welfare. Front. Anim. Sci. 2021, 2, 639678.

- Niloofar, P.; Francis, D.P.; Lazarova-Molnar, S.; Vulpe, A.; Vochin, M.C.; Suciu, G.; Balanescu, M.; Anestis, V.; Bartzanas, T. Data-driven decision support in livestock farming for improved animal health, welfare and greenhouse gas emissions: Overview and challenges. Comput. Electron. Agric. 2021, 190, 106406.

- Samperio, E.; Lidón, I.; Rebollar, R.; Castejón-Limas, M.; Álvarez-Aparicio, C. Lambs’ live weight estimation using 3D images. Animal 2021, 15, 100212.

- Bernués, A.; Ruiz, R.; Olaizola, A.; Villalba, D.; Casasús, I. Sustainability of pasture-based livestock farming systems in the European Mediterranean context: Synergies and trade-offs. Livest. Sci. 2011, 139, 44–57.

- Chen, G.; Lu, Y.; Yang, X.; Hu, H. Reinforcement learning control for the swimming motions of a beaver-like, single-legged robot based on biological inspiration. Robot. Auton. Syst. 2022, 154, 104116.

- Mansouri, S.S.; Kanellakis, C.; Kominiak, D.; Nikolakopoulos, G. Deploying MAVs for autonomous navigation in dark underground mine environments. Robot. Auton. Syst. 2020, 126, 103472.

- Lindqvist, B.; Karlsson, S.; Koval, A.; Tevetzidis, I.; Haluška, J.; Kanellakis, C.; akbar Agha-mohammadi, A.; Nikolakopoulos, G. Multimodality robotic systems: Integrated combined legged-aerial mobility for subterranean search-and-rescue. Robot. Auton. Syst. 2022, 154, 104134.

- Osei-Amponsah, R.; Dunshea, F.R.; Leury, B.J.; Cheng, L.; Cullen, B.; Joy, A.; Abhijith, A.; Zhang, M.H.; Chauhan, S.S. Heat Stress Impacts on Lactating Cows Grazing Australian Summer Pastures on an Automatic Robotic Dairy. Animals 2020, 10, 869.

- Vincent, J. A Robot Sheepdog? ‘No One Wants This,’ Says One Shepherd. 2020. Available online: https://www.theverge.com/2020/5/22/21267379/robot-dog-rocos-boston-dynamics-video-spot-shepherd-reaction (accessed on 12 January 2022).

- Matheson, C.A. iNaturalist. Ref. Rev. 2014, 28, 36–38.

- Tedeschi, L.O.; Greenwood, P.L.; Halachmi, I. Advancements in sensor technology and decision support intelligent tools to assist smart livestock farming. J. Anim. Sci. 2021, 99, skab038.

- Porto, S.; Arcidiacono, C.; Giummarra, A.; Anguzza, U.; Cascone, G. Localisation and identification performances of a real-time location system based on ultra wide band technology for monitoring and tracking dairy cow behaviour in a semi-open free-stall barn. Comput. Electron. Agric. 2014, 108, 221–229.

- Spedener, M.; Tofastrud, M.; Devineau, O.; Zimmermann, B. Microhabitat selection of free-ranging beef cattle in south-boreal forest. Appl. Anim. Behav. Sci. 2019, 213, 33–39.

- Bailey, D.W.; Trotter, M.G.; Knight, C.W.; Thomas, M.G. Use of GPS tracking collars and accelerometers for rangeland livestock production research. Transl. Anim. Sci. 2018, 2, 81–88.

- Stygar, A.H.; Gómez, Y.; Berteselli, G.V.; Costa, E.D.; Canali, E.; Niemi, J.K.; Llonch, P.; Pastell, M. A Systematic Review on Commercially Available and Validated Sensor Technologies for Welfare Assessment of Dairy Cattle. Front. Vet. Sci. 2021, 8, 634338.

- Aquilani, C.; Confessore, A.; Bozzi, R.; Sirtori, F.; Pugliese, C. Review: Precision Livestock Farming technologies in pasture-based livestock systems. Animal 2022, 16, 100429.

- Mansbridge, N.; Mitsch, J.; Bollard, N.; Ellis, K.; Miguel-Pacheco, G.G.; Dottorini, T.; Kaler, J. Feature Selection and Comparison of Machine Learning Algorithms in Classification of Grazing and Rumination Behaviour in Sheep. Sensors 2018, 18, 3532.

- Ren, K.; Karlsson, J.; Liuska, M.; Hartikainen, M.; Hansen, I.; Jørgensen, G.H. A sensor-fusion-system for tracking sheep location and behaviour. Int. J. Distrib. Sens. Netw. 2020, 16.

- Norouzzadeh, M.S.; Nguyen, A.; Kosmala, M.; Swanson, A.; Palmer, M.S.; Packer, C.; Clune, J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, E5716–E5725.

- Khatri, K.; Asha, C.C.; D’Souza, J.M. Detection of Animals in Thermal Imagery for Surveillance using GAN and Object Detection Framework. In Proceedings of the 2022 International Conference for Advancement in Technology (ICONAT), Goa, India, 21–22 January 2022; pp. 1–6.

- Andrew, W.; Greatwood, C.; Burghardt, T. Visual Localisation and Individual Identification of Holstein Friesian Cattle via Deep Learning. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2850–2859.

- Xu, B.; Wang, W.; Falzon, G.; Kwan, P.; Guo, L.; Chen, G.; Tait, A.; Schneider, D. Automated cattle counting using Mask R-CNN in quadcopter vision system. Comput. Electron. Agric. 2020, 171, 105300.

- Xu, B.; Wang, W.; Falzon, G.; Kwan, P.; Guo, L.; Sun, Z.; Li, C. Livestock classification and counting in quadcopter aerial images using Mask R-CNN. Int. J. Remote Sens. 2020, 41, 8121–8142.

- Wang, D.; Shao, Q.; Yue, H. Surveying wild animals from satellites, manned aircraft and unmanned aerial systems (UASs): A review. Remote Sens. 2019, 11, 1308.

- Anderson, D.M.; Estell, R.E.; Holechek, J.L.; Ivey, S.; Smith, G.B. Virtual herding for flexible livestock management – a review. Rangel. J. 2014, 36, 205–221.

- Handcock, R.N.; Swain, D.L.; Bishop-Hurley, G.J.; Patison, K.P.; Wark, T.; Valencia, P.; Corke, P.; O’Neill, C.J. Monitoring Animal Behaviour and Environmental Interactions Using Wireless Sensor Networks, GPS Collars and Satellite Remote Sensing. Sensors 2009, 9, 3586–3603.

- Rivas, A.; Chamoso, P.; González-Briones, A.; Corchado, J.M. Detection of Cattle Using Drones and Convolutional Neural Networks. Sensors 2018, 18, 2048.

- Schroeder, N.M.; Panebianco, A.; Musso, R.G.; Carmanchahi, P. An experimental approach to evaluate the potential of drones in terrestrial mammal research: A gregarious ungulate as a study model. R. Soc. Open Sci. 2020, 7, 191482.

- Ditmer, M.A.; Werden, L.K.; Tanner, J.C.; Vincent, J.B.; Callahan, P.; Iaizzo, P.A.; Laske, T.G.; Garshelis, D.L. Bears habituate to the repeated exposure of a novel stimulus, unmanned aircraft systems. Conserv. Physiol. 2019, 7, coy067.

- Rümmler, M.C.; Mustafa, O.; Maercker, J.; Peter, H.U.; Esefeld, J. Sensitivity of Adélie and Gentoo penguins to various flight activities of a micro UAV. Polar Biol. 2018, 41, 2481–2493.

- Meena, S.D.; Agilandeeswari, L. Smart Animal Detection and Counting Framework for Monitoring Livestock in an Autonomous Unmanned Ground Vehicle Using Restricted Supervised Learning and Image Fusion. Neural Process. Lett. 2021, 53, 1253–1285.

- Ruiz-Garcia, L.; Lunadei, L.; Barreiro, P.; Robla, I. A Review of Wireless Sensor Technologies and Applications in Agriculture and Food Industry: State of the Art and Current Trends. Sensors 2009, 9, 4728–4750.

- Jawad, H.M.; Nordin, R.; Gharghan, S.K.; Jawad, A.M.; Ismail, M. Energy-Efficient Wireless Sensor Networks for Precision Agriculture: A Review. Sensors 2017, 17, 1781.

- Gresl, J.; Fazackerley, S.; Lawrence, R. Practical Precision Agriculture with LoRa based Wireless Sensor Networks. In Proceedings of the 10th International Conference on Sensor Networks—WSN4PA, Vienna, Austria, 9–10 February 2021; INSTICC, SciTePress: Setúbal, Portugal, 2021; pp. 131–140.

- Axelsson Linkowski, W.; Kvarnström, M.; Westin, A.; Moen, J.; Östlund, L. Wolf and Bear Depredation on Livestock in Northern Sweden 1827–2014: Combining History, Ecology and Interviews. Land 2017, 6, 63.

- Laporte, I.; Muhly, T.B.; Pitt, J.A.; Alexander, M.; Musiani, M. Effects of wolves on elk and cattle behaviors: Implications for livestock production and wolf conservation. PLoS ONE 2010, 5, e11954.

- Cavalcanti, S.M.C.; Gese, E.M. Kill rates and predation patterns of jaguars (Panthera onca) in the southern Pantanal, Brazil. J. Mammal. 2010, 91, 722–736.

- Steyaert, S.M.J.G.; Søten, O.G.; Elfström, M.; Karlsson, J.; Lammeren, R.V.; Bokdam, J.; Zedrosser, A.; Brunberg, S.; Swenson, J.E. Resource selection by sympatric free-ranging dairy cattle and brown bears Ursus arctos. Wildl. Biol. 2011, 17, 389–403.

- Wells, S.L.; McNew, L.B.; Tyers, D.B.; Van Manen, F.T.; Thompson, D.J. Grizzly bear depredation on grazing allotments in the Yellowstone Ecosystem. J. Wildl. Manag. 2019, 83, 556–566.

- Bacigalupo, S.A.; Dixon, L.K.; Gubbins, S.; Kucharski, A.J.; Drewe, J.A. Towards a unified generic framework to define and observe contacts between livestock and wildlife: A systematic review. PeerJ 2020, 8, e10221.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Mammeri, A.; Zhou, D.; Boukerche, A.; Almulla, M. An efficient animal detection system for smart cars using cascaded classifiers. In Proceedings of the 2014 IEEE International Conference on Communications (ICC), Sydney, Australia, 10–14 June 2014; pp. 1854–1859.

- Komorkiewicz, M.; Kluczewski, M.; Gorgon, M. Floating point HOG implementation for real-time multiple object detection. In Proceedings of the 22nd International Conference on Field Programmable Logic and Applications (FPL), Oslo, Norway, 29–31 August 2012; pp. 711–714.

- Munian, Y.; Martinez-Molina, A.; Alamaniotis, M. Intelligent System for Detection of Wild Animals Using HOG and CNN in Automobile Applications. In Proceedings of the 2020 11th International Conference on Information, Intelligence, Systems and Applications (IISA), Piraeus, Greece, 15–17 July 2020; pp. 1–8.

- Munian, Y.; Martinez-Molina, A.; Alamaniotis, M. Comparison of Image segmentation, HOG and CNN Techniques for the Animal Detection using Thermography Images in Automobile Applications. In Proceedings of the 2021 12th International Conference on Information, Intelligence, Systems and Applications (IISA), Chania Crete, Greece, 12–14 July 2021; pp. 1–8.

- Sharma, V.; Mir, R.N. A comprehensive and systematic look up into deep learning based object detection techniques: A review. Comput. Sci. Rev. 2020, 38, 100301.

- Ren, J.; Wang, Y. Overview of Object Detection Algorithms Using Convolutional Neural Networks. J. Comput. Commun. 2022, 10, 115–132.

- Elgendy, M. Deep Learning for Vision Systems; Manning: Shelter Island, NY, USA, 2020.

- Allken, V.; Handegard, N.O.; Rosen, S.; Schreyeck, T.; Mahiout, T.; Malde, K. Fish species identification using a convolutional neural network trained on synthetic data. ICES J. Mar. Sci. 2019, 76, 342–349.

- Huang, Y.P.; Basanta, H. Bird image retrieval and recognition using a deep learning platform. IEEE Access 2019, 7, 66980–66989.

- Zualkernan, I.; Dhou, S.; Judas, J.; Sajun, A.R.; Gomez, B.R.; Hussain, L.A. An IoT System Using Deep Learning to Classify Camera Trap Images on the Edge. Computers 2022, 11, 13.

- González-Santamarta, M.A.; Rodríguez-Lera, F.J.; Álvarez-Aparicio, C.; Guerrero-Higueras, A.M.; Fernández-Llamas, C. MERLIN a Cognitive Architecture for Service Robots. Appl. Sci. 2020, 10, 5989.

- LoRa Alliance. LoRaWAN Specification. 2022. Available online: https://lora-alliance.org/about-lorawan/ (accessed on 24 April 2022).

- Cruz Ulloa, C.; Prieto Sánchez, G.; Barrientos, A.; Del Cerro, J. Autonomous thermal vision robotic system for victims recognition in search and rescue missions. Sensors 2021, 21, 7346.

- Suresh, A.; Arora, C.; Laha, D.; Gaba, D.; Bhambri, S. Intelligent Smart Glass for Visually Impaired Using Deep Learning Machine Vision Techniques and Robot Operating System (ROS). In Robot Intelligence Technology and Applications 5; Kim, J.H., Myung, H., Kim, J., Xu, W., Matson, E.T., Jung, J.W., Choi, H.L., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 99–112.

- Lee, J.; Wang, J.; Crandall, D.; Šabanović, S.; Fox, G. Real-Time, Cloud-Based Object Detection for Unmanned Aerial Vehicles. In Proceedings of the 2017 First IEEE International Conference on Robotic Computing (IRC), Taichung, Taiwan, 10–12 April 2017; pp. 36–43.

- Puthussery, A.R.; Haradi, K.P.; Erol, B.A.; Benavidez, P.; Rad, P.; Jamshidi, M. A deep vision landmark framework for robot navigation. In Proceedings of the 2017 12th System of Systems Engineering Conference (SoSE), Waikoloa, HI, USA, 18–21 June 2017; pp. 1–6.

- Reid, R.; Cann, A.; Meiklejohn, C.; Poli, L.; Boeing, A.; Braunl, T. Cooperative multi-robot navigation, exploration, mapping and object detection with ROS. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast City, Australia, 23 June 2013; pp. 1083–1088.

- Zhiqiang, W.; Jun, L. A review of object detection based on convolutional neural network. In Proceedings of the 2017 36th Chinese Control Conference (CCC), Dalian, China, 26–28 July 2017; pp. 11104–11109.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2009, 88, 303–338.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

- Panda, P.K.; Kumar, C.S.; Vivek, B.S.; Balachandra, M.; Dargar, S.K. Implementation of a Wild Animal Intrusion Detection Model Based on Internet of Things. In Proceedings of the 2022 Second International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 23–25 February 2022; pp. 1256–1261.

- Figueiredo, A.M.; Valente, A.M.; Barros, T.; Carvalho, J.; Silva, D.A.; Fonseca, C.; de Carvalho, L.M.; Torres, R.T. What does the wolf eat? Assessing the diet of the endangered Iberian wolf (Canis lupus signatus) in northeast Portugal. PLoS ONE 2020, 15, e0230433.

- GitHub: VISORED. Available online: https://github.com/uleroboticsgroup/VISORED (accessed on 28 May 2022).

- Li, D.; Wang, R.; Chen, P.; Xie, C.; Zhou, Q.; Jia, X. Visual Feature Learning on Video Object and Human Action Detection: A Systematic Review. Micromachines 2022, 13, 72.

- Jeong, J.; Park, H.; Kwak, N. Enhancement of SSD by concatenating feature maps for object detection. arXiv 2017, arXiv:1705.09587.

- Li, Z.; Zhou, F. FSSD: Feature Fusion Single Shot Multibox Detector. arXiv 2017, arXiv:1712.00960.

- Tan, L.; Huangfu, T.; Wu, L.; Chen, W. Comparison of RetinaNet, SSD, and YOLO v3 for real-time pill identification. BMC Med. Inform. Decis. Mak. 2021, 21, 324.

- Liu, P.; Qi, B.; Banerjee, S. Edgeeye: An edge service framework for real-time intelligent video analytics. In Proceedings of the 1st International Workshop on Edge Systems, Analytics and Networking, Munich, Germany, 10–15 June 2018; pp. 1–6.

- Padilla, R.; Netto, S.L.; da Silva, E.A.B. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2015, arXiv:1512.02325v5.

- Arriaga, O.; Valdenegro-Toro, M.; Muthuraja, M.; Devaramani, S.; Kirchner, F. Perception for Autonomous Systems (PAZ). arXiv 2020, arXiv:cs.CV/2010.14541.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767v1.

- Bochkovskiy, A.; Wang, C.; Liao, H.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934v1.

- Adarsh, P.; Rathi, P.; Kumar, M. YOLO v3-Tiny: Object Detection and Recognition using one stage improved model. In Proceedings of the 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 687–694.

- GitHub: Ultralytics. yolov5. Available online: https://github.com/ultralytics/yolov5 (accessed on 28 May 2022).

| Model | Precision (%) | Recall (%) | mAP@0.5 (%) | mAP@[0.5:0.95] (%) | Inference (ms) | FPS |
|---|---|---|---|---|---|---|
| SSD | 85.49 | 93.33 | 85.49 | 47.87 | 80 | 12.5 |
| YOLOv3 | 99.35 | 99.56 | 99.49 | 88.63 | 31.24 | 32.01 |
| YOLOv3t | 94.86 | 94.97 | 98.63 | 71.98 | 15.62 | 64.01 |
| YOLOv5n | 95.88 | 96.13 | 99.07 | 75.86 | 15.62 | 64.02 |
| YOLOv5s | 98.54 | 98.54 | 99.47 | 82.78 | 15.62 | 64.01 |
| YOLOv5m | 99.17 | 99.52 | 99.49 | 85.53 | 15.62 | 64.02 |
| YOLOv5l | 99.47 | 99.61 | 99.50 | 87.57 | 31.24 | 32.01 |
| YOLOv5x | 99.38 | 99.73 | 99.50 | 88.24 | 46.86 | 21.34 |
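The last two numeric columns of the table above appear to be related by a simple conversion: a per-frame inference time of 80 corresponds to 12.5 FPS, which is consistent with the time being expressed in milliseconds. The short Python sketch below makes that arithmetic explicit; the function name `fps_from_latency_ms` is hypothetical and the millisecond unit is an assumption inferred from the reported values, not stated in the table itself.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second for a given per-frame inference time in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Inference times copied from the results table (assumed to be milliseconds).
latencies_ms = {"SSD": 80.0, "YOLOv3": 31.24, "YOLOv5m": 15.62, "YOLOv5x": 46.86}

for model, latency in latencies_ms.items():
    print(f"{model}: {fps_from_latency_ms(latency):.2f} FPS")
```

Small differences in the last decimal between rows sharing the same inference time (64.01 versus 64.02) are presumably due to rounding of the reported times.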

| Problem | Results |
|---|---|
| Holstein Friesian cattle detection and count [24] | Animal detection: accuracy of 96.8%; counting animals: 63.1% top-1 accuracy |
| Cattle count [27] | Counting animals: accuracy of 92%; bounding box prediction (localisation): AP of 91% |
| Livestock, sheep and cattle detection [28] | Precision rate: 95.5%, 96% and 95%; recall with IoU of 0.4: 95.2%, 95% and 95.4% |
| Animal detection in thermal images [25] | With YOLOv4: 75.98%; with YOLOv3: 84.52%; with Faster-RCNN: 98.54% |
| Wolf and dog detection (Vision Module) | With YOLOv3: 99.49% (FPS: 32); with YOLOv5m: 99.49% (FPS: 64) |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Riego del Castillo, V.; Sánchez-González, L.; Campazas-Vega, A.; Strisciuglio, N. Vision-Based Module for Herding with a Sheepdog Robot. Sensors 2022, 22, 5321. https://doi.org/10.3390/s22145321
