Automated Software Evaluation in Screening Mammography: A Scoping Review of Image Quality and Technique Assessment

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Stage 1: Identifying the Research Question

2.2. Stage 2: Identifying Relevant Studies

- Mammography or tomosynthesis

- Automated software/image quality/positioning/compression assessment

- Radiographers or technologists (excluding radiologists)

2.3. Stage 3: Study Selection

- Were conducted in the screening setting.

- Focused on radiographer-led or technologist-applied image acquisition.

- Involved technical assessment (e.g., PGMI, EAR, or other image quality criteria/indicators).

- Included experimental, observational, technical evaluation, or quality improvement designs.

- Were published in English.

- Were conducted in the diagnostic setting (not screening).

- Focused primarily on diagnostic image interpretation or cancer detection (e.g., computer-aided detection (CAD) systems).

- Involved radiologist-only workflows.

- Did not involve automated or semi-automated evaluation processes.

- Focused on other modalities (e.g., ultrasound (US), magnetic resonance imaging (MRI)).
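The eligibility criteria listed above can be read as a set of independent checks applied to each candidate record. The following is a minimal sketch of that idea, not the reviewers' actual screening procedure: the `Candidate` class, its field names, and the reason strings are all hypothetical, and the study-design criterion is left out for brevity.

```python
from dataclasses import dataclass


# Hypothetical record layout for screening; none of these field names
# come from the review itself.
@dataclass
class Candidate:
    setting: str                  # "screening" or "diagnostic"
    modality: str                 # e.g. "mammography", "DBT", "US", "MRI"
    radiographer_led: bool        # False for radiologist-only workflows
    technical_assessment: bool    # e.g. PGMI, EAR or other image quality criteria
    automated_evaluation: bool    # automated or semi-automated process involved
    interpretation_focused: bool  # mainly interpretation or cancer detection (e.g. CAD)
    language: str                 # publication language


ELIGIBLE_MODALITIES = {"mammography", "DBT"}


def exclusion_reasons(c: Candidate) -> list[str]:
    """Return every criterion the candidate fails; an empty list means eligible."""
    reasons = []
    if c.setting != "screening":
        reasons.append("not conducted in the screening setting")
    if c.modality not in ELIGIBLE_MODALITIES:
        reasons.append("other imaging modality")
    if not c.radiographer_led:
        reasons.append("radiologist-only workflow")
    if not c.technical_assessment:
        reasons.append("no technical image quality assessment")
    if not c.automated_evaluation:
        reasons.append("no automated or semi-automated evaluation")
    if c.interpretation_focused:
        reasons.append("focused on interpretation or cancer detection")
    if c.language != "English":
        reasons.append("not published in English")
    return reasons


# Usage: a screening DBT study with automated positioning feedback.
candidate = Candidate("screening", "DBT", True, True, True, False, "English")
print(exclusion_reasons(candidate))
```

Returning the full list of failed criteria, rather than a single yes/no flag, keeps a record of why each study was excluded, which is the kind of detail a PRISMA-ScR flow diagram typically reports.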

Screening Process

2.4. Stage 4: Data Charting

- Study characteristics (year, country, study design)

- Imaging modality (mammography, DBT)

- Type of automated software used

- Image quality metrics assessed (e.g., positioning, compression force, PGMI classification)

- Outcomes (e.g., radiographer performance, accuracy, implementation effects)

- Radiographer involvement and role

- Validation methods and comparative benchmarks (e.g., expert review, manual PGMI)
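The charting items above map naturally onto a fixed record structure, with one row per included study. The sketch below assumes a flat CSV charting form; the `ChartedStudy` class, its field names, and the example values are hypothetical and are not taken from the review's own data extraction tool.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


# Hypothetical charting form: one field per charted item.
@dataclass
class ChartedStudy:
    study: str               # first author
    year: int
    country: str
    design: str              # study design
    modality: str            # mammography, DBT
    software: str            # type of automated software used
    quality_metrics: str     # e.g. positioning, compression force, PGMI classification
    outcomes: str            # e.g. radiographer performance, accuracy
    radiographer_role: str   # radiographer involvement and role
    validation: str          # e.g. expert review, manual PGMI


def to_csv(rows: list[ChartedStudy]) -> str:
    """Serialise charted studies to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=[f.name for f in fields(ChartedStudy)],
        lineterminator="\n",
    )
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


# Usage with a made-up entry (illustrative values only).
example = ChartedStudy(
    study="Example",
    year=2020,
    country="Exampleland",
    design="retrospective",
    modality="DBT",
    software="hypothetical tool",
    quality_metrics="positioning; compression force",
    outcomes="radiographer performance",
    radiographer_role="image acquisition",
    validation="expert review",
)
print(to_csv([example]))
```

Fixing the fields in one place means every reviewer charts the same items in the same order, which makes later collation across studies more straightforward.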

2.5. Stage 5: Collating, Summarising and Reporting the Results

- Types of automated tools and their functions

- Methodological approaches to evaluation

- Reported benefits, limitations, and challenges

- Settings of use (e.g., clinical, educational, or audit environments)

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| DBT | digital breast tomosynthesis |

| ACR | American College of Radiology |

| PGMI | Perfect (P), Good (G), Moderate (M) or Inadequate (I) |

| IES | image evaluation system |

| NHSBSP | National Health Service Breast Screening Programme |

| UK | United Kingdom |

| CC | craniocaudal |

| MLO | mediolateral oblique |

| EAR | Excellent (E), Acceptable (A) or Repeatable (R) |

| PRISMA-ScR | Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews |

| CAD | computer-aided detection |

| MRI | magnetic resonance imaging |

| US | ultrasound |

| BSA NAS | BreastScreen Australia National Accreditation Standards |

| SCU | State Coordination Unit |

| PACS | Picture Archiving and Communication Systems |

| RIS | Reporting Information Systems |

| AI | artificial intelligence |

| IT | information technology |

| OSF | Open Science Framework |

References

- Zhang, Y.; Ji, Y.; Liu, S.; Li, J.; Wu, J.; Jin, Q.; Liu, X.; Duan, H.; Feng, Z.; Liu, Y.; et al. Global burden of female breast cancer: New estimates in 2022, temporal trend and future projections up to 2050 based on the latest release from GLOBOCAN. J. Natl. Cancer Cent. 2025, 5, 287–296.

- Arnold, M.; Morgan, E.; Rumgay, H.; Mafra, A.; Singh, D.; Laversanne, M.; Vignat, J.; Gralow, J.R.; Cardoso, F.; Siesling, S.; et al. Current and future burden of breast cancer: Global statistics for 2020 and 2040. Breast 2022, 66, 15–23.

- Shi, J.; Li, J.; Gao, Y.; Chen, W.; Zhao, L.; Li, N.; Tian, J.; Li, Z. The screening value of mammography for breast cancer: An overview of 28 systematic reviews with evidence mapping. J. Cancer Res. Clin. Oncol. 2025, 151, 102.

- Guthmuller, S.; Carrieri, V.; Wübker, A. Effects of organized screening programs on breast cancer screening, incidence, and mortality in Europe. J. Health Econ. 2023, 92, 102803.

- Katalinic, A.; Eisemann, N.; Kraywinkel, K.; Noftz, M.R.; Hübner, J. Breast cancer incidence and mortality before and after implementation of the German mammography screening program. Int. J. Cancer 2020, 147, 709–718.

- Van Ourti, T.; O’Donnell, O.; Koç, H.; Fracheboud, J.; de Koning, H.J. Effect of screening mammography on breast cancer mortality: Quasi-experimental evidence from rollout of the Dutch population-based program with 17-year follow-up of a cohort. Int. J. Cancer 2020, 146, 2201–2208.

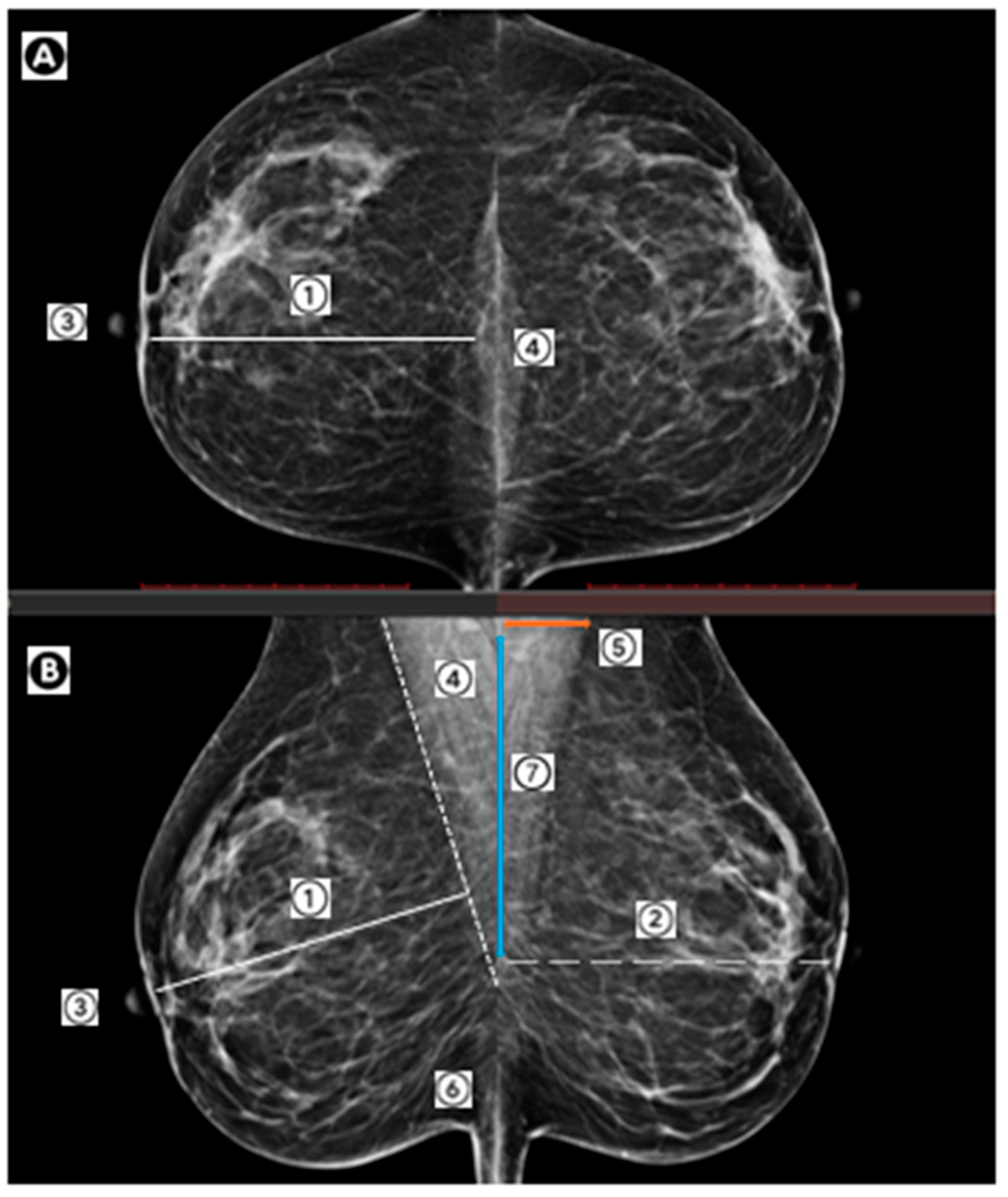

- Popli, M.B.; Teotia, R.; Narang, M.; Krishna, H. Breast Positioning during Mammography: Mistakes to be Avoided. Breast Cancer 2014, 8, 119–124.

- Li, Y.; Poulos, A.; McLean, D.; Rickard, M. A review of methods of clinical image quality evaluation in mammography. Eur. J. Radiol. 2010, 74, e122–e131.

- Arjmandi, F.K.; Munjal, H.; Hayes, J.C.; Thibodeaux, L.; Schopp, J.; Merchant, K.; Hu, T.; Porembka, J.H. Mammography Positioning Improvement Results and Unique Challenges in a Safety-Net Health System. J. Am. Coll. Radiol. 2025, in press.

- Brahim, M.; Westerkamp, K.; Hempel, L.; Lehmann, R.; Hempel, D.; Philipp, P. Automated Assessment of Breast Positioning Quality in Screening Mammography. Cancers 2022, 14, 4704.

- Spuur, K.; Webb, J.; Poulos, A.; Nielsen, S.; Robinson, W. Mammography image quality and evidence based practice: Analysis of the demonstration of the inframammary angle in the digital setting. Eur. J. Radiol. 2018, 100, 76–84.

- Huppe, A.I.; Overman, K.L.; Gatewood, J.B.; Hill, J.D.; Miller, L.C.; Inciardi, M.F. Mammography Positioning Standards in the Digital Era: Is the Status Quo Acceptable? Am. J. Roentgenol. 2017, 209, 1419–1425.

- Peart, O. Positioning challenges in mammography. Radiol. Technol. 2014, 85, 417–439M, quiz 440–413M.

- Rauscher, G.H.; Conant, E.F.; Khan, J.A.; Berbaum, M.L. Mammogram image quality as a potential contributor to disparities in breast cancer stage at diagnosis: An observational study. BMC Cancer 2013, 13, 208.

- Spuur, K.; Poulos, A.; Currie, G.; Rickard, M. Mammography: Correlation of pectoral muscle width and the length in the mediolateral oblique view of the breast. Radiography 2010, 16, 286–291.

- Taplin, S.H.; Rutter, C.M.; Finder, C.; Mandelson, M.T.; Houn, F.; White, E. Screening mammography: Clinical image quality and the risk of interval breast cancer. Am. J. Roentgenol. 2002, 178, 797–803.

- Klabunde, C.; Bouchard, F.; Taplin, S.; Scharpantgen, A.; Ballard-Barbash, R. Quality assurance for screening mammography: An international comparison. J. Epidemiol. Community Health 2001, 55, 204–212.

- Strohbach, J.; Wilkinson, J.M.; Spuur, K.M. Full-field digital mammography: The ‘30% rule’ and influences on visualisation of the pectoralis major muscle on the craniocaudal view of the breast. J. Med. Radiat. Sci. 2020, 67, 177–184.

- Taylor, K.; Parashar, D.; Bouverat, G.; Poulos, A.; Gullien, R.; Stewart, E.; Aarre, R.; Crystal, P.; Wallis, M. Mammographic image quality in relation to positioning of the breast: A multicentre international evaluation of the assessment systems currently used, to provide an evidence base for establishing a standardised method of assessment. Radiography 2017, 23, 343–349.

- Moreira, C.; Svoboda, K.; Poulos, A.; Taylor, R.; Page, A.; Rickard, M. Comparison of the validity and reliability of two image classification systems for the assessment of mammogram quality. J. Med. Screen. 2005, 12, 38–42.

- Spuur, K.; Hung, W.T.; Poulos, A.; Rickard, M. Mammography image quality: Model for predicting compliance with posterior nipple line criterion. Eur. J. Radiol. 2011, 80, 713–718.

- Hudson, S.M.; Wilkinson, L.S.; De Stavola, B.L.; Dos-Santos-Silva, I. Are mammography image acquisition factors, compression pressure and paddle tilt, associated with breast cancer detection in screening? Br. J. Radiol. 2023, 96, 20230085.

- Mackenzie, A.; Warren, L.M.; Wallis, M.G.; Given-Wilson, R.M.; Cooke, J.; Dance, D.R.; Chakraborty, D.P.; Halling-Brown, M.D.; Looney, P.T.; Young, K.C. The relationship between cancer detection in mammography and image quality measurements. Phys. Medica Eur. J. Med. Phys. 2016, 32, 568–574.

- Gourd, E. Mammography deficiencies: The result of poor positioning. Lancet Oncol. 2018, 19, e385.

- Salvagnini, E.; Bosmans, H.; Van Ongeval, C.; Van Steen, A.; Michielsen, K.; Cockmartin, L.; Struelens, L.; Marshall, N.W. Impact of compressed breast thickness and dose on lesion detectability in digital mammography: FROC study with simulated lesions in real mammograms. Med. Phys. 2016, 43, 5104–5116.

- Saunders, R.S., Jr.; Samei, E. The effect of breast compression on mass conspicuity in digital mammography. Med. Phys. 2008, 35, 4464–4473.

- Holland, K.; Sechopoulos, I.; Mann, R.M.; den Heeten, G.J.; van Gils, C.H.; Karssemeijer, N. Influence of breast compression pressure on the performance of population-based mammography screening. Breast Cancer Res. 2017, 19, 126.

- Miller, D.; Livingstone, V.; Herbison, G.P. Interventions for relieving the pain and discomfort of screening mammography. Cochrane Database Syst. Rev. 2008, 1, CD002942.

- Waade, G.G.; Moshina, N.; Sebuødegård, S.; Hogg, P.; Hofvind, S. Compression forces used in the Norwegian Breast Cancer Screening Program. Br. J. Radiol. 2017, 90, 20160770.

- BreastScreen Australia. BreastScreen Australia: National Accreditation Standards; BreastScreen Australia: Canberra, Australia, 2021.

- Hofvind, S.; Vee, B.; Sørum, R.; Hauge, M.; Ertzaas, A.-K.O. Quality assurance of mammograms in the Norwegian Breast Cancer Screening Program. Eur. J. Radiogr. 2009, 1, 22–29.

- Ministry of Health. BreastScreen Aotearoa National Policy and Quality Standards; Ministry of Health: Wellington, New Zealand, 2022.

- Perry, N.; Broeders, M.; de Wolf, C.; Törnberg, S. European Guidelines for Quality Assurance in Breast Cancer Screening and Diagnosis; Publications Office of the European Union: Luxembourg, 2006.

- Karsa, L.V.; Holland, R.; Broeders, M.; Wolf, C.D.; Perry, N.; Törnberg, S. European Guidelines for Quality Assurance in Breast Cancer Screening and Diagnosis. Fourth Edition, Supplements; Publications Office: Luxembourg, 2013.

- Guertin, M.-H.; Théberge, I.; Dufresne, M.-P.; Zomahoun, H.T.V.; Major, D.; Tremblay, R.; Ricard, C.; Shumak, R.; Wadden, N.; Pelletier, É. Clinical image quality in daily practice of breast cancer mammography screening. Can. Assoc. Radiol. J. 2014, 65, 199–206.

- National Health Service. Breast Screening: Guidance for Breast Screening Mammographers. Available online: https://www.gov.uk/government/publications/breast-screening-quality-assurance-for-mammography-and-radiography/guidance-for-breast-screening-mammographers (accessed on 31 July 2024).

- Kohli, A.; Jha, S. Why CAD Failed in Mammography. J. Am. Coll. Radiol. 2018, 15, 535–537.

- Angelone, F.; Ponsiglione, A.M.; Grassi, R.; Amato, F.; Sansone, M. Comparison of Automatic and Semiautomatic Approach for the Posterior Nipple Line Calculation. In Proceedings of the 9th European Medical and Biological Engineering Conference, Cham, Germany, 9–13 June 2024; pp. 217–226.

- Tran, Q.; Santner, T.; Rodríguez-Sánchez, A. Automatic Computation of the Posterior Nipple Line from Mammographies. In Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2024), Rome, Italy, 27–29 February 2024; pp. 629–636.

- Jen, C.C.; Yu, S.S. Automatic Nipple Detection in Mammograms Using Local Maximum Features Along Breast Contour. Biomed. Eng. Appl. Basis Commun. 2015, 27, 1550035.

- Arksey, H.; O’Malley, L. Scoping studies: Towards a methodological framework. Int. J. Soc. Res. Methodol. 2005, 8, 19–32.

- Levac, D.; Colquhoun, H.; O’Brien, K.K. Scoping studies: Advancing the methodology. Implement. Sci. 2010, 5, 69.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Gennaro, G.; Povolo, L.; Del Genio, S.; Ciampani, L.; Fasoli, C.; Carlevaris, P.; Petrioli, M.; Masiero, T.; Maggetto, F.; Caumo, F. Using automated software evaluation to improve the performance of breast radiographers in tomosynthesis screening. Eur. Radiol. 2024, 34, 4738–4749.

- Eby, P.R.; Martis, L.M.; Paluch, J.T.; Pak, J.J.; Chan, A.H.L. Impact of Artificial Intelligence–driven Quality Improvement Software on Mammography Technical Repeat and Recall Rates. Radiol. Artif. Intell. 2023, 5, e230038.

- Picard, M.; Cockmartin, L.; Buelens, K.; Postema, S.; Celis, V.; Aesseloos, C.; Bosmans, H. Objective and subjective assessment of mammographic positioning quality. In Proceedings of SPIE, Opole, Poland, 4 October 2022; p. 122860F.

- Waade, G.G.; Danielsen, A.S.; Holen, Å.S.; Larsen, M.; Hanestad, B.; Hopland, N.-M.; Kalcheva, V.; Hofvind, S. Assessment of breast positioning criteria in mammographic screening: Agreement between artificial intelligence software and radiographers. J. Med. Screen. 2021, 28, 448–455.

- Chan, A.; Howes, J.; Hill, C.; Highnam, R. Automated Assessment of Breast Positioning in Mammography Screening. In Digital Mammography; Mercer, C., Hogg, P., Kelly, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 247–258.

- Endalamaw, A.; Khatri, R.B.; Mengistu, T.S.; Erku, D.; Wolka, E.; Zewdie, A.; Assefa, Y. A scoping review of continuous quality improvement in healthcare system: Conceptualization, models and tools, barriers and facilitators, and impact. BMC Health Serv. Res. 2024, 24, 487.

- Newman, L.A. Breast cancer screening in low and middle-income countries. Best Pract. Res. Clin. Obstet. Gynaecol. 2022, 83, 15–23.

- Martinez, M.E.; Schmeler, K.M.; Lajous, M.; Newman, L.A. Cancer Screening in Low- and Middle-Income Countries. Am. Soc. Clin. Oncol. Educ. Book 2024, 44, e431272.

- Gray, R. Empty systematic reviews: Identifying gaps in knowledge or a waste of time and effort? Nurse Author Ed. 2021, 31, 42–44.

- Schlosser, R.W.; Sigafoos, J. ‘Empty’ reviews and evidence-based practice. Evid. Based Commun. Assess. Interv. 2009, 3, 1–3.

- Yaffe, J.; Montgomery, P.; Hopewell, S.; Shepard, L.D. Empty reviews: A description and consideration of Cochrane systematic reviews with no included studies. PLoS ONE 2012, 7, e36626.

| Database (search date) | Step | Search | Results |
|---|---|---|---|
| PUBMED (1 March 2025) | | (“Mammography”[MeSH Terms] OR (“breast tomosynthes*s”[Title/Abstract] OR “mammograph*”[Title/Abstract] OR “tomosynthes*s screening”[Title/Abstract] OR “mammogram*”[Title/Abstract] OR “breast imag*”[Title/Abstract])) AND (“image quality”[Title/Abstract] OR “software evaluation”[Title/Abstract] OR “Volpara”[Title/Abstract] OR (“quality”[Title/Abstract] OR “artificial intelligence”[Title/Abstract] OR “technology assessment”[Title/Abstract])) AND ((“radiographer*”[Title/Abstract] OR “mammographer”[Title/Abstract] OR (“breast radiograph*”[Title/Abstract] OR “technologist*”[Title/Abstract])) NOT “radiologist”[Title/Abstract]) AND 2014/01/01:2025/12/31[Date-Publication] | 84 |
| EMCARE (14 March 2025) | #1 | mammography/ | 23,741 |
| | #2 | (breast tomosynthes?s or mammograph* or tomosynthes*s screening or mammogram* or breast imag*).mp. | 29,726 |
| | #3 | 1 or 2 | 29,726 |
| | #4 | (image quality or software evaluation or Volpara or quality or artificial intelligence or technology assessment).mp. | 950,191 |
| | #5 | radiological technologist/ | 1481 |
| | #6 | (radiographer* or mammographer or breast radiograph* or technologist*).mp. | 7462 |
| | #7 | 5 or 6 | 7462 |
| | #8 | radiologist.mp. | 40,166 |
| | #9 | 7 not 8 | 6105 |
| | #10 | 3 and 4 and 9 | 142 |
| | #11 | limit 10 to yr = “2014 -Current” | 74 |
| SCOPUS (14 March 2025) | | ((TITLE-ABS-KEY (radiographer* OR mammographer OR “breast radiograph*” OR technologist*)) AND NOT (TITLE-ABS-KEY (radiologist))) AND (TITLE-ABS-KEY (“image quality” OR “software evaluation” OR volpara OR quality OR “artificial intelligence” OR “technology assessment”)) AND (TITLE-ABS-KEY (“breast tomosynthes*s” OR mammograph* OR “tomosynthes*s screening” OR mammogram* OR “breast imag*”)) AND PUBYEAR > 2013 AND PUBYEAR < 2026 | 107 |
| Citation searching | | | 3 |
| Total | | | 268 |
| Duplicates | | | 123 |
| Total with duplicates removed | | | 142 |
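Records pooled from several databases typically overlap, so they are deduplicated before screening. The sketch below shows one common approach, matching on DOI or on a normalised title. It is an illustrative assumption and not the method used in this review; the `deduplicate` and `normalise_title` functions, the dictionary keys, and the sample records are all hypothetical.

```python
import re


def normalise_title(title: str) -> str:
    """Lower-case the title and collapse punctuation and spacing."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each DOI or normalised title."""
    seen_dois: set[str] = set()
    seen_titles: set[str] = set()
    unique = []
    for rec in records:
        doi = (rec.get("doi") or "").strip().lower()
        title = normalise_title(rec.get("title") or "")
        # A record is a duplicate if either identifier was already seen.
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        unique.append(rec)
    return unique


# Usage with made-up records from three sources.
pooled = [
    {"source": "PubMed", "doi": "10.1000/ABC", "title": "Automated positioning assessment"},
    {"source": "Scopus", "doi": "10.1000/abc", "title": "Automated Positioning Assessment."},
    {"source": "Emcare", "doi": None, "title": "Automated positioning assessment"},
    {"source": "Citation", "doi": "10.1000/xyz", "title": "Compression force feedback"},
]
print(len(deduplicate(pooled)))
```

Matching on either identifier catches records that lack a DOI in one database, at the cost of occasionally merging distinct papers that share a title, so automated matches are usually checked by hand.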

| Study, Year and Country | Study Design | Imaging Modality: 2D Full-Field Digital Mammography (2D FFDM)/Digital Breast Tomosynthesis (DBT) | Unit/Software/Tool Used | Sample Size | Radiographer Characteristics | Main Outcomes | Reported Limitations |
|---|---|---|---|---|---|---|---|
| Gennaro, G. et al., 2023; Italy [44] | Retrospective longitudinal analysis of prospective cohorts; observational; performed at one institution | 2D FFDM/DBT | Hologic/Volpara Analytics/TruPGMI™ | One facility; six radiographers; 2407 women in the pre-software training cohort/3986 in the post-software cohort | Screening radiographers with 0–25 years of experience | Automated image quality analysis can improve the positioning and compression performance of radiographers, which may ultimately lead to improved screening outcomes. | Single centre and small number of radiographers; evaluation of short-term impact of software use on positioning and compression performance (only); included only women aged 46–47 |
| Eby, P. et al., 2023; United States [45] | Retrospective analysis of screening mammography image quality data | 2D FFDM | Volpara Analytics/TruPGMI™ | Nine facilities; 40 technologists, 198,054 images and 42 technologists, 211,821 images | Screening radiographers | Rapid objective feedback on mammographic images is a major advantage of AI software analysis. Increases in all objectively measured image quality (IQ) indicators following AI software implementation demonstrate the potential of AI software to improve IQ and reduce patient technical recall (TR). | Inability to match individual studies due to the level of aggregation from the two data sources; it was not possible to assess the direct impact on patient outcomes or dose, or to interrogate the specific predictors of TR or inadequate (I) images |
| Picard, M. et al., 2022; Belgium [46] | Retrospective analysis of screening mammography image quality | 2D FFDM | Volpara TruPGMI™ | 127 mammographic screening exams (MLO and CC views) | Screening radiographers | Automated image quality assessment software overcomes the issue of subjectivity and high reader variability. | Images acquired on mammography systems of one vendor; only one automated mammography positioning evaluation software is available and was tested; the number of readers was limited to two (one radiographer and one radiologist); discordant cases were managed by a second radiologist; the number of mammograms (n = 127) evaluated was also limited |
| Waade, G.G. et al., 2021; Norway [47] | Randomised, retrospective analysis of screening mammography image quality | 2D FFDM/DBT | GE Senographe Pristina 3D Breast Tomosynthesis™/Volpara TruPGMI™ | Two hundred screening radiographers; 17,951 women; 14 breast centres | Screening radiographers | AI has great potential in image quality and breast positioning assessment in mammographic screening by reducing subjectivity. However, there is varying agreement between radiographers and AI for several breast positioning criteria. | Limited criteria selected; the criteria were limited by the available output from the AI system evaluated; only selected positioning criteria were assessed, not overall image quality or technical errors |
| Chan, A. et al., 2022; Australia/New Zealand [48] | Book Chapter | Book Chapter | Volpara TruPGMI™ | Book Chapter | Book Chapter | Visual inspection of mammograms is subjective and time-consuming; consistent, objective, and ongoing feedback about breast positioning quality is challenging. Automated evaluation of breast positioning can: allow individuals to review their performance (overall and over time), as well as benchmark results against the facility and globally; aid better understanding of performance, facilitate performance reviews and identify areas to target for training; support realistic objectives and goals based on benchmarking and individualised trends; identify focus areas for improvement, by reviewing feedback down to the level of individual metrics. An automated approach to assessing positioning: achieves and maintains a high standard of mammographic image quality; provides an objective training program to advance positioning performance; facilitates external inspections and quality assurance programs. | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Spuur, K.M.; Singh, C.L.; Al Mousa, D.; Chau, M.T. Automated Software Evaluation in Screening Mammography: A Scoping Review of Image Quality and Technique Assessment. Curr. Oncol. 2025, 32, 571. https://doi.org/10.3390/curroncol32100571
