Quality of ChatGPT-Generated Therapy Recommendations for Breast Cancer Treatment in Gynecology

Abstract

1. Introduction

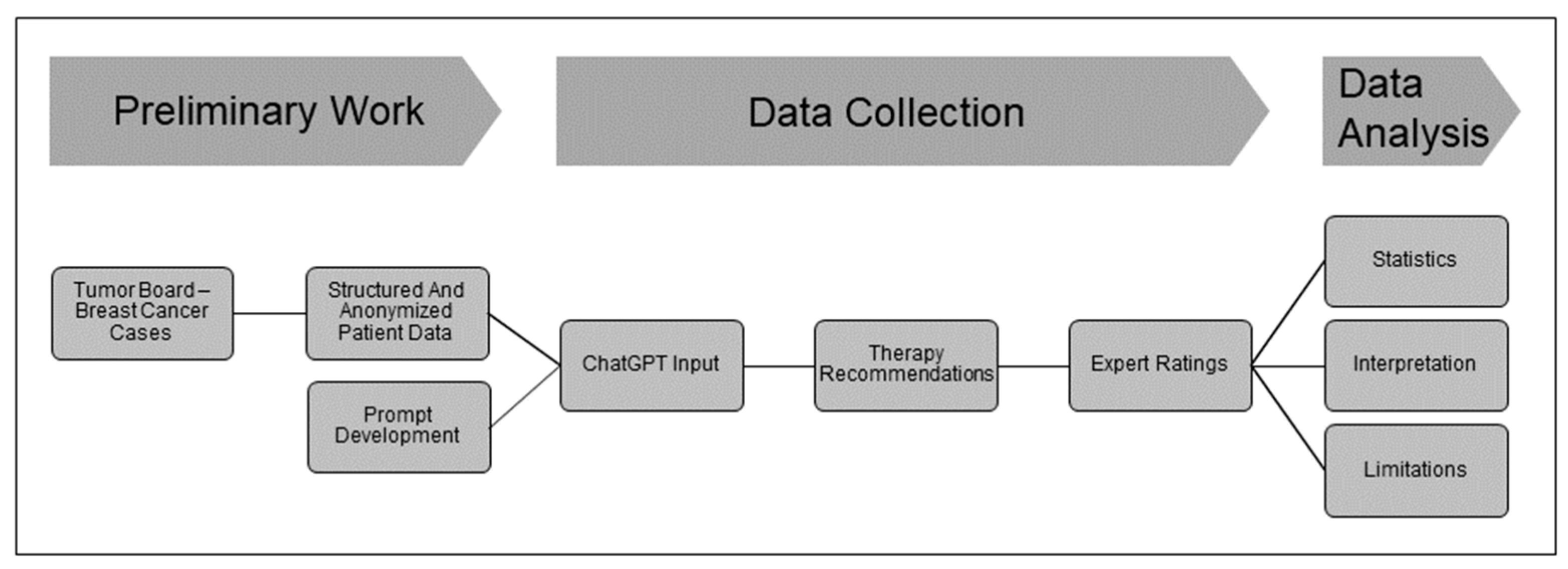

2. Materials and Methods

2.1. Patient Cases

2.2. Data Distribution

2.3. ChatGPT Prompt

2.4. Tumor Board Setting and ChatGPT Recommendations

2.5. Gynecological Oncologists and Evaluation Form

2.6. Data Analysis

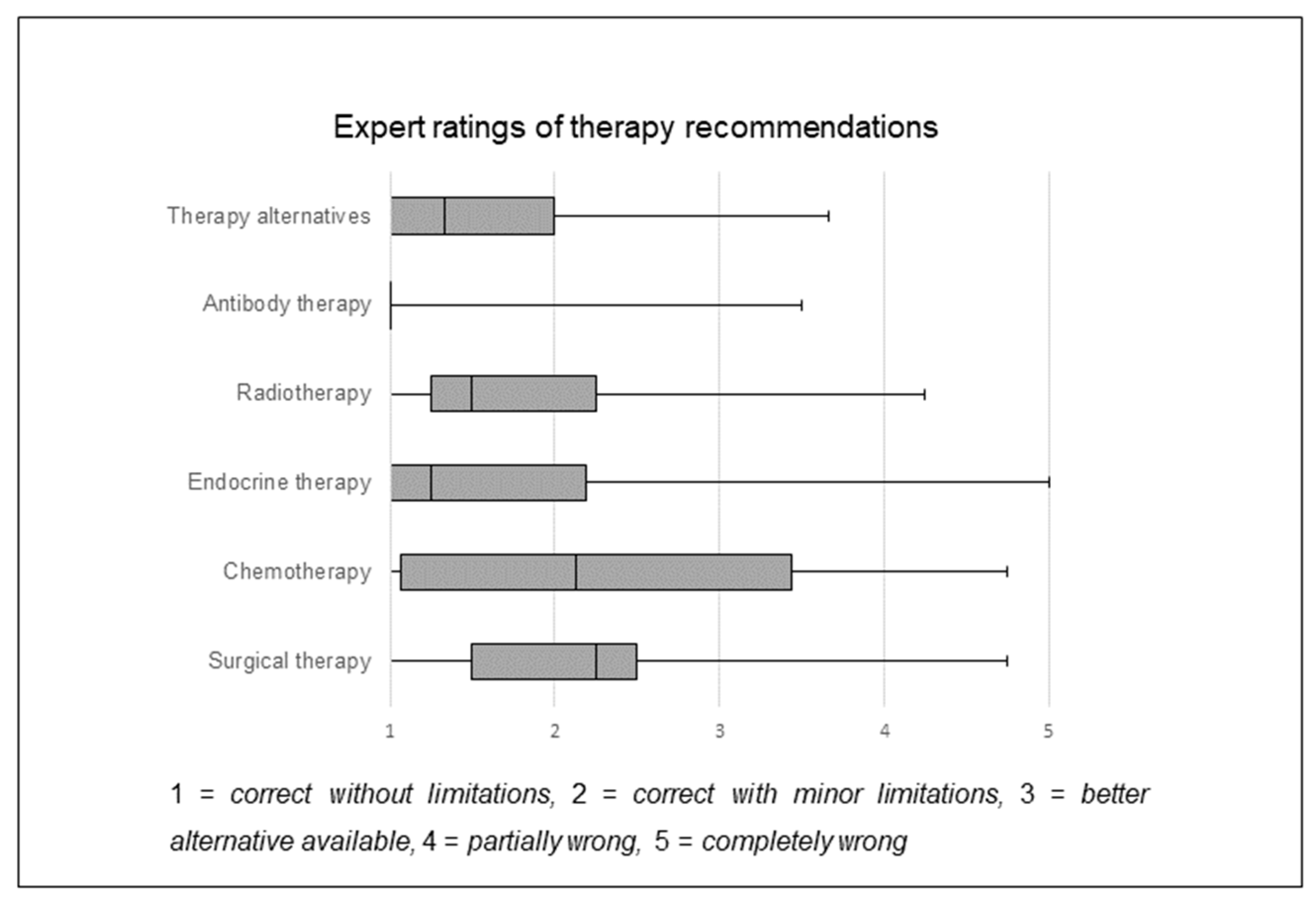

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Characteristic | Number of Patients |
|---|---|
| Breast cancer history | |
| Primary breast cancer without treatment | 9 |
| Primary breast cancer with initial treatment | 15 |
| First relapse of breast cancer | 4 |
| Second relapse of breast cancer | 2 |
| Histology | |
| Invasive breast cancer | 28 |
| Ductal carcinoma in situ (G3) | 1 |
| Phyllodes tumor | 1 |
| Immunohistochemistry | |
| Estrogen receptor-positive | 24 |
| Estrogen receptor-negative | 4 |
| Progesterone receptor-positive | 19 |
| Progesterone receptor-negative | 9 |
| HER-2 receptor-positive | 3 |
| HER-2 receptor-negative | 25 |
| Age (range) | 31–88 years |

| Treatment Categories | Frequency of Recommendation |
|---|---|
| Surgery | |
| Yes | 27 |
| No | 3 |
| Chemotherapy | |
| Yes | 21 |
| No | 9 |
| Radiotherapy | |
| Yes | 27 |
| No | 3 |
| HER-2 therapy | |
| Yes | 3 |
| No | 27 |
| Endocrine therapy | |
| Yes | 20 |
| No | 10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stalp, J.L.; Denecke, A.; Jentschke, M.; Hillemanns, P.; Klapdor, R. Quality of ChatGPT-Generated Therapy Recommendations for Breast Cancer Treatment in Gynecology. Curr. Oncol. 2024, 31, 3845-3854. https://doi.org/10.3390/curroncol31070284
