Robust Fingerprint Minutiae Extraction and Matching Based on Improved SIFT Features

Abstract

1. Introduction

- (1)

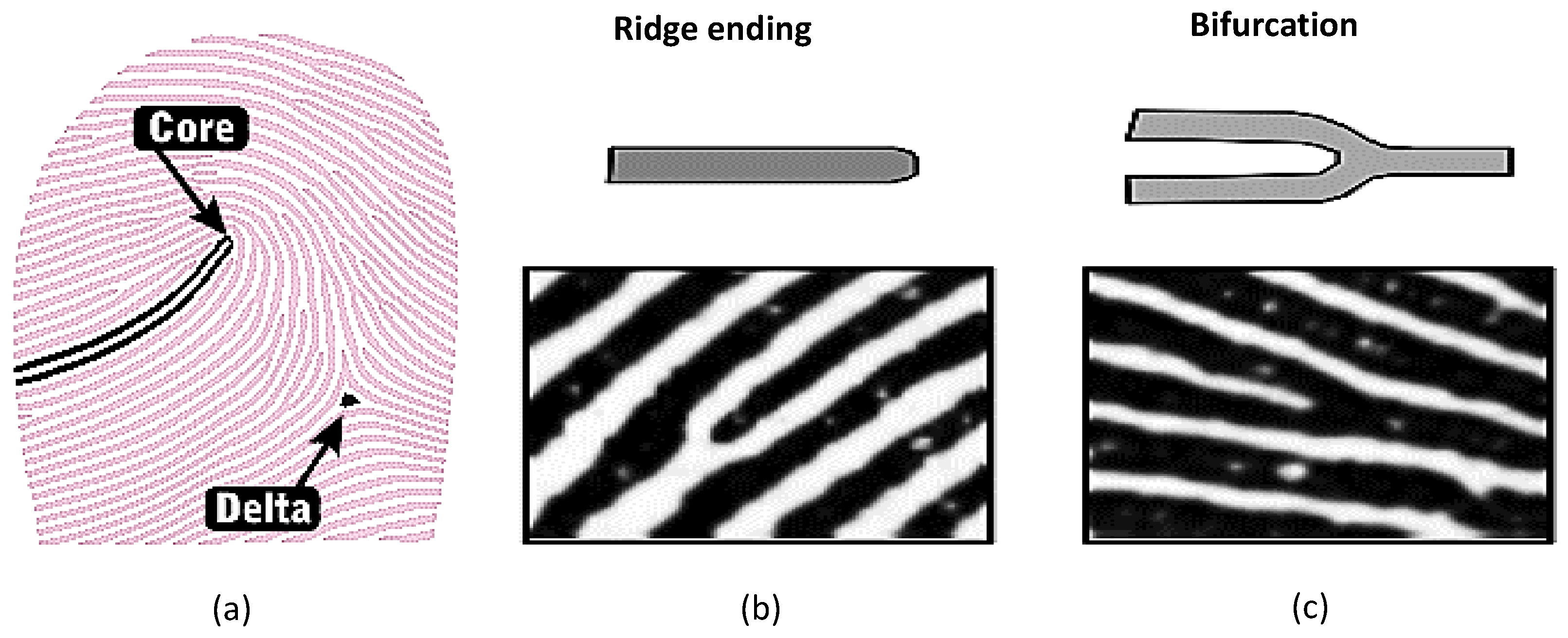

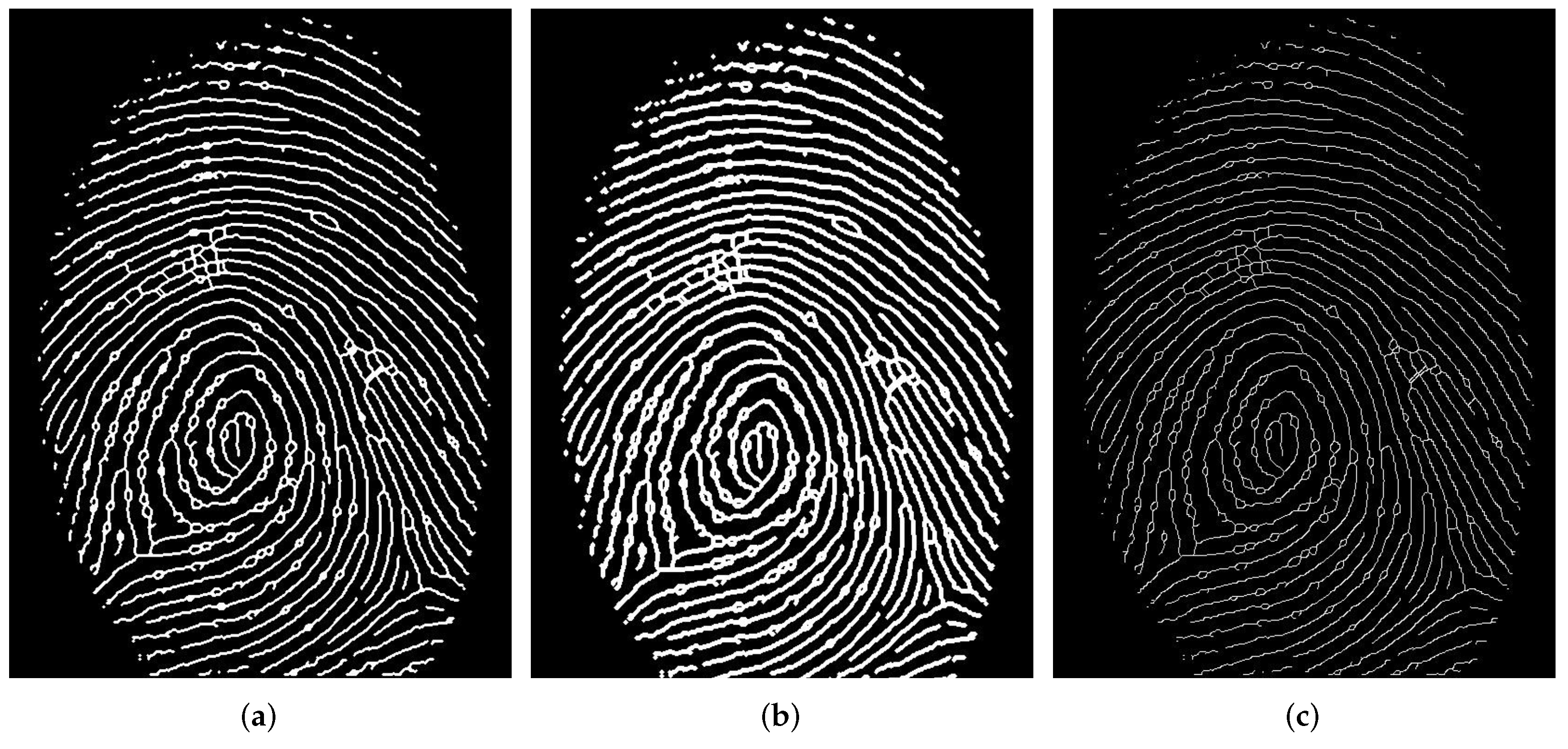

- Global features: These features form a special pattern of ridge and valleys, called singularities or Singular Point (SP), and they can further be divided into three types: loop, delta, and whorl. The significant points are the core and the delta. The core is defined as the most points on the innermost ridges, and the delta is defined as the central point where three different trend flows converge (see Figure 1a). It can be argued that these features provide the most useful and crucial information for fingerprint classification, fingerprint matching, and fingerprint alignment [7,8].

- (2) Local features: At the local level, ridge characteristics, collectively called minutiae, are the most widely used features for matching fingerprints. Although there are several types of minutiae, for practical purposes only two are usually considered [9,10], as they are the most prominent ridge characteristics: the ridge ending (the point where a ridge ends abruptly) and the ridge bifurcation (the point where a ridge forks or diverges into branch ridges), as shown in Figure 1b,c.
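Ridge endings and bifurcations are commonly located on a thinned (one-pixel-wide) ridge skeleton with the crossing-number rule: half the number of 0/1 transitions around a ridge pixel's 8-neighbourhood equals 1 at a ridge ending and 3 at a bifurcation. The following is a minimal sketch of that standard rule, assuming a binary skeleton stored as a list of lists with 1 for ridge pixels; the function names are hypothetical, and this is not necessarily the extraction procedure used in this paper.

```python
# Crossing-number minutiae detection on a binary skeleton (1 = ridge pixel).
# Illustrative sketch of the classic rule, not the paper's SIFT-based pipeline.

# The 8 neighbours of a pixel in circular order: N, NE, E, SE, S, SW, W, NW.
OFFSETS = [(-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1)]


def crossing_number(skel, r, c):
    """Half the number of 0/1 transitions when walking once around pixel (r, c)."""
    ring = [skel[r + dr][c + dc] for dr, dc in OFFSETS]
    return sum(abs(ring[i] - ring[(i + 1) % 8]) for i in range(8)) // 2


def detect_minutiae(skel):
    """Return (row, col, type) for each ridge ending (CN = 1) and bifurcation (CN = 3)."""
    minutiae = []
    rows, cols = len(skel), len(skel[0])
    # Skip the 1-pixel border so every visited pixel has a full 8-neighbourhood.
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            if skel[r][c] != 1:
                continue
            cn = crossing_number(skel, r, c)
            if cn == 1:
                minutiae.append((r, c, "ending"))
            elif cn == 3:
                minutiae.append((r, c, "bifurcation"))
    return minutiae


if __name__ == "__main__":
    # A short ridge running left to right that forks into two branches.
    skeleton = [
        [0, 0, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 1, 0],
        [0, 1, 1, 1, 1, 0, 0],
        [0, 0, 0, 0, 0, 1, 0],
        [0, 0, 0, 0, 0, 0, 0],
    ]
    print(detect_minutiae(skeleton))
    # [(1, 5, 'ending'), (2, 1, 'ending'), (2, 4, 'bifurcation'), (3, 5, 'ending')]
```

In practice, spurious minutiae near the image border and along short spurs are usually filtered out afterwards; that post-processing is omitted here.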

2. Prior Work

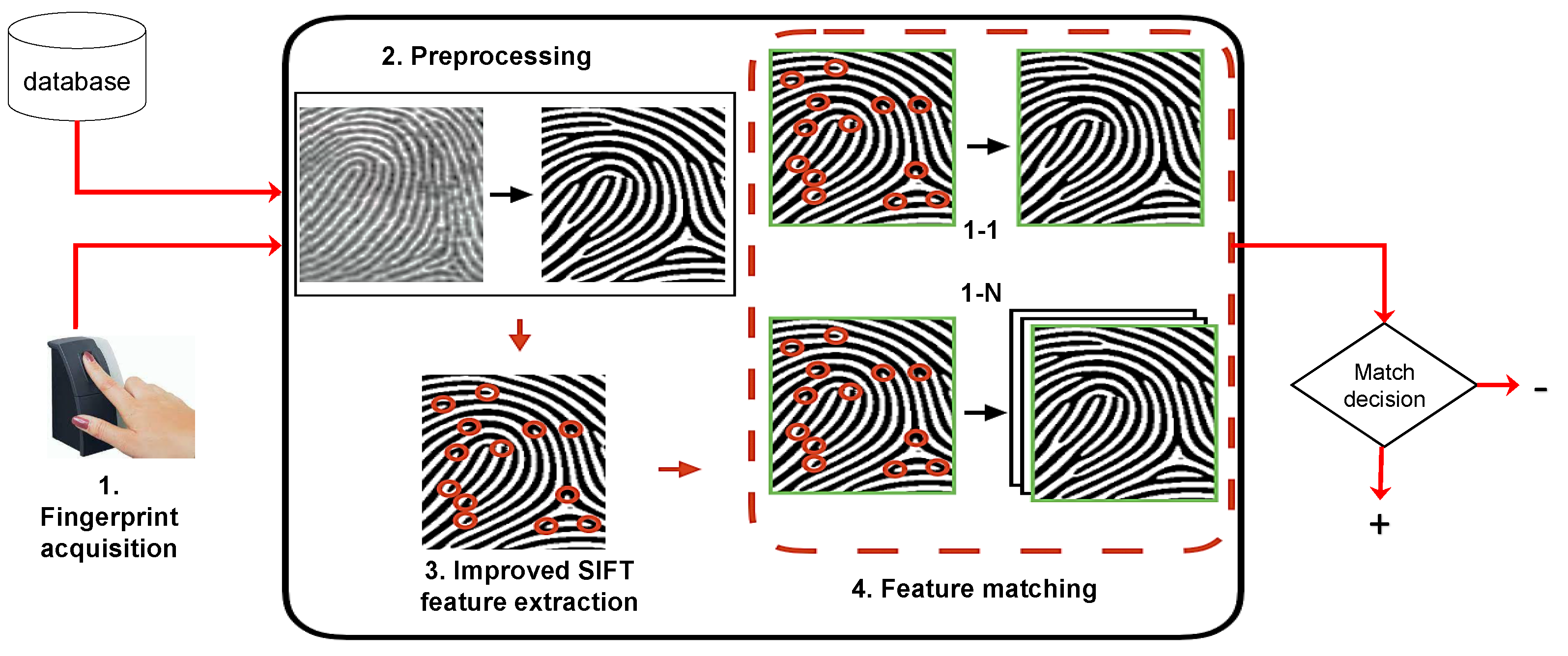

3. Proposed Methodology

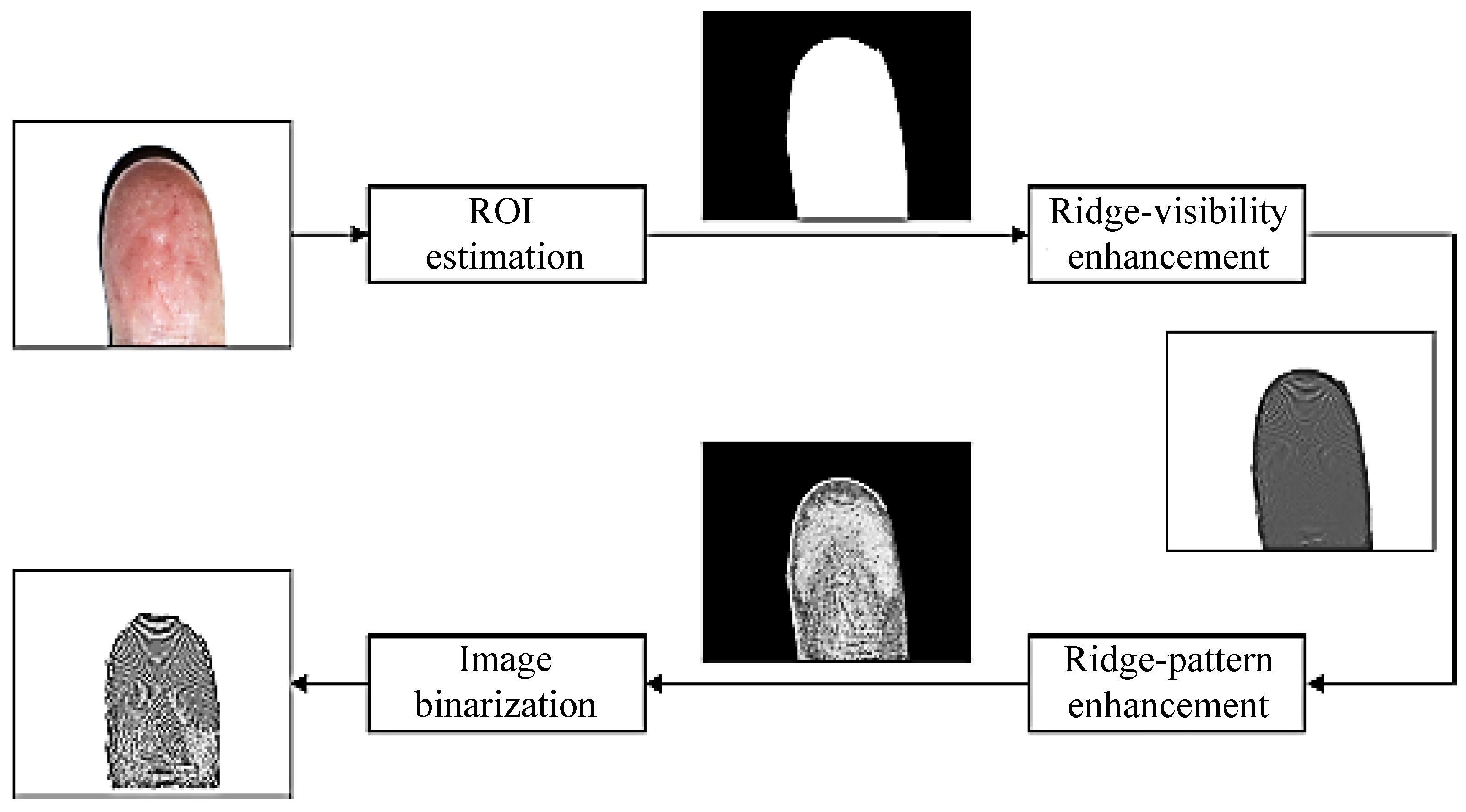

3.1. Image Preprocessing

3.1.1. Fingerprint Enhancement Using Contextual Filtering

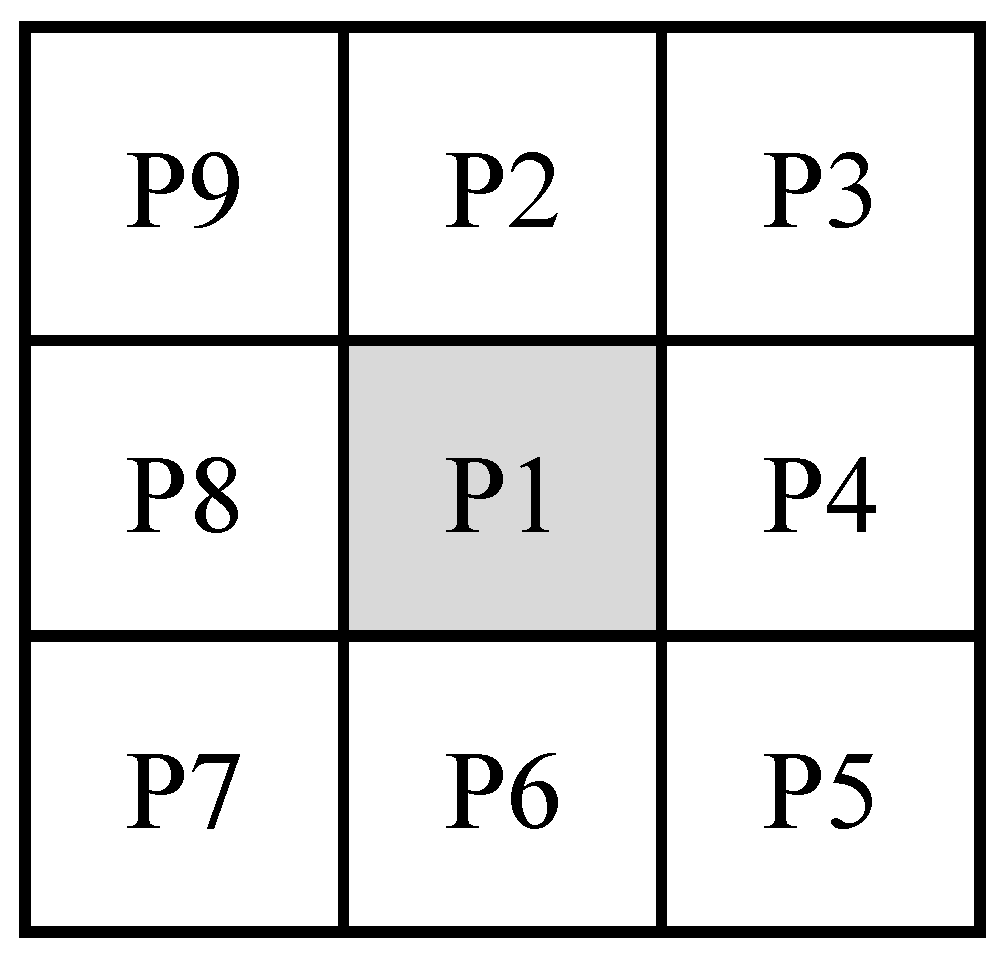
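Contextual filtering adapts the filter at each pixel to the local ridge orientation and ridge frequency; its classic form, due to Hong et al. [23], uses even-symmetric Gabor filters. The sketch below is a minimal pure-Python illustration of that idea, not the authors' implementation. It assumes the orientation and frequency maps have already been estimated, and the names `gabor_kernel` and `contextual_filter`, the isotropic envelope with σ = 4, and the 11 × 11 window are illustrative choices.

```python
import math


def gabor_kernel(ridge_angle, freq, sigma=4.0, half=5):
    """Even-symmetric Gabor kernel tuned to one ridge orientation and frequency.

    ridge_angle: ridge direction in radians, measured from the x (column) axis.
    freq: ridge frequency in cycles per pixel (1 / inter-ridge distance).
    Returns a (2*half+1) x (2*half+1) list of lists.
    """
    normal = ridge_angle + math.pi / 2  # the cosine wave must oscillate across the ridges
    cos_n, sin_n = math.cos(normal), math.sin(normal)
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            u = x * cos_n + y * sin_n   # coordinate across the ridges
            v = -x * sin_n + y * cos_n  # coordinate along the ridges
            envelope = math.exp(-0.5 * (u * u + v * v) / (sigma * sigma))
            row.append(envelope * math.cos(2 * math.pi * freq * u))
        kernel.append(row)
    return kernel


def contextual_filter(image, orientation, frequency, half=5):
    """Filter every pixel with a Gabor kernel tuned to its own local ridge angle and frequency.

    image, orientation, frequency: equally sized 2-D lists (radians, cycles/pixel).
    Pixels outside the image are treated as 0. The kernel is point-symmetric,
    so the correlation computed here equals convolution.
    """
    rows, cols = len(image), len(image[0])
    cache = {}  # reuse kernels for repeated (angle, frequency) pairs
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            key = (orientation[r][c], frequency[r][c])
            if key not in cache:
                cache[key] = gabor_kernel(key[0], key[1], half=half)
            k = cache[key]
            acc = 0.0
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    rr, cc = r + dy, c + dx
                    if 0 <= rr < rows and 0 <= cc < cols:
                        acc += k[dy + half][dx + half] * image[rr][cc]
            out[r][c] = acc
    return out
```

A pixel whose neighbourhood contains ridges matching the assumed orientation and frequency produces a strong response, while structure in other directions is attenuated, which is what lets the filter bridge small ridge gaps and suppress noise.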

3.1.2. Thinning and Dilation
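Thinning reduces each ridge of the binarized, enhanced image to a one-pixel-wide skeleton so that ridge endings and bifurcations can be located. Zhang and Suen's parallel algorithm [28], which appears in this paper's reference list, is one widely used choice. The sketch below is a minimal pure-Python version of it; the function name and the list-of-lists image format are assumptions, and it is not necessarily the thinning procedure the authors applied.

```python
def zhang_suen_thin(image):
    """Thin a binary image (list of lists, 1 = ridge) to a one-pixel-wide skeleton."""
    img = [row[:] for row in image]  # work on a copy; the input is left untouched
    rows, cols = len(img), len(img[0])

    def neighbours(r, c):
        # P2..P9 in Zhang-Suen notation: N, NE, E, SE, S, SW, W, NW.
        return [img[r - 1][c], img[r - 1][c + 1], img[r][c + 1], img[r + 1][c + 1],
                img[r + 1][c], img[r + 1][c - 1], img[r][c - 1], img[r - 1][c - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):  # the two sub-iterations of each pass
            to_delete = []
            for r in range(1, rows - 1):
                for c in range(1, cols - 1):
                    if img[r][c] != 1:
                        continue
                    p = neighbours(r, c)
                    p2, _, p4, _, p6, _, p8, _ = p
                    b = sum(p)  # number of ridge neighbours
                    # number of 0 -> 1 transitions in the circular sequence P2..P9, P2
                    a = sum(1 for i in range(8) if p[i] == 0 and p[(i + 1) % 8] == 1)
                    if not (2 <= b <= 6 and a == 1):
                        continue
                    if step == 0:  # remove south-east boundary and north-west corner pixels
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:          # remove north-west boundary and south-east corner pixels
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if ok:
                        to_delete.append((r, c))
            # Delete in parallel, only after the whole sub-iteration has been evaluated.
            for r, c in to_delete:
                img[r][c] = 0
            if to_delete:
                changed = True
    return img
```

Deleting pixels only after each full sub-iteration is what makes the method "parallel": every decision in a sub-iteration sees the same image state, which keeps the skeleton centred and connected.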

3.2. Minutiae Feature Extraction

3.3. Minutiae Feature Matching

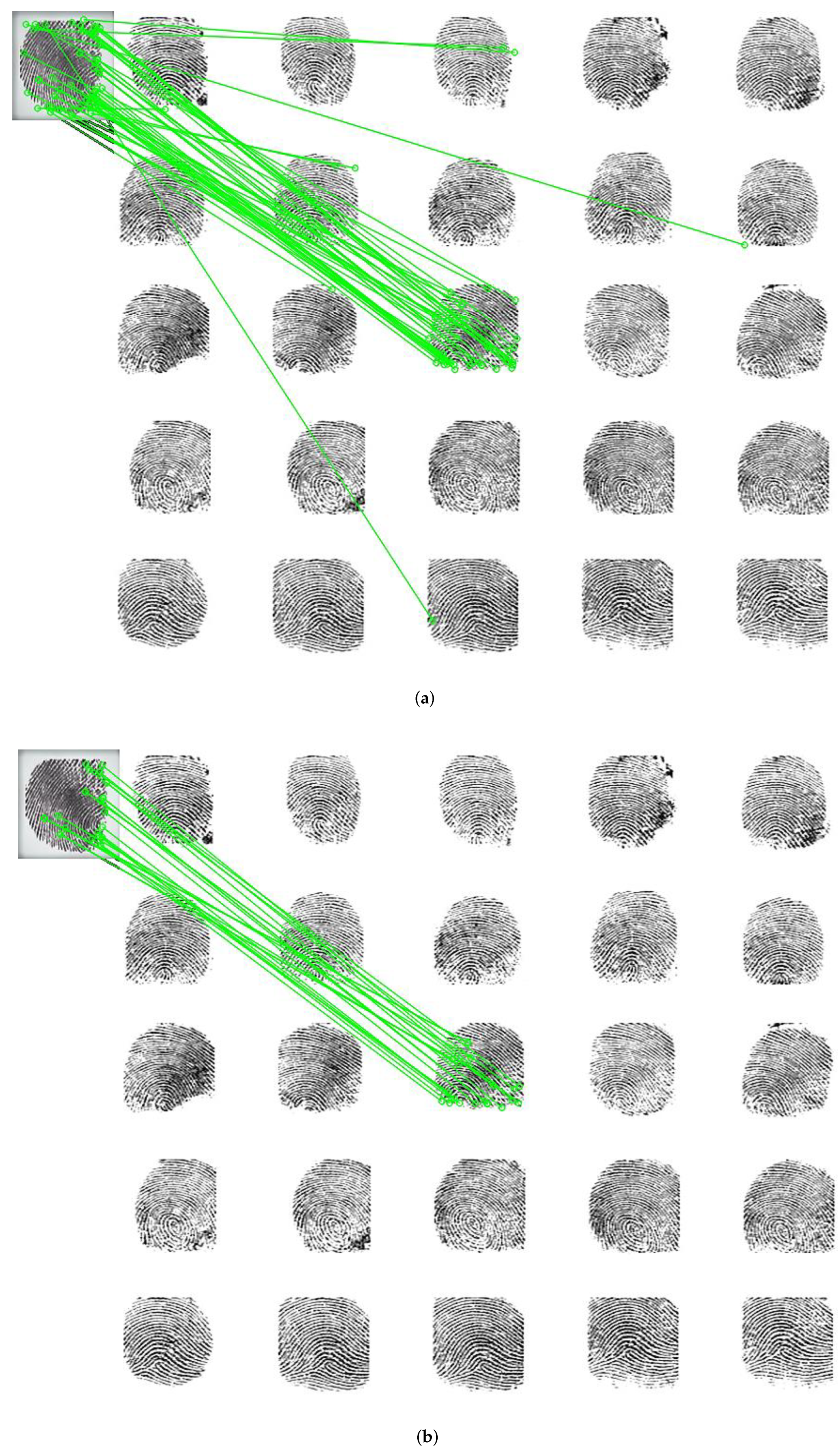

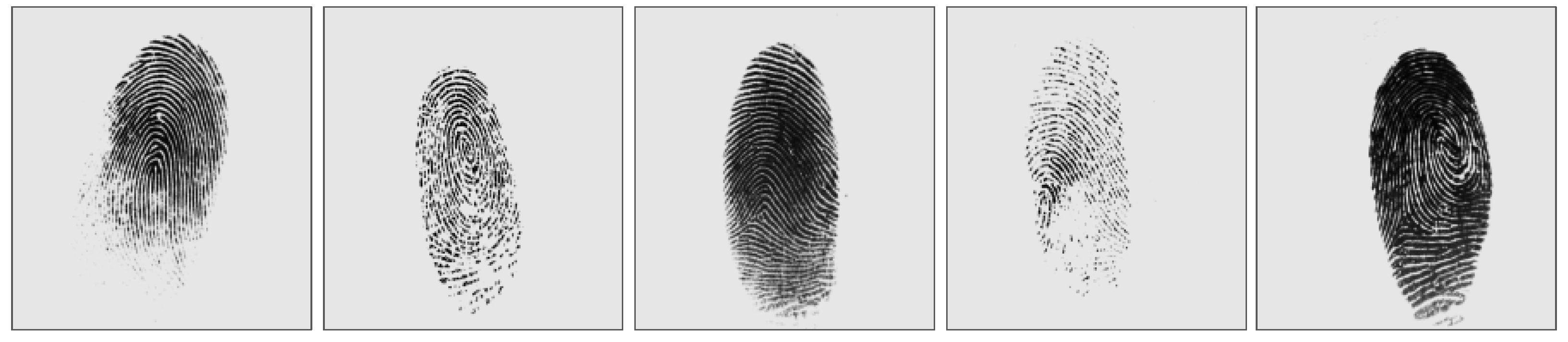
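SIFT descriptors are usually matched by nearest-neighbour search in descriptor space, and Lowe [30] proposed rejecting ambiguous matches by requiring the nearest neighbour to be clearly closer than the second nearest (a distance ratio below about 0.8). The sketch below illustrates that ratio test with brute-force Euclidean search. It is a generic illustration, not the paper's improved matching scheme; the function name and the 0.8 default are assumptions.

```python
import math


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to desc_b using Lowe's distance-ratio test.

    desc_a, desc_b: lists of equal-length numeric vectors (e.g. 128-D SIFT descriptors).
    Returns a list of (index_in_a, index_in_b) pairs that pass the test.
    """
    if len(desc_b) < 2:
        return []  # the test needs a second-nearest neighbour
    matches = []
    for i, d in enumerate(desc_a):
        # Brute-force search: distances to every descriptor in desc_b, nearest first.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:  # keep only clearly unambiguous nearest neighbours
            matches.append((i, j1))
    return matches
```

For large templates a k-d tree or another approximate nearest-neighbour index would replace the brute-force search; the acceptance rule stays the same.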

4. Experimental Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Singh, P.; Kaur, L. Fingerprint Feature Extraction using Ridges and Valleys. Int. J. Eng. Res. Technol. 2015, 4, 1330–1334.

- Grosz, S.A.; Engelsma, J.J.; Liu, E.; Jain, A.K. C2CL: Contact to Contactless Fingerprint Matching. IEEE Trans. Inf. Forensics Secur. 2022, 17, 196–210.

- Bakheet, S.; Al-Hamadi, A. Chord-length shape features for license plate character recognition. J. Russ. Laser Res. 2020, 41, 156–170.

- Ali, S.F.; Khan, M.A.; Aslam, A.S. Fingerprint matching, spoof and liveness detection: Classification and literature review. Front. Comput. Sci. 2021, 15, 151310.

- Kumar, G.; Bhatia, P.K. A Detailed Review of Feature Extraction in Image Processing Systems. In Proceedings of the 2014 Fourth International Conference on Advanced Computing & Communication Technologies, Rohtak, India, 8–9 February 2014; pp. 5–12.

- Duan, Y.; He, K.; Feng, J.; Lu, J.; Zhou, J. Estimating 3D Finger Pose via 2D-3D Fingerprint Matching. In Proceedings of the 27th International Conference on Intelligent User Interfaces, Helsinki, Finland, 22–25 March 2022.

- Bakheet, S.; Al-Hamadi, A. Robust hand gesture recognition using multiple shape-oriented visual cues. EURASIP J. Image Video Process. 2021, 2021, 26.

- Mali, K.; Bhattacharya, S. Fingerprint recognition using global and local structures. Int. J. Comput. Sci. Eng. 2011, 3, 161–172.

- Alonso-Fernandez, F.; Bigun, J.; Fierrez, J.; Fronthaler, H.; Kollreider, K.; Ortega-Garcia, J. Fingerprint recognition. In Guide to Biometric Reference Systems and Performance Evaluation; Springer: London, UK, 2009; pp. 51–88.

- Bakheet, S.; Al-Hamadi, A. A framework for instantaneous driver drowsiness detection based on improved HOG features and naïve Bayesian classification. Brain Sci. 2021, 11, 240.

- Bader, A.S.; Sagheer, A.M. Finger Vein Identification Based On Corner Detection. J. Theor. Appl. Inf. Technol. 2018, 96, 2696–2705.

- Cao, Y.; Pang, B.; Liu, X.; Shi, Y. An Improved Harris-SIFT Algorithm for Image Matching. In Proceedings of the International Conference on Advanced Hybrid Information Processing, Harbin, China, 17–18 July 2017; pp. 56–64.

- Bakheet, S.; Al-Hamadi, A. A Discriminative Framework for Action Recognition Using f-HOL Features. Information 2016, 7, 68.

- Singh, P.; Kaur, L. Fingerprint feature extraction using morphological operations. In Proceedings of the International Conference on Advances in Computer Engineering and Applications, Cebu, Philippines, 15–17 December 2015; pp. 764–767.

- Singh, K.; Kaur, K.; Sardana, A. Fingerprint feature extraction. Int. J. Comput. Sci. Technol. 2011, 2, 237–241.

- Bakheet, S.; Al-Hamadi, A.; Mofaddel, M.A. Recognition of Human Actions Based on Temporal Motion Templates. Br. J. Appl. Sci. Technol. 2017, 20, 1–11.

- Lian, Q.; Zhang, J.; Chen, S. Extracting fingerprint minutiae based on Harris corner detector. Opt. Tech. 2008, 34, 383–387.

- Bakheet, S.; Al-Hamadi, A.; Youssef, R. A Fingerprint-Based Verification Framework Using Harris and SURF Feature Detection Algorithms. Appl. Sci. 2022, 12, 2028.

- Bakheet, S.; Al-Hamadi, A. Hand gesture recognition using optimized local Gabor features. J. Comput. Theor. Nanosci. 2017, 14, 1380–1389.

- Thakkar, D. Minutiae Based Extraction in Fingerprint Recognition. Available online: https://www.bayometric.com/minutiae-based-extraction-fingerprint-recognition/ (accessed on 10 October 2017).

- Sadek, S.; Abdel-Khalek, S. Generalized α-Entropy Based Medical Image Segmentation. J. Softw. Eng. Appl. 2014, 7, 62–67.

- Bakheet, S.; Al-Hamadi, A. A Hybrid Cascade Approach for Human Skin Segmentation. Br. J. Math. Comput. Sci. 2016, 17, 1–14.

- Hong, L.; Wan, Y.; Jain, A. Fingerprint image enhancement: Algorithm and performance evaluation. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 777–789.

- Sadek, S.; Al-Hamadi, A.; Michaelis, B.; Sayed, U. A New Method for Image Classification Based on Multi-level Neural Networks. In Proceedings of the International Conference on Signal and Image Processing (ICSIP’09), Amsterdam, The Netherlands, 23–25 September 2009; pp. 197–200.

- Bakheet, S.; Al-Hamadi, A. Computer-Aided Diagnosis of Malignant Melanoma Using Gabor-Based Entropic Features and Multilevel Neural Networks. Diagnostics 2020, 10, 822.

- Patel, M.B.; Parikh, S.M.; Patel, A.R. An Improved Thinning Algorithm For Fingerprint Recognition. Int. J. Adv. Res. Comput. Sci. 2017, 8, 1238–1244.

- Hall, R.W. Fast parallel thinning algorithms: Parallel speed and connectivity preservation. Commun. ACM 1989, 32, 124–131.

- Zhang, T.Y.; Suen, C.Y. A Fast Parallel Algorithm for Thinning Digital Patterns. Commun. ACM 1984, 27, 236–239.

- Kocharyan, D. A modified fingerprint image thinning algorithm. Am. J. Softw. Eng. Appl. 2013, 2, 1–6.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Vedaldi, A. An Implementation of SIFT Detector and Descriptor. 2008. Available online: http://cs.tau.ac.il/~turkel/imagepapers/ (accessed on 8 March 2022).

- Lee, Y.; Lee, D.H.; Park, J.H. Revisiting NIZK-Based Technique for Chosen-Ciphertext Security: Security Analysis and Corrected Proofs. Appl. Sci. 2021, 11, 3367.

- Dospinescu, O.; Brodner, P. Integrated Applications with Laser Technology. Inform. Econ. 2013, 17, 53–61.

- Agarwal, D.; Garima; Bansal, A. A Utility of Ridge Contour Points in Minutiae-Based Fingerprint Matching. In Proceedings of the International Conference on Computational Intelligence and Data Engineering, Hyderabad, India, 8–9 August 2020.

- Jiayuan, R.; Yigang, W.; Yun, D. Study on eliminating wrong match pairs of SIFT. In Proceedings of the IEEE 10th International Conference on Signal Processing Proceedings, Ljubljana, Slovenia, 18–20 September 2010; pp. 992–995.

- Omercevic, D.; Drbohlav, O.; Leonardis, A. High-Dimensional Feature Matching: Employing the Concept of Meaningful Nearest Neighbors. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–8.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

- Moravec, H. Towards Automatic Visual Obstacle Avoidance. In Proceedings of the 5th International Joint Conference on Artificial Intelligence (IJCAI’77), Cambridge, MA, USA, 22–25 August 1977; Volume 1, p. 584.

- Maio, D.; Maltoni, D.; Cappelli, R.; Wayman, J.L.; Jain, A.K. FVC 2004: Third Fingerprint Verification Competition. In Proceedings of the International Conference on Biometric Authentication, Hong Kong, China, 15–17 July 2004; Volume 3072, pp. 1–7.

- Lakshmanan, R.; Selvaperumal, S.; Chow, M. Integrated Finger Print Recognition Using Image Morphology and Neural Network. Int. J. Adv. Stud. Comput. Sci. Eng. 2014, 3, 40–48.

- Ali, S.; Prakash, S. 3Dimensional Secured Fingerprint Shell. Pattern Recognit. Lett. 2019, 126, 68–77.

- Arunalatha, J.S.; Tejaswi, V.; Shaila, K.; Anvekar, D.; Venugopal, K.R.; Iyengar, S.S.; Patnaik, L.M. FIVDL: Fingerprint Image Verification using Dictionary Learning. Procedia Comput. Sci. 2015, 54, 482–490.

- Turroni, F.; Maltoni, D.; Cappelli, R.; Maio, D. Improving Fingerprint Orientation Extraction. IEEE Trans. Inf. Forensics Secur. 2011, 6, 1002–1013.

- Alam, B.; Jin, Z.; Yap, W.S.; Goi, B.M. An alignment-free cancelable fingerprint template for bio-cryptosystems. J. Netw. Comput. Appl. 2018, 15, 20–32.

- Yang, J.; Xiong, N.; Vasilakos, A.V. Two-Stage Enhancement Scheme for Low-Quality Fingerprint Images by Learning from the Images. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 235–248.

- Bartunek, J.S.; Nilsson, M.; Sallberg, B.; Claesson, I. Adaptive Fingerprint Image Enhancement With Emphasis on Preprocessing of Data. IEEE Trans. Image Process. 2013, 22, 644–656.

- Gottschlich, C. Curved-Region-Based Ridge Frequency Estimation and Curved Gabor Filters for Fingerprint Image Enhancement. IEEE Trans. Image Process. 2012, 21, 2220–2227.

| Work | Technique | EER (%) |
|---|---|---|
| Proposed method | Improved SIFT Features | 2.01 |
| Ali and Prakash [41] | Fingerprint Shell | 2.02 |
| Arunalatha et al. [42] | Dictionary Learning | 2.04 |
| Turroni et al. [43] | Orientation Extraction | 2.06 |
| Alam et al. [44] | Fingerprint template | 2.07 |
| Yang et al. [45] | Two-Stage Enhancement Scheme | 2.19 |
| Bartunek et al. [46] | Pre-processing | 2.40 |
| Gottschlich [47] | Curved Gabor Filters | 11.97 |
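The equal error rate (EER) reported in the table is the error rate at the decision threshold where the false acceptance rate (FAR) equals the false rejection rate (FRR); lower is better. The sketch below estimates it from lists of genuine and impostor similarity scores by sweeping every observed score as a threshold. It is a simple approximation that assumes higher scores mean better matches; it is not the evaluation code behind the table, and the helper names `far_frr` and `equal_error_rate` are hypothetical.

```python
def far_frr(genuine, impostor, threshold):
    """FAR and FRR at one threshold, assuming higher similarity scores mean a better match."""
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors wrongly accepted
    frr = sum(s < threshold for s in genuine) / len(genuine)     # genuine users wrongly rejected
    return far, frr


def equal_error_rate(genuine, impostor):
    """Approximate EER: try every observed score as a threshold, keep the one where
    |FAR - FRR| is smallest, and return (mean of FAR and FRR there, that threshold)."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


if __name__ == "__main__":
    eer, threshold = equal_error_rate([0.9, 0.8, 0.7, 0.6], [0.1, 0.2, 0.3, 0.65])
    print(f"EER = {100 * eer:.2f}% at threshold {threshold}")  # EER = 25.00% at threshold 0.65
```

Multiplying the returned rate by 100 gives the percentage form used in the table. With only a few scores, FAR and FRR rarely cross exactly, so interpolating between neighbouring thresholds gives a smoother estimate.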


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bakheet, S.; Alsubai, S.; Alqahtani, A.; Binbusayyis, A. Robust Fingerprint Minutiae Extraction and Matching Based on Improved SIFT Features. Appl. Sci. 2022, 12, 6122. https://doi.org/10.3390/app12126122