Evaluating User Safety Aspects of AI-Based Systems in Industrial Occupational Safety: A Critical Review of Research Literature

Abstract

1. Introduction

1.1. AI-Based Systems for Occupational Safety

1.2. Related Research

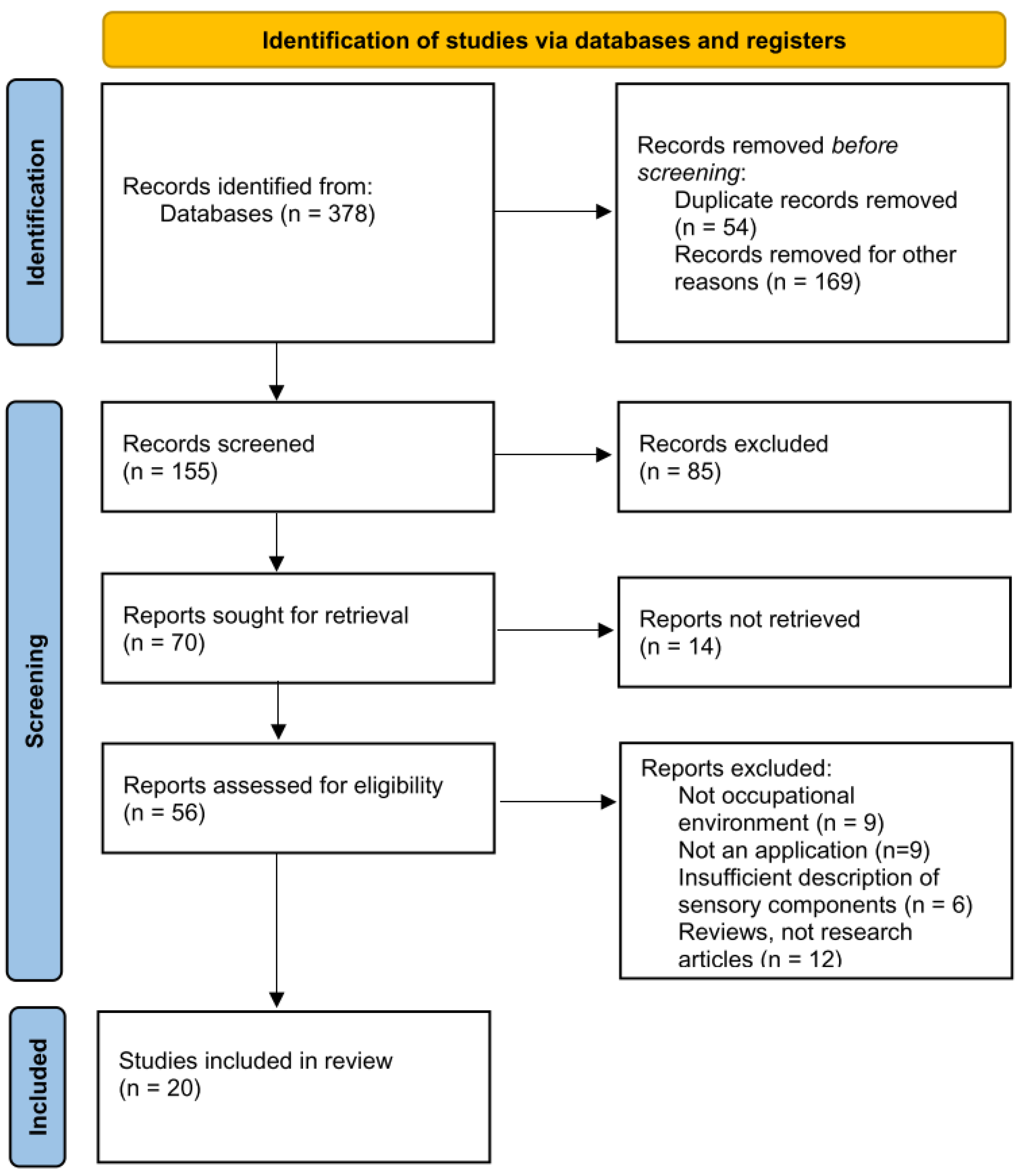

2. Materials and Methods

2.1. Publication Inclusion Criteria

- Research papers, published in English, that explore the application of AI-driven sensor systems.

- The applications are built around the human operator and follow a human-centered design.

- Applications in which the human operator is either assisted or monitored by the system.

- Applications used in different occupational industrial environments.

- Articles published in or after 2022.
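
The criteria above can be read as a screening predicate over candidate publications. The following is a minimal sketch of that idea, not the selection tooling used for this review: the `Publication` record, its field names, and the three sample entries are hypothetical placeholders, and the year cutoff is passed in as `min_year` rather than hard-coded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Publication:
    """Hypothetical screening record; field names are illustrative only."""
    title: str
    year: int
    language: str
    uses_ai_sensor_system: bool
    human_centered: bool
    operator_role: str  # "assisted", "monitored", or "none"
    industrial_setting: bool


def meets_inclusion_criteria(pub: Publication, min_year: int) -> bool:
    """Return True only if every inclusion criterion holds for the record."""
    return (
        pub.uses_ai_sensor_system
        and pub.language.lower() == "english"
        and pub.human_centered
        and pub.operator_role in {"assisted", "monitored"}
        and pub.industrial_setting
        and pub.year >= min_year
    )


# Placeholder records, invented purely to exercise the predicate.
candidates = [
    Publication("Wearable fatigue monitor", 2023, "English", True, True, "monitored", True),
    Publication("Warehouse robot path planning", 2023, "English", True, False, "none", True),
    Publication("Older helmet detection study", 2019, "English", True, True, "monitored", True),
]

included = [p.title for p in candidates if meets_inclusion_criteria(p, min_year=2022)]
print(included)
```

Expressing the criteria as one conjunction makes it easy to see that a record is excluded as soon as any single criterion fails.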

2.2. Publication Selection Process

2.3. Categorizing Publications and Formulating Research Questions

- RQ1: How can AI-based systems and technologies implemented for occupational safety in industrial settings be categorized based on the following aspects?

- RQ1.1: Which AI-based wearable systems and technologies are implemented for occupational safety in industrial settings?

- RQ1.2: Which AI-based systems and technologies deployed in the environment are implemented for occupational safety in industrial settings?

- RQ1.3: Which preventive, reactive, and post-incident features of these AI-based safety applications can be identified?

- RQ2: Given that some AI-based applications for occupational safety inherently introduce new risks while others do not, does the respective scientific literature address these risks? Additionally, when considering both explicitly addressed risks and those not posed by the design of the respective systems, which applications can be considered safer or less safe overall?

3. Results

3.1. Table Summarizing Findings for RQ1

| Type of Sensor/Data Collected | R1: Preventive | R2: Reactive | R3: Post-Incident |
|---|---|---|---|
| Wearable Systems | | | |
| Physiological Monitoring | [14,15,16,17] | [15,17] | |
| Environmental Monitoring | [14,15,16,17,18,19] | [15] | |
| Movement and Posture Monitoring | [16,17,18,19,20,21] [22] * [23] | [17] [22] * [23] | [13,21,24] [22] * |
| Proximity and Location Tracking | [14,16,21,25] [22] * | [21,25] [22] * | [13,21] [22] * |
| Systems Deployed in Environment | | | |
| Image Sensors | [21,26,27,28,29,30,31] [32] * [22] * [33] * [34] * [35] * [36] * [37] * [38] * [39] * [40] * [23] | [32] * [22] * [33] * [34] * [35] * [37] * [38] * [39] * [40] * [23] | [32] * [22] * [33] * [34] * [35] * [37] * [38] * [39] * [40] * |
| Environmental Sensors | [16,17,19,41,42] | [17,41,42] | |
| Motion and Proximity Sensors | [19] [22] * [35] * [37] * [39] * [40] * | [22] * [35] * [37] * [39] * | [22] * [35] * [37] * [39] * |
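
The table can also be treated as a small lookup structure. The sketch below transcribes only the wearable-system rows into sets of reference numbers (the asterisk markers are dropped) and asks which references appear under all three feature types within one sensor category. The names `WEARABLE` and `full_coverage` are illustrative assumptions, not part of the review.

```python
# Wearable-system rows of the RQ1 table as sets of reference numbers.
# Asterisk markers from the table are intentionally omitted here.
WEARABLE = {
    "Physiological Monitoring": {
        "R1": {14, 15, 16, 17}, "R2": {15, 17}, "R3": set(),
    },
    "Environmental Monitoring": {
        "R1": {14, 15, 16, 17, 18, 19}, "R2": {15}, "R3": set(),
    },
    "Movement and Posture Monitoring": {
        "R1": {16, 17, 18, 19, 20, 21, 22, 23},
        "R2": {17, 22, 23},
        "R3": {13, 21, 22, 24},
    },
    "Proximity and Location Tracking": {
        "R1": {14, 16, 21, 22, 25},
        "R2": {21, 22, 25},
        "R3": {13, 21, 22},
    },
}


def full_coverage(rows):
    """Map each category to the references listed under R1, R2, and R3."""
    result = {}
    for category, features in rows.items():
        common = features["R1"] & features["R2"] & features["R3"]
        if common:
            result[category] = sorted(common)
    return result


coverage = full_coverage(WEARABLE)
print(coverage)
```

Set intersection keeps the query declarative: a category with an empty R3 cell, such as physiological monitoring, drops out automatically.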

3.2. Findings for RQ1.1: Which AI-Based Wearable Systems and Technologies Are Implemented for Occupational Safety in Industrial Settings?

3.2.1. Physiological Monitoring

3.2.2. Environmental Monitoring

3.2.3. Movement and Posture Monitoring

3.2.4. Proximity and Location Tracking

3.3. Findings for RQ1.2: Which AI-Based Systems and Technologies Deployed in the Environment Are Implemented for Occupational Safety in Industrial Settings?

3.3.1. Image Sensors

3.3.2. Environmental Sensors

3.3.3. Motion and Proximity Sensors

3.4. Findings for RQ1.3: Which Preventive, Reactive, and Post-Incident Features of These AI-Based Safety Applications Can Be Identified?

3.4.1. Preventive Features

3.4.2. Reactive Features

3.4.3. Post-Incident Features

3.5. Findings for RQ2: Given That Some AI-Based Applications for Occupational Safety Inherently Introduce New Risks While Others Do Not, Does the Respective Scientific Literature Address These Risks? Additionally, When Considering Both Explicitly Addressed Risks and Those Not Posed by the Design of the Respective Systems, Which Applications Can Be Considered Safer or Less Safe Overall?

- Fairness: AI systems for automated decision-making can produce unfair outcomes due to biases in data, objective functions, and human input. Additionally, unfairness can arise from biases in the design, problem formulation, and deployment decisions of AI systems.

- Security: In AI, particularly in machine learning systems, new security issues such as data poisoning, adversarial attacks, and model stealing must be addressed in addition to traditional information and system security concerns. These emerging threats pose unique challenges that go beyond classical security measures.

- Safety: Safety relates to the expectation that a system does not, under defined conditions, lead to a state in which human life, health, property, or the environment is endangered. The use of AI systems in automated vehicles, manufacturing devices, and robots can introduce safety-related risks.

- Privacy: Privacy involves individuals controlling how their information is collected, stored, processed, and disclosed. Given that AI systems often rely on big data, particularly sensitive data like health records, there are significant concerns about privacy protection, potential misuse, and ethical impacts, including discrimination and freedom of expression.

- Robustness: Robustness is the ability of a system to maintain its level of performance under the various circumstances of its use. AI systems pose additional challenges here because of their complex, nonlinear characteristics.

- Transparency and explainability: Transparency involves both the characteristics of organizations operating AI systems and the systems themselves. It requires organizations to disclose their use of AI, data handling practices, and risk management measures, while AI systems should provide stakeholders with information on their capabilities, limitations, and explainability to understand and assess their outcomes.

- Environmental impact: AI can impact the environment both positively, such as reducing emissions in gas turbines, and negatively, due to the high resource consumption during training phases. These environmental risks and their impacts must be considered when developing and deploying AI systems.

- Accountability: Accountability in AI involves both organizational responsibility for decisions and actions, and the ability to trace system actions to their source. The use of AI can change existing accountability frameworks, raising questions about who is responsible when AI systems perform actions.

- Maintainability: Maintainability is related to the ability of the organization to handle modifications of the AI system in order to correct defects or adjust to new requirements. Because AI systems based on machine learning are trained and do not follow a rule-based approach, the maintainability of an AI system and its implications need to be investigated.

- Availability and quality of training and test data: AI systems based on machine learning require high-quality, sufficiently diverse training and test data to ensure the intended behavior and strong predictive power. The data must be validated for currency and relevance, and the amount required varies with the intended functionality and the complexity of the environment.

- AI expertise: Unlike traditional software solutions, AI systems require interdisciplinary specialists for their development, deployment, and assessment. End users are strongly encouraged to familiarize themselves with the functionality of the system.
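
The eleven risk sources listed above lend themselves to a simple checklist. The sketch below encodes them as an enumeration and reports, for a given paper, which sources are marked and which are not. It rests on two assumptions: that a check mark in the RQ2 table means the risk is either addressed or not posed by the system's design, and that a plain ratio is an acceptable illustrative summary. It is not the scoring method used in this review. The two example sets are transcribed from the table rows for Bong et al. [13] and Donati et al. [14].

```python
from enum import Enum


class RiskSource(Enum):
    """The eleven AI-related risk sources, in the order listed above."""
    FAIRNESS = "Fairness"
    SECURITY = "Security"
    SAFETY = "Safety"
    PRIVACY = "Privacy"
    ROBUSTNESS = "Robustness"
    TRANSPARENCY = "Transparency and explainability"
    ENVIRONMENTAL_IMPACT = "Environmental impact"
    ACCOUNTABILITY = "Accountability"
    MAINTAINABILITY = "Maintainability"
    DATA = "Availability and quality of training and test data"
    AI_EXPERTISE = "AI expertise"


def unmarked(marked: set) -> list:
    """Names of risk sources without a check mark, in list order."""
    return [risk.value for risk in RiskSource if risk not in marked]


def coverage_ratio(marked: set) -> float:
    """Share of the eleven risk sources that carry a check mark (illustrative)."""
    return len(marked) / len(RiskSource)


R = RiskSource
bong_13 = {R.PRIVACY, R.DATA}
donati_14 = {
    R.SAFETY, R.PRIVACY, R.ROBUSTNESS, R.TRANSPARENCY,
    R.ACCOUNTABILITY, R.MAINTAINABILITY, R.DATA, R.AI_EXPERTISE,
}

print(unmarked(donati_14))
print(round(coverage_ratio(bong_13), 2), round(coverage_ratio(donati_14), 2))
```

A ratio of this kind weights every risk source equally, which is a simplification; a real assessment might weight safety or privacy more heavily for a given deployment.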

4. Discussion, Limitations, and Future Work

4.1. Summarizing Findings

4.1.1. RQ1.1

4.1.2. RQ1.2

4.1.3. RQ1.3

4.1.4. RQ2

4.2. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Badri, A.; Boudreau-Trudel, B.; Souissi, A.S. Occupational health and safety in the industry 4.0 era: A cause for major concern? Saf. Sci. 2018, 109, 403–411.

2. Peres, R.S.; Jia, X.; Lee, J.; Sun, K.; Colombo, A.W.; Barata, J. Industrial artificial intelligence in industry 4.0-systematic review, challenges and outlook. IEEE Access 2020, 8, 220121–220139.

3. Pishgar, M.; Issa, S.F.; Sietsema, M.; Pratap, P.; Darabi, H. REDECA: A novel framework to review artificial intelligence and its applications in occupational safety and health. Int. J. Environ. Res. Public Health 2021, 18, 6705.

4. Zorzenon, R.; Lizarelli, F.L.; Daniel, B.d.A. What is the potential impact of industry 4.0 on health and safety at work? Saf. Sci. 2022, 153, 105802.

5. Patel, V.; Chesmore, A.; Legner, C.M.; Pandey, S. Trends in workplace wearable technologies and connected-worker solutions for next-generation occupational safety, health, and productivity. Adv. Intell. Syst. 2022, 4, 2100099.

6. Guo, B.H.; Zou, Y.; Fang, Y.; Goh, Y.M.; Zou, P.X. Computer vision technologies for safety science and management in construction: A critical review and future research directions. Saf. Sci. 2021, 135, 105130.

7. Vukicevic, A.M.; Petrovic, M.N.; Knezevic, N.M.; Jovanovic, K.M. Deep learning-based recognition of unsafe acts in manufacturing industry. IEEE Access 2023, 11, 103406–103418.

8. Tran, T.A.; Abonyi, J.; Kovács, L.; Eigner, G.; Ruppert, T. Heart rate variability measurement to assess work-related stress of physical workers in manufacturing industries-protocol for a systematic literature review. In Proceedings of the 2022 IEEE 20th Jubilee International Symposium on Intelligent Systems and Informatics (SISY), Subotica, Serbia, 15–17 September 2022; pp. 000313–000318.

9. Flor-Unda, O.; Fuentes, M.; Dávila, D.; Rivera, M.; Llano, G.; Izurieta, C.; Acosta-Vargas, P. Innovative technologies for occupational health and safety: A scoping review. Safety 2023, 9, 35.

10. Fisher, E.; Flynn, M.A.; Pratap, P.; Vietas, J.A. Occupational safety and health equity impacts of artificial intelligence: A scoping review. Int. J. Environ. Res. Public Health 2023, 20, 6221.

11. Akyıldız, C. Integration of digitalization into occupational health and safety and its applicability: A literature review. Eur. Res. J. 2023, 9, 1509–1519.

12. Auernhammer, J. Human-centered AI: The role of Human-centered Design Research in the development of AI. In Proceedings of the Synergy—DRS International Conference 2020, Online, 11–14 August 2020; Boess, S., Cheung, M., Cain, R., Eds.

13. Bong, P.C.; Tee, M.K.T.; Jo, H.S. Exploring the Potential of IoT-based Injury Detection and Response by Combining Human Activity Tracking and Indoor Localization. In Proceedings of the 2022 International Conference on Computer and Drone Applications (IConDA), Kuching, Malaysia, 28–29 November 2022; pp. 28–33.

14. Donati, M.; Olivelli, M.; Giovannini, R.; Fanucci, L. RT-PROFASY: Enhancing the well-being, safety and productivity of workers by exploiting wearable sensors and artificial intelligence. In Proceedings of the 2022 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), Trento, Italy, 7–9 June 2022; pp. 69–74.

15. Salahudeen, M.; Rahul, K.; Kurian, N.S.; Vardhan, H.; Amirthavalli, M. Smart PPE using LoRaWAN Technology. In Proceedings of the 2023 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 26–28 April 2023; pp. 1272–1279.

16. Moe, S.J.S.; Kim, B.W.; Khan, A.N.; Rongxu, X.; Tuan, N.A.; Kim, K.; Kim, D.H. Collaborative Worker Safety Prediction Mechanism Using Federated Learning Assisted Edge Intelligence in Outdoor Construction Environment. IEEE Access 2023, 11, 109010–109026.

17. Raman, R.; Mitra, A. IoT-Enhanced Workplace Safety for Real-Time Monitoring and Hazard Detection for Occupational Health. In Proceedings of the 2023 International Conference on Artificial Intelligence for Innovations in Healthcare Industries (ICAIIHI), Raipur, India, 29–30 December 2023; Volume 1, pp. 1–5.

18. Astocondor-Villar, J.; Grados, J.; Mendoza-Apaza, F.; Chavez-Sanchez, W.; Narciso-Gomez, K.; Grados-Espinoza, A.; Pascual-Panduro, P. Low-cost Biomechanical System for Posture Rectification using IoT Technology. In Proceedings of the 2023 6th International Conference on Electronics, Communications and Control Engineering, Fukuoka, Japan, 24–26 March 2023; pp. 271–277.

19. Sangeethalakshmi, K.; Lakshmi, V.V.; Malathi, N.; Velmurugan, S. Smart Ergonomic Practices With IoT and Cloud Computing for Injury Prevention and Human Motion Analysis. In Proceedings of the 2023 International Conference on Artificial Intelligence for Innovations in Healthcare Industries (ICAIIHI), Raipur, India, 29–30 December 2023; Volume 1, pp. 1–6.

20. Narteni, S.; Orani, V.; Cambiaso, E.; Rucco, M.; Mongelli, M. On the Intersection of Explainable and Reliable AI for physical fatigue prediction. IEEE Access 2022, 10, 76243–76260.

21. Rudberg, M.; Sezer, A.A. Digital tools to increase construction site safety. In Proceedings of the 2022 ARCOM Association of Researchers in Construction Management, Glasgow, UK, 5–7 September 2022.

22. TrioMobil. 2024. Available online: https://www.triomobil.com/en (accessed on 11 July 2024).

23. Khan, M.; Khalid, R.; Anjum, S.; Tran, S.V.T.; Park, C. Fall prevention from scaffolding using computer vision and IoT-based monitoring. J. Constr. Eng. Manag. 2022, 148, 04022051.

24. Bonifazi, G.; Corradini, E.; Ursino, D.; Virgili, L.; Anceschi, E.; De Donato, M.C. A machine learning based sentient multimedia framework to increase safety at work. Multimed. Tools Appl. 2022, 81, 141–169.

25. Kim, Y.; Choi, Y. Smart helmet-based proximity warning system to improve occupational safety on the road using image sensor and artificial intelligence. Int. J. Environ. Res. Public Health 2022, 19, 16312.

26. Ran, S.; Weise, T.; Wu, Z. Chemical safety sign detection: A survey and benchmark. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; pp. 1–7.

27. Wang, Y.; Chen, Z.; Wu, Q.; Jiang, H.; Du, T.; Chen, L. Safety Risk Control System for Electric Power Construction Site Based on Artificial Intelligence Technology. In Proceedings of the 2023 Panda Forum on Power and Energy (PandaFPE), Chengdu, China, 27–30 April 2023; pp. 1695–1699.

28. Anjum, S.; Zaidi, S.F.A.; Khalid, R.; Park, C. Artificial Intelligence-based Safety Helmet Recognition on Embedded Devices to Enhance Safety Monitoring Process. In Proceedings of the 2022 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 13–15 September 2022; pp. 1–4.

29. Bian, H.; Liu, Y.; Shi, L.; Lin, Z.; Huang, M.; Zhang, J.; Weng, G.; Zhang, C.; Gao, M. Detection method of helmet wearing based on UAV images and YOLOv7. In Proceedings of the 2023 IEEE 6th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 24–26 February 2023; Volume 6, pp. 1633–1640.

30. Fang, D.; Tian, L.; Li, M.; Wang, Y.; Liu, J.; Chen, X. Factory Worker Safety Mask Monitoring System Based on Deep Learning and AI Edge Computing. In Proceedings of the 2022 5th International Conference on Mechatronics, Robotics and Automation (ICMRA), Wuhan, China, 25–27 November 2022; pp. 96–100.

31. Ji, N.; Ren, G.; Li, S.; Wei, W.; Zhang, M.; Liu, M.; Jiang, B. Improved Yolov5 for Safety Helmet Detection with Hybrid Attention. In Proceedings of the 2023 4th International Conference on Electronic Communication and Artificial Intelligence (ICECAI), Guangzhou, China, 12–14 May 2023; pp. 57–61.

32. Intenseye. 2024. Available online: https://www.intenseye.com/ (accessed on 11 July 2024).

33. Chooch. 2024. Available online: https://www.chooch.com/ (accessed on 11 July 2024).

34. 3motionAI. 2024. Available online: https://3motionai.com/ (accessed on 11 July 2024).

35. Eyyes. 2024. Available online: https://www.eyyes.com/ (accessed on 11 July 2024).

36. EWIworks. 2024. Available online: https://ewiworks.com/ (accessed on 11 July 2024).

37. Nirovision. 2024. Available online: https://www.nirovision.com/ (accessed on 11 July 2024).

38. Geutebrueck. 2024. Available online: https://www.geutebrueck.com/de/index.html (accessed on 11 July 2024).

39. ProtexAI. 2024. Available online: https://www.protex.ai/ (accessed on 11 July 2024).

40. AssertAI. 2024. Available online: https://www.assertai.com/ (accessed on 11 July 2024).

41. Arun, S.; Karthik, S. Using Artificial Intelligence to Enhance Hazard Assessment and Safety Measures in Petrochemical Industries: Development and Analysis. In Proceedings of the 2023 3rd International Conference on Pervasive Computing and Social Networking (ICPCSN), Salem, India, 19–20 June 2023; pp. 823–830.

42. Chang, T.Y.; Chen, G.Y.; Chen, J.J.; Young, L.H.; Chang, L.T. Application of artificial intelligence algorithms and low-cost sensors to estimate respirable dust in the workplace. Environ. Int. 2023, 182, 108317.

43. Pavón, I.; Sigcha, L.; Arezes, P.; Costa, N.; Arcas, G.; López, J. Wearable technology for occupational risk assessment: Potential avenues for applications. In Occupational Safety and Hygiene VI; CRC Press: Boca Raton, FL, USA, 2018; pp. 447–452.

44. High-Level Expert Group on Artificial Intelligence. Ethics Guidelines for Trustworthy AI; Technical Report; European Commission: Brussels, Belgium, 2019.

45. International Organization for Standardization; International Electrotechnical Commission. Information Technology—Artificial Intelligence—Guidance on Risk Management; Technical Report ISO/IEC 23894:2023; ISO/IEC: Geneva, Switzerland, 2023.

| Paper | Fairness | Security | Safety | Privacy | Robustness | Transparency/Explainability | Environmental Impact | Accountability | Maintainability | Data | AI Expertise | Key Goal |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Bong et al. [13] |  |  |  | ✓ |  |  |  |  |  | ✓ |  | IoT-based injury detection via IMU activity tracking and localization |
| Donati et al. [14] |  |  | ✓ | ✓ | ✓ | ✓ |  | ✓ | ✓ | ✓ | ✓ | integrating activity and location data into one monitoring system |
| Salahudeen et al. [15] |  |  |  | ✓ | ✓ | ✓ |  | ✓ | ✓ | ✓ | ✓ | IoT-based coal mine monitoring |
| Moe et al. [16] |  | ✓ |  | ✓ | ✓ | ✓ |  | ✓ |  | ✓ |  | IoT at construction sites using federated learning (FL) |
| Raman et al. [17] |  | ✓ |  | ✓ | ✓ | ✓ |  | ✓ |  | ✓ |  | IoT sensor network for workplace safety |
| Astocondor-Villar et al. [18] |  |  |  | ✓ | ✓ | ✓ |  | ✓ | ✓ | ✓ | ✓ | IoT biomechanical system for spinal rectification |
| Sangeethalakshmi et al. [19] |  | ✓ |  | ✓ | ✓ | ✓ |  | ✓ | ✓ | ✓ | ✓ | IoT human motion analysis, ergonomic smart chair |
| Narteni et al. [20] |  | ✓ |  | ✓ |  | ✓ |  | ✓ | ✓ | ✓ | ✓ | IMUs for fatigue prediction |
| Bonifazi et al. [24] |  |  | ✓ | ✓ | ✓ | ✓ |  | ✓ | ✓ | ✓ | ✓ | IMU wearable device for fall detection |
| Arun et al. [41] |  |  |  | ✓ |  | ✓ |  |  |  | ✓ |  | IoT system for safety in the petrochemical industry |
| Chang et al. [42] |  |  |  | ✓ |  | ✓ |  | ✓ |  | ✓ |  | low-cost IoT sensor technology for estimating respirable dust |
| Rudberg et al. [21] |  | ✓ |  | ✓ | ✓ | ✓ |  | ✓ | ✓ | ✓ | ✓ | five case studies in real-world scenarios |
| Khan et al. [23] |  |  |  | ✓ |  | ✓ |  | ✓ |  | ✓ |  | fall prevention from scaffolding using IoT and vision sensor fusion |
| Kim et al. [25] |  |  |  |  |  | ✓ |  | ✓ |  | ✓ |  | smart-helmet, vision-based proximity warning system |
| Ran et al. [26] |  |  |  |  | ✓ | ✓ |  | ✓ |  | ✓ |  | dataset creation for chemical safety sign detection |
| Wang et al. [27] |  |  |  |  |  |  |  |  |  | ✓ |  | safety risk control system for electric power construction sites |
| Anjum et al. [28] |  |  |  |  | ✓ |  |  |  |  | ✓ |  | safety helmet identification on an edge device |
| Bian et al. [29] |  |  |  |  | ✓ |  |  |  |  | ✓ |  | safety helmet detection based on drone images |
| Fang et al. [30] |  |  |  |  | ✓ |  |  | ✓ |  | ✓ |  | safety mask monitoring based on edge computing |
| Ji et al. [31] |  |  |  |  | ✓ |  |  |  |  |  |  | safety helmet detection |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huber, J.; Anzengruber-Tanase, B.; Schobesberger, M.; Haslgrübler, M.; Fischer-Schwarz, R.; Ferscha, A. Evaluating User Safety Aspects of AI-Based Systems in Industrial Occupational Safety: A Critical Review of Research Literature. Int. J. Environ. Res. Public Health 2025, 22, 705. https://doi.org/10.3390/ijerph22050705

Huber J, Anzengruber-Tanase B, Schobesberger M, Haslgrübler M, Fischer-Schwarz R, Ferscha A. Evaluating User Safety Aspects of AI-Based Systems in Industrial Occupational Safety: A Critical Review of Research Literature. International Journal of Environmental Research and Public Health. 2025; 22(5):705. https://doi.org/10.3390/ijerph22050705

Chicago/Turabian Style: Huber, Jaroslava, Bernhard Anzengruber-Tanase, Martin Schobesberger, Michael Haslgrübler, Robert Fischer-Schwarz, and Alois Ferscha. 2025. "Evaluating User Safety Aspects of AI-Based Systems in Industrial Occupational Safety: A Critical Review of Research Literature." International Journal of Environmental Research and Public Health 22, no. 5: 705. https://doi.org/10.3390/ijerph22050705

APA Style: Huber, J., Anzengruber-Tanase, B., Schobesberger, M., Haslgrübler, M., Fischer-Schwarz, R., & Ferscha, A. (2025). Evaluating User Safety Aspects of AI-Based Systems in Industrial Occupational Safety: A Critical Review of Research Literature. International Journal of Environmental Research and Public Health, 22(5), 705. https://doi.org/10.3390/ijerph22050705