AEducaAR, Anatomical Education in Augmented Reality: A Pilot Experience of an Innovative Educational Tool Combining AR Technology and 3D Printing

Abstract

1. Introduction

2. Materials and Methods

2.1. AEducaAR Tool Development

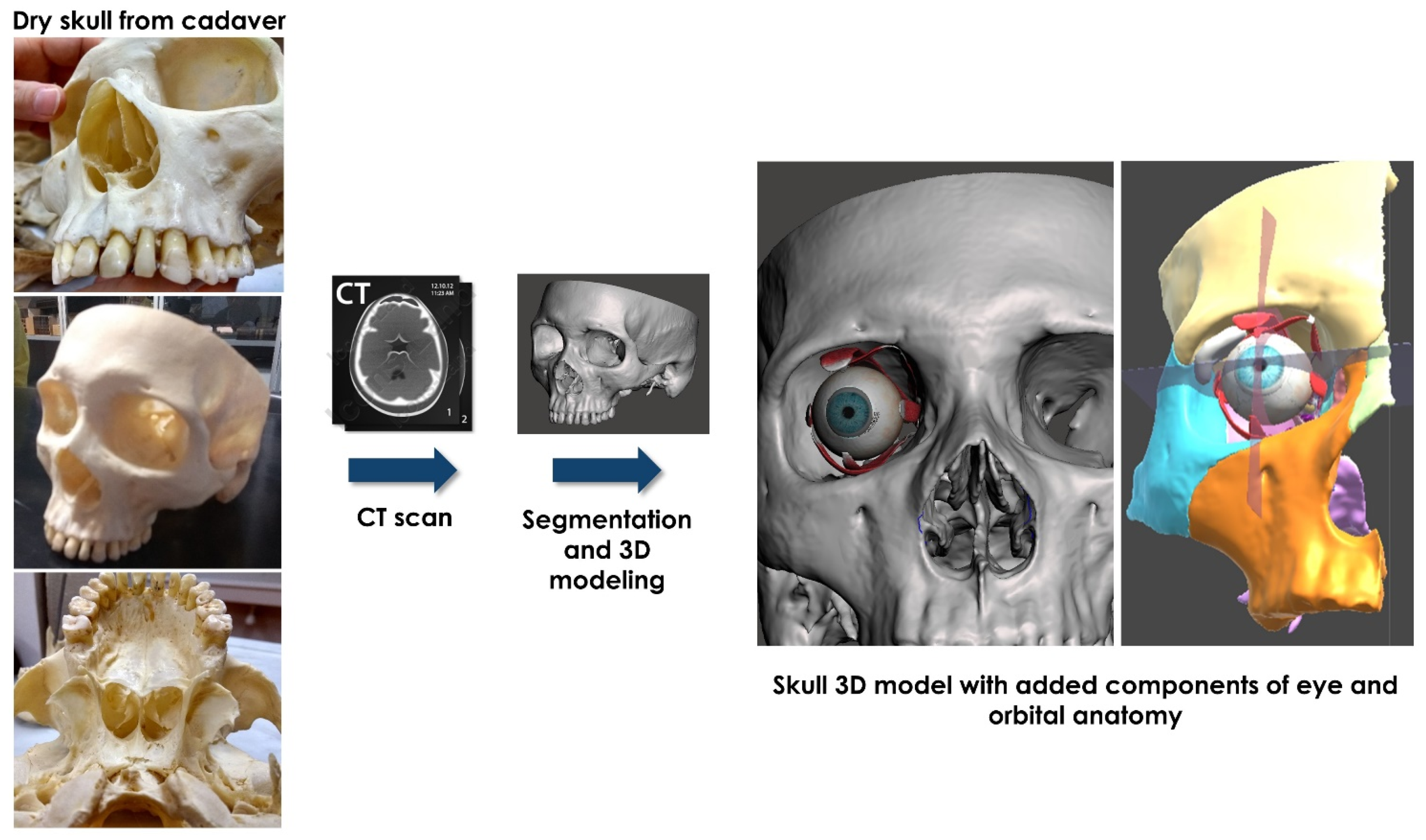

2.1.1. Image Segmentation and Virtual Content Preparation

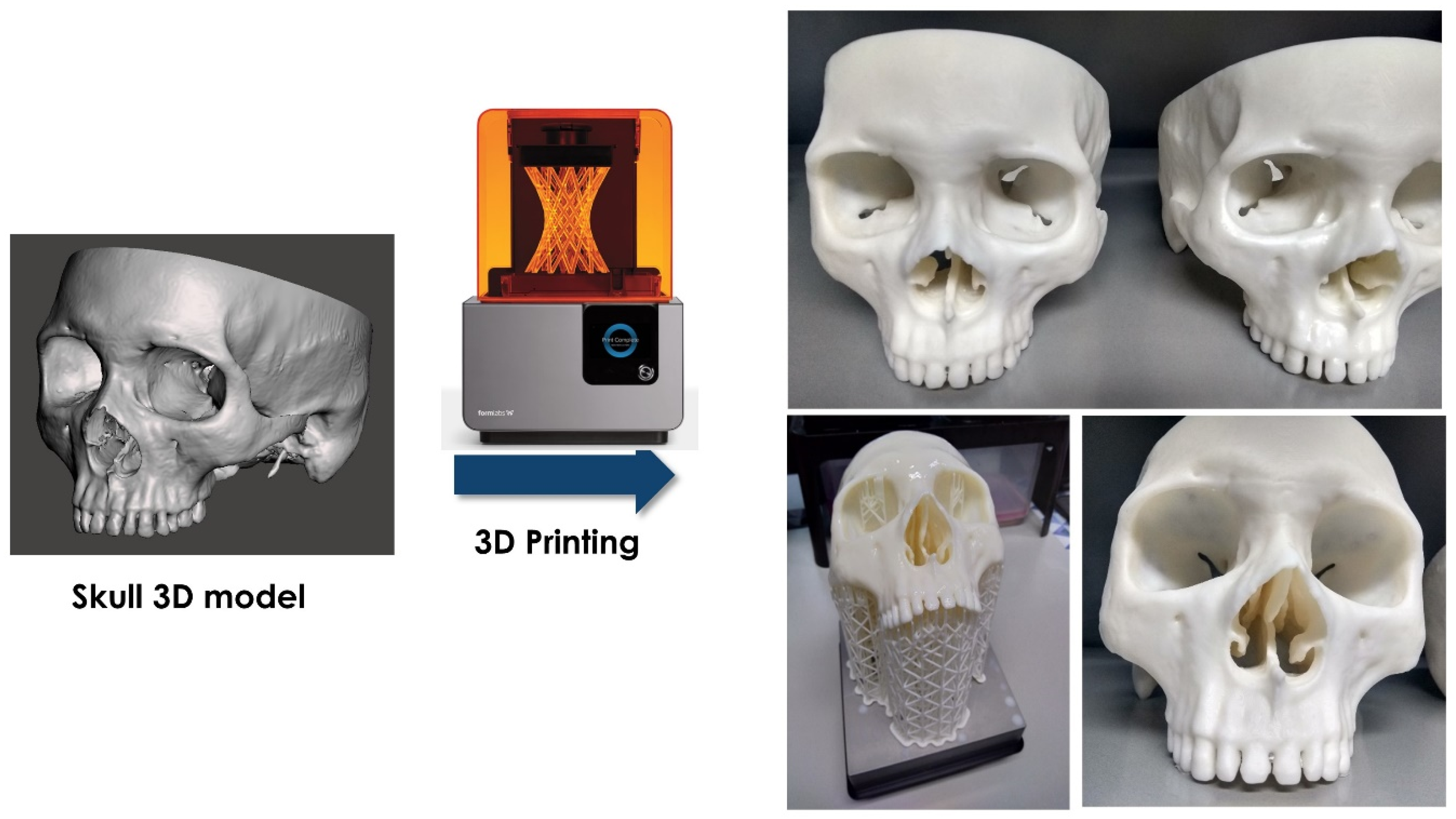

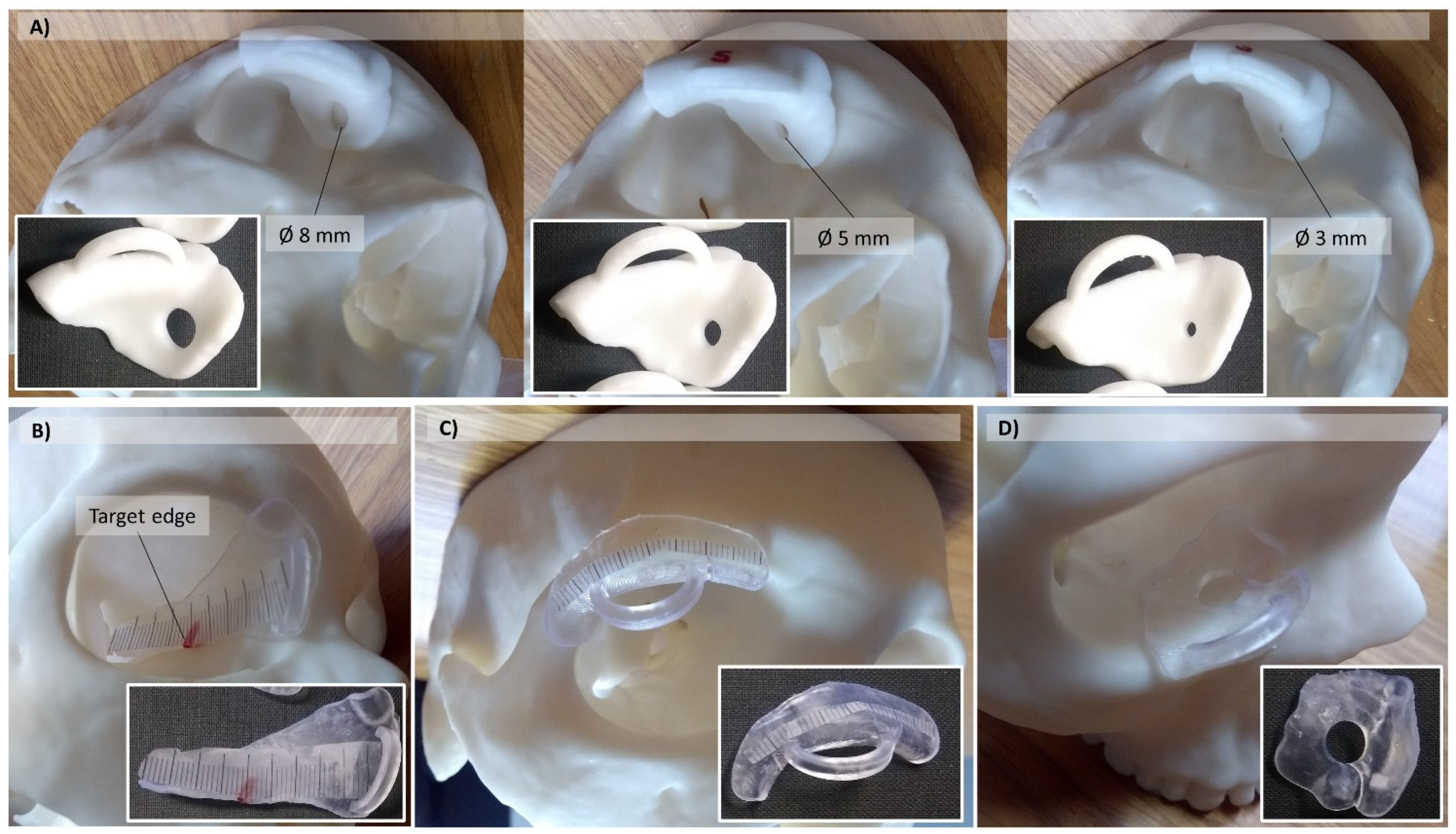

2.1.2. Manufacturing of Human Skull Phantom and Templates for Practical Tasks

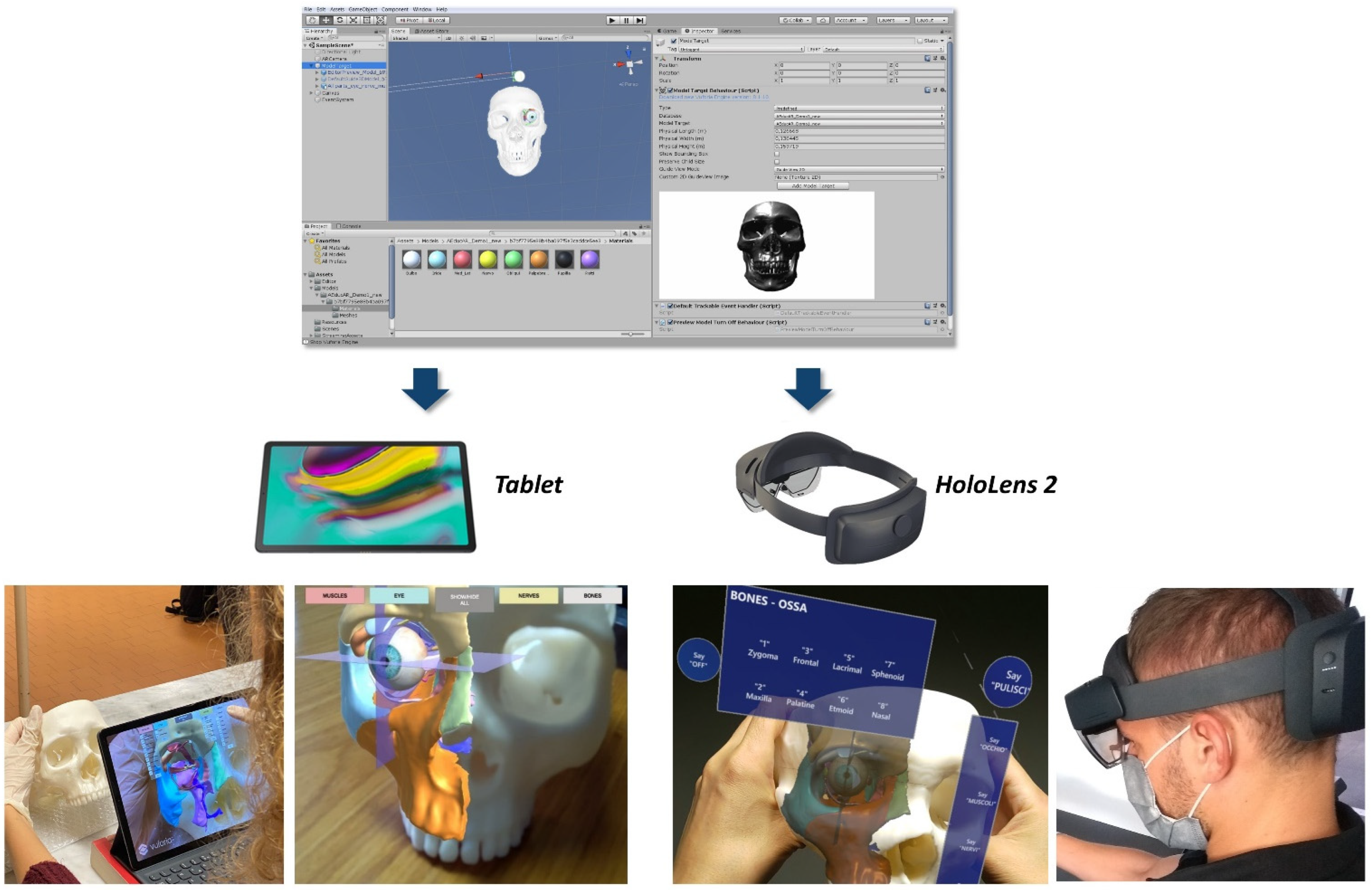

2.1.3. Development of AR Application

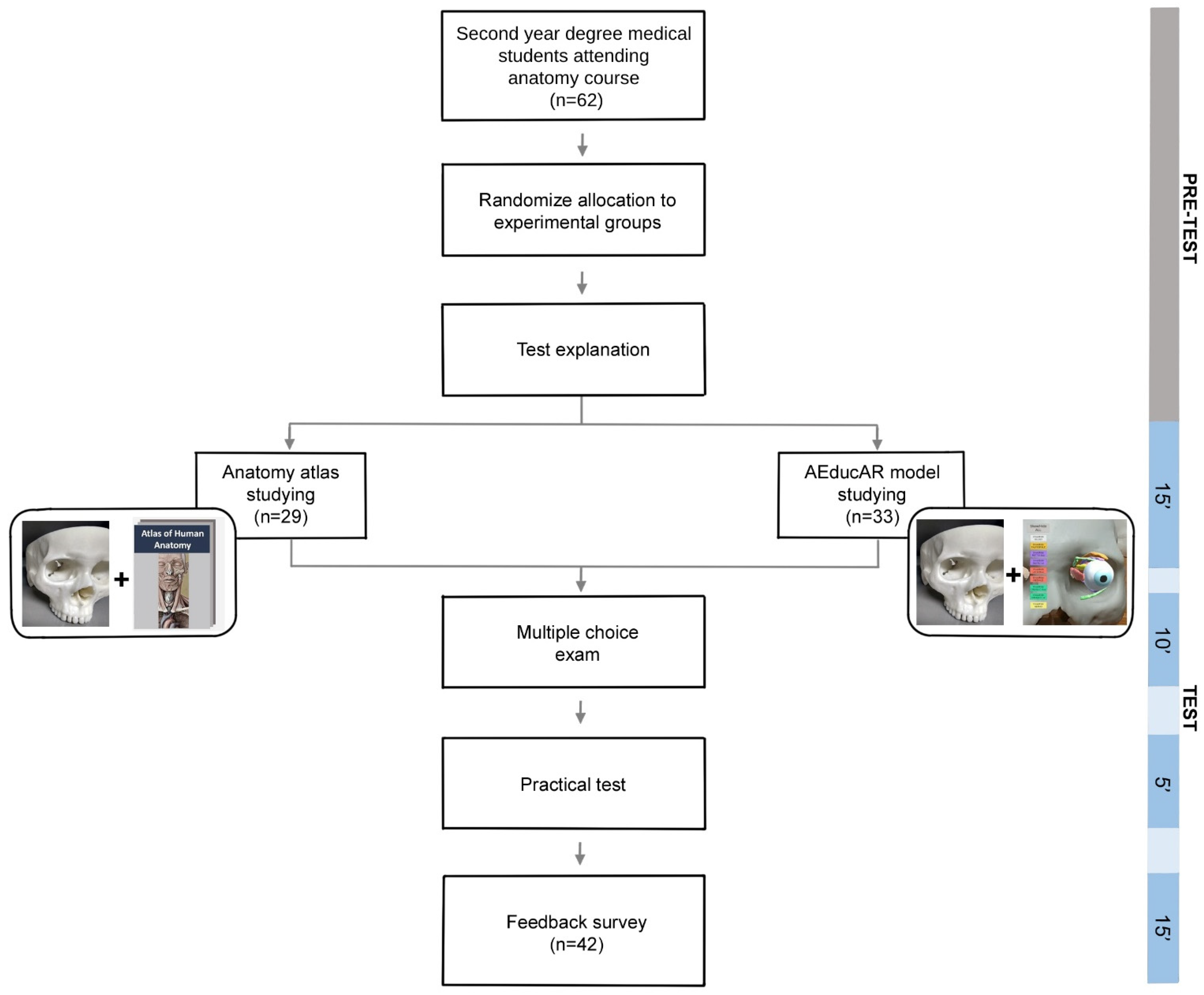

2.2. Study Design

2.3. Statistics

3. Results

3.1. The Resulting AEducaAR Tool

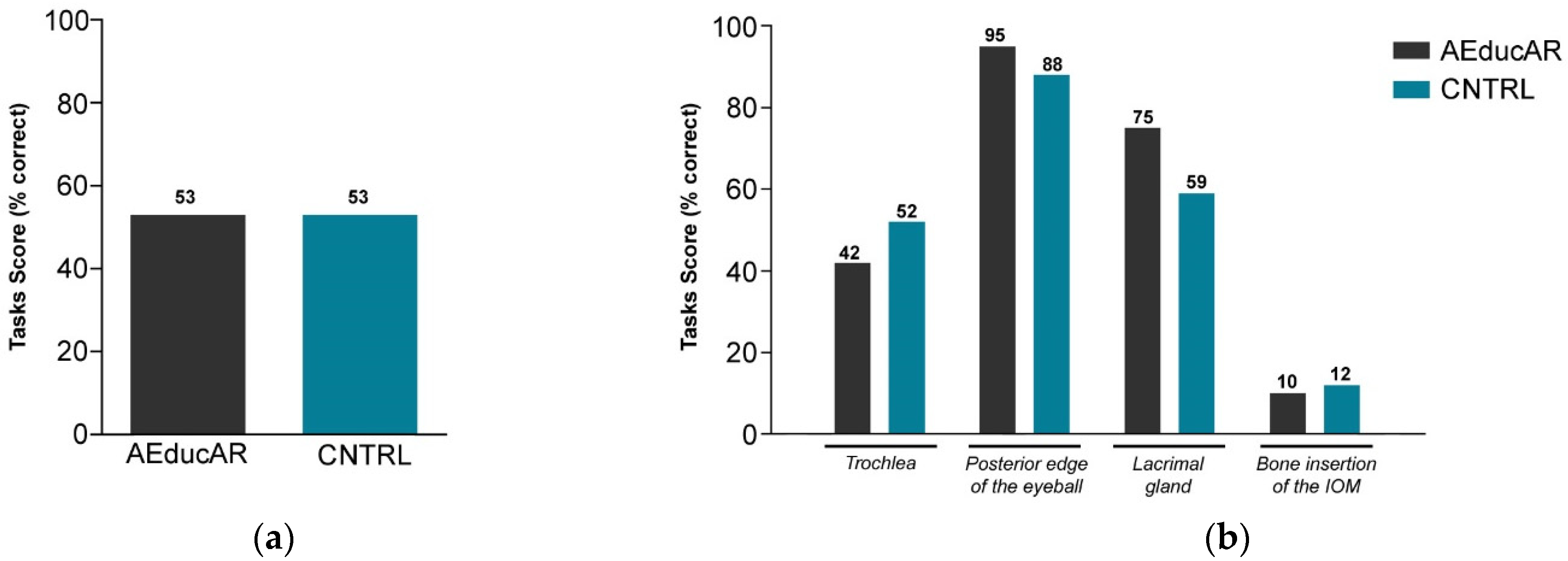

3.2. Test Results

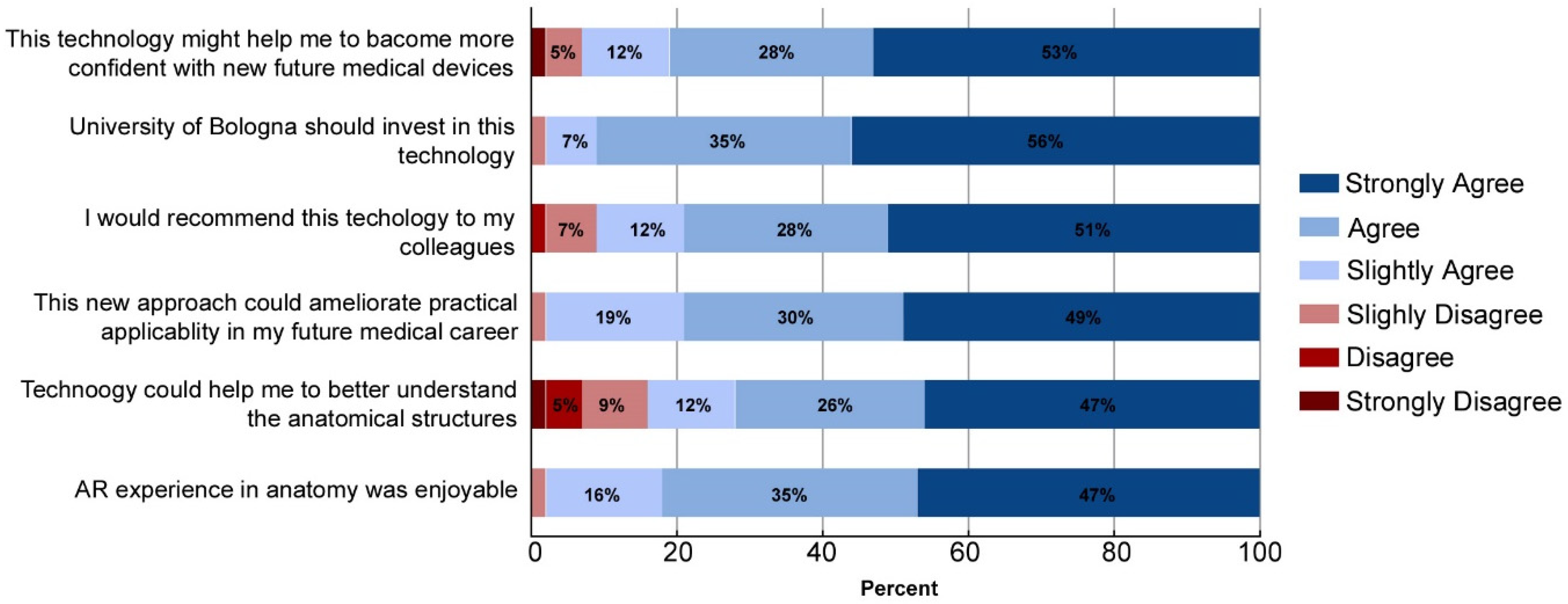

3.3. Survey Results

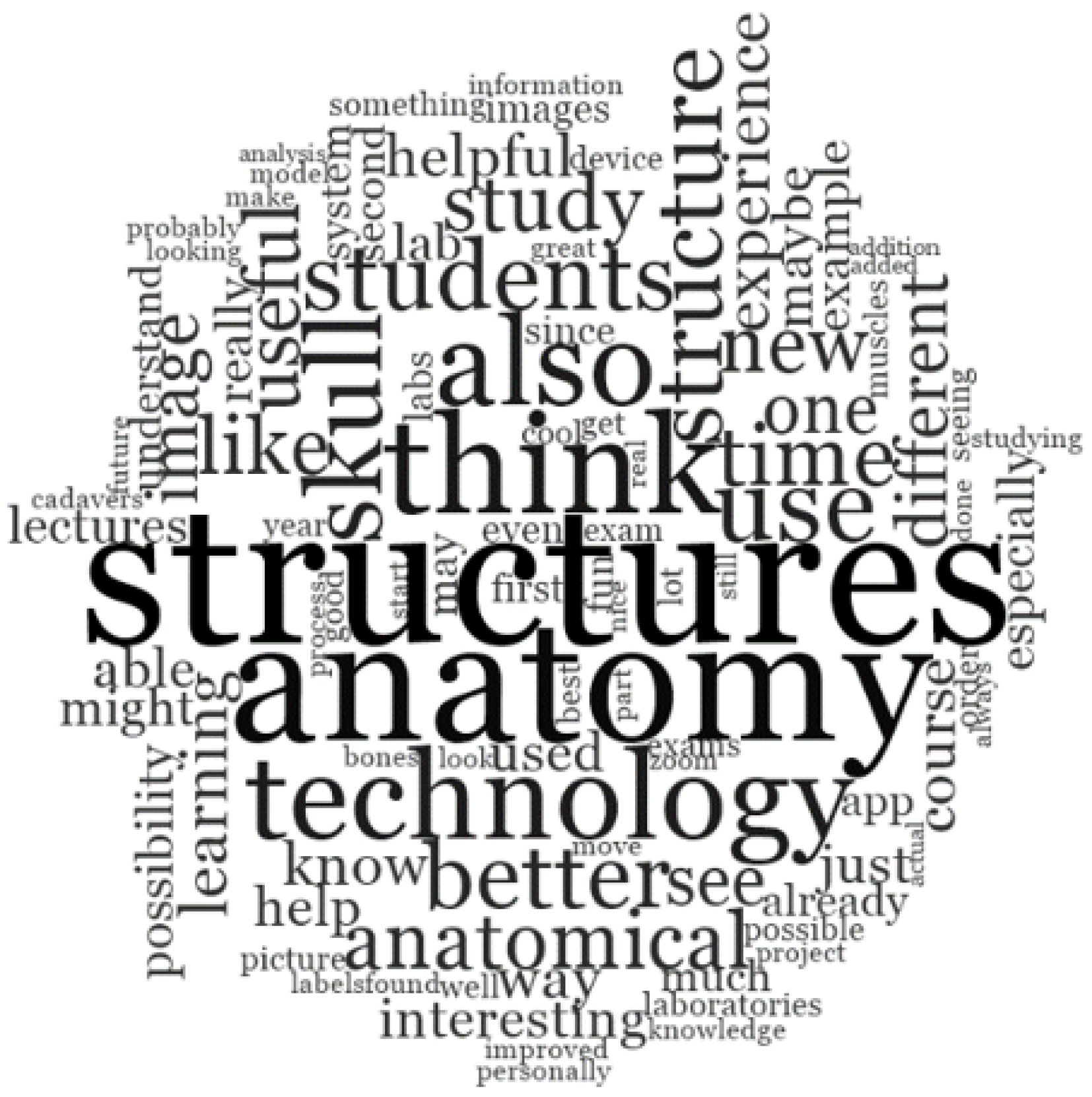

3.4. HoloLens Experience

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Questions | Type of Answers | Number of Answers |
|---|---|---|
| Why was AEducaAR an enjoyable experience? | Test new learning method | 17 |
| | More interesting system compared with textbook study | 16 |
| | Useful to see tridimensional structures | 8 |
| | No response | 2 |
| When do you think it might be the best time to use this technology during your course of studies? | During the second year | 20 |
| | During the whole course | 17 |
| | No response | 6 |
| What could be upgraded in this technology in order to improve its efficacy and applicability? | Capture a specific section image | 13 |
| | Improving resolution | 8 |
| | Possibility to zoom in/zoom out | 5 |
| | Add label | 4 |
| | Add quizzes | 4 |
| | No response | 9 |
| Open consideration and suggestion | Troubles with software | 10 |
| | Blurry images | 7 |
| | No response | 26 |
| Comments about HoloLens experience | More interesting system compared with textbook study | 23 |
| | It was enjoyable | 12 |
| | It was a step in the future | 8 |
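The answer counts for each question in the survey table add up to the same total (43), which is consistent with every participant having given one categorized answer per question. The short Python sketch below is illustrative only and is not part of the authors' analysis: it transcribes the counts and converts them to percentages. The assumption that each respondent falls into exactly one category per question is ours and is not stated in the table; the names `SURVEY` and `summarize` are hypothetical.

```python
"""Percentages for the AEducaAR survey table (illustrative sketch, not the authors' analysis)."""

# Counts transcribed from the survey table. ASSUMPTION: each respondent's
# answer to a question was assigned to exactly one answer category.
SURVEY = {
    "Why was AEducaAR an enjoyable experience?": {
        "Test new learning method": 17,
        "More interesting system compared with textbook study": 16,
        "Useful to see tridimensional structures": 8,
        "No response": 2,
    },
    "When do you think it might be the best time to use this technology during your course of studies?": {
        "During the second year": 20,
        "During the whole course": 17,
        "No response": 6,
    },
    "What could be upgraded in this technology in order to improve its efficacy and applicability?": {
        "Capture a specific section image": 13,
        "Improving resolution": 8,
        "Possibility to zoom in/zoom out": 5,
        "Add label": 4,
        "Add quizzes": 4,
        "No response": 9,
    },
    "Open consideration and suggestion": {
        "Troubles with software": 10,
        "Blurry images": 7,
        "No response": 26,
    },
    "Comments about HoloLens experience": {
        "More interesting system compared with textbook study": 23,
        "It was enjoyable": 12,
        "It was a step in the future": 8,
    },
}


def summarize(survey):
    """Map each question to (total answers, {category: share in percent, 1 decimal})."""
    summary = {}
    for question, answers in survey.items():
        total = sum(answers.values())
        shares = {label: round(100 * count / total, 1) for label, count in answers.items()}
        summary[question] = (total, shares)
    return summary


if __name__ == "__main__":
    for question, (total, shares) in summarize(SURVEY).items():
        print(f"{question} (answers: {total})")
        for label, pct in shares.items():
            print(f"  {label}: {pct}%")
```

Since every question totals 43 answers, "During the second year", for example, corresponds to roughly 46.5% of the answers to the timing question.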

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cercenelli, L.; De Stefano, A.; Billi, A.M.; Ruggeri, A.; Marcelli, E.; Marchetti, C.; Manzoli, L.; Ratti, S.; Badiali, G. AEducaAR, Anatomical Education in Augmented Reality: A Pilot Experience of an Innovative Educational Tool Combining AR Technology and 3D Printing. Int. J. Environ. Res. Public Health 2022, 19, 1024. https://doi.org/10.3390/ijerph19031024
