An Intelligent Waste-Sorting and Recycling Device Based on Improved EfficientNet

Abstract

1. Introduction

- (1) We design an intelligent waste bin that automatically sorts and collects the waste deposited in it, improving the efficiency of waste sorting by residents and reducing the separation work required at collection facilities.

- (2) We propose an improved EfficientNet, named GECM-EfficientNet, which accurately classifies different categories of waste with fewer parameters.

- (3) We use transfer learning [17] to initialize the model parameters for training, improving model performance without adding extra computation.

- (4) Our waste-classification model balances speed and accuracy and achieves good real-time performance on edge devices, which can reduce hardware costs.
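
Transfer learning here means initializing GECM-EfficientNet from pretrained weights. Because the modified network drops some MBConv blocks and replaces the SE module with ECA and CA modules, not every pretrained tensor has a counterpart in the new model. A common way to handle this, assumed here because the paper does not describe its loading procedure, is to copy only parameters whose names and shapes match and leave the rest at their fresh initialization. The sketch below shows that rule in plain Python; the function `init_from_pretrained`, the parameter names, and the `(shape, values)` representation are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical parameter representation: (shape, flattened values).
Param = Tuple[Tuple[int, ...], List[float]]


def init_from_pretrained(
    model: Dict[str, Param], pretrained: Dict[str, Param]
) -> Tuple[Dict[str, Param], List[str]]:
    """Copy pretrained tensors whose name and shape match the target model.

    Parameters without a compatible pretrained counterpart (for example a
    new attention module, or a classifier head with a different class
    count) keep their fresh initialization and are listed in `skipped`.
    """
    initialized: Dict[str, Param] = {}
    skipped: List[str] = []
    for name, (shape, values) in model.items():
        source = pretrained.get(name)
        if source is not None and source[0] == shape:
            initialized[name] = (shape, list(source[1]))  # transfer weights
        else:
            initialized[name] = (shape, list(values))  # keep random init
            skipped.append(name)
    return initialized, skipped


if __name__ == "__main__":
    # Hypothetical names and sizes, for illustration only.
    pretrained = {
        "stem.conv.weight": ((2,), [0.5, -0.25]),
        "head.fc.weight": ((1000,), [0.0] * 1000),  # e.g. 1000 ImageNet classes
    }
    model = {
        "stem.conv.weight": ((2,), [0.0, 0.0]),
        "head.fc.weight": ((18,), [0.01] * 18),  # hypothetical target class count
        "block.attention.weight": ((3,), [0.1, 0.1, 0.1]),  # new module
    }
    params, skipped = init_from_pretrained(model, pretrained)
    print(params["stem.conv.weight"][1])
    print(skipped)
```

In PyTorch the same idea is usually expressed by filtering a pretrained `state_dict` this way and passing the result to `load_state_dict(..., strict=False)`.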

2. Related Work

3. Materials and Methodology

3.1. Waste Sorting Device

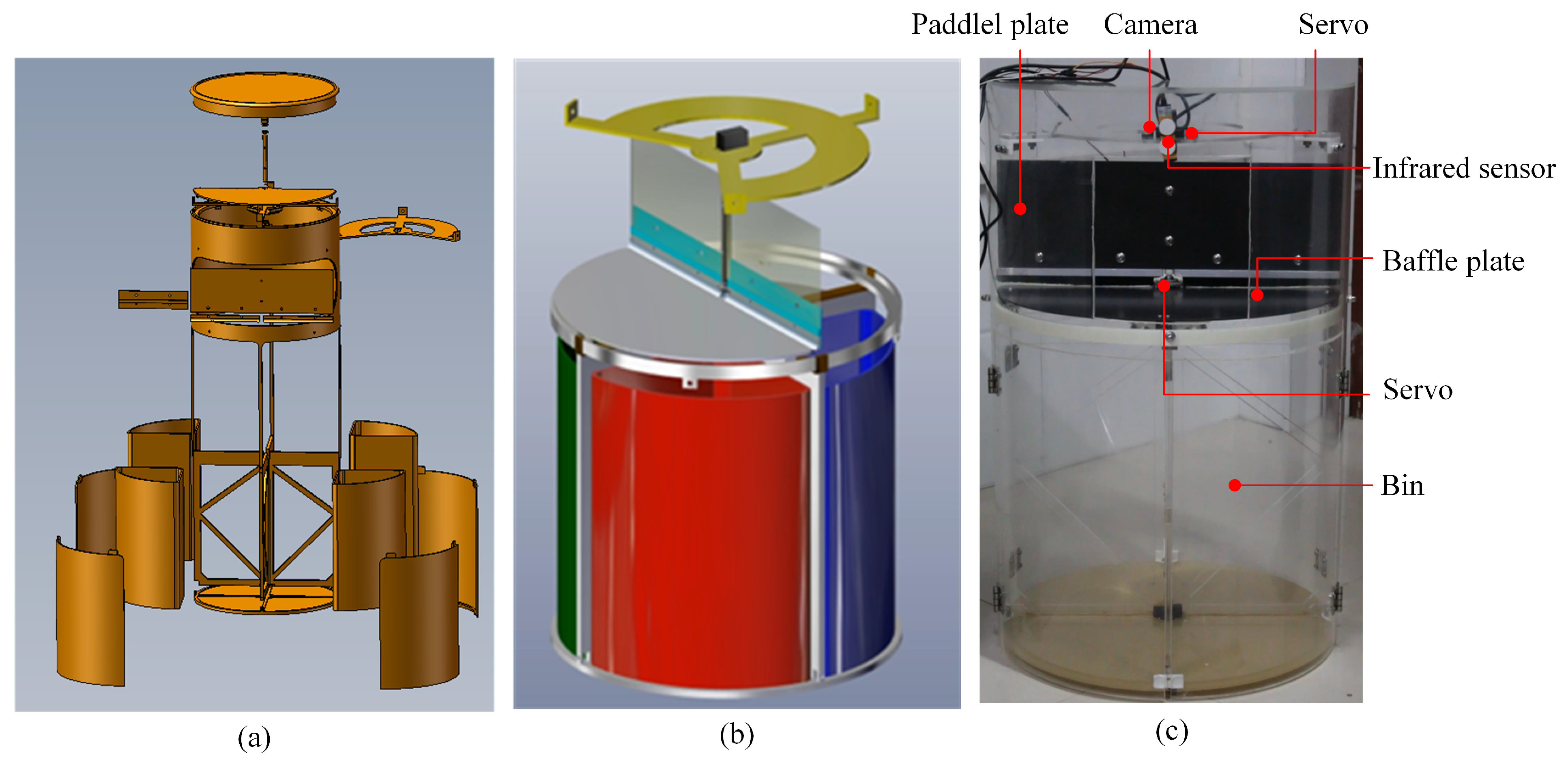

3.1.1. Hardware Structure

3.1.2. Control Circuit

3.2. Dataset Used

3.2.1. Self-Built Dataset

3.2.2. TrashNet Dataset

3.3. The Basics of EfficientNet

3.4. Improved EfficientNet

3.4.1. Optimizing the Network Structure

3.4.2. Efficient Channel Attention

3.4.3. Coordinate Attention

3.4.4. Transfer Learning

3.5. Experimental Settings

- (1) Ablation experiments on the improved model, verifying the contribution of each improvement to model performance.

- (2) Comparison experiments between the improved model and mainstream models. All models were trained and tested on both the self-built dataset and the TrashNet dataset to assess how the improved model compares with the state of the art.

- (3) Model classification accuracy and inference time tests. The model was deployed on a Raspberry Pi 4B to verify its accuracy and real-time performance.
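
The paper does not spell out its timing protocol for the Raspberry Pi 4B. A typical approach, assumed here, is to run a few untimed warm-up inferences (so one-off costs such as memory allocation are excluded) and then summarize many timed runs. The sketch below does this with the standard library; `measure_latency_ms` is a hypothetical helper, and `infer` stands for whatever call runs one forward pass on the device.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def measure_latency_ms(
    infer: Callable[[object], object],
    inputs: Sequence[object],
    warmup: int = 5,
    runs: int = 50,
) -> Dict[str, float]:
    """Time single-input inference and summarize latency in milliseconds."""
    if not inputs or runs < 1:
        raise ValueError("need at least one input and one timed run")
    # Warm-up runs are executed but not timed.
    for i in range(warmup):
        infer(inputs[i % len(inputs)])
    samples = []
    for i in range(runs):
        x = inputs[i % len(inputs)]
        start = time.perf_counter()
        infer(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    ordered = sorted(samples)
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        # Approximate 95th percentile (lower-index rule).
        "p95_ms": ordered[int(0.95 * (len(ordered) - 1))],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with the real model's forward pass.
    def dummy_infer(n):
        return sum(i * i for i in range(n))

    stats = measure_latency_ms(dummy_infer, [10_000], warmup=3, runs=20)
    print({k: round(v, 3) for k, v in stats.items()})
```

Reporting the median alongside the mean makes the result less sensitive to occasional scheduling hiccups on a small board such as the Raspberry Pi.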

4. Results and Discussion

4.1. Ablation Experiments

4.2. Comparison and Analysis of Models

4.2.1. On the Self-Built Dataset

4.2.2. On the TrashNet Dataset

4.3. System Testing

4.3.1. Speed Test

4.3.2. Classification Test

4.4. Discussion of Intelligent Waste Bin

5. Conclusions

- (1) We chose the lightweight EfficientNetB0 as the baseline model. We first streamline the MBConv modules, simplifying the network structure and reducing its complexity. Then, the ECA and CA modules are connected in parallel and replace the SE module in each MBConv module, so that the feature maps are weighted along both the channel and spatial dimensions.

- (2) In the training strategy, the model parameters are initialized by transfer learning, which improves both model performance and convergence speed.

- (3) We verify the performance of GECM-EfficientNet on the self-built dataset and the TrashNet dataset. Among the mainstream models and related studies compared, GECM-EfficientNet achieves the highest accuracy on the self-built dataset and competitive accuracy on TrashNet, while maintaining good real-time performance.

- (4) We design an intelligent waste bin that performs waste classification with GECM-EfficientNet. The model first identifies the deposited waste, and the actuating mechanism then sorts it into the corresponding bin for recycling. This provides a new solution for alleviating the environmental crisis and achieving a circular economy.
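
The ECA module in (1) replaces the fully connected layers of SE with a 1D convolution across channel descriptors. The plain-Python sketch below follows the ECA-Net formulation [45]: global average pooling per channel, a zero-padded, bias-free 1D convolution across neighbouring channels, and a sigmoid gate that rescales each channel. The kernel size k uses the adaptive rule common in ECA implementations, t = int(|(log2 C + b) / γ|) with γ = 2 and b = 1, bumped to the next odd number when even. Whether GECM-EfficientNet uses this adaptive k, and how the ECA and CA outputs are fused in the parallel branch, is not stated here, so the CA branch and the fusion are omitted; the function names and the nested-list `[C][H][W]` layout are illustrative assumptions.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # layout assumed: [C][H][W]


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive odd 1D kernel size that grows with log2(channels)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature_map: FeatureMap, weights: List[float]) -> FeatureMap:
    """Apply ECA channel attention with a given odd-length 1D kernel."""
    num_channels = len(feature_map)
    k = len(weights)
    pad = k // 2
    # 1) Global average pooling: one descriptor per channel.
    descriptors = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map
    ]
    # 2) 1D convolution across neighbouring channels (zero padding, no bias),
    # 3) followed by a sigmoid gate.
    gates = []
    for c in range(num_channels):
        s = 0.0
        for j in range(k):
            idx = c + j - pad
            if 0 <= idx < num_channels:
                s += weights[j] * descriptors[idx]
        gates.append(1.0 / (1.0 + math.exp(-s)))
    # 4) Rescale every spatial position of each channel by its gate.
    return [
        [[v * gates[c] for v in row] for row in feature_map[c]]
        for c in range(num_channels)
    ]


if __name__ == "__main__":
    for c in (16, 64, 256):
        print(c, eca_kernel_size(c))
    x = [[[1.0, 2.0], [3.0, 4.0]] for _ in range(16)]
    k = eca_kernel_size(16)
    y = eca(x, [0.0] * k)  # zero kernel: every gate is sigmoid(0) = 0.5
    print(y[0])  # [[0.5, 1.0], [1.5, 2.0]]
```

In a trained network the kernel `weights` are learned; the zero kernel in the usage example only makes the gating easy to check by hand.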

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tang, D.; Shi, L.; Huang, X.; Zhao, Z.; Zhou, B.; Bethel, B.J. Influencing Factors on the Household-Waste-Classification Behavior of Urban Residents: A Case Study in Shanghai. Int. J. Environ. Res. Public Health 2022, 19, 6528.

2. Yang, Q.; Fu, L.; Liu, X.; Cheng, M. Evaluating the Efficiency of Municipal Solid Waste Management in China. Int. J. Environ. Res. Public Health 2018, 15, 2448.

3. Cheah, C.G.; Chia, W.Y.; Lai, S.F.; Chew, K.W.; Chia, S.R.; Show, P.L. Innovation designs of industry 4.0 based solid waste management: Machinery and digital circular economy. Environ. Res. 2022, 213, 113619.

4. Bircanoğlu, C.; Atay, M.; Beşer, F.; Genç, Ö.; Kızrak, M.A. RecycleNet: Intelligent Waste Sorting Using Deep Neural Networks. In Proceedings of the 2018 Innovations in Intelligent Systems and Applications (INISTA), Thessaloniki, Greece, 3–5 July 2018; pp. 1–7.

5. Vo, A.H.; Le, H.S.; Vo, M.T.; Le, T. A Novel Framework for Trash Classification Using Deep Transfer Learning. IEEE Access 2019, 7, 178631–178639.

6. Lin, K.; Zhao, Y.; Kuo, J.H.; Deng, H.; Cui, F.; Zhang, Z.; Zhang, M.; Zhao, C.; Gao, X.; Zhou, T.; et al. Toward smarter management and recovery of municipal solid waste: A critical review on deep learning approaches. J. Clean. Prod. 2022, 346, 130943.

7. Chen, Z.; Yang, J.; Feng, Z.; Chen, L. RSCNet: An Efficient Remote Sensing Scene Classification Model Based on Lightweight Convolution Neural Networks. Electronics 2022, 11, 3727.

8. Azhaguramyaa, V.R.; Janet, J.; Narayanan, V.; Sabari, R.S.; Santhosh, K.K. An Intelligent System for Waste Materials Segregation Using IoT and Deep Learning. J. Phys. Conf. Ser. 2021, 1916, 012028.

9. Kang, Z.; Yang, J.; Li, G.; Zhang, Z. An Automatic Garbage Classification System Based on Deep Learning. IEEE Access 2020, 8, 140019–140029.

10. Zhang, Q.; Zhang, X.; Mu, X.; Wang, Z.; Liu, X. Recyclable waste image recognition based on deep learning. Resour. Conserv. Recycl. 2021, 171, 105636.

11. Mao, W.L.; Chen, W.C.; Wang, C.T.; Lin, Y.H. Recycling waste classification using optimized convolutional neural network. Resour. Conserv. Recycl. 2021, 164, 105132.

12. Adedeji, O.; Wang, Z. Intelligent Waste Classification System Using Deep Learning Convolutional Neural Network. Procedia Manuf. 2019, 35, 607–612.

13. Gaba, S.; Budhiraja, I.; Kumar, V.; Garg, S.; Kaddoum, G.; Hassan, M.M. A federated calibration scheme for convolutional neural networks: Models, applications and challenges. Comput. Commun. 2022, 192, 144–162.

14. Chen, Z.; Guo, H.; Yang, J.; Jiao, H.; Feng, Z.; Chen, L.; Gao, T. Fast vehicle detection algorithm in traffic scene based on improved SSD. Measurement 2022, 201, 111655.

15. Wang, C.; Qin, J.; Qu, C.; Ran, X.; Liu, C.; Chen, B. A smart municipal waste management system based on deep-learning and Internet of Things. Waste Manag. 2021, 135, 20–29.

16. Chen, Z.; Yang, J.; Chen, L.; Jiao, H. Garbage classification system based on improved ShuffleNet v2. Resour. Conserv. Recycl. 2022, 178, 106090.

17. Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

18. Hemalatha, M. A Hybrid Random Forest Deep learning Classifier Empowered Edge Cloud Architecture for COVID-19 and Pneumonia Detection. Expert Syst. Appl. 2022, 210, 118227.

19. Yang, C.; Wang, Y.; Lan, S.; Wang, L.; Shen, W.; Huang, G.Q. Cloud-edge-device collaboration mechanisms of deep learning models for smart robots in mass personalization. Robot. Comput.-Integr. Manuf. 2022, 77, 102351.

20. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

21. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

22. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

23. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

24. Fang, R.; Lu, C.C.; Chuang, C.T.; Chang, W.H. A visually interpretable detection method combines 3-D ECG with a multi-VGG neural network for myocardial infarction identification. Comput. Methods Progr. Biomed. 2022, 219, 106762.

25. Paymode, A.S.; Malode, V.B. Transfer Learning for Multi-Crop Leaf Disease Image Classification using Convolutional Neural Network VGG. Artif. Intell. Agric. 2022, 6, 23–33.

26. Ganguly, S.; Bhowal, P.; Oliva, D.; Sarkar, R. BLeafNet: A Bonferroni mean operator based fusion of CNN models for plant identification using leaf image classification. Ecol. Inform. 2022, 69, 101585.

27. Yan, Z.; Liu, H.; Li, T.; Li, J.; Wang, Y. Two dimensional correlation spectroscopy combined with ResNet: Efficient method to identify bolete species compared to traditional machine learning. LWT 2022, 162, 113490.

28. Pan, S.Q.; Qiao, J.F.; Wang, R.; Yu, H.L.; Wang, C.; Taylor, K.; Pan, H.Y. Intelligent diagnosis of northern corn leaf blight with deep learning model. J. Integr. Agric. 2022, 21, 1094–1105.

29. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

30. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

31. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

32. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

33. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 122–138.

34. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; Volume 97, pp. 6105–6114.

35. Luo, C.Y.; Cheng, S.Y.; Xu, H.; Li, P. Human behavior recognition model based on improved EfficientNet. Procedia Comput. Sci. 2022, 199, 369–376.

36. Espejo-Garcia, B.; Malounas, I.; Mylonas, N.; Kasimati, A.; Fountas, S. Using EfficientNet and transfer learning for image-based diagnosis of nutrient deficiencies. Comput. Electron. Agric. 2022, 196, 106868.

37. Sun, K.; He, M.; He, Z.; Liu, H.; Pi, X. EfficientNet embedded with spatial attention for recognition of multi-label fundus disease from color fundus photographs. Biomed. Signal Process. Control 2022, 77, 103768.

38. Guo, Y.; Wang, Y.; Yang, H.; Zhang, J.; Sun, Q. Dual-attention EfficientNet based on multi-view feature fusion for cervical squamous intraepithelial lesions diagnosis. Biocybern. Biomed. Eng. 2022, 42, 529–542.

39. Soomro, I.A.; Ahmad, A.; Raza, R.H. Printed Circuit Board identification using Deep Convolutional Neural Networks to facilitate recycling. Resour. Conserv. Recycl. 2022, 177, 105963.

40. Peng, H.; Shen, N.; Ying, H.; Wang, Q. Factor analysis and policy simulation of domestic waste classification behavior based on a multiagent study—Taking Shanghai’s garbage classification as an example. Environ. Impact Assess. Rev. 2021, 89, 106598.

41. Sobota, J.; Goubej, M.; Königsmarková, J.; Čech, M. Raspberry Pi-based HIL simulators for control education. IFAC-PapersOnLine 2019, 52, 68–73.

42. Zhou, K.; Yuan, Y. A Smart Ammunition Library Management System Based on Raspberry Pie. Procedia Comput. Sci. 2020, 166, 165–169.

43. Thung, G.; Yang, M. Classification of Trash for Recyclability Status. CS229 Proj. Report 2016. 2016, pp. 940–945. Available online: http://cs229.stanford.edu/proj2016/report/ThungYang-ClassificationOfTrashForRecyclabilityStatus-report.pdf (accessed on 14 January 2022).

44. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

45. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

46. Hou, Q.; Zhou, D.; Feng, J. Coordinate Attention for Efficient Mobile Network Design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717.

47. Orenstein, E.C.; Beijbom, O. Transfer Learning and Deep Feature Extraction for Planktonic Image Data Sets. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 1082–1088.

48. Guo, Y.; Shi, H.; Kumar, A.; Grauman, K.; Rosing, T.; Feris, R. SpotTune: Transfer Learning Through Adaptive Fine-Tuning. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4800–4809.

49. He, T.; Zhang, Z.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of Tricks for Image Classification with Convolutional Neural Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 558–567.

50. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

51. Ahmad, K.; Khan, K.; Al-Fuqaha, A. Intelligent Fusion of Deep Features for Improved Waste Classification. IEEE Access 2020, 8, 96495–96504.

52. Gupta, T.; Joshi, R.; Mukhopadhyay, D.; Sachdeva, K.; Jain, N.; Virmani, D.; Garcia-Hernandez, L. A deep learning approach based hardware solution to categorise garbage in environment. Complex Intell. Syst. 2022, 8, 1129–1152.

| Category | Name | Number | Category | Name | Number |
|---|---|---|---|---|---|
| Kitchen waste | Fruit peel | 425 | Recyclable waste | Plastic bowl | 417 |
| | Waste bone | 379 | | Can | 374 |
| | Biscuits | 484 | | Waste paper | 510 |
| | Tea | 426 | | Cardboard | 348 |
| | Eggshell | 401 | | Plastic bottle | 511 |
| Other waste | Soiled plastic | 422 | Hazardous waste | Dry battery | 342 |
| | Cigarette | 395 | | Accumulator | 307 |
| | Cotton swab | 595 | | Circuit board | 299 |
| | Chopsticks | 371 | | Insecticide | 355 |
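
Summing the per-class counts gives the size of each waste category and of the whole self-built dataset, and shows how balanced the 18 classes are. The snippet below only re-adds the numbers in the table above; it assumes nothing about how the images were split for training and testing.

```python
# Per-class image counts copied from the self-built dataset table,
# grouped by the four waste categories.
counts = {
    "Kitchen waste": [425, 379, 484, 426, 401],
    "Recyclable waste": [417, 374, 510, 348, 511],
    "Other waste": [422, 395, 595, 371],
    "Hazardous waste": [342, 307, 299, 355],
}

category_totals = {cat: sum(v) for cat, v in counts.items()}
all_classes = [n for v in counts.values() for n in v]
total_images = sum(all_classes)
imbalance_ratio = max(all_classes) / min(all_classes)

for cat, n in category_totals.items():
    print(f"{cat}: {n} images ({n / total_images:.1%})")
print(f"{len(all_classes)} classes, {total_images} images in total")
print(f"largest/smallest class ratio: {imbalance_ratio:.2f}")
```

The totals are 2115, 2160, 1783 and 1303 images for the four categories (7361 in all), and the largest class (595 images) is about twice the size of the smallest (299), so the classes are only moderately imbalanced.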

| Stage | Operator | Resolution | Channel | Repeats (Original) | Repeats (Adapted) |
|---|---|---|---|---|---|
| 1 | Conv | | 32 | 1 | 1 |
| 2 | MBConv1, k | | 16 | 1 | 1 |
| 3 | MBConv6, k | | 24 | 2 | 2 |
| 4 | MBConv6, k | | 40 | 2 | 2 |
| 5 | MBConv6, k | | 80 | 3 | 2 |
| 6 | MBConv6, k | | 112 | 3 | 1 |
| 7 | MBConv6, k | | 192 | 4 | 0 |
| 8 | MBConv6, k | | 320 | 1 | 1 |
| 9 | Conv&Pooling&FC | | 1280 | 1 | 1 |
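
The two repeat columns determine how many MBConv blocks the network actually stacks. Expanding each MBConv stage by its repeat count, as a model builder would, gives 16 blocks in the original EfficientNetB0 and 9 in the adapted network, with stage 7 removed entirely. The helper `expand_blocks` and the tuple layout are illustrative, not code from the paper.

```python
from typing import List, Tuple

# (stage, operator, output channels, repeats original, repeats adapted)
# for MBConv stages 2-8 of the table above.
MBCONV_STAGES: List[Tuple[int, str, int, int, int]] = [
    (2, "MBConv1", 16, 1, 1),
    (3, "MBConv6", 24, 2, 2),
    (4, "MBConv6", 40, 2, 2),
    (5, "MBConv6", 80, 3, 2),
    (6, "MBConv6", 112, 3, 1),
    (7, "MBConv6", 192, 4, 0),
    (8, "MBConv6", 320, 1, 1),
]


def expand_blocks(adapted: bool) -> List[Tuple[int, str, int]]:
    """List one (stage, operator, channels) entry per stacked MBConv block."""
    blocks = []
    for stage, op, channels, rep_orig, rep_adapt in MBCONV_STAGES:
        repeats = rep_adapt if adapted else rep_orig
        blocks.extend([(stage, op, channels)] * repeats)
    return blocks


original = expand_blocks(adapted=False)
adapted = expand_blocks(adapted=True)
print(len(original), len(adapted))  # 16 9
print(sorted({s for s, _, _ in original} - {s for s, _, _ in adapted}))  # [7]
```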

| Model | ECA Module | CA Module | Transfer Learning | Accuracy (%) | Parameters (M) |
|---|---|---|---|---|---|
| EfficientNetB0 | - | - | - | 88.81 | 4.03 |
| G-EfficientNet | - | - | - | 87.54 | 1.12 |
| G-EfficientNet | ✓ | - | - | 89.02 | 1.02 |
| G-EfficientNet | - | ✓ | - | 89.35 | 1.32 |
| G-EfficientNet | - | - | ✓ | 91.76 | 1.12 |
| G-EfficientNet | ✓ | ✓ | ✓ | 94.54 | 1.22 |
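
The ablation results can be read as differences from G-EfficientNet (87.54% accuracy). The snippet recomputes those gains and the parameter reduction of the full model relative to EfficientNetB0, using only values from the table above; the variant labels are shorthand introduced here.

```python
# Values copied from the ablation table: (accuracy %, parameters in millions).
EFFICIENTNET_B0 = (88.81, 4.03)
G_EFFICIENTNET = (87.54, 1.12)
VARIANTS = {
    "G-EfficientNet + ECA": (89.02, 1.02),
    "G-EfficientNet + CA": (89.35, 1.32),
    "G-EfficientNet + transfer learning": (91.76, 1.12),
    "G-EfficientNet + ECA + CA + transfer learning": (94.54, 1.22),
}

# Accuracy gain of each variant over G-EfficientNet, in percentage points.
gains = {
    name: round(acc - G_EFFICIENTNET[0], 2) for name, (acc, _) in VARIANTS.items()
}

full_acc, full_params = VARIANTS["G-EfficientNet + ECA + CA + transfer learning"]
param_reduction = (EFFICIENTNET_B0[1] - full_params) / EFFICIENTNET_B0[1] * 100
acc_vs_b0 = round(full_acc - EFFICIENTNET_B0[0], 2)

for name, g in gains.items():
    print(f"{name}: {g:+.2f} pp")
print(f"vs EfficientNetB0: {acc_vs_b0:+.2f} pp accuracy, "
      f"{param_reduction:.1f}% fewer parameters")
```

The full model is 5.73 points more accurate than EfficientNetB0 with about 69.7% fewer parameters. The single-component gains (1.48, 1.81 and 4.22 points) sum to 7.51 points, more than the 7.00-point gain of the full model, so the contributions are not simply additive.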

| Model | Accuracy (%) | Parameters (M) | Inference time (ms) | Model | Accuracy (%) | Parameters (M) | Inference time (ms) |
|---|---|---|---|---|---|---|---|
| GECM-EfficientNet | 94.54 | 1.23 | 146.82 | ShuffleNetv2 1× | 86.73 | 1.27 | 101.51 |
| G-EfficientNet | 87.54 | 1.12 | 127.78 | ShuffleNetv2 2× | 88.13 | 5.38 | 225.42 |
| EfficientNetB0 | 88.81 | 4.03 | 211.21 | GoogleNet | 85.92 | 5.61 | 278.73 |
| MobileNetv2 | 87.72 | 2.25 | 93.26 | DenseNet121 | 88.02 | 6.97 | 367.51 |
| MobileNetv3 (small) | 87.75 | 1.54 | 95.89 | ResNet50 | 84.7 | 23.55 | 524.35 |
| MobileNetv3 (large) | 88.47 | 4.23 | 207.78 | Inceptionv3 | 86.4 | 21.82 | 451.49 |
| VGG16 | 83.88 | 134.33 | 837.95 | - | - | - | - |
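
One way to check the claim that the model balances speed and accuracy is to find which models in the self-built-dataset comparison are Pareto-optimal: no other model is at least as accurate and at least as fast while being strictly better in one of the two. The sketch uses the accuracy and inference-time columns of the table above; `pareto_front` is an illustrative helper, not part of the paper.

```python
from typing import Dict, List, Tuple

# (accuracy %, inference time ms) from the self-built-dataset comparison table.
MODELS: Dict[str, Tuple[float, float]] = {
    "GECM-EfficientNet": (94.54, 146.82),
    "G-EfficientNet": (87.54, 127.78),
    "EfficientNetB0": (88.81, 211.21),
    "MobileNetv2": (87.72, 93.26),
    "MobileNetv3 (small)": (87.75, 95.89),
    "MobileNetv3 (large)": (88.47, 207.78),
    "VGG16": (83.88, 837.95),
    "ShuffleNetv2 1×": (86.73, 101.51),
    "ShuffleNetv2 2×": (88.13, 225.42),
    "GoogleNet": (85.92, 278.73),
    "DenseNet121": (88.02, 367.51),
    "ResNet50": (84.7, 524.35),
    "Inceptionv3": (86.4, 451.49),
}


def pareto_front(models: Dict[str, Tuple[float, float]]) -> List[str]:
    """Return models not dominated in (higher accuracy, lower latency)."""
    front = []
    for name, (acc, ms) in models.items():
        dominated = any(
            acc2 >= acc and ms2 <= ms and (acc2 > acc or ms2 < ms)
            for other, (acc2, ms2) in models.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


print(pareto_front(MODELS))
```

On these numbers the front contains GECM-EfficientNet, MobileNetv2 and MobileNetv3 (small); GECM-EfficientNet is the only Pareto-optimal model above 88% accuracy, while the two MobileNets stay on the front only because they are faster.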

| Model | Accuracy (%) | Model | Accuracy (%) | Model | Accuracy (%) |
|---|---|---|---|---|---|
| GECM-EfficientNet | 94.23 | ShuffleNetv2 1× | 87.27 | VGG16 | 84.09 |
| G-EfficientNet | 89.26 | ShuffleNetv2 2× | 88.67 | Mao et al. [11] | 94.02 |
| EfficientNetB0 | 91.65 | GoogleNet | 84.69 | PSO (Ahmad et al. [51]) | 94.11 |
| MobileNetv2 | 88.86 | DenseNet121 | 88.46 | Zhang et al. [10] | 95.87 |
| MobileNetv3 (small) | 88.27 | ResNet50 | 88.86 | Bircanoğlu et al. [4] | 81 |
| MobileNetv3 (large) | 90.45 | Inceptionv3 | 88.27 | GA (Ahmad et al. [51]) | 94.58 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Feng, Z.; Yang, J.; Chen, L.; Chen, Z.; Li, L. An Intelligent Waste-Sorting and Recycling Device Based on Improved EfficientNet. Int. J. Environ. Res. Public Health 2022, 19, 15987. https://doi.org/10.3390/ijerph192315987
