Learning the Treatment Impact on Time-to-Event Outcomes: The Transcarotid Artery Revascularization Simulated Cohort

Abstract

1. Introduction

2. The Transcarotid Artery Revascularization Simulated Cohort

3. Results

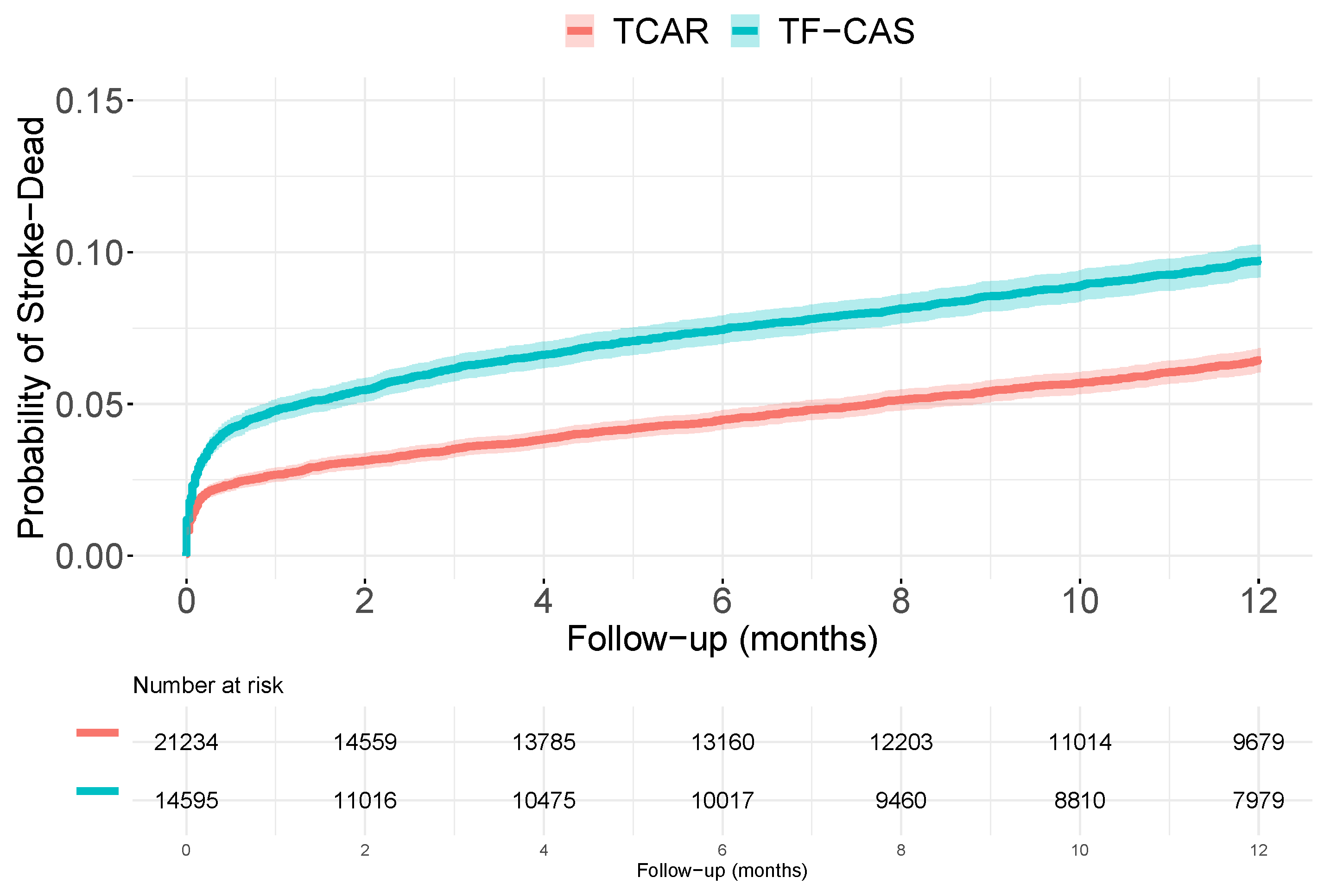

3.1. Unadjusted Analyses

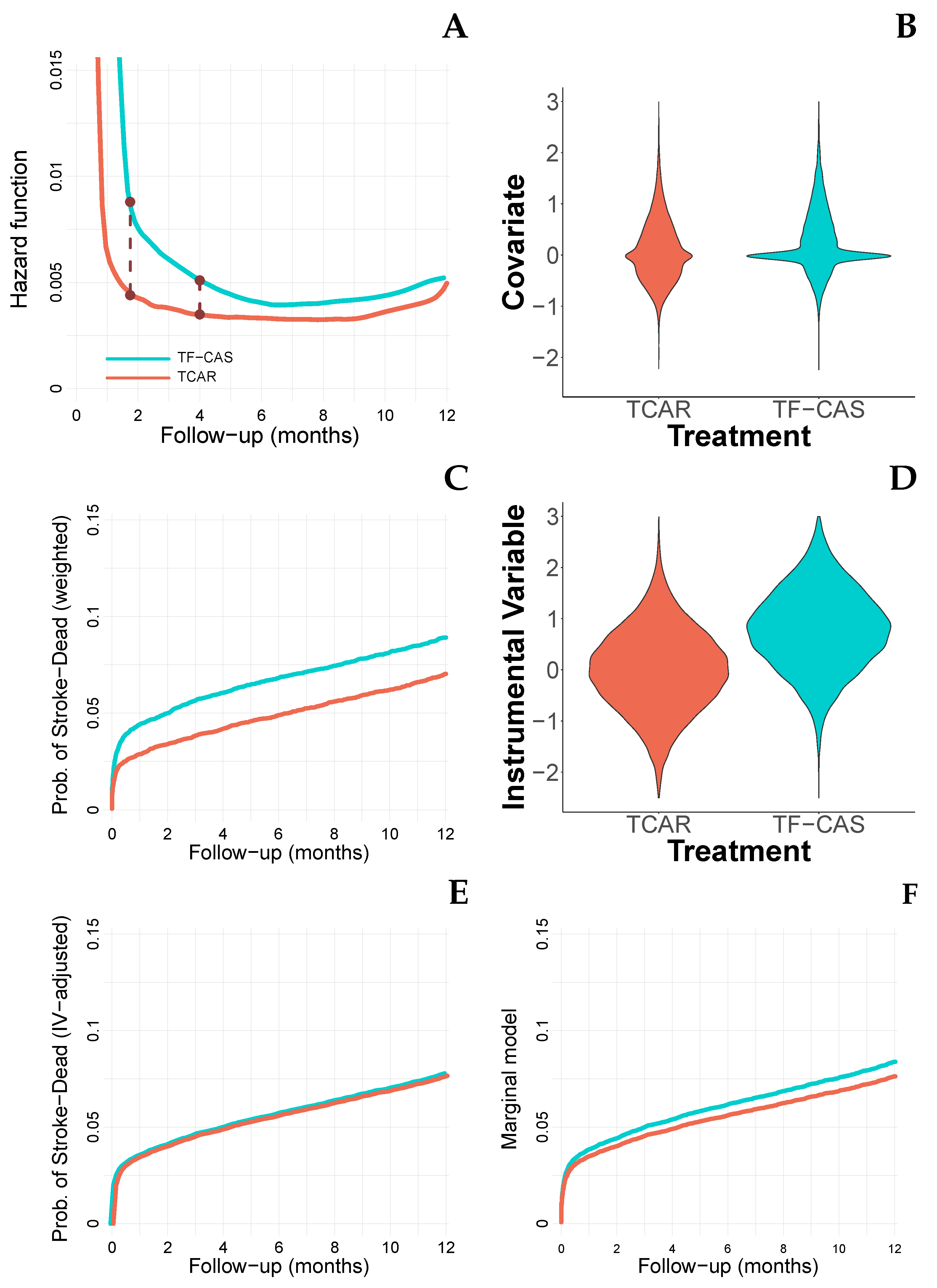

3.2. Accounting for Measured Covariates

3.3. Accounting for Unmeasured Covariates (I)

3.4. Accounting for Unmeasured Covariates (II)

4. Non-Reachable Analyses

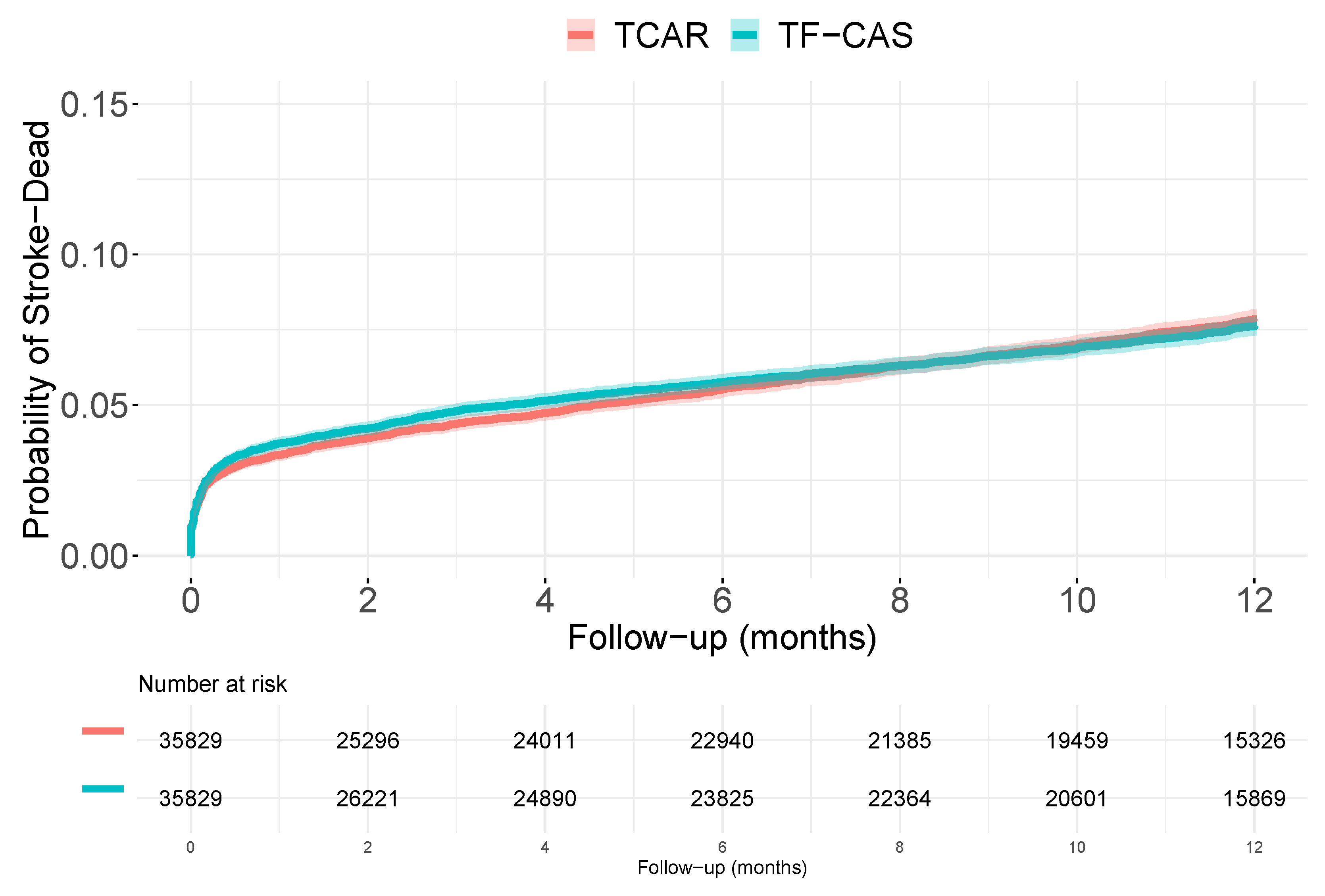

4.1. Knowing the Unmeasured Covariate

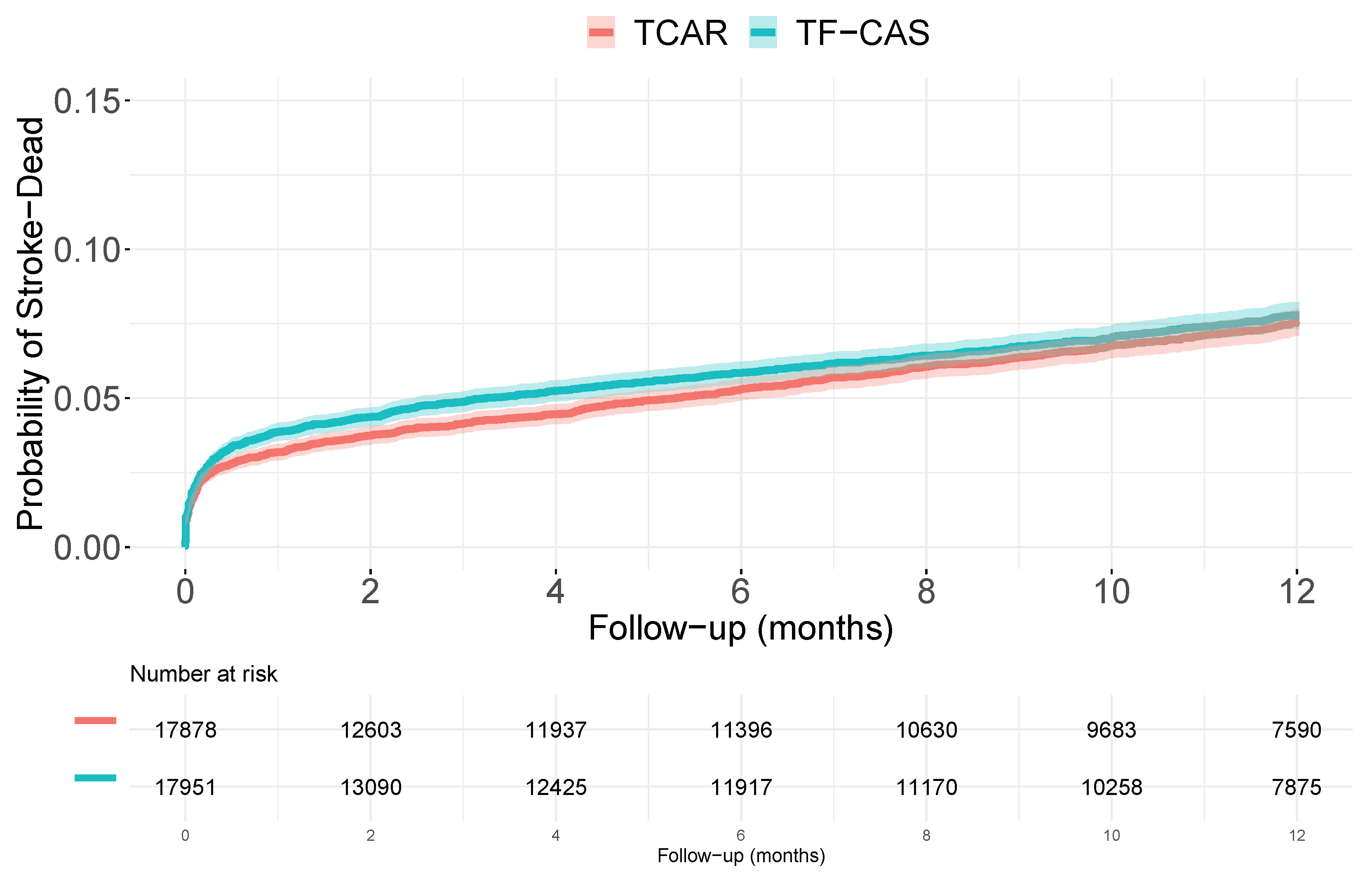

4.2. A Randomized Clinical Trial

5. Discussion

- Report unadjusted (raw) survival curves and unadjusted measures, such as the incidence difference and/or the restricted mean survival time (RMST), together with their confidence intervals.

- Report the hazard ratios (HRs) from the unadjusted model, as well as from models that include different sets of covariates. Consider propensity score weighting and/or propensity-score-matched samples.

- In general, regard the HR as a summary measure of the difference between the survival distributions, rather than giving it a precise interpretation, since any such interpretation depends strongly on the underlying assumptions.

- [If needed] Consider different instrumental variable (IV) procedures, including marginal models, and interpret their results with caution.
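The first two recommendations can be illustrated with a short sketch. The Python code below is a minimal, self-contained illustration, not the computation used in this study (the reference list cites R packages such as survival and survRM2 for that). It computes Kaplan–Meier curves, the incidence difference 1 − S(τ) between groups, the RMST difference up to a truncation time τ, and a percentile bootstrap interval for the RMST difference. The optional `weights` argument shows how inverse-probability-of-treatment weights would produce an IPW-adjusted curve in the spirit of Cole and Hernán. An example would be 1/e(x) for treated patients and 1/(1 − e(x)) for untreated ones, with e(x) an estimated propensity score. Estimating those weights is assumed to happen elsewhere. All function names, the toy data, and τ = 6 are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

Curve = List[Tuple[float, float]]  # (time, survival) steps, starting at (0, 1)


def kaplan_meier(times: Sequence[float], events: Sequence[int],
                 weights: Optional[Sequence[float]] = None) -> Curve:
    """(Weighted) Kaplan-Meier estimate of S(t).

    events[i] = 1 marks an observed event, 0 a censored time. With
    weights=None every subject counts once (the raw, unadjusted curve);
    inverse-probability-of-treatment weights give an IPW-adjusted curve.
    """
    if weights is None:
        weights = [1.0] * len(times)
    data = sorted(zip(times, events, weights))
    at_risk = float(sum(weights))  # weighted size of the risk set
    surv, curve, i = 1.0, [(0.0, 1.0)], 0
    while i < len(data):
        t, d_t, left_t = data[i][0], 0.0, 0.0
        # Pool all subjects (events and censorings) tied at time t.
        while i < len(data) and data[i][0] == t:
            d_t += data[i][2] * data[i][1]
            left_t += data[i][2]
            i += 1
        if d_t > 0:
            surv *= 1.0 - d_t / at_risk
            curve.append((t, surv))
        at_risk -= left_t  # subjects with time t leave the risk set after t
    return curve


def surv_at(curve: Curve, t: float) -> float:
    """Value of the right-continuous step function S at time t."""
    s = 1.0
    for time, value in curve:
        if time > t:
            break
        s = value
    return s


def rmst(curve: Curve, tau: float) -> float:
    """Restricted mean survival time: area under S(t) on [0, tau]."""
    area = 0.0
    ends = [t for t, _ in curve[1:]] + [float("inf")]
    for (start, s), end in zip(curve, ends):
        if start >= tau:
            break
        area += s * (min(end, tau) - start)
    return area


def bootstrap_rmst_diff(g1, g0, tau, reps=1000, seed=2022):
    """Percentile bootstrap 95% interval for RMST(group 1) - RMST(group 0).

    g1 and g0 are lists of (time, event) pairs; unweighted curves only.
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(reps):
        b1 = [rng.choice(g1) for _ in g1]
        b0 = [rng.choice(g0) for _ in g0]
        diffs.append(rmst(kaplan_meier(*zip(*b1)), tau)
                     - rmst(kaplan_meier(*zip(*b0)), tau))
    diffs.sort()
    return diffs[int(0.025 * reps)], diffs[int(0.975 * reps) - 1]


# Hypothetical toy data: (follow-up time, event indicator) per patient.
treated = [(2, 1), (3, 1), (3, 0), (5, 1), (8, 0)]
control = [(4, 1), (6, 0), (7, 1), (9, 1), (10, 0)]
tau = 6.0

km1 = kaplan_meier(*zip(*treated))
km0 = kaplan_meier(*zip(*control))
# Incidence difference at tau: (1 - S1(tau)) - (1 - S0(tau)).
incidence_diff = (1 - surv_at(km1, tau)) - (1 - surv_at(km0, tau))
rmst_diff = rmst(km1, tau) - rmst(km0, tau)
ci = bootstrap_rmst_diff(treated, control, tau)
print(f"ID at tau: {incidence_diff:.2f}; RMST difference: {rmst_diff:.2f}")
print(f"bootstrap 95% interval for RMST difference: {ci}")
```

The bootstrap here resamples unweighted data only. For IPW-adjusted curves, the propensity model would have to be re-fitted within each resample, and this sketch does not do that.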

6. Computational Details

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cox, D.R. Regression models and life-tables. J. R. Stat. Soc. Ser. B (Methodol.) 1972, 34, 187–220.

- Hernán, M. The hazards of hazard ratios. Epidemiology 2010, 21, 13–15.

- Stensrud, M.; Hernán, M. Why test for proportional hazards? J. Am. Med. Assoc. 2020, 323, 1401–1402.

- Xu, R.; O’Quigley, J. Estimating average regression effect under non-proportional hazards. Biostatistics 2000, 1, 423–439.

- Schemper, M.; Wakounig, S.; Heinze, G. The estimation of average hazard ratios by weighted Cox regression. Stat. Med. 2009, 28, 2473–2489.

- Martinussen, T.; Vansteelandt, S. On collapsibility and confounding bias in Cox and Aalen regression models. Lifetime Data Anal. 2013, 19, 279–296.

- Aalen, O.; Cook, R.; Røysland, K. Does Cox analysis of a randomized survival study yield a causal treatment effect? Lifetime Data Anal. 2015, 21, 579–593.

- Lin, D.Y.; Ying, Z. Semiparametric analysis of the additive risk model. Biometrika 1994, 81, 61–71.

- Scheike, T.; Zhang, M.J. An additive-multiplicative Cox-Aalen regression model. Scand. J. Stat. 2002, 29, 75–88.

- MacKenzie, T.; Tosteson, T.; Morden, N.; Stukel, T.; O’Malley, A. Using instrumental variables to estimate a Cox’s proportional hazards regression subject to additive confounding. Health Serv. Outcomes Res. Methodol. 2014, 14, 54–68.

- MacKenzie, T.; Martínez-Camblor, P.; O’Malley, A. Time dependent hazard ratio estimation using instrumental variables without conditioning on an omitted covariate. BMC Med. Res. Methodol. 2021, 21, 1–21.

- Wang, L.; Tchetgen Tchetgen, E.; Martinussen, T.; Vansteelandt, S. Instrumental variable estimation of the causal hazard ratio. Biometrics 2022; in press.

- Martínez-Camblor, P.; MacKenzie, T.; O’Malley, A. Estimating population-averaged hazard ratios in the presence of unmeasured confounding. Int. J. Biostat. 2022; in press.

- Royston, P.; Parmar, M. Restricted mean survival time: An alternative to the hazard ratio for the design and analysis of randomized trials with a time-to-event outcome. BMC Med. Res. Methodol. 2013, 13, 152.

- Martínez-Camblor, P.; MacKenzie, T.; O’Malley, A. A robust hazard ratio for general modeling of survival-times. Int. J. Biostat. 2021; 20210003, in press.

- Columbo, J.; Martínez-Camblor, P.; O’Malley, A.; Stone, D.; Kashyap, V.; Powell, R.; Schermerhorn, M.; Malas, M.; Nolan, B.; Goodney, P. Association of adoption of transcarotid artery revascularization with center-level perioperative outcomes. JAMA Netw. Open 2021, 4, e2037885.

- Columbo, J.; Martínez-Camblor, P.; Stone, D.; Goodney, P.; O’Malley, A. Procedural safety comparison between transcarotid artery revascularization, carotid endarterectomy, and carotid stenting: Perioperative and 1-year rates of stroke or death. J. Am. Heart Assoc. 2022; in press.

- Müller, H.; Wang, J. Hazard rate estimation under random censoring with varying kernels and bandwidths. Biometrics 1994, 50, 61–76.

- Struthers, C.A.; Kalbfleisch, J.D. Misspecified proportional hazard models. Biometrika 1986, 73, 363–369.

- Austin, P. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivar. Behav. Res. 2011, 46, 399–424.

- Cole, S.; Hernán, M. Adjusted survival curves with inverse probability weights. Comput. Methods Programs Biomed. 2004, 75, 45–49.

- Austin, P. A critical appraisal of propensity-score matching in the medical literature between 1996 and 2003. Stat. Med. 2008, 27, 2037–2049.

- MacKenzie, T.; Brown, J.; Likosky, D.; Wu, Y.; Grunkemeier, G. Review of case-mix corrected survival curves. Ann. Thorac. Surg. 2012, 93, 1416–1425.

- Funk, M.; Westreich, D.; Wiesen, C.; Stürmer, T.; Brookhart, M.; Davidian, M. Doubly robust estimation of causal effects. Am. J. Epidemiol. 2011, 173, 761–767.

- Vansteelandt, S.; Daniel, R. On regression adjustment for the propensity score. Stat. Med. 2014, 33, 4053–4072.

- Angrist, J.D.; Imbens, G.; Rubin, D. Identification of causal effects using instrumental variables. J. Am. Stat. Assoc. 1996, 91, 444–455.

- Lousdal, M. An introduction to instrumental variable assumptions, validation and estimation. Emerg. Themes Epidemiol. 2018, 15, 1.

- Martínez-Camblor, P.; Mackenzie, T.; Staiger, D.; Goodney, P.; O’Malley, A. Adjusting for bias introduced by instrumental variable estimation in the Cox proportional hazards model. Biostatistics 2019, 20, 80–96.

- Balan, T.; Putter, H. A tutorial on frailty models. Stat. Methods Med. Res. 2020, 29, 3424–3454.

- Martínez-Camblor, P.; MacKenzie, T.; Staiger, D.; Goodney, P.; O’Malley, A. An instrumental variable procedure for estimating Cox models with non-proportional hazards in the presence of unmeasured confounding. J. R. Stat. Soc. Ser. C (Appl. Stat.) 2019, 68, 985–1005.

- Martínez-Camblor, P.; MacKenzie, T.; Staiger, D.; Goodney, P.; O’Malley, A. Summarizing causal differences in survival curves in the presence of unmeasured confounding. Int. J. Biostat. 2021, 17, 223–240.

- Amrhein, V.; Trafimow, D.; Greenland, S. Inferential statistics as descriptive statistics: There is no replication crisis if we do not expect replication. Am. Stat. 2019, 73, 262–270.

- Therneau, T. A Package for Survival Analysis in R; R Package Version 3.3-1. 2022.

- Dunkler, D.; Ploner, M.; Schemper, M.; Heinze, G. Weighted Cox regression using the R package coxphw. J. Stat. Softw. 2018, 84, 1–26.

- Uno, H.; Tian, L.; Horiguchi, M.; Cronin, A.; Battioui, C.; Bell, J. survRM2: Comparing Restricted Mean Survival Time; R Package Version 1.0-3. 2020.

- Hess, K.; Gentleman, R. Muhaz: Hazard Function Estimation in Survival Analysis; R Package Version 1.2.6.5. 2021.

| | Crude | Measured (a) | Measured (b) | Omitted | RCT |
|---|---|---|---|---|---|
| ID | 3.3 [2.7 to 3.9] | 1.4 [0.9 to 1.9] | 0.3 [−0.2 to 1.2] | | |
| HR | 1.57 [1.44 to 1.71] | 1.29 [1.17 to 1.42] | 1.35 [1.24 to 1.47] | 1.02 [0.83 to 1.24] | 1.05 [0.93 to 1.12] |
| wHR | 1.54 [1.42 to 1.68] | 1.26 [1.15 to 1.39] | 1.32 [1.21 to 1.44] | 1.00 [0.81 to 1.25] | 1.02 [0.93 to 1.12] |
| RMST | −0.3 [−0.4 to −0.3] | −0.2 [−0.3 to −0.1] | −0.2 [−0.3 to −0.2] | −0.03 [−0.2 to 0.1] | −0.1 [−0.1 to −0.01] |
| mHR | 1.09 [0.86 to 1.39] | 1.09 [0.90 to 1.33] | 1.05 [0.97 to 1.15] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Martínez-Camblor, P. Learning the Treatment Impact on Time-to-Event Outcomes: The Transcarotid Artery Revascularization Simulated Cohort. Int. J. Environ. Res. Public Health 2022, 19, 12476. https://doi.org/10.3390/ijerph191912476
